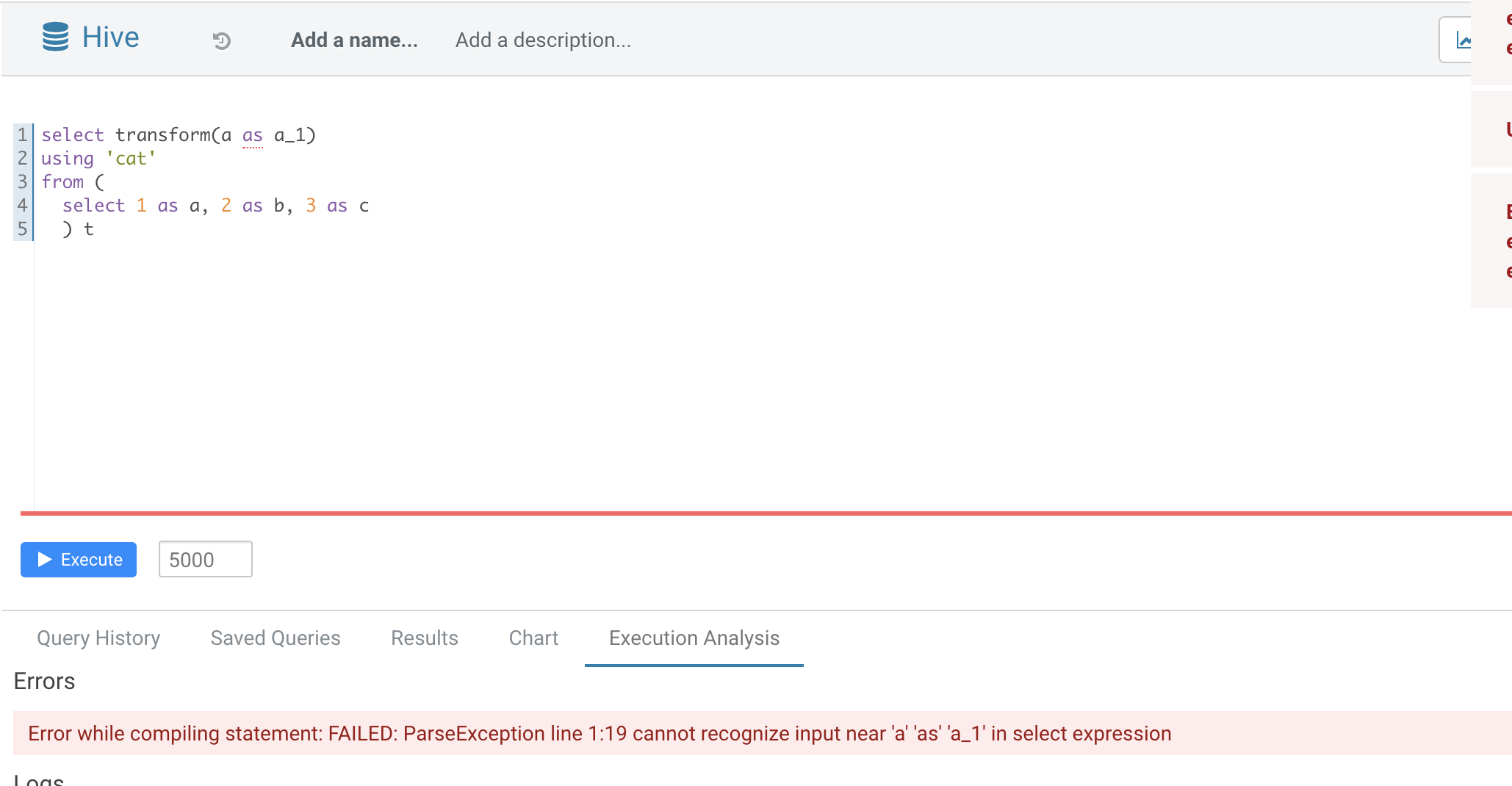

[SPARK-35070][SQL] TRANSFORM not support alias in inputs

### What changes were proposed in this pull request?

Normal function parameters should not support aliases, and Hive does not support them either. In this PR we forbid aliases in `TRANSFORM`'s inputs.

### Why are the changes needed?

Fix a bug.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added unit tests.

Closes #32165 from AngersZhuuuu/SPARK-35070.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>
Signed-off-by: Wenchen Fan <wenchen@databricks.com>


Parent: 767ea86ecf
Commit: 71133e1c2a
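After this change, a query written for Spark 3.1 that names its `TRANSFORM` inputs fails to parse, so the aliases have to be dropped; the script's output columns are still named in the `USING ... AS (...)` clause. The Scala sketch below is a hypothetical, text-level migration helper (`TransformAliasMigration`, `splitTopLevel`, and `stripInputAliases` are illustrative names, not part of Spark). It assumes a single-line input list with no string literals containing commas or parentheses, and it only removes explicit `AS <identifier>` aliases. Implicit aliases such as `b b_1` are deliberately left alone, because a form like `MAX(a) OVER w` has the same "expression, then identifier" shape and cannot be told apart without a real parser.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical migration helper (not a Spark API): removes explicit
// `AS <identifier>` aliases from a TRANSFORM input list so that a
// Spark 3.1-style input list no longer contains aliases.
object TransformAliasMigration {

  // Matches "<expr> AS <identifier>" where the identifier (optionally
  // backquoted) ends the input. Because the identifier must be last, an AS
  // nested in balanced parentheses, as in CAST(x AS STRING), is not an alias.
  private val ExplicitAlias =
    """(?i)(.*\S)\s+AS\s+`?[A-Za-z_][A-Za-z0-9_]*`?""".r

  /** Splits on commas that are not nested inside parentheses. */
  def splitTopLevel(inputs: String): List[String] = {
    val parts = ListBuffer.empty[String]
    val current = new StringBuilder
    var depth = 0
    for (ch <- inputs) ch match {
      case '(' => depth += 1; current += ch
      case ')' => depth -= 1; current += ch
      case ',' if depth == 0 =>
        parts += current.toString.trim
        current.clear()
      case _ => current += ch
    }
    parts += current.toString.trim
    parts.toList
  }

  /** Drops a trailing explicit alias from each top-level input. */
  def stripInputAliases(inputs: String): String =
    splitTopLevel(inputs)
      .map {
        case ExplicitAlias(expr) => expr // keep only the expression
        case other               => other // no explicit alias: unchanged
      }
      .mkString(", ")
}
```

For example, `stripInputAliases("b, MAX(a) AS max_a, CAST(sum(c) AS STRING)")` yields `b, MAX(a), CAST(sum(c) AS STRING)`: the `AS` inside `CAST(...)` survives because it is not followed by an identifier at the end of the input. Anything the helper cannot rewrite safely is returned unchanged, so the parser's `ParseException` remains the final check.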

```diff
@@ -77,6 +77,8 @@ license: |
 
 - In Spark 3.2, `CREATE TABLE .. LIKE ..` command can not use reserved properties. You need their specific clauses to specify them, for example, `CREATE TABLE test1 LIKE test LOCATION 'some path'`. You can set `spark.sql.legacy.notReserveProperties` to `true` to ignore the `ParseException`, in this case, these properties will be silently removed, for example: `TBLPROPERTIES('owner'='yao')` will have no effect. In Spark version 3.1 and below, the reserved properties can be used in `CREATE TABLE .. LIKE ..` command but have no side effects, for example, `TBLPROPERTIES('location'='/tmp')` does not change the location of the table but only create a headless property just like `'a'='b'`.
 
+- In Spark 3.2, `TRANSFORM` operator can't support alias in inputs. In Spark 3.1 and earlier, we can write script transform like `SELECT TRANSFORM(a AS c1, b AS c2) USING 'cat' FROM TBL`.
+
 ## Upgrading from Spark SQL 3.0 to 3.1
 
 - In Spark 3.1, statistical aggregation function includes `std`, `stddev`, `stddev_samp`, `variance`, `var_samp`, `skewness`, `kurtosis`, `covar_samp`, `corr` will return `NULL` instead of `Double.NaN` when `DivideByZero` occurs during expression evaluation, for example, when `stddev_samp` applied on a single element set. In Spark version 3.0 and earlier, it will return `Double.NaN` in such case. To restore the behavior before Spark 3.1, you can set `spark.sql.legacy.statisticalAggregate` to `true`.
```

```diff
@@ -524,9 +524,9 @@ querySpecification
 ;
 
 transformClause
-: (SELECT kind=TRANSFORM '(' setQuantifier? namedExpressionSeq ')'
-| kind=MAP setQuantifier? namedExpressionSeq
-| kind=REDUCE setQuantifier? namedExpressionSeq)
+: (SELECT kind=TRANSFORM '(' setQuantifier? expressionSeq ')'
+| kind=MAP setQuantifier? expressionSeq
+| kind=REDUCE setQuantifier? expressionSeq)
 inRowFormat=rowFormat?
 (RECORDWRITER recordWriter=STRING)?
 USING script=STRING
```

```diff
@@ -774,6 +774,10 @@ expression
 : booleanExpression
 ;
 
+expressionSeq
+: expression (',' expression)*
+;
+
 booleanExpression
 : NOT booleanExpression #logicalNot
 | EXISTS '(' query ')' #exists
```

```diff
@@ -627,6 +627,12 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg
 .map(typedVisit[Expression])
 }
 
+override def visitExpressionSeq(ctx: ExpressionSeqContext): Seq[Expression] = {
+Option(ctx).toSeq
+.flatMap(_.expression.asScala)
+.map(typedVisit[Expression])
+}
+
 /**
 * Create a logical plan using a having clause.
 */
```

```diff
@@ -680,8 +686,8 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg
 
 val plan = visitCommonSelectQueryClausePlan(
 relation,
+visitExpressionSeq(transformClause.expressionSeq),
 lateralView,
-transformClause.namedExpressionSeq,
 whereClause,
 aggregationClause,
 havingClause,
```

```diff
@@ -726,8 +732,8 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg
 
 val plan = visitCommonSelectQueryClausePlan(
 relation,
+visitNamedExpressionSeq(selectClause.namedExpressionSeq),
 lateralView,
-selectClause.namedExpressionSeq,
 whereClause,
 aggregationClause,
 havingClause,
```

```diff
@@ -740,8 +746,8 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg
 
 def visitCommonSelectQueryClausePlan(
 relation: LogicalPlan,
+expressions: Seq[Expression],
 lateralView: java.util.List[LateralViewContext],
-namedExpressionSeq: NamedExpressionSeqContext,
 whereClause: WhereClauseContext,
 aggregationClause: AggregationClauseContext,
 havingClause: HavingClauseContext,
```

```diff
@@ -753,8 +759,6 @@ class AstBuilder extends SqlBaseBaseVisitor[AnyRef] with SQLConfHelper with Logg
 // Add where.
 val withFilter = withLateralView.optionalMap(whereClause)(withWhereClause)
 
-val expressions = visitNamedExpressionSeq(namedExpressionSeq)
-
 // Add aggregation or a project.
 val namedExpressions = expressions.map {
 case e: NamedExpression => e
```

```diff
@@ -206,7 +206,7 @@ FROM script_trans
 LIMIT 1;
 
 SELECT TRANSFORM(
-b AS d5, a,
+b, a,
 CASE
 WHEN c > 100 THEN 1
 WHEN c < 100 THEN 2
```

```diff
@@ -225,45 +225,45 @@ SELECT TRANSFORM(*)
 FROM script_trans
 WHERE a <= 4;
 
-SELECT TRANSFORM(b AS d, MAX(a) as max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
 GROUP BY b;
 
-SELECT TRANSFORM(b AS d, MAX(a) FILTER (WHERE a > 3) AS max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a) FILTER (WHERE a > 3), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a,b,c)
 FROM script_trans
 WHERE a <= 4
 GROUP BY b;
 
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(sum(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(sum(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 2
 GROUP BY b;
 
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
 GROUP BY b
-HAVING max_a > 0;
+HAVING MAX(a) > 0;
 
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
 GROUP BY b
-HAVING max(a) > 1;
+HAVING MAX(a) > 1;
 
-SELECT TRANSFORM(b, MAX(a) OVER w as max_a, CAST(SUM(c) OVER w AS STRING))
+SELECT TRANSFORM(b, MAX(a) OVER w, CAST(SUM(c) OVER w AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
 WINDOW w AS (PARTITION BY b ORDER BY a);
 
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING), myCol, myCol2)
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING), myCol, myCol2)
 USING 'cat' AS (a, b, c, d, e)
 FROM script_trans
 LATERAL VIEW explode(array(array(1,2,3))) myTable AS myCol
```

```diff
@@ -280,7 +280,7 @@ FROM(
 SELECT a + 1;
 
 FROM(
-SELECT TRANSFORM(a, SUM(b) b)
+SELECT TRANSFORM(a, SUM(b))
 USING 'cat' AS (`a` INT, b STRING)
 FROM script_trans
 GROUP BY a
```

```diff
@@ -308,14 +308,6 @@ HAVING true;
 
 SET spark.sql.legacy.parser.havingWithoutGroupByAsWhere=false;
 
-SET spark.sql.parser.quotedRegexColumnNames=true;
-
-SELECT TRANSFORM(`(a|b)?+.+`)
-USING 'cat' AS (c)
-FROM script_trans;
-
-SET spark.sql.parser.quotedRegexColumnNames=false;
-
 -- SPARK-34634: self join using CTE contains transform
 WITH temp AS (
 SELECT TRANSFORM(a) USING 'cat' AS (b string) FROM t
```

```diff
@@ -331,3 +323,22 @@ SELECT TRANSFORM(ALL b, a, c)
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4;
+
+-- SPARK-35070: TRANSFORM not support alias in inputs
+SELECT TRANSFORM(b AS b_1, MAX(a), CAST(sum(c) AS STRING))
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b;
+
+SELECT TRANSFORM(b b_1, MAX(a), CAST(sum(c) AS STRING))
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b;
+
+SELECT TRANSFORM(b, MAX(a) AS max_a, CAST(sum(c) AS STRING))
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b;
```

```diff
@@ -376,7 +376,7 @@ struct<a:int,b:int>
 
 -- !query
 SELECT TRANSFORM(
-b AS d5, a,
+b, a,
 CASE
 WHEN c > 100 THEN 1
 WHEN c < 100 THEN 2
```

```diff
@@ -416,7 +416,7 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b AS d, MAX(a) as max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
```

```diff
@@ -429,7 +429,7 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b AS d, MAX(a) FILTER (WHERE a > 3) AS max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a) FILTER (WHERE a > 3), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a,b,c)
 FROM script_trans
 WHERE a <= 4
```

```diff
@@ -442,7 +442,7 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(sum(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(sum(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 2
```

```diff
@@ -454,12 +454,12 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
 GROUP BY b
-HAVING max_a > 0
+HAVING MAX(a) > 0
 -- !query schema
 struct<a:string,b:string,c:string>
 -- !query output
```

```diff
@@ -468,12 +468,12 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING))
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
 GROUP BY b
-HAVING max(a) > 1
+HAVING MAX(a) > 1
 -- !query schema
 struct<a:string,b:string,c:string>
 -- !query output
```

```diff
@@ -481,7 +481,7 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b, MAX(a) OVER w as max_a, CAST(SUM(c) OVER w AS STRING))
+SELECT TRANSFORM(b, MAX(a) OVER w, CAST(SUM(c) OVER w AS STRING))
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
```

```diff
@@ -494,7 +494,7 @@ struct<a:string,b:string,c:string>
 
 
 -- !query
-SELECT TRANSFORM(b, MAX(a) as max_a, CAST(SUM(c) AS STRING), myCol, myCol2)
+SELECT TRANSFORM(b, MAX(a), CAST(SUM(c) AS STRING), myCol, myCol2)
 USING 'cat' AS (a, b, c, d, e)
 FROM script_trans
 LATERAL VIEW explode(array(array(1,2,3))) myTable AS myCol
```

```diff
@@ -527,7 +527,7 @@ struct<(a + 1):int>
 
 -- !query
 FROM(
-SELECT TRANSFORM(a, SUM(b) b)
+SELECT TRANSFORM(a, SUM(b))
 USING 'cat' AS (`a` INT, b STRING)
 FROM script_trans
 GROUP BY a
```

```diff
@@ -600,34 +600,6 @@ struct<key:string,value:string>
 spark.sql.legacy.parser.havingWithoutGroupByAsWhere false
 
 
--- !query
-SET spark.sql.parser.quotedRegexColumnNames=true
--- !query schema
-struct<key:string,value:string>
--- !query output
-spark.sql.parser.quotedRegexColumnNames true
-
-
--- !query
-SELECT TRANSFORM(`(a|b)?+.+`)
-USING 'cat' AS (c)
-FROM script_trans
--- !query schema
-struct<c:string>
--- !query output
-3
-6
-9
-
-
--- !query
-SET spark.sql.parser.quotedRegexColumnNames=false
--- !query schema
-struct<key:string,value:string>
--- !query output
-spark.sql.parser.quotedRegexColumnNames false
-
-
 -- !query
 WITH temp AS (
 SELECT TRANSFORM(a) USING 'cat' AS (b string) FROM t
```

```diff
@@ -679,3 +651,69 @@ SELECT TRANSFORM(ALL b, a, c)
 USING 'cat' AS (a, b, c)
 FROM script_trans
 WHERE a <= 4
+
+
+-- !query
+SELECT TRANSFORM(b AS b_1, MAX(a), CAST(sum(c) AS STRING))
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b
+-- !query schema
+struct<>
+-- !query output
+org.apache.spark.sql.catalyst.parser.ParseException
+
+no viable alternative at input 'SELECT TRANSFORM(b AS'(line 1, pos 19)
+
+== SQL ==
+SELECT TRANSFORM(b AS b_1, MAX(a), CAST(sum(c) AS STRING))
+-------------------^^^
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b
+
+
+-- !query
+SELECT TRANSFORM(b b_1, MAX(a), CAST(sum(c) AS STRING))
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b
+-- !query schema
+struct<>
+-- !query output
+org.apache.spark.sql.catalyst.parser.ParseException
+
+no viable alternative at input 'SELECT TRANSFORM(b b_1'(line 1, pos 19)
+
+== SQL ==
+SELECT TRANSFORM(b b_1, MAX(a), CAST(sum(c) AS STRING))
+-------------------^^^
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b
+
+
+-- !query
+SELECT TRANSFORM(b, MAX(a) AS max_a, CAST(sum(c) AS STRING))
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b
+-- !query schema
+struct<>
+-- !query output
+org.apache.spark.sql.catalyst.parser.ParseException
+
+no viable alternative at input 'SELECT TRANSFORM(b, MAX(a) AS'(line 1, pos 27)
+
+== SQL ==
+SELECT TRANSFORM(b, MAX(a) AS max_a, CAST(sum(c) AS STRING))
+---------------------------^^^
+USING 'cat' AS (a, b, c)
+FROM script_trans
+WHERE a <= 2
+GROUP BY b
```
