### What changes were proposed in this pull request?

Currently, in DSv2, we are still using the deprecated `buildForBatch` and `buildForStreaming`.

This PR implements the `build`, `toBatch`, `toStreaming` interfaces to replace the deprecated ones.
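
The shape of the replacement interfaces can be sketched as follows. This is a minimal, self-contained model, not Spark's actual code: the traits below are simplified stand-ins for the DSv2 write interfaces, and `ConsoleWriteBuilder` is a hypothetical connector used only for illustration.

```scala
object WriteBuilderSketch {
  // Simplified stand-ins for the DSv2 write interfaces (assumption: reduced to one method each).
  trait BatchWrite { def describe: String }
  trait StreamingWrite { def describe: String }

  // A single logical write that can be turned into a batch or a streaming physical write.
  trait Write {
    def toBatch: BatchWrite
    def toStreaming: StreamingWrite
  }

  // The builder exposes one `build()` entry point instead of one method per execution mode.
  trait WriteBuilder { def build(): Write }

  // Hypothetical connector: one Write object serves both execution modes.
  final class ConsoleWriteBuilder(table: String) extends WriteBuilder {
    override def build(): Write = new Write {
      override def toBatch: BatchWrite = new BatchWrite {
        override def describe: String = s"batch write to $table"
      }
      override def toStreaming: StreamingWrite = new StreamingWrite {
        override def describe: String = s"streaming write to $table"
      }
    }
  }
}
```

The caller decides which physical write it needs only after `build()` has returned, which is why a single builder method is enough.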

### Why are the changes needed?

Code refactor

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #32497 from linhongliu-db/dsv2-writer.

Lead-authored-by: Linhong Liu <linhong.liu@databricks.com>

Co-authored-by: Linhong Liu <67896261+linhongliu-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This pull request proposes a new API that lets streaming sources signal that they can report metrics. As a first use case, the Kafka micro-batch stream reports how many offsets the current offset falls behind the latest one.

A public interface is added.

`metrics`: returns the metrics reported by the streaming source with given offset.
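
As a rough illustration of the Kafka use case, the lag between the latest available offsets and the consumed offsets could be summarized as below. This is a sketch under assumptions: `Partition` is a hypothetical stand-in for a topic partition, and the metric key names are illustrative rather than taken from this description.

```scala
object OffsetLagSketch {
  // Hypothetical stand-in for a topic partition identifier.
  final case class Partition(topic: String, id: Int)

  /** Summarizes how far the consumed offsets fall behind the latest available offsets. */
  def lagMetrics(
      latest: Map[Partition, Long],
      consumed: Map[Partition, Long]): Map[String, String] = {
    // Only partitions known on both sides can be compared; negative lags are clamped to 0.
    val lags = consumed.toSeq.collect {
      case (p, offset) if latest.contains(p) => math.max(latest(p) - offset, 0L)
    }
    if (lags.isEmpty) {
      Map.empty
    } else {
      Map(
        "minOffsetsBehindLatest" -> lags.min.toString,
        "maxOffsetsBehindLatest" -> lags.max.toString,
        "avgOffsetsBehindLatest" -> (lags.sum.toDouble / lags.size).toString)
    }
  }
}
```

Returning the values as strings keeps the result easy to embed in a progress report, whatever the numeric type of each metric.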

### Why are the changes needed?

The new API can expose any custom metrics for the "current" offset of a streaming source. Unlike #31398, this PR makes the metrics available to users through the progress report, not through the Spark UI. A typical use case is that people want to know how far the current offset falls behind the latest offset.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests for the Kafka micro-batch source v2 are added to cover the Kafka use case.

Closes #31944 from yijiacui-db/SPARK-34297.

Authored-by: Yijia Cui <yijia.cui@databricks.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

This PR proposes a new JDBC option, `refreshKrb5Config`, which allows changes to `krb5.conf` to be picked up.

### Why are the changes needed?

In the current master, JDBC datasources can't accept `refreshKrb5Config` which is defined in `Krb5LoginModule`.

So even if we change the `krb5.conf` after establishing a connection, the change will not be reflected.

A similar issue occurs when we run multiple `*KrbIntegrationSuites` at the same time.

`MiniKDC` starts and stops for every Kerberos integration suite, and a different port number is written to `krb5.conf` each time.

Because `SecureConnectionProvider.JDBCConfiguration` doesn't take `refreshKrb5Config`, every suite except the first one to run sees the wrong port, so those suites fail.

You can confirm this with the following command.

```

build/sbt -Phive -Phive-thriftserver -Pdocker-integration-tests "testOnly org.apache.spark.sql.jdbc.*KrbIntegrationSuite"

```

### Does this PR introduce _any_ user-facing change?

Yes. Users can set `refreshKrb5Config` to refresh the krb5-related configuration.

### How was this patch tested?

New test.

Closes #32344 from sarutak/kerberos-refresh-issue.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

This is a re-proposal of https://github.com/apache/spark/pull/23163. Currently, Spark always requires a [local sort](https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala#L188) before writing to an output table with dynamic partition/bucket columns. The sort can be unnecessary if the cardinality of the partition/bucket values is small, and it can be avoided by keeping multiple output writers open concurrently.

This PR introduces a config, `spark.sql.maxConcurrentOutputFileWriters` (whose default value disables the feature), with which users can tune the maximum number of concurrent writers. The config is needed because we cannot keep an arbitrary number of writers in task memory, which can cause OOM (especially for the Parquet/ORC vectorized writers).

The feature first uses concurrent writers to write rows. If the number of writers exceeds the limit specified by the above config, the remaining rows are sorted and written one by one (see `DynamicPartitionDataConcurrentWriter.writeWithIterator()`).
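
The two-phase strategy can be modeled in a few lines. This is a simplified sketch, not the code of `DynamicPartitionDataConcurrentWriter`: rows are hypothetical `(partitionValue, payload)` pairs, "writers" are just entries in an in-memory map, and `maxWriters` plays the role of the config value.

```scala
import scala.collection.mutable

object ConcurrentWriterSketch {
  // A row is modeled as (partitionValue, payload); both are stand-ins for real rows.
  type Row = (String, String)

  /**
   * Writes rows using at most `maxWriters` concurrently open writers.
   * Returns the rows written per partition and whether the sort fallback was used.
   */
  def write(rows: Iterator[Row], maxWriters: Int): (Map[String, Vector[String]], Boolean) = {
    val output = mutable.Map.empty[String, Vector[String]]
    val openWriters = mutable.Set.empty[String]
    var sorted = false
    val buffered = rows.buffered

    // Phase 1: keep one open writer per partition value until the limit would be exceeded.
    while (buffered.hasNext && !sorted) {
      val key = buffered.head._1
      if (openWriters.contains(key) || openWriters.size < maxWriters) {
        val (k, v) = buffered.next()
        openWriters += k
        output(k) = output.getOrElse(k, Vector.empty) :+ v
      } else {
        sorted = true
      }
    }

    // Phase 2: sort the remaining rows so that each partition's rows arrive contiguously
    // and can be handled by a single writer at a time.
    if (sorted) {
      buffered.toVector.sortBy(_._1).foreach { case (k, v) =>
        output(k) = output.getOrElse(k, Vector.empty) :+ v
      }
    }
    (output.toMap, sorted)
  }
}
```

With a limit of 0, the first row already exceeds the limit, so the model degenerates to the always-sort behavior.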

In addition, the interface `WriteTaskStatsTracker` and its implementation `BasicWriteTaskStatsTracker` are changed, because they previously relied on the assumption that only one writer is active at a time when writing dynamic partitions and bucketed tables.

### Why are the changes needed?

Avoid the sort before writing output for dynamic partitioned query and bucketed table.

Help improve CPU and IO performance for these queries.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added unit test in `DataFrameReaderWriterSuite.scala`.

Closes #32198 from c21/writer.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch moves the DS v2 custom metric classes to the `org.apache.spark.sql.connector.metric` package. It also moves `CustomAvgMetric` and `CustomSumMetric` to that package and makes them public Java abstract classes.

### Why are the changes needed?

`CustomAvgMetric` and `CustomSumMetric` should be public APIs for developers to extend. As there are a few metric classes, we should put them together in one package.
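
To show why abstract base classes are convenient for connector developers, here is a minimal sketch of the idea. The `Metric` trait and both base classes are simplified stand-ins written for this example, not Spark's actual classes, and `BytesWritten` and `RowsPerTask` are hypothetical metrics.

```scala
import java.text.{DecimalFormat, DecimalFormatSymbols}
import java.util.Locale

object CustomMetricSketch {
  // Simplified stand-in for a custom metric contract (assumption, not Spark's interface).
  trait Metric {
    def name: String
    def description: String
    // Combines the per-task values into the string shown to the user.
    def aggregateTaskMetrics(taskMetrics: Array[Long]): String
  }

  // Base class that reports the sum over all tasks.
  abstract class SumMetric extends Metric {
    override def aggregateTaskMetrics(taskMetrics: Array[Long]): String =
      taskMetrics.sum.toString
  }

  // Base class that reports the average over all tasks with two decimals.
  abstract class AvgMetric extends Metric {
    override def aggregateTaskMetrics(taskMetrics: Array[Long]): String =
      if (taskMetrics.isEmpty) {
        "0"
      } else {
        // A fixed locale keeps the decimal separator stable across environments.
        val format = new DecimalFormat("#0.00", DecimalFormatSymbols.getInstance(Locale.ROOT))
        format.format(taskMetrics.sum.toDouble / taskMetrics.length)
      }
  }

  // Hypothetical connector metrics: only the name and description need to be supplied.
  final class BytesWritten extends SumMetric {
    override def name: String = "bytesWritten"
    override def description: String = "number of bytes written"
  }

  final class RowsPerTask extends AvgMetric {
    override def name: String = "rowsPerTask"
    override def description: String = "average number of rows per task"
  }
}
```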

### Does this PR introduce _any_ user-facing change?

No, dev only and they are not released yet.

### How was this patch tested?

Unit tests.

Closes #32348 from viirya/move-custom-metric-classes.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to upgrade Kafka client to 2.8.0.

Note that Kafka 2.8.0 uses ZSTD JNI 1.4.9-1 like Apache Spark 3.2.0.

### Why are the changes needed?

This will bring the latest client-side improvement and bug fixes like the following examples.

- KAFKA-10631 ProducerFencedException is not Handled on Offest Commit

- KAFKA-10134 High CPU issue during rebalance in Kafka consumer after upgrading to 2.5

- KAFKA-12193 Re-resolve IPs when a client is disconnected

- KAFKA-10090 Misleading warnings: The configuration was supplied but isn't a known config

- KAFKA-9263 The new hw is added to incorrect log when ReplicaAlterLogDirsThread is replacing log

- KAFKA-10607 Ensure the error counts contains the NONE

- KAFKA-10458 Need a way to update quota for TokenBucket registered with Sensor

- KAFKA-10503 MockProducer doesn't throw ClassCastException when no partition for topic

**RELEASE NOTE**

- https://downloads.apache.org/kafka/2.8.0/RELEASE_NOTES.html

- https://downloads.apache.org/kafka/2.7.0/RELEASE_NOTES.html

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs with the existing tests because this is a dependency change.

Closes #32325 from dongjoon-hyun/SPARK-33913.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch proposes to add a couple of metrics in scan node for Kafka batch streaming query.

### Why are the changes needed?

When testing SS, I found it hard to track data loss when SS reads from Kafka. The micro-batch scan node has only one metric, the number of output rows. Users have no idea how many of the offsets to fetch are out of range in Kafka, or how many times data loss happens. These metrics are important for users to judge the quality of a running SS query.

### Does this PR introduce _any_ user-facing change?

Yes, adding two metrics to micro batch scan node for Kafka batch streaming.

### How was this patch tested?

So far I have tested this on an internal cluster with Kafka:

<img width="1193" alt="Screen Shot 2021-04-22 at 7 16 29 PM" src="https://user-images.githubusercontent.com/68855/115808460-61bf8100-a39f-11eb-99a9-65d22c3f5fb0.png">

I tried to add a unit test, but our batch streaming query does not allow specifying ending offsets. If I only specify an out-of-range starting offset, any negative-size range is filtered out when we get the offset ranges in `getRanges`. So a unit test cannot actually exercise the case of fetching a non-existent offset.

Closes #31398 from viirya/micro-batch-metrics.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

This PR fixes a test failure in `OracleIntegrationSuite`.

After SPARK-34843 (#31965), the way partitions are divided changed, and `OracleIntegrationSuite` is affected.

```

[info] - SPARK-22814 support date/timestamp types in partitionColumn *** FAILED *** (230 milliseconds)

[info] Set(""D" < '2018-07-11' or "D" is null", ""D" >= '2018-07-11' AND "D" < '2018-07-15'", ""D" >= '2018-07-15'") did not equal Set(""D" < '2018-07-10' or "D" is null", ""D" >= '2018-07-10' AND "D" < '2018-07-14'", ""D" >= '2018-07-14'") (OracleIntegrationSuite.scala:448)

[info] Analysis:

[info] Set(missingInLeft: ["D" < '2018-07-10' or "D" is null, "D" >= '2018-07-10' AND "D" < '2018-07-14', "D" >= '2018-07-14'], missingInRight: ["D" < '2018-07-11' or "D" is null, "D" >= '2018-07-11' AND "D" < '2018-07-15', "D" >= '2018-07-15'])

```

### Why are the changes needed?

To follow the previous change.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

The modified test.

Closes #32186 from sarutak/fix-oracle-date-error.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

One of the main performance bottlenecks in query compilation is overly-generic tree transformation methods, namely `mapChildren` and `withNewChildren` (defined in `TreeNode`). These methods have an overly-generic implementation to iterate over the children and rely on reflection to create new instances. We have observed that, especially for queries with large query plans, a significant number of CPU cycles are wasted in these methods. In this PR we make these methods more efficient by delegating the iteration and instantiation to concrete node types. The benchmarks show that we can expect a significant improvement in total query compilation time: 30-80% for queries with large query plans, and about 20% on average.

#### Problem detail

The `mapChildren` method in `TreeNode` is overly generic and costly. To be more specific, this method:

- iterates over all the fields of a node using Scala’s product iterator. While the iteration is not reflection-based, thanks to the Scala compiler generating code for `Product`, we create many anonymous functions and visit many nested structures (recursive calls).

The anonymous functions (presumably compiled to Java anonymous inner classes) also show up quite high on the list in the object allocation profiles, so we are putting unnecessary pressure on GC here.

- does a lot of comparisons. Basically, for each element returned from the product iterator, we check whether it is a child (contained in the list of children) and then transform it. We could avoid that by iterating over the children only, but the current implementation needs to gather all the fields (transforming only the children) so that it can instantiate the object using reflection.

- creates objects using reflection, by delegating to the `makeCopy` method, which is several orders of magnitude slower than using the constructor.

#### Solution

The proposed solution in this PR is rather straightforward: we rewrite the `mapChildren` method using the `children` and `withNewChildren` methods. The default `withNewChildren` method suffers from the same problems as `mapChildren`, so we need to make it more efficient by specializing it in concrete classes. Just as each concrete query plan node already defines its children, it should also define how it can be constructed given a new list of children. The implementation is quite simple in most cases: a one-liner, thanks to the `copy` method present in Scala case classes. Note that we cannot abstract over the `copy` method, because the compiler generates it for case classes only if no other type higher in the hierarchy defines it. For most concrete nodes, the implementation of `withNewChildren` looks like this:

```

override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = copy(children = newChildren)

```

The current `withNewChildren` method has two properties that we should preserve:

- It returns the same instance if the provided children are the same as its children, i.e., it preserves referential equality.

- It copies tags and maintains the origin links when a new copy is created.

These properties are hard to enforce in the concrete node type implementations. Therefore, we propose a template method `withNewChildrenInternal` that concrete classes implement, and let the `withNewChildren` method take care of referential equality and copying:

```

override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = {
  if (childrenFastEquals(children, newChildren)) {
    this
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildrenInternal(newChildren)
      res.copyTagsFrom(this)
      res
    }
  }
}

```
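
The template-method idea can also be tried out in isolation. The following is a self-contained miniature, not Catalyst code: `Node`, `Leaf` and `Add` are hypothetical types, and tag copying and origin tracking are left out to keep the sketch short.

```scala
object TreeNodeSketch {
  // Minimal stand-in for a tree node; tags and origin tracking are omitted.
  sealed trait Node {
    def children: Seq[Node]

    // Template method: handles the shared fast path, then delegates construction.
    final def withNewChildren(newChildren: Seq[Node]): Node = {
      val unchanged = children.length == newChildren.length &&
        children.zip(newChildren).forall { case (a, b) => a eq b }
      // Returning `this` preserves referential equality when nothing changed.
      if (unchanged) this else withNewChildrenInternal(newChildren)
    }

    // Each concrete node knows how to rebuild itself without reflection.
    protected def withNewChildrenInternal(newChildren: Seq[Node]): Node

    final def mapChildren(f: Node => Node): Node = withNewChildren(children.map(f))
  }

  final case class Leaf(value: Int) extends Node {
    override def children: Seq[Node] = Nil
    override protected def withNewChildrenInternal(newChildren: Seq[Node]): Node = this
  }

  final case class Add(left: Node, right: Node) extends Node {
    override def children: Seq[Node] = Seq(left, right)
    // The compiler-generated `copy` does the construction in one line.
    override protected def withNewChildrenInternal(newChildren: Seq[Node]): Node =
      copy(left = newChildren(0), right = newChildren(1))
  }
}
```

Mapping with the identity function returns the very same instance, while a real change produces a new node through `copy` rather than reflection.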

With the refactoring done in a previous PR (https://github.com/apache/spark/pull/31932) most tree node types fall in one of the categories of `Leaf`, `Unary`, `Binary` or `Ternary`. These traits have a more efficient implementation for `mapChildren` and define a more specialized version of `withNewChildrenInternal` that avoids creating unnecessary lists. For example, the `mapChildren` method in `UnaryLike` is defined as follows:

```

override final def mapChildren(f: T => T): T = {
  val newChild = f(child)
  if (newChild fastEquals child) {
    this.asInstanceOf[T]
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildInternal(newChild)
      res.copyTagsFrom(this.asInstanceOf[T])
      res
    }
  }
}

```

#### Results

With this PR, we have observed significant performance improvements in query compilation time, more specifically in the analysis and optimization phases. The table below shows the TPC-DS queries that had more than 25% speedup in compilation times. Biggest speedups are observed in queries with large query plans.

| Query | Speedup |
| ----- | ------- |
| q4    | 29% |
| q9    | 81% |
| q14a  | 31% |
| q14b  | 28% |
| q22   | 33% |
| q33   | 29% |
| q34   | 25% |
| q39   | 27% |
| q41   | 27% |
| q44   | 26% |
| q47   | 28% |
| q48   | 76% |
| q49   | 46% |
| q56   | 26% |
| q58   | 43% |
| q59   | 46% |
| q60   | 50% |
| q65   | 59% |
| q66   | 46% |
| q67   | 52% |
| q69   | 31% |
| q70   | 30% |
| q96   | 26% |
| q98   | 32% |

#### Binary incompatibility

Changing `withNewChildren` in `TreeNode` breaks binary compatibility for code compiled against older versions of Spark, because all concrete `TreeNode` subclasses are now expected to implement the `withNewChildrenInternal` method. This is a problem, for example, when users write custom expressions. We still consider this change the right choice, since it forces all expressions newly added to Catalyst to implement the method efficiently and prevents future regressions.

Please note that we have not completely removed the old implementation; we renamed it to `legacyWithNewChildren`. This method helps the transition for now and will be removed in the future. There are expressions, such as `UpdateFields`, that have a complex way of defining children. Writing `withNewChildren` for them requires refactoring the expression. For now, these expressions use the old, slow method. A future PR will address these expressions.

### Does this PR introduce _any_ user-facing change?

This PR does not introduce user-facing changes but may break binary compatibility for code compiled against older versions. See the binary incompatibility section.

### How was this patch tested?

This PR is mainly a refactoring and passes existing tests.

Closes #32030 from dbaliafroozeh/ImprovedMapChildren.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR aims to add zstandard codec to the `AvroOptions.compression` comment.

### Why are the changes needed?

SPARK-34479 added zstandard codec.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

N/A

Closes #32050 from williamhyun/avro.

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

https://github.com/apache/spark/pull/32015 added a way to run benchmarks much more easily in the same GitHub Actions build. This PR updates the benchmark results using that mechanism.

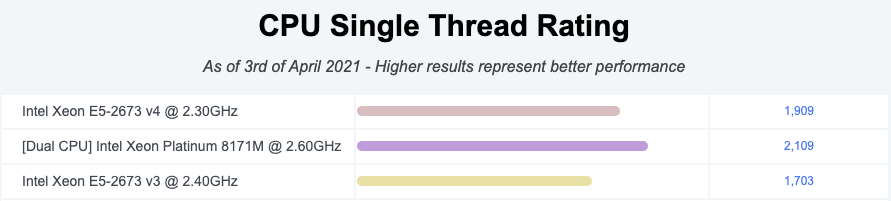

**NOTE**: based on my observations, GitHub Actions appears to use four types of CPU:

- Intel(R) Xeon(R) Platinum 8171M CPU 2.60GHz

- Intel(R) Xeon(R) CPU E5-2673 v4 2.30GHz

- Intel(R) Xeon(R) CPU E5-2673 v3 2.40GHz

- Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz

Based on some quick research, they seem to perform roughly similarly.

I couldn't find enough information about the Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz, but its performance seems roughly similar given the numbers.

So it shouldn't be a big deal, especially given that this approach is much easier, encourages contributors to run the benchmarks more often, and guarantees the same number of cores and the same memory with the same software.

### Why are the changes needed?

To have a corresponding baseline for the benchmarks.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

It was generated from:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)

- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

Closes #32044 from HyukjinKwon/SPARK-34950.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Some `spark-submit` commands for running benchmarks in the user's guide are wrong; benchmarks cannot be run successfully with them.

So the major change of this PR is to correct these commands. For example, to run a benchmark that inherits from `SqlBasedBenchmark`, we must specify `--jars <spark core test jar>,<spark catalyst test jar>`, because a `SqlBasedBenchmark`-based benchmark extends `BenchmarkBase` (defined in the spark core test jar) and `SQLHelper` (defined in the spark catalyst test jar).

Another change in this PR removes the `scalatest Assertions` dependency from the benchmarks: `scalatest-*.jar` is not in the distribution package, so depending on it is troublesome.

### Why are the changes needed?

Make sure benchmarks can run using spark-submit cmd described in the guide

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Use the corrected `spark-submit` commands to run benchmarks successfully.

Closes #31995 from LuciferYang/fix-benchmark-guide.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This bug was introduced by SPARK-30428 at Apache Spark 3.0.0.

This PR fixes `FileScan.equals()`.

### Why are the changes needed?

- Without this fix `FileScan.equals` doesn't take `fileIndex` and `readSchema` into account.

- Partition filters and data filters added to `FileScan` (in #27112 and #27157) caused the canonicalized forms of some `BatchScanExec` nodes not to match, which prevents some reuse opportunities.
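
The two points above boil down to which fields take part in the equality check and how filters are compared. The sketch below is a simplified model, not the actual `FileScan` code: `Scan` is a hypothetical class whose fields are plain collections, and sets are used as a stand-in for order-insensitive, normalized filter comparison.

```scala
object ScanEqualitySketch {
  // Hypothetical, simplified scan description; the field types are stand-ins for Spark's.
  final class Scan(
      val fileIndex: Seq[String],
      val readSchema: Seq[String],
      val partitionFilters: Set[String],
      val dataFilters: Set[String]) {

    // Two scans are interchangeable only if they read the same files with the same schema
    // and apply the same filters (compared here regardless of order).
    override def equals(other: Any): Boolean = other match {
      case that: Scan =>
        fileIndex == that.fileIndex &&
          readSchema == that.readSchema &&
          partitionFilters == that.partitionFilters &&
          dataFilters == that.dataFilters
      case _ => false
    }

    // hashCode must agree with equals, so it covers the same fields.
    override def hashCode(): Int =
      (fileIndex, readSchema, partitionFilters, dataFilters).hashCode()
  }
}
```

Leaving `fileIndex` or `readSchema` out of such a check would make scans over different data look identical, which is the kind of incorrect reuse this fix guards against.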

### Does this PR introduce _any_ user-facing change?

Yes. Before this fix, incorrect reuse of `FileScan`, and therefore of `BatchScanExec`, could have happened, causing correctness issues.

### How was this patch tested?

Added new UTs.

Closes #31848 from peter-toth/SPARK-34756-fix-filescan-equality-check.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Update the Avro version to 1.10.2

### Why are the changes needed?

To stay up to date with upstream and catch compatibility issues with zstd

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit tests

Closes #31866 from iemejia/SPARK-27733-upgrade-avro-1.10.2.

Authored-by: Ismaël Mejía <iemejia@gmail.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

Consistently correct the spelling of `PushedFilters`

### Why are the changes needed?

bersprockets noted that it's wrong

### Does this PR introduce _any_ user-facing change?

Technically, I think it does. Practically, neither Google nor GitHub show anyone using `pushedFilers` outside of forks (or the discussion about fixing it started at https://github.com/apache/spark/pull/30323#issuecomment-725568719)

### How was this patch tested?

None beyond CI in the previous PR

Closes #30678 from jsoref/spelling-filters.

Authored-by: Josh Soref <jsoref@users.noreply.github.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR aims to update `PostgresIntegrationSuite` with the latest results.

### Why are the changes needed?

The latest PostgreSQL jar version is 42.2.19. Since 42.2.9, the test is broken because it returns `0.0` instead of `0.00`.

- https://jdbc.postgresql.org/documentation/changelog.html#version_42.2.19

42.2.9 (2019-12-06)

42.2.10 (2020-01-30)

42.2.11 (2020-03-09)

42.2.12 (2020-03-31)

42.2.13 (2020-06-04)

42.2.14 (2020-06-10)

42.2.15 (2020-08-14)

42.2.16 (2020-08-20)

42.2.17 (2020-10-09)

42.2.18 (2020-10-15)

42.2.19 (2021-02-18)

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CI with the updated test cases.

```

build/sbt -Pdocker-integration-tests 'docker-integration-tests/testOnly org.apache.spark.sql.jdbc.PostgresIntegrationSuite'

```

Closes #31910 from williamhyun/pg.

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

This PR aims to exclude `zstd-jni` transitive dependency from kafka-client.

### Why are the changes needed?

To prevent future conflicts, the following dependencies are removed. We should use Spark's zstd-jni dependency consistently.

```

$ build/sbt "token-provider-kafka-0-10/dependencyTree" | grep zstd

[info] | +-com.github.luben:zstd-jni:1.4.4-7

$ build/sbt "streaming-kafka-0-10/dependencyTree" | grep zstd

[info] | +-com.github.luben:zstd-jni:1.4.4-7

[info] | | +-com.github.luben:zstd-jni:1.4.4-7

$ build/sbt "sql-kafka-0-10/dependencyTree" | grep zstd

[info] | +-com.github.luben:zstd-jni:1.4.4-7

[info] | | +-com.github.luben:zstd-jni:1.4.4-7

```

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #31767 from dongjoon-hyun/SPARK-34650.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/31636. There was one place missed in `GangliaSink`, and we should also remove `SecurityManager`.

### Why are the changes needed?

To make `GangliaSink` work.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

It was found in internal integration tests at the company I work for.

Closes #31688 from HyukjinKwon/SPARK-34520-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Avro has supported the zstandard codec since AVRO-2195. This PR adds the zstandard codec to the Avro compression codec list.

### Why are the changes needed?

To make Avro support zstandard codec.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #31673 from wangyum/SPARK-34479.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Move the datetime rebase SQL configs from the `legacy` namespace by:

1. Renaming the existing rebase configs, e.g. `spark.sql.legacy.parquet.datetimeRebaseModeInRead` -> `spark.sql.parquet.datetimeRebaseModeInRead`.

2. Adding the legacy configs as alternatives.

3. Deprecating the legacy rebase configs.
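
How a renamed config with a legacy alternative can be resolved is sketched below. This is a minimal model under assumptions, not Spark's `SQLConf` implementation: `Entry`, `resolve` and `deprecatedKeysInUse` are hypothetical names, and the lookup order (new key, then alternatives, then default) is the assumption this sketch illustrates.

```scala
object ConfigAlternativeSketch {
  // Hypothetical, minimal model of a config entry with deprecated alternative keys.
  final case class Entry(key: String, alternatives: Seq[String], default: String)

  val rebaseModeInRead: Entry = Entry(
    key = "spark.sql.parquet.datetimeRebaseModeInRead",
    alternatives = Seq("spark.sql.legacy.parquet.datetimeRebaseModeInRead"),
    default = "EXCEPTION")

  /** Resolves the value: the new key wins, then any alternative key, then the default. */
  def resolve(settings: Map[String, String], entry: Entry): String =
    settings.get(entry.key)
      .orElse(entry.alternatives.collectFirst { case k if settings.contains(k) => settings(k) })
      .getOrElse(entry.default)

  /** Lists deprecated keys that are set, so that a caller can log a warning. */
  def deprecatedKeysInUse(settings: Map[String, String], entry: Entry): Seq[String] =
    entry.alternatives.filter(settings.contains)
}
```

With this shape, existing jobs that still set the legacy key keep working, while the deprecation check gives them a reason to migrate.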

### Why are the changes needed?

The rebasing SQL configs like `spark.sql.legacy.parquet.datetimeRebaseModeInRead` can be used not only for migration from previous Spark versions but also to read/write datetime columns saved by other systems/frameworks/libs. So, the configs shouldn't be considered legacy configs.

### Does this PR introduce _any_ user-facing change?

Should not. Users will see a warning if they still use one of the legacy configs.

### How was this patch tested?

1. Manually checking new configs:

```scala

scala> spark.conf.get("spark.sql.parquet.datetimeRebaseModeInRead")

res0: String = EXCEPTION

scala> spark.conf.set("spark.sql.legacy.parquet.datetimeRebaseModeInRead", "LEGACY")

21/02/17 14:57:10 WARN SQLConf: The SQL config 'spark.sql.legacy.parquet.datetimeRebaseModeInRead' has been deprecated in Spark v3.2 and may be removed in the future. Use 'spark.sql.parquet.datetimeRebaseModeInRead' instead.

scala> spark.conf.get("spark.sql.parquet.datetimeRebaseModeInRead")

res2: String = LEGACY

```

2. By running a datetime rebasing test suite:

```

$ build/sbt "test:testOnly *ParquetRebaseDatetimeV1Suite"

```

Closes #31576 from MaxGekk/rebase-confs-alternatives.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR changes the type mapping for `money` and `money[]` types for PostgreSQL.

Currently, Spark tries to convert those types to `DoubleType` and `ArrayType` of `double`, respectively.

But the JDBC driver does not seem to handle those types properly.

https://github.com/pgjdbc/pgjdbc/issues/100

https://github.com/pgjdbc/pgjdbc/issues/1405

Due to these issues, we can get errors like the following.

money type.

```

[info] org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0) (192.168.1.204 executor driver): org.postgresql.util.PSQLException: Bad value for type double : 1,000.00

[info] at org.postgresql.jdbc.PgResultSet.toDouble(PgResultSet.java:3104)

[info] at org.postgresql.jdbc.PgResultSet.getDouble(PgResultSet.java:2432)

[info] at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$makeGetter$5(JdbcUtils.scala:418)

```

money[] type.

```

[info] org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0) (192.168.1.204 executor driver): org.postgresql.util.PSQLException: Bad value for type double : $2,000.00

[info] at org.postgresql.jdbc.PgResultSet.toDouble(PgResultSet.java:3104)

[info] at org.postgresql.jdbc.ArrayDecoding$5.parseValue(ArrayDecoding.java:235)

[info] at org.postgresql.jdbc.ArrayDecoding$AbstractObjectStringArrayDecoder.populateFromString(ArrayDecoding.java:122)

[info] at org.postgresql.jdbc.ArrayDecoding.readStringArray(ArrayDecoding.java:764)

[info] at org.postgresql.jdbc.PgArray.buildArray(PgArray.java:310)

[info] at org.postgresql.jdbc.PgArray.getArrayImpl(PgArray.java:171)

[info] at org.postgresql.jdbc.PgArray.getArray(PgArray.java:111)

```

For the money type, a known workaround is to treat it as a string, so this PR does that.

For money[], however, there is no reasonable workaround, so this PR removes the support.
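
Once money values arrive as strings, callers who need a number have to parse them explicitly. The helper below is a hypothetical sketch, not part of this PR: `parseUsMoney` is an invented name, and it assumes US-style formatting (`$` sign, `,` as thousands separator, `.` as decimal separator, leading `-` for negative values).

```scala
object MoneySketch {
  /** Parses a money string such as "$1,000.00", assuming US-style formatting. */
  def parseUsMoney(text: String): BigDecimal = {
    val trimmed = text.trim
    val negative = trimmed.startsWith("-")
    // Drop the currency sign and thousands separators; keep digits and the decimal point.
    val digits = trimmed.filter(c => c.isDigit || c == '.')
    val value = BigDecimal(digits)
    if (negative) -value else value
  }
}
```

For example, `MoneySketch.parseUsMoney("$1,000.00")` yields `1000.00`, whereas calling `"1,000.00".toDouble` directly throws a `NumberFormatException`, which mirrors the driver error shown in the stack traces.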

### Why are the changes needed?

This is a bug.

### Does this PR introduce _any_ user-facing change?

Yes. Once this PR is merged, the money type is mapped to `StringType` rather than `DoubleType`, and support for money[] is dropped.

For the money type, a value of less than one thousand, `$100.00` for instance, works without this change, so I also updated the migration guide because this is a behavior change for such small values.

On the other hand, money[] does not seem to work with any value, but it is mentioned in the migration guide just in case.

### How was this patch tested?

New test.

Closes #31442 from sarutak/fix-for-money-type.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

Added option to provide Avro schema by URL.

### Why are the changes needed?

(copied from Jira ticket)

We have a use case in which we read a huge table in Avro format, with about 30k columns.

Using the default Hive reader, `AvroGenericRecordReader`, it just hangs forever: after 4 hours not even one task has finished.

We tried instead to use `spark.read.format("com.databricks.spark.avro").load(..)` but we failed on:

```

org.apache.spark.sql.AnalysisException: Found duplicate column(s) in the data schema

..

at org.apache.spark.sql.util.SchemaUtils$.checkColumnNameDuplication(SchemaUtils.scala:85)

at org.apache.spark.sql.util.SchemaUtils$.checkColumnNameDuplication(SchemaUtils.scala:67)

at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:421)

at org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:239)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:227)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:174)

... 53 elided

```

because the files' schemas contain duplicate column names (when compared case-insensitively).

So we wanted to provide a user schema with non-duplicated fields, but the schema is huge, a few MBs, and it is not practical to provide it in JSON format.

So we patched spark-avro to accept `avroSchemaUrl` in addition to `avroSchema`, and it worked perfectly.

### How was this patch tested?

Added a unit test to AvroSuite and tested locally with the patched version.

Closes #31543 from uzadude/avro_schema.

Authored-by: oraviv <oraviv@paypal.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In this PR, I propose a new option, `datetimeRebaseMode`, for the Avro datasource. The option affects the loading of ancient date and timestamp column values from Avro files.

The option supports the same values as the SQL config `spark.sql.legacy.avro.datetimeRebaseModeInRead`, namely:

- `"LEGACY"`, when an option is set to this value, Spark rebases dates/timestamps from the legacy hybrid calendar (Julian + Gregorian) to the Proleptic Gregorian calendar.

- `"CORRECTED"`, dates/timestamps are read AS IS from avro files.

- `"EXCEPTION"`, when it is set as an option value, Spark will fail the reading if it sees ancient dates/timestamps that are ambiguous between the two calendars.

### Why are the changes needed?

1. The new option allows loading Avro files from at least two sources in different rebasing modes in the same query. For instance:

```scala

val df1 = spark.read.option("datetimeRebaseMode", "legacy").format("avro").load(folder1)

val df2 = spark.read.option("datetimeRebaseMode", "corrected").format("avro").load(folder2)

df1.join(df2, ...)

```

Before the changes, this is impossible because the SQL config `spark.sql.legacy.avro.datetimeRebaseModeInRead` affects both reads.

2. Mixing of Dataset/DataFrame and RDD APIs should become possible. Since SQL configs are not propagated through RDDs, the following code fails on ancient timestamps:

```scala

spark.conf.set("spark.sql.legacy.avro.datetimeRebaseModeInRead", "legacy")

spark.read.format("avro").load(folder).distinct.rdd.collect()

```

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

By running the modified test suites:

```
$ build/sbt "test:testOnly *AvroV1Suite"
$ build/sbt "test:testOnly *AvroV2Suite"
```

Closes #31529 from MaxGekk/avro-rebase-options.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes the issue that `PostgresDialect` can't handle arrays of some types.

Though PostgreSQL supports a wide range of types (https://www.postgresql.org/docs/13/datatype.html), the current `PostgresDialect` can't handle arrays of the following types.

* xml

* tsvector

* tsquery

* macaddr

* macaddr8

* txid_snapshot

* pg_snapshot

* point

* line

* lseg

* box

* path

* polygon

* circle

* pg_lsn

* bit varying

* interval

NOTE: PostgreSQL doesn't implement arrays of serial types, so this PR doesn't cover them.

### Why are the changes needed?

To provide better support with PostgreSQL.

### Does this PR introduce _any_ user-facing change?

Yes. `PostgresDialect` can handle arrays of the types listed above.

### How was this patch tested?

New test.

Closes #31419 from sarutak/postgres-array-types.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR replaces `withTimeZone` defined and used in `OracleIntegrationSuite` with `DateTimeTestUtils.withDefaultTimeZone` which is defined as a utility method.
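For readers unfamiliar with this kind of helper, the following is a minimal sketch of what a "run a block under a different default time zone" utility typically looks like. It is a hypothetical stand-in written for illustration, not the actual code of `DateTimeTestUtils.withDefaultTimeZone`, and its `String` parameter is an assumption.

```scala
import java.util.TimeZone

// Hypothetical stand-in for a helper like `DateTimeTestUtils.withDefaultTimeZone`:
// switch the JVM default time zone for the duration of `block`, then restore it.
def withDefaultTimeZone[T](zoneId: String)(block: => T): T = {
  val original = TimeZone.getDefault
  try {
    TimeZone.setDefault(TimeZone.getTimeZone(zoneId))
    block
  } finally {
    // Always restore the previous default, even if `block` throws.
    TimeZone.setDefault(original)
  }
}

val before = TimeZone.getDefault.getID
val inside = withDefaultTimeZone("UTC")(TimeZone.getDefault.getID)
val after = TimeZone.getDefault.getID
```

Because the restore happens in `finally`, a failing test body does not leak the changed time zone into later tests.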

### Why are the changes needed?

Both methods are semantically the same so it might be better to use the utility one.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

`OracleIntegrationSuite` passes.

Closes #31465 from sarutak/oracle-timezone-util.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR added tests for some non-array types in PostgreSQL.

PostgreSQL supports a wide range of types (https://www.postgresql.org/docs/13/datatype.html), and `PostgresIntegrationSuite` contains tests for some of them, but tests for the following types are missing.

* bit varying

* point

* line

* lseg

* box

* path

* polygon

* circle

* pg_lsn

* macaddr

* macaddr8

* numeric

* pg_snapshot

* real

* time

* timestamp

* tsquery

* tsvector

* txid_snapshot

* xml

NOTE: Handling money types can be buggy so this PR doesn't add tests for those types.

### Why are the changes needed?

To ensure those types work well with Spark.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Extended `PostgresIntegrationSuite`.

Closes #31456 from sarutak/test-for-some-types-postgresql.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR proposes to expose the total number of paths in `Utils.buildLocationMetadata()`, at the cost of slightly more space (around 10+ chars).

Suppose only the first 2 of 5 paths fit within the threshold; the outputs before and after the change are as follows:

* before the change: `[path1, path2]`

* after the change: `(5 paths)[path1, path2, ...]`
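The format above can be sketched as follows. This is only an illustration under stated assumptions: `formatLocations` and its `maxChars` parameter are hypothetical names, the threshold is assumed to count path characters only, and whether the count prefix also appears when nothing is truncated is not stated above (this sketch omits it in that case).

```scala
// Hypothetical sketch: keep paths while they fit within `maxChars`,
// and prefix the total count when some paths are left out.
def formatLocations(paths: Seq[String], maxChars: Int): String = {
  var used = 0
  // Take paths until adding the next one would exceed the threshold.
  val shown = paths.takeWhile { p =>
    used += p.length
    used <= maxChars
  }
  if (shown.length == paths.length) {
    shown.mkString("[", ", ", "]")
  } else {
    // Expose the total so readers can tell that truncation happened.
    s"(${paths.length} paths)" + (shown :+ "...").mkString("[", ", ", "]")
  }
}

val all = Seq("path1", "path2", "path3", "path4", "path5")
val truncated = formatLocations(all, maxChars = 10)
val complete = formatLocations(all.take(2), maxChars = 10)
```

With a threshold of 10 characters only `path1` and `path2` fit, so `truncated` carries both the `(5 paths)` prefix and the trailing `...` marker, while `complete` is rendered without either.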

### Why are the changes needed?

SPARK-31793 silently truncates the paths, so end users can't tell how many paths are truncated, or even whether paths are truncated at all.

### Does this PR introduce _any_ user-facing change?

Yes, the location metadata will also indicate how many paths are truncated (not shown), instead of truncating them silently.

### How was this patch tested?

Modified UTs

Closes #31464 from HeartSaVioR/SPARK-34339.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

For user-experience reasons (Spark users found it confusing that `java.sql.Time` uses milliseconds while Spark uses microseconds, and users lost useful functions such as `hour()` and `minute()` on the column), we have decided to revert to using `TimestampType`. This time, however, we enforce that the hour is consistent across system time zones (via offset manipulation) and fix the date part to the zero epoch.

The full discussion with Wenchen Fan regarding this ticket is here: https://github.com/apache/spark/pull/30902#discussion_r569186823

### Why are the changes needed?

To revert and improve the handling of `java.sql.Time`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit tests and integration tests

Closes #31473 from saikocat/SPARK-34357.

Authored-by: Hoa <hoameomu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Improve the error messages for incompatibilities between Avro and Catalyst schemas. First, make `AvroSerializer` more similar to `AvroDeserializer` in printing out contextual information such as hierarchical field names. Standardize exception messages in both serializer and deserializer to always include such contextual information, and include a top-level exception which shows the full schemas which were being parsed when the incompatibility was found. Both now print out the hierarchical name for both the Avro and Catalyst fields, since they may be different due to case sensitivity and Avro union handling.

### Why are the changes needed?

The error messages in this type of failure scenario are very lacking in information on the write path (`AvroSerializer`). Below are two examples of messages that provide insufficient information to determine what went wrong (lacking in field names, context about the overall schema structure, etc.).

```
org.apache.spark.sql.avro.IncompatibleSchemaException: Cannot convert Catalyst type IntegerType to Avro type "float".

org.apache.spark.sql.avro.IncompatibleSchemaException: Cannot convert Catalyst type StructType(StructField(bar,IntegerType,true)) to Avro type {"type":"record","name":"test","fields":[{"name":"NOTbar","type":["null","int"],"default":null}]}.
```

The error messages currently existing in `AvroDeserializer` are much better, but still not very internally consistent, and it would be better if they were consistent with the newly added exception messages in `AvroSerializer`.

### Does this PR introduce _any_ user-facing change?

Error messages for incompatibilities between Avro and Catalyst schemas will be greatly improved when writing Avro data using the `avroSchema` option, slightly improved when reading Avro data, and much more consistent between the two.

Below is an example of a new message. See `AvroSerdeSuite` for more examples.

```
org.apache.spark.sql.avro.IncompatibleSchemaException: Cannot convert Catalyst type STRUCT<`foo`: STRUCT<`bar`: INT>> to Avro type {"type":"record","name":"top","fields":[{"name":"foo","type":"int"}]}
    at org.apache.spark.sql.avro.AvroSerializer.liftedTree1$1(AvroSerializer.scala:83)
    ...
Caused by: org.apache.spark.sql.avro.IncompatibleSchemaException: Cannot convert Catalyst field 'foo' to Avro field 'foo' because schema is incompatible (sqlType = STRUCT<`bar`: INT>, avroType = "int")
    at org.apache.spark.sql.avro.AvroSerializer.newConverter(AvroSerializer.scala:230)
    ...
```

### How was this patch tested?

New unit test suite, `AvroSerdeSuite`, was added to test corner cases on `AvroSerializer` and `AvroDeserializer` and verify that the exception messages are as expected. Existing tests in `AvroSuite` also continue to pass, with modifications in places where assertions were made about the exceptions that would be thrown.

Closes #31333 from xkrogen/xkrogen-SPARK-34182-avro-serde-errormessages.

Authored-by: Erik Krogen <xkrogen@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Change `AvroSuite."Ignore corrupt Avro file if flag IGNORE_CORRUPT_FILES"` to use `episodesAvro`, which is loaded as a resource using the classloader, instead of trying to read `episodes.avro` directly from a relative file path.

### Why are the changes needed?

This is the proper way to read resource files, and currently this test will fail when called from my IntelliJ IDE, though it will succeed when called from Maven/sbt, presumably due to different working directory handling.

### Does this PR introduce _any_ user-facing change?

No, unit test only.

### How was this patch tested?

Previous failure from IntelliJ:

```
Source 'src/test/resources/episodes.avro' does not exist
java.io.FileNotFoundException: Source 'src/test/resources/episodes.avro' does not exist
    at org.apache.commons.io.FileUtils.checkFileRequirements(FileUtils.java:1405)
    at org.apache.commons.io.FileUtils.copyFile(FileUtils.java:1072)
    at org.apache.commons.io.FileUtils.copyFile(FileUtils.java:1040)
    at org.apache.spark.sql.avro.AvroSuite.$anonfun$new$34(AvroSuite.scala:397)
    at org.apache.spark.sql.avro.AvroSuite.$anonfun$new$34$adapted(AvroSuite.scala:388)
```

Now it succeeds.

Closes #31332 from xkrogen/xkrogen-SPARK-34231-avrosuite-testfix.

Authored-by: Erik Krogen <xkrogen@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Using a function like `.mkString` or `.getLines` directly on a `scala.io.Source` opened by `fromFile`, `fromURL`, or `fromURI` will leak the underlying file handle. This PR uses the `Utils.tryWithResource` method to wrap each `BufferedSource` and ensure it is closed.
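The pattern can be sketched as follows. The `tryWithResource` defined here is a local stand-in written for this example; Spark's own `Utils.tryWithResource` is not imported, so its exact signature should be treated as an assumption. The temporary file exists only to make the example self-contained.

```scala
import java.nio.file.Files
import scala.io.Source

// Local stand-in for a loan-pattern helper; not Spark's actual `Utils.tryWithResource`.
def tryWithResource[R <: AutoCloseable, T](createResource: => R)(f: R => T): T = {
  val resource = createResource
  try f(resource) finally resource.close()
}

// Write a small temporary file so the example is self-contained.
val tmp = Files.createTempFile("source-demo", ".txt")
Files.write(tmp, "line1\nline2".getBytes("UTF-8"))

// Leaky: the BufferedSource returned by fromFile is never closed.
// val leaked = Source.fromFile(tmp.toFile, "UTF-8").mkString

// Safe: the source is closed even if reading throws.
val text = tryWithResource(Source.fromFile(tmp.toFile, "UTF-8"))(_.mkString)
Files.delete(tmp)
```

On Scala 2.13 and later, `scala.util.Using` offers a comparable standard-library construct; a hand-written helper like the one above also works on 2.12.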

### Why are the changes needed?

Avoid file handle leak.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass Jenkins or GitHub Actions.

Closes #31323 from LuciferYang/source-not-closed.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to fix Avro data source to use the decimal precision and scale of file schema.

### Why are the changes needed?

The decimal value should be interpreted with its original precision and scale. Otherwise, it returns an incorrect result like the following. The schema mismatch happens when we use `userSpecifiedSchema`, when there are multiple files with inconsistent schemas, or when the HiveMetastore schema is updated by the user.

```scala
scala> sql("SELECT 3.14 a").write.format("avro").save("/tmp/avro")

scala> spark.read.schema("a DECIMAL(4, 3)").format("avro").load("/tmp/avro").show
+-----+
|    a|
+-----+
|0.314|
+-----+
```
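The wrong value is what one would expect if the stored unscaled integer is reinterpreted with a different scale. The sketch below illustrates only that arithmetic with `java.math.BigDecimal`; it assumes `3.14` was written with scale 2 (unscaled value 314) and is not the Avro reader code itself.

```scala
import java.math.{BigDecimal => JBigDecimal, BigInteger}

// Assumption: the file stores 3.14 as the unscaled integer 314 with scale 2.
val unscaled = BigInteger.valueOf(314)

// Correct: interpret the stored value with the scale from the file schema.
val withFileScale = new JBigDecimal(unscaled, 2)

// Incorrect: interpret the same value with the scale of the user-specified DECIMAL(4, 3).
val withUserScale = new JBigDecimal(unscaled, 3)
```

Here `withFileScale` is `3.14`, while `withUserScale` is `0.314`, matching the incorrect output shown above.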

### Does this PR introduce _any_ user-facing change?

Yes, this will return the correct result.

### How was this patch tested?

Pass the CI with the newly added test case.

Closes #31329 from dongjoon-hyun/SPARK-34229.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Make the field name matching between Avro and Catalyst schemas, on both the reader and writer paths, respect the global SQL settings for case sensitivity (i.e. case-insensitive by default). `AvroSerializer` and `AvroDeserializer` share a common utility in `AvroUtils` to search for an Avro field to match a given Catalyst field.

### Why are the changes needed?

Spark SQL is normally case-insensitive (by default), but currently when `AvroSerializer` and `AvroDeserializer` perform matching between Catalyst schemas and Avro schemas, the matching is done in a case-sensitive manner. So for example the following will fail:

```scala
val avroSchema =
  """
    |{
    |  "type" : "record",
    |  "name" : "test_schema",
    |  "fields" : [
    |    {"name": "foo", "type": "int"},
    |    {"name": "BAR", "type": "int"}
    |  ]
    |}
  """.stripMargin
val df = Seq((1, 3), (2, 4)).toDF("FOO", "bar")
df.write.option("avroSchema", avroSchema).format("avro").save(savePath)
```

The same is true on the read path: if we assume `testAvro` has been written using the schema above, the following will fail to match the fields:

```scala
// Reads start from the SparkSession (`spark.read`), not from a DataFrame.
spark.read.schema(new StructType().add("FOO", IntegerType).add("bar", IntegerType))
  .format("avro").load(testAvro)
```
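The intended matching behavior can be sketched with a small lookup function. `findAvroField` is a hypothetical name and not the actual utility in `AvroUtils`; treating multiple case-insensitive candidates as an error is also an assumption of this sketch.

```scala
// Hypothetical helper: find the Avro field that matches a Catalyst field name,
// honoring a case-sensitivity flag (case-insensitive mirrors Spark SQL's default).
def findAvroField(
    avroFieldNames: Seq[String],
    catalystName: String,
    caseSensitive: Boolean): Option[String] = {
  val matches =
    if (caseSensitive) avroFieldNames.filter(_ == catalystName)
    else avroFieldNames.filter(_.equalsIgnoreCase(catalystName))
  matches match {
    case Seq(single) => Some(single)
    case Seq() => None
    // Assumption: more than one candidate (e.g. "foo" and "FOO") is ambiguous.
    case multiple => throw new IllegalArgumentException(
      s"Ambiguous match for '$catalystName': ${multiple.mkString(", ")}")
  }
}

val avroFields = Seq("foo", "BAR")
val insensitive = findAvroField(avroFields, "FOO", caseSensitive = false)
val sensitive = findAvroField(avroFields, "FOO", caseSensitive = true)
```

With case-insensitive matching, Catalyst field `FOO` resolves to Avro field `foo`; with case-sensitive matching it finds nothing, which corresponds to the failure described above.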

### Does this PR introduce _any_ user-facing change?

When reading Avro data, or writing Avro data using the `avroSchema` option, field matching will be performed with case sensitivity respecting the global SQL settings.

### How was this patch tested?

New tests added to `AvroSuite` to validate the case sensitivity logic in an end-to-end manner through the SQL engine.

Closes #31201 from xkrogen/xkrogen-SPARK-34133-avro-serde-casesensitivity-errormessages.

Authored-by: Erik Krogen <xkrogen@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes the following integration suites to reflect the change of SPARK-33888.

* PostgresIntegrationSuite

* MySQLIntegrationSuite

* MsSqlServerIntegrationSuite

* DB2IntegrationSuite

### Why are the changes needed?

Those suites currently don't pass on the `master` branch.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Those suites pass.

Closes #31274 from sarutak/fix-integration-suites.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch uses the available offset range obtained while polling Kafka records to perform the offset validation check.

### Why are the changes needed?

We have supported non-consecutive offsets for Kafka since 2.4.0. In `fetchRecord`, we validate an offset by checking whether it is in the available offset range. But currently we obtain the latest available offset range to do the check. This does not look correct, because the available offset range can change during the batch, so it may differ from the range at the time we polled the records from Kafka.

It is possible that an offset is valid when polling but, by the time we do the above check, is outside the latest available offset range. We will then wrongly treat it as a data loss case and fail the query or drop the record.
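The race can be illustrated with a toy model. The `OffsetRange` case class, the half-open range semantics, and all numbers below are made up for illustration; this is not the `fetchRecord` code.

```scala
// Hypothetical model of an available offset range: [earliest, latest).
final case class OffsetRange(earliest: Long, latest: Long) {
  def contains(offset: Long): Boolean = offset >= earliest && offset < latest
}

// Range captured at the moment the records were polled.
val rangeAtPoll = OffsetRange(earliest = 100L, latest = 200L)
// Range fetched later in the batch; older offsets have since been removed.
val rangeNow = OffsetRange(earliest = 150L, latest = 250L)

val polledOffset = 120L
// Validating against the range seen while polling accepts the record.
val validAtPoll = rangeAtPoll.contains(polledOffset)
// Validating against the newer range would misreport it as data loss.
val validNow = rangeNow.contains(polledOffset)
```

Checking against `rangeAtPoll` keeps the validation consistent with the data that was actually returned by the poll.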

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

This should pass existing unit tests.

It is hard to write a unit test for this because the Kafka producer and consumer are asynchronous. Furthermore, the test would also need to push the offset out of the new available offset range.

Closes #31275 from viirya/SPARK-34187.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR fixes the issue that reading tables which contain spatial datatypes from MS SQL Server fails.

MS SQL Server supports two non-standard spatial JDBC types, `geometry` and `geography`, but Spark SQL can't handle them:

```
java.sql.SQLException: Unrecognized SQL type -157
    at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.getCatalystType(JdbcUtils.scala:251)
    at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$getSchema$1(JdbcUtils.scala:321)
    at scala.Option.getOrElse(Option.scala:189)
    at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.getSchema(JdbcUtils.scala:321)
    at org.apache.spark.sql.execution.datasources.jdbc.JDBCRDD$.resolveTable(JDBCRDD.scala:63)
    at org.apache.spark.sql.execution.datasources.jdbc.JDBCRelation$.getSchema(JDBCRelation.scala:226)
    at org.apache.spark.sql.execution.datasources.jdbc.JdbcRelationProvider.createRelation(JdbcRelationProvider.scala:35)
    at org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:364)
    at org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:366)
    at org.apache.spark.sql.DataFrameReader.$anonfun$load$2(DataFrameReader.scala:355)
    at scala.Option.getOrElse(Option.scala:189)
    at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:355)
    at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:240)
    at org.apache.spark.sql.DataFrameReader.jdbc(DataFrameReader.scala:381)
```

Considering what the [data type mapping](https://docs.microsoft.com/ja-jp/sql/connect/jdbc/using-basic-data-types?view=sql-server-ver15) says, I think those spatial types can be mapped to Catalyst's `BinaryType`.

### Why are the changes needed?

To provide better support.

### Does this PR introduce _any_ user-facing change?

Yes. MS SQL Server users can use `geometry` and `geography` types in datasource tables.

### How was this patch tested?

New test case added to `MsSqlServerIntegrationSuite`.

Closes #31283 from sarutak/mssql-spatial-types.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes the regression bug brought by SPARK-33888 (#30902).

After that PR was merged, `PostgresDialect#getCatalystType` throws an exception for array types.

```
[info] - Type mapping for various types *** FAILED *** (551 milliseconds)
[info] java.util.NoSuchElementException: key not found: scale
[info] at scala.collection.immutable.Map$EmptyMap$.apply(Map.scala:106)
[info] at scala.collection.immutable.Map$EmptyMap$.apply(Map.scala:104)
[info] at org.apache.spark.sql.types.Metadata.get(Metadata.scala:111)
[info] at org.apache.spark.sql.types.Metadata.getLong(Metadata.scala:51)
[info] at org.apache.spark.sql.jdbc.PostgresDialect$.getCatalystType(PostgresDialect.scala:43)
[info] at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.getSchema(JdbcUtils.scala:321)
```

### Why are the changes needed?

To fix the regression bug.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed the test case `SPARK-22291: Conversion error when transforming array types of uuid, inet and cidr to StingType in PostgreSQL` in `PostgresIntegrationSuite` passed.

I also confirmed that all the `v2.*IntegrationSuite` suites pass, because this PR changed them.

Closes #31262 from sarutak/fix-postgres-dialect-regression.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Update Avro dependency to version 1.10.1

### Why are the changes needed?

To pick up multiple improvements in Avro as well as fixes for security issues in transitive dependencies.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Since no API changes were required, we just ran the existing tests.

Closes #31232 from iemejia/SPARK-27733-avro-upgrade.

Authored-by: Ismaël Mejía <iemejia@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR is the 3rd try to upgrade Scala 2.12.x in order to see the feasibility.

- https://github.com/apache/spark/pull/27929 (Upgrade Scala to 2.12.11, wangyum)

- https://github.com/apache/spark/pull/30940 (Upgrade Scala to 2.12.12, viirya)

The `silencer` library is updated accordingly. A Kafka version upgrade is also required, because otherwise it fails as follows.

```
[info] KafkaDataConsumerSuite:
[info] org.apache.spark.streaming.kafka010.KafkaDataConsumerSuite *** ABORTED *** (1 second, 580 milliseconds)
[info] java.lang.NoClassDefFoundError: scala/math/Ordering$$anon$7
[info] at kafka.api.ApiVersion$.orderingByVersion(ApiVersion.scala:45)
```

### Why are the changes needed?

Apache Spark was stuck on 2.12.10 due to the regression in Scala 2.12.11 and 2.12.12. This upgrade will bring all the bug fixes.

- https://github.com/scala/scala/releases/tag/v2.12.13

- https://github.com/scala/scala/releases/tag/v2.12.12

- https://github.com/scala/scala/releases/tag/v2.12.11

### Does this PR introduce _any_ user-facing change?

Yes, but this is a bug-fix release.

### How was this patch tested?

Pass the CIs.

Closes #31223 from dongjoon-hyun/SPARK-31168.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR:

1. switches Spark to use shaded Hadoop clients, namely hadoop-client-api and hadoop-client-runtime, for Hadoop 3.x.

2. upgrades the built-in version for Hadoop 3.x to Hadoop 3.2.2.

Note that for Hadoop 2.7, we'll still use the same modules such as hadoop-client.

In order to still keep default Hadoop profile to be hadoop-3.2, this defines the following Maven properties:

```
hadoop-client-api.artifact
hadoop-client-runtime.artifact
hadoop-client-minicluster.artifact
```

which default to:

```
hadoop-client-api
hadoop-client-runtime
hadoop-client-minicluster
```

but all switch to `hadoop-client` when the Hadoop profile is hadoop-2.7. A side effect of this is that we'll import the same dependency multiple times. For this reason I have to disable the Maven enforcer rule `banDuplicatePomDependencyVersions`.

Besides above, there are the following changes:

- explicitly add a few dependencies which are imported via transitive dependencies from Hadoop jars, but are removed from the shaded client jars.

- removed the use of `ProxyUriUtils.getPath` from `ApplicationMaster` which is a server-side/private API.

- modified `IsolatedClientLoader` to exclude `hadoop-auth` jars when Hadoop version is 3.x. This change should only matter when we're not sharing Hadoop classes with Spark (which is _mostly_ used in tests).

### Why are the changes needed?

Hadoop 3.2.2 is released with new features and bug fixes, so it's good for the Spark community to adopt it. However, the latest Hadoop versions, starting from Hadoop 3.2.1, have upgraded to use Guava 27+. To resolve Guava conflicts, this PR switches to the shaded client jars provided by Hadoop. This also has the benefit of avoiding pulling in other third-party dependencies from the Hadoop side, which helps avoid further conflicts in the future.

### Does this PR introduce _any_ user-facing change?

When people use Spark with the `hadoop-provided` option, they should make sure the class path contains the `hadoop-client-api` and `hadoop-client-runtime` jars. In addition, they may need to make sure these jars appear before other Hadoop jars in the class path order. Otherwise, classes may be loaded from the other, non-shaded Hadoop jars and cause potential conflicts.

### How was this patch tested?

Relying on existing tests.

Closes #30701 from sunchao/test-hadoop-3.2.2.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Some local variables are declared as `var` but are never reassigned, so they should be declared as `val`. This PR changes these from `var` to `val`, except for `mockito`-related cases.

### Why are the changes needed?

Use `val` instead of `var` when possible.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass Jenkins or GitHub Actions.

Closes #31142 from LuciferYang/SPARK-33346.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Some redundant collection conversions can be removed. For version compatibility, this PR cleans them up with the Scala 2.13 profile.

### Why are the changes needed?

Remove redundant collection conversions.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

- Pass Jenkins or GitHub Actions

- Manual tests of `core`, `graphx`, `mllib`, `mllib-local`, `sql`, `yarn`, and `kafka-0-10` with Scala 2.13 passed

Closes #31125 from LuciferYang/SPARK-34068.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

`HadoopDelegationTokenManager.isServiceEnabled` is quite a time-consuming operation, and it is called often in `KafkaTokenUtil.needTokenUpdate`, which slowed down Kafka processing heavily. SPARK-33635 changed the `if` condition to overcome this issue when no delegation token is used, but the problem still exists when a delegation token is used. In this PR I'm caching the `HadoopDelegationTokenManager.isServiceEnabled` result in the `KafkaDataConsumer` instances, which solves the issue. Another solution would be to cache the result inside `HadoopDelegationTokenManager`, but since it's an object function and several applications may run inside one JVM, different `SparkConf` instances will arrive. Caching the result per `SparkConf` instance would be overkill.
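A minimal sketch of per-instance caching follows. Everything here is a stand-in: `isServiceEnabled` is a local stub with a call counter, `CachingConsumer` is a hypothetical class, and using `lazy val` is one possible way to cache, not necessarily the one used in `KafkaDataConsumer`.

```scala
// Stand-in for an expensive check such as `HadoopDelegationTokenManager.isServiceEnabled`;
// the counter only exists to show how often it runs.
var expensiveCalls = 0
def isServiceEnabled(serviceName: String): Boolean = {
  expensiveCalls += 1
  serviceName == "kafka"
}

// Hypothetical consumer: the result is computed once per instance and reused.
class CachingConsumer {
  private lazy val tokenServiceEnabled: Boolean = isServiceEnabled("kafka")
  def needTokenUpdate(): Boolean = tokenServiceEnabled // further checks omitted
}

val consumer = new CachingConsumer
val results = (1 to 1000).map(_ => consumer.needTokenUpdate())
```

The expensive check runs once for the instance regardless of how many times `needTokenUpdate()` is called; a new consumer instance would evaluate it again.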

### Why are the changes needed?

Kafka stream processing is slow with delegation token.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

* Existing unit tests

* In a Kafka-to-Kafka live query, I've double-checked that the `HadoopDelegationTokenManager.isServiceEnabled` call is executed only when a new `KafkaDataConsumer` is created (a new delegation token arrives or a task fails).

Closes #31154 from gaborgsomogyi/SPARK-34090.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR is basically a followup of https://github.com/apache/spark/pull/14332.

Calling `map` alone might leave it unexecuted due to lazy evaluation, e.g.:

```
scala> val foo = Seq(1,2,3)
foo: Seq[Int] = List(1, 2, 3)

scala> foo.map(println)
1
2
3
res0: Seq[Unit] = List((), (), ())

scala> foo.view.map(println)
res1: scala.collection.SeqView[Unit,Seq[_]] = SeqViewM(...)

scala> foo.view.foreach(println)
1
2
3
```

It is better to use `foreach` to make sure it's executed when the output is unused or `Unit`.

### Why are the changes needed?

To prevent the potential issues by not executing `map`.

### Does this PR introduce _any_ user-facing change?

No, the current code does not appear to cause any problems for now.

### How was this patch tested?

I found these items by running the IntelliJ inspection, double-checked them one by one, and fixed them. Ideally, these should be all the instances across the codebase.

Closes #31110 from HyukjinKwon/SPARK-34059.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

The Kafka delegation token is obtained with `AdminClient`, where security settings can be set. The keystore and truststore types, however, can't be set. In this PR I've added these new configurations. This can be useful when the type is different from the default. A good example is making Spark FIPS compliant, where the default JKS is not accepted.

### Why are the changes needed?

Missing configurations.

### Does this PR introduce _any_ user-facing change?

Yes, adding 2 additional config parameters.

### How was this patch tested?

Existing + modified unit tests + simple Kafka to Kafka app on cluster.

Closes #31070 from gaborgsomogyi/SPARK-34032.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to adjust the order of the checks in `KafkaTokenUtil.needTokenUpdate` so that short-circuiting applies to the non-delegation-token cases (insecure, and secured without delegation token), which substantially remedies the performance regression.
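The effect of reordering can be sketched with two stub checks. The function names, the `tokenIdentifier` key, and the counters are all hypothetical and exist only to show how `&&` skips the expensive side; they do not reflect the real conditions in `KafkaTokenUtil.needTokenUpdate`.

```scala
// Counters stand in for the cost of each check; all names here are hypothetical.
var cheapChecks = 0
var expensiveChecks = 0

def usesDelegationToken(params: Map[String, String]): Boolean = {
  cheapChecks += 1
  params.contains("tokenIdentifier") // made-up key, for illustration only
}

def isTokenServiceEnabled(): Boolean = {
  expensiveChecks += 1
  true
}

// Cheap check first: `&&` short-circuits, so the expensive check is skipped
// whenever no delegation token is in use.
def needTokenUpdate(params: Map[String, String]): Boolean =
  usesDelegationToken(params) && isTokenServiceEnabled()

val withoutToken = needTokenUpdate(Map("bootstrap.servers" -> "host:9092"))
```

In the non-delegation-token case only the cheap check runs, which is the path the reordering is meant to speed up.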

### Why are the changes needed?

There's a serious performance regression between Spark 2.4 and Spark 3.0 on the read path of the Kafka data source.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually ran a reproducer (https://github.com/codegorillauk/spark-kafka-read, modified to just count instead of writing to a Kafka topic) while measuring the time.

> the branch applying the change with adding measurement

https://github.com/HeartSaVioR/spark/commits/debug-SPARK-33635-v3.0.1

> the branch only adding measurement

https://github.com/HeartSaVioR/spark/commits/debug-original-ver-SPARK-33635-v3.0.1

> the result (before the fix)

count: 10280000

Took 41.634007047 secs

21/01/06 13:16:07 INFO KafkaDataConsumer: debug ver. 17-original

21/01/06 13:16:07 INFO KafkaDataConsumer: Total time taken to retrieve: 82118 ms

> the result (after the fix)

count: 10280000

Took 7.964058475 secs

21/01/06 13:08:22 INFO KafkaDataConsumer: debug ver. 17

21/01/06 13:08:22 INFO KafkaDataConsumer: Total time taken to retrieve: 987 ms

Closes #31056 from HeartSaVioR/SPARK-33635.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch proposes to add latest offset to source progress for streaming queries.

### Why are the changes needed?

Currently we record the start and end offsets per source in the streaming progress. The latest offset is important information for a streaming query, but the progress lacks it. We can use it to track the processing lag and adjust streaming queries. We should add the latest offset to the source progress.

### Does this PR introduce _any_ user-facing change?

Yes, a new metric for the latest source offset is added to the source progress.

### How was this patch tested?

Unit test. Manually tested on a Spark cluster:

```
"description" : "KafkaV2[Subscribe[page_view_events]]",
"startOffset" : {
  "page_view_events" : {
    "2" : 582370921,
    "4" : 391910836,
    "1" : 631009201,
    "3" : 406601346,
    "0" : 195799112
  }
},
"endOffset" : {
  "page_view_events" : {
    "2" : 583764414,
    "4" : 392338002,
    "1" : 632183480,
    "3" : 407101489,
    "0" : 197304028
  }
},
"latestOffset" : {
  "page_view_events" : {
    "2" : 589852545,
    "4" : 394204277,
    "1" : 637313869,
    "3" : 409286602,
    "0" : 203878962
  }
},
"numInputRows" : 4999997,
"inputRowsPerSecond" : 29287.70501405811,
```
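As an illustration of how the new field can be used to track lag, the sketch below subtracts each partition's `endOffset` from its `latestOffset`, using the values from the progress output above. The map-based representation and the variable names are assumptions of this example; a real consumer of the progress report would parse the JSON instead.

```scala
// Offsets copied from the `endOffset` and `latestOffset` entries in the progress output above.
val endOffset = Map("0" -> 197304028L, "1" -> 632183480L, "2" -> 583764414L,
  "3" -> 407101489L, "4" -> 392338002L)
val latestOffset = Map("0" -> 203878962L, "1" -> 637313869L, "2" -> 589852545L,
  "3" -> 409286602L, "4" -> 394204277L)

// Per-partition lag: how far the processed end offset trails the latest available offset.
val lagPerPartition: Map[String, Long] =
  latestOffset.map { case (partition, latest) =>
    partition -> (latest - endOffset.getOrElse(partition, 0L))
  }

// Total lag across all partitions of the topic.
val totalLag: Long = lagPerPartition.values.sum
```

For this sample, partition `2` trails by 6088131 offsets and the topic as a whole by 21844842.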

Closes #30988 from viirya/latest-offset.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch fixes an incorrect condition when comparing offset range size and min partition config.

### Why are the changes needed?

When calculating offset ranges, we consider the `minPartitions` configuration. If `minPartitions` is not set or is less than or equal to the size of the given ranges, there are already enough partitions in Kafka, so we don't need to split offsets to satisfy the minimum partition requirement. But the current condition is `offsetRanges.size > minPartitions.get`, which is not correct. Currently `getRanges` will split offsets in cases where it is unnecessary.

Besides, in the non-split case, we can assign a preferred executor location and reuse `KafkaConsumer`. So unnecessarily splitting offset ranges misses the chance to reuse `KafkaConsumer`.
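The corrected decision can be sketched as a small predicate. `needsSplit` is a hypothetical helper name; only the comparison mirrors the rule described above (no split when `minPartitions` is unset or less than or equal to the number of ranges).

```scala
// Returns true when offset ranges must be split to reach `minPartitions`.
// Hypothetical helper; only the comparison mirrors the description above.
def needsSplit(numOffsetRanges: Int, minPartitions: Option[Int]): Boolean =
  minPartitions.exists(_ > numOffsetRanges)

val unset = needsSplit(numOffsetRanges = 3, minPartitions = None)
// The boundary case: a strict `size > minPartitions` test would not treat this as "enough".
val equal = needsSplit(numOffsetRanges = 3, minPartitions = Some(3))
val more = needsSplit(numOffsetRanges = 3, minPartitions = Some(5))
```

Only `more` requires splitting; `unset` and `equal` keep the one-range-per-partition layout, which preserves the preferred-location and consumer-reuse benefits.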

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Manual test in Spark cluster with Kafka.

Closes #30994 from viirya/ss-minor4.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This minor patch changes two variables that call `fetchEarliestOffsets` to `lazy`, because these values are not always necessary.
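The effect of `lazy` can be sketched as follows. `fetchEarliestOffsets` here is a local stub with a call counter standing in for the Kafka RPC, and `resolveStart` with its `useEarliest` flag is a hypothetical caller made up for the example.

```scala
// Counter standing in for a Kafka RPC; `fetchEarliestOffsets` here is a local stub.
var rpcCalls = 0
def fetchEarliestOffsets(): Map[Int, Long] = {
  rpcCalls += 1
  Map(0 -> 0L)
}

def resolveStart(useEarliest: Boolean): Map[Int, Long] = {
  // `lazy val` defers the call until the value is first read.
  lazy val earliest = fetchEarliestOffsets()
  if (useEarliest) earliest else Map(0 -> 42L)
}

val skipped = resolveStart(useEarliest = false)
val callsAfterSkip = rpcCalls
val fetched = resolveStart(useEarliest = true)
val callsAfterFetch = rpcCalls
```

When the branch that needs the earliest offsets is not taken, the stubbed RPC is never invoked; with a plain `val` it would run on every call.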

### Why are the changes needed?

To avoid unnecessary Kafka RPC calls.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Closes #30969 from viirya/ss-minor3.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>