## What changes were proposed in this pull request?

To drop `exprId`s for `Alias` in user-facing info, this PR adds an entry for `Alias` in `NonSQLExpression.sql`.

## How was this patch tested?

Added tests in `UDFSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #20827 from maropu/SPARK-23666.

## What changes were proposed in this pull request?

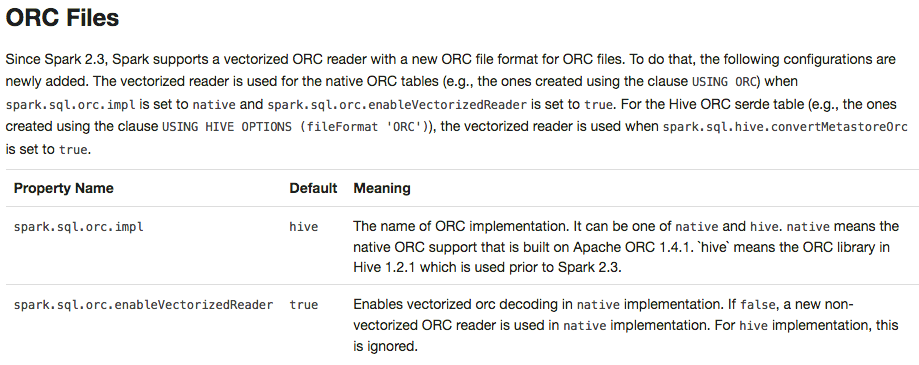

In the PR https://github.com/apache/spark/pull/20671, I forgot to update the doc about this new support.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20789 from gatorsmile/docUpdate.

## What changes were proposed in this pull request?

Below are the two cases.

Case 1

```scala
scala> List.empty[String].toDF().rdd.partitions.length
res18: Int = 1
```

When we write the above data frame as Parquet, we create a Parquet file containing just the schema of the data frame.

Case 2

```scala
scala> val anySchema = StructType(StructField("anyName", StringType, nullable = false) :: Nil)
anySchema: org.apache.spark.sql.types.StructType = StructType(StructField(anyName,StringType,false))

scala> spark.read.schema(anySchema).csv("/tmp/empty_folder").rdd.partitions.length
res22: Int = 0
```

For the second case, since the number of partitions is 0, we don't call the write task (the task has the logic to create the empty, metadata-only Parquet file).

The fix is to create a dummy single-partition RDD and set up the write task based on it to ensure the metadata-only file is written.
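The idea can be illustrated with a small, Spark-free sketch. The names `run_write_job` and `write_task` are hypothetical and only model the control flow described above; they are not Spark's actual writer code.

```python
from typing import Callable, List, Sequence


def write_task(rows: Sequence[str]) -> str:
    # A task always produces a file; with no rows it carries only the schema.
    return "metadata-only file" if not rows else f"file with {len(rows)} rows"


def run_write_job(partitions: Sequence[Sequence[str]],
                  task: Callable[[Sequence[str]], str]) -> List[str]:
    """Run one write task per partition, never zero tasks."""
    if len(partitions) == 0:
        # Hypothetical stand-in for the dummy single-partition RDD:
        # one empty partition guarantees the task runs once.
        partitions = [[]]
    return [task(rows) for rows in partitions]
```

With zero partitions the job still runs exactly one task, so the metadata-only file is produced in both cases.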

## How was this patch tested?

A new test is added to DataframeReaderWriterSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #20525 from dilipbiswal/spark-23271.

## What changes were proposed in this pull request?

This PR adds a configuration to control the fallback of Arrow optimization for `toPandas` and `createDataFrame` with Pandas DataFrame.
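As a rough illustration of what such a fallback switch controls, here is a minimal sketch in plain Python. The function `convert_with_arrow` and its parameters are hypothetical; the real PySpark code paths are more involved.

```python
import warnings


def convert_with_arrow(data, arrow_convert, plain_convert,
                       arrow_enabled, fallback_enabled):
    """Try the Arrow path first; optionally fall back to the plain path."""
    if not arrow_enabled:
        return plain_convert(data)
    try:
        return arrow_convert(data)
    except Exception as error:
        if not fallback_enabled:
            # Fallback disabled: surface the Arrow failure to the user.
            raise
        warnings.warn(
            f"Arrow optimization failed ({error}); "
            "falling back to the non-Arrow path.")
        return plain_convert(data)
```

In this model, `arrow_enabled` and `fallback_enabled` play the roles of `spark.sql.execution.arrow.enabled` and `spark.sql.execution.arrow.fallback.enabled`.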

## How was this patch tested?

Manually tested and unit tests added.

You can test this by:

**`createDataFrame`**

```python
spark.conf.set("spark.sql.execution.arrow.enabled", False)
pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)
spark.createDataFrame(pdf, "a: map<string, int>")
```

```python
spark.conf.set("spark.sql.execution.arrow.enabled", False)
pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)
spark.createDataFrame(pdf, "a: map<string, int>")
```

**`toPandas`**

```python
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)
spark.createDataFrame([[{'a': 1}]]).toPandas()
```

```python
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)
spark.createDataFrame([[{'a': 1}]]).toPandas()
```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20678 from HyukjinKwon/SPARK-23380-conf.

## What changes were proposed in this pull request?

Apache Spark 2.3 introduced `native` ORC support with vectorization and many fixes. However, it shipped as a non-default option. This PR enables the `native` ORC implementation and predicate pushdown by default for Apache Spark 2.4. We will improve and stabilize the ORC data source before Apache Spark 2.4. Eventually, Apache Spark will drop the old Hive-based ORC code.

## How was this patch tested?

Pass the Jenkins with existing tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20634 from dongjoon-hyun/SPARK-23456.

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes the ORC implementation to `hive` by default, like Spark 2.2.X.

Users can still enable `native` ORC. ORC PPD is also restored to `false`, like Spark 2.2.X.

## How was this patch tested?

Pass all test cases.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/19579 introduces a behavior change. We need to document it in the migration guide.

## How was this patch tested?

Also update the HiveExternalCatalogVersionsSuite to verify it.

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20606 from gatorsmile/addMigrationGuide.

## What changes were proposed in this pull request?

This PR aims to explicitly specify the supported types in Pandas UDFs.

The main change here is to add deduplicated, explicit type checking of `returnType` up front, along with documenting it; however, it also happened to fix multiple things.

1. Currently, we don't support `BinaryType` in Pandas UDFs, for example, see:

```python
from pyspark.sql.functions import pandas_udf
pudf = pandas_udf(lambda x: x, "binary")
df = spark.createDataFrame([[bytearray(1)]])
df.select(pudf("_1")).show()
```

```
...
TypeError: Unsupported type in conversion to Arrow: BinaryType
```

We can document this behaviour in the guide.

2. Also, the grouped aggregate Pandas UDF fails fast on `ArrayType`, but it seems we can support this case.

```python
from pyspark.sql.functions import pandas_udf, PandasUDFType
foo = pandas_udf(lambda v: v.mean(), 'array<double>', PandasUDFType.GROUPED_AGG)
df = spark.range(100).selectExpr("id", "array(id) as value")
df.groupBy("id").agg(foo("value")).show()
```

```
...
NotImplementedError: ArrayType, StructType and MapType are not supported with PandasUDFType.GROUPED_AGG
```

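3. Since we can check the return type ahead of time, we can fail fast before actual execution.

```python
from pyspark.sql.functions import pandas_udf
from pyspark.sql.types import BinaryType
# we can fail fast at this stage because we know the schema ahead of time
pandas_udf(lambda x: x, BinaryType())
```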

## How was this patch tested?

Manually tested and unit tests for `BinaryType` and `ArrayType(...)` were added.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20531 from HyukjinKwon/pudf-cleanup.

## What changes were proposed in this pull request?

This PR proposes to disallow the default value `None` for `value` when `to_replace` is not a dictionary.

It seems odd that we set the default value of `value` to `None` and ended up allowing the case below:

```python
>>> df.show()
```

```
+----+------+-----+
| age|height| name|
+----+------+-----+
|  10|    80|Alice|
...
```

```python
>>> df.na.replace('Alice').show()
```

```
+----+------+----+
| age|height|name|
+----+------+----+
|  10|    80|null|
...
```

**After**

This PR aims to disallow the case above:

```python
>>> df.na.replace('Alice').show()
```

```
...
TypeError: value is required when to_replace is not a dictionary.
```

while we still allow it when `to_replace` is a dictionary:

```python
>>> df.na.replace({'Alice': None}).show()
```

```
+----+------+----+
| age|height|name|
+----+------+----+
|  10|    80|null|
...
```
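One common way to tell "argument omitted" apart from "argument explicitly `None`" is a sentinel default. The sketch below shows the kind of check described above; `_NO_VALUE` and `check_replace_args` are hypothetical names used only for illustration, not the actual PySpark implementation.

```python
_NO_VALUE = object()  # hypothetical sentinel meaning "value was not passed"


def check_replace_args(to_replace, value=_NO_VALUE):
    """Return (to_replace, value) or raise if `value` is required but missing."""
    if value is _NO_VALUE:
        if isinstance(to_replace, dict):
            # The dictionary already carries the replacement values.
            value = None
        else:
            raise TypeError(
                "value is required when to_replace is not a dictionary.")
    return to_replace, value
```

Under this scheme an explicit `None` is still accepted as a replacement value, while omitting `value` for a non-dictionary `to_replace` fails early.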

## How was this patch tested?

Manually tested, tests were added in `python/pyspark/sql/tests.py` and doctests were fixed.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20499 from HyukjinKwon/SPARK-19454-followup.

## What changes were proposed in this pull request?

Rename the public APIs and names of pandas udfs.

- `PANDAS SCALAR UDF` -> `SCALAR PANDAS UDF`

- `PANDAS GROUP MAP UDF` -> `GROUPED MAP PANDAS UDF`

- `PANDAS GROUP AGG UDF` -> `GROUPED AGG PANDAS UDF`

## How was this patch tested?

The existing tests

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20428 from gatorsmile/renamePandasUDFs.

## What changes were proposed in this pull request?

Adding user-facing documentation for working with Arrow in Spark.

Author: Bryan Cutler <cutlerb@gmail.com>

Author: Li Jin <ice.xelloss@gmail.com>

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #19575 from BryanCutler/arrow-user-docs-SPARK-2221.

## What changes were proposed in this pull request?

Fix spelling in quick-start doc.

## How was this patch tested?

Doc only.

Author: Shashwat Anand <me@shashwat.me>

Closes #20336 from ashashwat/SPARK-23165.

## What changes were proposed in this pull request?

When there is an operation between Decimals and the result is a number which is not representable exactly with the result's precision and scale, Spark is returning `NULL`. This was done to reflect Hive's behavior, but it is against SQL ANSI 2011, which states that "If the result cannot be represented exactly in the result type, then whether it is rounded or truncated is implementation-defined". Moreover, Hive now changed its behavior in order to respect the standard, thanks to HIVE-15331.

Therefore, the PR proposes to:

- update the rules that determine the result precision and scale according to Hive's new ones, introduced in HIVE-15331;

- round the result of the operations, when it is not exactly representable with the result's precision and scale, instead of returning `NULL`;

- introduce a new config `spark.sql.decimalOperations.allowPrecisionLoss`, which defaults to `true` (i.e., the new behavior), in order to allow users to switch back to the previous one.

Hive's behavior mirrors SQL Server's. The only difference is that the precision and scale are adjusted for all arithmetic operations in Hive, while SQL Server's documentation says it does so only for multiplications and divisions. This PR follows Hive's behavior.
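To make the precision and scale adjustment more concrete, here is a minimal sketch of a Hive-style rule for multiplication. The constants (a maximum precision of 38 and a minimum adjusted scale of 6) and the function names are assumptions for illustration; they are not stated in this description, so check the linked discussion and HIVE-15331 for the authoritative rules.

```python
MAX_PRECISION = 38          # assumed maximum decimal precision
MINIMUM_ADJUSTED_SCALE = 6  # assumed lower bound kept for the fractional part


def adjust_precision_scale(precision, scale):
    """Shrink the scale when the exact result type would exceed MAX_PRECISION."""
    if precision <= MAX_PRECISION:
        return precision, scale
    int_digits = precision - scale
    # Keep the integral digits if possible, but never drop below the
    # minimum scale (or the original scale if that was already smaller).
    min_scale = min(scale, MINIMUM_ADJUSTED_SCALE)
    adjusted_scale = max(MAX_PRECISION - int_digits, min_scale)
    return MAX_PRECISION, adjusted_scale


def multiply_result_type(p1, s1, p2, s2):
    """Result type of decimal(p1, s1) * decimal(p2, s2) under this sketch."""
    return adjust_precision_scale(p1 + p2 + 1, s1 + s2)
```

Under these assumptions, multiplying two `decimal(38, 10)` values yields `decimal(38, 6)`: the scale is reduced and the value is rounded, rather than the result becoming `NULL`.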

A more detailed explanation is available here: https://mail-archives.apache.org/mod_mbox/spark-dev/201712.mbox/%3CCAEorWNAJ4TxJR9NBcgSFMD_VxTg8qVxusjP%2BAJP-x%2BJV9zH-yA%40mail.gmail.com%3E.

## How was this patch tested?

Modified and added UTs. Compared the results with those of Hive and SQL Server.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20023 from mgaido91/SPARK-22036.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18164 introduces behavior changes. We need to document them.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20234 from gatorsmile/docBehaviorChange.

## What changes were proposed in this pull request?

doc update

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #20198 from felixcheung/rrefreshdoc.

[SPARK-21786][SQL] When acquiring 'compressionCodecClassName' in 'ParquetOptions', `parquet.compression` needs to be considered.

## What changes were proposed in this pull request?

Since Hive 1.1, Hive allows users to set the Parquet compression codec via the table-level property `parquet.compression`. See the JIRA: https://issues.apache.org/jira/browse/HIVE-7858 . We already support `orc.compression` for ORC. Thus, for external users, it is more straightforward to support both. See the Stack Overflow question: https://stackoverflow.com/questions/36941122/spark-sql-ignores-parquet-compression-propertie-specified-in-tblproperties

On the Spark side, our table-level compression conf `compression` was added by #11464 in Spark 2.0.

We need to support both table-level confs. Users might also use the session-level conf `spark.sql.parquet.compression.codec`. The priority rule is as follows:

If another compression codec configuration is found through Hive or Parquet, the precedence is `compression`, `parquet.compression`, `spark.sql.parquet.compression.codec`. Acceptable values include: none, uncompressed, snappy, gzip, lzo.

The rule for Parquet is consistent with ORC after the change.

Changes:

1. Also acquire `compressionCodecClassName` from `parquet.compression`; the precedence order is `compression`, `parquet.compression`, `spark.sql.parquet.compression.codec`, just like what we do in `OrcOptions`.

2. Change `spark.sql.parquet.compression.codec` to support "none". `ParquetOptions` already supports "none" as equivalent to "uncompressed", but the session conf could not be set to "none".

3. Rename `compressionCode` to `compressionCodecClassName`.
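The precedence order above can be sketched in a few lines of plain Python. `resolve_parquet_codec` is a hypothetical helper, and the `"snappy"` default for the session conf is an assumption; only the key names, their order, and the accepted values come from the description.

```python
ACCEPTED_CODECS = {"none", "uncompressed", "snappy", "gzip", "lzo"}


def resolve_parquet_codec(table_options, session_conf):
    """Pick the codec: `compression`, then `parquet.compression`, then session conf."""
    for key in ("compression", "parquet.compression"):
        if key in table_options:
            codec = table_options[key]
            break
    else:
        # Assumed default when nothing is configured anywhere.
        codec = session_conf.get("spark.sql.parquet.compression.codec", "snappy")
    codec = codec.lower()
    if codec not in ACCEPTED_CODECS:
        raise ValueError(f"Codec [{codec}] is not available.")
    # "none" is treated as an alias of "uncompressed".
    return "uncompressed" if codec == "none" else codec
```

For example, a table that sets both `compression` and `parquet.compression` uses the former, and the session-level conf is consulted only when neither table-level option is present.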

## How was this patch tested?

Add test.

Author: fjh100456 <fu.jinhua6@zte.com.cn>

Closes #20076 from fjh100456/ParquetOptionIssue.

## What changes were proposed in this pull request?

This PR modifies `elt` to output binary for binary inputs.

`elt` in the current master always outputs data as a string. But in some databases (e.g., MySQL), if all inputs are binary, `elt` also outputs binary (also, this might be a small surprise).

This pr is related to #19977.

## How was this patch tested?

Added tests in `SQLQueryTestSuite` and `TypeCoercionSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #20135 from maropu/SPARK-22937.

## What changes were proposed in this pull request?

Currently, we do not guarantee the evaluation order of conjuncts in either the Filter or the Join operator. This is also true of mainstream RDBMS vendors like DB2 and MS SQL Server. Thus, we should also push down the deterministic predicates that come after the first non-deterministic one, if possible.
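The difference between the two strategies can be shown with a toy model in which each conjunct is a `(text, is_deterministic)` pair. Both function names are hypothetical and only illustrate the splitting logic, not the optimizer rules themselves.

```python
def split_before_first_nondeterministic(conjuncts):
    """Old behavior: push only the deterministic prefix."""
    pushed = []
    for index, (text, deterministic) in enumerate(conjuncts):
        if not deterministic:
            # Everything from here on stays where it is.
            return pushed, [t for t, _ in conjuncts[index:]]
        pushed.append(text)
    return pushed, []


def split_all_deterministic(conjuncts):
    """New behavior: push every deterministic conjunct, wherever it appears."""
    pushed = [text for text, deterministic in conjuncts if deterministic]
    kept = [text for text, deterministic in conjuncts if not deterministic]
    return pushed, kept
```

Because conjunct order carries no evaluation guarantee, moving `b = 2` past `rand() < 0.5` in a condition such as `a > 1 AND rand() < 0.5 AND b = 2` does not change the query's semantics.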

## How was this patch tested?

Updated the existing test cases.

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20069 from gatorsmile/morePushDown.

## What changes were proposed in this pull request?

This PR modifies `concat` to concatenate binary inputs into a single binary output.

`concat` in the current master always outputs data as a string. But in some databases (e.g., PostgreSQL), if all inputs are binary, `concat` also outputs binary.
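The typing rule can be modeled in plain Python, using `bytes` for binary and `str` for string. This `concat` is a hypothetical stand-in that only mirrors the described output-type decision; decoding mixed inputs as UTF-8 is an assumption of the sketch.

```python
def concat(*inputs):
    """Return bytes when every input is binary, otherwise a string."""
    if inputs and all(isinstance(v, (bytes, bytearray)) for v in inputs):
        return b"".join(bytes(v) for v in inputs)
    # Mixed or non-binary inputs: fall back to string concatenation.
    return "".join(
        v.decode("utf-8") if isinstance(v, (bytes, bytearray)) else str(v)
        for v in inputs)
```

Only the all-binary case changes its result type; as soon as one input is not binary, the output stays a string as before.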

## How was this patch tested?

Added tests in `SQLQueryTestSuite` and `TypeCoercionSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #19977 from maropu/SPARK-22771.

## What changes were proposed in this pull request?

Easy fix in the link.

## How was this patch tested?

Tested manually

Author: Mahmut CAVDAR <mahmutcvdr@gmail.com>

Closes #19996 from mcavdar/master.

## What changes were proposed in this pull request?

Update broadcast behavior changes in migration section.

## How was this patch tested?

N/A

Author: Yuming Wang <wgyumg@gmail.com>

Closes #19858 from wangyum/SPARK-22489-migration.

## What changes were proposed in this pull request?

How to reproduce:

```scala
import org.apache.spark.sql.execution.joins.BroadcastHashJoinExec

spark.createDataFrame(Seq((1, "4"), (2, "2"))).toDF("key", "value").createTempView("table1")
spark.createDataFrame(Seq((1, "1"), (2, "2"))).toDF("key", "value").createTempView("table2")

val bl = sql("SELECT /*+ MAPJOIN(t1) */ * FROM table1 t1 JOIN table2 t2 ON t1.key = t2.key").queryExecution.executedPlan
println(bl.children.head.asInstanceOf[BroadcastHashJoinExec].buildSide)
```

The result is `BuildRight`, but it should be `BuildLeft`. This PR fixes this issue.

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes #19714 from wangyum/SPARK-22489.

## What changes were proposed in this pull request?

When converting Pandas DataFrame/Series from/to Spark DataFrame using `toPandas()` or pandas udfs, timestamp values respect the Python system timezone instead of the session timezone.

For example, let's say we use `"America/Los_Angeles"` as the session timezone and have a timestamp value `"1970-01-01 00:00:01"` in that timezone. By the way, I'm in Japan, so the Python timezone would be `"Asia/Tokyo"`.

The timestamp value from the current `toPandas()` will be the following:

```
>>> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
>>> df = spark.createDataFrame([28801], "long").selectExpr("timestamp(value) as ts")
>>> df.show()
+-------------------+
|                 ts|
+-------------------+
|1970-01-01 00:00:01|
+-------------------+
>>> df.toPandas()
                   ts
0 1970-01-01 17:00:01
```

As you can see, the value becomes `"1970-01-01 17:00:01"` because it respects the Python timezone.

As we discussed in #18664, we consider this behavior a bug, and the value should be `"1970-01-01 00:00:01"`.
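The arithmetic behind the two renderings can be checked without Spark. The sketch below uses fixed UTC offsets as stand-ins for the two zones (UTC-8 for `America/Los_Angeles` and UTC+9 for `Asia/Tokyo`, which is what applied in January 1970); the helper `render` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Fixed-offset stand-ins for the named time zones (assumption of this sketch).
SESSION_TZ = timezone(timedelta(hours=-8))  # America/Los_Angeles in Jan 1970
PYTHON_TZ = timezone(timedelta(hours=9))    # Asia/Tokyo


def render(epoch_seconds, tz):
    """Format an epoch value as wall-clock time in the given zone."""
    return datetime.fromtimestamp(epoch_seconds, tz).strftime("%Y-%m-%d %H:%M:%S")
```

The same instant, 28801 seconds after the epoch, renders as `1970-01-01 00:00:01` in the session zone and `1970-01-01 17:00:01` in the Python zone, which is exactly the discrepancy shown above.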

## How was this patch tested?

Added tests and existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #19607 from ueshin/issues/SPARK-22395.

## What changes were proposed in this pull request?

Add a description of incompatible Hive UDFs to the docs.

## How was this patch tested?

N/A

Author: Yuming Wang <wgyumg@gmail.com>

Closes #18833 from wangyum/SPARK-21625.

## What changes were proposed in this pull request?

Easy fix in the documentation, which is reporting that only numeric types and string are supported in type inference for partition columns, while Date and Timestamp are supported too since 2.1.0, thanks to SPARK-17388.

## How was this patch tested?

n/a

Author: Marco Gaido <mgaido@hortonworks.com>

Closes #19628 from mgaido91/SPARK-22398.

## What changes were proposed in this pull request?

Added documentation for loading CSV files into DataFrames.

## How was this patch tested?

/dev/run-tests

Author: Jorge Machado <jorge.w.machado@hotmail.com>

Closes #19429 from jomach/master.

## What changes were proposed in this pull request?

The `percentile_approx` function previously accepted numeric type input and output double type results.

But since all numeric types, date and timestamp types are represented as numerics internally, `percentile_approx` can support them easily.

After this PR, it supports date type, timestamp type and numeric types as input types. The result type is also changed to be the same as the input type, which is more reasonable for percentiles.

This change is also required when we generate equi-height histograms for these types.
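The "dates are numerics internally" idea can be illustrated with a small sketch: map each date to an integer, pick the percentile, and map back so the result has the input's type. Note that this uses an exact nearest-rank percentile rather than the approximate algorithm behind `percentile_approx`, and `percentile_same_type` is a hypothetical name.

```python
import math
from datetime import date


def percentile_same_type(values, fraction):
    """Exact nearest-rank percentile whose result type matches the input type."""
    is_date = isinstance(values[0], date)
    # Dates are converted to their integer ordinal, mirroring the
    # "represented as numerics internally" observation.
    numeric = sorted(v.toordinal() if is_date else v for v in values)
    rank = max(1, math.ceil(fraction * len(numeric)))
    picked = numeric[rank - 1]
    return date.fromordinal(picked) if is_date else picked
```

For date input the median is itself a date, and for integer input it stays an integer instead of being widened to a double.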

## How was this patch tested?

Added a new test and modified some existing tests.

Author: Zhenhua Wang <wangzhenhua@huawei.com>

Closes #19321 from wzhfy/approx_percentile_support_types.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18266 added a new feature to support reading a JDBC table with a custom schema, but all the fields must be specified. For simplicity, this PR supports specifying only some of the fields.
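Conceptually, a partial custom schema overrides the types of the named columns and leaves the others at their default mapping. The following sketch models that merge with plain dictionaries; `apply_custom_schema` is a hypothetical helper, and silently ignoring unknown column names is an assumption of the sketch, not a statement about Spark's behavior.

```python
def apply_custom_schema(default_schema, custom_schema):
    """Override default column types with the user-specified subset."""
    # Columns keep the order of the default (database-derived) schema.
    # Custom entries for columns that do not exist are ignored here.
    return {name: custom_schema.get(name, default_type)
            for name, default_type in default_schema.items()}
```

For instance, specifying only `ID decimal(38, 0)` changes that one column and keeps the default types of the remaining columns.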

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes #19231 from wangyum/SPARK-22002.

## What changes were proposed in this pull request?

The auto-generated Oracle schema is sometimes not what we expect:

- `number(1)` is automatically mapped to BooleanType, which is sometimes not what we expect, per [SPARK-20921](https://issues.apache.org/jira/browse/SPARK-20921).

- `number` is automatically mapped to Decimal(38,10), which can't read big data, per [SPARK-20427](https://issues.apache.org/jira/browse/SPARK-20427).

This PR fixes this issue by supporting a custom schema, as follows:

```scala
import java.util.Properties

val props = new Properties()
props.put("customSchema", "ID decimal(38, 0), N1 int, N2 boolean")
// `schema` and `jdbcUrl` are assumed to be defined elsewhere.
val dfRead = spark.read.schema(schema).jdbc(jdbcUrl, "tableWithCustomSchema", props)
dfRead.show()
```

or

```sql
CREATE TEMPORARY VIEW tableWithCustomSchema
USING org.apache.spark.sql.jdbc
OPTIONS (url '$jdbcUrl', dbTable 'tableWithCustomSchema', customSchema 'ID decimal(38, 0), N1 int, N2 boolean')
```

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes #18266 from wangyum/SPARK-20427.

## What changes were proposed in this pull request?

```
echo '{"field": 1}
{"field": 2}
{"field": "3"}' >/tmp/sample.json
```

```scala
import org.apache.spark.sql.types._

val schema = new StructType()
  .add("field", ByteType)
  .add("_corrupt_record", StringType)
val file = "/tmp/sample.json"
val dfFromFile = spark.read.schema(schema).json(file)

scala> dfFromFile.show(false)
+-----+---------------+
|field|_corrupt_record|
+-----+---------------+
|1    |null           |
|2    |null           |
|null |{"field": "3"} |
+-----+---------------+

scala> dfFromFile.filter($"_corrupt_record".isNotNull).count()
res1: Long = 0

scala> dfFromFile.filter($"_corrupt_record".isNull).count()
res2: Long = 3
```

When the `requiredSchema` contains only `_corrupt_record`, the derived `actualSchema` is empty and `_corrupt_record` is null for all rows. This PR captures the above situation and raises an exception with a reasonable workaround message so that users can know what happened and how to fix the query.

## How was this patch tested?

Added test case.

Author: Jen-Ming Chung <jenmingisme@gmail.com>

Closes #18865 from jmchung/SPARK-21610.

## What changes were proposed in this pull request?

Since [SPARK-15639](https://github.com/apache/spark/pull/13701), `spark.sql.parquet.cacheMetadata` and `PARQUET_CACHE_METADATA` are not used. This PR removes them from SQLConf and the docs.

## How was this patch tested?

Pass the existing Jenkins.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #19129 from dongjoon-hyun/SPARK-13656.

## What changes were proposed in this pull request?

All built-in data sources support `Partition Discovery`. We should update the documentation to make this benefit clear to users.

**AFTER**

<img width="906" alt="1" src="https://user-images.githubusercontent.com/9700541/30083628-14278908-9244-11e7-98dc-9ad45fe233a9.png">

## How was this patch tested?

```
SKIP_API=1 jekyll serve --watch
```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #19139 from dongjoon-hyun/partitiondiscovery.

Add an option to the JDBC data source to initialize the environment of the remote database session

## What changes were proposed in this pull request?

This proposes an option for the JDBC data source, tentatively called `sessionInitStatement`, to implement the session-initialization functionality present, for example, in the Sqoop connector for Oracle (see https://sqoop.apache.org/docs/1.4.6/SqoopUserGuide.html#_oraoop_oracle_session_initialization_statements). After each database session is opened to the remote DB, and before starting to read data, this option executes a custom SQL statement (or a PL/SQL block in the case of Oracle).

See also https://issues.apache.org/jira/browse/SPARK-21519

## How was this patch tested?

Manually tested using Spark SQL data source and Oracle JDBC

Author: LucaCanali <luca.canali@cern.ch>

Closes #18724 from LucaCanali/JDBC_datasource_sessionInitStatement.

## What changes were proposed in this pull request?

This commit adds a new argument for the IllegalArgumentException message. This recent commit added the argument:

dcac1d57f0

## How was this patch tested?

Unit tests have passed.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Marcos P. Sanchez <mpenate@stratio.com>

Closes #18862 from mpenate/feature/exception-errorifexists.

## What changes were proposed in this pull request?

This PR adds documentation about unsupported functions in Hive UDF/UDTF/UDAF.

This pr relates to #18768 and #18527.

## How was this patch tested?

N/A

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #18792 from maropu/HOTFIX-20170731.

## What changes were proposed in this pull request?

A few changes to the Structured Streaming documentation:

- Clarify that the entire stream input table is not materialized

- Add information for Ganglia

- Add Kafka Sink to the main docs

- Removed a couple of leftover experimental tags

- Added more associated reading material and talk videos.

In addition, https://github.com/apache/spark/pull/16856 broke the link to the RDD programming guide in several places while renaming the page. This PR fixes those. sameeragarwal cloud-fan

- Added a redirection to avoid breaking internal and possible external links.

- Removed unnecessary redirection pages that were there since the separate scala, java, and python programming guides were merged together in 2013 or 2014.

## How was this patch tested?


Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #18485 from tdas/SPARK-21267.

## What changes were proposed in this pull request?

doc only change

## How was this patch tested?

manually

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #18312 from felixcheung/sqljsonwholefiledoc.

## What changes were proposed in this pull request?

- Add Scala, Python and Java examples for `partitionBy`, `sortBy` and `bucketBy`.

- Add _Bucketing, Sorting and Partitioning_ section to SQL Programming Guide

- Remove bucketing from Unsupported Hive Functionalities.

## How was this patch tested?

Manual tests, docs build.

Author: zero323 <zero323@users.noreply.github.com>

Closes #17938 from zero323/DOCS-BUCKETING-AND-PARTITIONING.

(Link to Jira: https://issues.apache.org/jira/browse/SPARK-20888)

## What changes were proposed in this pull request?

Document the change of the default setting of the `spark.sql.hive.caseSensitiveInferenceMode` configuration key from `NEVER_INFER` to `INFER_AND_SAVE` in the Spark SQL 2.1 to 2.2 migration notes.

Author: Michael Allman <michael@videoamp.com>

Closes #18112 from mallman/spark-20888-document_infer_and_save.

## What changes were proposed in this pull request?

Fix typos in a couple of places in the docs.

## How was this patch tested?

build including docs


Author: ymahajan <ymahajan@snappydata.io>

Closes #17690 from ymahajan/master.

## What changes were proposed in this pull request?

This PR proposes corrections related to JSON APIs as below:

- Rendering links in Python documentation

- Replacing `RDD` with `Dataset` in the programming guide

- Adding missing description about JSON Lines consistently in `DataFrameReader.json` in Python API

- De-duplicating a little bit of `DataFrameReader.json` in Scala/Java API

## How was this patch tested?

Manually built the documentation via `jekyll build`. Corresponding snapshots will be left on the code.

Note that currently there are Javadoc8 breaks in several places. These are proposed to be handled in https://github.com/apache/spark/pull/17477. So, this PR does not fix those.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #17602 from HyukjinKwon/minor-json-documentation.

## What changes were proposed in this pull request?

Since SPARK-18112 and SPARK-13446, Apache Spark supports reading Hive metastore 2.0 ~ 2.1.1. This updates the docs.

## How was this patch tested?

N/A

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #17612 from dongjoon-hyun/metastore.

## What changes were proposed in this pull request?

Currently, the JDBC data source creates tables in the target database using the default type mapping and the JDBC dialect mechanism. If users want to specify a different database data type for only some of the columns, there is no option available. In scenarios where the default mapping does not work, users are forced to create tables in the target database before writing. This workaround is probably not acceptable from a usability point of view. This PR provides a user-defined type mapping for specific columns.

The solution is to allow users to specify the database column data types for the created table as a JDBC data source option (`createTableColumnTypes`) on write. Data type information can be specified in the same format as the table schema DDL format (e.g., `name CHAR(64), comments VARCHAR(1024)`).

Not all supported target database types can be specified; the data types also have to be valid Spark SQL data types. For example, a user cannot specify the target database's CLOB data type. This will be supported in a follow-up PR.

Example:

```scala
df.write
  .option("createTableColumnTypes", "name CHAR(64), comments VARCHAR(1024)")
  .jdbc(url, "TEST.DBCOLTYPETEST", properties)
```
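To show how a DDL-style option string could translate into per-column overrides, here is a small standalone sketch. `parse_column_types` and `create_table_columns` are hypothetical helpers, and the default types in the usage are made up for illustration; Spark itself parses the option with its own DDL parser.

```python
def parse_column_types(spec):
    """Split 'name TYPE, name TYPE(...)' into {name: type}, respecting parentheses."""
    parts, current, depth = [], "", 0
    for ch in spec:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            # A top-level comma ends one column definition.
            parts.append(current)
            current = ""
        else:
            current += ch
    parts.append(current)
    columns = {}
    for part in parts:
        if part.strip():
            name, column_type = part.strip().split(None, 1)
            columns[name] = column_type.strip()
    return columns


def create_table_columns(default_types, overrides_spec):
    """Render the column list, using overrides where given and defaults otherwise."""
    overrides = parse_column_types(overrides_spec)
    return ", ".join(f"{name} {overrides.get(name, default_type)}"
                     for name, default_type in default_types.items())
```

Columns that are not mentioned in the option keep whatever type the default mapping would have produced.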

## How was this patch tested?

Added new test cases to the JDBCWriteSuite

Author: sureshthalamati <suresh.thalamati@gmail.com>

Closes #16209 from sureshthalamati/jdbc_custom_dbtype_option_json-spark-10849.

## What changes were proposed in this pull request?

Update doc for R, programming guide. Clarify default behavior for all languages.

## How was this patch tested?

manually

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #17128 from felixcheung/jsonwholefiledoc.

## What changes were proposed in this pull request?

Removed duplicated lines in sql python example and found a typo.

## How was this patch tested?

Searched for other typos in the page to minimize PRs.

Author: Boaz Mohar <boazmohar@gmail.com>

Closes #17066 from boazmohar/doc-fix.

## What changes were proposed in this pull request?

https://spark.apache.org/docs/latest/sql-programming-guide.html#caching-data-in-memory

In the doc, the calls `spark.cacheTable("tableName")` and `spark.uncacheTable("tableName")` actually need to be `spark.catalog.cacheTable` and `spark.catalog.uncacheTable`.

## How was this patch tested?

Built the docs and verified the change shows up fine.

Author: Sunitha Kambhampati <skambha@us.ibm.com>

Closes #16919 from skambha/docChange.

### What changes were proposed in this pull request?

Option names in JDBC options are case-insensitive after PR https://github.com/apache/spark/pull/15884 was merged into Spark 2.1.
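Case-insensitive option lookup is typically implemented by normalizing keys on the way in and on the way out. The class below is a hypothetical, minimal model of that idea, not Spark's own implementation.

```python
class CaseInsensitiveOptions:
    """Option map whose keys are matched without regard to case."""

    def __init__(self, options):
        # Normalize once at construction time.
        self._options = {key.lower(): value for key, value in options.items()}

    def get(self, key, default=None):
        return self._options.get(key.lower(), default)

    def __contains__(self, key):
        return key.lower() in self._options
```

With such a map, `dbtable`, `dbTable`, and `DBTABLE` all refer to the same option.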

### How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #16734 from gatorsmile/fixDocCaseInsensitive.

## What changes were proposed in this pull request?

- A separate subsection for Aggregations under “Getting Started” in the Spark SQL programming guide. It mentions which aggregate functions are predefined and how users can create their own.

- Examples of using the `UserDefinedAggregateFunction` abstract class for untyped aggregations in Java and Scala.

- Examples of using the `Aggregator` abstract class for type-safe aggregations in Java and Scala.

- Python is not covered.

- The PR might not resolve the ticket since I do not know what exactly was planned by the author.

In total, there are four new standalone examples that can be executed via `spark-submit` or `run-example`. The updated Spark SQL programming guide references these examples and does not contain hard-coded snippets.

## How was this patch tested?

The patch was tested locally by building the docs. The examples were run as well.

Author: aokolnychyi <okolnychyyanton@gmail.com>

Closes #16329 from aokolnychyi/SPARK-16046.

## What changes were proposed in this pull request?

This PR adds a new behavior change description on `CREATE TABLE ... LOCATION` at `sql-programming-guide.md` clearly under `Upgrading From Spark SQL 1.6 to 2.0`. This change is introduced at Apache Spark 2.0.0 as [SPARK-15276](https://issues.apache.org/jira/browse/SPARK-15276).

## How was this patch tested?

```
SKIP_API=1 jekyll build
```

**Newly Added Description**

<img width="913" alt="new" src="https://cloud.githubusercontent.com/assets/9700541/21743606/7efe2b12-d4ba-11e6-8a0d-551222718ea2.png">

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #16400 from dongjoon-hyun/SPARK-18941.

## What changes were proposed in this pull request?

Today we have different syntaxes for creating data source and Hive serde tables. We should unify them to avoid confusing users and to take a step toward making Hive a data source.

Please read https://issues.apache.org/jira/secure/attachment/12843835/CREATE-TABLE.pdf for details.

TODO(for follow-up PRs):

1. TBLPROPERTIES is not added to the new syntax, we should decide if we wanna add it later.

2. `SHOW CREATE TABLE` should be updated to use the new syntax.

3. we should decide if we wanna change the behavior of `SET LOCATION`.

## How was this patch tested?

new tests

Author: Wenchen Fan <wenchen@databricks.com>

Closes #16296 from cloud-fan/create-table.

## What changes were proposed in this pull request?

This PR documents the scalable partition handling feature in the body of the programming guide.

Before this PR, we only mentioned it in the migration guide. It's not super clear that external datasource tables require an extra `MSCK REPAIR TABLE` command to have per-partition information persisted since 2.1.

## How was this patch tested?

N/A.

Author: Cheng Lian <lian@databricks.com>

Closes #16424 from liancheng/scalable-partition-handling-doc.

## What changes were proposed in this pull request?

Although, currently, the saveAsTable does not provide an API to save the table as an external table from a DataFrame, we can achieve this functionality by using options on DataFrameWriter where the key for the map is the String: "path" and the value is another String which is the location of the external table itself. This can be provided before the call to saveAsTable is performed.

## How was this patch tested?

Documentation was reviewed for formatting and content after the push was performed on the branch.

Author: c-sahuja <sahuja@cloudera.com>

Closes#16185 from c-sahuja/createExternalTable.

## What changes were proposed in this pull request?

This documents the partition handling changes for Spark 2.1 and how to migrate existing tables.

## How was this patch tested?

Built docs locally.

rxin

Author: Eric Liang <ekl@databricks.com>

Closes#16074 from ericl/spark-18145.

## What changes were proposed in this pull request?

This PR is to fix incorrect `code` tag in `sql-programming-guide.md`

## How was this patch tested?

Manually.

Author: Weiqing Yang <yangweiqing001@gmail.com>

Closes#15941 from weiqingy/fixtag.

## What changes were proposed in this pull request?

This is a follow-up PR of #15868 to merge the `maxConnections` option into the `numPartitions` option.

## How was this patch tested?

Pass the existing tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#15966 from dongjoon-hyun/SPARK-18413-2.

## What changes were proposed in this pull request?

This PR adds a new JDBCOption `maxConnections`, which is the maximum number of simultaneous JDBC connections allowed. This option applies only to writing, which uses a coalesce operation if needed. It defaults to the number of partitions of the RDD. Previously, SQL users could not control this, while Scala/Java/Python users could use the `coalesce` (or `repartition`) API.
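
The intended effect can be sketched in plain Python. This is not the Spark implementation; `write_partitions` is a hypothetical helper that only models how many partitions (and so connections) a write would use under the rule described above.

```python
def write_partitions(num_partitions, max_connections=None):
    """Return how many partitions a JDBC write would use (illustrative only)."""
    if max_connections is None:
        # Default: one connection per RDD partition.
        return num_partitions
    if max_connections < 1:
        raise ValueError("max_connections must be a positive number")
    # Coalesce only when there are more partitions than allowed connections.
    return min(num_partitions, max_connections)
```

For example, with 200 shuffle partitions and a limit of 10, the write would be coalesced down to 10 partitions, while a limit larger than the partition count changes nothing.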

**Reported Scenario**

In the following case, the number of connections becomes 200 and the database cannot handle all of them.

```sql

CREATE OR REPLACE TEMPORARY VIEW resultview

USING org.apache.spark.sql.jdbc

OPTIONS (

url "jdbc:oracle:thin:10.129.10.111:1521:BKDB",

dbtable "result",

user "HIVE",

password "HIVE"

);

-- set spark.sql.shuffle.partitions=200

INSERT OVERWRITE TABLE resultview SELECT g, count(1) AS COUNT FROM tnet.DT_LIVE_INFO GROUP BY g

```

## How was this patch tested?

Manual. Do the following and check the Spark UI.

**Step 1 (MySQL)**

```

CREATE TABLE t1 (a INT);

CREATE TABLE data (a INT);

INSERT INTO data VALUES (1);

INSERT INTO data VALUES (2);

INSERT INTO data VALUES (3);

```

**Step 2 (Spark)**

```scala

SPARK_HOME=$PWD bin/spark-shell --driver-memory 4G --driver-class-path mysql-connector-java-5.1.40-bin.jar

scala> sql("SET spark.sql.shuffle.partitions=3")

scala> sql("CREATE OR REPLACE TEMPORARY VIEW data USING org.apache.spark.sql.jdbc OPTIONS (url 'jdbc:mysql://localhost:3306/t', dbtable 'data', user 'root', password '')")

scala> sql("CREATE OR REPLACE TEMPORARY VIEW t1 USING org.apache.spark.sql.jdbc OPTIONS (url 'jdbc:mysql://localhost:3306/t', dbtable 't1', user 'root', password '', maxConnections '1')")

scala> sql("INSERT OVERWRITE TABLE t1 SELECT a FROM data GROUP BY a")

scala> sql("CREATE OR REPLACE TEMPORARY VIEW t1 USING org.apache.spark.sql.jdbc OPTIONS (url 'jdbc:mysql://localhost:3306/t', dbtable 't1', user 'root', password '', maxConnections '2')")

scala> sql("INSERT OVERWRITE TABLE t1 SELECT a FROM data GROUP BY a")

scala> sql("CREATE OR REPLACE TEMPORARY VIEW t1 USING org.apache.spark.sql.jdbc OPTIONS (url 'jdbc:mysql://localhost:3306/t', dbtable 't1', user 'root', password '', maxConnections '3')")

scala> sql("INSERT OVERWRITE TABLE t1 SELECT a FROM data GROUP BY a")

scala> sql("CREATE OR REPLACE TEMPORARY VIEW t1 USING org.apache.spark.sql.jdbc OPTIONS (url 'jdbc:mysql://localhost:3306/t', dbtable 't1', user 'root', password '', maxConnections '4')")

scala> sql("INSERT OVERWRITE TABLE t1 SELECT a FROM data GROUP BY a")

```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#15868 from dongjoon-hyun/SPARK-18413.

## What changes were proposed in this pull request?

Fix typos in the 'configuration', 'monitoring' and 'sql-programming-guide' documentation.

## How was this patch tested?

Manually.

Author: Weiqing Yang <yangweiqing001@gmail.com>

Closes#15886 from weiqingy/fixTypo.

## What changes were proposed in this pull request?

API and programming guide doc changes for Scala, Python and R.

## How was this patch tested?

manual test

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes#15629 from felixcheung/jsondoc.

## What changes were proposed in this pull request?

Always resolve `spark.sql.warehouse.dir` as a local path, relative to the working dir rather than the home dir.
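
A rough illustration of that resolution rule in Python. The helper `resolve_warehouse_dir` and the `spark-warehouse` default shown here are assumptions made for the example, not Spark code.

```python
import os
from pathlib import Path


def resolve_warehouse_dir(value="spark-warehouse", cwd=None):
    """Resolve a warehouse dir setting to a local file URI (illustrative only)."""
    base = cwd if cwd is not None else os.getcwd()
    # A relative value is resolved against the working directory, not the
    # home directory; an absolute value is kept as it is.
    absolute = os.path.abspath(os.path.join(base, value))
    # Always treat the result as a local path.
    return Path(absolute).as_uri()
```

On a POSIX system, calling it with `cwd="/work"` would give a `file:///work/...` URI for a relative value.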

## How was this patch tested?

Existing tests.

Author: Sean Owen <sowen@cloudera.com>

Closes#15382 from srowen/SPARK-17810.

## What changes were proposed in this pull request?

In http://spark.apache.org/docs/latest/sql-programming-guide.html, Section "Untyped Dataset Operations (aka DataFrame Operations)", the link to R DataFrame doesn't work and returns:

The requested URL /docs/latest/api/R/DataFrame.html was not found on this server.

The correct link for Spark 2.0 is SparkDataFrame.html.

## How was this patch tested?

Manually checked.

Author: Tommy YU <tummyyu@163.com>

Closes#15543 from Wenpei/spark-18001.

## What changes were proposed in this pull request?

Add more built-in sources in sql-programming-guide.md.

## How was this patch tested?

Manually.

Author: Weiqing Yang <yangweiqing001@gmail.com>

Closes#15522 from weiqingy/dsDoc.

## What changes were proposed in this pull request?

This PR proposes to fix arbitrary usages among `Map[String, String]`, `Properties` and `JDBCOptions` instances for options in `execution/jdbc` package and make the connection properties exclude Spark-only options.

This PR includes some changes as below:

- Unify `Map[String, String]`, `Properties` and `JDBCOptions` in `execution/jdbc` package to `JDBCOptions`.

- Move `batchsize`, `fetchsize`, `driver` and `isolationlevel` options into `JDBCOptions` instance.

- Document `batchsize` and `isolationlevel`, marking which options are read-only and which are write-only. This also includes minor typo fixes and more detailed explanations for some statements such as url.

- Throw exceptions fast by checking arguments first rather than at execution time (e.g. for `fetchsize`).

- Exclude Spark-only options in connection properties.
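
The last two points can be sketched in plain Python. This is not the `JDBCOptions` code: the set of Spark-only option names below is an assumed list for illustration, and `connection_properties` is a hypothetical helper.

```python
# Assumed Spark-only option names (lower-cased); illustrative, not exhaustive.
SPARK_ONLY_OPTIONS = {
    "url", "dbtable", "driver", "partitioncolumn", "lowerbound",
    "upperbound", "numpartitions", "fetchsize", "batchsize", "isolationlevel",
}


def connection_properties(options):
    """Validate options up front and drop the Spark-only ones."""
    # Fail fast: check arguments before any connection is attempted.
    fetchsize = options.get("fetchsize")
    if fetchsize is not None and int(fetchsize) < 0:
        raise ValueError("fetchsize must not be negative: %s" % fetchsize)
    # Only options that are not Spark-only are passed on to the JDBC driver.
    return {
        key: value
        for key, value in options.items()
        if key.lower() not in SPARK_ONLY_OPTIONS
    }
```

Given options such as `url`, `dbtable`, `user` and `password`, only `user` and `password` would remain as connection properties in this sketch.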

## How was this patch tested?

Existing tests should cover this.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes#15292 from HyukjinKwon/SPARK-17719.

## What changes were proposed in this pull request?

A global temporary view is a cross-session temporary view, which means it's shared among all sessions. Its lifetime is the lifetime of the Spark application, i.e. it will be automatically dropped when the application terminates. It's tied to a system preserved database `global_temp` (configurable via SparkConf), and we must use the qualified name to refer to a global temp view, e.g. `SELECT * FROM global_temp.view1`.
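
The scoping rules can be modelled with a small Python sketch. The `Application` and `Session` classes here are hypothetical stand-ins and say nothing about how `SessionCatalog` is actually implemented.

```python
GLOBAL_TEMP_DB = "global_temp"  # name of the system preserved database


class Application:
    """Holds state that lives as long as the application does."""

    def __init__(self):
        self.global_views = {}  # shared by every session


class Session:
    def __init__(self, app):
        self._app = app
        self._local_views = {}  # visible to this session only

    def create_temp_view(self, name, plan):
        self._local_views[name] = plan

    def create_global_temp_view(self, name, plan):
        self._app.global_views[name] = plan

    def lookup(self, name):
        # Global temp views must be referenced by their qualified name.
        db, _, view = name.rpartition(".")
        if db == GLOBAL_TEMP_DB:
            return self._app.global_views[view]
        return self._local_views[name]
```

In this model, a view created with `create_global_temp_view("view1", ...)` in one session is found by `lookup("global_temp.view1")` in another, while the unqualified name `view1` is not.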

changes for `SessionCatalog`:

1. add a new field `globalTempViews: GlobalTempViewManager`, to access the shared global temp views, and the global temp db name.

2. `createDatabase` will fail if users wanna create `global_temp`, which is system preserved.

3. `setCurrentDatabase` will fail if users wanna set `global_temp`, which is system preserved.

4. add `createGlobalTempView`, which is used in `CreateViewCommand` to create global temp views.

5. add `dropGlobalTempView`, which is used in `CatalogImpl` to drop global temp view.

6. add `alterTempViewDefinition`, which is used in `AlterViewAsCommand` to update the view definition for local/global temp views.

7. `renameTable`/`dropTable`/`isTemporaryTable`/`lookupRelation`/`getTempViewOrPermanentTableMetadata`/`refreshTable` will handle global temp views.

changes for SQL commands:

1. `CreateViewCommand`/`AlterViewAsCommand` is updated to support global temp views

2. `ShowTablesCommand` outputs a new column `database`, which is used to distinguish global and local temp views.

3. other commands can also handle global temp views if they call `SessionCatalog` APIs that accept global temp views, e.g. `DropTableCommand`, `AlterTableRenameCommand`, `ShowColumnsCommand`, etc.

changes for other public API

1. add a new method `dropGlobalTempView` in `Catalog`

2. `Catalog.findTable` can find global temp view

3. add a new method `createGlobalTempView` in `Dataset`

## How was this patch tested?

new tests in `SQLViewSuite`

Author: Wenchen Fan <wenchen@databricks.com>

Closes#14897 from cloud-fan/global-temp-view.

## What changes were proposed in this pull request?

This change modifies the implementation of DataFrameWriter.save such that it works with jdbc, and the call to jdbc merely delegates to save.

## How was this patch tested?

This was tested via unit tests in the JDBCWriteSuite, of which I added one new test to cover this scenario.

## Additional details

rxin This seems to have been most recently touched by you and was also commented on in the JIRA.

This contribution is my original work and I license the work to the project under the project's open source license.

Author: Justin Pihony <justin.pihony@gmail.com>

Author: Justin Pihony <justin.pihony@typesafe.com>

Closes#12601 from JustinPihony/jdbc_reconciliation.

## What changes were proposed in this pull request?

This is the document for previous JDBC Writer options.

## How was this patch tested?

Unit test has been added in previous PR.

Author: GraceH <jhuang1@paypal.com>

Closes#14683 from GraceH/jdbc_options.

## What changes were proposed in this pull request?

The default value for `spark.sql.broadcastTimeout` is 300s, but this property does not appear in any of the Spark docs. This PR adds `spark.sql.broadcastTimeout` to docs/sql-programming-guide.md to help people fix this timeout error when it happens.

## How was this patch tested?

Not needed.

…ide.md

JIRA_ID:SPARK-16870

Test:done

Author: keliang <keliang@cmss.chinamobile.com>

Closes#14477 from biglobster/keliang.

## What changes were proposed in this pull request?

This PR makes various minor updates to examples of all language bindings to make sure they are consistent with each other. Some typos and missing parts (JDBC example in Scala/Java/Python) are also fixed.

## How was this patch tested?

Manually tested.

Author: Cheng Lian <lian@databricks.com>

Closes#14368 from liancheng/revise-examples.

## What changes were proposed in this pull request?

This pr is to fix a wrong description for parquet default compression.

Author: Takeshi YAMAMURO <linguin.m.s@gmail.com>

Closes#14351 from maropu/FixParquetDoc.

This PR is based on PR #14098 authored by wangmiao1981.

## What changes were proposed in this pull request?

This PR replaces the original Python Spark SQL example file with the following three files:

- `sql/basic.py`

Demonstrates basic Spark SQL features.

- `sql/datasource.py`

Demonstrates various Spark SQL data sources.

- `sql/hive.py`

Demonstrates Spark SQL Hive interaction.

This PR also removes hard-coded Python example snippets in the SQL programming guide by extracting snippets from the above files using the `include_example` Liquid template tag.

## How was this patch tested?

Manually tested.

Author: wm624@hotmail.com <wm624@hotmail.com>

Author: Cheng Lian <lian@databricks.com>

Closes#14317 from liancheng/py-examples-update.

## What changes were proposed in this pull request?

update `refreshTable` API in python code of the sql-programming-guide.

This API is added in SPARK-15820

## How was this patch tested?

N/A

Author: WeichenXu <WeichenXu123@outlook.com>

Closes#14220 from WeichenXu123/update_sql_doc_catalog.

## What changes were proposed in this pull request?

This PR moves the last remaining hard-coded Scala example snippet from the SQL programming guide into `SparkSqlExample.scala`. It also renames all Scala/Java example files so that all "Sql" in the file names are updated to "SQL".

## How was this patch tested?

Manually verified the generated HTML page.

Author: Cheng Lian <lian@databricks.com>

Closes#14245 from liancheng/minor-scala-example-update.

## What changes were proposed in this pull request?

Fixes a typo in the sql programming guide

## How was this patch tested?

Building docs locally

Author: Shivaram Venkataraman <shivaram@cs.berkeley.edu>

Closes#14208 from shivaram/spark-sql-doc-fix.

- Hard-coded Spark SQL sample snippets were moved into source files under examples sub-project.

- Removed the inconsistency between Scala and Java Spark SQL examples

- Scala and Java Spark SQL examples were updated

The work is still in progress. All involved examples were tested manually. An additional round of testing will be done after the code review.

Author: aokolnychyi <okolnychyyanton@gmail.com>

Closes#14119 from aokolnychyi/spark_16303.

## What changes were proposed in this pull request?

When a query uses only metadata (for example, partition keys), it can return results based on the metadata without scanning files. Hive did this in HIVE-1003.
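
The idea can be illustrated with a plain Python sketch. It assumes a Hive-style `key=value` directory layout and a hypothetical helper `distinct_partition_values`; the actual optimization works on partition metadata inside Spark, not on path strings like this.

```python
def distinct_partition_values(file_paths, key):
    """Collect the distinct values of a partition key from file paths alone."""
    values = set()
    for path in file_paths:
        for segment in path.split("/"):
            name, sep, value = segment.partition("=")
            # Only directory names of the form key=value carry partition data.
            if sep and name == key:
                values.add(value)
    # No file is opened: the answer comes from the layout only.
    return sorted(values)
```

A query such as `SELECT DISTINCT year FROM t` on a table partitioned by `year` is the kind of case this models.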

## How was this patch tested?

add unit tests

Author: Lianhui Wang <lianhuiwang09@gmail.com>

Author: Wenchen Fan <wenchen@databricks.com>

Author: Lianhui Wang <lianhuiwang@users.noreply.github.com>

Closes#13494 from lianhuiwang/metadata-only.

## What changes were proposed in this pull request?

This PR adds labelling support for the `include_example` Jekyll plugin, so that we may split a single source file into multiple line blocks with different labels, and include them in multiple code snippets in the generated HTML page.
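
A simplified sketch of what label-based extraction could look like, written in Python rather than as the Jekyll plugin itself. The `$example on:<label>$` / `$example off:<label>$` marker syntax and the helper `extract_labelled_block` are assumptions made for this illustration.

```python
def extract_labelled_block(source, label):
    """Return the lines between the on/off markers for `label`."""
    on_marker = "$example on:%s$" % label
    off_marker = "$example off:%s$" % label
    lines = []
    capturing = False
    for line in source.splitlines():
        if on_marker in line:
            capturing = True   # start after the marker line
        elif off_marker in line:
            capturing = False  # stop before the marker line
        elif capturing:
            lines.append(line)
    return "\n".join(lines)
```

Each snippet in the generated page would then name the label of the block it wants, so one example file can feed several snippets.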

## How was this patch tested?

Manually tested.

<img width="923" alt="screenshot at jun 29 19-53-21" src="https://cloud.githubusercontent.com/assets/230655/16451099/66a76db2-3e33-11e6-84fb-63104c2f0688.png">

Author: Cheng Lian <lian@databricks.com>

Closes#13972 from liancheng/include-example-with-labels.

## What changes were proposed in this pull request?

This PR makes several updates to SQL programming guide.

Author: Yin Huai <yhuai@databricks.com>

Closes#13938 from yhuai/doc.

## What changes were proposed in this pull request?

Doc changes

## How was this patch tested?

manual

liancheng

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes#13827 from felixcheung/sqldocdeprecate.

## What changes were proposed in this pull request?

Update docs for two parameters `spark.sql.files.maxPartitionBytes` and `spark.sql.files.openCostInBytes` in Other Configuration Options.

## How was this patch tested?

N/A

Author: Takeshi YAMAMURO <linguin.m.s@gmail.com>

Closes#13797 from maropu/SPARK-15894-2.

## What changes were proposed in this pull request?

Update doc as per discussion in PR #13592

## How was this patch tested?

manual

shivaram liancheng

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes#13799 from felixcheung/rsqlprogrammingguide.

## What changes were proposed in this pull request?

Initial SQL programming guide update for Spark 2.0. Contents like 1.6 to 2.0 migration guide are still incomplete.

We may also want to add more examples for Scala/Java Dataset typed transformations.

## How was this patch tested?

N/A

Author: Cheng Lian <lian@databricks.com>

Closes#13592 from liancheng/sql-programming-guide-2.0.

## What changes were proposed in this pull request?

fixing documentation for the groupby/agg example in python

## How was this patch tested?

the existing example in the documentation does not contain valid syntax (missing parenthesis) and is not using `Column` in the expression for `agg()`

after the fix here's how I tested it:

```

In [1]: from pyspark.sql import Row

In [2]: import pyspark.sql.functions as func

In [3]: %cpaste

Pasting code; enter '--' alone on the line to stop or use Ctrl-D.

:records = [{'age': 19, 'department': 1, 'expense': 100},

: {'age': 20, 'department': 1, 'expense': 200},

: {'age': 21, 'department': 2, 'expense': 300},

: {'age': 22, 'department': 2, 'expense': 300},

: {'age': 23, 'department': 3, 'expense': 300}]

:--

In [4]: df = sqlContext.createDataFrame([Row(**d) for d in records])

In [5]: df.groupBy("department").agg(df["department"], func.max("age"), func.sum("expense")).show()

+----------+----------+--------+------------+

|department|department|max(age)|sum(expense)|

+----------+----------+--------+------------+

| 1| 1| 20| 300|

| 2| 2| 22| 600|

| 3| 3| 23| 300|

+----------+----------+--------+------------+

```

Author: Mortada Mehyar <mortada.mehyar@gmail.com>

Closes#13587 from mortada/groupby_agg_doc_fix.

## What changes were proposed in this pull request?

Update the unit test code, examples, and documents to remove calls to deprecated method `dataset.registerTempTable`.

## How was this patch tested?

This PR only changes the unit test code, examples, and comments. It should be safe.

This is a follow up of PR https://github.com/apache/spark/pull/12945 which was merged.

Author: Sean Zhong <seanzhong@databricks.com>

Closes#13098 from clockfly/spark-15171-remove-deprecation.

## What changes were proposed in this pull request?

dapply() applies an R function on each partition of a DataFrame and returns a new DataFrame.

The function signature is:

dapply(df, function(localDF) {}, schema = NULL)

R function input: local data.frame from the partition on local node

R function output: local data.frame

Schema specifies the Row format of the resulting DataFrame. It must match the R function's output.

If schema is not specified, each partition of the result DataFrame will be serialized in R into a single byte array. Such resulting DataFrame can be processed by successive calls to dapply().

## How was this patch tested?

SparkR unit tests.

Author: Sun Rui <rui.sun@intel.com>

Author: Sun Rui <sunrui2016@gmail.com>

Closes#12493 from sun-rui/SPARK-12919.

## What changes were proposed in this pull request?

This issue aims to fix some errors in R examples and make them up-to-date in docs and example modules.

- Remove the wrong usage of `map`. We need to use `lapply` in `sparkR` if needed. However, `lapply` is private so far. The corrected example will be added later.

- Fix the wrong example in Section `Generic Load/Save Functions` of `docs/sql-programming-guide.md` for consistency

- Fix datatypes in `sparkr.md`.

- Update a data result in `sparkr.md`.

- Replace deprecated functions to remove warnings: jsonFile -> read.json, parquetFile -> read.parquet

- Use up-to-date R-like functions: loadDF -> read.df, saveDF -> write.df, saveAsParquetFile -> write.parquet

- Replace `SparkR DataFrame` with `SparkDataFrame` in `dataframe.R` and `data-manipulation.R`.

- Other minor syntax fixes and a typo.

## How was this patch tested?

Manual.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#12649 from dongjoon-hyun/SPARK-14883.

## What changes were proposed in this pull request?

Removing references to assembly jar in documentation.

Adding an additional (previously undocumented) usage of spark-submit to run examples.

## How was this patch tested?

Ran spark-submit usage to ensure formatting was fine. Ran examples using SparkSubmit.

Author: Mark Grover <mark@apache.org>

Closes#12365 from markgrover/spark-14601.

## What changes were proposed in this pull request?

This PR fixes the `age` data types from `integer` to `long` in `SQL Programming Guide: JSON Datasets`.

## How was this patch tested?

Manual.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#12290 from dongjoon-hyun/minor_fix_type_in_json_example.

## What changes were proposed in this pull request?

This patch removes DirectParquetOutputCommitter. This was initially created by Databricks as a faster way to write Parquet data to S3. However, given how the underlying S3 Hadoop implementation works, this committer only works when there are no failures. If there are multiple attempts of the same task (e.g. speculation or task failures or node failures), the output data can be corrupted. I don't think this performance optimization outweighs the correctness issue.

## How was this patch tested?

Removed the related tests also.

Author: Reynold Xin <rxin@databricks.com>

Closes#12229 from rxin/SPARK-10063.

This change modifies the "assembly/" module to just copy needed dependencies to its build directory, and modifies the packaging script to pick those up (and remove duplicate jars packaged in the examples module).

I also made some minor adjustments to dependencies to remove some test jars from the final packaging, and remove jars that conflict with each other when packaged separately (e.g. servlet api).

Also note that this change restores guava in applications' classpaths, even though it's still shaded inside Spark. This is now needed for the Hadoop libraries that are packaged with Spark, which now are not processed by the shade plugin.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#11796 from vanzin/SPARK-13579.

## What changes were proposed in this pull request?

Since the developer API of the pluggable parser has been removed in #10801, docs should be updated accordingly.

## How was this patch tested?

This patch will not affect the real code path.

Author: Daoyuan Wang <daoyuan.wang@intel.com>

Closes#11758 from adrian-wang/spark12855.

## What changes were proposed in this pull request?

`Shark` was merged into `Spark SQL` in [July 2014](https://databricks.com/blog/2014/07/01/shark-spark-sql-hive-on-spark-and-the-future-of-sql-on-spark.html). The following seems to be the only remaining legacy. For Spark 2.x, we had better clean up those docs.

**Migration Guide**

```

- ## Migration Guide for Shark Users

- ...

- ### Scheduling

- ...

- ### Reducer number

- ...

- ### Caching

```

## How was this patch tested?

Pass the Jenkins test.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#11770 from dongjoon-hyun/SPARK-13942.

## What changes were proposed in this pull request?

In order to make `docs/examples` (and other related code) more simple/readable/user-friendly, this PR replaces existing code like the following by using the `diamond` operator.

```

- final ArrayList<Product2<Object, Object>> dataToWrite =

- new ArrayList<Product2<Object, Object>>();

+ final ArrayList<Product2<Object, Object>> dataToWrite = new ArrayList<>();

```

Java 7 or higher supports the **diamond** operator, which replaces the type arguments required to invoke the constructor of a generic class with an empty set of type parameters (<>). Currently, Spark's Java code uses it inconsistently.

## How was this patch tested?

Manual.

Pass the existing tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#11541 from dongjoon-hyun/SPARK-13702.