### What changes were proposed in this pull request?

This PR aims to deprecate old Java 8 versions prior to 8u201.

### Why are the changes needed?

This prepares for using G1GC during the migration across Java LTS versions (8/11/17).

8u162 has the following fix.

- JDK-8205376: JVM Crash during G1 GC

8u201 has the following fix.

- JDK-8208873: C1: G1 barriers don't preserve FP registers

### Does this PR introduce _any_ user-facing change?

No. Today's Java 8 is usually 1.8.0_292, and this is only a deprecation in the documentation.
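As a rough illustration of what "prior to 8u201" means for a version string such as `1.8.0_292`, here is a minimal sketch. The helper names and the regular expression are hypothetical and are not part of Spark; this PR itself only changes documentation.

```python
import re

# Hypothetical pattern for Java 8 version strings such as "1.8.0_292" or "1.8.0_292-b10".
_JAVA8_PATTERN = re.compile(r"^1\.8\.0_(\d+)(?:-.*)?$")

# Threshold taken from this PR's description (8u201).
MIN_RECOMMENDED_UPDATE = 201


def java8_update(version):
    """Return the Java 8 update number, or None if `version` is not a 1.8.0_NNN string."""
    match = _JAVA8_PATTERN.match(version.strip())
    return int(match.group(1)) if match else None


def is_deprecated_java8(version):
    """True only when `version` is a Java 8 build older than 8u201."""
    update = java8_update(version)
    return update is not None and update < MIN_RECOMMENDED_UPDATE


if __name__ == "__main__":
    for v in ("1.8.0_292", "1.8.0_162", "11.0.11"):
        print(v, is_deprecated_java8(v))
```

Versions that do not look like `1.8.0_NNN` (for example Java 11 strings) are simply reported as not deprecated by this sketch.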

### How was this patch tested?

N/A

Closes #33166 from dongjoon-hyun/SPARK-35962.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Deprecate Python 3.6 in Spark documentation

### Why are the changes needed?

According to https://endoflife.date/python, Python 3.6 will be EOL on 23 Dec, 2021.

We should prepare for the deprecation of Python 3.6 support in Spark in advance.
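For readers wondering what a runtime counterpart to this documentation note could look like, the following is a hypothetical sketch only. This PR is docs-only, and the function names and warning text below are assumptions, not Spark code.

```python
import sys
import warnings

# Assumption: 3.6 is the version being deprecated, per the PR description.
DEPRECATED_VERSION = (3, 6)


def deprecation_message(version_info=sys.version_info):
    """Return a warning message if the interpreter is Python 3.6, else None."""
    major, minor = version_info[0], version_info[1]
    if (major, minor) == DEPRECATED_VERSION:
        return "Python %d.%d is deprecated; consider upgrading to a newer Python 3 release." % (
            major,
            minor,
        )
    return None


def warn_if_deprecated(version_info=sys.version_info):
    """Emit a FutureWarning when running on the deprecated version; return the message."""
    message = deprecation_message(version_info)
    if message is not None:
        warnings.warn(message, FutureWarning)
    return message
```

Passing the version tuple as a parameter keeps the check easy to exercise without switching interpreters.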

### Does this PR introduce _any_ user-facing change?

N/A.

### How was this patch tested?

Manual tests.

Closes #33141 from xinrong-databricks/deprecate3.6_doc.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Soften security warning and keep it in cluster management docs only, not in the main doc page, where it's not necessarily relevant.

### Why are the changes needed?

The statement is perhaps unnecessarily 'frightening' as the first section in the main docs page. It applies to clusters, not local mode, anyhow.

### Does this PR introduce _any_ user-facing change?

Just a docs change.

### How was this patch tested?

N/A

Closes #32206 from srowen/SecurityStatement.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Deprecate Apache Mesos support for Spark 3.2.0 by adding documentation to this effect.

### Why are the changes needed?

Apache Mesos is ceasing development (https://lists.apache.org/thread.html/rab2a820507f7c846e54a847398ab20f47698ec5bce0c8e182bfe51ba%40%3Cdev.mesos.apache.org%3E); at some point we'll want to drop support, so deprecate it now.

This doesn't mean it'll go away in 3.3.0.

### Does this PR introduce _any_ user-facing change?

No, docs only.

### How was this patch tested?

N/A

Closes #32150 from srowen/SPARK-35050.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to add a note for Apache Arrow project's `PyArrow` compatibility for Python 3.9.

### Why are the changes needed?

Although Apache Spark documentation claims `Spark runs on Java 8/11, Scala 2.12, Python 3.6+ and R 3.5+.`,

Apache Arrow's `PyArrow` is not compatible with Python 3.9.x yet. Without the `PyArrow` library installed, PySpark UTs passed without any problem. So it should be enough to add a note about this limitation, with a link to the compatibility section of the Apache Arrow website.

- https://arrow.apache.org/docs/python/install.html#python-compatibility

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

**BEFORE**

<img width="804" alt="Screen Shot 2021-01-19 at 1 45 07 PM" src="https://user-images.githubusercontent.com/9700541/105096867-8fbdbe00-5a5c-11eb-88f7-8caae2427583.png">

**AFTER**

<img width="908" alt="Screen Shot 2021-01-19 at 7 06 41 PM" src="https://user-images.githubusercontent.com/9700541/105121661-85fe7f80-5a89-11eb-8af7-1b37e12c55c1.png">

Closes #31251 from dongjoon-hyun/SPARK-34162.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR proposes to:

- Add a link of quick start in PySpark docs into "Programming Guides" in Spark main docs

- `ML` / `MLlib` -> `MLlib (DataFrame-based)` / `MLlib (RDD-based)` in API reference page

- Mention other user guides as well, since guides such as [ML](http://spark.apache.org/docs/latest/ml-guide.html) and [SQL](http://spark.apache.org/docs/latest/sql-programming-guide.html) are also relevant to PySpark users.

- Mention other migration guides as well, because PySpark can be affected by them.

### Why are the changes needed?

For better documentation.

### Does this PR introduce _any_ user-facing change?

It fixes user-facing docs. However, they have not been released yet.

### How was this patch tested?

Manually tested by running:

```bash
cd docs
SKIP_SCALADOC=1 SKIP_RDOC=1 SKIP_SQLDOC=1 jekyll serve --watch
```

Closes #31082 from HyukjinKwon/SPARK-34041.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to drop Python 2.7, 3.4 and 3.5.

Roughly speaking, it removes all the widely known Python 2 compatibility workarounds such as `sys.version` comparisons and `__future__` imports. It also removes Python 2 dedicated code such as `ArrayConstructor` in Spark.
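To make the kind of workaround concrete, here is a small before/after sketch. It is an illustration written for this description, not code taken from Spark; both function names are hypothetical.

```python
import sys


def to_text_legacy(value):
    """Python 2/3-compatible style: branch on the interpreter version."""
    if sys.version_info[0] >= 3:
        return value.decode("utf-8")
    # Python 2 only; this line is never reached on Python 3.
    return unicode(value, "utf-8")  # noqa: F821


def to_text(value):
    """Python 3-only style: the version branch is no longer needed."""
    return value.decode("utf-8")


if __name__ == "__main__":
    print(to_text_legacy(b"spark"), to_text(b"spark"))
```

Once Python 2 is unsupported, the legacy variant collapses into the simpler one, which is the sort of cleanup this PR performs.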

### Why are the changes needed?

1. Drop support for EOL Python versions.

2. Reduce maintenance overhead and remove some legacy code and hacks for Python 2.

3. Based on my testing and investigation, PyPy2 has a critical bug that causes a flaky test (SPARK-28358).

4. Users can use Python type hints with Pandas UDFs without thinking about the Python version.

5. Users can leverage a single, up-to-date cloudpickle, https://github.com/apache/spark/pull/28950. With Python 3.8+ it can also leverage C pickle.

### Does this PR introduce _any_ user-facing change?

Yes, users cannot use Python 2.7, 3.4 and 3.5 in the upcoming Spark version.

### How was this patch tested?

Manually tested and also tested in Jenkins.

Closes #28957 from HyukjinKwon/SPARK-32138.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Spark 3.0 accidentally dropped R < 3.5. It is built with R 3.6.3, which does not support R < 3.5:

```
Error in readRDS(pfile) : cannot read workspace version 3 written by R 3.6.3; need R 3.5.0 or newer version.
```

In fact, with SPARK-31918, we will have to drop R < 3.5 entirely to support R 4.0.0. This is unavoidable for releasing on CRAN, because CRAN requires the tests to pass with the latest R.

### Why are the changes needed?

To show the supported versions correctly, and support R 4.0.0 to unblock the releases.

### Does this PR introduce _any_ user-facing change?

In fact, no because Spark 3.0.0 already does not work with R < 3.5.

Compared to Spark 2.4, yes. R < 3.5 would not work.

### How was this patch tested?

Jenkins should test it out.

Closes #28908 from HyukjinKwon/SPARK-32073.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Change the link to the Scala API document.

```
$ git grep "#org.apache.spark.package"
docs/_layouts/global.html: <li><a href="api/scala/index.html#org.apache.spark.package">Scala</a></li>
docs/index.md:* [Spark Scala API (Scaladoc)](api/scala/index.html#org.apache.spark.package)
docs/rdd-programming-guide.md:[Scala](api/scala/#org.apache.spark.package), [Java](api/java/), [Python](api/python/) and [R](api/R/).
```

### Why are the changes needed?

The home page link for the Scala API documentation is incorrect after the upgrade to 3.0.

### Does this PR introduce any user-facing change?

Document UI change only.

### How was this patch tested?

Local test, attach screenshots below:

Before:

After:

Closes #27549 from xuanyuanking/scala-doc.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

Closes #26690 from huangtianhua/add-note-spark-runs-on-arm64.

Authored-by: huangtianhua <huangtianhua@huawei.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to additionally deprecate `Python 3.4 ~ 3.5`, that is, the versions prior to 3.6.

### Why are the changes needed?

Since `Python 3.8` is already out, we will focus on supporting Python 3.6/3.7/3.8.

### Does this PR introduce any user-facing change?

Yes. It's highly recommended to use Python 3.6/3.7. We will verify Python 3.8 before Apache Spark 3.0.0 release.

### How was this patch tested?

NA (This is a doc-only change).

Closes #26326 from dongjoon-hyun/SPARK-29668.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to deprecate old Java 8 versions prior to 8u92.

### Why are the changes needed?

This is a preparation to use JVM Option `ExitOnOutOfMemoryError`.

- https://www.oracle.com/technetwork/java/javase/8u92-relnotes-2949471.html

### Does this PR introduce any user-facing change?

Yes. It's highly recommended for users to use the latest JDK versions of Java 8/11.

### How was this patch tested?

NA (This is a doc change).

Closes #26249 from dongjoon-hyun/SPARK-29597.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds Java 11 to the documentation.

### Why are the changes needed?

Apache Spark 3.0.0 starts to support JDK11 officially.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually. Doc generation.

Closes #25875 from dongjoon-hyun/SPARK-29196.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

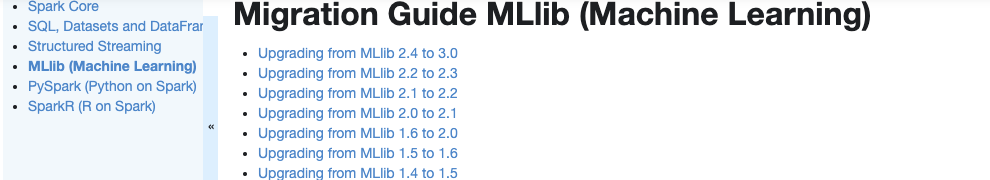

### What changes were proposed in this pull request?

Currently, there is no migration section for PySpark, SparkCore and Structured Streaming.

It is difficult for users to know what to do when they upgrade.

This PR proposes to create a "Migration Guide" tab in the Spark documentation.

This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. Basically it is a refactoring.

Some new information is added as well; I will leave inline comments on it for easier review.

1. **MLlib**

Merge [ml-guide.html#migration-guide](https://spark.apache.org/docs/latest/ml-guide.html#migration-guide) and [ml-migration-guides.html](https://spark.apache.org/docs/latest/ml-migration-guides.html)

```
'docs/ml-guide.md'
        ↓ Merge new/old migration guides
'docs/ml-migration-guide.md'
```

2. **PySpark**

Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html

```
'docs/sql-migration-guide-upgrade.md'
        ↓ Extract PySpark specific items
'docs/pyspark-migration-guide.md'
```

3. **SparkR**

Move [sparkr.html#migration-guide](https://spark.apache.org/docs/latest/sparkr.html#migration-guide) into a separate file, and extract from [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html)

```
'docs/sparkr.md'                     'docs/sql-migration-guide-upgrade.md'
 Move migration guide section ↘     ↙ Extract SparkR specific items
                 docs/sparkr-migration-guide.md
```

4. **Core**

Newly created at `'docs/core-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

5. **Structured Streaming**

Newly created at `'docs/ss-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

6. **SQL**

Merged [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) and [sql-migration-guide-hive-compatibility.html](https://spark.apache.org/docs/latest/sql-migration-guide-hive-compatibility.html)

```
'docs/sql-migration-guide-hive-compatibility.md'     'docs/sql-migration-guide-upgrade.md'
 Move Hive compatibility section ↘                   ↙ Left over after filtering PySpark and SparkR items
                                  'docs/sql-migration-guide.md'
```

### Why are the changes needed?

In order for users in production to effectively migrate to higher versions, and detect behaviour or breaking changes before upgrading and/or migrating.

### Does this PR introduce any user-facing change?

Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html.

### How was this patch tested?

Manually built the docs. This can be verified as below:

```bash
cd docs
SKIP_API=1 jekyll build
open _site/index.html
```

Closes #25757 from HyukjinKwon/migration-doc.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

After Apache Spark 3.0.0 supports JDK11 officially, people will try JDK11 on old Spark releases (especially 2.4.4/2.3.4) in the same way because our document says `Java 8+`. We had better avoid that misleading situation.

This PR aims to remove `+` from `Java 8+` in the documentation (master/2.4/2.3). This matters especially for the 2.4.4 and 2.3.4 releases (cc kiszk).

On the master branch, we will add JDK11 after [SPARK-24417](https://issues.apache.org/jira/browse/SPARK-24417).

## How was this patch tested?

This is a documentation only change.

<img width="923" alt="java8" src="https://user-images.githubusercontent.com/9700541/63116589-e1504800-bf4e-11e9-8904-b160ec7a42c0.png">

Closes #25466 from dongjoon-hyun/SPARK-DOC-JDK8.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

We updated our website a long time ago to describe Spark as the unified analytics engine, which is also how Spark is described in the community now. But our README and docs page still use the same description from 2011 ... This patch updates them.

The patch also updates the README example to use more modern APIs, and refer to Structured Streaming rather than Spark Streaming.

Closes #24573 from rxin/consistent-message.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Add AL2 license to metadata of all .md files.

This seemed to be the tidiest way as it will get ignored by .md renderers and other tools. Attempts to write them as markdown comments revealed that there is no such standard thing.

## How was this patch tested?

Doc build

Closes #24243 from srowen/SPARK-26918.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Remove Scala 2.11 support in build files and docs, and in various parts of code that accommodated 2.11. See some targeted comments below.

## How was this patch tested?

Existing tests.

Closes #23098 from srowen/SPARK-26132.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Docs still say that Spark will be available on PyPI "in the future"; this just needs to be updated.

## How was this patch tested?

Doc build

Closes #23933 from srowen/SPARK-26807.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Fix Typos.

## How was this patch tested?

NA

Closes #23145 from kjmrknsn/docUpdate.

Authored-by: Keiji Yoshida <kjmrknsn@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to bump up the minimum versions of R from 3.1 to 3.4.

R 3.1.x is too old: it was released 4.5 years ago, and R 3.4.0 was released 1.5 years ago. Considering the timing of Spark 3.0, deprecating the lower versions and bumping the minimum R version to 3.4 seems a reasonable option.

It should be good to deprecate and drop support for R < 3.4.

## How was this patch tested?

Jenkins tests.

Closes #23012 from HyukjinKwon/SPARK-26014.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Clarify documentation about security.

## How was this patch tested?

None, just documentation

Closes #22852 from tgravescs/SPARK-25023.

Authored-by: Thomas Graves <tgraves@thirteenroutine.corp.gq1.yahoo.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

Remove Hadoop 2.6 references and make 2.7 the default.

Obviously, this is for master/3.0.0 only.

After this we can also get rid of the separate test jobs for Hadoop 2.6.

## How was this patch tested?

Existing tests

Closes #22615 from srowen/SPARK-25016.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Fix a few broken links and typos, and, nit, use HTTPS more consistently esp. on scripts and Apache links

## How was this patch tested?

Doc build

Closes #22172 from srowen/DocTypo.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR contains documentation on the usage of Kubernetes scheduler in Spark 2.3, and a shell script to make it easier to build docker images required to use the integration. The changes detailed here are covered by https://github.com/apache/spark/pull/19717 and https://github.com/apache/spark/pull/19468 which have merged already.

## How was this patch tested?

The script has been in use for releases on our fork. Rest is documentation.

cc rxin mateiz (shepherd)

k8s-big-data SIG members & contributors: foxish ash211 mccheah liyinan926 erikerlandson ssuchter varunkatta kimoonkim tnachen ifilonenko

reviewers: vanzin felixcheung jiangxb1987 mridulm

TODO:

- [x] Add dockerfiles directory to built distribution. (https://github.com/apache/spark/pull/20007)

- [x] Change references to docker to instead say "container" (https://github.com/apache/spark/pull/19995)

- [x] Update configuration table.

- [x] Modify spark.kubernetes.allocation.batch.delay to take time instead of int (#20032)
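The last item above changes `spark.kubernetes.allocation.batch.delay` from a bare integer to a time value. As a minimal sketch of what parsing such a value involves, the following converts strings like `1s` or `500ms` to milliseconds. The function name and the set of accepted suffixes are assumptions made for illustration; Spark's own time-string handling is implemented elsewhere and may accept other forms.

```python
import re

# Assumed subset of unit suffixes, chosen for illustration only.
_UNITS_IN_MS = {"ms": 1, "s": 1000, "m": 60_000, "h": 3_600_000}
_TIME_PATTERN = re.compile(r"^(\d+)\s*(ms|s|m|h)$")


def parse_time_ms(value):
    """Convert a time string such as "1s" or "500ms" to an integer number of milliseconds."""
    match = _TIME_PATTERN.match(value.strip().lower())
    if match is None:
        raise ValueError("expected a number followed by ms, s, m or h, got %r" % value)
    number, unit = match.groups()
    return int(number) * _UNITS_IN_MS[unit]


if __name__ == "__main__":
    for raw in ("1s", "500ms", "2m"):
        print(raw, parse_time_ms(raw))
```

In this sketch a bare integer without a unit is rejected, which makes the unit explicit in configuration files.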

Author: foxish <ramanathana@google.com>

Closes #19946 from foxish/update-k8s-docs.

## What changes were proposed in this pull request?

This generates documentation for Spark SQL built-in functions.

One drawback is that it requires a proper build to generate the built-in function list.

Once Spark is built, running `sql/create-docs.sh` takes only a few seconds.

Please see https://spark-test.github.io/sparksqldoc/ that I hosted to show the output documentation.

A few more things remain to be done to make the documentation pretty, for example separating `Arguments:` and `Examples:`, but I guess this should be done within `ExpressionDescription` and `ExpressionInfo` rather than by manually parsing it. I will fix these in a follow-up.

This requires `pip install mkdocs` to generate HTMLs from markdown files.

## How was this patch tested?

Manually tested:

```
cd docs
jekyll build
```

,

```
cd docs
jekyll serve
```

and

```
cd sql
create-docs.sh
```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #18702 from HyukjinKwon/SPARK-21485.

## What changes were proposed in this pull request?

Update internal references from programming-guide to rdd-programming-guide

See 5ddf243fd8 and https://github.com/apache/spark/pull/18485#issuecomment-314789751

Let's keep the redirector even if it's problematic to build, but not rely on it internally.
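A change like this is essentially a careful search-and-replace over the docs sources. The sketch below shows one way to rewrite only the bare `programming-guide.html` links while leaving names that merely end with it (such as `sql-programming-guide.html`) untouched. The function name and the regular expression are hypothetical; this is not the tooling used for the PR.

```python
import re

# Match "programming-guide.html" only when it is not the tail of a longer page name
# such as "sql-programming-guide.html" or "rdd-programming-guide.html".
_OLD_LINK = re.compile(r"(?<![\w-])programming-guide\.html")


def update_links(text):
    """Point links at rdd-programming-guide.html instead of programming-guide.html."""
    return _OLD_LINK.sub("rdd-programming-guide.html", text)


if __name__ == "__main__":
    sample = "[guide](programming-guide.html#x) and [SQL](sql-programming-guide.html)"
    print(update_links(sample))
```

The negative lookbehind also makes the rewrite idempotent, so running it twice does not produce `rdd-rdd-programming-guide.html`.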

## How was this patch tested?

(Doc build)

Author: Sean Owen <sowen@cloudera.com>

Closes #18625 from srowen/SPARK-21267.2.

## What changes were proposed in this pull request?

- Remove Scala 2.10 build profiles and support

- Replace some 2.10 support in scripts with commented placeholders for 2.12 later

- Remove deprecated API calls from 2.10 support

- Remove usages of deprecated context bounds where possible

- Remove Scala 2.10 workarounds like ScalaReflectionLock

- Other minor Scala warning fixes

## How was this patch tested?

Existing tests

Author: Sean Owen <sowen@cloudera.com>

Closes #17150 from srowen/SPARK-19810.

## What changes were proposed in this pull request?

A few changes to the Structured Streaming documentation:

- Clarify that the entire stream input table is not materialized

- Add information for Ganglia

- Add Kafka Sink to the main docs

- Removed a couple of leftover experimental tags

- Added more associated reading material and talk videos.

In addition, https://github.com/apache/spark/pull/16856 broke the link to the RDD programming guide in several places while renaming the page. This PR fixes those (cc sameeragarwal cloud-fan).

- Added a redirection to avoid breaking internal and possible external links.

- Removed unnecessary redirection pages that were there since the separate scala, java, and python programming guides were merged together in 2013 or 2014.

## How was this patch tested?

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #18485 from tdas/SPARK-21267.

## What changes were proposed in this pull request?

Add a new `spark-hadoop-cloud` module and maven profile to pull in object store support from `hadoop-openstack`, `hadoop-aws` and `hadoop-azure` (Hadoop 2.7+) JARs, along with their dependencies, fixing up the dependencies so that everything works, in particular Jackson.

It restores `s3n://` access to S3, adds its `s3a://` replacement, OpenStack `swift://` and azure `wasb://`.
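As a quick reference for which connector module each URL scheme above relies on, here is a minimal lookup sketch. The mapping follows the modules named in this description; the function and dictionary names are hypothetical and not part of Spark or Hadoop.

```python
from urllib.parse import urlparse

# Scheme-to-module mapping, following the JARs listed in this PR description.
CONNECTOR_BY_SCHEME = {
    "s3n": "hadoop-aws",
    "s3a": "hadoop-aws",
    "swift": "hadoop-openstack",
    "wasb": "hadoop-azure",
}


def connector_for(url):
    """Return the connector module name for an object store URL, or None if unknown."""
    scheme = urlparse(url).scheme.lower()
    return CONNECTOR_BY_SCHEME.get(scheme)


if __name__ == "__main__":
    for url in ("s3a://bucket/data", "wasb://container@account/data", "hdfs://nn/data"):
        print(url, connector_for(url))
```

Schemes outside this PR's scope, such as `hdfs://`, return `None` in this sketch.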

There's a documentation page, `cloud_integration.md`, which covers the basic details of using Spark with object stores, referring the reader to the supplier's own documentation, with specific warnings on security and the possible mismatch between a store's behavior and that of a filesystem. In particular, users are advised to be very cautious when trying to use an object store as the destination of data, and to consult the documentation of the storage supplier and the connector.

(this is the successor to #12004; I can't re-open it)

## How was this patch tested?

Downstream tests exist in [https://github.com/steveloughran/spark-cloud-examples/tree/master/cloud-examples](https://github.com/steveloughran/spark-cloud-examples/tree/master/cloud-examples)

Those verify that the dependencies are sufficient to allow downstream applications to work with s3a, azure wasb and swift storage connectors, and perform basic IO & dataframe operations thereon. All seems well.

Manually clean build & verify that assembly contains the relevant aws-* hadoop-* artifacts on Hadoop 2.6; azure on a hadoop-2.7 profile.

SBT build: `build/sbt -Phadoop-cloud -Phadoop-2.7 package`

maven build `mvn install -Phadoop-cloud -Phadoop-2.7`

This PR *does not* update `dev/deps/spark-deps-hadoop-2.7` or `dev/deps/spark-deps-hadoop-2.6`, because unless the hadoop-cloud profile is enabled, no extra JARs show up in the dependency list. The dependency check in Jenkins isn't setting the property, so the new JARs aren't visible.

Author: Steve Loughran <stevel@apache.org>

Author: Steve Loughran <stevel@hortonworks.com>

Closes #17834 from steveloughran/cloud/SPARK-7481-current.

## What changes were proposed in this pull request?

Adding documentation to point to Kubernetes cluster scheduler being developed out-of-repo in https://github.com/apache-spark-on-k8s/spark

cc rxin srowen tnachen ash211 mccheah erikerlandson

## How was this patch tested?

Docs only change

Author: Anirudh Ramanathan <foxish@users.noreply.github.com>

Author: foxish <ramanathana@google.com>

Closes #17522 from foxish/upstream-doc.

- Move external/java8-tests tests into core, streaming, sql and remove

- Remove MaxPermGen and related options

- Fix some reflection / TODOs around Java 8+ methods

- Update doc references to 1.7/1.8 differences

- Remove Java 7/8 related build profiles

- Update some plugins for better Java 8 compatibility

- Fix a few Java-related warnings

For the future:

- Update Java 8 examples to fully use Java 8

- Update Java tests to use lambdas for simplicity

- Update Java internal implementations to use lambdas

## How was this patch tested?

Existing tests

Author: Sean Owen <sowen@cloudera.com>

Closes #16871 from srowen/SPARK-19493.

## What changes were proposed in this pull request?

Fix typo in docs

## How was this patch tested?

Author: uncleGen <hustyugm@gmail.com>

Closes #16658 from uncleGen/typo-issue.

## What changes were proposed in this pull request?

According to the notice on the following Wiki front page, we can safely remove the obsolete wiki pointer in `README.md` and `docs/index.md`, too. These two lines are the last occurrences of that link.

```
All current wiki content has been merged into pages at http://spark.apache.org as of November 2016.
Each page links to the new location of its information on the Spark web site.
Obsolete wiki content is still hosted here, but carries a notice that it is no longer current.
```

## How was this patch tested?

Manual.

- `README.md`: https://github.com/dongjoon-hyun/spark/tree/remove_wiki_from_readme

- `docs/index.md`:

```
cd docs
SKIP_API=1 jekyll build
```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #16239 from dongjoon-hyun/remove_wiki_from_readme.

## What changes were proposed in this pull request?

Updates links to the wiki to links to the new location of content on spark.apache.org.

## How was this patch tested?

Doc builds

Author: Sean Owen <sowen@cloudera.com>

Closes #15967 from srowen/SPARK-18073.1.

## What changes were proposed in this pull request?

This PR aims to provide a pip installable PySpark package. This does a bunch of work to copy the jars over and package them with the Python code (to prevent challenges from trying to use different versions of the Python code with different versions of the JAR). It does not currently publish to PyPI but that is the natural follow up (SPARK-18129).

Done:

- pip installable on conda [manual tested]

- setup.py installed on a non-pip managed system (RHEL) with YARN [manual tested]

- Automated testing of this (virtualenv)

- packaging and signing with release-build*

Possible follow up work:

- release-build update to publish to PyPI (SPARK-18128)

- figure out who owns the pyspark package name on prod PyPI (is it someone within the project, or should we ask PyPI, or should we choose a different name to publish with, like ApachePySpark?)

- Windows support and/or testing (SPARK-18136)

- investigate details of wheel caching and see if we can avoid cleaning the wheel cache during our test

- consider how we want to number our dev/snapshot versions

Explicitly out of scope:

- Using pip installed PySpark to start a standalone cluster

- Using pip installed PySpark for non-Python Spark programs

*I've done some work to test release-build locally but as a non-committer I've just done local testing.

## How was this patch tested?

Automated testing with virtualenv, manual testing with conda, a system wide install, and YARN integration.

release-build changes tested locally as a non-committer (no testing of upload artifacts to Apache staging websites)

Author: Holden Karau <holden@us.ibm.com>

Author: Juliet Hougland <juliet@cloudera.com>

Author: Juliet Hougland <not@myemail.com>

Closes #15659 from holdenk/SPARK-1267-pip-install-pyspark.

## What changes were proposed in this pull request?

Document that Java 7, Python 2.6, Scala 2.10, Hadoop < 2.6 are deprecated in Spark 2.1.0. This does not actually implement any of the change in SPARK-18138, just peppers the documentation with notices about it.

## How was this patch tested?

Doc build

Author: Sean Owen <sowen@cloudera.com>

Closes #15733 from srowen/SPARK-18138.

## What changes were proposed in this pull request?

Point references to spark-packages.org to https://cwiki.apache.org/confluence/display/SPARK/Third+Party+Projects

This will be accompanied by a parallel change to the spark-website repo, and additional changes to this wiki.

## How was this patch tested?

Jenkins tests.

Author: Sean Owen <sowen@cloudera.com>

Closes #15075 from srowen/SPARK-17445.

## What changes were proposed in this pull request?

Made DataFrame-based API primary

* Spark doc menu bar and other places now link to ml-guide.html, not mllib-guide.html

* mllib-guide.html keeps RDD-specific list of features, with a link at the top redirecting people to ml-guide.html

* ml-guide.html includes a "maintenance mode" announcement about the RDD-based API

* **Reviewers: please check this carefully**

* (minor) Titles for DF API no longer include "- spark.ml" suffix. Titles for RDD API have "- RDD-based API" suffix

* Moved migration guide to ml-guide from mllib-guide

* Also moved past guides from mllib-migration-guides to ml-migration-guides, with a redirect link on mllib-migration-guides

* **Reviewers**: I did not change any of the content of the migration guides.

Reorganized DataFrame-based guide:

* ml-guide.html mimics the old mllib-guide.html page in terms of content: overview, migration guide, etc.

* Moved Pipeline description into ml-pipeline.html and moved tuning into ml-tuning.html

* **Reviewers**: I did not change the content of these guides, except some intro text.

* Sidebar remains the same, but with pipeline and tuning sections added

Other:

* ml-classification-regression.html: Moved text about linear methods to new section in page

## How was this patch tested?

Generated docs locally

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #14213 from jkbradley/ml-guide-2.0.

This PR:

* Clarifies that Spark *does* support Python 3, starting with Python 3.4.

Author: Nicholas Chammas <nicholas.chammas@gmail.com>

Closes #13017 from nchammas/supported-python-versions.

## What changes were proposed in this pull request?

This PR updates Scala and Hadoop versions in the build description and commands in `Building Spark` documents.

## How was this patch tested?

N/A

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #11838 from dongjoon-hyun/fix_doc_building_spark.

Remove Hadoop third party distro page, and move Hadoop cluster config info to configuration page

CC pwendell

Author: Sean Owen <sowen@cloudera.com>

Closes #9298 from srowen/SPARK-11305.