### What changes were proposed in this pull request?

This patch fixes the edge case of streaming left/right outer join described below:

Suppose the query is

`select * from A join B on A.id = B.id AND (A.ts <= B.ts AND B.ts <= A.ts + interval 5 seconds)`

and there are two rows, L1 (from A) and R1 (from B), such that L1.id = R1.id and L1.ts = R1.ts.

(A self-join is a simple way to picture this.)

Then Spark processes L1 and R1 as below:

- row L1 and row R1 are joined at batch 1

- row R1 is evicted at batch 2 due to join and watermark condition, whereas row L1 is not evicted

- row L1 is evicted at batch 3 due to join and watermark condition

When determining which outer rows to match with null, Spark relies on an assumption documented in a code comment, as follows:

```
Checking whether the current row matches a key in the right side state, and that key
has any value which satisfies the filter function when joined. If it doesn't,
we know we can join with null, since there was never (including this batch) a match
within the watermark period. If it does, there must have been a match at some point, so
we know we can't join with null.
```

But as the edge case above shows, the assumption is not correct. Since we don't have any good assumption to optimize with that is free of edge cases, we have to track whether each row has been matched with others before, and match it with a null row only when it has not been matched.
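
The failure can be reproduced with a toy model. This is a minimal sketch in plain Python, not Spark's code: `Row`, `join_condition`, and `null_join_by_state_lookup` are hypothetical names, the timestamps are made up, and eviction is done by hand to follow the batch sequence described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Row:
    name: str
    id: int
    ts: int  # event time in seconds (illustrative value)


def join_condition(left: Row, right: Row) -> bool:
    # A.id = B.id AND (A.ts <= B.ts AND B.ts <= A.ts + interval 5 seconds)
    return left.id == right.id and left.ts <= right.ts <= left.ts + 5


def null_join_by_state_lookup(evicted_left: Row, right_state: List[Row]) -> bool:
    """Assumption-based check: join with null if and only if no row
    currently held in the right-side state satisfies the join condition."""
    return not any(join_condition(evicted_left, r) for r in right_state)


L1 = Row("L1", id=1, ts=10)
R1 = Row("R1", id=1, ts=10)

# Batch 1: both rows are buffered and joined.
left_state, right_state = [L1], [R1]
joined = [(l.name, r.name)
          for l in left_state for r in right_state if join_condition(l, r)]

# Batch 2: R1 is evicted, L1 is not.
right_state = []

# Batch 3: L1 is evicted. The lookup sees an empty right-side state and
# concludes L1 never matched, although it was joined in batch 1.
emits_null = null_join_by_state_lookup(L1, right_state)
```

In this model `joined` contains the pair `("L1", "R1")` and `emits_null` is still `True`, so L1 would be output twice: once joined with R1 and once joined with null.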

To track matching, the patch adds a new state to the streaming join state manager and marks whether each row has been matched with others. We use this information when evicting rows that are candidates to be matched with null rows.
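
A minimal sketch of the matched-flag idea, in plain Python rather than Spark's Scala. `StateEntry`, `LeftSideState`, and their methods are hypothetical names used only for illustration, `L2` is an invented row that never finds a partner, and the sketch does not mirror the real state manager's storage layout.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class StateEntry:
    row: str               # stand-in for the buffered row
    matched: bool = False  # has this row ever joined with a row from the other side?


class LeftSideState:
    """Toy buffer for the left side of a left outer join (not Spark's API)."""

    def __init__(self) -> None:
        self._entries: Dict[str, StateEntry] = {}

    def add(self, row: str) -> None:
        self._entries[row] = StateEntry(row)

    def mark_matched(self, row: str) -> None:
        # Called whenever the row produces a joined output.
        self._entries[row].matched = True

    def evict(self, row: str) -> Optional[Tuple[str, None]]:
        """Remove the row; return a null-joined output only if it never matched."""
        entry = self._entries.pop(row)
        return None if entry.matched else (entry.row, None)


state = LeftSideState()
state.add("L1")
state.add("L2")
state.mark_matched("L1")       # batch 1: L1 joins with R1

l1_output = state.evict("L1")  # batch 3: already matched, nothing is emitted
l2_output = state.evict("L2")  # never matched, emitted joined with null
```

Because the flag travels with the row, the decision at eviction time no longer depends on whether the matching row is still present in the other side's state.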

This approach introduces a new state format that is not compatible with the old one. Queries using the old state format will keep running, but they will still have the issue; to get the fix, users must discard the checkpoint and rerun the query.

### Why are the changes needed?

This patch fixes a correctness issue.

### Does this PR introduce any user-facing change?

No from a compatibility viewpoint. However, we encourage end users who run a stream-stream outer join query with an old checkpoint to discard the checkpoint and rerun the query, so the answer could arguably be "yes".

### How was this patch tested?

Added a UT that fails on current Spark and passes with this patch. Existing streaming join UTs also pass.

Closes #26108 from HeartSaVioR/SPARK-26154-shorten-alternative.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

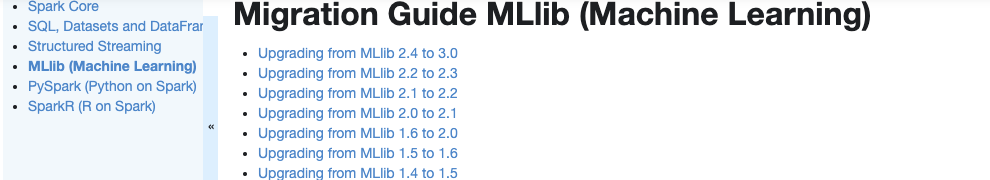

Currently, there is no migration section for PySpark, Spark Core and Structured Streaming.

It is difficult for users to know what to do when they upgrade.

This PR proposes to create a "Migration Guide" tab in the Spark documentation.

This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. Basically it is a refactoring.

Some new information is added as well; I will leave inline comments on it for easier review.

1. **MLlib**

Merge [ml-guide.html#migration-guide](https://spark.apache.org/docs/latest/ml-guide.html#migration-guide) and [ml-migration-guides.html](https://spark.apache.org/docs/latest/ml-migration-guides.html)

```
'docs/ml-guide.md'
        ↓ Merge new/old migration guides
'docs/ml-migration-guide.md'
```

2. **PySpark**

Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html

```
'docs/sql-migration-guide-upgrade.md'
        ↓ Extract PySpark specific items
'docs/pyspark-migration-guide.md'
```

3. **SparkR**

Move [sparkr.html#migration-guide](https://spark.apache.org/docs/latest/sparkr.html#migration-guide) into a separate file, and extract from [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html)

```
'docs/sparkr.md'                     'docs/sql-migration-guide-upgrade.md'
 Move migration guide section ↘     ↙ Extract SparkR specific items
                         docs/sparkr-migration-guide.md
```

4. **Core**

Newly created at `'docs/core-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

5. **Structured Streaming**

Newly created at `'docs/ss-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

6. **SQL**

Merged [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) and [sql-migration-guide-hive-compatibility.html](https://spark.apache.org/docs/latest/sql-migration-guide-hive-compatibility.html)

```
'docs/sql-migration-guide-hive-compatibility.md'     'docs/sql-migration-guide-upgrade.md'
 Move Hive compatibility section ↘                   ↙ Left over after filtering PySpark and SparkR items
                                  'docs/sql-migration-guide.md'
```

### Why are the changes needed?

So that users in production can migrate to newer versions effectively and detect behaviour changes or breaking changes before upgrading.

### Does this PR introduce any user-facing change?

Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html.

### How was this patch tested?

Manually built the docs. This can be verified as follows:

```bash
cd docs
SKIP_API=1 jekyll build
open _site/index.html
```
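
Beyond opening the index page, the build output could also be checked for the migration guide pages listed above. The following is a minimal sketch, not part of this PR: `expected_page` and `missing_pages` are hypothetical helpers, and the sketch assumes that Jekyll renders each `docs/<name>.md` to `_site/<name>.html`, as the existing documentation URLs suggest.

```python
from pathlib import Path
from typing import Iterable, List

# Source files named in this description (one per migration guide).
MIGRATION_SOURCES = [
    "docs/ml-migration-guide.md",
    "docs/pyspark-migration-guide.md",
    "docs/sparkr-migration-guide.md",
    "docs/core-migration-guide.md",
    "docs/ss-migration-guide.md",
    "docs/sql-migration-guide.md",
]


def expected_page(source: str) -> str:
    """Map 'docs/foo.md' to 'foo.html' (assumed Jekyll output name)."""
    return Path(source).with_suffix(".html").name


def missing_pages(site_dir: str,
                  sources: Iterable[str] = MIGRATION_SOURCES) -> List[str]:
    """Return the expected HTML pages that are absent from the built site."""
    site = Path(site_dir)
    return [expected_page(s) for s in sources
            if not (site / expected_page(s)).is_file()]


if __name__ == "__main__":
    # Run from the 'docs' directory after 'jekyll build'.
    print(missing_pages("_site"))
```

An empty list would indicate that every migration guide page was generated under the assumed naming.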

Closes #25757 from HyukjinKwon/migration-doc.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>