### What changes were proposed in this pull request?

This PR changes PySpark's `Column.getItem` to call `Column.getItem` on the Scala side instead of `Column.apply`.

### Why are the changes needed?

The current behavior is not consistent with that of Scala.

In PySpark:

```Python
# Assumes an active SparkSession bound to `spark`.
from pyspark.sql.functions import col, create_map, lit

df = spark.range(2)
map_col = create_map(lit(0), lit(100), lit(1), lit(200))
df.withColumn("mapped", map_col.getItem(col('id'))).show()
# +---+------+
# | id|mapped|
# +---+------+
# |  0|   100|
# |  1|   200|
# +---+------+
```

In Scala:

```Scala
import org.apache.spark.sql.functions._

val df = spark.range(2)
val map_col = map(lit(0), lit(100), lit(1), lit(200))
// The getItem call below throws the following exception, which is the right behavior:
// java.lang.RuntimeException: Unsupported literal type class org.apache.spark.sql.Column id
//   at org.apache.spark.sql.catalyst.expressions.Literal$.apply(literals.scala:78)
//   at org.apache.spark.sql.Column.getItem(Column.scala:856)
//   ... 49 elided
df.withColumn("mapped", map_col.getItem(col("id"))).show
```
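The stack trace above suggests why the two sides differ: Scala's `getItem` hands its key to a literal constructor, which rejects a `Column`, whereas the path PySpark used before (`Column.apply`) evidently accepts one. The following is a toy model in plain Python, not Spark code. The `Column` class and the `to_literal`, `get_item` and `apply` functions are hypothetical stand-ins introduced only to illustrate the strict and lenient paths.

```python
class Column:
    """Hypothetical stand-in for a column expression (not the PySpark class)."""

    def __init__(self, name):
        self.name = name


def to_literal(value):
    """Strict literal constructor: plain values only, never a Column."""
    if isinstance(value, Column):
        raise TypeError(
            f"Unsupported literal type {type(value).__name__} {value.name}"
        )
    return ("literal", value)


def get_item(key):
    """Strict path: always treats the key as a literal."""
    return ("extract", to_literal(key))


def apply(key):
    """Lenient path: passes a Column through, wraps anything else as a literal."""
    if isinstance(key, Column):
        return ("extract", ("column", key.name))
    return ("extract", to_literal(key))
```

In this model, `get_item(0)` and `apply(0)` build the same expression, while only `apply` accepts `Column("id")`; `get_item(Column("id"))` raises, mirroring the Scala behavior shown above.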

### Does this PR introduce any user-facing change?

Yes. A user who wants to pass a `Column` object to `getItem` now needs to use the indexing operator to get the previous behavior.

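```Python
# Assumes an active SparkSession bound to `spark`.
from pyspark.sql.functions import col, create_map, lit

df = spark.range(2)
map_col = create_map(lit(0), lit(100), lit(1), lit(200))
# Index with [] when the key is itself a Column.
df.withColumn("mapped", map_col[col('id')]).show()
# +---+------+
# | id|mapped|
# +---+------+
# |  0|   100|
# |  1|   200|
# +---+------+
```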

### How was this patch tested?

Existing tests.

Closes #26351 from imback82/spark-29664.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Currently, there is no migration section for PySpark, SparkCore and Structured Streaming.

It is difficult for users to know what to do when they upgrade.

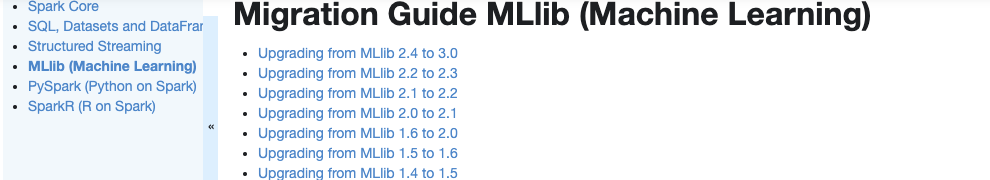

This PR proposes to create a "Migration Guide" tab in the Spark documentation.

This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. It is mostly a refactoring of existing content.

Some new information has been added; I will leave inline comments on those parts for easier review.

1. **MLlib**

Merge [ml-guide.html#migration-guide](https://spark.apache.org/docs/latest/ml-guide.html#migration-guide) and [ml-migration-guides.html](https://spark.apache.org/docs/latest/ml-migration-guides.html)

```
'docs/ml-guide.md'
        ↓ Merge new/old migration guides
'docs/ml-migration-guide.md'
```

2. **PySpark**

Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html

```
'docs/sql-migration-guide-upgrade.md'
        ↓ Extract PySpark specific items
'docs/pyspark-migration-guide.md'
```

3. **SparkR**

Move [sparkr.html#migration-guide](https://spark.apache.org/docs/latest/sparkr.html#migration-guide) into a separate file, and extract from [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html)

```
'docs/sparkr.md'                     'docs/sql-migration-guide-upgrade.md'
 Move migration guide section ↘     ↙ Extract SparkR specific items
                 'docs/sparkr-migration-guide.md'
```

4. **Core**

Newly created at `'docs/core-migration-guide.md'`. I skimmed the JIRAs resolved in 3.0.0 and found some items worth noting.

5. **Structured Streaming**

Newly created at `'docs/ss-migration-guide.md'`. I skimmed the JIRAs resolved in 3.0.0 and found some items worth noting.

6. **SQL**

Merged [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) and [sql-migration-guide-hive-compatibility.html](https://spark.apache.org/docs/latest/sql-migration-guide-hive-compatibility.html)

```
'docs/sql-migration-guide-hive-compatibility.md'     'docs/sql-migration-guide-upgrade.md'
 Move Hive compatibility section ↘                   ↙ Left over after filtering PySpark and SparkR items
                                  'docs/sql-migration-guide.md'
```

### Why are the changes needed?

Users running Spark in production need to be able to migrate to newer versions effectively, and to detect behavior changes or breaking changes before upgrading.

### Does this PR introduce any user-facing change?

Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html.

### How was this patch tested?

Manually built the docs. The result can be verified as follows:

```bash
cd docs
SKIP_API=1 jekyll build
open _site/index.html
```
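As an optional extra check, the file moves listed above could be verified with a small script. This is only a sketch: the file names come from this description, while the `missing_guides` helper and the `EXPECTED_GUIDES` list are hypothetical names that are not part of the patch.

```python
from pathlib import Path

# Migration guide files named in this PR description.
EXPECTED_GUIDES = [
    "ml-migration-guide.md",
    "pyspark-migration-guide.md",
    "sparkr-migration-guide.md",
    "core-migration-guide.md",
    "ss-migration-guide.md",
    "sql-migration-guide.md",
]


def missing_guides(docs_dir):
    """Return the expected guide files that are absent from docs_dir."""
    root = Path(docs_dir)
    return [name for name in EXPECTED_GUIDES if not (root / name).is_file()]
```

Calling `missing_guides("docs")` from the repository root should return an empty list if every guide listed above is present.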

Closes #25757 from HyukjinKwon/migration-doc.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>