### What changes were proposed in this pull request?

```sql
spark-sql> set spark.sql.adaptive.coalescePartitions.initialPartitionNum=1;
spark.sql.adaptive.coalescePartitions.initialPartitionNum 1
Time taken: 2.18 seconds, Fetched 1 row(s)
spark-sql> set mapred.reduce.tasks;
21/04/21 14:27:11 WARN SetCommand: Property mapred.reduce.tasks is deprecated, showing spark.sql.shuffle.partitions instead.
spark.sql.shuffle.partitions 1
Time taken: 0.03 seconds, Fetched 1 row(s)
spark-sql> set spark.sql.shuffle.partitions;
spark.sql.shuffle.partitions 200
Time taken: 0.024 seconds, Fetched 1 row(s)
spark-sql> set mapred.reduce.tasks=2;
21/04/21 14:31:52 WARN SetCommand: Property mapred.reduce.tasks is deprecated, automatically converted to spark.sql.shuffle.partitions instead.
spark.sql.shuffle.partitions 2
Time taken: 0.017 seconds, Fetched 1 row(s)
spark-sql> set mapred.reduce.tasks;
21/04/21 14:31:55 WARN SetCommand: Property mapred.reduce.tasks is deprecated, showing spark.sql.shuffle.partitions instead.
spark.sql.shuffle.partitions 1
Time taken: 0.017 seconds, Fetched 1 row(s)
spark-sql>
```

`mapred.reduce.tasks` is mapped to `spark.sql.shuffle.partitions` on the write side, but when it is read back, `spark.sql.adaptive.coalescePartitions.initialPartitionNum` might take precedence over `spark.sql.shuffle.partitions`.
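The read/write asymmetry in the session above can be modelled in a few lines of plain Python. This is only a toy sketch, not Spark's `SetCommand`: the `Conf` class and the two `read_deprecated_*` helpers are hypothetical names, and the "before" lookup order is inferred from the log output rather than taken from the source.

```python
SHUFFLE = "spark.sql.shuffle.partitions"
INITIAL = "spark.sql.adaptive.coalescePartitions.initialPartitionNum"
DEPRECATED = "mapred.reduce.tasks"


class Conf:
    """Hypothetical stand-in for a SQL conf; it only models the keys above."""

    def __init__(self):
        self.settings = {SHUFFLE: "200"}

    def set(self, key, value):
        # Writes to the deprecated key are redirected to SHUFFLE.
        self.settings[SHUFFLE if key == DEPRECATED else key] = value


def read_deprecated_before(conf):
    # Assumed old behaviour: INITIAL, when set, shadows SHUFFLE on reads.
    return conf.settings.get(INITIAL, conf.settings[SHUFFLE])


def read_deprecated_after(conf):
    # Behaviour described by this PR: always report SHUFFLE.
    return conf.settings[SHUFFLE]


conf = Conf()
conf.set(INITIAL, "1")
conf.set(DEPRECATED, "2")
print(read_deprecated_before(conf))  # reports INITIAL, not the value just set
print(read_deprecated_after(conf))   # reports the value written via the alias
```

With the "after" lookup, a value written through the deprecated key is the value that comes back, regardless of whether the adaptive setting exists.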

### Why are the changes needed?

To make `mapred.reduce.tasks` round-trip: the value that is set should be the value that is read back.

### Does this PR introduce _any_ user-facing change?

Yes, `mapred.reduce.tasks` will always report `spark.sql.shuffle.partitions`, whether or not `spark.sql.adaptive.coalescePartitions.initialPartitionNum` is set.

### How was this patch tested?

a new test

Closes #32265 from yaooqinn/SPARK-35168.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Kent Yao <yao@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade Kafka client to 2.8.0.

Note that Kafka 2.8.0 uses ZSTD JNI 1.4.9-1 like Apache Spark 3.2.0.

### Why are the changes needed?

This will bring the latest client-side improvements and bug fixes, such as the following.

- KAFKA-10631 ProducerFencedException is not Handled on Offest Commit

- KAFKA-10134 High CPU issue during rebalance in Kafka consumer after upgrading to 2.5

- KAFKA-12193 Re-resolve IPs when a client is disconnected

- KAFKA-10090 Misleading warnings: The configuration was supplied but isn't a known config

- KAFKA-9263 The new hw is added to incorrect log when ReplicaAlterLogDirsThread is replacing log

- KAFKA-10607 Ensure the error counts contains the NONE

- KAFKA-10458 Need a way to update quota for TokenBucket registered with Sensor

- KAFKA-10503 MockProducer doesn't throw ClassCastException when no partition for topic

**RELEASE NOTE**

- https://downloads.apache.org/kafka/2.8.0/RELEASE_NOTES.html

- https://downloads.apache.org/kafka/2.7.0/RELEASE_NOTES.html

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs with the existing tests because this is a dependency change.

Closes #32325 from dongjoon-hyun/SPARK-33913.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

1. Remove the existing aggregator and use a new aggregator that supports virtual centering.

2. Add related test suites.

### Why are the changes needed?

Centering the vectors should accelerate convergence and produce solutions closer to those of R.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Updated the existing test suites and added new ones.

Closes #32124 from zhengruifeng/svc_agg_refactor.

Authored-by: Ruifeng Zheng <ruifengz@foxmail.com>

Signed-off-by: Ruifeng Zheng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Avoid recomputing the number of pending speculative tasks in `ExecutorAllocationManager`, and remove some unnecessary code.

### Why are the changes needed?

The number of pending speculative tasks is recomputed in `ExecutorAllocationManager` when calculating the maximum number of executors required. However, it only needs to be computed once, and doing so improves performance.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #32306 from weixiuli/SPARK-35200.

Authored-by: weixiuli <weixiuli@jd.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

A simple test that writes and reads an encrypted Parquet file and verifies that it is encrypted by checking its magic string (in encrypted footer mode).

### Why are the changes needed?

To provide test coverage for Parquet encryption.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

- [x] [SBT / Hadoop 3.2 / Java8 (the default)](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137785/testReport)

- [ ] ~SBT / Hadoop 3.2 / Java11 by adding [test-java11] to the PR title.~ (Jenkins Java11 build is broken due to missing JDK11 installation)

- [x] [SBT / Hadoop 2.7 / Java8 by adding [test-hadoop2.7] to the PR title.](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137836/testReport)

- [x] Maven / Hadoop 3.2 / Java8 by adding [test-maven] to the PR title.

- [x] Maven / Hadoop 2.7 / Java8 by adding [test-maven][test-hadoop2.7] to the PR title.

Closes #32146 from andersonm-ibm/pme_testing.

Authored-by: Maya Anderson <mayaa@il.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR proposes to upgrade Jetty to 9.4.40.

### Why are the changes needed?

SPARK-34988 (#32091) upgraded Jetty to 9.4.39 for CVE-2021-28165.

But after the upgrade, Jetty 9.4.40 was released to fix the ERR_CONNECTION_RESET issue (https://github.com/eclipse/jetty.project/issues/6152).

This issue seems to affect Jetty 9.4.39 when POST method is used with SSL.

For Spark, job submission using REST and ThriftServer with HTTPS protocol can be affected.

### Does this PR introduce _any_ user-facing change?

No. No released version uses Jetty 9.4.39.

### How was this patch tested?

CI.

Closes #32318 from sarutak/upgrade-jetty-9.4.40.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

This patch proposes to add a couple of metrics to the scan node for Kafka micro-batch streaming queries.

### Why are the changes needed?

When testing Structured Streaming (SS), I found it hard to track data loss when SS reads from Kafka. The micro-batch scan node has only one metric, the number of output rows. Users have no idea how many of the offsets to fetch are out of range in Kafka, or how many times data loss happens. These metrics help users judge the quality of a running SS query.

### Does this PR introduce _any_ user-facing change?

Yes, this adds two metrics to the micro-batch scan node for Kafka micro-batch streaming.

### How was this patch tested?

Currently I tested on internal cluster with Kafka:

<img width="1193" alt="Screen Shot 2021-04-22 at 7 16 29 PM" src="https://user-images.githubusercontent.com/68855/115808460-61bf8100-a39f-11eb-99a9-65d22c3f5fb0.png">

I tried to add a unit test, but our micro-batch streaming query does not allow specifying ending offsets. If I specify only an out-of-range starting offset, any negative-size range is filtered out when we compute the offset ranges in `getRanges`. So a unit test cannot actually exercise the case of fetching a non-existent offset.

Closes #31398 from viirya/micro-batch-metrics.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

`CatalystTypeConverters` is useful when the types of the input data classes are not known statically (otherwise we can use `ExpressionEncoder`). However, the current `CatalystTypeConverters` requires you to know the datetime data class statically, which makes it hard to use.

This PR improves the `CatalystTypeConverters` for date/timestamp, to support the old and new Java time classes at the same time.

### Why are the changes needed?

Make `CatalystTypeConverters` easier to use.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

new test

Closes #32312 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Format empty grouping set exception in CUBE/ROLLUP

### Why are the changes needed?

Format empty grouping set exception in CUBE/ROLLUP

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #32307 from AngersZhuuuu/SPARK-35201.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Added the following TreePattern enums:

- AND_OR

- BINARY_ARITHMETIC

- BINARY_COMPARISON

- CASE_WHEN

- CAST

- CONCAT

- COUNT

- IF

- LIKE_FAMLIY

- NOT

- NULL_CHECK

- UNARY_POSITIVE

- UPPER_OR_LOWER

Used them in the following rules:

- ConstantPropagation

- ReorderAssociativeOperator

- BooleanSimplification

- SimplifyBinaryComparison

- SimplifyCaseConversionExpressions

- SimplifyConditionals

- PushFoldableIntoBranches

- LikeSimplification

- NullPropagation

- SimplifyCasts

- RemoveDispensableExpressions

- CombineConcats

### Why are the changes needed?

Reduce the number of tree traversals and hence improve the query compilation latency.
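The pruning idea can be sketched with a toy tree in plain Python. This is an illustration under stated assumptions, not Catalyst code: the `Node` class and `count_visits` helper are hypothetical, a Python `set` stands in for whatever compact representation the real implementation uses, and the `LITERAL` tag is only an example leaf label. Each node records which patterns occur anywhere in its subtree, so a rule can skip a subtree that cannot contain a match.

```python
class Node:
    """Toy expression node that caches the patterns found in its subtree."""

    def __init__(self, pattern, children=()):
        self.pattern = pattern
        self.children = list(children)
        # Union of this node's own pattern and all descendants' patterns.
        self.patterns = {pattern}.union(*(c.patterns for c in self.children))


def count_visits(node, wanted):
    """Count the nodes a rule looking for `wanted` has to visit."""
    if wanted not in node.patterns:
        return 0  # the whole subtree is skipped without being traversed
    return 1 + sum(count_visits(child, wanted) for child in node.children)


tree = Node("AND_OR", [
    Node("CAST", [Node("LITERAL")]),
    Node("CONCAT", [Node("LITERAL"), Node("LITERAL")]),
])

print(count_visits(tree, "CONCAT"))       # only the path to the CONCAT node
print(count_visits(tree, "LIKE_FAMLIY"))  # pattern absent: nothing visited
```

A rule that only cares about `CONCAT` touches two of the six nodes here, and a rule whose pattern is absent returns immediately at the root.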

### How was this patch tested?

Existing tests.

Closes #32280 from sigmod/expression.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Extract the common documentation about the Hive format so that `sql-ref-syntax-ddl-create-table-hiveformat.md` and `sql-ref-syntax-qry-select-transform.md` can both refer to it.

### Why are the changes needed?

Improve doc

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #32264 from AngersZhuuuu/SPARK-35159.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add default log config for spark-sql

### Why are the changes needed?

The default log level for spark-sql is `WARN`. It is confusing how to change the log level, so we need a default config.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Changed the config `log4j.logger.org.apache.spark.sql.hive.thriftserver.SparkSQLCLIDriver=INFO` in `log4j.properties` and confirmed that spark-sql's default log level changed.

Closes #32248 from hddong/spark-35413.

Lead-authored-by: hongdongdong <hongdongdong@cmss.chinamobile.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

For partial hash aggregation (code-gen path), we have two levels of hash map for aggregation. The first level comes from `RowBasedHashMapGenerator` and is computationally faster than the second level, `UnsafeFixedWidthAggregationMap`. Introducing the two-level hash map improves the CPU performance of queries, because the first-level hash map normally fits in the hardware cache and uses a cheaper hash function for key lookup.

For final hash aggregation, we can also support a two-level hash map to improve query performance further.

The original two-level hash map code works for final aggregation mostly out of the box. The major change here is to support testing the fallback of final aggregation (see the changes related to `bitMaxCapacity` and `checkFallbackForGeneratedHashMap`).

Example:

An aggregation query:

```
spark.sql(
  """
    |SELECT key, avg(value)
    |FROM agg1
    |GROUP BY key
  """.stripMargin)
```

The generated code for final aggregation is [here](https://gist.github.com/c21/20c10cc8e2c7e561aafbe9b8da055242).

An aggregation query with testing fallback:

```
withSQLConf("spark.sql.TungstenAggregate.testFallbackStartsAt" -> "2, 3") {
  spark.sql(
    """
      |SELECT key, avg(value)
      |FROM agg1
      |GROUP BY key
    """.stripMargin)
}
```

The generated code for final aggregation is [here](https://gist.github.com/c21/dabf176cbc18a5e2138bc0a29e81c878). Note that the counter condition for the first-level fast map is no longer there.
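The two-level lookup can be pictured with a toy model in plain Python. This is only a sketch of the general idea, assuming a bounded first-level map that spills new keys to a second-level map once it is full; the `TwoLevelSumMap` class and its `fast_capacity` parameter are hypothetical and say nothing about how the generated code or `UnsafeFixedWidthAggregationMap` are actually implemented.

```python
class TwoLevelSumMap:
    """Toy sum-aggregation buffer with a bounded fast map and a fallback map."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = {}      # first level: small, cheap lookups
        self.fallback = {}  # second level: unbounded

    def add(self, key, value):
        if key in self.fast:
            self.fast[key] += value
        elif len(self.fast) < self.fast_capacity:
            self.fast[key] = value
        else:
            # The fast map is full, so new keys go to the second level.
            self.fallback[key] = self.fallback.get(key, 0) + value

    def results(self):
        # Merge both levels into the final aggregation result.
        merged = dict(self.fallback)
        for key, value in self.fast.items():
            merged[key] = merged.get(key, 0) + value
        return merged


rows = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
agg = TwoLevelSumMap(fast_capacity=2)
for key, value in rows:
    agg.add(key, value)
print(agg.fast, agg.fallback, agg.results())
```

Setting `fast_capacity=0` forces every key through the second level, which loosely mirrors what a forced-fallback test setting is for: the result must be the same either way.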

### Why are the changes needed?

Improve the CPU performance of hash aggregation query in general.

For `AggregateBenchmark."Aggregate w multiple keys"`, we see query performance improve by 10%.

`codegen = T` means whole-stage code-gen is enabled.

`hashmap = T` means two-level maps are enabled for partial aggregation.

`finalhashmap = T` means two-level maps are enabled for final aggregation.

```
Running benchmark: Aggregate w multiple keys
Running case: codegen = F
Stopped after 2 iterations, 8284 ms
Running case: codegen = T hashmap = F
Stopped after 2 iterations, 5424 ms
Running case: codegen = T hashmap = T finalhashmap = F
Stopped after 2 iterations, 4753 ms
Running case: codegen = T hashmap = T finalhashmap = T
Stopped after 2 iterations, 4508 ms

Java HotSpot(TM) 64-Bit Server VM 1.8.0_181-b13 on Mac OS X 10.15.7
Intel(R) Core(TM) i9-9980HK CPU 2.40GHz
Aggregate w multiple keys:                  Best Time(ms)   Avg Time(ms)   Stdev(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------------------------------
codegen = F                                          3881           4142         370          5.4         185.1       1.0X
codegen = T hashmap = F                              2701           2712          16          7.8         128.8       1.4X
codegen = T hashmap = T finalhashmap = F             2363           2377          19          8.9         112.7       1.6X
codegen = T hashmap = T finalhashmap = T             2252           2254           3          9.3         107.4       1.7X
```

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit tests in `HashAggregationQuerySuite` and `HashAggregationQueryWithControlledFallbackSuite` already cover this change.

Closes #32242 from c21/agg.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Move the following classes:

- `InMemoryAtomicPartitionTable`

- `InMemoryPartitionTable`

- `InMemoryPartitionTableCatalog`

- `InMemoryTable`

- `InMemoryTableCatalog`

- `StagingInMemoryTableCatalog`

from `org.apache.spark.sql.connector` to `org.apache.spark.sql.connector.catalog`.

### Why are the changes needed?

These classes implement catalog related interfaces but reside in `org.apache.spark.sql.connector`. A more suitable place should be `org.apache.spark.sql.connector.catalog`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

N/A

Closes #32302 from sunchao/SPARK-35195.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Added the following TreePattern enums:

- DYNAMIC_PRUNING_SUBQUERY

- EXISTS_SUBQUERY

- IN_SUBQUERY

- LIST_SUBQUERY

- PLAN_EXPRESSION

- SCALAR_SUBQUERY

- FILTER

Used them in the following rules:

- ResolveSubquery

- UpdateOuterReferences

- OptimizeSubqueries

- RewritePredicateSubquery

- PullupCorrelatedPredicates

- RewriteCorrelatedScalarSubquery (not the rule itself but an internal transform call, the full support is in SPARK-35148)

- InsertAdaptiveSparkPlan

- PlanAdaptiveSubqueries

### Why are the changes needed?

Reduce the number of tree traversals and hence improve the query compilation latency.

### How was this patch tested?

Existing tests.

Closes #32247 from sigmod/subquery.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Removes PySpark-version-dependent code from the pyspark.pandas tests.

### Why are the changes needed?

There are several places that check the PySpark version and switch the logic accordingly, but those checks are no longer necessary.

We should remove them.

We will do the same thing after we finish porting tests.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #32300 from xinrong-databricks/port.rmv_spark_version_chk_in_tests.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

### What changes were proposed in this pull request?

This PR aims to support driver-owned on-demand PVCs (Persistent Volume Claims). This means dynamically created PVCs will have an `ownerReference` pointing to the `driver` pod instead of the `executor` pod.

### Why are the changes needed?

This allows the K8s backend scheduler to reuse these PVCs later.

**BEFORE**

```
$ k get pvc tpcds-pvc-exec-1-pvc-0 -oyaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
...
  ownerReferences:
  - apiVersion: v1
    controller: true
    kind: Pod
    name: tpcds-pvc-exec-1
```

**AFTER**

```
$ k get pvc tpcds-pvc-exec-1-pvc-0 -oyaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
...
  ownerReferences:
  - apiVersion: v1
    controller: true
    kind: Pod
    name: tpcds-pvc
```

### Does this PR introduce _any_ user-facing change?

No. (The default is `false`)

### How was this patch tested?

Manually check the above and pass K8s IT.

```
KubernetesSuite:
- Run SparkPi with no resources
- Run SparkPi with a very long application name.
- Use SparkLauncher.NO_RESOURCE
- Run SparkPi with a master URL without a scheme.
- Run SparkPi with an argument.
- Run SparkPi with custom labels, annotations, and environment variables.
- All pods have the same service account by default
- Run extraJVMOptions check on driver
- Run SparkRemoteFileTest using a remote data file
- Verify logging configuration is picked from the provided SPARK_CONF_DIR/log4j.properties
- Run SparkPi with env and mount secrets.
- Run PySpark on simple pi.py example
- Run PySpark to test a pyfiles example
- Run PySpark with memory customization
- Run in client mode.
- Start pod creation from template
- PVs with local storage
- Launcher client dependencies
- SPARK-33615: Launcher client archives
- SPARK-33748: Launcher python client respecting PYSPARK_PYTHON
- SPARK-33748: Launcher python client respecting spark.pyspark.python and spark.pyspark.driver.python
- Launcher python client dependencies using a zip file
- Test basic decommissioning
- Test basic decommissioning with shuffle cleanup
- Test decommissioning with dynamic allocation & shuffle cleanups
- Test decommissioning timeouts
- Run SparkR on simple dataframe.R example
Run completed in 16 minutes, 40 seconds.
Total number of tests run: 27
Suites: completed 2, aborted 0
Tests: succeeded 27, failed 0, canceled 0, ignored 0, pending 0
All tests passed.
```

Closes #32288 from dongjoon-hyun/SPARK-35182.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Consolidate PySpark testing utils by removing `python/pyspark/pandas/testing`, and then creating a file `pandasutils` under `python/pyspark/testing` for test utilities used in `pyspark/pandas`.

### Why are the changes needed?

`python/pyspark/pandas/testing` holds test utilities for pandas-on-Spark, and `python/pyspark/testing` contains test utilities for PySpark. Consolidating them makes the code cleaner and easier to maintain.

Updated import statements are as shown below:

- `from pyspark.testing.sqlutils import SQLTestUtils`

- `from pyspark.testing.pandasutils import PandasOnSparkTestCase, TestUtils`

(`PandasOnSparkTestCase` is the original `ReusedSQLTestCase` in `python/pyspark/pandas/testing/utils.py`)

Minor improvements include:

- Use of the missing library's `requirement_message`

- `except ImportError` rather than a bare `except`

- Importing `pyspark.pandas` under the alias `ps` rather than `pp`

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests under python/pyspark/pandas/tests.

Closes #32177 from xinrong-databricks/port.merge_utils.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

### What changes were proposed in this pull request?

If the sign `-` is inside the interval string, everything works after bb5459fb26:

```
spark-sql> SELECT INTERVAL '-178956970-8' YEAR TO MONTH;
-178956970-8
```

but a sign outside the interval string is not handled properly:

```
spark-sql> SELECT INTERVAL -'178956970-8' YEAR TO MONTH;
Error in query:
Error parsing interval year-month string: integer overflow(line 1, pos 16)

== SQL ==
SELECT INTERVAL -'178956970-8' YEAR TO MONTH
----------------^^^
```

This PR fixes this issue.

### Why are the changes needed?

Fix bug

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #32296 from AngersZhuuuu/SPARK-35187.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR makes window frames support `YearMonthIntervalType` and `DayTimeIntervalType`.

### Why are the changes needed?

Extend the functionality of window frames.

### Does this PR introduce _any_ user-facing change?

Yes. Users can use `YearMonthIntervalType` or `DayTimeIntervalType` as the sort expression for a window frame.

### How was this patch tested?

New tests

Closes #32294 from beliefer/SPARK-35110.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Use transformAllExpressions instead of transformExpressionsDown in CombineConcats. The latter only transforms the root plan node.

### Why are the changes needed?

It allows CombineConcats to cover more cases where `concat` expressions are not in the root plan node.
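The difference between rewriting only the root plan node and rewriting every plan node can be shown with a toy model in plain Python. Everything here is hypothetical scaffolding (the `Plan` class, the tuple encoding of `concat`, and the two `rewrite_*` helpers); it illustrates the coverage gap described above and is not how Catalyst represents plans or expressions.

```python
class Plan:
    """Toy plan node: a list of expressions plus child plans."""

    def __init__(self, exprs, children=()):
        self.exprs = list(exprs)
        self.children = list(children)


def flatten_concat(expr):
    """Flatten nested ("concat", ...) tuples into a single concat call."""
    if isinstance(expr, tuple) and expr[0] == "concat":
        args = []
        for arg in expr[1:]:
            arg = flatten_concat(arg)
            if isinstance(arg, tuple) and arg[0] == "concat":
                args.extend(arg[1:])  # splice the inner concat's arguments
            else:
                args.append(arg)
        return ("concat", *args)
    return expr


def rewrite_root_only(plan, rule):
    # Applies the rule to the root node's expressions; children are untouched.
    return Plan([rule(e) for e in plan.exprs], plan.children)


def rewrite_all_nodes(plan, rule):
    # Applies the rule to the expressions of every node in the plan.
    return Plan([rule(e) for e in plan.exprs],
                [rewrite_all_nodes(child, rule) for child in plan.children])


nested = ("concat", ("concat", "a", "b"), "c")
plan = Plan(["x"], [Plan([nested])])  # the concat lives in a child node

print(rewrite_root_only(plan, flatten_concat).children[0].exprs)
print(rewrite_all_nodes(plan, flatten_concat).children[0].exprs)
```

Only the second rewrite reaches the nested `concat`, because the expression sits below the root of the plan.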

### How was this patch tested?

Unit test. The updated tests would fail without the code change.

Closes #32290 from sigmod/concat.

Authored-by: Yingyi Bu <yingyi.bu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

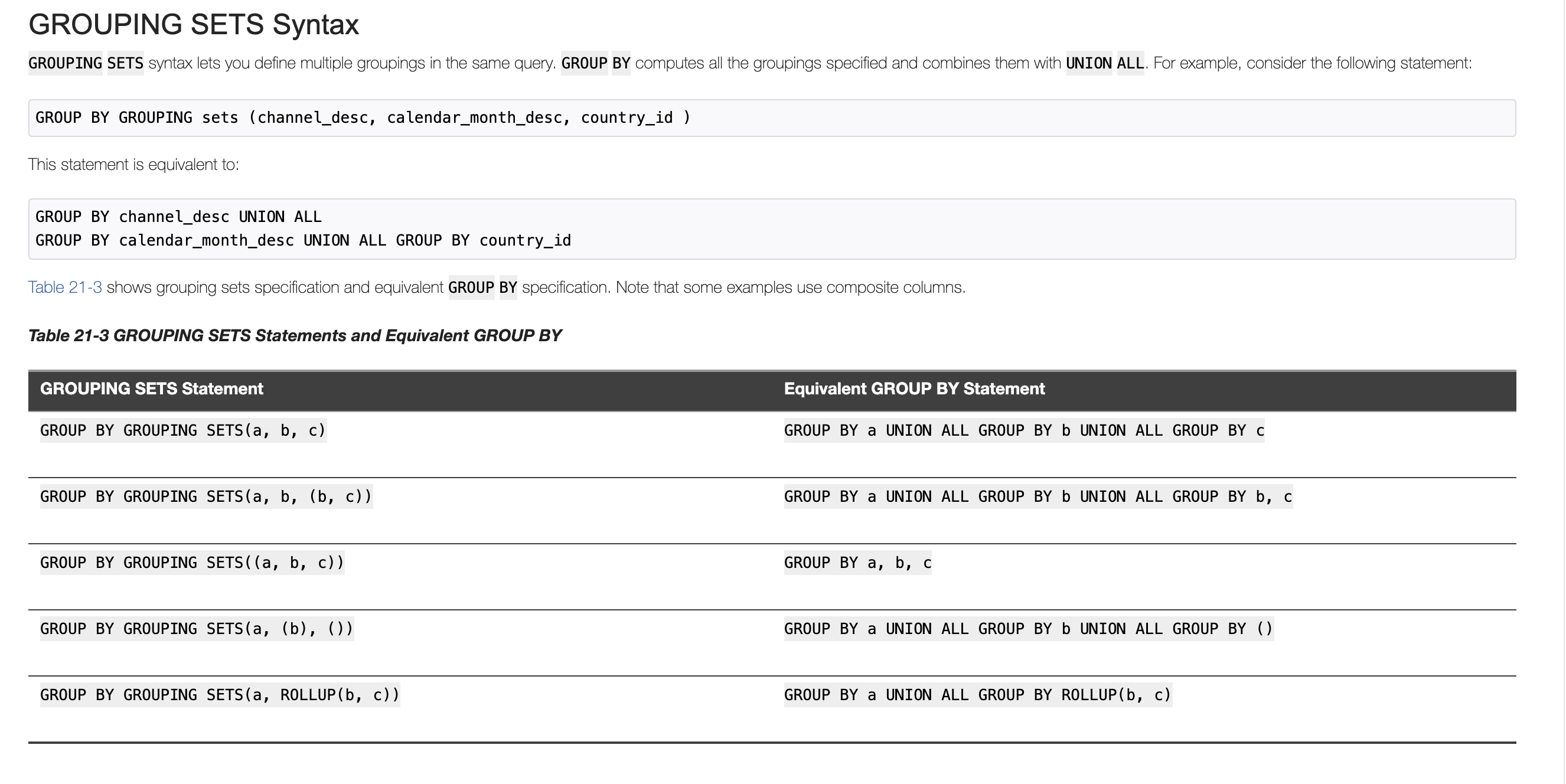

### What changes were proposed in this pull request?

PostgreSQL and Oracle both support using CUBE/ROLLUP/GROUPING SETS inside a GROUPING SETS clause as syntactic sugar.

In this PR, we support it in Spark SQL too.

### Why are the changes needed?

Stay consistent with PostgreSQL and Oracle.

### Does this PR introduce _any_ user-facing change?

Users can write grouping analytics such as:

```
SELECT a, b, count(1) FROM testData GROUP BY a, GROUPING SETS(ROLLUP(a, b));
SELECT a, b, count(1) FROM testData GROUP BY a, GROUPING SETS((a, b), (a), ());
SELECT a, b, count(1) FROM testData GROUP BY a, GROUPING SETS(GROUPING SETS((a, b), (a), ()));
```
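For readers less familiar with the shorthand, the standard expansion of `ROLLUP` and `CUBE` into explicit grouping sets can be sketched in plain Python. The `rollup` and `cube` helpers below are hypothetical and only enumerate column tuples; they do not reflect how Spark's analyzer performs the rewrite.

```python
from itertools import combinations


def rollup(cols):
    """ROLLUP(c1, ..., cn): every prefix of the column list, longest first."""
    return [tuple(cols[:i]) for i in range(len(cols), -1, -1)]


def cube(cols):
    """CUBE(c1, ..., cn): every subset of the column list, largest first."""
    return [subset
            for size in range(len(cols), -1, -1)
            for subset in combinations(cols, size)]


print(rollup(["a", "b"]))  # the sets spelled out in the second query above
print(cube(["a", "b"]))
```

`rollup(["a", "b"])` yields `(a, b)`, `(a)` and `()`, which is the explicit list written out in the second query.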

### How was this patch tested?

Added Test

Closes #32201 from AngersZhuuuu/SPARK-35026.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes a change that allows us to build SparkR with SBT.

### Why are the changes needed?

In the current master, SparkR can be built only with Maven.

It would be helpful to be able to build it with SBT.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed that I can build SparkR on Ubuntu 20.04 with the following command.

```
build/sbt -Psparkr package
```

Closes #32285 from sarutak/sbt-sparkr.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`IntervalUtils.fromYearMonthString` should handle `Int.MinValue` months correctly.

The current logic uses `Math.addExact(Math.multiplyExact(years, 12), months)` to compute the total months. Using it to compute a negative total overflows when the actual total is `Int.MinValue`. This PR fixes the bug.
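The overflow can be checked by hand with the string `-178956970-8`: 178956970 × 12 + 8 = 2147483648, which is one more than the largest 32-bit integer, while the negated total, -2147483648, is exactly `Int.MinValue` and does fit. The plain-Python sketch below models 32-bit exact arithmetic to show this. The helper names are hypothetical, the assumption that the magnitude is computed before the sign is applied is inferred from the description, and applying the sign to each component first is just one possible remedy, not necessarily the one this PR uses.

```python
INT_MIN, INT_MAX = -2**31, 2**31 - 1


def check_int(value):
    """Mimic an exact 32-bit operation: raise instead of wrapping around."""
    if not INT_MIN <= value <= INT_MAX:
        raise OverflowError("integer overflow")
    return value


def add_exact(a, b):
    return check_int(a + b)


def multiply_exact(a, b):
    return check_int(a * b)


def magnitude_then_negate(years, months):
    # Assumed old order: build the positive total first, negate afterwards.
    return check_int(-add_exact(multiply_exact(years, 12), months))


def negate_then_total(years, months):
    # One possible remedy: apply the sign to each component first.
    return add_exact(multiply_exact(-years, 12), -months)


print(negate_then_total(178956970, 8))
try:
    magnitude_then_negate(178956970, 8)
except OverflowError as err:
    print("old order fails:", err)
```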

### Why are the changes needed?

IntervalUtils.fromYearMonthString should handle Int.MinValue months correctly

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #32281 from AngersZhuuuu/SPARK-35177.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Close SparkContext after the Main method has finished, to allow SparkApplication on K8S to complete.

This is a fixed version of the [merged and reverted PR](https://github.com/apache/spark/pull/32081).

### Why are the changes needed?

If I don't call `sparkContext.stop()` explicitly, the Spark driver process doesn't terminate even after its Main method has completed. This behaviour differs from Spark on YARN, where stopping the SparkContext manually is not required. The problem appears to be the use of non-daemon threads, which prevent the driver JVM process from terminating.

So I have inserted code that closes the SparkContext automatically.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manually on the production AWS EKS environment in my company.

Closes #32283 from kotlovs/close-spark-context-on-exit-2.

Authored-by: skotlov <skotlov@joom.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Use new Apache 'closer.lua' syntax to obtain Maven

### Why are the changes needed?

The current closer.lua redirector, which redirects to download Maven from a local mirror, has a new syntax. Without this change, `build/mvn` no longer works properly.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual testing.

Closes #32277 from srowen/SPARK-35178.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

It will remove `StructField` when [pruning nested columns](0f2c0b53e8/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/SchemaPruning.scala (L28-L42)). For example:

```scala
spark.sql(
  """
    |CREATE TABLE t1 (
    |  _col0 INT,
    |  _col1 STRING,
    |  _col2 STRUCT<c1: STRING, c2: STRING, c3: STRING, c4: BIGINT>)
    |USING ORC
    |""".stripMargin)

spark.sql("INSERT INTO t1 values(1, '2', struct('a', 'b', 'c', 10L))")

spark.sql("SELECT _col0, _col2.c1 FROM t1").show
```

Before this PR, the returned schema is ``` `_col0` INT,`_col2` STRUCT<`c1`: STRING> ``` and it throws an exception:

```
java.lang.AssertionError: assertion failed: The given data schema struct<_col0:int,_col2:struct<c1:string>> has less fields than the actual ORC physical schema, no idea which columns were dropped, fail to read.
  at scala.Predef$.assert(Predef.scala:223)
  at org.apache.spark.sql.execution.datasources.orc.OrcUtils$.requestedColumnIds(OrcUtils.scala:160)
```

After this PR, the returned schema is ``` `_col0` INT,`_col1` STRING,`_col2` STRUCT<`c1`: STRING> ```.

The final schema is ``` `_col0` INT,`_col2` STRUCT<`c1`: STRING> ``` after column pruning completes:

7a5647a93a/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileSourceStrategy.scala (L208-L213)

e64eb75aed/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/PushDownUtils.scala (L96-L97)

### Why are the changes needed?

Fix bug.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #31993 from wangyum/SPARK-34897.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

* Add `Not(In)` and `Not(InSet)` check in `NullPropagation` rule.

* Add more tests for `In` and `Not(In)` at the `Project` level.

### Why are the changes needed?

The semantics of `Not(In)` can be viewed as `And(a != b, a != c)`, which matches `NullIntolerant`.

We already simplify a `NullIntolerant` expression to null if any of its children is null, e.g. `a != null` => `null`. It is therefore safe to do the same with `Not(In)`/`Not(InSet)`.

Note that we can only apply this simplification inside a predicate, as the `ReplaceNullWithFalseInPredicate` rule does.

Suppose we have two SQL queries:

```
select 1 not in (2, null);
select 1 where 1 not in (2, null);
```

We cannot optimize the first query, since it must return `NULL` rather than `false`. The second one can be optimized.
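The distinction between the two queries follows from SQL's three-valued logic, which can be modelled in plain Python with `None` standing in for `NULL`. The helper names below are hypothetical and this is a sketch of the semantics only, not of the `NullPropagation` rule itself.

```python
def sql_not_equal(a, b):
    """a != b under SQL semantics: NULL if either side is NULL."""
    return None if a is None or b is None else a != b


def sql_and(x, y):
    """Three-valued AND: FALSE dominates, then NULL, then TRUE."""
    if x is False or y is False:
        return False
    if x is None or y is None:
        return None
    return True


def sql_not_in(value, items):
    """value NOT IN (items), expanded to And(value != i1, value != i2, ...)."""
    result = True
    for item in items:
        result = sql_and(result, sql_not_equal(value, item))
    return result


def where_keeps_row(predicate):
    """A WHERE clause keeps a row only when the predicate is TRUE."""
    return predicate is True


print(sql_not_in(1, [2, None]))                   # NULL in a projection
print(where_keeps_row(sql_not_in(1, [2, None])))  # filtered out, like FALSE
```

In a projection the `NULL` result is visible to the user, so it cannot be replaced by `false`. In a `WHERE` clause `NULL` and `false` both drop the row, so the replacement does not change the result.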

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Add test.

Closes #31797 from ulysses-you/SPARK-34692.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Adds a link to the [error message guidelines](https://spark.apache.org/error-message-guidelines.html) to the PR template to increase visibility.

### Why are the changes needed?

Increases visibility of the error message guidelines, which are otherwise hidden in the [Contributing guidelines](https://spark.apache.org/contributing.html).

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #32241 from karenfeng/spark-35140.

Authored-by: Karen Feng <karen.feng@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Declare the markdown package as a dependency of the SparkR package

### Why are the changes needed?

If pandoc is not installed locally, running `make-distribution.sh` fails with the following message:

```
--- re-building ‘sparkr-vignettes.Rmd’ using rmarkdown
Warning in engine$weave(file, quiet = quiet, encoding = enc) :
  Pandoc (>= 1.12.3) not available. Falling back to R Markdown v1.
Error: processing vignette 'sparkr-vignettes.Rmd' failed with diagnostics:
The 'markdown' package should be declared as a dependency of the 'SparkR' package (e.g., in the 'Suggests' field of DESCRIPTION), because the latter contains vignette(s) built with the 'markdown' package. Please see https://github.com/yihui/knitr/issues/1864 for more information.
--- failed re-building ‘sparkr-vignettes.Rmd’
```

### Does this PR introduce _any_ user-facing change?

Yes. Workaround for R packaging.

### How was this patch tested?

Manually tested. After the fix, the command `sh dev/make-distribution.sh -Psparkr -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn` passes in an environment without pandoc.

Closes #32270 from xuanyuanking/SPARK-35171.

Authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Fixes incorrect return type for `rawPredictionUDF` in `OneVsRestModel`.

### Why are the changes needed?

Bugfix

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Closes #32245 from harupy/SPARK-35142.

Authored-by: harupy <17039389+harupy@users.noreply.github.com>

Signed-off-by: Weichen Xu <weichen.xu@databricks.com>

### What changes were proposed in this pull request?

IntegralDivide should throw an exception on overflow in ANSI mode.

There is only one case that can cause that:

```
Long.MinValue div -1
```
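Why this is the only overflowing case: for 64-bit operands the quotient's magnitude never exceeds the dividend's, so it can only leave the `Long` range when the dividend is `Long.MinValue` and the divisor is -1, giving 2^63, which is one more than `Long.MaxValue`. A plain-Python model of the check is sketched below; `integral_divide_ansi` is a hypothetical name and the sketch does not claim to mirror Spark's `IntegralDivide` code.

```python
LONG_MIN, LONG_MAX = -2**63, 2**63 - 1


def integral_divide_ansi(a, b):
    """Truncating 64-bit division that raises on overflow instead of wrapping."""
    if a == LONG_MIN and b == -1:
        raise OverflowError("Overflow in integral divide")
    quotient = abs(a) // abs(b)  # truncate toward zero, as JVM division does
    return quotient if (a < 0) == (b < 0) else -quotient


print(integral_divide_ansi(7, -2))
print(integral_divide_ansi(LONG_MIN, 1))
try:
    integral_divide_ansi(LONG_MIN, -1)
except OverflowError as err:
    print("raised:", err)
```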

### Why are the changes needed?

ANSI compliance

### Does this PR introduce _any_ user-facing change?

Yes, IntegralDivide throws an exception on overflow in ANSI mode

### How was this patch tested?

Unit test

Closes #32260 from gengliangwang/integralDiv.

Authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

As part of SPARK-26837, pruning of nested fields from object serializers is supported. However, it fails to handle Spark's case insensitivity.

In this PR, I resolve the column names to be pruned based on the `spark.sql.caseSensitive` config.
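Resolving a requested field name against a schema under a case-sensitivity flag can be sketched in plain Python as follows. The `resolve_field` helper and its arguments are hypothetical; Spark's actual resolver is not shown in this description, so treat this purely as an illustration of the lookup rule.

```python
def resolve_field(field_names, requested, case_sensitive):
    """Return the schema's spelling of `requested`, or None if it is absent."""
    for name in field_names:
        if name == requested:
            return name
        if not case_sensitive and name.lower() == requested.lower():
            return name
    return None


schema = ["id", "Name"]
print(resolve_field(schema, "name", case_sensitive=False))  # schema spelling
print(resolve_field(schema, "name", case_sensitive=True))   # no match
```

When the lookup is case-sensitive and the spelling differs, nothing matches. An empty match of this kind is consistent with the out-of-bounds error in the stack trace for this PR.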

**Exception Before Fix**

```
Caused by: java.lang.ArrayIndexOutOfBoundsException: 0
  at org.apache.spark.sql.types.StructType.apply(StructType.scala:414)
  at org.apache.spark.sql.catalyst.optimizer.ObjectSerializerPruning$$anonfun$apply$4.$anonfun$applyOrElse$3(objects.scala:216)
  at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:238)
  at scala.collection.immutable.List.foreach(List.scala:392)
  at scala.collection.TraversableLike.map(TraversableLike.scala:238)
  at scala.collection.TraversableLike.map$(TraversableLike.scala:231)
  at scala.collection.immutable.List.map(List.scala:298)
  at org.apache.spark.sql.catalyst.optimizer.ObjectSerializerPruning$$anonfun$apply$4.applyOrElse(objects.scala:215)
  at org.apache.spark.sql.catalyst.optimizer.ObjectSerializerPruning$$anonfun$apply$4.applyOrElse(objects.scala:203)
  at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDown$1(TreeNode.scala:309)
  at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:72)
  at org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:309)
  at
```

### Why are the changes needed?

After upgrading to Spark 3, the `foreachBatch` API throws `java.lang.ArrayIndexOutOfBoundsException`. This PR fixes the issue.

### Does this PR introduce _any_ user-facing change?

No. In fact, it fixes the regression.

### How was this patch tested?

Added tests and also verified manually.

Closes #32194 from sandeep-katta/SPARK-35096.

Authored-by: sandeep.katta <sandeep.katta2007@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to handle 404 not found, see https://github.com/apache/spark/pull/32255/checks?check_run_id=2390446579 as an example.

If a fork does not have any previous workflow runs, it seems to throw a 404 error instead of returning an empty list of runs.

### Why are the changes needed?

To show the correct guidance to contributors.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested at https://github.com/HyukjinKwon/spark/pull/48. See https://github.com/HyukjinKwon/spark/runs/2391469416 as an example.

Closes #32258 from HyukjinKwon/SPARK-35120-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request?

Support ANSI interval in HashExpression and add UT

### Why are the changes needed?

Support ANSI interval in HashExpression

### Does this PR introduce _any_ user-facing change?

Users can pass ANSI intervals to HashExpression functions.

### How was this patch tested?

Added UT

Closes #32259 from AngersZhuuuu/SPARK-35113.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

`dfs.replication` is inconsistent between Hadoop 2.x and 3.x, so this PR uses `dfs.hosts` for verification instead, per https://github.com/apache/spark/pull/32144#discussion_r616833099

```

== Results ==

!== Correct Answer - 1 == == Spark Answer - 1 ==

!struct<> struct<key:string,value:string>

![dfs.replication,<undefined>] [dfs.replication,3]

```

### Why are the changes needed?

fix Jenkins job with Hadoop 2.7

### Does this PR introduce _any_ user-facing change?

test only change

### How was this patch tested?

test only change

Closes #32263 from yaooqinn/SPARK-35044-F.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to override the `sql` and `toString` methods of the expressions that implement operators over ANSI intervals (`YearMonthIntervalType`/`DayTimeIntervalType`), and replace internal expression class names by operators like `*`, `/` and `-`.
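The effect can be sketched with a toy expression tree. None of the classes below are Spark's, and the rendered strings are illustrative assumptions rather than Spark's exact output. The point is only the contrast between rendering an internal class name and rendering an operator:

```scala
// Hypothetical mini expression tree for illustration only; these are not Spark's classes.
sealed trait Expr { def sql: String }

final case class Col(name: String) extends Expr {
  def sql: String = name
}

// Before: rendered as a function call named after the internal class.
final case class MultiplyIntervalByName(interval: Expr, num: Expr) extends Expr {
  def sql: String = s"multiplyinterval(${interval.sql}, ${num.sql})"
}

// After: `sql` and `toString` render the operator instead.
final case class MultiplyIntervalByOperator(interval: Expr, num: Expr) extends Expr {
  def sql: String = s"(${interval.sql} * ${num.sql})"
  override def toString: String = sql
}
```

The operator form reads like the SQL a user would write, which is what makes it a candidate for being parsed back.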

### Why are the changes needed?

Proposed methods should make the textual representation of such operators more readable, and potentially parsable by Spark SQL parser.

### Does this PR introduce _any_ user-facing change?

Yes. This can affect column names.

### How was this patch tested?

By running existing test suites for interval and datetime expressions, and re-generating the `*.sql` tests:

```

$ build/sbt "sql/testOnly *SQLQueryTestSuite -- -z interval.sql"

$ build/sbt "sql/testOnly *SQLQueryTestSuite -- -z datetime.sql"

```

Closes #32262 from MaxGekk/interval-operator-sql.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

In YARN, ship the `spark.jars.ivySettings` file to the driver when using `cluster` deploy mode so that `addJar` is able to find it in order to resolve ivy paths.

### Why are the changes needed?

SPARK-33084 introduced support for Ivy paths in `sc.addJar` or Spark SQL `ADD JAR`. If we use a custom ivySettings file using `spark.jars.ivySettings`, it is loaded at b26e7b510b/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala (L1280). However, this file is only accessible on the client machine. In YARN cluster mode, this file is not available on the driver and so `addJar` fails to find it.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added unit tests to verify that the `ivySettings` file is localized by the YARN client and that a YARN cluster mode application is able to find and load the `ivySettings` file.

Closes #31591 from shardulm94/SPARK-34472.

Authored-by: Shardul Mahadik <smahadik@linkedin.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

`CurrentOrigin` is a thread-local variable to track the original SQL line position in plan/expression. Usually, we set `CurrentOrigin`, create `TreeNode` instances, and reset `CurrentOrigin`.

This PR updates the last step to set `CurrentOrigin` to its previous value, instead of resetting it. This is necessary when we invoke `CurrentOrigin` in a nested way, like with subqueries.
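A simplified sketch of the set-and-restore pattern, assuming a thread-local holder similar in spirit to `CurrentOrigin`. The `Origin` case class and `OriginSketch` object here are hypothetical and much smaller than Spark's real ones:

```scala
// Hypothetical simplification of the pattern described above; not Spark's actual CurrentOrigin.
final case class Origin(line: Option[Int] = None)

object OriginSketch {
  private val current = new ThreadLocal[Origin] {
    override def initialValue(): Origin = Origin()
  }

  def get: Origin = current.get()

  // Set the origin for the duration of `body`, then restore whatever was set before,
  // so that a nested call does not wipe out the outer origin.
  def withOrigin[A](origin: Origin)(body: => A): A = {
    val previous = current.get()
    current.set(origin)
    try body finally current.set(previous)
  }
}
```

If the `finally` clause reset the value to an empty `Origin` instead, code running after an inner `withOrigin` call but still inside the outer one would lose the outer position.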

### Why are the changes needed?

To keep the original SQL line position in the error message in more cases.

### Does this PR introduce _any_ user-facing change?

No, only minor error message changes.

### How was this patch tested?

existing tests

Closes #32249 from cloud-fan/origin.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch proposes to leverage `CustomMetric`, `CustomTaskMetric` API to report custom metrics from DS v2 scan to Spark.

### Why are the changes needed?

This is related to #31398. In SPARK-34297, we want to add a couple of metrics when reading from Kafka in SS, which requires some public API changes in DS v2. This PR extracts only the DS v2 change and makes it general for DS v2 rather than specific to the micro-batch DS v2 API.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test.

Implement a simple test DS v2 class locally and run it:

```scala

scala> import org.apache.spark.sql.execution.datasources.v2._

import org.apache.spark.sql.execution.datasources.v2._

scala> classOf[CustomMetricDataSourceV2].getName

res0: String = org.apache.spark.sql.execution.datasources.v2.CustomMetricDataSourceV2

scala> val df = spark.read.format(res0).load()

df: org.apache.spark.sql.DataFrame = [i: int, j: int]

scala> df.collect

```

<img width="703" alt="Screen Shot 2021-03-30 at 11 07 13 PM" src="https://user-images.githubusercontent.com/68855/113098080-d8a49800-91ac-11eb-8681-be408a0f2e69.png">

Closes #31451 from viirya/dsv2-metrics.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Refactor `ScriptTransformation` to remove the `input` parameter and replace it with `child.output`.

### Why are the changes needed?

refactor code

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UT

Closes #32228 from AngersZhuuuu/SPARK-34035.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When running a Spark job on YARN in client deploy mode, the Spark driver and the Spark Application Master (AM) launch in two separate containers. In various scenarios, there is a need to look at the Spark AM logs to check resource allocation, decommissioning status, and other information shared between the YARN RM and the Spark AM.

In cluster mode, the Spark driver and the Spark AM run in the same container, so the driver's log link in the Spark UI already gives access to these logs.

This PR adds the Spark AM log link for Spark jobs running in client mode on YARN. Instead of searching for the container ID and then finding the logs, we can check them directly in the Spark UI.

This change only shows the AM log links in client mode when the resource manager is YARN.

### Why are the changes needed?

Until now, the only way to check this was to find the container ID of the AM and then check the logs using either the YARN utility or the YARN RM Application History server.

With this PR, the AM log link is available directly in the Spark UI, so there is no need to look up the container ID first.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a unit test and also checked the Spark UI.

**In Yarn Client mode**

Before Change

After the Change - The AM info is there

AM Log

**In Yarn Cluster Mode** - The AM log link will not be there

Closes #31974 from SaurabhChawla100/SPARK-34877.

Authored-by: SaurabhChawla <s.saurabhtim@gmail.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Add documentation for `TRANSFORM` and related functions.

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed

Closes #31010 from AngersZhuuuu/SPARK-33976.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

After the PR https://github.com/apache/spark/pull/32209, this should be possible now.

We can add test cases for ANSI intervals to `HiveThriftBinaryServerSuite`.

### Why are the changes needed?

Add more test cases.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #32250 from AngersZhuuuu/SPARK-35068.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR implements the decorrelation technique in the paper "Unnesting Arbitrary Queries" by T. Neumann and A. Kemper (http://www.btw-2015.de/res/proceedings/Hauptband/Wiss/Neumann-Unnesting_Arbitrary_Querie.pdf). It currently supports Filter, Project, Aggregate, Join, and UnaryNode that passes CheckAnalysis.

This feature can be controlled by the config `spark.sql.optimizer.decorrelateInnerQuery.enabled` (default: true).

A few notes:

1. This PR does not relax any constraints in CheckAnalysis for correlated subqueries, even though some cases can be supported by this new framework, such as aggregate with correlated non-equality predicates. This PR focuses on adding the new framework and making sure all existing cases can be supported. Constraints can be relaxed gradually in the future via separate PRs.

2. The new framework is only enabled for correlated scalar subqueries, as the first step. EXISTS/IN subqueries can be supported in the future.

### Why are the changes needed?

Currently, Spark has limited support for correlated subqueries. It only allows `Filter` to reference outer query columns and does not support non-equality predicates when the subquery is aggregated. This new framework will allow more operators to host outer column references and support correlated non-equality predicates and more types of operators in correlated subqueries.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit and SQL query tests and new optimizer plan tests.

Closes #32072 from allisonwang-db/spark-34974-decorrelation.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Add a test to check that Thrift server is able to collect year-month intervals and transfer them via thrift protocol.

2. Improve the similar test for day-time intervals. After the changes, the test doesn't depend on the result of date subtraction. The result type of date subtraction may change in the future, so this PR makes the test tolerant of such a change.

### Why are the changes needed?

To improve test coverage.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the modified test suite:

```

$ ./build/sbt -Phive -Phive-thriftserver "test:testOnly *SparkThriftServerProtocolVersionsSuite"

```

Closes #32240 from MaxGekk/year-month-interval-thrift-protocol.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>