## What changes were proposed in this pull request?

`SparkContext` submits a map stage to `DAGScheduler` through `submitMapStage`.

`markMapStageJobAsFinished` is called in only two places (https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L933 and https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/scheduler/DAGScheduler.scala#L1314).

But consider the following scenario:

1. stage0 and stage1 are both `ShuffleMapStage`s, and stage1 depends on stage0;

2. We submit stage1 by `submitMapStage`;

3. While stage1 is running, a `FetchFailed` happens, and stage0 and stage1 get resubmitted as stage0_1 and stage1_1;

4. While stage0_1 is running, speculated tasks from the old stage1 succeed, but stage1 is not in `runningStages`. So even though all splits (including the speculated tasks) in stage1 have succeeded, the job listener for stage1 is not called;

5. stage0_1 finishes and stage1_1 starts running. In `submitMissingTasks` there are no missing tasks, but in the current code the job listener is not triggered.

We should call the job listener for the map stage in step 5.

## How was this patch tested?

Not added yet.

Author: jinxing <jinxing6042@126.com>

Closes #21019 from jinxing64/SPARK-23948.

## What changes were proposed in this pull request?

In the current code, all partition paths are scanned when spark.sql.hive.verifyPartitionPath=true.

For example, given a table like the one below:

```
CREATE TABLE `test`(
  `id` int,
  `age` int,
  `name` string)
PARTITIONED BY (
  `A` string,
  `B` string);

load data local inpath '/tmp/data0' into table test partition(A='00', B='00');
load data local inpath '/tmp/data1' into table test partition(A='01', B='01');
load data local inpath '/tmp/data2' into table test partition(A='10', B='10');
load data local inpath '/tmp/data3' into table test partition(A='11', B='11');
```

If I query with the SQL "select * from test where A='00' and B='01'", the current code will scan all partition paths, including '/data/A=00/B=00', '/data/A=01/B=01', '/data/A=10/B=10', and '/data/A=11/B=11'. This costs a lot of time and memory.

This PR proposes to avoid iterating over all partition paths. It adds a config `spark.files.ignoreMissingFiles` and ignores `file not found` errors in `getPartitions`/`compute` (for Hive table scans). This is much like the logic introduced by `spark.sql.files.ignoreMissingFiles` (which is for datasource scans).
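The idea can be sketched roughly as follows. This is only an illustration, not the code in this PR: `readAll` and its `ignoreMissingFiles` parameter are hypothetical names, and local files read through `java.nio.file` stand in for partition paths.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, NoSuchFileException, Path}

object IgnoreMissingFilesSketch {
  // Hypothetical helper: read each path directly instead of verifying every path up front.
  // When ignoreMissingFiles is true, a missing path contributes no rows;
  // otherwise the NoSuchFileException propagates to the caller.
  def readAll(paths: Seq[Path], ignoreMissingFiles: Boolean): Seq[String] =
    paths.flatMap { p =>
      try {
        new String(Files.readAllBytes(p), StandardCharsets.UTF_8).split("\n").toSeq
      } catch {
        case _: NoSuchFileException if ignoreMissingFiles => Seq.empty[String]
      }
    }
}
```

With the flag off, the first missing path fails the read, which is the behavior one would expect when the check is not relaxed.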

## How was this patch tested?

UT

Author: jinxing <jinxing6042@126.com>

Closes #19868 from jinxing64/SPARK-22676.

## What changes were proposed in this pull request?

Update

```scala
SparkEnv.get.conf.getLong("spark.shuffle.spill.numElementsForceSpillThreshold", Long.MaxValue)
```

to

```scala
SparkEnv.get.conf.get(SHUFFLE_SPILL_NUM_ELEMENTS_FORCE_SPILL_THRESHOLD)
```

because the default value of `SHUFFLE_SPILL_NUM_ELEMENTS_FORCE_SPILL_THRESHOLD` is `Integer.MAX_VALUE`, not `Long.MaxValue`:

c99fc9ad9b/core/src/main/scala/org/apache/spark/internal/config/package.scala (L503-L511)

## How was this patch tested?

N/A

Author: Yuming Wang <yumwang@ebay.com>

Closes #21077 from wangyum/SPARK-21033.

## What changes were proposed in this pull request?

Spark introduced a new writer mode that overwrites only the related partitions in SPARK-20236. While using this feature in our production cluster, we found a bug when writing multi-level partitions on HDFS.

A simple test case to reproduce this issue:

val df = Seq(("1","2","3")).toDF("col1", "col2","col3")

df.write.partitionBy("col1","col2").mode("overwrite").save("/my/hdfs/location")

If the HDFS location "/my/hdfs/location" does not exist, there will be no output.

This seems to be caused by the job commit change made in SPARK-20236 in HadoopMapReduceCommitProtocol.

In the commit job process, the output has been written into the staging dir /my/hdfs/location/.spark-staging.xxx/col1=1/col2=2, and then the code calls fs.rename to rename /my/hdfs/location/.spark-staging.xxx/col1=1/col2=2 to /my/hdfs/location/col1=1/col2=2. However, in our case the operation fails on HDFS because /my/hdfs/location/col1=1 does not exist. HDFS rename cannot create more than one level of directories.

This does not happen in the new unit test added with SPARK-20236, which uses the local file system.

We are proposing a fix. When cleaning the current partition dir /my/hdfs/location/col1=1/col2=2 before the rename op, if the delete op fails (because /my/hdfs/location/col1=1/col2=2 may not exist), we call the mkdirs op to create the parent dir /my/hdfs/location/col1=1 (if the parent dir does not exist) so that the following rename op can succeed.

Reference: in the official HDFS documentation (https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/filesystem/filesystem.html), the rename command has the precondition "dest must be root, or have a parent that exists".
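A simplified sketch of the "create the parent, then rename" step is shown below. It is an analogy only: it uses `java.nio.file` on the local disk rather than the Hadoop `FileSystem` API that the patch actually touches, and `commitPartition` is a hypothetical name. It also skips the delete step described above.

```scala
import java.nio.file.{Files, Path}

object RenameWithParentSketch {
  // Hypothetical helper: make sure the destination's parent exists, then move the
  // staged partition directory into place. Without the createDirectories call,
  // the move fails when the parent directory (e.g. col1=1) is missing.
  def commitPartition(staged: Path, dest: Path): Path = {
    val parent = dest.getParent
    if (parent != null && !Files.exists(parent)) {
      Files.createDirectories(parent) // plays the role of mkdirs on the parent dir
    }
    Files.move(staged, dest)
  }
}
```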

## How was this patch tested?

We have tested this patch on our production cluster and it fixed the problem.

Author: Fangshi Li <fli@linkedin.com>

Closes #20931 from fangshil/master.

## What changes were proposed in this pull request?

Get the count of dropped events for output in log message.

## How was this patch tested?

The fix is pretty trivial, but `./dev/run-tests` was run and was successful.

vanzin cloud-fan

The contribution is my original work and I license the work to the project under the project’s open source license.

Author: Patrick Pisciuneri <Patrick.Pisciuneri@target.com>

Closes #20977 from phpisciuneri/fix-log-warning.

The current in-process launcher implementation just calls the SparkSubmit object, which, in case of errors, will more often than not exit the JVM. This is not desirable since this launcher is meant to be used inside other applications, and that would kill the application.

The change turns SparkSubmit into a class, and abstracts away some of the functionality used to print error messages and abort the submission process. The default implementation uses the logging system for messages, and throws exceptions for errors. As part of that I also moved some code that doesn't really belong in SparkSubmit to a better location.

The command line invocation of spark-submit now uses a special implementation of the SparkSubmit class that overrides those behaviors to do what is expected from the command line version (print to the terminal, exit the JVM, etc).

A lot of the changes are to replace calls to methods such as "printErrorAndExit" with the new API.

As part of adding tests for this, I had to fix some small things in the launcher option parser so that things like "--version" can work when used in the launcher library.

There is still code that prints directly to the terminal, like all the Ivy-related code in SparkSubmitUtils, and other areas where some re-factoring would help, like the CommandLineUtils class, but I chose to leave those alone to keep this change more focused.

Aside from existing and added unit tests, I ran command line tools with a bunch of different arguments to make sure messages and errors behave like before.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20925 from vanzin/SPARK-22941.

This change introduces two optimizations to help speed up generation of listing data when parsing event logs.

The first one allows the parser to be stopped when enough data to create the listing entry has been read. This is currently the start event plus environment info, to capture UI ACLs. If the end event is needed, the code will skip to the end of the log to try to find that information, instead of parsing the whole log file.

Unfortunately this works better with uncompressed logs. Skipping bytes on compressed logs only saves the work of parsing lines and some events, so not a lot of gains are observed.

The second optimization deals with in-progress logs. It works in two ways: first, it completely avoids parsing the rest of the log for these apps when enough listing data is read. This, unlike the above, also speeds things up for compressed logs, since only the very beginning of the log has to be read.

On top of that, the code that decides whether to re-parse logs to get updated listing data will ignore in-progress applications until they've completed.

Both optimizations can be disabled but are enabled by default.

I tested this on some fake event logs to see the effect. I created 500 logs of about 60M each (so ~30G uncompressed; each log was 1.7M when compressed with zstd). Below, C = completed, IP = in-progress, the size means the amount of data re-parsed at the end of logs when necessary.

```
            none/C   none/IP   zstd/C   zstd/IP
On / 16k      2s       2s       22s       2s
On / 1m       3s       2s       24s       2s
Off          1.1m     1.1m      26s      24s
```

This was with 4 threads on a single local SSD. As expected from the previous explanations, there are considerable gains for in-progress logs, and for uncompressed logs, but not so much when looking at the full compressed log.

As a side note, I removed the custom code to get the scan time by creating a file on HDFS; since file mod times are not used to detect changed logs anymore, local time is enough for the current use of the SHS.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20952 from vanzin/SPARK-6951.

SPARK-19276 ensured that FetchFailures do not get swallowed by other layers of exception handling, but it also meant that a killed task could look like a fetch failure. This is particularly a problem with speculative execution, where we expect to kill tasks as they are reading shuffle data. The fix is to ensure that we always check for killed tasks first.

Added a new unit test which fails before the fix, ran it 1k times to check for flakiness. Full suite of tests on jenkins.

Author: Imran Rashid <irashid@cloudera.com>

Closes #20987 from squito/SPARK-23816.

## What changes were proposed in this pull request?

The test case JobCancellationSuite."interruptible iterator of shuffle reader" has been flaky because the `KillTask` event is handled asynchronously, so it can happen that the semaphore is released but the task is still running.

Actually we only have to check that the total number of processed elements is less than the number of input elements to know that the task got cancelled.

## How was this patch tested?

The new test case still fails without the proposed patch, and succeeds on current master.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20993 from jiangxb1987/JobCancellationSuite.

## What changes were proposed in this pull request?

This PR avoids a possible overflow in an operation of the form `long = (long)(int * int)`. Multiplying large positive integer values may set the MSB to one, which leads to a negative value in the long where we expected a positive one (e.g. `0111_0000_0000_0000 * 0000_0000_0000_0010`).

This PR performs the long cast before the multiplication to avoid this situation.
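The difference between the two cast positions can be seen in a small sketch. The helper names `castAfter` and `castBefore` are made up for illustration and do not appear in the patch.

```scala
object LongCastSketch {
  // Multiplies in Int arithmetic, so the product wraps around before it is widened to Long.
  def castAfter(a: Int, b: Int): Long = (a * b).toLong

  // Widens one operand first, so the multiplication is carried out in Long arithmetic.
  def castBefore(a: Int, b: Int): Long = a.toLong * b
}
```

For example, `LongCastSketch.castAfter(0x70000000, 2)` yields `-536870912`, while `LongCastSketch.castBefore(0x70000000, 2)` yields `3758096384`.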

## How was this patch tested?

Existing UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #21002 from kiszk/SPARK-23893.

## What changes were proposed in this pull request?

This PR allows us to use one of several types of `MemoryBlock`, such as byte array, int array, long array, or `java.nio.DirectByteBuffer`. Using `java.nio.DirectByteBuffer` provides off-heap memory that is automatically deallocated by the JVM. The `MemoryBlock` class has primitive accessors like `Platform.getInt()`, `Platform.putInt()`, or `Platform.copyMemory()`.

This PR uses `MemoryBlock` for `OffHeapColumnVector`, `UTF8String`, and other places. This PR can improve performance of operations involving memory accesses (e.g. `UTF8String.trim`) by 1.8x.

For now, this PR does not use `MemoryBlock` for `BufferHolder` based on cloud-fan's [suggestion](https://github.com/apache/spark/pull/11494#issuecomment-309694290).

Since this PR is a successor of #11494, close #11494. Much of the code was ported from #11494, and a lot of effort was put in there. **I think this PR should credit yzotov.**

This PR can achieve **1.1-1.4x performance improvements** for operations in `UTF8String` or `Murmur3_x86_32`. Other operations show almost comparable performance.

Without this PR

```
OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Hash byte arrays with length 268435487:  Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
Murmur3_x86_32                                   526 / 536          0.0   131399881.5       1.0X

UTF8String benchmark:                    Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
hashCode                                         525 / 552       1022.6           1.0       1.0X
substring                                        414 / 423       1298.0           0.8       1.3X
```

With this PR

```
OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Hash byte arrays with length 268435487:  Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
Murmur3_x86_32                                   474 / 488          0.0   118552232.0       1.0X

UTF8String benchmark:                    Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
hashCode                                         476 / 480       1127.3           0.9       1.0X
substring                                        287 / 291       1869.9           0.5       1.7X
```

Benchmark program

```
test("benchmark Murmur3_x86_32") {
  val length = 8192 * 32768 + 31
  val seed = 42L
  val iters = 1 << 2
  val random = new Random(seed)
  // Note: `numArrays` is not defined in this excerpt.
  val arrays = Array.fill[MemoryBlock](numArrays) {
    val bytes = new Array[Byte](length)
    random.nextBytes(bytes)
    new ByteArrayMemoryBlock(bytes, Platform.BYTE_ARRAY_OFFSET, length)
  }

  val benchmark = new Benchmark("Hash byte arrays with length " + length,
    iters * numArrays, minNumIters = 20)
  benchmark.addCase("HiveHasher") { _: Int =>
    var sum = 0L
    for (_ <- 0L until iters) {
      // Note: the index `i` is not defined in this excerpt.
      sum += HiveHasher.hashUnsafeBytesBlock(
        arrays(i), Platform.BYTE_ARRAY_OFFSET, length)
    }
  }
  benchmark.run()
}

test("benchmark UTF8String") {
  val N = 512 * 1024 * 1024
  val iters = 2
  val benchmark = new Benchmark("UTF8String benchmark", N, minNumIters = 20)
  val str0 = new java.io.StringWriter() { { for (i <- 0 until N) { write(" ") } } }.toString
  val s0 = UTF8String.fromString(str0)
  benchmark.addCase("hashCode") { _: Int =>
    var h: Int = 0
    for (_ <- 0L until iters) { h += s0.hashCode }
  }
  benchmark.addCase("substring") { _: Int =>
    var s: UTF8String = null
    for (_ <- 0L until iters) { s = s0.substring(N / 2 - 5, N / 2 + 5) }
  }
  benchmark.run()
}
```

I ran [this benchmark program](https://gist.github.com/kiszk/94f75b506c93a663bbbc372ffe8f05de) using [the commit](ee5a79861c). I got the following results:

```
OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Memory access benchmarks:                Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
ByteArrayMemoryBlock get/putInt()                220 / 221        609.3           1.6       1.0X
Platform get/putInt(byte[])                      220 / 236        610.9           1.6       1.0X
Platform get/putInt(Object)                      492 / 494        272.8           3.7       0.4X
OnHeapMemoryBlock get/putLong()                  322 / 323        416.5           2.4       0.7X
long[]                                           221 / 221        608.0           1.6       1.0X
Platform get/putLong(long[])                     321 / 321        418.7           2.4       0.7X
Platform get/putLong(Object)                     561 / 563        239.2           4.2       0.4X
```

I also ran [this benchmark program](https://gist.github.com/kiszk/5fdb4e03733a5d110421177e289d1fb5) to compare the performance of `Platform.copyMemory()`.

```
OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Platform copyMemory:                     Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
Object to Object                               1961 / 1967          8.6         116.9       1.0X
System.arraycopy Object to Object              1917 / 1921          8.8         114.3       1.0X
byte array to byte array                       1961 / 1968          8.6         116.9       1.0X
System.arraycopy byte array to byte array      1909 / 1937          8.8         113.8       1.0X
int array to int array                         1921 / 1990          8.7         114.5       1.0X
double array to double array                   1918 / 1923          8.7         114.3       1.0X
Object to byte array                           1961 / 1967          8.6         116.9       1.0X
Object to short array                          1965 / 1972          8.5         117.1       1.0X
Object to int array                            1910 / 1915          8.8         113.9       1.0X
Object to float array                          1971 / 1978          8.5         117.5       1.0X
Object to double array                         1919 / 1944          8.7         114.4       1.0X
byte array to Object                           1959 / 1967          8.6         116.8       1.0X
int array to Object                            1961 / 1970          8.6         116.9       1.0X
double array to Object                         1917 / 1924          8.8         114.3       1.0X
```

These results show five facts:

1. According to the second/third or sixth/seventh results in the first experiment, `Platform.get/putInt(Object)` is more than 2x slower than `Platform.get/putInt(byte[])`, which uses a concrete type (i.e. `byte[]`).

2. According to the second/third or fourth/fifth/sixth results in the first experiment, the fastest way to access an array element on the Java heap is `array[]`. **The drawback of `array[]` is that it cannot support unaligned 8-byte access.**

3. According to the first/second/third or fourth/sixth/seventh results in the first experiment, `getInt()/putInt()` or `getLong()/putLong()` in subclasses of `MemoryBlock` achieve performance comparable to `Platform.get/putInt()` or `Platform.get/putLong()` with a concrete type (second or sixth result). There is no overhead from the virtual call.

4. According to the results in the second experiment, passing `Object` to `Platform.copyMemory()` achieves the same performance as passing any type of primitive array as source or destination.

5. According to the second/fourth results in the second experiment, `Platform.copyMemory()` achieves the same performance as `System.arraycopy`. **It would be good to use `Platform.copyMemory()` since it can take any type for src and dst.**

We are incrementally replacing `Platform.get/putXXX` with `MemoryBlock.get/putXXX`. This has two advantages.

1) Achieve better performance due to having a concrete type for an array.

2) Use simple OO design instead of passing `Object`

It is easy to use `MemoryBlock` in `InternalRow`, `BufferHolder`, `TaskMemoryManager`, and others that are already abstracted. It is not easy to use `MemoryBlock` in utility classes related to hashing or others.

Other candidates are

- UnsafeRow, UnsafeArrayData, UnsafeMapData, SpecificUnsafeRowJoiner

- UTF8StringBuffer

- BufferHolder

- TaskMemoryManager

- OnHeapColumnVector

- BytesToBytesMap

- CachedBatch

- classes for hash

- others.

## How was this patch tested?

Added `UnsafeMemoryAllocator`

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #19222 from kiszk/SPARK-10399.

## What changes were proposed in this pull request?

Address https://github.com/apache/spark/pull/20924#discussion_r177987175: show the block manager ID when removing an RDD/Broadcast fails.

## How was this patch tested?

N/A

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20960 from jiangxb1987/bmid.

These tests can fail with a timeout if the remote repos are not responding, or slow. The tests don't need anything from those repos, so use an empty ivy config file to avoid setting up the defaults.

The tests are passing reliably for me locally now, and failing more often than not today without this change since http://dl.bintray.com/spark-packages/maven doesn't seem to be loading from my machine.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20916 from vanzin/SPARK-19964.

## What changes were proposed in this pull request?

Address https://github.com/apache/spark/pull/20449#discussion_r172414393: if `resultIter` is already an `InterruptibleIterator`, don't double wrap it.
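The "wrap only once" check can be sketched as below. `Interruptible` is a hypothetical stand-in for Spark's `InterruptibleIterator`, and `wrapOnce` is an illustrative name, not a method from this change.

```scala
object WrapOnceSketch {
  // Hypothetical stand-in for an interruptible wrapper: it checks the thread's
  // interrupt flag before delegating to the underlying iterator.
  final class Interruptible[T](val underlying: Iterator[T]) extends Iterator[T] {
    def hasNext: Boolean = {
      if (Thread.currentThread().isInterrupted) {
        throw new InterruptedException("task was interrupted")
      }
      underlying.hasNext
    }
    def next(): T = underlying.next()
  }

  // Return the iterator unchanged when it is already wrapped; wrap it otherwise.
  def wrapOnce[T](iter: Iterator[T]): Iterator[T] = iter match {
    case _: Interruptible[_] => iter
    case other => new Interruptible(other)
  }
}
```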

## How was this patch tested?

Existing tests.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20920 from jiangxb1987/SPARK-23040.

## What changes were proposed in this pull request?

`spark.shuffle.service.port` may be read at 9745ec3a61/core/src/main/scala/org/apache/spark/deploy/SparkHadoopUtil.scala (L459)

Therefore, the client configuration `spark.shuffle.service.port` does not work unless it is set as `spark.hadoop.spark.shuffle.service.port`.

- This configuration does not work:

```
bin/spark-sql --master yarn --conf spark.shuffle.service.port=7338
```

- This configuration works:

```
bin/spark-sql --master yarn --conf spark.hadoop.spark.shuffle.service.port=7338
```

This PR fixes this issue.

## How was this patch tested?

It's difficult to carry out unit testing. But I've tested it manually.

Author: Yuming Wang <yumwang@ebay.com>

Closes #20785 from wangyum/SPARK-23640.

## What changes were proposed in this pull request?

In SparkSQLCLI, `SessionState` is created before `SparkContext` is instantiated. When we use `--proxy-user` to impersonate, it is unable to initialize a metastore client to talk to the secured metastore because there is no Kerberos ticket.

This PR uses the real user's UGI to obtain a token for the owner before talking to the kerberized metastore.

## How was this patch tested?

Manually verified with a kerberized Hive metastore / HDFS.

Author: Kent Yao <yaooqinn@hotmail.com>

Closes #20784 from yaooqinn/SPARK-23639.

## What changes were proposed in this pull request?

Changed the `LauncherBackend` `set` method so that it checks whether the connection is open (using `isConnected`) before writing to it.

## How was this patch tested?

None

Author: Sahil Takiar <stakiar@cloudera.com>

Closes #20893 from sahilTakiar/master.

… with dynamic allocation

## What changes were proposed in this pull request?

Ignore errors when waiting for a `broadcast.unpersist`. This handles it the same way as `rdd.unpersist` in https://issues.apache.org/jira/browse/SPARK-22618
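A rough sketch of "log and continue" around a blocking cleanup call follows. The helper `ignoringErrors` and the warning text are assumptions for illustration; the real change lives in Spark's own unpersist path and uses its logging.

```scala
import scala.util.control.NonFatal

object UnpersistSketch {
  // Hypothetical wrapper: run a blocking cleanup action and print a warning
  // instead of rethrowing when it fails with a non-fatal error.
  // Returns true when the action completed, false when an error was ignored.
  def ignoringErrors(what: String)(action: => Unit): Boolean =
    try {
      action
      true
    } catch {
      case NonFatal(e) =>
        Console.err.println(s"WARN: error while $what, ignoring: ${e.getMessage}")
        false
    }
}
```

Fatal errors (for example `OutOfMemoryError`) are not matched by `NonFatal`, so they still propagate.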

## How was this patch tested?

The patch was tested manually against a couple of jobs that exhibit this behavior. With the change, the application no longer dies because of this and just prints the warning.

Author: Thomas Graves <tgraves@unharmedunarmed.corp.ne1.yahoo.com>

Closes #20924 from tgravescs/SPARK-23806.

This change basically rewrites the security documentation so that it's up to date with new features, more correct, and more complete.

Because security is such an important feature, I chose to move all the relevant configuration documentation to the security page, instead of having them peppered all over the place in the configuration page. This allows an almost one-stop shop for security configuration in Spark. The only exceptions are some YARN-specific minor features which I left in the YARN page.

I also re-organized the page's topics, since they didn't make a lot of sense. You had kerberos features described inside paragraphs talking about UI access control, and other oddities. It should be easier now to find information about specific Spark security features. I also enabled TOCs for both the Security and YARN pages, since that makes it easier to see what is covered.

I removed most of the comments from the SecurityManager javadoc since they just replicated information in the security doc, with different levels of out-of-dateness.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20742 from vanzin/SPARK-23572.

This particular test assumed that Hadoop libraries did not support http as a file system. Hadoop 2.9 does, so the test failed. The test now forces a non-existent implementation for the http fs, which forces the expected error.

There were also a couple of other issues in the same test: SparkSubmit arguments in the wrong order, and the wrong check later when asserting, which was being masked by the previous issues.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20895 from vanzin/SPARK-23787.

## What changes were proposed in this pull request?

Fixes SPARK-23759 by moving `connector.start()` after `connector.setHost()`.

The problem was caused by the `connector.setHost(hostName)` call coming after `connector.start()`.

## How was this patch tested?

The patch was tested after build and deployment. It requires the `SPARK_LOCAL_IP` environment variable to be set in spark-env.sh.

Author: bag_of_tricks <falbani@hortonworks.com>

Closes #20883 from felixalbani/SPARK-23759.

## What changes were proposed in this pull request?

We re-enabled the Scalastyle checker on a line of code. It was previously disabled, but it does not violate any of the rules. So there's no reason to disable the Scalastyle checker here.

## How was this patch tested?

We tested this by running `build/mvn scalastyle:check` after removing the comments that disable the checker. This check passed with no errors or warnings for Spark Core:

```
[INFO]
[INFO] ------------------------------------------------------------------------
[INFO] Building Spark Project Core 2.4.0-SNAPSHOT
[INFO] ------------------------------------------------------------------------
[INFO]
[INFO] --- scalastyle-maven-plugin:1.0.0:check (default-cli) spark-core_2.11 ---
Saving to outputFile=<path to local dir>/spark/core/target/scalastyle-output.xml
Processed 485 file(s)
Found 0 errors
Found 0 warnings
Found 0 infos
```

We did not run all tests (with `dev/run-tests`) since this Scalastyle check seemed sufficient.

## Co-contributors:

chialun-yeh

Hrayo712

vpourquie

Author: arucard21 <arucard21@gmail.com>

Closes #20880 from arucard21/scalastyle_util.

Currently, the Spark AM relies on the initial set of tokens created by the submission client to be able to talk to HDFS and other services that require delegation tokens. This means that after those tokens expire, a new AM will fail to start (e.g. when there is an application failure and re-attempts are enabled).

This PR makes it so that the first thing the AM does when the user provides a principal and keytab is to create new delegation tokens for use. This makes sure that the AM can be started irrespective of how old the original token set is. It also allows all of the token management to be done by the AM - there is no need for the submission client to set configuration values to tell the AM when to renew tokens.

Note that even though in this case the AM will not be using the delegation tokens created by the submission client, those tokens still need to be provided to YARN, since they are used to do log aggregation.

To be able to re-use the code in the AMCredentialRenewal for the above purposes, I refactored that class a bit so that it can fetch tokens into a pre-defined UGI, instead of always logging in.

Another issue with re-attempts is that, after the fix that allows the AM to restart correctly, new executors would get confused about when to update credentials, because the credential updater used the update time initially set up by the submission code. This could make the executor fail to update credentials in time, since that value would be very out of date in the situation described in the bug.

To fix that, I changed the YARN code to use the new RPC-based mechanism for distributing tokens to executors. This allowed the old credential updater code to be removed, and a lot of code in the renewer to be simplified.

I also made two currently hardcoded values (the renewal time ratio, and the retry wait) configurable; while this probably never needs to be set by anyone in a production environment, it helps with testing; that's also why they're not documented.

Tested on real cluster with a specially crafted application to test this functionality: checked proper access to HDFS, Hive and HBase in cluster mode with token renewal on and AM restarts. Tested things still work in client mode too.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20657 from vanzin/SPARK-23361.

Firstly, glob resolution will no longer swallow the remote name part (the part preceded by the `#` sign) in the `--files` or `--archives` options.

Moreover, in the special case of multiple resolutions, where remote naming does not make sense, an error is returned.

Enhanced the current test and wrote an additional test for the error case.

Author: Mihaly Toth <misutoth@gmail.com>

Closes #20853 from misutoth/glob-with-remote-name.

## What changes were proposed in this pull request?

Removal of the init-container for downloading remote dependencies. Built off of the work done by vanzin in an attempt to refactor driver/executor configuration elaborated in [this](https://issues.apache.org/jira/browse/SPARK-22839) ticket.

## How was this patch tested?

This patch was tested with unit and integration tests.

Author: Ilan Filonenko <if56@cornell.edu>

Closes #20669 from ifilonenko/remove-init-container.

## What changes were proposed in this pull request?

Minor modification. The comment below is not right.

```
/**
 * Adds a shutdown hook with the given priority. Hooks with lower priority values run
 * first.
 *
 * @param hook The code to run during shutdown.
 * @return A handle that can be used to unregister the shutdown hook.
 */
def addShutdownHook(priority: Int)(hook: () => Unit): AnyRef = {
  shutdownHooks.add(priority, hook)
}
```

## How was this patch tested?

UT

Author: zhoukang <zhoukang199191@gmail.com>

Closes #20845 from caneGuy/zhoukang/fix-shutdowncomment.

## What changes were proposed in this pull request?

With SPARK-20236, `FileCommitProtocol.instantiate()` looks for a three argument constructor, passing in the `dynamicPartitionOverwrite` parameter. If there is no such constructor, it falls back to the classic two-arg one.

When `InsertIntoHadoopFsRelationCommand` passes that `dynamicPartitionOverwrite` flag down to `FileCommitProtocol.instantiate()`, it assumes that the instantiated protocol supports the specific requirements of dynamic partition overwrite. It does not notice when this does not hold, and so the output generated may be incorrect.

This patch changes `FileCommitProtocol.instantiate()` so when `dynamicPartitionOverwrite == true`, it requires the protocol implementation to have a 3-arg constructor. Classic two arg constructors are supported when it is false.

Also it adds some debug level logging for anyone trying to understand what's going on.
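The constructor lookup described above can be sketched with plain reflection. This is an illustration under assumptions, not the patched method: `TwoArgProtocol`, `ThreeArgProtocol`, and this `instantiate` signature (which takes a `Class` rather than a class name) are hypothetical.

```scala
import scala.util.{Failure, Success, Try}

// Hypothetical stand-ins for commit protocol implementations.
class TwoArgProtocol(val jobId: String, val path: String)
class ThreeArgProtocol(val jobId: String, val path: String, val dynamicPartitionOverwrite: Boolean)

object InstantiateSketch {
  // Prefer the 3-arg constructor. Fall back to the 2-arg one only when
  // dynamic partition overwrite is not requested; otherwise fail loudly.
  def instantiate(
      clazz: Class[_],
      jobId: String,
      path: String,
      dynamicPartitionOverwrite: Boolean): AnyRef = {
    Try(clazz.getDeclaredConstructor(classOf[String], classOf[String], classOf[Boolean])) match {
      case Success(ctor) =>
        ctor.newInstance(jobId, path, Boolean.box(dynamicPartitionOverwrite)).asInstanceOf[AnyRef]
      case Failure(_: NoSuchMethodException) =>
        require(!dynamicPartitionOverwrite,
          s"${clazz.getName} has no 3-arg constructor, so it cannot be used with dynamic overwrite")
        clazz.getDeclaredConstructor(classOf[String], classOf[String])
          .newInstance(jobId, path).asInstanceOf[AnyRef]
      case Failure(e) => throw e
    }
  }
}
```

Here `require` raises an `IllegalArgumentException`; the exception type used by the actual patch is not stated in this description.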

## How was this patch tested?

Unit tests verify that

* classes with only a 2-arg constructor cannot be used with dynamic overwrite

* classes with only a 2-arg constructor can be used without dynamic overwrite

* classes with 3-arg constructors can be used with both

* the fallback to any two-arg ctor takes place after the attempt to load the 3-arg ctor

* passing in invalid class types fails as expected (regression tests on expected behavior)

Author: Steve Loughran <stevel@hortonworks.com>

Closes #20824 from steveloughran/stevel/SPARK-23683-protocol-instantiate.

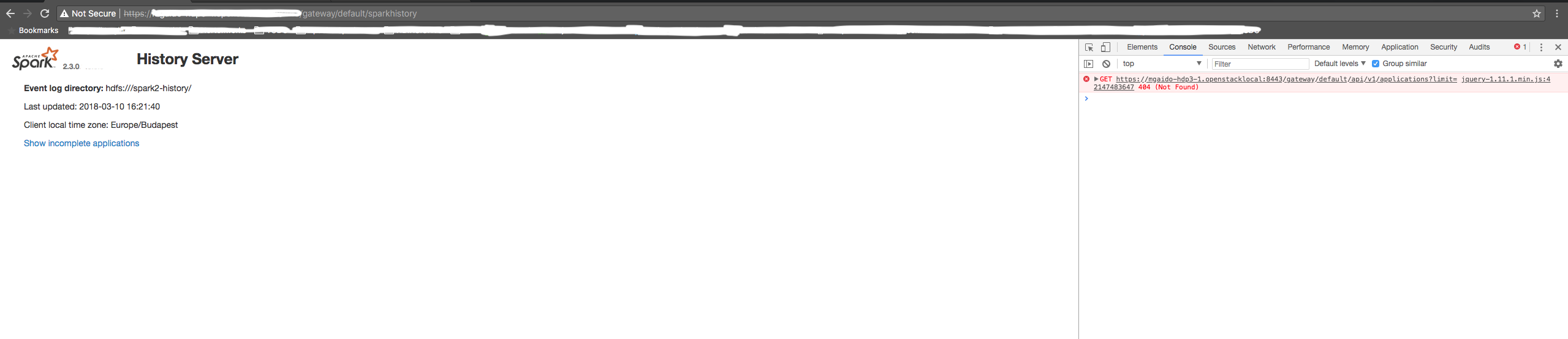

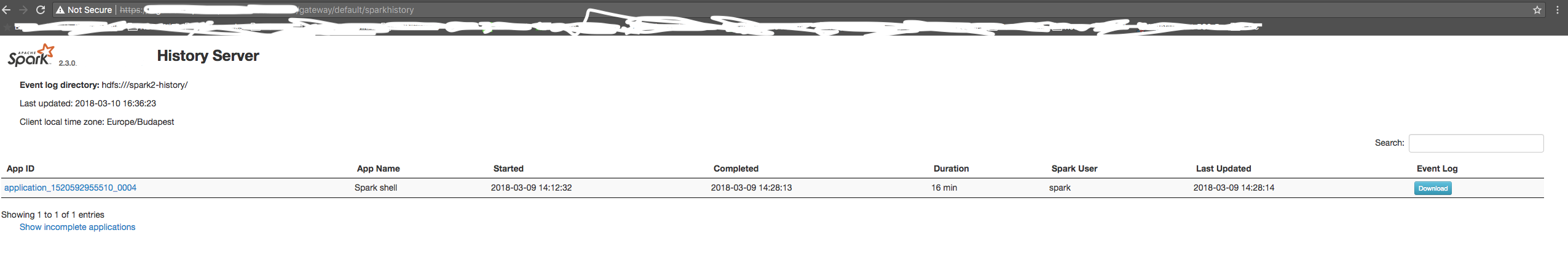

## What changes were proposed in this pull request?

SHS uses a relative path for the REST API call that gets the list of applications. When the SHS is consumed through a proxy, this can be an issue if the path doesn't end with a "/".

Therefore, we should use an absolute path for the REST call as it is done for all the other resources.

## How was this patch tested?

manual tests

Before the change:

After the change:

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20794 from mgaido91/SPARK-23644.

Jetty handlers are dynamically attached/detached while SHS is running. But the attach and detach operations might take place at the same time because load/clear in the Guava Cache is asynchronous.

## What changes were proposed in this pull request?

Add synchronization between attachSparkUI and detachSparkUI in SHS.
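A minimal sketch of the locking idea is below, assuming a hypothetical `HandlerRegistry` class in place of the history server's real handler bookkeeping. The method names mirror the ones mentioned above, but the bodies are illustrative only.

```scala
import scala.collection.mutable

// Hypothetical stand-in for the server-side bookkeeping of attached UI handlers.
class HandlerRegistry {
  private val attached = mutable.Set.empty[String]

  // Both operations lock on the same monitor, so an attach triggered by a cache
  // load cannot interleave with a detach triggered by a cache eviction.
  def attachSparkUI(appId: String): Unit = synchronized { attached += appId }

  def detachSparkUI(appId: String): Unit = synchronized { attached -= appId }

  def attachedCount: Int = synchronized { attached.size }
}
```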

## How was this patch tested?

With this patch, the missing Jetty handlers issue no longer occurs in our production cluster SHS.

Author: Ye Zhou <yezhou@linkedin.com>

Closes #20744 from zhouyejoe/SPARK-23608.

Added/corrected scaladoc for isZero on the DoubleAccumulator, CollectionAccumulator, and LongAccumulator subclasses of AccumulatorV2, particularly noting where there are requirements in addition to having a value of zero in order to return true.

## What changes were proposed in this pull request?

Three scaladoc comments are updated in AccumulatorV2.scala

No changes outside of comment blocks were made.
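To illustrate the kind of extra requirement the updated scaladoc points out, here is a simplified sketch. `SumCountAccumulator` is a hypothetical class, not the `AccumulatorV2` API; it only shows how a value of zero can differ from being in the zero state.

```scala
// Simplified stand-in for an accumulator that tracks a sum and a count.
class SumCountAccumulator {
  private var sum = 0.0
  private var count = 0L

  def add(v: Double): Unit = {
    sum += v
    count += 1
  }

  // Zero state means nothing was ever added: the sum and the count must both be zero.
  def isZero: Boolean = sum == 0.0 && count == 0L

  def value: Double = sum
}
```

After `add(0.0)` the value is still `0.0`, but `isZero` returns false because the count is no longer zero.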

## How was this patch tested?

Ran "sbt unidoc", fixed the style errors found, and reviewed the resulting local scaladoc in Firefox.

Author: smallory <s.mallory@gmail.com>

Closes #20790 from smallory/patch-1.

This change restores functionality that was inadvertently removed as part of the fix for SPARK-22372.

Also modified an existing unit test to make sure the feature works as intended.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20776 from vanzin/SPARK-23630.

## What changes were proposed in this pull request?

I propose to replace `'\n'` with the `<br>` tag in the generated HTML of the thread dump page. The `<br>` tag splits thread lines more reliably. Currently the page can look like this

<img width="1265" alt="the screen shot" src="https://user-images.githubusercontent.com/1580697/37118202-bcd98fc0-2253-11e8-9e61-c2f946869ee0.png">

if the HTML is proxied and `'\n'` is replaced by other whitespace. The change makes stack traces easier to read and copy.
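A minimal sketch of the idea, assuming the stack trace is available as a sequence of frame strings. `ThreadDumpHtml` and `render` are hypothetical names; the real page builds its markup differently.

```scala
object ThreadDumpHtml {
  // Minimal escaping of the characters that matter inside HTML text.
  private def escape(s: String): String =
    s.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")

  // Join frames with an explicit <br> so the line breaks survive even if a
  // proxy rewrites '\n' into other whitespace.
  def render(stackTrace: Seq[String]): String =
    stackTrace.map(escape).mkString("<br>")
}
```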

## How was this patch tested?

I tested it manually by checking the thread dump page and its source.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #20762 from MaxGekk/br-thread-dump.

A few different things going on:

- Remove unused methods.

- Move JSON methods to the only class that uses them.

- Move test-only methods to TestUtils.

- Make getMaxResultSize() a config constant.

- Reuse functionality from existing libraries (JRE or JavaUtils) where possible.

The change also includes changes to a few tests to call `Utils.createTempFile` correctly, so that temp dirs are created under the designated top-level temp dir instead of potentially polluting the git index.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20706 from vanzin/SPARK-23550.

These options were used to configure the built-in JRE SSL libraries when downloading files from HTTPS servers. But because they were also used to set up the now (long) removed internal HTTPS file server, their default configuration chose convenience over security by having overly lenient settings.

This change removes the configuration options that affect the JRE SSL libraries. The JRE trust store can still be configured via system properties (or globally in the JRE security config). The only lost functionality is not being able to disable the default hostname verifier when using spark-submit, which should be fine since Spark itself is not using https for any internal functionality anymore.

I also removed the HTTP-related code from the REPL class loader, since we haven't had an HTTP server for REPL-generated classes for a while.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20723 from vanzin/SPARK-23538.

## What changes were proposed in this pull request?

Before this commit, a non-interruptible iterator was returned if an aggregator or ordering was specified.

This commit also ensures that the sorter is closed even when the task is cancelled (killed) in the middle of sorting.
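The sketch below illustrates both points with a self-contained wrapper: it checks a kill flag before each element and runs a cleanup callback exactly once, whether the data is exhausted or the task is killed. `CancellableIterator`, `TaskKilledError` and the `cleanup` callback are hypothetical names, not the Spark classes touched by the patch.

```scala
import java.util.concurrent.atomic.AtomicBoolean

// Hypothetical exception type used to signal a killed task.
class TaskKilledError(msg: String) extends RuntimeException(msg)

// Hypothetical wrapper around the iterator that reads sorted/aggregated data.
class CancellableIterator[T](
    delegate: Iterator[T],
    killed: AtomicBoolean,
    cleanup: () => Unit) extends Iterator[T] {

  private var cleaned = false

  // Release resources (for example, a sorter) at most once.
  private def cleanupOnce(): Unit = if (!cleaned) {
    cleaned = true
    cleanup()
  }

  override def hasNext: Boolean = {
    if (killed.get()) {
      cleanupOnce()
      throw new TaskKilledError("task was killed")
    }
    val more = delegate.hasNext
    if (!more) cleanupOnce()
    more
  }

  override def next(): T = delegate.next()
}
```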

## How was this patch tested?

Add a unit test in JobCancellationSuite

Author: Xianjin YE <advancedxy@gmail.com>

Closes #20449 from advancedxy/SPARK-23040.

## What changes were proposed in this pull request?

The algorithm in `DefaultPartitionCoalescer.setupGroups` is responsible for picking preferred locations for coalesced partitions. It analyzes the preferred locations of input partitions. It starts by trying to create one partition for each unique location in the input. However, if the requested number of coalesced partitions is higher than the number of unique locations, it has to pick duplicate locations.

Previously, the duplicate locations would be picked by iterating over the input partitions in order, and copying their preferred locations to coalesced partitions. If the input partitions were clustered by location, this could result in severe skew.

With the fix, instead of iterating over the list of input partitions in order, we pick them at random. It's not perfectly balanced, but it's much better.
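The difference between the two strategies can be sketched as follows. This is a simplified model, not the `setupGroups` code: each input partition is reduced to a single preferred location string, and `GroupLocations`, `pickInOrder` and `pickAtRandom` are hypothetical names.

```scala
import scala.util.Random

object GroupLocations {
  // Old behaviour (simplified): walk the input partitions in order, so inputs
  // clustered by location keep yielding the same location.
  def pickInOrder(partitionLocations: IndexedSeq[String], extra: Int): Seq[String] =
    partitionLocations.take(extra)

  // New behaviour (simplified): sample input partitions at random, which
  // spreads the duplicates across locations on average.
  def pickAtRandom(
      partitionLocations: IndexedSeq[String],
      extra: Int,
      rnd: Random): Seq[String] = {
    require(partitionLocations.nonEmpty, "need at least one input partition")
    Seq.fill(extra)(partitionLocations(rnd.nextInt(partitionLocations.length)))
  }
}
```

With 50 input partitions on `hostA` followed by 50 on `hostB`, picking 10 duplicates in order always yields `hostA`, while random picking draws from both hosts.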

## How was this patch tested?

Unit test reproducing the behavior was added.

Author: Ala Luszczak <ala@databricks.com>

Closes #20664 from ala/SPARK-23496.

## What changes were proposed in this pull request?

When the shuffle dependency specifies aggregation and `dependency.mapSideCombine=false`, there is no need for aggregation and sorting on the map side, so we should be able to use serialized sorting.
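A hedged sketch of how the eligibility check changes. `ShuffleDep` and `SerializedShuffleCheck` are hypothetical names, and any other conditions that decide whether serialized sorting can be used are deliberately left out.

```scala
// Hypothetical, trimmed-down view of a shuffle dependency.
final case class ShuffleDep(aggregatorDefined: Boolean, mapSideCombine: Boolean)

object SerializedShuffleCheck {
  // Before (as described above): any aggregator ruled out serialized sorting.
  def before(dep: ShuffleDep): Boolean = !dep.aggregatorDefined

  // After: only map-side combining rules it out, because without it the map
  // side neither aggregates nor sorts.
  def after(dep: ShuffleDep): Boolean = !dep.mapSideCombine
}
```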

## How was this patch tested?

Existing unit test

Author: liuxian <liu.xian3@zte.com.cn>

Closes #20576 from 10110346/mapsidecombine.

… cause oom

## What changes were proposed in this pull request?

`blockManagerIdCache` in `BlockManagerId` never removes old values, which may cause an OOM:

`val blockManagerIdCache = new ConcurrentHashMap[BlockManagerId, BlockManagerId]()`

Whenever a new `BlockManagerId` is created, it is put into this map.

This patch uses a Guava cache for `blockManagerIdCache` instead.

A heap dump is shown in [SPARK-23508](https://issues.apache.org/jira/browse/SPARK-23508)
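To show why a bounded cache avoids the unbounded growth, here is a dependency-free sketch. It swaps in `java.util.LinkedHashMap` with eviction of the least recently used entry rather than Guava, so it illustrates the principle and not the patch; `BoundedInternCache`, `intern` and `maxSize` are hypothetical names.

```scala
import java.util.{LinkedHashMap => JLinkedHashMap, Map => JMap}

// Interning cache that keeps at most maxSize entries.
class BoundedInternCache[K <: AnyRef](maxSize: Int) {
  // accessOrder = true makes the eldest entry the least recently used one.
  private val entries = new JLinkedHashMap[K, K](16, 0.75f, true) {
    override def removeEldestEntry(eldest: JMap.Entry[K, K]): Boolean =
      this.size() > maxSize
  }

  // Return the cached instance equal to key, caching key itself if absent.
  def intern(key: K): K = synchronized {
    val cached = entries.get(key)
    if (cached != null) cached
    else {
      entries.put(key, key)
      key
    }
  }

  def entryCount: Int = synchronized { entries.size() }
}
```

Unlike the plain `ConcurrentHashMap`, the number of retained entries never exceeds `maxSize`, at the cost of occasionally re-creating an evicted instance.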

## How was this patch tested?

Existing tests.

Author: zhoukang <zhoukang199191@gmail.com>

Closes #20667 from caneGuy/zhoukang/fix-history.

As suggested in #20651, the code in `AllStagesPage` is very redundant, and modifying it is copy-and-paste work. We should avoid such a pattern, which is error prone, and have a cleaner solution which avoids code redundancy.

existing UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20663 from mgaido91/SPARK-23475_followup.

The ExecutorAllocationManager should not adjust the target number of executors when killing idle executors, as it has already adjusted the target number down based on the task backlog.

The name `replace` was misleading with DynamicAllocation on, as the target number of executors is changed outside of the call to `killExecutors`, so I adjusted that name. Also separated out the logic of `countFailures` as you don't always want that tied to `replace`.

While I was there I made two changes that weren't directly related to this:

1) Fixed `countFailures` in a couple of cases where it was getting an incorrect value since it used to be tied to `replace`, e.g. when killing executors on a blacklisted node.

2) Made it a hard error to call `sc.killExecutors` with dynamic allocation on, since that's another way the ExecutorAllocationManager and the CoarseGrainedSchedulerBackend would get out of sync.

Added a unit test case which verifies that the calls to ExecutorAllocationClient do not adjust the number of executors.

Author: Imran Rashid <irashid@cloudera.com>

Closes #20604 from squito/SPARK-23365.

## What changes were proposed in this pull request?

If Spark is run with "spark.authenticate=true", then it will fail to start in local mode.

This PR generates a secret in local mode when authentication is on.

## How was this patch tested?

Modified existing unit test.

Manually started spark-shell.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #20652 from gaborgsomogyi/SPARK-23476.

## What changes were proposed in this pull request?

SPARK-20648 introduced the status `SKIPPED` for the stages. On the UI, previously, skipped stages were shown as `PENDING`; after this change, they are not shown on the UI.

This PR introduces a new section that also shows `SKIPPED` stages in a proper table.

## How was this patch tested?

manual tests

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20651 from mgaido91/SPARK-23475.

## What changes were proposed in this pull request?

The root cause of missing completed stages is that `cleanupStages` never removes skipped stages.

This PR changes the logic to always remove skipped stages first. This is safe since the job itself contains enough information to render skipped stages in the UI.

## How was this patch tested?

The new unit tests.

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20656 from zsxwing/SPARK-23475.

## What changes were proposed in this pull request?

The issue here is that `AppStatusStore.lastStageAttempt` returns the next available stage in the store when a stage doesn't exist.

This PR adds `last(stageId)` to ensure it returns the correct `StageData`.

## How was this patch tested?

The new unit test.

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20654 from zsxwing/SPARK-23481.

The secret is used as a string in many parts of the code, so it has to be turned into a hex string to avoid issues such as the random byte sequence not containing a valid UTF8 sequence.
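A minimal sketch of the encoding step, assuming the secret starts out as random bytes. `SecretGen`, `toHex`, `newSecret` and the `bitLength` parameter are hypothetical names, not Spark settings or APIs.

```scala
import java.security.SecureRandom

object SecretGen {
  // Encode each byte as two lowercase hex digits, so the result is plain
  // ASCII and therefore always a valid UTF-8 string.
  def toHex(bytes: Array[Byte]): String =
    bytes.map(b => f"${b & 0xff}%02x").mkString

  // Generate bitLength random bits and return them hex-encoded.
  def newSecret(bitLength: Int = 256): String = {
    val bytes = new Array[Byte](bitLength / 8)
    new SecureRandom().nextBytes(bytes)
    toHex(bytes)
  }
}
```

Decoding raw random bytes as UTF-8 directly can hit invalid sequences, whereas the hex form round-trips safely wherever the secret is handled as a string.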

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20643 from vanzin/SPARK-23468.

This is much faster than finding out what the last attempt is, and the data should be the same.

There's room for improvement in this page (like only loading data for the jobs being shown, instead of loading all available jobs and sorting them), but this should bring performance on par with the 2.2 version.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20644 from vanzin/SPARK-23470.

## What changes were proposed in this pull request?

Print a more helpful message when the daemon module's stdout is empty or contains a bad port number.

## How was this patch tested?

Manually recreated the environmental issues that caused the mysterious exceptions at one site. Tested that the expected messages are logged.

Also, ran all Scala unit tests.

Author: Bruce Robbins <bersprockets@gmail.com>

Closes #20424 from bersprockets/SPARK-23240_prop2.