The commons-crypto library does some questionable error handling internally,

which can lead to JVM crashes if some call into native code fails and cleans

up state it should not.

Until the library is fixed, this change adds some workarounds in Spark code

so that when an error is detected on the commons-crypto side, Spark avoids

calling into the library further.

Tested with existing and added unit tests.

Closes #22557 from vanzin/SPARK-25535.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

While working on another PR, I noticed that there is quite a bit of legacy Java code that can be cleaned up, for example by using features from Java 8 such as:

- Collection libraries

- Try-with-resource blocks

No logic has been changed. I think it is important to have a solid codebase with examples that will inspire future PRs to follow best practices.

What are your thoughts on this?

This makes the code easier to read, and using try-with-resources makes it less likely that someone forgets to close a resource.
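As a minimal sketch of the kind of cleanup described above (the class and method names here are hypothetical and not taken from the Spark codebase), the two methods below read the first line of a text, once with a manual `finally` block and once with a try-with-resources block:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;

// Hypothetical example class; not part of Spark.
public class TryWithResourcesSketch {
    // Manual style: the reader has to be closed by hand in a finally block.
    static String firstLineManualClose(String text) {
        BufferedReader reader = new BufferedReader(new StringReader(text));
        try {
            return reader.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            try {
                reader.close();
            } catch (IOException e) {
                // Nothing useful to do if closing fails in this sketch.
            }
        }
    }

    // Try-with-resources: the reader is closed automatically when the block
    // exits, whether it returns normally or throws.
    static String firstLine(String text) {
        try (BufferedReader reader = new BufferedReader(new StringReader(text))) {
            return reader.readLine();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(firstLine("hello\nworld"));
    }
}
```

Both methods return the same value; the second one simply has no close call to forget.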

## What changes were proposed in this pull request?

No changes in the logic of Spark, but more in the aesthetics of the code.

## How was this patch tested?

Using the existing unit tests. Since no logic is changed, the existing unit tests should pass.

Closes #22637 from Fokko/SPARK-25408.

Authored-by: Fokko Driesprong <fokkodriesprong@godatadriven.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

While working on another PR, I noticed that there is quite a bit of legacy Java code that can be cleaned up, for example by using features from Java 8 such as:

- Collection libraries

- Try-with-resource blocks

No logic has been changed.

What are your thoughts on this?

This makes the code easier to read, and using try-with-resources makes it less likely that someone forgets to close a resource.

Closes #22399 from Fokko/SPARK-25408.

Authored-by: Fokko Driesprong <fokkodriesprong@godatadriven.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Rename the method `benchmark` in `BenchmarkBase` to `runBenchmarkSuite`. Also add comments.

Currently the method name `benchmark` is a bit confusing. The name is also the same as the one used for instances of `Benchmark`:

f246813afb/sql/hive/src/test/scala/org/apache/spark/sql/hive/orc/OrcReadBenchmark.scala (L330-L339)

## How was this patch tested?

Unit test.

Closes #22599 from gengliangwang/renameBenchmarkSuite.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR adds a rule to enforce `.toLowerCase(Locale.ROOT)` or `.toUpperCase(Locale.ROOT)`.

It produces an error as below:

```

[error] Are you sure that you want to use toUpperCase or toLowerCase without the root locale? In most cases, you

[error] should use toUpperCase(Locale.ROOT) or toLowerCase(Locale.ROOT) instead.

[error] If you must use toUpperCase or toLowerCase without the root locale, wrap the code block with

[error] // scalastyle:off caselocale

[error] .toUpperCase

[error] .toLowerCase

[error] // scalastyle:on caselocale

```

This PR excludes from the rule, in the way shown above, the SQL code paths that handle externally supplied names such as table names and column names.

For test suites, or when it's clear there's no locale problem such as the Turkish locale problem, it uses `Locale.ROOT`.

One minor problem is that `UTF8String` has its own `toLowerCase` and `toUpperCase` methods, and the new rule detects them as well. They are ignored.
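The Turkish locale problem mentioned above can be illustrated with a small, self-contained sketch (the class name is hypothetical). In Turkish, the uppercase letter `I` lowercases to the dotless `ı` (U+0131), so a locale-sensitive `toLowerCase` can silently change identifiers:

```java
import java.util.Locale;

// Hypothetical example class; not part of Spark.
public class CaseLocaleSketch {
    // Lowercases using the given locale, so the result is locale-dependent.
    static String lower(String s, Locale locale) {
        return s.toLowerCase(locale);
    }

    public static void main(String[] args) {
        Locale turkish = Locale.forLanguageTag("tr");
        // With the Turkish locale, 'I' becomes dotless 'ı' instead of 'i'.
        System.out.println(lower("TITLE", turkish));
        // Locale.ROOT applies the locale-independent mapping.
        System.out.println(lower("TITLE", Locale.ROOT));
    }
}
```

With `Locale.ROOT` the result does not depend on the default locale of the JVM that runs the code.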

## How was this patch tested?

Manually tested, and Jenkins tests.

Closes #22581 from HyukjinKwon/SPARK-25565.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Since we don't fail a job when `AccumulatorV2.merge` fails, we should try to update the remaining accumulators so that they can still report correct values.
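The idea can be sketched in isolation as follows. This is only an illustration under stated assumptions: it does not use `AccumulatorV2` or any Spark class, and `applyAll` and `demo` are hypothetical names. Each update is wrapped in its own `try` block, so one failing update does not stop the ones after it:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example class; plain Runnables stand in for accumulator merges.
public class MergeAllSketch {
    // Runs every update. A failing update is counted and skipped, so the
    // updates after it still run. Returns the number of failed updates.
    static int applyAll(List<Runnable> updates) {
        int failures = 0;
        for (Runnable update : updates) {
            try {
                update.run();
            } catch (RuntimeException e) {
                failures++;
            }
        }
        return failures;
    }

    // Three updates where the second one throws.
    // Returns {first total, second total, number of failures}.
    static long[] demo() {
        long[] totals = new long[2];
        List<Runnable> updates = new ArrayList<>();
        updates.add(() -> totals[0] += 5);
        updates.add(() -> { throw new IllegalStateException("merge failed"); });
        updates.add(() -> totals[1] += 7);
        int failures = applyAll(updates);
        return new long[] {totals[0], totals[1], failures};
    }

    public static void main(String[] args) {
        long[] r = demo();
        System.out.println(r[0] + " " + r[1] + " failures=" + r[2]);
    }
}
```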

## How was this patch tested?

The new unit test.

Closes #22586 from zsxwing/SPARK-25568.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Heartbeat shouldn't include accumulators for zero metrics.

Heartbeats sent from executors to the driver every 10 seconds contain metrics and are generally on the order of a few KBs. However, for large jobs with lots of tasks, heartbeats can be on the order of tens of MBs, causing tasks to die with heartbeat failures. We can mitigate this by not sending zero metrics to the driver.
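A minimal sketch of the mitigation, assuming metrics can be modelled as a name-to-value map (the real heartbeat payload in Spark is structured differently, and the names below are hypothetical):

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical example class; a plain map stands in for accumulator updates.
public class NonZeroMetricsSketch {
    // Returns only the entries whose value is non-zero, preserving order.
    static Map<String, Long> nonZero(Map<String, Long> metrics) {
        Map<String, Long> result = new LinkedHashMap<>();
        for (Map.Entry<String, Long> entry : metrics.entrySet()) {
            if (entry.getValue() != 0L) {
                result.put(entry.getKey(), entry.getValue());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Long> metrics = new LinkedHashMap<>();
        metrics.put("bytesRead", 0L);
        metrics.put("recordsRead", 42L);
        metrics.put("bytesSpilled", 0L);
        // Only the non-zero entry would be sent.
        System.out.println(nonZero(metrics));
    }
}
```

The saving comes from tasks that have many metrics still at zero, which is common early in a task's lifetime.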

Closes #22473 from mukulmurthy/25449-heartbeat.

Authored-by: Mukul Murthy <mukul.murthy@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

The test in OpenHashMapSuite that exercises large numbers of items is flaky and sometimes throws OOM.

Considering the original work #6763 that added this test, the test can instead be run in OpenHashSetSuite. Doing so should save memory, because OpenHashMap allocates two more arrays when growing the map/set.

## How was this patch tested?

Existing tests.

Closes #22569 from viirya/SPARK-25542.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Special-case the situation where we know the partitioner and the requested number of output partitions is the same as that of the current partitioner, to avoid a shuffle and instead compute distinct inside each partition.
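Why this is safe can be sketched without Spark: when equal values are guaranteed to be in the same partition, removing duplicates inside each partition gives a globally distinct result and no data has to move. The code below is an illustration only; lists of lists stand in for RDD partitions and all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;

// Hypothetical example class; lists of lists stand in for RDD partitions.
public class PartitionLocalDistinctSketch {
    // Hash-partitions values so that equal values always share a partition.
    static List<List<Integer>> partitionByHash(List<Integer> values, int numPartitions) {
        List<List<Integer>> parts = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            parts.add(new ArrayList<>());
        }
        for (Integer v : values) {
            parts.get(Math.floorMod(v.hashCode(), numPartitions)).add(v);
        }
        return parts;
    }

    // Removes duplicates inside each partition; nothing crosses partitions.
    static List<List<Integer>> distinctWithinPartitions(List<List<Integer>> parts) {
        List<List<Integer>> out = new ArrayList<>();
        for (List<Integer> part : parts) {
            out.add(new ArrayList<>(new LinkedHashSet<>(part)));
        }
        return out;
    }

    public static void main(String[] args) {
        List<List<Integer>> parts = partitionByHash(Arrays.asList(1, 2, 3, 1, 2, 5), 2);
        System.out.println(distinctWithinPartitions(parts));
    }
}
```

The number of partitions and the placement of each value are unchanged by the second step, which mirrors the property the new unit test checks for the partitioner.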

## How was this patch tested?

New unit test that verifies the partitioner does not change if the partitioner is known and distinct is called with the same target number of partitions.

Closes #22010 from holdenk/SPARK-21436-take-advantage-of-known-partioner-for-distinct-on-rdds.

Authored-by: Holden Karau <holden@pigscanfly.ca>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Previously SPARK-24519 created a modifiable config SHUFFLE_MIN_NUM_PARTS_TO_HIGHLY_COMPRESS. However, the config is parsed every time a MapStatus is created, which could be very expensive. Another problem with the previous approach is that it created the illusion that this can be changed dynamically at runtime, which was not true. This PR changes it so the config is computed only once.

## How was this patch tested?

Removed a test case that's no longer valid.

Closes #22521 from rxin/SPARK-24519.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Currently there are two classes named `BenchmarkBase`:

1. `org.apache.spark.util.BenchmarkBase`

2. `org.apache.spark.sql.execution.benchmark.BenchmarkBase`

This is very confusing. The benchmark object `org.apache.spark.sql.execution.benchmark.FilterPushdownBenchmark` uses `org.apache.spark.util.BenchmarkBase`, even though there is another class `BenchmarkBase` in its own package.

Here I propose:

1. Move `org.apache.spark.util.BenchmarkBase` to the test package of the core module, in package `org.apache.spark.benchmark`.

2. Move `org.apache.spark.util.Benchmark` to the test package of the core module, in package `org.apache.spark.benchmark`.

3. Rename the class `org.apache.spark.sql.execution.benchmark.BenchmarkBase` to `BenchmarkWithCodegen`.

## How was this patch tested?

Unit test

Closes #22513 from gengliangwang/refactorBenchmarkBase.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

A continuation of squito's executor plugin task. At his request, I took his code, added testing, and moved the plugin initialization to a separate thread.

Executor plugins now run on one separate thread, so the executor does not wait on them.

## How was this patch tested?

Added test cases that test using a sample plugin.

Closes #22192 from NiharS/executorPlugin.

Lead-authored-by: Nihar Sheth <niharrsheth@gmail.com>

Co-authored-by: NiharS <niharrsheth@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This reverts a sequence of PRs, based on the discussion and comments at https://github.com/apache/spark/pull/16677#issuecomment-422650759.

#22344, #22330, #22239, #16677

## How was this patch tested?

Existing tests.

Closes #22481 from viirya/revert-SPARK-19355-1.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

We've seen some flakiness in Jenkins in SchedulerIntegrationSuite, which looks like it just needs a

longer timeout.

Closes #22385 from squito/SPARK-25400.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR ensures that `super.afterAll()` is called in overridden `afterAll()` methods of test suites.

* Some suites did not call `super.afterAll()`

* Some suites may call `super.afterAll()` only under certain conditions

* Others never call `super.afterAll()`.

This PR also ensures that `super.beforeAll()` is called in overridden `beforeAll()` methods of test suites.
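One common way to guarantee the parent cleanup runs is a `try`/`finally` block. The sketch below assumes that pattern and uses hypothetical Java classes in place of the ScalaTest suites; it is not the exact code of this change.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example classes; they stand in for ScalaTest suites.
public class AfterAllSketch {
    static class BaseSuite {
        final List<String> log = new ArrayList<>();

        void afterAll() {
            log.add("base cleanup");
        }
    }

    static class MySuite extends BaseSuite {
        @Override
        void afterAll() {
            try {
                log.add("suite cleanup");
                // Simulate a failure in the suite's own cleanup.
                throw new IllegalStateException("suite cleanup failed");
            } finally {
                // Runs even though the code above threw.
                super.afterAll();
            }
        }
    }

    // Runs the cleanup and returns the order in which the steps executed.
    static List<String> demo() {
        MySuite suite = new MySuite();
        try {
            suite.afterAll();
        } catch (IllegalStateException expected) {
            // The failure still propagates after the base cleanup has run.
        }
        return suite.log;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```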

## How was this patch tested?

Existing UTs

Closes #22337 from kiszk/SPARK-25338.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Correct some comparisons between unrelated types to what they seem to have been trying to do.
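As a generic illustration of this class of bug (these are not the specific comparisons fixed in this change, and the class name is hypothetical), `equals` accepts any `Object`, so comparing values of unrelated types compiles but is always false:

```java
// Hypothetical example class; not code from this change.
public class UnrelatedTypesSketch {
    // Compares a Long with an Integer: compiles, but is always false.
    static boolean longEqualsInt() {
        Long id = 1L;
        return id.equals(1);
    }

    // Compares a Long with a Long: what was probably intended.
    static boolean longEqualsLong() {
        Long id = 1L;
        return id.equals(1L);
    }

    // Compares a String with a StringBuilder: always false.
    static boolean stringEqualsBuilder() {
        return "abc".equals(new StringBuilder("abc"));
    }

    // Compares the character content instead.
    static boolean stringContentEqualsBuilder() {
        return "abc".contentEquals(new StringBuilder("abc"));
    }

    public static void main(String[] args) {
        System.out.println(longEqualsInt() + " " + longEqualsLong());
        System.out.println(stringEqualsBuilder() + " " + stringContentEqualsBuilder());
    }
}
```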

## How was this patch tested?

Existing tests.

Closes #22384 from srowen/SPARK-25398.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Block info is updated from executors at certain points, such as when an RDD is cached. However, when an RDD is removed by unpersisting, no block info update is requested, so the block info becomes stale.

There are a few options to fix this:

1. Ask to update block info when unpersisting

This is the simplest, but it changes driver-executor communication a bit.

2. Update block info when processing the RDD unpersist event

We send a `SparkListenerUnpersistRDD` event when unpersisting an RDD. When processing this event, we can update the block info of the RDD. This only changes event-processing code, so the risk seems lower.

Currently this patch takes option 2 for its lower risk. If we agree that the first option carries no risk, we can switch to it.

## How was this patch tested?

Unit tests.

Closes #22341 from viirya/SPARK-24889.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Stop trimming values of properties loaded from a file
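A small sketch of why trimming can be harmful, using `java.util.Properties` directly rather than Spark's own loading code. The key name `delimiter` and the class name are hypothetical. A value that consists of an escaped newline is meaningful whitespace, and `trim()` turns it into an empty string:

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical example class; it uses java.util.Properties, not Spark code.
public class PropertyTrimSketch {
    // Loads properties-file text and returns the value stored under the key.
    static String load(String fileText, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(fileText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // The file contains the characters: delimiter=\n
        // Properties decodes the escape into a single newline character.
        String value = load("delimiter=\\n", "delimiter");
        System.out.println("loaded length:  " + value.length());
        // Trimming discards the newline, so the setting is lost.
        System.out.println("trimmed length: " + value.trim().length());
    }
}
```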

## How was this patch tested?

Added unit test demonstrating the issue hit in production.

Closes #22213 from gerashegalov/gera/SPARK-25221.

Authored-by: Gera Shegalov <gera@apache.org>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When running TPC-DS benchmarks on the 2.4 release, npoggi and winglungngai saw more than 10% performance regression on the following queries: q67, q24a and q24b. After applying the PR https://github.com/apache/spark/pull/22338, the performance regression still exists. When the changes in https://github.com/apache/spark/pull/19222 are reverted, npoggi and winglungngai found that the performance regression is resolved. Thus, this PR reverts the related changes to unblock the 2.4 release.

In a future release, we can continue the investigation and find the root cause of the regression.

## How was this patch tested?

The existing test cases

Closes #22361 from gatorsmile/revertMemoryBlock.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Add new executor level memory metrics (JVM used memory, on/off heap execution memory, on/off heap storage memory, on/off heap unified memory, direct memory, and mapped memory), and expose via the executors REST API. This information will help provide insight into how executor and driver JVM memory is used, and for the different memory regions. It can be used to help determine good values for spark.executor.memory, spark.driver.memory, spark.memory.fraction, and spark.memory.storageFraction.

## What changes were proposed in this pull request?

An ExecutorMetrics class is added, with jvmUsedHeapMemory, jvmUsedNonHeapMemory, onHeapExecutionMemory, offHeapExecutionMemory, onHeapStorageMemory, offHeapStorageMemory, onHeapUnifiedMemory, offHeapUnifiedMemory, directMemory and mappedMemory. The new ExecutorMetrics is sent by executors to the driver as part of the Heartbeat. A heartbeat is added for the driver as well, to collect these metrics for the driver.

The EventLoggingListener stores information about the peak values for each metric, per active stage and executor. When a StageCompleted event is seen, a StageExecutorsMetrics event will be logged for each executor, with peak values for the stage.

The AppStatusListener records the peak values for each memory metric.

The new memory metrics are added to the executors REST API.

## How was this patch tested?

New unit tests have been added. This was also tested on our cluster.

Author: Edwina Lu <edlu@linkedin.com>

Author: Imran Rashid <irashid@cloudera.com>

Author: edwinalu <edwina.lu@gmail.com>

Closes #21221 from edwinalu/SPARK-23429.2.

## What changes were proposed in this pull request?

This adds a test following https://github.com/apache/spark/pull/21638

## How was this patch tested?

Existing tests and new test.

Closes #22356 from srowen/SPARK-22357.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

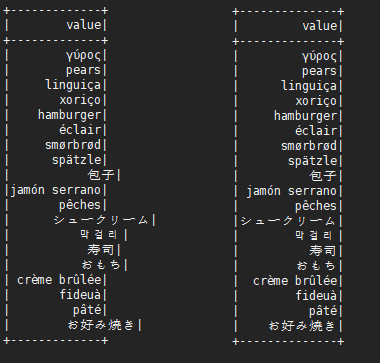

This is not a perfect solution. It is designed to solve the problem while keeping complexity to a minimum.

It is effective for English, Chinese characters, Japanese, Korean and so on.

```scala

before:

+---+---------------------------+-------------+

|id |中国 |s2 |

+---+---------------------------+-------------+

|1 |ab |[a] |

|2 |null |[中国, abc] |

|3 |ab1 |[hello world]|

|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |

|5 |中国(你好)a |[“中(国), 312] |

|6 |中国山(东)服务区 |[“中(国)] |

|7 |中国山东服务区 |[中(国)] |

|8 | |[中国] |

+---+---------------------------+-------------+

after:

+---+-----------------------------------+----------------+

|id |中国 |s2 |

+---+-----------------------------------+----------------+

|1 |ab |[a] |

|2 |null |[中国, abc] |

|3 |ab1 |[hello world] |

|4 |か行 きゃ(kya) きゅ(kyu) きょ(kyo) |[“中国] |

|5 |中国(你好)a |[“中(国), 312]|

|6 |中国山(东)服务区 |[“中(国)] |

|7 |中国山东服务区 |[中(国)] |

|8 | |[中国] |

+---+-----------------------------------+----------------+

```

## What changes were proposed in this pull request?

When there are wide characters such as Chinese or Japanese characters in the data, the show method has an alignment problem.

This PR tries to fix this problem.
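One way to approach this kind of alignment is to count a wide character as two columns when padding. The sketch below assumes a fixed set of Unicode ranges for full-width characters; the ranges, class name, and method names are illustrative assumptions and not necessarily what this change uses, and the list is not a complete East Asian Width table.

```java
import java.util.regex.Pattern;

// Hypothetical example class; the ranges below are an assumption.
public class DisplayWidthSketch {
    // Characters assumed to occupy two terminal columns.
    private static final Pattern FULL_WIDTH = Pattern.compile(
        "[\\u1100-\\u115F\\u2E80-\\uA4CF\\uAC00-\\uD7A3"
            + "\\uF900-\\uFAFF\\uFE30-\\uFE4F\\uFF00-\\uFF60\\uFFE0-\\uFFE6]");

    // Counts two columns per full-width character and one per other character.
    static int displayWidth(String s) {
        int width = 0;
        for (int i = 0; i < s.length(); i++) {
            String ch = String.valueOf(s.charAt(i));
            width += FULL_WIDTH.matcher(ch).matches() ? 2 : 1;
        }
        return width;
    }

    // Pads with spaces until the display width reaches targetWidth.
    static String padRight(String s, int targetWidth) {
        StringBuilder sb = new StringBuilder(s);
        for (int w = displayWidth(s); w < targetWidth; w++) {
            sb.append(' ');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Both cells are padded to six display columns.
        System.out.println("|" + padRight("\u4e2d\u56fd", 6) + "|");
        System.out.println("|" + padRight("ab", 6) + "|");
    }
}
```

Padding by display width rather than by `length()` is what keeps the column borders lined up in a monospaced terminal.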

Closes #22048 from xuejianbest/master.

Authored-by: xuejianbest <384329882@qq.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Upgrade chill to 0.9.3, Kryo to 4.0.2, to get bug fixes and improvements.

The resolved tickets include:

- SPARK-25258 Upgrade kryo package to version 4.0.2

- SPARK-23131 Kryo raises StackOverflow during serializing GLR model

- SPARK-25176 Kryo fails to serialize a parametrised type hierarchy

More details:

https://github.com/twitter/chill/releases/tag/v0.9.3

cc3910d501

## How was this patch tested?

Existing tests.

Closes #22179 from wangyum/SPARK-23131.

Lead-authored-by: Yuming Wang <yumwang@ebay.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

An alternative fix for https://github.com/apache/spark/pull/21698

When Spark reruns tasks for an RDD, there are 3 different behaviors:

1. determinate. Always returns the same result with the same order when rerun.

2. unordered. Returns the same data set in random order when rerun.

3. indeterminate. Returns a different result when rerun.

Normally Spark doesn't need to care about this. Spark runs stages one by one; when a task fails, Spark just reruns it. Although the rerun task may return a different result, users will not be surprised.

However, Spark may rerun a finished stage when it sees fetch failures. When this happens, Spark needs to rerun all the tasks of all the succeeding stages if the RDD output is indeterminate, because the input of the succeeding stages has changed.

If the RDD output is determinate, we only need to rerun the failed tasks of the succeeding stages, because the input doesn't change.

If the RDD output is unordered, it's the same as determinate, because the shuffle partitioner is always deterministic (the round-robin partitioner is not a shuffle partitioner that extends `org.apache.spark.Partitioner`), so the reducers will still get the same input data set.

This PR fixes the failure handling for `repartition`, to avoid correctness issues.

For `repartition`, it applies a stateful map function to generate a round-robin id, which is order sensitive and makes the RDD's output indeterminate. When the stage that contains `repartition` reruns, we must also rerun all the tasks of all the succeeding stages.
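The order sensitivity of round-robin assignment can be seen in a small standalone sketch. It does not use Spark; lists stand in for partitions and the names are hypothetical. The same records arriving in a different order end up in different partitions:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical example class; lists stand in for output partitions.
public class RoundRobinSketch {
    // Assigns records to partitions in arrival order. The target partition
    // depends on a record's position in the input, not on its value.
    static List<List<String>> roundRobin(List<String> records, int numPartitions) {
        List<List<String>> parts = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            parts.add(new ArrayList<>());
        }
        int next = 0; // stateful counter
        for (String record : records) {
            parts.get(next).add(record);
            next = (next + 1) % numPartitions;
        }
        return parts;
    }

    public static void main(String[] args) {
        // Same data set, different arrival order, different partition contents.
        System.out.println(roundRobin(Arrays.asList("a", "b", "c"), 2));
        System.out.println(roundRobin(Arrays.asList("b", "a", "c"), 2));
    }
}
```

If only some downstream tasks were rerun against the second layout while others kept results computed from the first, a record could be counted twice or not at all, which is the correctness issue the text describes.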

**future improvement:**

1. Currently we can't roll back and rerun a shuffle map stage, and just fail. We should fix it later. https://issues.apache.org/jira/browse/SPARK-25341

2. Currently we can't roll back and rerun a result stage, and just fail. We should fix it later. https://issues.apache.org/jira/browse/SPARK-25342

3. We should provide a public API to allow users to tag the random level of the RDD's computing function.

## How is this pull request tested?

a new test case

Closes #22112 from cloud-fan/repartition.

Lead-authored-by: Wenchen Fan <wenchen@databricks.com>

Co-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

Running a large Spark job with speculation turned on was causing executor heartbeats to time out on the driver end after some time, and eventually, after hitting the max number of executor failures, the job would fail.

## What changes were proposed in this pull request?

The main reason for the heartbeat timeouts was that the heartbeat-receiver-event-loop-thread was blocked waiting on the TaskSchedulerImpl object, which was being held by one of the dispatcher-event-loop threads executing the method dequeueSpeculativeTasks() in TaskSetManager.scala. On further analysis of the heartbeat receiver method executorHeartbeatReceived() in the TaskSchedulerImpl class, we found that instead of waiting to acquire the lock on the TaskSchedulerImpl object, we can remove that lock and make the operations on the global variables inside the code block atomic. The block of code in that method only uses one global HashMap, taskIdToTaskSetManager. By making that map a ConcurrentHashMap, we ensure atomicity of operations and speed up the heartbeat receiver thread.
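The shape of the change can be sketched as follows. This is an illustration only: the class is hypothetical, `String` values stand in for the task set managers, and only the field name `taskIdToTaskSetManager` comes from the description above. The lookup method has no `synchronized` keyword, so it never waits for a lock held by another thread:

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical example class; String values stand in for TaskSetManager.
public class HeartbeatLookupSketch {
    // Individual get/put/remove calls on a ConcurrentHashMap are thread-safe,
    // so readers do not need to hold a lock on the enclosing object.
    private final Map<Long, String> taskIdToTaskSetManager = new ConcurrentHashMap<>();

    void register(long taskId, String manager) {
        taskIdToTaskSetManager.put(taskId, manager);
    }

    void unregister(long taskId) {
        taskIdToTaskSetManager.remove(taskId);
    }

    // Called on the heartbeat path: a plain lookup without synchronization.
    // Returns null when the task is unknown.
    String managerFor(long taskId) {
        return taskIdToTaskSetManager.get(taskId);
    }

    public static void main(String[] args) {
        HeartbeatLookupSketch scheduler = new HeartbeatLookupSketch();
        scheduler.register(1L, "taskSet-0");
        System.out.println(scheduler.managerFor(1L));
        System.out.println(scheduler.managerFor(2L));
    }
}
```

This only guarantees that each single map operation is atomic; a sequence of several operations would still need its own coordination.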

## How was this patch tested?

Screenshots of the thread dump have been attached below:

**heartbeat-receiver-event-loop-thread:**

<img width="1409" alt="screen shot 2018-08-24 at 9 19 57 am" src="https://user-images.githubusercontent.com/22228190/44593413-e25df780-a788-11e8-9520-176a18401a59.png">

**dispatcher-event-loop-thread:**

<img width="1409" alt="screen shot 2018-08-24 at 9 21 56 am" src="https://user-images.githubusercontent.com/22228190/44593484-13d6c300-a789-11e8-8d88-34b1d51d4541.png">

Closes #22221 from pgandhi999/SPARK-25231.

Authored-by: pgandhi <pgandhi@oath.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

The problem occurs because the stage object is removed from liveStages in

AppStatusListener onStageCompletion. Because of this, any onTaskEnd event

received after the onStageCompletion event does not update stage metrics.

The fix is to retain stage objects in liveStages until all tasks are complete.

1. Fixed the reproducible example posted in the JIRA

2. Added unit test

Closes #22209 from ankuriitg/ankurgupta/SPARK-24415.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

The configuration parameter "spark.shuffle.service.enabled" is already defined in `package.scala`, and it is used in many places, so we can replace the string literal with `SHUFFLE_SERVICE_ENABLED`.

This PR also unifies the usage of the configuration parameter "spark.shuffle.service.port" in the same way.

## How was this patch tested?

N/A

Closes #22306 from 10110346/unifiedserviceenable.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`BytesToBytesMapOnHeapSuite`.`randomizedStressTest` caused `OutOfMemoryError` on several test runs. Seems better to reduce memory usage in this test.

## How was this patch tested?

Unit tests.

Closes #22297 from viirya/SPARK-25290.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

The Spark scheduler can hang when there are fetch failures, a lost executor, tasks running on the lost executor, and multiple stage attempts. To fix this, we always unregister the pending partition on task completion.

## What changes were proposed in this pull request?

This PR actually reverts the change in SPARK-19263, so that it always does shuffleStage.pendingPartitions -= task.partitionId. The change in SPARK-23433 should fix the issue originally from SPARK-19263.

## How was this patch tested?

Unit tests. The condition depends on a race that I haven't been able to reproduce on demand; I only see it occasionally on customers' jobs in a real cluster.

I am also working on adding spark scheduler integration tests.

Closes #21976 from tgravescs/SPARK-24909.

Authored-by: Thomas Graves <tgraves@unharmedunarmed.corp.ne1.yahoo.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR adds a unit test for OpenHashMap. This can help developers distinguish between 0/0.0/0L and null.

## How was this patch tested?

Closes #22241 from 10110346/openhashmap.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

When replicating large cached RDD blocks, it can be helpful to replicate

them as a stream, to avoid using large amounts of memory during the

transfer. This also allows blocks larger than 2GB to be replicated.

Added unit tests in DistributedSuite. Also ran tests on a cluster for

blocks > 2GB.

Closes #21451 from squito/clean_replication.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fix several bugs in failure handling of barrier execution mode:

* Mark TaskSet for a barrier stage as zombie when a task attempt fails;

* Multiple barrier task failures from a single barrier stage should not trigger multiple stage retries;

* Barrier task failure from a previous failed stage attempt should not trigger stage retry;

* Fail the job when a task from a barrier ResultStage failed;

* RDD.isBarrier() should not rely on `ShuffleDependency`s.

## How was this patch tested?

Added corresponding test cases in `DAGSchedulerSuite` and `TaskSchedulerImplSuite`.

Closes #22158 from jiangxb1987/failure.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

We should check whether the barrier stage requires more slots (to be able to launch all tasks in the barrier stage together) than the total number of currently active slots, and fail fast when a submitted barrier stage requires more slots than that total.

This PR proposes to add a new method `getNumSlots()` to `SchedulerBackend` to try to get the total number of currently active slots. Support for this new method has been added to all the first-class scheduler backends except `MesosFineGrainedSchedulerBackend`.

## How was this patch tested?

Added new test cases in `BarrierStageOnSubmittedSuite`.

Closes #22001 from jiangxb1987/SPARK-24819.

Lead-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Co-authored-by: Xiangrui Meng <meng@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

This PR solves [SPARK-22713](https://issues.apache.org/jira/browse/SPARK-22713) which describes a memory leak that occurs when an ExternalAppendOnlyMap is spilled during iteration (as opposed to insertion).

ExternalAppendOnlyMap's iterator supports spilling, but it kept a reference to the internal map (via an internal iterator) after spilling. It seems that the original code was supposed to get rid of this reference on the next iteration, but according to the detailed investigation described in the JIRA this didn't happen.

The fix simply replaces the internal iterator immediately after spilling.

## How was this patch tested?

I've introduced a new test to the test suite ExternalAppendOnlyMapSuite. This test asserts that neither the external map itself nor its iterator holds any reference to the internal map after a spill.

This approach required relaxing the access of some member variables and nested classes of ExternalAppendOnlyMap; these members are now package private and annotated with VisibleForTesting.

Closes #21369 from eyalfa/SPARK-22713__ExternalAppendOnlyMap_effective_spill.

Authored-by: Eyal Farago <eyal@nrgene.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fixing typos is sometimes very hard. It's not so easy to visually review them. Recently, I discovered a very useful tool for it, [misspell](https://github.com/client9/misspell).

This pull request fixes minor typos detected by [misspell](https://github.com/client9/misspell) except for the false positives. If you would like me to work on other files as well, let me know.

## How was this patch tested?

### before

```

$ misspell . | grep -v '.js'

R/pkg/R/SQLContext.R:354:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:424:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:445:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:495:43: "definiton" is a misspelling of "definition"

NOTICE-binary:454:16: "containd" is a misspelling of "contained"

R/pkg/R/context.R:46:43: "definiton" is a misspelling of "definition"

R/pkg/R/context.R:74:43: "definiton" is a misspelling of "definition"

R/pkg/R/DataFrame.R:591:48: "persistance" is a misspelling of "persistence"

R/pkg/R/streaming.R:166:44: "occured" is a misspelling of "occurred"

R/pkg/inst/worker/worker.R:65:22: "ouput" is a misspelling of "output"

R/pkg/tests/fulltests/test_utils.R:106:25: "environemnt" is a misspelling of "environment"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/InMemoryStoreSuite.java:38:39: "existant" is a misspelling of "existent"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/LevelDBSuite.java:83:39: "existant" is a misspelling of "existent"

common/network-common/src/main/java/org/apache/spark/network/crypto/TransportCipher.java:243:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:234:19: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:238:63: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:244:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:276:39: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

common/unsafe/src/test/scala/org/apache/spark/unsafe/types/UTF8StringPropertyCheckSuite.scala:195:15: "orgin" is a misspelling of "origin"

core/src/main/scala/org/apache/spark/api/python/PythonRDD.scala:621:39: "gauranteed" is a misspelling of "guaranteed"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/main/scala/org/apache/spark/storage/DiskStore.scala:282:18: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/util/ListenerBus.scala:64:17: "overriden" is a misspelling of "overridden"

core/src/test/scala/org/apache/spark/ShuffleSuite.scala:211:7: "substracted" is a misspelling of "subtracted"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:2468:84: "truely" is a misspelling of "truly"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:25:18: "persistance" is a misspelling of "persistence"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:26:69: "persistance" is a misspelling of "persistence"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

dev/run-pip-tests:55:28: "enviroments" is a misspelling of "environments"

dev/run-pip-tests:91:37: "virutal" is a misspelling of "virtual"

dev/merge_spark_pr.py:377:72: "accross" is a misspelling of "across"

dev/merge_spark_pr.py:378:66: "accross" is a misspelling of "across"

dev/run-pip-tests:126:25: "enviroments" is a misspelling of "environments"

docs/configuration.md:1830:82: "overriden" is a misspelling of "overridden"

docs/structured-streaming-programming-guide.md:525:45: "processs" is a misspelling of "processes"

docs/structured-streaming-programming-guide.md:1165:61: "BETWEN" is a misspelling of "BETWEEN"

docs/sql-programming-guide.md:1891:810: "behaivor" is a misspelling of "behavior"

examples/src/main/python/sql/arrow.py:98:8: "substract" is a misspelling of "subtract"

examples/src/main/python/sql/arrow.py:103:27: "substract" is a misspelling of "subtract"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/clustering/StreamingKMeans.scala:230:24: "inital" is a misspelling of "initial"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

mllib/src/test/scala/org/apache/spark/ml/clustering/KMeansSuite.scala:237:26: "descripiton" is a misspelling of "descriptions"

python/pyspark/find_spark_home.py:30:13: "enviroment" is a misspelling of "environment"

python/pyspark/context.py:937:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:938:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:939:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:940:12: "supress" is a misspelling of "suppress"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:713:8: "probabilty" is a misspelling of "probability"

python/pyspark/ml/clustering.py:1038:8: "Currenlty" is a misspelling of "Currently"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/ml/regression.py:1378:20: "paramter" is a misspelling of "parameter"

python/pyspark/mllib/stat/_statistics.py:262:8: "probabilty" is a misspelling of "probability"

python/pyspark/rdd.py:1363:32: "paramter" is a misspelling of "parameter"

python/pyspark/streaming/tests.py:825:42: "retuns" is a misspelling of "returns"

python/pyspark/sql/tests.py:768:29: "initalization" is a misspelling of "initialization"

python/pyspark/sql/tests.py:3616:31: "initalize" is a misspelling of "initialize"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerBackendUtil.scala:120:39: "arbitary" is a misspelling of "arbitrary"

resource-managers/mesos/src/test/scala/org/apache/spark/deploy/mesos/MesosClusterDispatcherArgumentsSuite.scala:26:45: "sucessfully" is a misspelling of "successfully"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerUtils.scala:358:27: "constaints" is a misspelling of "constraints"

resource-managers/yarn/src/test/scala/org/apache/spark/deploy/yarn/YarnClusterSuite.scala:111:24: "senstive" is a misspelling of "sensitive"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/SessionCatalog.scala:1063:5: "overwirte" is a misspelling of "overwrite"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala:1348:17: "compatability" is a misspelling of "compatibility"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/basicLogicalOperators.scala:77:36: "paramter" is a misspelling of "parameter"

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:1374:22: "precendence" is a misspelling of "precedence"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:238:27: "unnecassary" is a misspelling of "unnecessary"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ConditionalExpressionSuite.scala:212:17: "whn" is a misspelling of "when"

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/StreamingSymmetricHashJoinHelper.scala:147:60: "timestmap" is a misspelling of "timestamp"

sql/core/src/test/scala/org/apache/spark/sql/TPCDSQuerySuite.scala:150:45: "precentage" is a misspelling of "percentage"

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVInferSchemaSuite.scala:135:29: "infered" is a misspelling of "inferred"

sql/hive/src/test/resources/golden/udf_instr-1-2e76f819563dbaba4beb51e3a130b922:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_instr-2-32da357fc754badd6e3898dcc8989182:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-1-6e41693c9c6dceea4d7fab4c02884e4e:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-2-d9b5934457931447874d6bb7c13de478:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:9:79: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:13:110: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/annotate_stats_join.q:46:105: "distint" is a misspelling of "distinct"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/auto_sortmerge_join_11.q:29:3: "Currenly" is a misspelling of "Currently"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/avro_partitioned.q:72:15: "existant" is a misspelling of "existent"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/decimal_udf.q:25:3: "substraction" is a misspelling of "subtraction"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby2_map_multi_distinct.q:16:51: "funtion" is a misspelling of "function"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby_sort_8.q:15:30: "issueing" is a misspelling of "issuing"

sql/hive/src/test/scala/org/apache/spark/sql/sources/HadoopFsRelationTest.scala:669:52: "wiht" is a misspelling of "with"

sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/session/HiveSessionImpl.java:474:9: "Refering" is a misspelling of "Referring"

```

### after

```

$ misspell . | grep -v '.js'

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

```

Closes #22070 from seratch/fix-typo.

Authored-by: Kazuhiro Sera <seratch@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

This issue is pretty similar to [SPARK-21907](https://issues.apache.org/jira/browse/SPARK-21907).

"allocateArray" in [ShuffleInMemorySorter.reset](9b8521e53e/core/src/main/java/org/apache/spark/shuffle/sort/ShuffleInMemorySorter.java (L99)) may trigger a spill and cause ShuffleInMemorySorter to access the released `array`. Another task may then get the same memory page from the pool, so two tasks end up accessing the same memory page. When a task reads memory written by another task, many types of failures may happen. Here are some examples I have seen:

- JVM crash. (This is easy to reproduce in a unit test as we fill newly allocated and deallocated memory with 0xa5 and 0x5a bytes which usually points to an invalid memory address)

- java.lang.IllegalArgumentException: Comparison method violates its general contract!

- java.lang.NullPointerException at org.apache.spark.memory.TaskMemoryManager.getPage(TaskMemoryManager.java:384)

- java.lang.UnsupportedOperationException: Cannot grow BufferHolder by size -536870912 because the size after growing exceeds size limitation 2147483632

This PR resets states in `ShuffleInMemorySorter.reset` before calling `allocateArray` to fix the issue.

## How was this patch tested?

Without the fix, the new unit test makes the JVM crash.

Closes #22062 from zsxwing/SPARK-25081.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

A logical `Limit` is performed physically by two operations `LocalLimit` and `GlobalLimit`.

Most of the time, we gather all data into a single partition in order to run `GlobalLimit`. If the limit is very large, shuffling the data causes performance issues and also reduces parallelism.

We can avoid shuffling into a single partition if we don't care about data ordering. This patch implements this idea by running a map stage during the global limit that collects the number of rows in each partition. Each partition then retrieves its share of the limited data locally, without any shuffling, to finish the global limit.

For example, we have three partitions with rows (100, 100, 50) respectively. In global limit of 100 rows, we may take (34, 33, 33) rows for each partition locally. After global limit we still have three partitions.
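The arithmetic in the example above can be sketched as follows. This is only an illustration, not the code in this patch: `LimitSketch` and `rowsPerPartition` are hypothetical names, and the even-split strategy is an assumption inferred from the (34, 33, 33) example.

```scala
object LimitSketch {
  /** Split `limit` across partitions as evenly as possible,
    * never taking more rows than a partition actually holds. */
  def rowsPerPartition(limit: Int, counts: Seq[Int]): Seq[Int] = {
    val taken = Array.fill(counts.length)(0)
    // We can never return more rows than exist in total.
    var remaining = math.min(limit, counts.sum)
    while (remaining > 0) {
      // Partitions that still have rows left to give.
      val open = counts.indices.filter(i => taken(i) < counts(i))
      // Even share for this round; at least 1 so the loop always makes progress.
      val share = math.max(1, remaining / open.length)
      for (i <- open if remaining > 0) {
        val take = math.min(share, math.min(counts(i) - taken(i), remaining))
        taken(i) += take
        remaining -= take
      }
    }
    taken.toSeq
  }
}
```

With `limit = 100` and partition sizes `Seq(100, 100, 50)`, the first round gives each partition 33 rows and the second round hands the one leftover row to the first partition.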

If the data partitions have a certain ordering, we can't distribute the required rows evenly across partitions because that could change the data ordering. But we can still avoid shuffling.

## How was this patch tested?

Jenkins tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #16677 from viirya/improve-global-limit-parallelism.

## What changes were proposed in this pull request?

This PR fixes typos of the form `auxiliary verb + verb[s]`. This is a follow-up to #21956.

## How was this patch tested?

N/A

Closes #22040 from kiszk/spellcheck1.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The signature of the function passed to `RDDBarrier.mapPartitions()` is different from that of `RDD.mapPartitions`. The latter doesn’t take a `TaskContext`. We should make the function signatures the same to avoid confusion and misuse.

This PR proposes the following API changes:

- In `RDDBarrier`, migrate `mapPartitions` from

```

def mapPartitions[S: ClassTag](
    f: (Iterator[T], BarrierTaskContext) => Iterator[S],
    preservesPartitioning: Boolean = false): RDD[S]

```

to

```

def mapPartitions[S: ClassTag](
    f: Iterator[T] => Iterator[S],
    preservesPartitioning: Boolean = false): RDD[S]

```

- Add new static method to get a `BarrierTaskContext`:

```

object BarrierTaskContext {
  def get(): BarrierTaskContext
}

```
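One common way to back a static accessor like this is a thread-local holder, sketched below. This is an assumption for illustration, not Spark's implementation: `SketchContext` and `runWith` are hypothetical names standing in for the real task context machinery.

```scala
// Hypothetical stand-in for a per-task context; not Spark's BarrierTaskContext.
final case class SketchContext(partitionId: Int)

object SketchContext {
  private val current = new ThreadLocal[SketchContext]

  /** Returns the context installed for the calling thread, or null if none is set. */
  def get(): SketchContext = current.get()

  /** Runs `body` with `ctx` installed for the current thread, then clears it. */
  def runWith[T](ctx: SketchContext)(body: => T): T = {
    current.set(ctx)
    try body finally current.remove()
  }
}
```

With this shape, a function of type `Iterator[T] => Iterator[S]` needs no context parameter; it can call `get()` from inside its body while the task is running.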

## How was this patch tested?

Existing test cases.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #22026 from jiangxb1987/mapPartitions.

## What changes were proposed in this pull request?

Fixes for test issues that arose after Scala 2.12 support was added -- ones that only affect the 2.12 build.

## How was this patch tested?

Existing tests.

Closes #22004 from srowen/SPARK-25029.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

In this PR, I propose to replace Scala parallel collections with a new method, `parmap()`. The method uses futures to transform a sequential collection by applying a lambda function to each element in parallel. The result of `parmap` is another regular (sequential) collection.

The proposed `parmap` method addresses the fact that operations on Scala parallel collections cannot be interrupted. The ability to interrupt them is needed for reliable task preemption.
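The idea can be sketched with standard-library futures. This is a minimal sketch under stated assumptions, not the method added by this PR: `ParmapSketch`, its signature, and the fixed-size thread pool are all hypothetical choices.

```scala
import java.util.concurrent.Executors
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration.Duration

object ParmapSketch {
  /** Applies `f` to every element of `in` in parallel and returns the
    * results as a regular sequential collection, in input order. */
  def parmap[A, B](in: Seq[A], maxThreads: Int)(f: A => B): Seq[B] = {
    val pool = Executors.newFixedThreadPool(maxThreads)
    implicit val ec: ExecutionContext = ExecutionContext.fromExecutorService(pool)
    try {
      // One future per element; Future.sequence preserves the input order.
      val futures = in.map(x => Future(f(x)))
      Await.result(Future.sequence(futures), Duration.Inf)
    } finally {
      // shutdownNow() interrupts worker threads that are still running.
      pool.shutdownNow()
    }
  }
}
```

For example, `ParmapSketch.parmap(Seq(1, 2, 3), 2)(_ * 2)` yields `Seq(2, 4, 6)`. Because the work runs on an explicitly owned pool, the caller keeps a handle that can interrupt the workers.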

## How was this patch tested?

A test was added to `ThreadUtilsSuite`.

Closes #21913 from MaxGekk/par-map.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Implement BarrierTaskContext.barrier(), to support global sync between all the tasks in a barrier stage.

The function sets a global barrier and waits until all tasks in this stage hit this barrier. Similar to the MPI_Barrier function in MPI, the barrier() call blocks until all tasks in the same stage have reached this routine. The global sync finishes immediately once all tasks in the same barrier stage reach the same barrier.
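The blocking semantics described above can be illustrated in a single JVM with `java.util.concurrent.CyclicBarrier`. This is only an in-process analogy with threads standing in for tasks, not the RPC-based mechanism of this PR; `BarrierSketch` and `run` are hypothetical names.

```scala
import java.util.concurrent.CyclicBarrier
import java.util.concurrent.atomic.AtomicInteger

object BarrierSketch {
  /** Starts `numTasks` threads that each check in, wait at a shared barrier,
    * and then record how many tasks had checked in when they were released. */
  def run(numTasks: Int): Seq[Int] = {
    val barrier = new CyclicBarrier(numTasks)
    val arrived = new AtomicInteger(0)
    val seen = new Array[Int](numTasks)
    val threads = (0 until numTasks).map { i =>
      new Thread(new Runnable {
        override def run(): Unit = {
          arrived.incrementAndGet()
          barrier.await()          // blocks until all numTasks threads get here
          seen(i) = arrived.get()  // every task observes the full count
        }
      })
    }
    threads.foreach(_.start())
    threads.foreach(_.join())
    seen.toSeq
  }
}
```

No thread passes `await()` before the last one arrives, so every entry of the result equals `numTasks`.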

This PR implements BarrierTaskContext.barrier() on top of the netty-based RPC client, and introduces a new `BarrierCoordinator`, a new `BarrierCoordinatorMessage`, and a new config to handle timeouts.

## How was this patch tested?

Add `BarrierTaskContextSuite` to test `BarrierTaskContext.barrier()`

Closes #21898 from jiangxb1987/taskcontext.barrier.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In `SparkHadoopUtil.checkAccessPermission`, we consider only basic permissions when checking whether a user can access a file. This is not a complete check, as it ignores ACLs and other policies a file system may apply internally. As a result, it can wrongly report that a user cannot access a file when they actually can.

The PR proposes to delegate to the filesystem the check whether a file is accessible or not, in order to return the right result. A caching layer is added for performance reasons.

## How was this patch tested?

modified UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21895 from mgaido91/SPARK-24948.

## What changes were proposed in this pull request?

There are many warnings in the current build (for instance see https://amplab.cs.berkeley.edu/jenkins/job/spark-master-test-sbt-hadoop-2.7/4734/console).

**common**:

```

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/kvstore/src/main/java/org/apache/spark/util/kvstore/LevelDB.java:237: warning: [rawtypes] found raw type: LevelDBIterator

[warn] void closeIterator(LevelDBIterator it) throws IOException {

[warn] ^

[warn] missing type arguments for generic class LevelDBIterator<T>

[warn] where T is a type-variable:

[warn] T extends Object declared in class LevelDBIterator

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:151: warning: [deprecation] group() in AbstractBootstrap has been deprecated

[warn] if (bootstrap != null && bootstrap.group() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:152: warning: [deprecation] group() in AbstractBootstrap has been deprecated

[warn] bootstrap.group().shutdownGracefully();

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:154: warning: [deprecation] childGroup() in ServerBootstrap has been deprecated

[warn] if (bootstrap != null && bootstrap.childGroup() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:155: warning: [deprecation] childGroup() in ServerBootstrap has been deprecated

[warn] bootstrap.childGroup().shutdownGracefully();

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/util/NettyUtils.java:112: warning: [deprecation] PooledByteBufAllocator(boolean,int,int,int,int,int,int,int) in PooledByteBufAllocator has been deprecated

[warn] return new PooledByteBufAllocator(

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportClient.java:321: warning: [rawtypes] found raw type: Future

[warn] public void operationComplete(Future future) throws Exception {

[warn] ^

[warn] missing type arguments for generic class Future<V>

[warn] where V is a type-variable:

[warn] V extends Object declared in interface Future

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportResponseHandler.java:215: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, resp.streamId, resp.byteCount,

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportResponseHandler.java:215: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, resp.streamId, resp.byteCount,

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportResponseHandler.java:215: warning: [unchecked] unchecked call to StreamInterceptor(MessageHandler<T>,String,long,StreamCallback) as a member of the raw type StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, resp.streamId, resp.byteCount,

[warn] ^

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportRequestHandler.java:255: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, wrappedCallback.getID(),

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportRequestHandler.java:255: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, wrappedCallback.getID(),

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportRequestHandler.java:255: warning: [unchecked] unchecked call to StreamInterceptor(MessageHandler<T>,String,long,StreamCallback) as a member of the raw type StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, wrappedCallback.getID(),

[warn] ^

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/crypto/TransportCipher.java:270: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] region.transferTo(byteRawChannel, region.transfered());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:304: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] region.transferTo(byteChannel, region.transfered());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/test/java/org/apache/spark/network/ProtocolSuite.java:119: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] while (in.transfered() < in.count()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/test/java/org/apache/spark/network/ProtocolSuite.java:120: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] in.transferTo(channel, in.transfered());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/unsafe/src/test/java/org/apache/spark/unsafe/hash/Murmur3_x86_32Suite.java:80: warning: [static] static method should be qualified by type name, Murmur3_x86_32, instead of by an expression

[warn] Assert.assertEquals(-300363099, hasher.hashUnsafeWords(bytes, offset, 16, 42));

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/unsafe/src/test/java/org/apache/spark/unsafe/hash/Murmur3_x86_32Suite.java:84: warning: [static] static method should be qualified by type name, Murmur3_x86_32, instead of by an expression

[warn] Assert.assertEquals(-1210324667, hasher.hashUnsafeWords(bytes, offset, 16, 42));

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/unsafe/src/test/java/org/apache/spark/unsafe/hash/Murmur3_x86_32Suite.java:88: warning: [static] static method should be qualified by type name, Murmur3_x86_32, instead of by an expression

[warn] Assert.assertEquals(-634919701, hasher.hashUnsafeWords(bytes, offset, 16, 42));

[warn] ^

```

**launcher**:

```

[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/launcher/src/main/java/org/apache/spark/launcher/AbstractLauncher.java:31: warning: [rawtypes] found raw type: AbstractLauncher

[warn] public abstract class AbstractLauncher<T extends AbstractLauncher> {

[warn] ^

[warn] missing type arguments for generic class AbstractLauncher<T>

[warn] where T is a type-variable:

[warn] T extends AbstractLauncher declared in class AbstractLauncher

```

**core**:

```

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:99: method group in class AbstractBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] if (bootstrap != null && bootstrap.group() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:100: method group in class AbstractBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] bootstrap.group().shutdownGracefully()

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:102: method childGroup in class ServerBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] if (bootstrap != null && bootstrap.childGroup() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:103: method childGroup in class ServerBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] bootstrap.childGroup().shutdownGracefully()

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/util/ClosureCleanerSuite.scala:151: reflective access of structural type member method getData should be enabled

[warn] by making the implicit value scala.language.reflectiveCalls visible.

[warn] This can be achieved by adding the import clause 'import scala.language.reflectiveCalls'

[warn] or by setting the compiler option -language:reflectiveCalls.

[warn] See the Scaladoc for value scala.language.reflectiveCalls for a discussion

[warn] why the feature should be explicitly enabled.

[warn] val rdd = sc.parallelize(1 to 1).map(concreteObject.getData)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/util/ClosureCleanerSuite.scala:175: reflective access of structural type member value innerObject2 should be enabled

[warn] by making the implicit value scala.language.reflectiveCalls visible.

[warn] val rdd = sc.parallelize(1 to 1).map(concreteObject.innerObject2.getData)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/util/ClosureCleanerSuite.scala:175: reflective access of structural type member method getData should be enabled

[warn] by making the implicit value scala.language.reflectiveCalls visible.

[warn] val rdd = sc.parallelize(1 to 1).map(concreteObject.innerObject2.getData)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/LocalSparkContext.scala:32: constructor Slf4JLoggerFactory in class Slf4JLoggerFactory is deprecated: see corresponding Javadoc for more information.

[warn] InternalLoggerFactory.setDefaultFactory(new Slf4JLoggerFactory())

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:218: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] assert(wrapper.stageAttemptId === stages.head.attemptId)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:261: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] stageAttemptId = stages.head.attemptId))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:287: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] stageAttemptId = stages.head.attemptId))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:471: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] stageAttemptId = stages.last.attemptId))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:966: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] listener.onTaskStart(SparkListenerTaskStart(dropped.stageId, dropped.attemptId, task))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:972: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] listener.onTaskEnd(SparkListenerTaskEnd(dropped.stageId, dropped.attemptId,

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:976: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] .taskSummary(dropped.stageId, dropped.attemptId, Array(0.25d, 0.50d, 0.75d))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:1146: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] SparkListenerTaskEnd(stage1.stageId, stage1.attemptId, "taskType", Success, tasks(1), null))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:1150: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] SparkListenerTaskEnd(stage1.stageId, stage1.attemptId, "taskType", Success, tasks(0), null))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/storage/DiskStoreSuite.scala:197: method transfered in trait FileRegion is deprecated: see corresponding Javadoc for more information.

[warn] while (region.transfered() < region.count()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/storage/DiskStoreSuite.scala:198: method transfered in trait FileRegion is deprecated: see corresponding Javadoc for more information.

[warn] region.transferTo(byteChannel, region.transfered())

[warn] ^

```

**sql**:

```

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:534: abstract type T is unchecked since it is eliminated by erasure

[warn] assert(partitioning.isInstanceOf[T])

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:534: abstract type T is unchecked since it is eliminated by erasure

[warn] assert(partitioning.isInstanceOf[T])

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ObjectExpressionsSuite.scala:323: inferred existential type Option[Class[_$1]]( forSome { type _$1 }), which cannot be expressed by wildcards, should be enabled

[warn] by making the implicit value scala.language.existentials visible.

[warn] This can be achieved by adding the import clause 'import scala.language.existentials'

[warn] or by setting the compiler option -language:existentials.

[warn] See the Scaladoc for value scala.language.existentials for a discussion

[warn] why the feature should be explicitly enabled.

[warn] val optClass = Option(collectionCls)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/SpecificParquetRecordReaderBase.java:226: warning: [deprecation] ParquetFileReader(Configuration,FileMetaData,Path,List<BlockMetaData>,List<ColumnDescriptor>) in ParquetFileReader has been deprecated

[warn] this.reader = new ParquetFileReader(

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:178: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] (descriptor.getType() == PrimitiveType.PrimitiveTypeName.INT32 ||

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:179: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] (descriptor.getType() == PrimitiveType.PrimitiveTypeName.INT64 &&

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:181: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType() == PrimitiveType.PrimitiveTypeName.FLOAT ||

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:182: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType() == PrimitiveType.PrimitiveTypeName.DOUBLE ||

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:183: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType() == PrimitiveType.PrimitiveTypeName.BINARY))) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:198: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] switch (descriptor.getType()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:221: warning: [deprecation] getTypeLength() in ColumnDescriptor has been deprecated

[warn] readFixedLenByteArrayBatch(rowId, num, column, descriptor.getTypeLength());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:224: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] throw new IOException("Unsupported type: " + descriptor.getType());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:246: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType().toString(),

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:258: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] switch (descriptor.getType()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:384: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] throw new UnsupportedOperationException("Unsupported type: " + descriptor.getType());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/vectorized/ArrowColumnVector.java:458: warning: [static] static variable should be qualified by type name, BaseRepeatedValueVector, instead of by an expression

[warn] int index = rowId * accessor.OFFSET_WIDTH;

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/vectorized/ArrowColumnVector.java:460: warning: [static] static variable should be qualified by type name, BaseRepeatedValueVector, instead of by an expression

[warn] int end = offsets.getInt(index + accessor.OFFSET_WIDTH);

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/BenchmarkQueryTest.scala:57: a pure expression does nothing in statement position; you may be omitting necessary parentheses

[warn] case s => s

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetInteroperabilitySuite.scala:182: inferred existential type org.apache.parquet.column.statistics.Statistics[?0]( forSome { type ?0 <: Comparable[?0] }), which cannot be expressed by wildcards, should be enabled

[warn] by making the implicit value scala.language.existentials visible.

[warn] This can be achieved by adding the import clause 'import scala.language.existentials'

[warn] or by setting the compiler option -language:existentials.

[warn] See the Scaladoc for value scala.language.existentials for a discussion

[warn] why the feature should be explicitly enabled.

[warn] val columnStats = oneBlockColumnMeta.getStatistics

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/streaming/sources/ForeachBatchSinkSuite.scala:146: implicit conversion method conv should be enabled

[warn] by making the implicit value scala.language.implicitConversions visible.

[warn] This can be achieved by adding the import clause 'import scala.language.implicitConversions'

[warn] or by setting the compiler option -language:implicitConversions.

[warn] See the Scaladoc for value scala.language.implicitConversions for a discussion

[warn] why the feature should be explicitly enabled.

[warn] implicit def conv(x: (Int, Long)): KV = KV(x._1, x._2)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/streaming/continuous/shuffle/ContinuousShuffleSuite.scala:48: implicit conversion method unsafeRow should be enabled

[warn] by making the implicit value scala.language.implicitConversions visible.

[warn] private implicit def unsafeRow(value: Int) = {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetInteroperabilitySuite.scala:178: method getType in class ColumnDescriptor is deprecated: see corresponding Javadoc for more information.

[warn] assert(oneFooter.getFileMetaData.getSchema.getColumns.get(0).getType() ===

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetTest.scala:154: method readAllFootersInParallel in object ParquetFileReader is deprecated: see corresponding Javadoc for more information.

[warn] ParquetFileReader.readAllFootersInParallel(configuration, fs.getFileStatus(path)).asScala.toSeq

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/hive/src/test/java/org/apache/spark/sql/hive/test/Complex.java:679: warning: [cast] redundant cast to Complex

[warn] Complex typedOther = (Complex)other;

[warn] ^

```

**mllib**:

```

[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/mllib/src/test/scala/org/apache/spark/ml/recommendation/ALSSuite.scala:597: match may not be exhaustive.

[warn] It would fail on the following inputs: None, Some((x: Tuple2[?, ?] forSome x not in (?, ?)))

[warn] val df = dfs.find {

[warn] ^

```

This PR does not aim to fix all of them, since some look tricky to fix and there are too many warnings, including false positives (for example, a deprecated API that is still exercised by its own tests).
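For illustration, two of the Java warning kinds listed above (`[static]` and `[cast]`) are usually addressed along the lines of the sketch below. This is a minimal, hypothetical example and not the actual change made in this PR: `WarningFixSketch`, `OffsetVector`, `Point`, and the method names are made-up stand-ins for the classes named in the log.

```java
public class WarningFixSketch {

  // Hypothetical stand-in for a class that exposes a static constant.
  static class OffsetVector {
    static final int OFFSET_WIDTH = 4;
  }

  // [static] warning: reading the constant through an instance expression,
  // e.g. `accessor.OFFSET_WIDTH`, is flagged by javac -Xlint.
  // Qualifying it by the type name avoids the warning.
  static int startIndex(int rowId) {
    return rowId * OffsetVector.OFFSET_WIDTH;
  }

  // Hypothetical value class used to show the redundant-cast case.
  static final class Point {
    final int x;

    Point(int x) {
      this.x = x;
    }

    // [cast] warning: `Point typedOther = (Point) other;` is redundant
    // when `other` is already declared as Point. Dropping the cast
    // keeps the behavior and removes the warning.
    boolean sameAs(Point other) {
      Point typedOther = other;
      return x == typedOther.x;
    }
  }

  public static void main(String[] args) {
    System.out.println(startIndex(3));
    System.out.println(new Point(1).sameAs(new Point(1)));
  }
}
```

Neither change alters behavior, which is consistent with relying on existing tests for this kind of cleanup.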

## How was this patch tested?

Existing tests should cover this.

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21975 from HyukjinKwon/remove-build-warnings.

## What changes were proposed in this pull request?