## What changes were proposed in this pull request?

```scala
val tablePath = new File(s"${path.getCanonicalPath}/cOl3=c/cOl1=a/cOl5=e")
Seq(("a", "b", "c", "d", "e")).toDF("cOl1", "cOl2", "cOl3", "cOl4", "cOl5")
  .write.json(tablePath.getCanonicalPath)
val df = spark.read.json(path.getCanonicalPath).select("CoL1", "CoL5", "CoL3").distinct()
df.show()
```

It generates a wrong result; the expected row is `[a,e,c]`.

```

[c,e,a]

```

There is a bug in the rule `OptimizeMetadataOnlyQuery`: it should respect the attribute order of the original leaf node. This PR fixes it.
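
As a rough illustration of the idea (not the optimizer rule itself), the sketch below aligns partition values with an explicit attribute order by name instead of relying on the position in which they were discovered. `AttributeOrderSketch`, `partitionValues`, and `project` are hypothetical names introduced only for this example.

```scala
object AttributeOrderSketch {
  // Partition values as found in the directory layout cOl3=c/cOl1=a/cOl5=e.
  // Assumption: a plain list of (column, value) pairs stands in for the relation.
  val partitionValues: Seq[(String, String)] =
    Seq("cOl3" -> "c", "cOl1" -> "a", "cOl5" -> "e")

  // Return the values in the order of the requested columns, resolving
  // names case-insensitively, rather than in discovery order.
  def project(requested: Seq[String]): Seq[String] =
    requested.map { name =>
      partitionValues
        .collectFirst { case (col, v) if col.equalsIgnoreCase(name) => v }
        .getOrElse(throw new NoSuchElementException(s"Unknown column: $name"))
    }
}
```

Calling `AttributeOrderSketch.project(Seq("CoL1", "CoL5", "CoL3"))` yields the values for those three columns in the requested order.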

## How was this patch tested?

Added a test case

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20684 from gatorsmile/optimizeMetadataOnly.

## What changes were proposed in this pull request?

Refactor ColumnStat to be more flexible.

* Split `ColumnStat` and `CatalogColumnStat` just like `CatalogStatistics` is split from `Statistics`. This detaches how the statistics are stored from how they are processed in the query plan. `CatalogColumnStat` keeps `min` and `max` as `String`, making it not depend on dataType information.

* For `CatalogColumnStat`, parse column names from property names in the metastore (`KEY_VERSION` property), not from metastore schema. This means that `CatalogColumnStat`s can be created for columns even if the schema itself is not stored in the metastore.

* Make all fields optional. `min`, `max` and `histogram` for columns were optional already. Having them all optional is more consistent, and gives flexibility to e.g. drop some of the fields through transformations if they are difficult / impossible to calculate.

The added flexibility will make it possible to have alternative implementations for stats, and separates stats collection from stats and estimation processing in plans.

## How was this patch tested?

Refactored existing tests to work with refactored `ColumnStat` and `CatalogColumnStat`.

New tests added in `StatisticsSuite` checking that backwards / forwards compatibility is not broken.

Author: Juliusz Sompolski <julek@databricks.com>

Closes #20624 from juliuszsompolski/SPARK-23445.

## What changes were proposed in this pull request?

Remove `queryExecutionThread.interrupt()` from `ContinuousExecution`. As detailed in the JIRA, interrupting the thread is only relevant in the microbatch case; for continuous processing, the query execution can quickly clean itself up without it.

## How was this patch tested?

existing tests

Author: Jose Torres <jose@databricks.com>

Closes #20622 from jose-torres/SPARK-23441.

## What changes were proposed in this pull request?

This PR avoids printing internal schema information when an unknown column is specified in the partition columns. Instead, it prints the column names in the schema in a more readable format.

The following is an example.

Source code

```
test("save with an unknown partition column") {
  withTempDir { dir =>
    val path = dir.getCanonicalPath
    Seq(1L -> "a").toDF("i", "j").write
      .format("parquet")
      .partitionBy("unknownColumn")
      .save(path)
  }
}
```

Output without this PR

```

Partition column unknownColumn not found in schema StructType(StructField(i,LongType,false), StructField(j,StringType,true));

```

Output with this PR

```

Partition column unknownColumn not found in schema struct<i:bigint,j:string>;

```
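
The difference between the two messages comes down to how the schema is rendered. A minimal sketch of the compact form, assuming a hypothetical `Field` type in place of Spark's own schema classes:

```scala
object SchemaMessageSketch {
  // Hypothetical stand-in for a schema field: a name and a SQL type name.
  final case class Field(name: String, sqlType: String)

  // Render the fields in the compact struct<name:type,...> form.
  def catalogString(fields: Seq[Field]): String =
    fields.map(f => s"${f.name}:${f.sqlType}").mkString("struct<", ",", ">")

  // Build the error message using the readable schema rendering.
  def notFoundMessage(column: String, fields: Seq[Field]): String =
    s"Partition column $column not found in schema ${catalogString(fields)};"
}
```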

## How was this patch tested?

Manually tested

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #20653 from kiszk/SPARK-23459.

**The best way to review this PR is to ignore whitespace/indent changes. Use this link - https://github.com/apache/spark/pull/20650/files?w=1**

## What changes were proposed in this pull request?

The stream-stream join tests add data to multiple sources and expect it all to show up in the next batch. But there's a race condition; the new batch might trigger when only one of the AddData actions has been reached.

A prior attempt to solve this issue, by jose-torres in #20646, synchronized on all memory sources together whenever consecutive `AddData` actions were found. However, this carries the risk of deadlock as well as unintended modification of stress tests (see the above PR for a detailed explanation). Instead, this PR does the following.

- A new action called `StreamProgressBlockedActions` that allows multiple actions to be executed while the streaming query is blocked from making progress. This allows data to be added to multiple sources that are made visible simultaneously in the next batch.

- An alias of `StreamProgressBlockedActions` called `MultiAddData` is explicitly used in the `Streaming*JoinSuites` to add data to two memory sources simultaneously.

This should avoid unintentional modification of the stress tests (or any other test for that matter) while making sure that the flaky tests are deterministic.
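
To make the idea concrete, here is a toy sketch of blocking progress while several actions run, so that the next batch sees all of their effects at once. It is not the test-harness code from this PR; `ToyQuery`, `withProgressBlocked`, `addLeft`, `addRight`, and `runBatch` are hypothetical names, and a single `ReentrantLock` is assumed to stand in for whatever the real harness uses.

```scala
import java.util.concurrent.locks.ReentrantLock
import scala.collection.mutable.ArrayBuffer

object BlockedProgressSketch {
  // Hypothetical toy query with two in-memory sources.
  final class ToyQuery {
    private val progressLock = new ReentrantLock()
    private val left = ArrayBuffer.empty[Int]
    private val right = ArrayBuffer.empty[Int]

    private def locked[T](body: => T): T = {
      progressLock.lock()
      try body finally progressLock.unlock()
    }

    def addLeft(v: Int): Unit = locked { left += v; () }
    def addRight(v: Int): Unit = locked { right += v; () }

    // Run several actions while no batch can start. The lock is reentrant,
    // so the add methods can be called from inside the blocked section.
    def withProgressBlocked(actions: (() => Unit)*): Unit =
      locked { actions.foreach(_.apply()) }

    // A batch takes the same lock, so it observes either none or all of
    // the additions made inside one blocked section.
    def runBatch(): (List[Int], List[Int]) = locked {
      val batch = (left.toList, right.toList)
      left.clear()
      right.clear()
      batch
    }
  }
}
```

In this toy, `q.withProgressBlocked(() => q.addLeft(1), () => q.addRight(2))` guarantees that a concurrent `runBatch()` cannot run between the two additions.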

## How was this patch tested?

Modified test cases in `Streaming*JoinSuites` where there are consecutive `AddData` actions.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20650 from tdas/SPARK-23408.

## What changes were proposed in this pull request?

For `CreateTable` with `Append` mode, we should check in `PreprocessTableCreation` whether `storage.locationUri` is the same as that of the existing table.

In the current code, a `storage.locationUri` that differs from the existing table's only produces a generic exception:

`org.apache.spark.sql.AnalysisException: Table or view not found:`

which can be improved.

## How was this patch tested?

Unit test

Author: Wang Gengliang <gengliang.wang@databricks.com>

Closes #20660 from gengliangwang/locationUri.

## What changes were proposed in this pull request?

DataSourceV2 initially allowed user-supplied schemas when a source doesn't implement `ReadSupportWithSchema`, as long as the schema was identical to the source's schema. This is confusing behavior because changes to an underlying table can cause a previously working job to fail with an exception that user-supplied schemas are not allowed.

This reverts commit adcb25a0624, which was added to #20387 so that it could be removed in a separate JIRA issue and PR.

## How was this patch tested?

Existing tests.

Author: Ryan Blue <blue@apache.org>

Closes #20603 from rdblue/SPARK-23418-revert-adcb25a0624.

## What changes were proposed in this pull request?

This PR always adds `codegenStageId` in a comment on the generated class. This is a replication of #20419 for post-Spark 2.3.

Closes #20419

```

/* 001 */ public Object generate(Object[] references) {

/* 002 */ return new GeneratedIteratorForCodegenStage1(references);

/* 003 */ }

/* 004 */

/* 005 */ // codegenStageId=1

/* 006 */ final class GeneratedIteratorForCodegenStage1 extends org.apache.spark.sql.execution.BufferedRowIterator {

/* 007 */ private Object[] references;

...

```

## How was this patch tested?

Existing tests

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #20612 from kiszk/SPARK-23424.

## What changes were proposed in this pull request?

In a kerberized cluster, when Spark reads a file path (e.g. `people.json`), it logs a misleading warning while looking up `people.json/_spark_metadata`. The root cause is a difference between `LocalFileSystem` and `DistributedFileSystem`: `LocalFileSystem.exists()` returns `false`, but `DistributedFileSystem.exists()` raises `org.apache.hadoop.security.AccessControlException`.

```scala

scala> spark.version

res0: String = 2.4.0-SNAPSHOT

scala> spark.read.json("file:///usr/hdp/current/spark-client/examples/src/main/resources/people.json").show

+----+-------+

| age| name|

+----+-------+

|null|Michael|

| 30| Andy|

| 19| Justin|

+----+-------+

scala> spark.read.json("hdfs:///tmp/people.json")

18/02/15 05:00:48 WARN streaming.FileStreamSink: Error while looking for metadata directory.

18/02/15 05:00:48 WARN streaming.FileStreamSink: Error while looking for metadata directory.

```

After this PR,

```scala

scala> spark.read.json("hdfs:///tmp/people.json").show

+----+-------+

| age| name|

+----+-------+

|null|Michael|

| 30| Andy|

| 19| Justin|

+----+-------+

```

## How was this patch tested?

Manual.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20616 from dongjoon-hyun/SPARK-23434.

## What changes were proposed in this pull request?

SPARK-23203: DataSourceV2 should use immutable catalyst trees instead of wrapping a mutable DataSourceV2Reader. This commit updates DataSourceV2Relation and consolidates much of the DataSourceV2 API requirements for the read path in it. Instead of wrapping a reader that changes, the relation lazily produces a reader from its configuration.

This commit also updates the predicate and projection push-down. Instead of the implementation from SPARK-22197, this reuses the rule matching from the Hive and DataSource read paths (using `PhysicalOperation`) and copies most of the implementation of `SparkPlanner.pruneFilterProject`, with updates for DataSourceV2. By reusing the implementation from other read paths, this should have fewer regressions from other read paths and is less code to maintain.

The new push-down rules also support the following edge cases:

* The output of DataSourceV2Relation should be what is returned by the reader, in case the reader can only partially satisfy the requested schema projection

* The requested projection passed to the DataSourceV2Reader should include filter columns

* The push-down rule may be run more than once if filters are not pushed through projections

## How was this patch tested?

Existing push-down and read tests.

Author: Ryan Blue <blue@apache.org>

Closes #20387 from rdblue/SPARK-22386-push-down-immutable-trees.

## What changes were proposed in this pull request?

Before the patch, Spark could infer as Date a partition value which cannot be cast to Date (this can happen when there are extra characters after a valid date, like `2018-02-15AAA`).

When this happens and the input format has metadata that defines the schema of the table, `null` is returned as the value of the partition column, because the `cast` operator used in `PartitioningAwareFileIndex.inferPartitioning` is unable to convert the value.

The PR checks during partition inference that values can actually be cast to Date or Timestamp before inferring that data type for them.
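
The check can be pictured with a small stand-alone sketch. It uses strict `java.time.LocalDate.parse` as a stand-in for Spark's own cast, which is an assumption made for this example; `PartitionTypeSketch`, `InferredType`, and `infer` are hypothetical names.

```scala
import java.time.LocalDate
import scala.util.Try

object PartitionTypeSketch {
  // Hypothetical result types; not Spark's DataType classes.
  sealed trait InferredType
  case object DateType extends InferredType
  case object StringType extends InferredType

  // Infer DateType only when the whole raw value parses as an ISO date.
  // Values with trailing characters fall back to StringType instead of
  // later turning into null.
  def infer(raw: String): InferredType =
    if (Try(LocalDate.parse(raw)).isSuccess) DateType else StringType
}
```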

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20621 from mgaido91/SPARK-23436.

## What changes were proposed in this pull request?

`ParquetFileFormat` leaks opened files in some cases. This PR prevents that by registering task completion listeners first, before initialization.

- [spark-branch-2.3-test-sbt-hadoop-2.7](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-branch-2.3-test-sbt-hadoop-2.7/205/testReport/org.apache.spark.sql/FileBasedDataSourceSuite/_It_is_not_a_test_it_is_a_sbt_testing_SuiteSelector_/)

- [spark-master-test-sbt-hadoop-2.6](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-sbt-hadoop-2.6/4228/testReport/junit/org.apache.spark.sql.execution.datasources.parquet/ParquetQuerySuite/_It_is_not_a_test_it_is_a_sbt_testing_SuiteSelector_/)

```

Caused by: sbt.ForkMain$ForkError: java.lang.Throwable: null

at org.apache.spark.DebugFilesystem$.addOpenStream(DebugFilesystem.scala:36)

at org.apache.spark.DebugFilesystem.open(DebugFilesystem.scala:70)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:769)

at org.apache.parquet.hadoop.ParquetFileReader.<init>(ParquetFileReader.java:538)

at org.apache.spark.sql.execution.datasources.parquet.SpecificParquetRecordReaderBase.initialize(SpecificParquetRecordReaderBase.java:149)

at org.apache.spark.sql.execution.datasources.parquet.VectorizedParquetRecordReader.initialize(VectorizedParquetRecordReader.java:133)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anonfun$buildReaderWithPartitionValues$1.apply(ParquetFileFormat.scala:400)

at

```

## How was this patch tested?

Manual. The following test case generates the same leakage.

```scala
test("SPARK-23457 Register task completion listeners first in ParquetFileFormat") {
  withSQLConf(SQLConf.PARQUET_VECTORIZED_READER_BATCH_SIZE.key -> s"${Int.MaxValue}") {
    withTempDir { dir =>
      val basePath = dir.getCanonicalPath
      Seq(0).toDF("a").write.format("parquet").save(new Path(basePath, "first").toString)
      Seq(1).toDF("a").write.format("parquet").save(new Path(basePath, "second").toString)
      val df = spark.read.parquet(
        new Path(basePath, "first").toString,
        new Path(basePath, "second").toString)
      val e = intercept[SparkException] {
        df.collect()
      }
      assert(e.getCause.isInstanceOf[OutOfMemoryError])
    }
  }
}
```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20619 from dongjoon-hyun/SPARK-23390.

## What changes were proposed in this pull request?

This PR updates Apache ORC dependencies to 1.4.3 released on February 9th. Apache ORC 1.4.2 release removes unnecessary dependencies and 1.4.3 has 5 more patches (https://s.apache.org/Fll8).

In particular, ORC-285, reproduced below, is fixed in 1.4.3.

```scala

scala> val df = Seq(Array.empty[Float]).toDF()

scala> df.write.format("orc").save("/tmp/floatarray")

scala> spark.read.orc("/tmp/floatarray")

res1: org.apache.spark.sql.DataFrame = [value: array<float>]

scala> spark.read.orc("/tmp/floatarray").show()

18/02/12 22:09:10 ERROR Executor: Exception in task 0.0 in stage 1.0 (TID 1)

java.io.IOException: Error reading file: file:/tmp/floatarray/part-00000-9c0b461b-4df1-4c23-aac1-3e4f349ac7d6-c000.snappy.orc

at org.apache.orc.impl.RecordReaderImpl.nextBatch(RecordReaderImpl.java:1191)

at org.apache.orc.mapreduce.OrcMapreduceRecordReader.ensureBatch(OrcMapreduceRecordReader.java:78)

...

Caused by: java.io.EOFException: Read past EOF for compressed stream Stream for column 2 kind DATA position: 0 length: 0 range: 0 offset: 0 limit: 0

```

## How was this patch tested?

Pass the Jenkins test.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20511 from dongjoon-hyun/SPARK-23340.

## What changes were proposed in this pull request?

Migrating KafkaSource (with data source v1) to KafkaMicroBatchReader (with data source v2).

Performance comparison:

In a unit test with in-process Kafka broker, I tested the read throughput of V1 and V2 using 20M records in a single partition. They were comparable.

## How was this patch tested?

Existing tests, a few of which were modified to be stronger than before.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20554 from tdas/SPARK-23362.

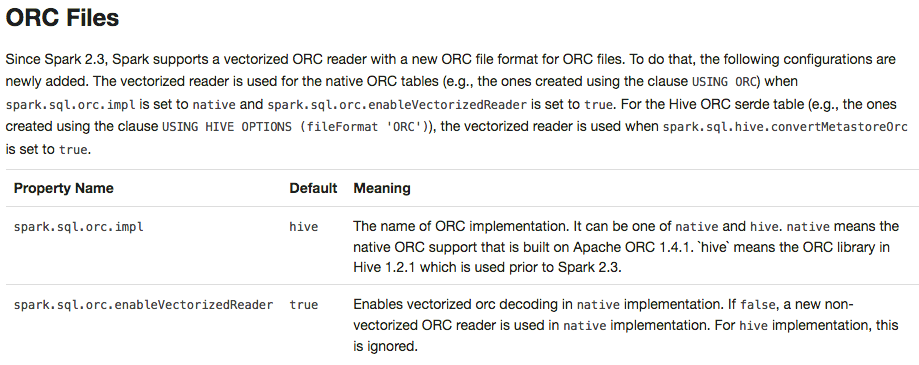

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes the default ORC implementation to `hive`, as in Spark 2.2.X.

Users can still enable `native` ORC. ORC PPD is also restored to `false`, as in Spark 2.2.X.

## How was this patch tested?

Pass all test cases.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE.

## What changes were proposed in this pull request?

Streaming execution has a list of exceptions that mean interruption, and handles them specially. `WriteToDataSourceV2Exec` should also respect this list and not wrap them with `SparkException`.
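
A minimal sketch of the pattern: rethrow interruption exceptions unchanged and wrap everything else. The set of exception types in `isInterruption` is an assumption for illustration rather than the list streaming execution actually uses, and `JobFailed` is a hypothetical stand-in for `SparkException`.

```scala
import java.io.InterruptedIOException
import java.nio.channels.ClosedByInterruptException

object InterruptAwareWrapSketch {
  // Hypothetical wrapper type standing in for SparkException.
  final class JobFailed(cause: Throwable)
    extends RuntimeException("Writing job aborted.", cause)

  // Assumed list of exception types that signal interruption.
  def isInterruption(e: Throwable): Boolean = e match {
    case _: InterruptedException | _: InterruptedIOException |
         _: ClosedByInterruptException => true
    case _ => false
  }

  // Run the write; interruption exceptions propagate as-is so the caller
  // can recognize them, while other failures are wrapped.
  def runWrite(body: => Unit): Unit =
    try body
    catch {
      case e: Throwable if isInterruption(e) => throw e
      case e: Exception => throw new JobFailed(e)
    }
}
```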

## How was this patch tested?

existing test.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20605 from cloud-fan/write.

## What changes were proposed in this pull request?

Solved two bugs to enable stream-stream self joins.

### Incorrect analysis due to missing MultiInstanceRelation trait

Streaming leaf nodes did not extend MultiInstanceRelation, which is necessary for the catalyst analyzer to convert the self-join logical plan DAG into a tree (by creating new instances of the leaf relations). This was causing the error `Failure when resolving conflicting references in Join:` (see JIRA for details).

### Incorrect attribute rewrite when splicing batch plans in MicroBatchExecution

When splicing the source's batch plan into the streaming plan (by replacing the `StreamingExecutionRelation`), we were rewriting the attribute references in the streaming plan with the new attribute references from the batch plan. This incorrectly handled the scenario where multiple `StreamingExecutionRelation`s point to the same source, and therefore eventually point to the same batch plan returned by the source. Here is an example query and its corresponding plan transformations.

```
val df = input.toDF
val join =
  df.select('value % 5 as "key", 'value).join(
    df.select('value % 5 as "key", 'value), "key")
```

Streaming logical plan before splicing the batch plan

```
Project [key#6, value#1, value#12]
+- Join Inner, (key#6 = key#9)
   :- Project [(value#1 % 5) AS key#6, value#1]
   :  +- StreamingExecutionRelation Memory[#1], value#1
   +- Project [(value#12 % 5) AS key#9, value#12]
      +- StreamingExecutionRelation Memory[#1], value#12  // two different leaves pointing to same source
```

Batch logical plan after splicing the batch plan and before rewriting

```
Project [key#6, value#1, value#12]
+- Join Inner, (key#6 = key#9)
   :- Project [(value#1 % 5) AS key#6, value#1]
   :  +- LocalRelation [value#66]  // replaces StreamingExecutionRelation Memory[#1], value#1
   +- Project [(value#12 % 5) AS key#9, value#12]
      +- LocalRelation [value#66]  // replaces StreamingExecutionRelation Memory[#1], value#12
```

Batch logical plan after rewriting the attributes. Specifically, for the spliced plan, the new output attribute (value#66) replaces the earlier output attributes (value#12 and value#1, one for each StreamingExecutionRelation).

```
Project [key#6, value#66, value#66]  // both value#1 and value#12 replaced by value#66
+- Join Inner, (key#6 = key#9)
   :- Project [(value#66 % 5) AS key#6, value#66]
   :  +- LocalRelation [value#66]
   +- Project [(value#66 % 5) AS key#9, value#66]
      +- LocalRelation [value#66]
```

This causes the optimizer to eliminate value#66 from one side of the join.

```
Project [key#6, value#66, value#66]
+- Join Inner, (key#6 = key#9)
   :- Project [(value#66 % 5) AS key#6, value#66]
   :  +- LocalRelation [value#66]
   +- Project [(value#66 % 5) AS key#9]  // this does not generate value, incorrect join results
      +- LocalRelation [value#66]
```

**Solution**: Instead of rewriting attributes, use a Project to introduce aliases between the output attribute references and the new reference generated by the spliced plans. The analyzer and optimizer will take care of the rest.

```
Project [key#6, value#1, value#12]
+- Join Inner, (key#6 = key#9)
   :- Project [(value#1 % 5) AS key#6, value#1]
   :  +- Project [value#66 AS value#1]  // solution: project with aliases
   :     +- LocalRelation [value#66]
   +- Project [(value#12 % 5) AS key#9, value#12]
      +- Project [value#66 AS value#12]  // solution: project with aliases
         +- LocalRelation [value#66]
```

## How was this patch tested?

New unit test

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20598 from tdas/SPARK-23406.

## What changes were proposed in this pull request?

This PR aims to resolve an open file leakage issue reported at [SPARK-23390](https://issues.apache.org/jira/browse/SPARK-23390) by moving the listener registration position. Currently, the sequence is like the following.

1. Create `batchReader`

2. `batchReader.initialize` opens an ORC file.

3. `batchReader.initBatch` may take a long time to allocate memory in some environments and cause errors.

4. `Option(TaskContext.get()).foreach(_.addTaskCompletionListener(_ => iter.close()))`

This PR moves 4 before 2 and 3. To sum up, the new sequence is 1 -> 4 -> 2 -> 3.
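
The effect of the reordering can be shown with a toy model. Everything here (`ToyTaskContext`, `ToyReader`, `openReader`, the `registerFirst` flag) is hypothetical and only mimics the shape of the sequence above; it does not use Spark's `TaskContext` or the ORC reader.

```scala
import scala.collection.mutable.ArrayBuffer

object ListenerFirstSketch {
  // Hypothetical stand-in for a task context that runs cleanup callbacks.
  final class ToyTaskContext {
    private val listeners = ArrayBuffer.empty[() => Unit]
    def addTaskCompletionListener(f: () => Unit): Unit = { listeners += f; () }
    def markTaskCompleted(): Unit = listeners.foreach(_.apply())
  }

  // Hypothetical reader: initialization opens a file and may then fail.
  final class ToyReader(failOnInit: Boolean) {
    var isOpen = false
    def initialize(): Unit = {
      isOpen = true // the file is opened first
      if (failOnInit) throw new IllegalStateException("allocation failed")
    }
    def close(): Unit = { isOpen = false }
  }

  // Returns the reader so the caller can inspect whether it was closed.
  def openReader(ctx: ToyTaskContext, failOnInit: Boolean, registerFirst: Boolean): ToyReader = {
    val reader = new ToyReader(failOnInit)
    // New order: register the cleanup before any step that can fail.
    if (registerFirst) ctx.addTaskCompletionListener(() => reader.close())
    try {
      reader.initialize()
      // Old order: the cleanup is registered only if initialization succeeded.
      if (!registerFirst) ctx.addTaskCompletionListener(() => reader.close())
    } catch {
      case _: IllegalStateException => () // the task fails; cleanup is left to listeners
    }
    reader
  }
}
```

With `registerFirst = false`, a failure in `initialize()` leaves the toy reader open after `markTaskCompleted()`; with `registerFirst = true`, the listener closes it.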

## How was this patch tested?

Manual. The following test case intentionally triggers an OOM to cause a leaked filesystem connection in the current code base. With this patch, the leak does not occur.

```scala
// This should be tested manually because it raises OOM intentionally
// in order to cause `Leaked filesystem connection`.
test("SPARK-23399 Register a task completion listener first for OrcColumnarBatchReader") {
  withSQLConf(SQLConf.ORC_VECTORIZED_READER_BATCH_SIZE.key -> s"${Int.MaxValue}") {
    withTempDir { dir =>
      val basePath = dir.getCanonicalPath
      Seq(0).toDF("a").write.format("orc").save(new Path(basePath, "first").toString)
      Seq(1).toDF("a").write.format("orc").save(new Path(basePath, "second").toString)
      val df = spark.read.orc(
        new Path(basePath, "first").toString,
        new Path(basePath, "second").toString)
      val e = intercept[SparkException] {
        df.collect()
      }
      assert(e.getCause.isInstanceOf[OutOfMemoryError])
    }
  }
}
```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20590 from dongjoon-hyun/SPARK-23399.

## What changes were proposed in this pull request?

Added a flag `ignoreNullability` to `DataType.equalsStructurally`.

The previous semantics correspond to `ignoreNullability=false`.

When `ignoreNullability=true`, `equalsStructurally` ignores the nullability of contained types (map key types, value types, array element types, structure field types).

`In.checkInputTypes` calls `equalsStructurally` to check whether the children types match. They should match regardless of nullability (which is just a hint), so it is now called with `ignoreNullability=true`.
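
A self-contained sketch of what such a flag does, using a miniature type model rather than Spark's `DataType` hierarchy. All names (`DType`, `ArrayT`, `MapT`, `StructT`, `FieldT`) are hypothetical, and ignoring field names in structs is an assumption of this toy.

```scala
object StructuralEqualitySketch {
  // Hypothetical miniature type model; not Spark's DataType classes.
  sealed trait DType
  case object IntT extends DType
  case object StringT extends DType
  final case class ArrayT(element: DType, containsNull: Boolean) extends DType
  final case class MapT(key: DType, value: DType, valueContainsNull: Boolean) extends DType
  final case class FieldT(name: String, dataType: DType, nullable: Boolean)
  final case class StructT(fields: Seq[FieldT]) extends DType

  // Compare shapes recursively. Nullability of contained types is compared
  // only when ignoreNullability is false.
  def equalsStructurally(a: DType, b: DType, ignoreNullability: Boolean = false): Boolean =
    (a, b) match {
      case (ArrayT(e1, n1), ArrayT(e2, n2)) =>
        (ignoreNullability || n1 == n2) && equalsStructurally(e1, e2, ignoreNullability)
      case (MapT(k1, v1, n1), MapT(k2, v2, n2)) =>
        (ignoreNullability || n1 == n2) &&
          equalsStructurally(k1, k2, ignoreNullability) &&
          equalsStructurally(v1, v2, ignoreNullability)
      case (StructT(f1), StructT(f2)) =>
        f1.length == f2.length && f1.zip(f2).forall { case (x, y) =>
          (ignoreNullability || x.nullable == y.nullable) &&
            equalsStructurally(x.dataType, y.dataType, ignoreNullability)
        }
      case _ => a == b
    }
}
```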

## How was this patch tested?

New test in SubquerySuite

Author: Bogdan Raducanu <bogdan@databricks.com>

Closes #20548 from bogdanrdc/SPARK-23316.

## What changes were proposed in this pull request?

DataSourceV2 batch writes should use the output commit coordinator if it is required by the data source. This adds a new method, `DataWriterFactory#useCommitCoordinator`, that determines whether the coordinator will be used. If the write factory returns true, `WriteToDataSourceV2` will use the coordinator for batch writes.

## How was this patch tested?

This relies on existing write tests, which now use the commit coordinator.

Author: Ryan Blue <blue@apache.org>

Closes #20490 from rdblue/SPARK-23323-add-commit-coordinator.

When `hive.default.fileformat` is set to another file format, creating a textfile table causes a serde error.

We should use `org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe` as the default serde for both textfile and sequencefile.

```

set hive.default.fileformat=orc;

create table tbl( i string ) stored as textfile;

desc formatted tbl;

Serde Library org.apache.hadoop.hive.ql.io.orc.OrcSerde

InputFormat org.apache.hadoop.mapred.TextInputFormat

OutputFormat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

```

Author: sychen <sychen@ctrip.com>

Closes #20406 from cxzl25/default_serde.

## What changes were proposed in this pull request?

This is a follow-up PR to #19231, which modified the behavior to remove metadata from the JDBC table schema.

This PR adds a test to check that the schema doesn't have metadata.

## How was this patch tested?

Added a test and existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #20585 from ueshin/issues/SPARK-22002/fup1.

## What changes were proposed in this pull request?

Re-add support for parquet binary DecimalType in VectorizedColumnReader

## How was this patch tested?

Existing test suite

Author: James Thompson <jamesthomp@users.noreply.github.com>

Closes #20580 from jamesthomp/jt/add-back-binary-decimal.

## What changes were proposed in this pull request?

In `getBlockData`, `blockId.reduceId` is an `Int`; when it is 2^28 or greater, `blockId.reduceId * 8` overflows.

In `decompress0`, `len` and `unitSize` are both `Int`, so `len * unitSize` may overflow.
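
The overflow is easy to reproduce in isolation: 2^28 * 8 = 2^31, which is one more than `Int.MaxValue`. The sketch below contrasts `Int` multiplication with widening one operand to `Long` first; `OffsetOverflowSketch` and its method names are made up for this example, and widening to `Long` is shown as one common remedy rather than as the exact change in this patch.

```scala
object OffsetOverflowSketch {
  // Int arithmetic: the product wraps around once it exceeds Int.MaxValue.
  def offsetAsInt(reduceId: Int): Int = reduceId * 8

  // Widening one operand to Long before multiplying keeps the full product.
  def offsetAsLong(reduceId: Int): Long = reduceId * 8L
}
```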

## How was this patch tested?

N/A

Author: liuxian <liu.xian3@zte.com.cn>

Closes #20581 from 10110346/overflow2.

## What changes were proposed in this pull request?

This is a regression in Spark 2.3.

In Spark 2.2, we had fragile UI support for SQL data writing commands. We only tracked the input query plan of `FileFormatWriter` and displayed its metrics. This is not ideal because we don't know what triggered the write (it can be table insertion, CTAS, etc.), but it's still useful to see the metrics of the input query.

In Spark 2.3, we introduced a new mechanism, `DataWritingCommand`, to fix the UI issue entirely. Now these writing commands have real children, and we don't need to hack into `FileFormatWriter` for the UI. This also helps with `explain`: it can now show the physical plan of the input query, while in 2.2 the physical writing plan is simply `ExecutedCommandExec` with no child.

However, there is a regression in CTAS. CTAS commands don't extend `DataWritingCommand`, and we don't have the UI hack in `FileFormatWriter` anymore, so the UI for CTAS is just an empty node. See https://issues.apache.org/jira/browse/SPARK-22977 for more information about this UI issue.

To fix it, we should apply the `DataWritingCommand` mechanism to CTAS commands.

TODO: In the future, we should refactor this part and create some physical-layer code pieces for data writing, and reuse them in different writing commands. We should have different logical nodes for different operators, even if some of them share the same logic, e.g. CTAS, CREATE TABLE, INSERT TABLE. Internally we can share the same physical logic.

## How was this patch tested?

manually tested.

For data source table

<img width="644" alt="1" src="https://user-images.githubusercontent.com/3182036/35874155-bdffab28-0ba6-11e8-94a8-e32e106ba069.png">

For hive table

<img width="666" alt="2" src="https://user-images.githubusercontent.com/3182036/35874161-c437e2a8-0ba6-11e8-98ed-7930f01432c5.png">

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20521 from cloud-fan/UI.

## What changes were proposed in this pull request?

This test only fails with sbt on Hadoop 2.7. I can't reproduce it locally, but here is my speculation from looking at the code:

1. `FileSystem.delete` doesn't delete the directory entirely; somehow we can still open the file as a 0-length empty file (just speculation).

2. ORC intentionally allows empty files, and the reader fails during reading without closing the file stream.

This PR improves the test to make sure all files are deleted and can't be opened.

## How was this patch tested?

N/A

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20584 from cloud-fan/flaky-test.

## What changes were proposed in this pull request?

This is a long-standing bug in `UnsafeKVExternalSorter` and was reported in the dev list multiple times.

When creating `UnsafeKVExternalSorter` with `BytesToBytesMap`, we need to create an `UnsafeInMemorySorter` to sort the data in `BytesToBytesMap`. The data format of the sorter and the map is the same, so no data movement is required. However, both the sorter and the map need a pointer array for some bookkeeping work.

There is an optimization in `UnsafeKVExternalSorter`: reuse the pointer array between the sorter and the map, to avoid an extra memory allocation. This sounds like a reasonable optimization: the length of the `BytesToBytesMap` pointer array is at least 4 times the number of keys (to avoid hash collisions, the hash table size should be at least 2 times the number of keys, and each key occupies 2 slots). `UnsafeInMemorySorter` needs the pointer array size to be 4 times the number of entries, so it seems safe to reuse the pointer array.

However, the number of keys in the map doesn't equal the number of entries in the map, because `BytesToBytesMap` supports duplicated keys. This breaks the assumption behind the above optimization: we may run out of space when inserting data into the sorter and hit this error:

```

java.lang.IllegalStateException: There is no space for new record

at org.apache.spark.util.collection.unsafe.sort.UnsafeInMemorySorter.insertRecord(UnsafeInMemorySorter.java:239)

at org.apache.spark.sql.execution.UnsafeKVExternalSorter.<init>(UnsafeKVExternalSorter.java:149)

...

```

This PR fixes this bug by creating a new pointer array if the existing one is not big enough.
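
The shape of the fix can be sketched as a size check before reuse. This is a simplified illustration, not the code in `UnsafeKVExternalSorter`: `PointerArraySketch`, `arrayForSorter`, and the `slotsPerEntry` parameter are assumptions introduced here, and a plain `Array[Long]` stands in for the real memory-managed array.

```scala
object PointerArraySketch {
  // Reuse the map's array only when it can hold every entry (not just every
  // distinct key); otherwise allocate a fresh, large enough array.
  // slotsPerEntry is an assumed parameter for how many slots each entry needs.
  def arrayForSorter(existing: Array[Long], numEntries: Int, slotsPerEntry: Int): Array[Long] = {
    val required = numEntries.toLong * slotsPerEntry
    require(required <= Int.MaxValue, "too many entries for a single array")
    if (existing.length >= required) existing else new Array[Long](required.toInt)
  }
}
```

With duplicated keys, `numEntries` can exceed the number of keys the existing array was sized for, which is the case where the second branch is taken.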

## How was this patch tested?

a new test

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20561 from cloud-fan/bug.

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/20435.

While reorganizing the packages for streaming data source v2, the top-level stream read/write support interfaces should not be in the reader/writer package, but in the `sources.v2` package, consistent with `ReadSupport`, `WriteSupport`, etc.

## How was this patch tested?

N/A

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20509 from cloud-fan/followup.

## What changes were proposed in this pull request?

For inserting/appending data to an existing table, Spark should adjust the data types of the input query according to the table schema, or fail fast if it's uncastable.

There are several ways to insert/append data: SQL API, `DataFrameWriter.insertInto`, `DataFrameWriter.saveAsTable`. The first 2 ways create `InsertIntoTable` plan, and the last way creates `CreateTable` plan. However, we only adjust input query data types for `InsertIntoTable`, and users may hit weird errors when appending data using `saveAsTable`. See the JIRA for the error case.

This PR fixes this bug by adjusting data types for `CreateTable` too.

## How was this patch tested?

new test.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20527 from cloud-fan/saveAsTable.

## What changes were proposed in this pull request?

This PR migrates the MemoryStream to DataSourceV2 APIs.

One additional change is in the keys reported in `StreamingQueryProgress.durationMs`: "getOffset" and "getBatch" are replaced with "setOffsetRange" and "getEndOffset", as tracking these makes more sense. Unit tests are changed accordingly.

## How was this patch tested?

Existing unit tests, plus a few updated unit tests.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Author: Burak Yavuz <brkyvz@gmail.com>

Closes #20445 from tdas/SPARK-23092.

## What changes were proposed in this pull request?

When `DebugFilesystem` closes an opened stream and an exception occurs, we still need to remove the open stream record from `DebugFilesystem`. Otherwise, it reports a leaked filesystem connection.
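
The usual way to get this behavior is a `try`/`finally` around the underlying close. The sketch below is a stand-alone toy, not the `DebugFilesystem` code; `TrackedStreamSketch`, `openStreams`, and `TrackedStream` are hypothetical names.

```scala
import scala.collection.mutable

object TrackedStreamSketch {
  // Registry of streams that are currently considered open.
  val openStreams: mutable.Set[AnyRef] = mutable.Set.empty

  // Hypothetical wrapper around a stream whose close may throw.
  final class TrackedStream(underlyingClose: () => Unit) {
    openStreams.synchronized { openStreams += this }

    // Remove the record in `finally`, so a failing close does not leave
    // a stale entry that would later be reported as a leak.
    def close(): Unit =
      try underlyingClose()
      finally openStreams.synchronized { openStreams -= this }
  }
}
```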

## How was this patch tested?

Existing tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20524 from viirya/SPARK-23345.

## What changes were proposed in this pull request?

Replace `registerTempTable` by `createOrReplaceTempView`.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20523 from gatorsmile/updateExamples.

## What changes were proposed in this pull request?

Update the descriptions and tests of three external APIs or functions: `createFunction`, `length`, and `repartitionByRange`.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20495 from gatorsmile/updateFunc.

## What changes were proposed in this pull request?

`DataSourceV2Relation` keeps a `fullOutput` and resolves the real output on demand by column name lookup. i.e.

```
lazy val output: Seq[Attribute] = reader.readSchema().map(_.name).map { name =>
  fullOutput.find(_.name == name).get
}
```

This will be broken after we canonicalize the plan, because all attribute names become "None", see https://github.com/apache/spark/blob/v2.3.0-rc1/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Canonicalize.scala#L42

To fix this, `DataSourceV2Relation` should just keep `output`, and update the `output` when doing column pruning.
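
A toy model of why the name lookup breaks and why keeping the pruned `output` avoids it. `Attr`, `canonicalize`, `resolveByName`, and `prune` are hypothetical; the toy canonicalization only rewrites names to "None" and, as a simplifying assumption, leaves expression ids untouched.

```scala
object OutputResolutionSketch {
  // Hypothetical attribute: a display name plus an expression id.
  final case class Attr(name: String, exprId: Long)

  // Toy canonicalization: every attribute name becomes "None".
  def canonicalize(attrs: Seq[Attr]): Seq[Attr] = attrs.map(_.copy(name = "None"))

  // Name-based resolution against the full output; finds nothing once the
  // names have been canonicalized.
  def resolveByName(fullOutput: Seq[Attr], names: Seq[String]): Seq[Option[Attr]] =
    names.map(n => fullOutput.find(_.name == n))

  // Alternative: keep the pruned output itself, so nothing has to be looked
  // up by name after canonicalization.
  def prune(output: Seq[Attr], keep: Set[Long]): Seq[Attr] =
    output.filter(a => keep(a.exprId))
}
```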

## How was this patch tested?

a new test case

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20485 from cloud-fan/canonicalize.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/20483 tried to provide a way to turn off the new columnar cache reader, to restore the behavior in 2.2. However, even if we turn off that config, the behavior is still different from 2.2.

If the output data are rows, we still enable whole-stage codegen for the scan node, which differs from 2.2; we should fix that as well.

## How was this patch tested?

existing tests.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20513 from cloud-fan/cache.

## What changes were proposed in this pull request?

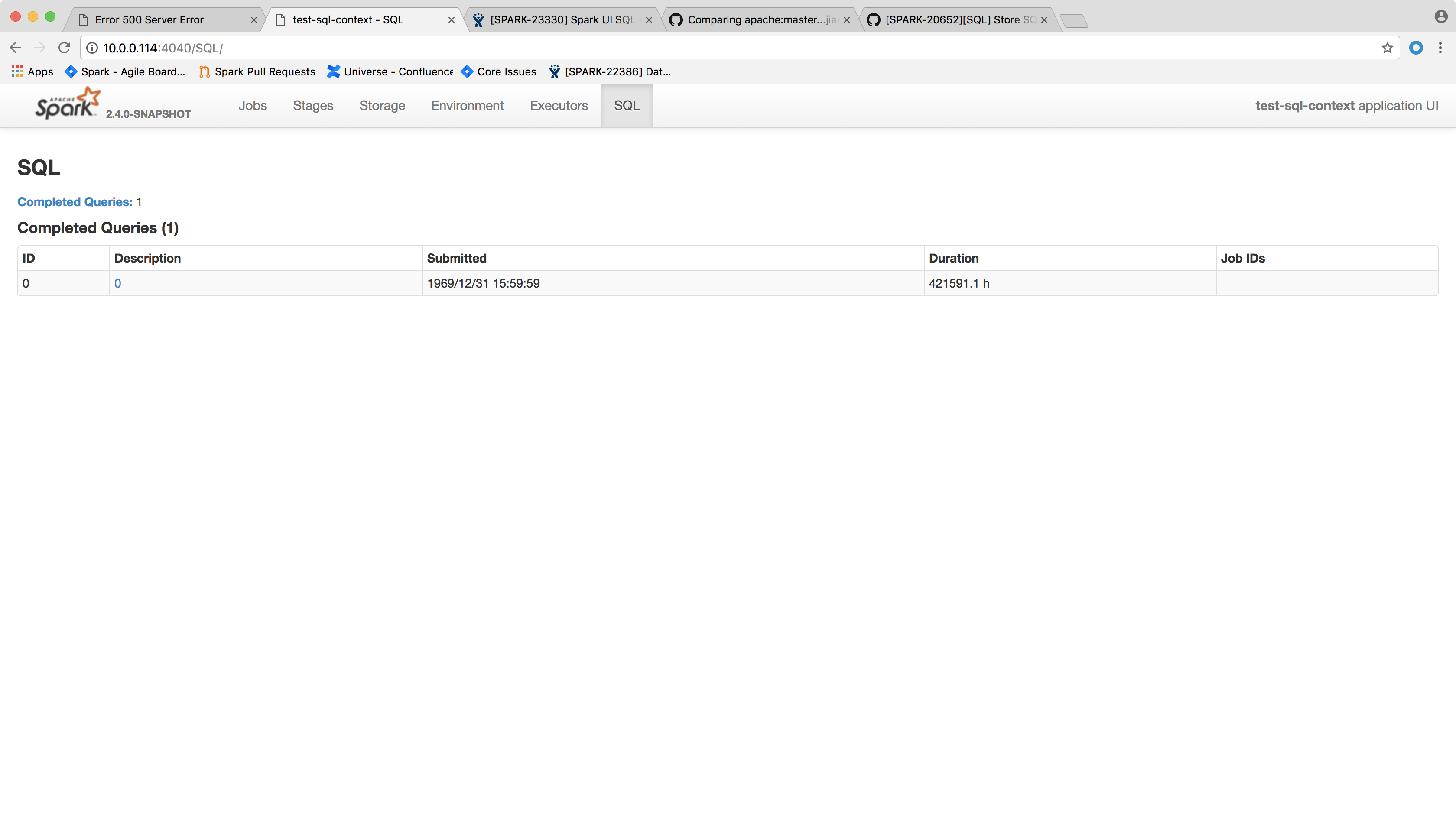

The Spark SQL executions page throws the following error and the page crashes:

```
HTTP ERROR 500
Problem accessing /SQL/. Reason:
Server Error
Caused by:
java.lang.NullPointerException
    at scala.collection.immutable.StringOps$.length$extension(StringOps.scala:47)
    at scala.collection.immutable.StringOps.length(StringOps.scala:47)
    at scala.collection.IndexedSeqOptimized$class.isEmpty(IndexedSeqOptimized.scala:27)
    at scala.collection.immutable.StringOps.isEmpty(StringOps.scala:29)
    at scala.collection.TraversableOnce$class.nonEmpty(TraversableOnce.scala:111)
    at scala.collection.immutable.StringOps.nonEmpty(StringOps.scala:29)
    at org.apache.spark.sql.execution.ui.ExecutionTable.descriptionCell(AllExecutionsPage.scala:182)
    at org.apache.spark.sql.execution.ui.ExecutionTable.row(AllExecutionsPage.scala:155)
    at org.apache.spark.sql.execution.ui.ExecutionTable$$anonfun$8.apply(AllExecutionsPage.scala:204)
    at org.apache.spark.sql.execution.ui.ExecutionTable$$anonfun$8.apply(AllExecutionsPage.scala:204)
    at org.apache.spark.ui.UIUtils$$anonfun$listingTable$2.apply(UIUtils.scala:339)
    at org.apache.spark.ui.UIUtils$$anonfun$listingTable$2.apply(UIUtils.scala:339)
    at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
    at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)
    at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
    at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)
    at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)
    at scala.collection.AbstractTraversable.map(Traversable.scala:104)
    at org.apache.spark.ui.UIUtils$.listingTable(UIUtils.scala:339)
    at org.apache.spark.sql.execution.ui.ExecutionTable.toNodeSeq(AllExecutionsPage.scala:203)
    at org.apache.spark.sql.execution.ui.AllExecutionsPage.render(AllExecutionsPage.scala:67)
    at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)
    at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)
    at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)
    at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:848)
    at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584)
    at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1180)
    at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:512)
    at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1112)
    at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)
    at org.eclipse.jetty.server.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:213)
    at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:134)
    at org.eclipse.jetty.server.Server.handle(Server.java:534)
    at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:320)
    at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:251)
    at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:283)
    at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:108)
    at org.eclipse.jetty.io.SelectChannelEndPoint$2.run(SelectChannelEndPoint.java:93)
    at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.executeProduceConsume(ExecuteProduceConsume.java:303)
    at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.produceConsume(ExecuteProduceConsume.java:148)
    at org.eclipse.jetty.util.thread.strategy.ExecuteProduceConsume.run(ExecuteProduceConsume.java:136)
    at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:671)
    at org.eclipse.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:589)
    at java.lang.Thread.run(Thread.java:748)
```

One possible reason for this failure is that the `SparkListenerSQLExecutionStart` event gets dropped before it is processed, so the execution description and details are never updated.

This was not an issue in 2.2 because it would ignore any job start event that arrives before the corresponding execution start event, which doesn't sound like a good decision.

We should handle the null values on the front page side, that is, provide a default value when `execution.details` or `execution.description` is null.

Another possible approach is not to spill the `LiveExecutionData` in `SQLAppStatusListener.update(exec: LiveExecutionData)` if `exec.details` is null. This is not ideal, because the execution would then not be visible at all if the `SparkListenerSQLExecutionStart` event is lost, since `AllExecutionsPage` only reads executions from the KVStore.
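A minimal sketch of the default-value approach, assuming a hypothetical, simplified `ExecutionData` record and a string-returning `descriptionCell` (the actual page classes and their rendering are not reproduced here). `Option(x)` maps `null` to `None`, so `getOrElse` can supply an empty string before any `nonEmpty` check runs.

```scala
// Hypothetical, simplified stand-in for the stored execution data.
final case class ExecutionData(id: Long, description: String, details: String)

// Option(x) turns null into None, so getOrElse supplies a safe default.
def descriptionOrDefault(e: ExecutionData): String = Option(e.description).getOrElse("")
def detailsOrDefault(e: ExecutionData): String = Option(e.details).getOrElse("")

// Illustrative rendering only: calling nonEmpty is safe because neither value can be null here.
def descriptionCell(e: ExecutionData): String = {
  val desc = descriptionOrDefault(e)
  val details = detailsOrDefault(e)
  if (details.nonEmpty) s"$desc +details" else desc
}
```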

## How was this patch tested?

After the change, the page shows the following:

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20502 from jiangxb1987/executionPage.

## What changes were proposed in this pull request?

Sort jobs/stages/tasks/queries by completion timestamp before cleaning them up, to make the behavior consistent with 2.2.
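As a rough sketch of the ordering, assuming a hypothetical `JobInfo` record and `toEvict` helper (not the actual listener code): when more entries exist than the retained limit allows, the entries that completed earliest are the ones selected for removal.

```scala
// Hypothetical record: only the fields needed to illustrate the ordering.
final case class JobInfo(id: Int, completionTime: Long)

// Returns the jobs to evict so that at most `retained` remain, oldest completion first.
def toEvict(jobs: Seq[JobInfo], retained: Int): Seq[JobInfo] = {
  val excess = math.max(0, jobs.size - retained)
  jobs.sortBy(_.completionTime).take(excess)
}
```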

## How was this patch tested?

- Jenkins.

- Manually ran the following code and checked the UI for jobs/stages/tasks/queries.

```
spark.ui.retainedJobs 10
spark.ui.retainedStages 10
spark.sql.ui.retainedExecutions 10
spark.ui.retainedTasks 10
```

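```
// Start one long-running job first so it is still active while the others finish.
new Thread() {
  override def run(): Unit = {
    spark.range(1, 2).foreach { i =>
      Thread.sleep(10000)
    }
  }
}.start()

Thread.sleep(5000)

// Launch 20 short jobs concurrently, more than the retained limit of 10.
for (_ <- 1 to 20) {
  new Thread() {
    override def run(): Unit = {
      spark.range(1, 2).foreach { i =>
      }
    }
  }.start()
}

Thread.sleep(15000)

spark.range(1, 2).foreach { i =>
}

// 100 partitions, so this job produces 100 tasks.
sc.makeRDD(1 to 100, 100).foreach { i =>
}
```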

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20481 from zsxwing/SPARK-23307.

## What changes were proposed in this pull request?

Fix the `decimalArithmeticOperations.sql` test.

## How was this patch tested?

N/A

Author: Yuming Wang <wgyumg@gmail.com>

Author: wangyum <wgyumg@gmail.com>

Author: Yuming Wang <yumwang@ebay.com>

Closes #20498 from wangyum/SPARK-22036.

## What changes were proposed in this pull request?

Like Parquet, all file-based data sources handle `spark.sql.files.ignoreMissingFiles` correctly. We should add test coverage for all data sources to ensure feature parity and to prevent accidental regressions in the future.

## How was this patch tested?

Pass Jenkins with a newly added test case.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20479 from dongjoon-hyun/SPARK-23305.

## What changes were proposed in this pull request?

In the documentation of `ContinuousReader.setOffset`, we say this method is used to specify the start offset. We also have a `ContinuousReader.getStartOffset` to get the value back. It makes more sense to rename `ContinuousReader.setOffset` to `setStartOffset`.

## How was this patch tested?

N/A

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20486 from cloud-fan/rename.

## What changes were proposed in this pull request?

This patch adds a small example to the documentation of the schema string accepted by the schema function. It isn't obvious how to use it, so an example is useful.

## How was this patch tested?

N/A - doc only.

Author: Reynold Xin <rxin@databricks.com>

Closes #20491 from rxin/schema-doc.

## What changes were proposed in this pull request?

https://issues.apache.org/jira/browse/SPARK-23309 reported a performance regression for cached tables in Spark 2.3. While the investigation is still ongoing, this PR adds a conf to turn off the vectorized cache reader, to unblock the 2.3 release.

## How was this patch tested?

a new test

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20483 from cloud-fan/cache.

## What changes were proposed in this pull request?

This PR fixes a mistake in the `PushDownOperatorsToDataSource` rule: the column pruning logic handles `Project` incorrectly.

## How was this patch tested?

A new test case for column pruning with arbitrary expressions. The existing tests are also improved to make sure `PushDownOperatorsToDataSource` really works.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20476 from cloud-fan/push-down.

## What changes were proposed in this pull request?

For some `ColumnVector` get APIs such as `getDecimal`, `getBinary`, `getStruct`, `getArray`, `getInterval` and `getUTF8String`, we should clearly document their behavior when accessing a null slot: they should return null. We can then remove the null checks from the places that use these APIs.

For the APIs that return primitive values, such as `getInt` and `getInts`, this PR also documents their behavior when accessing null slots: the returned values are undefined and can be anything.
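The two contracts can be contrasted with a small sketch. `IntColumn` and `StringColumn` below are hypothetical miniature classes, not Spark's `ColumnVector`; they only illustrate that callers of an object getter may rely on a null return, while callers of a primitive getter must consult `isNullAt` first.

```scala
// Hypothetical miniature columns, not Spark's ColumnVector.
final class IntColumn(values: Array[Int], nulls: Array[Boolean]) {
  def isNullAt(i: Int): Boolean = nulls(i)
  // Primitive getter: the value at a null slot is unspecified,
  // so callers must check isNullAt before trusting the result.
  def getInt(i: Int): Int = values(i)
}

final class StringColumn(values: Array[String]) {
  def isNullAt(i: Int): Boolean = values(i) == null
  // Object getter: returns null for a null slot, so no separate check is required.
  def getString(i: Int): String = values(i)
}
```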

## How was this patch tested?

Added tests into `ColumnarBatchSuite`.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20455 from viirya/SPARK-23272-followup.

## What changes were proposed in this pull request?

`DataSourceV2Relation` should extend `MultiInstanceRelation` so that self-joins are handled correctly.
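To illustrate why a self-join needs distinct instances, here is a simplified sketch. `Ids`, `Col` and `SimpleRelation` are hypothetical names, and the `newInstance` shown is an assumption about the general idea (fresh attribute IDs for a second copy of the same relation), not the actual Catalyst implementation.

```scala
import java.util.concurrent.atomic.AtomicLong

// Hypothetical ID generator standing in for unique expression IDs.
object Ids {
  private val counter = new AtomicLong(0L)
  def next(): Long = counter.incrementAndGet()
}

final case class Col(name: String, id: Long)

// A relation that can produce a copy of itself with fresh attribute IDs,
// so both sides of a self-join can be told apart.
final case class SimpleRelation(output: Seq[Col]) {
  def newInstance(): SimpleRelation =
    copy(output = output.map(c => c.copy(id = Ids.next())))
}
```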

## How was this patch tested?

a new test

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20466 from cloud-fan/dsv2-selfjoin.

## What changes were proposed in this pull request?

The current DataSourceWriter API makes it hard to implement `onTaskCommit(taskCommit: TaskCommitMessage)` in `FileCommitProtocol`.

In general, on receiving a commit message, the driver can start processing messages (e.g. persisting them into files) before all the messages are collected.

The proposal is to add a new API:

`add(WriterCommitMessage message)`: Handles a commit message on receiving from a successful data writer.

This should make the whole API of DataSourceWriter compatible with `FileCommitProtocol`, and more flexible.
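A minimal sketch of how a writer might use the proposed hook. The trait below is a hypothetical, simplified rendering of the interface described above (only `add` and a final `commit`), and `FileWritten` and `LoggingWriter` are invented for illustration.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical, simplified versions of the interfaces discussed above.
trait WriterCommitMessage
final case class FileWritten(path: String) extends WriterCommitMessage

trait DataSourceWriter {
  // Called on the driver each time a data writer commits successfully.
  def add(message: WriterCommitMessage): Unit
  // Called once after all messages have been collected.
  def commit(messages: Seq[WriterCommitMessage]): Unit
}

// Processes each message as it arrives instead of waiting for the final commit.
class LoggingWriter extends DataSourceWriter {
  val processed = ArrayBuffer.empty[String]
  var committed = false

  override def add(message: WriterCommitMessage): Unit = message match {
    case FileWritten(p) => processed += p
    case _ => ()
  }

  override def commit(messages: Seq[WriterCommitMessage]): Unit = {
    committed = true
  }
}
```

With this shape, per-message work such as persisting metadata happens incrementally, and `commit` only needs to finalize the job.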

There was another radical attempt in #20386. This one should be more reasonable.

## How was this patch tested?

Unit test

Author: Wang Gengliang <ltnwgl@gmail.com>

Closes #20454 from gengliangwang/write_api.