### What changes were proposed in this pull request?

Add documentation to the SQL programming guide on using PyArrow >= 0.15.0 with current versions of Spark.

### Why are the changes needed?

Arrow 0.15.0 introduced a change in format which requires an environment variable to maintain compatibility.
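As a minimal sketch of what setting that variable looks like from Python: the variable documented for this purpose is `ARROW_PRE_0_15_IPC_FORMAT`, set to `1`. The helper name `with_legacy_arrow_ipc` is made up for this illustration.

```python
import os

# Compatibility switch for PyArrow >= 0.15.0 with Spark versions that
# still expect the pre-0.15 Arrow IPC format.
LEGACY_IPC_VAR = "ARROW_PRE_0_15_IPC_FORMAT"

def with_legacy_arrow_ipc(env):
    """Return a copy of env with the legacy Arrow IPC format enabled.

    Hypothetical helper for illustration; it does not touch the real
    process environment.
    """
    updated = dict(env)
    updated[LEGACY_IPC_VAR] = "1"
    return updated

worker_env = with_legacy_arrow_ipc(os.environ)
print(worker_env[LEGACY_IPC_VAR])
```

The variable has to be visible to the Python workers on the executors as well as on the driver, so in practice it is usually exported from `conf/spark-env.sh` rather than set inside the application.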

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Ran pandas_udfs tests using PyArrow 0.15.0 with environment variable set.

Closes #26045 from BryanCutler/arrow-document-legacy-IPC-fix-SPARK-29367.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This adds an entry about PrometheusServlet to the documentation, following SPARK-29032

### Why are the changes needed?

The monitoring documentation lists all the available metrics sinks; PrometheusServlet should be added to the list for completeness.

Closes #26081 from LucaCanali/FollowupSpark29032.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

This is just a followup on https://github.com/apache/spark/pull/26062 -- see it for more detail.

I think we will eventually find more cases of this. It's hard to get them all at once as there are many different types of compile errors in earlier modules. I'm trying to address them in as big a chunk as possible.

Closes #26074 from srowen/SPARK-29401.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The commit 4e6d31f570 changed default behavior of `size()` for the `NULL` input. In this PR, I propose to update the SQL migration guide.

### Why are the changes needed?

To inform users about new behavior of the `size()` function for the `NULL` input.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #26066 from MaxGekk/size-null-migration-guide.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Invocations like `sc.parallelize(Array((1,2)))` cause a compile error in 2.13, like:

```
[ERROR] [Error] /Users/seanowen/Documents/spark_2.13/core/src/test/scala/org/apache/spark/ShuffleSuite.scala:47: overloaded method value apply with alternatives:
  (x: Unit,xs: Unit*)Array[Unit] <and>
  (x: Double,xs: Double*)Array[Double] <and>
  (x: Float,xs: Float*)Array[Float] <and>
  (x: Long,xs: Long*)Array[Long] <and>
  (x: Int,xs: Int*)Array[Int] <and>
  (x: Char,xs: Char*)Array[Char] <and>
  (x: Short,xs: Short*)Array[Short] <and>
  (x: Byte,xs: Byte*)Array[Byte] <and>
  (x: Boolean,xs: Boolean*)Array[Boolean]
 cannot be applied to ((Int, Int), (Int, Int), (Int, Int), (Int, Int))
```

Using a `Seq` instead appears to resolve it, and is effectively equivalent.

### Why are the changes needed?

To better cross-build for 2.13.

### Does this PR introduce any user-facing change?

None.

### How was this patch tested?

Existing tests.

Closes #26062 from srowen/SPARK-29401.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix a config name typo in the resource scheduling user docs. Users might be confused by the wrong config name, so we'd better fix this typo.

### How was this patch tested?

Document change, no need to run test.

Closes #26047 from jiangxb1987/doc.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

Document SHOW CREATE TABLE statement in SQL Reference

### Why are the changes needed?

To complete the SQL reference.

### Does this PR introduce any user-facing change?

Yes.

after the change:

### How was this patch tested?

Tested using jekyll build --serve

Closes #25885 from huaxingao/spark-28813.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes:

1. Use `is.data.frame` to check if it is a DataFrame.

2. Install Arrow and test Arrow optimization in the AppVeyor build. We're currently not testing this in CI.

### Why are the changes needed?

1. To support SparkR with Arrow 0.14

2. To check if there's any regression and if it works correctly.

### Does this PR introduce any user-facing change?

```r
df <- createDataFrame(mtcars)
collect(dapply(df, function(rdf) { data.frame(rdf$gear + 1) }, structType("gear double")))
```

**Before:**

```
Error in readBin(con, raw(), as.integer(dataLen), endian = "big") :
  invalid 'n' argument
```

**After:**

```
  gear
1    5
2    5
3    5
4    4
5    4
6    4
7    4
8    5
9    5
...
```

### How was this patch tested?

AppVeyor

Closes #25993 from HyukjinKwon/arrow-r-appveyor.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR exposes USE CATALOG/USE SQL commands as described in this [SPIP](https://docs.google.com/document/d/1jEcvomPiTc5GtB9F7d2RTVVpMY64Qy7INCA_rFEd9HQ/edit#)

It also exposes `currentCatalog` in `CatalogManager`.

Finally, it changes `SHOW NAMESPACES` and `SHOW TABLES` to use the current catalog if no catalog is specified (instead of default catalog).

### Why are the changes needed?

There is currently no mechanism to change the current catalog/namespace through SQL commands.

### Does this PR introduce any user-facing change?

Yes, you can perform the following:

```scala
// Sets the current catalog to 'testcat'
spark.sql("USE CATALOG testcat")

// Sets the current catalog to 'testcat' and current namespace to 'ns1.ns2'.
spark.sql("USE ns1.ns2 IN testcat")

// Now, the following will use 'testcat' as the current catalog and 'ns1.ns2' as the current namespace.
spark.sql("SHOW NAMESPACES")
```

### How was this patch tested?

Added new unit tests.

Closes #25771 from imback82/use_namespace.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Please refer to [the link on dev. mailing list](https://lists.apache.org/thread.html/cc6489a19316e7382661d305fabd8c21915e5faf6a928b4869ac2b4a%3Cdev.spark.apache.org%3E) for the rationale behind this patch.

This patch adds functionality to detect possible correctness issues caused by multiple stateful operations in a single streaming query, and logs a warning message to inform end users.

This patch also documents the caveats of using multiple stateful operations in a single query, and provides one known alternative.

## How was this patch tested?

Added new UTs in UnsupportedOperationsSuite to test various combinations of stateful operators in a streaming query.

Closes #24890 from HeartSaVioR/SPARK-28074.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Updated the SQL migration guide regarding the recently supported special date and timestamp values, see https://github.com/apache/spark/pull/25716 and https://github.com/apache/spark/pull/25708.

Closes #25834

### Why are the changes needed?

To let users know about new feature in Spark 3.0.

### Does this PR introduce any user-facing change?

No

Closes #25948 from MaxGekk/special-values-migration-guide.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Changed 'Phive-thriftserver' to ' -Phive-thriftserver'.

### Why are the changes needed?

Typo

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually tested.

Closes #25937 from TomokoKomiyama/fix-build-doc.

Authored-by: Tomoko Komiyama <btkomiyamatm@oss.nttdata.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Copy any "spark.hive.foo=bar" spark properties into hadoop conf as "hive.foo=bar"
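As a rough illustration of the mapping described above, the following sketch models it with plain Python dictionaries. The function name `copy_spark_hive_properties` and the dictionary representation of the Spark and Hadoop configurations are assumptions made for this example; it is not Spark's implementation.

```python
SPARK_HIVE_PREFIX = "spark.hive."

def copy_spark_hive_properties(spark_props, hadoop_conf):
    """Copy every 'spark.hive.foo' entry into a Hadoop conf as 'hive.foo'.

    Hypothetical helper: both configurations are modelled as dicts.
    """
    result = dict(hadoop_conf)
    for key, value in spark_props.items():
        if key.startswith(SPARK_HIVE_PREFIX):
            # Strip only the leading 'spark.' so the key keeps its 'hive.' prefix
            result[key[len("spark."):]] = value
    return result

spark_props = {"spark.hive.foo": "bar", "spark.app.name": "demo"}
print(copy_spark_hive_properties(spark_props, {}))  # {'hive.foo': 'bar'}
```

Properties that do not start with `spark.hive.` are left out of the result, so unrelated Spark settings do not leak into the Hadoop configuration.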

### Why are the changes needed?

Providing spark side config entry for hive configurations.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT.

Closes #25661 from WeichenXu123/add_hive_conf.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch introduces new options "startingOffsetsByTimestamp" and "endingOffsetsByTimestamp" to set a specific timestamp per topic (since we're unlikely to set a different value per partition), so that the source starts reading from offsets whose timestamp is equal to or greater than the given one, and stops reading at offsets whose timestamp is equal to or greater than the given one.

The new options are optional, of course, and take precedence over the existing offset options.

## How was this patch tested?

New unit tests added. Also manually tested basic functionality with Kafka 2.0.0 server.

Running query below

```
val df = spark.read.format("kafka")
  .option("kafka.bootstrap.servers", "localhost:9092")
  .option("subscribe", "spark_26848_test_v1,spark_26848_test_2_v1")
  .option("startingOffsetsByTimestamp", """{"spark_26848_test_v1": 1549669142193, "spark_26848_test_2_v1": 1549669240965}""")
  .option("endingOffsetsByTimestamp", """{"spark_26848_test_v1": 1549669265676, "spark_26848_test_2_v1": 1549699265676}""")
  .load().selectExpr("CAST(value AS STRING)")

df.show()
```

with the records below (each is a string whose numeric part is the timestamp at which the record was put) in

topic `spark_26848_test_v1`

```
hello1 1549669142193
world1 1549669142193
hellow1 1549669240965
world1 1549669240965
hello1 1549669265676
world1 1549669265676
```

topic `spark_26848_test_2_v1`

```
hello2 1549669142193
world2 1549669142193
hello2 1549669240965
world2 1549669240965
hello2 1549669265676
world2 1549669265676
```

the result of `df.show()` follows:

```
+--------------------+
|               value|
+--------------------+
|world1 1549669240965|
|world1 1549669142193|
|world2 1549669240965|
|hello2 1549669240965|
|hellow1 154966924...|
|hello2 1549669265676|
|hello1 1549669142193|
|world2 1549669265676|
+--------------------+
```

Note that endingOffsets (as well as endingOffsetsByTimestamp) are exclusive.
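The selection rule can be modelled in a few lines of Python, as a sketch rather than the actual Kafka or Spark code. It assumes a single partition per topic with timestamps that never decrease with the offset, and it assumes the end of the log is used when no record has a timestamp at or after the requested one. The function names are made up for this illustration.

```python
from bisect import bisect_left

def offset_for_timestamp(timestamps, target):
    """Return the first offset whose timestamp is >= target.

    `timestamps` lists record timestamps in offset order for one partition.
    If no record qualifies, the end of the log (len(timestamps)) is returned.
    """
    return bisect_left(timestamps, target)

def read_range(records, start_ts, end_ts):
    """Return records from the start offset (inclusive) to the end offset (exclusive)."""
    timestamps = [ts for _, ts in records]
    start = offset_for_timestamp(timestamps, start_ts)
    end = offset_for_timestamp(timestamps, end_ts)
    return records[start:end]

# Records of topic spark_26848_test_v1 as listed above
topic_1 = [("hello1", 1549669142193), ("world1", 1549669142193),
           ("hellow1", 1549669240965), ("world1", 1549669240965),
           ("hello1", 1549669265676), ("world1", 1549669265676)]

for value, ts in read_range(topic_1, 1549669142193, 1549669265676):
    print(value, ts)
```

With the timestamps used in the query, this selects the first four records of `spark_26848_test_v1`. The two records stamped 1549669265676 are excluded because the ending offset is exclusive, which matches the `df.show()` output above.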

Closes #23747 from HeartSaVioR/SPARK-26848.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR supports UPDATE in the parser and adds the corresponding logical plan. The SQL syntax is a standard UPDATE statement:

```
UPDATE tableName tableAlias SET colName=value [, colName=value]+ WHERE predicate?
```

### Why are the changes needed?

With this change, we can start to implement UPDATE in builtin sources and think about how to design the update API in DS v2.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New test cases added.

Closes #25626 from xianyinxin/SPARK-28892.

Authored-by: xy_xin <xianyin.xxy@alibaba-inc.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Credit to vanzin as he found and commented on this while reviewing #25670 - [comment](https://github.com/apache/spark/pull/25670#discussion_r325383512).

This patch proposes to specify UTF-8 explicitly when reading/writing the event log file.

### Why are the changes needed?

The event log file is read and written using the default character set of the JVM process, which can cause problems when reading event log files written on other machines. Spark's de facto standard character set is UTF-8, so it should be set explicitly.
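The same pitfall exists in any runtime that falls back to a platform default charset. The Python sketch below is an analogy, not the Spark code: the helper names and the event line are made up, and it shows both the explicit-charset fix and the corruption that results from decoding UTF-8 bytes with another charset.

```python
import os
import tempfile

def write_event(path, line):
    # Always name the charset instead of relying on the process default
    with open(path, "a", encoding="utf-8") as f:
        f.write(line + "\n")

def read_events(path):
    # Read with the same explicit charset that was used for writing
    with open(path, "r", encoding="utf-8") as f:
        return [line.rstrip("\n") for line in f]

path = os.path.join(tempfile.mkdtemp(), "eventlog")
write_event(path, '{"Event":"example","Name":"café"}')  # made-up event line
print(read_events(path))

# Decoding the same bytes with a different charset corrupts the text
raw = "café".encode("utf-8")
print(raw.decode("latin-1"))  # prints 'cafÃ©'
```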

### Does this PR introduce any user-facing change?

Yes, if end users have been running Spark process with different default charset than "UTF-8", especially their driver JVM processes. No otherwise.

### How was this patch tested?

Existing UTs, as ReplayListenerSuite contains "end-to-end" event logging/reading tests (both uncompressed/compressed).

Closes #25845 from HeartSaVioR/SPARK-29160.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds the Java 11 version to the documentation.

### Why are the changes needed?

Apache Spark 3.0.0 starts to support JDK11 officially.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually. Doc generation.

Closes #25875 from dongjoon-hyun/SPARK-29196.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Added document reference for the USE database SQL command

### Why are the changes needed?

For USE database command usage

### Does this PR introduce any user-facing change?

It adds the USE database SQL command reference information to the doc

### How was this patch tested?

Attached the test snap

Closes #25572 from shivusondur/jiraUSEDaBa1.

Lead-authored-by: shivusondur <shivusondur@gmail.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

This PR aims to increase the JVM CodeCacheSize from 0.5G to 1G.

### Why are the changes needed?

After upgrading to `Scala 2.12.10`, the following is observed during building.

```
2019-09-18T20:49:23.5030586Z OpenJDK 64-Bit Server VM warning: CodeCache is full. Compiler has been disabled.
2019-09-18T20:49:23.5032920Z OpenJDK 64-Bit Server VM warning: Try increasing the code cache size using -XX:ReservedCodeCacheSize=
2019-09-18T20:49:23.5034959Z CodeCache: size=524288Kb used=521399Kb max_used=521423Kb free=2888Kb
2019-09-18T20:49:23.5035472Z bounds [0x00007fa62c000000, 0x00007fa64c000000, 0x00007fa64c000000]
2019-09-18T20:49:23.5035781Z total_blobs=156549 nmethods=155863 adapters=592
2019-09-18T20:49:23.5036090Z compilation: disabled (not enough contiguous free space left)
```
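To relate the log to the sizes mentioned in this PR, the short sketch below parses the `CodeCache:` line and converts the figures. The function name `parse_code_cache` is made up for this illustration, and `Kb` is read as 1024 bytes.

```python
import re

LOG_LINE = "CodeCache: size=524288Kb used=521399Kb max_used=521423Kb free=2888Kb"

def parse_code_cache(line):
    """Extract the Kb figures from a CodeCache status line into a dict."""
    return {name: int(value) for name, value in re.findall(r"(\w+)=(\d+)Kb", line)}

stats = parse_code_cache(LOG_LINE)
size_gib = stats["size"] / (1024 * 1024)       # Kb -> GiB
used_fraction = stats["used"] / stats["size"]  # share of the cache in use
print(f"size = {size_gib} GiB, used = {used_fraction:.1%}")
```

The reported size of 524288 Kb is exactly the 0.5G limit, and more than 99% of it was in use when the compiler was disabled.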

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually check the Jenkins or GitHub Action build log (which should not have the above).

Closes #25836 from dongjoon-hyun/SPARK-CODE-CACHE-1G.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, there are new configurations for compatibility with ANSI SQL:

* `spark.sql.parser.ansi.enabled`

* `spark.sql.decimalOperations.nullOnOverflow`

* `spark.sql.failOnIntegralTypeOverflow`

This PR is to add new configuration `spark.sql.ansi.enabled` and remove the 3 options above. When the configuration is true, Spark tries to conform to the ANSI SQL specification. It will be disabled by default.

### Why are the changes needed?

Make it simple and straightforward.

### Does this PR introduce any user-facing change?

The new features for ANSI compatibility will be set via one configuration `spark.sql.ansi.enabled`.

### How was this patch tested?

Existing unit tests.

Closes #25693 from gengliangwang/ansiEnabled.

Lead-authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR upgrades Scala to **2.12.10**.

Release notes:

- Fix regression in large string interpolations with non-String typed splices

- Revert "Generate shallower ASTs in pattern translation"

- Fix regression in classpath when JARs have 'a.b' entries beside 'a/b'

- Faster compiler: 5–10% faster since 2.12.8

- Improved compatibility with JDK 11, 12, and 13

- Experimental support for build pipelining and outline type checking

More details:

https://github.com/scala/scala/releases/tag/v2.12.10

https://github.com/scala/scala/releases/tag/v2.12.9

## How was this patch tested?

Existing tests

Closes #25404 from wangyum/SPARK-28683.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This proposes to improve Spark instrumentation by adding a hook for user-defined metrics, extending Spark’s Dropwizard/Codahale metrics system.

The original motivation of this work was to add instrumentation for S3 filesystem access metrics by Spark job. Currently, [[ExecutorSource]] instruments HDFS and local filesystem metrics. Rather than extending the code there, we propose with this JIRA to add a metrics plugin system, which is more flexible and of general use.

Context: The Spark metrics system provides a large variety of metrics useful to monitor and troubleshoot Spark workloads. A typical workflow is to sink the metrics to a storage system and build dashboards on top of that.

Highlights:

- The metric plugin system makes it easy to implement instrumentation for S3 access by Spark jobs.

- The metrics plugin system allows for easy extensions of how Spark collects HDFS-related workload metrics. This is currently done using the Hadoop Filesystem GetAllStatistics method, which is deprecated in recent versions of Hadoop. Recent versions of Hadoop Filesystem recommend using method GetGlobalStorageStatistics, which also provides several additional metrics. GetGlobalStorageStatistics is not available in Hadoop 2.7 (had been introduced in Hadoop 2.8). Using a metric plugin for Spark would allow an easy way to “opt in” using such new API calls for those deploying suitable Hadoop versions.

- We also have the use case of adding Hadoop filesystem monitoring for a custom Hadoop compliant filesystem in use in our organization (EOS using the XRootD protocol). The metrics plugin infrastructure makes this easy to do. Others may have similar use cases.

- More generally, this method makes it straightforward to plug in Filesystem and other metrics to the Spark monitoring system. Future work on plugin implementation can address extending monitoring to measure usage of external resources (OS, filesystem, network, accelerator cards, etc), that maybe would not normally be considered general enough for inclusion in Apache Spark code, but that can be nevertheless useful for specialized use cases, tests or troubleshooting.

Implementation:

The proposed implementation extends and modifies the work on Executor Plugin of SPARK-24918. Additionally, this is related to recent work on extending Spark executor metrics, such as SPARK-25228.

As discussed during the review, the implementation of this feature modifies the Developer API for Executor Plugins, such that the new version is incompatible with the original version in Spark 2.4.

## How was this patch tested?

This modifies existing tests for ExecutorPluginSuite to adapt them to the API changes. In addition, the new functionality for registering pluginMetrics has been manually tested running Spark on YARN and K8S clusters, in particular for monitoring S3 and for extending HDFS instrumentation with the Hadoop Filesystem “GetGlobalStorageStatistics” metrics. Executor metric plugin example and code used for testing are available, for example at: https://github.com/cerndb/SparkExecutorPlugins

Closes #24901 from LucaCanali/executorMetricsPlugin.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

HDFS doesn't update the file size reported by the NM if you just keep writing to the file; this makes the SHS believe the file is inactive, and so it may delete it after the configured max age for log files.

This change uses hsync to keep the log file as up to date as possible when using HDFS. It also disables erasure coding by default for these logs, since hsync (& friends) does not work with EC.

Tested with a SHS configured to aggressively clean up logs; verified a spark-shell session kept updating the log, which was not deleted by the SHS.

Closes #25819 from vanzin/SPARK-29105.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Update the unit description in configurations, in order to maintain consistency across configurations.

### Why are the changes needed?

The description does not mention the suffix that can be specified when configuring this value.

For better user understanding.

### Does this PR introduce any user-facing change?

yes. Doc description

### How was this patch tested?

generated document and checked.

Closes #25689 from PavithraRamachandran/heapsize_config.

Authored-by: Pavithra Ramachandran <pavi.rams@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Document CREATE DATABASE statement in SQL Reference Guide.

### Why are the changes needed?

Currently Spark lacks documentation on the supported SQL constructs causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue.

### Does this PR introduce any user-facing change?

Yes.

### Before:

There was no documentation for this.

### After:

### How was this patch tested?

Manual review and tested using jekyll build --serve

Closes #25595 from sharangk/createDbDoc.

Lead-authored-by: sharangk <sharan.gk@gmail.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

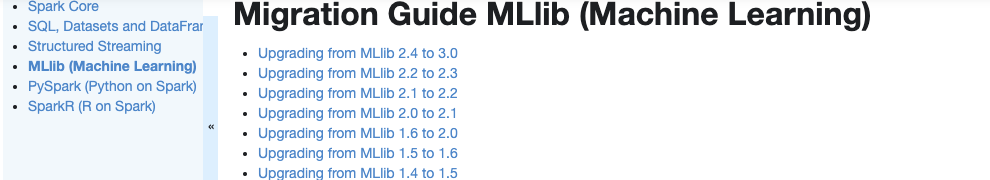

### What changes were proposed in this pull request?

Currently, there is no migration section for PySpark, SparkCore and Structured Streaming.

It is difficult for users to know what to do when they upgrade.

This PR proposes to create a "Migration Guide" tab in the Spark documentation.

This page will contain migration guides for Spark SQL, PySpark, SparkR, MLlib, Structured Streaming and Core. Basically it is a refactoring.

Some new information is added, on which I will leave inline comments for easier review.

1. **MLlib**

Merge [ml-guide.html#migration-guide](https://spark.apache.org/docs/latest/ml-guide.html#migration-guide) and [ml-migration-guides.html](https://spark.apache.org/docs/latest/ml-migration-guides.html)

```
'docs/ml-guide.md'
        ↓ Merge new/old migration guides
'docs/ml-migration-guide.md'
```

2. **PySpark**

Extract PySpark specific items from https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html

```
'docs/sql-migration-guide-upgrade.md'
        ↓ Extract PySpark specific items
'docs/pyspark-migration-guide.md'
```

3. **SparkR**

Move [sparkr.html#migration-guide](https://spark.apache.org/docs/latest/sparkr.html#migration-guide) into a separate file, and extract from [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html)

```
'docs/sparkr.md'                     'docs/sql-migration-guide-upgrade.md'
 Move migration guide section ↘     ↙ Extract SparkR specific items
                 docs/sparkr-migration-guide.md
```

4. **Core**

Newly created at `'docs/core-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

5. **Structured Streaming**

Newly created at `'docs/ss-migration-guide.md'`. I skimmed resolved JIRAs at 3.0.0 and found some items to note.

6. **SQL**

Merged [sql-migration-guide-upgrade.html](https://spark.apache.org/docs/latest/sql-migration-guide-upgrade.html) and [sql-migration-guide-hive-compatibility.html](https://spark.apache.org/docs/latest/sql-migration-guide-hive-compatibility.html)

```
'docs/sql-migration-guide-hive-compatibility.md'     'docs/sql-migration-guide-upgrade.md'
 Move Hive compatibility section ↘                   ↙ Left over after filtering PySpark and SparkR items
                                  'docs/sql-migration-guide.md'
```

### Why are the changes needed?

In order for users in production to effectively migrate to higher versions, and detect behaviour or breaking changes before upgrading and/or migrating.

### Does this PR introduce any user-facing change?

Yes, this changes Spark's documentation at https://spark.apache.org/docs/latest/index.html.

### How was this patch tested?

Manually built the doc. This can be verified as below:

```bash
cd docs
SKIP_API=1 jekyll build
open _site/index.html
```

Closes #25757 from HyukjinKwon/migration-doc.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This update adds support for Kafka Headers functionality in Structured Streaming.

## How was this patch tested?

With the following unit tests:

- KafkaRelationSuite: "default starting and ending offsets with headers" (new)

- KafkaSinkSuite: "batch - write to kafka" (updated)

Closes #22282 from dongjinleekr/feature/SPARK-23539.

Lead-authored-by: Lee Dongjin <dongjin@apache.org>

Co-authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Added documentation for the CREATE VIEW command.

### Why are the changes needed?

As a reference to syntax and examples of CREATE VIEW command.

### How was this patch tested?

Documentation update. Verified manually.

Closes #25543 from amanomer/spark-28795.

Lead-authored-by: aman_omer <amanomer1996@gmail.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Co-authored-by: Aman Omer <amanomer1996@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document DROP DATABASE statement in SQL Reference

### Why are the changes needed?

Currently Spark has no complete SQL guide, so it is better to document all the SQL commands. This JIRA is a sub-task of that effort.

### Does this PR introduce any user-facing change?

Yes. Before, there was no documentation about the DROP DATABASE syntax.

After Fix

### How was this patch tested?

Tested with jekyll build

Closes #25554 from sandeep-katta/dropDbDoc.

Authored-by: sandeep katta <sandeep.katta2007@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document REFRESH TABLE statement in the SQL Reference Guide.

### Why are the changes needed?

Currently there is no documentation in Spark SQL describing how to use this command; this PR addresses that.

### Does this PR introduce any user-facing change?

Yes.

#### Before:

There is no documentation for this.

#### After:

<img width="826" alt="Screen Shot 2019-09-12 at 11 39 21 AM" src="https://user-images.githubusercontent.com/7550280/64811385-01752600-d552-11e9-876d-91ebb005b851.png">

### How was this patch tested?

Using jekyll build --serve

Closes #25549 from kevinyu98/spark-28828-refreshTable.

Authored-by: Kevin Yu <qyu@us.ibm.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

JIRA: https://issues.apache.org/jira/browse/SPARK-29050

'a hdfs' change into 'an hdfs'

'an unique' change into 'a unique'

'an url' change into 'a url'

'a error' change into 'an error'

Closes #25756 from dengziming/feature_fix_typos.

Authored-by: dengziming <dengziming@growingio.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Document the resource scheduling feature - https://issues.apache.org/jira/browse/SPARK-24615

Add general docs, yarn, kubernetes, and standalone cluster specific ones.

### Why are the changes needed?

Help users understand the feature

### Does this PR introduce any user-facing change?

docs

### How was this patch tested?

N/A

Closes #25698 from tgravescs/SPARK-27492-gpu-sched-docs.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Add links to IBM Cloud Storage connector in cloud-integration.md

### Why are the changes needed?

This page mentions the connectors to cloud providers. Currently the connector to IBM cloud storage is not specified. This PR adds the necessary links for completeness.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

<img width="1234" alt="Screen Shot 2019-09-09 at 3 52 44 PM" src="https://user-images.githubusercontent.com/14225158/64571863-11a2c080-d31a-11e9-82e3-78c02675adb9.png">

**After:**

<img width="1234" alt="Screen Shot 2019-09-10 at 8 16 49 AM" src="https://user-images.githubusercontent.com/14225158/64626857-663e4e00-d3a3-11e9-8fa3-15ebf52ea832.png">

### How was this patch tested?

Tested using jekyll build --serve

Closes #25737 from dilipbiswal/ibm-cloud-storage.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Implement the SHOW DATABASES logical and physical plans for data source v2 tables.

### Why are the changes needed?

To support `SHOW DATABASES` SQL commands for v2 tables.

### Does this PR introduce any user-facing change?

`spark.sql("SHOW DATABASES")` will return namespaces if the default catalog is set:

```
+---------------+
|      namespace|
+---------------+
|            ns1|
|      ns1.ns1_1|
|ns1.ns1_1.ns1_2|
+---------------+
```

### How was this patch tested?

Added unit tests to `DataSourceV2SQLSuite`.

Closes #25601 from imback82/show_databases.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

At the moment there are 3 places where the communication protocol with the Kafka cluster has to be set when a delegation token is used:

* On delegation token

* On source

* On sink

Most of the time users are using the same protocol on all these places (within one Kafka cluster). It would be better to declare it in one place (delegation token side) and Kafka sources/sinks can take this config over.

In this PR I've modified the code so that Kafka sources/sinks take over the delegation token side `security.protocol` configuration when the token and the source/sink match in their `bootstrap.servers` configuration. This default can be overridden on each source/sink independently by using the `kafka.security.protocol` configuration.
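The precedence described here can be sketched as a small lookup. This is a simplified model, not the Spark implementation: the function name, the dictionary shapes and the exact string comparison on `bootstrap.servers` are all assumptions made for the illustration.

```python
def resolve_security_protocol(source_options, token_clusters):
    """Pick the security protocol for a Kafka source or sink.

    source_options: options set on the source/sink, e.g.
        {"kafka.bootstrap.servers": "...", "kafka.security.protocol": "..."}
    token_clusters: delegation token configs, each a dict with
        "bootstrap.servers" and "security.protocol" (hypothetical shape).
    """
    # An explicit per-source setting always wins
    explicit = source_options.get("kafka.security.protocol")
    if explicit is not None:
        return explicit
    # Otherwise inherit from the token config of the matching cluster
    servers = source_options.get("kafka.bootstrap.servers")
    for cluster in token_clusters:
        if cluster["bootstrap.servers"] == servers:
            return cluster["security.protocol"]
    # No matching token config: nothing is inherited
    return None

tokens = [{"bootstrap.servers": "broker1:9092", "security.protocol": "SASL_SSL"}]
inherited = resolve_security_protocol({"kafka.bootstrap.servers": "broker1:9092"}, tokens)
overridden = resolve_security_protocol(
    {"kafka.bootstrap.servers": "broker1:9092", "kafka.security.protocol": "SSL"}, tokens)
print(inherited, overridden)  # SASL_SSL SSL
```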

### Why are the changes needed?

The current default behavior serves only a minority of use cases and is inconvenient.

### Does this PR introduce any user-facing change?

Yes, with this change users need to provide fewer configuration parameters by default.

### How was this patch tested?

Existing + additional unit tests.

Closes #25631 from gaborgsomogyi/SPARK-28928.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Document CLEAR CACHE statement in SQL Reference

### Why are the changes needed?

To complete SQL Reference

### Does this PR introduce any user-facing change?

Yes

After change:

### How was this patch tested?

Tested using jekyll build --serve

Closes #25541 from huaxingao/spark-28831-n.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

- Remove SQLContext.createExternalTable and Catalog.createExternalTable, deprecated in favor of createTable since 2.2.0, plus tests of deprecated methods

- Remove HiveContext, deprecated in 2.0.0, in favor of `SparkSession.builder.enableHiveSupport`

- Remove deprecated KinesisUtils.createStream methods, plus tests of deprecated methods, deprecated in 2.2.0

- Remove deprecated MLlib (not Spark ML) linear method support, mostly utility constructors and 'train' methods, and associated docs. This includes methods in LinearRegression, LogisticRegression, Lasso, RidgeRegression. These have been deprecated since 2.0.0

- Remove deprecated Pyspark MLlib linear method support, including LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD

- Remove 'runs' argument in KMeans.train() method, which has been a no-op since 2.0.0

- Remove deprecated ChiSqSelector isSorted protected method

- Remove deprecated 'yarn-cluster' and 'yarn-client' master argument in favor of 'yarn' and deploy mode 'cluster', etc

Notes:

- I was not able to remove deprecated DataFrameReader.json(RDD) in favor of DataFrameReader.json(Dataset); the former was deprecated in 2.2.0, but, it is still needed to support Pyspark's .json() method, which can't use a Dataset.

- Looks like SQLContext.createExternalTable was not actually deprecated in Pyspark, but, almost certainly was meant to be? Catalog.createExternalTable was.

- I afterwards noted that the toDegrees, toRadians functions were almost removed fully in SPARK-25908, but Felix suggested keeping just the R version as they hadn't been technically deprecated. I'd like to revisit that. Do we really want the inconsistency? I'm not against reverting it again, but then that implies leaving SQLContext.createExternalTable just in Pyspark too, which seems weird.

- I *kept* LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD, RidgeRegressionWithSGD in Pyspark, though deprecated, as it is hard to remove them (still used by StreamingLogisticRegressionWithSGD?) and they are not fully removed in Scala. Maybe should not have been deprecated.

### Why are the changes needed?

Deprecated items are easiest to remove in a major release, so we should do so as much as possible for Spark 3. This does not target items deprecated 'recently' as of Spark 2.3, which is still 18 months old.

### Does this PR introduce any user-facing change?

Yes, in that deprecated items are removed from some public APIs.

### How was this patch tested?

Existing tests.

Closes#25684 from srowen/SPARK-28980.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

KinesisInputDStream currently does not provide a way to disable CloudWatch metrics push. Its default level is "DETAILED", which pushes 10s of metrics every 10 seconds. When dealing with multiple streaming jobs this adds up pretty quickly, leading to thousands of dollars in cost.

To address this problem, this PR adds interfaces for accessing KinesisClientLibConfiguration's `withMetrics` and `withMetricsEnabledDimensions` methods to KinesisInputDStream so that users can configure KCL's metrics levels and dimensions.

## How was this patch tested?

By running updated unit tests in KinesisInputDStreamBuilderSuite.

In addition, I ran a Streaming job with MetricsLevel.NONE and confirmed:

* there's no data point for the "Operation", "Operation, ShardId" and "WorkerIdentifier" dimensions on the AWS management console

* there's no DEBUG level message from Amazon KCL, such as "Successfully published xx datums."

Closes#24651 from sekikn/SPARK-27420.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This patch adds the description of common SQL metrics in web ui document.

### Why are the changes needed?

The current web UI document describes the query plan but does not describe the meaning of the SQL metrics. End users might not understand what the metrics mean.

### Does this PR introduce any user-facing change?

No. This is just documentation change.

### How was this patch tested?

Built the docs locally.

Closes#25658 from viirya/SPARK-28935.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document DESCRIBE DATABASE statement in SQL Reference

### Why are the changes needed?

To complete the SQL Reference

### Does this PR introduce any user-facing change?

Yes

#### Before

There is no documentation for this command in sql reference

#### After

### How was this patch tested?

Used jekyll build and serve to verify

Closes#25528 from kevinyu98/sql-ref-describe.

Lead-authored-by: Kevin Yu <qyu@us.ibm.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document UNCACHE TABLE statement in SQL Reference

### Why are the changes needed?

To complete SQL Reference

### Does this PR introduce any user-facing change?

Yes.

After change:

### How was this patch tested?

Tested using jekyll build --serve

Closes#25540 from huaxingao/spark-28830.

Lead-authored-by: Huaxin Gao <huaxing@us.ibm.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document SHOW TBLPROPERTIES statement in SQL Reference Guide.

### Why are the changes needed?

Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

There was no documentation for this.

**After.**

### How was this patch tested?

Tested using jekyll build --serve

Closes#25571 from dilipbiswal/ref-show-tblproperties.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This patch pools both Kafka consumers and fetched data. The overall benefits of the patch are as follows:

* Both pools support eviction of idle objects, which helps close invalid idle objects whose topic or partition is no longer assigned to any task.

* It also enables applying different policies to each pool, which helps optimize pooling per pool.

* We were concerned about multiple tasks pointing to the same topic partition as well as the same group id; existing code can't handle this, so excess seeks and fetches could happen. This patch properly handles the case.

* It also makes the code always safe to leverage the cache, hence no need to maintain the reuseCache parameter.

Moreover, pooling of Kafka consumers is implemented on top of Apache Commons Pool, which also gives a couple of benefits:

* We can get rid of synchronization of KafkaDataConsumer object while acquiring and returning InternalKafkaConsumer.

* We can extract the feature of object pool to outside of the class, so that the behaviors of the pool can be tested easily.

* We can get various statistics for the object pool, and also be able to enable JMX for the pool.

FetchedData instances are pooled by a custom pool implementation instead of Apache Commons Pool, because they are keyed first by CacheKey and second by a "desired offset" that keeps changing. I haven't found any general pool implementation that supports this.

This patch brings an additional dependency, Apache Commons Pool 2.6.0, into the `spark-sql-kafka-0-10` module.
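To make the idea of a keyed pool with idle eviction more concrete, here is a minimal Python sketch. It is not the implementation in this patch (which builds on Apache Commons Pool); the class `KeyedIdlePool`, its method names, and the injectable clock are all hypothetical and exist only for illustration.

```python
import time
from collections import defaultdict
from typing import Any, Callable, Dict, Hashable, List, Tuple


class KeyedIdlePool:
    """Toy keyed object pool that evicts objects idle for too long (hypothetical)."""

    def __init__(
        self,
        factory: Callable[[Hashable], Any],
        idle_timeout_s: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._factory = factory
        self._idle_timeout_s = idle_timeout_s
        self._clock = clock
        # key -> list of (object, time it was returned to the pool)
        self._idle: Dict[Hashable, List[Tuple[Any, float]]] = defaultdict(list)
        self.created = 0

    def borrow(self, key: Hashable) -> Any:
        # Reuse an idle object for this key if one exists, else create one.
        idle = self._idle[key]
        if idle:
            obj, _ = idle.pop()
            return obj
        self.created += 1
        return self._factory(key)

    def give_back(self, key: Hashable, obj: Any) -> None:
        self._idle[key].append((obj, self._clock()))

    def evict_idle(self) -> int:
        """Drop objects that have been idle for at least the timeout; return how many."""
        now = self._clock()
        evicted = 0
        for key in list(self._idle):
            keep = [(o, t) for (o, t) in self._idle[key] if now - t < self._idle_timeout_s]
            evicted += len(self._idle[key]) - len(keep)
            if keep:
                self._idle[key] = keep
            else:
                del self._idle[key]
        return evicted
```

A key such as `(group_id, topic, partition)` would play the role of the cache key; an eviction pass run periodically then closes out objects whose partitions are no longer assigned to any task.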

## How was this patch tested?

Existing unit tests as well as new tests for object pool.

I also ran an experiment to demonstrate concurrent access of consumers for the same topic partition.

* Made changes on both sides (master and patch) to log when a Kafka consumer is created or records are fetched from Kafka.

  * branches

    * master: https://github.com/HeartSaVioR/spark/tree/SPARK-25151-master-ref-debugging

    * patch: https://github.com/HeartSaVioR/spark/tree/SPARK-25151-debugging

* Test query (doing self-join)

  * https://gist.github.com/HeartSaVioR/d831974c3f25c02846f4b15b8d232cc2

* Ran the query from spark-shell, using `local[*]` to maximize the chance of concurrent access

* Collected the count of Kafka consumer creations via command: `grep "creating new Kafka consumer" logfile | wc -l`

* Collected the count of fetch requests on Kafka via command: `grep "fetching data from Kafka consumer" logfile | wc -l`

The topic and data distribution is as follows:

```

truck_speed_events_stream_spark_25151_v1:0:99440

truck_speed_events_stream_spark_25151_v1:1:99489

truck_speed_events_stream_spark_25151_v1:2:397759

truck_speed_events_stream_spark_25151_v1:3:198917

truck_speed_events_stream_spark_25151_v1:4:99484

truck_speed_events_stream_spark_25151_v1:5:497320

truck_speed_events_stream_spark_25151_v1:6:99430

truck_speed_events_stream_spark_25151_v1:7:397887

truck_speed_events_stream_spark_25151_v1:8:397813

truck_speed_events_stream_spark_25151_v1:9:0

```

The experiment used only the 4 smallest of these partitions (0, 1, 4, 6) so that the query finishes earlier.

The result of the experiment is below:

branch | create Kafka consumer | fetch request
-- | -- | --
master | 1986 | 2837
patch | 8 | 1706
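For a quick sense of scale, the relative reduction can be computed directly from the numbers in the table. The helper name `reduction_pct` is just for this sketch.

```python
def reduction_pct(before: int, after: int) -> float:
    """Percentage by which `after` is lower than `before`."""
    return 100.0 * (before - after) / before


# Figures taken from the result table (master vs. patch).
consumers = reduction_pct(1986, 8)
fetches = reduction_pct(2837, 1706)
print(f"Kafka consumer creations: {consumers:.1f}% fewer")
print(f"fetch requests:           {fetches:.1f}% fewer")
```

That works out to roughly 99.6% fewer consumer creations and roughly 39.9% fewer fetch requests in this particular experiment.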

Closes#22138 from HeartSaVioR/SPARK-25151.

Lead-authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Co-authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

If MEMORY_OFFHEAP_ENABLED is true, add MEMORY_OFFHEAP_SIZE to the resources requested for the executor to ensure the instance has enough memory to use.

This PR also adds a helper method `executorOffHeapMemorySizeAsMb` to `YarnSparkHadoopUtil`.
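The effect on the container request can be sketched as follows. This is a simplified Python model, not the Scala helper itself: it assumes the off-heap size is already given as a plain integer number of MiB, and the function names and the positive-size check are assumptions of the sketch.

```python
from typing import Mapping


def executor_off_heap_mb(conf: Mapping[str, str]) -> int:
    """Off-heap memory to add to the executor request, in MiB (hypothetical model)."""
    enabled = conf.get("spark.memory.offHeap.enabled", "false").lower() == "true"
    if not enabled:
        return 0
    # Assumption: the size is expressed as an integer number of MiB here.
    size_mb = int(conf.get("spark.memory.offHeap.size", "0"))
    if size_mb <= 0:
        raise ValueError("off-heap size must be positive when off-heap is enabled")
    return size_mb


def container_request_mb(executor_mb: int, overhead_mb: int, conf: Mapping[str, str]) -> int:
    """Total memory requested for one executor container."""
    return executor_mb + overhead_mb + executor_off_heap_mb(conf)


with_off_heap = container_request_mb(
    4096, 410, {"spark.memory.offHeap.enabled": "true", "spark.memory.offHeap.size": "1024"}
)
without_off_heap = container_request_mb(4096, 410, {})
```

With off-heap enabled the request grows by the configured size; with it disabled the request is unchanged.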

## How was this patch tested?

Added 3 new test cases for `YarnSparkHadoopUtil#executorOffHeapMemorySizeAsMb`.

Closes#25309 from LuciferYang/spark-28577.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Document DESCRIBE FUNCTION statement in SQL Reference Guide.

### Why are the changes needed?

Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

There was no documentation for this.

**After.**

<img width="1234" alt="Screen Shot 2019-09-02 at 11 14 09 PM" src="https://user-images.githubusercontent.com/14225158/64148193-85534380-cdd7-11e9-9c07-5956b5e8276e.png">

<img width="1234" alt="Screen Shot 2019-09-02 at 11 14 29 PM" src="https://user-images.githubusercontent.com/14225158/64148201-8a17f780-cdd7-11e9-93d8-10ad9932977c.png">

<img width="1234" alt="Screen Shot 2019-09-02 at 11 14 42 PM" src="https://user-images.githubusercontent.com/14225158/64148208-8dab7e80-cdd7-11e9-97c5-3a4ce12cac7a.png">

### How was this patch tested?

Tested using jekyll build --serve

Closes#25530 from dilipbiswal/ref-doc-desc-function.

Lead-authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document SHOW COLUMNS statement in SQL Reference Guide.

### Why are the changes needed?

Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

There was no documentation for this.

**After.**

<img width="1234" alt="Screen Shot 2019-09-02 at 11 07 48 PM" src="https://user-images.githubusercontent.com/14225158/64148033-0fe77300-cdd7-11e9-93ee-e5951c7ed33c.png">

<img width="1234" alt="Screen Shot 2019-09-02 at 11 08 08 PM" src="https://user-images.githubusercontent.com/14225158/64148039-137afa00-cdd7-11e9-8bec-634ea9d2594c.png">

<img width="1234" alt="Screen Shot 2019-09-02 at 11 11 45 PM" src="https://user-images.githubusercontent.com/14225158/64148046-17a71780-cdd7-11e9-91c3-95a9c97e7a77.png">

### How was this patch tested?

Tested using jekyll build --serve

Closes#25531 from dilipbiswal/ref-doc-show-columns.

Lead-authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document DESCRIBE QUERY statement in SQL Reference Guide.

### Why are the changes needed?

Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

There was no documentation for this.

**After.**

<img width="1234" alt="Screen Shot 2019-08-29 at 5 47 51 PM" src="https://user-images.githubusercontent.com/14225158/63985609-43e43080-ca85-11e9-8a1a-c9c15d988e24.png">

<img width="1234" alt="Screen Shot 2019-08-29 at 5 48 06 PM" src="https://user-images.githubusercontent.com/14225158/63985610-46468a80-ca85-11e9-882a-7163784f72c6.png">

<img width="1234" alt="Screen Shot 2019-08-29 at 5 48 18 PM" src="https://user-images.githubusercontent.com/14225158/63985617-49da1180-ca85-11e9-9e77-a6d6c7042a85.png">

### How was this patch tested?

Tested using jekyll build --serve

Closes#25529 from dilipbiswal/ref-doc-desc-query.

Lead-authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Document ALTER DATABASE statement in SQL Reference Guide.

### Why are the changes needed?

Currently Spark lacks documentation on the supported SQL constructs, causing confusion among users who sometimes have to look at the code to understand the usage. This is aimed at addressing this issue.

### Does this PR introduce any user-facing change?

Yes.

**Before:**

There was no documentation for this.

**After.**

<img width="1234" alt="Screen Shot 2019-08-28 at 1 51 13 PM" src="https://user-images.githubusercontent.com/14225158/63891854-fc817580-c99a-11e9-918e-6b305edf92e6.png">

<img width="1234" alt="Screen Shot 2019-08-28 at 1 51 27 PM" src="https://user-images.githubusercontent.com/14225158/63891869-0acf9180-c99b-11e9-91a4-04d870474a40.png">

### How was this patch tested?

Tested using jekyll build --serve

Closes#25523 from dilipbiswal/ref-doc-alterdb.

Lead-authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Hive 3.1.2 has been released. This PR upgrades the Hive Metastore Client to 3.1.2 for Hive 3.1.

Hive 3.1.2 release notes:

https://issues.apache.org/jira/secure/ReleaseNote.jspa?version=12344397&styleName=Html&projectId=12310843

### Why are the changes needed?

This is an improvement to support the newly released 3.1.2. Otherwise, Spark throws `UnsupportedOperationException` if the user sets `spark.sql.hive.metastore.version=3.1.2`:

```scala

Exception in thread "main" java.lang.UnsupportedOperationException: Unsupported Hive Metastore version (3.1.2). Please set spark.sql.hive.metastore.version with a valid version.

at org.apache.spark.sql.hive.client.IsolatedClientLoader$.hiveVersion(IsolatedClientLoader.scala:109)

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UT

Closes#25604 from wangyum/SPARK-28890.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1. Add a basic doc for the executor page

2. Also, move the version number in the document of the SQL page outside

### Why are the changes needed?

Spark web UIs are being used to monitor the status and resource consumption of your Spark applications and clusters. However, we do not have the corresponding document. It is hard for end users to use and understand them.

### Does this PR introduce any user-facing change?

yes, the doc is changed

### How was this patch tested?

locally build

<img width="468" alt="image" src="https://user-images.githubusercontent.com/7322292/63758724-d2727980-c8ee-11e9-8380-cbae51453629.png">

Closes#25596 from zhengruifeng/doc_ui_exe.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Make `spark.sql.crossJoin.enabled` default value true

### Why are the changes needed?

For implicit cross joins, we can set up a watchdog to cancel the query if it runs for a long time.

When "spark.sql.crossJoin.enabled" is false, `CheckCartesianProducts` runs in the logical plan stage, so it may raise mismatched errors that confuse end users:

* it's done in the logical phase, so we may fail queries that can be executed via broadcast join, which is very fast.

* if we move the check to the physical phase, then a query may succeed at the beginning and begin to fail when the table size gets larger (other people insert data into the table). This can be quite confusing.

* the CROSS JOIN syntax doesn't work well if join reorder happens.

* some non-equi-joins generate a plan using a cartesian product, but `CheckCartesianProducts` does not detect it and raise an error.

So, to address this in a simpler way, we can turn off this cross-join error by default.

For reference, here are some cases that raise mismatched errors:

Given:

```

spark.range(2).createOrReplaceTempView("sm1") // can be broadcast

spark.range(50000000).createOrReplaceTempView("bg1") // cannot be broadcast

spark.range(60000000).createOrReplaceTempView("bg2") // cannot be broadcast

```

1) Some joins could be converted to a broadcast nested loop join, but CheckCartesianProducts raises an error, e.g.

```

select sm1.id, bg1.id from bg1 join sm1 where sm1.id < bg1.id

```

2) Some joins run as CartesianJoin but CheckCartesianProducts DOES NOT raise an error, e.g.

```

select bg1.id, bg2.id from bg1 join bg2 where bg1.id < bg2.id

```

### Does this PR introduce any user-facing change?

### How was this patch tested?

Closes#25520 from WeichenXu123/SPARK-28621.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Update the code style in the 'Policy for handling multiple watermarks' section of structured-streaming-programming-guide.md.

### Why are the changes needed?

Making it look friendly to user.

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

cd docs

SKIP_API=1 jekyll build

Closes#25580 from cyq89051127/master.

Authored-by: cyq89051127 <chaiyq@asiainfo.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

add an example for storage tab

## How was this patch tested?

locally building

Closes#25445 from zhengruifeng/doc_ui_storage.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This change reverts the logic which was introduced as a part of SPARK-24149 and a subsequent followup PR.

With existing logic:

- Spark fails to launch with HDFS federation enabled while trying to get a path to a logical nameservice.

- It gets tokens for unrelated namespaces if they are used in HDFS Federation

- Automatic namespace discovery is supported only if these are on the same cluster.

Rationale for change:

- For accessing data from related namespaces, viewfs should handle getting tokens for spark

- For accessing data from unrelated namespaces, the user explicitly specifies them using existing configs, as these could be on the same or a different cluster.

Revert the changes.

## How was this patch tested?

Ran a few manual tests and unit tests.

Closes#24785 from dhruve/bug/SPARK-27937.

Authored-by: Dhruve Ashar <dhruveashar@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This patch modifies the explanation of the guarantee for ForeachWriter, as it doesn't guarantee the same output for `(partitionId, epochId)`. Refer to the description of [SPARK-28650](https://issues.apache.org/jira/browse/SPARK-28650) for more details.

Spark itself still guarantees the same output for the same epochId (batch) if the preconditions are met: 1) the source always provides the same input records for the same offset request, and 2) the query is idempotent overall (non-deterministic calculations like now() or random() can break this).

Treating broken preconditions as an exceptional case (the preconditions were implicitly required even before), we can still describe the guarantee with `epochId`, though it will be harder to leverage the guarantee: 1) ForeachWriter should implement a feature to track whether all the partitions are written successfully for a given `epochId`; 2) there is little chance to leverage the fact, as the chance for Spark to successfully write all partitions and then fail to checkpoint the batch is small.
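As an illustration of the tracking a writer would need in order to rely on the `epochId` guarantee, here is a minimal Python sketch. The class `EpochWriteTracker` and its methods are hypothetical and are not part of the ForeachWriter API; a real writer would have to keep this state somewhere durable and shared across tasks.

```python
from collections import defaultdict
from typing import DefaultDict, Set


class EpochWriteTracker:
    """Records which partitions were fully written for each epoch (hypothetical)."""

    def __init__(self, num_partitions: int) -> None:
        self._num_partitions = num_partitions
        self._written: DefaultDict[int, Set[int]] = defaultdict(set)

    def mark_written(self, epoch_id: int, partition_id: int) -> None:
        # Called once a partition's output for this epoch is durably written.
        self._written[epoch_id].add(partition_id)

    def is_epoch_complete(self, epoch_id: int) -> bool:
        # Only a fully written epoch can safely be skipped on replay.
        return len(self._written.get(epoch_id, ())) == self._num_partitions


tracker = EpochWriteTracker(num_partitions=3)
tracker.mark_written(epoch_id=7, partition_id=0)
tracker.mark_written(epoch_id=7, partition_id=1)
partial = tracker.is_epoch_complete(7)
tracker.mark_written(epoch_id=7, partition_id=2)
complete = tracker.is_epoch_complete(7)
```

Only once every partition of an epoch has been recorded can a replay of that epoch be treated as a duplicate.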

Credit to zsxwing on discovering the broken guarantee.

## How was this patch tested?

This is just a documentation change, both on javadoc and guide doc.

Closes#25407 from HeartSaVioR/SPARK-28650.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

This is an initial PR that creates the table of contents for the SQL reference guide. The left sidebar will display additional menu items corresponding to supported SQL constructs. Once this PR is merged, we will fill in the content incrementally. Additionally, this PR contains a minor change to make the left sidebar scrollable. Currently it is not possible to scroll in the left-hand side window.

## How was this patch tested?

Used jekyll build and serve to verify.

Closes#25459 from dilipbiswal/ref-doc.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR aims to fix CTAS failures that occur after a ThriftServer session is closed.

- sql-distributed-sql-engine.md

It seems the simplest way to fix [[SPARK-21067]](https://issues.apache.org/jira/browse/SPARK-21067).

For example :

If we use HDFS, we can set the following property in hive-site.xml.

`<property>`

` <name>fs.hdfs.impl.disable.cache</name>`

` <value>true</value>`

`</property>`

## How was this patch tested?

Manual.

Closes#25364 from Deegue/fix_add_doc_file_system.

Authored-by: Yizhong Zhang <zyzzxycj@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

After Apache Spark 3.0.0 supports JDK11 officially, people will try JDK11 on old Spark releases (especially 2.4.4/2.3.4) in the same way because our document says `Java 8+`. We had better avoid that misleading situation.

This PR aims to remove `+` from `Java 8+` in the documentation (master/2.4/2.3), especially for the 2.4.4 and 2.3.4 releases (cc kiszk).

On master branch, we will add JDK11 after [SPARK-24417.](https://issues.apache.org/jira/browse/SPARK-24417)

## How was this patch tested?

This is a documentation only change.

<img width="923" alt="java8" src="https://user-images.githubusercontent.com/9700541/63116589-e1504800-bf4e-11e9-8904-b160ec7a42c0.png">

Closes#25466 from dongjoon-hyun/SPARK-DOC-JDK8.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

This patch adds the binding classes to enable Spark to switch dataframe output to using the S3A zero-rename committers shipping in Hadoop 3.1+. It adds a source tree into the hadoop-cloud-storage module which only compiles with the hadoop-3.2 profile, and contains a binding for normal output and a specific bridge class for Parquet (as the parquet output format requires a subclass of `ParquetOutputCommitter`).

Commit algorithms are a critical topic. There's no formal proof of correctness, but the algorithms are documented and analysed in [A Zero Rename Committer](https://github.com/steveloughran/zero-rename-committer/releases). This also reviews the classic v1 and v2 algorithms, IBM's swift committer and the one from EMRFS which they admit was based on the concepts implemented here.

Test-wise

* There's a public set of scala test suites [on github](https://github.com/hortonworks-spark/cloud-integration)

* We have run integration tests against Spark on Yarn clusters.

* This code has been shipping for ~12 months in HDP-3.x.

Closes#24970 from steveloughran/cloud/SPARK-23977-s3a-committer.

Authored-by: Steve Loughran <stevel@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Here is the problem description from the JIRA.

```

When the inputs contain the constant 'infinity', Spark SQL does not generate the expected results.

SELECT avg(CAST(x AS DOUBLE)), var_pop(CAST(x AS DOUBLE))

FROM (VALUES ('1'), (CAST('infinity' AS DOUBLE))) v(x);

SELECT avg(CAST(x AS DOUBLE)), var_pop(CAST(x AS DOUBLE))

FROM (VALUES ('infinity'), ('1')) v(x);

SELECT avg(CAST(x AS DOUBLE)), var_pop(CAST(x AS DOUBLE))

FROM (VALUES ('infinity'), ('infinity')) v(x);

SELECT avg(CAST(x AS DOUBLE)), var_pop(CAST(x AS DOUBLE))

FROM (VALUES ('-infinity'), ('infinity')) v(x);

The root cause: Spark SQL does not recognize the special constants in a case insensitive way. In PostgreSQL, they are recognized in a case insensitive way.

Link: https://www.postgresql.org/docs/9.3/datatype-numeric.html

```

In this PR, the casting code is enhanced to handle these `special` string literals in case insensitive manner.
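The intended behaviour can be sketched in Python as below. This is an illustration only, not the Spark casting code: the function name `cast_to_double`, the exact set of accepted spellings, and returning `None` for unparseable input (standing in for SQL NULL) are assumptions of the sketch.

```python
import math
from typing import Optional

# Assumption: this set of special spellings is illustrative, not Spark's exact list.
_SPECIAL_LITERALS = {
    "infinity": math.inf,
    "+infinity": math.inf,
    "-infinity": -math.inf,
    "nan": math.nan,
}


def cast_to_double(text: str) -> Optional[float]:
    """Cast a string to a double, matching special literals case-insensitively."""
    key = text.strip().lower()
    if key in _SPECIAL_LITERALS:
        return _SPECIAL_LITERALS[key]
    try:
        return float(key)
    except ValueError:
        return None  # stands in for SQL NULL in this sketch


values = [cast_to_double(s) for s in ("1", "Infinity", "-INFINITY", "not a number")]
```

The point is simply that the lookup happens on a lower-cased copy of the input, so `'infinity'`, `'Infinity'` and `'INFINITY'` all map to the same value.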

## How was this patch tested?

Added tests in CastSuite and modified existing test suites.

Closes#25331 from dilipbiswal/double_infinity.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

SPARK-24817 and SPARK-24819 introduced three new non-internal properties for barrier execution mode, but they are not documented.

So I've added a section to configuration.md for barrier execution mode.

## How was this patch tested?

Built using jekyll and confirmed the layout in a browser.

Closes#25370 from sarutak/barrier-exec-mode-conf-doc.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In this PR, we implement a complete process of GPU-aware resource scheduling in Standalone. The whole process looks like this: a Worker sets up isolated resources when it starts up and registers with the master along with its resources. The Master then picks usable workers according to the driver/executor's resource requirements and launches the driver/executor on them. Next, the Worker launches the driver/executor after preparing a resources file, created under the driver/executor's working directory, with the resource addresses specified by the master. When the driver/executor finishes, its resources can be recycled to the worker. Finally, if a worker stops, it should always release its resources first.

For the case where Workers and Drivers in **client** mode run on the same host, we introduce a config option named `spark.resources.coordinate.enable` (default true) to indicate whether Spark should coordinate resources for the user. If `spark.resources.coordinate.enable=false`, the user is responsible for configuring different resources for Workers and Drivers when using resourcesFile or a discovery script. If true, Spark helps the user assign different resources to Workers and Drivers.

The solution for Spark to coordinate resources among Workers and Drivers is as follows:

Generally, use a shared file named *____allocated_resources____.json* to sync allocated resources info among Workers and Drivers on the same host.

After a Worker or Driver has found all resources using the configured resourcesFile and/or discovery script during launch, it should filter out available resources by excluding resources already allocated in *____allocated_resources____.json* and acquire resources from the available ones according to its own requirements. After that, it should write its allocated resources along with its process id (pid) into *____allocated_resources____.json*. The pid (proposed by tgravescs) is used to check whether the allocated resources are still valid in case a Worker or Driver crashes and doesn't release its resources properly. When a Worker or Driver finishes normally, it always cleans up its own allocated resources in *____allocated_resources____.json*.

Note that we always acquire a file lock before any access to the file *____allocated_resources____.json* and release the lock afterwards.

Furthermore, we append resources info to `WorkerSchedulerStateResponse` to work around master change behaviour in HA mode.
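The coordination step can be sketched in Python as follows. This is a simplified model rather than the code in this PR: the shared JSON file and its file lock are replaced by an in-memory dictionary mapping pid to allocated addresses, and the function name and the `is_alive` callback are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def acquire_resources(
    discovered: List[str],
    allocated: Dict[int, List[str]],
    pid: int,
    amount: int,
    is_alive: Callable[[int], bool],
) -> Tuple[List[str], Dict[int, List[str]]]:
    """Pick `amount` free addresses for `pid`; return them with the updated allocations.

    In the real flow, `allocated` would be read from and written back to the
    shared file while holding its file lock (not modeled here).
    """
    # Allocations owned by processes that no longer exist are considered stale.
    live = {p: list(addrs) for p, addrs in allocated.items() if is_alive(p)}
    taken = {addr for addrs in live.values() for addr in addrs}
    available = [addr for addr in discovered if addr not in taken]
    if len(available) < amount:
        raise RuntimeError(f"need {amount} resources, only {len(available)} available")
    mine = available[:amount]
    live[pid] = live.get(pid, []) + mine
    return mine, live


# pid 100 crashed without releasing GPUs "0" and "1"; pid 200 still holds "2".
mine, updated = acquire_resources(
    discovered=["0", "1", "2", "3"],
    allocated={100: ["0", "1"], 200: ["2"]},
    pid=300,
    amount=2,
    is_alive=lambda p: p != 100,
)
```

Because the crashed process's entries are dropped before filtering, its addresses become available again to the next Worker or Driver on the host.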

## How was this patch tested?

Added unit tests in WorkerSuite, MasterSuite, SparkContextSuite.

Manually tested with client/cluster mode (e.g. multiple workers) in a single node Standalone.

Closes#25047 from Ngone51/SPARK-27371.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This is an alternative solution to https://github.com/apache/spark/pull/24442 . It fails the query if an ambiguous self join is detected, instead of trying to disambiguate it. The problem is that it's hard to come up with a reasonable rule to disambiguate; the rule proposed by #24442 is mostly a heuristic.

### Background of the self-join problem:

This is a long-standing bug and I've seen many people complaining about it in JIRA/dev list.

A typical example:

```

val df1 = …

val df2 = df1.filter(...)

df1.join(df2, df1("a") > df2("a")) // returns empty result

```

The root cause is that `Dataset.apply` is so powerful that users think it returns a column reference which can point to the column of the Dataset anywhere. This is not true in many cases. `Dataset.apply` returns an `AttributeReference`. Different Datasets may share the same `AttributeReference`. In the example above, `df2` adds a Filter operator above the logical plan of `df1`, and the Filter operator preserves the output `AttributeReference` of its child. This means `df1("a")` is exactly the same as `df2("a")`, and `df1("a") > df2("a")` always evaluates to false.

### The rule to detect ambiguous column reference caused by self join:

We can reuse the infra in #24442 :

1. each Dataset has a globally unique id.

2. the `AttributeReference` returned by `Dataset.apply` carries the ID and column position(e.g. 3rd column of the Dataset) via metadata.

3. the logical plan of a `Dataset` carries the ID via `TreeNodeTag`

When self-join happens, the analyzer asks the right side plan of join to re-generate output attributes with new exprIds. Based on it, a simple rule to detect ambiguous self join is:

1. find all column references (i.e. `AttributeReference`s with Dataset ID and col position) in the root node of a query plan.

2. for each column reference, traverse the query plan tree, find a sub-plan that carries Dataset ID and the ID is the same as the one in the column reference.

3. get the corresponding output attribute of the sub-plan by the col position in the column reference.

4. if the corresponding output attribute has a different exprID than the column reference, then it means this sub-plan is on the right side of a self-join and has regenerated its output attributes. This is an ambiguous self join because the column reference points to a table being self-joined.
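The four steps above can be sketched with a toy plan model in Python. This is not the analyzer rule itself: `Plan`, `ColumnRef`, and `ambiguous_refs` are hypothetical stand-ins for logical plans, tagged `AttributeReference`s, and the detection rule, and the column references are passed in explicitly rather than collected from the root node.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass(frozen=True)
class ColumnRef:
    """A column reference carrying Dataset ID, column position and exprId."""
    dataset_id: int
    col_pos: int
    expr_id: int


@dataclass
class Plan:
    """Toy plan node: output exprIds plus an optional Dataset ID tag."""
    output_expr_ids: List[int]
    dataset_id: Optional[int] = None
    children: List["Plan"] = field(default_factory=list)


def plans_with_dataset_id(plan: Plan, dataset_id: int) -> Iterator[Plan]:
    # Step 2: traverse the tree and yield sub-plans tagged with this Dataset ID.
    if plan.dataset_id == dataset_id:
        yield plan
    for child in plan.children:
        yield from plans_with_dataset_id(child, dataset_id)


def ambiguous_refs(root: Plan, refs: List[ColumnRef]) -> List[ColumnRef]:
    ambiguous = []
    for ref in refs:
        for sub_plan in plans_with_dataset_id(root, ref.dataset_id):
            # Steps 3 and 4: a differing exprId means the sub-plan's output
            # was regenerated, i.e. it sits on the right side of a self-join.
            if sub_plan.output_expr_ids[ref.col_pos] != ref.expr_id:
                ambiguous.append(ref)
                break
    return ambiguous


# df1 (Dataset 1) outputs exprId 10; df2 = df1.filter(...) (Dataset 2) shares it.
# In the join, the right side has been regenerated with exprId 20.
left = Plan(output_expr_ids=[10], dataset_id=1)
right = Plan(output_expr_ids=[20], dataset_id=2,
             children=[Plan(output_expr_ids=[20], dataset_id=1)])
join = Plan(output_expr_ids=[10, 20], children=[left, right])
refs = [ColumnRef(1, 0, 10), ColumnRef(2, 0, 10)]  # df1("a"), df2("a")
flagged = ambiguous_refs(join, refs)
```

In this toy example both references are flagged, so the query would fail instead of silently returning an empty result.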

## How was this patch tested?

existing tests and new test cases

Closes#25107 from cloud-fan/new-self-join.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`minPartitions` has been used as a hint, and the relevant method (`KafkaOffsetRangeCalculator.getRanges`) doesn't guarantee that the number of partitions will be equal to or greater than the given value.

d67b98ea01/external/kafka-0-10-sql/src/main/scala/org/apache/spark/sql/kafka010/KafkaOffsetRangeCalculator.scala (L32-L46)

This patch makes clear that the configuration is a hint and that the actual number of partitions could be fewer or more.

## How was this patch tested?

Just a documentation change.

Closes #25332 from HeartSaVioR/MINOR-correct-kafka-structured-streaming-doc-minpartition.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

See discussion on the JIRA (and dev). At heart, we find that `math.log` and `math.pow` can actually return slightly different results across platforms because of hardware optimizations. For the actual SQL log and pow functions, I propose that we should use `StrictMath` instead to ensure the answers are always the same. (This should have the benefit of helping tests pass on aarch64.)

Further, the atanh function (which is not part of java.lang.Math) can be implemented in a slightly different and more accurate way.
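The description does not spell out the new atanh formula, so the following is only an illustration of one well-known way to improve accuracy, written in Python: computing atanh from `log1p` instead of from a single `log` of a quotient. The function names are hypothetical, and this sketch makes no claim to be the exact expression used in the patch.

```python
import math


def atanh_naive(x: float) -> float:
    # Textbook formula; 1 + x and 1 - x lose the low bits of x when |x| is tiny.
    return 0.5 * math.log((1.0 + x) / (1.0 - x))


def atanh_log1p(x: float) -> float:
    # log1p(x) evaluates log(1 + x) without first rounding 1 + x.
    return 0.5 * (math.log1p(x) - math.log1p(-x))


for x in (0.5, 1e-9, 1e-20):
    print(x, atanh_naive(x), atanh_log1p(x), math.atanh(x))
```

For inputs very close to zero the naive form collapses to 0.0 because `1.0 + x` rounds to `1.0`, while the `log1p` form stays close to the library result.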

## How was this patch tested?

Existing tests (which will need to be changed).

Some manual testing locally to understand the numeric issues.

Closes #25279 from srowen/SPARK-28519.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Today all registered metric sources are reported to GraphiteSink with no filtering mechanism, although the codahale project does support it.

GraphiteReporter (ScheduledReporter) from the codahale project requires you implement and supply the MetricFilter interface (there is only a single implementation by default in the codahale project, MetricFilter.ALL).

This PR proposes adding a regex config to the GraphiteSink to match and filter metrics.
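As a rough illustration of the idea (not the GraphiteSink code itself), the sketch below builds a metric-name predicate from an optional regex. The function name, the sample metric keys, and the choice of substring ("search") matching are assumptions made for this example; with no regex, every metric passes, mirroring the existing default behavior.

```python
import re
from typing import Callable, Optional


def make_metric_filter(regex: Optional[str] = None) -> Callable[[str], bool]:
    """Return a predicate over metric names (hypothetical helper)."""
    if regex is None:
        # No regex configured: report everything, like MetricFilter.ALL.
        return lambda name: True
    pattern = re.compile(regex)
    # Assumption: a metric is kept if the regex matches anywhere in its key.
    return lambda name: pattern.search(name) is not None


# Made-up metric keys, for illustration only.
metrics = ["local-1563838109260.driver.jvm.heap.used",
           "local-1563838109260.driver.BlockManager.memory.memUsed_MB"]

keep_all = make_metric_filter()
keep_jvm = make_metric_filter(r"\.jvm\.")

print([m for m in metrics if keep_all(m)])
print([m for m in metrics if keep_jvm(m)])
```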

## How was this patch tested?

Included a GraphiteSinkSuite that tests:

1. Absence of regex filter (existing default behavior maintained)

2. Presence of `regex=<regexexpr>` correctly filters metric keys

Closes #25232 from nkarpov/graphite_regex.

Authored-by: Nick Karpov <nick@nickkarpov.com>

Signed-off-by: jerryshao <jerryshao@tencent.com>

## What changes were proposed in this pull request?

This PR aims to support ANSI SQL `Boolean-Predicate` syntax.

```sql
expression IS [NOT] TRUE
expression IS [NOT] FALSE
expression IS [NOT] UNKNOWN
```

Several mainstream databases support this syntax:

- **PostgreSQL:** https://www.postgresql.org/docs/9.1/functions-comparison.html

- **Hive:** https://issues.apache.org/jira/browse/HIVE-13583

- **Redshift:** https://docs.aws.amazon.com/redshift/latest/dg/r_Boolean_type.html

- **Vertica:** https://www.vertica.com/docs/9.2.x/HTML/Content/Authoring/SQLReferenceManual/LanguageElements/Predicates/Boolean-predicate.htm

For example:

```sql
spark-sql> select null is true, null is not true;
false true
spark-sql> select false is true, false is not true;
false true
spark-sql> select true is true, true is not true;
true false
spark-sql> select null is false, null is not false;
false true
spark-sql> select false is false, false is not false;
true false
spark-sql> select true is false, true is not false;
false true
spark-sql> select null is unknown, null is not unknown;
true false
spark-sql> select false is unknown, false is not unknown;
false true
spark-sql> select true is unknown, true is not unknown;
false true
```

**Note**: A null input is treated as the logical value "unknown".
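The truth table above can be reproduced with a small model in Python, using `None` for SQL `NULL`. This is only a sketch of the semantics shown in the example output; the helper names are made up for illustration and are not part of Spark.

```python
from typing import Optional


def is_true(v: Optional[bool]) -> bool:
    return v is True


def is_false(v: Optional[bool]) -> bool:
    return v is False


def is_unknown(v: Optional[bool]) -> bool:
    # A null input is the logical value "unknown".
    return v is None


# The IS NOT forms are plain negations, so these predicates never return NULL.
for v in (None, False, True):
    print(v,
          is_true(v), not is_true(v),
          is_false(v), not is_false(v),
          is_unknown(v), not is_unknown(v))
```

Unlike ordinary comparisons such as `x = true`, these predicates always yield a definite boolean, which is why `null is not true` evaluates to `true`.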

## How was this patch tested?

Passes Jenkins with the newly added test cases.

Closes #25074 from beliefer/ansi-sql-boolean-test.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

The latest docs at http://spark.apache.org/docs/latest/configuration.html contain the following description:

spark.ui.showConsoleProgress | true | Show the progress bar in the console. The progress bar shows the progress of stages that run for longer than 500ms. If multiple stages run at the same time, multiple progress bars will be displayed on the same line.
-- | -- | --

But the class `org.apache.spark.internal.config.UI` defines the config `spark.ui.showConsoleProgress` as below:

```
val UI_SHOW_CONSOLE_PROGRESS = ConfigBuilder("spark.ui.showConsoleProgress")
  .doc("When true, show the progress bar in the console.")
  .booleanConf
  .createWithDefault(false)
```

So I think there is a small mistake here that can confuse readers: the documented default (`true`) does not match the default in the code (`false`).

## How was this patch tested?

No unit test is needed.

Closes #25297 from beliefer/inconsistent-desc-showConsoleProgress.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Implement `RobustScaler`

Since the transformation is quite similar to `StandardScaler`, I refactor the transform function so that it can be reused in both scalers.

## How was this patch tested?

existing and added tests

Closes #25160 from zhengruifeng/robust_scaler.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR adds support for using hostPath/PV volume mounts as local storage. In `KubernetesExecutorBuilder.scala`, the `LocalDirsFeatureStep` is built before the `MountVolumesFeatureStep`, which means it cannot use any volumes that are mounted later. This PR adjusts the order of the feature building steps, moving the local dirs feature step to the end, so that we can check whether the directories in `SPARK_LOCAL_DIRS` are set to mounted volumes such as hostPath, PV, or others.

## How was this patch tested?

Unit tests

Closes #24879 from chenjunjiedada/SPARK-28042.

Lead-authored-by: Junjie Chen <jimmyjchen@tencent.com>

Co-authored-by: Junjie Chen <cjjnjust@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

These are issues I found while working on #22282.

- Remove unused value: `UnsafeArraySuite#defaultTz`

- Remove redundant `new` modifier from the case class, `KafkaSourceRDDPartition`

- Remove unused variables from `RDD.scala`

- Remove trailing space from `structured-streaming-kafka-integration.md`

- Remove redundant parameter from `ArrowConvertersSuite`: `nullable` is `true` by default.

- Remove leading empty line: `UnsafeRow`

- Remove trailing empty line: `KafkaTestUtils`

- Remove unthrown exception type: `UnsafeMapData`

- Replace unused declarations: `expressions`

- Remove duplicated default parameter: `AnalysisErrorSuite`

- `ObjectExpressionsSuite`: remove duplicated parameters, conversions and unused variable

Closes #25251 from dongjinleekr/cleanup/201907.

Authored-by: Lee Dongjin <dongjin@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The motivation for these additional metrics is to help troubleshoot and monitor the task execution workload when running on a cluster. Currently available metrics include executor threadpool metrics for completed tasks and for active tasks. Adding a threadpool taskStarted metric makes it possible, for example, to collect info on the (approximate) number of failed tasks by computing the difference: threads started - (active threads + completed tasks and/or successfully finished tasks).

The proposed metric finishedTasks is also intended for this type of troubleshooting. The difference between finishedTasks and threadpool.completeTasks is that the latter is a (dropwizard library) gauge taken from the threadpool, while the former is a (dropwizard) counter computed in the [[Executor]] class when a task successfully finishes, together with several other task metrics counters.
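The arithmetic behind the failed-task estimate can be written out as a short sketch. The `ExecutorSnapshot` class, its field names, and the sample numbers are hypothetical; they only mirror the quantities named above and are not the actual metric keys exposed by the executor source.

```python
from dataclasses import dataclass


@dataclass
class ExecutorSnapshot:
    """Hypothetical point-in-time reading of one executor's metrics."""
    started_tasks: int   # threadpool metric: tasks started
    active_tasks: int    # threadpool metric: tasks currently running
    finished_tasks: int  # counter: tasks that finished successfully


def approx_failed_tasks(s: ExecutorSnapshot) -> int:
    # Approximate only: the three values are sampled separately,
    # so a task may change state between the individual reads.
    return s.started_tasks - (s.active_tasks + s.finished_tasks)


snapshot = ExecutorSnapshot(started_tasks=100, active_tasks=4, finished_tasks=93)
print(approx_failed_tasks(snapshot))
```

With these made-up readings, 100 started tasks minus 4 active and 93 finished leaves roughly 3 tasks that did not finish successfully.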

Note that there are similarities with some of the metrics introduced in SPARK-24398. However, there are key differences: this PR concerns the executor source, so it provides metric values per executor, and the metric values do not need to pass through the listener bus in this case.

## How was this patch tested?

Manually tested on a YARN cluster

Closes #22290 from LucaCanali/AddMetricExecutorStartedTasks.

Lead-authored-by: Luca Canali <luca.canali@cern.ch>

Co-authored-by: LucaCanali <luca.canali@cern.ch>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Change the format of the build command in the README to start with a `./` prefix

./build/mvn -DskipTests clean package

This increases stylistic consistency across the README: all the other commands have a `./` prefix. Having a visible `./` prefix also makes it clear to the user that the shell command requires the current working directory to be at the repository root.

## How was this patch tested?

README.md was reviewed both in raw markdown and on the GitHub-rendered landing page for stylistic consistency.

Closes #25231 from Mister-Meeseeks/master.

Lead-authored-by: Douglas R Colkitt <douglas.colkitt@gmail.com>

Co-authored-by: Mister-Meeseeks <douglas.colkitt@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR proposes to add a note in the migration guide. See https://github.com/apache/spark/pull/25108#issuecomment-513526585

## How was this patch tested?

N/A

Closes #25224 from HyukjinKwon/SPARK-28321-doc.

Lead-authored-by: HyukjinKwon <gurwls223@apache.org>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?