### What changes were proposed in this pull request?

This PR adds support for passing `Column`s as input to PySpark sorting functions.

### Why are the changes needed?

According to SPARK-26979, PySpark functions should support both Column and str arguments, when possible.

### Does this PR introduce _any_ user-facing change?

PySpark users can now pass either a `Column` or a `str` as the argument to the `asc*` and `desc*` functions.

### How was this patch tested?

New unit tests.

Closes #30227 from zero323/SPARK-33257.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to migrate to [NumPy documentation style](https://numpydoc.readthedocs.io/en/latest/format.html), see also SPARK-33243.

While I am migrating, I also fixed some Python type hints accordingly.
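For readers unfamiliar with the format, here is a minimal sketch of a numpydoc-style docstring. The function `clip_length` and its parameters are hypothetical and are not taken from PySpark; only the section layout (`Parameters`, `Returns`, `Examples`) follows the numpydoc format.

```python
def clip_length(text: str, limit: int = 10) -> str:
    """Truncate a string to at most ``limit`` characters.

    Parameters
    ----------
    text : str
        The input string.
    limit : int, optional
        Maximum number of characters to keep. Defaults to 10.

    Returns
    -------
    str
        ``text`` unchanged if it is short enough, otherwise its first
        ``limit`` characters.

    Examples
    --------
    >>> clip_length("documentation", 3)
    'doc'
    """
    return text[:limit]


# Hypothetical usage of the illustrative function.
clipped = clip_length("documentation", 3)
```

Each section header is underlined with dashes of the same length, which is what lets Sphinx render parameters and return values as structured HTML.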

### Why are the changes needed?

For better documentation, both as plain text and as generated HTML.

### Does this PR introduce _any_ user-facing change?

Yes, users will see better-formatted HTML pages and better-formatted plain-text docstrings. See SPARK-33243.

### How was this patch tested?

Manually tested via running `./dev/lint-python`.

Closes #30181 from HyukjinKwon/SPARK-33250.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Remove the JSON formatted schema from comments for `from_json()` in Scala/Python APIs.

Closes #30201

### Why are the changes needed?

Schemas in JSON format are internal (not documented), so they shouldn't be recommended for use.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By linters.

Closes #30226 from MaxGekk/from_json-common-schema-parsing-2.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Return schema in SQL format instead of Catalog string from the SchemaOfCsv expression.

### Why are the changes needed?

To unify output of the `schema_of_json()` and `schema_of_csv()`.

### Does this PR introduce _any_ user-facing change?

Yes, the output format changes. However, `schema_of_csv()` is usually used in combination with `from_csv()`, so the format of the schema should not matter much.

Before:

```
> SELECT schema_of_csv('1,abc');
struct<_c0:int,_c1:string>
```

After:

```
> SELECT schema_of_csv('1,abc');
STRUCT<`_c0`: INT, `_c1`: STRING>
```

### How was this patch tested?

By existing test suites `CsvFunctionsSuite` and `CsvExpressionsSuite`.

Closes #30180 from MaxGekk/schema_of_csv-sql-schema.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Return schema in SQL format instead of Catalog string from the `SchemaOfJson` expression.

### Why are the changes needed?

In some cases, `from_json()` cannot parse schemas returned by `schema_of_json`, for instance, when JSON fields have spaces (gaps). Such fields will be quoted after the changes, and can be parsed by `from_json()`.

Here is the example:

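```scala
// Needs the SQL functions in scope; spark-shell already imports spark.implicits._
import org.apache.spark.sql.functions.{from_json, schema_of_json}
val in = Seq("""{"a b": 1}""").toDS()
in.select(from_json('value, schema_of_json("""{"a b": 100}""")) as "parsed")
```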

raises the exception:

```
== SQL ==
struct<a b:bigint>
------^^^
at org.apache.spark.sql.catalyst.parser.ParseException.withCommand(ParseDriver.scala:263)
at org.apache.spark.sql.catalyst.parser.AbstractSqlParser.parse(ParseDriver.scala:130)
at org.apache.spark.sql.catalyst.parser.AbstractSqlParser.parseTableSchema(ParseDriver.scala:76)
at org.apache.spark.sql.types.DataType$.fromDDL(DataType.scala:131)
at org.apache.spark.sql.catalyst.expressions.ExprUtils$.evalTypeExpr(ExprUtils.scala:33)
at org.apache.spark.sql.catalyst.expressions.JsonToStructs.<init>(jsonExpressions.scala:537)
at org.apache.spark.sql.functions$.from_json(functions.scala:4141)
```

### Does this PR introduce _any_ user-facing change?

Yes. For example, `schema_of_json` for the input `{"col":0}`.

Before: `struct<col:bigint>`

After: ``STRUCT<`col`: BIGINT>``
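The difference between the two output formats can be sketched in plain Python. The helpers `catalog_string` and `sql_string` below are hypothetical, handle flat structs only, and are not Spark's implementation; they only reproduce the two shapes shown in this description so the effect of quoting is visible.

```python
def catalog_string(fields):
    """Old-style output: lower-case types, unquoted field names."""
    body = ",".join(f"{name}:{dtype}" for name, dtype in fields)
    return f"struct<{body}>"


def sql_string(fields):
    """New-style output: upper-case types, backtick-quoted field names."""
    body = ", ".join(f"`{name}`: {dtype.upper()}" for name, dtype in fields)
    return f"STRUCT<{body}>"


# A field name with a space: ambiguous when unquoted, unambiguous when quoted.
fields = [("a b", "bigint")]
old = catalog_string(fields)  # struct<a b:bigint>
new = sql_string(fields)      # STRUCT<`a b`: BIGINT>
```

In the old form a parser cannot tell where the name `a b` ends, which is the failure shown in the exception above; the quoted form delimits the name explicitly.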

### How was this patch tested?

By existing test suites `JsonFunctionsSuite` and `JsonExpressionsSuite`.

Closes #30172 from MaxGekk/schema_of_json-sql-schema.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- [x] Expand dictionary definitions into standalone functions.

- [x] Fix annotations for ordering functions.

### Why are the changes needed?

To simplify further maintenance of docstrings.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #30143 from zero323/SPARK-32084.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Adds a SQL function `raise_error` which underlies the refactored `assert_true` function. `assert_true` now also (optionally) accepts a custom error message field.

`raise_error` is exposed in SQL, Python, Scala, and R.

`assert_true` was previously only exposed in SQL; it is now also exposed in Python, Scala, and R.
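The relationship between the two functions can be modelled in a few lines of plain Python. This is only a sketch of the idea that `assert_true` delegates to `raise_error`; the exception type and the default message below are placeholders, not Spark's actual behavior.

```python
from typing import Optional


def raise_error(message: str):
    """Stand-in for `raise_error`: always fails with `message`."""
    raise RuntimeError(message)


def assert_true(condition: bool, message: Optional[str] = None) -> None:
    """Stand-in for `assert_true`: do nothing when the condition holds,
    otherwise delegate to `raise_error` with a custom or default message."""
    if not condition:
        # The default text is a placeholder, not Spark's actual wording.
        raise_error(message if message is not None else "assertion failed")


assert_true(1 < 2)  # passes silently

try:
    assert_true(2 < 1, "expected 2 < 1")
except RuntimeError as exc:
    caught = str(exc)  # the custom message reaches the caller
```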

### Why are the changes needed?

Improves usability of `assert_true` by clarifying error messaging, and adds the useful helper function `raise_error`.

### Does this PR introduce _any_ user-facing change?

Yes:

- Adds `raise_error` function to the SQL, Python, Scala, and R APIs.

- Adds the `assert_true` function to the Python, Scala, and R APIs (it was previously available only in SQL).

### How was this patch tested?

Adds unit tests in SQL, Python, Scala, and R for `assert_true` and `raise_error`.

Closes #29947 from karenfeng/spark-32793.

Lead-authored-by: Karen Feng <karen.feng@databricks.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Explicitly document that `current_date` and `current_timestamp` are executed at the start of query evaluation, and that all calls of `current_date`/`current_timestamp` within the same query return the same value.

### Why are the changes needed?

Users could expect that `current_date` and `current_timestamp` return the current date/timestamp at the moment of query execution but in fact the functions are folded by the optimizer at the start of query evaluation:

0df8dd6073/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/finishAnalysis.scala (L71-L91)
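As a toy model of this folding (not Spark's optimizer), the sketch below fixes the timestamp once before processing any row and reuses it everywhere. The function `run_query` is hypothetical.

```python
from datetime import datetime, timezone


def run_query(rows):
    """Toy model: fix the timestamp once, then reuse it for every row."""
    query_start = datetime.now(timezone.utc)  # evaluated a single time
    return [(row, query_start) for row in rows]


result = run_query(["a", "b", "c"])

# Every row carries the same value, however long the "query" takes.
distinct_timestamps = {ts for _, ts in result}
```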

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running `./dev/scalastyle`.

Closes #29892 from MaxGekk/doc-current_date.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`nth_value` was added in SPARK-27951. This PR adds the corresponding PySpark API.

### Why are the changes needed?

To keep the APIs consistent across languages.

### Does this PR introduce _any_ user-facing change?

Yes, it introduces a new PySpark function API.

### How was this patch tested?

A unit test was added.

Closes #29899 from HyukjinKwon/SPARK-33020.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Describe more precisely the result of the `percentile_approx()` function and its synonym `approx_percentile()`. The proposed sentence clarifies that the function returns **one of the elements** (or an array of elements) from the input column.
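To see why this distinction matters, compare a percentile that always picks an input element with an interpolating median. The sketch below uses an exact nearest-rank percentile as a stand-in; it is not the approximate algorithm Spark uses, and `nearest_rank_percentile` is a hypothetical helper.

```python
import math
import statistics


def nearest_rank_percentile(values, percentage):
    """Exact nearest-rank percentile: always returns a member of `values`."""
    ordered = sorted(values)
    # Smallest 1-based rank whose cumulative share reaches `percentage`.
    rank = max(1, math.ceil(percentage * len(ordered)))
    return ordered[rank - 1]


data = [1, 2, 3, 4]
picked = nearest_rank_percentile(data, 0.5)  # an element of `data`
interpolated = statistics.median(data)       # averages the two middle values
```

Here `picked` is one of the inputs, while `interpolated` falls between two of them and does not occur in `data` at all.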

### Why are the changes needed?

To improve Spark docs and avoid misunderstanding of the function behavior.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

`./dev/scalastyle`

Closes #29835 from MaxGekk/doc-percentile_approx.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

In the docs for `approx_count_distinct`, I changed the description of the `rsd` parameter from **_maximum estimation error allowed_** to _**maximum relative standard deviation allowed**_.

### Why are the changes needed?

"Maximum estimation error allowed" can be misleading. You can set the target relative standard deviation, which affects the estimation error, but on any given run the estimation error can still exceed the `rsd` parameter.
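A small simulation illustrates the point. It assumes, purely for illustration, that estimates are normally distributed around the true count with standard deviation `rsd * true_count`; the real estimator's error distribution may differ, and `true_count` is a made-up value.

```python
import random

rsd = 0.05            # target relative standard deviation
true_count = 100_000  # hypothetical true number of distinct values
rng = random.Random(42)

# Assumption: estimates scatter around the true count with standard
# deviation rsd * true_count (normal noise, purely for illustration).
runs = 10_000
relative_errors = [
    abs(rng.gauss(true_count, rsd * true_count) - true_count) / true_count
    for _ in range(runs)
]

# Fraction of simulated runs whose relative error exceeds rsd.
share_above_rsd = sum(err > rsd for err in relative_errors) / runs
```

Under this normality assumption roughly a third of the runs exceed `rsd`, which is why describing it as a maximum error would be wrong.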

### Does this PR introduce _any_ user-facing change?

This PR should make it easier for users reading the docs to understand that the `rsd` parameter in `approx_count_distinct` doesn't cap the estimation error, but only sets the target deviation.

### How was this patch tested?

No tests, as no code changes were made.

Closes #29424 from Comonut/fix-approx_count_distinct-rsd-param-description.

Authored-by: alexander-daskalov <alexander.daskalov@adevinta.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

Disallow unused imports:

- They unnecessarily increase the memory footprint of the application.

- Imports that are required only for the examples in a docstring are moved from file scope into the example itself. This keeps the files themselves clean and gives a more complete example, since it also includes the imports :)
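As a sketch of the docstring point, the hypothetical function `scale` below keeps the import that only its example needs inside the example. The module itself imports `doctest` solely to run that example here.

```python
import doctest


def scale(values, factor):
    """Multiply every value by ``factor``.

    The example imports what it needs itself, so the module does not
    carry an otherwise unused file-scope import.

    >>> from fractions import Fraction
    >>> scale([Fraction(1, 2), Fraction(3, 4)], 2)
    [Fraction(1, 1), Fraction(3, 2)]
    """
    return [value * factor for value in values]


# Run the docstring example directly; `results.failed` is 0 when it passes.
parser = doctest.DocTestParser()
test = parser.get_doctest(scale.__doc__, {"scale": scale}, "scale", None, 0)
results = doctest.DocTestRunner(verbose=False).run(test)
```

Because the example is self-contained, a reader can paste it into an interpreter without hunting for the import at the top of the file.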

Before:

```

fokkodriesprongFan spark % flake8 python | grep -i "imported but unused"

python/pyspark/cloudpickle.py:46:1: F401 'functools.partial' imported but unused

python/pyspark/cloudpickle.py:55:1: F401 'traceback' imported but unused

python/pyspark/heapq3.py:868:5: F401 '_heapq.*' imported but unused

python/pyspark/__init__.py:61:1: F401 'pyspark.version.__version__' imported but unused

python/pyspark/__init__.py:62:1: F401 'pyspark._globals._NoValue' imported but unused

python/pyspark/__init__.py:115:1: F401 'pyspark.sql.SQLContext' imported but unused

python/pyspark/__init__.py:115:1: F401 'pyspark.sql.HiveContext' imported but unused

python/pyspark/__init__.py:115:1: F401 'pyspark.sql.Row' imported but unused

python/pyspark/rdd.py:21:1: F401 're' imported but unused

python/pyspark/rdd.py:29:1: F401 'tempfile.NamedTemporaryFile' imported but unused

python/pyspark/mllib/regression.py:26:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/clustering.py:28:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/clustering.py:28:1: F401 'pyspark.mllib.linalg.DenseVector' imported but unused

python/pyspark/mllib/classification.py:26:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/feature.py:28:1: F401 'pyspark.mllib.linalg.DenseVector' imported but unused

python/pyspark/mllib/feature.py:28:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/feature.py:30:1: F401 'pyspark.mllib.regression.LabeledPoint' imported but unused

python/pyspark/mllib/tests/test_linalg.py:18:1: F401 'sys' imported but unused

python/pyspark/mllib/tests/test_linalg.py:642:5: F401 'pyspark.mllib.tests.test_linalg.*' imported but unused

python/pyspark/mllib/tests/test_feature.py:21:1: F401 'numpy.random' imported but unused

python/pyspark/mllib/tests/test_feature.py:21:1: F401 'numpy.exp' imported but unused

python/pyspark/mllib/tests/test_feature.py:23:1: F401 'pyspark.mllib.linalg.Vector' imported but unused

python/pyspark/mllib/tests/test_feature.py:23:1: F401 'pyspark.mllib.linalg.VectorUDT' imported but unused

python/pyspark/mllib/tests/test_feature.py:185:5: F401 'pyspark.mllib.tests.test_feature.*' imported but unused

python/pyspark/mllib/tests/test_util.py:97:5: F401 'pyspark.mllib.tests.test_util.*' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.Vector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.DenseVector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.VectorUDT' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg._convert_to_vector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.DenseMatrix' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.SparseMatrix' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.MatrixUDT' imported but unused

python/pyspark/mllib/tests/test_stat.py:181:5: F401 'pyspark.mllib.tests.test_stat.*' imported but unused

python/pyspark/mllib/tests/test_streaming_algorithms.py:18:1: F401 'time.time' imported but unused

python/pyspark/mllib/tests/test_streaming_algorithms.py:18:1: F401 'time.sleep' imported but unused

python/pyspark/mllib/tests/test_streaming_algorithms.py:470:5: F401 'pyspark.mllib.tests.test_streaming_algorithms.*' imported but unused

python/pyspark/mllib/tests/test_algorithms.py:295:5: F401 'pyspark.mllib.tests.test_algorithms.*' imported but unused

python/pyspark/tests/test_serializers.py:90:13: F401 'xmlrunner' imported but unused

python/pyspark/tests/test_rdd.py:21:1: F401 'sys' imported but unused

python/pyspark/tests/test_rdd.py:29:1: F401 'pyspark.resource.ResourceProfile' imported but unused

python/pyspark/tests/test_rdd.py:885:5: F401 'pyspark.tests.test_rdd.*' imported but unused

python/pyspark/tests/test_readwrite.py:19:1: F401 'sys' imported but unused

python/pyspark/tests/test_readwrite.py:22:1: F401 'array.array' imported but unused

python/pyspark/tests/test_readwrite.py:309:5: F401 'pyspark.tests.test_readwrite.*' imported but unused

python/pyspark/tests/test_join.py:62:5: F401 'pyspark.tests.test_join.*' imported but unused

python/pyspark/tests/test_taskcontext.py:19:1: F401 'shutil' imported but unused

python/pyspark/tests/test_taskcontext.py:325:5: F401 'pyspark.tests.test_taskcontext.*' imported but unused

python/pyspark/tests/test_conf.py:36:5: F401 'pyspark.tests.test_conf.*' imported but unused

python/pyspark/tests/test_broadcast.py:148:5: F401 'pyspark.tests.test_broadcast.*' imported but unused

python/pyspark/tests/test_daemon.py:76:5: F401 'pyspark.tests.test_daemon.*' imported but unused

python/pyspark/tests/test_util.py:77:5: F401 'pyspark.tests.test_util.*' imported but unused

python/pyspark/tests/test_pin_thread.py:19:1: F401 'random' imported but unused

python/pyspark/tests/test_pin_thread.py:149:5: F401 'pyspark.tests.test_pin_thread.*' imported but unused

python/pyspark/tests/test_worker.py:19:1: F401 'sys' imported but unused

python/pyspark/tests/test_worker.py:26:5: F401 'resource' imported but unused

python/pyspark/tests/test_worker.py:203:5: F401 'pyspark.tests.test_worker.*' imported but unused

python/pyspark/tests/test_profiler.py:101:5: F401 'pyspark.tests.test_profiler.*' imported but unused

python/pyspark/tests/test_shuffle.py:18:1: F401 'sys' imported but unused

python/pyspark/tests/test_shuffle.py:171:5: F401 'pyspark.tests.test_shuffle.*' imported but unused

python/pyspark/tests/test_rddbarrier.py:43:5: F401 'pyspark.tests.test_rddbarrier.*' imported but unused

python/pyspark/tests/test_context.py:129:13: F401 'userlibrary.UserClass' imported but unused

python/pyspark/tests/test_context.py:140:13: F401 'userlib.UserClass' imported but unused

python/pyspark/tests/test_context.py:310:5: F401 'pyspark.tests.test_context.*' imported but unused

python/pyspark/tests/test_appsubmit.py:241:5: F401 'pyspark.tests.test_appsubmit.*' imported but unused

python/pyspark/streaming/dstream.py:18:1: F401 'sys' imported but unused

python/pyspark/streaming/tests/test_dstream.py:27:1: F401 'pyspark.RDD' imported but unused

python/pyspark/streaming/tests/test_dstream.py:647:5: F401 'pyspark.streaming.tests.test_dstream.*' imported but unused

python/pyspark/streaming/tests/test_kinesis.py:83:5: F401 'pyspark.streaming.tests.test_kinesis.*' imported but unused

python/pyspark/streaming/tests/test_listener.py:152:5: F401 'pyspark.streaming.tests.test_listener.*' imported but unused

python/pyspark/streaming/tests/test_context.py:178:5: F401 'pyspark.streaming.tests.test_context.*' imported but unused

python/pyspark/testing/utils.py:30:5: F401 'scipy.sparse' imported but unused

python/pyspark/testing/utils.py:36:5: F401 'numpy as np' imported but unused

python/pyspark/ml/regression.py:25:1: F401 'pyspark.ml.tree._TreeEnsembleParams' imported but unused

python/pyspark/ml/regression.py:25:1: F401 'pyspark.ml.tree._HasVarianceImpurity' imported but unused

python/pyspark/ml/regression.py:29:1: F401 'pyspark.ml.wrapper.JavaParams' imported but unused

python/pyspark/ml/util.py:19:1: F401 'sys' imported but unused

python/pyspark/ml/__init__.py:25:1: F401 'pyspark.ml.pipeline' imported but unused

python/pyspark/ml/pipeline.py:18:1: F401 'sys' imported but unused

python/pyspark/ml/stat.py:22:1: F401 'pyspark.ml.linalg.DenseMatrix' imported but unused

python/pyspark/ml/stat.py:22:1: F401 'pyspark.ml.linalg.Vectors' imported but unused

python/pyspark/ml/tests/test_training_summary.py:18:1: F401 'sys' imported but unused

python/pyspark/ml/tests/test_training_summary.py:364:5: F401 'pyspark.ml.tests.test_training_summary.*' imported but unused

python/pyspark/ml/tests/test_linalg.py:381:5: F401 'pyspark.ml.tests.test_linalg.*' imported but unused

python/pyspark/ml/tests/test_tuning.py:427:9: F401 'pyspark.sql.functions as F' imported but unused

python/pyspark/ml/tests/test_tuning.py:757:5: F401 'pyspark.ml.tests.test_tuning.*' imported but unused

python/pyspark/ml/tests/test_wrapper.py:120:5: F401 'pyspark.ml.tests.test_wrapper.*' imported but unused

python/pyspark/ml/tests/test_feature.py:19:1: F401 'sys' imported but unused

python/pyspark/ml/tests/test_feature.py:304:5: F401 'pyspark.ml.tests.test_feature.*' imported but unused

python/pyspark/ml/tests/test_image.py:19:1: F401 'py4j' imported but unused

python/pyspark/ml/tests/test_image.py:22:1: F401 'pyspark.testing.mlutils.PySparkTestCase' imported but unused

python/pyspark/ml/tests/test_image.py:71:5: F401 'pyspark.ml.tests.test_image.*' imported but unused

python/pyspark/ml/tests/test_persistence.py:456:5: F401 'pyspark.ml.tests.test_persistence.*' imported but unused

python/pyspark/ml/tests/test_evaluation.py:56:5: F401 'pyspark.ml.tests.test_evaluation.*' imported but unused

python/pyspark/ml/tests/test_stat.py:43:5: F401 'pyspark.ml.tests.test_stat.*' imported but unused

python/pyspark/ml/tests/test_base.py:70:5: F401 'pyspark.ml.tests.test_base.*' imported but unused

python/pyspark/ml/tests/test_param.py:20:1: F401 'sys' imported but unused

python/pyspark/ml/tests/test_param.py:375:5: F401 'pyspark.ml.tests.test_param.*' imported but unused

python/pyspark/ml/tests/test_pipeline.py:62:5: F401 'pyspark.ml.tests.test_pipeline.*' imported but unused

python/pyspark/ml/tests/test_algorithms.py:333:5: F401 'pyspark.ml.tests.test_algorithms.*' imported but unused

python/pyspark/ml/param/__init__.py:18:1: F401 'sys' imported but unused

python/pyspark/resource/tests/test_resources.py:17:1: F401 'random' imported but unused

python/pyspark/resource/tests/test_resources.py:20:1: F401 'pyspark.resource.ResourceProfile' imported but unused

python/pyspark/resource/tests/test_resources.py:75:5: F401 'pyspark.resource.tests.test_resources.*' imported but unused

python/pyspark/sql/functions.py:32:1: F401 'pyspark.sql.udf.UserDefinedFunction' imported but unused

python/pyspark/sql/functions.py:34:1: F401 'pyspark.sql.pandas.functions.pandas_udf' imported but unused

python/pyspark/sql/session.py:30:1: F401 'pyspark.sql.types.Row' imported but unused

python/pyspark/sql/session.py:30:1: F401 'pyspark.sql.types.StringType' imported but unused

python/pyspark/sql/readwriter.py:1084:5: F401 'pyspark.sql.Row' imported but unused

python/pyspark/sql/context.py:26:1: F401 'pyspark.sql.types.IntegerType' imported but unused

python/pyspark/sql/context.py:26:1: F401 'pyspark.sql.types.Row' imported but unused

python/pyspark/sql/context.py:26:1: F401 'pyspark.sql.types.StringType' imported but unused

python/pyspark/sql/context.py:27:1: F401 'pyspark.sql.udf.UDFRegistration' imported but unused

python/pyspark/sql/streaming.py:1212:5: F401 'pyspark.sql.Row' imported but unused

python/pyspark/sql/tests/test_utils.py:55:5: F401 'pyspark.sql.tests.test_utils.*' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:18:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:22:1: F401 'pyspark.sql.functions.pandas_udf' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:22:1: F401 'pyspark.sql.functions.PandasUDFType' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:119:5: F401 'pyspark.sql.tests.test_pandas_map.*' imported but unused

python/pyspark/sql/tests/test_catalog.py:193:5: F401 'pyspark.sql.tests.test_catalog.*' imported but unused

python/pyspark/sql/tests/test_group.py:39:5: F401 'pyspark.sql.tests.test_group.*' imported but unused

python/pyspark/sql/tests/test_session.py:361:5: F401 'pyspark.sql.tests.test_session.*' imported but unused

python/pyspark/sql/tests/test_conf.py:49:5: F401 'pyspark.sql.tests.test_conf.*' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:19:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:21:1: F401 'pyspark.sql.functions.sum' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:21:1: F401 'pyspark.sql.functions.PandasUDFType' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:29:5: F401 'pandas.util.testing.assert_series_equal' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:32:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:248:5: F401 'pyspark.sql.tests.test_pandas_cogrouped_map.*' imported but unused

python/pyspark/sql/tests/test_udf.py:24:1: F401 'py4j' imported but unused

python/pyspark/sql/tests/test_pandas_udf_typehints.py:246:5: F401 'pyspark.sql.tests.test_pandas_udf_typehints.*' imported but unused

python/pyspark/sql/tests/test_functions.py:19:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_functions.py:362:9: F401 'pyspark.sql.functions.exists' imported but unused

python/pyspark/sql/tests/test_functions.py:387:5: F401 'pyspark.sql.tests.test_functions.*' imported but unused

python/pyspark/sql/tests/test_pandas_udf_scalar.py:21:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_udf_scalar.py:45:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_pandas_udf_window.py:355:5: F401 'pyspark.sql.tests.test_pandas_udf_window.*' imported but unused

python/pyspark/sql/tests/test_arrow.py:38:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_pandas_grouped_map.py:20:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_grouped_map.py:38:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_dataframe.py:382:9: F401 'pyspark.sql.DataFrame' imported but unused

python/pyspark/sql/avro/functions.py:125:5: F401 'pyspark.sql.Row' imported but unused

python/pyspark/sql/pandas/functions.py:19:1: F401 'sys' imported but unused

```

After:

```
fokkodriesprongFan spark % flake8 python | grep -i "imported but unused"
fokkodriesprongFan spark %
```

### What changes were proposed in this pull request?

Removing unused imports from the Python files to keep everything nice and tidy.

### Why are the changes needed?

Cleaning up of the imports that aren't used, and suppressing the imports that are used as references to other modules, preserving backward compatibility.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Adding the rule to the existing Flake8 checks.

Closes #29121 from Fokko/SPARK-32319.

Authored-by: Fokko Driesprong <fokko@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Change the casting of map and struct values to strings to use `{}` brackets instead of `[]`. The behavior is controlled by the SQL config `spark.sql.legacy.castComplexTypesToString.enabled`. When it is `true`, `CAST` wraps maps and structs in `[]` when casting to strings. When it is `false`, which is the default, maps and structs are wrapped in `{}`.

### Why are the changes needed?

- To distinguish structs/maps from arrays.

- To make `show`'s output consistent with Hive and conversions to Hive strings.

- To display DataFrame content in the same form in `spark-sql` and `show`.

- To be consistent with the `*.sql` tests

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

By existing test suite `CastSuite`.

Closes #29308 from MaxGekk/show-struct-map.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to redesign the PySpark documentation.

I made a demo site to make it easier to review: https://hyukjin-spark.readthedocs.io/en/stable/reference/index.html.

Here is the initial draft for the final PySpark docs shape: https://hyukjin-spark.readthedocs.io/en/latest/index.html.

In more details, this PR proposes:

1. Use [pydata_sphinx_theme](https://github.com/pandas-dev/pydata-sphinx-theme) theme - [pandas](https://pandas.pydata.org/docs/) and [Koalas](https://koalas.readthedocs.io/en/latest/) use this theme. The CSS overwrite is ported from Koalas. The colours in the CSS were actually chosen by designers to use in Spark.

2. Use the Sphinx option to separate `source` and `build` directories as the documentation pages will likely grow.

3. Port current API documentation into the new style. It mimics Koalas and pandas to use the theme most effectively.

One disadvantage of this approach is that APIs and classes must be listed explicitly; however, I think this isn't a big issue in PySpark since we're being conservative about adding APIs. I also intentionally listed only classes instead of functions in ML and MLlib to make them easier to manage.

### Why are the changes needed?

I often hear complaints from users that the current PySpark documentation is pretty messy to read - https://spark.apache.org/docs/latest/api/python/index.html compared to other projects such as [pandas](https://pandas.pydata.org/docs/) and [Koalas](https://koalas.readthedocs.io/en/latest/).

It would be nicer if we can make it more organised instead of just listing all classes, methods and attributes to make it easier to navigate.

Also, the documentation has been there from almost the very first version of PySpark. Maybe it's time to update it.

### Does this PR introduce _any_ user-facing change?

Yes, PySpark API documentation will be redesigned.

### How was this patch tested?

Manually tested, and the demo site was made to show.

Closes #29188 from HyukjinKwon/SPARK-32179.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up of #29138, which added an overloaded `slice` function that accepts `Column` for `start` and `length` in Scala.

This PR updates the equivalent Python function to accept `Column` as well.

### Why are the changes needed?

Now that the Scala version accepts `Column`, the Python version should accept it too.

### Does this PR introduce _any_ user-facing change?

Yes, PySpark users will also be able to pass `Column` objects as the `start` and `length` parameters of the `slice` function.

### How was this patch tested?

Added tests.

Closes #29195 from ueshin/issues/SPARK-32338/slice.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Adds `DataFrameWriterV2` class.

- Adds `writeTo` method to `pyspark.sql.DataFrame`.

- Adds related SQL partitioning functions (`years`, `months`, ..., `bucket`).

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added new unit tests.

TODO: Should we test against `org.apache.spark.sql.connector.InMemoryTableCatalog`? If so, how to expose it in Python tests?

Closes #27331 from zero323/SPARK-29157.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR removes references to the terms "blacklist" and "whitelist", except for the blacklisting feature as a whole, which can be handled in a separate JIRA/PR.

This touches quite a few files, but the changes are straightforward (variable/method/etc. name changes) and mostly self-contained.

### Why are the changes needed?

As per discussion on the Spark dev list, it will be beneficial to remove references to problematic language that can alienate potential community members. One such reference is "blacklist" and "whitelist". While it seems to me that there is some valid debate as to whether these terms have racist origins, the cultural connotations are inescapable in today's world.

### Does this PR introduce _any_ user-facing change?

In the test file `HiveQueryFileTest`, a developer has the ability to specify the system property `spark.hive.whitelist` to specify a list of Hive query files that should be tested. This system property has been renamed to `spark.hive.includelist`. The old property has been kept for compatibility, but will log a warning if used. I am open to feedback from others on whether keeping a deprecated property here is unnecessary given that this is just for developers running tests.

### How was this patch tested?

Existing tests should be suitable since no behavior changes are expected as a result of this PR.

Closes #28874 from xkrogen/xkrogen-SPARK-32036-rename-blacklists.

Authored-by: Erik Krogen <ekrogen@linkedin.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR aims to drop Python 2.7, 3.4 and 3.5.

Roughly speaking, it removes all the widely known Python 2 compatibility workarounds, such as `sys.version` comparisons and `__future__` imports. It also removes Python 2-specific code such as `ArrayConstructor` in Spark.

### Why are the changes needed?

1. Drop support for EOL Python versions.

2. Reduce maintenance overhead and remove some legacy code and hacks for Python 2.

3. PyPy2 has a critical bug that causes a flaky test (SPARK-28358), based on my testing and investigation.

4. Users can use Python type hints with Pandas UDFs without thinking about the Python version.

5. Users can rely on a single, up-to-date cloudpickle, https://github.com/apache/spark/pull/28950. With Python 3.8+, it can also use the C pickle implementation.

### Does this PR introduce _any_ user-facing change?

Yes, users cannot use Python 2.7, 3.4 and 3.5 in the upcoming Spark version.

### How was this patch tested?

Manually tested and also tested in Jenkins.

Closes #28957 from HyukjinKwon/SPARK-32138.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Modify the example for `timestamp_seconds` and replace `collect()` with `show()`.

### Why are the changes needed?

The SQL config `spark.sql.session.timeZone` doesn't affect the result of `collect` in the example. The code below demonstrates that:

```
$ export TZ="UTC"
```

```python
>>> from pyspark.sql.functions import timestamp_seconds
>>> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
>>> time_df = spark.createDataFrame([(1230219000,)], ['unix_time'])
>>> time_df.select(timestamp_seconds(time_df.unix_time).alias('ts')).collect()
[Row(ts=datetime.datetime(2008, 12, 25, 15, 30))]
```

The expected time is **07:30 but we get 15:30**.
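The two values can be reproduced without Spark. The sketch below is only an illustration: it uses Python's `datetime` with fixed UTC offsets (an assumption that ignores daylight saving rules; Los Angeles is at UTC-8 in December), and `wall_clock` is a hypothetical helper, not part of PySpark.

```python
from datetime import datetime, timedelta, timezone

# Epoch seconds used in the doctest example above.
UNIX_TIME = 1230219000

# Fixed offsets stand in for real time zone rules (assumption: no DST in December).
UTC = timezone.utc
LOS_ANGELES = timezone(timedelta(hours=-8))


def wall_clock(epoch_seconds, tz):
    """Return the naive wall-clock time of an epoch value in the given zone."""
    return datetime.fromtimestamp(epoch_seconds, tz=tz).replace(tzinfo=None)


utc_time = wall_clock(UNIX_TIME, UTC)          # 2008-12-25 15:30:00
la_time = wall_clock(UNIX_TIME, LOS_ANGELES)   # 2008-12-25 07:30:00
print(utc_time)
print(la_time)
```

The first value matches what `collect()` returned with `TZ="UTC"`; the second is the time the session time zone would be expected to give.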

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the modified example via:

```

$ ./python/run-tests --modules=pyspark-sql

```

Closes #28959 from MaxGekk/SPARK-32088-fix-timezone-issue-followup.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Set an American time zone for the `timestamp_seconds` doctest.

### Why are the changes needed?

The `timestamp_seconds` doctest in `functions.py` relied on the default time zone to produce the expected result.

For example:

```python
>>> time_df = spark.createDataFrame([(1230219000,)], ['unix_time'])
>>> time_df.select(timestamp_seconds(time_df.unix_time).alias('ts')).collect()
[Row(ts=datetime.datetime(2008, 12, 25, 7, 30))]
```

But in a non-American time zone, the test case produces a different result.

For example, when the current time zone is set to `Asia/Shanghai`, the test result is:

```
[Row(ts=datetime.datetime(2008, 12, 25, 23, 30))]
```

So if we pin the time zone to one specific region, the test case always produces the same expected result, no matter where it runs.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test

Closes #28932 from GuoPhilipse/SPARK-32088-fix-timezone-issue.

Lead-authored-by: GuoPhilipse <46367746+GuoPhilipse@users.noreply.github.com>

Co-authored-by: GuoPhilipse <guofei_ok@126.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Casting from numeric to timestamp now fails by default.

## Why are the changes needed?

Casting from numeric to timestamp is non-standard, and it may produce different results between Spark and other systems, for example Hive.

## Does this PR introduce any user-facing change?

Yes. Users can no longer cast numeric values to timestamp directly; they have to use one of the following functions to achieve the same effect: `TIMESTAMP_SECONDS`, `TIMESTAMP_MILLIS`, or `TIMESTAMP_MICROS`.
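To illustrate what the three replacement functions express, here is a minimal sketch in plain Python. The helper functions below only borrow the SQL function names; they are stand-ins written for this example, not Spark's implementation. Each one states the unit of its numeric input in its name.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def timestamp_seconds(value):
    """Interpret an integer as seconds since the epoch (UTC)."""
    return EPOCH + timedelta(seconds=value)


def timestamp_millis(value):
    """Interpret an integer as milliseconds since the epoch (UTC)."""
    return EPOCH + timedelta(milliseconds=value)


def timestamp_micros(value):
    """Interpret an integer as microseconds since the epoch (UTC)."""
    return EPOCH + timedelta(microseconds=value)


# The same instant expressed in three different units.
same_instant = (
    timestamp_seconds(1230219000),
    timestamp_millis(1230219000000),
    timestamp_micros(1230219000000000),
)
print(same_instant[0])
```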

## How was this patch tested?

unit test added

Closes #28593 from GuoPhilipse/31710-fix-compatibility.

Lead-authored-by: GuoPhilipse <guofei_ok@126.com>

Co-authored-by: GuoPhilipse <46367746+GuoPhilipse@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

A small documentation change to clarify that the `rand()` function produces values in `[0.0, 1.0)`.

### Why are the changes needed?

`rand()` uses `Rand()`, which generates values in [0, 1) ([documented here](a1dbcd13a3/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/randomExpressions.scala (L71))). The existing documentation suggests that 1.0 is a possible value returned by `rand` (i.e., for a distribution written as `X ~ U(a, b)`, x can be a or b, so `U[0.0, 1.0]` suggests the value returned could include 1.0).
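As an analogy only, Python's `random.random()` is documented to draw from the same kind of half-open interval. The sketch below uses it as a stand-in (it is not Spark's `rand()`) to show what `[0.0, 1.0)` means in practice: 0.0 is a possible value, 1.0 is not.

```python
import random

# A seeded generator keeps the run reproducible.
rng = random.Random(42)
samples = [rng.random() for _ in range(100_000)]

# Every sample satisfies 0.0 <= x < 1.0; the upper bound is excluded.
in_range = all(0.0 <= x < 1.0 for x in samples)
print(in_range, max(samples) < 1.0)
```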

### Does this PR introduce any user-facing change?

Only documentation changes.

### How was this patch tested?

Documentation changes only.

Closes #28071 from Smeb/master.

Authored-by: Ben Ryves <benjamin.ryves@getyourguide.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to update the doc for the `timeZone` option in JSON/CSV datasources and for the `tz` parameter of the `from_utc_timestamp()`/`to_utc_timestamp()` functions, and to restrict the format of the config's values to two forms:

1. Geographical regions, such as `America/Los_Angeles`.

2. Fixed offsets - a fully resolved offset from UTC. For example, `-08:00`.
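The two forms can be told apart with a small check. The sketch below is a simplification written for illustration: the regular expressions and the `classify_time_zone` helper are assumptions, not the parser Spark uses.

```python
import re

# Region IDs look like "Area/City"; fixed offsets look like "+HH:MM" or "-HH:MM".
# Both patterns are simplified assumptions for this example.
REGION_ID = re.compile(r"^[A-Za-z_]+(/[A-Za-z_\-+0-9]+)+$")
FIXED_OFFSET = re.compile(r"^[+-](0\d|1[0-8]):[0-5]\d$")


def classify_time_zone(value):
    """Return which of the two documented forms a time zone string uses."""
    if REGION_ID.match(value):
        return "region"
    if FIXED_OFFSET.match(value):
        return "offset"
    return "other"


for candidate in ["America/Los_Angeles", "-08:00", "CST"]:
    print(candidate, "->", classify_time_zone(candidate))
```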

### Why are the changes needed?

Other formats such as three-letter time zone IDs are ambiguous, and depend on the locale. For example, `CST` could be either U.S. `Central Standard Time` or `China Standard Time`. Such formats have already been deprecated in the JDK, see [Three-letter time zone IDs](https://docs.oracle.com/javase/8/docs/api/java/util/TimeZone.html).

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running `./dev/scalastyle`, and manual testing.

Closes #28051 from MaxGekk/doc-time-zone-option.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Based on the discussion in the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance costs are relatively small.

- functions.toDegrees/toRadians

- functions.approxCountDistinct

- functions.monotonicallyIncreasingId

- Column.!==

- Dataset.explode

- Dataset.registerTempTable

- SQLContext.getOrCreate, setActive, clearActive, constructors

Below are the other APIs that were removed in the original PR [https://issues.apache.org/jira/browse/SPARK-25908] but are not added back in this PR:

- Remove some AccumulableInfo .apply() methods

- Remove non-label-specific multiclass precision/recall/fScore in favor of accuracy

- Remove unused Python StorageLevel constants

- Remove unused multiclass option in libsvm parsing

- Remove references to deprecated spark configs like spark.yarn.am.port

- Remove TaskContext.isRunningLocally

- Remove ShuffleMetrics.shuffle* methods

- Remove BaseReadWrite.context in favor of session

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

Added a new test suite for these APIs.

Author: gatorsmile <gatorsmile@gmail.com>

Author: yi.wu <yi.wu@databricks.com>

Closes #27821 from gatorsmile/addAPIBackV2.

### What changes were proposed in this pull request?

The link for `partition discovery` is malformed because, for releases, the full URL contains `/docs/<version>/`.

### Why are the changes needed?

fix doc

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

`SKIP_SCALADOC=1 SKIP_RDOC=1 SKIP_SQLDOC=1 jekyll serve` locally verified

Closes #28017 from yaooqinn/doc.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

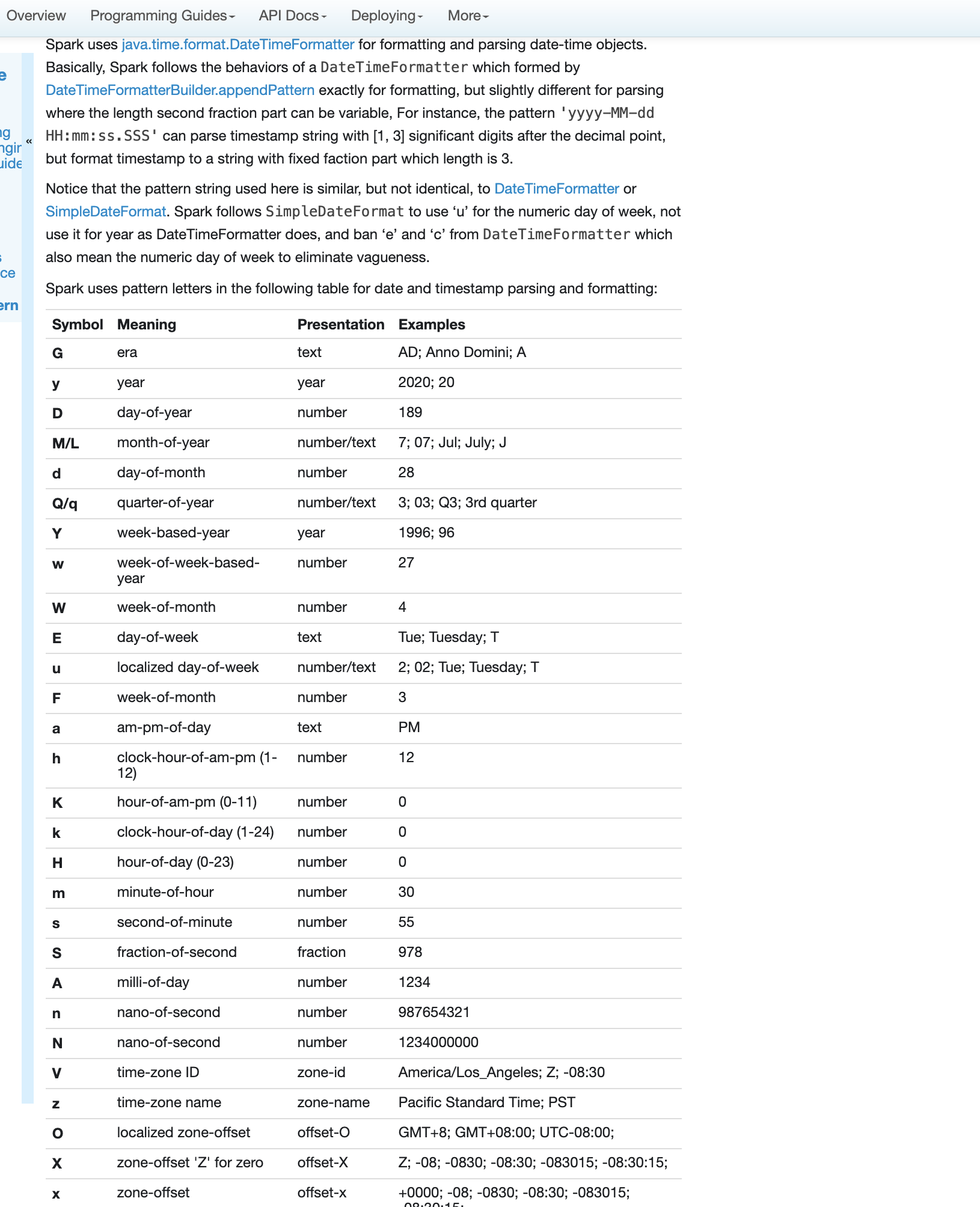

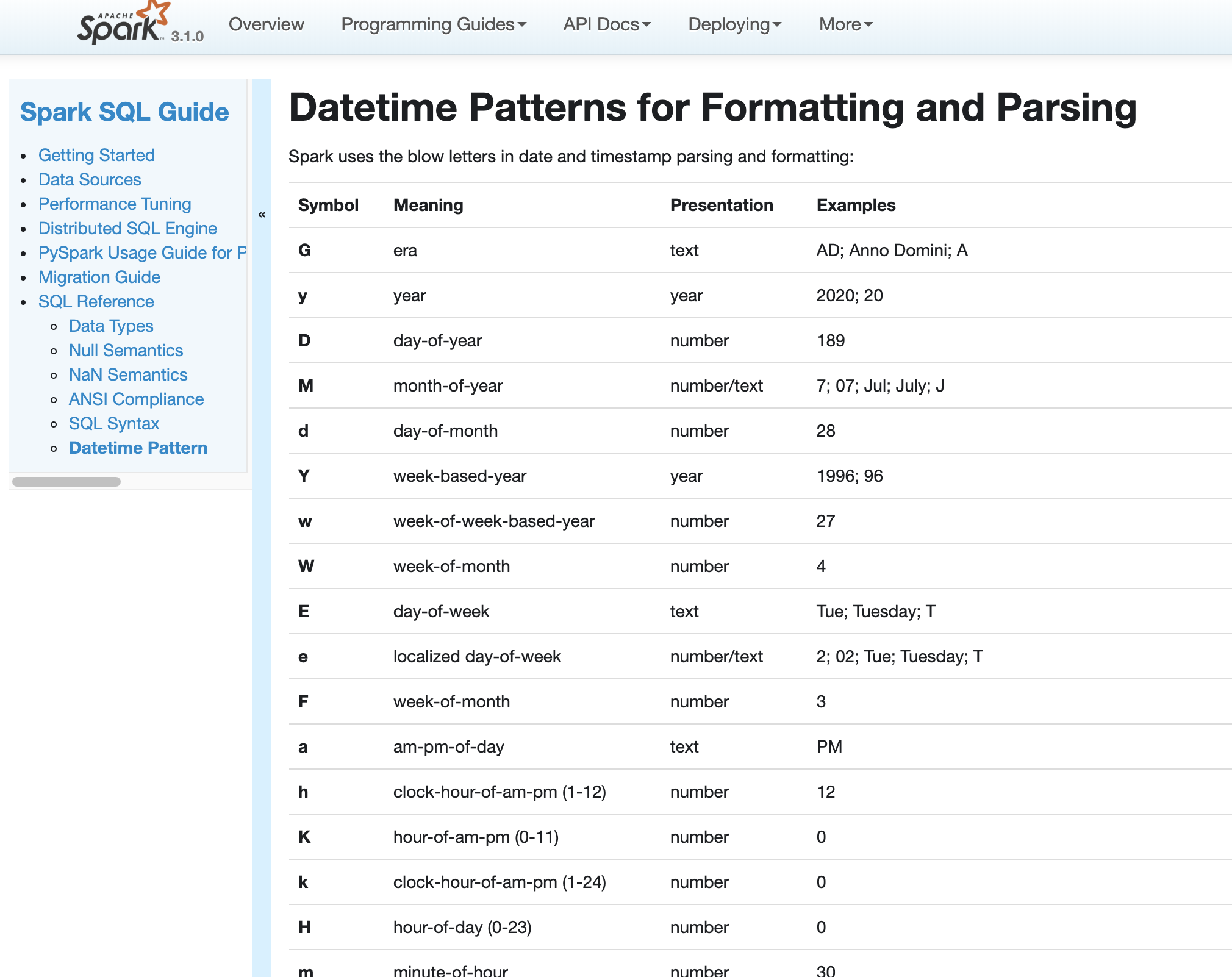

### What changes were proposed in this pull request?

Fix errors and missing parts for datetime pattern document

1. The pattern we use is similar to DateTimeFormatter and SimpleDateFormat but not identical. So we shouldn't use any of them in the API docs but use a link to the doc of our own.

2. Some pattern letters are missing

3. Some pattern letters are explicitly banned - Set('A', 'c', 'e', 'n', 'N')

4. The second-fraction pattern uses different logic for parsing and formatting
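A check for the banned letters in item 3 could look like the sketch below. It is only an illustration: `find_banned_letters` is a hypothetical helper, and treating single quotes as delimiters of literal text is an assumption borrowed from `DateTimeFormatter`-style patterns.

```python
# The banned set is taken from item 3 above.
BANNED_LETTERS = {"A", "c", "e", "n", "N"}


def find_banned_letters(pattern):
    """Return banned pattern letters found outside single-quoted literal text."""
    found = []
    in_literal = False
    for ch in pattern:
        if ch == "'":
            in_literal = not in_literal  # a quote toggles literal text on or off
        elif not in_literal and ch in BANNED_LETTERS and ch not in found:
            found.append(ch)
    return found


print(find_banned_letters("yyyy-MM-dd"))
print(find_banned_letters("yyyy-MM-dd e"))
```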

### Why are the changes needed?

fix and improve doc

### Does this PR introduce any user-facing change?

yes, new and updated doc

### How was this patch tested?

pass Jenkins

viewed locally with `jekyll serve`

Closes #27956 from yaooqinn/SPARK-31189.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

- Adds the following overloaded variants to Scala `o.a.s.sql.functions`:

  - `percentile_approx(e: Column, percentage: Array[Double], accuracy: Long): Column`

  - `percentile_approx(columnName: String, percentage: Array[Double], accuracy: Long): Column`

  - `percentile_approx(e: Column, percentage: Double, accuracy: Long): Column`

  - `percentile_approx(columnName: String, percentage: Double, accuracy: Long): Column`

  - `percentile_approx(e: Column, percentage: Seq[Double], accuracy: Long): Column` (primarily for Python interop).

  - `percentile_approx(columnName: String, percentage: Seq[Double], accuracy: Long): Column`

- Adds `percentile_approx` to `pyspark.sql.functions`.

- Adds `percentile_approx` function to SparkR.

### Why are the changes needed?

Currently we support `percentile_approx` only in SQL expressions. This is inconvenient and makes the function relatively unknown.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New unit tests for SparkR and PySpark.

For now, there are no additional tests for the Scala API: `ApproximatePercentile` is well tested, and the Python (including docstrings) and R tests provide additional coverage, so they seem unnecessary.

Closes #27278 from zero323/SPARK-30569.

Lead-authored-by: zero323 <mszymkiewicz@gmail.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian).

Since the Proleptic Gregorian calendar is the de facto calendar worldwide, as well as the one chosen in the ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages, which are based on ISO chronology). The switch was completed in SPARK-26651.

But after the switch, some patterns are not compatible between Java 8 and Java 7, so Spark needs its own definition of the patterns rather than depending on the Java API.

In this PR, we achieve this by writing the document and shadowing the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030).

### Why are the changes needed?

For backward compatibility.

### Does this PR introduce any user-facing change?

No.

After we define our own datetime parsing and formatting patterns, the behavior is the same as in old Spark versions.

### How was this patch tested?

Existing and newly added UTs.

Locally document test:

Closes #27830 from xuanyuanking/SPARK-31030.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds a Python API for invoking the following higher-order functions:

- `transform`

- `exists`

- `forall`

- `filter`

- `aggregate`

- `zip_with`

- `transform_keys`

- `transform_values`

- `map_filter`

- `map_zip_with`

to `pyspark.sql`. Each of these accepts plain Python functions of one of the following types:

- `(Column) -> Column: ...`

- `(Column, Column) -> Column: ...`

- `(Column, Column, Column) -> Column: ...`

Internally, this proposal piggybacks on the objects supporting the Scala implementation ([SPARK-27297](https://issues.apache.org/jira/browse/SPARK-27297)) by:

1. Creating the required `UnresolvedNamedLambdaVariables` and exposing them as PySpark `Columns`.

2. Invoking the Python function with these columns as arguments.

3. Using the result, and the underlying JVM objects from 1., to create an `expressions.LambdaFunction`, which is passed to the desired expression and repacked as a Python `Column`.
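The three steps can be sketched in plain Python, without a JVM. Everything below is a hypothetical stand-in: `Expr` plays the role of a `Column`, the placeholder names `x`, `y`, `z` are chosen for the example, and the output is a SQL-style lambda string rather than a real `LambdaFunction`.

```python
import inspect


class Expr:
    """Tiny stand-in for a Column that records the expression it represents."""

    def __init__(self, sql):
        self.sql = sql

    def __add__(self, other):
        return Expr(f"({self.sql} + {_to_sql(other)})")

    def __mul__(self, other):
        return Expr(f"({self.sql} * {_to_sql(other)})")


def _to_sql(value):
    return value.sql if isinstance(value, Expr) else repr(value)


def build_lambda(f):
    """Turn a Python function of 1 to 3 arguments into a SQL-style lambda string."""
    n_params = len(inspect.signature(f).parameters)
    if not 1 <= n_params <= 3:
        raise ValueError("f should take between 1 and 3 arguments")
    # Step 1: create placeholder variables, one per parameter.
    variables = [Expr(name) for name in ["x", "y", "z"][:n_params]]
    # Step 2: invoke the Python function with the placeholders.
    body = f(*variables)
    if not isinstance(body, Expr):
        raise ValueError("f should return an Expr")
    # Step 3: combine the placeholders and the result into a lambda expression.
    params = ", ".join(v.sql for v in variables)
    head = params if n_params == 1 else f"({params})"
    return f"{head} -> {body.sql}"


single = build_lambda(lambda x: x + 1)
double = build_lambda(lambda acc, v: acc + v * 2)
print(single)
print(double)
```

The key point is that the Python function is called exactly once, with placeholders, to record an expression; it is never called per row.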

### Why are the changes needed?

Currently, higher-order functions are available only through the SQL and Scala APIs, and can use only SQL expressions:

```python
df.selectExpr("transform(values, x -> x + 1)")
```

This works reasonably well for simple functions, but can get really ugly with complex functions and casts; the resulting objects are somewhat verbose and we don't get any IDE support. Additionally, the DSL used, though very simple, is not documented.

With the changes proposed here, the above query could be rewritten as:

```python
df.select(transform("values", lambda x: x + 1))
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

- For positive cases this PR adds doctest strings covering possible usage patterns.

- For negative cases (unsupported function types) this PR adds unit tests.

### Notes

If approved, the same approach can be used in SparkR.

Closes #27406 from zero323/SPARK-30681.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This commit is published into the public domain.

### What changes were proposed in this pull request?

Some syntax issues in docstrings have been fixed.

### Why are the changes needed?

In some places, the documentation did not render as intended, e.g. parameter documentations were not formatted as such.

### Does this PR introduce any user-facing change?

Slight improvements in documentation.

### How was this patch tested?

Manual testing. No new Sphinx warnings arise due to this change.

Closes #27613 from DavidToneian/SPARK-30859.

Authored-by: David Toneian <david@toneian.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up for #23124. It adds a new config, `spark.sql.legacy.allowDuplicatedMapKeys`, to control the behavior of removing duplicated map keys in built-in functions. With the default value `false`, Spark will throw a RuntimeException when duplicated keys are found.
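The effect of the flag can be modelled in a few lines of plain Python. This is a sketch only: `build_map` and its `allow_duplicated_keys` parameter are hypothetical names, and the "last value wins" behavior when duplicates are allowed is an assumption made for the example.

```python
def build_map(keys, values, allow_duplicated_keys=False):
    """Build a dict from parallel key/value lists, policing duplicate keys."""
    result = {}
    for key, value in zip(keys, values):
        if key in result and not allow_duplicated_keys:
            # Mirrors the default: fail loudly instead of silently dropping data.
            raise RuntimeError(f"Duplicate map key {key!r} was found")
        # Assumption: with duplicates allowed, the last value wins.
        result[key] = value
    return result


print(build_map(["a", "b"], [1, 2]))
print(build_map(["a", "a"], [1, 2], allow_duplicated_keys=True))
```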

### Why are the changes needed?

Prevent silent behavior changes.

### Does this PR introduce any user-facing change?

Yes, a new config is added, and the default behavior for duplicated map keys changes to throwing a RuntimeException.

### How was this patch tested?

Modify existing UT.

Closes #27478 from xuanyuanking/SPARK-25892-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This reverts commit 1d20d13149.

Closes #27351 from gatorsmile/revertSPARK25496.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Minor documentation fix

### Why are the changes needed?

### Does this PR introduce any user-facing change?

### How was this patch tested?

Manually; consider adding tests?

Closes #27295 from deepyaman/patch-2.

Authored-by: Deepyaman Datta <deepyaman.datta@utexas.edu>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds:

- `pyspark.sql.functions.overlay` function to PySpark

- `overlay` function to SparkR

### Why are the changes needed?

Feature parity. At the moment R and Python users can access this function only using SQL or `expr` / `selectExpr`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New unit tests.

Closes #27325 from zero323/SPARK-30607.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to move pandas-related functionality into a pandas package. Namely:

```bash
pyspark/sql/pandas
├── __init__.py
├── conversion.py  # Conversion between pandas <> PySpark DataFrames
├── functions.py   # pandas_udf
├── group_ops.py   # Grouped UDF / Cogrouped UDF + groupby.apply, groupby.cogroup.apply
├── map_ops.py     # Map Iter UDF + mapInPandas
├── serializers.py # pandas <> PyArrow serializers
├── types.py       # Type utils between pandas <> PyArrow
└── utils.py       # Version requirement checks
```

In order to locate `groupby.apply`, `groupby.cogroup.apply`, `mapInPandas`, `toPandas`, and `createDataFrame(pdf)` separately under the `pandas` sub-package, I had to use a mix-in approach, which the Scala side often uses via `trait`; pandas itself also uses this approach (see `IndexOpsMixin` as an example) to group related functionality. For now, you can think of it as being like Scala's self-typed trait. See the structure below:

```python
class PandasMapOpsMixin(object):
    def mapInPandas(self, ...):
        ...
        return ...

    # other Pandas <> PySpark APIs
```

```python
class DataFrame(PandasMapOpsMixin):

    # other DataFrame APIs equivalent to Scala side.
```
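A runnable miniature of the same mix-in structure is sketched below. The names `map_in_batches` and `rows` are hypothetical and exist only for this example; the point is that the mix-in relies on state provided by the host class, much like a self-typed trait.

```python
class PandasMapOpsMixin:
    """Groups related methods; expects to be mixed into a DataFrame-like class."""

    def map_in_batches(self, func):
        # Relies on `self.rows`, which the host class provides (the "self type").
        return type(self)([func(row) for row in self.rows])


class DataFrame(PandasMapOpsMixin):
    """Core API lives here; the extra methods arrive via the mix-in."""

    def __init__(self, rows):
        self.rows = list(rows)

    def count(self):
        return len(self.rows)


df = DataFrame([1, 2, 3])
doubled = df.map_in_batches(lambda x: x * 2)
print(doubled.rows, doubled.count())
```

Callers still see a single `DataFrame` class, while the source for the grouped methods can live in its own module.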

Yes, this is a big PR, but the changes are mostly just code being moved around, except for one case, `createDataFrame`, where I had to split the methods.

### Why are the changes needed?

pandas functionality is scattered here and there, and I myself get lost finding where things are. Also, when you have to make a change that applies to all pandas-related features, it is almost impossible now.

Also, after this change, `DataFrame` and `SparkSession` become more consistent with the Scala side, since pandas is specific to Python and this change separates pandas-specific APIs from `DataFrame` and `SparkSession`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests should cover this. Also, I manually built the PySpark API documentation and checked it.

Closes #27109 from HyukjinKwon/pandas-refactoring.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds a note that `first` and `last` can be non-deterministic to the SQL function docs as well.

This is already documented in `functions.scala`.

### Why are the changes needed?

Some people read only the SQL docs.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Jenkins will test.

Closes #27099 from HyukjinKwon/SPARK-30335.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to fix documentation for slide function. Fixed the spacing issue and added some parameter related info.

### Why are the changes needed?

Documentation improvement

### Does this PR introduce any user-facing change?

No (doc-only change).

### How was this patch tested?

Manually tested by documentation build.

Closes #26896 from bboutkov/pyspark_doc_fix.

Authored-by: Boris Boutkov <boris.boutkov@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This PR adds some extra documentation for the new Cogrouped map Pandas udfs. Specifically:

- Updated the usage guide for the new `COGROUPED_MAP` Pandas udfs added in https://github.com/apache/spark/pull/24981

- Updated the docstring for pandas_udf to include the COGROUPED_MAP type as suggested by HyukjinKwon in https://github.com/apache/spark/pull/25939

Closes #26110 from d80tb7/SPARK-29126-cogroup-udf-usage-guide.

Authored-by: Chris Martin <chris@cmartinit.co.uk>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to allow `array_contains` to take column instances.

### Why are the changes needed?

For consistent support in the Scala and Python APIs. Scala allows column instances in `array_contains`.

Scala:

```scala
import org.apache.spark.sql.functions._
val df = Seq(Array("a", "b", "c"), Array.empty[String]).toDF("data")
df.select(array_contains($"data", lit("a"))).show()
```

Python:

```python
from pyspark.sql.functions import array_contains, lit
df = spark.createDataFrame([(["a", "b", "c"],), ([],)], ['data'])
df.select(array_contains(df.data, lit("a"))).show()
```

However, the PySpark side does not allow this.

### Does this PR introduce any user-facing change?

Yes.

```python
from pyspark.sql.functions import array_contains, lit
df = spark.createDataFrame([(["a", "b", "c"],), ([],)], ['data'])
df.select(array_contains(df.data, lit("a"))).show()
```

**Before:**

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/functions.py", line 1950, in array_contains
    return Column(sc._jvm.functions.array_contains(_to_java_column(col), value))
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1277, in __call__
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1241, in _build_args
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1228, in _get_args
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_collections.py", line 500, in convert
  File "/.../spark/python/pyspark/sql/column.py", line 344, in __iter__
    raise TypeError("Column is not iterable")
TypeError: Column is not iterable
```

**After:**

```
+-----------------------+
|array_contains(data, a)|
+-----------------------+
|                   true|
|                  false|
+-----------------------+
```

### How was this patch tested?

Manually tested and added a doctest.

Closes #26288 from HyukjinKwon/SPARK-29627.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR makes `element_at` in PySpark able to take PySpark `Column` instances.

### Why are the changes needed?

To match the Scala side. It seems this was intended but did not work correctly because of a bug.

### Does this PR introduce any user-facing change?

Yes. See below:

```python
from pyspark.sql import functions as F
x = spark.createDataFrame([([1,2,3],1),([4,5,6],2),([7,8,9],3)],['list','num'])
x.withColumn('aa',F.element_at('list',x.num.cast('int'))).show()
```

Before:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/.../spark/python/pyspark/sql/functions.py", line 2059, in element_at
    return Column(sc._jvm.functions.element_at(_to_java_column(col), extraction))
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1277, in __call__
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1241, in _build_args
  File "/.../spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_gateway.py", line 1228, in _get_args
  File "/.../forked/spark/python/lib/py4j-0.10.8.1-src.zip/py4j/java_collections.py", line 500, in convert
  File "/.../spark/python/pyspark/sql/column.py", line 344, in __iter__
    raise TypeError("Column is not iterable")
TypeError: Column is not iterable
```

After:

```
+---------+---+---+
|     list|num| aa|
+---------+---+---+
|[1, 2, 3]|  1|  1|
|[4, 5, 6]|  2|  5|
|[7, 8, 9]|  3|  9|
+---------+---+---+
```

### How was this patch tested?

Manually tested against literal, Python native types, and PySpark column.

Closes #25950 from HyukjinKwon/SPARK-29240.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Added "array indices start at 1" to the annotation to clarify the usage of the `slice` function in the R, Scala, and Python components.

### Why are the changes needed?

It throws an exception if the value of `start` is 0, but array indices start at 0 in most other scenarios.
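The 1-based convention can be emulated in plain Python, as in the sketch below. `slice_one_based` is a hypothetical helper written for this example; only the "start at 1, error on 0" rule comes from the text above, while the handling of negative `start` and of `length` is an assumption for illustration.

```python
def slice_one_based(values, start, length):
    """Emulate a 1-based slice: start=1 is the first element."""
    if start == 0:
        raise ValueError("array indices start at 1")
    if length < 0:
        raise ValueError("length must be greater than or equal to 0")
    # Translate the 1-based (or negative, counted from the end) start
    # into a 0-based Python index.
    begin = start - 1 if start > 0 else len(values) + start
    if begin < 0:
        return []
    return values[begin:begin + length]


print(slice_one_based([1, 2, 3], 2, 2))
print(slice_one_based([4, 5], 2, 2))
```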

### Does this PR introduce any user-facing change?

Yes, more info provided to user.

### How was this patch tested?

No tests added, only doc change.

Closes #25704 from sheepstop/master.

Authored-by: sheepstop <yangting617@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The Experimental and Evolving annotations are both (like Unstable) used to express that an API may change. However, there are many things in the code that have been marked that way since even Spark 1.x. Per the dev thread, anything introduced at or before Spark 2.3.0 is pretty much 'stable' in that it would not change without a deprecation cycle. Therefore I'd like to remove most of these annotations, and remove the `:: Experimental ::` scaladoc tag too. Likewise for Python and R.

The changes below can be summarized as:

- Generally, anything introduced at or before Spark 2.3.0 is no longer marked as Evolving or Experimental

- Obviously experimental items like DSv2, Barrier mode, ExperimentalMethods are untouched

- I _did_ unmark a few MLlib classes introduced in 2.4, as I am quite confident they're not going to change (e.g. KolmogorovSmirnovTest, PowerIterationClustering)

It's a big change to review, so I'd suggest scanning the list of _files_ changed to see if any area seems like it should remain partly experimental and examine those.

### Why are the changes needed?

Many of these annotations are incorrect; the APIs are de facto stable. Leaving them also makes legitimate usages of the annotations less meaningful.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25558 from srowen/SPARK-28855.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

The parameter docstring of the function `format_string` was changed from _col_, _d_ to _format_, _cols_, which is what the actual function declaration states.

### Why are the changes needed?

The parameters stated in the documentation were inaccurate.

### Does this PR introduce any user-facing change?

Yes.

**BEFORE**

**AFTER**

### How was this patch tested?

N/A: documentation only

Closes #25506 from darrentirto/SPARK-28777.

Authored-by: darrentirto <darrentirto@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

In the PR, I propose to use `uuuu` for years instead of `yyyy` in date/timestamp patterns without the era pattern `G` (https://docs.oracle.com/javase/8/docs/api/java/time/format/DateTimeFormatter.html). **Parsing/formatting of positive years (current era) will be the same.** The difference is in the formatting of negative years, which belong to the previous era, BC (Before Christ).

I replaced the `yyyy` pattern by `uuuu` everywhere except:

1. Test, Suite & Benchmark. Existing tests must work as is.

2. `SimpleDateFormat` because it doesn't support the `uuuu` pattern.

3. Comments and examples (except comments related to already replaced patterns).

Before the changes, the common-era year `100` and the BC-era year `-99` were both shown as `100`. After the changes, negative years are formatted with the `-` sign.

Before:

```Scala
scala> Seq(java.time.LocalDate.of(-99, 1, 1)).toDF().show
+----------+
|     value|
+----------+
|0100-01-01|
+----------+
```

After:

```Scala
scala> Seq(java.time.LocalDate.of(-99, 1, 1)).toDF().show
+-----------+
|      value|
+-----------+
|-0099-01-01|
+-----------+
```
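The arithmetic behind the two outputs can be sketched in plain Python. Both helpers below are hypothetical and only model the year field: `yyyy` renders the year-of-era (so the sign is lost when the era letter `G` is absent), while `uuuu` renders the signed proleptic year.

```python
def format_year_of_era(proleptic_year):
    """`yyyy`-style rendering: year-of-era, without any era marker."""
    # Proleptic year 0 is 1 BC, -1 is 2 BC, and so on.
    year_of_era = proleptic_year if proleptic_year > 0 else 1 - proleptic_year
    return f"{year_of_era:04d}"


def format_proleptic_year(proleptic_year):
    """`uuuu`-style rendering: the signed proleptic year."""
    sign = "-" if proleptic_year < 0 else ""
    return f"{sign}{abs(proleptic_year):04d}"


for year in (100, -99):
    print(year, format_year_of_era(year), format_proleptic_year(year))
```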

## How was this patch tested?

By existing test suites, and added tests for negative years to `DateFormatterSuite` and `TimestampFormatterSuite`.

Closes #25230 from MaxGekk/year-pattern-uuuu.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This adds a simple check for the `count` argument:

- If it is a `Column`, we apply `_to_java_column` before invoking the JVM counterpart

- Otherwise we proceed as before.
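The check described above can be sketched with stand-in classes, so that it runs without Spark. `Column`, `to_java_column`, and `prepare_count` below are simplified, hypothetical versions written for this example; they are not the PySpark internals.

```python
class Column:
    """Stand-in for a PySpark Column that wraps a JVM-side object."""

    def __init__(self, jc):
        self._jc = jc


def to_java_column(col):
    # Stand-in for the `_to_java_column` helper: unwrap to the JVM-side object.
    return col._jc


def prepare_count(count):
    """Convert `count` only when it is a Column; pass plain values through."""
    return to_java_column(count) if isinstance(count, Column) else count


print(prepare_count(3))
print(prepare_count(Column("jvm:n")))
```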

## How was this patch tested?

Manual testing.

Closes #25193 from zero323/SPARK-28278.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

In the PR, I propose to convert option values to strings by using `to_str()` for the following functions: `from_csv()`, `to_csv()`, `from_json()`, `to_json()`, `schema_of_csv()` and `schema_of_json()`. This will make the handling of function options consistent with option handling in `DataFrameReader`/`DataFrameWriter`.

For example:

```Python
df.select(from_csv(df.value, "s string", {'ignoreLeadingWhiteSpace': True}))
```
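A sketch of what such a `to_str()` conversion might look like is shown below. The function body is an assumption written for illustration (booleans become lowercase strings, `None` passes through, everything else goes through `str`), and the option values are made up for the example.

```python
def to_str(value):
    """Convert an option value to a string; None is passed through unchanged."""
    if isinstance(value, bool):
        return str(value).lower()  # True -> "true", False -> "false"
    if value is None:
        return value
    return str(value)


# Example option values, chosen only for this illustration.
options = {"ignoreLeadingWhiteSpace": True, "sep": ",", "maxColumns": 10}
converted = {key: to_str(value) for key, value in options.items()}
print(converted)
```

The boolean case is checked first because `str(True)` would otherwise give `"True"` with a capital letter.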

## How was this patch tested?

Added an example for `from_csv()` which was tested by:

```Shell

./python/run-tests --testnames pyspark.sql.functions

```

Closes #25182 from MaxGekk/options_to_str.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR proposes to rename `mapPartitionsInPandas` to `mapInPandas` with a separate evaluation type.

Had an offline discussion with rxin, mengxr and cloud-fan.

The reason is basically:

1. `SCALAR_ITER` doesn't make sense with `mapPartitionsInPandas`.

2. It cannot share the same Pandas UDF between, for instance, `select` and `mapPartitionsInPandas`, unlike `GROUPED_AGG`, because the iterator's return type is different.

3. `mapPartitionsInPandas` -> `mapInPandas` - see https://github.com/apache/spark/pull/25044#issuecomment-508298552 and https://github.com/apache/spark/pull/25044#issuecomment-508299764

Renaming `SCALAR_ITER` to `MAP_ITER` was abandoned because of reason 2.

For `XXX_ITER`, it might need a different interface in the future if we happen to add other versions of them. But this is orthogonal to `mapPartitionsInPandas`.

## How was this patch tested?

Existing tests should cover this.

Closes #25044 from HyukjinKwon/SPARK-28198.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

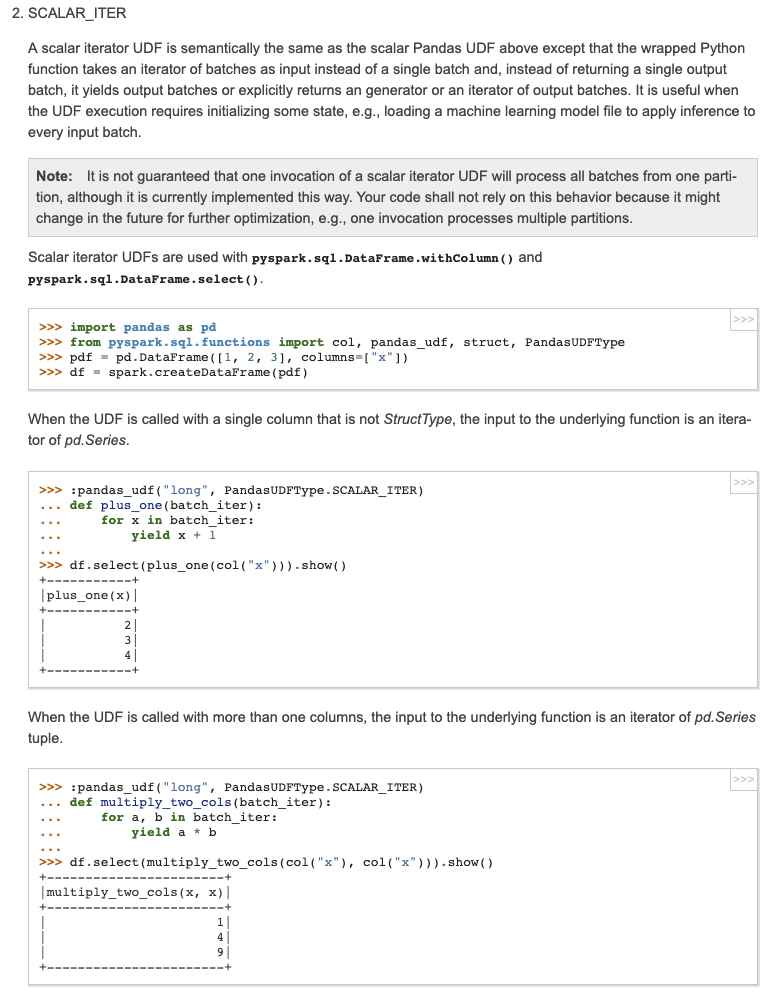

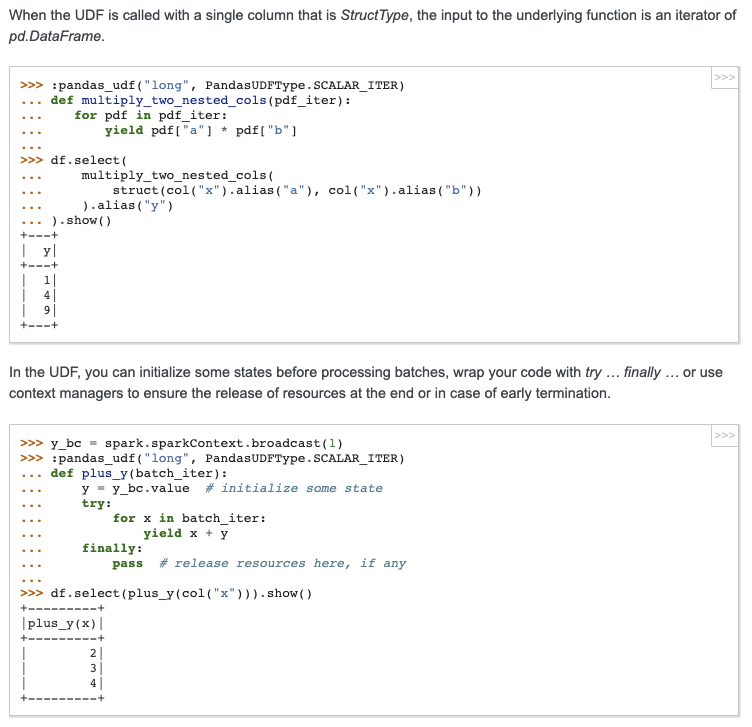

## What changes were proposed in this pull request?

Add docstring/doctest for `SCALAR_ITER` Pandas UDF. I explicitly mentioned that per-partition execution is an implementation detail, not guaranteed. I will submit another PR to add the same to user guide, just to keep this PR minimal.

I didn't add "doctest: +SKIP" in the first commit so it is easy to test locally.

cc: HyukjinKwon gatorsmile icexelloss BryanCutler WeichenXu123

## How was this patch tested?

doctest

Closes #25005 from mengxr/SPARK-28056.2.

Authored-by: Xiangrui Meng <meng@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>