These options were used to configure the built-in JRE SSL libraries

when downloading files from HTTPS servers. But because they were also

used to set up the now (long) removed internal HTTPS file server,

their default configuration chose convenience over security by having

overly lenient settings.

This change removes the configuration options that affect the JRE SSL

libraries. The JRE trust store can still be configured via system

properties (or globally in the JRE security config). The only lost

functionality is not being able to disable the default hostname

verifier when using spark-submit, which should be fine since Spark

itself is not using https for any internal functionality anymore.

I also removed the HTTP-related code from the REPL class loader, since

we haven't had a HTTP server for REPL-generated classes for a while.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20723 from vanzin/SPARK-23538.

## What changes were proposed in this pull request?

Before this commit, a non-interruptible iterator was returned if an aggregator or ordering was specified.

This commit also ensures that the sorter is closed even when the task is cancelled (killed) in the middle of sorting.

## How was this patch tested?

Add a unit test in JobCancellationSuite

Author: Xianjin YE <advancedxy@gmail.com>

Closes #20449 from advancedxy/SPARK-23040.

## What changes were proposed in this pull request?

The algorithm in `DefaultPartitionCoalescer.setupGroups` is responsible for picking preferred locations for coalesced partitions. It analyzes the preferred locations of input partitions. It starts by trying to create one partition for each unique location in the input. However, if the requested number of coalesced partitions is higher than the number of unique locations, it has to pick duplicate locations.

Previously, the duplicate locations would be picked by iterating over the input partitions in order, and copying their preferred locations to coalesced partitions. If the input partitions were clustered by location, this could result in severe skew.

With the fix, instead of iterating over the list of input partitions in order, we pick them at random. It's not perfectly balanced, but it's much better.
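The difference between the two strategies can be sketched in a few lines. This is only an illustration, not the code in `DefaultPartitionCoalescer`: the class and method names are hypothetical, and preferred locations are reduced to plain host-name strings.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical sketch; locations are modelled as host names only.
final class DuplicateLocationSketch {
    // Old approach: copy locations from the input partitions in their given order.
    static List<String> pickInOrder(List<String> inputLocations, int count) {
        List<String> picked = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            picked.add(inputLocations.get(i % inputLocations.size()));
        }
        return picked;
    }

    // New approach: sample the input partitions at random.
    static List<String> pickAtRandom(List<String> inputLocations, int count, Random rnd) {
        List<String> picked = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            picked.add(inputLocations.get(rnd.nextInt(inputLocations.size())));
        }
        return picked;
    }

    public static void main(String[] args) {
        // Input partitions clustered by location: 100 on hostA, then 100 on hostB.
        List<String> input = new ArrayList<>();
        for (int i = 0; i < 100; i++) input.add("hostA");
        for (int i = 0; i < 100; i++) input.add("hostB");
        System.out.println(pickInOrder(input, 10));
        System.out.println(pickAtRandom(input, 10, new Random()));
    }
}
```

With clustered input, `pickInOrder` only ever sees the first cluster, which is the skew described above; random sampling spreads the picks across clusters on average.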

## How was this patch tested?

Unit test reproducing the behavior was added.

Author: Ala Luszczak <ala@databricks.com>

Closes #20664 from ala/SPARK-23496.

## What changes were proposed in this pull request?

When the shuffle dependency specifies aggregation and `dependency.mapSideCombine=false`, there is no need for aggregation and sorting on the map side, so we should be able to use serialized sorting.

## How was this patch tested?

Existing unit test

Author: liuxian <liu.xian3@zte.com.cn>

Closes #20576 from 10110346/mapsidecombine.

The ExecutorAllocationManager should not adjust the target number of

executors when killing idle executors, as it has already adjusted the

target number down based on the task backlog.

The name `replace` was misleading with DynamicAllocation on, as the target number

of executors is changed outside of the call to `killExecutors`, so I adjusted that name. Also separated out the logic of `countFailures` as you don't always want that tied to `replace`.

While I was there I made two changes that weren't directly related to this:

1) Fixed `countFailures` in a couple of cases where it was getting an incorrect value since it used to be tied to `replace`, e.g. when killing executors on a blacklisted node.

2) Hard error if you call `sc.killExecutors` with dynamic allocation on, since that's another way the ExecutorAllocationManager and the CoarseGrainedSchedulerBackend would get out of sync.

Added a unit test case which verifies that the calls to ExecutorAllocationClient do not adjust the number of executors.

Author: Imran Rashid <irashid@cloudera.com>

Closes #20604 from squito/SPARK-23365.

## What changes were proposed in this pull request?

If Spark is run with "spark.authenticate=true", then it will fail to start in local mode.

This PR generates a secret in local mode when authentication is on.

## How was this patch tested?

Modified existing unit test.

Manually started spark-shell.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #20652 from gaborgsomogyi/SPARK-23476.

## What changes were proposed in this pull request?

SPARK-20648 introduced the status `SKIPPED` for the stages. On the UI, previously, skipped stages were shown as `PENDING`; after this change, they are not shown on the UI.

This PR introduces a new section that also shows `SKIPPED` stages in a proper table.

## How was this patch tested?

manual tests

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20651 from mgaido91/SPARK-23475.

## What changes were proposed in this pull request?

The root cause of missing completed stages is that `cleanupStages` will never remove skipped stages.

This PR changes the logic to always remove skipped stages first. This is safe since the job itself contains enough information to render skipped stages in the UI.

## How was this patch tested?

The new unit tests.

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20656 from zsxwing/SPARK-23475.

## What changes were proposed in this pull request?

The issue here is that `AppStatusStore.lastStageAttempt` will return the next available stage in the store when a stage doesn't exist.

This PR adds `last(stageId)` to ensure it returns a correct `StageData`.

## How was this patch tested?

The new unit test.

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20654 from zsxwing/SPARK-23481.

## What changes were proposed in this pull request?

Fixes the exception thrown when sorting tasks by Host / Executor ID:

```
java.lang.IllegalArgumentException: Invalid sort column: Host
    at org.apache.spark.ui.jobs.ApiHelper$.indexName(StagePage.scala:1017)
    at org.apache.spark.ui.jobs.TaskDataSource.sliceData(StagePage.scala:694)
    at org.apache.spark.ui.PagedDataSource.pageData(PagedTable.scala:61)
    at org.apache.spark.ui.PagedTable$class.table(PagedTable.scala:96)
    at org.apache.spark.ui.jobs.TaskPagedTable.table(StagePage.scala:708)
    at org.apache.spark.ui.jobs.StagePage.liftedTree1$1(StagePage.scala:293)
    at org.apache.spark.ui.jobs.StagePage.render(StagePage.scala:282)
    at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)
    at org.apache.spark.ui.WebUI$$anonfun$2.apply(WebUI.scala:82)
    at org.apache.spark.ui.JettyUtils$$anon$3.doGet(JettyUtils.scala:90)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)
    at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)
    at org.spark_project.jetty.servlet.ServletHolder.handle(ServletHolder.java:848)
    at org.spark_project.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:584)
```

In addition, some refactoring avoids similar problems by introducing constants for each header name and reusing them when identifying the corresponding sorting index.

## How was this patch tested?

Manually:

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Closes #20601 from attilapiros/SPARK-23413.

## What changes were proposed in this pull request?

`ReadAheadInputStream` was introduced in https://github.com/apache/spark/pull/18317/ to optimize reading spill files from disk.

However, from the profiles it seems that the hot path of reading small amounts of data (like readInt) is inefficient - it involves taking locks, and multiple checks.

Optimize locking: Lock is not needed when simply accessing the active buffer. Only lock when needing to swap buffers or trigger async reading, or get information about the async state.

Optimize short-path single byte reads, that are used e.g. by Java library DataInputStream.readInt.

The asyncReader used to call "read" only once on the underlying stream, which never filled the underlying buffer when it was wrapping an LZ4BlockInputStream. If the buffer was returned unfilled, the async reader would be triggered to fill the read-ahead buffer on each call, because the reader would see that the active buffer was below the refill threshold all the time.

However, filling the full buffer all the time could introduce increased latency, so also add an `AtomicBoolean` flag for the async reader to return earlier if there is a reader waiting for data.
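The fill loop described in the last two paragraphs might look roughly like the following. It is a simplified sketch rather than the code in `ReadAheadInputStream`: the class, the `fill` method and the `chunked` helper are hypothetical names, and the locking and buffer swapping of the real class are left out.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical sketch of an async fill loop; not the actual Spark implementation.
final class ReadAheadFillSketch {
    // Keep reading until the buffer is full, the stream ends, or a reader is waiting for data.
    // Returns the number of bytes placed in the buffer.
    static int fill(InputStream in, byte[] buf, AtomicBoolean readerWaiting) throws IOException {
        int off = 0;
        while (off < buf.length) {
            int n = in.read(buf, off, buf.length - off);
            if (n <= 0) break;               // end of stream
            off += n;
            if (readerWaiting.get()) break;  // hand over early to reduce reader latency
        }
        return off;
    }

    // Helper for experiments: a stream that returns at most `chunk` bytes per read call,
    // similar to a block-oriented stream that never fills a large buffer in one call.
    static InputStream chunked(byte[] data, int chunk) {
        ByteArrayInputStream src = new ByteArrayInputStream(data);
        return new InputStream() {
            @Override public int read() { return src.read(); }
            @Override public int read(byte[] b, int off, int len) {
                return src.read(b, off, Math.min(len, chunk));
            }
        };
    }
}
```

With a 4-byte chunked source and an 8-byte buffer, a single `read` call would leave the buffer half empty, while `fill` tops it up unless the waiting flag is set.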

Remove `readAheadThresholdInBytes` and instead immediately trigger an async read when switching the buffers. This simplifies the code paths, especially the hot one, which then only has to check whether there is data available in the active buffer, without worrying about whether it needs to retrigger an async read. It seems to have a positive effect on performance.

## How was this patch tested?

It was noticed as a regression in some workloads after upgrading to Spark 2.3.

It was particularly visible on TPCDS Q95 running on instances with fast disk (i3 AWS instances).

Running with profiling:

* Spark 2.2 - 5.2-5.3 minutes 9.5% in LZ4BlockInputStream.read

* Spark 2.3 - 6.4-6.6 minutes 31.1% in ReadAheadInputStream.read

* Spark 2.3 + fix - 5.3-5.4 minutes 13.3% in ReadAheadInputStream.read - very slightly slower, practically within noise.

We didn't see other regressions, and many workloads in general seem to be faster with Spark 2.3 (not investigated whether thanks to the async reader, or unrelated).

Author: Juliusz Sompolski <julek@databricks.com>

Closes #20555 from juliuszsompolski/SPARK-23366.

## What changes were proposed in this pull request?

The purpose of this PR is to reflect stage-level blacklisting on the executor tab for the currently active stages.

After this change, the Status column of the executor tab shows one of the following labels:

- "Blacklisted" when the executor is blacklisted at the application level (old flag)

- "Dead" when the executor is not Blacklisted and not Active

- "Blacklisted in Stages: [...]" when the executor is Active but there are active blacklisted stages for the executor. The active stage IDs are listed within the [], separated by commas.

- "Active" when the executor is Active and there are no active blacklisted stages for the executor

## How was this patch tested?

Both with unit tests and manually.

#### Manual test

Spark was started as:

```bash

bin/spark-shell --master "local-cluster[2,1,1024]" --conf "spark.blacklist.enabled=true" --conf "spark.blacklist.stage.maxFailedTasksPerExecutor=1" --conf "spark.blacklist.application.maxFailedTasksPerExecutor=10"

```

And the job was:

```scala
import org.apache.spark.SparkEnv

val pairs = sc.parallelize(1 to 10000, 10).map { x =>
  if (SparkEnv.get.executorId.toInt == 0) throw new RuntimeException("Bad executor")
  else {
    Thread.sleep(10)
    (x % 10, x)
  }
}

val all = pairs.cogroup(pairs)

all.collect()
```

UI screenshots of the run:

- One executor is blacklisted in the two stages:

- One stage completes while the other one is still running:

- Both stages are completed:

#### Unit tests

In AppStatusListenerSuite.scala both the node blacklisting for a stage and the executor blacklisting for a stage are tested.

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Closes #20408 from attilapiros/SPARK-23189.

## What changes were proposed in this pull request?

In this PR, StorageStatus is made private and simplified a bit, and the SparkContext.getExecutorStorageStatus method is removed. StorageStatus is kept because it is used by SparkContext.getRDDStorageInfo.

In place of SparkContext.getExecutorStorageStatus, executor infos are extended with additional memory metrics such as usedOnHeapStorageMemory, usedOffHeapStorageMemory, totalOnHeapStorageMemory and totalOffHeapStorageMemory.

## How was this patch tested?

By running existing unit tests.

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Author: Attila Zsolt Piros <2017933+attilapiros@users.noreply.github.com>

Closes #20546 from attilapiros/SPARK-20659.

## What changes were proposed in this pull request?

Currently, Spark unit tests run through SBT and Maven are affected by the `spark.testing` parameter. However, when using an IDE test tool, `spark.testing` is not well supported, and it sometimes needs to be added manually in `beforeEach`, for example in HiveSparkSubmitSuite, RPackageUtilsSuite and SparkSubmitSuite. This PR unifies the extraction of the `spark.testing` parameter in SparkFunSuite, which supports IDE test tools and makes the test code more compact.

## How was this patch tested?

The existing test cases.

Author: caoxuewen <cao.xuewen@zte.com.cn>

Closes #20582 from heary-cao/sparktesting.

## What changes were proposed in this pull request?

When `DebugFilesystem` closes an open stream and an exception occurs, we still need to remove the open stream record from `DebugFilesystem`. Otherwise, it reports a leaked filesystem connection.
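A minimal sketch of the pattern, assuming a simple registry of open streams: the `OpenStreamRegistry` class and its methods are hypothetical and only illustrate the try/finally shape, not the actual `DebugFilesystem` code.

```java
import java.io.Closeable;
import java.io.IOException;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical registry that tracks streams which have been opened but not yet closed.
final class OpenStreamRegistry {
    private final Set<Closeable> open = ConcurrentHashMap.newKeySet();

    void register(Closeable stream) {
        open.add(stream);
    }

    // Close the stream and always drop its record, even if close() throws.
    void close(Closeable stream) throws IOException {
        try {
            stream.close();
        } finally {
            open.remove(stream);
        }
    }

    int openCount() {
        return open.size();
    }
}
```

The exception from `close()` still propagates to the caller; the `finally` block only guarantees that the stream is no longer counted as leaked.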

## How was this patch tested?

Existing tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20524 from viirya/SPARK-23345.

## What changes were proposed in this pull request?

When a task is still running, metrics like executorRunTime are not available. Then `schedulerDelay` will be almost the same as `duration` and that's confusing.

This PR makes `schedulerDelay` return 0 when the task is running which is the same behavior as 2.2.
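As a rough illustration of that rule, the sketch below returns 0 for a running task and otherwise subtracts the known time components from the duration. The class name, the parameter list and the simplified formula are assumptions made for this example; they are not the signature or the exact computation used in Spark.

```java
// Hypothetical, simplified model of the scheduler delay computation.
final class SchedulerDelaySketch {
    static long schedulerDelay(boolean finished, long duration, long runTime,
                               long deserializeTime, long serializeTime) {
        // Metrics such as the run time are not available while the task is running,
        // so report no delay instead of a value close to the full duration.
        if (!finished) {
            return 0L;
        }
        // Whatever part of the duration is not explained by the known components,
        // clamped so that it never goes negative.
        return Math.max(0L, duration - runTime - deserializeTime - serializeTime);
    }
}
```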

## How was this patch tested?

`AppStatusUtilsSuite.schedulerDelay`

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20493 from zsxwing/SPARK-23326.

## What changes were proposed in this pull request?

Sort jobs/stages/tasks/queries by their completion timestamp before cleaning them up, to make the behavior consistent with 2.2.

## How was this patch tested?

- Jenkins.

- Manually ran the following code and checked the UI for jobs/stages/tasks/queries.

```

spark.ui.retainedJobs 10

spark.ui.retainedStages 10

spark.sql.ui.retainedExecutions 10

spark.ui.retainedTasks 10

```

```
new Thread() {
  override def run() {
    spark.range(1, 2).foreach { i =>
      Thread.sleep(10000)
    }
  }
}.start()

Thread.sleep(5000)

for (_ <- 1 to 20) {
  new Thread() {
    override def run() {
      spark.range(1, 2).foreach { i =>
      }
    }
  }.start()
}

Thread.sleep(15000)

spark.range(1, 2).foreach { i =>
}

sc.makeRDD(1 to 100, 100).foreach { i =>
}
```

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20481 from zsxwing/SPARK-23307.

## What changes were proposed in this pull request?

The shuffle index temporary file is used for atomically creating the shuffle index file. It is not needed when the index file already exists because another attempt of the same task has already created it.

## How was this patch tested?

Existing unit tests.

cc squito

Author: Kent Yao <yaooqinn@hotmail.com>

Closes #20422 from yaooqinn/SPARK-23253.

First the bad news: there's an unfixable race in the launcher code.

(By unfixable I mean it would take a lot more effort than this change

to fix it.) The good news is that it should only affect super short

lived applications, such as the one run by the flaky test, so it's

possible to work around it in our test.

The fix also uncovered an issue with the recently added "closeAndWait()"

method; closing the connection would still possibly cause data loss,

so this change waits a while for the connection to finish itself, and

closes the socket if that times out. The existing connection timeout

is reused so that if desired it's possible to control how long to wait.

As part of that I also restored the old behavior that disconnect() would

force a disconnection from the child app; the "wait for data to arrive"

approach is only taken when disposing of the handle.

I tested this by inserting a bunch of sleeps in the test and the socket

handling code in the launcher library; with those I was able to reproduce

the error from the jenkins jobs. With the changes, even with all the

sleeps still in place, all tests pass.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20462 from vanzin/SPARK-23020.

## What changes were proposed in this pull request?

`channel.write(buf)` may not write the whole buffer since the underlying channel is a FileChannel, so we should retry until the whole buffer is written.
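The retry loop is the usual NIO idiom, shown here as a standalone sketch. The `ChannelWrites` and `ThrottledChannel` names are made up for this example; `ThrottledChannel` only exists to simulate a channel that accepts a few bytes per call.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;

final class ChannelWrites {
    // A single write() call may consume only part of the buffer, so loop until it is drained.
    static void writeFully(WritableByteChannel channel, ByteBuffer buf) throws IOException {
        while (buf.hasRemaining()) {
            channel.write(buf);
        }
    }
}

// Hypothetical test channel that writes at most `maxPerCall` bytes per write() call.
final class ThrottledChannel implements WritableByteChannel {
    private final ByteArrayOutputStream sink = new ByteArrayOutputStream();
    private final int maxPerCall;
    int calls = 0;

    ThrottledChannel(int maxPerCall) {
        this.maxPerCall = maxPerCall;
    }

    @Override
    public int write(ByteBuffer src) {
        calls++;
        int n = Math.min(maxPerCall, src.remaining());
        for (int i = 0; i < n; i++) {
            sink.write(src.get());
        }
        return n;
    }

    @Override
    public boolean isOpen() {
        return true;
    }

    @Override
    public void close() {
    }

    byte[] written() {
        return sink.toByteArray();
    }
}
```

Writing a 10-byte buffer through `new ThrottledChannel(3)` takes four `write` calls; a single call would have silently dropped the last seven bytes.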

## How was this patch tested?

Jenkins

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #20461 from zsxwing/SPARK-23289.

The JVM seems to be doing early binding of classes that the Hive provider

depends on, causing an error to be thrown before it was caught by the code

in the class.

The fix wraps the creation of the provider in a try..catch so that

the provider can be ignored when dependencies are missing.
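The shape of the fix can be sketched as follows. The `ProviderLoader` class is hypothetical, and catching `LinkageError` is an assumption chosen for this example (a missing class typically surfaces as `NoClassDefFoundError`, which is a `LinkageError`); the exact set of exceptions caught by the real change may differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical sketch: create each provider defensively and skip the ones that cannot load.
final class ProviderLoader {
    static <T> List<T> loadAvailable(List<Supplier<T>> factories) {
        List<T> loaded = new ArrayList<>();
        for (Supplier<T> factory : factories) {
            try {
                // Class loading and linking happen here, before any code inside the
                // provider has a chance to catch a missing-dependency error itself.
                loaded.add(factory.get());
            } catch (LinkageError e) {
                // Dependencies are missing on the classpath; ignore this provider.
            }
        }
        return loaded;
    }
}
```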

Added a unit test (which fails without the fix), and also tested

that getting tokens still works in a real cluster.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20399 from vanzin/SPARK-23209.

## What changes were proposed in this pull request?

Currently shuffle repartition uses RoundRobinPartitioning, and the generated result is nondeterministic since the order of input rows is not determined.

The bug can be triggered when there is a repartition call following a shuffle (which would lead to non-deterministic row ordering), as the pattern shows below:

upstream stage -> repartition stage -> result stage

(-> indicates a shuffle)

When one of the executor processes goes down, some tasks of the repartition stage will be retried and generate an inconsistent ordering, and some tasks of the result stage will be retried and generate different data.

The following code returns 931532, instead of 1000000:

```
import scala.sys.process._

import org.apache.spark.TaskContext

val res = spark.range(0, 1000 * 1000, 1).repartition(200).map { x =>
  x
}.repartition(200).map { x =>
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 2) {
    throw new Exception("pkill -f java".!!)
  }
  x
}

res.distinct().count()
```

In this PR, we propose the most straightforward way to fix this problem: performing a local sort before partitioning. Once the input row ordering is deterministic, the function from rows to partitions is fully deterministic too.
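Why sorting helps can be seen in a toy model of round-robin assignment. The sketch below is a simplification with hypothetical names: rows are plain integers, the sort uses their natural order, and the assignment always starts at partition 0, none of which is claimed to match the real implementation.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Toy model: round-robin assignment of rows to partitions, optionally after a local sort.
final class RoundRobinSketch {
    static List<List<Integer>> roundRobin(List<Integer> rows, int numPartitions, boolean sortFirst) {
        List<Integer> input = new ArrayList<>(rows);
        if (sortFirst) {
            // A deterministic input order makes the row-to-partition mapping deterministic.
            Collections.sort(input);
        }
        List<List<Integer>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
        for (int i = 0; i < input.size(); i++) {
            partitions.get(i % numPartitions).add(input.get(i));
        }
        return partitions;
    }
}
```

Two task attempts that receive the same rows in a different arrival order, say `[3, 1, 2, 4]` and `[4, 2, 1, 3]`, produce different partitions without the sort and identical ones with it.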

The downside of the approach is that, with the extra local sort inserted, the performance of repartition() will go down, so we add a new config named `spark.sql.execution.sortBeforeRepartition` to control whether this patch is applied. The patch is enabled by default to be safe by default, but users may choose to turn it off manually to avoid the performance regression.

This patch also changes the output row ordering of repartition(), which causes a number of test cases to fail because they compare the results directly.

## How was this patch tested?

Add unit test in ExchangeSuite.

With this patch (and `spark.sql.execution.sortBeforeRepartition` set to true), the following query returns 1000000:

```
import scala.sys.process._

import org.apache.spark.TaskContext

spark.conf.set("spark.sql.execution.sortBeforeRepartition", "true")

val res = spark.range(0, 1000 * 1000, 1).repartition(200).map { x =>
  x
}.repartition(200).map { x =>
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 2) {
    throw new Exception("pkill -f java".!!)
  }
  x
}

res.distinct().count()

res7: Long = 1000000
```

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20393 from jiangxb1987/shuffle-repartition.

## What changes were proposed in this pull request?

[Ticket](https://issues.apache.org/jira/browse/SPARK-22297)

- one of the tests seems to produce unreliable results due to execution speed variability

Since the original test was trying to connect to the test server with a `40 ms` timeout, and the test server replied after `50 ms`, the error might be produced under the following conditions:

- the test server might reply correctly after `50 ms`

- but the client only receives the timeout after `51 ms`

- this might happen if the executor has to schedule a large number of threads and delays the thread/actor responsible for watching the timeout because of high CPU load

- running an entire test suite usually puts a high load on the CPU executing the tests

## How was this patch tested?

The test's check cases remain the same and the set-up emulates the previous version's.

Author: Mark Petruska <petruska.mark@gmail.com>

Closes #19671 from mpetruska/SPARK-22297.

## What changes were proposed in this pull request?

This is a follow-up of #20297 which broke lint-java checks.

This PR fixes the lint-java issues.

```

[ERROR] src/test/java/org/apache/spark/launcher/BaseSuite.java:[21,8] (imports) UnusedImports: Unused import - java.util.concurrent.TimeUnit.

[ERROR] src/test/java/org/apache/spark/launcher/SparkLauncherSuite.java:[27,8] (imports) UnusedImports: Unused import - java.util.concurrent.TimeUnit.

```

## How was this patch tested?

Checked manually in my local environment.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #20376 from ueshin/issues/SPARK-23020/fup1.

## What changes were proposed in this pull request?

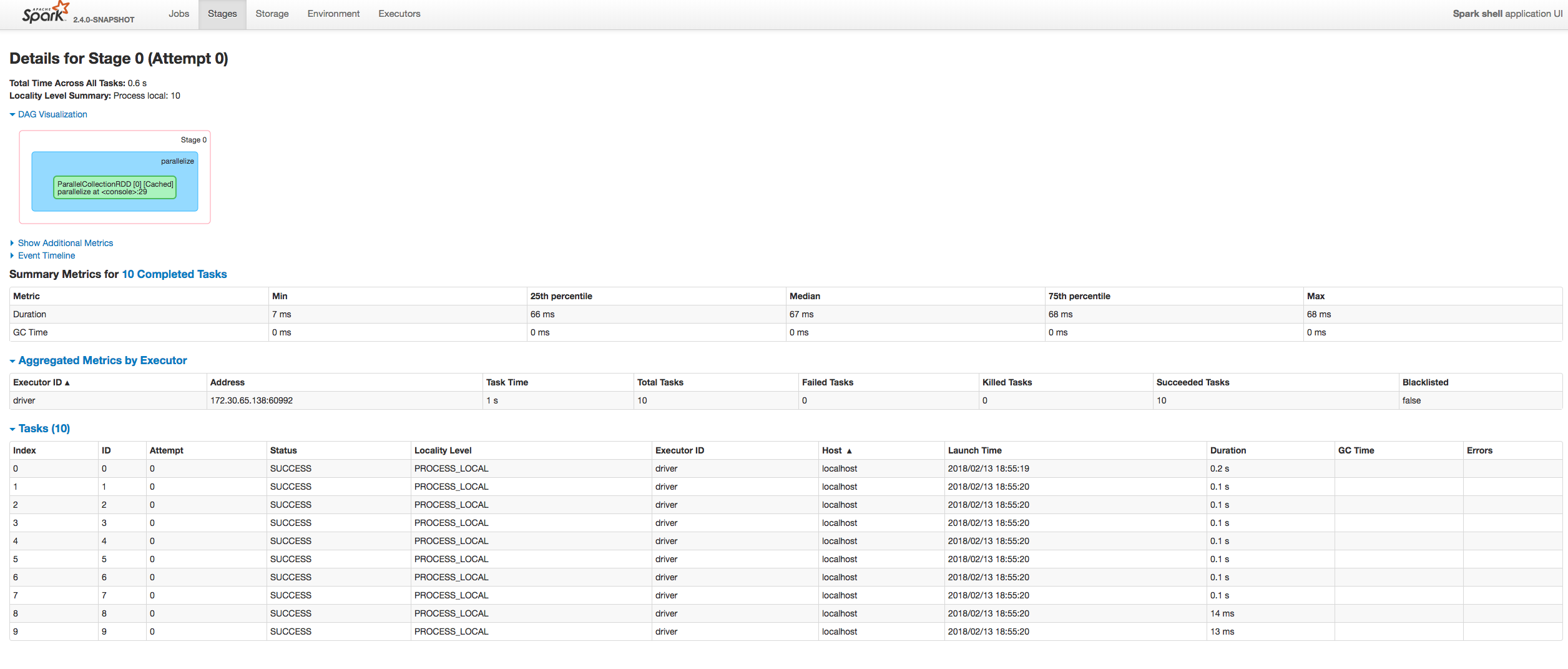

In this PR, stage blacklisting is propagated to the UI by introducing a new Spark listener event (SparkListenerExecutorBlacklistedForStage), which indicates that the executor is blacklisted for a stage, either because the number of failures exceeded the limit given for an executor (spark.blacklist.stage.maxFailedTasksPerExecutor) or because the whole node is blacklisted for a stage (spark.blacklist.stage.maxFailedExecutorsPerNode). When the node is blacklisted, all of its executors are listed as blacklisted for the stage.

The blacklisting state for a selected stage can be seen in the blacklisting column of the "Aggregated Metrics by Executor" table, where after this change three possible labels can be found:

- "for application": when the executor is blacklisted for the application (see the configuration spark.blacklist.application.maxFailedTasksPerExecutor for details)

- "for stage": when the executor is **only** blacklisted for the stage

- "false" : when the executor is not blacklisted at all

## How was this patch tested?

It is tested both manually and with unit tests.

#### Unit tests

- HistoryServerSuite

- TaskSetBlacklistSuite

- AppStatusListenerSuite

#### Manual test for executor blacklisting

Running Spark as a local cluster:

```

$ bin/spark-shell --master "local-cluster[2,1,1024]" --conf "spark.blacklist.enabled=true" --conf "spark.blacklist.stage.maxFailedTasksPerExecutor=1" --conf "spark.blacklist.application.maxFailedTasksPerExecutor=10" --conf "spark.eventLog.enabled=true"

```

Executing:

``` scala
import org.apache.spark.SparkEnv

sc.parallelize(1 to 10, 10).map { x =>
  if (SparkEnv.get.executorId == "0") throw new RuntimeException("Bad executor")
  else (x % 3, x)
}.reduceByKey((a, b) => a + b).collect()
```

To see the result, check the "Aggregated Metrics by Executor" section at the bottom of the picture:

#### Manual test for node blacklisting

Running Spark on a cluster:

``` bash

./bin/spark-shell --master yarn --deploy-mode client --executor-memory=2G --num-executors=8 --conf "spark.blacklist.enabled=true" --conf "spark.blacklist.stage.maxFailedTasksPerExecutor=1" --conf "spark.blacklist.stage.maxFailedExecutorsPerNode=1" --conf "spark.blacklist.application.maxFailedTasksPerExecutor=10" --conf "spark.eventLog.enabled=true"

```

And the job was:

``` scala
import org.apache.spark.SparkEnv

sc.parallelize(1 to 10000, 10).map { x =>
  if (SparkEnv.get.executorId.toInt >= 4) throw new RuntimeException("Bad executor")
  else (x % 3, x)
}.reduceByKey((a, b) => a + b).collect()
```

The result is:

Here you can see apiros3.gce.test.com was node blacklisted for the stage because of failures on executors 4 and 5. As expected, executor 3 is also blacklisted even though it has no failures itself, because it shares the node with 4 and 5.

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Author: Attila Zsolt Piros <2017933+attilapiros@users.noreply.github.com>

Closes #20203 from attilapiros/SPARK-22577.

## What changes were proposed in this pull request?

#20002 proposed a way to safely check the default partitioner. However, if `spark.default.parallelism` is set, the defaultParallelism could still be smaller than the proper number of partitions for upstream RDDs. This PR extends the approach to address the case when `spark.default.parallelism` is set.

The PR helps when the following requirements hold:

- Max partitioner is not eligible since it is at least an order smaller, and

- User has explicitly set 'spark.default.parallelism', and

- Value of 'spark.default.parallelism' is lower than max partitioner

- Since max partitioner was discarded due to being at least an order smaller, default parallelism is worse - even though user specified.

In the remaining cases, the changes should be a no-op.

## How was this patch tested?

Add corresponding test cases in `PairRDDFunctionsSuite` and `PartitioningSuite`.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20091 from jiangxb1987/partitioner.

The race in the code is because the handle might update

its state to the wrong state if the connection handling

thread is still processing incoming data; so the handle

needs to wait for the connection to finish up before

checking the final state.

The race in the test is because when waiting for a handle

to reach a final state, the waitFor() method needs to wait

until all handle state is updated (which also includes

waiting for the connection thread above to finish).

Otherwise, waitFor() may return too early, which would cause

a bunch of different races (like the listener not being yet

notified of the state change, or being in the middle of

being notified, or the handle not being properly disposed

and causing postChecks() to assert).

On top of that I found, by code inspection, a couple of

potential races that could make a handle end up in the

wrong state when being killed.

The original version of this fix introduced the flipped

version of the first race described above; the connection

closing might override the handle state before the

handle might have a chance to do cleanup. The fix there

is to only dispose of the handle from the connection

when there is an error, and let the handle dispose

itself in the normal case.

The fix also caused a bug in YarnClusterSuite to be surfaced;

the code was checking for a file in the classpath that was

not expected to be there in client mode. Because of the above

issues, the error was not propagating correctly and the (buggy)

test was incorrectly passing.

Tested by running the existing unit tests a lot (and not

seeing the errors I was seeing before).

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20297 from vanzin/SPARK-23020.

The code was sorting "0" as "less than" negative values, which is a little

wrong. Fix is simple, most of the changes are the added test and related

cleanup.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20284 from vanzin/SPARK-23103.

Detect the deletion of event log files from storage, and remove

data about the related application attempt in the SHS.

Also contains code to fix SPARK-21571 based on code by ericvandenbergfb.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20138 from vanzin/SPARK-20664.

## What changes were proposed in this pull request?

Temporarily ignoring flaky test `SparkLauncherSuite.testInProcessLauncher` to de-flake the builds. This should be re-enabled when SPARK-23020 is merged.

## How was this patch tested?

N/A (Test Only Change)

Author: Sameer Agarwal <sameerag@apache.org>

Closes #20291 from sameeragarwal/disable-test-2.

## What changes were proposed in this pull request?

Lots of our tests don't properly shut down everything they create, and end up leaking lots of threads. For example, `TaskSetManagerSuite` doesn't stop the extra `TaskScheduler` and `DAGScheduler` it creates. There are a couple more instances, e.g. in `DAGSchedulerSuite`.

This PR adds the possibility to print out the list of threads that were not stopped properly after a test suite has executed. The format is the following:

```

===== FINISHED o.a.s.scheduler.DAGSchedulerSuite: 'task end event should have updated accumulators (SPARK-20342)' =====

...

===== Global thread whitelist loaded with name /thread_whitelist from classpath: rpc-client.*, rpc-server.*, shuffle-client.*, shuffle-server.*' =====

ScalaTest-run:

===== THREADS NOT STOPPED PROPERLY =====

ScalaTest-run: dag-scheduler-event-loop

ScalaTest-run: globalEventExecutor-2-5

ScalaTest-run:

===== END OF THREAD DUMP =====

ScalaTest-run:

===== EITHER PUT THREAD NAME INTO THE WHITELIST FILE OR SHUT IT DOWN PROPERLY =====

```

With the help of this, leaking threads have been identified in TaskSetManagerSuite. My intention is to hunt down and fix such bugs in later PRs.

## How was this patch tested?

Manual: executed the TaskSetManagerSuite test and found where the leaking threads are.

Automated: Pass the Jenkins.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #19893 from gaborgsomogyi/SPARK-16139.

The race in the code is because the handle might update

its state to the wrong state if the connection handling

thread is still processing incoming data; so the handle

needs to wait for the connection to finish up before

checking the final state.

The race in the test is because when waiting for a handle

to reach a final state, the waitFor() method needs to wait

until all handle state is updated (which also includes

waiting for the connection thread above to finish).

Otherwise, waitFor() may return too early, which would cause

a bunch of different races (like the listener not being yet

notified of the state change, or being in the middle of

being notified, or the handle not being properly disposed

and causing postChecks() to assert).

On top of that I found, by code inspection, a couple of

potential races that could make a handle end up in the

wrong state when being killed.

Tested by running the existing unit tests a lot (and not

seeing the errors I was seeing before).

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20223 from vanzin/SPARK-23020.

## What changes were proposed in this pull request?

Spark still uses json4s 3.2.11, which is a few years old. This change upgrades json4s to 3.5.3.

Note that this change does not include the Jackson update because the Jackson version referenced in json4s 3.5.3 is 2.8.4, which has a security vulnerability ([see](https://issues.apache.org/jira/browse/SPARK-20433)).

## How was this patch tested?

Existing unit tests and build.

Author: shimamoto <chibochibo@gmail.com>

Closes #20233 from shimamoto/upgrade-json4s.

When resources happen to be constrained on an executor the first time a broadcast variable is instantiated, it is persisted to disk by the BlockManager. Consequently, every subsequent call to TorrentBroadcast::readBroadcastBlock from other instances of that broadcast variable spawns another instance of the underlying value. That is, broadcast variables are spawned once per executor **unless** memory is constrained, in which case every instance of a broadcast variable is provided with a unique copy of the underlying value.

This patch fixes the above by explicitly caching the underlying values using weak references in a ReferenceMap.
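The caching idea can be sketched with plain JDK classes. Note the substitution: the patch mentions a `ReferenceMap`, while this sketch uses `java.lang.ref.WeakReference` values in a `HashMap` instead, and the `WeakValueCache` class and its `getOrLoad` method are hypothetical names.

```java
import java.lang.ref.WeakReference;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical sketch: cache values behind weak references so that repeated lookups share
// one instance while it is in use, without preventing garbage collection afterwards.
final class WeakValueCache<K, V> {
    private final Map<K, WeakReference<V>> cache = new HashMap<>();

    synchronized V getOrLoad(K key, Supplier<V> loader) {
        WeakReference<V> ref = cache.get(key);
        V value = (ref == null) ? null : ref.get();
        if (value == null) {
            // Not cached yet, or already collected: load it again (e.g. from disk).
            value = loader.get();
            cache.put(key, new WeakReference<>(value));
        }
        return value;
    }
}
```

As long as some caller holds a strong reference to the value, later lookups for the same key return that same instance instead of deserializing a new copy.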

Author: ho3rexqj <ho3rexqj@gmail.com>

Closes #20183 from ho3rexqj/fix/cache-broadcast-values.

There are two main changes to speed up rendering of the tasks list

when rendering the stage page.

The first one makes the code only load the tasks being shown in the

current page of the tasks table, and information related to only

those tasks. One side-effect of this change is that the graph that

shows task-related events now only shows events for the tasks in

the current page, instead of the previously hardcoded limit of "events

for the first 1000 tasks". That ends up helping with readability,

though.

To make sorting efficient when using a disk store, the task wrapper

was extended to include many new indices, one for each of the sortable

columns in the UI, and metrics for which quantiles are calculated.

The second changes the way metric quantiles are calculated for stages.

Instead of using the "Distribution" class to process data for all task

metrics, which requires scanning all tasks of a stage, the code now

uses the KVStore "skip()" functionality to only read tasks that contain

interesting information for the quantiles that are desired.
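
The skip-based approach can be sketched roughly as follows, assuming an iterator over an index that is already sorted by the metric. `Iterator.drop` stands in for the KVStore "skip()" call, and the `IndexQuantiles` name and the `q * count` position formula are assumptions made for illustration.

```scala
object IndexQuantiles {
  // `sorted` yields metric values in ascending index order and `count` is the
  // number of entries in the index. Only the entries at the quantile
  // positions are returned to the caller; the rest are skipped over.
  def quantiles(sorted: Iterator[Long], count: Long, qs: Seq[Double]): Seq[Long] = {
    require(count > 0, "index must not be empty")
    require(qs == qs.sorted, "quantiles must be in ascending order")
    var it = sorted
    var nextPos = 0L // position of the element that it.next() would return
    var last = 0L    // value read most recently
    qs.toVector.map { q =>
      val target = math.min((q * count).toLong, count - 1)
      if (target >= nextPos) {
        it = it.drop((target - nextPos).toInt) // stand-in for skip()
        last = it.next()
        nextPos = target + 1
      }
      // Otherwise the target is the position just read, so reuse `last`.
      last
    }
  }
}
```

With a disk-backed store, the benefit would come from the skipped entries never being deserialized; the in-memory iterator here only shows the access pattern.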

This is still not cheap; because there are many metrics that the UI

and API track, the code needs to scan the index for each metric to

gather the information. Savings come mainly from skipping deserialization

when using the disk store, but the in-memory code also seems to be

faster than before (most probably because of other changes in this

patch).

To make subsequent calls faster, some quantiles are cached in the

status store. This makes UIs much faster after the first time a stage

has been loaded.

With the above changes, a lot of code in the UI layer could be simplified.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20013 from vanzin/SPARK-20657.

## What changes were proposed in this pull request?

This patch modifies Spark's `MemoryAllocator` implementations so that `free(MemoryBlock)` mutates the passed block to clear pointers (in the off-heap case) or null out references to backing `long[]` arrays (in the on-heap case). The goal of this change is to add an extra layer of defense against use-after-free bugs because currently it's hard to detect corruption caused by blind writes to freed memory blocks.
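
A minimal sketch of the on-heap case, assuming a much simpler block type than Spark's `MemoryBlock`: `HeapBlock` and `SimpleHeapAllocator` are hypothetical names, and the point is only that `free` clears the block's reference so later access fails fast instead of silently touching recycled memory.

```scala
// Hypothetical, simplified on-heap block; Spark's real MemoryBlock differs.
final class HeapBlock(size: Int) {
  private var words: Array[Long] = new Array[Long](size)

  def isFreed: Boolean = words == null

  def put(index: Int, value: Long): Unit = {
    checkLive()
    words(index) = value
  }

  def get(index: Int): Long = {
    checkLive()
    words(index)
  }

  // Intended to be called only by the allocator: drop the backing array.
  def clear(): Unit = { words = null }

  private def checkLive(): Unit =
    if (words == null) throw new IllegalStateException("block used after free")
}

object SimpleHeapAllocator {
  def allocate(size: Int): HeapBlock = new HeapBlock(size)

  // Mutates the passed block so that stale holders cannot reach the array.
  def free(block: HeapBlock): Unit = {
    require(!block.isFreed, "block was already freed")
    block.clear()
  }
}
```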

## How was this patch tested?

New unit tests in `PlatformSuite`, including new tests for existing functionality because we did not have sufficient mutation coverage of the on-heap memory allocator's pooling logic.

Author: Josh Rosen <joshrosen@databricks.com>

Closes #20191 from JoshRosen/SPARK-22997-add-defenses-against-use-after-free-bugs-in-memory-allocator.

## What changes were proposed in this pull request?

1. Deprecate attemptId in StageInfo and add `def attemptNumber() = attemptId`

2. Replace usage of stageAttemptId with stageAttemptNumber
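
The deprecation in item 1 might look roughly like the sketch below. `StageInfoSketch` is a hypothetical, trimmed-down class, and the version string passed to `@deprecated` is an assumption.

```scala
// Hypothetical stand-in for StageInfo with only the fields needed here.
class StageInfoSketch(val stageId: Int, private val attempt: Int) {

  // Old accessor, kept for compatibility; callers get a compiler warning.
  @deprecated("Use attemptNumber instead", "2.3.0") // version is an assumption
  def attemptId: Int = attempt

  // New accessor returning the same value under the clearer name.
  def attemptNumber(): Int = attempt
}
```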

## How was this patch tested?

I manually checked the compiler warning info

Author: Xianjin YE <advancedxy@gmail.com>

Closes #20178 from advancedxy/SPARK-22952.

The code in LiveListenerBus was queueing events before start in the

queues themselves; so in situations like the following:

    bus.post(someEvent)
    bus.addToEventLogQueue(listener)
    bus.start()

"someEvent" would not be delivered to "listener" if that was the first

listener in the queue, because the queue wouldn't exist when the

event was posted.

This change buffers the events before starting the bus in the bus itself,

so that they can be delivered to all registered queues when the bus is

started.
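
The buffering behavior can be sketched with a miniature bus, assuming listeners are plain functions rather than queues. `BufferingBus` and its methods are hypothetical names and do not mirror the LiveListenerBus API.

```scala
import scala.collection.mutable

// Hypothetical miniature of a listener bus; names are illustrative only.
class BufferingBus[E] {
  private val listeners = mutable.ListBuffer.empty[E => Unit]
  private val pending = mutable.ListBuffer.empty[E]
  private var started = false

  def addListener(listener: E => Unit): Unit = synchronized {
    listeners += listener
  }

  def post(event: E): Unit = synchronized {
    if (started) listeners.foreach(_(event))
    else pending += event // held by the bus itself until start()
  }

  def start(): Unit = synchronized {
    require(!started, "bus already started")
    started = true
    // Deliver everything posted before start to all registered listeners.
    pending.foreach(event => listeners.foreach(_(event)))
    pending.clear()
  }
}
```

Since events posted early live in the bus and not in any listener's queue, a listener registered after the first `post` but before `start` still receives them.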

Also tweaked the unit tests to cover the behavior above.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20039 from vanzin/SPARK-22850.

## What changes were proposed in this pull request?

Add stageAttemptId to TaskContext and update the corresponding construction code.

## How was this patch tested?

Added a new test in TaskContextSuite that covers two cases:

1. Normal case without failure

2. Exception case with resubmitted stages

Link to [SPARK-22897](https://issues.apache.org/jira/browse/SPARK-22897)

Author: Xianjin YE <advancedxy@gmail.com>

Closes #20082 from advancedxy/SPARK-22897.

The scheduled task was racing with the test code and could influence

the values returned to the test, triggering assertions. The change adds

a new config that is only used during testing, and overrides it

on the affected test suite.

The issue in the bug can be reliably reproduced by reducing the interval

in the test (e.g. to 10ms).

While there, fixed an exception that shows up in the logs while these

tests run, and simplified some code (which was also causing misleading

log messages in the log output of the test).

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20050 from vanzin/SPARK-22864.

This change adds a new configuration option and support code that limits

how much disk space the SHS will use. The default value is pretty generous

so that applications will, hopefully, only rarely need to be replayed

because of their disk stores being evicted.

This works by keeping track of how much data each application is using.

Also, because it's not possible to know, before replaying, how much space

will be needed, it's possible that usage will exceed the configured limit

temporarily. The code uses the concept of a "lease" to try to limit how

much the SHS will exceed the limit in those cases.
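
One way to picture the "lease" idea is the sketch below. It is an assumption-laden illustration, not the SHS code: `DiskBudget`, `lease`, and `commit` are hypothetical names, and the sketch assumes a lease reserves an estimated size up front and is later replaced by the actual size once the replay has finished.

```scala
// Hypothetical accounting helper; not the history server's implementation.
class DiskBudget(val maxBytes: Long) {
  private var committed = 0L // bytes used by finished stores
  private var leased = 0L    // bytes reserved by replays still in flight

  def usage: Long = synchronized { committed + leased }

  // Usage may exceed the limit for a while; a real manager would evict
  // unused stores at this point.
  def overLimit: Boolean = usage > maxBytes

  // Reserve an estimate before replaying, so that concurrent replays see
  // the space as taken even though the final size is not yet known.
  def lease(estimate: Long): Lease = synchronized {
    leased += estimate
    new Lease(estimate)
  }

  class Lease(estimate: Long) {
    private var open = true

    // Swap the estimate for the size that was actually written.
    def commit(actual: Long): Unit = DiskBudget.this.synchronized {
      require(open, "lease already closed")
      open = false
      leased -= estimate
      committed += actual
    }
  }
}
```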

Active UIs are also tracked, so they're never deleted. This works in

tandem with the existing option of how many active UIs are loaded; because

unused UIs will be unloaded, their disk stores will also become candidates

for deletion. If the data is not deleted, though, re-loading the UI is

pretty quick.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20011 from vanzin/SPARK-20654.

This change adds a new launcher that allows applications to be run

in a separate thread in the same process as the calling code. To

achieve that, some code from the child process implementation was

moved to abstract classes that implement the common functionality,

and the new launcher inherits from those.

The new launcher was added as a new class, instead of implemented

as a new option to the existing SparkLauncher, to avoid ambiguous

APIs. For example, SparkLauncher has ways to set the child app's

environment, modify SPARK_HOME, or control the logging of the

child process, none of which apply to in-process apps.

The in-process launcher has limitations: it needs Spark in the

context class loader of the calling thread, and it's bound by

Spark's current limitation of a single client-mode application

per JVM. It also relies on the recently added SparkApplication

trait to make sure different apps don't mess up each other's

configuration, so config isolation is currently limited to cluster mode.

I also chose to keep the same socket-based communication for in-process

apps, even though it might be possible to avoid it for in-process

mode. That helps both implementations share more code.

Tested with new and existing unit tests, and with a simple app that

uses the launcher; also made sure the app ran fine with older launcher

jar to check binary compatibility.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #19591 from vanzin/SPARK-11035.

Port code from the old executors listener to the new one, so that

the driver logs present in the application start event are kept.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20038 from vanzin/SPARK-22836.

## What changes were proposed in this pull request?

When choosing a Partitioner for a cogroup-like operation across a number of RDDs, the default behaviour was: if some of the RDDs already have a partitioner, choose the one among them with the maximum number of partitions.

This behaviour, in some cases, could hit the 2G limit (SPARK-6235). To illustrate one such scenario, consider two RDDs:

rDD1: with smaller data and a smaller number of partitions, along with a Partitioner.

rDD2: with much larger data and a larger number of partitions, without a Partitioner.

The cogroup of these two RDDs could hit the 2G limit, as a larger amount of data is shuffled into a smaller number of partitions.

This PR introduces a safety check wherein the Partitioner is chosen only if either of the following conditions is met:

1. if the number of partitions of the RDD associated with the Partitioner is greater than or equal to the max number of upstream partitions; or

2. if the number of partitions of the RDD associated with the Partitioner is less than, but within a single order of magnitude of, the max number of upstream partitions.
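
The two conditions above can be sketched as a single predicate. `PartitionerCheck.isEligible` is a hypothetical helper, and reading "within a single order of magnitude" as a base-10 logarithm difference strictly below 1 is an assumption.

```scala
object PartitionerCheck {
  // True if an existing partitioner with `existing` partitions may be reused
  // when the largest upstream RDD has `maxUpstream` partitions.
  def isEligible(existing: Int, maxUpstream: Int): Boolean = {
    require(existing > 0 && maxUpstream > 0, "partition counts must be positive")
    // Condition 1: at least as many partitions as any upstream RDD.
    existing >= maxUpstream ||
      // Condition 2: fewer partitions, but less than a factor of ten apart.
      math.log10(maxUpstream.toDouble) - math.log10(existing.toDouble) < 1
  }
}
```

For example, a partitioner with 20 partitions would pass against 100 upstream partitions, while one with 5 partitions would be rejected against 1000.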

## How was this patch tested?

Unit tests in PartitioningSuite and PairRDDFunctionsSuite

Author: sujithjay <sujith@logistimo.com>

Closes #20002 from sujithjay/SPARK-22465.

The code was ignoring SparkListenerLogStart, which was added

somewhat recently to record the Spark version used to generate

an event log.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20049 from vanzin/SPARK-22854.

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/19681 we introduced a new interface called `AppStatusPlugin`, to register listeners and set up the UI for both live and history UI.

However, I think it's overkill for the live UI. For example, we should not register `SQLListener` if users are not using SQL functions. Previously we registered the `SQLListener` and set up the SQL tab when `SparkSession` was first created, which indicates users are going to use SQL functions. But in #19681, we register the SQL functions during `SparkContext` creation. The same thing should apply to streaming too.

I think we should keep the previous behavior, and only use this new interface for history server.

To reflect this change, I also renamed the new interface to `SparkHistoryUIPlugin`.

This PR also refines the tests for sql listener.

## How was this patch tested?

existing tests

Author: Wenchen Fan <wenchen@databricks.com>

Closes #19981 from cloud-fan/listener.