This change modifies the logic in the SecurityManager to do two things:

- generate unique app secrets also when k8s is being used

- only store the secret in the user's UGI on YARN

The latter is needed so that k8s won't unnecessarily create k8s secrets for the UGI credentials when only the auth token is stored there.

On the k8s side, the secret is propagated to executors using an environment variable instead. This ensures it works in both client and cluster mode.

Security doc was updated to mention the feature and clarify that proper access control in k8s should be enabled for it to be secure.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #23174 from vanzin/SPARK-26194.

## What changes were proposed in this pull request?

Kafka delegation token support was implemented in [PR #22598](https://github.com/apache/spark/pull/22598), but that PR didn't contain documentation because of rapid changes. Now that it has been merged, this PR documents it.

## How was this patch tested?

jekyll build + manual html check

Closes #23195 from gaborgsomogyi/SPARK-26236.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Updated SQL migration guide according to changes in https://github.com/apache/spark/pull/23120

Closes #23235 from MaxGekk/failuresafe-partial-result-followup.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose to use the **java.time API** for parsing timestamps and dates from CSV content with microsecond precision. The SQL config `spark.sql.legacy.timeParser.enabled` allows switching back to the previous behaviour, which uses `java.text.SimpleDateFormat`/`FastDateFormat` for parsing/generating timestamps/dates.

## How was this patch tested?

It was tested by `UnivocityParserSuite`, `CsvExpressionsSuite`, `CsvFunctionsSuite` and `CsvSuite`.

Closes #23150 from MaxGekk/time-parser.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

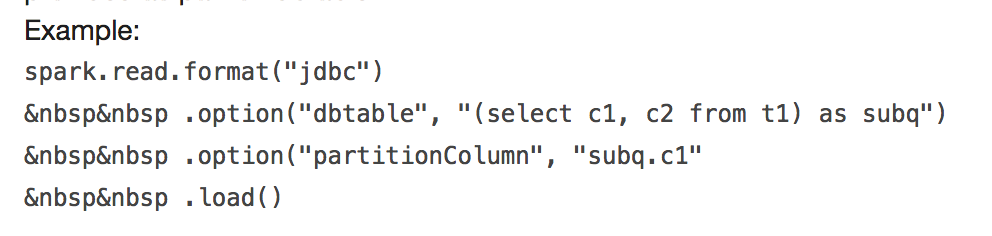

## What changes were proposed in this pull request?

It will throw:

```

requirement failed: When reading JDBC data sources, users need to specify all or none for the following options: 'partitionColumn', 'lowerBound', 'upperBound', and 'numPartitions'

```

and

```

User-defined partition column subq.c1 not found in the JDBC relation ...

```

This PR fixes this erroneous example.

## How was this patch tested?

manual tests

Closes #23170 from wangyum/SPARK-24499.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Keeps K8s executor resources present in case of failure or normal termination.

Introduces a new boolean config option: `spark.kubernetes.deleteExecutors`, with default value set to true.

The idea is to update Spark K8s backend structures but leave the resources around.

The assumption is that since entries are not removed from the `removedExecutorsCache`, we are immune to updates that refer to the executor resources previously removed.

The only delete operation not touched is the one in the `doKillExecutors` method.

The reason is that right now we don't support [blacklisting](https://issues.apache.org/jira/browse/SPARK-23485) and dynamic allocation with Spark on K8s. In both cases we might want to handle these scenarios in the future, although it's more complicated.

More tests can be added if approach is approved.

## How was this patch tested?

Manually by running a Spark job and verifying pods are not deleted.

Closes #23136 from skonto/keep_pods.

Authored-by: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Signed-off-by: Yinan Li <ynli@google.com>

## What changes were proposed in this pull request?

The `resource` package is Unix-specific. See https://docs.python.org/2/library/resource.html and https://docs.python.org/3/library/resource.html.

Note that we document Windows support:

> Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS).

This should be backported into branch-2.4 to restore Windows support in Spark 2.4.1.
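A minimal sketch of the kind of guard this implies, assuming the worker only needs `resource` opportunistically. The helper name `current_address_space_limit` is hypothetical and is not taken from the patch:

```python
try:
    import resource  # Unix-specific; the import fails on Windows
    has_resource_module = True
except ImportError:
    has_resource_module = False


def current_address_space_limit():
    """Return the soft address-space limit in bytes, or None if unavailable.

    Hypothetical helper for illustration only.
    """
    if not has_resource_module or not hasattr(resource, "RLIMIT_AS"):
        return None
    soft_limit, _hard_limit = resource.getrlimit(resource.RLIMIT_AS)
    # RLIM_INFINITY means that no limit is configured.
    return None if soft_limit == resource.RLIM_INFINITY else soft_limit
```

On Windows the function simply returns `None` instead of failing at import time.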

## How was this patch tested?

Manually mocked the changed logic.

Closes #23055 from HyukjinKwon/SPARK-26080.

Lead-authored-by: hyukjinkwon <gurwls223@apache.org>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

It's a bad idea to use a case class as public API, as it has a very wide surface: for example, the `copy` method, its fields, the companion object, etc.

Take `UserDefinedFunction` as a particular case. It has a private constructor, and I believe we only want users to access a few methods: `apply`, `nullable`, `asNonNullable`, etc.

However, all its fields, the `copy` method, and the companion object are unexpectedly public. As a result, we have used many tricks to work around the binary compatibility issues.

This PR proposes to only make interfaces public, and hide implementations behind a private class. Now `UserDefinedFunction` is a pure trait, and the concrete implementation is `SparkUserDefinedFunction`, which is private.

Changing a class to an interface is not binary compatible (but is source compatible), so 3.0 is a good chance to do it.

This is the first PR to go in this direction. If it's accepted, I'll create an umbrella JIRA and fix all the public case classes.

## How was this patch tested?

existing tests.

Closes #23178 from cloud-fan/udf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR updates maven version from 3.5.4 to 3.6.0. The release note of the 3.6.0 is [here](https://maven.apache.org/docs/3.6.0/release-notes.html).

From [the release note of the 3.6.0](https://maven.apache.org/docs/3.6.0/release-notes.html), the following are the new features:

1. There had been issues related to the project discovery time which has been increased in previous version which influenced some of our users.

1. The output in the reactor summary has been improved.

1. There was an issue related to the classpath ordering.

## How was this patch tested?

Existing tests

Closes #23177 from kiszk/SPARK-26212.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Fix Typos.

This PR is the complete version of https://github.com/apache/spark/pull/23145.

## How was this patch tested?

NA

Closes #23185 from kjmrknsn/docUpdate.

Authored-by: Keiji Yoshida <kjmrknsn@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently the `SET` command works without any warnings even if the specified key is a `SparkConf` entry. In that case it has no effect, because the command does not update `SparkConf`, and this behavior might confuse users. We should track `SparkConf` entries and make the command reject such entries.
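The idea can be sketched as follows. This is a toy model in plain Python, not Spark's implementation: the class `SessionConfSketch`, the error message, and the choice of which keys count as `SparkConf` entries are all assumptions made for illustration.

```python
class SessionConfSketch:
    """Toy runtime conf that knows which keys belong to SparkConf (hypothetical)."""

    def __init__(self, spark_conf_keys):
        self._spark_conf_keys = frozenset(spark_conf_keys)
        self._runtime_settings = {}

    def set(self, key, value):
        # Reject keys that are tracked as SparkConf entries, because setting
        # them at runtime would silently have no effect.
        if key in self._spark_conf_keys:
            raise ValueError(f"Cannot modify the value of a Spark config: {key}")
        self._runtime_settings[key] = value

    def get(self, key):
        return self._runtime_settings[key]


# Illustrative keys only; which keys are tracked is an assumption here.
conf = SessionConfSketch(spark_conf_keys={"spark.task.cpus"})
conf.set("spark.sql.shuffle.partitions", "10")
try:
    conf.set("spark.task.cpus", "2")
    rejected = False
except ValueError:
    rejected = True
```

Failing loudly at `set` time is the design point: the user learns immediately that the key cannot be changed through this command.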

## How was this patch tested?

Added a test and existing tests.

Closes #23031 from ueshin/issues/SPARK-26060/set_command.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Adds `USER` directives to the Dockerfiles, configurable via a build argument (`spark_uid`) for easy customisation. A `-u` flag is added to `bin/docker-image-tool.sh` to make it easy to customise this, e.g.

```

> bin/docker-image-tool.sh -r rvesse -t uid -u 185 build

> bin/docker-image-tool.sh -r rvesse -t uid push

```

If no UID is explicitly specified it defaults to `185` - this is per skonto's suggestion to align with the OpenShift standard reserved UID for Java apps (https://lists.openshift.redhat.com/openshift-archives/users/2016-March/msg00283.html)

Notes:

- We have to make the `WORKDIR` writable by the root group or otherwise jobs will fail with `AccessDeniedException`

To Do:

- [x] Debug and resolve issue with client mode test

- [x] Consider whether to always propagate `SPARK_USER_NAME` to environment of driver and executor pods so `entrypoint.sh` can insert that into `/etc/passwd` entry

- [x] Rebase once PR #23013 is merged and update documentation accordingly

Built the Docker images with the new Dockerfiles that include the `USER` directives. Ran the Spark on K8S integration tests against the new images. All pass except client mode which I am currently debugging further.

Also manually dropped myself into the resulting container images via `docker run` and checked `id -u` output to see that UID is as expected.

Tried customising the UID from the default via the new `-u` argument to `docker-image-tool.sh` and again checked the resulting image for the correct runtime UID.

cc felixcheung skonto vanzin

Closes #23017 from rvesse/SPARK-26015.

Authored-by: Rob Vesse <rvesse@dotnetrdf.org>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fix Typos.

## How was this patch tested?

NA

Closes #23145 from kjmrknsn/docUpdate.

Authored-by: Keiji Yoshida <kjmrknsn@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

We deprecated `OneHotEncoder` in Spark 2.3.0 and introduced `OneHotEncoderEstimator`. In 3.0.0, we remove the deprecated `OneHotEncoder` and rename `OneHotEncoderEstimator` to `OneHotEncoder`.

TODO: According to the ML migration guide, we need to keep `OneHotEncoderEstimator` as an alias after renaming. This is not done in this patch in order to facilitate review.

## How was this patch tested?

Existing tests.

Closes #23100 from viirya/remove_one_hot_encoder.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Currently duplicated map keys are not handled consistently. For example, map lookup respects the duplicated key that appears first, `Dataset.collect` only keeps the duplicated key that appears last, `MapKeys` returns duplicated keys, etc.

This PR proposes to remove duplicated map keys with a last-wins policy, to follow Java/Scala and Presto. It only applies to built-in functions, as users can create maps with duplicated map keys via private APIs anyway.

Updated functions: `CreateMap`, `MapFromArrays`, `MapFromEntries`, `StringToMap`, `MapConcat`, `TransformKeys`.

For other places:

1. data source v1 doesn't have this problem, as users need to provide a java/scala map, which can't have duplicated keys.

2. data source v2 may have this problem. I've added a note to `ArrayBasedMapData` to ask the caller to take care of duplicated keys. In the future we should enforce it in the stable data APIs for data source v2.

3. UDF doesn't have this problem, as users need to provide a java/scala map. Same as data source v1.

4. file format. I checked all of them and only parquet does not enforce it. For backward compatibility reasons I changed nothing but left a note saying that the behavior will be undefined if users write maps with duplicated keys to parquet files. Maybe we can add a config and fail by default if parquet files have maps with duplicated keys. This can be done in a followup.
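The last-wins policy proposed by this PR can be sketched in plain Python. This illustrates the policy only, not Spark's code: `map_from_arrays` here is a hypothetical stand-in named after the `MapFromArrays` expression.

```python
def map_from_arrays(keys, values):
    """Build a map from parallel key/value lists; later duplicates win."""
    if len(keys) != len(values):
        raise ValueError("keys and values must have the same length")
    result = {}
    for key, value in zip(keys, values):
        # Assigning to an existing key overwrites the earlier value,
        # which is exactly the last-wins behaviour.
        result[key] = value
    return result


deduplicated = map_from_arrays([1, 2, 1], ["a", "b", "c"])
```

Here key `1` appears twice, so the resulting map holds the value from its last occurrence.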

## How was this patch tested?

updated tests and new tests

Closes #23124 from cloud-fan/map.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

A followup of https://github.com/apache/spark/pull/23043. Adds a test to show the minor behavior change introduced by #23043, and adds a migration guide entry.

## How was this patch tested?

a new test

Closes #23141 from cloud-fan/follow.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR adds configurations to use subpaths with Spark on k8s. Subpaths (https://kubernetes.io/docs/concepts/storage/volumes/#using-subpath) allow the user to specify a path within a volume to use instead of the volume's root.

## How was this patch tested?

Added unit tests. Ran SparkPi on a cluster with event logging pointed at a subpath-mount and verified the driver host created and used the subpath.

Closes #23026 from NiharS/k8s_subpath.

Authored-by: Nihar Sheth <niharrsheth@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Allow the Spark Structured Streaming user to specify the prefix of the consumer group (group.id), instead of forcing consumer group ids of the form `spark-kafka-source-*`.
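As a rough illustration of what a configurable prefix means, here is a small sketch. The function `make_group_id` and the random suffix are assumptions for illustration; only the `spark-kafka-source` prefix comes from the description above.

```python
import uuid

# Default prefix, matching the `spark-kafka-source-*` form mentioned above.
DEFAULT_GROUP_ID_PREFIX = "spark-kafka-source"


def make_group_id(prefix=DEFAULT_GROUP_ID_PREFIX):
    """Return a unique consumer group id that starts with the given prefix.

    Hypothetical helper; the actual suffix used by Spark is not shown here.
    """
    return f"{prefix}-{uuid.uuid4()}"


default_id = make_group_id()
custom_id = make_group_id(prefix="my-team")
```

Keeping the default prefix when none is supplied is what makes the change backwards compatible.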

## How was this patch tested?

Unit tests provided by Spark (backwards compatible change, i.e., user can optionally use the functionality)

`mvn test -pl external/kafka-0-10`

Closes #23103 from zouzias/SPARK-26121.

Authored-by: Anastasios Zouzias <anastasios@sqooba.io>

Signed-off-by: cody koeninger <cody@koeninger.org>

## What changes were proposed in this pull request?

This PR is to add back `unionAll`, which is widely used. The name is also consistent with our ANSI SQL. We also have the corresponding `intersectAll` and `exceptAll`, which were introduced in Spark 2.4.

## How was this patch tested?

Added a test case in DataFrameSuite

Closes #23131 from gatorsmile/addBackUnionAll.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The DOI foundation recommends [this new resolver](https://www.doi.org/doi_handbook/3_Resolution.html#3.8). Accordingly, this PR re`sed`s all static DOI links ;-)

## How was this patch tested?

It wasn't, since it seems as safe as a "[typo fix](https://spark.apache.org/contributing.html)".

In case any of the files is included from other projects, and should be updated there, please let me know.

Closes #23129 from katrinleinweber/resolve-DOIs-securely.

Authored-by: Katrin Leinweber <9948149+katrinleinweber@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

"Running on Kubernetes" references `spark.driver.pod.name` few places, and it should be `spark.kubernetes.driver.pod.name`.

## How was this patch tested?

See changes

Closes #23133 from Leemoonsoo/fix-driver-pod-name-prop.

Authored-by: Lee moon soo <moon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

When doing typed aggregation on a Dataset, for struct key type, the key attribute is named as "key". But for non-struct type, the key attribute is named as "value". This key attribute should also be named as "key" for non-struct type.

## How was this patch tested?

Added test.

Closes #23054 from viirya/SPARK-26085.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

An input without valid JSON tokens on the root level will be treated as a bad record, and handled according to `mode`. Previously such input was converted to `null`. After the changes, the input is converted to a row with `null`s in the `PERMISSIVE` mode according to the schema. This allows removing code in the `from_json` function which can produce `null` as result rows.
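The mode handling described above can be sketched in plain Python. This is a simplified model, not Spark's parser: `parse_record`, the `DROPPED` marker, and the treatment of non-object roots as bad records are assumptions made for illustration.

```python
import json

DROPPED = object()  # hypothetical marker for records that are dropped


def parse_record(text, field_names, mode="PERMISSIVE"):
    """Parse one JSON record into a dict with the given field names."""
    try:
        value = json.loads(text)
        if not isinstance(value, dict):
            # Simplification: treat any non-object root as a bad record.
            raise ValueError("root is not a JSON object")
        return {name: value.get(name) for name in field_names}
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        if mode == "PERMISSIVE":
            # A bad record becomes a row of nulls rather than a null row.
            return {name: None for name in field_names}
        if mode == "DROPMALFORMED":
            return DROPPED
        raise  # FAILFAST: surface the error to the caller


empty_input_row = parse_record("", ["a", "b"])
good_row = parse_record('{"a": 1}', ["a", "b"])
```

With empty input and `PERMISSIVE` mode, the result is a row whose fields are all `None`, which mirrors the new behaviour.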

## How was this patch tested?

It was tested by existing test suites. Some of them I had to modify (`JsonSuite` for example) because previously bad input was just silently ignored. Now such input is handled according to the specified `mode`.

Closes #22938 from MaxGekk/json-nulls.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`bin/docker-image-tool.sh` tries to build all docker images (JVM, PySpark and SparkR) by default. But not all Spark distributions are built with SparkR, and hence this script will fail on such distros.

With this change, we make building the alternate language binding docker images (PySpark and SparkR) optional. Users have to specify the Dockerfile for those language bindings using the `-p` and `-R` flags respectively to build the binding docker images.

## How was this patch tested?

Tested following scenarios.

*bin/docker-image-tool.sh -r <repo> -t <tag> build* --> Builds only JVM docker image (default behavior)

*bin/docker-image-tool.sh -r <repo> -t <tag> -p kubernetes/dockerfiles/spark/bindings/python/Dockerfile build* --> Builds both JVM and PySpark docker images

*bin/docker-image-tool.sh -r <repo> -t <tag> -p kubernetes/dockerfiles/spark/bindings/python/Dockerfile -R kubernetes/dockerfiles/spark/bindings/R/Dockerfile build* --> Builds JVM, PySpark and SparkR docker images.

Author: Nagaram Prasad Addepally <ram@cloudera.com>

Closes #23053 from ramaddepally/SPARK-25957.

## What changes were proposed in this pull request?

Introducing spark.ui.requestHeaderSize for configuring Jetty's HTTP requestHeaderSize.

This way long authorization field does not lead to HTTP 413.
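The effect of the limit can be illustrated with a toy model. This is not how Jetty accounts for header bytes: `response_status` is a hypothetical function, and the 431 status is taken from the manual test output below.

```python
def response_status(headers, request_header_size=8192):
    """Return 431 if the serialized headers exceed the limit, else 200.

    Toy model: each header is counted as "Name: value\r\n".
    """
    header_bytes = sum(
        len(f"{name}: {value}\r\n".encode("ascii"))
        for name, value in headers.items()
    )
    return 431 if header_bytes > request_header_size else 200


# Same shape as the manual test: one header with a 9500-character value.
huge_headers = {"X-Custom-Header": "A" * 9500}
default_status = response_status(huge_headers)
raised_limit_status = response_status(huge_headers, request_header_size=10000)
```

With the default 8k limit the request is rejected, while a limit of 10000 bytes lets the same header through.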

## How was this patch tested?

Manually with curl (version 7.55 or later is required).

With the original default value (8k limit):

```bash

# Starting history server with default requestHeaderSize

$ ./sbin/start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out

# Creating huge header

$ echo -n "X-Custom-Header: " > cookie

$ printf 'A%.0s' {1..9500} >> cookie

# HTTP GET with huge header fails with 431

$ curl -H @cookie http://458apiros-MBP.lan:18080/

<h1>Bad Message 431</h1><pre>reason: Request Header Fields Too Large</pre>

# The log contains the error

$ tail -1 /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out

18/11/19 21:24:28 WARN HttpParser: Header is too large 8193>8192

```

After:

```bash

# Creating the history properties file with the increased requestHeaderSize

$ echo spark.ui.requestHeaderSize=10000 > history.properties

# Starting Spark History Server with the settings

$ ./sbin/start-history-server.sh --properties-file history.properties

starting org.apache.spark.deploy.history.HistoryServer, logging to /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out

# HTTP GET with huge header gives back HTML5 (I have added here only just a part of the response)

$ curl -H @cookie http://458apiros-MBP.lan:18080/

<!DOCTYPE html><html>

<head>...

<link rel="shortcut icon" href="/static/spark-logo-77x50px-hd.png"></link>

<title>History Server</title>

</head>

<body>

...

```

Closes #23090 from attilapiros/JettyHeaderSize.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Due to implementation limitation, currently Spark can't compare or do equality check between map types. As a result, map values can't appear in EQUAL or comparison expressions, can't be grouping key, etc.

More importantly, map lookup needs to do an equality check on the map key, and thus can't support map as map key when looking up values from a map. Thus it's not useful to have map as map key.

This PR proposes to stop users from creating maps using map type as key. The list of expressions that are updated: `CreateMap`, `MapFromArrays`, `MapFromEntries`, `MapConcat`, `TransformKeys`. I manually checked all the places that create `MapType`, and came up with this list.

Note that, maps with map type key still exist, via reading from parquet files, converting from scala/java map, etc. This PR is not to completely forbid map as map key, but to avoid creating it by Spark itself.

Motivation: when I was trying to fix the duplicate key problem, I found it's impossible to do it with map type map key. I think it's reasonable to avoid map type map key for builtin functions.

## How was this patch tested?

updated test

Closes #23045 from cloud-fan/map-key.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

[Hive 2.3.4 was released on Nov. 7th](https://hive.apache.org/downloads.html#7-november-2018-release-234-available). This PR aims to support that version.

## How was this patch tested?

Pass the Jenkins with the updated version

Closes #23059 from dongjoon-hyun/SPARK-26091.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Highlights specific security issues to be aware of with Spark on K8S and recommends K8S mechanisms that should be used to secure clusters.

## How was this patch tested?

N/A - Documentation only

CC felixcheung tgravescs skonto

Closes #23013 from rvesse/SPARK-25023.

Authored-by: Rob Vesse <rvesse@dotnetrdf.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to bump up the minimum versions of R from 3.1 to 3.4.

R version 3.1.x is too old. It was released 4.5 years ago. R 3.4.0 was released 1.5 years ago. Considering the timing for Spark 3.0, deprecating lower versions and bumping up R to 3.4 might be a reasonable option.

It should be good to deprecate and drop < R 3.4 support.

## How was this patch tested?

Jenkins tests.

Closes #23012 from HyukjinKwon/SPARK-26014.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR makes 2.12 Spark's default Scala version, and Scala 2.11 will be the alternative version. This implies that Scala 2.12 will be used by our CI builds including pull request builds.

We'll update Jenkins to include a new compile-only job for Scala 2.11 to ensure the code can still be compiled with Scala 2.11.

## How was this patch tested?

existing tests

Closes #22967 from dbtsai/scala2.12.

Authored-by: DB Tsai <d_tsai@apple.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Currently, we do not have a mechanism to collect driver logs if a user chooses to run their application in client mode. This is a big issue as admin teams need to create their own mechanisms to capture driver logs.

This commit adds a logger which, if enabled, adds a local log appender to the root logger and asynchronously syncs it to an application-specific log file on HDFS (Spark Driver Log Dir).

Additionally, this collects spark-shell driver logs at INFO level by default. The change is that instead of setting the root logger level to WARN, we will set the consoleAppender threshold to WARN in the case of spark-shell. This ensures that only WARN logs are printed on CONSOLE but other log appenders still capture INFO (or the default log level logs).
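The difference between lowering the logger level and raising a single appender's threshold can be shown with an analogy in Python's standard `logging` module. This is an analogy only, not the log4j configuration used by Spark; the logger name and the in-memory streams are made up for the example.

```python
import io
import logging


def build_logger(console_stream, driver_log_stream):
    """Logger at INFO with a WARNING-only console handler and a second handler."""
    logger = logging.getLogger("driver-log-sketch")
    logger.setLevel(logging.INFO)  # the logger itself lets INFO through
    logger.propagate = False
    logger.handlers.clear()

    console = logging.StreamHandler(console_stream)
    console.setLevel(logging.WARNING)  # threshold on the console handler only
    logger.addHandler(console)

    # Stand-in for the driver log appender; it has no threshold of its own.
    logger.addHandler(logging.StreamHandler(driver_log_stream))
    return logger


console_out, driver_log = io.StringIO(), io.StringIO()
log = build_logger(console_out, driver_log)
log.info("job started")
log.warning("slow stage")
```

The console stream receives only the warning, while the second handler captures both messages.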

1. Verified that logs are written to local and remote dir

2. Added a unit test case

3. Verified this for spark-shell, client mode and pyspark.

4. Verified in both non-kerberos and kerberos environment

5. Verified with following unexpected termination conditions: Ctrl + C, Driver OOM, Large Log Files

6. Ran an application in spark-shell and ensured that driver logs were captured at INFO level

7. Started the application at WARN level, programmatically changed the level to INFO and ensured that logs on console were printed at INFO level

Closes #22504 from ankuriitg/ankurgupta/SPARK-25118.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Every time a task is unschedulable because of the condition where no. of task failures < no. of executors available, we currently abort the taskSet - failing the job. This change tries to acquire new executors so that we can complete the job successfully. We try to acquire a new executor only when we can kill an existing idle executor. We fall back to the older implementation, where we abort the job, if we cannot find an idle executor.
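The decision described above can be sketched as a small function. This is a simplified model of the decision, not the scheduler code: the function name, the return values, and the choice of which idle executor to kill are hypothetical.

```python
def handle_unschedulable_task_set(executor_is_idle):
    """Decide what to do when a task cannot be scheduled anywhere.

    executor_is_idle maps an executor id to True if that executor is idle.
    Returns ("kill_and_replace", executor_id) or ("abort", None).
    """
    idle_executors = sorted(
        executor_id
        for executor_id, is_idle in executor_is_idle.items()
        if is_idle
    )
    if idle_executors:
        # Killing an idle executor frees a slot so that a new one can be acquired.
        return ("kill_and_replace", idle_executors[0])
    # No idle executor: fall back to the old behaviour and abort.
    return ("abort", None)


with_idle = handle_unschedulable_task_set({"exec-1": False, "exec-2": True})
without_idle = handle_unschedulable_task_set({"exec-1": False})
```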

## How was this patch tested?

I performed some manual tests to check and validate the behavior.

```scala

val rdd = sc.parallelize(Seq(1 to 10), 3)

import org.apache.spark.TaskContext

val mapped = rdd.mapPartitionsWithIndex ( (index, iterator) => { if (index == 2) { Thread.sleep(30 * 1000); val attemptNum = TaskContext.get.attemptNumber; if (attemptNum < 3) throw new Exception("Fail for blacklisting")}; iterator.toList.map (x => x + " -> " + index).iterator } )

mapped.collect

```

Closes #22288 from dhruve/bug/SPARK-22148.

Lead-authored-by: Dhruve Ashar <dhruveashar@gmail.com>

Co-authored-by: Dhruve Ashar <dhruve@users.noreply.github.com>

Co-authored-by: Tom Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

Fix typos and misspellings, per https://github.com/apache/spark-website/pull/158#issuecomment-435790366

## How was this patch tested?

Existing tests.

Closes #22950 from srowen/Typos.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Change ptats.Stats() to pstats.Stats() for `spark.python.profile.dump` in configuration.md.

## How was this patch tested?

Doc test

Closes #22933 from AlexHagerman/doc_fix.

Authored-by: Alex Hagerman <alex@unexpectedeof.net>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Propose changing the documentation to state that there are 4, not 3, cluster managers available.

## How was this patch tested?

This is a docs-only patch and doesn't need any new testing beyond the normal CI process for Spark.

Closes #22922 from jameslamb/bugfix/cluster_docs.

Authored-by: James Lamb <jaylamb20@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Clarify documentation about security.

## How was this patch tested?

None, just documentation

Closes #22852 from tgravescs/SPARK-25023.

Authored-by: Thomas Graves <tgraves@thirteenroutine.corp.gq1.yahoo.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This turns off hdfs erasure coding by default for event logs, regardless of filesystem defaults. Because this requires apis only available in hadoop 3, this uses reflection. EC isn't a very good choice for event logs, as hflush() is a no-op, and so updates to the file are not visible for a long time. This can still be configured by setting "spark.eventLog.allowErasureCoding=true", which will use filesystem defaults.

## How was this patch tested?

Deployed a cluster with the changes with HDFS EC on. By default, event logs didn't use EC, but configuration still would allow EC. Also tried writing to the local fs (which doesn't support EC at all) and things worked fine.

Closes #22881 from squito/SPARK-25855.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Both Spark and Hive support views. However in some cases views created by Hive are not readable by Spark. For example, if column aliases are not specified in view definition queries, both Spark and Hive will generate alias names, but in different ways. In order for Spark to be able to read views created by Hive, users should explicitly specify column aliases in view definition queries.

Given that it's not uncommon that Hive and Spark are used together in enterprise data warehouse, this PR aims to explicitly describe this compatibility issue to help users troubleshoot this issue easily.

## How was this patch tested?

Docs are manually generated and checked locally.

```

SKIP_API=1 jekyll serve

```

Closes #22868 from seancxmao/SPARK-25833.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

New feature to pass podspec files for driver and executor pods.

## How was this patch tested?

new unit and integration tests

- [x] more overwrites in integration tests

- [ ] invalid template integration test, documentation

Author: Onur Satici <osatici@palantir.com>

Author: Yifei Huang <yifeih@palantir.com>

Author: onursatici <onursatici@gmail.com>

Closes #22146 from onursatici/pod-template.

## What changes were proposed in this pull request?

This PR documents the binary type in "Apache Arrow in Spark".

## How was this patch tested?

Manually built the documentation and checked.

Closes #22871 from HyukjinKwon/SPARK-25179.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Remove deprecated functions, which include:

- SQLContext/HiveContext stuff

- sparkR.init

- jsonFile

- parquetFile

- registerTempTable

- saveAsParquetFile

- unionAll

- createExternalTable

- dropTempTable

## How was this patch tested?

jenkins

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #22843 from felixcheung/rrddapi.

## What changes were proposed in this pull request?

Changed the `kubernetes-client` version and refactored code that broke as a result

## How was this patch tested?

Unit and Integration tests

Closes #22820 from ifilonenko/SPARK-25828.

Authored-by: Ilan Filonenko <ifilondz@gmail.com>

Signed-off-by: Erik Erlandson <eerlands@redhat.com>

## What changes were proposed in this pull request?

Remove SQLContext methods deprecated in 1.4

## How was this patch tested?

Existing tests.

Closes #22815 from srowen/SPARK-25821.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Add note about AddJar return value change in migration guide

## How was this patch tested?

n/a

Closes #22826 from srowen/SPARK-25760.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Since Spark 2.2, view definitions are stored in a different way from prior versions. This may leave Spark unable to read views created by prior versions. See [SPARK-25797](https://issues.apache.org/jira/browse/SPARK-25797) for more details.

Basically, we have 2 options.

1) Make Spark 2.2+ able to get older view definitions back. Since the expanded text is buggy and unusable, we have to use original text (this is possible with [SPARK-25459](https://issues.apache.org/jira/browse/SPARK-25459)). However, because older Spark versions don't save the context for the database, we cannot always get correct view definitions without view default database.

2) Recreate the views by `ALTER VIEW AS` or `CREATE OR REPLACE VIEW AS`.

This PR aims to add a migration doc to help users troubleshoot this issue using option 2 above.

## How was this patch tested?

N/A.

Docs are generated and checked locally

```

cd docs

SKIP_API=1 jekyll serve --watch

```

Closes #22846 from seancxmao/SPARK-25797.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently the function `from_avro` throws an exception on reading corrupt records.

In practice, there could be various reasons for data corruption. It would be good to support `PERMISSIVE` mode and allow the function `from_avro` to process all the input file/streaming, which is consistent with `from_json` and `from_csv`. There is no obvious downside to supporting `PERMISSIVE` mode.

Different from `from_csv` and `from_json`, the default parse mode is `FAILFAST` for the following reasons:

1. Since Avro is structured data format, input data is usually able to be parsed by certain schema. In such case, exposing the problems of input data to users is better than hiding it.

2. For `PERMISSIVE` mode, we have to force the data schema as fully nullable. This seems quite unnecessary for Avro. Reversing non-null schema might archive more perf optimizations in Spark.

3. To be consistent with the behavior in Spark 2.4 .

## How was this patch tested?

Unit test

Manually previewed the generated HTML for the Avro data source doc.

Closes#22814 from gengliangwang/improve_from_avro.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Check the `spark.sql.repl.eagerEval.enabled` configuration property in the SparkDataFrame `show()` method. If the `SparkSession` has eager execution enabled, the data will be returned to the R client when the data frame is created. So instead of seeing this

```
> df <- createDataFrame(faithful)
> df
SparkDataFrame[eruptions:double, waiting:double]
```

you will see

```
> df <- createDataFrame(faithful)
> df
+---------+-------+
|eruptions|waiting|
+---------+-------+
|      3.6|   79.0|
|      1.8|   54.0|
|    3.333|   74.0|
|    2.283|   62.0|
|    4.533|   85.0|
|    2.883|   55.0|
|      4.7|   88.0|
|      3.6|   85.0|
|     1.95|   51.0|
|     4.35|   85.0|
|    1.833|   54.0|
|    3.917|   84.0|
|      4.2|   78.0|
|     1.75|   47.0|
|      4.7|   83.0|
|    2.167|   52.0|
|     1.75|   62.0|
|      4.8|   84.0|
|      1.6|   52.0|
|     4.25|   79.0|
+---------+-------+
only showing top 20 rows
```

## How was this patch tested?

Manual tests as well as unit tests (one new test case is added).

Author: adrian555 <v2ave10p>

Closes#22455 from adrian555/eager_execution.

## What changes were proposed in this pull request?

As this is targeted for 3.0.0 and Python 2 will be deprecated by Jan 1st, 2020, I feel it is appropriate to change the default to Python 3, especially as the projects [listed here](https://python3statement.org/) are deprecating their support.

## How was this patch tested?

Unit and Integration tests

Author: Ilan Filonenko <ifilondz@gmail.com>

Closes#22810 from ifilonenko/SPARK-24516.

## What changes were proposed in this pull request?

In the PR, I propose to switch `from_json` to `FailureSafeParser`, to make the function use `PERMISSIVE` mode by default, and to support `FAILFAST` mode as well. The `DROPMALFORMED` mode is not supported by `from_json`.
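As a rough illustration of the two supported modes, the following Python sketch models the behavior with the standard `json` module. It is not Spark code: `parse_column` is a hypothetical helper, and returning `None` for a malformed record is a simplification of what `PERMISSIVE` mode produces.

```python
import json

SUPPORTED_MODES = ("PERMISSIVE", "FAILFAST")


def parse_column(values, mode="PERMISSIVE"):
    """Parse each JSON string; handle malformed input according to `mode`."""
    if mode not in SUPPORTED_MODES:
        # DROPMALFORMED is rejected, mirroring the description above.
        raise ValueError(f"unsupported mode: {mode}")
    parsed = []
    for value in values:
        try:
            parsed.append(json.loads(value))
        except json.JSONDecodeError:
            if mode == "FAILFAST":
                raise  # surface the first malformed record to the caller
            parsed.append(None)  # PERMISSIVE: keep the row, null out the result
    return parsed
```

With the input `['{"a": 1}', '{"a" 1}']`, the permissive call keeps both rows and nulls out the second, while the fail-fast call raises on the second record.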

## How was this patch tested?

It was tested by the existing `JsonSuite`/`CSVSuite`, `JsonFunctionsSuite` and `JsonExpressionsSuite`, as well as new tests for `from_json` that check the different modes.

Closes#22237 from MaxGekk/from_json-failuresafe.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Our current doc does not explain how we pass data source specific options to the underlying data source. Following [the review comment](https://github.com/apache/spark/pull/22622#discussion_r222911529), this PR adds more detailed information and examples.

## How was this patch tested?

Manual.

Closes#22801 from dongjoon-hyun/SPARK-25656.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This takes over the original PR at #22019. The original proposal was to return null for float and double types. Later, a more reasonable proposal was to disallow empty strings. This patch adds logic to throw an exception when empty strings are found for non-string types.

## How was this patch tested?

Added test.

Closes#22787 from viirya/SPARK-25040.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

add R API for PrefixSpan

## How was this patch tested?

add test in test_mllib_fpm.R

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes#21710 from huaxingao/spark-24207.

## What changes were proposed in this pull request?

Fix a minor error in the "sketch of pregel implementation" code in the GraphX guide.

The fixed error relates to `[SPARK-12995][GraphX] Remove deprecate APIs from Pregel`

## How was this patch tested?

N/A

Closes#22780 from WeichenXu123/minor_doc_update1.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Since the community has not tested Java 9 ~ 11 so far, fix the document to describe Java 8 only.

## How was this patch tested?

N/A (This is a document only change.)

Closes#22781 from dongjoon-hyun/SPARK-JDK-DOC.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Fix some broken links in the new document. I have clicked through all the links. Hopefully I haven't missed any :-)

## How was this patch tested?

Built using jekyll and verified the links.

Closes#22772 from dilipbiswal/doc_check.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

1. Split the main page of sql-programming-guide into 7 parts:

- Getting Started

- Data Sources

- Performance Tuning

- Distributed SQL Engine

- PySpark Usage Guide for Pandas with Apache Arrow

- Migration Guide

- Reference

2. Add left menu for sql-programming-guide, keep first level index for each part in the menu.

## How was this patch tested?

Local test with jekyll build/serve.

Closes#22746 from xuanyuanking/SPARK-24499.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Remove Kafka 0.8 integration

## How was this patch tested?

Existing tests, build scripts

Closes#22703 from srowen/SPARK-25705.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is the work on setting up Secure HDFS interaction with Spark-on-K8S.

The architecture is discussed in this community-wide Google [doc](https://docs.google.com/document/d/1RBnXD9jMDjGonOdKJ2bA1lN4AAV_1RwpU_ewFuCNWKg).

This initiative can be broken down into 4 stages:

**STAGE 1**

- [x] Detecting `HADOOP_CONF_DIR` environmental variable and using Config Maps to store all Hadoop config files locally, while also setting `HADOOP_CONF_DIR` locally in the driver / executors

**STAGE 2**

- [x] Grabbing `TGT` from `LTC` or using a keytab + principal and creating a `DT` that will be mounted as a secret or using a pre-populated secret

**STAGE 3**

- [x] Driver

**STAGE 4**

- [x] Executor

## How was this patch tested?

Locally tested on a single-node, pseudo-distributed Kerberized Hadoop cluster

- [x] E2E Integration tests https://github.com/apache/spark/pull/22608

- [ ] Unit tests

## Docs and Error Handling?

- [x] Docs

- [x] Error Handling

## Contribution Credit

kimoonkim skonto

Closes#21669 from ifilonenko/secure-hdfs.

Lead-authored-by: Ilan Filonenko <if56@cornell.edu>

Co-authored-by: Ilan Filonenko <ifilondz@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR adds CLI support for YARN custom resources, e.g. GPUs and any other resources YARN defines.

The custom resources are defined with Spark properties; no additional CLI arguments were introduced.

The properties can be defined in the following form:

**AM resources, client mode:**

Format: `spark.yarn.am.resource.<resource-name>`

The property name follows the naming convention of the YARN AM cores / memory properties: `spark.yarn.am.memory` and `spark.yarn.am.cores`.

**Driver resources, cluster mode:**

Format: `spark.yarn.driver.resource.<resource-name>`

The property name follows the naming convention of the driver cores / memory properties: `spark.driver.memory` and `spark.driver.cores`.

**Executor resources:**

Format: `spark.yarn.executor.resource.<resource-name>`

The property name follows the naming convention of the executor cores / memory properties: `spark.executor.memory` and `spark.executor.cores`.

For the driver resources (cluster mode) and executor resources properties, we use the `yarn` prefix here as custom resource types are specific to YARN, currently.

**Validation:**

Please note that validation logic was added to prevent requested resources from being defined in two ways. For example, defining the following configs:

```
"--conf", "spark.driver.memory=2G",
"--conf", "spark.yarn.driver.resource.memory=1G"
```

will not start execution and will print an error message.
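The check described above can be sketched in Python as follows. This is only an illustration, not the code added by the PR: the function name, the error wording, and the set of resource names treated as "standard" are assumptions.

```python
# Hypothetical sketch of the validation; not Spark's actual implementation.
# Maps each custom-resource prefix to the prefix of the standard properties.
CUSTOM_PREFIXES = {
    "spark.yarn.am.resource.": "spark.yarn.am.",
    "spark.yarn.driver.resource.": "spark.driver.",
    "spark.yarn.executor.resource.": "spark.executor.",
}
# Assumption: resource names that already have dedicated Spark properties.
STANDARD_RESOURCES = {"memory", "cores"}


def find_invalid_resource_confs(conf):
    """Return one error message per custom-resource key that names a standard resource."""
    errors = []
    for key in sorted(conf):
        for prefix, standard_prefix in CUSTOM_PREFIXES.items():
            if not key.startswith(prefix):
                continue
            resource = key[len(prefix):]
            if resource in STANDARD_RESOURCES:
                errors.append(
                    f"{key} is not allowed; use {standard_prefix}{resource} instead"
                )
    return errors


conf = {
    "spark.driver.memory": "2G",
    "spark.yarn.driver.resource.memory": "1G",
}
for message in find_invalid_resource_confs(conf):
    print(message)
```

A configuration that only requests a custom resource type, such as `spark.yarn.executor.resource.gpu`, yields no errors in this sketch.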

## How was this patch tested?

Unit tests + manual execution with Hadoop 2 and Hadoop 3 builds.

Testing was performed on a real cluster with Spark and YARN configured:

Cluster and client mode

Request Resource Types with lowercase and uppercase units

Start Spark job with only requesting standard resources (mem / cpu)

Error handling cases:

- Request unknown resource type

- Request a resource type (either memory / cpu) with duplicate configs at the same time, e.g. with this config:

```
--conf spark.yarn.am.resource.memory=1G \
--conf spark.yarn.driver.resource.memory=2G \
--conf spark.yarn.executor.resource.memory=3G
```

`ResourceTypeValidator` rejects these cases, so this is not permitted.

- Request a standard resource (memory / cpu) with the new-style configs, e.g. `--conf spark.yarn.am.resource.memory=1G`. This is not permitted and is handled correctly.

An example of how I ran the test cases:

```
cd ~; export HADOOP_CONF_DIR=/opt/hadoop/etc/hadoop/
./spark-2.4.0-SNAPSHOT-bin-custom-spark/bin/spark-submit \
  --class org.apache.spark.examples.SparkPi \
  --master yarn \
  --deploy-mode cluster \
  --driver-memory 1G \
  --driver-cores 1 \
  --executor-memory 1G \
  --executor-cores 1 \
  --conf spark.logConf=true \
  --conf spark.yarn.executor.resource.gpu=3G \
  --verbose \
  ./spark-2.4.0-SNAPSHOT-bin-custom-spark/examples/jars/spark-examples_2.11-2.4.0-SNAPSHOT.jar \
  10
```

Closes#20761 from szyszy/SPARK-20327.

Authored-by: Szilard Nemeth <snemeth@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Many companies have their own enterprise GitHub to manage Spark code. Building and testing in those repositories with Jenkins requires modifying this script.

So I suggest adding some environment variables to allow regression testing in an enterprise Jenkins instead of the default Spark repository on GitHub.

## How was this patch tested?

Manually tested.

Closes#22678 from LantaoJin/SPARK-25685.

Lead-authored-by: lajin <lajin@ebay.com>

Co-authored-by: LantaoJin <jinlantao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

According to the SQL standard, when a query contains `HAVING`, it indicates an aggregate operator. For more details please refer to https://blog.jooq.org/2014/12/04/do-you-really-understand-sqls-group-by-and-having-clauses/

However, the Spark SQL parser treats `HAVING` as a normal filter when there is no `GROUP BY`, which breaks SQL semantics and leads to wrong results. This PR fixes the parser.
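To make the difference concrete, here is a small Python model of the two interpretations. It does not use Spark. The helper names are hypothetical, and the query `SELECT 1 FROM range(10) HAVING true` is an illustrative example chosen for this sketch.

```python
def having_as_filter(rows, select, predicate):
    # Old parser behavior (modeled): HAVING acts like WHERE,
    # so there is one output row per matching input row.
    return [select(row) for row in rows if predicate(row)]


def having_as_global_aggregate(rows, select_agg, predicate_agg):
    # Standard behavior (modeled): all rows form a single group,
    # and HAVING keeps or drops that one group.
    group = list(rows)
    return [select_agg(group)] if predicate_agg(group) else []


rows = range(10)
# Models: SELECT 1 FROM range(10) HAVING true
filtered = having_as_filter(rows, lambda row: 1, lambda row: True)
aggregated = having_as_global_aggregate(rows, lambda group: 1, lambda group: True)
print(len(filtered), len(aggregated))  # 10 rows as a filter, 1 row as an aggregate
```

The filter interpretation returns one row per input row, while the aggregate interpretation returns at most one row for the whole input.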

## How was this patch tested?

new test

Closes#22696 from cloud-fan/having.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Removes all vestiges of Flume in the build, for Spark 3.

I don't think this needs Jenkins config changes.

## How was this patch tested?

Existing tests.

Closes#22692 from srowen/SPARK-25598.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Remove Hadoop 2.6 references and make 2.7 the default.

Obviously, this is for master/3.0.0 only.

After this we can also get rid of the separate test jobs for Hadoop 2.6.

## How was this patch tested?

Existing tests

Closes#22615 from srowen/SPARK-25016.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up pr of #18536 and #22545 to update the migration guide.

## How was this patch tested?

Build and check the doc locally.

Closes#22682 from ueshin/issues/SPARK-20946_25525/migration_guide.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Added

- Python foreach

- Scala, Java and Python foreachBatch

- Multiple watermark policy

- The semantics of what changes are allowed to the streaming between restarts.

## How was this patch tested?

No tests

Closes#22627 from tdas/SPARK-25639.

Authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Signed-off-by: Tathagata Das <tathagata.das1565@gmail.com>

## What changes were proposed in this pull request?

Documentation is updated with the proper class name `org.apache.spark.io.ZStdCompressionCodec`.

## How was this patch tested?

We set `spark.io.compression.codec` to `org.apache.spark.io.ZStdCompressionCodec` and verified the logs.

Closes#22669 from shivusondur/CompressionIssue.

Authored-by: shivusondur <shivusondur@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.0.0-SNAPSHOT.

## How was this patch tested?

N/A

Closes#22606 from gatorsmile/bump3.0.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR aims to fix the failed test of `OracleIntegrationSuite`.

## How was this patch tested?

Existing integration tests.

Closes#22461 from seancxmao/SPARK-25453.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Since we don't fail a job when `AccumulatorV2.merge` fails, we should try to update the remaining accumulators so that they can still report correct values.
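The idea can be sketched in Python as below. The classes and the `update_accumulators` helper are hypothetical stand-ins for illustration only, not Spark's scheduler code: a failing `merge` is recorded, and the loop carries on with the remaining accumulators.

```python
class SumAccumulator:
    """Toy accumulator that adds up merged values."""

    def __init__(self):
        self.value = 0

    def merge(self, update):
        self.value += update


class BrokenAccumulator:
    """Toy accumulator whose merge always fails."""

    def merge(self, update):
        raise RuntimeError("merge failed")


def update_accumulators(accumulators, updates):
    """Apply each update; collect failures instead of stopping at the first one."""
    failures = []
    for acc_id, update in updates:
        try:
            accumulators[acc_id].merge(update)
        except Exception as exc:  # keep going so later accumulators are still updated
            failures.append((acc_id, exc))
    return failures


accumulators = {1: SumAccumulator(), 2: BrokenAccumulator(), 3: SumAccumulator()}
failures = update_accumulators(accumulators, [(1, 5), (2, 1), (3, 7)])
```

In this sketch, accumulator 3 still receives its update even though accumulator 2 failed before it.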

## How was this patch tested?

The new unit test.

Closes#22586 from zsxwing/SPARK-25568.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Markdown links do not work inside an HTML table. We should use the HTML link tag instead.

## How was this patch tested?

Verified in IntelliJ IDEA's markdown editor and online markdown editor.

Closes#22588 from viirya/SPARK-25262-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This adds a missing end markup tag. This should go to the `master` branch only.

## How was this patch tested?

This is a doc-only change. Manual via `SKIP_API=1 jekyll build`.

Closes#22584 from dongjoon-hyun/SPARK-25262.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This adds a missing markup tag. This should go to `master/branch-2.4`.

## How was this patch tested?

Manual via `SKIP_API=1 jekyll build`.

Closes#22585 from dongjoon-hyun/SPARK-23285.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

SparkSubmit already logs in the user if a keytab is provided; the only issue is that it uses the existing configs, which have "yarn" in their name. As such, the configs were changed to:

`spark.kerberos.keytab` and `spark.kerberos.principal`.
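One common way to handle such a rename is to look up the new key first and fall back to the old one. The Python sketch below illustrates that pattern only. The old key names `spark.yarn.keytab` and `spark.yarn.principal`, and the fallback behavior itself, are assumptions of this sketch and are not stated in this description.

```python
# Assumption: the pre-rename names, used here only as illustrative fallbacks.
DEPRECATED_ALTERNATIVES = {
    "spark.kerberos.keytab": "spark.yarn.keytab",
    "spark.kerberos.principal": "spark.yarn.principal",
}


def get_conf(conf, key):
    """Return the value for `key`, falling back to its deprecated name if present."""
    if key in conf:
        return conf[key]
    old_key = DEPRECATED_ALTERNATIVES.get(key)
    return conf.get(old_key) if old_key else None


old_style = {"spark.yarn.keytab": "/path/to/user.keytab"}
new_style = {"spark.kerberos.keytab": "/path/to/user.keytab"}
print(get_conf(old_style, "spark.kerberos.keytab") == get_conf(new_style, "spark.kerberos.keytab"))
```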

## How was this patch tested?

Will be tested with K8S tests, but also needs to be tested with YARN

- [x] K8S Secure HDFS tests

- [x] Yarn Secure HDFS tests vanzin

Closes#22362 from ifilonenko/SPARK-25372.

Authored-by: Ilan Filonenko <if56@cornell.edu>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Describe spark.sql.parquet.writeLegacyFormat property in Spark SQL, DataFrames and Datasets Guide.

## How was this patch tested?

N/A

Closes#22453 from seancxmao/SPARK-20937.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

One more legacy config to go ...

Closes#22515 from rxin/allowCreatingManagedTableUsingNonemptyLocation.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

See title. Makes our legacy backward compatibility configs more consistent.

## How was this patch tested?

Make sure all references have been updated:

```

> git grep compareDateTimestampInTimestamp

docs/sql-programming-guide.md: - Since Spark 2.4, Spark compares a DATE type with a TIMESTAMP type after promotes both sides to TIMESTAMP. To set `false` to `spark.sql.legacy.compareDateTimestampInTimestamp` restores the previous behavior. This option will be removed in Spark 3.0.

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/TypeCoercion.scala: // if conf.compareDateTimestampInTimestamp is true

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/TypeCoercion.scala: => if (conf.compareDateTimestampInTimestamp) Some(TimestampType) else Some(StringType)

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/TypeCoercion.scala: => if (conf.compareDateTimestampInTimestamp) Some(TimestampType) else Some(StringType)

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala: buildConf("spark.sql.legacy.compareDateTimestampInTimestamp")

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala: def compareDateTimestampInTimestamp : Boolean = getConf(COMPARE_DATE_TIMESTAMP_IN_TIMESTAMP)

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/TypeCoercionSuite.scala: "spark.sql.legacy.compareDateTimestampInTimestamp" -> convertToTS.toString) {

```

Closes#22508 from rxin/SPARK-23549.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This reverts commit 417ad92502.

We decided to keep the current behaviors unchanged and will consider whether to deprecate these functions in 3.0. For more details, see the discussion in https://issues.apache.org/jira/browse/SPARK-23715

## How was this patch tested?

The existing tests.

Closes#22505 from gatorsmile/revertSpark-23715.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Hadoop 2.6 and Hadoop 2.7 do not contain the zstd and brotli compression codecs, and Hadoop 3.1 contains only the zstd compression codec.

So I think we should remove zstd and brotli for the time being.

**set `spark.sql.parquet.compression.codec=brotli`:**

Caused by: org.apache.parquet.hadoop.BadConfigurationException: Class org.apache.hadoop.io.compress.BrotliCodec was not found

at org.apache.parquet.hadoop.CodecFactory.getCodec(CodecFactory.java:235)

at org.apache.parquet.hadoop.CodecFactory$HeapBytesCompressor.<init>(CodecFactory.java:142)

at org.apache.parquet.hadoop.CodecFactory.createCompressor(CodecFactory.java:206)

at org.apache.parquet.hadoop.CodecFactory.getCompressor(CodecFactory.java:189)

at org.apache.parquet.hadoop.ParquetRecordWriter.<init>(ParquetRecordWriter.java:153)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:411)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:349)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:161)

**set `spark.sql.parquet.compression.codec=zstd`:**

Caused by: org.apache.parquet.hadoop.BadConfigurationException: Class org.apache.hadoop.io.compress.ZStandardCodec was not found

at org.apache.parquet.hadoop.CodecFactory.getCodec(CodecFactory.java:235)

at org.apache.parquet.hadoop.CodecFactory$HeapBytesCompressor.<init>(CodecFactory.java:142)

at org.apache.parquet.hadoop.CodecFactory.createCompressor(CodecFactory.java:206)

at org.apache.parquet.hadoop.CodecFactory.getCompressor(CodecFactory.java:189)

at org.apache.parquet.hadoop.ParquetRecordWriter.<init>(ParquetRecordWriter.java:153)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:411)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:349)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:161)

## How was this patch tested?

Existing unit tests

Closes#22358 from 10110346/notsupportzstdandbrotil.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In ArrayContains, we currently cast the right-hand side expression to match the element type of the left-hand side array. This may result in downcasting and may return wrong or questionable results.

Example :

```SQL
spark-sql> select array_contains(array(1), 1.34);
true
```

```SQL
spark-sql> select array_contains(array(1), 'foo');
null
```

We should safely coerce both left and right hand side expressions.
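The numeric case can be modeled with a short Python sketch. This is not Spark's type coercion code: both helper names are hypothetical, and widening to `float` stands in for "find a common type for both sides".

```python
def contains_with_downcast(array, value):
    # Old behavior (modeled): cast the probe value to the array's element type,
    # which silently truncates 1.34 to 1.
    element_type = type(array[0])
    return element_type(value) in array


def contains_with_common_type(array, value):
    # Safer behavior (modeled): widen both sides to a common type before comparing.
    return float(value) in [float(element) for element in array]


print(contains_with_downcast([1], 1.34))     # True, the questionable result
print(contains_with_common_type([1], 1.34))  # False
```

The sketch covers only the numeric example; the string case (`'foo'`) is not modeled here.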

## How was this patch tested?

Added tests in DataFrameFunctionsSuite

Closes#22408 from dilipbiswal/SPARK-25417.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The PR updates the migration guide in order to explain the changes introduced in the behavior of the IN operator with subqueries, in particular, the improved handling of struct attributes in these situations.

## How was this patch tested?

NA

Closes#22469 from mgaido91/SPARK-24341_followup.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

To be more consistent with other statistics based configs.

## How was this patch tested?

N/A - straightforward rename of a config option. Used `git grep` to make sure there are no remaining mentions of the old name.

Closes#22457 from rxin/SPARK-24626.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Update `tuning.md` and `rdd-programming-guide.md` to use JDK8.

## How was this patch tested?

manual tests

Closes#22446 from wangyum/java8.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

SPARK-22333 introduced a regression in the resolution of `CURRENT_DATE` and `CURRENT_TIMESTAMP`. Before that ticket, these 2 functions were resolved in a case insensitive way. After, this depends on the value of `spark.sql.caseSensitive`.

The PR restores the previous behavior and makes their resolution case insensitive regardless of that setting. The PR takes over #21217, therefore it closes #21217, and credit for this patch should be given to jamesthomp.

## How was this patch tested?

added UT

Closes#22440 from mgaido91/SPARK-24151.

Lead-authored-by: James Thompson <jamesthomp@users.noreply.github.com>

Co-authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Updated the Migration guide for the behavior changes done in the JIRA issue SPARK-23425.

## How was this patch tested?

Manually verified.

Closes#22396 from sujith71955/master_newtest.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the dev list, we can still discuss whether the next version is 2.5.0 or 3.0.0. Let us first bump the master branch version to `2.5.0-SNAPSHOT`.

## How was this patch tested?

N/A

Closes#22426 from gatorsmile/bumpVersionMaster.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Add description of Executor Task Metrics to the documentation.

Closes#22397 from LucaCanali/docMonitoringTaskMetrics.

Authored-by: LucaCanali <luca.canali@cern.ch>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

This is a rework of #21433 to address some concerns there.

Closes#22398 from michaelmior/long-callsite2.

Authored-by: Michael Mior <mmior@uwaterloo.ca>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the PR, I propose a new CSV option `emptyValue` and an update to the SQL Migration Guide that describes how to restore the previous behavior, in which empty strings were not written at all. Since Spark 2.4, empty strings are saved as `""` to distinguish them from saved `null`s.
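The distinction between empty strings and nulls can be illustrated with a minimal Python sketch. It is not the Spark CSV writer: `to_csv_line` and its parameter names are hypothetical, and quoting or escaping of ordinary fields is deliberately left out.

```python
def to_csv_line(fields, empty_value='""', null_value=""):
    """Join fields with commas, encoding "" and None differently."""
    encoded = []
    for field in fields:
        if field is None:
            encoded.append(null_value)   # null: nothing written by default
        elif field == "":
            encoded.append(empty_value)  # empty string: written as "" by default
        else:
            encoded.append(field)
    return ",".join(encoded)


print(to_csv_line(["a", "", None]))                  # a,"",
print(to_csv_line(["a", "", None], empty_value=""))  # a,,
```

Passing an empty `empty_value` in this sketch corresponds to the earlier behavior, where an empty string and a null are indistinguishable in the output.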

Closes #22234

Closes #22367

## How was this patch tested?

It was tested by `CSVSuite` and new tests added in PR #22234.

Closes #22389 from MaxGekk/csv-empty-value-master.

Lead-authored-by: Mario Molina <mmolimar@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Add spark.executor.pyspark.memory limit for K8S

## How was this patch tested?

Unit and Integration tests

Closes#22298 from ifilonenko/SPARK-25021.

Authored-by: Ilan Filonenko <if56@cornell.edu>

Signed-off-by: Holden Karau <holden@pigscanfly.ca>

## What changes were proposed in this pull request?

How to reproduce permission issue:

```sh
# build spark
./dev/make-distribution.sh --name SPARK-25330 --tgz -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn
tar -zxf spark-2.4.0-SNAPSHOT-bin-SPARK-25330.tar && cd spark-2.4.0-SNAPSHOT-bin-SPARK-25330
export HADOOP_PROXY_USER=user_a
bin/spark-sql
export HADOOP_PROXY_USER=user_b
bin/spark-sql
```

```java

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.security.AccessControlException: Permission denied: user=user_b, access=EXECUTE, inode="/tmp/hive-$%7Buser.name%7D/user_b/668748f2-f6c5-4325-a797-fd0a7ee7f4d4":user_b:hadoop:drwx------

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkTraverse(FSPermissionChecker.java:259)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:205)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)

```

The issue was introduced in this commit: feb886f209. This PR reverts Hadoop 2.7 to 2.7.3 to avoid this issue.

## How was this patch tested?

unit tests and manual tests.

Closes#22327 from wangyum/SPARK-25330.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The default behaviour of Spark on K8S currently is to create `emptyDir` volumes to back `SPARK_LOCAL_DIRS`. In some environments, e.g. diskless compute nodes, this may actually hurt performance because these volumes are backed by the Kubelet's node storage, which on a diskless node will typically be some remote network storage.

Even if this is enterprise-grade storage connected via a high-speed interconnect, the way Spark uses these directories as scratch space (lots of relatively small, short-lived files) has been observed to cause serious performance degradation. We would therefore like to provide the option to use K8S's ability to back these `emptyDir` volumes with `tmpfs` instead. This PR adds a configuration option that enables `SPARK_LOCAL_DIRS` to be backed by memory-backed `emptyDir` volumes rather than the default.

Documentation is added to describe both the default behaviour and this new option and its implications. One implication is that scratch space then counts towards your pod's memory limits, so users will need to adjust their memory requests accordingly.

*NB* - This is an alternative version of PR #22256 reduced to just the `tmpfs` piece

## How was this patch tested?

Ran with this option in our diskless compute environments to verify functionality

Author: Rob Vesse <rvesse@dotnetrdf.org>

Closes#22323 from rvesse/SPARK-25262-tmpfs.

## What changes were proposed in this pull request?

Upgrade chill to 0.9.3, Kryo to 4.0.2, to get bug fixes and improvements.

The resolved tickets include:

- SPARK-25258 Upgrade kryo package to version 4.0.2

- SPARK-23131 Kryo raises StackOverflow during serializing GLR model

- SPARK-25176 Kryo fails to serialize a parametrised type hierarchy

More details:

https://github.com/twitter/chill/releases/tag/v0.9.3

cc3910d501

## How was this patch tested?

Existing tests.

Closes#22179 from wangyum/SPARK-23131.

Lead-authored-by: Yuming Wang <yumwang@ebay.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Improve build for Scala 2.12. Current build for sbt fails on the subproject `repl`:

```

[info] Compiling 6 Scala sources to /Users/rendong/wdi/spark/repl/target/scala-2.12/classes...

[error] /Users/rendong/wdi/spark/repl/scala-2.11/src/main/scala/org/apache/spark/repl/SparkILoopInterpreter.scala:80: overriding lazy value importableSymbolsWithRenames in class ImportHandler of type List[(this.intp.global.Symbol, this.intp.global.Name)];

[error] lazy value importableSymbolsWithRenames needs `override' modifier

[error] lazy val importableSymbolsWithRenames: List[(Symbol, Name)] = {

[error] ^

[warn] /Users/rendong/wdi/spark/repl/src/main/scala/org/apache/spark/repl/SparkILoop.scala:53: variable addedClasspath in class ILoop is deprecated (since 2.11.0): use reset, replay or require to update class path

[warn] if (addedClasspath != "") {

[warn] ^

[warn] /Users/rendong/wdi/spark/repl/src/main/scala/org/apache/spark/repl/SparkILoop.scala:54: variable addedClasspath in class ILoop is deprecated (since 2.11.0): use reset, replay or require to update class path

[warn] settings.classpath append addedClasspath

[warn] ^

[warn] two warnings found

[error] one error found

[error] (repl/compile:compileIncremental) Compilation failed

[error] Total time: 93 s, completed 2018-9-3 10:07:26

```

## How was this patch tested?

```
./dev/change-scala-version.sh 2.12
## For Maven
./build/mvn -Pscala-2.12 [mvn commands]
## For SBT
sbt -Dscala.version=2.12.6
```

Closes#22310 from sadhen/SPARK-25298.

Authored-by: Darcy Shen <sadhen@zoho.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

As described in [SPARK-25261](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-25261), the unit of `spark.executor.memory` and `spark.driver.memory` is parsed as bytes in some cases if no unit is specified, while in https://spark.apache.org/docs/latest/configuration.html#application-properties they are described as MiB, which may lead to some misunderstandings.
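The following Python sketch shows why the default unit matters. It is not Spark's size parser: `to_bytes` and the unit table are hypothetical, and only single-letter binary suffixes are handled.

```python
import re

# Assumption: single-letter binary units, as an illustration only.
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3, "t": 1024**4}


def to_bytes(value, default_unit):
    """Convert a size string to bytes, applying `default_unit` when no unit is given."""
    match = re.fullmatch(r"(\d+)([bkmgt]?)", value.strip().lower())
    if match is None:
        raise ValueError(f"cannot parse size: {value!r}")
    number, unit = match.groups()
    return int(number) * UNITS[unit or default_unit]


print(to_bytes("1024", default_unit="b"))  # read as bytes: 1024
print(to_bytes("1024", default_unit="m"))  # read as MiB: 1073741824
```

A value with an explicit unit, such as `1g`, gives the same result under either default, which is why specifying the unit avoids the ambiguity.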

## How was this patch tested?

N/A

Closes#22252 from ivoson/branch-correct-configuration.

Lead-authored-by: huangtengfei02 <huangtengfei02@baidu.com>

Co-authored-by: Huang Tengfei <tengfei.h@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>