### What changes were proposed in this pull request?

In [SPARK-33139] we marked `setActiveSession` and `clearActiveSession` as deprecated APIs. It turns out they are widely used, and after discussion we concluded that, even without that PR, they should work with the unified view feature; they might only pose a risk if a user really abuses these two APIs. So the PR needs to be reverted.

[SPARK-33139] has two commits, including a follow-up. This PR reverts both.

### Why are the changes needed?

Revert.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UT.

Closes #30367 from leanken/leanken-revert-SPARK-33139.

Authored-by: xuewei.linxuewei <xuewei.linxuewei@alibaba-inc.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to migrate to [NumPy documentation style](https://numpydoc.readthedocs.io/en/latest/format.html), see also SPARK-33243.

While migrating, I also fixed some Python type hints accordingly.
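As a rough illustration of the target format, a NumPy-style docstring could look like the sketch below. The function `scale` and its parameters are hypothetical and are not part of PySpark; only the section layout (`Parameters`, `Returns`, `Examples`) follows the numpydoc convention.

```python
def scale(values, factor=2.0):
    """
    Multiply every element of a sequence by a constant.

    Parameters
    ----------
    values : list of float
        Numbers to scale.
    factor : float, optional
        Multiplier applied to each element. Defaults to 2.0.

    Returns
    -------
    list of float
        A new list with each input multiplied by ``factor``.

    Examples
    --------
    >>> scale([1.0, 2.5], factor=2.0)
    [2.0, 5.0]
    """
    # Hypothetical helper, used only to carry the docstring above.
    return [v * factor for v in values]
```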

### Why are the changes needed?

For better documentation, both as plain text and as generated HTML.

### Does this PR introduce _any_ user-facing change?

Yes, users will see better-formatted HTML and better plain-text formatting. See SPARK-33243.

### How was this patch tested?

Manually tested via running `./dev/lint-python`.

Closes #30181 from HyukjinKwon/SPARK-33250.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In [SPARK-33139](https://github.com/apache/spark/pull/30042), I used reflection (`Class.forName`) in Python code to invoke a method in SparkSession, which is not recommended. This PR uses `getattr` to access `SparkSession$.MODULE$` instead.
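The reason `getattr` is needed can be sketched without a JVM: names containing `$` are not valid Python identifiers, so they cannot be written with dot syntax. The sketch below uses a stand-in namespace rather than a real Py4J gateway; `fake_jvm` and the stored value are hypothetical.

```python
from types import SimpleNamespace

# Stand-in for a Scala companion object; a real Py4J gateway is not used here.
companion = SimpleNamespace()
setattr(companion, "MODULE$", "singleton-instance")  # hypothetical value

# Stand-in for a JVM view exposing the companion class by name.
fake_jvm = SimpleNamespace()
setattr(fake_jvm, "SparkSession$", companion)

# `fake_jvm.SparkSession$.MODULE$` would be a SyntaxError in Python,
# so names containing `$` are looked up with getattr instead.
module = getattr(getattr(fake_jvm, "SparkSession$"), "MODULE$")
```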

### Why are the changes needed?

Code refinement.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #30092 from leanken/leanken-SPARK-33139-followup.

Authored-by: xuewei.linxuewei <xuewei.linxuewei@alibaba-inc.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR is a sub-task of [SPARK-33138](https://issues.apache.org/jira/browse/SPARK-33138). In order to make `SQLConf.get` reliable and stable, we need to make sure users can't pollute the SQLConf and SparkSession context by calling `setActiveSession` and `clearActiveSession`.

Changes in this PR:

* Add the legacy config `spark.sql.legacy.allowModifyActiveSession` to fall back to the old behavior if users do need to call these two APIs.

* By default, calling these two APIs throws an exception.

* Add two internal, private APIs, `setActiveSessionInternal` and `clearActiveSessionInternal`, for current internal usage.

* Change all internal references to the new internal APIs, except for `SQLContext.setActive` and `SQLContext.clearActive`.
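The guard described in the list above can be sketched in plain Python. This is a toy model under stated assumptions, not Spark's implementation: `SessionRegistry`, its method names, and the error message are all hypothetical, and the constructor flag merely stands in for `spark.sql.legacy.allowModifyActiveSession`.

```python
class SessionRegistry:
    """Toy registry; not Spark's actual implementation."""

    def __init__(self, allow_modify_active_session=False):
        # Stands in for spark.sql.legacy.allowModifyActiveSession.
        self._allow_modify = allow_modify_active_session
        self._active = None

    def _set_active_session_internal(self, session):
        # Internal path: always allowed.
        self._active = session

    def set_active_session(self, session):
        # Public path: rejected unless the legacy flag is enabled.
        if not self._allow_modify:
            raise RuntimeError(
                "setActiveSession is disabled; enable the legacy flag to use it"
            )
        self._set_active_session_internal(session)

    def get_active_session(self):
        return self._active


# With the legacy flag on, the public call behaves as before.
legacy = SessionRegistry(allow_modify_active_session=True)
legacy.set_active_session("session-1")
```

Internal callers go through the underscore-prefixed method, so they keep working regardless of the flag.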

### Why are the changes needed?

Make SQLConf.get reliable and stable.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

* Add a UT in SparkSessionBuilderSuite to test the legacy config

* Existing tests

Closes #30042 from leanken/leanken-SPARK-33139.

Authored-by: xuewei.linxuewei <xuewei.linxuewei@alibaba-inc.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Fixes a typo in the docstring of `toDF`.

### Why are the changes needed?

The third argument of `toDF` is actually `sampleRatio`.

related discussion: https://github.com/apache/spark/pull/12746#discussion-diff-62704834

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

This patch doesn't affect any logic, so existing tests should cover it.

Closes #29551 from unirt/minor_fix_docs.

Authored-by: unirt <lunirtc@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

As discussed in https://github.com/apache/spark/pull/29491#discussion_r474451282 and in SPARK-32686, this PR un-deprecates Spark's ability to infer a DataFrame schema from a list of dictionaries. The ability is Pythonic and matches functionality offered by Pandas.

### Why are the changes needed?

This change clarifies to users that this behavior is supported and is not going away in the near future.

### Does this PR introduce _any_ user-facing change?

Yes. There used to be a `UserWarning` for this, but now there isn't.

### How was this patch tested?

I tested this manually.

Before:

```python
>>> spark.createDataFrame(spark.sparkContext.parallelize([{'a': 5}]))
/Users/nchamm/Documents/GitHub/nchammas/spark/python/pyspark/sql/session.py:388: UserWarning: Using RDD of dict to inferSchema is deprecated. Use pyspark.sql.Row instead
  warnings.warn("Using RDD of dict to inferSchema is deprecated. "
DataFrame[a: bigint]
>>> spark.createDataFrame([{'a': 5}])
.../python/pyspark/sql/session.py:378: UserWarning: inferring schema from dict is deprecated,please use pyspark.sql.Row instead
  warnings.warn("inferring schema from dict is deprecated,"
DataFrame[a: bigint]
```

After:

```python
>>> spark.createDataFrame(spark.sparkContext.parallelize([{'a': 5}]))
DataFrame[a: bigint]
>>> spark.createDataFrame([{'a': 5}])
DataFrame[a: bigint]
```

Closes #29510 from nchammas/SPARK-32686-df-dict-infer-schema.

Authored-by: Nicholas Chammas <nicholas.chammas@liveramp.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

Disallow unused imports:

- They unnecessarily increase the memory footprint of the application.

- This PR moves the imports that are required only for the docstring examples from file scope into the examples themselves. This keeps the files clean and gives more complete examples, since they also include the imports :)

```

fokkodriesprongFan spark % flake8 python | grep -i "imported but unused"

python/pyspark/cloudpickle.py:46:1: F401 'functools.partial' imported but unused

python/pyspark/cloudpickle.py:55:1: F401 'traceback' imported but unused

python/pyspark/heapq3.py:868:5: F401 '_heapq.*' imported but unused

python/pyspark/__init__.py:61:1: F401 'pyspark.version.__version__' imported but unused

python/pyspark/__init__.py:62:1: F401 'pyspark._globals._NoValue' imported but unused

python/pyspark/__init__.py:115:1: F401 'pyspark.sql.SQLContext' imported but unused

python/pyspark/__init__.py:115:1: F401 'pyspark.sql.HiveContext' imported but unused

python/pyspark/__init__.py:115:1: F401 'pyspark.sql.Row' imported but unused

python/pyspark/rdd.py:21:1: F401 're' imported but unused

python/pyspark/rdd.py:29:1: F401 'tempfile.NamedTemporaryFile' imported but unused

python/pyspark/mllib/regression.py:26:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/clustering.py:28:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/clustering.py:28:1: F401 'pyspark.mllib.linalg.DenseVector' imported but unused

python/pyspark/mllib/classification.py:26:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/feature.py:28:1: F401 'pyspark.mllib.linalg.DenseVector' imported but unused

python/pyspark/mllib/feature.py:28:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/feature.py:30:1: F401 'pyspark.mllib.regression.LabeledPoint' imported but unused

python/pyspark/mllib/tests/test_linalg.py:18:1: F401 'sys' imported but unused

python/pyspark/mllib/tests/test_linalg.py:642:5: F401 'pyspark.mllib.tests.test_linalg.*' imported but unused

python/pyspark/mllib/tests/test_feature.py:21:1: F401 'numpy.random' imported but unused

python/pyspark/mllib/tests/test_feature.py:21:1: F401 'numpy.exp' imported but unused

python/pyspark/mllib/tests/test_feature.py:23:1: F401 'pyspark.mllib.linalg.Vector' imported but unused

python/pyspark/mllib/tests/test_feature.py:23:1: F401 'pyspark.mllib.linalg.VectorUDT' imported but unused

python/pyspark/mllib/tests/test_feature.py:185:5: F401 'pyspark.mllib.tests.test_feature.*' imported but unused

python/pyspark/mllib/tests/test_util.py:97:5: F401 'pyspark.mllib.tests.test_util.*' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.Vector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.SparseVector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.DenseVector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.VectorUDT' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg._convert_to_vector' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.DenseMatrix' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.SparseMatrix' imported but unused

python/pyspark/mllib/tests/test_stat.py:23:1: F401 'pyspark.mllib.linalg.MatrixUDT' imported but unused

python/pyspark/mllib/tests/test_stat.py:181:5: F401 'pyspark.mllib.tests.test_stat.*' imported but unused

python/pyspark/mllib/tests/test_streaming_algorithms.py:18:1: F401 'time.time' imported but unused

python/pyspark/mllib/tests/test_streaming_algorithms.py:18:1: F401 'time.sleep' imported but unused

python/pyspark/mllib/tests/test_streaming_algorithms.py:470:5: F401 'pyspark.mllib.tests.test_streaming_algorithms.*' imported but unused

python/pyspark/mllib/tests/test_algorithms.py:295:5: F401 'pyspark.mllib.tests.test_algorithms.*' imported but unused

python/pyspark/tests/test_serializers.py:90:13: F401 'xmlrunner' imported but unused

python/pyspark/tests/test_rdd.py:21:1: F401 'sys' imported but unused

python/pyspark/tests/test_rdd.py:29:1: F401 'pyspark.resource.ResourceProfile' imported but unused

python/pyspark/tests/test_rdd.py:885:5: F401 'pyspark.tests.test_rdd.*' imported but unused

python/pyspark/tests/test_readwrite.py:19:1: F401 'sys' imported but unused

python/pyspark/tests/test_readwrite.py:22:1: F401 'array.array' imported but unused

python/pyspark/tests/test_readwrite.py:309:5: F401 'pyspark.tests.test_readwrite.*' imported but unused

python/pyspark/tests/test_join.py:62:5: F401 'pyspark.tests.test_join.*' imported but unused

python/pyspark/tests/test_taskcontext.py:19:1: F401 'shutil' imported but unused

python/pyspark/tests/test_taskcontext.py:325:5: F401 'pyspark.tests.test_taskcontext.*' imported but unused

python/pyspark/tests/test_conf.py:36:5: F401 'pyspark.tests.test_conf.*' imported but unused

python/pyspark/tests/test_broadcast.py:148:5: F401 'pyspark.tests.test_broadcast.*' imported but unused

python/pyspark/tests/test_daemon.py:76:5: F401 'pyspark.tests.test_daemon.*' imported but unused

python/pyspark/tests/test_util.py:77:5: F401 'pyspark.tests.test_util.*' imported but unused

python/pyspark/tests/test_pin_thread.py:19:1: F401 'random' imported but unused

python/pyspark/tests/test_pin_thread.py:149:5: F401 'pyspark.tests.test_pin_thread.*' imported but unused

python/pyspark/tests/test_worker.py:19:1: F401 'sys' imported but unused

python/pyspark/tests/test_worker.py:26:5: F401 'resource' imported but unused

python/pyspark/tests/test_worker.py:203:5: F401 'pyspark.tests.test_worker.*' imported but unused

python/pyspark/tests/test_profiler.py:101:5: F401 'pyspark.tests.test_profiler.*' imported but unused

python/pyspark/tests/test_shuffle.py:18:1: F401 'sys' imported but unused

python/pyspark/tests/test_shuffle.py:171:5: F401 'pyspark.tests.test_shuffle.*' imported but unused

python/pyspark/tests/test_rddbarrier.py:43:5: F401 'pyspark.tests.test_rddbarrier.*' imported but unused

python/pyspark/tests/test_context.py:129:13: F401 'userlibrary.UserClass' imported but unused

python/pyspark/tests/test_context.py:140:13: F401 'userlib.UserClass' imported but unused

python/pyspark/tests/test_context.py:310:5: F401 'pyspark.tests.test_context.*' imported but unused

python/pyspark/tests/test_appsubmit.py:241:5: F401 'pyspark.tests.test_appsubmit.*' imported but unused

python/pyspark/streaming/dstream.py:18:1: F401 'sys' imported but unused

python/pyspark/streaming/tests/test_dstream.py:27:1: F401 'pyspark.RDD' imported but unused

python/pyspark/streaming/tests/test_dstream.py:647:5: F401 'pyspark.streaming.tests.test_dstream.*' imported but unused

python/pyspark/streaming/tests/test_kinesis.py:83:5: F401 'pyspark.streaming.tests.test_kinesis.*' imported but unused

python/pyspark/streaming/tests/test_listener.py:152:5: F401 'pyspark.streaming.tests.test_listener.*' imported but unused

python/pyspark/streaming/tests/test_context.py:178:5: F401 'pyspark.streaming.tests.test_context.*' imported but unused

python/pyspark/testing/utils.py:30:5: F401 'scipy.sparse' imported but unused

python/pyspark/testing/utils.py:36:5: F401 'numpy as np' imported but unused

python/pyspark/ml/regression.py:25:1: F401 'pyspark.ml.tree._TreeEnsembleParams' imported but unused

python/pyspark/ml/regression.py:25:1: F401 'pyspark.ml.tree._HasVarianceImpurity' imported but unused

python/pyspark/ml/regression.py:29:1: F401 'pyspark.ml.wrapper.JavaParams' imported but unused

python/pyspark/ml/util.py:19:1: F401 'sys' imported but unused

python/pyspark/ml/__init__.py:25:1: F401 'pyspark.ml.pipeline' imported but unused

python/pyspark/ml/pipeline.py:18:1: F401 'sys' imported but unused

python/pyspark/ml/stat.py:22:1: F401 'pyspark.ml.linalg.DenseMatrix' imported but unused

python/pyspark/ml/stat.py:22:1: F401 'pyspark.ml.linalg.Vectors' imported but unused

python/pyspark/ml/tests/test_training_summary.py:18:1: F401 'sys' imported but unused

python/pyspark/ml/tests/test_training_summary.py:364:5: F401 'pyspark.ml.tests.test_training_summary.*' imported but unused

python/pyspark/ml/tests/test_linalg.py:381:5: F401 'pyspark.ml.tests.test_linalg.*' imported but unused

python/pyspark/ml/tests/test_tuning.py:427:9: F401 'pyspark.sql.functions as F' imported but unused

python/pyspark/ml/tests/test_tuning.py:757:5: F401 'pyspark.ml.tests.test_tuning.*' imported but unused

python/pyspark/ml/tests/test_wrapper.py:120:5: F401 'pyspark.ml.tests.test_wrapper.*' imported but unused

python/pyspark/ml/tests/test_feature.py:19:1: F401 'sys' imported but unused

python/pyspark/ml/tests/test_feature.py:304:5: F401 'pyspark.ml.tests.test_feature.*' imported but unused

python/pyspark/ml/tests/test_image.py:19:1: F401 'py4j' imported but unused

python/pyspark/ml/tests/test_image.py:22:1: F401 'pyspark.testing.mlutils.PySparkTestCase' imported but unused

python/pyspark/ml/tests/test_image.py:71:5: F401 'pyspark.ml.tests.test_image.*' imported but unused

python/pyspark/ml/tests/test_persistence.py:456:5: F401 'pyspark.ml.tests.test_persistence.*' imported but unused

python/pyspark/ml/tests/test_evaluation.py:56:5: F401 'pyspark.ml.tests.test_evaluation.*' imported but unused

python/pyspark/ml/tests/test_stat.py:43:5: F401 'pyspark.ml.tests.test_stat.*' imported but unused

python/pyspark/ml/tests/test_base.py:70:5: F401 'pyspark.ml.tests.test_base.*' imported but unused

python/pyspark/ml/tests/test_param.py:20:1: F401 'sys' imported but unused

python/pyspark/ml/tests/test_param.py:375:5: F401 'pyspark.ml.tests.test_param.*' imported but unused

python/pyspark/ml/tests/test_pipeline.py:62:5: F401 'pyspark.ml.tests.test_pipeline.*' imported but unused

python/pyspark/ml/tests/test_algorithms.py:333:5: F401 'pyspark.ml.tests.test_algorithms.*' imported but unused

python/pyspark/ml/param/__init__.py:18:1: F401 'sys' imported but unused

python/pyspark/resource/tests/test_resources.py:17:1: F401 'random' imported but unused

python/pyspark/resource/tests/test_resources.py:20:1: F401 'pyspark.resource.ResourceProfile' imported but unused

python/pyspark/resource/tests/test_resources.py:75:5: F401 'pyspark.resource.tests.test_resources.*' imported but unused

python/pyspark/sql/functions.py:32:1: F401 'pyspark.sql.udf.UserDefinedFunction' imported but unused

python/pyspark/sql/functions.py:34:1: F401 'pyspark.sql.pandas.functions.pandas_udf' imported but unused

python/pyspark/sql/session.py:30:1: F401 'pyspark.sql.types.Row' imported but unused

python/pyspark/sql/session.py:30:1: F401 'pyspark.sql.types.StringType' imported but unused

python/pyspark/sql/readwriter.py:1084:5: F401 'pyspark.sql.Row' imported but unused

python/pyspark/sql/context.py:26:1: F401 'pyspark.sql.types.IntegerType' imported but unused

python/pyspark/sql/context.py:26:1: F401 'pyspark.sql.types.Row' imported but unused

python/pyspark/sql/context.py:26:1: F401 'pyspark.sql.types.StringType' imported but unused

python/pyspark/sql/context.py:27:1: F401 'pyspark.sql.udf.UDFRegistration' imported but unused

python/pyspark/sql/streaming.py:1212:5: F401 'pyspark.sql.Row' imported but unused

python/pyspark/sql/tests/test_utils.py:55:5: F401 'pyspark.sql.tests.test_utils.*' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:18:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:22:1: F401 'pyspark.sql.functions.pandas_udf' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:22:1: F401 'pyspark.sql.functions.PandasUDFType' imported but unused

python/pyspark/sql/tests/test_pandas_map.py:119:5: F401 'pyspark.sql.tests.test_pandas_map.*' imported but unused

python/pyspark/sql/tests/test_catalog.py:193:5: F401 'pyspark.sql.tests.test_catalog.*' imported but unused

python/pyspark/sql/tests/test_group.py:39:5: F401 'pyspark.sql.tests.test_group.*' imported but unused

python/pyspark/sql/tests/test_session.py:361:5: F401 'pyspark.sql.tests.test_session.*' imported but unused

python/pyspark/sql/tests/test_conf.py:49:5: F401 'pyspark.sql.tests.test_conf.*' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:19:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:21:1: F401 'pyspark.sql.functions.sum' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:21:1: F401 'pyspark.sql.functions.PandasUDFType' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:29:5: F401 'pandas.util.testing.assert_series_equal' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:32:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_pandas_cogrouped_map.py:248:5: F401 'pyspark.sql.tests.test_pandas_cogrouped_map.*' imported but unused

python/pyspark/sql/tests/test_udf.py:24:1: F401 'py4j' imported but unused

python/pyspark/sql/tests/test_pandas_udf_typehints.py:246:5: F401 'pyspark.sql.tests.test_pandas_udf_typehints.*' imported but unused

python/pyspark/sql/tests/test_functions.py:19:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_functions.py:362:9: F401 'pyspark.sql.functions.exists' imported but unused

python/pyspark/sql/tests/test_functions.py:387:5: F401 'pyspark.sql.tests.test_functions.*' imported but unused

python/pyspark/sql/tests/test_pandas_udf_scalar.py:21:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_udf_scalar.py:45:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_pandas_udf_window.py:355:5: F401 'pyspark.sql.tests.test_pandas_udf_window.*' imported but unused

python/pyspark/sql/tests/test_arrow.py:38:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_pandas_grouped_map.py:20:1: F401 'sys' imported but unused

python/pyspark/sql/tests/test_pandas_grouped_map.py:38:5: F401 'pyarrow as pa' imported but unused

python/pyspark/sql/tests/test_dataframe.py:382:9: F401 'pyspark.sql.DataFrame' imported but unused

python/pyspark/sql/avro/functions.py:125:5: F401 'pyspark.sql.Row' imported but unused

python/pyspark/sql/pandas/functions.py:19:1: F401 'sys' imported but unused

```

After:

```
fokkodriesprongFan spark % flake8 python | grep -i "imported but unused"
fokkodriesprongFan spark %
```

### What changes were proposed in this pull request?

Removing unused imports from the Python files to keep everything nice and tidy.

### Why are the changes needed?

This cleans up imports that aren't used and suppresses the warning for imports that are kept as references for other modules, preserving backward compatibility.
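The two patterns involved can be sketched as follows. The function `row_count` is hypothetical, and `json.dumps` is used only as a placeholder for a name that is re-exported for other modules; `# noqa: F401` is the standard Flake8 marker for silencing the "imported but unused" check on a single line.

```python
# Kept only so that other modules can keep importing it from here; the marker
# tells Flake8 that the apparently unused import is intentional.
from json import dumps  # noqa: F401


def row_count(rows):
    """
    Count the rows in a list.

    The import needed by the example lives in the example itself,
    so the module carries no otherwise unused file-scope import.

    >>> from collections import namedtuple
    >>> Row = namedtuple("Row", ["a"])
    >>> row_count([Row(a=1), Row(a=2)])
    2
    """
    return len(rows)
```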

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Adding the rule to the existing Flake8 checks.

Closes #29121 from Fokko/SPARK-32319.

Authored-by: Fokko Driesprong <fokko@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to drop Python 2.7, 3.4 and 3.5.

Roughly speaking, it removes all the widely known Python 2 compatibility workarounds, such as `sys.version` comparisons and `__future__` imports. It also removes Python 2-specific code such as `ArrayConstructor` in Spark.

### Why are the changes needed?

1. Drop support for EOL Python versions.

2. Reduce maintenance overhead and remove some legacy code and hacks for Python 2.

3. PyPy2 has a critical bug that causes a flaky test (SPARK-28358), according to my testing and investigation.

4. Users can use Python type hints with Pandas UDFs without thinking about the Python version.

5. Users can leverage a single, up-to-date cloudpickle (https://github.com/apache/spark/pull/28950). With Python 3.8+, it can also leverage C pickle.

### Does this PR introduce _any_ user-facing change?

Yes, users cannot use Python 2.7, 3.4 and 3.5 in the upcoming Spark version.

### How was this patch tested?

Manually tested and also tested in Jenkins.

Closes #28957 from HyukjinKwon/SPARK-32138.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

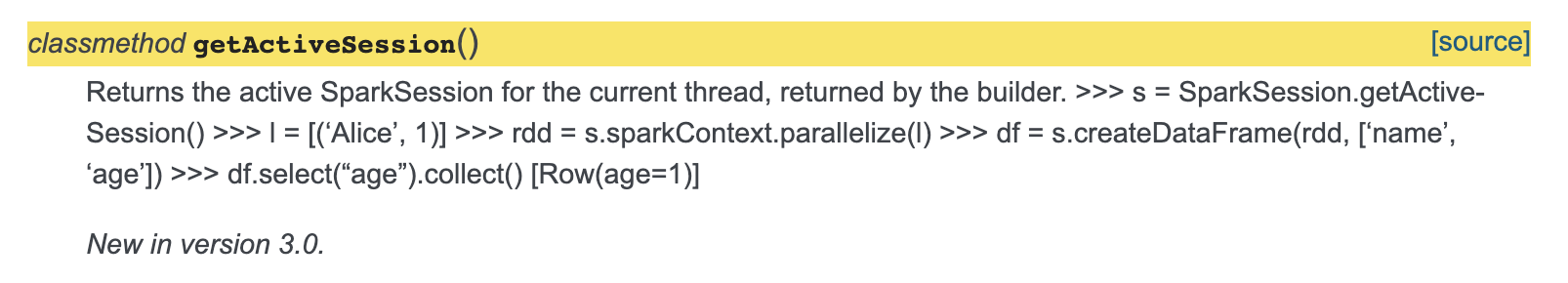

### What changes were proposed in this pull request?

Minor fix to the documentation of `getActiveSession`.

The sample code snippet was not rendered correctly; added spacing to fix this.

Also added the return value to the docs.

### Why are the changes needed?

The sample code is rendered as part of the description in the docs.

[Current Doc Link](http://spark.apache.org/docs/latest/api/python/pyspark.sql.html?highlight=getactivesession#pyspark.sql.SparkSession.getActiveSession)

### Does this PR introduce _any_ user-facing change?

Yes, the documentation of `getActiveSession` is fixed.

A description of the return value is also added.

### How was this patch tested?

Adding a blank line between the description and the code seems to fix the issue.

Closes #28978 from animenon/docs_minor.

Authored-by: animenon <animenon@mail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

As discussed on the Jira ticket, this change clears the SQLContext._instantiatedContext class attribute when the SparkSession is stopped. That way, the attribute will be reset with a new, usable SQLContext when a new SparkSession is started.

### Why are the changes needed?

When the underlying SQLContext is instantiated for a SparkSession, the instance is saved as a class attribute and returned from subsequent calls to SQLContext.getOrCreate(). If the SparkContext is stopped and a new one started, the SQLContext class attribute is never cleared so any code which calls SQLContext.getOrCreate() will get a SQLContext with a reference to the old, unusable SparkContext.

A similar issue was identified and fixed for SparkSession in [SPARK-19055](https://issues.apache.org/jira/browse/SPARK-19055), but the fix did not change SQLContext as well. I ran into this because mllib still [uses](https://github.com/apache/spark/blob/master/python/pyspark/mllib/common.py#L105) SQLContext.getOrCreate() under the hood.
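The stale-cache problem and the fix can be modelled in a few lines of plain Python. This is a toy sketch, not PySpark code: `ToyContext`, `ToySQLContext`, and `ToySession` are hypothetical stand-ins, and `_instantiated` only mirrors the role of `SQLContext._instantiatedContext`.

```python
class ToyContext:
    """Stand-in for a SparkContext; becomes unusable once stopped."""

    def __init__(self):
        self.stopped = False


class ToySQLContext:
    # Class-level cache, analogous in spirit to SQLContext._instantiatedContext.
    _instantiated = None

    def __init__(self, context):
        self.context = context

    @classmethod
    def get_or_create(cls, context):
        # Reuse the cached instance if one exists.
        if cls._instantiated is None:
            cls._instantiated = cls(context)
        return cls._instantiated


class ToySession:
    def __init__(self):
        self.context = ToyContext()

    def stop(self):
        self.context.stopped = True
        # The fix: drop the cached instance so the next caller gets a fresh one.
        ToySQLContext._instantiated = None


first = ToySession()
cached = ToySQLContext.get_or_create(first.context)
first.stop()

second = ToySession()
fresh = ToySQLContext.get_or_create(second.context)
```

Without the reset in `stop()`, `fresh` would be the same object as `cached` and would still point at the stopped context.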

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

A new test was added. I verified that the test fails without the included change.

Closes #27610 from afavaro/restart-sqlcontext.

Authored-by: Alex Favaro <alex.favaro@affirm.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/27109. It should match the parameter lists in `createDataFrame`.

### Why are the changes needed?

To pass the parameters that are supposed to be passed.

### Does this PR introduce any user-facing change?

No (it's only in master)

### How was this patch tested?

Manually tested; existing tests should cover it.

Closes #27225 from HyukjinKwon/SPARK-30434-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to move pandas-related functionality into a `pandas` package. Namely:

```bash
pyspark/sql/pandas
├── __init__.py
├── conversion.py  # Conversion between pandas <> PySpark DataFrames
├── functions.py   # pandas_udf
├── group_ops.py   # Grouped UDF / Cogrouped UDF + groupby.apply, groupby.cogroup.apply
├── map_ops.py     # Map Iter UDF + mapInPandas
├── serializers.py # pandas <> PyArrow serializers
├── types.py       # Type utils between pandas <> PyArrow
└── utils.py       # Version requirement checks
```

In order to locate `groupby.apply`, `groupby.cogroup.apply`, `mapInPandas`, `toPandas`, and `createDataFrame(pdf)` separately under the `pandas` sub-package, I had to use a mix-in approach, which the Scala side often uses via `trait` and which pandas itself also uses (see `IndexOpsMixin` as an example) to group related functionality. For now, you can think of it as being like Scala's self-typed trait. See the structure below:

```python
class PandasMapOpsMixin(object):

    def mapInPandas(self, func, schema):
        ...
        return ...

    # other Pandas <> PySpark APIs
```

```python
class DataFrame(PandasMapOpsMixin):
    # other DataFrame APIs equivalent to Scala side.
    ...
```

Yes, this is a big PR, but the changes are mostly code moves, except for `createDataFrame`, whose methods I had to split.

### Why are the changes needed?

Pandas functionality is scattered here and there, and I myself get lost finding where things are. Also, making a change that applies to all pandas-related features is almost impossible now.

Also, after this change, `DataFrame` and `SparkSession` become more consistent with the Scala side, since pandas is specific to Python and this change separates pandas-specific APIs from `DataFrame` and `SparkSession`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests should cover it. Also, I manually built the PySpark API documentation and checked it.

Closes #27109 from HyukjinKwon/pandas-refactoring.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

I propose that we change the example code in the documentation to call the proper function.

For example, under the `foreachBatch` function, the example code was calling the `foreach()` function by mistake.

### Why are the changes needed?

It could confuse some people, and it is a typo.

### Does this PR introduce any user-facing change?

No, no "meaningful" code is being changed, only the documentation.

### How was this patch tested?

I made the change on a fork and it still worked.

Closes #26299 from mstill3/patch-1.

Authored-by: Matt Stillwell <18670089+mstill3@users.noreply.github.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

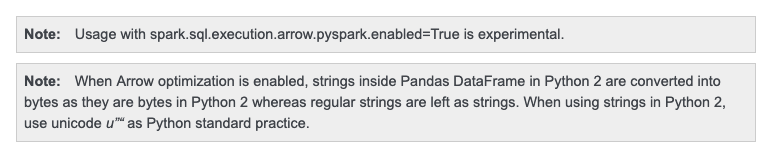

## What changes were proposed in this pull request?

When Arrow optimization is enabled in Python 2.7,

```python
import pandas
pdf = pandas.DataFrame(["test1", "test2"])
df = spark.createDataFrame(pdf)
df.show()
```

I got the following output:

```
+----------------+
|               0|
+----------------+
|[74 65 73 74 31]|
|[74 65 73 74 32]|
+----------------+
```

This appears to be because Python 2's `str` and `bytes` are the same type, so the output is technically correct:

```python
>>> str == bytes
True
>>> isinstance("a", bytes)
True
```

To cut it short:

1. Python 2 treats `str` as `bytes`.

2. PySpark added some special code and hacks to recognize `str` as a string type.

3. PyArrow / Pandas followed the Python 2 behaviour.

To fix this, we have two options:

1. Fix it to match PySpark's behaviour

2. Note the differences

But Python 2 is deprecated anyway. I think it's better to just note it and go for option 2.
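For contrast, the same checks behave differently under Python 3, where text and binary data are distinct types. The snippet below is a small sketch that needs neither Spark nor pandas; it also reproduces the hex bytes seen in the DataFrame output above for the first value.

```python
# Under Python 3, text and binary data are distinct types.
text = "test1"
data = text.encode("utf-8")

same_type = str is bytes                 # False on Python 3
text_is_bytes = isinstance(text, bytes)  # False on Python 3

# Space-separated hex dump of the encoded bytes, one pair per byte.
hex_dump = " ".join(format(b, "02X") for b in data)
```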

## How was this patch tested?

Manually tested.

Doc was checked too:

Closes #24838 from HyukjinKwon/SPARK-27995.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`spark.sql.execution.arrow.enabled` was added when we added the PySpark Arrow optimization.

Later, in the current master, the SparkR Arrow optimization was added, and it is controlled by the same configuration, `spark.sql.execution.arrow.enabled`.

There appear to be two issues with this:

1. `spark.sql.execution.arrow.enabled` in PySpark was added in 2.3.0, whereas the SparkR optimization was added in 3.0.0. Their stability differs, so it is problematic if we want to change the default value for only one of the two optimizations first.

2. Suppose users want to share a JVM between PySpark and SparkR. They are currently forced to use the optimization for both or neither if the configuration is set globally.

This PR proposes two separate configuration groups for the PySpark and SparkR Arrow optimizations:

- Deprecate `spark.sql.execution.arrow.enabled`

- Add `spark.sql.execution.arrow.pyspark.enabled` (fallback to `spark.sql.execution.arrow.enabled`)

- Add `spark.sql.execution.arrow.sparkr.enabled`

- Deprecate `spark.sql.execution.arrow.fallback.enabled`

- Add `spark.sql.execution.arrow.pyspark.fallback.enabled` (fallback to `spark.sql.execution.arrow.fallback.enabled`)

Note that `spark.sql.execution.arrow.maxRecordsPerBatch` is used on the JVM side for both.

Note that `spark.sql.execution.arrow.fallback.enabled` was added due to a behaviour change. We don't need it in SparkR, since the SparkR side falls back automatically.
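The fallback relationship between the new and deprecated keys can be sketched with a plain dictionary. This is only an illustration of the lookup order, not Spark's `SQLConf` implementation: `resolve_conf` is a hypothetical helper, and the `"false"` default is an assumption made for the sketch.

```python
def resolve_conf(conf, key, fallback_key=None, default="false"):
    """Return conf[key], else conf[fallback_key], else the default."""
    if key in conf:
        return conf[key]
    if fallback_key is not None and fallback_key in conf:
        return conf[fallback_key]
    return default


PYSPARK_KEY = "spark.sql.execution.arrow.pyspark.enabled"
SPARKR_KEY = "spark.sql.execution.arrow.sparkr.enabled"
LEGACY_KEY = "spark.sql.execution.arrow.enabled"

# Only the deprecated key is set by the user.
conf = {LEGACY_KEY: "true"}

# The PySpark key falls back to the deprecated key.
pyspark_enabled = resolve_conf(conf, PYSPARK_KEY, fallback_key=LEGACY_KEY)
# The SparkR key has no fallback in this sketch, so it uses the default.
sparkr_enabled = resolve_conf(conf, SPARKR_KEY)
```

With this lookup order, existing jobs that set only the deprecated key keep their PySpark behaviour, while SparkR is controlled independently.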

## How was this patch tested?

Manually tested, and some unit tests were added.

Closes #24700 from HyukjinKwon/separate-sparkr-arrow.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This increases the minimum supported version of pyarrow to 0.12.1 and removes workarounds in pyspark that existed to remain compatible with prior versions. This means that users will need to have at least pyarrow 0.12.1 installed and available in the cluster, or an `ImportError` will be raised to indicate that an upgrade is needed.
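A minimum-version check of this kind could look roughly like the sketch below. It is not the PySpark implementation: the function names and the error message are hypothetical, and the installed version is passed in as a string so that the example runs without pyarrow installed.

```python
MINIMUM_PYARROW_VERSION = "0.12.1"


def _as_tuple(version):
    # Keep only the leading numeric components, e.g. "0.12.1" -> (0, 12, 1).
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def require_minimum_pyarrow_version(installed_version):
    """Raise ImportError if the given version is below the minimum."""
    if _as_tuple(installed_version) < _as_tuple(MINIMUM_PYARROW_VERSION):
        raise ImportError(
            "PyArrow >= %s must be installed; however, "
            "your version was %s." % (MINIMUM_PYARROW_VERSION, installed_version)
        )
```

Comparing integer tuples avoids the pitfall of string comparison, where `"0.9.0"` would sort after `"0.12.1"`.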

## How was this patch tested?

Existing tests using:

Python 2.7.15, pyarrow 0.12.1, pandas 0.24.2

Python 3.6.7, pyarrow 0.12.1, pandas 0.24.0

Closes #24298 from BryanCutler/arrow-bump-min-pyarrow-SPARK-27276.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This change is a cleanup and consolidation of 3 areas related to Pandas UDFs:

1) `ArrowStreamPandasSerializer` now inherits from `ArrowStreamSerializer` and uses the base class `dump_stream`, `load_stream` to create Arrow reader/writer and send Arrow record batches. `ArrowStreamPandasSerializer` makes the conversions to/from Pandas and converts to Arrow record batch iterators. This change removed duplicated creation of Arrow readers/writers.

2) `createDataFrame` with Arrow now uses `ArrowStreamPandasSerializer` instead of doing its own conversions from Pandas to Arrow and sending record batches through `ArrowStreamSerializer`.

3) Grouped Map UDFs now reuse existing logic in `ArrowStreamPandasSerializer` to send Pandas DataFrame results as a `StructType` instead of separating each column from the DataFrame. This makes the code a little more consistent with the Python worker, but does require that the returned StructType column is flattened out in `FlatMapGroupsInPandasExec` in Scala.

## How was this patch tested?

Existing tests and ran tests with pyarrow 0.12.0

Closes #24095 from BryanCutler/arrow-refactor-cleanup-UDFs.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This change adds support for returning StructType from a scalar Pandas UDF, where the return value of the function is a pandas.DataFrame. Nested structs are not supported and will raise an error; child types can be any other type currently supported.

## How was this patch tested?

Added additional unit tests to `test_pandas_udf_scalar`

Closes #23900 from BryanCutler/pyspark-support-scalar_udf-StructType-SPARK-23836.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Since 0.11.0, PyArrow supports raising an error for unsafe casts ([PR](https://github.com/apache/arrow/pull/2504)). We should use it to raise a proper error for pandas udf users when such a cast is detected.

Added a SQL config `spark.sql.execution.pandas.arrowSafeTypeConversion` to disable Arrow safe type check.

## How was this patch tested?

Added a test and tested manually.

Closes #22807 from viirya/SPARK-25811.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Add `getActiveSession` in `session.py`.

## How was this patch tested?

Added a doctest.

Closes #22295 from huaxingao/spark25255.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Holden Karau <holden@pigscanfly.ca>

## What changes were proposed in this pull request?

In [SPARK-20946](https://issues.apache.org/jira/browse/SPARK-20946), we modified `SparkSession.getOrCreate` to not update conf for existing `SparkContext` because `SparkContext` is shared by all sessions.

We should not update it on the PySpark side either.

## How was this patch tested?

Added tests.

Closes #22545 from ueshin/issues/SPARK-25525/not_update_existing_conf.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In Scala, `HiveContext` sets a config `spark.sql.catalogImplementation` of the given `SparkContext` and then passes to `SparkSession.builder`.

The `HiveContext` in PySpark should behave the same as in Scala.

## How was this patch tested?

Existing tests.

Closes #22552 from ueshin/issues/SPARK-25540/hive_context.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This changes the calls of `toPandas()` and `createDataFrame()` to use the Arrow stream format, when Arrow is enabled. Previously, Arrow data was written to byte arrays where each chunk is an output of the Arrow file format. This was mainly due to constraints at the time, and caused some overhead by writing the schema/footer on each chunk of data and then having to read multiple Arrow file inputs and concat them together.

Using the Arrow stream format improves on this by increasing performance, lowering memory overhead in the average case, and simplifying the code. Here are the details of this change:

**toPandas()**

_Before:_

Spark internal rows are converted to Arrow file format, each group of records is a complete Arrow file which contains the schema and other metadata. Next a collect is done and an Array of Arrow files is the result. After that each Arrow file is sent to Python driver which then loads each file and concats them to a single Arrow DataFrame.

_After:_

Spark internal rows are converted to ArrowRecordBatches directly, which are the simplest Arrow component for IPC data transfers. The driver JVM then immediately starts serving data to Python as an Arrow stream, sending the schema first. It then starts a Spark job with a custom handler that sends Arrow RecordBatches to Python. Partitions arriving in order are sent immediately, and out-of-order partitions are buffered until the ones that precede them come in. This improves performance, simplifies memory usage on executors, and improves the average memory usage on the JVM driver. Since the order of partitions must be preserved, the worst case is that the first partition is the last to arrive, so all data must be buffered in memory until then. This case is no worse than before, when a full collect was done.
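
The in-order/out-of-order handling described above can be illustrated with a small, Spark-free sketch. The function name `serve_in_order` and its arguments are hypothetical and only model the buffering idea; this is not the actual result handler in the JVM driver.

```python
def serve_in_order(arrivals, send):
    """Forward partition results in partition-index order.

    `arrivals` yields (partition_index, payload) pairs in completion order.
    `send` is called once per payload, in index order.
    Returns the largest number of payloads left waiting after any arrival.
    """
    pending = {}       # out-of-order results waiting for their predecessors
    next_index = 0     # index of the next partition that may be sent
    max_buffered = 0
    for index, payload in arrivals:
        pending[index] = payload
        # Flush every result that is now contiguous with what was already sent.
        while next_index in pending:
            send(pending.pop(next_index))
            next_index += 1
        max_buffered = max(max_buffered, len(pending))
    return max_buffered


sent = []
peak = serve_in_order([(1, "b"), (0, "a"), (3, "d"), (2, "c")], sent.append)
print(sent, peak)
```

If partition 0 is the last to arrive, every other payload sits in `pending` until then, which is the worst case mentioned above.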

**createDataFrame()**

_Before:_

A Pandas DataFrame is split into parts and each part is made into an Arrow file. Then each file is prefixed by the buffer size and written to a temp file. The temp file is read and each Arrow file is parallelized as a byte array.

_After:_

A Pandas DataFrame is split into parts, then an Arrow stream is written to a temp file where each part is an ArrowRecordBatch. The temp file is read as a stream and the Arrow messages are examined. If the message is an ArrowRecordBatch, the data is saved as a byte array. After reading the file, each ArrowRecordBatch is parallelized as a byte array. This has slightly more processing than before because we must look at each Arrow message to extract the record batches, but performance ends up a little better. It is cleaner in the sense that IPC from Python to JVM is done over a single Arrow stream.

## How was this patch tested?

Added new unit tests for the additions to ArrowConverters in Scala, existing tests for Python.

## Performance Tests - toPandas

Tests run on a 4 node standalone cluster with 32 cores total, 14.04.1-Ubuntu and OpenJDK 8

Measured wall clock time to execute `toPandas()` and took the average best time of 5 runs/5 loops each.

Test code

```python
import time

from pyspark.sql.functions import rand

# `spark` is the SparkSession provided by the pyspark shell.
df = spark.range(1 << 25, numPartitions=32).toDF("id").withColumn("x1", rand()).withColumn("x2", rand()).withColumn("x3", rand()).withColumn("x4", rand())
for i in range(5):
    start = time.time()
    _ = df.toPandas()
    elapsed = time.time() - start
```

| Current Master | This PR |
|----------------|----------|
| 5.803557 | 5.16207 |
| 5.409119 | 5.133671 |
| 5.493509 | 5.147513 |
| 5.433107 | 5.105243 |
| 5.488757 | 5.018685 |

| Avg Master | Avg This PR |
|------------|-------------|
| 5.5256098 | 5.1134364 |

Speedup of **1.08060595**
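
For reference, the averages and the speedup can be recomputed from the five `toPandas()` timings listed above. This is only a sanity check of the arithmetic; the variable names are illustrative.

```python
from statistics import mean

# Wall clock timings (seconds) copied from the toPandas() results above.
master = [5.803557, 5.409119, 5.493509, 5.433107, 5.488757]
this_pr = [5.16207, 5.133671, 5.147513, 5.105243, 5.018685]

avg_master = mean(master)
avg_pr = mean(this_pr)
# Speedup is the ratio of the old average time to the new average time.
speedup = avg_master / avg_pr
print(round(avg_master, 7), round(avg_pr, 7), round(speedup, 8))
```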

## Performance Tests - createDataFrame

Tests run on a 4 node standalone cluster with 32 cores total, 14.04.1-Ubuntu and OpenJDK 8

Measured wall clock time to execute `createDataFrame()` and get the first record. Took the average best time of 5 runs/5 loops each.

Test code

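```python
import gc
import time

import numpy as np
import pandas as pd

def run():
    # `spark` is the SparkSession provided by the pyspark shell.
    pdf = pd.DataFrame(np.random.rand(10000000, 10))
    spark.createDataFrame(pdf).first()

for i in range(6):
    start = time.time()
    run()
    elapsed = time.time() - start
    gc.collect()
    print("Run %d: %f" % (i, elapsed))
```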

| Current Master | This PR |
|----------------|----------|
| 6.234608 | 5.665641 |
| 6.32144 | 5.3475 |
| 6.527859 | 5.370803 |
| 6.95089 | 5.479151 |
| 6.235046 | 5.529167 |

| Avg Master | Avg This PR |
|------------|-------------|
| 6.4539686 | 5.4784524 |

Speedup of **1.178064192**

## Memory Improvements

**toPandas()**

The most significant improvement is the reduction of the upper bound space complexity in the JVM driver. Before, the entire dataset was collected in the JVM first before sending it to Python. With this change, as soon as a partition is collected, the result handler immediately sends it to Python, so the upper bound is the size of the largest partition. Also, using the Arrow stream format is more efficient because the schema is written once per stream, followed by record batches. The schema is now only sent from the driver JVM to Python. Before, multiple Arrow files were used, each of which contained the schema. This duplicated schema was created in the executors, sent to the driver JVM, and then to Python, where all but the first one received were discarded.

I verified the upper bound limit by running a test that would collect data that would exceed the amount of driver JVM memory available. Using these settings on a standalone cluster:

```
spark.driver.memory 1g
spark.executor.memory 5g
spark.sql.execution.arrow.enabled true
spark.sql.execution.arrow.fallback.enabled false
spark.sql.execution.arrow.maxRecordsPerBatch 0
spark.driver.maxResultSize 2g
```

Test code:

```python
from pyspark.sql.functions import rand

df = spark.range(1 << 25, numPartitions=32).toDF("id").withColumn("x1", rand()).withColumn("x2", rand()).withColumn("x3", rand())
df.toPandas()
```

This makes a total data size of 33554432×8×4 = 1073741824 bytes (1 GiB).

With the current master, it fails with OOM but passes using this PR.
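
The size estimate can be reproduced with a few lines of arithmetic. This is a rough sketch that counts only raw 8-byte values and ignores Arrow metadata and JVM object overhead; treating `1g` as 2^30 bytes is an assumption made for the comparison.

```python
rows = 1 << 25           # spark.range(1 << 25)
bytes_per_value = 8      # a 64-bit long (id) or double (x1, x2, x3)
columns = 4              # id, x1, x2, x3

total_bytes = rows * bytes_per_value * columns
driver_heap_bytes = 1 << 30   # spark.driver.memory 1g, assumed to mean 2**30 bytes

print(total_bytes, total_bytes / 2**30)
# The raw values alone already match the driver heap, so holding the whole
# dataset in the driver at once leaves no room for anything else.
print(total_bytes >= driver_heap_bytes)
```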

**createDataFrame()**

No significant change in memory except that using the stream format instead of separate file formats avoids duplicating the schema, similar to toPandas above. The process of reading the stream and parallelizing the batches does cause the record batch message metadata to be copied, but its size is insignificant.

Closes #21546 from BryanCutler/arrow-toPandas-stream-SPARK-23030.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Follow-up for SPARK-24665: fix some other hard-coded values found during code review.

## How was this patch tested?

Existing UT.

Closes #22122 from xuanyuanking/SPARK-24665-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

…ark shell

## What changes were proposed in this pull request?

This PR catches `TypeError` when testing for the existence of `HiveConf` while creating the pyspark shell.

## How was this patch tested?

Manually tested. Here are the manual test cases:

Build with hive:

```
(pyarrow-dev) Lis-MacBook-Pro:spark icexelloss$ bin/pyspark
Python 3.6.5 | packaged by conda-forge | (default, Apr 6 2018, 13:44:09)
[GCC 4.2.1 Compatible Apple LLVM 6.1.0 (clang-602.0.53)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
18/06/14 14:55:41 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.0-SNAPSHOT
      /_/

Using Python version 3.6.5 (default, Apr 6 2018 13:44:09)
SparkSession available as 'spark'.
>>> spark.conf.get('spark.sql.catalogImplementation')
'hive'
```

Build without hive:

```
(pyarrow-dev) Lis-MacBook-Pro:spark icexelloss$ bin/pyspark
Python 3.6.5 | packaged by conda-forge | (default, Apr 6 2018, 13:44:09)
[GCC 4.2.1 Compatible Apple LLVM 6.1.0 (clang-602.0.53)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
18/06/14 15:04:52 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.0-SNAPSHOT
      /_/

Using Python version 3.6.5 (default, Apr 6 2018 13:44:09)
SparkSession available as 'spark'.
>>> spark.conf.get('spark.sql.catalogImplementation')
'in-memory'
```

Failed to start shell:

```
(pyarrow-dev) Lis-MacBook-Pro:spark icexelloss$ bin/pyspark
Python 3.6.5 | packaged by conda-forge | (default, Apr 6 2018, 13:44:09)
[GCC 4.2.1 Compatible Apple LLVM 6.1.0 (clang-602.0.53)] on darwin
Type "help", "copyright", "credits" or "license" for more information.
18/06/14 15:07:53 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
/Users/icexelloss/workspace/spark/python/pyspark/shell.py:45: UserWarning: Failed to initialize Spark session.
  warnings.warn("Failed to initialize Spark session.")
Traceback (most recent call last):
  File "/Users/icexelloss/workspace/spark/python/pyspark/shell.py", line 41, in <module>
    spark = SparkSession._create_shell_session()
  File "/Users/icexelloss/workspace/spark/python/pyspark/sql/session.py", line 581, in _create_shell_session
    return SparkSession.builder.getOrCreate()
  File "/Users/icexelloss/workspace/spark/python/pyspark/sql/session.py", line 168, in getOrCreate
    raise py4j.protocol.Py4JError("Fake Py4JError")
py4j.protocol.Py4JError: Fake Py4JError
(pyarrow-dev) Lis-MacBook-Pro:spark icexelloss$
```

Author: Li Jin <ice.xelloss@gmail.com>

Closes #21569 from icexelloss/SPARK-24563-fix-pyspark-shell-without-hive.

Currently, in spark-shell, if the session fails to start, the user sees a bunch of unrelated errors which are caused by code in the shell initialization that references the "spark" variable, which does not exist in that case. Things like:

```
<console>:14: error: not found: value spark
       import spark.sql
```

The user is also left with a non-working shell (unless they want to just write non-Spark Scala or Python code, that is).

This change fails the whole shell session at the point where the failure occurs, so that the last error message is the one with the actual information about the failure.

For the python error handling, I moved the session initialization code to session.py, so that traceback.print_exc() only shows the last error. Otherwise, the printed exception would contain all previous exceptions with a message "During handling of the above exception, another exception occurred", making the actual error kinda hard to parse.

Tested with spark-shell, pyspark (with 2.7 and 3.5), by forcing an error during SparkContext initialization.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #21368 from vanzin/SPARK-16451.

## What changes were proposed in this pull request?

The pandas_udf functionality was introduced in 2.3.0, but is not completely stable and still evolving. This adds a label to indicate it is still an experimental API.

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21435 from BryanCutler/arrow-pandas_udf-experimental-SPARK-24392.

## What changes were proposed in this pull request?

When using Arrow for createDataFrame or toPandas and an error is encountered with fallback disabled, this will raise the same type of error instead of a RuntimeError. This change also allows for the traceback of the error to be retained and prevents the accidental chaining of exceptions with Python 3.
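
A minimal, Spark-free sketch of this error-handling pattern follows. The helper `convert_with_arrow` and its parameters are hypothetical names used only for illustration; the real PySpark code paths differ in detail.

```python
import warnings


def convert_with_arrow(convert, fallback, fallback_enabled):
    """Try `convert`; on failure either fall back or re-raise the original error."""
    try:
        return convert()
    except Exception as error:
        if fallback_enabled:
            warnings.warn("Arrow conversion failed, falling back: %s" % error)
        else:
            # A bare `raise` re-raises the active exception unchanged: same type,
            # same traceback, and no new exception wrapped around it.
            raise
    # Reached only when the fallback is enabled and the Arrow path failed.
    # Running the fallback outside the `except` block means an error raised
    # here is not chained onto the earlier Arrow error in Python 3.
    return fallback()
```

With the fallback disabled, a `TypeError` raised by `convert` reaches the caller as a `TypeError` rather than as a `RuntimeError`.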

## How was this patch tested?

Updated existing tests to verify error type.

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #20839 from BryanCutler/arrow-raise-same-error-SPARK-23699.

The exit() builtin is only for interactive use. Applications should use sys.exit().

## What changes were proposed in this pull request?

All usage of the builtin `exit()` function is replaced by `sys.exit()`.

## How was this patch tested?

I ran `python/run-tests`.

Author: Benjamin Peterson <benjamin@python.org>

Closes #20682 from benjaminp/sys-exit.

## What changes were proposed in this pull request?

This PR adds a configuration to control the fallback of Arrow optimization for `toPandas` and `createDataFrame` with Pandas DataFrame.

## How was this patch tested?

Manually tested and unit tests added.

You can test this by:

**`createDataFrame`**

```python

spark.conf.set("spark.sql.execution.arrow.enabled", False)

pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)

spark.createDataFrame(pdf, "a: map<string, int>")

```

```python

spark.conf.set("spark.sql.execution.arrow.enabled", False)

pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)

spark.createDataFrame(pdf, "a: map<string, int>")

```

**`toPandas`**

```python

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)

spark.createDataFrame([[{'a': 1}]]).toPandas()

```

```python

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)

spark.createDataFrame([[{'a': 1}]]).toPandas()

```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20678 from HyukjinKwon/SPARK-23380-conf.

## What changes were proposed in this pull request?

This PR proposes to explicitly specify Pandas and PyArrow versions in PySpark tests to skip or test.

We declared the extra dependencies:

b8bfce51ab/python/setup.py (L204)

In case of PyArrow:

Currently we only check if pyarrow is installed or not without checking the version. It already fails to run tests. For example, if PyArrow 0.7.0 is installed:

```
======================================================================
ERROR: test_vectorized_udf_wrong_return_type (pyspark.sql.tests.ScalarPandasUDF)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/.../spark/python/pyspark/sql/tests.py", line 4019, in test_vectorized_udf_wrong_return_type
    f = pandas_udf(lambda x: x * 1.0, MapType(LongType(), LongType()))
  File "/.../spark/python/pyspark/sql/functions.py", line 2309, in pandas_udf
    return _create_udf(f=f, returnType=return_type, evalType=eval_type)
  File "/.../spark/python/pyspark/sql/udf.py", line 47, in _create_udf
    require_minimum_pyarrow_version()
  File "/.../spark/python/pyspark/sql/utils.py", line 132, in require_minimum_pyarrow_version
    "however, your version was %s." % pyarrow.__version__)
ImportError: pyarrow >= 0.8.0 must be installed on calling Python process; however, your version was 0.7.0.

----------------------------------------------------------------------
Ran 33 tests in 8.098s

FAILED (errors=33)
```

In case of Pandas:

There are a few tests for old Pandas versions that previously ran only when the installed Pandas version was lower than required. I rewrote them to run both when the Pandas version is lower and when Pandas is missing.

## How was this patch tested?

Manually tested by modifying the condition:

```

test_createDataFrame_column_name_encoding (pyspark.sql.tests.ArrowTests) ... skipped 'Pandas >= 1.19.2 must be installed; however, your version was 0.19.2.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.ArrowTests) ... skipped 'Pandas >= 1.19.2 must be installed; however, your version was 0.19.2.'

test_createDataFrame_respect_session_timezone (pyspark.sql.tests.ArrowTests) ... skipped 'Pandas >= 1.19.2 must be installed; however, your version was 0.19.2.'

```

```

test_createDataFrame_column_name_encoding (pyspark.sql.tests.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_respect_session_timezone (pyspark.sql.tests.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

```

```

test_createDataFrame_column_name_encoding (pyspark.sql.tests.ArrowTests) ... skipped 'PyArrow >= 1.8.0 must be installed; however, your version was 0.8.0.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.ArrowTests) ... skipped 'PyArrow >= 1.8.0 must be installed; however, your version was 0.8.0.'

test_createDataFrame_respect_session_timezone (pyspark.sql.tests.ArrowTests) ... skipped 'PyArrow >= 1.8.0 must be installed; however, your version was 0.8.0.'

```

```

test_createDataFrame_column_name_encoding (pyspark.sql.tests.ArrowTests) ... skipped 'PyArrow >= 0.8.0 must be installed; however, it was not found.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.ArrowTests) ... skipped 'PyArrow >= 0.8.0 must be installed; however, it was not found.'

test_createDataFrame_respect_session_timezone (pyspark.sql.tests.ArrowTests) ... skipped 'PyArrow >= 0.8.0 must be installed; however, it was not found.'

```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20487 from HyukjinKwon/pyarrow-pandas-skip.

## What changes were proposed in this pull request?

In the current PySpark code, a `jsparkSession` created from Python is not added to the JVM's `defaultSession`, so this `SparkSession` object cannot be fetched from the Java side, and the Scala code below fails when loaded in a PySpark application.

```scala
class TestSparkSession extends SparkListener with Logging {
  override def onOtherEvent(event: SparkListenerEvent): Unit = {
    event match {
      case CreateTableEvent(db, table) =>
        val session = SparkSession.getActiveSession.orElse(SparkSession.getDefaultSession)
        assert(session.isDefined)
        val tableInfo = session.get.sharedState.externalCatalog.getTable(db, table)
        logInfo(s"Table info ${tableInfo}")

      case e =>
        logInfo(s"event $e")
    }
  }
}
```

So this PR proposes adding the freshly created `jsparkSession` to `defaultSession`.

## How was this patch tested?

Manual verification.

Author: jerryshao <sshao@hortonworks.com>

Author: hyukjinkwon <gurwls223@gmail.com>

Author: Saisai Shao <sai.sai.shao@gmail.com>

Closes #20404 from jerryshao/SPARK-23228.

## What changes were proposed in this pull request?

This PR proposes to deprecate `register*` for UDFs in `SQLContext` and `Catalog` in Spark 2.3.0.

These are inconsistent with the Scala / Java APIs, and they basically do the same thing as `spark.udf.register*`.

Also, this PR moves the logic from `[sqlContext|spark.catalog].register*` to `spark.udf.register*` and reuses the docstring.

This PR also handles minor doc corrections. It also includes https://github.com/apache/spark/pull/20158

## How was this patch tested?

Manually tested, manually checked the API documentation and tests added to check if deprecated APIs call the aliases correctly.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20288 from HyukjinKwon/deprecate-udf.

## What changes were proposed in this pull request?

This fixes the case of calling `SparkSession.createDataFrame` with a Pandas DataFrame that has non-str column labels.

The column name conversion logic, which handles non-string labels and unicode labels in Python 2, is:

```
if column is not any type of string:
    name = str(column)
else if column is unicode in Python 2:
    name = column.encode('utf-8')
```

## How was this patch tested?

Added a new test with a Pandas DataFrame that has int column labels

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #20210 from BryanCutler/python-createDataFrame-int-col-error-SPARK-23009.

## What changes were proposed in this pull request?

This fixes createDataFrame from Pandas to only assign modified timestamp series back to a copied version of the Pandas DataFrame. Previously, if the Pandas DataFrame was only a reference (e.g. a slice of another) each series will still get assigned back to the reference even if it is not a modified timestamp column. This caused the following warning "SettingWithCopyWarning: A value is trying to be set on a copy of a slice from a DataFrame."

## How was this patch tested?

existing tests

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #20213 from BryanCutler/pyspark-createDataFrame-copy-slice-warn-SPARK-23018.

## What changes were proposed in this pull request?

It provides a better error message when doing `spark_session.createDataFrame(pandas_df)` with no schema and an error occurs in the schema inference due to incompatible types.

The Pandas column names are propagated down and the error message mentions which column had the merging error.

https://issues.apache.org/jira/browse/SPARK-22566

## How was this patch tested?

Manually in the `./bin/pyspark` console, and with new tests: `./python/run-tests`

<img width="873" alt="screen shot 2017-11-21 at 13 29 49" src="https://user-images.githubusercontent.com/3977115/33080121-382274e0-cecf-11e7-808f-057a65bb7b00.png">

I state that the contribution is my original work and that I license the work to the Apache Spark project under the project’s open source license.

Author: Guilherme Berger <gberger@palantir.com>

Closes #19792 from gberger/master.

## What changes were proposed in this pull request?

Currently we check the pandas version by catching whether `ImportError` is raised for specific imports, but we can instead compare the `LooseVersion` of the version strings, the same way we check the pyarrow version.
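
As an illustration of why comparing parsed versions is more robust than comparing raw strings, here is a small stand-alone sketch. It uses plain integer tuples as a stand-in for `LooseVersion` (the `distutils` module that provided it was removed in Python 3.12); the helper names are hypothetical, and the message wording is modeled on the messages PySpark printed at the time.

```python
def version_tuple(version):
    """Turn '0.19.2' into (0, 19, 2); a non-numeric suffix such as 'rc1' ends parsing."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def is_older(installed, minimum):
    """Compare numerically, padding with zeros so '0.19' equals '0.19.0'."""
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a < b


def require_minimum_version(name, installed, minimum):
    """Raise ImportError if the package is missing (None) or too old."""
    if installed is None:
        raise ImportError("%s >= %s must be installed; however, it was not found."
                          % (name, minimum))
    if is_older(installed, minimum):
        raise ImportError("%s >= %s must be installed; however, your version was %s."
                          % (name, minimum, installed))


require_minimum_version("Pandas", "0.24.2", "0.19.2")   # passes silently
# A plain string comparison would wrongly treat "0.10.0" as older than "0.8.0".
print(is_older("0.10.0", "0.8.0"), "0.10.0" < "0.8.0")
```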

## How was this patch tested?

Existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #20054 from ueshin/issues/SPARK-22874.

## What changes were proposed in this pull request?

Upgrade Spark to Arrow 0.8.0 for Java and Python. Also includes an upgrade of Netty to 4.1.17 to resolve dependency requirements.

The highlights that pertain to Spark for the update from Arrow version 0.4.1 to 0.8.0 include:

* Java refactoring for a simpler API

* Java reduced heap usage and streamlined hot code paths

* Type support for DecimalType, ArrayType

* Improved type casting support in Python

* Simplified type checking in Python

## How was this patch tested?

Existing tests

Author: Bryan Cutler <cutlerb@gmail.com>

Author: Shixiong Zhu <zsxwing@gmail.com>

Closes #19884 from BryanCutler/arrow-upgrade-080-SPARK-22324.

## What changes were proposed in this pull request?

When converting between Pandas DataFrame/Series and Spark DataFrame using `toPandas()` or pandas udfs, timestamp values respect the Python system timezone instead of the session timezone.

For example, let's say we use `"America/Los_Angeles"` as session timezone and have a timestamp value `"1970-01-01 00:00:01"` in the timezone. Btw, I'm in Japan so Python timezone would be `"Asia/Tokyo"`.

The timestamp value from current `toPandas()` will be the following:

```
>>> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
>>> df = spark.createDataFrame([28801], "long").selectExpr("timestamp(value) as ts")
>>> df.show()
+-------------------+
|                 ts|
+-------------------+
|1970-01-01 00:00:01|
+-------------------+

>>> df.toPandas()
                   ts
0 1970-01-01 17:00:01
```

As you can see, the value becomes `"1970-01-01 17:00:01"` because it respects Python timezone.

As we discussed in #18664, we consider this behavior a bug and the value should be `"1970-01-01 00:00:01"`.
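
The two wall-clock values can be reproduced without Spark. The sketch below renders the same instant (28801 seconds after the epoch) in both timezones; fixed UTC offsets are used as stand-ins for the named zones, which is an assumption that holds for January 1970 (PST is UTC-8, JST is UTC+9).

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets standing in for the named zones (assumption: valid for Jan 1970).
LOS_ANGELES = timezone(timedelta(hours=-8))   # "America/Los_Angeles" (PST)
TOKYO = timezone(timedelta(hours=9))          # "Asia/Tokyo" (JST)

instant = datetime.fromtimestamp(28801, tz=timezone.utc)
fmt = "%Y-%m-%d %H:%M:%S"
session_view = instant.astimezone(LOS_ANGELES).strftime(fmt)
python_view = instant.astimezone(TOKYO).strftime(fmt)

print(session_view)   # 1970-01-01 00:00:01, what the session timezone implies
print(python_view)    # 1970-01-01 17:00:01, what the Python system timezone gives
```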

## How was this patch tested?

Added tests and existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #19607 from ueshin/issues/SPARK-22395.

## What changes were proposed in this pull request?

In the PySpark API documentation, [SparkSession.builder](http://spark.apache.org/docs/2.2.0/api/python/pyspark.sql.html) is not documented and shows only the default value description.

```

SparkSession.builder = <pyspark.sql.session.Builder object ...

```

This PR adds the doc.

The following is the diff of the generated result.

```

$ diff old.html new.html

95a96,101

> <dl class="attribute">

> <dt id="pyspark.sql.SparkSession.builder">

> <code class="descname">builder</code><a class="headerlink" href="#pyspark.sql.SparkSession.builder" title="Permalink to this definition">¶</a></dt>

> <dd><p>A class attribute having a <a class="reference internal" href="#pyspark.sql.SparkSession.Builder" title="pyspark.sql.SparkSession.Builder"><code class="xref py py-class docutils literal"><span class="pre">Builder</span></code></a> to construct <a class="reference internal" href="#pyspark.sql.SparkSession" title="pyspark.sql.SparkSession"><code class="xref py py-class docutils literal"><span class="pre">SparkSession</span></code></a> instances</p>

> </dd></dl>

>

212,216d217

< <dt id="pyspark.sql.SparkSession.builder">

< <code class="descname">builder</code><em class="property"> = <pyspark.sql.session.SparkSession.Builder object></em><a class="headerlink" href="#pyspark.sql.SparkSession.builder" title="Permalink to this definition">¶</a></dt>

< <dd></dd></dl>

<

< <dl class="attribute">

```

## How was this patch tested?

Manual.

```

cd python/docs

make html

open _build/html/pyspark.sql.html

```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #19726 from dongjoon-hyun/SPARK-22490.

## What changes were proposed in this pull request?

If the schema is passed as a list of unicode strings for column names, they should be re-encoded to 'utf-8' to be consistent. This is similar to #13097, but for creation of a DataFrame using Arrow.

## How was this patch tested?

Added new test of using unicode names for schema.

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #19738 from BryanCutler/arrow-createDataFrame-followup-unicode-SPARK-20791.

## What changes were proposed in this pull request?

This change uses Arrow to optimize the creation of a Spark DataFrame from a Pandas DataFrame. The input df is sliced according to the default parallelism. The optimization is enabled with the existing conf "spark.sql.execution.arrow.enabled" and is disabled by default.
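
The slicing step can be sketched as follows. A plain list stands in for the Pandas DataFrame (positional slicing behaves the same way), and `slice_rows` is a hypothetical helper, not the PySpark function itself.

```python
def slice_rows(rows, parallelism):
    """Split `rows` into at most `parallelism` contiguous slices of near-equal size."""
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1")
    # Ceiling division; max() guards against a zero step for empty input.
    step = max(1, -(-len(rows) // parallelism))
    return [rows[start:start + step] for start in range(0, len(rows), step)]


slices = slice_rows(list(range(10)), 4)
print(slices)
```

Each slice would then become one Arrow record batch, so the number of batches never exceeds the parallelism.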

## How was this patch tested?

Added new unit test to create DataFrame with and without the optimization enabled, then compare results.

Author: Bryan Cutler <cutlerb@gmail.com>

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #19459 from BryanCutler/arrow-createDataFrame-from_pandas-SPARK-20791.

## What changes were proposed in this pull request?

Currently, when a pandas.DataFrame that contains a timestamp of type 'datetime64[ns]' is converted to a Spark DataFrame with `createDataFrame`, the values are interpreted as LongType. This fix checks for a timestamp type and converts it to microseconds, which allows Spark to read it as TimestampType.

## How was this patch tested?

Added unit test to verify Spark schema is expected for TimestampType and DateType when created from pandas

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #19646 from BryanCutler/pyspark-non-arrow-createDataFrame-ts-fix-SPARK-22417.

## What changes were proposed in this pull request?

**Context**

While reviewing https://github.com/apache/spark/pull/17227, I realised here we type-dispatch per record. The PR itself is fine in terms of performance as is but this prints a prefix, `"obj"` in exception message as below:

```

from pyspark.sql.types import *

schema = StructType([StructField('s', IntegerType(), nullable=False)])

spark.createDataFrame([["1"]], schema)

...

TypeError: obj.s: IntegerType can not accept object '1' in type <type 'str'>

```

I suggested getting rid of this, but while investigating I realised my approach might bring a performance regression, as it is a hot path.

Cleanly getting rid of the prefix just for SPARK-19507 and https://github.com/apache/spark/pull/17227 needs more changes, so I decided to fix both issues together.

**Proposal**

This PR tries to

- get rid of per-record type dispatch, as we do in many code paths in Scala, so that it improves the performance (roughly 25% improvement) - SPARK-21296

This was tested with simple code, `spark.createDataFrame(range(1000000), "int")`. However, I am quite sure the actual improvement in practice is larger than this, in particular when the schema is complicated.

- improve the error message in exceptions by describing field information as prose - SPARK-19507
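
The idea behind removing per-record type dispatch can be sketched in plain Python: decide which check applies once, when the schema is known, and return a closure that only runs that check per record. `make_verifier`, its type names, and the message wording are illustrative assumptions, not the PySpark implementation.

```python
def make_verifier(type_name, field_name=None):
    """Pick the check for `type_name` once and return a per-record checker."""
    accepted = {"int": int, "string": str}
    if type_name not in accepted:
        raise ValueError("unsupported type: %s" % type_name)
    expected = accepted[type_name]
    # Describe the field in prose rather than with an "obj." prefix.
    prefix = "field %s: " % field_name if field_name else ""

    def verify(value):
        # Only this isinstance check runs per record; the dispatch already happened.
        # bool is rejected explicitly because it is a subclass of int in Python.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise TypeError("%s%s can not accept object %r in type %s"
                            % (prefix, type_name, value, type(value)))

    return verify


verify_s = make_verifier("int", field_name="s")
for record in [1, 2, 3]:
    verify_s(record)      # passes silently

try:
    verify_s("1")
except TypeError as error:
    print(error)
```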

## How was this patch tested?

Manually tested and unit tests were added in `python/pyspark/sql/tests.py`.

Benchmark - codes: https://gist.github.com/HyukjinKwon/c3397469c56cb26c2d7dd521ed0bc5a3

Error message - codes: https://gist.github.com/HyukjinKwon/b1b2c7f65865444c4a8836435100e398

**Before**

Benchmark:

- Results: https://gist.github.com/HyukjinKwon/4a291dab45542106301a0c1abcdca924

Error message

- Results: https://gist.github.com/HyukjinKwon/57b1916395794ce924faa32b14a3fe19

**After**

Benchmark

- Results: https://gist.github.com/HyukjinKwon/21496feecc4a920e50c4e455f836266e

Error message

- Results: https://gist.github.com/HyukjinKwon/7a494e4557fe32a652ce1236e504a395

Closes #17227

Author: hyukjinkwon <gurwls223@gmail.com>

Author: David Gingrich <david@textio.com>

Closes #18521 from HyukjinKwon/python-type-dispatch.

Now that Structured Streaming has been out for several Spark releases and has large production use cases, the `Experimental` label is no longer appropriate. I've left `InterfaceStability.Evolving`, however, as I think we may make a few changes to the pluggable Source & Sink API in Spark 2.3.

Author: Michael Armbrust <michael@databricks.com>

Closes #18065 from marmbrus/streamingGA.

## What changes were proposed in this pull request?

During SparkSession initialization, we store the created SparkSession instance in the class variable `_instantiatedContext`, so that a later call to `SparkSession.builder.getOrCreate()` can retrieve the existing instance.

However, when the active SparkContext is stopped and we create a new SparkContext, the existing SparkSession is still associated with the stopped SparkContext, so operations on this existing SparkSession fail.

We need to detect this case in SparkSession and renew the class variable `_instantiatedContext` if needed.
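A minimal sketch of the renewal logic, using plain Python stand-ins rather than the real classes: `Context`, `Session`, `_instantiated`, and `get_or_create` are hypothetical names for illustration, and the actual check in PySpark may differ.

```python
class Context:
    """Stand-in for a SparkContext that can be stopped (hypothetical)."""

    def __init__(self):
        self.stopped = False

    def stop(self):
        self.stopped = True


class Session:
    """Stand-in for a SparkSession that caches one instance (hypothetical)."""

    _instantiated = None  # plays the role of the cached class variable

    def __init__(self, context):
        self.context = context
        Session._instantiated = self

    @classmethod
    def get_or_create(cls, context):
        cached = cls._instantiated
        # Renew the cached session if there is none yet, or if the context
        # it is tied to has been stopped.
        if cached is None or cached.context.stopped:
            cached = cls(context)
        return cached


first = Session.get_or_create(Context())
first.context.stop()
second = Session.get_or_create(Context())  # a fresh session, not `first`
```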

## How was this patch tested?

New test added in PySpark.


Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #16454 from viirya/fix-pyspark-sparksession.

## What changes were proposed in this pull request?

SQLConf is session-scoped and mutable. However, we do have the requirement for a static SQL conf, which is global and immutable, e.g. the `schemaStringThreshold` in `HiveExternalCatalog`, the flag to enable/disable hive support, the global temp view database in https://github.com/apache/spark/pull/14897.

Actually, we've already implemented static SQL conf implicitly via `SparkConf`. This PR just makes it explicit and exposes it to users, so that they can see the config value via a SQL command or `SparkSession.conf`, and forbids users from setting or unsetting a static SQL conf.
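The intended behaviour can be sketched in plain Python as follows. This is only an illustration under assumptions: the `RuntimeConf` class, its key names, and the error wording are hypothetical and are not the implementation in this PR.

```python
class RuntimeConf:
    """Session conf that exposes static entries read-only (hypothetical sketch)."""

    def __init__(self, static_values):
        self._static = dict(static_values)  # global, fixed at startup
        self._session = {}                  # session-scoped, mutable

    def get(self, key):
        # Static entries are visible through the same lookup as session ones.
        if key in self._static:
            return self._static[key]
        return self._session[key]

    def set(self, key, value):
        if key in self._static:
            raise ValueError("Cannot modify the value of a static config: " + key)
        self._session[key] = value

    def unset(self, key):
        if key in self._static:
            raise ValueError("Cannot modify the value of a static config: " + key)
        self._session.pop(key, None)


# "example.static.key" and "example.session.key" are made-up keys.
conf = RuntimeConf({"example.static.key": "fixed"})
conf.set("example.session.key", "1")
```

Reading `example.static.key` works like any other key, while calling `set` or `unset` on it raises an error.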

## How was this patch tested?

New tests in `SQLConfSuite`.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #15295 from cloud-fan/global-conf.