### What changes were proposed in this pull request?

This PR proposes to redesign the PySpark documentation.

I made a demo site to make it easier to review: https://hyukjin-spark.readthedocs.io/en/stable/reference/index.html.

Here is the initial draft for the final PySpark docs shape: https://hyukjin-spark.readthedocs.io/en/latest/index.html.

In more detail, this PR proposes:

1. Use the [pydata_sphinx_theme](https://github.com/pandas-dev/pydata-sphinx-theme) theme, which [pandas](https://pandas.pydata.org/docs/) and [Koalas](https://koalas.readthedocs.io/en/latest/) also use. The CSS overrides are ported from Koalas. The colours in the CSS were chosen by designers for use in Spark.

2. Use the Sphinx option to separate `source` and `build` directories as the documentation pages will likely grow.

3. Port current API documentation into the new style. It mimics Koalas and pandas to use the theme most effectively.

One disadvantage of this approach is that APIs and classes have to be listed explicitly; however, I think this isn't a big issue in PySpark since we're conservative about adding APIs. I also intentionally listed only classes, not functions, in ML and MLlib to keep the lists relatively easy to manage.
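For item 1, switching themes in a Sphinx `conf.py` is mostly a one-line change. The following is a minimal sketch, assuming the `pydata-sphinx-theme` package is installed and Sphinx 1.8 or later (for `html_css_files`). The stylesheet name `css/pyspark.css` is a placeholder for illustration and is not necessarily the file this PR adds.

```python
# Sketch of the theme-related part of a Sphinx conf.py.
# Assumes `pip install pydata-sphinx-theme` has been run.
html_theme = "pydata_sphinx_theme"

# Directory (relative to conf.py) that holds custom static assets.
html_static_path = ["_static"]

# Extra stylesheet layered on top of the theme (hypothetical file name).
html_css_files = ["css/pyspark.css"]
```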

### Why are the changes needed?

I often hear complaints from users that the current PySpark documentation (https://spark.apache.org/docs/latest/api/python/index.html) is pretty messy to read compared to other projects such as [pandas](https://pandas.pydata.org/docs/) and [Koalas](https://koalas.readthedocs.io/en/latest/).

It would be nicer if we could organise it for easier navigation instead of just listing all classes, methods and attributes.

Also, the documentation has been there from almost the very first version of PySpark. Maybe it's time to update it.

### Does this PR introduce _any_ user-facing change?

Yes, PySpark API documentation will be redesigned.

### How was this patch tested?

Manually tested, and a demo site was built to show the result.

Closes #29188 from HyukjinKwon/SPARK-32179.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

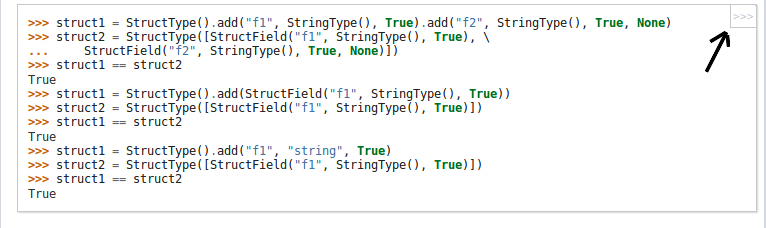

## What changes were proposed in this pull request?

Add a non-intrusive button to the Python API documentation that removes the ">>>" prompts and the outputs from code examples, making the code easier to copy.

For example, the code snippet below is difficult to copy from the documentation because of the ">>>" prompts:

```
>>> l = [('Alice', 1)]
>>> spark.createDataFrame(l).collect()
[Row(_1='Alice', _2=1)]
```

After the copy button in the corner of the code block is pressed, it becomes the following, which is easier to copy:

```
l = [('Alice', 1)]
spark.createDataFrame(l).collect()
```
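The transformation itself is performed in the browser by `copybutton.js`. Purely as an illustration of the behaviour, here is a Python sketch of the same idea: keep lines that start with a `>>>` or `...` prompt, strip the prompt, and drop everything else as output. The function name `strip_prompts` is made up for this example, and the logic is a simplification (an output line that happens to begin with `... ` would be kept by mistake).

```python
def strip_prompts(snippet: str) -> str:
    """Keep only the input lines of a doctest-style snippet, without prompts."""
    kept = []
    for line in snippet.splitlines():
        stripped = line.lstrip()
        if stripped.startswith(">>> ") or stripped.startswith("... "):
            # Drop the 4-character prompt and keep the code.
            kept.append(stripped[4:])
        elif stripped in (">>>", "..."):
            # A bare prompt stands for an empty input line.
            kept.append("")
        # Anything else is treated as output and dropped.
    return "\n".join(kept)


example = """>>> l = [('Alice', 1)]
>>> spark.createDataFrame(l).collect()
[Row(_1='Alice', _2=1)]"""

print(strip_prompts(example))
```

Running it on the snippet above prints the two input lines without prompts and omits the `[Row(...)]` output line.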

## File changes

Made changes to python/docs/conf.py and copybutton.js. This only modifies the Sphinx frontend; no changes were made to the documentation itself, and the build process for the documentation remains the same.

copybutton.js -> This JS snippet was taken from the official python.org documentation site.

## How was this patch tested?

NA

Closes #24456 from sangramga/copybutton.

Authored-by: sangramga <sangramga@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Mark ml.classification algorithms as experimental to match the Scala algorithms, update the PyDoc for thresholds on `LogisticRegression` to have the same level of info as Scala, and enable MathJax for PyDoc.

## How was this patch tested?

Built the docs locally and ran the PySpark SQL tests.

Author: Holden Karau <holden@us.ibm.com>

Closes #12938 from holdenk/SPARK-15162-SPARK-15164-update-some-pydocs.

We should have lint rules using sphinx to automatically catch the pydoc issues that are sometimes introduced.

Right now `./dev/lint-python` skips building the docs if Sphinx isn't present. It might make sense to fail hard instead; it's just a matter of whether we want to insist that all PySpark developers have Sphinx installed.
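The skip-versus-fail choice can be sketched as below. The real `./dev/lint-python` is a shell script, so this Python version is only an illustration; the function name `docs_lint_action` and the `fail_hard` flag are hypothetical.

```python
import shutil
from typing import Callable, Optional


def docs_lint_action(
    fail_hard: bool,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> str:
    """Decide what a lint script should do about the docs build.

    Returns "build" when sphinx-build is on PATH, otherwise "fail" or
    "skip" depending on whether missing Sphinx is treated as an error.
    """
    if which("sphinx-build") is not None:
        return "build"
    return "fail" if fail_hard else "skip"


# Current behaviour described above: skip quietly when Sphinx is missing.
print(docs_lint_action(fail_hard=False))
```

The `which` parameter exists only so the lookup can be replaced in tests; by default it uses `shutil.which` from the standard library.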

Author: Holden Karau <holden@us.ibm.com>

Closes #11109 from holdenk/SPARK-13154-add-pydoc-lint-for-docs.

Use `RELEASE_VERSION` when building the Python API docs.
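One common way to do this in a Sphinx `conf.py` is to read the environment variable and fall back to a development default. This is a sketch under that assumption; the fallback value `"master"` is illustrative.

```python
import os

# Short version string used for development builds (assumed default).
version = "master"

# Full version shown in the docs. A release script can override it by
# exporting RELEASE_VERSION before invoking the Sphinx build.
release = os.environ.get("RELEASE_VERSION", version)
```

With this in place, running the build with `RELEASE_VERSION` exported stamps the generated pages with that value, while a plain `make html` keeps the default.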

Author: Davies Liu <davies@databricks.com>

Closes #4731 from davies/api_version and squashes the following commits:

c9744c9 [Davies Liu] Update create-release.sh

08cbc3f [Davies Liu] fix python docs

This PR allows Python users to set params in constructors and in `setParams`, where we use the `keyword_only` decorator to force keyword arguments. The trade-off is discussed in the design doc of SPARK-4586.

Generated doc:

CC: davies rxin

Author: Xiangrui Meng <meng@databricks.com>

Closes #4564 from mengxr/py-pipeline-kw and squashes the following commits:

fedf720 [Xiangrui Meng] use toDF

d565f2c [Xiangrui Meng] Merge remote-tracking branch 'apache/master' into py-pipeline-kw

cbc15d3 [Xiangrui Meng] fix style

5032097 [Xiangrui Meng] update pipeline signature

950774e [Xiangrui Meng] simplify keyword_only and update constructor/setParams signatures

fdde5fc [Xiangrui Meng] fix style

c9384b8 [Xiangrui Meng] fix sphinx doc

8e59180 [Xiangrui Meng] add setParams and make constructors take params, where we force keyword args
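The idea behind a `keyword_only` decorator, as described in this commit, can be sketched as follows. This is a minimal illustration and not necessarily the exact implementation in PySpark; `ToyTokenizer` is a made-up stand-in class.

```python
import functools


def keyword_only(func):
    """Reject positional arguments (other than self) for a method."""

    @functools.wraps(func)
    def wrapper(self, *args, **kwargs):
        if args:
            raise TypeError(
                "Method %s forces keyword arguments." % func.__name__
            )
        return func(self, **kwargs)

    return wrapper


class ToyTokenizer:  # hypothetical class, not the PySpark Tokenizer
    @keyword_only
    def __init__(self, inputCol=None, outputCol=None):
        self.inputCol = inputCol
        self.outputCol = outputCol

    @keyword_only
    def setParams(self, inputCol=None, outputCol=None):
        # Only overwrite the params that were actually supplied.
        if inputCol is not None:
            self.inputCol = inputCol
        if outputCol is not None:
            self.outputCol = outputCol
        return self


tok = ToyTokenizer(inputCol="text", outputCol="words")
tok.setParams(outputCol="tokens")
```

Calling `ToyTokenizer("text", "words")` raises `TypeError`, which keeps call sites readable and lets parameters be added or reordered later without silently changing what positional arguments mean.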

This PR adds a Python API for ML pipelines and parameters. The design doc can be found on the JIRA page. It includes transformers and an estimator to demo the simple text classification example code.
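To make the transformer/estimator/pipeline relationship concrete, here is a toy model in plain Python. It is not the PySpark API: rows are plain dicts instead of DataFrames, and `ToyTokenizer` and `MajorityLabel` are invented stages used only to show how fitting a pipeline turns estimators into fitted transformers.

```python
class Transformer:
    """Maps a list of rows to a new list of rows."""

    def transform(self, rows):
        raise NotImplementedError


class Estimator:
    """Learns from rows and returns a fitted Transformer."""

    def fit(self, rows):
        raise NotImplementedError


class ToyTokenizer(Transformer):
    def transform(self, rows):
        # Add a "words" field with the lower-cased, whitespace-split text.
        return [dict(r, words=r["text"].lower().split()) for r in rows]


class MajorityLabelModel(Transformer):
    def __init__(self, label):
        self.label = label

    def transform(self, rows):
        return [dict(r, prediction=self.label) for r in rows]


class MajorityLabel(Estimator):
    def fit(self, rows):
        labels = [r["label"] for r in rows]
        return MajorityLabelModel(max(set(labels), key=labels.count))


class PipelineModel(Transformer):
    def __init__(self, stages):
        self.stages = stages

    def transform(self, rows):
        for stage in self.stages:
            rows = stage.transform(rows)
        return rows


class Pipeline(Estimator):
    def __init__(self, stages):
        self.stages = stages

    def fit(self, rows):
        fitted = []
        for stage in self.stages:
            if isinstance(stage, Estimator):
                stage = stage.fit(rows)  # estimator becomes a model
            fitted.append(stage)
            rows = stage.transform(rows)  # feed output to the next stage
        return PipelineModel(fitted)


train = [
    {"text": "spark is fast", "label": 1.0},
    {"text": "hadoop mapreduce", "label": 0.0},
    {"text": "spark ml pipeline", "label": 1.0},
]
model = Pipeline([ToyTokenizer(), MajorityLabel()]).fit(train)
result = model.transform([{"text": "Hello Spark"}])
```

The fitted `PipelineModel` is itself a transformer, so the same sequence of steps applied during training is replayed on new data.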

TODO:

- [x] handle parameters in LRModel

- [x] unit tests

- [x] missing some docs

CC: davies jkbradley

Author: Xiangrui Meng <meng@databricks.com>

Author: Davies Liu <davies@databricks.com>

Closes #4151 from mengxr/SPARK-4586 and squashes the following commits:

415268e [Xiangrui Meng] remove inherit_doc from __init__

edbd6fe [Xiangrui Meng] move Identifiable to ml.util

44c2405 [Xiangrui Meng] Merge pull request #2 from davies/ml

dd1256b [Xiangrui Meng] Merge remote-tracking branch 'apache/master' into SPARK-4586

14ae7e2 [Davies Liu] fix docs

54ca7df [Davies Liu] fix tests

78638df [Davies Liu] Merge branch 'SPARK-4586' of github.com:mengxr/spark into ml

fc59a02 [Xiangrui Meng] Merge remote-tracking branch 'apache/master' into SPARK-4586

1dca16a [Davies Liu] refactor

090b3a3 [Davies Liu] Merge branch 'master' of github.com:apache/spark into ml

0882513 [Xiangrui Meng] update doc style

a4f4dbf [Xiangrui Meng] add unit test for LR

7521d1c [Xiangrui Meng] add unit tests to HashingTF and Tokenizer

ba0ba1e [Xiangrui Meng] add unit tests for pipeline

0586c7b [Xiangrui Meng] add more comments to the example

5153cff [Xiangrui Meng] simplify java models

036ca04 [Xiangrui Meng] gen numFeatures

46fa147 [Xiangrui Meng] update mllib/pom.xml to include python files in the assembly

1dcc17e [Xiangrui Meng] update code gen and make param appear in the doc

f66ba0c [Xiangrui Meng] make params a property

d5efd34 [Xiangrui Meng] update doc conf and move embedded param map to instance attribute

f4d0fe6 [Xiangrui Meng] use LabeledDocument and Document in example

05e3e40 [Xiangrui Meng] update example

d3e8dbe [Xiangrui Meng] more docs optimize pipeline.fit impl

56de571 [Xiangrui Meng] fix style

d0c5bb8 [Xiangrui Meng] a working copy

bce72f4 [Xiangrui Meng] Merge remote-tracking branch 'apache/master' into SPARK-4586

17ecfb9 [Xiangrui Meng] code gen for shared params

d9ea77c [Xiangrui Meng] update doc

c18dca1 [Xiangrui Meng] make the example working

dadd84e [Xiangrui Meng] add base classes and docs

a3015cf [Xiangrui Meng] add Estimator and Transformer

46eea43 [Xiangrui Meng] a pipeline in python

33b68e0 [Xiangrui Meng] a working LR

The Sphinx documents contain corrupted reST formatting and produce some build warnings.

The purpose of this issue is the same as https://issues.apache.org/jira/browse/SPARK-3773.

commit: 0e8203f4fb

output

```
$ cd ./python/docs
$ make clean html
rm -rf _build/*
sphinx-build -b html -d _build/doctrees . _build/html
Making output directory...
Running Sphinx v1.2.3
loading pickled environment... not yet created
building [html]: targets for 4 source files that are out of date
updating environment: 4 added, 0 changed, 0 removed
reading sources... [100%] pyspark.sql
/Users/<user>/MyRepos/Scala/spark/python/pyspark/mllib/feature.py:docstring of pyspark.mllib.feature.Word2VecModel.findSynonyms:4: WARNING: Field list ends without a blank line; unexpected unindent.
/Users/<user>/MyRepos/Scala/spark/python/pyspark/mllib/feature.py:docstring of pyspark.mllib.feature.Word2VecModel.transform:3: WARNING: Field list ends without a blank line; unexpected unindent.
/Users/<user>/MyRepos/Scala/spark/python/pyspark/sql.py:docstring of pyspark.sql:4: WARNING: Bullet list ends without a blank line; unexpected unindent.
looking for now-outdated files... none found
pickling environment... done
checking consistency... done
preparing documents... done
writing output... [100%] pyspark.sql
writing additional files... (12 module code pages) _modules/index search
copying static files... WARNING: html_static_path entry u'/Users/<user>/MyRepos/Scala/spark/python/docs/_static' does not exist
done
copying extra files... done
dumping search index... done
dumping object inventory... done
build succeeded, 4 warnings.
Build finished. The HTML pages are in _build/html.
```
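The "Field list ends without a blank line" warnings in the log above typically appear when a `:param:`/`:return:` field list is followed directly by ordinary text. A sketch of a docstring laid out so that reST parses it cleanly is shown below; `find_synonyms` here is a hypothetical placeholder function, not the actual `Word2VecModel` method.

```python
def find_synonyms(word, num):
    """
    Find synonyms of a word.

    :param word: a word to look up
    :param num: number of synonyms to return
    :return: list of (word, similarity) pairs

    Note: local use only.
    """
    # Placeholder body; only the docstring layout matters here.
    return []
```

The blank line between the last field and the trailing note is what ends the field list properly.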

Author: cocoatomo <cocoatomo77@gmail.com>

Closes #2766 from cocoatomo/issues/3909-sphinx-build-warnings and squashes the following commits:

2c7faa8 [cocoatomo] [SPARK-3909][PySpark][Doc] A corrupted format in Sphinx documents and building warnings

Retire Epydoc, use Sphinx to generate API docs.

Refine Sphinx docs, also convert some docstrings into Sphinx style.

It looks like:

Author: Davies Liu <davies.liu@gmail.com>

Closes #2689 from davies/docs and squashes the following commits:

bf4a0a5 [Davies Liu] fix links

3fb1572 [Davies Liu] fix _static in jekyll

65a287e [Davies Liu] fix scripts and logo

8524042 [Davies Liu] Merge branch 'master' of github.com:apache/spark into docs

d5b874a [Davies Liu] Merge branch 'master' of github.com:apache/spark into docs

4bc1c3c [Davies Liu] refactor

746d0b6 [Davies Liu] @param -> :param

240b393 [Davies Liu] replace epydoc with sphinx doc
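The `@param -> :param` conversion mentioned in the commits above is mechanical for the common fields. As an illustration only (the commit does not say how the conversion was done), a regex-based sketch might look like this; `epydoc_to_sphinx` is a made-up helper name and it handles just four field types.

```python
import re

# Epydoc fields look like "@param name: text"; Sphinx uses ":param name: text".
_EPYDOC_FIELD = re.compile(r"^([ \t]*)@(param|type|return|rtype)\b", re.MULTILINE)


def epydoc_to_sphinx(docstring: str) -> str:
    """Rewrite leading Epydoc field markers to Sphinx field markers."""
    return _EPYDOC_FIELD.sub(r"\1:\2", docstring)


before = "Return a new RDD.\n\n@param f: a function\n@return: an RDD"
print(epydoc_to_sphinx(before))
```

Only markers at the start of a line (after optional indentation) are rewritten, so an `@` inside running text, such as an email address, is left alone.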

Using Sphinx to generate API docs for PySpark.

requirement: Sphinx

```
$ cd python/docs/
$ make html
```

The generated API docs will be located at python/docs/_build/html/index.html

It can co-exist with the docs generated by Epydoc.

This is the first working version. After it is merged, we can continue to improve it and eventually replace Epydoc.

Author: Davies Liu <davies.liu@gmail.com>

Closes #2292 from davies/sphinx and squashes the following commits:

425a3b1 [Davies Liu] cleanup

1573298 [Davies Liu] move docs to python/docs/

5fe3903 [Davies Liu] Merge branch 'master' into sphinx

9468ab0 [Davies Liu] fix makefile

b408f38 [Davies Liu] address all comments

e2ccb1b [Davies Liu] update name and version

9081ead [Davies Liu] generate PySpark API docs using Sphinx