### What changes were proposed in this pull request?

1.Add version information to the configuration of `R`.

2.Update the docs of `R`.

I sorted out some information show below.

Item name | Since version | JIRA ID | Commit ID | Note

-- | -- | -- | -- | --

spark.r.backendConnectionTimeout | 2.1.0 | SPARK-17919 | 2881a2d1d1a650a91df2c6a01275eba14a43b42a#diff-025470e1b7094d7cf4a78ea353fb3981 |

spark.r.numRBackendThreads | 1.4.0 | SPARK-8282 | 28e8a6ea65fd08ab9cefc4d179d5c66ffefd3eb4#diff-697f7f2fc89808e0113efc71ed235db2 |

spark.r.heartBeatInterval | 2.1.0 | SPARK-17919 | 2881a2d1d1a650a91df2c6a01275eba14a43b42a#diff-fe903bf14db371aa320b7cc516f2463c |

spark.sparkr.r.command | 1.5.3 | SPARK-10971 | 9695f452e86a88bef3bcbd1f3c0b00ad9e9ac6e1#diff-025470e1b7094d7cf4a78ea353fb3981 |

spark.r.command | 1.5.3 | SPARK-10971 | 9695f452e86a88bef3bcbd1f3c0b00ad9e9ac6e1#diff-025470e1b7094d7cf4a78ea353fb3981 |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Exists UT

Closes#27708 from beliefer/add-version-to-R-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes#27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Rename config `spark.resources.discovery.plugin` to `spark.resources.discoveryPlugin`.

Also, as a side minor change: labeled `ResourceDiscoveryScriptPlugin` as `DeveloperApi` since it's not for end user.

### Why are the changes needed?

Discovery plugin doesn't need to reserve the "discovery" namespace here and it's more consistent with the interface name `ResourceDiscoveryPlugin` if we use `discoveryPlugin` instead.

### Does this PR introduce any user-facing change?

No, it's newly added in Spark3.0.

### How was this patch tested?

Pass Jenkins.

Closes#27689 from Ngone51/spark_30689_followup.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

fix the warning for limiting resources when we don't know the number of executor cores. The issue is that there are places in the Spark code that use cores/task cpus to calculate slots and until the entire Stage level scheduling feature is in, we have to rely on the cores being the limiting resource.

Change the check to only warn when custom resources are specified.

### Why are the changes needed?

fix the check and warn when we should

### Does this PR introduce any user-facing change?

A warning is printed

### How was this patch tested?

manually tested spark-shell with standalone mode, yarn, local mode.

Closes#27686 from tgravescs/SPARK-30942.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Set `FAIL_ON_UNKNOWN_PROPERTIES` to `false` in `JsonProtocol` to allow ignore unknown fields in a Spark event. After this change, if we add new fields to a Spark event parsed by `ObjectMapper`, the event json string generated by a new Spark version can still be read by an old Spark History Server.

Since Spark History Server is an extra service, it usually takes time to upgrade, and it's possible that a Spark application is upgraded before SHS. Forwards-compatibility will allow an old SHS to support new Spark applications (may lose some new features but most of functions should still work).

### Why are the changes needed?

`JsonProtocol` is supposed to provide strong backwards-compatibility and forwards-compatibility guarantees: any version of Spark should be able to read JSON output written by any other version, including newer versions.

However, the forwards-compatibility guarantee is broken for events parsed by `ObjectMapper`. If a new field is added to an event parsed by `ObjectMapper` (e.g., 6dc5921e66 (diff-dc5c7a41fbb7479cef48b67eb41ad254R33)), the event json string generated by a new Spark version cannot be parsed by an old version of SHS right now.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

The new added tests.

Closes#27680 from zsxwing/SPARK-30936.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1.Add version information to the configuration of `Deploy`.

2.Update the docs of `Deploy`.

I sorted out some information show below.

Item name | Since version | JIRA ID | Commit ID | Note

-- | -- | -- | -- | --

spark.deploy.recoveryMode | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-29dffdccd5a7f4c8b496c293e87c8668 |

spark.deploy.recoveryMode.factory | 1.2.0 | SPARK-1830 | deefd9d7377a8091a1d184b99066febd0e9f6afd#diff-29dffdccd5a7f4c8b496c293e87c8668 | This configuration appears in branch-1.3, but the version number in the pom.xml file corresponding to the commit is 1.2.0-SNAPSHOT

spark.deploy.recoveryDirectory | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-29dffdccd5a7f4c8b496c293e87c8668 |

spark.deploy.zookeeper.url | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-4457313ca662a1cd60197122d924585c |

spark.deploy.zookeeper.dir | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-a84228cb45c7d5bd93305a1f5bf720b6 |

spark.deploy.retainedApplications | 0.8.0 | None | 46eecd110a4017ea0c86cbb1010d0ccd6a5eb2ef#diff-29dffdccd5a7f4c8b496c293e87c8668 |

spark.deploy.retainedDrivers | 1.1.0 | None | 7446f5ff93142d2dd5c79c63fa947f47a1d4db8b#diff-29dffdccd5a7f4c8b496c293e87c8668 |

spark.dead.worker.persistence | 0.8.0 | None | 46eecd110a4017ea0c86cbb1010d0ccd6a5eb2ef#diff-29dffdccd5a7f4c8b496c293e87c8668 |

spark.deploy.maxExecutorRetries | 1.6.3 | SPARK-16956 | ace458f0330f22463ecf7cbee7c0465e10fba8a8#diff-29dffdccd5a7f4c8b496c293e87c8668 |

spark.deploy.spreadOut | 0.6.1 | None | bb2b9ff37cd2503cc6ea82c5dd395187b0910af0#diff-0e7ae91819fc8f7b47b0f97be7116325 |

spark.deploy.defaultCores | 0.9.0 | None | d8bcc8e9a095c1b20dd7a17b6535800d39bff80e#diff-29dffdccd5a7f4c8b496c293e87c8668 |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Exists UT

Closes#27668 from beliefer/add-version-to-deploy-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Spark `ConfigEntry` and `ConfigBuilder` missing Spark version information of each configuration at release. This is not good for Spark user when they visiting the page of spark configuration.

http://spark.apache.org/docs/latest/configuration.html

The new Spark SQL config docs looks like:

```

> SET -v

spark.sql.adaptive.enabled false When true, enable adaptive query execution.

spark.sql.adaptive.nonEmptyPartitionRatioForBroadcastJoin 0.2 The relation with a non-empty partition ratio lower than this config will not be considered as the build side of a broadcast-hash join in adaptive execution regardless of its size.This configuration only has an effect when 'spark.sql.adaptive.enabled' is enabled.

spark.sql.adaptive.optimizeSkewedJoin.enabled true When true and adaptive execution is enabled, a skewed join is automatically handled at runtime.

spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionFactor 10 A partition is considered as a skewed partition if its size is larger than this factor multiple the median partition size and also larger than spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionSizeThreshold

spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionMaxSplits 5 Configures the maximum number of task to handle a skewed partition in adaptive skewedjoin.

spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionSizeThreshold 64MB Configures the minimum size in bytes for a partition that is considered as a skewed partition in adaptive skewed join.

spark.sql.adaptive.shuffle.fetchShuffleBlocksInBatch.enabled true Whether to fetch the continuous shuffle blocks in batch. Instead of fetching blocks one by one, fetching continuous shuffle blocks for the same map task in batch can reduce IO and improve performance. Note, multiple continuous blocks exist in single fetch request only happen when 'spark.sql.adaptive.enabled' and 'spark.sql.adaptive.shuffle.reducePostShufflePartitions.enabled' is enabled, this feature also depends on a relocatable serializer, the concatenation support codec in use and the new version shuffle fetch protocol.

spark.sql.adaptive.shuffle.localShuffleReader.enabled true When true and 'spark.sql.adaptive.enabled' is enabled, this enables the optimization of converting the shuffle reader to local shuffle reader for the shuffle exchange of the broadcast hash join in probe side.

spark.sql.adaptive.shuffle.maxNumPostShufflePartitions <undefined> The advisory maximum number of post-shuffle partitions used in adaptive execution. This is used as the initial number of pre-shuffle partitions. By default it equals to spark.sql.shuffle.partitions. This configuration only has an effect when 'spark.sql.adaptive.enabled' and 'spark.sql.adaptive.shuffle.reducePostShufflePartitions.enabled' is enabled.

```

**Note**: Because there are so many configuration items that are exposed and require a lot of finishing, I will add the version numbers of these configuration items in another PR.

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

Exists UT

Closes#27592 from beliefer/add-version-to-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

Fix for #27395

### What changes were proposed in this pull request?

The `allGather` method is added to the `BarrierTaskContext`. This method contains the same functionality as the `BarrierTaskContext.barrier` method; it blocks the task until all tasks make the call, at which time they may continue execution. In addition, the `allGather` method takes an input message. Upon returning from the `allGather` the task receives a list of all the messages sent by all the tasks that made the `allGather` call.

### Why are the changes needed?

There are many situations where having the tasks communicate in a synchronized way is useful. One simple example is if each task needs to start a server to serve requests from one another; first the tasks must find a free port (the result of which is undetermined beforehand) and then start making requests, but to do so they each must know the port chosen by the other task. An `allGather` method would allow them to inform each other of the port they will run on.

### Does this PR introduce any user-facing change?

Yes, an `BarrierTaskContext.allGather` method will be available through the Scala, Java, and Python APIs.

### How was this patch tested?

Most of the code path is already covered by tests to the `barrier` method, since this PR includes a refactor so that much code is shared by the `barrier` and `allGather` methods. However, a test is added to assert that an all gather on each tasks partition ID will return a list of every partition ID.

An example through the Python API:

```python

>>> from pyspark import BarrierTaskContext

>>>

>>> def f(iterator):

... context = BarrierTaskContext.get()

... return [context.allGather('{}'.format(context.partitionId()))]

...

>>> sc.parallelize(range(4), 4).barrier().mapPartitions(f).collect()[0]

[u'3', u'1', u'0', u'2']

```

Closes#27640 from sarthfrey/master.

Lead-authored-by: sarthfrey-db <sarth.frey@databricks.com>

Co-authored-by: sarthfrey <sarth.frey@gmail.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

- Add missing `since` annotation.

- Don't show classes under `org.apache.spark.sql.dynamicpruning` package in API docs.

- Fix the scope of `xxxExactNumeric` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes#27560 from xuanyuanking/SPARK-30809.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This PR aims to upgrade Py4J to `0.10.9` for better Python 3.7 support in Apache Spark 3.0.0 (master/branch-3.0). This is not for `branch-2.4`.

- Apache Spark 3.0.0 is using `Py4J 0.10.8.1` (released on 2018-10-21) because `0.10.8.1` was the first official release to support Python 3.7.

- https://www.py4j.org/changelog.html#py4j-0-10-8-and-py4j-0-10-8-1

- `Py4J 0.10.9` was released on January 25th 2020 with better Python 3.7 support and `magic_member` bug fix.

- https://github.com/bartdag/py4j/releases/tag/0.10.9

- https://www.py4j.org/changelog.html#py4j-0-10-9

No.

Pass the Jenkins with the existing tests.

Closes#27641 from dongjoon-hyun/SPARK-30884.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, after https://github.com/apache/spark/pull/27313, it shows the warning about dynamic allocation which is disabled by default.

```bash

$ ./bin/spark-shell

```

```

...

20/02/18 11:04:56 WARN ResourceProfile: Please ensure that the number of slots available on your executors is

limited by the number of cores to task cpus and not another custom resource. If cores is not the limiting resource

then dynamic allocation will not work properly!

```

This PR brings back the configuration checking for this warning. Seems mistakenly removed at https://github.com/apache/spark/pull/27313/files#diff-364713d7776956cb8b0a771e9b62f82dL2841

### Why are the changes needed?

To remove false warning.

### Does this PR introduce any user-facing change?

Yes, it will don't show the warning. It's master only change so no user-facing to end users.

### How was this patch tested?

Manually tested.

Closes#27615 from HyukjinKwon/SPARK-29148.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The `allGather` method is added to the `BarrierTaskContext`. This method contains the same functionality as the `BarrierTaskContext.barrier` method; it blocks the task until all tasks make the call, at which time they may continue execution. In addition, the `allGather` method takes an input message. Upon returning from the `allGather` the task receives a list of all the messages sent by all the tasks that made the `allGather` call.

### Why are the changes needed?

There are many situations where having the tasks communicate in a synchronized way is useful. One simple example is if each task needs to start a server to serve requests from one another; first the tasks must find a free port (the result of which is undetermined beforehand) and then start making requests, but to do so they each must know the port chosen by the other task. An `allGather` method would allow them to inform each other of the port they will run on.

### Does this PR introduce any user-facing change?

Yes, an `BarrierTaskContext.allGather` method will be available through the Scala, Java, and Python APIs.

### How was this patch tested?

Most of the code path is already covered by tests to the `barrier` method, since this PR includes a refactor so that much code is shared by the `barrier` and `allGather` methods. However, a test is added to assert that an all gather on each tasks partition ID will return a list of every partition ID.

An example through the Python API:

```python

>>> from pyspark import BarrierTaskContext

>>>

>>> def f(iterator):

... context = BarrierTaskContext.get()

... return [context.allGather('{}'.format(context.partitionId()))]

...

>>> sc.parallelize(range(4), 4).barrier().mapPartitions(f).collect()[0]

[u'3', u'1', u'0', u'2']

```

Closes#27395 from sarthfrey/master.

Lead-authored-by: sarthfrey-db <sarth.frey@databricks.com>

Co-authored-by: sarthfrey <sarth.frey@gmail.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

(cherry picked from commit 57254c9719)

Signed-off-by: Xiangrui Meng <meng@databricks.com>

### What changes were proposed in this pull request?

Currently the uploadBlockSync api in BlockTransferService always succeeds irrespective of whether the BlockManager was able to successfully replicate a block on peer block manager or not. This PR makes sure that the NettyBlockRpcServer invokes onFailure callback when it is not able to replicate the block to itself because of any reason. The onFailure callback makes sure that the BlockTransferService on client side gets the failure and retry replication the Block on some other BlockManager.

### Why are the changes needed?

Currently the Spark Block replication retry logic is not working correctly. It doesn't retry on other Block managers even when replication fails on 1 of the peers.

A user can cache an DataFrame with different replication factor. Ex - df.persist(StorageLevel.MEMORY_ONLY_2) - This will cache each partition at two different BlockManagers. When a DataFrame partition is computed first time, it is firstly stored locally on the local BlockManager and then it is replicated to other block managers based on replication factor config. The replication of block to other block managers might fail because of memory/network etc issues and so there is already provision to retry the replication on some other peer based on "spark.storage.maxReplicationFailures" config, Currently when this replication fails, the client does not know about the failure and so it doesn't retry on other peers. This PR fixes this issue.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added Unit Test.

Closes#27539 from prakharjain09/bm_replicate.

Authored-by: Prakhar Jain <prakharjain09@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In `org.apache.spark.sql.execution.exchange.BroadcastExchangeExec#relationFuture` make a copy of `org.apache.spark.SparkContext#localProperties` and pass it to the broadcast execution thread in `org.apache.spark.sql.execution.exchange.BroadcastExchangeExec#executionContext`

### Why are the changes needed?

When executing `BroadcastExchangeExec`, the relationFuture is evaluated via a separate thread. The threads inherit the `localProperties` from `sparkContext` as they are the child threads.

These threads are created in the executionContext (thread pools). Each Thread pool has a default `keepAliveSeconds` of 60 seconds for idle threads.

Scenarios where the thread pool has threads which are idle and reused for a subsequent new query, the thread local properties will not be inherited from spark context (thread properties are inherited only on thread creation) hence end up having old or no properties set. This will cause taskset properties to be missing when properties are transferred by child thread via `sparkContext.runJob/submitJob`

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added UT

Closes#27266 from ajithme/broadcastlocalprop.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Make logging events dropping every 60s works fine, the orignal implementaion some times not working due to susequent events comming and updating the DroppedEventCounter

### Why are the changes needed?

Currenly, the logging may be skipped and delayed a long time under high concurrency, that make debugging hard. So This PR will try to fix it.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

NA

Closes#27002 from liupc/Improve-logging-dropped-events-and-logging-threadDump.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch addresses the post-hoc review comment linked here - https://github.com/apache/spark/pull/25670#discussion_r373304076

### Why are the changes needed?

We would like to explicitly document the direct relationship before we finish up structuring of configurations.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes#27576 from HeartSaVioR/SPARK-28869-FOLLOWUP-doc.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add docs to describe the config naming guideline.

### Why are the changes needed?

To encourage contributors to name configs more consistently.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes#27577 from cloud-fan/config.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This PR is based on an existing/previou PR - https://github.com/apache/spark/pull/19045

### What changes were proposed in this pull request?

This changes adds a decommissioning state that we can enter when the cloud provider/scheduler lets us know we aren't going to be removed immediately but instead will be removed soon. This concept fits nicely in K8s and also with spot-instances on AWS / preemptible instances all of which we can get a notice that our host is going away. For now we simply stop scheduling jobs, in the future we could perform some kind of migration of data during scale-down, or at least stop accepting new blocks to cache.

There is a design document at https://docs.google.com/document/d/1xVO1b6KAwdUhjEJBolVPl9C6sLj7oOveErwDSYdT-pE/edit?usp=sharing

### Why are the changes needed?

With more move to preemptible multi-tenancy, serverless environments, and spot-instances better handling of node scale down is required.

### Does this PR introduce any user-facing change?

There is no API change, however an additional configuration flag is added to enable/disable this behaviour.

### How was this patch tested?

New integration tests in the Spark K8s integration testing. Extension of the AppClientSuite to test decommissioning seperate from the K8s.

Closes#26440 from holdenk/SPARK-20628-keep-track-of-nodes-which-are-going-to-be-shutdown-r4.

Lead-authored-by: Holden Karau <hkarau@apple.com>

Co-authored-by: Holden Karau <holden@pigscanfly.ca>

Signed-off-by: Holden Karau <hkarau@apple.com>

### What changes were proposed in this pull request?

The `allGather` method is added to the `BarrierTaskContext`. This method contains the same functionality as the `BarrierTaskContext.barrier` method; it blocks the task until all tasks make the call, at which time they may continue execution. In addition, the `allGather` method takes an input message. Upon returning from the `allGather` the task receives a list of all the messages sent by all the tasks that made the `allGather` call.

### Why are the changes needed?

There are many situations where having the tasks communicate in a synchronized way is useful. One simple example is if each task needs to start a server to serve requests from one another; first the tasks must find a free port (the result of which is undetermined beforehand) and then start making requests, but to do so they each must know the port chosen by the other task. An `allGather` method would allow them to inform each other of the port they will run on.

### Does this PR introduce any user-facing change?

Yes, an `BarrierTaskContext.allGather` method will be available through the Scala, Java, and Python APIs.

### How was this patch tested?

Most of the code path is already covered by tests to the `barrier` method, since this PR includes a refactor so that much code is shared by the `barrier` and `allGather` methods. However, a test is added to assert that an all gather on each tasks partition ID will return a list of every partition ID.

An example through the Python API:

```python

>>> from pyspark import BarrierTaskContext

>>>

>>> def f(iterator):

... context = BarrierTaskContext.get()

... return [context.allGather('{}'.format(context.partitionId()))]

...

>>> sc.parallelize(range(4), 4).barrier().mapPartitions(f).collect()[0]

[u'3', u'1', u'0', u'2']

```

Closes#27395 from sarthfrey/master.

Lead-authored-by: sarthfrey-db <sarth.frey@databricks.com>

Co-authored-by: sarthfrey <sarth.frey@gmail.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

### What changes were proposed in this pull request?

This is another PR for stage level scheduling. In particular this adds changes to the dynamic allocation manager and the scheduler backend to be able to track what executors are needed per ResourceProfile. Note the api is still private to Spark until the entire feature gets in, so this functionality will be there but only usable by tests for profiles other then the DefaultProfile.

The main changes here are simply tracking things on a ResourceProfile basis as well as sending the executor requests to the scheduler backend for all ResourceProfiles.

I introduce a ResourceProfileManager in this PR that will track all the actual ResourceProfile objects so that we can keep them all in a single place and just pass around and use in datastructures the resource profile id. The resource profile id can be used with the ResourceProfileManager to get the actual ResourceProfile contents.

There are various places in the code that use executor "slots" for things. The ResourceProfile adds functionality to keep that calculation in it. This logic is more complex then it should due to standalone mode and mesos coarse grained not setting the executor cores config. They default to all cores on the worker, so calculating slots is harder there.

This PR keeps the functionality to make the cores the limiting resource because the scheduler still uses that for "slots" for a few things.

This PR does also add the resource profile id to the Stage and stage info classes to be able to test things easier. That full set of changes will come with the scheduler PR that will be after this one.

The PR stops at the scheduler backend pieces for the cluster manager and the real YARN support hasn't been added in this PR, that again will be in a separate PR, so this has a few of the API changes up to the cluster manager and then just uses the default profile requests to continue.

The code for the entire feature is here for reference: https://github.com/apache/spark/pull/27053/files although it needs to be upmerged again as well.

### Why are the changes needed?

Needed for stage level scheduling feature.

### Does this PR introduce any user-facing change?

No user facing api changes added here.

### How was this patch tested?

Lots of unit tests and manually testing. I tested on yarn, k8s, standalone, local modes. Ran both failure and success cases.

Closes#27313 from tgravescs/SPARK-29148.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

- Fix the scope of `Logging.initializeForcefully` so that it doesn't appear in subclasses' public methods. Right now, `sc.initializeForcefully(false, false)` is allowed to called.

- Don't show classes under `org.apache.spark.internal` package in API docs.

- Add missing `since` annotation.

- Fix the scope of `ArrowUtils` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes#27528 from zsxwing/audit-ss-apis.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Revert

#27360#27396#27374#27389

### Why are the changes needed?

BLAS need more performace tests, specially on sparse datasets.

Perfermance test of LogisticRegression (https://github.com/apache/spark/pull/27374) on sparse dataset shows that blockify vectors to matrices and use BLAS will cause performance regression.

LinearSVC and LinearRegression were also updated in the same way as LogisticRegression, so we need to revert them to make sure no regression.

### Does this PR introduce any user-facing change?

remove newly added param blockSize

### How was this patch tested?

reverted testsuites

Closes#27487 from zhengruifeng/revert_blockify_ii.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Add new config `spark.network.maxRemoteBlockSizeFetchToMem` fallback to the old config `spark.maxRemoteBlockSizeFetchToMem`.

### Why are the changes needed?

For naming consistency.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes#27463 from xuanyuanking/SPARK-26700-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Eagerly filter out zombie `TaskSetManager` before offering resources to reduce any overhead as possible.

And this PR also avoid doing `recomputeLocality` and `addPendingTask` when `TaskSetManager` is zombie.

### Why are the changes needed?

Zombie `TaskSetManager` could still exist in Pool's `schedulableQueue` when it has running tasks. Offering resources on a zombie `TaskSetManager` could bring unnecessary overhead and is meaningless.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes#27455 from Ngone51/exclude-zombie-tsm.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

If `updateBlockInfo` returns false, which means the `BlockManager` will re-register and report all blocks later. So, we may report two times for the same block, which causes `AppStatusListener` to count used memory for two times, too. As a result, the used memory can exceed the total memory.

So, this PR changes it to not post `SparkListenerBlockUpdated` when `updateBlockInfo` returns false. And, always clean up used memory whenever `AppStatusListener` receives `SparkListenerBlockManagerAdded`.

### Why are the changes needed?

This PR tries to fix negative memory usage in UI (https://user-images.githubusercontent.com/3488126/72131225-95e37e00-33b6-11ea-8708-6e5ed328d1ca.png, see #27144 ). Though, I'm not very sure this is the root cause for #27144 since known information is limited here.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added new tests by xuanyuanking

Closes#27306 from Ngone51/fix-possible-negative-memory.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: wuyi <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This change is to allow custom resource scheduler (GPUs,FPGAs,etc) resource discovery to be more flexible. Users are asking for it to work with hadoop 2.x versions that do not support resource scheduling in YARN and/or also they may not run in an isolated environment.

This change creates a plugin api that users can write their own resource discovery class that allows a lot more flexibility. The user can chain plugins for different resource types. The user specified plugins execute in the order specified and will fall back to use the discovery script plugin if they don't return information for a particular resource.

I had to open up a few of the classes to be public and change them to not be case classes and make them developer api in order for the the plugin to get enough information it needs.

I also relaxed the yarn side so that if yarn isn't configured for resource scheduling we just warn and go on. This helps users that have yarn 3.1 but haven't configured the resource scheduling side on their cluster yet, or aren't running in isolated environment.

The user would configured this like:

--conf spark.resources.discovery.plugin="org.apache.spark.resource.ResourceDiscoveryFPGAPlugin, org.apache.spark.resource.ResourceDiscoveryGPUPlugin"

Note the executor side had to be wrapped with a classloader to make sure we include the user classpath for jars they specified on submission.

Note this is more flexible because the discovery script has limitations such as spawning it in a separate process. This means if you are trying to allocate resources in that process they might be released when the script returns. Other things are the class makes it more flexible to be able to integrate with existing systems and solutions for assigning resources.

### Why are the changes needed?

to more easily use spark resource scheduling with older versions of hadoop or in non-isolated enivronments.

### Does this PR introduce any user-facing change?

Yes a plugin api

### How was this patch tested?

Unit tests added and manual testing done on yarn and standalone modes.

Closes#27410 from tgravescs/hadoop27spark3.

Lead-authored-by: Thomas Graves <tgraves@nvidia.com>

Co-authored-by: Thomas Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

- Replace `new Integer(0)` by a serializable instance in RDD.scala

- Use `.valueOf()` instead of constructors of `java.lang.Integer` and `java.lang.Double` because constructors has been deprecated, see https://docs.oracle.com/javase/9/docs/api/java/lang/Integer.html

### Why are the changes needed?

This fixes the following warnings:

1. RDD.scala:240: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

2. MutableProjectionSuite.scala:63: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

3. UDFSuite.scala:446: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

4. UDFSuite.scala:451: constructor Double in class Double is deprecated: see corresponding Javadoc for more information.

5. HiveUserDefinedTypeSuite.scala:71: constructor Double in class Double is deprecated: see corresponding Javadoc for more information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- By RDDSuite, MutableProjectionSuite, UDFSuite and HiveUserDefinedTypeSuite

Closes#27399 from MaxGekk/eliminate-warning-part4.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Add a section to the Configuration page to document configurations for executor metrics.

At the same time, rename spark.eventLog.logStageExecutorProcessTreeMetrics.enabled to spark.executor.processTreeMetrics.enabled and make it independent of spark.eventLog.logStageExecutorMetrics.enabled.

### Why are the changes needed?

Executor metrics are new in Spark 3.0. They lack documentation.

Memory metrics as a whole are always collected, but the ones obtained from the process tree have to be optionally enabled. Making this depend on a single configuration makes for more intuitive behavior. Given this, the configuration property is renamed to better reflect its meaning.

### Does this PR introduce any user-facing change?

Yes, only in that the configurations are all new to 3.0.

### How was this patch tested?

Not necessary.

Closes#27329 from wypoon/SPARK-27324.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

### What changes were proposed in this pull request?

This PR reflects review comments on post-hoc review among PRs for SPARK-29779 (#27085), SPARK-30479 (#27164). The list of review comments this PR addresses are below:

* https://github.com/apache/spark/pull/27085#discussion_r373304218

* https://github.com/apache/spark/pull/27164#discussion_r373300793

* https://github.com/apache/spark/pull/27164#discussion_r373301193

* https://github.com/apache/spark/pull/27164#discussion_r373301351

I also applied review comments to the CORE module (BasicEventFilterBuilder.scala) as well, as the review comments for SQL/core module (SQLEventFilterBuilder.scala) can be applied there as well.

### Why are the changes needed?

There're post-hoc reviews on PRs for such issues, like links in above section.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes#27414 from HeartSaVioR/SPARK-28869-SPARK-29779-SPARK-30479-FOLLOWUP-posthoc-reviews.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Followup from https://github.com/apache/spark/pull/27367 to fix a couple of my minor issues with the Test. Fix an indentation and then use UTF-8 instead of ASCII.

### Why are the changes needed?

followup

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

compiled and ran unit test

Closes#27420 from tgravescs/SPARK-30638-followup.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, when speculative tasks fail/get killed, they are still considered as pending and count towards the calculation of number of needed executors. To be more accurate: `stageAttemptToNumSpeculativeTasks(stageAttempt)` is incremented on onSpeculativeTaskSubmitted, but never decremented. `stageAttemptToNumSpeculativeTasks -= stageAttempt` is performed on stage completion. **This means Spark is marking ended speculative tasks as pending, which leads to Spark to hold more executors that it actually needs!**

This PR fixes this issue by updating `stageAttemptToSpeculativeTaskIndices` and `stageAttemptToNumSpeculativeTasks` on speculative tasks completion. This PR also addresses some other minor issues: scheduler behavior after receiving an intentionally killed task event; try to address [SPARK-28403](https://issues.apache.org/jira/browse/SPARK-28403).

### Why are the changes needed?

This has caused resource wastage in our production with speculation enabled. With aggressive speculation, we found data skewed jobs can hold hundreds of idle executors with less than 10 tasks running.

An easy repro of the issue (`--conf spark.speculation=true --conf spark.executor.cores=4 --conf spark.dynamicAllocation.maxExecutors=1000` in cluster mode):

```

val n = 4000

val someRDD = sc.parallelize(1 to n, n)

someRDD.mapPartitionsWithIndex( (index: Int, it: Iterator[Int]) => {

if (index < 300 && index >= 150) {

Thread.sleep(index * 1000) // Fake running tasks

} else if (index == 300) {

Thread.sleep(1000 * 1000) // Fake long running tasks

}

it.toList.map(x => index + ", " + x).iterator

}).collect

```

You will see when running the last task, we would be hold 38 executors (see below), which is exactly (152 + 3) / 4 = 38.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a comprehensive unit test.

Test with the above repro shows that we are holding 2 executors at the end

Closes#27223 from linzebing/speculation_fix.

Authored-by: zebingl@fb.com <zebingl@fb.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Add the allocated resources to parameters to the PluginContext so that any plugins in driver or executor could use this information to initialize devices or use this information in a useful manner.

### Why are the changes needed?

To allow users to initialize/track devices once at the executor level before each task runs to use them.

### Does this PR introduce any user-facing change?

Yes to the people using the Executor/Driver plugin interface.

### How was this patch tested?

Unit tests and manually by writing a plugin that initialized GPU's using this interface.

Closes#27367 from tgravescs/pluginWithResources.

Lead-authored-by: Thomas Graves <tgraves@nvidia.com>

Co-authored-by: Thomas Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR fixes a couple of minor issues on SPARK-30481:

* SHS runs "compaction" regardless of the result of "merge application listing".

If "merge application listing" fails, most likely the application log will have some issue and "compaction" won't work properly then. We can just skip trying compaction when "merge application listing" fails.

* When "compaction" throws exception we don't handle it.

It's expected to swallow exception, but we don't even log the exception for now. It should be logged properly.

### Why are the changes needed?

Described in above section.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes#27408 from HeartSaVioR/SPARK-30481-FOLLOWUP-MINOR-FIXES.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

…

### What changes were proposed in this pull request?

If the resource discovery goes bad, like it doesn't return enough GPUs, currently it just throws an exception. This is hard for users to see because you have to go find the executor logs and its not reported back to the driver. On yarn if you exit explicitly then the driver logs show the error thrown and its much more useful. On yarn with the explicit exit with non-zero it also goes against failed executor launch attempts and the application will eventually exit. so if its fundamentally a bad configuration or bad discovery script it won't just hang forever. I also tested on k8s and standalone and the behaviors there don't change, the executor cleanly exit with an error message in the logs. The standalone ui makes it easy to see failed executors.

### Why are the changes needed?

better user experience.

### Does this PR introduce any user-facing change?

no api changes

### How was this patch tested?

ran unit tests and manually tested on yarn, standalone, and k8s.

Closes#27385 from tgravescs/SPARK-30529.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

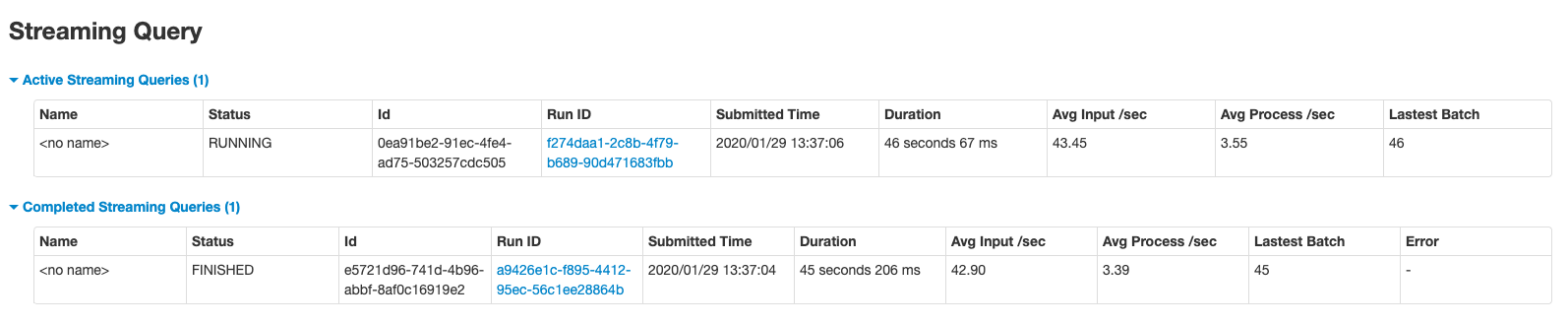

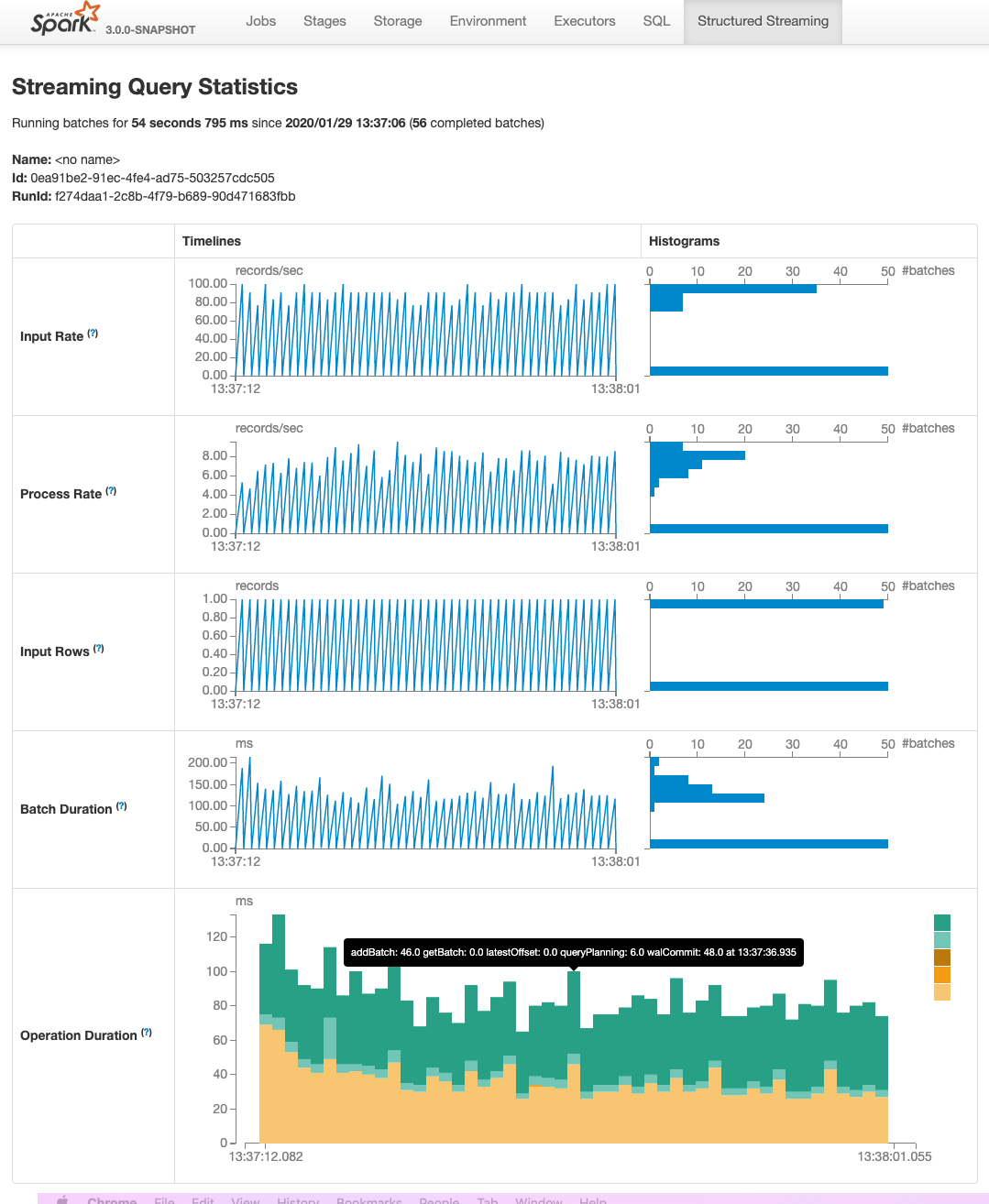

This PR adds two pages to Web UI for Structured Streaming:

- "/streamingquery": Streaming Query Page, providing some aggregate information for running/completed streaming queries.

- "/streamingquery/statistics": Streaming Query Statistics Page, providing detailed information for streaming query, including `Input Rate`, `Process Rate`, `Input Rows`, `Batch Duration` and `Operation Duration`

### Why are the changes needed?

It helps users to better monitor Structured Streaming query.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- new added and existing UTs

- manual test

Closes#26201 from uncleGen/SPARK-29543.

Lead-authored-by: uncleGen <hustyugm@gmail.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Genmao Yu <hustyugm@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

There are scenarios where Spark History Server is located behind the VPC. So whenever api calls hit to get the executor Summary(allexecutors). There can be delay in getting the response of executor summary and in mean time "stage-page-template.html" is loaded and the response of executor Summary is not added to the stage-page-template.html.

As the result of which Aggregated Metrics by Executor in stage page is showing blank.

This scenario can be easily found in the cases when there is some proxy-server which is responsible for sending the request and response to spark History server.

This can be reproduced in Knox/In-house proxy servers which are used to send and receive response to Spark History Server.

Alternative scenario to test this case, Open the spark UI in developer mode in browser add some breakpoint in stagepage.js, this will add some delay in getting the response and now if we check the spark UI for stage Aggregated Metrics by Executor in stage page is showing blank.

So In-order to fix this there is a need to add the change in stagepage.js . There is a need to add the api call to get the html page(stage-page-template.html) first and after that other api calls to get the data that needs to attached in the stagepage (like executor Summary, stageExecutorSummaryInfoKeys exc)

### Why are the changes needed?

Since stage page is useful for debugging purpose, This helps in understanding how many task ran on the particular executor and information related to shuffle read and write on that executor.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested. Testing this in a reproducible way requires a running browser or HTML rendering engine that executes the JavaScript.Open the spark UI in developer mode in browser add some breakpoint in stagepage.js, this will add some delay in getting the response and now if we check the spark UI for stage Aggregated Metrics by Executor in stage page is showing blank.

Before fix

<img width="1529" alt="Screenshot 2020-01-20 at 3 21 55 PM" src="https://user-images.githubusercontent.com/34540906/72716739-bcfd3500-3b98-11ea-8dbe-90a135822f92.png">

After fix

<img width="1540" alt="Screenshot 2020-01-20 at 3 23 12 PM" src="https://user-images.githubusercontent.com/34540906/72716782-d30af580-3b98-11ea-8764-2bde77764604.png">

Closes#27292 from SaurabhChawla100/SPARK-30582.

Authored-by: Saurabh Chawla <saurabhc@qubole.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch addresses remaining functionality on event log compaction: integrate compaction into FsHistoryProvider.

This patch is next task of SPARK-30479 (#27164), please refer the description of PR #27085 to see overall rationalization of this patch.

### Why are the changes needed?

One of major goal of SPARK-28594 is to prevent the event logs to become too huge, and SPARK-29779 achieves the goal. We've got another approach in prior, but the old approach required models in both KVStore and live entities to guarantee compatibility, while they're not designed to do so.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UT.

Closes#27208 from HeartSaVioR/SPARK-30481.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

1, stack input vectors to blocks (like ALS/MLP);

2, add new param `blockSize`;

3, add a new class `InstanceBlock`

4, standardize the input outside of optimization procedure;

### Why are the changes needed?

1, reduce RAM to persist traing dataset; (save ~40% in test)

2, use Level-2 BLAS routines; (12% ~ 28% faster, without native BLAS)

### Does this PR introduce any user-facing change?

a new param `blockSize`

### How was this patch tested?

existing and updated testsuites

Closes#27360 from zhengruifeng/blockify_svc.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Add HostLocalBlock size in log total bytes

### Why are the changes needed?

total size in log is wrong as hostlocal block size is missed

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Manually checking the log

Closes#27320 from Udbhav30/bug.

Authored-by: Udbhav30 <u.agrawal30@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Increased the limit for log events that could be stored in `SparkFunSuite.LogAppender` from 100 to 1000.

### Why are the changes needed?

Sometimes (see traces in SPARK-30599) additional info is logged via log4j, and appended to `LogAppender`. For example, unusual log entries are:

```

[36] Removed broadcast_214_piece0 on 192.168.1.66:52354 in memory (size: 5.7 KiB, free: 2003.8 MiB)

[37] Removed broadcast_204_piece0 on 192.168.1.66:52354 in memory (size: 5.7 KiB, free: 2003.9 MiB)

[38] Removed broadcast_200_piece0 on 192.168.1.66:52354 in memory (size: 3.7 KiB, free: 2003.9 MiB)

[39] Removed broadcast_207_piece0 on 192.168.1.66:52354 in memory (size: 24.2 KiB, free: 2003.9 MiB)

[40] Removed broadcast_208_piece0 on 192.168.1.66:52354 in memory (size: 24.2 KiB, free: 2003.9 MiB)

```

and a test which uses `LogAppender` can fail with the exception:

```

java.lang.IllegalStateException: Number of events reached the limit of 100 while logging CSV header matches to schema w/ enforceSchema.

```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By re-running `"SPARK-23786: warning should be printed if CSV header doesn't conform to schema"` in a loop.

Closes#27312 from MaxGekk/log-appender-filter.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/26930 to fix a bug.

When we create shuffle fetch requests, we first collect blocks until they reach the max size. Then we try to merge the blocks (the batch shuffle fetch feature) and split the merged blocks to several groups, to make sure each group doesn't reach the max numBlocks. For the last group, if it's smaller than the max numBlocks, put it back to the input list and deal with it again later.

The last step has a problem:

1. if we put a merged block back to the input list and merge it again, it fails.

2. when putting back some blocks, we should update `numBlocksToFetch`

This PR fixes these 2 problems.

### Why are the changes needed?

bug fix

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

new test

Closes#27280 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to output additional msg from the tests where a log appender is added. The message is printed as a part of `IllegalStateException` in the case of reaching the limit of maximum number of logged events.

### Why are the changes needed?

If a log appender is not removed from the log4j appenders list. the caller message could help to investigate the problem and find the test which doesn't remove the log appender.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running the modified test suites `AvroSuite`, `CSVSuite`, `ResolveHintsSuite` and etc.

Closes#27296 from MaxGekk/assign-name-to-log-appender.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Renamed an identifier `iterator` to `iter` to avoid compile error with Scala 2.13.

### Why are the changes needed?

As of Scala 2.13, scala.collection.Iterator has "iterator" method so if an inner class of Iterator means to refer an outer identifier named "iterator", it does not work as we think.

I listed source files that can be affected by that change by `find . -name "*.scala" -exec grep -El "new .*Iterator\[.* +{" {} \;`

As far as I confirmed util.Utils` is affected.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes#27275 from sarutak/fix-iterator-for-2.13.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

1) Added missing match case to SparkUncaughtExceptionHandler, so that it would not halt the process when the exception doesn't match any of the match case statements.

2) Added log message before halting process. During debugging it wasn't obvious why the Worker process would DEAD (until we set SPARK_NO_DAEMONIZE=1) due to the shell-scripts puts the process into background and essentially absorbs the exit code.

3) Added SparkUncaughtExceptionHandlerSuite. Basically we create a Spark exception-throwing application with SparkUncaughtExceptionHandler and then check its exit code.

### Why are the changes needed?

SPARK-30310, because the process would halt unexpectedly.

### How was this patch tested?

All unit tests (mvn test) were ran and OK.

Closes#26955 from tinhto-000/uncaught_exception_fix.

Authored-by: git <tinto@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This is the second PR for the Stage Level Scheduling. This is adding in the necessary executor side changes:

1) executors to know what ResourceProfile they should be using

2) handle parsing the resource profile settings - these are not in the global configs

3) then reporting back to the driver what resource profile it was started with.

This PR adds all the piping for YARN to pass the information all the way to executors, but it just uses the default ResourceProfile (which is the global applicatino level configs).

At a high level these changes include:

1) adding a new --resourceProfileId option to the CoarseGrainedExecutorBackend

2) Add the ResourceProfile settings to new internal confs that gets passed into the Executor

3) Executor changes that use the resource profile id passed in to read the corresponding ResourceProfile confs and then parse those requests and discover resources as necessary

4) Executor registers to Driver with the Resource profile id so that the ExecutorMonitor can track how many executor with each profile are running

5) YARN side changes to show that passing the resource profile id and confs actually works. Just uses the DefaultResourceProfile for now.

I also removed a check from the CoarseGrainedExecutorBackend that used to check to make sure there were task requirements before parsing any custom resource executor requests. With the resource profiles this becomes much more expensive because we would then have to pass the task requests to each executor and the check was just a short cut and not really needed. It was much cleaner just to remove it.

Note there were some changes to the ResourceProfile, ExecutorResourceRequests, and TaskResourceRequests in this PR as well because I discovered some issues with things not being immutable. That api now look like:

val rpBuilder = new ResourceProfileBuilder()

val ereq = new ExecutorResourceRequests()

val treq = new TaskResourceRequests()

ereq.cores(2).memory("6g").memoryOverhead("2g").pysparkMemory("2g").resource("gpu", 2, "/home/tgraves/getGpus")

treq.cpus(2).resource("gpu", 2)

val resourceProfile = rpBuilder.require(ereq).require(treq).build

This makes is so that ResourceProfile is immutable and Spark can use it directly without worrying about the user changing it.

### Why are the changes needed?

These changes are needed for the executor to report which ResourceProfile they are using so that ultimately the dynamic allocation manager can use that information to know how many with a profile are running and how many more it needs to request. Its also needed to get the resource profile confs to the executor so that it can run the appropriate discovery script if needed.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit tests and manually on YARN.

Closes#26682 from tgravescs/SPARK-29306.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>