## What changes were proposed in this pull request?

Currently we only clean up the local directories when an application is removed. However, when executors die and restart repeatedly, many temp files are left untouched in the local directories. This is undesired behavior and can gradually use up disk space.

We can detect executor death in the Worker and clean up the non-shuffle files (files not ending with ".index" or ".data") in the local directories. We should not touch the shuffle files, since they are expected to be used by the external shuffle service.
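The filtering rule above can be sketched as follows. This is a minimal illustration in Python, not the Worker's actual Scala code; the function names `cleanup_non_shuffle_files` and `demo` and the sample file names are hypothetical.

```python
import os
import tempfile

# Suffixes of shuffle files that the external shuffle service may still need.
SHUFFLE_SUFFIXES = (".index", ".data")


def cleanup_non_shuffle_files(local_dir):
    """Delete every regular file under local_dir except shuffle files.

    Returns the sorted list of deleted file names.
    """
    removed = []
    for root, _dirs, files in os.walk(local_dir):
        for name in files:
            if name.endswith(SHUFFLE_SUFFIXES):
                continue  # keep shuffle output for the external shuffle service
            os.remove(os.path.join(root, name))
            removed.append(name)
    return sorted(removed)


def demo():
    """Create a scratch directory, run the cleanup, and report what is left."""
    with tempfile.TemporaryDirectory() as d:
        # Hypothetical file names, chosen only for illustration.
        for name in ("shuffle_0_0_0.data", "shuffle_0_0_0.index",
                     "temp_local_1", "broadcast_2"):
            open(os.path.join(d, name), "w").close()
        removed = cleanup_non_shuffle_files(d)
        return removed, sorted(os.listdir(d))
```

Calling `demo()` returns the deleted names and the surviving names, so the suffix filter can be checked without touching a real executor directory.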

The scope of this PR is limited to implementing the cleanup logic on a Standalone cluster. We defer to experts familiar with other cluster managers (YARN/Mesos/K8s) to determine whether it is worth adding similar support.

## How was this patch tested?

Added a new test suite to cover the cleanup logic.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #21390 from jiangxb1987/cleanupNonshuffleFiles.

## What changes were proposed in this pull request?

* Adds support for the `local://` scheme, as in the K8s case, for image-based deployments where the jar is already in the image. This affects cluster mode and the Mesos dispatcher, and also covers the `file://` scheme. The default case, where jar resolution happens on the host, is kept.

## How was this patch tested?

Dispatcher image with the patch, used to start the DC/OS Spark service:

skonto/spark-local-disp:test

Test image with my application jar located at the root folder:

skonto/spark-local:test

Dockerfile for that image:

FROM mesosphere/spark:2.3.0-2.2.1-2-hadoop-2.6

COPY spark-examples_2.11-2.2.1.jar /

WORKDIR /opt/spark/dist

Tests:

The following work as expected:

* local normal example

```
dcos spark run --submit-args="--conf spark.mesos.appJar.local.resolution.mode=container --conf spark.executor.memory=1g --conf spark.mesos.executor.docker.image=skonto/spark-local:test
--conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi local:///spark-examples_2.11-2.2.1.jar"
```

* make sure the flag does not affect other URIs

```
dcos spark run --submit-args="--conf spark.mesos.appJar.local.resolution.mode=container --conf spark.executor.memory=1g --conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi https://s3-eu-west-1.amazonaws.com/fdp-stavros-test/spark-examples_2.11-2.1.1.jar"
```

* normal example no local

```
dcos spark run --submit-args="--conf spark.executor.memory=1g --conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi https://s3-eu-west-1.amazonaws.com/fdp-stavros-test/spark-examples_2.11-2.1.1.jar"
```

The following fails:

* uses `local://` without setting the resolution mode; the default is `host`.

```
dcos spark run --submit-args="--conf spark.executor.memory=1g --conf spark.mesos.executor.docker.image=skonto/spark-local:test
--conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi local:///spark-examples_2.11-2.2.1.jar"
```

Author: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Closes #21378 from skonto/local-upstream.

## What changes were proposed in this pull request?

Added sections to pandas_udf docs, in the grouped map section, to indicate columns are assigned by position.

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21471 from BryanCutler/arrow-doc-pandas_udf-column_by_pos-SPARK-21427.

## What changes were proposed in this pull request?

The pandas_udf functionality was introduced in 2.3.0, but is not completely stable and still evolving. This adds a label to indicate it is still an experimental API.

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21435 from BryanCutler/arrow-pandas_udf-experimental-SPARK-24392.

## What changes were proposed in this pull request?

Spark provides four codecs: `lz4`, `lzf`, `snappy`, and `zstd`. This PR adds the missing short compression codec names (`shortCompressionCodecNames`) to the configuration.

## How was this patch tested?

manually tested

Author: Yuming Wang <yumwang@ebay.com>

Closes #21431 from wangyum/SPARK-19112.

## What changes were proposed in this pull request?

The uniVocity parser allows specifying only the required column names or indexes for [parsing](https://www.univocity.com/pages/parsers-tutorial), like:

```

// Here we select only the columns by their indexes.

// The parser just skips the values in other columns

parserSettings.selectIndexes(4, 0, 1);

CsvParser parser = new CsvParser(parserSettings);

```

In this PR, I propose to extract indexes from required schema and pass them into the CSV parser. Benchmarks show the following improvements in parsing of 1000 columns:

```

Select 100 columns out of 1000: x1.76

Select 1 column out of 1000: x2

```

**Note**: Compared to the current implementation, the changes can return different results for malformed rows in the `DROPMALFORMED` and `FAILFAST` modes if only a subset of all columns is requested. To restore the previous behavior, set `spark.sql.csv.parser.columnPruning.enabled` to `false`.
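The index extraction described above can be sketched as follows. This is a simplified Python illustration, assuming the schemas are plain lists of column names; `required_indexes` and `parse_selected` are hypothetical helpers, and Python's `csv` module stands in for the uniVocity parser (unlike uniVocity, it still tokenizes every column before the selection is applied).

```python
import csv
import io


def required_indexes(data_schema, required_schema):
    """Map each required column name to its position in the full schema."""
    positions = {name: i for i, name in enumerate(data_schema)}
    return [positions[name] for name in required_schema]


def parse_selected(text, indexes):
    """Parse CSV text, keeping only the values at the selected indexes."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        rows.append([record[i] for i in indexes])
    return rows
```

For a five-column schema `a..e` and a required schema `e, a, b`, the computed indexes are `4, 0, 1`, matching the `selectIndexes(4, 0, 1)` call in the snippet above.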

## How was this patch tested?

It was tested by a new test that selects 3 columns out of 15, by existing tests, and by new benchmarks.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Author: Maxim Gekk <max.gekk@gmail.com>

Closes #21415 from MaxGekk/csv-column-pruning2.

## What changes were proposed in this pull request?

The uniVocity parser allows specifying only the required column names or indexes for [parsing](https://www.univocity.com/pages/parsers-tutorial), like:

```

// Here we select only the columns by their indexes.

// The parser just skips the values in other columns

parserSettings.selectIndexes(4, 0, 1);

CsvParser parser = new CsvParser(parserSettings);

```

In this PR, I propose to extract indexes from required schema and pass them into the CSV parser. Benchmarks show the following improvements in parsing of 1000 columns:

```

Select 100 columns out of 1000: x1.76

Select 1 column out of 1000: x2

```

**Note**: Compared to the current implementation, the changes can return different results for malformed rows in the `DROPMALFORMED` and `FAILFAST` modes if only a subset of all columns is requested. To restore the previous behavior, set `spark.sql.csv.parser.columnPruning.enabled` to `false`.

## How was this patch tested?

It was tested by a new test that selects 3 columns out of 15, by existing tests, and by new benchmarks.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #21296 from MaxGekk/csv-column-pruning.

## What changes were proposed in this pull request?

This change makes Spark use the correct environment variable set by Mesos in the container on startup.

Author: Jake Charland <jakec@uber.com>

Closes #18894 from jakecharland/MesosSandbox.

## What changes were proposed in this pull request?

This PR adds a `queryTimeout` option for the number of seconds the driver will wait for a `Statement` object to execute.

## How was this patch tested?

Added tests in `JDBCSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #21173 from maropu/SPARK-23856.

## What changes were proposed in this pull request?

Hadoop 3 introduces HDFS federation. This means that multiple namespaces are allowed on the same HDFS cluster. In Spark, we need to request delegation tokens for all the namenodes (one for each namespace); otherwise, accessing any namespace other than the default one (for which we already fetch the delegation token) fails.

The PR adds the automatic discovery of all the namenodes related to all the namespaces available according to the configs in hdfs-site.xml.
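A rough sketch of such a discovery step is shown below. It assumes the namespaces are listed under the Hadoop property `dfs.nameservices` and that each one can be addressed by its logical `hdfs://<nameservice>` URI; the helper names are hypothetical, and the resolution performed by the patch itself may differ.

```python
import xml.etree.ElementTree as ET


def read_hadoop_conf(xml_text):
    """Parse a Hadoop *-site.xml document into a name -> value dict."""
    root = ET.fromstring(xml_text)
    conf = {}
    for prop in root.findall("property"):
        conf[prop.findtext("name")] = prop.findtext("value")
    return conf


def namespace_uris(conf):
    """Return one hdfs:// URI per nameservice listed in dfs.nameservices."""
    raw = conf.get("dfs.nameservices", "")
    services = [s.strip() for s in raw.split(",") if s.strip()]
    return ["hdfs://" + s for s in services]
```

Each returned URI would then be a filesystem for which a delegation token is requested.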

## How was this patch tested?

manual tests in dockerized env

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21216 from mgaido91/SPARK-24149.

Instead of always throwing a generic exception when the AM fails, print a generic error and throw the exception with the YARN diagnostics containing the reason for the failure.

There was an issue with YARN sometimes providing a generic diagnostic message, even though the AM provides a failure reason when unregistering. That was happening because the AM was registering too late, and if errors happened before the registration, YARN would just create a generic "ExitCodeException" which wasn't very helpful.

Since most errors in this path are a result of not being able to connect to the driver, this change modifies the AM registration a bit so that the AM is registered before the connection to the driver is established. That way, errors are properly propagated through YARN back to the driver.

As part of that, I also removed the code that retried connections to the driver from the client AM. At that point, the driver should already be up and waiting for connections, so it's unlikely that retrying would help - and in case it does, that means a flaky network, which would mean problems would probably show up again. The effect of that is that connection-related errors are reported back to the driver much faster now (through the YARN report).

One thing to note is that there seems to be a race on the YARN side that causes a report to be sent to the client without the corresponding diagnostics string from the AM; the diagnostics are available later from the RM web page. For that reason, the generic error messages are kept in the Spark scheduler code, to help guide users to a way of debugging their failure.

Also of note is that if YARN's max attempts configuration is lower than Spark's, Spark will not unregister the AM with a proper diagnostics message. Unfortunately there seems to be no way to unregister the AM and still allow further re-attempts to happen.

Testing:

- existing unit tests

- some of our integration tests

- hardcoded an invalid driver address in the code and verified the error in the shell, e.g.

```

scala> 18/05/04 15:09:34 ERROR cluster.YarnClientSchedulerBackend: YARN application has exited unexpectedly with state FAILED! Check the YARN application logs for more details.

18/05/04 15:09:34 ERROR cluster.YarnClientSchedulerBackend: Diagnostics message: Uncaught exception: org.apache.spark.SparkException: Exception thrown in awaitResult:

<AM stack trace>

Caused by: java.io.IOException: Failed to connect to localhost/127.0.0.1:1234

<More stack trace>

```

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #21243 from vanzin/SPARK-24182.

## What changes were proposed in this pull request?

We reverted `spark.sql.hive.convertMetastoreOrc` at https://github.com/apache/spark/pull/20536 because we should not ignore the table-specific compression conf. Now, it's resolved via [SPARK-23355](8aa1d7b0ed).

## How was this patch tested?

Pass the Jenkins.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #21186 from dongjoon-hyun/SPARK-24112.

## What changes were proposed in this pull request?

This copies the material from the spark.mllib user guide page for Naive Bayes to the spark.ml user guide page. I also improved the wording and organization slightly.

## How was this patch tested?

Built docs locally.

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #21272 from jkbradley/nb-doc-update.

## What changes were proposed in this pull request?

This updates Parquet to 1.10.0 and updates the vectorized path for buffer management changes. Parquet 1.10.0 uses ByteBufferInputStream instead of byte arrays in encoders. This allows Parquet to break allocations into smaller chunks that are better for garbage collection.

## How was this patch tested?

Existing Parquet tests. Running in production at Netflix for about 3 months.

Author: Ryan Blue <blue@apache.org>

Closes #21070 from rdblue/SPARK-23972-update-parquet-to-1.10.0.

## What changes were proposed in this pull request?

In Apache Spark 2.4, [SPARK-23355](https://issues.apache.org/jira/browse/SPARK-23355) fixes a bug which ignores table properties during convertMetastore for tables created by STORED AS ORC/PARQUET.

For some Parquet tables having table properties like `TBLPROPERTIES (parquet.compression 'NONE')`, the property was ignored by default before Apache Spark 2.4. After upgrading the cluster, Spark will write uncompressed files, which differs from the behavior of Apache Spark 2.3 and older.

This PR adds a migration note for that.

## How was this patch tested?

N/A

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #21269 from dongjoon-hyun/SPARK-23355-DOC.

## What changes were proposed in this pull request?

This PR fixes the migration note for SPARK-23291, since the fix is going to be backported to 2.3.1. See the discussion in https://issues.apache.org/jira/browse/SPARK-23291

## How was this patch tested?

N/A

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21249 from HyukjinKwon/SPARK-23291.

## What changes were proposed in this pull request?

`from_utc_timestamp` assumes its input is in the UTC timezone and shifts it to the specified timezone. When the timestamp string contains a timezone (e.g. `2018-03-13T06:18:23+00:00`), Spark breaks this semantic and respects the timezone in the string. This is not what users expect, and the result differs from Hive/Impala. `to_utc_timestamp` has the same problem.

For more details, please refer to the JIRA ticket.

This PR fixes this by returning null if the input timestamp contains timezone.
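The null-on-timezone rule can be illustrated with a small Python stand-in. This is only a sketch under simplifying assumptions: `from_utc_timestamp_sketch` is a hypothetical name, the target timezone is reduced to a fixed hour offset, and the regular expression is one plausible way to spot a trailing zone designator rather than Spark's own parsing logic.

```python
import re
from datetime import datetime, timedelta

# Matches a trailing zone designator such as "Z", "+00:00" or "-0800".
_ZONE_SUFFIX = re.compile(r"(Z|[+-]\d{2}:?\d{2})$")


def from_utc_timestamp_sketch(ts_string, offset_hours):
    """Shift a zone-less UTC timestamp string by a fixed offset.

    Returns None when the input string already carries a timezone.
    """
    text = ts_string.strip()
    if _ZONE_SUFFIX.search(text):
        return None
    return datetime.fromisoformat(text) + timedelta(hours=offset_hours)
```

With this rule, `2018-03-13T06:18:23+00:00` yields `None`, while `2018-03-13T06:18:23` is shifted by the requested offset.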

## How was this patch tested?

new tests

Author: Wenchen Fan <wenchen@databricks.com>

Closes #21169 from cloud-fan/from_utc_timezone.

## What changes were proposed in this pull request?

Added support for specifying the `spark.executor.extraJavaOptions` value in terms of `{{APP_ID}}` and/or `{{EXECUTOR_ID}}`. When the executor starts, `{{APP_ID}}` is replaced by the application ID and `{{EXECUTOR_ID}}` by the executor ID.
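The substitution amounts to a plain string replacement, sketched here in Python. The function name `substitute_placeholders` and the sample option string are illustrative assumptions, not code from the patch.

```python
def substitute_placeholders(java_opts, app_id, executor_id):
    """Replace {{APP_ID}} and {{EXECUTOR_ID}} in an extraJavaOptions string."""
    return (java_opts
            .replace("{{APP_ID}}", app_id)
            .replace("{{EXECUTOR_ID}}", executor_id))
```

This is useful for per-executor GC log paths, for example `-Xloggc:/tmp/{{APP_ID}}-{{EXECUTOR_ID}}.gc`, where every executor needs a distinct file name.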

## How was this patch tested?

I verified this by checking the executor process command and GC logs, in different deployment modes (Standalone, YARN, Mesos) and in both client and cluster modes.

Author: Devaraj K <devaraj@apache.org>

Closes #21088 from devaraj-kavali/SPARK-24003.

## What changes were proposed in this pull request?

By default, dynamic allocation will request enough executors to maximize the parallelism according to the number of tasks to process. While this minimizes the latency of the job, with small tasks this setting can waste a lot of resources due to executor allocation overhead, as some executors might not even do any work.

This setting allows setting a ratio that will be used to reduce the number of target executors w.r.t. full parallelism.

The number of executors computed with this setting is still fenced by `spark.dynamicAllocation.maxExecutors` and `spark.dynamicAllocation.minExecutors`.
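The effect of the ratio can be sketched numerically. The formula below is an assumption about how the target is derived (full parallelism scaled by the ratio, rounded up, then clamped to the configured bounds), and `target_executors` and its parameter names are hypothetical.

```python
import math


def target_executors(num_tasks, tasks_per_executor, ratio,
                     min_executors, max_executors):
    """Executors to request for num_tasks pending or running tasks."""
    # Full parallelism would be ceil(num_tasks / tasks_per_executor);
    # the ratio scales that target down before the bounds are applied.
    wanted = math.ceil(num_tasks * ratio / tasks_per_executor)
    return max(min_executors, min(max_executors, wanted))
```

For example, 1000 tasks with 4 task slots per executor would target 250 executors at a ratio of 1.0 and 125 at a ratio of 0.5, before the min/max bounds are applied.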

## How was this patch tested?

Unit tests and runs on various actual workloads on a YARN cluster.

Author: Julien Cuquemelle <j.cuquemelle@criteo.com>

Closes #19881 from jcuquemelle/AddTaskPerExecutorSlot.

## What changes were proposed in this pull request?

Currently we find the wider common type by comparing the types pairwise from left to right. This can be a problem when two data types have no common type but each can be promoted to `StringType`.

For instance, if you have a table with the schema:

[c1: date, c2: string, c3: int]

The following succeeds:

SELECT coalesce(c1, c2, c3) FROM table

While the following produces an exception:

SELECT coalesce(c1, c3, c2) FROM table

This is only an issue when the sequence of data types contains `StringType` and all the types can be promoted to string.
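The order dependence can be reproduced with a toy model in Python. The type names are plain strings and the promotion table is a deliberately tiny assumption covering only the `date`, `int`, and `string` types from the example; it is not Spark's `TypeCoercion` implementation.

```python
# Pairs that have a direct wider type in this toy model (order-insensitive).
_WIDER = {
    frozenset(["date", "string"]): "string",
    frozenset(["int", "string"]): "string",
}

# Types that can be promoted to string in this toy model.
_STRING_PROMOTABLE = {"date", "int", "string"}


def wider_pair(a, b):
    """Wider type of two types, or None when they have no common type."""
    return a if a == b else _WIDER.get(frozenset([a, b]))


def wider_left_to_right(types):
    """Old behaviour: fold pairwise from the left, so order matters."""
    result = types[0]
    for t in types[1:]:
        if result is None:
            return None
        result = wider_pair(result, t)
    return result


def wider_with_string_promotion(types):
    """Order-insensitive variant: try string promotion for the whole list first."""
    if "string" in types and all(t in _STRING_PROMOTABLE for t in types):
        return "string"
    return wider_left_to_right(types)
```

In this model `[date, string, int]` folds to `string`, while `[date, int, string]` fails at the first pair unless the whole list is checked for string promotion up front.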

close #19033

## How was this patch tested?

Add test in `TypeCoercionSuite`

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #21074 from jiangxb1987/typeCoercion.

## What changes were proposed in this pull request?

This PR proposes to fix `roxygen2` to `5.0.1` in `docs/README.md` for SparkR documentation generation.

If I use a higher version and generate the docs, it shows the diff below. Not a big deal, but it bothered me.

```diff
diff --git a/R/pkg/DESCRIPTION b/R/pkg/DESCRIPTION
index 855eb5bf77f..159fca61e06 100644
--- a/R/pkg/DESCRIPTION
+++ b/R/pkg/DESCRIPTION
@@ -57,6 +57,6 @@ Collate:
     'types.R'
     'utils.R'
     'window.R'
-RoxygenNote: 5.0.1
+RoxygenNote: 6.0.1
 VignetteBuilder: knitr
 NeedsCompilation: no
```

## How was this patch tested?

Manually tested. I hit this every time I set up a new environment for Spark development, but I kept forgetting to fix it.

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21020 from HyukjinKwon/minor-r-doc.

## What changes were proposed in this pull request?

Easy fix in the documentation.

## How was this patch tested?

N/A

Closes #20948

Author: Daniel Sakuma <dsakuma@gmail.com>

Closes #20928 from dsakuma/fix_typo_configuration_docs.

## What changes were proposed in this pull request?

This PR finishes https://github.com/apache/spark/pull/17272.

This JIRA is follow-up work after SPARK-19583.

As discussed in that PR, the following DDL for a managed table whose default location already exists should throw an exception:

* `CREATE TABLE ... (PARTITIONED BY ...) AS SELECT ...`
* `CREATE TABLE ... (PARTITIONED BY ...)`

Currently, some situations are not consistent with the above logic:

* `CREATE TABLE ... (PARTITIONED BY ...)` succeeds with an existing default location. This affects both Hive and data source tables (with `HiveExternalCatalog`/`InMemoryCatalog`).
* `CREATE TABLE ... (PARTITIONED BY ...) AS SELECT ...` succeeds for Hive tables with an existing default location.

This PR makes the above two situations consistent with the rule that creating a table over an existing default location throws an exception.

## How was this patch tested?

unit test added

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #20886 from gengliangwang/pr-17272.

## What changes were proposed in this pull request?

Easy fix in the markdown.

## How was this patch tested?

Tested manually with a Jekyll build.


Author: lemonjing <932191671@qq.com>

Closes #20897 from Lemonjing/master.

## What changes were proposed in this pull request?

As mentioned in SPARK-23285, this PR introduces a new configuration property `spark.kubernetes.executor.cores` for specifying the physical CPU cores requested for each executor pod. This is to avoid changing the semantics of `spark.executor.cores` and `spark.task.cpus` and their role in task scheduling, task parallelism, dynamic resource allocation, etc. The new configuration property only determines the physical CPU cores available to an executor. An executor can still run multiple tasks simultaneously by using appropriate values for `spark.executor.cores` and `spark.task.cpus`.

## How was this patch tested?

Unit tests.

felixcheung srowen jiangxb1987 jerryshao mccheah foxish

Author: Yinan Li <ynli@google.com>

Author: Yinan Li <liyinan926@gmail.com>

Closes #20553 from liyinan926/master.

This change basically rewrites the security documentation so that it's up to date with new features, more correct, and more complete.

Because security is such an important feature, I chose to move all the relevant configuration documentation to the security page, instead of having them peppered all over the place in the configuration page. This allows an almost one-stop shop for security configuration in Spark. The only exceptions are some YARN-specific minor features which I left in the YARN page.

I also re-organized the page's topics, since they didn't make a lot of sense. You had kerberos features described inside paragraphs talking about UI access control, and other oddities. It should be easier now to find information about specific Spark security features. I also enabled TOCs for both the Security and YARN pages, since that makes it easier to see what is covered.

I removed most of the comments from the SecurityManager javadoc since they just replicated information in the security doc, with different levels of out-of-dateness.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20742 from vanzin/SPARK-23572.

## What changes were proposed in this pull request?

This PR fixes an incorrect comparison in SQL between timestamp and date. The comparison was wrong because both values were cast to `string` and then compared lexicographically. The previous implementation returns `false` for the query `spark.sql("select cast('2017-03-01 00:00:00' as timestamp) between cast('2017-02-28' as date) and cast('2017-03-01' as date)").show`.

This PR shows `true` for this query by casting `date("2017-03-01")` to `timestamp("2017-03-01 00:00:00")`.
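The difference between the two comparison strategies can be reproduced outside Spark. The following Python sketch uses hypothetical helper names and the standard `datetime` types as stand-ins for Spark's timestamp and date values.

```python
from datetime import date, datetime


def between_as_strings(ts, lower, upper):
    """Old behaviour: compare the string forms lexicographically."""
    ts_s = ts.strftime("%Y-%m-%d %H:%M:%S")
    return lower.isoformat() <= ts_s <= upper.isoformat()


def between_as_timestamps(ts, lower, upper):
    """Fixed behaviour: promote each date to midnight of that day, then compare."""
    def to_ts(d):
        return datetime(d.year, d.month, d.day)
    return to_ts(lower) <= ts <= to_ts(upper)
```

The string comparison fails on the upper bound because `"2017-03-01 00:00:00"` is longer than `"2017-03-01"` with the same prefix, so it sorts after it.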


## How was this patch tested?

Added new UTs to `TypeCoercionSuite`.

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #20774 from kiszk/SPARK-23549.

## What changes were proposed in this pull request?

Currently we allow writing data frames with an empty schema into a file-based datasource for certain file formats such as JSON and ORC. For formats such as Parquet and Text, we raise an error at different times of execution. For the text format, the error is returned from the driver early in processing, whereas for formats such as Parquet, the error is raised from the executor.

**Example**

spark.emptyDataFrame.write.format("parquet").mode("overwrite").save(path)

**Results in**

``` SQL

org.apache.parquet.schema.InvalidSchemaException: Cannot write a schema with an empty group: message spark_schema {

}

at org.apache.parquet.schema.TypeUtil$1.visit(TypeUtil.java:27)

at org.apache.parquet.schema.TypeUtil$1.visit(TypeUtil.java:37)

at org.apache.parquet.schema.MessageType.accept(MessageType.java:58)

at org.apache.parquet.schema.TypeUtil.checkValidWriteSchema(TypeUtil.java:23)

at org.apache.parquet.hadoop.ParquetFileWriter.<init>(ParquetFileWriter.java:225)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:342)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:302)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:151)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$SingleDirectoryWriteTask.newOutputWriter(FileFormatWriter.scala:376)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$SingleDirectoryWriteTask.execute(FileFormatWriter.scala:387)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask$3.apply(FileFormatWriter.scala:278)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask$3.apply(FileFormatWriter.scala:276)

at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1411)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask(FileFormatWriter.scala:281)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$write$1.apply(FileFormatWriter.scala:206)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$write$1.apply(FileFormatWriter.scala:205)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)

at org.apache.spark.scheduler.Task.run(Task.scala:109)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.

```

In this PR, we unify the error processing and raise an error early in processing on any attempt to write a dataframe with an empty schema into a file-based datasource (ORC, Parquet, text, CSV, JSON, etc.).

## How was this patch tested?

Unit tests added in FileBasedDatasourceSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #20579 from dilipbiswal/spark-23372.

## What changes were proposed in this pull request?

To drop `exprId`s for `Alias` in user-facing information, this PR adds an entry for `Alias` in `NonSQLExpression.sql`.

## How was this patch tested?

Added tests in `UDFSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #20827 from maropu/SPARK-23666.

## What changes were proposed in this pull request?

Removal of the init-container for downloading remote dependencies. Built off of the work done by vanzin in an attempt to refactor driver/executor configuration elaborated in [this](https://issues.apache.org/jira/browse/SPARK-22839) ticket.

## How was this patch tested?

This patch was tested with unit and integration tests.

Author: Ilan Filonenko <if56@cornell.edu>

Closes #20669 from ifilonenko/remove-init-container.

## What changes were proposed in this pull request?

In the PR https://github.com/apache/spark/pull/20671, I forgot to update the doc about this new support.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20789 from gatorsmile/docUpdate.

## What changes were proposed in this pull request?

Below are the two cases.

Case 1

``` SQL
scala> List.empty[String].toDF().rdd.partitions.length
res18: Int = 1
```

When we write the above data frame as parquet, we create a parquet file containing just the schema of the data frame.

Case 2

``` SQL

scala> val anySchema = StructType(StructField("anyName", StringType, nullable = false) :: Nil)

anySchema: org.apache.spark.sql.types.StructType = StructType(StructField(anyName,StringType,false))

scala> spark.read.schema(anySchema).csv("/tmp/empty_folder").rdd.partitions.length

res22: Int = 0

```

In the second case, since the number of partitions is 0, we never call the write task (the task contains the logic that creates the empty, metadata-only Parquet file).

The fix is to create a dummy single-partition RDD and set up the write task based on it, to ensure the metadata-only file is written.
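The idea behind the fix can be modelled without Spark. In this Python sketch, partitions are plain lists and a "file" is a dict; both function names are hypothetical and only mirror the shape of the logic, not the `FileFormatWriter` code.

```python
def write_partitions(partitions, schema):
    """Run one write task per partition; each task emits a file carrying the schema."""
    files = []
    for i, rows in enumerate(partitions):
        files.append({"name": "part-%05d" % i, "schema": schema, "rows": list(rows)})
    return files


def write_with_metadata_guarantee(partitions, schema):
    """Substitute a single empty partition when there are none, so one task still runs."""
    if not partitions:
        partitions = [[]]
    return write_partitions(partitions, schema)
```

With zero partitions the plain writer produces no files at all, whereas the guarded version produces exactly one file that holds only the schema.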

## How was this patch tested?

A new test is added to DataframeReaderWriterSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #20525 from dilipbiswal/spark-23271.

## What changes were proposed in this pull request?

This PR adds a configuration to control the fallback of Arrow optimization for `toPandas` and `createDataFrame` with Pandas DataFrame.
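The control flow that such a fallback flag governs can be sketched generically. The Python below is an assumption-laden illustration: `convert`, `arrow_path`, and `plain_path` are hypothetical names, and the real PySpark code paths are more involved.

```python
import warnings


def convert(data, arrow_path, plain_path, fallback_enabled):
    """Try the optimized path; fall back or re-raise depending on the flag."""
    try:
        return arrow_path(data)
    except Exception as e:
        if not fallback_enabled:
            raise
        warnings.warn("Optimized conversion failed (%s); falling back." % e)
        return plain_path(data)
```

With the flag enabled, a failure in the optimized path produces a warning and the non-optimized result; with it disabled, the original error surfaces to the caller.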

## How was this patch tested?

Manually tested and unit tests added.

You can test this by:

**`createDataFrame`**

```python

spark.conf.set("spark.sql.execution.arrow.enabled", False)

pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)

spark.createDataFrame(pdf, "a: map<string, int>")

```

```python

spark.conf.set("spark.sql.execution.arrow.enabled", False)

pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)

spark.createDataFrame(pdf, "a: map<string, int>")

```

**`toPandas`**

```python

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)

spark.createDataFrame([[{'a': 1}]]).toPandas()

```

```python

spark.conf.set("spark.sql.execution.arrow.enabled", True)

spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)

spark.createDataFrame([[{'a': 1}]]).toPandas()

```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20678 from HyukjinKwon/SPARK-23380-conf.

## What changes were proposed in this pull request?

It seems R's `substr` API treats Scala's `substr` API as zero-based and so subtracts 1 from the given starting position.

Because Scala's `substr` API also accepts a zero-based starting position (0 is treated as the first element), the current R `substr` test results are correct, as they all use 1 as the starting position.
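The off-by-one can be made concrete with a small Python model. `sql_substr` is a hypothetical stand-in for the 1-based column `substr` (handling non-negative starts only, with 0 treated like 1), and the two wrappers model the R-side call before and after the change.

```python
def sql_substr(s, pos, length):
    """1-based substring where a start of 0 is treated like 1 (non-negative starts only)."""
    start = max(pos, 1) - 1
    return s[start:start + length]


def r_substr_old(s, start, stop):
    """Old wrapper: subtracts 1 from start before delegating."""
    return sql_substr(s, start - 1, stop - start + 1)


def r_substr_fixed(s, start, stop):
    """Fixed wrapper: passes the 1-based start through unchanged."""
    return sql_substr(s, start, stop - start + 1)
```

Both wrappers agree when `start` is 1, which is why the existing tests passed; they diverge for any larger start.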

## How was this patch tested?

Modified tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20464 from viirya/SPARK-23291.

These options were used to configure the built-in JRE SSL libraries when downloading files from HTTPS servers. But because they were also used to set up the now (long) removed internal HTTPS file server, their default configuration chose convenience over security by having overly lenient settings.

This change removes the configuration options that affect the JRE SSL libraries. The JRE trust store can still be configured via system properties (or globally in the JRE security config). The only lost functionality is not being able to disable the default hostname verifier when using spark-submit, which should be fine since Spark itself is not using https for any internal functionality anymore.

I also removed the HTTP-related code from the REPL class loader, since we haven't had a HTTP server for REPL-generated classes for a while.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20723 from vanzin/SPARK-23538.

## What changes were proposed in this pull request?

If Spark is run with `spark.authenticate=true`, it fails to start in local mode.

This PR generates a secret in local mode when authentication is on.

## How was this patch tested?

Modified existing unit test.

Manually started spark-shell.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #20652 from gaborgsomogyi/SPARK-23476.

## What changes were proposed in this pull request?

- Added clear information about triggers

- Made the semantic guarantees of watermarks clearer for streaming aggregations and stream-stream joins.


Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20631 from tdas/SPARK-23454.

## What changes were proposed in this pull request?

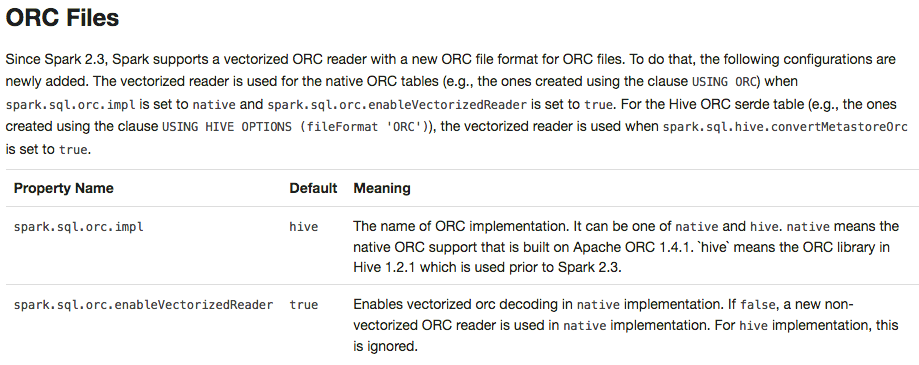

Apache Spark 2.3 introduced `native` ORC support with vectorization and many fixes. However, it shipped as a non-default option. This PR enables the `native` ORC implementation and predicate pushdown by default for Apache Spark 2.4. We will improve and stabilize the ORC data source before Apache Spark 2.4, and eventually Apache Spark will drop the old Hive-based ORC code.

## How was this patch tested?

Pass the Jenkins with existing tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20634 from dongjoon-hyun/SPARK-23456.

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes the ORC implementation to `hive` by default, as in Spark 2.2.X.

Users can still enable `native` ORC. ORC PPD is also restored to `false`, as in Spark 2.2.X.

## How was this patch tested?

Pass all test cases.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/19579 introduces a behavior change. We need to document it in the migration guide.

## How was this patch tested?

Also update the HiveExternalCatalogVersionsSuite to verify it.

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20606 from gatorsmile/addMigrationGuide.

## What changes were proposed in this pull request?

Added documentation about what MLlib guarantees in terms of loading ML models and Pipelines from old Spark versions. Discussed & confirmed on linked JIRA.

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #20592 from jkbradley/SPARK-23154-backwards-compat-doc.

## What changes were proposed in this pull request?

This PR explicitly specifies the supported types in Pandas UDFs.

The main change is to add deduplicated, explicit type checking for `returnType` ahead of execution, along with documentation; however, it also happened to fix multiple things.

1. Currently, we don't support `BinaryType` in Pandas UDFs, for example, see:

```python
from pyspark.sql.functions import pandas_udf

pudf = pandas_udf(lambda x: x, "binary")
df = spark.createDataFrame([[bytearray(1)]])
df.select(pudf("_1")).show()
```

```
...
TypeError: Unsupported type in conversion to Arrow: BinaryType
```

We can document this behaviour in the guide.

2. Also, the grouped aggregate Pandas UDF fails fast on `ArrayType`, but it seems we can support this case.

```python
from pyspark.sql.functions import pandas_udf, PandasUDFType

foo = pandas_udf(lambda v: v.mean(), 'array<double>', PandasUDFType.GROUPED_AGG)
df = spark.range(100).selectExpr("id", "array(id) as value")
df.groupBy("id").agg(foo("value")).show()
```

```
...
NotImplementedError: ArrayType, StructType and MapType are not supported with PandasUDFType.GROUPED_AGG
```

3. Since we can check the return type ahead of time, we can fail fast before actual execution.

```python
from pyspark.sql.functions import pandas_udf
from pyspark.sql.types import BinaryType

# we can fail fast at this stage because we know the schema ahead
pandas_udf(lambda x: x, BinaryType())
```
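
The fail-fast idea in item 3 can be sketched without Spark. This is only an illustration, not the PySpark implementation: `checked_udf` and `UNSUPPORTED_RETURN_TYPES` are hypothetical names, and the unsupported set holds just the `binary` case mentioned above.

```python
# Hypothetical stand-in for an up-front return-type check; not PySpark's code.
UNSUPPORTED_RETURN_TYPES = {"binary"}  # assumption: illustrative set only


def checked_udf(func, return_type):
    """Validate return_type when the UDF is defined, then return func unchanged."""
    # "array<double>" -> "array": only the outer type name is inspected here.
    base_type = return_type.split("<", 1)[0].strip().lower()
    if base_type in UNSUPPORTED_RETURN_TYPES:
        raise NotImplementedError(
            "Invalid return type for this sketch: %s" % return_type)
    return func


identity = checked_udf(lambda x: x, "double")  # accepted at definition time

try:
    checked_udf(lambda x: x, "binary")  # rejected before any data is processed
except NotImplementedError as exc:
    definition_error = str(exc)
```

Because the type string is known when the UDF is declared, the error surfaces at definition time rather than in the middle of a job.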

## How was this patch tested?

Manually tested and unit tests for `BinaryType` and `ArrayType(...)` were added.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20531 from HyukjinKwon/pudf-cleanup.

This commit modifies the Mesos submission client to allow the principal and secret to be provided indirectly via files. The path to these files can be specified either via Spark configuration or via environment variable.

Assuming these files are appropriately protected by FS/OS permissions, this means we don't ever leak the actual values in process info like `ps`.

Environment variable specification is useful because it allows you to interpolate the location of this file when using per-user Mesos credentials.
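
A minimal sketch of the lookup described above, in plain Python rather than the actual submission client code. The helper `read_credential`, the property name, and the variable name are placeholders for illustration, and the order (configuration first, then environment) is an assumption of this sketch.

```python
import os
import tempfile


def read_credential(conf, conf_key, env_key, environ=os.environ):
    """Return the credential stored in the file named by conf or, failing that, by env."""
    path = conf.get(conf_key) or environ.get(env_key)
    if path is None:
        return None
    with open(path, "r", encoding="utf-8") as handle:
        # Only the file path ever appears in config or env; the value stays on disk.
        return handle.read().strip()


# Usage: write a throwaway secret file and resolve it through an environment variable.
with tempfile.NamedTemporaryFile("w", suffix=".secret", delete=False) as tmp:
    tmp.write("s3cr3t\n")

secret = read_credential(
    conf={},                                  # nothing set in configuration
    conf_key="spark.mesos.secret.file",       # placeholder property name
    env_key="SPARK_MESOS_SECRET_FILE",        # placeholder variable name
    environ={"SPARK_MESOS_SECRET_FILE": tmp.name},
)
os.remove(tmp.name)
```

Only the path is visible in the process environment or command line, so the secret itself never shows up in process listings.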

For some background as to why we have taken this approach, I will briefly describe our setup. On our systems we provide each authorised user account with their own Mesos credentials to provide certain security and audit guarantees to our customers. These credentials are managed by a central secret management service. In our `spark-env.sh` we determine the appropriate secret and principal files to use depending on the user who is invoking Spark, hence the need to inject these via environment variables as well as by configuration properties. So we set these environment variables appropriately and Spark reads in the contents of those files to authenticate itself with Mesos.

This is functionality we have been using in production across multiple customer sites for around 18 months with no reported issues. These changes have been sufficient to meet our customer security and audit requirements.

We have been building and deploying custom builds of Apache Spark with various minor tweaks like this which we are now looking to contribute back into the community in order that we can rely upon stock Apache Spark builds and stop maintaining our own internal fork.

Author: Rob Vesse <rvesse@dotnetrdf.org>

Closes #20167 from rvesse/SPARK-16501.

## What changes were proposed in this pull request?

This PR disallows omitting `value` (leaving it at its default, `None`) when `to_replace` is not a dictionary.

It seems weird that we set the default of `value` to `None` and ended up allowing the case below:

```python
>>> df.show()
```

```
+----+------+-----+
| age|height| name|
+----+------+-----+
|  10|    80|Alice|
...
```

```python
>>> df.na.replace('Alice').show()
```

```
+----+------+----+
| age|height|name|
+----+------+----+
|  10|    80|null|
...
```

**After**

This PR disallows the case above:

```python
>>> df.na.replace('Alice').show()
```

```
...
TypeError: value is required when to_replace is not a dictionary.
```

while we still allow it when `to_replace` is a dictionary:

```python
>>> df.na.replace({'Alice': None}).show()
```

```
+----+------+----+
| age|height|name|
+----+------+----+
|  10|    80|null|
...
```
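
The argument check behind this behavior can be sketched in plain Python with a sentinel default. This is an illustration only: `check_replace_args` and `_NO_VALUE` are hypothetical names, and the sketch assumes that an explicitly passed `None` remains acceptable.

```python
_NO_VALUE = object()  # sentinel: distinguishes "not passed" from an explicit None


def check_replace_args(to_replace, value=_NO_VALUE):
    """Return the (to_replace, value) pair that a replace call would act on."""
    if value is _NO_VALUE:
        if isinstance(to_replace, dict):
            value = None  # replacements come from the dictionary itself
        else:
            raise TypeError(
                "value is required when to_replace is not a dictionary.")
    return to_replace, value


dict_case = check_replace_args({"Alice": None})    # allowed: dictionary form
explicit_none = check_replace_args("Alice", None)  # allowed: value given explicitly

try:
    check_replace_args("Alice")                    # rejected: value omitted
except TypeError as exc:
    missing_value_error = str(exc)
```

A plain `None` default cannot tell an omitted argument from an intentional `None`, which is why the sketch uses a dedicated sentinel object.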

## How was this patch tested?

Manually tested, tests were added in `python/pyspark/sql/tests.py` and doctests were fixed.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20499 from HyukjinKwon/SPARK-19454-followup.

## What changes were proposed in this pull request?

Further clarification of caveats in using stream-stream outer joins.

## How was this patch tested?

N/A

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20494 from tdas/SPARK-23064-2.

Add breaking changes, as well as update behavior changes, to `2.3` ML migration guide.

## How was this patch tested?

Doc only

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #20421 from MLnick/SPARK-23112-ml-guide.

## What changes were proposed in this pull request?

Rename the public APIs and names of Pandas UDFs.

- `PANDAS SCALAR UDF` -> `SCALAR PANDAS UDF`

- `PANDAS GROUP MAP UDF` -> `GROUPED MAP PANDAS UDF`

- `PANDAS GROUP AGG UDF` -> `GROUPED AGG PANDAS UDF`

## How was this patch tested?

The existing tests

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20428 from gatorsmile/renamePandasUDFs.

## What changes were proposed in this pull request?

User guide and examples are updated to reflect multiclass logistic regression summary which was added in [SPARK-17139](https://issues.apache.org/jira/browse/SPARK-17139).

I did not make a separate summary example, but added the summary code to the multiclass example that already existed. I don't see the need for a separate example for the summary.

## How was this patch tested?

Docs and examples only. Ran all examples locally using spark-submit.

Author: sethah <shendrickson@cloudera.com>

Closes #20332 from sethah/multiclass_summary_example.

## What changes were proposed in this pull request?

Adds user-facing documentation for working with Arrow in Spark.

Author: Bryan Cutler <cutlerb@gmail.com>

Author: Li Jin <ice.xelloss@gmail.com>

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #19575 from BryanCutler/arrow-user-docs-SPARK-2221.

Update ML user guide with highlights and migration guide for `2.3`.

## How was this patch tested?

Doc only.

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #20363 from MLnick/SPARK-23112-ml-guide.

## What changes were proposed in this pull request?

Added documentation for new transformer.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20285 from MrBago/sizeHintDocs.

## What changes were proposed in this pull request?

The example jar file is now in ./examples/jars directory of Spark distribution.

Author: Arseniy Tashoyan <tashoyan@users.noreply.github.com>

Closes #20349 from tashoyan/patch-1.

## What changes were proposed in this pull request?

streaming programming guide changes

## How was this patch tested?

manually

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #20340 from felixcheung/rstreamdoc.

## What changes were proposed in this pull request?

Fix spelling in quick-start doc.

## How was this patch tested?

Doc only.

Author: Shashwat Anand <me@shashwat.me>

Closes #20336 from ashashwat/SPARK-23165.

## What changes were proposed in this pull request?

Docs changes:

- Adding a warning that the backend is experimental.

- Removing a defunct internal-only option from documentation

- Clarifying that node selectors can be used right away, and other minor cosmetic changes

## How was this patch tested?

Docs only change

Author: foxish <ramanathana@google.com>

Closes #20314 from foxish/ambiguous-docs.

## What changes were proposed in this pull request?

We have `OneHotEncoderEstimator` now, and `OneHotEncoder` will be deprecated as of 2.3.0. We should add `OneHotEncoderEstimator` to the MLlib documentation.

We also need to provide the corresponding examples for `OneHotEncoderEstimator`, which are used in the documentation too.

## How was this patch tested?

Existing tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20257 from viirya/SPARK-23048.

Update user guide entry for `FeatureHasher` to match the Scala / Python doc, to describe the `categoricalCols` parameter.

## How was this patch tested?

Doc only

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #20293 from MLnick/SPARK-23127-catCol-userguide.

## What changes were proposed in this pull request?

In the latest structured-streaming-kafka-integration document, the Java code example for Kafka integration uses `DataFrame<Row>`; shouldn't it be changed to `Dataset<Row>`?

## How was this patch tested?

Manually tested the updated example Java code on Spark 2.2.1 with Kafka 1.0.

Author: brandonJY <brandonJY@users.noreply.github.com>

Closes #20312 from brandonJY/patch-2.

## What changes were proposed in this pull request?

In Kubernetes mode, fail fast during submission if any submission-client-local dependencies are used, since that use case is not supported yet.
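
A minimal sketch of such a check in plain Python, not the actual submission client code. It assumes that a dependency counts as local to the submission client when its URI has the `file` scheme or no scheme at all; both function names are hypothetical.

```python
from urllib.parse import urlparse


def client_local_dependencies(uris):
    """Return the URIs that live on the submitting machine (file:// or no scheme)."""
    return [uri for uri in uris if urlparse(uri).scheme in ("", "file")]


def validate_dependencies(uris):
    """Raise before anything is submitted if a client-local dependency is present."""
    offending = client_local_dependencies(uris)
    if offending:
        raise ValueError(
            "Submission-client-local dependencies are not supported: "
            + ", ".join(offending))


# Container-local and remote URIs pass the check.
validate_dependencies(["local:///opt/spark/examples.jar", "https://host/dep.jar"])

try:
    validate_dependencies(["/home/me/app.jar", "local:///opt/spark/examples.jar"])
except ValueError as exc:
    submission_error = str(exc)
```

Checking the URIs before any resources are created means the user sees the problem immediately instead of after a driver pod fails to find the file.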

## How was this patch tested?

Unit tests, integration tests, and manual tests.

vanzin foxish

Author: Yinan Li <liyinan926@gmail.com>

Closes #20320 from liyinan926/master.

## What changes were proposed in this pull request?

This PR completes the docs, specifying the default units assumed in configuration entries of type size.

This is crucial since unit-less values are accepted and the user might assume the base unit is bytes, which in most cases it is not, leading to hard-to-debug problems.
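
To make the pitfall concrete, here is a small plain-Python sketch of how a unit-less value is interpreted under different default units. `size_to_bytes` is a hypothetical helper written for this illustration, and the suffix table is a simplified subset, not Spark's parser.

```python
import re

# Binary multipliers for the suffixes this sketch understands (an illustrative subset).
_UNIT_BYTES = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def size_to_bytes(value, default_unit):
    """Convert '512', '512k', '1g', ... to bytes, using default_unit when no suffix is given."""
    match = re.fullmatch(r"\s*(\d+)\s*([bkmg])?b?\s*", value.lower())
    if match is None:
        raise ValueError("Unparseable size: %r" % value)
    number, unit = match.groups()
    return int(number) * _UNIT_BYTES[unit or default_unit]


as_kib = size_to_bytes("512", default_unit="k")      # unit-less, entry defaults to KiB
as_mib = size_to_bytes("512", default_unit="m")      # unit-less, entry defaults to MiB
explicit = size_to_bytes("512k", default_unit="m")   # explicit suffix wins
```

The same string `512` differs by a factor of 1024 depending on the entry's default unit, which is why the docs now state that unit for each size-typed entry.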

## How was this patch tested?

This patch updates documentation only.

Author: Fernando Pereira <fernando.pereira@epfl.ch>

Closes #20269 from ferdonline/docs_units.

## What changes were proposed in this pull request?

When there is an operation between Decimals and the result is a number which is not representable exactly with the result's precision and scale, Spark returns `NULL`. This was done to reflect Hive's behavior, but it is against ANSI SQL 2011, which states that "If the result cannot be represented exactly in the result type, then whether it is rounded or truncated is implementation-defined". Moreover, Hive has since changed its behavior to respect the standard, thanks to HIVE-15331.

Therefore, the PR proposes to:

- update the rules that determine the result precision and scale according to Hive's new rules introduced in HIVE-15331;

- round the result of the operations, when it is not representable exactly with the result's precision and scale, instead of returning `NULL`;

- introduce a new config `spark.sql.decimalOperations.allowPrecisionLoss`, which defaults to `true` (i.e. the new behavior), in order to allow users to switch back to the previous one.

Hive's behavior mirrors SQL Server's. The only difference is that the precision and scale are adjusted for all arithmetic operations in Hive, while the SQL Server documentation says it does so only for multiplications and divisions. This PR follows Hive's behavior.
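
A rough sketch of the kind of precision and scale adjustment involved, in plain Python. The constants (maximum precision 38, minimum preserved scale 6), the multiplication rule (`p1 + p2 + 1`, `s1 + s2`), and the function names are assumptions of this sketch rather than a statement of the exact rules in the patch; non-negative scales are assumed.

```python
MAX_PRECISION = 38          # assumption: largest precision a decimal type can carry
MINIMUM_ADJUSTED_SCALE = 6  # assumption: fractional digits preserved when trimming


def adjust_precision_scale(precision, scale):
    """Fit (precision, scale) into MAX_PRECISION, giving up scale before integer digits."""
    if precision <= MAX_PRECISION:
        return precision, scale
    int_digits = precision - scale
    # Keep at least MINIMUM_ADJUSTED_SCALE fractional digits (or all of them if fewer).
    min_scale = min(scale, MINIMUM_ADJUSTED_SCALE)
    adjusted_scale = max(MAX_PRECISION - int_digits, min_scale)
    return MAX_PRECISION, adjusted_scale


def multiply_result_type(p1, s1, p2, s2):
    """Result type of decimal(p1, s1) * decimal(p2, s2) under the assumed rule."""
    return adjust_precision_scale(p1 + p2 + 1, s1 + s2)


small = multiply_result_type(10, 2, 10, 2)     # fits without adjustment
medium = multiply_result_type(20, 10, 20, 10)  # scale trimmed to make room
large = multiply_result_type(38, 10, 38, 10)   # scale falls back to the minimum
```

With a reduced scale, a result that no longer fits exactly is rounded to that scale instead of being returned as `NULL`.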

A more detailed explanation is available here: https://mail-archives.apache.org/mod_mbox/spark-dev/201712.mbox/%3CCAEorWNAJ4TxJR9NBcgSFMD_VxTg8qVxusjP%2BAJP-x%2BJV9zH-yA%40mail.gmail.com%3E.

## How was this patch tested?

Modified and added unit tests. Compared the results with those of Hive and SQL Server.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20023 from mgaido91/SPARK-22036.

## What changes were proposed in this pull request?

This patch bumps the master branch version to `2.4.0-SNAPSHOT`.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20222 from gatorsmile/bump24.

## What changes were proposed in this pull request?

Small typing correction - double word

## How was this patch tested?

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Matthias Beaupère <matthias.beaupere@gmail.com>

Closes #20212 from matthiasbe/patch-1.

This change allows a user to submit a Spark application on Kubernetes while having to provide only a single image, instead of one image for each type of container. The image's entry point now takes an extra argument that identifies the process that is being started.

The configuration still allows the user to provide different images for each container type if they so desire.

On top of that, the entry point was simplified a bit to share more code; mainly, the same env variable is used to propagate the user-defined classpath to the different containers.

Aside from being modified to match the new behavior, the 'build-push-docker-images.sh' script was renamed to 'docker-image-tool.sh' to more closely match its purpose; the old name was a little awkward and now also not entirely correct, since there is a single image. It was also moved to 'bin' since it's not necessarily an admin tool.

Docs have been updated to match the new behavior.

Tested locally with minikube.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20192 from vanzin/SPARK-22994.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18164 introduces behavior changes. We need to document them.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20234 from gatorsmile/docBehaviorChange.

## What changes were proposed in this pull request?

doc update

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #20198 from felixcheung/rrefreshdoc.

[SPARK-21786][SQL] When acquiring 'compressionCodecClassName' in 'ParquetOptions', `parquet.compression` needs to be considered.

## What changes were proposed in this pull request?

Since Hive 1.1, Hive allows users to set the Parquet compression codec via the table-level property `parquet.compression`. See the JIRA: https://issues.apache.org/jira/browse/HIVE-7858 . We already support `orc.compression` for ORC, so for external users it is more straightforward to support both. See the Stack Overflow question: https://stackoverflow.com/questions/36941122/spark-sql-ignores-parquet-compression-propertie-specified-in-tblproperties

On the Spark side, our table-level compression conf `compression` was added by #11464 in Spark 2.0.

We need to support both table-level confs. Users might also use the session-level conf `spark.sql.parquet.compression.codec`. The priority rule is as follows:

If another compression codec configuration is found through Hive or Parquet, the precedence is `compression`, `parquet.compression`, `spark.sql.parquet.compression.codec`. Acceptable values include: none, uncompressed, snappy, gzip, lzo.

The rule for Parquet is consistent with ORC after the change.

Changes:

1. Also acquire `compressionCodecClassName` from `parquet.compression`; the precedence order is `compression`, `parquet.compression`, `spark.sql.parquet.compression.codec`, just like what we do in `OrcOptions`.

2. Change `spark.sql.parquet.compression.codec` to support "none". `ParquetOptions` already treats "none" as equivalent to "uncompressed", but the conf itself could not be set to "none".

3. Rename `compressionCode` to `compressionCodecClassName`.
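
The precedence rule can be sketched in plain Python as follows. This is not the `ParquetOptions` code: `resolve_parquet_codec` is a hypothetical helper, the two dictionaries stand in for table-level options and session conf, and the `snappy` fallback is an assumption of this sketch.

```python
# Short names accepted in this sketch; "none" is treated as an alias of "uncompressed".
ACCEPTED_CODECS = {"none", "uncompressed", "snappy", "gzip", "lzo"}


def resolve_parquet_codec(table_options, session_conf):
    """Pick the codec by precedence: compression, parquet.compression, session conf."""
    candidates = (
        table_options.get("compression"),
        table_options.get("parquet.compression"),
        session_conf.get("spark.sql.parquet.compression.codec"),
    )
    # First configured value wins; the fallback is an assumption of this sketch.
    codec = next((c for c in candidates if c is not None), "snappy").lower()
    if codec not in ACCEPTED_CODECS:
        raise ValueError("Unsupported codec: %s" % codec)
    return "uncompressed" if codec == "none" else codec


session = {"spark.sql.parquet.compression.codec": "none"}
from_session = resolve_parquet_codec({}, session)
from_parquet_prop = resolve_parquet_codec({"parquet.compression": "GZIP"}, session)
from_compression = resolve_parquet_codec(
    {"compression": "lzo", "parquet.compression": "gzip"}, session)
```

Each more specific setting shadows the ones after it, so a table created with `parquet.compression` keeps its codec regardless of the session-level default.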

## How was this patch tested?

Add test.

Author: fjh100456 <fu.jinhua6@zte.com.cn>

Closes #20076 from fjh100456/ParquetOptionIssue.

## What changes were proposed in this pull request?

This PR modifies `elt` to output binary for binary inputs.

`elt` in the current master always outputs data as a string. But in some databases (e.g., MySQL), if all inputs are binary, `elt` also outputs binary (the current string output might therefore be a small surprise).

This pr is related to #19977.
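
The intended output-type rule for `elt` can be illustrated with a plain-Python stand-in, where `bytes` plays the role of binary and `str` the role of string. The function below is a sketch of the semantics only, not Spark code, and returning `None` for an out-of-range index is an assumption.

```python
def elt(n, *inputs):
    """Return the n-th input (1-based); binary only when every input is binary."""
    if not 1 <= n <= len(inputs):
        return None  # assumption: out-of-range index yields null
    chosen = inputs[n - 1]
    if all(isinstance(value, (bytes, bytearray)) for value in inputs):
        return bytes(chosen)
    # Mixed or string inputs: the result is always a string.
    if isinstance(chosen, (bytes, bytearray)):
        return chosen.decode("utf-8")
    return str(chosen)


all_binary = elt(2, b"x", b"y")   # binary in, binary out
mixed = elt(2, "x", b"y")         # one string input makes the result a string
out_of_range = elt(3, "x", "y")
```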

## How was this patch tested?

Added tests in `SQLQueryTestSuite` and `TypeCoercionSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #20135 from maropu/SPARK-22937.

- Make it possible to build images from a git clone.

- Make it easy to use minikube to test things.

Also fixed what seemed like a bug: the base image wasn't getting the tag provided in the command line. Adding the tag allows users to use multiple Spark builds in the same kubernetes cluster.

Tested by deploying images on minikube and running spark-submit from a dev environment; also by building the images with different tags and verifying "docker images" in minikube.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20154 from vanzin/SPARK-22960.

## What changes were proposed in this pull request?

update R migration guide and vignettes

## How was this patch tested?

manually

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #20106 from felixcheung/rreleasenote23.

## What changes were proposed in this pull request?

Fixing three small typos in the docs, in particular:

It take a `RDD` -> It takes an `RDD` (twice)

It take an `JavaRDD` -> It takes a `JavaRDD`

I didn't create any Jira issue for this minor thing, I hope it's ok.

## How was this patch tested?

visually by clicking on 'preview'

Author: Jirka Kremser <jkremser@redhat.com>

Closes #20108 from Jiri-Kremser/docs-typo.

## What changes were proposed in this pull request?

Currently, we do not guarantee an evaluation order for the conjuncts in either the Filter or the Join operator. This is also true of mainstream RDBMS vendors like DB2 and MS SQL Server. Thus, we should also push down the deterministic predicates that come after the first non-deterministic one, if possible.
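
The difference between the two selection rules can be sketched in plain Python. This is a toy model, not optimizer code: `Predicate`, `pushable_before`, and `pushable_after` are hypothetical names, and "before" assumes the old rule stopped at the first non-deterministic conjunct.

```python
from collections import namedtuple
from itertools import takewhile

Predicate = namedtuple("Predicate", ["sql", "deterministic"])


def pushable_before(conjuncts):
    """Old rule in this sketch: stop at the first non-deterministic conjunct."""
    return list(takewhile(lambda p: p.deterministic, conjuncts))


def pushable_after(conjuncts):
    """New rule in this sketch: every deterministic conjunct is a candidate."""
    return [p for p in conjuncts if p.deterministic]


conjuncts = [
    Predicate("a > 1", True),
    Predicate("rand() < 0.5", False),
    Predicate("b = 2", True),
]

before = [p.sql for p in pushable_before(conjuncts)]
after = [p.sql for p in pushable_after(conjuncts)]
```

Since no evaluation order is promised for the conjuncts anyway, moving `b = 2` below the non-deterministic predicate does not break any guarantee.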

## How was this patch tested?

Updated the existing test cases.

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20069 from gatorsmile/morePushDown.

## What changes were proposed in this pull request?

This PR modifies `concat` to concatenate binary inputs into a single binary output.

`concat` in the current master always outputs data as a string. But in some databases (e.g., PostgreSQL), if all inputs are binary, `concat` also outputs binary.
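
A plain-Python stand-in for the intended `concat` semantics, with `bytes` playing the role of binary and `str` the role of string. It only illustrates the output-type rule and is not Spark code.

```python
def concat(*inputs):
    """Join inputs; the result is binary only when every input is binary."""
    if all(isinstance(value, (bytes, bytearray)) for value in inputs):
        return b"".join(bytes(value) for value in inputs)
    # Any non-binary input turns the whole result into a string.
    return "".join(
        value.decode("utf-8") if isinstance(value, (bytes, bytearray)) else str(value)
        for value in inputs
    )


binary_result = concat(b"ab", b"cd")
string_result = concat("ab", b"cd")
```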

## How was this patch tested?

Added tests in `SQLQueryTestSuite` and `TypeCoercionSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #19977 from maropu/SPARK-22771.

## What changes were proposed in this pull request?

This PR updates the Kubernetes documentation corresponding to the following features/changes in #19954.

* Ability to use remote dependencies through the init-container.

* Ability to mount user-specified secrets into the driver and executor pods.

vanzin jiangxb1987 foxish

Author: Yinan Li <liyinan926@gmail.com>

Closes #20059 from liyinan926/doc-update.

## What changes were proposed in this pull request?

This PR contains documentation on the usage of Kubernetes scheduler in Spark 2.3, and a shell script to make it easier to build docker images required to use the integration. The changes detailed here are covered by https://github.com/apache/spark/pull/19717 and https://github.com/apache/spark/pull/19468 which have merged already.

## How was this patch tested?

The script has been in use for releases on our fork. Rest is documentation.

cc rxin mateiz (shepherd)

k8s-big-data SIG members & contributors: foxish ash211 mccheah liyinan926 erikerlandson ssuchter varunkatta kimoonkim tnachen ifilonenko

reviewers: vanzin felixcheung jiangxb1987 mridulm

TODO:

- [x] Add dockerfiles directory to built distribution. (https://github.com/apache/spark/pull/20007)

- [x] Change references to docker to instead say "container" (https://github.com/apache/spark/pull/19995)

- [x] Update configuration table.

- [x] Modify spark.kubernetes.allocation.batch.delay to take time instead of int (#20032)

Author: foxish <ramanathana@google.com>

Closes #19946 from foxish/update-k8s-docs.

## What changes were proposed in this pull request?

As with `Parquet`, users can use `ORC` with Apache Spark structured streaming. This PR adds `orc()` to `DataStreamReader` (Scala/Python) so that creating a streaming dataset from the ORC file format is as easy as with the other file formats. It also adds test coverage for the ORC data source and updates the documentation.

**BEFORE**

```scala
scala> spark.readStream.schema("a int").orc("/tmp/orc_ss").writeStream.format("console").start()
<console>:24: error: value orc is not a member of org.apache.spark.sql.streaming.DataStreamReader
spark.readStream.schema("a int").orc("/tmp/orc_ss").writeStream.format("console").start()
```

**AFTER**

```scala
scala> spark.readStream.schema("a int").orc("/tmp/orc_ss").writeStream.format("console").start()
res0: org.apache.spark.sql.streaming.StreamingQuery = org.apache.spark.sql.execution.streaming.StreamingQueryWrapper@678b3746

scala>
-------------------------------------------
Batch: 0
-------------------------------------------
+---+
|  a|
+---+
|  1|
+---+
```

## How was this patch tested?

Pass the newly added test cases.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #19975 from dongjoon-hyun/SPARK-22781.

## What changes were proposed in this pull request?

Easy fix in the link.

## How was this patch tested?

Tested manually

Author: Mahmut CAVDAR <mahmutcvdr@gmail.com>

Closes #19996 from mcavdar/master.

This PR contains implementation of the basic submission client for the cluster mode of Spark on Kubernetes. It's step 2 from the step-wise plan documented [here](https://github.com/apache-spark-on-k8s/spark/issues/441#issuecomment-330802935).

This addition is covered by the [SPIP](http://apache-spark-developers-list.1001551.n3.nabble.com/SPIP-Spark-on-Kubernetes-td22147.html) vote which passed on Aug 31.

This PR and #19468 together form an MVP of Spark on Kubernetes that allows users to run Spark applications that use resources locally within the driver and executor containers on Kubernetes 1.6 and up. Some changes to the pom and build/test setup are copied over from #19468 to make this PR self-contained and testable.

The submission client is mainly responsible for creating the Kubernetes pod that runs the Spark driver. It follows a step-based approach to construct the driver pod, as the code under the `submit.steps` package shows. The steps are orchestrated by `DriverConfigurationStepsOrchestrator`. `Client` creates the driver pod and waits for the application to complete if it's configured to do so, which is the case by default.

This PR also contains Dockerfiles of the driver and executor images. They are included because some of the environment variables set in the code would not make sense without referring to the Dockerfiles.

* The patch contains unit tests which are passing.

* Manual testing: ./build/mvn -Pkubernetes clean package succeeded.

* It is a subset of the entire changelist hosted at http://github.com/apache-spark-on-k8s/spark which is in active use in several organizations.

* There is integration testing enabled in the fork currently hosted by PepperData which is being moved over to RiseLAB CI.

* Detailed documentation on trying out the patch in its entirety is in: https://apache-spark-on-k8s.github.io/userdocs/running-on-kubernetes.html

cc rxin felixcheung mateiz (shepherd)

k8s-big-data SIG members & contributors: mccheah foxish ash211 ssuchter varunkatta kimoonkim erikerlandson tnachen ifilonenko liyinan926

Author: Yinan Li <liyinan926@gmail.com>

Closes #19717 from liyinan926/spark-kubernetes-4.

## What changes were proposed in this pull request?

Update broadcast behavior changes in migration section.

## How was this patch tested?

N/A

Author: Yuming Wang <wgyumg@gmail.com>

Closes #19858 from wangyum/SPARK-22489-migration.

## What changes were proposed in this pull request?

The spark properties for configuring the ContextCleaner are not documented in the official documentation at https://spark.apache.org/docs/latest/configuration.html#available-properties.

This PR adds the doc.

## How was this patch tested?

Manual.

```
cd docs
jekyll build
open _site/configuration.html
```

Author: gaborgsomogyi <gabor.g.somogyi@gmail.com>

Closes #19826 from gaborgsomogyi/SPARK-22428.

## What changes were proposed in this pull request?

How to reproduce:

```scala
import org.apache.spark.sql.execution.joins.BroadcastHashJoinExec

spark.createDataFrame(Seq((1, "4"), (2, "2"))).toDF("key", "value").createTempView("table1")
spark.createDataFrame(Seq((1, "1"), (2, "2"))).toDF("key", "value").createTempView("table2")

val bl = sql("SELECT /*+ MAPJOIN(t1) */ * FROM table1 t1 JOIN table2 t2 ON t1.key = t2.key").queryExecution.executedPlan
println(bl.children.head.asInstanceOf[BroadcastHashJoinExec].buildSide)
```

The result is `BuildRight`, but it should be `BuildLeft`. This PR fixes the issue.

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes #19714 from wangyum/SPARK-22489.

## What changes were proposed in this pull request?

This is a stripped down version of the `KubernetesClusterSchedulerBackend` for Spark with the following components:

- Static Allocation of Executors

- Executor Pod Factory

- Executor Recovery Semantics

It's step 1 from the step-wise plan documented [here](https://github.com/apache-spark-on-k8s/spark/issues/441#issuecomment-330802935).

This addition is covered by the [SPIP vote](http://apache-spark-developers-list.1001551.n3.nabble.com/SPIP-Spark-on-Kubernetes-td22147.html) which passed on Aug 31.

## How was this patch tested?

- The patch contains unit tests which are passing.

- Manual testing: `./build/mvn -Pkubernetes clean package` succeeded.

- It is a **subset** of the entire changelist hosted in http://github.com/apache-spark-on-k8s/spark which is in active use in several organizations.

- There is integration testing enabled in the fork currently [hosted by PepperData](spark-k8s-jenkins.pepperdata.org:8080) which is being moved over to RiseLAB CI.

- Detailed documentation on trying out the patch in its entirety is in: https://apache-spark-on-k8s.github.io/userdocs/running-on-kubernetes.html

cc rxin felixcheung mateiz (shepherd)

k8s-big-data SIG members & contributors: mccheah ash211 ssuchter varunkatta kimoonkim erikerlandson liyinan926 tnachen ifilonenko

Author: Yinan Li <liyinan926@gmail.com>

Author: foxish <ramanathana@google.com>

Author: mcheah <mcheah@palantir.com>

Closes #19468 from foxish/spark-kubernetes-3.

## What changes were proposed in this pull request?

When converting a Pandas DataFrame/Series from/to a Spark DataFrame using `toPandas()` or Pandas UDFs, timestamp values respect the Python system timezone instead of the session timezone.

For example, let's say we use `"America/Los_Angeles"` as session timezone and have a timestamp value `"1970-01-01 00:00:01"` in the timezone. Btw, I'm in Japan so Python timezone would be `"Asia/Tokyo"`.

The timestamp value from current `toPandas()` will be the following:

```
>>> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
>>> df = spark.createDataFrame([28801], "long").selectExpr("timestamp(value) as ts")
>>> df.show()
+-------------------+
|                 ts|
+-------------------+
|1970-01-01 00:00:01|
+-------------------+

>>> df.toPandas()
                   ts
0 1970-01-01 17:00:01
```

As you can see, the value becomes `"1970-01-01 17:00:01"` because it respects Python timezone.

As we discussed in #18664, we consider this behavior a bug, and the value should be `"1970-01-01 00:00:01"`.
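
The arithmetic behind the two values can be checked in plain Python without Spark. As a simplification, fixed UTC offsets stand in for the named zones here (UTC-8 for `America/Los_Angeles` and UTC+9 for `Asia/Tokyo` on that date); `render` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# Assumption: fixed offsets stand in for the named zones on 1970-01-01.
LOS_ANGELES = timezone(timedelta(hours=-8))
TOKYO = timezone(timedelta(hours=9))


def render(epoch_seconds, tz):
    """Format an epoch timestamp as wall-clock time in the given timezone."""
    return datetime.fromtimestamp(epoch_seconds, tz).strftime("%Y-%m-%d %H:%M:%S")


session_view = render(28801, LOS_ANGELES)  # what the session timezone shows
python_view = render(28801, TOKYO)         # what the Python system timezone shows
```

The same instant, 28801 seconds after the epoch, renders as `1970-01-01 00:00:01` in the session timezone and as `1970-01-01 17:00:01` in the Python timezone, which is exactly the discrepancy shown above.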

## How was this patch tested?

Added tests and existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #19607 from ueshin/issues/SPARK-22395.

## What changes were proposed in this pull request?

As discussed in SPARK-19606, this PR adds a new config property named `spark.mesos.constraints.driver` for constraining drivers running on a Mesos cluster.

## How was this patch tested?

Added a corresponding unit test; also tested locally on a Mesos cluster.

Author: Paul Mackles <pmackles@adobe.com>

Closes #19543 from pmackles/SPARK-19606.