### What changes were proposed in this pull request?

The current error message from `_infer_type` in `_infer_schema` only includes the unsupported column type but not the column name. This PR adds the column name to the error message to make it easier for users to identify which column they should drop or convert.
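The idea can be sketched in plain Python. This is a minimal illustration, not PySpark's actual implementation: `infer_type` and `infer_schema` are hypothetical stand-ins for `_infer_type` and `_infer_schema`, and the set of supported types is made up for the example.

```python
# Assumption: a toy set of supported types, chosen only for this example.
SUPPORTED_TYPES = (bool, int, float, str)


def infer_type(value):
    """Hypothetical stand-in for `_infer_type`: it sees the value, not the column."""
    if not isinstance(value, SUPPORTED_TYPES):
        raise TypeError("not supported type: %s" % type(value))
    return type(value).__name__


def infer_schema(row):
    """Hypothetical stand-in for `_infer_schema`: it adds the column name to the error."""
    schema = {}
    for name, value in row.items():
        try:
            schema[name] = infer_type(value)
        except TypeError as e:
            # Re-raise with the column name prepended to the original message.
            raise TypeError("Column %s contains %s" % (name, e)) from e
    return schema


try:
    infer_schema({"id": 1, "bar": object()})
except TypeError as e:
    message = str(e)
    print(message)
```

Catching the error at the level that knows the column name keeps the type inference itself unchanged.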

### Why are the changes needed?

Improve user experience.

### Does this PR introduce _any_ user-facing change?

Yes. The error message from `_infer_schema` is changed.

Before:

"not supported type: foo"

After:

"Column bar contains not supported type: foo"

### How was this patch tested?

Updated the existing unit test.

Closes #29365 from liangz1/types-error-colname.

Authored-by: Liang Zhang <liang.zhang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The test creates 10 batches of data to train the model and expects the error on the test data to improve as the model is trained. If the difference between the 2nd error and the 10th error is smaller than 2, the assertion fails:

```
FAIL: test_train_prediction (pyspark.mllib.tests.test_streaming_algorithms.StreamingLinearRegressionWithTests)
Test that error on test data improves as model is trained.
----------------------------------------------------------------------
Traceback (most recent call last):
  File "/home/runner/work/spark/spark/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 466, in test_train_prediction
    eventually(condition, timeout=180.0)
  File "/home/runner/work/spark/spark/python/pyspark/testing/utils.py", line 81, in eventually
    lastValue = condition()
  File "/home/runner/work/spark/spark/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 461, in condition
    self.assertGreater(errors[1] - errors[-1], 2)
AssertionError: 1.672640157855923 not greater than 2
```

I saw this quite a few times on Jenkins but was not able to reproduce it locally. These are the ten errors I got:

```

4.517395047937127

4.894265404350079

3.0392090466559876

1.8786361640757654

0.8973106042078115

0.3715780507684368

0.20815690742907672

0.17333033743125845

0.15686783249863873

0.12584413600569616

```

I am thinking of having 15 batches of data instead of 10, so the model can be trained for a longer time. Hopefully the 2nd error minus the 15th error will always be larger than 2 on Jenkins. These are the 15 errors I got locally:

```

4.517395047937127

4.894265404350079

3.0392090466559876

1.8786361640757658

0.8973106042078115

0.3715780507684368

0.20815690742907672

0.17333033743125845

0.15686783249863873

0.12584413600569616

0.11883853835108477

0.09400261862100823

0.08887491447353497

0.05984929624986607

0.07583948141520978

```
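As a quick check of the quantity the test asserts on (`errors[1] - errors[-1]`), the local numbers above can be plugged into a few lines of Python. This is only a sketch: `improvement` is a hypothetical helper, and the 10-batch case is approximated by the first ten values of the 15-batch run.

```python
# Errors copied from the 15-batch local run listed above.
errors = [
    4.517395047937127, 4.894265404350079, 3.0392090466559876,
    1.8786361640757658, 0.8973106042078115, 0.3715780507684368,
    0.20815690742907672, 0.17333033743125845, 0.15686783249863873,
    0.12584413600569616, 0.11883853835108477, 0.09400261862100823,
    0.08887491447353497, 0.05984929624986607, 0.07583948141520978,
]


def improvement(errs):
    """Hypothetical helper: the 2nd error minus the last error, as in the assertion."""
    return errs[1] - errs[-1]


margin_10 = improvement(errors[:10])  # first ten batches only
margin_15 = improvement(errors)       # all fifteen batches
print(margin_10 > 2, margin_15 > 2)
```

On these local numbers both margins are well above 2, so the listing does not by itself reproduce the Jenkins failure; the extra batches only give the model more time to converge.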

### Why are the changes needed?

Fix flaky test

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manually tested

Closes #29380 from huaxingao/flaky_test.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Huaxin Gao <huaxing@us.ibm.com>

### What changes were proposed in this pull request?

This PR proposes to write the main page of PySpark documentation. The base work is finished at https://github.com/apache/spark/pull/29188.

### Why are the changes needed?

For better usability and readability in PySpark documentation.

### Does this PR introduce _any_ user-facing change?

Yes, it creates a new main page for the PySpark documentation.

### How was this patch tested?

Manually built the PySpark documentation.

```bash

cd python

make clean html

```

Closes #29320 from HyukjinKwon/SPARK-32507.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Change casting of map and struct values to strings to use `{}` brackets instead of `[]`. The behavior is controlled by the SQL config `spark.sql.legacy.castComplexTypesToString.enabled`. When it is `true`, `CAST` wraps maps and structs in `[]` when casting to strings. When it is `false`, which is the default, maps and structs are wrapped in `{}`.
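To picture the difference, here is a plain-Python sketch. It is not Spark code: `struct_to_string` and `map_to_string` are hypothetical helpers, the `legacy` flag stands in for the SQL config, and the `key -> value` rendering of map entries is an assumption about the string form.

```python
def _brackets(legacy):
    """Pick the wrapping brackets: `[]` for the legacy behavior, `{}` otherwise."""
    return ("[", "]") if legacy else ("{", "}")


def struct_to_string(values, legacy=False):
    """Hypothetical helper: render struct field values as a string."""
    left, right = _brackets(legacy)
    return left + ", ".join(str(v) for v in values) + right


def map_to_string(mapping, legacy=False):
    """Hypothetical helper: render map entries as `key -> value` pairs."""
    left, right = _brackets(legacy)
    body = ", ".join("%s -> %s" % (k, v) for k, v in mapping.items())
    return left + body + right


print(struct_to_string([1, "a"]))               # new default: curly brackets
print(struct_to_string([1, "a"], legacy=True))  # legacy: square brackets
print(map_to_string({"k": 1}))
```

With curly brackets, a struct or map can no longer be mistaken for an array, which keeps its square brackets.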

### Why are the changes needed?

- To distinguish structs/maps from arrays.

- To make `show`'s output consistent with Hive and conversions to Hive strings.

- To display dataframe content in the same form by `spark-sql` and `show`

- To be consistent with the `*.sql` tests

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

By existing test suite `CastSuite`.

Closes #29308 from MaxGekk/show-struct-map.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a follow-up of #29278.

This PR renames the config that allows or disallows creating `SparkContext` in executors, as per the comment https://github.com/apache/spark/pull/29278#pullrequestreview-460256338.

### Why are the changes needed?

The config name `spark.executor.allowSparkContext` is more reasonable.

### Does this PR introduce _any_ user-facing change?

Yes, the config name is changed.

### How was this patch tested?

Updated tests.

Closes #29340 from ueshin/issues/SPARK-32160/change_config_name.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Set default param values in trait Params for feature and tuning in both Scala and Python.

### Why are the changes needed?

Make ML have the same default param values between an estimator and its corresponding transformer, and also between Scala and Python.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing and modified tests

Closes #29153 from huaxingao/default2.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Huaxin Gao <huaxing@us.ibm.com>

### What changes were proposed in this pull request?

This is a follow-up of #28986.

This PR adds a config that allows or disallows creating `SparkContext` in executors.

- `spark.driver.allowSparkContextInExecutors`

### Why are the changes needed?

Some users or libraries actually create `SparkContext` in executors.

We shouldn't break their workloads.

### Does this PR introduce _any_ user-facing change?

Yes, users will be able to create `SparkContext` in executors with the config enabled.

### How was this patch tested?

More tests are added.

Closes #29278 from ueshin/issues/SPARK-32160/add_configs.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes:

1. To introduce the `InheritableThread` class, which works identically to `threading.Thread` but can inherit the inheritable attributes of a JVM thread, such as `InheritableThreadLocal`.

This was a problem in pinned thread mode, see also https://github.com/apache/spark/pull/24898. Now it works as below:

```python
import pyspark

# `spark` is the SparkSession provided by the PySpark shell.
spark.sparkContext.setLocalProperty("a", "hi")

def print_prop():
    print(spark.sparkContext.getLocalProperty("a"))

pyspark.InheritableThread(target=print_prop).start()
```

```

hi

```

2. Also, it adds the resource leak fix to `InheritableThread`. Py4J leaks the thread and does not close the connection from Python to the JVM. `InheritableThread` manually closes the connections when Python VM (PVM) garbage collection happens, so JVM threads finish safely. I manually verified this by profiling, but there is also another easy way to verify:

```bash

PYSPARK_PIN_THREAD=true ./bin/pyspark

```

```python

>>> from threading import Thread

>>> Thread(target=lambda: spark.range(1000).collect()).start()

>>> Thread(target=lambda: spark.range(1000).collect()).start()

>>> Thread(target=lambda: spark.range(1000).collect()).start()

>>> spark._jvm._gateway_client.deque

deque([<py4j.clientserver.ClientServerConnection object at 0x119f7aba8>, <py4j.clientserver.ClientServerConnection object at 0x119fc9b70>, <py4j.clientserver.ClientServerConnection object at 0x119fc9e10>, <py4j.clientserver.ClientServerConnection object at 0x11a015358>, <py4j.clientserver.ClientServerConnection object at 0x119fc00f0>])

>>> Thread(target=lambda: spark.range(1000).collect()).start()

>>> spark._jvm._gateway_client.deque

deque([<py4j.clientserver.ClientServerConnection object at 0x119f7aba8>, <py4j.clientserver.ClientServerConnection object at 0x119fc9b70>, <py4j.clientserver.ClientServerConnection object at 0x119fc9e10>, <py4j.clientserver.ClientServerConnection object at 0x11a015358>, <py4j.clientserver.ClientServerConnection object at 0x119fc08d0>, <py4j.clientserver.ClientServerConnection object at 0x119fc00f0>])

```

This issue is fixed now.

3. Because now we have a fix for the issue here, it also proposes to deprecate `collectWithJobGroup` which was a temporary workaround added to avoid this leak issue.
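The inheritance behaviour described in item 1 can be pictured with a pure-Python analogy. This is a sketch under stated assumptions, not how `pyspark.InheritableThread` is implemented (that relies on the JVM's `InheritableThreadLocal`): `set_local_property`, `get_local_property`, and `InheritingThread` are hypothetical names, and the properties live in a `threading.local` instead of the JVM.

```python
import threading

# Hypothetical stand-in for per-thread local properties.
_local = threading.local()


def set_local_property(key, value):
    if not hasattr(_local, "props"):
        _local.props = {}
    _local.props[key] = value


def get_local_property(key):
    return getattr(_local, "props", {}).get(key)


class InheritingThread(threading.Thread):
    """Hypothetical thread that starts with a copy of its parent's properties."""

    def __init__(self, target, args=(), kwargs=None):
        # Snapshot taken in the parent thread, at construction time.
        inherited = dict(getattr(_local, "props", {}))

        def run_with_inherited_props(*a, **kw):
            # Runs in the child thread: install the snapshot, then call the target.
            _local.props = dict(inherited)
            return target(*a, **kw)

        super().__init__(target=run_with_inherited_props, args=args, kwargs=kwargs or {})


set_local_property("a", "hi")
seen = {}


def record(label):
    seen[label] = get_local_property("a")


for thread in (threading.Thread(target=record, args=("plain",)),
               InheritingThread(target=record, args=("inheriting",))):
    thread.start()
    thread.join()

print(seen)
```

A plain `threading.Thread` starts with empty thread-local state, while the inheriting variant sees the value set by its parent, which mirrors the difference the PR describes for local properties.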

### Why are the changes needed?

To support pinned thread mode properly, without a resource leak and with properly inheritable local properties.

### Does this PR introduce _any_ user-facing change?

Yes, it adds an API `InheritableThread` class for pinned thread mode.

### How was this patch tested?

Manually tested as described above, and a unit test was added as well.

Closes #28968 from HyukjinKwon/SPARK-32010.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add training summary to MultilayerPerceptronClassificationModel...

### Why are the changes needed?

So that users can get the training process status, such as the loss value of each iteration and the total number of iterations.

### Does this PR introduce _any_ user-facing change?

Yes

MultilayerPerceptronClassificationModel.summary

MultilayerPerceptronClassificationModel.evaluate

### How was this patch tested?

new tests

Closes #29250 from huaxingao/mlp_summary.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

1. Describe the JSON option `allowNonNumericNumbers` which is used in read

2. Add new test cases for allowed JSON field values: NaN, +INF, +Infinity, Infinity, -INF and -Infinity

### Why are the changes needed?

To improve UX with Spark SQL and to provide users full info about the supported option.

### Does this PR introduce _any_ user-facing change?

Yes, in PySpark.

### How was this patch tested?

Added new test to `JsonParsingOptionsSuite`

Closes #29275 from MaxGekk/allowNonNumericNumbers-doc.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Return an empty list instead of `None` when calling `df.head()` on an empty DataFrame.

### Why are the changes needed?

`df.head()` and `df.head(1)` are inconsistent when df is empty.

### Does this PR introduce _any_ user-facing change?

Yes. If a user relies on `df.head()` to return None, things like `if df.head() is None:` will be broken.

### How was this patch tested?

Closes #29214 from tianshizz/SPARK-31525.

Authored-by: Tianshi Zhu <zhutianshirea@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Fixes spacing in an error message

### Why are the changes needed?

Makes error messages easier to read

### Does this PR introduce _any_ user-facing change?

Yes, it changes the error message

### How was this patch tested?

This patch doesn't affect any logic, so existing tests should cover it

Closes #29264 from hauntsaninja/patch-1.

Authored-by: Shantanu <12621235+hauntsaninja@users.noreply.github.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Support setting off-heap memory in `ExecutorResourceRequests`.

### Why are the changes needed?

Support stage level scheduling

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT in `ResourceProfileSuite` and `DAGSchedulerSuite`

Closes #28972 from warrenzhu25/30794.

Authored-by: Warren Zhu <zhonzh@microsoft.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR removes the manual port of `heapq3.py` introduced in SPARK-3073. The main reason for the port was to support Python 2.6 and 2.7, because Python 2's `heapq.merge()` does not support `key` and `reverse`.

See

- https://docs.python.org/2/library/heapq.html#heapq.merge in Python 2

- https://docs.python.org/3.8/library/heapq.html#heapq.merge in Python 3

Since we dropped Python 2 in SPARK-32138, we can remove it.
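For reference, this is what the two keyword arguments look like with the standard-library `heapq.merge()` in Python 3. The sample data is made up for illustration and is unrelated to the inputs PySpark's shuffle code actually merges.

```python
import heapq

# Each run is already sorted by key, as heapq.merge() requires.
runs_by_key = [
    [("a", 1), ("c", 3)],
    [("b", 2), ("d", 4)],
]
merged = list(heapq.merge(*runs_by_key, key=lambda kv: kv[0]))

# With reverse=True, each input must be sorted from largest to smallest.
descending_runs = [[9, 5, 1], [8, 4]]
merged_desc = list(heapq.merge(*descending_runs, reverse=True))

print(merged)
print(merged_desc)
```

`heapq.merge()` returns a lazy iterator, so the sorted runs are merged without loading everything into memory at once.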

### Why are the changes needed?

To remove unnecessary code. Also, we can leverage the bug fixes made to `heapq` in Python 3.x.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Existing tests should cover this. I ran the following locally and verified:

```bash

./python/run-tests --python-executable=python3 --testname="pyspark.tests.test_shuffle"

./python/run-tests --python-executable=python3 --testname="pyspark.shuffle ExternalSorter"

./python/run-tests --python-executable=python3 --testname="pyspark.tests.test_rdd RDDTests.test_external_group_by_key"

```

Closes #29229 from HyukjinKwon/SPARK-32435.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to redesign the PySpark documentation.

I made a demo site to make it easier to review: https://hyukjin-spark.readthedocs.io/en/stable/reference/index.html.

Here is the initial draft for the final PySpark docs shape: https://hyukjin-spark.readthedocs.io/en/latest/index.html.

In more detail, this PR proposes:

1. Use [pydata_sphinx_theme](https://github.com/pandas-dev/pydata-sphinx-theme) theme - [pandas](https://pandas.pydata.org/docs/) and [Koalas](https://koalas.readthedocs.io/en/latest/) use this theme. The CSS overwrite is ported from Koalas. The colours in the CSS were actually chosen by designers to use in Spark.

2. Use the Sphinx option to separate `source` and `build` directories as the documentation pages will likely grow.

3. Port current API documentation into the new style. It mimics Koalas and pandas to use the theme most effectively.

One disadvantage of this approach is that APIs or classes have to be listed explicitly; however, I think this isn't a big issue in PySpark since we're being conservative about adding APIs. I also intentionally listed only classes instead of functions in ML and MLlib to make them relatively easier to manage.

### Why are the changes needed?

I often hear complaints from users that the current PySpark documentation (https://spark.apache.org/docs/latest/api/python/index.html) is pretty messy to read compared to other projects such as [pandas](https://pandas.pydata.org/docs/) and [Koalas](https://koalas.readthedocs.io/en/latest/).

It would be nicer if we can make it more organised instead of just listing all classes, methods and attributes to make it easier to navigate.

Also, the documentation has been there from almost the very first version of PySpark. Maybe it's time to update it.

### Does this PR introduce _any_ user-facing change?

Yes, PySpark API documentation will be redesigned.

### How was this patch tested?

Manually tested, and the demo site was made to show.

Closes #29188 from HyukjinKwon/SPARK-32179.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up of #29138, which added an overload of the `slice` function that accepts `Column` for `start` and `length` in Scala.

This PR is updating the equivalent Python function to accept `Column` as well.

### Why are the changes needed?

Now that Scala version accepts `Column`, Python version should also accept it.

### Does this PR introduce _any_ user-facing change?

Yes, PySpark users will also be able to pass `Column` objects to the `start` and `length` parameters of the `slice` function.

### How was this patch tested?

Added tests.

Closes #29195 from ueshin/issues/SPARK-32338/slice.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Adds `DataFrameWriterV2` class.

- Adds `writeTo` method to `pyspark.sql.DataFrame`.

- Adds related SQL partitioning functions (`years`, `months`, ..., `bucket`).

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added new unit tests.

TODO: Should we test against `org.apache.spark.sql.connector.InMemoryTableCatalog`? If so, how to expose it in Python tests?

Closes #27331 from zero323/SPARK-29157.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Use `/usr/bin/env python3` consistently instead of `/usr/bin/env python` in build scripts, to reliably select Python 3.

### Why are the changes needed?

Scripts no longer work with Python 2.

### Does this PR introduce _any_ user-facing change?

No, should be all build system changes.

### How was this patch tested?

Existing tests / NA

Closes #29151 from srowen/SPARK-29909.2.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade PySpark's embedded cloudpickle to the latest cloudpickle v1.5.0 (See https://github.com/cloudpipe/cloudpickle/blob/v1.5.0/cloudpickle/cloudpickle.py)

### Why are the changes needed?

There are many bug fixes. For example, the bug described in the JIRA:

dill unpickling fails because cloudpickle defines `types.ClassType`, which is undefined in dill. This results in the following error:

```
Traceback (most recent call last):
  File "/usr/local/lib/python3.6/site-packages/apache_beam/internal/pickler.py", line 279, in loads
    return dill.loads(s)
  File "/usr/local/lib/python3.6/site-packages/dill/_dill.py", line 317, in loads
    return load(file, ignore)
  File "/usr/local/lib/python3.6/site-packages/dill/_dill.py", line 305, in load
    obj = pik.load()
  File "/usr/local/lib/python3.6/site-packages/dill/_dill.py", line 577, in _load_type
    return _reverse_typemap[name]
KeyError: 'ClassType'
```

See also https://github.com/cloudpipe/cloudpickle/issues/82. This was fixed for cloudpickle 1.3.0+ (https://github.com/cloudpipe/cloudpickle/pull/337), but PySpark's cloudpickle.py doesn't have this change yet.

More notably, it now supports the C pickle implementation with Python 3.8, which hugely improves performance. This has already been adopted in other projects such as Ray.

### Does this PR introduce _any_ user-facing change?

Yes, the bug fixes described above. Internally, users can also leverage the fast cloudpickle backed by C pickle.

### How was this patch tested?

Jenkins will test it out.

Closes #29114 from HyukjinKwon/SPARK-32094.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Set default param values in trait ...Params in both Scala and Python.

I will do this in two PRs. I will change classification, regression, clustering and fpm in this PR. Will change the rest in another PR.

### Why are the changes needed?

Make ML have the same default param values between an estimator and its corresponding transformer, and also between Scala and Python.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests

Closes #29112 from huaxingao/set_default.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Huaxin Gao <huaxing@us.ibm.com>

### What changes were proposed in this pull request?

Add training summary for FMClassificationModel...

### Why are the changes needed?

So that users can get the training process status, such as the loss value of each iteration and the total number of iterations.

### Does this PR introduce _any_ user-facing change?

Yes

FMClassificationModel.summary

FMClassificationModel.evaluate

### How was this patch tested?

new tests

Closes #28960 from huaxingao/fm_summary.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Huaxin Gao <huaxing@us.ibm.com>

### What changes were proposed in this pull request?

This PR will remove references to these "blacklist" and "whitelist" terms besides the blacklisting feature as a whole, which can be handled in a separate JIRA/PR.

This touches quite a few files, but the changes are straightforward (variable/method/etc. name changes) and mostly self-contained.

### Why are the changes needed?

As per discussion on the Spark dev list, it will be beneficial to remove references to problematic language that can alienate potential community members. One such reference is "blacklist" and "whitelist". While it seems to me that there is some valid debate as to whether these terms have racist origins, the cultural connotations are inescapable in today's world.

### Does this PR introduce _any_ user-facing change?

In the test file `HiveQueryFileTest`, a developer has the ability to specify the system property `spark.hive.whitelist` to specify a list of Hive query files that should be tested. This system property has been renamed to `spark.hive.includelist`. The old property has been kept for compatibility, but will log a warning if used. I am open to feedback from others on whether keeping a deprecated property here is unnecessary given that this is just for developers running tests.
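The rename-with-fallback behaviour described above can be sketched as follows. This is an illustration in Python, not the code in `HiveQueryFileTest`: `resolve_include_list` is a hypothetical helper, and both the comma-separated value format and the precedence of the new name over the old one are assumptions.

```python
import warnings

NEW_KEY = "spark.hive.includelist"
OLD_KEY = "spark.hive.whitelist"  # deprecated name, kept for compatibility


def resolve_include_list(properties):
    """Hypothetical helper: read the list of query files, preferring the new name."""
    if NEW_KEY in properties:
        value = properties[NEW_KEY]
    elif OLD_KEY in properties:
        # The old name still works, but using it produces a warning.
        warnings.warn(
            "%s is deprecated; use %s instead" % (OLD_KEY, NEW_KEY),
            DeprecationWarning,
        )
        value = properties[OLD_KEY]
    else:
        return []
    # Assumption: the value is a comma-separated list of file names.
    return [name for name in value.split(",") if name]


print(resolve_include_list({NEW_KEY: "a.q,b.q"}))
```

Keeping the old key readable means existing developer workflows continue to work while the warning nudges them toward the new name.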

### How was this patch tested?

Existing tests should be suitable since no behavior changes are expected as a result of this PR.

Closes #28874 from xkrogen/xkrogen-SPARK-32036-rename-blacklists.

Authored-by: Erik Krogen <ekrogen@linkedin.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR aims to test PySpark with Python 3.8 in GitHub Actions. On the script side, it is already supported:

4ad9bfd53b/python/run-tests.py (L161)

This PR includes small related fixes together:

1. Install Python 3.8

2. Only install one Python implementation instead of installing many for SQL and Yarn test cases because they need one Python executable in their test cases that is higher than Python 2.

3. Do not install Python 2 which is not needed anymore after we dropped Python 2 at SPARK-32138

4. Remove a comment about installing PyPy3 on Jenkins - SPARK-32278. It is already installed.

### Why are the changes needed?

Currently, only PyPy3 and Python 3.6 are being tested with PySpark in GitHub Actions. We should test the latest version of Python as well because some optimizations can only be enabled with Python 3.8+. See also https://github.com/apache/spark/pull/29114

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Was not tested. Github Actions build in this PR will test it out.

Closes #29116 from HyukjinKwon/test-python3.8-togehter.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR proposes to port the test case from https://github.com/apache/spark/pull/29098 to branch-3.0 and master. In master and branch-3.0, this was fixed together at ecaa495b1f, but the no-partition case is not being tested.

### Why are the changes needed?

To improve test coverage.

### Does this PR introduce _any_ user-facing change?

No, test-only.

### How was this patch tested?

Unit test was forward-ported.

Closes #29099 from HyukjinKwon/SPARK-32300-1.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`datetime` is already imported a few lines below :)

ce27cc54c1/python/pyspark/sql/tests/test_pandas_udf_scalar.py (L24)

### Why are the changes needed?

This is the last instance of the duplicate import.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual.

Closes #29109 from Fokko/SPARK-32311.

Authored-by: Fokko Driesprong <fokko@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

While seeing if we can use mypy for checking the Python types, I've stumbled across this missing import:

34fa913311/python/pyspark/ml/feature.py (L5773-L5774)

### Why are the changes needed?

The `import` is required because it's used.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual.

Closes #29108 from Fokko/SPARK-32309.

Authored-by: Fokko Driesprong <fokko@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to drop Python 2.7, 3.4 and 3.5.

Roughly speaking, it removes all the widely known Python 2 compatibility workarounds such as `sys.version` comparisons and `__future__` imports. It also removes Python 2-specific code such as `ArrayConstructor` in Spark.

### Why are the changes needed?

1. Drop support for EOL Python versions.

2. Reduce maintenance overhead and remove some legacy code and hacks for Python 2.

3. PyPy2 has a critical bug that causes a flaky test, SPARK-28358, according to my testing and investigation.

4. Users can use Python type hints with Pandas UDFs without thinking about Python version

5. Users can leverage one latest cloudpickle, https://github.com/apache/spark/pull/28950. With Python 3.8+ it can also leverage C pickle.

### Does this PR introduce _any_ user-facing change?

Yes, users cannot use Python 2.7, 3.4 and 3.5 in the upcoming Spark version.

### How was this patch tested?

Manually tested and also tested in Jenkins.

Closes #28957 from HyukjinKwon/SPARK-32138.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to run the Spark tests in Github Actions.

To briefly explain the main idea:

- Reuse `dev/run-tests.py` with SBT build

- Reuse the modules in `dev/sparktestsupport/modules.py` to test each module

- Pass the modules to test into `dev/run-tests.py` directly via `TEST_ONLY_MODULES` environment variable. For example, `pyspark-sql,core,sql,hive`.

- `dev/run-tests.py` _does not_ take the dependent modules into account but solely the specified modules to test.
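The module selection described in the last two bullets can be sketched as below. This is not the code in `dev/run-tests.py`: `modules_to_test` is a hypothetical helper that only shows how a comma-separated `TEST_ONLY_MODULES` value could be turned into a list of module names, with no dependent modules added.

```python
import os


def modules_to_test(environ=os.environ):
    """Hypothetical helper: return exactly the modules named in TEST_ONLY_MODULES."""
    raw = environ.get("TEST_ONLY_MODULES", "")
    # Only the listed names are returned; dependent modules are not expanded.
    return [name.strip() for name in raw.split(",") if name.strip()]


selected = modules_to_test({"TEST_ONLY_MODULES": "pyspark-sql,core,sql,hive"})
print(selected)
```

Passing the environment as a parameter keeps the sketch easy to exercise without modifying the real process environment.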

Another thing to note is the `SlowHiveTest` annotation. Running the tests in the Hive modules takes too long, so the slow tests are extracted and run as a separate job. The slow tests were identified from the actual elapsed time in Jenkins.

So, Hive tests are separated into two jobs: one runs the slow test cases, and the other runs the remaining test cases.

_Note that_ the current GitHub Actions build virtually copies what the default PR builder on Jenkins does (without other profiles such as JDK 11, Hadoop 2, etc.). The only exception is Kinesis https://github.com/apache/spark/pull/29057/files#diff-04eb107ee163a50b61281ca08f4e4c7bR23

### Why are the changes needed?

Since last week, the Jenkins machines have become very unstable for many reasons:

- Apparently, the machines became extremely slow. Almost all tests can't pass.

- One machine (worker 4) started to have a corrupt `.m2`, which fails the build.

- The documentation build fails from time to time for an unknown reason, specifically on the Jenkins machines. This is disabled for now at https://github.com/apache/spark/pull/29017.

- Almost all PRs are basically blocked by this instability currently.

The advantages of using Github Actions:

- To avoid depending on the few people who have access to the cluster.

- To reduce the elapsed time of the build: we can split the tests (e.g., SQL, ML, CORE) and run them in parallel, so the total build time is significantly reduced.

- To control the environment more flexibly.

- Other contributors can test and propose to fix Github Actions configurations so we can distribute this build management cost.

Note that:

- The current build in Jenkins takes _more than 7 hours_. With Github actions it takes _less than 2 hours_

- We can now control the environments especially for Python easily.

- The tests and build look more stable than on Jenkins.

### Does this PR introduce _any_ user-facing change?

No, dev-only change.

### How was this patch tested?

Tested at https://github.com/HyukjinKwon/spark/pull/4

Closes #29057 from HyukjinKwon/migrate-to-github-actions.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Current problems:

```
mlp = MultilayerPerceptronClassifier(layers=[2, 2, 2], seed=123)
model = mlp.fit(df)
path = tempfile.mkdtemp()
model_path = path + "/mlp"
model.save(model_path)
model2 = MultilayerPerceptronClassificationModel.load(model_path)
self.assertEqual(model2.getSolver(), "l-bfgs")  # this fails because model2.getSolver() returns 'auto'
model2.transform(df)
# this fails with Exception pyspark.sql.utils.IllegalArgumentException: MultilayerPerceptronClassifier_dec859ed24ec parameter solver given invalid value auto.
```

FMClassifier/Regression and GeneralizedLinearRegression have the same problems.

Here is the root cause of the problems:

1. In HasSolver, both Scala and Python default solver to 'auto'

2. On Scala side, mlp overrides the default of solver to 'l-bfgs', FMClassifier/Regression overrides the default of solver to 'adamW', and glr overrides the default of solver to 'irls'

3. On the Scala side, mlp overrides the default of solver in MultilayerPerceptronClassificationParams, so both MultilayerPerceptronClassifier and MultilayerPerceptronClassificationModel have 'l-bfgs' as the default.

4. On the Python side, mlp overrides the default of solver in MultilayerPerceptronClassifier, so its default is 'l-bfgs', but MultilayerPerceptronClassificationModel doesn't override the default, so it gets the default from HasSolver, which is 'auto'. In theory, we don't care about the solver value or any other param values for MultilayerPerceptronClassificationModel, because we have the fitted model already. That's why on the Python side, we never set default values for any of the XXXModel.

5. When calling getSolver on the loaded mlp model, it calls this line of code underneath:

```
def _transfer_params_from_java(self):
    """
    Transforms the embedded params from the companion Java object.
    """
    ......
        # SPARK-14931: Only check set params back to avoid default params mismatch.
        if self._java_obj.isSet(java_param):
            value = _java2py(sc, self._java_obj.getOrDefault(java_param))
            self._set(**{param.name: value})
    ......
```

That's why model2.getSolver() returns 'auto'. The code doesn't get the default Scala value (in this case 'l-bfgs') to set to the Python param, so it takes the default value (in this case 'auto') on the Python side.

6. When calling model2.transform(df), it calls this underneath:

```
def _transfer_params_to_java(self):
    """
    Transforms the embedded params to the companion Java object.
    """
    ......
        if self.hasDefault(param):
            pair = self._make_java_param_pair(param, self._defaultParamMap[param])
            pair_defaults.append(pair)
    ......
```

Again, it gets the Python default solver, which is 'auto', and this causes the exception.

7. Currently, on Scala side, for some of the algorithms, we set default values in the XXXParam, so both estimator and transformer get the default value. However, for some of the algorithms, we only set default in estimators, and the XXXModel doesn't get the default value. On Python side, we never set defaults for the XXXModel. This causes the default value inconsistency.

8. My proposed solution: set default params in XXXParam for both Scala and Python, so both the estimator and transformer have the same default value for both Scala and Python. I currently only changed solver in this PR. If everyone is OK with the fix, I will change all the other params as well.
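The mismatch and the proposed fix can be pictured with a stripped-down Python sketch. None of these classes are PySpark's: `HasSolver` here is a toy mixin, and the `Mlp*` class names are hypothetical and only model where the default is declared.

```python
class HasSolver:
    """Toy shared mixin: the generic default for `solver` is 'auto'."""
    default_solver = "auto"

    def get_solver(self):
        return self.default_solver


# Before: only the estimator overrides the default, so the model falls back to 'auto'.
class MlpEstimatorBefore(HasSolver):
    default_solver = "l-bfgs"


class MlpModelBefore(HasSolver):
    pass


# After: the override lives in a shared params class used by both.
class MlpParams(HasSolver):
    default_solver = "l-bfgs"


class MlpEstimatorAfter(MlpParams):
    pass


class MlpModelAfter(MlpParams):
    pass


print(MlpModelBefore().get_solver(), MlpModelAfter().get_solver())
```

Declaring the default once, in the class that both the estimator and the model inherit from, removes the chance of the two drifting apart.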

I hope my explanation makes sense to you folks :)

### Why are the changes needed?

Fix bug

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

existing and new tests

Closes #29060 from huaxingao/solver_parity.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to partially revert the simple string in `NullType` changed at https://github.com/apache/spark/pull/28833: `NullType.simpleString` goes back from `unknown` to `null`.

### Why are the changes needed?

- Technically speaking, it's orthogonal to the issue itself, SPARK-20680.

- It needs some more discussion, see https://github.com/apache/spark/pull/28833#issuecomment-655277714

### Does this PR introduce _any_ user-facing change?

It reverts the user-facing changes made at https://github.com/apache/spark/pull/28833.

The simple string of `NullType` is back to `null`.

### How was this patch tested?

I just logically reverted. Jenkins should test it out.

Closes #29041 from HyukjinKwon/SPARK-20680.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR proposes to disallow creating `SparkContext` in executors, e.g., in UDFs.

### Why are the changes needed?

Currently executors can create a `SparkContext`, but they should not be able to:

```scala

sc.range(0, 1).foreach { _ =>
  new SparkContext(new SparkConf().setAppName("test").setMaster("local"))
}

```

### Does this PR introduce _any_ user-facing change?

Yes, users won't be able to create `SparkContext` in executors.

### How was this patch tested?

Added tests.

Closes #28986 from ueshin/issues/SPARK-32160/disallow_spark_context_in_executors.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a new PR to address the closed one, #17953.

1. support "void" primitive data type in the `AstBuilder`, point it to `NullType`

2. forbid creating tables with VOID/NULL column type

### Why are the changes needed?

1. Spark is incompatible with the Hive void type. When a Hive table schema contains a void type, `DESC table` throws an exception in Spark.

>hive> create table bad as select 1 x, null z from dual;

>hive> describe bad;

OK

x int

z void

In Spark 2.0.x, reading this table behaves normally:

>spark-sql> describe bad;

x int NULL

z void NULL

Time taken: 4.431 seconds, Fetched 2 row(s)

But in the latest Spark version, it fails with SparkException: Cannot recognize hive type string: void

>spark-sql> describe bad;

17/05/09 03:12:08 ERROR thriftserver.SparkSQLDriver: Failed in [describe bad]

org.apache.spark.SparkException: Cannot recognize hive type string: void

Caused by: org.apache.spark.sql.catalyst.parser.ParseException:

DataType void() is not supported.(line 1, pos 0)

== SQL ==

void

^^^

... 61 more

org.apache.spark.SparkException: Cannot recognize hive type string: void

2. Hive CTAS statements throw an error when the select clause has a NULL/VOID type column, since HIVE-11217.

In Spark, creating a table with a VOID/NULL column should throw a readable exception message. This includes:

- create data source table (using parquet, json, ...)

- create hive table (with or without stored as)

- CTAS

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Add unit tests

Closes #28833 from LantaoJin/SPARK-20680_COPY.

Authored-by: LantaoJin <jinlantao@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Improve the error message in test GroupedMapInPandasTests.test_grouped_over_window_with_key to show the incorrect values.

### Why are the changes needed?

This test failure has come up often in Arrow testing because it tests a struct with timestamp values through a Pandas UDF. The current error message is not helpful as it doesn't show the incorrect values, only that it failed. This change will instead raise an assertion error with the incorrect values on a failure.

Before:

```

======================================================================

FAIL: test_grouped_over_window_with_key (pyspark.sql.tests.test_pandas_grouped_map.GroupedMapInPandasTests)

----------------------------------------------------------------------

Traceback (most recent call last):

File "/spark/python/pyspark/sql/tests/test_pandas_grouped_map.py", line 588, in test_grouped_over_window_with_key

self.assertTrue(all([r[0] for r in result]))

AssertionError: False is not true

```

After:

```

======================================================================

ERROR: test_grouped_over_window_with_key (pyspark.sql.tests.test_pandas_grouped_map.GroupedMapInPandasTests)

----------------------------------------------------------------------

...

AssertionError: {'start': datetime.datetime(2018, 3, 20, 0, 0), 'end': datetime.datetime(2018, 3, 25, 0, 0)}, != {'start': datetime.datetime(2020, 3, 20, 0, 0), 'end': datetime.datetime(2020, 3, 25, 0, 0)}

```
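The difference between the two failure modes can be sketched as follows. This is a minimal illustration, not the code in the actual test: `check_windows` and the shapes of `rows` and `expected` are hypothetical, chosen only to show an assertion that carries the mismatching values in its message instead of a bare `False is not true`.

```python
import datetime


def check_windows(rows, expected):
    """Raise AssertionError naming the first mismatching window.

    `rows` and `expected` are hypothetical shapes for illustration:
    rows is a list of (key, window) pairs, expected maps key to window.
    """
    for key, window in rows:
        if window != expected[key]:
            raise AssertionError("{} != {}".format(window, expected[key]))


expected = {
    1: {"start": datetime.datetime(2018, 3, 20, 0, 0),
        "end": datetime.datetime(2018, 3, 25, 0, 0)},
}
rows = [
    (1, {"start": datetime.datetime(2020, 3, 20, 0, 0),
         "end": datetime.datetime(2020, 3, 25, 0, 0)}),
]

try:
    check_windows(rows, expected)
    message = None
except AssertionError as e:
    message = str(e)

print(message)
```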

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Improved existing test

Closes #28987 from BryanCutler/pandas-grouped-map-test-output-SPARK-32162.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

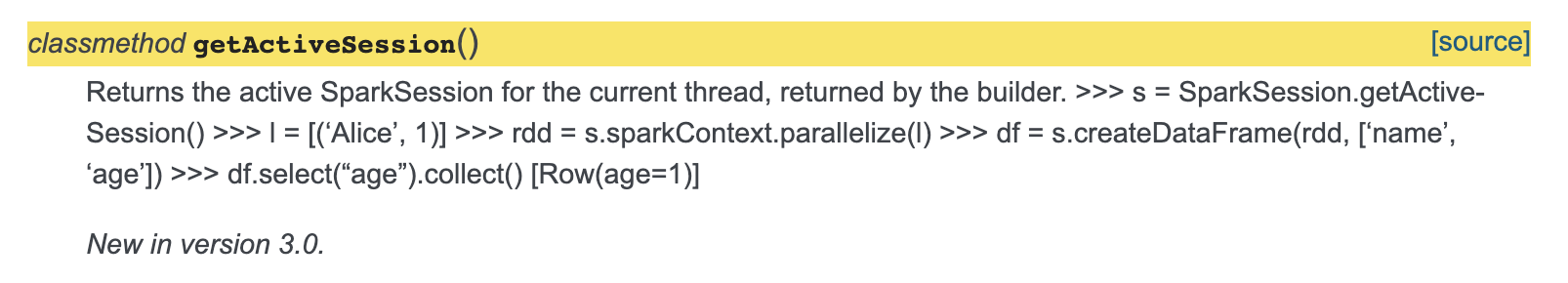

Minor fix to the documentation of `getActiveSession`.

The sample code snippet is not rendered correctly; a blank line is added to fix this.

A description of the return value is also added to the docs.

### Why are the changes needed?

The sample code is rendered as part of the description in the docs.

[Current Doc Link](http://spark.apache.org/docs/latest/api/python/pyspark.sql.html?highlight=getactivesession#pyspark.sql.SparkSession.getActiveSession)

### Does this PR introduce _any_ user-facing change?

Yes, documentation of getActiveSession is fixed.

And added description about return.

### How was this patch tested?

Adding a blank line between the description and the code seems to fix the issue.

Closes #28978 from animenon/docs_minor.

Authored-by: animenon <animenon@mail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add summary to RandomForestClassificationModel...

### Why are the changes needed?

So that users can get a summary of this classification model and retrieve common metrics such as accuracy, weightedTruePositiveRate, roc (for binary), pr curves (for binary), etc.

### Does this PR introduce _any_ user-facing change?

Yes

```

RandomForestClassificationModel.summary

RandomForestClassificationModel.evaluate

```

### How was this patch tested?

Add new tests

Closes #28913 from huaxingao/rf_summary.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Modify the example for `timestamp_seconds` and replace `collect()` by `show()`.

### Why are the changes needed?

The SQL config `spark.sql.session.timeZone` does not affect `collect` in the example. The code below demonstrates that:

```

$ export TZ="UTC"

```

```python

>>> from pyspark.sql.functions import timestamp_seconds

>>> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

>>> time_df = spark.createDataFrame([(1230219000,)], ['unix_time'])

>>> time_df.select(timestamp_seconds(time_df.unix_time).alias('ts')).collect()

[Row(ts=datetime.datetime(2008, 12, 25, 15, 30))]

```

The expected time is **07:30 but we get 15:30**.
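The two readings of the same instant can be checked with the standard library alone. This is a minimal sketch: fixed UTC offsets stand in for the named zones (an assumption of the sketch; `America/Los_Angeles` is UTC-8 in December, when DST is not in effect), and no Spark code is involved.

```python
from datetime import datetime, timedelta, timezone

unix_time = 1230219000  # same value as in the example above

# Fixed offsets stand in for the named zones (an assumption of this sketch):
# America/Los_Angeles is UTC-8 in December, when DST is not in effect.
utc = timezone.utc
los_angeles = timezone(timedelta(hours=-8))

in_utc = datetime.fromtimestamp(unix_time, tz=utc)
in_la = datetime.fromtimestamp(unix_time, tz=los_angeles)

print(in_utc.strftime("%Y-%m-%d %H:%M"))  # 2008-12-25 15:30
print(in_la.strftime("%Y-%m-%d %H:%M"))   # 2008-12-25 07:30
```

The 15:30 result matches what `collect()` returns when the Python process runs with `TZ="UTC"`, regardless of the session timezone.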

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running the modified example via:

```

$ ./python/run-tests --modules=pyspark-sql

```

Closes #28959 from MaxGekk/SPARK-32088-fix-timezone-issue-followup.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Set an American timezone during the `timestamp_seconds` doctest.

### Why are the changes needed?

The `timestamp_seconds` doctest in `functions.py` used the default timezone to get the expected result.

For example:

```python

>>> time_df = spark.createDataFrame([(1230219000,)], ['unix_time'])

>>> time_df.select(timestamp_seconds(time_df.unix_time).alias('ts')).collect()

[Row(ts=datetime.datetime(2008, 12, 25, 7, 30))]

```

But when we have a non-American timezone, the test case will get a different result.

For example, when we set the current timezone to `Asia/Shanghai`, the test result will be:

```

[Row(ts=datetime.datetime(2008, 12, 25, 23, 30))]

```

So no matter where we run the test case, we will always get the same expected result if we pin the timezone to one specific area.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test

Closes #28932 from GuoPhilipse/SPARK-32088-fix-timezone-issue.

Lead-authored-by: GuoPhilipse <46367746+GuoPhilipse@users.noreply.github.com>

Co-authored-by: GuoPhilipse <guofei_ok@126.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Add training summary for LinearSVCModel......

### Why are the changes needed?

So that users can get the training process status, such as the loss value of each iteration and the total iteration number.

### Does this PR introduce _any_ user-facing change?

Yes

```LinearSVCModel.summary```

```LinearSVCModel.evaluate```

### How was this patch tested?

new tests

Closes #28884 from huaxingao/svc_summary.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Add the support measure to association rules in Spark ml.fpm.

### Why are the changes needed?

Support is an indication of how frequently the itemset of an association rule appears in the database and suggests whether the rules are generally applicable to the dataset. Refer to [wiki](https://en.wikipedia.org/wiki/Association_rule_learning#Support) for more details.
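As a rough illustration of the measure, here is a plain-Python sketch that computes support over a toy transaction database. The data and the `support` helper are hypothetical, and the rule's support is taken here to be the fraction of transactions containing both the antecedent and the consequent; this is not the ml.fpm implementation.

```python
def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


# Hypothetical toy database of four transactions.
transactions = [
    {"bread", "milk"},
    {"bread", "milk", "eggs"},
    {"bread"},
    {"milk", "eggs"},
]

# Support of the rule {bread} => {milk}: transactions containing both sides.
antecedent, consequent = {"bread"}, {"milk"}
rule_support = support(antecedent | consequent, transactions)
print(rule_support)  # 0.5
```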

### Does this PR introduce _any_ user-facing change?

Yes. Association rules now have a support measure.

### How was this patch tested?

existing and new unit test

Closes #28903 from huaxingao/fpm.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

When you use floats as the index of a pandas DataFrame, it creates a Spark DataFrame with wrong results, as below, when Arrow is enabled:

```bash

./bin/pyspark --conf spark.sql.execution.arrow.pyspark.enabled=true

```

```python

>>> import pandas as pd

>>> spark.createDataFrame(pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.])).show()

+---+

| a|

+---+

| 1|

| 1|

| 2|

+---+

```

This is because direct slicing uses the value as index when the index contains floats:

```python

>>> pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.])[2:]

a

2.0 1

3.0 2

4.0 3

>>> pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.]).iloc[2:]

a

4.0 3

>>> pd.DataFrame({'a': [1,2,3]}, index=[2, 3, 4])[2:]

a

4 3

```

This PR proposes to explicitly use `iloc` to slice positionally when we create a DataFrame from a pandas DataFrame with Arrow enabled.

FWIW, I was trying to investigate why direct slicing sometimes refers to the index value and sometimes to the positional index, but I stopped investigating further after reading this https://pandas.pydata.org/pandas-docs/stable/getting_started/10min.html#selection

> While standard Python / Numpy expressions for selecting and setting are intuitive and come in handy for interactive work, for production code, we recommend the optimized pandas data access methods, `.at`, `.iat`, `.loc` and `.iloc`.

### Why are the changes needed?

To create the correct Spark DataFrame from a pandas DataFrame without data loss.

### Does this PR introduce _any_ user-facing change?

Yes, it is a bug fix.

```bash

./bin/pyspark --conf spark.sql.execution.arrow.pyspark.enabled=true

```

```python

import pandas as pd

spark.createDataFrame(pd.DataFrame({'a': [1,2,3]}, index=[2., 3., 4.])).show()

```

Before:

```

+---+

| a|

+---+

| 1|

| 1|

| 2|

+---+

```

After:

```

+---+

| a|

+---+

| 1|

| 2|

| 3|

+---+

```

### How was this patch tested?

Manually tested, and unit tests were added.

Closes #28928 from HyukjinKwon/SPARK-32098.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

### What changes were proposed in this pull request?

Add a generic ClassificationSummary trait

### Why are the changes needed?

Add a generic ClassificationSummary trait so all the classification models can use it to implement summary.

Currently in classification, we only have summary implemented in ```LogisticRegression```. There are requests to implement summary for ```LinearSVCModel``` in https://issues.apache.org/jira/browse/SPARK-20249 and to implement summary for ```RandomForestClassificationModel``` in https://issues.apache.org/jira/browse/SPARK-23631. If we add a generic ClassificationSummary trait and put all the common code there, we can easily add summary to ```LinearSVCModel``` and ```RandomForestClassificationModel```, and also add summary to all the other classification models.

We can use the same approach to add a generic RegressionSummary trait to regression package and implement summary for all the regression models.
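The idea of putting the common code in one place can be sketched in Python with a shared base class. All class names below are hypothetical illustrations of the pattern, not the Scala trait added by this PR, and only a single metric is shown.

```python
class ClassificationSummary:
    """Hypothetical base class holding metrics common to all classifiers."""

    def __init__(self, predictions_and_labels):
        self.predictions_and_labels = list(predictions_and_labels)

    @property
    def accuracy(self):
        pairs = self.predictions_and_labels
        return sum(1 for p, l in pairs if p == l) / len(pairs)

    @property
    def labels(self):
        return sorted({l for _, l in self.predictions_and_labels})


class LinearSVCSummary(ClassificationSummary):
    """Model-specific summary; inherits the shared metrics unchanged."""


class RandomForestClassificationSummary(ClassificationSummary):
    """Another model-specific summary reusing the same code."""


summary = LinearSVCSummary([(1.0, 1.0), (0.0, 1.0), (0.0, 0.0), (1.0, 1.0)])
print(summary.accuracy, summary.labels)  # 0.75 [0.0, 1.0]
```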

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

existing tests

Closes #28710 from huaxingao/summary_trait.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to remove the warning about multiple threads in local properties, and to change the guide to recommend `collectWithJobGroup` for multi-threaded use for now, because:

- It is too noisy for users who don't use multiple threads; the single-threaded case is arguably more common.

- A critical issue was found in pin-thread mode (SPARK-32010), which will be fixed in Spark 3.1.

- To migrate smoothly, `RDD.collectWithJobGroup` was added; it will be deprecated in Spark 3.1 once SPARK-32010 is fixed.

I will target deprecating `RDD.collectWithJobGroup` and making pin-thread mode stable in Spark 3.1. In future releases, I plan to make this mode the default and remove `RDD.collectWithJobGroup`.

### Why are the changes needed?

To avoid guiding users to a feature with a critical issue, and to provide a proper workaround for now.

### Does this PR introduce _any_ user-facing change?

Yes, warning message and documentation.

### How was this patch tested?

Manually tested:

Before:

```

>>> spark.sparkContext.setLocalProperty("a", "b")

/.../spark/python/pyspark/util.py:141: UserWarning: Currently, 'setLocalProperty' (set to local

properties) with multiple threads does not properly work.

Internally threads on PVM and JVM are not synced, and JVM thread can be reused for multiple

threads on PVM, which fails to isolate local properties for each thread on PVM.

To work around this, you can set PYSPARK_PIN_THREAD to true (see SPARK-22340). However,

note that it cannot inherit the local properties from the parent thread although it isolates each

thread on PVM and JVM with its own local properties.

To work around this, you should manually copy and set the local properties from the parent thread

to the child thread when you create another thread.

```

After:

```

>>> spark.sparkContext.setLocalProperty("a", "b")

```

Closes #28845 from HyukjinKwon/SPARK-32011.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch adds user-specified fold column support to `CrossValidator`. Users can assign fold numbers to the dataset instead of letting Spark do random splits.

### Why are the changes needed?

This gives `CrossValidator` users more flexibility in splitting folds.

### Does this PR introduce _any_ user-facing change?

Yes, a new `foldCol` param is added to `CrossValidator`. Users can use it to specify custom fold splitting.
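To illustrate what splitting by a fold column means, here is a plain-Python sketch. It assumes fold numbers are integers in the range 0 to `num_folds - 1`; `split_by_fold` and the row layout are hypothetical, and this is not the `CrossValidator` implementation.

```python
def split_by_fold(rows, fold_col, num_folds):
    """Yield (training, validation) pairs, one per fold number."""
    for fold in range(num_folds):
        validation = [r for r in rows if r[fold_col] == fold]
        training = [r for r in rows if r[fold_col] != fold]
        yield training, validation


# Hypothetical dataset where the user has assigned each row to a fold.
rows = [
    {"x": 1.0, "fold": 0},
    {"x": 2.0, "fold": 1},
    {"x": 3.0, "fold": 2},
    {"x": 4.0, "fold": 0},
]
splits = list(split_by_fold(rows, "fold", num_folds=3))
for training, validation in splits:
    print(len(training), len(validation))
```

Each row lands in the validation set exactly once, in the fold the user assigned, rather than in a randomly chosen one.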

### How was this patch tested?

Added unit tests.

Closes #28704 from viirya/SPARK-31777.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <liangchi@uber.com>

## What changes were proposed in this pull request?

Casting from numeric to timestamp now fails by default.

## Why are the changes needed?

Casting from numeric to timestamp is non-standard; it may also produce different results between Spark and other systems, for example Hive.

## Does this PR introduce any user-facing change?

Yes. Users cannot cast numeric to timestamp directly; they have to use one of the following functions to achieve the same effect: TIMESTAMP_SECONDS/TIMESTAMP_MILLIS/TIMESTAMP_MICROS.
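The reason an explicit function is less ambiguous than a bare cast is that the unit of the number becomes part of the call. The plain-Python sketch below mirrors the three SQL function names with hypothetical Python helpers; it only shows the unit handling and is not Spark code.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


# Hypothetical Python helpers named after the SQL functions above.
def timestamp_seconds(n):
    return EPOCH + timedelta(seconds=n)


def timestamp_millis(n):
    return EPOCH + timedelta(milliseconds=n)


def timestamp_micros(n):
    return EPOCH + timedelta(microseconds=n)


# The same instant expressed in three different units.
a = timestamp_seconds(1230219000)
b = timestamp_millis(1230219000000)
c = timestamp_micros(1230219000000000)
print(a.isoformat())  # 2008-12-25T15:30:00+00:00
```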

## How was this patch tested?

unit test added

Closes #28593 from GuoPhilipse/31710-fix-compatibility.

Lead-authored-by: GuoPhilipse <guofei_ok@126.com>

Co-authored-by: GuoPhilipse <46367746+GuoPhilipse@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In LogisticRegression and LinearRegression, if maxIter=n is set, model.summary.totalIterations returns n+1 when the training procedure does not terminate early. This is because we use ```objectiveHistory.length``` as totalIterations, but ```objectiveHistory``` contains the initial state, so ```objectiveHistory.length``` is 1 larger than the number of training iterations.
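The off-by-one can be reproduced with a toy optimizer. The sketch below is a hypothetical gradient-descent loop in plain Python, not the Spark optimizer; it only shows why a history that records the initial loss has one more entry than the number of iterations.

```python
def train(max_iter, lr=0.1):
    """Minimise f(w) = (w - 3)^2 by gradient descent, recording the loss."""
    w = 0.0
    objective_history = [(w - 3.0) ** 2]  # initial state, before any iteration
    for _ in range(max_iter):
        w -= lr * 2.0 * (w - 3.0)
        objective_history.append((w - 3.0) ** 2)
    return objective_history


history = train(max_iter=5)
total_iterations = len(history) - 1  # exclude the initial-state entry
print(len(history), total_iterations)  # 6 5
```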

### Why are the changes needed?

correctness

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

add new tests and also modify existing tests

Closes #28786 from huaxingao/summary_iter.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This is similar to 64cb6f7066

The test `StreamingLogisticRegressionWithSGDTests.test_training_and_prediction` also seems flaky. This PR likewise just increases the timeout to 3 minutes. The cause is very likely the elapsed time.

See https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/123787/testReport/pyspark.mllib.tests.test_streaming_algorithms/StreamingLogisticRegressionWithSGDTests/test_training_and_prediction/

```

Traceback (most recent call last):

File "/home/jenkins/workspace/SparkPullRequestBuilder2/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 330, in test_training_and_prediction

eventually(condition, timeout=60.0)

File "/home/jenkins/workspace/SparkPullRequestBuilder2/python/pyspark/testing/utils.py", line 90, in eventually

% (timeout, lastValue))

AssertionError: Test failed due to timeout after 60 sec, with last condition returning: Latest errors: 0.67, 0.71, 0.78, 0.7, 0.75, 0.74, 0.73, 0.69, 0.62, 0.71, 0.69, 0.75, 0.72, 0.77, 0.71, 0.74, 0.76, 0.78, 0.7, 0.78, 0.8, 0.74, 0.77, 0.75, 0.76, 0.76, 0.75

```
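The role of the timeout can be seen in a simplified polling helper. The function below is a hypothetical, cut-down sketch written for this illustration; it is not the real `eventually` in `pyspark/testing/utils.py`, whose behaviour may differ in details.

```python
import time


def eventually(condition, timeout=30.0, interval=0.01):
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Simplified, hypothetical helper; not the real pyspark.testing.utils code.
    """
    start = time.time()
    last_value = None
    while time.time() - start < timeout:
        last_value = condition()
        if last_value is True:
            return
        time.sleep(interval)
    raise AssertionError(
        "Test failed due to timeout after %g sec, with last condition returning: %s"
        % (timeout, last_value))


calls = []


def condition():
    calls.append(1)
    # Becomes true on the third poll, standing in for a slowly converging model.
    return True if len(calls) >= 3 else "not yet"


eventually(condition, timeout=5.0)
print(len(calls))  # 3
```

A condition that would eventually hold still fails the test if the timeout expires first, which is why a longer timeout can stabilise a slow but correct test.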

### Why are the changes needed?

To make PR builds more stable.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Jenkins will test them out.

Closes #28798 from HyukjinKwon/SPARK-31966.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR proposes to move the doctests in `registerJavaUDAF` and `registerJavaFunction` to the proper unittests that run conditionally when the test classes are present.

Both tests are dependent on the test classes in JVM side, `test.org.apache.spark.sql.JavaStringLength` and `test.org.apache.spark.sql.MyDoubleAvg`. So if you run the tests against the plain `sbt package`, it fails as below:

```

**********************************************************************

File "/.../spark/python/pyspark/sql/udf.py", line 366, in pyspark.sql.udf.UDFRegistration.registerJavaFunction

Failed example:

spark.udf.registerJavaFunction(

"javaStringLength", "test.org.apache.spark.sql.JavaStringLength", IntegerType())

Exception raised:

Traceback (most recent call last):

...

test.org.apache.spark.sql.JavaStringLength, please make sure it is on the classpath;

...

6 of 7 in pyspark.sql.udf.UDFRegistration.registerJavaFunction

2 of 4 in pyspark.sql.udf.UDFRegistration.registerJavaUDAF

***Test Failed*** 8 failures.

```
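Running a test only when its dependencies are present can be sketched with the standard `unittest.skipIf` decorator. The flag and the test class below are hypothetical; a real check would look for the compiled JVM test classes instead of a hard-coded value.

```python
import unittest

# Hypothetical flag; a real check would look for the compiled JVM test classes.
test_classes_present = False


class JavaUDFTests(unittest.TestCase):
    @unittest.skipIf(not test_classes_present, "JVM test classes are not compiled")
    def test_register_java_function(self):
        # Would register and call the Java UDF here.
        self.fail("should be skipped when the test classes are absent")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(JavaUDFTests)
result = unittest.TestResult()
suite.run(result)
print(len(result.skipped), len(result.failures))  # 1 0
```

Unlike a doctest, which always runs, the skipped unit test is reported as skipped rather than failed when the classes are missing.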

### Why are the changes needed?

To support running the tests against the plain SBT build. See also https://spark.apache.org/developer-tools.html

### Does this PR introduce _any_ user-facing change?

No, it's test-only.

### How was this patch tested?

Manually tested as below:

```bash

./build/sbt -DskipTests -Phive-thriftserver clean package

cd python

./run-tests --python-executable=python3 --testname="pyspark.sql.udf UserDefinedFunction"

./run-tests --python-executable=python3 --testname="pyspark.sql.tests.test_udf UDFTests"

```

```bash

./build/sbt -DskipTests -Phive-thriftserver clean test:package

cd python

./run-tests --python-executable=python3 --testname="pyspark.sql.udf UserDefinedFunction"

./run-tests --python-executable=python3 --testname="pyspark.sql.tests.test_udf UDFTests"

```

Closes #28795 from HyukjinKwon/SPARK-31965.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

When using pyarrow to convert a Pandas categorical column, use `is_categorical` instead of trying to import `CategoricalDtype`

### Why are the changes needed?

The import location for `CategoricalDtype` changed between Pandas 0.23 and 1.0, and pyspark currently tries both locations. Using `is_categorical` is a more stable API.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests

Closes #28793 from BryanCutler/arrow-use-is_categorical-SPARK-31964.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is another approach to fix the issue. See the previous attempt at https://github.com/apache/spark/pull/28745. It was too invasive, so I took a more conservative approach.

This PR proposes to resolve grouping attributes separately first so it can be properly referred when `FlatMapGroupsInPandas` and `FlatMapCoGroupsInPandas` are resolved without ambiguity.

Previously,

```python

from pyspark.sql.functions import *

df = spark.createDataFrame([[1, 1]], ["column", "Score"])

@pandas_udf("column integer, Score float", PandasUDFType.GROUPED_MAP)
def my_pandas_udf(pdf):
    return pdf.assign(Score=0.5)

df.groupby('COLUMN').apply(my_pandas_udf).show()

```

failed as below:

```

pyspark.sql.utils.AnalysisException: "Reference 'COLUMN' is ambiguous, could be: COLUMN, COLUMN.;"

```

because the unresolved `COLUMN` in `FlatMapGroupsInPandas` doesn't know which reference to take from the child projection.

After this fix, the child projection is resolved first with the grouping keys, and the attribute that is positionally selected from the child projection is passed to `FlatMapGroupsInPandas` as the grouping key.

### Why are the changes needed?

To resolve grouping keys correctly.

### Does this PR introduce _any_ user-facing change?

Yes,

```python

from pyspark.sql.functions import *

df = spark.createDataFrame([[1, 1]], ["column", "Score"])

@pandas_udf("column integer, Score float", PandasUDFType.GROUPED_MAP)
def my_pandas_udf(pdf):
    return pdf.assign(Score=0.5)

df.groupby('COLUMN').apply(my_pandas_udf).show()

```

```python

df1 = spark.createDataFrame([(1, 1)], ("column", "value"))
df2 = spark.createDataFrame([(1, 1)], ("column", "value"))

df1.groupby("COLUMN").cogroup(
    df2.groupby("COLUMN")
).applyInPandas(lambda r, l: r + l, df1.schema).show()

```

Before:

```

pyspark.sql.utils.AnalysisException: Reference 'COLUMN' is ambiguous, could be: COLUMN, COLUMN.;

```

```

pyspark.sql.utils.AnalysisException: cannot resolve '`COLUMN`' given input columns: [COLUMN, COLUMN, value, value];;

'FlatMapCoGroupsInPandas ['COLUMN], ['COLUMN], <lambda>(column#9L, value#10L, column#13L, value#14L), [column#22L, value#23L]

:- Project [COLUMN#9L, column#9L, value#10L]

: +- LogicalRDD [column#9L, value#10L], false

+- Project [COLUMN#13L, column#13L, value#14L]

+- LogicalRDD [column#13L, value#14L], false

```

After:

```

+------+-----+

|column|Score|

+------+-----+

| 1| 0.5|

+------+-----+

```

```

+------+-----+

|column|value|

+------+-----+

| 2| 2|

+------+-----+

```

### How was this patch tested?

Unit tests were added, and the change was manually tested.

Closes #28777 from HyukjinKwon/SPARK-31915-another.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>