This change modifies the behavior of the delegation token code when running

on YARN, so that the driver controls the renewal, in both client and cluster

mode. For that, a few different things were changed:

* The AM code only runs code that needs DTs when DTs are available.

In a way, this restores the AM behavior to what it was pre-SPARK-23361, but

keeping the fix added in that bug. Basically, all the AM code is run in a

"UGI.doAs()" block; but code that needs to talk to HDFS (basically the

distributed cache handling code) was delayed to the point where the driver

is up and running, and thus when valid delegation tokens are available.

* SparkSubmit / ApplicationMaster now handle user login, not the token manager.

The previous AM code was relying on the token manager to keep the user

logged in when keytabs are used. This required some odd APIs in the token

manager and the AM so that the right UGI was exposed and used in the right

places.

After this change, the logged in user is handled separately from the token

manager, so the API was cleaned up, and, as explained above, the whole AM

runs under the logged in user, which also helps with simplifying some more code.

* Distributed cache configs are sent separately to the AM.

Because of the delayed initialization of the cached resources in the AM, it

became easier to write the cache config to a separate properties file instead

of bundling it with the rest of the Spark config. This also avoids having

to modify the SparkConf to hide things from the UI.

* Finally, the AM doesn't manage the token manager anymore.

The above changes allow the token manager to be completely handled by the

driver's scheduler backend code also in YARN mode (whether client or cluster),

making it similar to other RMs. To maintain the fix added in SPARK-23361 also

in client mode, the AM now sends an extra message to the driver on initialization

to fetch delegation tokens; and although it might not really be needed, the

driver also keeps the running AM updated when new tokens are created.

Tested in a kerberized cluster with the same tests used to validate SPARK-23361,

in both client and cluster mode. Also tested with a non-kerberized cluster.

Closes #23338 from vanzin/SPARK-25689.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

When acquiring unroll memory from `StaticMemoryManager`, fail fast if the required space exceeds the memory limit, just as is done when acquiring storage memory.

This may reduce computation and memory eviction costs, especially when the required space (`numBytes`) is very large.
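
The fail-fast idea can be sketched as follows. This is a minimal, hypothetical model and not the actual `StaticMemoryManager` code: the class `UnrollPoolSketch`, its fields, and the simplified eviction step are assumptions made purely for illustration.

```java
/** Hypothetical, simplified model of a fixed-size unroll memory pool. */
final class UnrollPoolSketch {
    private final long maxUnrollBytes; // assumed fixed limit for unroll memory
    private long usedBytes = 0L;
    private int evictionAttempts = 0;  // counts how often eviction was attempted

    UnrollPoolSketch(long maxUnrollBytes) {
        this.maxUnrollBytes = maxUnrollBytes;
    }

    synchronized boolean acquireUnrollMemory(long numBytes) {
        if (numBytes < 0) {
            throw new IllegalArgumentException("numBytes must be non-negative");
        }
        // Fail fast: a request larger than the whole limit can never succeed,
        // so return before doing any eviction work.
        if (numBytes > maxUnrollBytes) {
            return false;
        }
        if (usedBytes + numBytes > maxUnrollBytes) {
            // Stand-in for evicting cached blocks; here it simply frees everything.
            evictionAttempts++;
            usedBytes = 0L;
        }
        usedBytes += numBytes;
        return true;
    }

    synchronized int evictionAttempts() {
        return evictionAttempts;
    }
}
```

With a limit of 100 bytes, a request for 1000 bytes is rejected without touching the eviction path, while two requests of 60 bytes both succeed after one eviction.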

## How was this patch tested?

Existing unit tests.

Closes #23426 from SongYadong/acquireUnrollMemory_fail_fast.

Authored-by: SongYadong <song.yadong1@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR upgrades Mockito from 1.10.19 to 2.23.4. The following changes are required.

- Replace `org.mockito.Matchers` with `org.mockito.ArgumentMatchers`

- Replace `anyObject` with `any`

- Replace `getArgumentAt` with `getArgument` and add type annotation.

- Use the `isNull` matcher when the method is invoked with `null`.

```scala

saslHandler.channelInactive(null);

- verify(handler).channelInactive(any(TransportClient.class));

+ verify(handler).channelInactive(isNull());

```

- Make and use `doReturn` wrapper to avoid [SI-4775](https://issues.scala-lang.org/browse/SI-4775)

```scala

private def doReturn(value: Any) = org.mockito.Mockito.doReturn(value, Seq.empty: _*)

```

## How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #23452 from dongjoon-hyun/SPARK-26536.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.driver`, `spark.executor`, and `spark.cores.max` configs use `ConfigEntry`.

Note that some config keys are taken from `SparkLauncher` instead of being defined in the config package object, because the strings are already defined there and `SparkLauncher` does not depend on the core module.

## How was this patch tested?

Existing tests.

Closes #23415 from ueshin/issues/SPARK-26445/hardcoded_driver_executor_configs.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR makes the hardcoded configs below use `ConfigEntry`.

* spark.pyspark

* spark.python

* spark.r

This patch doesn't change configs that are not relevant to SparkConf (e.g. system properties, Python source code).

## How was this patch tested?

Existing tests.

Closes #23428 from HeartSaVioR/SPARK-26489.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Some earlier tests in `SparkSubmitSuite` override SparkSubmit's `exitFn`, which can cause subsequent tests to pass even though they fail when run separately. This PR fixes the problem.

## How was this patch tested?

unittest

Closes #23404 from liupc/Fix-SparkSubmitSuite-exitFn.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This should make tests in core modules pass for Java 11.

## How was this patch tested?

Existing tests, with modifications.

Closes #23419 from srowen/Java11.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR addresses warning messages in Java files reported at [lgtm.com](https://lgtm.com).

[lgtm.com](https://lgtm.com) provides automated code review of Java/Python/JavaScript files for OSS projects. [Here](https://lgtm.com/projects/g/apache/spark/alerts/?mode=list&severity=warning) are the warning messages for the Apache Spark project.

This PR addresses the following warnings:

- Result of multiplication cast to wider type

- Implicit narrowing conversion in compound assignment

- Boxed variable is never null

- Useless null check

NOTE: `Potential input resource leak` looks like a false positive for now.

## How was this patch tested?

Existing UTs

Closes #23420 from kiszk/SPARK-26508.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Error messages in the delegation token providers are logged at inconsistent

levels (DEBUG, INFO, WARNING).

Given that failing to obtain a token often crashes the query, it makes

sense to log these error messages at a higher level.

## How was this patch tested?

The patch just changed the log level.

Closes #23418 from HeartSaVioR/FIX-inconsistency-log-level-between-delegation-token-providers.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR makes the hardcoded `spark.eventLog` configs use `ConfigEntry` and puts them in the `config` package.

## How was this patch tested?

existing tests

Closes #23395 from mgaido91/SPARK-26470.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In the method `taskList` (since https://github.com/apache/spark/pull/21688), the executor log value is queried from the KV store for every task (in the method `constructTaskData`).

This PR proposes using a hash map to reduce duplicated KV store lookups in the method.
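
A minimal sketch of the caching idea, under stated assumptions: `ExecutorLogCacheSketch`, `logsForTasks`, and the `storeLookup` function are hypothetical names standing in for the task list code and the KV store query, which are not shown in this message.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

final class ExecutorLogCacheSketch {
    /**
     * Resolves the log entry for each task's executor. storeLookup is a
     * stand-in for the KV store query and is called at most once per distinct
     * executor id (as long as it returns a non-null value).
     */
    static List<String> logsForTasks(List<String> taskExecutorIds,
                                     Function<String, String> storeLookup) {
        Map<String, String> cache = new HashMap<>();
        List<String> logs = new ArrayList<>();
        for (String executorId : taskExecutorIds) {
            // computeIfAbsent only invokes storeLookup on a cache miss.
            logs.add(cache.computeIfAbsent(executorId, storeLookup));
        }
        return logs;
    }
}
```

For five tasks spread over two executors, the lookup function runs twice instead of five times.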

## How was this patch tested?

Manual check

Closes #23310 from gengliangwang/removeExecutorLog.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR makes the hardcoded "spark.history" configs use `ConfigEntry` and puts them in the `History` config object.

## How was this patch tested?

Existing tests.

Closes #23384 from ueshin/issues/SPARK-26443/hardcoded_history_configs.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Add docs describing how the removal policy behaves with respect to the property `spark.dynamicAllocation.cachedExecutorIdleTimeout` in `ExecutorAllocationManager`.

## How was this patch tested?

comment-only PR.

Closes #23386 from TopGunViper/SPARK-26446.

Authored-by: wuqingxin <wuqingxin@baidu.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

…leAccumulator

## What changes were proposed in this pull request?

This PR implements metric sources for LongAccumulator and DoubleAccumulator, such that a user can register these accumulators easily and have their values be reported by the driver's metric namespace.

## How was this patch tested?

Unit tests, and manual tests.

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #23242 from abellina/SPARK-26285_accumulator_source.

Lead-authored-by: Alessandro Bellina <abellina@yahoo-inc.com>

Co-authored-by: Alessandro Bellina <abellina@oath.com>

Co-authored-by: Alessandro Bellina <abellina@gmail.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

Recently, the ability to expose the metrics for YARN Shuffle Service was added as part of [SPARK-18364](https://github.com/apache/spark/pull/22485). We need to add some metrics to be able to determine the number of active connections as well as open connections to the external shuffle service to benchmark network and connection issues on large cluster environments.

Added two more shuffle server metrics for the Spark YARN shuffle service: `numRegisteredConnections`, which indicates the number of registered connections to the shuffle service, and `numActiveConnections`, which indicates the number of active connections to the shuffle service at any given point in time.

If these metrics are outputted to a file, we get something like this:

1533674653489 default.shuffleService: Hostname=server1.abc.com, openBlockRequestLatencyMillis_count=729, openBlockRequestLatencyMillis_rate15=0.7110833548897356, openBlockRequestLatencyMillis_rate5=1.657808981793011, openBlockRequestLatencyMillis_rate1=2.2404486061620474, openBlockRequestLatencyMillis_rateMean=0.9242558551196706,

numRegisteredConnections=35,

blockTransferRateBytes_count=2635880512, blockTransferRateBytes_rate15=2578547.6094160094, blockTransferRateBytes_rate5=6048721.726302424, blockTransferRateBytes_rate1=8548922.518223226, blockTransferRateBytes_rateMean=3341878.633637769, registeredExecutorsSize=5, registerExecutorRequestLatencyMillis_count=5, registerExecutorRequestLatencyMillis_rate15=0.0027973949328659836, registerExecutorRequestLatencyMillis_rate5=0.0021278007987206426, registerExecutorRequestLatencyMillis_rate1=2.8270296777387467E-6, registerExecutorRequestLatencyMillis_rateMean=0.006339206380043053, numActiveConnections=35

Closes #22498 from pgandhi999/SPARK-18364.

Authored-by: pgandhi <pgandhi@oath.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When a `NoClassDefFoundError` is thrown, the job hangs.

`Exception in thread "dag-scheduler-event-loop" java.lang.NoClassDefFoundError: Lcom/xxx/data/recommend/aggregator/queue/QueueName;

at java.lang.Class.getDeclaredFields0(Native Method)

at java.lang.Class.privateGetDeclaredFields(Class.java:2436)

at java.lang.Class.getDeclaredField(Class.java:1946)

at java.io.ObjectStreamClass.getDeclaredSUID(ObjectStreamClass.java:1659)

at java.io.ObjectStreamClass.access$700(ObjectStreamClass.java:72)

at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:480)

at java.io.ObjectStreamClass$2.run(ObjectStreamClass.java:468)

at java.security.AccessController.doPrivileged(Native Method)

at java.io.ObjectStreamClass.<init>(ObjectStreamClass.java:468)

at java.io.ObjectStreamClass.lookup(ObjectStreamClass.java:365)

at java.io.ObjectOutputStream.writeClass(ObjectOutputStream.java:1212)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1119)

at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)

at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)

at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)

at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)

at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)

at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)

at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1173)

at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)

at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)

at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)

at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)

at java.io.ObjectOutputStream.defaultWriteFields(ObjectOutputStream.java:1547)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1508)

at java.io.ObjectOutputStream.writeOrdinaryObject(ObjectOutputStream.java:1431)

at java.io.ObjectOutputStream.writeObject0(ObjectOutputStream.java:1177)

at java.io.ObjectOutputStream.writeArray(ObjectOutputStream.java:1377)`

This happens because `NoClassDefFoundError` is not caught during task serialization:

`var taskBinary: Broadcast[Array[Byte]] = null

try {

// For ShuffleMapTask, serialize and broadcast (rdd, shuffleDep).

// For ResultTask, serialize and broadcast (rdd, func).

val taskBinaryBytes: Array[Byte] = stage match {

case stage: ShuffleMapStage =>

JavaUtils.bufferToArray(

closureSerializer.serialize((stage.rdd, stage.shuffleDep): AnyRef))

case stage: ResultStage =>

JavaUtils.bufferToArray(closureSerializer.serialize((stage.rdd, stage.func): AnyRef))

}

taskBinary = sc.broadcast(taskBinaryBytes)

} catch {

// In the case of a failure during serialization, abort the stage.

case e: NotSerializableException =>

abortStage(stage, "Task not serializable: " + e.toString, Some(e))

runningStages -= stage

// Abort execution

return

case NonFatal(e) =>

abortStage(stage, s"Task serialization failed: $e\n${Utils.exceptionString(e)}", Some(e))

runningStages -= stage

return

}`
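
Why the catch clauses in the snippet above miss this error can be illustrated with a small sketch. `NoClassDefFoundError` is a `LinkageError`, and Scala's `NonFatal` extractor deliberately does not match `LinkageError`. The Java code below is only an analog of that behavior, using `catch (Exception e)` in place of `NonFatal`; the class `FatalErrorSketch` and its methods are hypothetical.

```java
final class FatalErrorSketch {
    /** Runs the body and reports whether the catch (Exception) clause handled a failure. */
    static String runGuarded(Runnable body) {
        try {
            body.run();
            return "ok";
        } catch (Exception e) {
            // Plays the role of the stage-abort path in the snippet above.
            return "aborted: " + e.getClass().getSimpleName();
        }
    }

    /** True for errors such as NoClassDefFoundError, which are not Exceptions. */
    static boolean isLinkageError(Throwable t) {
        return t instanceof LinkageError;
    }
}
```

An `IllegalStateException` thrown inside `runGuarded` is turned into an "aborted" result, whereas a `NoClassDefFoundError` propagates out of the method, so the cleanup path never runs.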

The images below show that stage 33 is blocked and never scheduled.

<img width="1273" alt="2018-06-28 4 28 42" src="https://user-images.githubusercontent.com/26762018/42621188-b87becca-85ef-11e8-9a0b-0ddf07504c96.png">

<img width="569" alt="2018-06-28 4 28 49" src="https://user-images.githubusercontent.com/26762018/42621191-b8b260e8-85ef-11e8-9d10-e97a5918baa6.png">

## How was this patch tested?

UT

Closes #21664 from caneGuy/zhoukang/fix-noclassdeferror.

Authored-by: zhoukang <zhoukang199191@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

This change hooks up the k8s backend to the updated HadoopDelegationTokenManager,

so that delegation tokens are also available in client mode, and keytab-based token

renewal is enabled.

The change re-works the k8s feature steps related to kerberos so

that the driver does all the credential management and provides all

the needed information to executors - so nothing needs to be added

to executor pods. This also makes cluster mode behave a lot more

similarly to client mode, since no driver-related config steps are run

in the latter case.

The main two things that don't need to happen in executors anymore are:

- adding the Hadoop config to the executor pods: this is not needed

since the Spark driver will serialize the Hadoop config and send

it to executors when running tasks.

- mounting the kerberos config file in the executor pods: this is

not needed once you remove the above. The Hadoop conf sent by

the driver with the tasks is already resolved (i.e. has all the

kerberos names properly defined), so executors do not need access

to the kerberos realm information anymore.

The change also avoids creating delegation tokens unnecessarily.

This means that they'll only be created if a secret with tokens

was not provided, and if a keytab is not provided. In either of

those cases, the driver code will handle delegation tokens: in

cluster mode by creating a secret and stashing them, in client

mode by using existing mechanisms to send DTs to executors.

One last feature: the change also allows defining a keytab with

a "local:" URI. This is supported in client mode (although that's

the same as not saying "local:"), and in k8s cluster mode. This

allows the keytab to be mounted onto the image from a pre-existing

secret, for example.

Finally, the new code always sets SPARK_USER in the driver and

executor pods. This is in line with how other resource managers

behave: the submitting user reflects which user will access

Hadoop services in the app. (With kerberos, that's overridden

by the logged in user.) That user is unrelated to the OS user

the app is running as inside the containers.

Tested:

- client and cluster mode with kinit

- cluster mode with keytab

- cluster mode with local: keytab

- YARN cluster with keytab (to make sure it isn't broken)

Closes #22911 from vanzin/SPARK-25815.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Change microseconds to milliseconds in annotation of Utils.timeStringAsMs.

Closes #23346 from stczwd/stczwd.

Authored-by: Jackey Lee <qcsd2011@163.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is kind of a followup of https://github.com/apache/spark/pull/23239

The `UnsafeProject` will normalize special float/double values(NaN and -0.0), so the sorter doesn't have to handle it.

However, for consistency and future-proofing, this PR proposes to normalize `-0.0` in the prefix comparator, so that it matches the normal ordering. Note that the prefix comparator handles NaN as well.

This is not a bug fix, but a safeguard.
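
A minimal sketch of why the normalization matters for a bit-based sort prefix. The class `DoublePrefixSketch` and its methods are hypothetical and only illustrate the technique; they are not presented as the actual comparator code. The bit trick used is a common order-preserving mapping from doubles to unsigned longs.

```java
final class DoublePrefixSketch {
    /** Maps -0.0 to 0.0; every other value is returned unchanged. */
    static double normalize(double value) {
        // -0.0 == 0.0 is true in Java, so both zeros take the first branch.
        return value == 0.0d ? 0.0d : value;
    }

    /** Order-preserving prefix, meant to be compared with Long.compareUnsigned. */
    static long prefix(double value) {
        // doubleToLongBits also canonicalizes all NaN values to a single bit pattern.
        long bits = Double.doubleToLongBits(normalize(value));
        // Negative values: flip all bits. Non-negative values: flip only the sign bit.
        long mask = -(bits >>> 63) | 0x8000000000000000L;
        return bits ^ mask;
    }
}
```

Without `normalize`, the raw bit patterns of `-0.0` and `0.0` differ, so the two values would get different prefixes even though they compare as equal under normal ordering.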

## How was this patch tested?

existing tests

Closes #23334 from cloud-fan/sort.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Multiple SparkContexts are discouraged, and Spark has been warning about them for the last 4 years (see SPARK-4180). They can cause arbitrary and mysterious errors (see SPARK-2243).

Honestly, I didn't even know Spark still allowed this; it appears never to have been officially supported (see SPARK-2243).

I believe now is a good time to remove this configuration.

## How was this patch tested?

Each doc was manually checked and manually tested:

```

$ ./bin/spark-shell --conf=spark.driver.allowMultipleContexts=true

...

scala> new SparkContext()

org.apache.spark.SparkException: Only one SparkContext should be running in this JVM (see SPARK-2243).The currently running SparkContext was created at:

org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:939)

...

org.apache.spark.SparkContext$.$anonfun$assertNoOtherContextIsRunning$2(SparkContext.scala:2435)

at scala.Option.foreach(Option.scala:274)

at org.apache.spark.SparkContext$.assertNoOtherContextIsRunning(SparkContext.scala:2432)

at org.apache.spark.SparkContext$.markPartiallyConstructed(SparkContext.scala:2509)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:80)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:112)

... 49 elided

```

Closes #23311 from HyukjinKwon/SPARK-26362.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Based on the [comment](https://github.com/apache/spark/pull/23272#discussion_r240735509), it seems better to put `freePage` into a `finally` block. This patch is a follow-up that does so.

## How was this patch tested?

Existing tests.

Closes #23294 from viirya/SPARK-26265-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

Currently this check is only performed for dynamic allocation use case in

ExecutorAllocationManager.

## What changes were proposed in this pull request?

Check that the number of CPUs per task does not exceed the number of cores per executor; otherwise, throw an exception.
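
The check can be sketched as below. This is an illustration under assumptions: the class and method names are hypothetical, the exception type is a plain `IllegalArgumentException`, and it is assumed that equal values are accepted (a task may use all cores of an executor).

```java
final class TaskCpusCheckSketch {
    /** Throws if a single task would need more CPUs than one executor provides. */
    static void validate(int executorCores, int taskCpus) {
        if (executorCores < taskCpus) {
            throw new IllegalArgumentException(
                "cores per executor (" + executorCores
                    + ") must not be less than CPUs per task (" + taskCpus + ")");
        }
    }
}
```

For example, `validate(4, 2)` and `validate(2, 2)` return normally, while `validate(1, 2)` throws.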

## How was this patch tested?

manual tests

Closes #23290 from ashangit/master.

Authored-by: n.fraison <n.fraison@criteo.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

These three condition descriptions should be updated, following #23228:

<li>no Ordering is specified,</li>

<li>no Aggregator is specified, and</li>

<li>the number of partitions is less than

<code>spark.shuffle.sort.bypassMergeThreshold</code>.

</li>

1. If the shuffle dependency specifies aggregation but only aggregates on the reduce side, BypassMergeSortShuffle can still be used.

2. If the number of output partitions equals `spark.shuffle.sort.bypassMergeThreshold` (e.g. 200), BypassMergeSortShuffle can still be used.
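
The two corrected conditions can be expressed as a small predicate. This is a sketch covering only the points made above; `BypassMergeSortSketch` and its parameter names are hypothetical.

```java
final class BypassMergeSortSketch {
    /**
     * mapSideCombine: true only when aggregation happens on the map side.
     * Reduce-side-only aggregation does not prevent the bypass path.
     */
    static boolean shouldBypassMergeSort(boolean mapSideCombine,
                                         int numPartitions,
                                         int bypassMergeThreshold) {
        if (mapSideCombine) {
            return false;
        }
        // "<=" rather than "<": a partition count equal to the threshold still qualifies.
        return numPartitions <= bypassMergeThreshold;
    }
}
```

With a threshold of 200, a shuffle with 200 partitions and no map-side combine qualifies, while 201 partitions does not.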

## How was this patch tested?

N/A

Closes #23281 from lcqzte10192193/wid-lcq-1211.

Authored-by: lichaoqun <li.chaoqun@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

When a Kafka delegation token is obtained, the SCRAM `sasl.mechanism` has to be configured for authentication. It can be configured on the related source/sink, which is inconvenient from the user's perspective. Such granularity is not required, and this configuration can be implemented with one central parameter.

This PR adds `spark.kafka.sasl.token.mechanism` to configure this centrally (default: `SCRAM-SHA-512`).

## How was this patch tested?

Existing unit tests + on cluster.

Closes #23274 from gaborgsomogyi/SPARK-26322.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

YARN applicationMaster metrics registration introduced in SPARK-24594 causes further registration of static metrics (Codegenerator and HiveExternalCatalog) and of JVM metrics, which I believe do not belong in this context.

This looks like an unintended side effect of using the start method of [[MetricsSystem]].

A possible solution proposed here, is to introduce startNoRegisterSources to avoid these additional registrations of static sources and of JVM sources in the case of YARN applicationMaster metrics (this could be useful for other metrics that may be added in the future).

## How was this patch tested?

Manually tested on a YARN cluster.

Closes #22279 from LucaCanali/YarnMetricsRemoveExtraSourceRegistration.

Lead-authored-by: Luca Canali <luca.canali@cern.ch>

Co-authored-by: LucaCanali <luca.canali@cern.ch>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Follow-up PR for #23207, including the following changes:

- Rename `SQLShuffleMetricsReporter` to `SQLShuffleReadMetricsReporter` so that it matches the write-side naming.

- Change the display text on the read side for naming consistency.

- Rename function in `ShuffleWriteProcessor`.

- Delete `private[spark]` in execution package.

## How was this patch tested?

Existing tests.

Closes #23286 from xuanyuanking/SPARK-26193-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This proposes an alternative way to load secret keys into a Spark application that is running on Kubernetes. Instead of automatically generating the secret, the secret key can reside in a file that is shared between both the driver and executor containers.

Unit tests.

Closes #23252 from mccheah/auth-secret-with-file.

Authored-by: mcheah <mcheah@palantir.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In `BytesToBytesMap.MapIterator.advanceToNextPage`, we first lock this `MapIterator` and then `TaskMemoryManager` when freeing a memory page by calling `freePage`. At the same time, it is possible that another memory consumer first locks `TaskMemoryManager` and then this `MapIterator` when it acquires memory and causes spilling on this `MapIterator`.

As a result, the `MapIterator` object holds the lock on itself and waits for the lock on `TaskMemoryManager`, while the other consumer holds the lock on `TaskMemoryManager` and waits for the lock on the `MapIterator` object.

To avoid this deadlock, this patch proposes keeping a reference to the page to free and freeing it after releasing the lock on the `MapIterator`.
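
The lock-ordering fix can be sketched as follows. This is a simplified, hypothetical model: `DeferredFreeSketch` stands in for the iterator, `PageFreer` for the component that may take the memory manager's lock, and pages are plain objects. The point is only that the free call happens after the iterator's own monitor has been released.

```java
final class DeferredFreeSketch {
    /** Hypothetical callback; in a real system it may acquire the memory manager's lock. */
    interface PageFreer {
        void free(Object page);
    }

    private Object currentPage = new Object();

    void advanceToNextPage(PageFreer freer) {
        Object pageToFree = null;
        try {
            synchronized (this) {
                // While holding our own lock, only remember which page to free.
                pageToFree = currentPage;
                currentPage = new Object();
            }
        } finally {
            // Our lock is released here, so taking another lock inside free()
            // cannot form a lock-order cycle with this object.
            if (pageToFree != null) {
                freer.free(pageToFree);
            }
        }
    }
}
```

Placing the free call in `finally` also means the remembered page is released even if the synchronized section throws.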

## How was this patch tested?

Added a test and manually ran it 100 times to make sure there is no deadlock.

Closes #23272 from viirya/SPARK-26265.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

… incorrect.

## What changes were proposed in this pull request?

In the reported heartbeat information, memory values are in bytes and are converted by the `formatBytes()` function in `utils.js` before being displayed in the UI. The base of the unit conversion in `formatBytes` is 1000, but it should be 1024.

This change sets the base of the unit conversion in `formatBytes` to 1024.
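
The difference is easy to see in a small sketch. The original function lives in `utils.js`; the Java version below is only an illustration of a base-1024 conversion, and the class name, unit labels, and output format are assumptions.

```java
import java.util.Locale;

final class FormatBytesSketch {
    private static final String[] UNITS = {"B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"};

    /** Formats a byte count using 1024 as the conversion base. */
    static String formatBytes(long bytes) {
        if (bytes <= 0) {
            return "0.0 B";
        }
        double value = bytes;
        int unit = 0;
        // Divide by 1024 (not 1000) until the value fits the current unit.
        while (value >= 1024 && unit < UNITS.length - 1) {
            value /= 1024;
            unit++;
        }
        return String.format(Locale.ROOT, "%.1f %s", value, UNITS[unit]);
    }
}
```

With base 1024, 1000 bytes stays in the byte range, while 1024 bytes is the first value reported in the next unit.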

## How was this patch tested?

manual tests

Closes #22683 from httfighter/SPARK-25696.

Lead-authored-by: 韩田田00222924 <han.tiantian@zte.com.cn>

Co-authored-by: han.tiantian@zte.com.cn <han.tiantian@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

This adds the entire memory used by spark’s executor (as measured by procfs) to the executor metrics. The memory usage is collected from the entire process tree under the executor. The metrics are subdivided into memory used by java, by python, and by other processes, to aid users in diagnosing the source of high memory usage.

The additional metrics are sent to the driver in heartbeats, using the mechanism introduced by SPARK-23429. This also slightly extends that approach to allow one ExecutorMetricType to collect multiple metrics.

Added unit tests and also tested on a live cluster.

Closes #22612 from rezasafi/ptreememory2.

Authored-by: Reza Safi <rezasafi@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

`1. The shuffle dependency specifies no aggregation or output ordering.`

If the shuffle dependency specifies aggregation, but it only aggregates at the reduce-side, serialized shuffle can still be used.

`3. The shuffle produces fewer than 16777216 output partitions.`

If the number of output partitions is exactly 16777216, serialized shuffle can still be used.

See the method `canUseSerializedShuffle`.
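
The corrected conditions can be sketched as a predicate. This is an illustration, not the actual `canUseSerializedShuffle` source: the class and parameter names are hypothetical, and the serializer condition (condition 2, not quoted in this message) is included as an assumption.

```java
final class SerializedShuffleSketch {
    /** 16777216, the partition limit quoted above, written as a power of two. */
    static final int MAX_OUTPUT_PARTITIONS = 1 << 24;

    static boolean canUseSerializedShuffle(boolean serializerSupportsRelocation,
                                           boolean mapSideCombine,
                                           int numPartitions) {
        if (!serializerSupportsRelocation) {
            return false; // assumed condition 2, not described in this message
        }
        if (mapSideCombine) {
            return false; // reduce-side-only aggregation is still allowed
        }
        // "<=": exactly 16777216 partitions is still acceptable.
        return numPartitions <= MAX_OUTPUT_PARTITIONS;
    }
}
```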

## How was this patch tested?

N/A

Closes #23228 from 10110346/SerializedShuffle_doc.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Add MAXIMUM_PAGE_SIZE_BYTES Exception test

## How was this patch tested?

Existing tests

Closes #23226 from wangjiaochun/BytesToBytesMapSuite.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

If there are no records in memory, then we don't need to create an empty temp spill file.

## How was this patch tested?

Existing tests

Closes #23225 from wangjiaochun/ShufflSorter.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Root cause: Prior to Spark 2.4, when zstd is enabled for event log compression, opening an in-progress application from the history server always throws an exception in the application UI. Since 2.4, the UI displays information based on the completed frames in the zstd-compressed event log, but incomplete frames of an in-progress application are still not read.

In this PR, we add `setContinuous(true)` when reading the input stream from the event log, so that open frames can be read as well. (By default, continuous mode is off for the zstd input stream, and trying to read an open frame throws a truncated error.)

## How was this patch tested?

Test steps:

1) Add the configurations in the spark-defaults.conf

(i) spark.eventLog.compress true

(ii) spark.io.compression.codec zstd

2) Restart history server

3) bin/spark-shell

4) sc.parallelize(1 to 1000, 1000).count

5) Open app UI from the history server UI

**Before fix**

**After fix:**

Closes #23241 from shahidki31/zstdEventLog.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

1. Implement `SQLShuffleWriteMetricsReporter` on the SQL side as the customized `ShuffleWriteMetricsReporter`.

2. Add shuffle write metrics to `ShuffleExchangeExec`, and use these metrics to create corresponding `SQLShuffleWriteMetricsReporter` in shuffle dependency.

3. Rework `ShuffleMapTask` to add a new class named `ShuffleWriteProcessor`, which controls the shuffle write process. The SQL shuffle write metrics are used by customizing a `ShuffleWriteProcessor` on the SQL side.

## How was this patch tested?

Add UT in SQLMetricsSuite.

Manually tested locally; updated the screenshot in the document attached to the JIRA.

Closes #23207 from xuanyuanking/SPARK-26193.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`spark.kafka.sasl.kerberos.service.name` is an optional parameter, but most of the time the value `kafka` has to be set. As I wrote in the JIRA, the reasoning is as follows:

* Kafka's configuration guide suggests the same value: https://kafka.apache.org/documentation/#security_sasl_kerberos_brokerconfig

* It makes things easier for Spark users by requiring less configuration

* Other streaming engines do the same

In this PR I've changed the parameter from optional to `WithDefault` and set `kafka` as default value.

## How was this patch tested?

Available unit tests + on cluster.

Closes #23254 from gaborgsomogyi/SPARK-26304.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Delete an unnecessary `if` statement. The method returns early when the number of

records is less than or equal to zero, so the later check is reached only when there are records and is always true.

...................

if (inMemSorter == null || inMemSorter.numRecords() <= 0) {

return 0L;

}

....................

if (inMemSorter.numRecords() > 0) {

.....................

}
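The redundancy can be seen in a minimal, self-contained sketch. `RedundantCheckSketch`, `Sorter`, `spillBefore` and `spillAfter` are hypothetical stand-ins, not the actual Spark classes or methods; only the shape of the two conditions follows the snippet above.

```java
class RedundantCheckSketch {
    // Hypothetical stand-in for the in-memory sorter; only numRecords() matters here.
    static final class Sorter {
        private final int records;
        Sorter(int records) { this.records = records; }
        int numRecords() { return records; }
    }

    // Shape of the code before the change: an early return followed by a
    // guard that can no longer be false.
    static long spillBefore(Sorter inMemSorter) {
        if (inMemSorter == null || inMemSorter.numRecords() <= 0) {
            return 0L;
        }
        long spilled = 0L;
        if (inMemSorter.numRecords() > 0) { // always true at this point
            spilled = inMemSorter.numRecords();
        }
        return spilled;
    }

    // Same behavior with the redundant guard removed.
    static long spillAfter(Sorter inMemSorter) {
        if (inMemSorter == null || inMemSorter.numRecords() <= 0) {
            return 0L;
        }
        return inMemSorter.numRecords();
    }

    public static void main(String[] args) {
        for (int n : new int[] {0, 1, 42}) {
            System.out.println(n + " records: before=" + spillBefore(new Sorter(n))
                + ", after=" + spillAfter(new Sorter(n)));
        }
    }
}
```

Both variants return the same value for every input, which is why existing tests are enough to cover the change.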

## How was this patch tested?

Existing tests

Closes #23247 from wangjiaochun/inMemSorter.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Adds a new method to SparkAppHandle called getError which returns

the exception (if present) that caused the underlying Spark app to

fail.

New tests added to SparkLauncherSuite for the new method.

Closes #21849

Closes #23221 from vanzin/SPARK-24243.

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

`enablePerfMetrics` was originally designed in `BytesToBytesMap` to control `getNumHashCollisions`, `getTimeSpentResizingNs` and `getAverageProbesPerLookup`.

However, as Spark has evolved, this parameter is now used only for `getAverageProbesPerLookup`, and it is always set to true when `BytesToBytesMap` is used.

It is also risky to have `getAverageProbesPerLookup` check whether the flag is enabled and throw an `IllegalStateException` when it is not.

So this PR removes the `enablePerfMetrics` parameter from `BytesToBytesMap`. Thanks.

## How was this patch tested?

Existing test cases.

Closes #23244 from heary-cao/enablePerfMetrics.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This change modifies the logic in the SecurityManager to do two

things:

- generate unique app secrets also when k8s is being used

- only store the secret in the user's UGI on YARN

The latter is needed so that k8s won't unnecessarily create

k8s secrets for the UGI credentials when only the auth token

is stored there.

On the k8s side, the secret is propagated to executors using

an environment variable instead. This ensures it works in both

client and cluster mode.

Security doc was updated to mention the feature and clarify that

proper access control in k8s should be enabled for it to be secure.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #23174 from vanzin/SPARK-26194.

## What changes were proposed in this pull request?

We explicitly avoid files with hdfs erasure coding for the streaming WAL

and for event logs, as hdfs EC does not support all relevant apis.

However, the new builder api used has different semantics -- it does not

create parent dirs, and it does not resolve relative paths. This

updates createNonEcFile to have similar semantics to the old api.

## How was this patch tested?

Ran tests with the WAL pointed at a non-existent dir, which failed before this change. Manually tested the new function with a relative path as well.

Unit tests via jenkins.

Closes #23092 from squito/SPARK-26094.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In my local setup, I set the log4j root category to ERROR (https://stackoverflow.com/questions/27781187/how-to-stop-info-messages-displaying-on-spark-console , the first result that shows up when searching Google for "set spark log level"). When I run a command such as

```

spark-submit --class foo bar.jar

```

Nothing shows up, and the script exits.

After a quick investigation, I think the log level for ClassNotFoundException/NoClassDefFoundError in SparkSubmit should be ERROR instead of WARN, since the whole process exits because of the exception/error.

Before https://github.com/apache/spark/pull/20925, the message was not controlled by `log4j.rootCategory`.

## How was this patch tested?

Manual check.

Closes #23189 from gengliangwang/changeLogLevel.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Correct some document description errors.

## How was this patch tested?

N/A

Closes #23162 from 10110346/docerror.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, the common `withTempDir` function is used in Spark SQL test cases to handle `val dir = Utils.createTempDir()` and `Utils.deleteRecursively(dir)`. Unfortunately, the `withTempDir` function cannot be used in Spark Core test cases. This PR shares the `withTempDir` function between Spark SQL and Spark Core to clean up the Spark Core test cases. Thanks.
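The create/use/delete pattern behind such a helper can be sketched in plain Java. This is only an illustration under stated assumptions: `TempDirSketch`, its `withTempDir` and its `deleteRecursively` are hypothetical and built on `java.nio.file`; they are not Spark's Scala test utilities.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.function.Consumer;
import java.util.stream.Stream;

class TempDirSketch {
    // Creates a temporary directory, lends it to the body, and always
    // deletes it afterwards, even if the body throws.
    static void withTempDir(Consumer<Path> body) {
        Path dir;
        try {
            dir = Files.createTempDirectory("temp-dir-sketch");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        try {
            body.accept(dir);
        } finally {
            deleteRecursively(dir);
        }
    }

    // Deletes children before parents by visiting paths in reverse order.
    static void deleteRecursively(Path root) {
        try (Stream<Path> paths = Files.walk(root)) {
            paths.sorted(Comparator.reverseOrder()).forEach(p -> {
                try {
                    Files.delete(p);
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        withTempDir(dir -> System.out.println("working in " + dir));
    }
}
```

Keeping the cleanup in a `finally` block is what lets individual tests drop their own create and delete calls.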

## How was this patch tested?

N / A

Closes #23151 from heary-cao/withCreateTempDir.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When running Python with RPC and disk encryption enabled, creating a Python broadcast variable and simply reading its value back on the driver side makes the job fail with:

Traceback (most recent call last):

File "broadcast.py", line 37, in <module>

words_new.value

File "/pyspark.zip/pyspark/broadcast.py", line 137, in value

File "pyspark.zip/pyspark/broadcast.py", line 122, in load_from_path

File "pyspark.zip/pyspark/broadcast.py", line 128, in load

EOFError: Ran out of input

To reproduce, use the configs: --conf spark.network.crypto.enabled=true --conf spark.io.encryption.enabled=true

Code:

words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"])

words_new.value

print(words_new.value)

## How was this patch tested?

words_new = sc.broadcast(["scala", "java", "hadoop", "spark", "akka"])

textFile = sc.textFile("README.md")

wordCounts = textFile.flatMap(lambda line: line.split()).map(lambda word: (word + words_new.value[1], 1)).reduceByKey(lambda a, b: a+b)

count = wordCounts.count()

print(count)

words_new.value

print(words_new.value)

Closes #23166 from redsanket/SPARK-26201.

Authored-by: schintap <schintap@oath.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This PR adds Kafka delegation token support for Structured Streaming. Please see the relevant [SPIP](https://docs.google.com/document/d/1ouRayzaJf_N5VQtGhVq9FURXVmRpXzEEWYHob0ne3NY/edit?usp=sharing)

What this PR contains:

* Configuration parameters for the feature

* Delegation token fetching from broker

* Usage of token through dynamic JAAS configuration

* Minor refactoring in the existing code

What this PR doesn't contain:

* Documentation changes, because the design may still change

## How was this patch tested?

Existing tests, plus a small number of additional unit tests.

Because this is an external service integration, it was mainly tested on a cluster:

* 4 node cluster

* Kafka broker version 1.1.0

* Topic with 4 partitions

* security.protocol = SASL_SSL

* sasl.mechanism = SCRAM-SHA-256

An example of obtaining a token:

```

18/10/01 01:07:49 INFO kafka010.TokenUtil: TOKENID HMAC OWNER RENEWERS ISSUEDATE EXPIRYDATE MAXDATE

18/10/01 01:07:49 INFO kafka010.TokenUtil: D1-v__Q5T_uHx55rW16Jwg [hidden] User:user [] 2018-10-01T01:07 2018-10-02T01:07 2018-10-08T01:07

18/10/01 01:07:49 INFO security.KafkaDelegationTokenProvider: Get token from Kafka: Kind: KAFKA_DELEGATION_TOKEN, Service: kafka.server.delegation.token, Ident: 44 31 2d 76 5f 5f 51 35 54 5f 75 48 78 35 35 72 57 31 36 4a 77 67

```

An example token usage:

```

18/10/01 01:08:07 INFO kafka010.KafkaSecurityHelper: Scram JAAS params: org.apache.kafka.common.security.scram.ScramLoginModule required tokenauth=true serviceName="kafka" username="D1-v__Q5T_uHx55rW16Jwg" password="[hidden]";

18/10/01 01:08:07 INFO kafka010.KafkaSourceProvider: Delegation token detected, using it for login.

```

Closes #22598 from gaborgsomogyi/SPARK-25501.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In `BlockManager`, `getRemoteValues` gets a `ChunkedByteBuffer` (by calling `getRemoteBytes`) and creates an `InputStream` from it. `getRemoteBytes`, in turn, gets a `ManagedBuffer` and converts it to a `ChunkedByteBuffer`.

Instead, expose a `getRemoteManagedBuffer` method so `getRemoteValues` can just get this `ManagedBuffer` and use its `InputStream`.

When reading a remote cache block from disk, this reduces heap memory usage significantly.

Retain `getRemoteBytes` for other callers.

## How was this patch tested?

Imran Rashid wrote an application (https://github.com/squito/spark_2gb_test/blob/master/src/main/scala/com/cloudera/sparktest/LargeBlocks.scala), that among other things, tests reading remote cache blocks. I ran this application, using 2500MB blocks, to test reading a cache block on disk. Without this change, with `--executor-memory 5g`, the test fails with `java.lang.OutOfMemoryError: Java heap space`. With the change, the test passes with `--executor-memory 2g`.

I also ran the unit tests in core. In particular, `DistributedSuite` has a set of tests that exercise the `getRemoteValues` code path. `BlockManagerSuite` has several tests that call `getRemoteBytes`; I left these unchanged, so `getRemoteBytes` still gets exercised.

Closes #23058 from wypoon/SPARK-25905.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

This PR changes the broadcast object in TorrentBroadcast from a strong reference to a weak reference. This allows it to be garbage collected even if the Dataset is held in memory. This is ok, because the broadcast object can always be re-read.
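The idea of holding a re-readable value through a weak reference can be sketched as follows. This is a minimal illustration, not the `TorrentBroadcast` code: `WeakCachedValue`, its `reader` supplier and `simulateCollection` are hypothetical names, and the supplier stands in for re-reading the broadcast blocks.

```java
import java.lang.ref.WeakReference;
import java.util.function.Supplier;

class WeakCachedValue<T> {
    private final Supplier<T> reader;   // hypothetical: re-reads the value from its source
    private WeakReference<T> cached = new WeakReference<>(null);
    private int reads = 0;

    WeakCachedValue(Supplier<T> reader) {
        this.reader = reader;
    }

    // Returns the cached value if it is still reachable, otherwise re-reads it.
    synchronized T get() {
        T value = cached.get();
        if (value == null) {
            value = reader.get();
            reads++;
            cached = new WeakReference<>(value);
        }
        return value;
    }

    synchronized int reads() {
        return reads;
    }

    // Test hook that mimics the garbage collector clearing the reference.
    synchronized void simulateCollection() {
        cached.clear();
    }

    public static void main(String[] args) {
        WeakCachedValue<String> broadcast = new WeakCachedValue<>(() -> new String("payload"));
        String first = broadcast.get();
        broadcast.simulateCollection();
        String second = broadcast.get();
        System.out.println(first.equals(second) + ", reads=" + broadcast.reads());
    }
}
```

The holder never keeps the value alive on its own, so memory is reclaimed under pressure at the cost of an occasional re-read.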

## How was this patch tested?

Tested in Spark shell by taking a heap dump, full repro steps listed in https://issues.apache.org/jira/browse/SPARK-25998.

Closes #22995 from bkrieger/bk/torrent-broadcast-weak.

Authored-by: Brandon Krieger <bkrieger@palantir.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

… of hard coded "/" in DependencyUtils

## What changes were proposed in this pull request?

Use Java system property "file.separator" instead of hard coded "/" in DependencyUtils.
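As a small illustration of the approach, the sketch below joins a directory and a file name with the platform separator instead of a literal "/". `FileSeparatorSketch` and `join` are hypothetical helpers for this example and are not taken from `DependencyUtils`.

```java
class FileSeparatorSketch {
    // Joins a directory and a file name using the platform's separator,
    // read from the "file.separator" system property.
    static String join(String dir, String name) {
        String sep = System.getProperty("file.separator");
        return dir.endsWith(sep) ? dir + name : dir + sep + name;
    }

    public static void main(String[] args) {
        // The printed separator differs between Windows and Unix-like systems.
        System.out.println(join("work", "myApp-assembly-1.0.jar"));
    }
}
```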

## How was this patch tested?

Manual test:

Submit a Spark application via the REST API that reads data from Elasticsearch using the spark-elasticsearch library.

Without the fix, the application fails with this error:

18/11/22 10:36:20 ERROR Version: Multiple ES-Hadoop versions detected in the classpath; please use only one

jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar

jar:file:/C:/<...>/myApp-assembly-1.0.jar

18/11/22 10:36:20 ERROR Main: Application [MyApp] failed:

java.lang.Error: Multiple ES-Hadoop versions detected in the classpath; please use only one

jar:file:/C:/<...>/spark-2.4.0-bin-hadoop2.6/work/driver-20181122103610-0001/myApp-assembly-1.0.jar

jar:file:/C:/<...>/myApp-assembly-1.0.jar

at org.elasticsearch.hadoop.util.Version.<clinit>(Version.java:73)

at org.elasticsearch.hadoop.rest.RestService.findPartitions(RestService.java:214)

at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions$lzycompute(AbstractEsRDD.scala:73)

at org.elasticsearch.spark.rdd.AbstractEsRDD.esPartitions(AbstractEsRDD.scala:72)

at org.elasticsearch.spark.rdd.AbstractEsRDD.getPartitions(AbstractEsRDD.scala:44)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.rdd.MapPartitionsRDD.getPartitions(MapPartitionsRDD.scala:49)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:253)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:251)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:251)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2126)

at org.apache.spark.rdd.RDD$$anonfun$collect$1.apply(RDD.scala:945)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:363)

at org.apache.spark.rdd.RDD.collect(RDD.scala:944)

...

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.worker.DriverWrapper$.main(DriverWrapper.scala:65)

at org.apache.spark.deploy.worker.DriverWrapper.main(DriverWrapper.scala)

With the fix, the application runs successfully.

Closes #23102 from markpavey/JIRA_SPARK-26137_DependencyUtilsFileSeparatorFix.

Authored-by: Mark Pavey <markpavey@exabre.co.uk>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

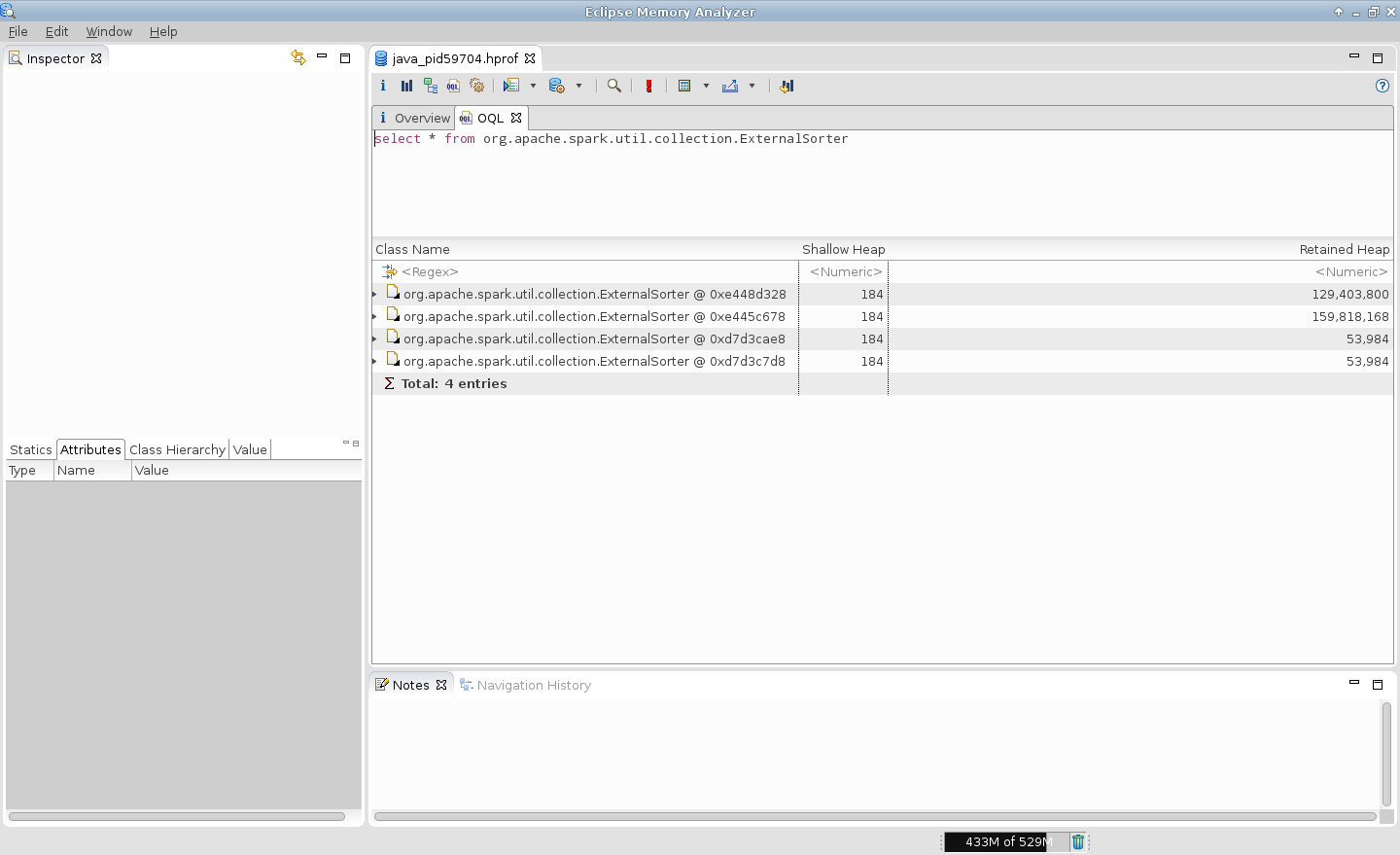

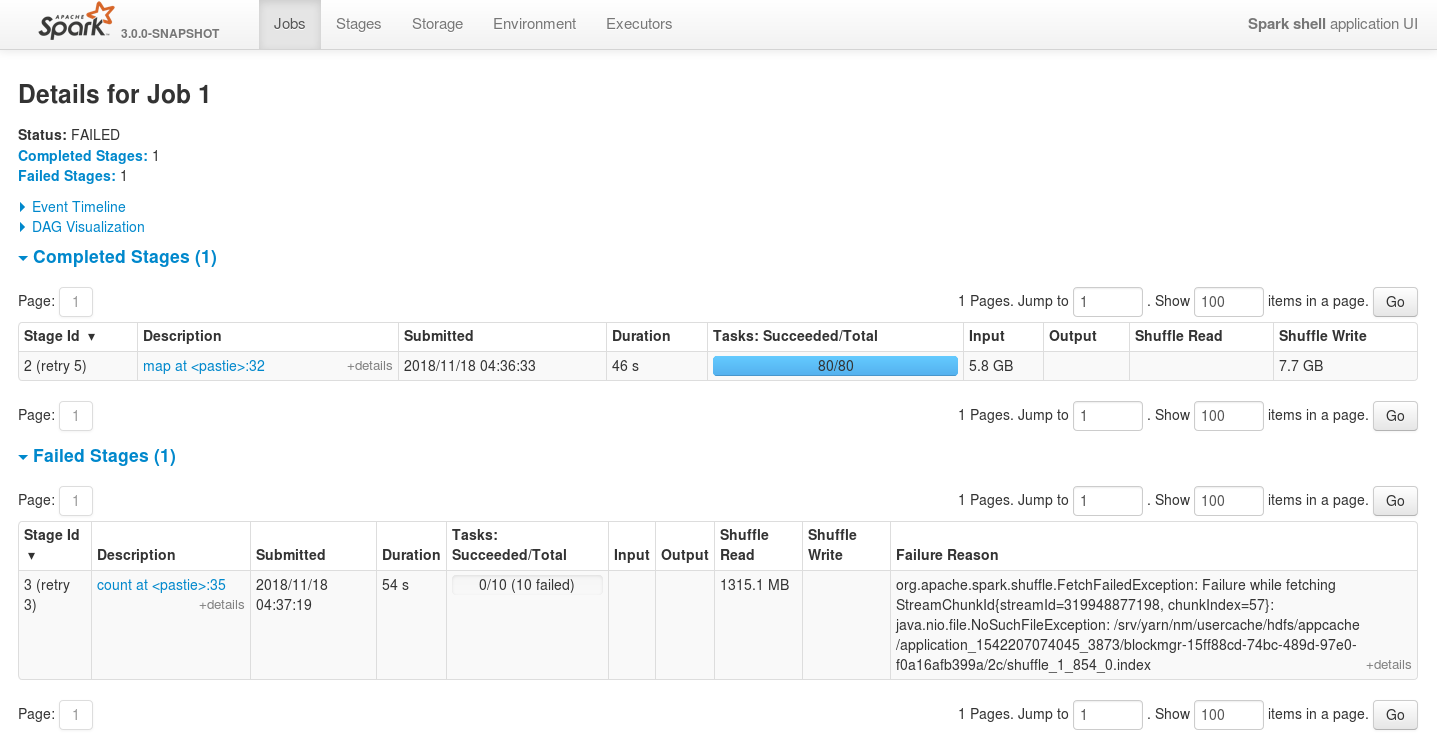

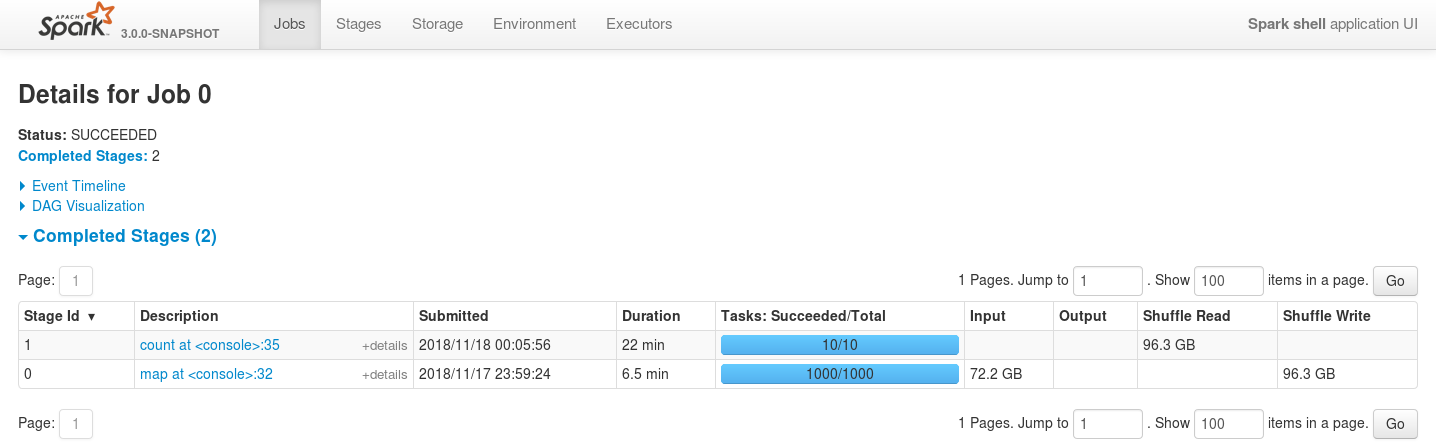

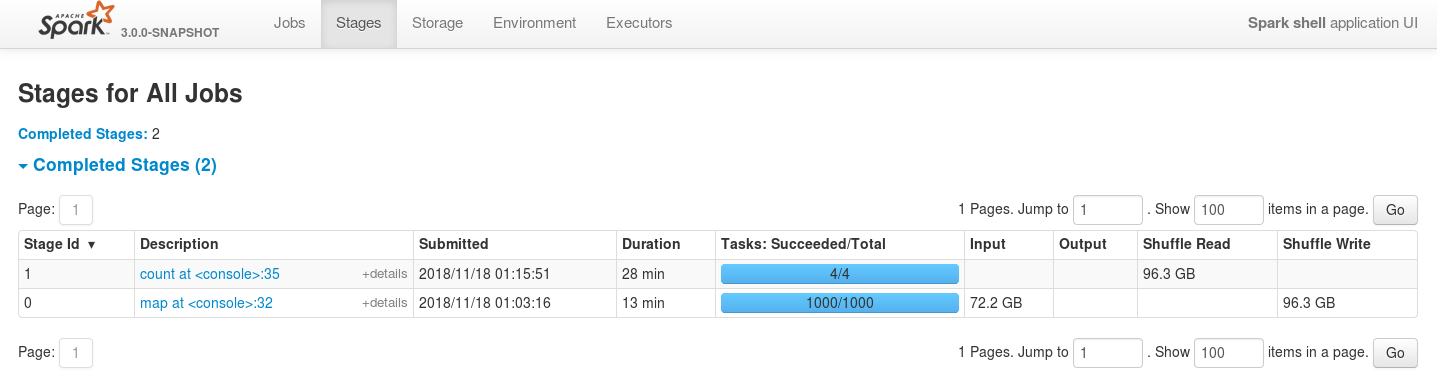

## What changes were proposed in this pull request?

This pull request fixes the [SPARK-26114](https://issues.apache.org/jira/browse/SPARK-26114) issue, which occurs when reducing the number of partitions by means of coalesce without shuffling after shuffle-based transformations.

The leak occurs because `ExternalSorter`'s `readingIterator` field is not cleaned up the way its `map` and `buffer` fields are.

Additionally, there are changes to the `CompletionIterator` to prevent it from capturing its `sub`-iterator and holding it even after the completion iterator completes. This is necessary because in some cases, e.g. with the standard Scala `flatMap` iterator (which is used in `CoalescedRDD`'s `compute` method), the next value of the main iterator is assigned to `flatMap`'s `cur` field only after it is available.

For DAGs where ShuffledRDD is a parent of CoalescedRDD it means that the data should be fetched from the map-side of the shuffle, but the process of fetching this data consumes quite a lot of memory in addition to the memory already consumed by the iterator held by `flatMap`'s `cur` field (until it is reassigned).
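The release-on-completion idea can be sketched in Java as follows. `ReleasingCompletionIterator` and `holdsSub` are hypothetical names for this illustration; the actual change is in Spark's Scala `CompletionIterator` and may differ in detail.

```java
import java.util.Arrays;
import java.util.Iterator;
import java.util.NoSuchElementException;

class ReleasingCompletionIterator<A> implements Iterator<A> {
    private Iterator<A> sub;              // dropped once the iterator is exhausted
    private final Runnable completion;    // callback run exactly once on completion
    private boolean completed = false;

    ReleasingCompletionIterator(Iterator<A> sub, Runnable completion) {
        this.sub = sub;
        this.completion = completion;
    }

    @Override
    public boolean hasNext() {
        if (completed) {
            return false;
        }
        boolean more = sub.hasNext();
        if (!more) {
            completed = true;
            sub = null;          // release the wrapped iterator so it can be collected
            completion.run();
        }
        return more;
    }

    @Override
    public A next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        return sub.next();
    }

    // Exposed only so the sketch can show that the reference was dropped.
    boolean holdsSub() {
        return sub != null;
    }

    public static void main(String[] args) {
        ReleasingCompletionIterator<Integer> it = new ReleasingCompletionIterator<>(
            Arrays.asList(1, 2, 3).iterator(), () -> System.out.println("completed"));
        while (it.hasNext()) {
            System.out.println(it.next());
        }
        System.out.println("still holds sub-iterator: " + it.holdsSub());
    }
}
```

Even if a caller keeps the wrapper itself reachable after exhaustion, the heavy sub-iterator is no longer pinned by it.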

For the following data

```scala

import org.apache.hadoop.io._

import org.apache.hadoop.io.compress._

import org.apache.commons.lang._

import org.apache.spark._

// generate 100M records of sample data

sc.makeRDD(1 to 1000, 1000)

.flatMap(item => (1 to 100000)

.map(i => new Text(RandomStringUtils.randomAlphanumeric(3).toLowerCase) -> new Text(RandomStringUtils.randomAlphanumeric(1024))))

.saveAsSequenceFile("/tmp/random-strings", Some(classOf[GzipCodec]))

```

and the following job

```scala

import org.apache.hadoop.io._

import org.apache.spark._

import org.apache.spark.storage._

val rdd = sc.sequenceFile("/tmp/random-strings", classOf[Text], classOf[Text])

rdd

.map(item => item._1.toString -> item._2.toString)

.repartitionAndSortWithinPartitions(new HashPartitioner(1000))

.coalesce(10,false)

.count

```

... executed like the following

```bash

spark-shell \

--num-executors=5 \

--executor-cores=2 \

--master=yarn \

--deploy-mode=client \

--conf spark.executor.memoryOverhead=512 \

--conf spark.executor.memory=1g \

--conf spark.dynamicAllocation.enabled=false \

--conf spark.executor.extraJavaOptions='-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp -Dio.netty.noUnsafe=true'

```

... executors are always failing with OutOfMemoryErrors.

The main issue is multiple leaks of ExternalSorter references.

For example, with 2 tasks per executor, 2 simultaneous instances of ExternalSorter are expected per executor, but the heap dump generated on OutOfMemoryError shows that there are more.

P.S. This PR does not cover cases with CoGroupedRDDs which use ExternalAppendOnlyMap internally, which itself can lead to OutOfMemoryErrors in many places.

## How was this patch tested?

- Existing unit tests

- New unit tests

- Job executions on the live environment

Here is the screenshot before applying this patch

Here is the screenshot after applying this patch

And in case of reducing the number of executors even more the job is still stable

Closes #23083 from szhem/SPARK-26114-externalsorter-leak.

Authored-by: Sergey Zhemzhitsky <szhemzhitski@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is the write side counterpart to https://github.com/apache/spark/pull/23105

## How was this patch tested?

No behavior change expected, as it is a straightforward refactoring. Updated all existing test cases.

Closes #23106 from rxin/SPARK-26141.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: Reynold Xin <rxin@databricks.com>

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/23105, due to working on two parallel PRs at once, I made the mistake of committing the copy of the PR that used the name ShuffleMetricsReporter for the interface, rather than the appropriate one ShuffleReadMetricsReporter. This patch fixes that.

## How was this patch tested?

This should be fine as long as compilation passes.

Closes #23147 from rxin/ShuffleReadMetricsReporter.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

The total tasks in the aggregated table and in the tasks table sometimes do not match in the web UI.

We need to force an update of the executor summary for a particular executorId whenever the last task of that executor has finished. Currently, the update is forced based on the last task at stage end, so for some executorIds a task may be missed at stage end.

Tests to reproduce:

```

bin/spark-shell --master yarn --conf spark.executor.instances=3

sc.parallelize(1 to 10000, 10).map{ x => throw new RuntimeException("Bad executor")}.collect()

```

Before patch:

After patch:

Closes #23038 from shahidki31/SPARK-25451.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Support column sort, pagination and search for Stage Page using jQuery DataTable and REST API. Before this commit, the Stage page generated a hard-coded HTML table that could not support search. Supporting search and sort (over all applications rather than the 20 entries in the current page) in any case will greatly improve the user experience.

Created stagespage-template.html for displaying application information in DataTables. Added a REST API endpoint and JavaScript code to fetch data from the endpoint and display it in the data table.

Because of the above change, certain functionalities in the page had to be modified to support the addition of datatables. For example, the toggle checkbox 'Select All' previously would add the checked fields as columns in the Task table and as rows in the Summary Metrics table, but after the change, only columns are added in the Task Table as it got tricky to add rows dynamically in the datatables.

## How was this patch tested?

I have attached the screenshots of the Stage Page UI before and after the fix.

**Before:**

<img width="1419" alt="30564304-35991e1c-9c8a-11e7-850f-2ac7a347f600" src="https://user-images.githubusercontent.com/22228190/42137915-52054558-7d3a-11e8-8c85-433b2c94161d.png">

<img width="1435" alt="31360592-cbaa2bae-ad14-11e7-941d-95b4c7d14970" src="https://user-images.githubusercontent.com/22228190/42137928-79df500a-7d3a-11e8-9068-5630afe46ff3.png">

**After:**

<img width="1432" alt="31360591-c5650ee4-ad14-11e7-9665-5a08d8f21830" src="https://user-images.githubusercontent.com/22228190/42137936-a3fb9f42-7d3a-11e8-8502-22b3897cbf64.png">

<img width="1388" alt="31360604-d266b6b0-ad14-11e7-94b5-dcc4bb5443f4" src="https://user-images.githubusercontent.com/22228190/42137970-0fabc58c-7d3b-11e8-95ad-383b1bd1f106.png">

Closes #21688 from pgandhi999/SPARK-21809-2.3.

Authored-by: pgandhi <pgandhi@oath.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

The DOI foundation recommends [this new resolver](https://www.doi.org/doi_handbook/3_Resolution.html#3.8). Accordingly, this PR re`sed`s all static DOI links ;-)

## How was this patch tested?

It wasn't, since it seems as safe as a "[typo fix](https://spark.apache.org/contributing.html)".

In case any of the files is included from other projects, and should be updated there, please let me know.

Closes #23129 from katrinleinweber/resolve-DOIs-securely.

Authored-by: Katrin Leinweber <9948149+katrinleinweber@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In the summary section of stage page:

1. The following metric names are revised:

Output => Output Size / Records

Shuffle Read: => Shuffle Read Size / Records

Shuffle Write => Shuffle Write Size / Records

After the changes, the names are clearer and consistent with the other names on the same page.

2. The associated job id URL should not contain the three trailing spaces. Reduce the number of spaces to one, and exclude the space from the link. This is consistent with the SQL execution page.

## How was this patch tested?

Manual check:

Closes #23125 from gengliangwang/reviseStagePage.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The input parameter of `deserialize` for Kryo is a `ByteBuffer`. If it is not backed by an accessible byte array, `deserialize` throws `UnsupportedOperationException`.

Exception Info:

```

java.lang.UnsupportedOperationException was thrown.

java.lang.UnsupportedOperationException

at java.nio.ByteBuffer.array(ByteBuffer.java:994)

at org.apache.spark.serializer.KryoSerializerInstance.deserialize(KryoSerializer.scala:362)

```
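The failure mode can be reproduced with the JDK alone: a direct `ByteBuffer` reports `hasArray() == false` and its `array()` method throws. The sketch below also shows one simple way to read such a buffer, by copying its remaining bytes. `ByteBufferBytesSketch` and `remainingBytes` are hypothetical names, and copying is only an illustration, not necessarily how the patch handles the buffer.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class ByteBufferBytesSketch {
    // Copies the remaining bytes without relying on a backing array.
    // Works for heap, direct and read-only buffers alike.
    static byte[] remainingBytes(ByteBuffer buffer) {
        ByteBuffer view = buffer.duplicate();   // own position/limit, shared content
        byte[] out = new byte[view.remaining()];
        view.get(out);
        return out;
    }

    public static void main(String[] args) {
        ByteBuffer direct = ByteBuffer.allocateDirect(5);
        direct.put("spark".getBytes(StandardCharsets.UTF_8));
        direct.flip();

        System.out.println("hasArray: " + direct.hasArray());
        try {
            direct.array();
        } catch (UnsupportedOperationException e) {
            System.out.println("array() is not supported for this buffer");
        }
        System.out.println(new String(remainingBytes(direct), StandardCharsets.UTF_8));
    }
}
```

Checking `hasArray()` before calling `array()` is the guard that avoids the exception shown above.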

## How was this patch tested?

Added a unit test

Closes #22779 from 10110346/InputStreamKryo.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This patch defines an internal Spark interface for reporting shuffle metrics and uses that in the shuffle reader. Before this patch, shuffle metrics were tied to a specific implementation (using a thread local temporary data structure and accumulators). After this patch, callers that define their own shuffle RDDs can create a custom metrics implementation.

With this patch, we would be able to create better metrics for the SQL layer, e.g. reporting shuffle metrics in the SQL UI, for each exchange operator.

Note that I'm separating read side and write side implementations, as they are very different, to simplify code review. Write side change is at https://github.com/apache/spark/pull/23106

## How was this patch tested?

No behavior change expected, as it is a straightforward refactoring. Updated all existing test cases.

Closes #23105 from rxin/SPARK-26140.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

PR #20014 introduced `SparkOutOfMemoryError` to avoid killing the entire executor when an `OutOfMemoryError` is thrown.

So, when an exception is caught while requesting memory through `MemoryConsumer.allocatePage`, use `SparkOutOfMemoryError` instead of `OutOfMemoryError`.

## How was this patch tested?

N / A

Closes #23084 from heary-cao/SparkOutOfMemoryError.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR is a follow-up PR of #21600 to fix the unnecessary UI redirect.

## How was this patch tested?

Local verification

Closes #23116 from jerryshao/SPARK-24553.

Authored-by: jerryshao <jerryshao@tencent.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In the PR, I propose:

- A new SQL config, `spark.sql.debug.maxToStringFields`, to control the maximum number of fields beyond which `truncatedString` cuts its input sequences.

- Moving `truncatedString` out of `core` to `sql/catalyst`, because it is used only in the `sql/catalyst` packages to restrict the number of fields converted to strings from `TreeNode` and expressions of `StructType`.
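To illustrate what a field limit of this kind does, here is a minimal Java sketch. It is an assumption-laden illustration, not Spark's implementation: `TruncatedStringSketch`, the parameter names and the exact "more fields" wording are hypothetical, and `maxFields` plays the role of the configured limit.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class TruncatedStringSketch {
    // Joins the fields, but once there are more than maxFields of them,
    // keeps only the first (maxFields - 1) and summarizes the rest.
    static String truncatedString(List<String> fields, String start, String sep,
                                  String end, int maxFields) {
        if (fields.size() <= maxFields) {
            return fields.stream().collect(Collectors.joining(sep, start, end));
        }
        int kept = Math.max(0, maxFields - 1);
        String dropped = "... " + (fields.size() - kept) + " more fields";
        if (kept == 0) {
            return start + dropped + end;
        }
        String head = fields.stream().limit(kept).collect(Collectors.joining(sep));
        return start + head + sep + dropped + end;
    }

    public static void main(String[] args) {
        List<String> fields = Arrays.asList("a", "b", "c", "d");
        System.out.println(truncatedString(fields, "[", ", ", "]", 4));
        System.out.println(truncatedString(fields, "[", ", ", "]", 3));
    }
}
```

Making the limit configurable lets users raise it when debugging plans with very wide schemas.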

## How was this patch tested?

Added a test to `QueryExecutionSuite` to check that `spark.sql.debug.maxToStringFields` affects the behavior of `truncatedString`.

Closes #23039 from MaxGekk/truncated-string-catalyst.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

The task summary table displays a summary of the task table on the stage page. However, the 'Duration' metrics of the 'task summary' table and the 'task table' do not match. The reason is that the 'task summary' displays 'executorRunTime' as the duration, while the 'task table' displays the actual duration of the task. Apart from the duration, all other metrics are displayed properly in the task summary.

Spark 2.2 used to show 'executorRunTime' as the duration in the 'taskTable'. That is why the summary metrics also show 'executorRunTime' as the duration. So, we need to show 'executorRunTime' as the duration in the tasks table to follow the same behaviour as previous versions of Spark.

## How was this patch tested?

Before patch:

After patch:

Closes #23081 from shahidki31/duratinSummary.

Authored-by: Shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Hotfix a change to SparkHadoopUtil that doesn't work in 2.11

## How was this patch tested?

Existing tests.

Closes #23097 from srowen/SPARK-26043.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Introducing spark.ui.requestHeaderSize for configuring Jetty's HTTP requestHeaderSize.

This way, a long authorization field does not lead to HTTP 413.

## How was this patch tested?

Manually with curl (version 7.55 or later is required).

With the original default value (8k limit):

```bash

# Starting history server with default requestHeaderSize

$ ./sbin/start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out

# Creating huge header

$ echo -n "X-Custom-Header: " > cookie

$ printf 'A%.0s' {1..9500} >> cookie

# HTTP GET with huge header fails with 431

$ curl -H @cookie http://458apiros-MBP.lan:18080/

<h1>Bad Message 431</h1><pre>reason: Request Header Fields Too Large</pre>

# The log contains the error

$ tail -1 /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out

18/11/19 21:24:28 WARN HttpParser: Header is too large 8193>8192

```

After:

```bash

# Creating the history properties file with the increased requestHeaderSize

$ echo spark.ui.requestHeaderSize=10000 > history.properties

# Starting Spark History Server with the settings

$ ./sbin/start-history-server.sh --properties-file history.properties

starting org.apache.spark.deploy.history.HistoryServer, logging to /Users/attilapiros/github/spark/logs/spark-attilapiros-org.apache.spark.deploy.history.HistoryServer-1-apiros-MBP.lan.out

# HTTP GET with huge header gives back HTML5 (I have added here only just a part of the response)

$ curl -H @cookie http://458apiros-MBP.lan:18080/

<!DOCTYPE html><html>

<head>...

<link rel="shortcut icon" href="/static/spark-logo-77x50px-hd.png"></link>

<title>History Server</title>

</head>

<body>

...

```

Closes #23090 from attilapiros/JettyHeaderSize.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

The build has a lot of deprecation warnings. Some are new in Scala 2.12 and Java 11. We've fixed some, but I wanted to take a pass at fixing lots of easy miscellaneous ones here.

They're too numerous and small to list here; see the pull request. Some highlights:

- `BeanInfo` is deprecated in 2.12, and BeanInfo classes are pretty ancient in Java. Instead, case classes can explicitly declare getters

- Eta expansion of zero-arg methods; foo() becomes () => foo() in many cases

- Floating-point Range is inexact and deprecated, like 0.0 to 100.0 by 1.0

- finalize() is finally deprecated (just needs to be suppressed)

- StageInfo.attemptId was deprecated and easiest to remove here
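The inexactness behind the floating-point Range item above can be shown with a small Java analogy. This is not Scala's `Range` implementation; `FloatStepSketch`, `byAccumulation` and `byIndex` are hypothetical helpers that contrast repeated addition of a step with computing a position from an integer index.

```java
class FloatStepSketch {
    // Repeatedly adds the step, so rounding errors accumulate.
    static double byAccumulation(double start, double step, int n) {
        double x = start;
        for (int i = 0; i < n; i++) {
            x += step;
        }
        return x;
    }

    // Computes the n-th position from an integer index with a single rounding.
    static double byIndex(double start, double step, int n) {
        return start + n * step;
    }

    public static void main(String[] args) {
        System.out.println(byAccumulation(0.0, 0.1, 10));
        System.out.println(byIndex(0.0, 0.1, 10));
    }
}
```

Iterating over an integer range and deriving the floating-point value from the index is the usual way to sidestep this kind of drift.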

For now, I'm not going to touch some chunks of deprecation warnings:

- Parquet deprecations

- Hive deprecations (particularly serde2 classes)

- Deprecations in generated code (mostly Thriftserver CLI)

- ProcessingTime deprecations (we may need to revive this class as internal)

- many MLlib deprecations because they concern methods that may be removed anyway

- a few Kinesis deprecations I couldn't figure out

- Mesos get/setRole, which I don't know well

- Kafka/ZK deprecations (e.g. poll())

- Kinesis

- a few other ones that will probably resolve by deleting a deprecated method

## How was this patch tested?

Existing tests, including manual testing with the 2.11 build and Java 11.

Closes #23065 from srowen/SPARK-26090.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

An empty chunk in a ChunkedByteBuffer truncates the ChunkedByteBufferInputStream.

The detailed reason is described in: https://issues.apache.org/jira/browse/SPARK-26068
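The general hazard, and one way around it, can be sketched with a stream over a list of `ByteBuffer` chunks that keeps advancing past empty chunks instead of treating the first one as end of data. `ChunkedInputStreamSketch` and `ensureReadable` are hypothetical names; this is an illustration of the technique, not Spark's `ChunkedByteBufferInputStream` or the exact fix.

```java
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

class ChunkedInputStreamSketch extends InputStream {
    private final Iterator<ByteBuffer> chunks;
    private ByteBuffer current;

    ChunkedInputStreamSketch(List<ByteBuffer> chunks) {
        this.chunks = chunks.iterator();
    }

    // Advances past exhausted and empty chunks. Returns false only when
    // no chunk with remaining bytes is left.
    private boolean ensureReadable() {
        while (current == null || !current.hasRemaining()) {
            if (!chunks.hasNext()) {
                return false;
            }
            current = chunks.next();
        }
        return true;
    }

    @Override
    public int read() {
        return ensureReadable() ? (current.get() & 0xFF) : -1;
    }

    public static void main(String[] args) {
        ChunkedInputStreamSketch in = new ChunkedInputStreamSketch(Arrays.asList(
            ByteBuffer.wrap(new byte[] {1, 2}),
            ByteBuffer.wrap(new byte[0]),        // empty chunk in the middle
            ByteBuffer.wrap(new byte[] {3})));
        int b;
        while ((b = in.read()) != -1) {
            System.out.println(b);
        }
    }
}
```

A reader that advances only one chunk per call would stop at the empty chunk and silently drop everything after it.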

## How was this patch tested?

Modified current UT to cover this case.

Closes #23040 from LinhongLiu/fix-empty-chunked-byte-buffer.

Lead-authored-by: Liu,Linhong <liulinhong@baidu.com>

Co-authored-by: Xianjin YE <yexianjin@baidu.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Make SparkHadoopUtil private to Spark

## How was this patch tested?

Existing tests.

Closes #23066 from srowen/SPARK-26043.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Don't propagate SPARK_CONF_DIR to the driver in mesos cluster mode.

## How was this patch tested?

I built the 2.3.2 tag with this patch added and deployed a test job to a mesos cluster to confirm that the incorrect SPARK_CONF_DIR was no longer passed from the submit command.

Closes #22937 from mpmolek/fix-conf-dir.

Authored-by: Matt Molek <mpmolek@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

SparkSubmit determines pyspark app by the suffix of primary resource but Livy

uses "spark-internal" as the primary resource when calling spark-submit,

therefore args.isPython is set to false in SparkSubmit.scala.

In Yarn mode, SparkSubmit module is responsible for resolving maven coordinates

and adding them to "spark.submit.pyFiles" so that python's system path can be set correctly.

The fix is to resolve maven coordinates not only when args.isPython is true,

but also when primary resource is spark-internal.
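The condition described above can be sketched as a small predicate. This is a simplified illustration, not the code in SparkSubmit.scala: the class and method names are hypothetical, and the suffix check assumes a Python application is identified only by a `.py` primary resource.

```java
// Hypothetical sketch of the decision described above; not SparkSubmit's code.
final class PackageResolutionSketch {
    // Placeholder primary resource that Livy passes to spark-submit.
    static final String SPARK_INTERNAL = "spark-internal";

    // Simplifying assumption: a Python app is detected by the ".py" suffix only.
    static boolean isPython(String primaryResource) {
        return primaryResource.endsWith(".py");
    }

    // Resolved packages are added to spark.submit.pyFiles when the app is
    // Python, or when the primary resource hides the real application type.
    static boolean shouldAddPackagesToPyFiles(String primaryResource) {
        return isPython(primaryResource) || SPARK_INTERNAL.equals(primaryResource);
    }
}
```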

Tested the patch with Livy submitting pyspark app, spark-submit, pyspark with or without packages config.

Signed-off-by: Shanyu Zhao <shzhao@microsoft.com>

Closes #23009 from shanyu/shanyu-26011.

Authored-by: Shanyu Zhao <shzhao@microsoft.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Register the following classes in Kryo:

"org.apache.spark.ml.stat.distribution.MultivariateGaussian",

"org.apache.spark.mllib.stat.distribution.MultivariateGaussian"

## How was this patch tested?

Added tests.

Due to the existing module dependencies, I cannot import spark-core in mllib-local's test suites, so I did not add a test suite in `org.apache.spark.ml.stat.distribution.MultivariateGaussianSuite`.

I also noticed that the class `ClusterStats` in `ClusteringEvaluator` is registered in a different way. Should it be modified to be consistent with the others in ML? srowen

Closes #22974 from zhengruifeng/kryo_MultivariateGaussian.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR makes Scala 2.12 Spark's default Scala version, with Scala 2.11 as the alternative version. This implies that Scala 2.12 will be used by our CI builds, including pull request builds.

We'll update Jenkins to include a new compile-only job for Scala 2.11 to ensure the code can still be compiled with Scala 2.11.

## How was this patch tested?

existing tests

Closes #22967 from dbtsai/scala2.12.

Authored-by: DB Tsai <d_tsai@apple.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Add Scala and Java lint check rules to ban the usage of `throw new xxxErrors`, and fix all existing instances, following https://github.com/apache/spark/pull/22989#issuecomment-437939830. See more details in https://github.com/apache/spark/pull/22969.
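A lint rule of this kind is typically a regular-expression check over source lines. The sketch below shows the idea in plain Java; the pattern `throw new \w+Error\(` and the class name are assumptions for illustration, not a copy of the rules added to the scalastyle or checkstyle configuration.

```java
import java.util.regex.Pattern;

// Hypothetical sketch of a regex-based lint check; the pattern is an assumption.
final class ThrowErrorLintSketch {
    // Matches statements such as `throw new OutOfMemoryError(`.
    private static final Pattern BANNED = Pattern.compile("throw new \\w+Error\\(");

    // Returns true when the given source line would be flagged by the rule.
    static boolean violates(String sourceLine) {
        return BANNED.matcher(sourceLine).find();
    }
}
```

A line that throws an `Exception` subclass passes the check, while one that throws any class whose name ends in `Error` is flagged.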

## How was this patch tested?

Local test with lint-scala and lint-java.

Closes #22989 from xuanyuanking/SPARK-25986.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

…. Other related changes to get JDK 11 working, to test

## What changes were proposed in this pull request?

- Access `sun.misc.Cleaner` (Java 8) and `jdk.internal.ref.Cleaner` (JDK 9+) by reflection (note: the latter only works if illegal reflective access is allowed)

- Access `sun.misc.Unsafe.invokeCleaner` in Java 9+ instead of `sun.misc.Cleaner` (Java 8)

In order to test anything on JDK 11, I also fixed a few small things, which I include here:

- Fix minor JDK 11 compile issues

- Update scala plugin, Jetty for JDK 11, to facilitate tests too

This doesn't mean JDK 11 tests all pass now, but lots do. Note also that the JDK 9+ solution for the Cleaner has a big caveat.
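For illustration, the Java 9+ path can be sketched with reflection as follows. This is not the code from this patch: the class name `DirectBufferCleanerSketch` and the fallback of returning `false` are assumptions, and the sketch only covers `sun.misc.Unsafe.invokeCleaner`, not the Java 8 `sun.misc.Cleaner` path.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.nio.ByteBuffer;

// Hypothetical sketch; returns false instead of failing when the JDK in use
// does not provide Unsafe.invokeCleaner (for example, Java 8).
final class DirectBufferCleanerSketch {
    // Attempts to free a direct buffer eagerly. The buffer must not be used
    // after a successful call.
    static boolean tryClean(ByteBuffer buffer) {
        if (!buffer.isDirect()) {
            return false; // Heap buffers have no native memory to release.
        }
        try {
            Class<?> unsafeClass = Class.forName("sun.misc.Unsafe");
            Field theUnsafe = unsafeClass.getDeclaredField("theUnsafe");
            theUnsafe.setAccessible(true);
            Object unsafe = theUnsafe.get(null);
            // Looked up reflectively so the class still loads on Java 8,
            // where this method does not exist.
            Method invokeCleaner = unsafeClass.getMethod("invokeCleaner", ByteBuffer.class);
            invokeCleaner.invoke(unsafe, buffer);
            return true;
        } catch (ReflectiveOperationException | RuntimeException e) {
            return false;
        }
    }
}
```

Looking the method up by name keeps a single build usable on both Java 8 and later JDKs, at the cost of losing compile-time checking.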

## How was this patch tested?

Existing tests. Manually tested JDK 11 build and tests, and tests covering this change appear to pass. All Java 8 tests should still pass, but this change alone does not achieve full JDK 11 compatibility.

Closes #22993 from srowen/SPARK-24421.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Currently, we do not have a mechanism to collect driver logs if a user chooses

to run their application in client mode. This is a big issue as admin teams need

to create their own mechanisms to capture driver logs.

This commit adds a logger which, if enabled, adds a local log appender to the

root logger and asynchronously syncs it to an application-specific log file on HDFS

(Spark Driver Log Dir).

Additionally, this collects spark-shell driver logs at INFO level by default.

The change is that instead of setting root logger level to WARN, we will set the

consoleAppender threshold to WARN, in case of spark-shell. This ensures that

only WARN logs are printed on CONSOLE but other log appenders still capture INFO

(or the default log level logs).
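The difference between lowering the logger level and lowering only the console threshold can be shown with a small sketch. It uses `java.util.logging` from the JDK rather than the log4j appenders Spark uses, so the names here (`ConsoleThresholdSketch`, `configure`, the `otherHandler` parameter) are assumptions for illustration only.

```java
import java.util.logging.ConsoleHandler;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.Logger;

// Hypothetical sketch using java.util.logging instead of log4j.
final class ConsoleThresholdSketch {
    static Logger configure(String name, Handler otherHandler) {
        Logger logger = Logger.getLogger(name);
        logger.setUseParentHandlers(false);

        // The logger itself stays at INFO, so INFO records reach every handler.
        logger.setLevel(Level.INFO);

        // Only the console is restricted to WARNING and above.
        ConsoleHandler console = new ConsoleHandler();
        console.setLevel(Level.WARNING);
        logger.addHandler(console);

        // Any other handler (for example, one writing to a file) still sees INFO.
        otherHandler.setLevel(Level.INFO);
        logger.addHandler(otherHandler);
        return logger;
    }
}
```

Had the logger level been set to WARNING instead, the INFO records would be dropped before any handler saw them, which is the behavior the change above moves away from.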

1. Verified that logs are written to local and remote dir

2. Added a unit test case

3. Verified this for spark-shell, client mode and pyspark.

4. Verified in both non-kerberos and kerberos environment

5. Verified with following unexpected termination conditions: Ctrl + C, Driver

OOM, Large Log Files

6. Ran an application in spark-shell and ensured that driver logs were

captured at INFO level

7. Started the application at WARN level, programmatically changed the level to

INFO and ensured that logs on console were printed at INFO level

Closes #22504 from ankuriitg/ankurgupta/SPARK-25118.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

* Implement (optional) use of KryoPool in KryoSerializer, an alternative to the existing implementation of caching a Kryo instance inside KryoSerializerInstance

* Add config key & documentation of spark.kryo.pool in order to turn this on

* Add benchmark KryoSerializerBenchmark to compare new and old implementation

* Add results of benchmark
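To illustrate the pooling idea in general terms, here is a minimal generic pool sketch. It does not use Kryo or its `KryoPool` API; `InstancePoolSketch` and its methods are hypothetical names, and the design (an unbounded lock-free queue of idle instances) is one assumption among several possible ones.

```java
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical generic pool; not Kryo's KryoPool and not Spark's implementation.
final class InstancePoolSketch<T> {
    private final ConcurrentLinkedQueue<T> idle = new ConcurrentLinkedQueue<>();
    private final Supplier<T> factory;

    InstancePoolSketch(Supplier<T> factory) {
        this.factory = factory;
    }

    // Borrows an idle instance (or creates one), runs the body, and always
    // returns the instance to the pool afterwards.
    <R> R withInstance(Function<T, R> body) {
        T instance = idle.poll();
        if (instance == null) {
            instance = factory.get();
        }
        try {
            return body.apply(instance);
        } finally {
            idle.offer(instance);
        }
    }

    int idleCount() {
        return idle.size();
    }
}
```

Compared with caching one instance per serializer instance, a shared pool lets expensive-to-create objects be reused across callers, so sequential calls pay the construction cost only once.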

## How was this patch tested?

Added new tests inside KryoSerializerSuite to test the pool implementation as well as added the pool option to the existing regression testing for SPARK-7766

This is my original work and I license the work to the project under the project’s open source license.

Closes #22855 from patrickbrownsync/kryo-pool.

Authored-by: Patrick Brown <patrick.brown@blyncsy.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Replace APIs that are deprecated in Java 11: `Class.newInstance` with `Class.getConstructor().newInstance()`, and primitive wrapper class constructors with `valueOf` or equivalent.
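As a minimal sketch of the two replacements, assuming a class with a public no-argument constructor (the helper class `DeprecationSketch` and its method names are hypothetical, and wrapping reflective failures in `IllegalStateException` is a choice made for the example):

```java
// Hypothetical helper showing the non-deprecated equivalents.
final class DeprecationSketch {
    // Instead of the deprecated clazz.newInstance(): look up the public
    // no-argument constructor explicitly and invoke it.
    static <T> T instantiate(Class<T> clazz) {
        try {
            return clazz.getConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + clazz.getName(), e);
        }
    }

    // Instead of the deprecated `new Integer(value)`: valueOf may return a
    // cached instance rather than always allocating a new object.
    static Integer box(int value) {
        return Integer.valueOf(value);
    }
}
```

For example, `DeprecationSketch.instantiate(StringBuilder.class)` yields a new, empty `StringBuilder`.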

## How was this patch tested?

Existing tests.

Closes #22988 from srowen/SPARK-25984.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, Spark writes Spark version number into Hive Table properties with `spark.sql.create.version`.

```

parameters:{

spark.sql.sources.schema.part.0={

"type":"struct",

"fields":[{"name":"a","type":"integer","nullable":true,"metadata":{}}]

},

transient_lastDdlTime=1541142761,

spark.sql.sources.schema.numParts=1,

spark.sql.create.version=2.4.0

}

```

This PR aims to write Spark versions to ORC/Parquet file metadata with `org.apache.spark.sql.create.version` because we used `org.apache.` prefix in Parquet metadata already. It's different from Hive Table property key `spark.sql.create.version`, but it seems that we cannot change Hive Table property for backward compatibility.

After this PR, ORC and Parquet files generated by Spark will have the following metadata.

**ORC (`native` and `hive` implementation)**

```

$ orc-tools meta /tmp/o

File Version: 0.12 with ...

...

User Metadata:

org.apache.spark.sql.create.version=3.0.0

```

**PARQUET**

```

$ parquet-tools meta /tmp/p

...

creator: parquet-mr version 1.10.0 (build 031a6654009e3b82020012a18434c582bd74c73a)

extra: org.apache.spark.sql.create.version = 3.0.0

extra: org.apache.spark.sql.parquet.row.metadata = {"type":"struct","fields":[{"name":"id","type":"long","nullable":false,"metadata":{}}]}

```

## How was this patch tested?

Pass the Jenkins with newly added test cases.

This closes #22255.

Closes #22932 from dongjoon-hyun/SPARK-25102.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

HistoryPage.scala counts applications (with a predicate that depends on whether it is displaying incomplete or complete applications) to check whether it must display the dataTable.

Since it only checks whether allAppsSize > 0, we can use the exists method on the iterator instead. This way we stop iterating at the first occurrence found.

This change made a noticeable difference (roughly a 12s improvement in page loading) on our cluster, which runs tens of thousands of jobs per day.
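The difference between counting and an existence check can be sketched as follows. This is a Java illustration of the idea, not the Scala code in HistoryPage.scala; the class and method names are hypothetical.

```java
import java.util.Iterator;
import java.util.function.Predicate;

// Hypothetical sketch contrasting a full count with a short-circuiting check.
final class ExistsSketch {
    // Visits every element even though only "more than zero" matters.
    static <T> boolean anyByCounting(Iterator<T> it, Predicate<T> predicate) {
        int matches = 0;
        while (it.hasNext()) {
            if (predicate.test(it.next())) {
                matches++;
            }
        }
        return matches > 0;
    }

    // Behaves like Scala's Iterator.exists: stops at the first match.
    static <T> boolean exists(Iterator<T> it, Predicate<T> predicate) {
        while (it.hasNext()) {
            if (predicate.test(it.next())) {
                return true;
            }
        }
        return false;
    }
}
```

Both methods return the same answer, but `exists` leaves the rest of the iterator unconsumed, which is where the saving comes from when the first match appears early in a long listing.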

Closes #22982 from Willymontaz/SPARK-25973.

Authored-by: William Montaz <w.montaz@criteo.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

- Remove some AccumulableInfo .apply() methods

- Remove non-label-specific multiclass precision/recall/fScore in favor of accuracy

- Remove toDegrees/toRadians in favor of degrees/radians (SparkR: only deprecated)

- Remove approxCountDistinct in favor of approx_count_distinct (SparkR: only deprecated)

- Remove unused Python StorageLevel constants

- Remove Dataset unionAll in favor of union

- Remove unused multiclass option in libsvm parsing

- Remove references to deprecated spark configs like spark.yarn.am.port

- Remove TaskContext.isRunningLocally

- Remove ShuffleMetrics.shuffle* methods

- Remove BaseReadWrite.context in favor of session

- Remove Column.!== in favor of =!=

- Remove Dataset.explode

- Remove Dataset.registerTempTable

- Remove SQLContext.getOrCreate, setActive, clearActive, constructors

Not touched yet

- everything else in MLLib

- HiveContext

- Anything deprecated more recently than 2.0.0, generally

## How was this patch tested?

Existing tests

Closes #22921 from srowen/SPARK-25908.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: hyukjinkwon <gurwls223@apache.org>

Co-authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Removal of intermediate structures in HighlyCompressedMapStatus will speed up its creation and deserialization time.

https://issues.apache.org/jira/browse/SPARK-25885

## How was this patch tested?

Additional tests are not necessary for the patch.

Closes #22894 from Koraseg/mapStatusesOptimization.

Authored-by: koraseg <artem.kupchinsky@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

JVMs can't allocate arrays of length exactly Int.MaxValue, so ensure we never try to allocate an array that big. This commit changes some defaults and configs to gracefully fall back to something that doesn't require one large array in some cases; in other cases, which will still fail, it simply improves the error message.

Closes #22818 from squito/SPARK-25827.
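A guard of this kind can be sketched as below. The limit `Integer.MAX_VALUE - 15` and the names `ArraySizeSketch`, `MAX_SAFE_ARRAY_LENGTH`, and `allocate` are assumptions for illustration; the exact largest allocatable array length depends on the JVM.

```java
// Hypothetical sketch of a size guard; the limit used here is an assumption.
final class ArraySizeSketch {
    // Conservative bound kept slightly below Integer.MAX_VALUE, since JVMs
    // typically cannot allocate arrays of exactly that length.
    static final int MAX_SAFE_ARRAY_LENGTH = Integer.MAX_VALUE - 15;

    // Fails with a descriptive message instead of attempting an allocation
    // that the JVM is expected to reject.
    static byte[] allocate(long requested) {
        if (requested < 0 || requested > MAX_SAFE_ARRAY_LENGTH) {
            throw new IllegalArgumentException(
                "Cannot allocate an array of " + requested + " bytes; the limit is "
                    + MAX_SAFE_ARRAY_LENGTH);
        }
        return new byte[(int) requested];
    }
}
```

Checking the size up front turns an opaque allocation failure into an error that names both the requested size and the limit.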

Authored-by: Imran Rashid <irashid@cloudera.com>