## What changes were proposed in this pull request?

This PR adds a new collection function: shuffle. It generates a random permutation of the given array. This implementation uses the "inside-out" version of the Fisher-Yates algorithm.
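As a rough illustration of the "inside-out" variant, here is a minimal sketch in plain Scala. The object and method names (`ShuffleSketch`, `shuffled`) are made up for this example and are not the code added by the PR; the sketch only shows how a shuffled copy can be built in one pass without mutating the input.

```scala
import scala.reflect.ClassTag
import scala.util.Random

object ShuffleSketch {
  // Inside-out Fisher-Yates: fills a new array in a single pass and
  // leaves the source array untouched.
  def shuffled[T: ClassTag](source: Array[T], rng: Random): Array[T] = {
    val result = new Array[T](source.length)
    var i = 0
    while (i < source.length) {
      // Pick j uniformly from 0..i (inclusive).
      val j = rng.nextInt(i + 1)
      // Move whatever currently sits at j into the new slot i,
      // then place the incoming element at j.
      if (j != i) result(i) = result(j)
      result(j) = source(i)
      i += 1
    }
    result
  }
}
```

Every element of the input ends up in the output exactly once, so sorting the result gives back the sorted input.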

## How was this patch tested?

New tests are added to CollectionExpressionsSuite.scala and DataFrameFunctionsSuite.scala.

Author: Takuya UESHIN <ueshin@databricks.com>

Author: pkuwm <ihuizhi.lu@gmail.com>

Closes #21802 from ueshin/issues/SPARK-23928/shuffle.

## What changes were proposed in this pull request?

Add a JDBC Option "pushDownPredicate" (default `true`) to allow/disallow predicate push-down in JDBC data source.

## How was this patch tested?

Add a test in `JDBCSuite`

Author: maryannxue <maryannxue@apache.org>

Closes #21875 from maryannxue/spark-24288.

## What changes were proposed in this pull request?

AnalysisBarrier was introduced in SPARK-20392 to improve analysis speed (don't re-analyze nodes that have already been analyzed).

Before AnalysisBarrier, we already had some infrastructure in place, with analysis-specific functions (resolveOperators and resolveExpressions). These functions do not recursively traverse down subplans that are already analyzed (tracked with a mutable boolean flag _analyzed). The issue with the old system was that developers started using transformDown, which does a top-down traversal of the plan tree, because there was no top-down resolution function, and as a result analyzer performance became pretty bad.

In order to fix the issue in SPARK-20392, AnalysisBarrier was introduced as a special node, and for this special node transform/transformUp/transformDown don't traverse down. However, the introduction of this special node caused more trouble than it solved. This implicit node breaks assumptions and code in a few places, and it's hard to know when an analysis barrier would exist and when it wouldn't. A simple search for AnalysisBarrier in PR discussions demonstrates that it is a source of bugs and additional complexity.

Instead, this pull request removes AnalysisBarrier and reverts to the old approach. We added infrastructure in tests that fails explicitly if transform methods are used in the analyzer.
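To make the "skip already-analyzed subplans" idea concrete, here is a minimal sketch in plain Scala. `Plan` is a hypothetical miniature tree, and the immutable `analyzed` field stands in for the mutable `_analyzed` flag; none of this is the actual analyzer code.

```scala
object AnalysisHelperSketch {
  // Hypothetical miniature plan node; `analyzed` marks subtrees that are done.
  final case class Plan(name: String, children: List[Plan] = Nil, analyzed: Boolean = false)

  // Bottom-up rewrite that never descends into a subtree already marked analyzed.
  def resolveOperators(plan: Plan)(rule: PartialFunction[Plan, Plan]): Plan =
    if (plan.analyzed) {
      plan
    } else {
      val rewritten = plan.copy(children = plan.children.map(c => resolveOperators(c)(rule)))
      // Apply the rule where it matches; otherwise keep the node as is.
      rule.applyOrElse(rewritten, (p: Plan) => p)
    }
}
```

With this shape, a rule is applied to unresolved nodes only, and an analyzed subtree is returned untouched even if it contains nodes the rule would match.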

## How was this patch tested?

Added a test suite AnalysisHelperSuite for testing the resolve* methods and transform* methods.

Author: Reynold Xin <rxin@databricks.com>

Author: Xiao Li <gatorsmile@gmail.com>

Closes #21822 from rxin/SPARK-24865.

## What changes were proposed in this pull request?

In most cases, we should use `spark.sessionState.newHadoopConf()` instead of `sparkContext.hadoopConfiguration`, so that the Hadoop configurations specified in the Spark session configuration will come into effect.

Add a rule matching `spark.sparkContext.hadoopConfiguration` or `spark.sqlContext.sparkContext.hadoopConfiguration` to prevent the usage.
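A regex-based check of this kind can be sketched in plain Scala as follows. The pattern and the names `HadoopConfLintSketch` and `violations` are assumptions written for illustration; the rule actually added to the style configuration may be expressed differently.

```scala
object HadoopConfLintSketch {
  // Assumed pattern: matches both forms named above.
  private val Forbidden =
    """spark(\.sqlContext)?\.sparkContext\.hadoopConfiguration""".r

  // Returns the 1-based line numbers that contain a forbidden usage.
  def violations(lines: Seq[String]): Seq[Int] =
    lines.zipWithIndex.collect {
      case (line, idx) if Forbidden.findFirstIn(line).isDefined => idx + 1
    }
}
```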

## How was this patch tested?

Unit test

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #21873 from gengliangwang/linterRule.

## What changes were proposed in this pull request?

This is an extension to the original PR, in which rule exclusion did not work for classes derived from Optimizer, e.g., SparkOptimizer.

To solve this issue, Optimizer and its derived classes will define/override `defaultBatches` and `nonExcludableRules` in order to define its default rule set as well as rules that cannot be excluded by the SQL config. In the meantime, Optimizer's `batches` method is dedicated to the rule exclusion logic and is defined "final".
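The division of responsibilities can be sketched in plain Scala as below. Rules are reduced to strings, the rule and batch names are placeholders, and dropping batches that end up empty is a choice made for this sketch rather than something stated above.

```scala
object RuleExclusionSketch {
  final case class Batch(name: String, rules: Seq[String])

  abstract class Optimizer(excluded: Set[String]) {
    def defaultBatches: Seq[Batch]
    def nonExcludableRules: Seq[String]

    // Final: subclasses customise the two inputs above, never the exclusion logic.
    final def batches: Seq[Batch] = {
      val removable = excluded -- nonExcludableRules
      defaultBatches
        .map(b => b.copy(rules = b.rules.filterNot(removable.contains)))
        .filter(_.rules.nonEmpty) // sketch choice: drop batches left with no rules
    }
  }

  class BaseOptimizer(excluded: Set[String]) extends Optimizer(excluded) {
    def defaultBatches: Seq[Batch] = Seq(Batch("Base", Seq("RuleA", "Required")))
    def nonExcludableRules: Seq[String] = Seq("Required")
  }

  // A derived optimizer only extends the default rule set.
  class ExtendedOptimizer(excluded: Set[String]) extends BaseOptimizer(excluded) {
    override def defaultBatches: Seq[Batch] =
      super.defaultBatches :+ Batch("Extra", Seq("ExtraRule"))
  }
}
```

Because `batches` is final, exclusion applies uniformly to the rules contributed by the derived class.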

## How was this patch tested?

Added UT.

Author: maryannxue <maryannxue@apache.org>

Closes #21876 from maryannxue/rule-exclusion.

## What changes were proposed in this pull request?

This PR aims to do the following.

1. Like the `com.databricks.spark.csv` mapping, we should map `com.databricks.spark.avro` to the built-in Avro data source.

2. Remove the incorrect error message, `Please find an Avro package at ...`.
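The mapping in item 1 amounts to a lookup table consulted before resolving a provider name. A minimal sketch in plain Scala, where the target names `"csv"` and `"avro"` and the function name `resolveProvider` are assumptions for illustration:

```scala
object ProviderMappingSketch {
  // Assumed target names; the real mapping may point at class names instead.
  private val backwardCompatibility: Map[String, String] = Map(
    "com.databricks.spark.csv" -> "csv",
    "com.databricks.spark.avro" -> "avro")

  // Legacy names are rewritten; anything else passes through unchanged.
  def resolveProvider(name: String): String =
    backwardCompatibility.getOrElse(name, name)
}
```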

## How was this patch tested?

Pass the newly added tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #21878 from dongjoon-hyun/SPARK-24924.

## What changes were proposed in this pull request?

If we use the `reverse` function on an array of a primitive type that contains `null`, and the child array is `UnsafeArrayData`, the function returns a wrong result. This is because `UnsafeArrayData` doesn't define the behavior of re-assignment; in particular, we can't set a valid value after we set `null`.

## How was this patch tested?

Added some tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #21830 from ueshin/issues/SPARK-24878/fix_reverse.

## What changes were proposed in this pull request?

```Scala
val udf1 = udf({(x: Int, y: Int) => x + y})
val df = spark.range(0, 3).toDF("a")
  .withColumn("b", udf1($"a", udf1($"a", lit(10))))
df.cache()
df.write.saveAsTable("t")
```

Cache is not being used because the plans do not match with the cached plan. This is a regression caused by the changes we made in AnalysisBarrier, since not all the Analyzer rules are idempotent.

## How was this patch tested?

Added a test.

Also found a bug in the DSV1 write path. This is not a regression. Thus, opened a separate JIRA https://issues.apache.org/jira/browse/SPARK-24869

Author: Xiao Li <gatorsmile@gmail.com>

Closes #21821 from gatorsmile/testMaster22.

## What changes were proposed in this pull request?

In addition to the Spark setting `spark.sql.sources.partitionOverwriteMode`, also allow setting `partitionOverwriteMode` per write.

## How was this patch tested?

Added unit test in InsertSuite

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Koert Kuipers <koert@tresata.com>

Closes #21818 from koertkuipers/feat-partition-overwrite-mode-per-write.

## What changes were proposed in this pull request?

In this PR, I propose to extend the `StructType`/`StructField` classes with a new method `toDDL`, which converts a value of the `StructType`/`StructField` type to a string formatted in DDL style. The resulting string can be used in a table creation statement.

The `toDDL` method of `StructField` is reused in `SHOW CREATE TABLE`. In this way the PR fixes the bug of unquoted names of nested fields.
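To show why routing nested fields through the same quoting logic matters, here is a minimal sketch in plain Scala. The types below are simplified stand-ins that reuse the names `StructType` and `StructField` for readability; the exact output format of the real `toDDL` is an assumption here.

```scala
object ToDdlSketch {
  // Backtick-quote an identifier, doubling any embedded backticks.
  private def quote(name: String): String = "`" + name.replace("`", "``") + "`"

  sealed trait DataType { def sql: String }
  case object IntType extends DataType { val sql = "INT" }
  case object StringType extends DataType { val sql = "STRING" }

  final case class StructField(name: String, dataType: DataType) {
    def toDDL: String = quote(name) + " " + dataType.sql
  }

  final case class StructType(fields: Seq[StructField]) extends DataType {
    // Nested field names are quoted as well.
    def sql: String =
      fields.map(f => quote(f.name) + ": " + f.dataType.sql).mkString("STRUCT<", ", ", ">")
    def toDDL: String = fields.map(_.toDDL).mkString(",")
  }
}
```

A nested field named `x.y` stays a single quoted identifier instead of being read as a path.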

## How was this patch tested?

I added a test for the new method and two round-trip tests: `fromDDL` -> `toDDL` and `toDDL` -> `fromDDL`.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #21803 from MaxGekk/to-ddl.

## What changes were proposed in this pull request?

Improve the type-mismatch message of the `IN` predicate. Before this patch:

```sql
Mismatched columns:
[(, t, 4, ., `, t, 4, a, `, :, d, o, u, b, l, e, ,, , t, 5, ., `, t, 5, a, `, :, d, e, c, i, m, a, l, (, 1, 8, ,, 0, ), ), (, t, 4, ., `, t, 4, c, `, :, s, t, r, i, n, g, ,, , t, 5, ., `, t, 5, c, `, :, b, i, g, i, n, t, )]
```

After this patch:

```sql
Mismatched columns:
[(t4.`t4a`:double, t5.`t5a`:decimal(18,0)), (t4.`t4c`:string, t5.`t5c`:bigint)]
```

## How was this patch tested?

unit tests

Author: Yuming Wang <yumwang@ebay.com>

Closes #21863 from wangyum/SPARK-18874.

## What changes were proposed in this pull request?

Add support for custom encoding on csv writer, see https://issues.apache.org/jira/browse/SPARK-19018

## How was this patch tested?

Added two unit tests in CSVSuite

Author: crafty-coder <carlospb86@gmail.com>

Author: Carlos <crafty-coder@users.noreply.github.com>

Closes #20949 from crafty-coder/master.

## What changes were proposed in this pull request?

Thanks to henryr for the original idea at https://github.com/apache/spark/pull/21049

Description from the original PR:

Subqueries (at least in SQL) have 'bag of tuples' semantics. Ordering them is therefore redundant (unless combined with a limit).

This patch removes the top sort operators from the subquery plans.

This closes https://github.com/apache/spark/pull/21049.
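The sort removal described above can be sketched on a toy plan tree in plain Scala. The node types and `removeTopSorts` are hypothetical simplifications, not the rule from the patch; the point is that a `Limit` stops the rewrite, because the ordering below it decides which rows survive.

```scala
object SubquerySortSketch {
  sealed trait Plan
  final case class Scan(table: String) extends Plan
  final case class Project(columns: Seq[String], child: Plan) extends Plan
  final case class Sort(order: Seq[String], child: Plan) extends Plan
  final case class Limit(n: Int, child: Plan) extends Plan

  // Strip Sort nodes from the top of a subquery plan, looking through Project.
  // Any other node (including Limit) ends the rewrite.
  def removeTopSorts(plan: Plan): Plan = plan match {
    case Sort(_, child)       => removeTopSorts(child)
    case Project(cols, child) => Project(cols, removeTopSorts(child))
    case other                => other
  }
}
```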

## How was this patch tested?

Added test cases in SubquerySuite to cover in, exists and scalar subqueries.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #21853 from dilipbiswal/SPARK-23957.

## What changes were proposed in this pull request?

When `trueValue` and `falseValue` are semantically equivalent, the condition expression in `if` can be removed to avoid extra computation at runtime.
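A minimal sketch of this simplification on a toy expression tree, in plain Scala. Structural equality of case classes stands in for semantic equivalence, and the sketch assumes the condition has no side effects; both are simplifying assumptions, and the expression types are hypothetical.

```scala
object IfSimplificationSketch {
  sealed trait Expr
  final case class Col(name: String) extends Expr
  final case class Lit(value: Int) extends Expr
  final case class Add(left: Expr, right: Expr) extends Expr
  final case class GreaterThan(left: Expr, right: Expr) extends Expr
  final case class If(predicate: Expr, trueValue: Expr, falseValue: Expr) extends Expr

  def simplify(e: Expr): Expr = e match {
    case If(p, t, f) =>
      val (st, sf) = (simplify(t), simplify(f))
      // Both branches agree, so the predicate never needs to be evaluated.
      if (st == sf) st else If(simplify(p), st, sf)
    case Add(l, r)         => Add(simplify(l), simplify(r))
    case GreaterThan(l, r) => GreaterThan(simplify(l), simplify(r))
    case leaf              => leaf
  }
}
```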

## How was this patch tested?

Test added.

Author: DB Tsai <d_tsai@apple.com>

Closes #21848 from dbtsai/short-circuit-if.

## What changes were proposed in this pull request?

The HandleNullInputsForUDF would always add a new `If` node every time it is applied. That would cause a difference between the same plan being analyzed once and being analyzed twice (or more), thus raising issues like plan not matched in the cache manager. The solution is to mark the arguments as null-checked, which is to add a "KnownNotNull" node above those arguments, when adding the UDF under an `If` node, because clearly the UDF will not be called when any of those arguments is null.
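The idempotency argument can be checked on a toy model in plain Scala. Everything here is hypothetical: `IfAnyNull` collapses the `If` plus null checks into one node, and every argument is treated as needing a null check, which is a simplification.

```scala
object NullCheckRuleSketch {
  sealed trait Expr
  final case class Col(name: String) extends Expr
  final case class KnownNotNull(child: Expr) extends Expr
  final case class IsNull(child: Expr) extends Expr
  final case class Udf(name: String, args: Seq[Expr]) extends Expr
  // Evaluates to null if any check is true, otherwise to `guarded`.
  final case class IfAnyNull(checks: Seq[Expr], guarded: Expr) extends Expr

  def handleNullInputs(e: Expr): Expr = e match {
    // Only fire when some argument has not been marked as null-checked yet.
    case Udf(name, args) if args.exists(!_.isInstanceOf[KnownNotNull]) =>
      val unchecked = args.filterNot(_.isInstanceOf[KnownNotNull])
      val marked = args.map {
        case k: KnownNotNull => k
        case a               => KnownNotNull(a)
      }
      IfAnyNull(unchecked.map(IsNull(_)), Udf(name, marked))
    case IfAnyNull(checks, guarded) => IfAnyNull(checks, handleNullInputs(guarded))
    case other => other
  }
}
```

Applying the rule a second time finds every argument already wrapped, so the plan is unchanged and still matches a cached copy.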

## How was this patch tested?

Add new tests under sql/UDFSuite and AnalysisSuite.

Author: maryannxue <maryannxue@apache.org>

Closes #21851 from maryannxue/spark-24891.

## What changes were proposed in this pull request?

This updates the DataSourceV2 API to use InternalRow instead of Row for the default case with no scan mix-ins.

Support for readers that produce Row is added through SupportsDeprecatedScanRow, which matches the previous API. Readers that used Row now implement this class and should be migrated to InternalRow.

Readers that previously implemented SupportsScanUnsafeRow have been migrated to use no SupportsScan mix-ins and produce InternalRow.

## How was this patch tested?

This uses existing tests.

Author: Ryan Blue <blue@apache.org>

Closes #21118 from rdblue/SPARK-23325-datasource-v2-internal-row.

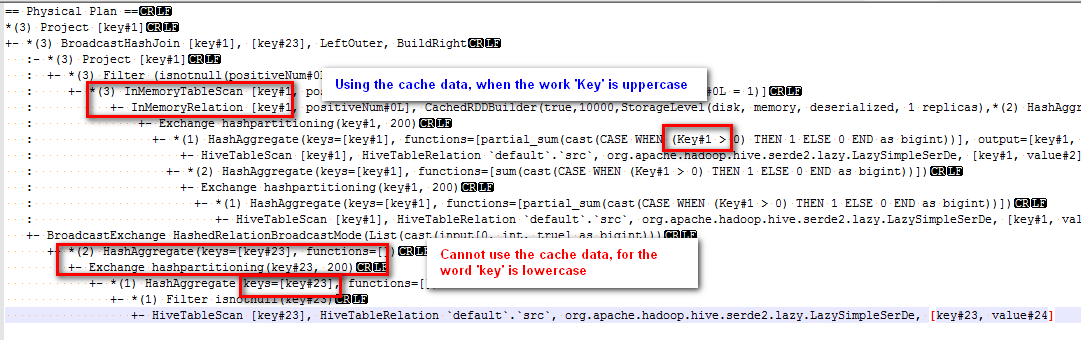

## What changes were proposed in this pull request?

Modified plan canonicalization so that it is not case-sensitive.

Before this PR, the cache did not work correctly when the SQL contained identifiers that differ only in letter case, for example:

```scala
sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING) USING hive")

sql("select key, sum(case when Key > 0 then 1 else 0 end) as positiveNum " +
  "from src group by key").cache().createOrReplaceTempView("src_cache")

sql(
  s"""select a.key
      from
      (select key from src_cache where positiveNum = 1)a
      left join
      (select key from src_cache )b
      on a.key=b.key
   """).explain
```

The physical plan of the sql is:

The subquery "select key from src_cache where positiveNum = 1" on the left of join can use the cache data, but the subquery "select key from src_cache" on the right of join cannot use the cache data.

## How was this patch tested?

Newly added test.

Author: 10129659 <chen.yanshan@zte.com.cn>

Closes #21823 from eatoncys/canonicalized.

## What changes were proposed in this pull request?

Streaming queries with watermarks do not work with Trigger.Once because of the following.

- Watermark is updated in the driver memory after a batch completes, but it is persisted to checkpoint (in the offset log) only when the next batch is planned

- With Trigger.Once, the query terminates as soon as one batch has completed. Hence, the updated watermark is never persisted anywhere.

The simple solution is to persist the updated watermark value in the commit log when a batch is marked as completed. Then the next batch, in the next trigger.once run can pick it up from the commit log.

## How was this patch tested?

new unit tests

Co-authored-by: Tathagata Das <tathagata.das1565@gmail.com>

Co-authored-by: c-horn <chorn4033@gmail.com>

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #21746 from tdas/SPARK-24699.

This commit adds the `cascadeTruncate` option to the JDBC datasource API, for databases that support this functionality (PostgreSQL and Oracle at the moment). This allows for applying a cascading truncate that affects tables that have foreign key constraints on the table being truncated.

## What changes were proposed in this pull request?

Add `cascadeTruncate` option to JDBC datasource API. Allow this to affect the `TRUNCATE` query for databases that support this option.
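The dialect-dependent behaviour can be sketched in plain Scala as follows. The `Dialect` type, `truncateQuery`, and the exact SQL strings are assumptions for illustration; the real per-dialect syntax may differ in detail.

```scala
object TruncateQuerySketch {
  sealed trait Dialect
  case object PostgreSQL extends Dialect
  case object Oracle extends Dialect
  case object OtherDatabase extends Dialect

  // Only dialects that support cascading truncation honour the flag;
  // every other dialect falls back to the plain statement.
  def truncateQuery(dialect: Dialect, table: String, cascade: Boolean): String =
    dialect match {
      case PostgreSQL | Oracle if cascade => s"TRUNCATE TABLE $table CASCADE"
      case _                              => s"TRUNCATE TABLE $table"
    }
}
```

Enabling the option for an unsupported database therefore still yields a valid query.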

## How was this patch tested?

Existing tests for `truncateQuery` were updated. Also, an additional test was added to ensure that the correct syntax was applied, and that enabling the config for databases that do not support this option does not result in invalid queries.

Author: Daniel van der Ende <daniel.vanderende@gmail.com>

Closes #20057 from danielvdende/SPARK-22880.

## What changes were proposed in this pull request?

### What's the problem?

In some cases, a scalar subquery can throw an NPE, which is raised on the execution side.

```

java.lang.NullPointerException

at org.apache.spark.sql.execution.FileSourceScanExec.<init>(DataSourceScanExec.scala:169)

at org.apache.spark.sql.execution.FileSourceScanExec.doCanonicalize(DataSourceScanExec.scala:526)

at org.apache.spark.sql.execution.FileSourceScanExec.doCanonicalize(DataSourceScanExec.scala:159)

at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized$lzycompute(QueryPlan.scala:211)

at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized(QueryPlan.scala:210)

at org.apache.spark.sql.catalyst.plans.QueryPlan$$anonfun$3.apply(QueryPlan.scala:225)

at org.apache.spark.sql.catalyst.plans.QueryPlan$$anonfun$3.apply(QueryPlan.scala:225)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.TraversableLike$$anonfun$map$1.apply(TraversableLike.scala:234)

at scala.collection.immutable.List.foreach(List.scala:392)

at scala.collection.TraversableLike$class.map(TraversableLike.scala:234)

at scala.collection.immutable.List.map(List.scala:296)

at org.apache.spark.sql.catalyst.plans.QueryPlan.doCanonicalize(QueryPlan.scala:225)

at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized$lzycompute(QueryPlan.scala:211)

at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized(QueryPlan.scala:210)

at org.apache.spark.sql.catalyst.plans.QueryPlan.sameResult(QueryPlan.scala:258)

at org.apache.spark.sql.execution.ScalarSubquery.semanticEquals(subquery.scala:58)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions$Expr.equals(EquivalentExpressions.scala:36)

at scala.collection.mutable.HashTable$class.elemEquals(HashTable.scala:364)

at scala.collection.mutable.HashMap.elemEquals(HashMap.scala:40)

at scala.collection.mutable.HashTable$class.scala$collection$mutable$HashTable$$findEntry0(HashTable.scala:139)

at scala.collection.mutable.HashTable$class.findEntry(HashTable.scala:135)

at scala.collection.mutable.HashMap.findEntry(HashMap.scala:40)

at scala.collection.mutable.HashMap.get(HashMap.scala:70)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExpr(EquivalentExpressions.scala:56)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExprTree(EquivalentExpressions.scala:97)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions$$anonfun$addExprTree$1.apply(EquivalentExpressions.scala:98)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions$$anonfun$addExprTree$1.apply(EquivalentExpressions.scala:98)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExprTree(EquivalentExpressions.scala:98)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext$$anonfun$subexpressionElimination$1.apply(CodeGenerator.scala:1102)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext$$anonfun$subexpressionElimination$1.apply(CodeGenerator.scala:1102)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.subexpressionElimination(CodeGenerator.scala:1102)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.generateExpressions(CodeGenerator.scala:1154)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateUnsafeProjection$.createCode(GenerateUnsafeProjection.scala:270)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateUnsafeProjection$.create(GenerateUnsafeProjection.scala:319)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateUnsafeProjection$.generate(GenerateUnsafeProjection.scala:308)

at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:181)

at org.apache.spark.sql.execution.ProjectExec$$anonfun$9.apply(basicPhysicalOperators.scala:71)

at org.apache.spark.sql.execution.ProjectExec$$anonfun$9.apply(basicPhysicalOperators.scala:70)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndexInternal$1$$anonfun$apply$24.apply(RDD.scala:818)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndexInternal$1$$anonfun$apply$24.apply(RDD.scala:818)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)

at org.apache.spark.scheduler.Task.run(Task.scala:109)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:367)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

```

### How does this happen?

Here is what appears to happen:

1. A scalar subquery is created (for instance `SELECT (SELECT id FROM foo)`).

2. Codegen tries to extract common expressions (via `CodeGenerator.subexpressionElimination`) so that the code generated for them can be reused.

3. During this, it appears to extract expressions that can be reused (via `EquivalentExpressions.addExprTree`)

b2deef64f6/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/codegen/CodeGenerator.scala (L1102)

4. During this, if the hash (`EquivalentExpressions.Expr.hashCode`) happened to be the same at `EquivalentExpressions.addExpr` anyhow, `EquivalentExpressions.Expr.equals` is called to identify object in the same hash, which eventually calls `semanticEquals` in `ScalarSubquery`

087879a77a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala (L54)

087879a77a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala (L36)

5. `ScalarSubquery`'s `semanticEquals` needs `SubqueryExec`'s `sameResult`

77a2fc5b52/sql/core/src/main/scala/org/apache/spark/sql/execution/subquery.scala (L58)

6. `SubqueryExec`'s `sameResult` requires a canonicalized plan which calls `FileSourceScanExec`'s `doCanonicalize`

e008ad1752/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/QueryPlan.scala (L258)

7. `FileSourceScanExec`'s `doCanonicalize` requires `FileSourceScanExec`'s `relation`, but it appears to be `transient`, so it becomes `null`.

e76b0124fb/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala (L527)

e76b0124fb/sql/core/src/main/scala/org/apache/spark/sql/execution/DataSourceScanExec.scala (L160)

8. NPE is thrown.

Step 1 runs on the driver side; steps 2 through 8 run on the executor side.

Note that in most cases this looks fine, because we usually call:

087879a77a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala (L40)

which makes a canonicalized plan via:

b045315e5d/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/Expression.scala (L192)

77a2fc5b52/sql/core/src/main/scala/org/apache/spark/sql/execution/subquery.scala (L52)

### How to reproduce?

This appears to be what happens. I can reproduce it in a somewhat messy way:

```diff
diff --git a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala
index 8d06804ce1e..d25fc9a7ba9 100644
--- a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala
+++ b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/EquivalentExpressions.scala
@@ -37,7 +37,9 @@ class EquivalentExpressions {
       case _ => false
     }
-    override def hashCode: Int = e.semanticHash()
+    override def hashCode: Int = {
+      1
+    }
   }
```

```scala
spark.range(1).write.mode("overwrite").parquet("/tmp/foo")
spark.read.parquet("/tmp/foo").createOrReplaceTempView("foo")
spark.conf.set("spark.sql.codegen.wholeStage", false)
sql("SELECT (SELECT id FROM foo) == (SELECT id FROM foo)").collect()
```

### How does this PR fix?

- Make all variables that access `FileSourceScanExec`'s `relation` `lazy val`s so that we avoid the NPE. This is a temporary fix.

- Allow `makeCopy` in `SparkPlan` without a Spark session too. It looks like this can still be reached on the executor side. For instance:

```

at org.apache.spark.sql.execution.SparkPlan.makeCopy(SparkPlan.scala:70)

at org.apache.spark.sql.execution.SparkPlan.makeCopy(SparkPlan.scala:47)

at org.apache.spark.sql.catalyst.trees.TreeNode.withNewChildren(TreeNode.scala:233)

at org.apache.spark.sql.catalyst.plans.QueryPlan.doCanonicalize(QueryPlan.scala:243)

at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized$lzycompute(QueryPlan.scala:211)

at org.apache.spark.sql.catalyst.plans.QueryPlan.canonicalized(QueryPlan.scala:210)

at org.apache.spark.sql.catalyst.plans.QueryPlan.sameResult(QueryPlan.scala:258)

at org.apache.spark.sql.execution.ScalarSubquery.semanticEquals(subquery.scala:58)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions$Expr.equals(EquivalentExpressions.scala:36)

at scala.collection.mutable.HashTable$class.elemEquals(HashTable.scala:364)

at scala.collection.mutable.HashMap.elemEquals(HashMap.scala:40)

at scala.collection.mutable.HashTable$class.scala$collection$mutable$HashTable$$findEntry0(HashTable.scala:139)

at scala.collection.mutable.HashTable$class.findEntry(HashTable.scala:135)

at scala.collection.mutable.HashMap.findEntry(HashMap.scala:40)

at scala.collection.mutable.HashMap.get(HashMap.scala:70)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExpr(EquivalentExpressions.scala:54)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExprTree(EquivalentExpressions.scala:95)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions$$anonfun$addExprTree$1.apply(EquivalentExpressions.scala:96)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions$$anonfun$addExprTree$1.apply(EquivalentExpressions.scala:96)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.sql.catalyst.expressions.EquivalentExpressions.addExprTree(EquivalentExpressions.scala:96)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext$$anonfun$subexpressionElimination$1.apply(CodeGenerator.scala:1102)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext$$anonfun$subexpressionElimination$1.apply(CodeGenerator.scala:1102)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.subexpressionElimination(CodeGenerator.scala:1102)

at org.apache.spark.sql.catalyst.expressions.codegen.CodegenContext.generateExpressions(CodeGenerator.scala:1154)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateUnsafeProjection$.createCode(GenerateUnsafeProjection.scala:270)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateUnsafeProjection$.create(GenerateUnsafeProjection.scala:319)

at org.apache.spark.sql.catalyst.expressions.codegen.GenerateUnsafeProjection$.generate(GenerateUnsafeProjection.scala:308)

at org.apache.spark.sql.catalyst.expressions.UnsafeProjection$.create(Projection.scala:181)

at org.apache.spark.sql.execution.ProjectExec$$anonfun$9.apply(basicPhysicalOperators.scala:71)

at org.apache.spark.sql.execution.ProjectExec$$anonfun$9.apply(basicPhysicalOperators.scala:70)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndexInternal$1$$anonfun$apply$24.apply(RDD.scala:818)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndexInternal$1$$anonfun$apply$24.apply(RDD.scala:818)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)

at org.apache.spark.scheduler.Task.run(Task.scala:109)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:367)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

```

This PR takes over https://github.com/apache/spark/pull/20856.
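The effect of the `lazy val` change can be illustrated with a small plain-Scala sketch. The classes are hypothetical, and passing `null` stands in for a `transient` field that was not restored on the executor.

```scala
object LazyRelationSketch {
  final class Relation(val partitionColumns: Seq[String])

  // Strict val: dereferences `relation` while the object is being constructed.
  final class EagerScan(relation: Relation) {
    val partitions: Seq[String] = relation.partitionColumns
    def output: Seq[String] = Seq("id")
  }

  // Lazy val: `relation` is only dereferenced on first access to `partitions`.
  final class LazyScan(relation: Relation) {
    lazy val partitions: Seq[String] = relation.partitionColumns
    def output: Seq[String] = Seq("id")
  }
}
```

With a null `relation`, constructing `EagerScan` fails immediately, while `LazyScan` can be constructed and used as long as `partitions` is never touched. The underlying null is still there, which is consistent with calling this a temporary fix.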

## How was this patch tested?

Tested manually, and a unit test was added.

Closes #20856

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21815 from HyukjinKwon/SPARK-23731.

## What changes were proposed in this pull request?

Enhances the parser and analyzer to support ANSI-compliant syntax for GROUPING SETS. As part of this change, we derive the grouping expressions from the user-supplied groupings in the grouping sets clause.

```SQL
SELECT c1, c2, max(c3)
FROM t1
GROUP BY GROUPING SETS ((c1), (c1, c2))
```
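One way to picture "deriving the grouping expressions" is as the distinct union of everything mentioned in the grouping sets. The plain-Scala sketch below works on column names only; the function names are hypothetical and this is not the analyzer code.

```scala
object GroupingSetsSketch {
  // Distinct union of all expressions in the grouping sets, in first-seen order.
  def groupByExprs(groupingSets: Seq[Seq[String]]): Seq[String] =
    groupingSets.flatten.distinct

  // For each grouping set, which derived expressions are kept (Some)
  // and which are nulled out (None).
  def expand(groupingSets: Seq[Seq[String]]): Seq[Seq[Option[String]]] = {
    val all = groupByExprs(groupingSets)
    groupingSets.map(set => all.map(e => if (set.contains(e)) Some(e) else None))
  }
}
```

For the query above, `groupByExprs(Seq(Seq("c1"), Seq("c1", "c2")))` gives `c1, c2`, so no explicit `GROUP BY c1, c2` list is needed.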

## How was this patch tested?

Added tests in SQLQueryTestSuite and ResolveGroupingAnalyticsSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #21813 from dilipbiswal/spark-24424.

## What changes were proposed in this pull request?

As stated in https://github.com/apache/spark/pull/21321, in the error messages we should use `catalogString`. This is not the case, as SPARK-22893 used `simpleString` in order to have the same representation everywhere and it missed some places.

The PR unifies the messages by always using the `catalogString` representation of the data types in the messages.

## How was this patch tested?

existing/modified UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21804 from mgaido91/SPARK-24268_catalog.

## What changes were proposed in this pull request?

`RateSourceSuite` may leave garbage files under `sql/core/dummy`; we should use a correct temp directory instead.

## How was this patch tested?

test only

Author: Wenchen Fan <wenchen@databricks.com>

Closes #21817 from cloud-fan/minor.

## What changes were proposed in this pull request?

Currently, the group state of a user-defined type is encoded as top-level columns in the UnsafeRows stored in the state store. The timeout timestamp is also saved (when needed) as the last top-level column. Since the group state is serialized to top-level columns, you cannot save "null" as a value of state (setting null in all the top-level columns is not equivalent). So we don't let the user set the timeout without initializing the state for a key. Based on user experience, this leads to confusion.

This PR changes the row format such that the state is saved as nested columns. This would allow the state to be set to null, and avoid these confusing corner cases. However, queries recovering from an existing checkpoint will use the previous format to maintain compatibility with existing production queries.

## How was this patch tested?

Refactored existing end-to-end tests and added new tests for explicitly testing obj-to-row conversion for both state formats.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #21739 from tdas/SPARK-22187-1.

## What changes were proposed in this pull request?

Currently the same Parquet footer is read twice in the function `buildReaderWithPartitionValues` of ParquetFileFormat if filter push down is enabled.

Fix it with simple changes.

## How was this patch tested?

Unit test

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #21814 from gengliangwang/parquetFooter.

## What changes were proposed in this pull request?

This patch proposes splitting the configuration for how many state batches to retain into two pieces: files and in-memory (cache). While this patch reuses the existing configuration for files, it introduces a new configuration, "spark.sql.streaming.maxBatchesToRetainInMemory", to configure the maximum number of batches to retain in memory.

## How was this patch tested?

Applied this patch on top of SPARK-24441 (https://github.com/apache/spark/pull/21469) and manually tested it with various workloads to ensure that the overall size of the states in memory is around 2x or less of the size of the latest version of the state, while it was 10x ~ 80x before applying the patch.

Author: Jungtaek Lim <kabhwan@gmail.com>

Closes #21700 from HeartSaVioR/SPARK-24717.

## What changes were proposed in this pull request?

It's a little tricky and fragile to use a dummy filter to switch codegen on/off. For now we should use local/cached relation to switch. In the future when we are able to use a config to turn off codegen, we shall use that.

## How was this patch tested?

test only PR.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #21795 from cloud-fan/follow.

## What changes were proposed in this pull request?

1. Extend the Parser to enable parsing a column list as the pivot column.

2. Extend the Parser and the Pivot node to enable parsing complex expressions with aliases as the pivot value.

3. Add type check and constant check in Analyzer for Pivot node.

## How was this patch tested?

Add tests in pivot.sql

Author: maryannxue <maryannxue@apache.org>

Closes #21720 from maryannxue/spark-24164.

## What changes were proposed in this pull request?

On line 1299 of DatasetSuite.scala, the test name

test("SPARK-19896: cannot have circular references in in case class")

contains the duplicated words "in in". We can get rid of one.

## How was this patch tested?

Author: 韩田田00222924 <han.tiantian@zte.com.cn>

Closes #21767 from httfighter/inin.

## What changes were proposed in this pull request?

This PR fixes lint-java and the Scala 2.12 build.

lint-java:

```
[ERROR] src/test/resources/log4j.properties:[0] (misc) NewlineAtEndOfFile: File does not end with a newline.
```

Scala 2.12 build:

```
[error] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/continuous/ContinuousCoalesceRDD.scala:121: overloaded method value addTaskCompletionListener with alternatives:
[error]   (f: org.apache.spark.TaskContext => Unit)org.apache.spark.TaskContext <and>
[error]   (listener: org.apache.spark.util.TaskCompletionListener)org.apache.spark.TaskContext
[error]  cannot be applied to (org.apache.spark.TaskContext => java.util.List[Runnable])
[error]       context.addTaskCompletionListener { ctx =>
[error]               ^
```

## How was this patch tested?

Manually executed lint-java and Scala 2.12 build in my local environment.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #21801 from ueshin/issues/SPARK-24386_24768/fix_build.

## What changes were proposed in this pull request?

This issue aims to upgrade Apache ORC library from 1.4.4 to 1.5.2 in order to bring the following benefits into Apache Spark.

- [ORC-91](https://issues.apache.org/jira/browse/ORC-91) Support for variable length blocks in HDFS (The current space wasted in ORC to padding is known to be 5%.)

- [ORC-344](https://issues.apache.org/jira/browse/ORC-344) Support for using Decimal64ColumnVector

In addition to that, Apache Hive 3.1 and 3.2 will use ORC 1.5.1 ([HIVE-19669](https://issues.apache.org/jira/browse/HIVE-19465)) and 1.5.2 ([HIVE-19792](https://issues.apache.org/jira/browse/HIVE-19792)) respectively. This will improve the compatibility between Apache Spark and Apache Hive by sharing the common library.

## How was this patch tested?

Pass the Jenkins with all existing tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#21582 from dongjoon-hyun/SPARK-24576.

## What changes were proposed in this pull request?

Remove the non-negative checks of window start time to make window support negative start time, and add a check to guarantee the absolute value of start time is less than slide duration.
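The relaxed check can be illustrated with a minimal sketch in plain Scala. The object name, the method name, and the microsecond units are hypothetical, and the positivity check on the slide duration is an assumption added only to keep the example well defined; only the absolute-value condition comes from the description above.

```scala
object WindowStartCheck {
  // Returns an error message when the parameters are rejected, None otherwise.
  // Hypothetical helper: not the actual Spark implementation.
  def validate(slideDurationMicros: Long, startTimeMicros: Long): Option[String] =
    if (slideDurationMicros <= 0) {
      // Assumption for this sketch: a slide duration must be positive.
      Some("slide duration must be positive")
    } else if (math.abs(startTimeMicros) >= slideDurationMicros) {
      // The check described above: |start time| < slide duration.
      Some("the absolute value of the start time must be less than the slide duration")
    } else {
      None // negative start times are accepted as long as the bound holds
    }
}
```

With this sketch, a start time of `-3` with a slide of `10` is accepted, while `-10` is rejected.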

## How was this patch tested?

New unit tests.

Author: HanShuliang <kevinzwx1992@gmail.com>

Closes#18903 from KevinZwx/dev.

## What changes were proposed in this pull request?

We have some functions that need to be aware of the nullability of all their children, such as `CreateArray`, `CreateMap`, `Concat`, and so on. Currently we add casts to fix the nullability, but the casts might be removed during the optimization phase.

After discussion, we decided not to add extra casts just to fix the nullability of the nested types, and to let the functions handle it themselves.

## How was this patch tested?

Modified and added some tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes#21704 from ueshin/issues/SPARK-24734/concat_containsnull.

## What changes were proposed in this pull request?

Support Decimal type push down to the parquet data sources.

The Decimal comparator used is: [`BINARY_AS_SIGNED_INTEGER_COMPARATOR`](c6764c4a08/parquet-column/src/main/java/org/apache/parquet/schema/PrimitiveComparator.java (L224-L292)).

## How was this patch tested?

unit tests and manual tests.

**manual tests**:

```scala

spark.range(10000000).selectExpr("id", "cast(id as decimal(9)) as d1", "cast(id as decimal(9, 2)) as d2", "cast(id as decimal(18)) as d3", "cast(id as decimal(18, 4)) as d4", "cast(id as decimal(38)) as d5", "cast(id as decimal(38, 18)) as d6").coalesce(1).write.option("parquet.block.size", 1048576).parquet("/tmp/spark/parquet/decimal")

val df = spark.read.parquet("/tmp/spark/parquet/decimal/")

spark.sql("set spark.sql.parquet.filterPushdown.decimal=true")

// Only read about 1 MB data

df.filter("d2 = 10000").show

// Only read about 1 MB data

df.filter("d4 = 10000").show

spark.sql("set spark.sql.parquet.filterPushdown.decimal=false")

// Read 174.3 MB data

df.filter("d2 = 10000").show

// Read 174.3 MB data

df.filter("d4 = 10000").show

```

Author: Yuming Wang <yumwang@ebay.com>

Closes#21556 from wangyum/SPARK-24549.

## What changes were proposed in this pull request?

In the PR, I propose to move `testFile()` to the common trait `SQLTestUtilsBase` and wrap test files in `AvroSuite` by the method `testFile()` which returns full paths to test files in the resource folder.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes#21773 from MaxGekk/test-file.

## What changes were proposed in this pull request?

This PR modifies the code to project the required data from the parsed CSV data when column pruning is disabled.

In the current master, the exception below is thrown if `spark.sql.csv.parser.columnPruning.enabled` is false. This is because the required format and the parsed CSV format differ from each other:

```

./bin/spark-shell --conf spark.sql.csv.parser.columnPruning.enabled=false

scala> val dir = "/tmp/spark-csv/csv"

scala> spark.range(10).selectExpr("id % 2 AS p", "id").write.mode("overwrite").partitionBy("p").csv(dir)

scala> spark.read.csv(dir).selectExpr("sum(p)").collect()

18/06/25 13:48:46 ERROR Executor: Exception in task 2.0 in stage 2.0 (TID 7)

java.lang.ClassCastException: org.apache.spark.unsafe.types.UTF8String cannot be cast to java.lang.Integer

at scala.runtime.BoxesRunTime.unboxToInt(BoxesRunTime.java:101)

at org.apache.spark.sql.catalyst.expressions.BaseGenericInternalRow$class.getInt(rows.scala:41)

...

```

## How was this patch tested?

Added tests in `CSVSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#21657 from maropu/SPARK-24676.

## What changes were proposed in this pull request?

Support `Timestamp` pushdown to the Parquet data source.

Only `TIMESTAMP_MICROS` and `TIMESTAMP_MILLIS` support push down.

## How was this patch tested?

unit tests and benchmark tests

Author: Yuming Wang <yumwang@ebay.com>

Closes#21741 from wangyum/SPARK-24718.

## What changes were proposed in this pull request?

The original pr is: https://github.com/apache/spark/pull/18424

Add a new optimizer rule to convert an IN predicate to an equivalent Parquet filter, and add `spark.sql.parquet.pushdown.inFilterThreshold` to control the limit threshold. Different data types have different limit thresholds; the following data is copied here for reference:

Type | limit threshold
-- | --
string | 370
int | 210
long | 285
double | 270
float | 220
decimal | Won't provide better performance before [SPARK-24549](https://issues.apache.org/jira/browse/SPARK-24549)
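As a rough illustration of how a threshold-limited IN conversion might work, here is a sketch in plain Scala. The types `PushedFilter`, `EqualTo`, `Or` and the function `convertIn` are hypothetical stand-ins, not the Parquet or Spark classes, and expanding IN into a chain of OR-ed equalities is an assumption of this sketch.

```scala
// Hypothetical filter tree used only for this illustration.
sealed trait PushedFilter
final case class EqualTo(column: String, value: Any) extends PushedFilter
final case class Or(left: PushedFilter, right: PushedFilter) extends PushedFilter

object InFilterSketch {
  // Converts `column IN (values)` into OR-ed equality filters, but only when
  // the number of distinct values does not exceed the threshold.
  def convertIn(column: String, values: Seq[Any], threshold: Int): Option[PushedFilter] = {
    val distinctValues = values.distinct
    if (distinctValues.isEmpty || distinctValues.size > threshold) {
      None // too many values (or none): do not push the predicate down
    } else {
      val first: PushedFilter = EqualTo(column, distinctValues.head)
      Some(distinctValues.tail.foldLeft[PushedFilter](first) { (acc, v) =>
        Or(acc, EqualTo(column, v))
      })
    }
  }
}
```

The threshold keeps the generated filter small, since a very long OR chain can cost more to evaluate than it saves in skipped data.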

## How was this patch tested?

unit tests and manual tests

Author: Yuming Wang <yumwang@ebay.com>

Closes#21603 from wangyum/SPARK-17091.

## What changes were proposed in this pull request?

When we use a reference from Dataset in filter or sort, which was not used in the prior select, an AnalysisException occurs, e.g.,

```scala

val df = Seq(("test1", 0), ("test2", 1)).toDF("name", "id")

df.select(df("name")).filter(df("id") === 0).show()

```

```scala

org.apache.spark.sql.AnalysisException: Resolved attribute(s) id#6 missing from name#5 in operator !Filter (id#6 = 0).;;

!Filter (id#6 = 0)

+- AnalysisBarrier

+- Project [name#5]

+- Project [_1#2 AS name#5, _2#3 AS id#6]

+- LocalRelation [_1#2, _2#3]

```

This change updates the rule `ResolveMissingReferences` so `Filter` and `Sort` with non-empty `missingInputs` will also be transformed.

## How was this patch tested?

Added tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#21745 from viirya/SPARK-24781.

## What changes were proposed in this pull request?

Relax the check to allow complex aggregate expressions, like `ceil(sum(col1))` or `sum(col1) + 1`, which roughly means any aggregate expression that could appear in an Aggregate plan except pandas UDF (due to the fact that it is not supported in pivot yet).

## How was this patch tested?

Added 2 tests in pivot.sql

Author: maryannxue <maryannxue@apache.org>

Closes#21753 from maryannxue/pivot-relax-syntax.

## What changes were proposed in this pull request?

The PR is a followup to move the test cases introduced by the original PR to their proper location.

## How was this patch tested?

moved UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21751 from mgaido91/SPARK-24208_followup.

## What changes were proposed in this pull request?

The reader schema is said to be evolved (or projected) when it changes after the data is written. The following are already supported in file-based data sources. Note that partition columns are not maintained in files. In this PR, `column` means `non-partition column`.

1. Add a column

2. Hide a column

3. Change a column position

4. Change a column type (upcast)

This issue aims to guarantee users a backward-compatible read-schema test coverage on file-based data sources and to prevent future regressions by *adding read schema tests explicitly*.

Here, we consider safe changes without data loss. For example, data type change should be from small types to larger types like `int`-to-`long`, not vice versa.

As of today, in the master branch, file-based data sources have the following coverage.

File Format | Coverage | Note
----------- | ---------- | ------------------------------------------------
TEXT | N/A | Schema consists of a single string column.
CSV | 1, 2, 4 |
JSON | 1, 2, 3, 4 |
ORC | 1, 2, 3, 4 | Native vectorized ORC reader has the widest coverage among ORC formats.
PARQUET | 1, 2, 3 |

## How was this patch tested?

Pass the Jenkins with newly added test suites.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#20208 from dongjoon-hyun/SPARK-SCHEMA-EVOLUTION.

## What changes were proposed in this pull request?

With https://github.com/apache/spark/pull/21389, data source schema is validated on driver side before launching read/write tasks.

However,

1. Putting all the validations together in `DataSourceUtils` is tricky and hard to maintain. On second thought after review, I find that the `OrcFileFormat` in the hive package is not matched, so its validation is wrong.

2. `DataSourceUtils.verifyWriteSchema` and `DataSourceUtils.verifyReadSchema` are not supposed to be called in every file format. We can move them to some upper entry.

So, I propose we can add a new method `validateDataType` in FileFormat. File format implementation can override the method to specify its supported/non-supported data types.

Although we should focus on data source V2 API, `FileFormat` should remain workable for some time. Adding this new method should be helpful.

## How was this patch tested?

Unit test

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes#21667 from gengliangwang/refactorSchemaValidate.

## What changes were proposed in this pull request?

The PR adds the SQL function `array_union`. The behavior of the function is based on Presto's.

This function returns an array of the elements in the union of array1 and array2.

Note: The order of elements in the result is not defined.
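The intended semantics can be sketched on plain Scala collections. This is only an illustration: `arrayUnion` is a hypothetical helper, removing duplicates is assumed from the Presto behavior the PR refers to, and the result order here is an accident of the sketch rather than a guarantee.

```scala
object ArrayUnionSketch {
  // Union of two arrays without duplicates. Callers should not rely on the
  // order of the result, in line with the note above.
  def arrayUnion[T](array1: Seq[T], array2: Seq[T]): Seq[T] =
    (array1 ++ array2).distinct
}
```

Comparing results as sets, as in `ArrayUnionSketch.arrayUnion(Seq(1, 2, 3), Seq(1, 3, 5)).toSet`, avoids depending on element order.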

## How was this patch tested?

Added UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#21061 from kiszk/SPARK-23914.

## What changes were proposed in this pull request?

In the PR, I propose to extend `RuntimeConfig` with a new method `isModifiable()`, which returns `true` if a config parameter can be modified at runtime (for the current session state). For static SQL and core parameters, the method returns `false`.

## How was this patch tested?

Added new test to `RuntimeConfigSuite` for checking Spark core and SQL parameters.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes#21730 from MaxGekk/is-modifiable.

## What changes were proposed in this pull request?

The PR simplifies the retrieval of the config in `size`, as we can access it from tasks too thanks to SPARK-24250.

## How was this patch tested?

existing UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21736 from mgaido91/SPARK-24605_followup.

## What changes were proposed in this pull request?

In ProgressReporter for streams, we use the `committedOffsets` as the startOffset and `availableOffsets` as the end offset when reporting the status of a trigger in `finishTrigger`. This is a bad pattern that has existed since the beginning of ProgressReporter, and it is bad because it's super hard to reason about when `availableOffsets` and `committedOffsets` are updated, and when they are recorded. Case in point, this bug silently existed in ContinuousExecution, since before MicroBatchExecution was refactored.

The correct fix is to record the offsets explicitly. This PR adds a simple method which is explicitly called from MicroBatch/ContinuousExecution before updating the `committedOffsets`.

## How was this patch tested?

Added new tests

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes#21744 from tdas/SPARK-24697.

## What changes were proposed in this pull request?

A self-join on a dataset which contains a `FlatMapGroupsInPandas` fails because of duplicate attributes. This happens because we are not dealing with this specific case in our `dedupAttr` rules.

The PR fixes the issue by handling this specific case.

## How was this patch tested?

added UT + manual tests

Author: Marco Gaido <marcogaido91@gmail.com>

Author: Marco Gaido <mgaido@hortonworks.com>

Closes#21737 from mgaido91/SPARK-24208.

## What changes were proposed in this pull request?

The PR proposes to add support for running the same SQL test input files against different configs leading to the same result.

## How was this patch tested?

Involved UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21568 from mgaido91/SPARK-24562.

## What changes were proposed in this pull request?

This PR proposes a fix for the output data type of the ```If``` and ```CaseWhen``` expressions. Until now, the implementation of these expressions has ignored the nullability of nested types from different execution branches and returned the type of the first branch.

This could lead to an unwanted ```NullPointerException``` from other expressions depending on a ```If```/```CaseWhen``` expression.

Example:

```

val rows = new util.ArrayList[Row]()

rows.add(Row(true, ("a", 1)))

rows.add(Row(false, (null, 2)))

val schema = StructType(Seq(

StructField("cond", BooleanType, false),

StructField("s", StructType(Seq(

StructField("val1", StringType, true),

StructField("val2", IntegerType, false)

)), false)

))

val df = spark.createDataFrame(rows, schema)

df

.select(when('cond, struct(lit("x").as("val1"), lit(10).as("val2"))).otherwise('s) as "res")

.select('res.getField("val1"))

.show()

```

Exception:

```

Exception in thread "main" java.lang.NullPointerException

at org.apache.spark.sql.catalyst.expressions.codegen.UnsafeWriter.write(UnsafeWriter.java:109)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.apply(Unknown Source)

at org.apache.spark.sql.execution.LocalTableScanExec$$anonfun$unsafeRows$1.apply(LocalTableScanExec.scala:44)

at org.apache.spark.sql.execution.LocalTableScanExec$$anonfun$unsafeRows$1.apply(LocalTableScanExec.scala:44)

...

```

Output schema:

```

root

|-- res.val1: string (nullable = false)

```
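To illustrate the idea of taking the nullability of every branch into account, here is a sketch over a toy type model in plain Scala. `DType`, `Field`, `StructT` and `merge` are hypothetical names and not Catalyst classes; the sketch assumes both branches have the same field names in the same order.

```scala
// Toy data-type model, used only for this illustration.
sealed trait DType
case object StringT extends DType
case object IntT extends DType
final case class Field(name: String, dataType: DType, nullable: Boolean)
final case class StructT(fields: List[Field]) extends DType

object NullabilityMerge {
  // A field of the merged type is nullable if it is nullable in either branch.
  def merge(a: DType, b: DType): DType = (a, b) match {
    case (StructT(fa), StructT(fb)) if fa.map(_.name) == fb.map(_.name) =>
      StructT(fa.zip(fb).map { case (x, y) =>
        Field(x.name, merge(x.dataType, y.dataType), x.nullable || y.nullable)
      })
    case _ if a == b => a
    case _ => throw new IllegalArgumentException(s"incompatible types: $a vs $b")
  }
}
```

Applied to the example above, merging the `when` branch (where `val1` is non-nullable) with the `otherwise` branch (where `val1` is nullable) yields a struct whose `val1` is nullable, which is what downstream expressions need in order to handle the `null` value.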

## How was this patch tested?

New test cases added into

- DataFrameSuite.scala

- conditionalExpressions.scala

Author: Marek Novotny <mn.mikke@gmail.com>

Closes#21687 from mn-mikke/SPARK-24165.

## What changes were proposed in this pull request?

Currently, when a streaming query has multiple watermarks, the policy is to choose the min of them as the global watermark. This is safe to do as the global watermark moves with the slowest stream, and therefore does not unexpectedly drop some data as late, etc. While this is indeed the safe thing to do, in some cases, you may want the watermark to advance with the fastest stream, that is, take the max of multiple watermarks. This PR is to add that configuration. It makes the following changes.

- Adds a configuration to specify max as the policy.

- Saves the configuration in OffsetSeqMetadata because changing it in the middle can lead to unpredictable results.

- For old checkpoints without the configuration, it assumes the default policy as min (irrespective of the policy set at the session where the query is being restarted). This is to ensure that existing queries are not affected in any way.

TODO

- [ ] Add a test for recovery from existing checkpoints.
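The min/max choice and the fallback for old checkpoints can be sketched in plain Scala as follows. All names here (`WatermarkPolicy`, `globalWatermark`, `policyFromCheckpoint`) and the string encoding of the saved policy are hypothetical and only illustrate the behavior described above.

```scala
sealed trait WatermarkPolicy
case object MinPolicy extends WatermarkPolicy
case object MaxPolicy extends WatermarkPolicy

object WatermarkSketch {
  // Picks the global watermark from the per-operator watermarks.
  def globalWatermark(watermarksMs: Seq[Long], policy: WatermarkPolicy): Option[Long] =
    if (watermarksMs.isEmpty) None
    else policy match {
      case MinPolicy => Some(watermarksMs.min) // follows the slowest stream
      case MaxPolicy => Some(watermarksMs.max) // follows the fastest stream
    }

  // An old checkpoint has no saved policy, so min is assumed regardless of
  // what the restarting session is configured with.
  // Assumption of this sketch: any value other than "max" also maps to min.
  def policyFromCheckpoint(saved: Option[String]): WatermarkPolicy = saved match {
    case Some(s) if s.equalsIgnoreCase("max") => MaxPolicy
    case _ => MinPolicy
  }
}
```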

## How was this patch tested?

New unit test

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes#21701 from tdas/SPARK-24730.

## What changes were proposed in this pull request?

Support the LIMIT operator in structured streaming.

For streams in append or complete output mode, a stream with a LIMIT operator will return no more than the specified number of rows. LIMIT is still unsupported for the update output mode.

This change reverts e4fee395ec as part of it because it is a better and more complete implementation.

## How was this patch tested?

New and existing unit tests.

Author: Mukul Murthy <mukul.murthy@gmail.com>

Closes#21662 from mukulmurthy/SPARK-24662.

## What changes were proposed in this pull request?

This is the first follow-up of https://github.com/apache/spark/pull/21573 , which was only merged to 2.3.

This PR fixes the memory leak in another way: free the `UnsafeExternalMap` when the task ends. All the data buffers in Spark SQL are using `UnsafeExternalMap` and `UnsafeExternalSorter` under the hood, e.g. sort, aggregate, window, SMJ, etc. `UnsafeExternalSorter` registers a task completion listener to free the resource, we should apply the same thing to `UnsafeExternalMap`.

TODO in the next PR:

do not consume all the inputs when having limit in whole stage codegen.

## How was this patch tested?

existing tests

Author: Wenchen Fan <wenchen@databricks.com>

Closes#21738 from cloud-fan/limit.

## What changes were proposed in this pull request?

As the implementation of the broadcast hash join is independent of the input hash partitioning, reordering keys is not necessary. Thus, we solve this issue by simply removing the broadcast hash join from the reordering rule in EnsureRequirements.

## How was this patch tested?

N/A

Author: Xiao Li <gatorsmile@gmail.com>

Closes#21728 from gatorsmile/cleanER.

## What changes were proposed in this pull request?

SPARK-22893 tried to unify error messages about dataTypes. Unfortunately, many places were still missing the `simpleString` method needed in order to have the same representation everywhere.

The PR unifies the messages by always using the simpleString representation of the dataTypes in the messages.

## How was this patch tested?

existing/modified UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21321 from mgaido91/SPARK-24268.

## What changes were proposed in this pull request?

Implement map_concat high order function.

This implementation does not pick a winner when the specified maps have overlapping keys. Therefore, this implementation preserves existing duplicate keys in the maps and potentially introduces new duplicates (After discussion with ueshin, we settled on option 1 from [here](https://issues.apache.org/jira/browse/SPARK-23936?focusedCommentId=16464245&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16464245)).
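The "no winner" behavior can be shown with a small sketch in plain Scala, where a map is modeled as a sequence of key-value pairs so that duplicate keys can be represented at all. `mapConcat` is a hypothetical helper, not the Spark expression.

```scala
object MapConcatSketch {
  // Concatenates the entries of all input maps in order. Entries with the
  // same key are all kept; none of them overrides the others.
  def mapConcat[K, V](maps: Seq[(K, V)]*): Seq[(K, V)] =
    maps.flatten
}
```

For instance, concatenating `Seq(1 -> "a", 2 -> "b")` with `Seq(2 -> "c")` keeps both entries for key `2`.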

## How was this patch tested?

New tests

Manual tests

Run all sbt SQL tests

Run all pyspark sql tests

Author: Bruce Robbins <bersprockets@gmail.com>

Closes#21073 from bersprockets/SPARK-23936.

## What changes were proposed in this pull request?

In the PR, I propose to provide a tip to users on how to resolve the issue of timeout expiration for broadcast joins. In particular, they can increase the timeout via **spark.sql.broadcastTimeout** or disable broadcast joins altogether by setting **spark.sql.autoBroadcastJoinThreshold** to `-1`.

## How was this patch tested?

It was tested manually from `spark-shell`:

```

scala> spark.conf.set("spark.sql.broadcastTimeout", 1)

scala> val df = spark.range(100).join(spark.range(15).as[Long].map { x =>

Thread.sleep(5000)

x

}).where("id = value")

scala> df.count()

```

```

org.apache.spark.SparkException: Could not execute broadcast in 1 secs. You can increase the timeout for broadcasts via spark.sql.broadcastTimeout or disable broadcast join by setting spark.sql.autoBroadcastJoinThreshold to -1

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec.doExecuteBroadcast(BroadcastExchangeExec.scala:150)

```

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes#21727 from MaxGekk/broadcast-timeout-error.

## What changes were proposed in this pull request?

A SQL `Aggregator` with output type `Option[Boolean]` creates a column of type `StructType`. This is inconsistent with a Dataset of a similar Java class.

This changes the way `definedByConstructorParams` checks given type. For `Option[_]`, it goes to check its type argument.

## How was this patch tested?

Added test.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#21611 from viirya/SPARK-24569.

## What changes were proposed in this pull request?

Following the [`WideSchemaBenchmark`](https://github.com/apache/spark/blob/v2.3.1/sql/core/src/test/scala/org/apache/spark/sql/execution/benchmark/WideSchemaBenchmark.scala), update `FilterPushdownBenchmark`:

1. Write the result to `benchmarks/FilterPushdownBenchmark-results.txt` for easy maintenance.

2. Add more benchmark cases: `StringStartsWith`, `Decimal`, `InSet -> InFilters` and `tinyint`.

## How was this patch tested?

manual tests

Author: Yuming Wang <yumwang@ebay.com>

Closes#21677 from wangyum/SPARK-24692.

## What changes were proposed in this pull request?

If a table is renamed to a new location that already exists, its data won't show up.

```

scala> Seq("hello").toDF("a").write.format("parquet").saveAsTable("t")

scala> sql("select * from t").show()

+-----+

| a|

+-----+

|hello|

+-----+

scala> sql("alter table t rename to test")

res2: org.apache.spark.sql.DataFrame = []

scala> sql("select * from test").show()

+---+

| a|

+---+

+---+

```

The file layout is like

```

$ tree test

test

├── gabage

└── t

├── _SUCCESS

└── part-00000-856b0f10-08f1-42d6-9eb3-7719261f3d5e-c000.snappy.parquet

```

In Hive, if the new location exists, the renaming will fail even if the location is empty.

We should have the same validation in Catalog, in case of unexpected bugs.

## How was this patch tested?

New unit test.

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes#21655 from gengliangwang/validate_rename_table.

## What changes were proposed in this pull request?

Add an overloaded version to `from_utc_timestamp` and `to_utc_timestamp` having second argument as a `Column` instead of `String`.

## How was this patch tested?

Unit testing, especially adding two tests to org.apache.spark.sql.DateFunctionsSuite.scala

Author: Antonio Murgia <antonio.murgia@agilelab.it>

Author: Antonio Murgia <antonio.murgia2@studio.unibo.it>

Closes#21693 from tmnd1991/feature/SPARK-24673.

## What changes were proposed in this pull request?

This is a minor improvement for the test of SPARK-17213

## How was this patch tested?

N/A

Author: Xiao Li <gatorsmile@gmail.com>

Closes#21716 from gatorsmile/testMaster23.

## What changes were proposed in this pull request?

Since SPARK-24250 has been resolved, executors correctly reference user-defined configurations. So, this PR adds a static config to control the cache size for generated classes in `CodeGenerator`.

## How was this patch tested?

Added tests in `ExecutorSideSQLConfSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#21705 from maropu/SPARK-24727.

## What changes were proposed in this pull request?

In the PR, I propose to add a new function, *schema_of_json()*, which infers the schema of a JSON string literal. The result of the function is a string containing a schema in DDL format.

One of the use cases is using *schema_of_json()* in combination with *from_json()*. Currently, _from_json()_ requires a schema as a mandatory argument. The *schema_of_json()* function allows pointing to a JSON string as an example which has the same schema as the first argument of _from_json()_. For instance:

```sql

select from_json(json_column, schema_of_json('{"c1": [0], "c2": [{"c3":0}]}'))

from json_table;

```

## How was this patch tested?

Added new test to `JsonFunctionsSuite`, `JsonExpressionsSuite` and SQL tests to `json-functions.sql`

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes#21686 from MaxGekk/infer_schema_json.

## What changes were proposed in this pull request?

In Dataset.join we have a small hack for resolving ambiguity in the column name for self-joins. The current code supports only `EqualTo`.

The PR extends the fix to `EqualNullSafe`.

Credit for this PR should be given to daniel-shields.

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#21605 from mgaido91/SPARK-24385_2.

## What changes were proposed in this pull request?

The ColumnPruning rule tries adding an extra Project if an input node produces more fields than needed, but as a post-processing step, it needs to remove the lower Project in the form of "Project - Filter - Project", otherwise it would conflict with PushPredicatesThroughProject and would thus cause an infinite optimization loop. The current post-processing method is defined as:

```

private def removeProjectBeforeFilter(plan: LogicalPlan): LogicalPlan = plan transform {
  case p1 @ Project(_, f @ Filter(_, p2 @ Project(_, child)))
    if p2.outputSet.subsetOf(child.outputSet) =>
    p1.copy(child = f.copy(child = child))
}

```

This method works well when there is only one Filter but would not if there's two or more Filters. In this case, there is a deterministic filter and a non-deterministic filter so they stay as separate filter nodes and cannot be combined together.

A simplified illustration of the optimization process that forms the infinite loop is shown below (F1 stands for the 1st filter, F2 for the 2nd filter, P for project, S for scan of relation, PredicatePushDown as abbrev. of PushPredicatesThroughProject):

```

F1 - F2 - P - S

PredicatePushDown => F1 - P - F2 - S

ColumnPruning => F1 - P - F2 - P - S

=> F1 - P - F2 - S (Project removed)

PredicatePushDown => P - F1 - F2 - S

ColumnPruning => P - F1 - P - F2 - S

=> P - F1 - P - F2 - P - S

=> P - F1 - F2 - P - S (only one Project removed)

RemoveRedundantProject => F1 - F2 - P - S (goes back to the loop start)

```

So the problem is that the ColumnPruning rule adds a Project under a Filter (and fails to remove it in the end), and that new Project triggers PushPredicatesThroughProject. Once the filters have been pushed through the Project, a new Project will be added by the ColumnPruning rule, and this goes on and on.

The fix is to keep applying the rule top-down when adding Projects, but to go bottom-up later when removing extra Projects, so that all extra Projects can be matched.
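The difference between the two traversal orders can be reproduced on a toy plan tree in plain Scala. This is a sketch under simplifying assumptions: `Plan`, `Scan`, `Project`, `Filter` and the two `transform` helpers are hypothetical stand-ins for the Catalyst classes, and the `outputSet` condition of the real rule is omitted.

```scala
// Toy logical plan with single-child operators only.
sealed trait Plan
case object Scan extends Plan
final case class Project(child: Plan) extends Plan
final case class Filter(name: String, child: Plan) extends Plan

object PruneSketch {
  type Rule = PartialFunction[Plan, Plan]

  private def mapChild(p: Plan)(f: Plan => Plan): Plan = p match {
    case Scan => Scan
    case Project(c) => Project(f(c))
    case Filter(n, c) => Filter(n, f(c))
  }

  // Top-down: apply the rule to the node first, then recurse into the result.
  def transformDown(p: Plan)(rule: Rule): Plan = {
    val applied = rule.applyOrElse(p, (q: Plan) => q)
    mapChild(applied)(c => transformDown(c)(rule))
  }

  // Bottom-up: rewrite the children first, then apply the rule to the node.
  def transformUp(p: Plan)(rule: Rule): Plan = {
    val withNewChildren = mapChild(p)(c => transformUp(c)(rule))
    rule.applyOrElse(withNewChildren, (q: Plan) => q)
  }

  // "Project - Filter - Project" => drop the lower Project.
  val removeLowerProject: Rule = {
    case Project(Filter(n, Project(child))) => Project(Filter(n, child))
  }
}
```

For the plan `P - F1 - P - F2 - P - S`, the top-down traversal rewrites the root once and then never sees a Project above F2 again, leaving `P - F1 - F2 - P - S`. The bottom-up traversal first collapses `P - F2 - P - S` and then the outer pattern, giving `P - F1 - F2 - S`.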

## How was this patch tested?

Added an optimization rule test in ColumnPruningSuite, and an end-to-end test in SQLQuerySuite.

Author: maryannxue <maryannxue@apache.org>

Closes#21674 from maryannxue/spark-24696.

## What changes were proposed in this pull request?

Provide a continuous processing implementation of coalesce(1), as well as allowing aggregates on top of it.

The changes in ContinuousQueuedDataReader and such are to use split.index (the ID of the partition within the RDD currently being compute()d) rather than context.partitionId() (the partition ID of the scheduled task within the Spark job - that is, the post coalesce writer). In the absence of a narrow dependency, these values were previously always the same, so there was no need to distinguish.

## How was this patch tested?

new unit test

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Closes#21560 from jose-torres/coalesce.

## What changes were proposed in this pull request?

A few math functions (`abs` , `bitwiseNOT`, `isnan`, `nanvl`) are not in **math_funcs** group. They should really be.

## How was this patch tested?

Awaiting Jenkins

Author: Jacek Laskowski <jacek@japila.pl>

Closes#21448 from jaceklaskowski/SPARK-24408-math-funcs-doc.

## What changes were proposed in this pull request?

Add a new test suite to test RecordBinaryComparator.

## How was this patch tested?

New test suite.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#21570 from jiangxb1987/rbc-test.

findTightestCommonTypeOfTwo has been renamed to findTightestCommonType

## What changes were proposed in this pull request?

## How was this patch tested?

Author: Fokko Driesprong <fokkodriesprong@godatadriven.com>

Closes#21597 from Fokko/fd-typo.

## What changes were proposed in this pull request?

This pr corrected the default configuration (`spark.master=local[1]`) for benchmarks. Also, this updated performance results on the AWS `r3.xlarge`.

## How was this patch tested?

N/A

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#21625 from maropu/FixDataSourceReadBenchmark.

## What changes were proposed in this pull request?

In master, when `csvColumnPruning` (implemented in [this commit](64fad0b519 (diff-d19881aceddcaa5c60620fdcda99b4c4))) is enabled and only partition columns are scanned, the exception below is thrown:

```

scala> val dir = "/tmp/spark-csv/csv"

scala> spark.range(10).selectExpr("id % 2 AS p", "id").write.mode("overwrite").partitionBy("p").csv(dir)

scala> spark.read.csv(dir).selectExpr("sum(p)").collect()

18/06/25 13:12:51 ERROR Executor: Exception in task 0.0 in stage 2.0 (TID 5)

java.lang.NullPointerException

at org.apache.spark.sql.execution.datasources.csv.UnivocityParser.org$apache$spark$sql$execution$datasources$csv$UnivocityParser$$convert(UnivocityParser.scala:197)

at org.apache.spark.sql.execution.datasources.csv.UnivocityParser.parse(UnivocityParser.scala:190)

at org.apache.spark.sql.execution.datasources.csv.UnivocityParser$$anonfun$5.apply(UnivocityParser.scala:309)

at org.apache.spark.sql.execution.datasources.csv.UnivocityParser$$anonfun$5.apply(UnivocityParser.scala:309)

at org.apache.spark.sql.execution.datasources.FailureSafeParser.parse(FailureSafeParser.scala:61)

...

```

This PR modifies the code to skip CSV parsing in this case.

## How was this patch tested?

Added tests in `CSVSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#21631 from maropu/SPARK-24645.

## What changes were proposed in this pull request?

Updated URL/href links to include a '/' before '?id' to make links consistent and avoid http 302 redirect errors within UI port 4040 tabs.

## How was this patch tested?

Built a runnable distribution and executed jobs. Validated that http 302 redirects are no longer encountered when clicking on links within UI port 4040 tabs.

Author: Steven Kallman <SJKallmangmail.com>

Author: Kallman, Steven <Steven.Kallman@CapitalOne.com>

Closes#21600 from SJKallman/{Spark-24553}{WEB-UI}-redirect-href-fixes.

## What changes were proposed in this pull request?

This pr added code to verify a schema in Json/Orc/ParquetFileFormat along with CSVFileFormat.

## How was this patch tested?

Added verification tests in `FileBasedDataSourceSuite` and `HiveOrcSourceSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#21389 from maropu/SPARK-24204.

## What changes were proposed in this pull request?

Set createTime for every Hive partition created in Spark SQL, which can be used to manage the data lifecycle in the Hive warehouse. We found that almost every partition modified by Spark SQL does not have createTime set.

```

mysql> select * from partitions where create_time=0 limit 1\G;

*************************** 1. row ***************************

PART_ID: 1028584

CREATE_TIME: 0

LAST_ACCESS_TIME: 1502203611

PART_NAME: date=20170130

SD_ID: 1543605

TBL_ID: 211605

LINK_TARGET_ID: NULL

1 row in set (0.27 sec)

```

## How was this patch tested?

N/A

Author: debugger87 <yangchaozhong.2009@gmail.com>

Author: Chaozhong Yang <yangchaozhong.2009@gmail.com>

Closes#18900 from debugger87/fix/set-create-time-for-hive-partition.

## What changes were proposed in this pull request?

Address comments in #21370 and add more tests.

## How was this patch tested?

Enhance test in pyspark/sql/test.py and DataFrameSuite

Author: Yuanjian Li <xyliyuanjian@gmail.com>

Closes#21553 from xuanyuanking/SPARK-24215-follow.

## What changes were proposed in this pull request?

The PR adds the SQL function ```sequence```.

https://issues.apache.org/jira/browse/SPARK-23927

The behavior of the function is based on Presto's one.

Ref: https://prestodb.io/docs/current/functions/array.html

- ```sequence(start, stop) → array<bigint>```

Generate a sequence of integers from ```start``` to ```stop```, incrementing by ```1``` if ```start``` is less than or equal to ```stop```, otherwise ```-1```.

- ```sequence(start, stop, step) → array<bigint>```

Generate a sequence of integers from ```start``` to ```stop```, incrementing by ```step```.

- ```sequence(start_date, stop_date) → array<date>```

Generate a sequence of dates from ```start_date``` to ```stop_date```, incrementing by ```interval 1 day``` if ```start_date``` is less than or equal to ```stop_date```, otherwise ```- interval 1 day```.

- ```sequence(start_date, stop_date, step_interval) → array<date>```

Generate a sequence of dates from ```start_date``` to ```stop_date```, incrementing by ```step_interval```. The type of ```step_interval``` is ```CalendarInterval```.

- ```sequence(start_timestamp, stop_timestamp) → array<timestamp>```

Generate a sequence of timestamps from ```start_timestamp``` to ```stop_timestamp```, incrementing by ```interval 1 day``` if ```start_timestamp``` is less than or equal to ```stop_timestamp```, otherwise ```- interval 1 day```.

- ```sequence(start_timestamp, stop_timestamp, step_interval) → array<timestamp>```

Generate a sequence of timestamps from ```start_timestamp``` to ```stop_timestamp```, incrementing by ```step_interval```. The type of ```step_interval``` is ```CalendarInterval```.
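The integer variants above can be modeled in a few lines of plain Python. This is only a sketch of the described semantics, not Spark's implementation: the function name mirrors the SQL function, the inclusive upper bound follows Presto's documented behavior, and rejecting a step that moves away from `stop` is an assumption.

```python
def sequence(start, stop, step=None):
    """Model of the integer form of sequence(start, stop[, step]); both bounds are inclusive."""
    if step is None:
        # Default step: +1 when start <= stop, otherwise -1.
        step = 1 if start <= stop else -1
    if step == 0 or (stop - start) * step < 0:
        # Assumption: a step that can never reach `stop` is rejected.
        raise ValueError("step must move start towards stop")
    # range() excludes its end, so shift the end by one to make `stop` inclusive.
    return list(range(start, stop + (1 if step > 0 else -1), step))
```

For instance, `sequence(5, 1)` counts down because `start` is greater than `stop`, and `sequence(1, 9, 3)` stops at the last value that does not pass `stop`.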

## How was this patch tested?

Added unit tests.

Author: Vayda, Oleksandr: IT (PRG) <Oleksandr.Vayda@barclayscapital.com>

Closes #21155 from wajda/feature/array-api-sequence.

## What changes were proposed in this pull request?

This PR proposes new behavior for `size(null)`, controlled by the config flag `spark.sql.legacy.sizeOfNull`. If the flag is disabled, the `size()` function returns `null` for `null` input. By default, `spark.sql.legacy.sizeOfNull` is enabled to keep backward compatibility with previous versions; in that case, `size(null)` returns `-1`.
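The two behaviors can be summarized with a small Python model. It is a sketch only: `None` stands in for SQL `NULL`, and the `legacy_size_of_null` parameter is a hypothetical stand-in for the `spark.sql.legacy.sizeOfNull` flag.

```python
def size(value, legacy_size_of_null=True):
    """Model of size(): `value` is a list, a dict, or None (standing in for SQL NULL)."""
    if value is None:
        # Legacy behavior returns -1; the new behavior returns NULL (None here).
        return -1 if legacy_size_of_null else None
    return len(value)
```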

## How was this patch tested?

Modified existing tests for the `size()` function to check new behavior (`null`) and old one (`-1`).

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #21598 from MaxGekk/legacy-size-of-null.

## What changes were proposed in this pull request?

Here is the description in the JIRA -

Currently, our JDBC connector provides the option `dbtable` for users to specify the to-be-loaded JDBC source table.

```scala
val jdbcDf = spark.read
  .format("jdbc")
  .option("dbtable", "dbName.tableName")
  .options(jdbcCredentials) // jdbcCredentials: Map[String, String]
  .load()
```

Normally, users do not fetch the whole JDBC table because of the poor performance/throughput of JDBC. Thus, they normally fetch just a small set of tables. Advanced users can pass a subquery as the option.

```scala
val query = """ (select * from tableName limit 10) as tmp """
val jdbcDf = spark.read
  .format("jdbc")
  .option("dbtable", query)
  .options(jdbcCredentials) // jdbcCredentials: Map[String, String]
  .load()
```

However, this is not straightforward for end users. We should simply allow users to specify the query through a new option, `query`, and handle the complexity for them.

```scala
val query = """select * from tableName limit 10"""
val jdbcDf = spark.read
  .format("jdbc")
  .option("query", query)
  .options(jdbcCredentials) // jdbcCredentials: Map[String, String]
  .load()
```
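One way to picture the complexity being hidden is as the same wrapping that advanced users previously did by hand. The Python sketch below is illustrative only: the helper name `to_dbtable` and the alias `tmp` are hypothetical (the alias simply mirrors the manual example above), and this is not a description of how the option is implemented.

```python
def to_dbtable(query, alias="tmp"):
    """Wrap a plain query as an aliased subquery usable where a table name is expected."""
    # `alias` is a hypothetical name; the manual example above used `tmp`.
    return f"({query.strip()}) as {alias}"
```

With this, `to_dbtable("select * from tableName limit 10")` yields the same string that had to be written manually for the `dbtable` option.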

## How was this patch tested?

Added tests in JDBCSuite and JDBCWriterSuite.

Also tested against MySQL, PostgreSQL, Oracle, and DB2 (using the Docker infrastructure) to make sure there are no syntax issues.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #21590 from dilipbiswal/SPARK-24423.

## What changes were proposed in this pull request?

Presto's implementation accepts arbitrary arrays of primitive types as an input:

```
presto> SELECT array_join(ARRAY [1, 2, 3], ', ');
  _col0
---------
 1, 2, 3
(1 row)
```

This PR proposes to implement a type coercion rule for ```array_join``` function that converts arrays of primitive as well as non-primitive types to arrays of string.
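The effect of the coercion can be sketched in plain Python: every non-null element is converted to a string before joining. This is a model under assumptions, not the Spark rule itself; Python's `str()` stands in for the SQL cast to string (so its formatting of some types differs from Spark's), and the `null_replacement` parameter models the function's optional third argument.

```python
def array_join(values, delimiter, null_replacement=None):
    """Model of array_join after the elements have been coerced to strings."""
    # Coerce every non-null element to a string, mirroring the cast to array<string>.
    as_strings = [None if v is None else str(v) for v in values]
    if null_replacement is None:
        # Without a replacement, null elements are skipped.
        parts = [s for s in as_strings if s is not None]
    else:
        parts = [null_replacement if s is None else s for s in as_strings]
    return delimiter.join(parts)
```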

## How was this patch tested?

New test cases were added to:

- sql-tests/inputs/typeCoercion/native/arrayJoin.sql

- DataFrameFunctionsSuite.scala

Author: Marek Novotny <mn.mikke@gmail.com>

Closes #21620 from mn-mikke/SPARK-24636.

This passes the unique task attempt id instead of attempt number to v2 data sources because attempt number is reused when stages are retried. When attempt numbers are reused, sources that track data by partition id and attempt number may incorrectly clean up data because the same attempt number can be both committed and aborted.

For v1 / Hadoop writes, generate a unique ID based on available attempt numbers to avoid a similar problem.
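The problem with reused attempt numbers can be shown with a toy event log in Python. Everything here is hypothetical illustration: the tuple layout, the IDs, and the helper `conflicting_keys` are not Spark APIs. The scenario assumes a stage retry in which the attempt number restarts at 0 while the task attempt ID stays unique.

```python
from collections import defaultdict

# Each record: (partition_id, attempt_number, task_attempt_id, outcome).
EVENTS = [
    (0, 0, 100, "aborted"),    # first stage attempt
    (0, 0, 205, "committed"),  # retried stage: attempt_number 0 is reused
]

def conflicting_keys(events, key_of):
    """Return the keys that were reported as both committed and aborted."""
    outcomes = defaultdict(set)
    for event in events:
        outcomes[key_of(event)].add(event[3])
    return sorted(k for k, seen in outcomes.items() if {"committed", "aborted"} <= seen)

# Tracking by (partition, attempt number) sees one key with both outcomes.
by_attempt_number = conflicting_keys(EVENTS, lambda e: (e[0], e[1]))
# Tracking by (partition, task attempt id) keeps the two attempts apart.
by_task_attempt_id = conflicting_keys(EVENTS, lambda e: (e[0], e[2]))
```

A source keyed on the first form cannot tell whether cleaning up the aborted attempt would also remove committed data; with the second form the ambiguity does not arise.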

Closes #21558

Author: Marcelo Vanzin <vanzin@cloudera.com>

Author: Ryan Blue <blue@apache.org>

Closes #21606 from vanzin/SPARK-24552.2.

Use LongAdder to make SQLMetrics thread safe.

## What changes were proposed in this pull request?

Replace += with LongAdder.add() for concurrent counting

## How was this patch tested?

Unit tests with local threads

Author: Stacy Kerkela <stacy.kerkela@databricks.com>

Closes #21634 from dbkerkela/sqlmetrics-concurrency-stacy.

## What changes were proposed in this pull request?

In the `arrays_zip` function, a codegen error can occur when the generated code has to be split because of a large number of arguments.

The PR fixes codegen for cases when splitting the code is required.

## How was this patch tested?

Added a unit test.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21621 from mgaido91/SPARK-24633.

## What changes were proposed in this pull request?

1. Add a `cascade` parameter to CacheManager.uncacheQuery(). In `cascade=false` mode, only the current cache is invalidated; for other dependent caches, the execution plan is rebuilt and the cached buffer is reused.

2. Pass true/false from callers in different uncache scenarios:

- Drop tables and regular (persistent) views: regular mode

- Drop temporary views: non-cascading mode

- Modify table contents (INSERT/UPDATE/MERGE/DELETE): regular mode

- Call `DataSet.unpersist()`: non-cascading mode

- Call `Catalog.uncacheTable()`: follow the same convention as dropping tables/views, that is, use non-cascading mode for temporary views and regular mode for the rest

Note that a regular (persistent) view is a database object just like a table, so after dropping a regular view (whether cached or not), any query referring to that view should no longer be valid. Hence, if a cached persistent view is dropped, we need to invalidate all the dependent caches so that exceptions will be thrown for any later reference. On the other hand, a temporary view is in fact equivalent to an unnamed DataSet, and dropping a temporary view should have no impact on queries referencing that view. Thus we should do non-cascading uncaching for temporary views, which also guarantees consistent uncaching behavior between temporary views and unnamed DataSets.
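The difference between the two modes can be illustrated with a toy Python model of cache entries and their dependencies. The class `CacheModel` and its fields are hypothetical and much simpler than CacheManager; in particular, "rebuild the execution plan" is reduced here to dropping the link to the removed cache while keeping the dependent's buffer.

```python
class CacheModel:
    """Toy model: each cached plan has a buffer and a set of cached plans it reads from."""

    def __init__(self):
        self.buffers = {}  # plan name -> cached data
        self.parents = {}  # plan name -> names of cached plans it depends on

    def cache(self, name, data, depends_on=()):
        self.buffers[name] = data
        self.parents[name] = set(depends_on)

    def _dependents(self, name):
        # Transitive closure of the cached plans that read from `name`.
        found, stack = set(), [name]
        while stack:
            current = stack.pop()
            for child, parents in self.parents.items():
                if current in parents and child not in found:
                    found.add(child)
                    stack.append(child)
        return found

    def uncache(self, name, cascade):
        dependents = self._dependents(name)
        self.buffers.pop(name, None)
        self.parents.pop(name, None)
        for dep in dependents:
            if cascade:
                # Regular mode: dependent caches are invalidated as well.
                self.buffers.pop(dep, None)
                self.parents.pop(dep, None)
            else:
                # Non-cascading mode: keep the buffer, drop only the link.
                self.parents[dep].discard(name)
```

In this model, uncaching a table with `cascade=False` leaves a dependent view's buffer in place, whereas `cascade=True` removes both.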

## How was this patch tested?

New tests in CachedTableSuite and DatasetCacheSuite.

Author: Maryann Xue <maryannxue@apache.org>

Closes #21594 from maryannxue/noncascading-cache.

## What changes were proposed in this pull request?

This is a follow-up PR to #21045, which added `arrays_zip`.

The `arrays_zip` in functions.scala should have been annotated with `scala.annotation.varargs`.

This PR adds the `scala.annotation.varargs` annotation.

## How was this patch tested?

Existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #21630 from ueshin/issues/SPARK-23931/fup1.

## What changes were proposed in this pull request?

This pr modified JDBC datasource code to verify and normalize a partition column based on the JDBC resolved schema before building `JDBCRelation`.

Closes #20370

## How was this patch tested?

Added tests in `JDBCSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #21379 from maropu/SPARK-24327.

## What changes were proposed in this pull request?

Currently, a `pandas_udf` of type `PandasUDFType.GROUPED_MAP` assigns the resulting columns based on the column index of the returned pandas.DataFrame. If a new DataFrame is constructed from a dict and returned, the order of the columns can be arbitrary and differ from the schema defined for the UDF. If the schema types still match, no error is raised and the user sees column names and column data mixed up.

This change first tries to assign columns using the return type field names. If a KeyError occurs, the column index is checked to see whether it is string based. If so, the error is raised, as it is most likely a naming mistake; otherwise, it falls back to assigning columns by position and raises a TypeError if the field types do not match.
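The lookup order described above can be sketched without pandas by working on column labels alone. The helper `order_columns` is hypothetical, and the sketch omits the final type check that raises a TypeError.

```python
def order_columns(result_columns, schema_names):
    """Return the labels of `result_columns` in the order the schema expects."""
    if all(name in result_columns for name in schema_names):
        # Every schema field name is present: assign by name.
        return list(schema_names)
    if any(isinstance(label, str) for label in result_columns):
        # String labels that do not match the schema are most likely a naming mistake.
        raise KeyError("result columns do not match the schema field names")
    # Non-string labels (for example, a default integer index): assign by position.
    return list(result_columns)
```

A result built from a dict with labels `["v", "id"]` is therefore reordered to match a schema of `["id", "v"]`, while integer labels keep their positional order.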

## How was this patch tested?

Added a test that returns a new DataFrame with column order different than the schema.

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #21427 from BryanCutler/arrow-grouped-map-mixesup-cols-SPARK-24324.

## What changes were proposed in this pull request?

This pr added benchmark code `FilterPushdownBenchmark` for string pushdown and updated performance results on the AWS `r3.xlarge`.

## How was this patch tested?

N/A

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #21288 from maropu/UpdateParquetBenchmark.

## What changes were proposed in this pull request?

Currently, the restrictions in JSONOptions on `encoding` and `lineSep` are the same for read and for write. For example, the following write:

```
df.write.option("encoding", "UTF-32BE").json(file)
```

cannot omit `lineSep` and rely on its default value `\n`, because it throws the exception:

```
requirement failed: The lineSep option must be specified for the UTF-32BE encoding
java.lang.IllegalArgumentException: requirement failed: The lineSep option must be specified for the UTF-32BE encoding
```

In the PR, I propose to separate JSONOptions in read and write, and make JSONOptions in write less restrictive.
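The intended split can be sketched as a single check with a read/write switch. This Python model is an assumption-laden illustration, not the JSONOptions code: the function name, the `for_write` parameter, and the simplification that the read-side rule applies to every non-UTF-8 encoding are all hypothetical.

```python
def effective_line_sep(encoding, line_sep=None, for_write=False):
    """Return the line separator to use, or raise if the options are rejected."""
    if for_write:
        # Write side is less restrictive: fall back to the default separator.
        return line_sep if line_sep is not None else "\n"
    if encoding.upper() != "UTF-8" and line_sep is None:
        # Read side keeps the requirement (simplified here to all non-UTF-8 encodings).
        raise ValueError(
            f"The lineSep option must be specified for the {encoding} encoding"
        )
    return line_sep
```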

## How was this patch tested?

Added a new test for blacklisted encodings in read, and removed the `lineSep` option from write in some tests.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Author: Maxim Gekk <max.gekk@gmail.com>

Closes #21247 from MaxGekk/json-options-in-write.

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/19080 we simplified the distribution/partitioning framework and made all the join-like operators require `HashClusteredDistribution` from their children. Unfortunately, the streaming join operator was missed.

This can cause wrong results. Consider:

```

val input1 = MemoryStream[Int]

val input2 = MemoryStream[Int]

val df1 = input1.toDF.select('value as 'a, 'value * 2 as 'b)

val df2 = input2.toDF.select('value as 'a, 'value * 2 as 'b).repartition('b)

val joined = df1.join(df2, Seq("a", "b")).select('a)