## What changes were proposed in this pull request?

How to reproduce:

```scala
spark.sql("CREATE TABLE tbl(id long)")
spark.sql("INSERT OVERWRITE TABLE tbl VALUES 4")
spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")
spark.sql(s"INSERT OVERWRITE LOCAL DIRECTORY '/tmp/spark/parquet' " +
  "STORED AS PARQUET SELECT ID FROM view1")

scala> spark.read.parquet("/tmp/spark/parquet").schema
res10: org.apache.spark.sql.types.StructType = StructType(StructField(id,LongType,true))
```

The schema should be `StructType(StructField(ID,LongType,true))`, because the query is `SELECT ID FROM view1`.

This PR fixes this issue.

## How was this patch tested?

Unit tests.

Closes #22359 from wangyum/SPARK-25313-FOLLOW-UP.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In Spark 2.0.0, SPARK-14335 added some [commented-out test coverage](https://github.com/apache/spark/pull/12117/files#diff-dd4b39a56fac28b1ced6184453a47358R177). This PR enables it, because the tested functionality has been supported since 2.0.0.

## How was this patch tested?

Pass the Jenkins with re-enabled test coverage.

Closes #22363 from dongjoon-hyun/SPARK-25375.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Before Apache Spark 2.3, table properties were ignored when writing data to a Hive table (created with `STORED AS PARQUET/ORC` syntax), because the compression configurations were not passed to the `FileFormatWriter` in `hadoopConf`. This was fixed in #20087. However, for CTAS with `USING PARQUET/ORC` syntax, table properties were also ignored when converting metastore tables (`convertMetastore`), so the CTAS test case could not be supported.

Now that this has been fixed in #20522, the test case should be enabled too.
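The precedence documented for `spark.sql.parquet.compression.codec` is: the `compression` option, then the `parquet.compression` table property, then the session default. A minimal sketch of that lookup as a plain function follows; `CodecPrecedence` and `parquetCodec` are hypothetical names, and in Spark the resolution happens inside its Parquet options handling. In these terms, the CTAS issue above behaved roughly as if the table-property lookups were skipped.

```scala
import java.util.Locale

object CodecPrecedence {
  // Hypothetical helper modelling the documented lookup order:
  //   `compression` option > `parquet.compression` table property > session default
  //   (`spark.sql.parquet.compression.codec`).
  // Names are lower-cased only so the sketch compares them case-insensitively.
  def parquetCodec(tableProps: Map[String, String], sessionDefault: String): String =
    tableProps.get("compression")
      .orElse(tableProps.get("parquet.compression"))
      .getOrElse(sessionDefault)
      .toLowerCase(Locale.ROOT)
}
```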

## How was this patch tested?

This only re-enables the test cases of previous PR.

Closes #22302 from fjh100456/compressionCodec.

Authored-by: fjh100456 <fu.jinhua6@zte.com.cn>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In `SharedSparkSession` and `TestHive`, we need to disable the `ConvertToLocalRelation` rule for better test coverage.

## How was this patch tested?

Identified the failures after excluding the `ConvertToLocalRelation` rule.

Closes #22270 from dilipbiswal/SPARK-25267-final.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The Scala 2.12 `HiveExternalCatalogVersionsSuite` test has been failing due to a class path issue. It is marked as `ABORTED` because it fails in `beforeAll` during the data population stage.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/

```
org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite *** ABORTED ***
Exception encountered when invoking run on a nested suite - spark-submit returned with exit code 1.
```

The root cause of the failure is that `runSparkSubmit` mixes 2.4.0-SNAPSHOT classes and old Spark (2.1.3/2.2.2/2.3.1) classes together during `spark-submit`. This PR adds a non-test execution mode to `runSparkSubmit` that removes the following environment variables:

- SPARK_TESTING

- SPARK_SQL_TESTING

- SPARK_PREPEND_CLASSES

- SPARK_DIST_CLASSPATH

Previously, the new Spark classes came after the old Spark classes on the class path, so the new ones were never picked up. The recent data source class changes in Spark 2.4.0 exposed this bug.
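Conceptually, the non-test mode is a child-process environment with the variables listed above removed. A minimal sketch, assuming the child is started through `java.lang.ProcessBuilder`; `NonTestSubmitEnv` and its methods are hypothetical and not how `runSparkSubmit` is actually written.

```scala
object NonTestSubmitEnv {
  // The variables the PR description lists as removed for non-test runs.
  val TestOnlyVars: Seq[String] =
    Seq("SPARK_TESTING", "SPARK_SQL_TESTING", "SPARK_PREPEND_CLASSES", "SPARK_DIST_CLASSPATH")

  // Pure version: returns a copy of `env` without the test-only variables.
  def nonTestEnv(env: Map[String, String]): Map[String, String] = env -- TestOnlyVars

  // Applies the same filtering to a child process's (mutable) environment
  // before it is started; the process itself is not launched here.
  def configure(pb: ProcessBuilder): ProcessBuilder = {
    TestOnlyVars.foreach(name => pb.environment().remove(name))
    pb
  }
}
```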

## How was this patch tested?

Manual test. After merging, it will be tested via Jenkins.

```
$ dev/change-scala-version.sh 2.12
$ build/mvn -DskipTests -Phive -Pscala-2.12 clean package
$ build/mvn -Phive -Pscala-2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite test
...
HiveExternalCatalogVersionsSuite:
- backward compatibility
...
Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0
All tests passed.
```

Closes #22340 from dongjoon-hyun/SPARK-25337.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

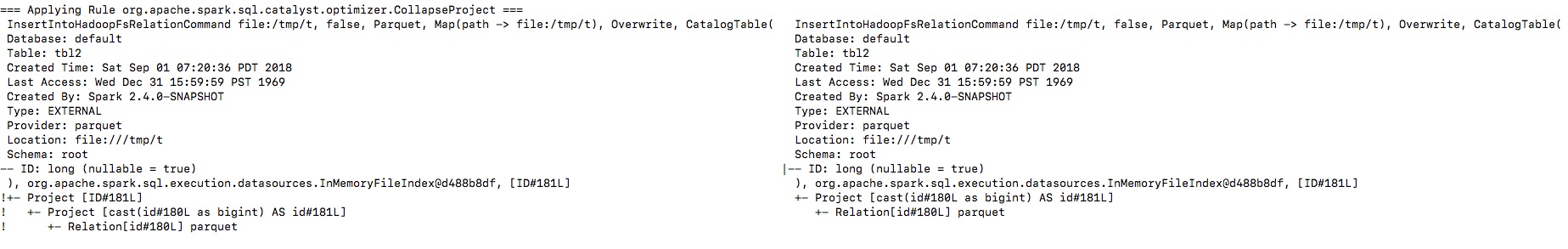

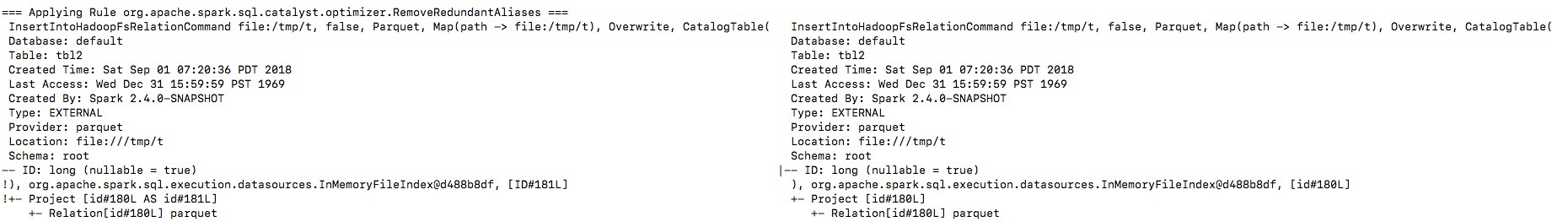

Consider the following example:

```scala
val location = "/tmp/t"
val df = spark.range(10).toDF("id")
df.write.format("parquet").saveAsTable("tbl")
spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")
spark.sql(s"CREATE TABLE tbl2(ID long) USING parquet location '$location'")
spark.sql("INSERT OVERWRITE TABLE tbl2 SELECT ID FROM view1")
println(spark.read.parquet(location).schema)
spark.table("tbl2").show()
```

The output column name in the schema will be `id` instead of `ID`, so the last query shows nothing from `tbl2`.

By enabling debug logging, we can see that the output name is changed from `ID` to `id`: the `outputColumns` in `InsertIntoHadoopFsRelationCommand` are rewritten by the `RemoveRedundantAliases` rule.

**To guarantee correctness**, we should change the output columns from `Seq[Attribute]` to `Seq[String]` to avoid its names being replaced by optimizer.
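A toy model of the problem: if the write command keeps attributes, any optimizer rule that rewrites them can also rewrite their names, whereas names copied into a `Seq[String]` at analysis time are out of the optimizer's reach. `Attr`, `WriteCommand`, and `NameCapture` are hypothetical simplifications; the real `RemoveRedundantAliases` rule and `InsertIntoHadoopFsRelationCommand` are considerably more involved.

```scala
// Hypothetical, heavily simplified model; none of these types are Spark classes.
final case class Attr(name: String, exprId: Long)

// Stand-in for a write command that stores output column names as plain strings,
// captured from the analyzed query before any optimizer rule runs.
final case class WriteCommand(query: Seq[Attr], outputColumnNames: Seq[String])

object NameCapture {
  // Mimics what an alias-removal rule can do: the alias `ID` (exprId 2) over the
  // column `id` (exprId 1) is replaced by the underlying attribute, so its name changes.
  def removeRedundantAliases(query: Seq[Attr], aliasTargets: Map[Long, Attr]): Seq[Attr] =
    query.map(a => aliasTargets.getOrElse(a.exprId, a))

  def optimize(cmd: WriteCommand, aliasTargets: Map[Long, Attr]): WriteCommand =
    cmd.copy(query = removeRedundantAliases(cmd.query, aliasTargets))

  val analyzed: Seq[Attr] = Seq(Attr("ID", 2L))
  val optimized: WriteCommand =
    optimize(WriteCommand(analyzed, analyzed.map(_.name)), Map(2L -> Attr("id", 1L)))

  // Names read from the rewritten attributes: Seq("id"), the wrong schema.
  val namesFromAttributes: Seq[String] = optimized.query.map(_.name)
  // Names captured as strings: Seq("ID"), what the file schema should use.
  val namesFromStrings: Seq[String] = optimized.outputColumnNames
}
```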

I will fix project elimination related rules in https://github.com/apache/spark/pull/22311 after this one.

## How was this patch tested?

Unit test.

Closes #22320 from gengliangwang/fixOutputSchema.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In both ORC data sources, the `createFilter` function has exponential time complexity because it generates a skewed filter tree. This PR fixes this with a new `buildTree` function.

**REPRODUCE**

```scala
// Create and read 1 row table with 1000 columns
sql("set spark.sql.orc.filterPushdown=true")
val selectExpr = (1 to 1000).map(i => s"id c$i")
spark.range(1).selectExpr(selectExpr: _*).write.mode("overwrite").orc("/tmp/orc")
print(s"With 0 filters, ")
spark.time(spark.read.orc("/tmp/orc").count)

// Increase the number of filters
(20 to 30).foreach { width =>
  val whereExpr = (1 to width).map(i => s"c$i is not null").mkString(" and ")
  print(s"With $width filters, ")
  spark.time(spark.read.orc("/tmp/orc").where(whereExpr).count)
}
```

**RESULT**

```
With 0 filters, Time taken: 653 ms
With 20 filters, Time taken: 962 ms
With 21 filters, Time taken: 1282 ms
With 22 filters, Time taken: 1982 ms
With 23 filters, Time taken: 3855 ms
With 24 filters, Time taken: 6719 ms
With 25 filters, Time taken: 12669 ms
With 26 filters, Time taken: 25032 ms
With 27 filters, Time taken: 49585 ms
With 28 filters, Time taken: 98980 ms // over 1 min 38 seconds
With 29 filters, Time taken: 198368 ms // over 3 mins
With 30 filters, Time taken: 393744 ms // over 6 mins
```

**AFTER THIS PR**

```
With 0 filters, Time taken: 774 ms
With 20 filters, Time taken: 601 ms
With 21 filters, Time taken: 399 ms
With 22 filters, Time taken: 679 ms
With 23 filters, Time taken: 363 ms
With 24 filters, Time taken: 342 ms
With 25 filters, Time taken: 336 ms
With 26 filters, Time taken: 352 ms
With 27 filters, Time taken: 322 ms
With 28 filters, Time taken: 302 ms
With 29 filters, Time taken: 307 ms
With 30 filters, Time taken: 301 ms
```
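The effect of a balanced `buildTree` can be sketched with a toy filter tree. Combining n conjuncts with a left fold produces a chain of depth n - 1, while splitting the list in half at each step produces a tree of depth about log2(n). Assuming, as the timings above suggest, that the conversion cost roughly doubles with each extra level of nesting, this is what turns exponential time into roughly linear time. The `Filter`, `IsNotNull`, and `And` types below are hypothetical stand-ins, not Spark's `org.apache.spark.sql.sources` classes, and the code is not the PR's implementation.

```scala
object FilterTrees {
  // Hypothetical filter ADT standing in for data source filters.
  sealed trait Filter
  final case class IsNotNull(column: String) extends Filter
  final case class And(left: Filter, right: Filter) extends Filter

  // Left-skewed: And(And(And(f1, f2), f3), f4)... has depth n - 1.
  def skewedTree(filters: Seq[Filter]): Option[Filter] =
    filters.reduceLeftOption[Filter]((l, r) => And(l, r))

  // Balanced: split the list in half and combine recursively; depth is ceil(log2(n)).
  def buildTree(filters: Seq[Filter]): Option[Filter] = filters.length match {
    case 0 => None
    case 1 => filters.headOption
    case n =>
      val (left, right) = filters.splitAt(n / 2)
      for (l <- buildTree(left); r <- buildTree(right)) yield And(l, r)
  }

  // Number of nested And levels above the leaves.
  def depth(f: Filter): Int = f match {
    case And(l, r) => 1 + math.max(depth(l), depth(r))
    case _         => 0
  }
}
```

For 30 filters, the left-skewed tree has depth 29, while the balanced tree has depth 5.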

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22313 from dongjoon-hyun/SPARK-25306.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Remove test-2.10.jar and add test-2.12.jar.

## How was this patch tested?

```
$ sbt -Dscala-2.12
> ++ 2.12.6
> project hive
> testOnly *HiveSparkSubmitSuite -- -z "8489"
```

Closes #22308 from sadhen/SPARK-8489-FOLLOWUP.

Authored-by: Darcy Shen <sadhen@zoho.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

### For `SPARK-5775 read array from partitioned_parquet_with_key_and_complextypes`:

Scala 2.12:

```
scala> (1 to 10).toString
res4: String = Range 1 to 10
```

Scala 2.11:

```
scala> (1 to 10).toString
res2: String = Range(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
```

And

```scala
def prepareAnswer(answer: Seq[Row], isSorted: Boolean): Seq[Row] = {
  val converted: Seq[Row] = answer.map(prepareRow)
  if (!isSorted) converted.sortBy(_.toString()) else converted
}
```

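Sorting by `_.toString` is not a good idea: as the `Range` example above shows, `toString` output can differ between Scala versions, so the resulting order is not stable across them.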
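A version-independent alternative is to sort by a key built explicitly from the elements instead of relying on a collection's `toString`. The helper below is a hypothetical sketch, not the change made in this PR.

```scala
object StableSortKey {
  // Hypothetical helper: renders sequences and arrays element by element, so the
  // key does not depend on how a particular Scala version implements `toString`
  // for `Range`, `WrappedArray`, `ArraySeq`, and so on.
  def key(value: Any): String = value match {
    case null        => "null"
    case a: Array[_] => key(a.toSeq)
    case s: Seq[_]   => s.map(key).mkString("[", ",", "]")
    case other       => other.toString
  }
}
```

With this key, `(1 to 3)`, `Seq(1, 2, 3)` and `Array(1, 2, 3)` all sort as `[1,2,3]` on any Scala version.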

### Other failures are caused by

```scala
Array(Int.box(1)).toSeq == Array(Double.box(1.0)).toSeq
```

This comparison is `false` in Scala 2.12.2+ and `true` in 2.11.x, 2.12.0 and 2.12.1.

## How was this patch tested?

This is a patch on a specific unit test.

Closes #22264 from sadhen/SPARK25256.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Since https://github.com/apache/spark/pull/21696, Spark uses the Parquet schema instead of the Hive metastore schema to do pushdown.

That change avoids returning wrong records when the Hive metastore schema and the Parquet schema use different letter cases. This PR adds a test case for it.

More details:

https://issues.apache.org/jira/browse/SPARK-25206
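The idea behind using the Parquet schema for pushdown can be sketched as a case-insensitive lookup from the metastore column name to the physical Parquet field name, skipping pushdown when the lookup is missing or ambiguous. This is a simplified, hypothetical helper (`CaseInsensitiveFieldLookup` is not a Spark class), and treating an ambiguous match as "do not push down" is an assumption of the sketch.

```scala
import java.util.Locale

object CaseInsensitiveFieldLookup {
  // Hypothetical helper: maps a metastore column name to the physical Parquet
  // field name, ignoring letter case. Returns None when there is no match or when
  // several Parquet fields differ only in case; a filter on such a column would
  // then simply not be pushed down (an assumption of this sketch).
  def parquetFieldFor(name: String, parquetFields: Seq[String]): Option[String] = {
    val wanted = name.toLowerCase(Locale.ROOT)
    parquetFields.filter(_.toLowerCase(Locale.ROOT) == wanted) match {
      case Seq(single) => Some(single)
      case _           => None
    }
  }
}
```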

## How was this patch tested?

Unit tests.

Closes #22267 from wangyum/SPARK-24716-TESTS.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The problems below were introduced by #21320 and #11744.

```
$ sbt
> ++2.12.6
> project sql
> compile
...
[error] [warn] spark/sql/core/src/main/scala/org/apache/spark/sql/execution/ProjectionOverSchema.scala:41: match may not be exhaustive.
[error] It would fail on the following inputs: (_, ArrayType(_, _)), (_, _)
[error] [warn] getProjection(a.child).map(p => (p, p.dataType)).map {
[error] [warn]
[error] [warn] spark/sql/core/src/main/scala/org/apache/spark/sql/execution/ProjectionOverSchema.scala:52: match may not be exhaustive.
[error] It would fail on the following input: (_, _)
[error] [warn] getProjection(child).map(p => (p, p.dataType)).map {
[error] [warn]
...
```

And

```
$ sbt
> ++2.12.6
> project hive
> testOnly *ParquetMetastoreSuite
...
[error] /Users/rendong/wdi/spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala:22: object tools is not a member of package scala
[error] import scala.tools.nsc.Properties
[error] ^
[error] /Users/rendong/wdi/spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala:146: not found: value Properties
[error] val version = Properties.versionNumberString match {
[error] ^
[error] two errors found
...
```
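Both problems have common, version-independent fixes, sketched below as an illustration rather than the exact change made in this PR: give a tuple pattern match an explicit fallback case so scalac can see it is exhaustive, and read the Scala version from `scala.util.Properties`, which ships with `scala-library`, instead of `scala.tools.nsc.Properties`, which lives in `scala-compiler`. The types `DType`, `IntT`, `ArrayT`, and `StructT` are hypothetical, not Spark's `DataType` classes.

```scala
object Scala212Fixes {
  // Hypothetical, simplified data types (not Spark's DataType hierarchy).
  sealed trait DType
  case object IntT extends DType
  final case class ArrayT(element: DType) extends DType
  final case class StructT(fields: Seq[String]) extends DType

  // Without the last case, scalac warns "match may not be exhaustive" because
  // (_, IntT) is not covered. The explicit fallback makes the match total.
  def describe(p: (String, DType)): String = p match {
    case (name, StructT(fields)) => s"$name: struct with ${fields.size} fields"
    case (name, ArrayT(_))       => s"$name: array"
    case (name, other)           => s"$name: unsupported type $other"
  }

  // scala.util.Properties is part of scala-library, so it is always on the class path.
  def binaryVersion(full: String = scala.util.Properties.versionNumberString): String =
    full.split('.').take(2).mkString(".")
}
```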

## How was this patch tested?

Existing tests.

Closes #22260 from sadhen/fix_exhaustive_match.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In this PR, I propose not performing a recursive parallel listing of files in the `scanPartitions` method, because it can cause a deadlock. Instead, `scanPartitions` runs in parallel for top-level partitions only.
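One common way such a deadlock arises: a task running on a bounded thread pool submits more work to the same pool and then blocks waiting for it; once every thread is blocked like this, nothing can make progress. The sketch below shows the shape of the fix under that assumption: only top-level partition directories are listed in parallel, and each task lists its subtree sequentially, so no task ever waits on the pool. The in-memory `Tree` and the `TopLevelParallelScan` object are hypothetical; the real `scanPartitions` works on Hadoop file systems.

```scala
import java.util.concurrent.{Callable, Executors, Future, TimeUnit}

object TopLevelParallelScan {
  // Hypothetical in-memory directory tree: path -> child directory paths.
  type Tree = Map[String, Seq[String]]

  // Sequential recursive listing used inside each task; it never submits
  // new work to the pool, so a task can never block waiting on the pool.
  def listRecursively(tree: Tree, dir: String): Seq[String] =
    dir +: tree.getOrElse(dir, Seq.empty).flatMap(child => listRecursively(tree, child))

  // Parallelism is applied only to the top-level directories under `root`.
  def scan(tree: Tree, root: String, threads: Int): Seq[String] = {
    val pool = Executors.newFixedThreadPool(threads)
    try {
      val topLevel = tree.getOrElse(root, Seq.empty)
      val futures: Seq[Future[Seq[String]]] = topLevel.map { dir =>
        pool.submit(new Callable[Seq[String]] {
          def call(): Seq[String] = listRecursively(tree, dir)
        })
      }
      root +: futures.flatMap(_.get(30, TimeUnit.SECONDS))
    } finally {
      pool.shutdown()
    }
  }
}
```

Even with a single thread, this completes, because no task depends on another task from the same pool.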

## How was this patch tested?

I extended an existing test to trigger the deadlock.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #22233 from MaxGekk/fix-recover-partitions.

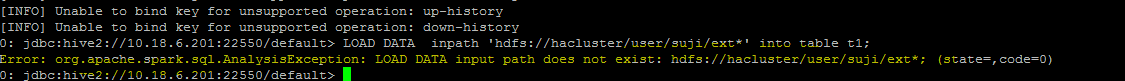

## What changes were proposed in this pull request?

**Problem statement**

The LOAD DATA command does not work with HDFS file paths that contain wildcard characters such as `*`.

For example:

`load data inpath 'hdfs://hacluster/user/ext*' into table t1`

throws an `AnalysisException` when the query is executed.

**Analysis -**

The `fs.exists()` API, which the LOAD command currently uses for path validation, cannot resolve paths containing a wildcard pattern. To mitigate this, this PR uses the `globStatus()` API instead, which resolves paths with HDFS-supported wildcards such as `*` and `?` (in line with Hive's wildcard support).

**Improvement identified as part of this issue -**

Currently, the system does not support a wildcard character in a folder-level path on the local file system. This PR handles that scenario: using the same `globStatus()` API unifies the validation logic for local and non-local file systems, which keeps the behavior of HDFS and local file paths consistent in the LOAD command.

With this improvement, users can use a wildcard character in a folder-level path on the local file system in the LOAD command, in line with Hive's behavior. In older versions, wildcards could be used only in the file name of a local path; using one in a folder path produced an error saying it was not supported.

For example: `load data local inpath '/localfilesystem/folder*' into table t1`
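Hadoop's `FileSystem.globStatus` needs Hadoop on the class path, so the sketch below swaps in Java NIO's glob `PathMatcher` to illustrate the same validation idea: a pattern is accepted when it expands to at least one existing path, and an empty expansion corresponds to the "input path does not exist" error. NIO and Hadoop glob syntax are similar for `*` and `?` but not identical, and `GlobValidation` is a hypothetical helper that matches against a given list of paths rather than listing a real file system.

```scala
import java.nio.file.{FileSystems, Paths}

object GlobValidation {
  // Hypothetical helper: returns the candidate paths matched by a glob pattern
  // such as "/user/data/suji*/*". `*` and `?` do not cross directory boundaries.
  // An empty result plays the role of the "input path does not exist" error.
  def expand(pattern: String, candidates: Seq[String]): Seq[String] = {
    val matcher = FileSystems.getDefault.getPathMatcher("glob:" + pattern)
    candidates.filter(path => matcher.matches(Paths.get(path)))
  }
}
```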

## How was this patch tested?

a) Manually tested by executing test cases on an HDFS YARN cluster. The reports are attached in the section below.

b) Existing test cases verify the impact and functionality for local file path scenarios.

c) A test case has been added to verify the functionality when a wildcard is used in a folder-level path on the local file system.

## Test Results

Note: all IPs were changed to localhost for security reasons.

HDFS path details

```
vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith1
Found 2 items
-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith1/typeddata60.txt
-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith1/typeddata61.txt
vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith2
Found 2 items
-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith2/typeddata60.txt
-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith2/typeddata61.txt
```

Positive scenario: load each file by its complete path, to establish the expected record count.

```
0: jdbc:hive2://localhost:22550/default> create table wild_spark (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (1.217 seconds)
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata60.txt' into table wild_spark;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (4.236 seconds)
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata61.txt' into table wild_spark;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (0.602 seconds)
0: jdbc:hive2://localhost:22550/default> select count(*) from wild_spark;
+-----------+--+
| count(1) |
+-----------+--+
| 121 |
+-----------+--+
1 row selected (18.529 seconds)
0: jdbc:hive2://localhost:22550/default>
```

With a wildcard character in the file path:

```
0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (0.409 seconds)
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/type*' into table spark_withWildChar;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (1.502 seconds)
0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar;
+-----------+--+
| count(1) |
+-----------+--+
| 121 |
+-----------+--+
```

With the `?` wildcard:

```
0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_DiffChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (0.489 seconds)
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata60.txt' into table spark_withWildChar_DiffChar;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (1.152 seconds)
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata61.txt' into table spark_withWildChar_DiffChar;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (0.644 seconds)
0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_DiffChar;
+-----------+--+
| count(1) |
+-----------+--+
| 121 |
+-----------+--+
1 row selected (16.078 seconds)
```

With a folder-level wildcard:

```
0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_folderlevel (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (0.489 seconds)
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/suji*/*' into table spark_withWildChar_folderlevel;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (1.152 seconds)
0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_folderlevel;
+-----------+--+
| count(1) |
+-----------+--+
| 242 |
+-----------+--+
1 row selected (16.078 seconds)
```

Negative scenario: invalid path

```
0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujiinvalid*/*' into table spark_withWildChar_folder;
Error: org.apache.spark.sql.AnalysisException: LOAD DATA input path does not exist: /user/data/sujiinvalid*/*; (state=,code=0)
0: jdbc:hive2://localhost:22550/default>
```

Hive test results: file level

```
0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_files (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;
No rows affected (0.723 seconds)
0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/sujith1/type*' into table hive_withWildChar_files;
INFO : Loading data to table default.hive_withwildchar_files from hdfs://hacluster/user/sujith1/type*
No rows affected (0.682 seconds)
0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_files;
+------+--+
| _c0 |
+------+--+
| 121 |
+------+--+
1 row selected (50.832 seconds)
```

Hive test results: folder level

```
0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_folder (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;
No rows affected (0.459 seconds)
0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/suji*/*' into table hive_withWildChar_folder;
INFO : Loading data to table default.hive_withwildchar_folder from hdfs://hacluster/user/data/suji*/*
No rows affected (0.76 seconds)
0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_folder;
+------+--+
| _c0 |
+------+--+
| 242 |
+------+--+
1 row selected (46.483 seconds)
```

Closes #20611 from sujith71955/master_wldcardsupport.

Lead-authored-by: s71955 <sujithchacko.2010@gmail.com>

Co-authored-by: sujith71955 <sujithchacko.2010@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

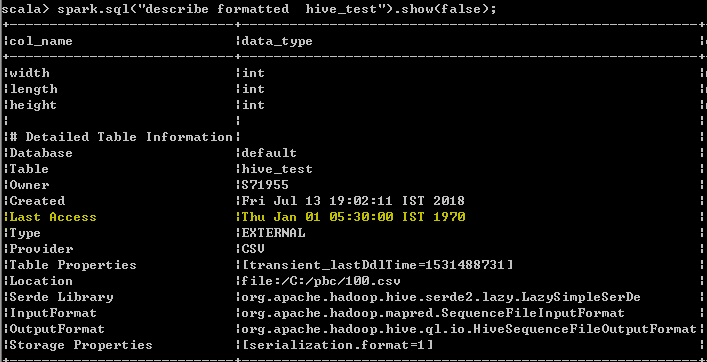

## What changes were proposed in this pull request?

[SPARK-25126](https://issues.apache.org/jira/browse/SPARK-25126) reports that loading a large number of ORC files consumes a lot of memory in both 2.0 and 2.3. The issue is caused by creating a `Reader` for every ORC file in order to infer the schema.

In `OrcFileOperator.readSchema`, a `Reader` is created for every file, although only the first valid one is used. This uses a significant amount of memory when `paths` contains a lot of files. In 2.3, a different code path (`OrcUtils.readSchema`) is used for inferring the schema of ORC files. This commit changes both functions to create the `Reader` lazily.
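The difference between eager and lazy reader creation can be sketched with a fake reader factory that records which files it opened. Mapping over an `Iterator` creates readers one at a time, and `collectFirst` stops at the first file with a valid schema; mapping over a strict collection opens every file first. `FakeReaderFactory` and the schema strings are hypothetical stand-ins for ORC `Reader`s, not the code changed in this commit.

```scala
object LazySchemaInference {
  // Hypothetical stand-in for opening an ORC `Reader`: returns the file's schema,
  // or None for a file without a usable schema, and records every file it opened.
  final class FakeReaderFactory(schemas: Map[String, Option[String]]) {
    var opened: Vector[String] = Vector.empty
    def readSchema(path: String): Option[String] = {
      opened :+= path
      schemas.getOrElse(path, None)
    }
  }

  // Eager: mapping over a strict collection (e.g. a List) opens a reader for every path.
  def eager(paths: Seq[String], f: FakeReaderFactory): Option[String] =
    paths.map(f.readSchema).collectFirst { case Some(schema) => schema }

  // Lazy: the iterator opens readers one by one and stops at the first valid schema.
  def lazily(paths: Seq[String], f: FakeReaderFactory): Option[String] =
    paths.iterator.map(f.readSchema).collectFirst { case Some(schema) => schema }
}
```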

## How was this patch tested?

Pass the Jenkins with a newly added test case by dongjoon-hyun

Closes #22157 from raofu/SPARK-25126.

Lead-authored-by: Rao Fu <rao@coupang.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Co-authored-by: Rao Fu <raofu04@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR shows RDD/relation names in RDD and Hive table scan nodes, so these names now appear in the web UI and in `explain` output.

For example:

```
scala> sql("CREATE TABLE t(c1 int) USING hive")
scala> sql("INSERT INTO t VALUES(1)")
scala> spark.table("t").explain()
== Physical Plan ==
Scan hive default.t [c1#8], HiveTableRelation `default`.`t`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [c1#8]
^^^^^^^^^^^
```

<img width="212" alt="spark-pr-hive" src="https://user-images.githubusercontent.com/692303/44501013-51264c80-a6c6-11e8-94f8-0704aee83bb6.png">

Closes #20226

## How was this patch tested?

Added tests in `DataFrameSuite`, `DatasetSuite`, and `HiveExplainSuite`

Closes #22153 from maropu/pr20226.

Lead-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Co-authored-by: Tejas Patil <tejasp@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fix issues arising from the fact that the builtins `file`, `long`, `raw_input()`, `unicode`, `xrange()`, etc. were all removed in Python 3. **Undefined names** can raise [NameError](https://docs.python.org/3/library/exceptions.html#NameError) at runtime.

## How was this patch tested?

* `$ python2 -m flake8 . --count --select=E9,F82 --show-source --statistics`

* `$ python3 -m flake8 . --count --select=E9,F82 --show-source --statistics`

holdenk

flake8 testing of https://github.com/apache/spark on Python 3.6.3

`$ python3 -m flake8 . --count --select=E901,E999,F821,F822,F823 --show-source --statistics`

```
./dev/merge_spark_pr.py:98:14: F821 undefined name 'raw_input'
    result = raw_input("\n%s (y/n): " % prompt)
             ^
./dev/merge_spark_pr.py:136:22: F821 undefined name 'raw_input'
    primary_author = raw_input(
                     ^
./dev/merge_spark_pr.py:186:16: F821 undefined name 'raw_input'
    pick_ref = raw_input("Enter a branch name [%s]: " % default_branch)
               ^
./dev/merge_spark_pr.py:233:15: F821 undefined name 'raw_input'
    jira_id = raw_input("Enter a JIRA id [%s]: " % default_jira_id)
              ^
./dev/merge_spark_pr.py:278:20: F821 undefined name 'raw_input'
    fix_versions = raw_input("Enter comma-separated fix version(s) [%s]: " % default_fix_versions)
                   ^
./dev/merge_spark_pr.py:317:28: F821 undefined name 'raw_input'
            raw_assignee = raw_input(
                           ^
./dev/merge_spark_pr.py:430:14: F821 undefined name 'raw_input'
    pr_num = raw_input("Which pull request would you like to merge? (e.g. 34): ")
             ^
./dev/merge_spark_pr.py:442:18: F821 undefined name 'raw_input'
        result = raw_input("Would you like to use the modified title? (y/n): ")
                 ^
./dev/merge_spark_pr.py:493:11: F821 undefined name 'raw_input'
    while raw_input("\n%s (y/n): " % pick_prompt).lower() == "y":
          ^
./dev/create-release/releaseutils.py:58:16: F821 undefined name 'raw_input'
    response = raw_input("%s [y/n]: " % msg)
               ^
./dev/create-release/releaseutils.py:152:38: F821 undefined name 'unicode'
        author = unidecode.unidecode(unicode(author, "UTF-8")).strip()
                                     ^
./python/setup.py:37:11: F821 undefined name '__version__'
VERSION = __version__
          ^
./python/pyspark/cloudpickle.py:275:18: F821 undefined name 'buffer'
        dispatch[buffer] = save_buffer
                 ^
./python/pyspark/cloudpickle.py:807:18: F821 undefined name 'file'
        dispatch[file] = save_file
                 ^
./python/pyspark/sql/conf.py:61:61: F821 undefined name 'unicode'
        if not isinstance(obj, str) and not isinstance(obj, unicode):
                                                            ^
./python/pyspark/sql/streaming.py:25:21: F821 undefined name 'long'
    intlike = (int, long)
                    ^
./python/pyspark/streaming/dstream.py:405:35: F821 undefined name 'long'
        return self._sc._jvm.Time(long(timestamp * 1000))
                                  ^
./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:21:10: F821 undefined name 'xrange'
for i in xrange(50):
         ^
./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:22:14: F821 undefined name 'xrange'
    for j in xrange(5):
             ^
./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:23:18: F821 undefined name 'xrange'
        for k in xrange(20022):
                 ^
20 F821 undefined name 'raw_input'
20
```

Closes #20838 from cclauss/fix-undefined-names.

Authored-by: cclauss <cclauss@bluewin.ch>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Fixing typos is sometimes very hard. It's not so easy to visually review them. Recently, I discovered a very useful tool for it, [misspell](https://github.com/client9/misspell).

This pull request fixes minor typos detected by [misspell](https://github.com/client9/misspell) except for the false positives. If you would like me to work on other files as well, let me know.

## How was this patch tested?

### before

```

$ misspell . | grep -v '.js'

R/pkg/R/SQLContext.R:354:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:424:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:445:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:495:43: "definiton" is a misspelling of "definition"

NOTICE-binary:454:16: "containd" is a misspelling of "contained"

R/pkg/R/context.R:46:43: "definiton" is a misspelling of "definition"

R/pkg/R/context.R:74:43: "definiton" is a misspelling of "definition"

R/pkg/R/DataFrame.R:591:48: "persistance" is a misspelling of "persistence"

R/pkg/R/streaming.R:166:44: "occured" is a misspelling of "occurred"

R/pkg/inst/worker/worker.R:65:22: "ouput" is a misspelling of "output"

R/pkg/tests/fulltests/test_utils.R:106:25: "environemnt" is a misspelling of "environment"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/InMemoryStoreSuite.java:38:39: "existant" is a misspelling of "existent"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/LevelDBSuite.java:83:39: "existant" is a misspelling of "existent"

common/network-common/src/main/java/org/apache/spark/network/crypto/TransportCipher.java:243:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:234:19: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:238:63: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:244:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:276:39: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

common/unsafe/src/test/scala/org/apache/spark/unsafe/types/UTF8StringPropertyCheckSuite.scala:195:15: "orgin" is a misspelling of "origin"

core/src/main/scala/org/apache/spark/api/python/PythonRDD.scala:621:39: "gauranteed" is a misspelling of "guaranteed"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/main/scala/org/apache/spark/storage/DiskStore.scala:282:18: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/util/ListenerBus.scala:64:17: "overriden" is a misspelling of "overridden"

core/src/test/scala/org/apache/spark/ShuffleSuite.scala:211:7: "substracted" is a misspelling of "subtracted"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:2468:84: "truely" is a misspelling of "truly"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:25:18: "persistance" is a misspelling of "persistence"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:26:69: "persistance" is a misspelling of "persistence"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

dev/run-pip-tests:55:28: "enviroments" is a misspelling of "environments"

dev/run-pip-tests:91:37: "virutal" is a misspelling of "virtual"

dev/merge_spark_pr.py:377:72: "accross" is a misspelling of "across"

dev/merge_spark_pr.py:378:66: "accross" is a misspelling of "across"

dev/run-pip-tests:126:25: "enviroments" is a misspelling of "environments"

docs/configuration.md:1830:82: "overriden" is a misspelling of "overridden"

docs/structured-streaming-programming-guide.md:525:45: "processs" is a misspelling of "processes"

docs/structured-streaming-programming-guide.md:1165:61: "BETWEN" is a misspelling of "BETWEEN"

docs/sql-programming-guide.md:1891:810: "behaivor" is a misspelling of "behavior"

examples/src/main/python/sql/arrow.py:98:8: "substract" is a misspelling of "subtract"

examples/src/main/python/sql/arrow.py:103:27: "substract" is a misspelling of "subtract"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/clustering/StreamingKMeans.scala:230:24: "inital" is a misspelling of "initial"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

mllib/src/test/scala/org/apache/spark/ml/clustering/KMeansSuite.scala:237:26: "descripiton" is a misspelling of "descriptions"

python/pyspark/find_spark_home.py:30:13: "enviroment" is a misspelling of "environment"

python/pyspark/context.py:937:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:938:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:939:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:940:12: "supress" is a misspelling of "suppress"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:713:8: "probabilty" is a misspelling of "probability"

python/pyspark/ml/clustering.py:1038:8: "Currenlty" is a misspelling of "Currently"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/ml/regression.py:1378:20: "paramter" is a misspelling of "parameter"

python/pyspark/mllib/stat/_statistics.py:262:8: "probabilty" is a misspelling of "probability"

python/pyspark/rdd.py:1363:32: "paramter" is a misspelling of "parameter"

python/pyspark/streaming/tests.py:825:42: "retuns" is a misspelling of "returns"

python/pyspark/sql/tests.py:768:29: "initalization" is a misspelling of "initialization"

python/pyspark/sql/tests.py:3616:31: "initalize" is a misspelling of "initialize"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerBackendUtil.scala:120:39: "arbitary" is a misspelling of "arbitrary"

resource-managers/mesos/src/test/scala/org/apache/spark/deploy/mesos/MesosClusterDispatcherArgumentsSuite.scala:26:45: "sucessfully" is a misspelling of "successfully"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerUtils.scala:358:27: "constaints" is a misspelling of "constraints"

resource-managers/yarn/src/test/scala/org/apache/spark/deploy/yarn/YarnClusterSuite.scala:111:24: "senstive" is a misspelling of "sensitive"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/SessionCatalog.scala:1063:5: "overwirte" is a misspelling of "overwrite"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala:1348:17: "compatability" is a misspelling of "compatibility"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/basicLogicalOperators.scala:77:36: "paramter" is a misspelling of "parameter"

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:1374:22: "precendence" is a misspelling of "precedence"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:238:27: "unnecassary" is a misspelling of "unnecessary"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ConditionalExpressionSuite.scala:212:17: "whn" is a misspelling of "when"

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/StreamingSymmetricHashJoinHelper.scala:147:60: "timestmap" is a misspelling of "timestamp"

sql/core/src/test/scala/org/apache/spark/sql/TPCDSQuerySuite.scala:150:45: "precentage" is a misspelling of "percentage"

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVInferSchemaSuite.scala:135:29: "infered" is a misspelling of "inferred"

sql/hive/src/test/resources/golden/udf_instr-1-2e76f819563dbaba4beb51e3a130b922:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_instr-2-32da357fc754badd6e3898dcc8989182:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-1-6e41693c9c6dceea4d7fab4c02884e4e:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-2-d9b5934457931447874d6bb7c13de478:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:9:79: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:13:110: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/annotate_stats_join.q:46:105: "distint" is a misspelling of "distinct"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/auto_sortmerge_join_11.q:29:3: "Currenly" is a misspelling of "Currently"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/avro_partitioned.q:72:15: "existant" is a misspelling of "existent"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/decimal_udf.q:25:3: "substraction" is a misspelling of "subtraction"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby2_map_multi_distinct.q:16:51: "funtion" is a misspelling of "function"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby_sort_8.q:15:30: "issueing" is a misspelling of "issuing"

sql/hive/src/test/scala/org/apache/spark/sql/sources/HadoopFsRelationTest.scala:669:52: "wiht" is a misspelling of "with"

sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/session/HiveSessionImpl.java:474:9: "Refering" is a misspelling of "Referring"

```

### after

```

$ misspell . | grep -v '.js'

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

```

Closes #22070 from seratch/fix-typo.

Authored-by: Kazuhiro Sera <seratch@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

A logical `Limit` is executed physically by two operators, `LocalLimit` and `GlobalLimit`.

Most of the time, we gather all data into a single partition in order to run `GlobalLimit`. With a very large limit, shuffling the data into one partition causes performance issues and also reduces parallelism.

We can avoid shuffling into a single partition if we don't care about data ordering. This patch implements this idea by running a map stage during the global limit, which collects the number of rows in each partition. Each partition then locally retrieves its share of the limited data, without any shuffling, to complete the global limit.

For example, suppose we have three partitions with 100, 100, and 50 rows respectively. For a global limit of 100 rows, we may take (34, 33, 33) rows from the partitions locally. After the global limit we still have three partitions.
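The even split in this example can be sketched as follows. This is a minimal illustration, not Spark's `GlobalLimit` code: `EvenLimitSketch` and `distributeLimit` are hypothetical names, and the per-partition row counts are assumed to have already been collected by the map stage.

```scala
object EvenLimitSketch {
  /** Hypothetical helper: given the row count of each partition (assumed to be
    * collected by a map stage) and the limit, decide how many rows each
    * partition keeps. Rows are spread as evenly as possible; partitions that
    * run out of rows leave their unused share to the others in the next round. */
  def distributeLimit(counts: Seq[Long], limit: Long): Seq[Long] = {
    val taken = Array.fill(counts.length)(0L)
    var remaining = math.min(limit, counts.sum)
    while (remaining > 0) {
      // Partitions that still have rows available.
      val open = counts.indices.filter(i => taken(i) < counts(i))
      val share = remaining / open.length
      val extra = remaining % open.length
      open.zipWithIndex.foreach { case (i, k) =>
        // The first `extra` open partitions take one additional row.
        val want = share + (if (k < extra) 1L else 0L)
        val got = math.min(want, counts(i) - taken(i))
        taken(i) += got
        remaining -= got
      }
    }
    taken.toSeq
  }
}
```

With counts `Seq(100L, 100L, 50L)` and a limit of `100L` this returns `Seq(34, 33, 33)`, matching the example; with `Seq(10L, 100L, 100L)` the first partition is exhausted after 10 rows and the result is `Seq(10, 45, 45)`.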

If the data partitions have a certain ordering, we can't distribute the required rows evenly across partitions, because that could change the data ordering. But we can still avoid shuffling.
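For the ordered case, one simple strategy (an assumption here, not necessarily what the patch does) is to take rows from the partitions in order until the limit is met. No rows move between partitions, so no shuffle is needed. `OrderedLimitSketch.takeInOrder` is a hypothetical helper.

```scala
object OrderedLimitSketch {
  /** Hypothetical helper: keep the first `limit` rows in partition order.
    * Each partition keeps min(its row count, rows still needed before it). */
  def takeInOrder(counts: Seq[Long], limit: Long): Seq[Long] = {
    // neededBefore(i) = rows still needed when partition i is reached.
    val neededBefore = counts.scanLeft(limit)((need, c) => math.max(need - c, 0L))
    counts.zip(neededBefore).map { case (c, need) => math.min(c, need) }
  }
}
```

For counts `Seq(30L, 50L, 40L)` and a limit of `100L` this keeps `Seq(30, 50, 20)`: earlier partitions are filled first, so the global order of the retained rows is preserved.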

## How was this patch tested?

Jenkins tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #16677 from viirya/improve-global-limit-parallelism.

## What changes were proposed in this pull request?

Currently, `ANALYZE TABLE` calculates the table size sequentially, one partition at a time. We can parallelize the size calculation over partitions.

Results: Tested on a table with 100 partitions and data stored in S3.

With changes:

- 10.429s

- 10.557s

- 10.439s

- 9.893s

Without changes:

- 110.034s

- 99.510s

- 100.743s

- 99.106s
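The parallelization described for `ANALYZE TABLE` can be sketched as follows, assuming each partition's size can be computed independently. This uses Scala `Future`s on the global execution context rather than whatever mechanism the patch actually uses; `ParallelSizeSketch`, `totalSize`, and its `sizeOf` callback are hypothetical.

```scala
import scala.concurrent.{Await, Future, blocking}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object ParallelSizeSketch {
  /** Computes a table's total size by evaluating `sizeOf` for every partition
    * concurrently and summing the results. `sizeOf` is a hypothetical stand-in
    * for the per-partition size calculation (e.g. listing files in S3). */
  def totalSize[P](partitions: Seq[P])(sizeOf: P => Long): Long = {
    // `blocking` hints to the global pool that the work may wait on I/O,
    // so it can add threads instead of stalling the other tasks.
    val sizes: Future[Seq[Long]] =
      Future.traverse(partitions)(p => Future(blocking(sizeOf(p))))
    Await.result(sizes, 10.minutes).sum
  }
}
```

When each `sizeOf` call is dominated by remote-storage latency, running them concurrently lets the waits overlap, which is consistent with the roughly tenfold difference in the timings above.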

## How was this patch tested?

Simple unit test.

Closes #21608 from Achuth17/improveAnalyze.

Lead-authored-by: Achuth17 <Achuth.narayan@gmail.com>

Co-authored-by: arajagopal17 <arajagopal@qubole.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR fixes typos of the form `auxiliary verb + verb[s]`, i.e. an auxiliary verb followed by a verb that wrongly carries a trailing -s. This is a follow-up to #21956.

## How was this patch tested?

N/A

Closes #22040 from kiszk/spellcheck1.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This is a follow-up pr of #22014.

We still have some compilation errors in Scala 2.12 with sbt:

```

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/DataFrameNaFunctions.scala:493: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] val typeMatches = (targetType, f.dataType) match {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/MicroBatchExecution.scala:393: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] prevBatchOff.get.toStreamProgress(sources).foreach {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/AggUtils.scala:173: match may not be exhaustive.

[error] It would fail on the following input: AggregateExpression(_, _, false, _)

[error] [warn] val rewrittenDistinctFunctions = functionsWithDistinct.map {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/state/SymmetricHashJoinStateManager.scala:271: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] keyWithIndexToValueMetrics.customMetrics.map {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/command/tables.scala:959: match may not be exhaustive.

[error] It would fail on the following input: CatalogTableType(_)

[error] [warn] val tableTypeString = metadata.tableType match {

[error] [warn]

[error] [warn] /.../sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala:923: match may not be exhaustive.

[error] It would fail on the following input: CatalogTableType(_)

[error] [warn] hiveTable.setTableType(table.tableType match {

[error] [warn]

```
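Several of these warnings come from matching a case class against a fixed set of constants (for example, `CatalogTableType`). One common way to silence such a warning is to add an explicit catch-all case. The sketch below uses a hypothetical `TableKind` class modeled loosely on `CatalogTableType`; it illustrates the pattern and is not necessarily the fix applied in this PR.

```scala
object MatchSketch {
  // Hypothetical case class whose legal values are predefined constants.
  final case class TableKind(name: String)
  object TableKind {
    val External: TableKind = TableKind("EXTERNAL")
    val Managed: TableKind = TableKind("MANAGED")
    val View: TableKind = TableKind("VIEW")
  }

  def describe(kind: TableKind): String = kind match {
    case TableKind.External => "EXTERNAL"
    case TableKind.Managed => "MANAGED"
    case TableKind.View => "VIEW"
    // The compiler only sees three constants, not every possible TableKind(_),
    // and may warn "match may not be exhaustive" without this catch-all.
    case other => throw new IllegalArgumentException(s"Unknown table kind: $other")
  }
}
```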

## How was this patch tested?

Manually built with Scala 2.12.

Closes #22039 from ueshin/issues/SPARK-25036/fix_match.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

The design details are attached to the JIRA issue [here](https://drive.google.com/file/d/1zKm3aNZ3DpsqIuoMvRsf0kkDkXsAasxH/view).

A high-level overview of the changes:

- Allow the qualifier to consist of more than one string.

- Add support for storing the qualifier, and enhance `lookupRelation` to keep the qualifier appropriately.

- Enhance the table-matching column resolution algorithm to account for a qualifier that is more than one string.

- Enhance the table matching algorithm in `UnresolvedStar.expand`.

- Ensure that we continue to support `select t1.i1 from db1.t1`.
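The matching rule for a multi-part qualifier can be sketched as below. This is a simplified, hypothetical model (`Attr`, `matches`), not Spark's resolution code: it assumes case-insensitive comparison and ignores nested-field access. A reference resolves to a column when its last part equals the column name and its remaining parts form a suffix of the column's qualifier, so both `t1.i1` and `db1.t1.i1` match column `i1` of `db1.t1`.

```scala
object QualifierMatchSketch {
  // Hypothetical simplified attribute: a multi-part qualifier plus a column name.
  final case class Attr(qualifier: Seq[String], name: String)

  /** True if `nameParts` (e.g. Seq("db1", "t1", "i1")) can refer to `attr`:
    * the last part must equal the column name, and the preceding parts must
    * be a suffix of the attribute's qualifier (compared case-insensitively). */
  def matches(attr: Attr, nameParts: Seq[String]): Boolean =
    nameParts.nonEmpty &&
      nameParts.last.equalsIgnoreCase(attr.name) && {
        val prefix = nameParts.init
        prefix.length <= attr.qualifier.length &&
          attr.qualifier.takeRight(prefix.length).zip(prefix).forall {
            case (q, p) => q.equalsIgnoreCase(p)
          }
      }
}
```

For `Attr(Seq("db1", "t1"), "i1")`, the references `i1`, `t1.i1`, and `db1.t1.i1` all match, while `db2.t1.i1` does not.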

## How was this patch tested?

- New tests are added.

- Several test scenarios were added in a separate [test PR 17067](https://github.com/apache/spark/pull/17067). Tests that were not supported earlier are marked with TODO markers; they are now supported by the code changes here.

- Existing unit tests (hive, catalyst, and sql) ran successfully.

Closes #17185 from skambha/colResolution.

Authored-by: Sunitha Kambhampati <skambha@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

There are many warnings in the current build (for instance see https://amplab.cs.berkeley.edu/jenkins/job/spark-master-test-sbt-hadoop-2.7/4734/console).

**common**:

```

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/kvstore/src/main/java/org/apache/spark/util/kvstore/LevelDB.java:237: warning: [rawtypes] found raw type: LevelDBIterator

[warn] void closeIterator(LevelDBIterator it) throws IOException {

[warn] ^

[warn] missing type arguments for generic class LevelDBIterator<T>

[warn] where T is a type-variable:

[warn] T extends Object declared in class LevelDBIterator

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:151: warning: [deprecation] group() in AbstractBootstrap has been deprecated

[warn] if (bootstrap != null && bootstrap.group() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:152: warning: [deprecation] group() in AbstractBootstrap has been deprecated

[warn] bootstrap.group().shutdownGracefully();

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:154: warning: [deprecation] childGroup() in ServerBootstrap has been deprecated

[warn] if (bootstrap != null && bootstrap.childGroup() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportServer.java:155: warning: [deprecation] childGroup() in ServerBootstrap has been deprecated

[warn] bootstrap.childGroup().shutdownGracefully();

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/util/NettyUtils.java:112: warning: [deprecation] PooledByteBufAllocator(boolean,int,int,int,int,int,int,int) in PooledByteBufAllocator has been deprecated

[warn] return new PooledByteBufAllocator(

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportClient.java:321: warning: [rawtypes] found raw type: Future

[warn] public void operationComplete(Future future) throws Exception {

[warn] ^

[warn] missing type arguments for generic class Future<V>

[warn] where V is a type-variable:

[warn] V extends Object declared in interface Future

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportResponseHandler.java:215: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, resp.streamId, resp.byteCount,

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportResponseHandler.java:215: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, resp.streamId, resp.byteCount,

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/client/TransportResponseHandler.java:215: warning: [unchecked] unchecked call to StreamInterceptor(MessageHandler<T>,String,long,StreamCallback) as a member of the raw type StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, resp.streamId, resp.byteCount,

[warn] ^

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportRequestHandler.java:255: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, wrappedCallback.getID(),

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportRequestHandler.java:255: warning: [rawtypes] found raw type: StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, wrappedCallback.getID(),

[warn] ^

[warn] missing type arguments for generic class StreamInterceptor<T>

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/server/TransportRequestHandler.java:255: warning: [unchecked] unchecked call to StreamInterceptor(MessageHandler<T>,String,long,StreamCallback) as a member of the raw type StreamInterceptor

[warn] StreamInterceptor interceptor = new StreamInterceptor(this, wrappedCallback.getID(),

[warn] ^

[warn] where T is a type-variable:

[warn] T extends Message declared in class StreamInterceptor

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/crypto/TransportCipher.java:270: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] region.transferTo(byteRawChannel, region.transfered());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:304: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] region.transferTo(byteChannel, region.transfered());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/test/java/org/apache/spark/network/ProtocolSuite.java:119: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] while (in.transfered() < in.count()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/network-common/src/test/java/org/apache/spark/network/ProtocolSuite.java:120: warning: [deprecation] transfered() in FileRegion has been deprecated

[warn] in.transferTo(channel, in.transfered());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/unsafe/src/test/java/org/apache/spark/unsafe/hash/Murmur3_x86_32Suite.java:80: warning: [static] static method should be qualified by type name, Murmur3_x86_32, instead of by an expression

[warn] Assert.assertEquals(-300363099, hasher.hashUnsafeWords(bytes, offset, 16, 42));

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/unsafe/src/test/java/org/apache/spark/unsafe/hash/Murmur3_x86_32Suite.java:84: warning: [static] static method should be qualified by type name, Murmur3_x86_32, instead of by an expression

[warn] Assert.assertEquals(-1210324667, hasher.hashUnsafeWords(bytes, offset, 16, 42));

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/common/unsafe/src/test/java/org/apache/spark/unsafe/hash/Murmur3_x86_32Suite.java:88: warning: [static] static method should be qualified by type name, Murmur3_x86_32, instead of by an expression

[warn] Assert.assertEquals(-634919701, hasher.hashUnsafeWords(bytes, offset, 16, 42));

[warn] ^

```

**launcher**:

```

[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/launcher/src/main/java/org/apache/spark/launcher/AbstractLauncher.java:31: warning: [rawtypes] found raw type: AbstractLauncher

[warn] public abstract class AbstractLauncher<T extends AbstractLauncher> {

[warn] ^

[warn] missing type arguments for generic class AbstractLauncher<T>

[warn] where T is a type-variable:

[warn] T extends AbstractLauncher declared in class AbstractLauncher

```

**core**:

```

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:99: method group in class AbstractBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] if (bootstrap != null && bootstrap.group() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:100: method group in class AbstractBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] bootstrap.group().shutdownGracefully()

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:102: method childGroup in class ServerBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] if (bootstrap != null && bootstrap.childGroup() != null) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/main/scala/org/apache/spark/api/r/RBackend.scala:103: method childGroup in class ServerBootstrap is deprecated: see corresponding Javadoc for more information.

[warn] bootstrap.childGroup().shutdownGracefully()

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/util/ClosureCleanerSuite.scala:151: reflective access of structural type member method getData should be enabled

[warn] by making the implicit value scala.language.reflectiveCalls visible.

[warn] This can be achieved by adding the import clause 'import scala.language.reflectiveCalls'

[warn] or by setting the compiler option -language:reflectiveCalls.

[warn] See the Scaladoc for value scala.language.reflectiveCalls for a discussion

[warn] why the feature should be explicitly enabled.

[warn] val rdd = sc.parallelize(1 to 1).map(concreteObject.getData)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/util/ClosureCleanerSuite.scala:175: reflective access of structural type member value innerObject2 should be enabled

[warn] by making the implicit value scala.language.reflectiveCalls visible.

[warn] val rdd = sc.parallelize(1 to 1).map(concreteObject.innerObject2.getData)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/util/ClosureCleanerSuite.scala:175: reflective access of structural type member method getData should be enabled

[warn] by making the implicit value scala.language.reflectiveCalls visible.

[warn] val rdd = sc.parallelize(1 to 1).map(concreteObject.innerObject2.getData)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/LocalSparkContext.scala:32: constructor Slf4JLoggerFactory in class Slf4JLoggerFactory is deprecated: see corresponding Javadoc for more information.

[warn] InternalLoggerFactory.setDefaultFactory(new Slf4JLoggerFactory())

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:218: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] assert(wrapper.stageAttemptId === stages.head.attemptId)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:261: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] stageAttemptId = stages.head.attemptId))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:287: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] stageAttemptId = stages.head.attemptId))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:471: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] stageAttemptId = stages.last.attemptId))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:966: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] listener.onTaskStart(SparkListenerTaskStart(dropped.stageId, dropped.attemptId, task))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:972: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] listener.onTaskEnd(SparkListenerTaskEnd(dropped.stageId, dropped.attemptId,

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:976: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] .taskSummary(dropped.stageId, dropped.attemptId, Array(0.25d, 0.50d, 0.75d))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:1146: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] SparkListenerTaskEnd(stage1.stageId, stage1.attemptId, "taskType", Success, tasks(1), null))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/status/AppStatusListenerSuite.scala:1150: value attemptId in class StageInfo is deprecated: Use attemptNumber instead

[warn] SparkListenerTaskEnd(stage1.stageId, stage1.attemptId, "taskType", Success, tasks(0), null))

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/storage/DiskStoreSuite.scala:197: method transfered in trait FileRegion is deprecated: see corresponding Javadoc for more information.

[warn] while (region.transfered() < region.count()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/core/src/test/scala/org/apache/spark/storage/DiskStoreSuite.scala:198: method transfered in trait FileRegion is deprecated: see corresponding Javadoc for more information.

[warn] region.transferTo(byteChannel, region.transfered())

[warn] ^

```
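The `reflectiveCalls` warnings above can be silenced with the import the compiler itself suggests. A minimal sketch, assuming Scala 2.12/2.13 (Scala 3 handles structural types differently); `ReflectiveCallsSketch` and its members are hypothetical and only mirror the shape of the `concreteObject.getData` calls in `ClosureCleanerSuite`.

```scala
import scala.language.reflectiveCalls

object ReflectiveCallsSketch {
  // Structural type: any value with a `getData(x: Int): Int` method.
  type HasGetData = { def getData(x: Int): Int }

  // Anonymous object typed only by its structure, not by a named trait.
  val concreteObject: HasGetData = new { def getData(x: Int): Int = x * 2 }

  // Calling a member through a structural type uses Java reflection; the
  // import above enables the feature explicitly, so no warning is emitted.
  def doubled(xs: Seq[Int]): Seq[Int] = xs.map(x => concreteObject.getData(x))
}
```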

**sql**:

```

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:534: abstract type T is unchecked since it is eliminated by erasure

[warn] assert(partitioning.isInstanceOf[T])

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:534: abstract type T is unchecked since it is eliminated by erasure

[warn] assert(partitioning.isInstanceOf[T])

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ObjectExpressionsSuite.scala:323: inferred existential type Option[Class[_$1]]( forSome { type _$1 }), which cannot be expressed by wildcards, should be enabled

[warn] by making the implicit value scala.language.existentials visible.

[warn] This can be achieved by adding the import clause 'import scala.language.existentials'

[warn] or by setting the compiler option -language:existentials.

[warn] See the Scaladoc for value scala.language.existentials for a discussion

[warn] why the feature should be explicitly enabled.

[warn] val optClass = Option(collectionCls)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/SpecificParquetRecordReaderBase.java:226: warning: [deprecation] ParquetFileReader(Configuration,FileMetaData,Path,List<BlockMetaData>,List<ColumnDescriptor>) in ParquetFileReader has been deprecated

[warn] this.reader = new ParquetFileReader(

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:178: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] (descriptor.getType() == PrimitiveType.PrimitiveTypeName.INT32 ||

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:179: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] (descriptor.getType() == PrimitiveType.PrimitiveTypeName.INT64 &&

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:181: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType() == PrimitiveType.PrimitiveTypeName.FLOAT ||

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:182: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType() == PrimitiveType.PrimitiveTypeName.DOUBLE ||

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:183: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType() == PrimitiveType.PrimitiveTypeName.BINARY))) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:198: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] switch (descriptor.getType()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:221: warning: [deprecation] getTypeLength() in ColumnDescriptor has been deprecated

[warn] readFixedLenByteArrayBatch(rowId, num, column, descriptor.getTypeLength());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:224: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] throw new IOException("Unsupported type: " + descriptor.getType());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:246: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] descriptor.getType().toString(),

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:258: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] switch (descriptor.getType()) {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/execution/datasources/parquet/VectorizedColumnReader.java:384: warning: [deprecation] getType() in ColumnDescriptor has been deprecated

[warn] throw new UnsupportedOperationException("Unsupported type: " + descriptor.getType());

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/vectorized/ArrowColumnVector.java:458: warning: [static] static variable should be qualified by type name, BaseRepeatedValueVector, instead of by an expression

[warn] int index = rowId * accessor.OFFSET_WIDTH;

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/main/java/org/apache/spark/sql/vectorized/ArrowColumnVector.java:460: warning: [static] static variable should be qualified by type name, BaseRepeatedValueVector, instead of by an expression

[warn] int end = offsets.getInt(index + accessor.OFFSET_WIDTH);

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/BenchmarkQueryTest.scala:57: a pure expression does nothing in statement position; you may be omitting necessary parentheses

[warn] case s => s

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetInteroperabilitySuite.scala:182: inferred existential type org.apache.parquet.column.statistics.Statistics[?0]( forSome { type ?0 <: Comparable[?0] }), which cannot be expressed by wildcards, should be enabled

[warn] by making the implicit value scala.language.existentials visible.

[warn] This can be achieved by adding the import clause 'import scala.language.existentials'

[warn] or by setting the compiler option -language:existentials.

[warn] See the Scaladoc for value scala.language.existentials for a discussion

[warn] why the feature should be explicitly enabled.

[warn] val columnStats = oneBlockColumnMeta.getStatistics

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/streaming/sources/ForeachBatchSinkSuite.scala:146: implicit conversion method conv should be enabled

[warn] by making the implicit value scala.language.implicitConversions visible.

[warn] This can be achieved by adding the import clause 'import scala.language.implicitConversions'

[warn] or by setting the compiler option -language:implicitConversions.

[warn] See the Scaladoc for value scala.language.implicitConversions for a discussion

[warn] why the feature should be explicitly enabled.

[warn] implicit def conv(x: (Int, Long)): KV = KV(x._1, x._2)

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/streaming/continuous/shuffle/ContinuousShuffleSuite.scala:48: implicit conversion method unsafeRow should be enabled

[warn] by making the implicit value scala.language.implicitConversions visible.

[warn] private implicit def unsafeRow(value: Int) = {

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetInteroperabilitySuite.scala:178: method getType in class ColumnDescriptor is deprecated: see corresponding Javadoc for more information.

[warn] assert(oneFooter.getFileMetaData.getSchema.getColumns.get(0).getType() ===

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetTest.scala:154: method readAllFootersInParallel in object ParquetFileReader is deprecated: see corresponding Javadoc for more information.

[warn] ParquetFileReader.readAllFootersInParallel(configuration, fs.getFileStatus(path)).asScala.toSeq

[warn] ^

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/sql/hive/src/test/java/org/apache/spark/sql/hive/test/Complex.java:679: warning: [cast] redundant cast to Complex

[warn] Complex typedOther = (Complex)other;

[warn] ^

```
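Several of the sql warnings above follow recurring patterns. The `AnalysisSuite` warning about `partitioning.isInstanceOf[T]` appears because `T` is erased at runtime, so the check cannot actually be performed. The sketch below shows one common fix: it passes a `ClassTag` through so the runtime class is available. This is not necessarily how this PR handled that warning, and the object and method names are hypothetical.

```scala
import scala.reflect.ClassTag

object ErasureSketch {
  // Warns "abstract type T is unchecked since it is eliminated by erasure":
  //   def isA[T](x: Any): Boolean = x.isInstanceOf[T]

  // The ClassTag context bound carries T's runtime class, so the check is real.
  // Intended for reference types; a primitive tag such as ClassTag.Int reports
  // the primitive class, which never matches a boxed value.
  def isA[T: ClassTag](x: Any): Boolean =
    implicitly[ClassTag[T]].runtimeClass.isInstance(x)
}
```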

**mllib**:

```

[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.

[warn] /home/jenkins/workspace/spark-master-test-maven-hadoop-2.7/mllib/src/test/scala/org/apache/spark/ml/recommendation/ALSSuite.scala:597: match may not be exhaustive.

[warn] It would fail on the following inputs: None, Some((x: Tuple2[?, ?] forSome x not in (?, ?)))

[warn] val df = dfs.find {

[warn] ^

```
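The `ALSSuite` warning reports a match on the result of `find` that does not cover `None`. The usual fix is to handle every case explicitly, as in this sketch. `firstLarge` and its input are hypothetical and unrelated to the actual ALS test code.

```scala
object ExhaustiveMatchSketch {
  // Warns "match may not be exhaustive" because None is not handled:
  //   xs.find(_._2 > 10) match { case Some((_, n)) => n }

  // Handling None explicitly makes the match exhaustive.
  def firstLarge(xs: Seq[(String, Int)]): Int = xs.find(_._2 > 10) match {
    case Some((_, n)) => n
    case None => throw new NoSuchElementException("no element greater than 10")
  }
}
```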

This PR does not aim to fix all of them. Some look tricky to fix, and many of the warnings are false positives (for example, a deprecated API that is exercised in its own test).

## How was this patch tested?

Existing tests should cover this.

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21975 from HyukjinKwon/remove-build-warnings.

## What changes were proposed in this pull request?

This PR addresses issues 2 and 3 in this [document](https://docs.google.com/document/d/1fbkjEL878witxVQpOCbjlvOvadHtVjYXeB-2mgzDTvk).

* We modified the closure cleaner to identify closures that are implemented via the LambdaMetafactory mechanism (serialized lambdas) (issue 2).

* We also fix the issue caused by scala/bug#11016. There are two options for solving the Unit issue: either add `()` at the end of the closure or use the trick described in the doc. Otherwise, overloading resolution does not work here (we are not going to eliminate either of the methods): the compiler tries to adapt the closure to Unit, which makes both methods candidates for overloading. When one of the overloads is polymorphic there is no ambiguity, and that is the workaround implemented. This does not look pretty, but it serves its purpose, since we need to support two different uses of `addTaskCompletionListener`: one that passes a `TaskCompletionListener` and one that passes a closure that is later wrapped in a `TaskCompletionListener` (issue 3).
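The polymorphic-overload workaround for issue 3 can be sketched as follows. `Ctx`, `CompletionListener`, and `addListener` are hypothetical stand-ins for `TaskContext`, `TaskCompletionListener`, and `addTaskCompletionListener`, and these signatures are illustrative rather than Spark's actual API. The closure overload takes a type parameter `U` for the closure's result. Passing an explicit type argument (`addListener[Unit](...)`) restricts overloading resolution to the polymorphic alternative, so the call is unambiguous.

```scala
object OverloadSketch {
  // Hypothetical SAM-style listener, standing in for TaskCompletionListener.
  trait CompletionListener {
    def onCompletion(ctx: Ctx): Unit
  }

  final class Ctx {
    private var listeners = List.empty[CompletionListener]

    // Overload 1: accepts an explicit listener object.
    def addListener(listener: CompletionListener): Ctx = {
      listeners = listener :: listeners
      this
    }

    // Overload 2: polymorphic in the closure's result type U; the closure is
    // wrapped in a listener and its result is discarded.
    def addListener[U](f: Ctx => U): Ctx =
      addListener(new CompletionListener {
        override def onCompletion(ctx: Ctx): Unit = f(ctx)
      })

    // Runs listeners in registration order.
    def complete(): Unit = listeners.reverse.foreach(_.onCompletion(this))
  }
}
```

A listener object resolves to the first overload. A closure passed with an explicit type argument, such as `ctx.addListener[Unit](_ => cleanup())`, resolves to the second.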

Note: regarding issue 1 in the doc the plan is:

> Do Nothing. Don’t try to fix this as this is only a problem for Java users who would want to use 2.11 binaries. In that case they can cast to MapFunction to be able to utilize lambdas. In Spark 3.0.0 the API should be simplified so that this issue is removed.

## How was this patch tested?

This was manually tested:

```
./dev/change-scala-version.sh 2.12
./build/mvn -DskipTests -Pscala-2.12 clean package
./build/mvn -Pscala-2.12 clean package -DwildcardSuites=org.apache.spark.serializer.ProactiveClosureSerializationSuite -Dtest=None
./build/mvn -Pscala-2.12 clean package -DwildcardSuites=org.apache.spark.util.ClosureCleanerSuite -Dtest=None
./build/mvn -Pscala-2.12 clean package -DwildcardSuites=org.apache.spark.streaming.DStreamClosureSuite -Dtest=None
```

Author: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Closes #21930 from skonto/scala2.12-sup.

## What changes were proposed in this pull request?

When we compute an average, the result is obtained by dividing the sum of the values by their count. When the result is a DecimalType, the way we cast and manage the precision and scale is not optimized, and it is not consistent with how we handle decimal arithmetic elsewhere.
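To make the precision and scale question concrete, the sketch below computes a decimal average by dividing the sum by the count and rounding directly to a target scale. It assumes the result type `DECIMAL(p + 4, s + 4)`, capped at a maximum precision and scale of 38, which Spark's `Average` has used for decimal input. The names `DecType`, `bounded`, and `avgResultType` are hypothetical, and this is an illustration of the arithmetic, not the change proposed in this PR.

```scala
import java.math.{BigDecimal => JBigDecimal, RoundingMode}

object DecimalAverageSketch {
  val MaxPrecision = 38

  // Hypothetical (precision, scale) pair standing in for a DecimalType.
  final case class DecType(precision: Int, scale: Int)

  // Caps precision and scale at 38.
  def bounded(p: Int, s: Int): DecType =
    DecType(math.min(p, MaxPrecision), math.min(s, MaxPrecision))

  // Assumed result type of avg over DECIMAL(p, s).
  def avgResultType(in: DecType): DecType = bounded(in.precision + 4, in.scale + 4)

  // Divides the sum by the count straight to the output scale (HALF_UP),
  // returning None for empty input, as SQL avg returns NULL.
  def average(values: Seq[JBigDecimal], in: DecType): Option[JBigDecimal] =
    if (values.isEmpty) None
    else {
      val out = avgResultType(in)
      val sum = values.foldLeft(JBigDecimal.ZERO)(_ add _)
      Some(sum.divide(JBigDecimal.valueOf(values.size.toLong), out.scale, RoundingMode.HALF_UP))
    }
}
```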