### What changes were proposed in this pull request?

When `spark.sql.caseSensitive` is `false` (the default), check that there are no duplicate column names on the same level (top level or nested levels) when reading from the built-in datasources Parquet, ORC, Avro and JSON. If such duplicate columns exist, throw the exception:

```

org.apache.spark.sql.AnalysisException: Found duplicate column(s) in the data schema:

```
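The per-level check can be illustrated with a minimal Python sketch. This is not Spark's `SchemaUtils` code: the schema representation (lists of `(name, type)` pairs), the function name `find_duplicate_columns` and the sample field names are assumptions made for illustration only.

```python
from collections import Counter


def find_duplicate_columns(schema, case_sensitive=False):
    """Return the sorted duplicate names found on any single level of `schema`.

    `schema` is a hypothetical stand-in for a struct type: a list of
    (name, type) pairs, where a nested struct is itself such a list.
    """
    normalize = (lambda name: name) if case_sensitive else str.lower
    counts = Counter(normalize(name) for name, _ in schema)
    duplicates = {name for name, n in counts.items() if n > 1}
    # Each nested level is checked independently of its parent level.
    for _, field_type in schema:
        if isinstance(field_type, list):
            duplicates |= set(find_duplicate_columns(field_type, case_sensitive))
    return sorted(duplicates)


# Illustrative schema: a struct column with two fields differing only by case.
nested = [("StructColumn", [("camelcase", "long"),
                            ("other", "long"),
                            ("camelCase", "long")])]
```

With case sensitivity off, `find_duplicate_columns(nested)` reports `camelcase`; with `case_sensitive=True` the two names are distinct and nothing is reported.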

### Why are the changes needed?

To make the handling of duplicate nested columns consistent with the handling of duplicate top-level columns, i.e. output the same error when `spark.sql.caseSensitive` is `false`:

```Scala

org.apache.spark.sql.AnalysisException: Found duplicate column(s) in the data schema: `camelcase`

```

Checking of top-level duplicates was introduced by https://github.com/apache/spark/pull/17758.

### Does this PR introduce _any_ user-facing change?

Yes. For the example from SPARK-32431:

ORC:

```scala

java.io.IOException: Error reading file: file:/private/var/folders/p3/dfs6mf655d7fnjrsjvldh0tc0000gn/T/spark-c02c2f9a-0cdc-4859-94fc-b9c809ca58b1/part-00001-63e8c3f0-7131-4ec9-be02-30b3fdd276f4-c000.snappy.orc

at org.apache.orc.impl.RecordReaderImpl.nextBatch(RecordReaderImpl.java:1329)

at org.apache.orc.mapreduce.OrcMapreduceRecordReader.ensureBatch(OrcMapreduceRecordReader.java:78)

...

Caused by: java.io.EOFException: Read past end of RLE integer from compressed stream Stream for column 3 kind DATA position: 6 length: 6 range: 0 offset: 12 limit: 12 range 0 = 0 to 6 uncompressed: 3 to 3

at org.apache.orc.impl.RunLengthIntegerReaderV2.readValues(RunLengthIntegerReaderV2.java:61)

at org.apache.orc.impl.RunLengthIntegerReaderV2.next(RunLengthIntegerReaderV2.java:323)

```

JSON:

```scala

+------------+

|StructColumn|

+------------+

| [,,]|

+------------+

```

Parquet:

```scala

+------------+

|StructColumn|

+------------+

| [0,, 1]|

+------------+

```

Avro:

```scala

+------------+

|StructColumn|

+------------+

| [,,]|

+------------+

```

After the changes, Parquet, ORC, JSON and Avro output the same error:

```scala

Found duplicate column(s) in the data schema: `camelcase`;

org.apache.spark.sql.AnalysisException: Found duplicate column(s) in the data schema: `camelcase`;

at org.apache.spark.sql.util.SchemaUtils$.checkColumnNameDuplication(SchemaUtils.scala:112)

at org.apache.spark.sql.util.SchemaUtils$.checkSchemaColumnNameDuplication(SchemaUtils.scala:51)

at org.apache.spark.sql.util.SchemaUtils$.checkSchemaColumnNameDuplication(SchemaUtils.scala:67)

```

### How was this patch tested?

Run modified test suites:

```

$ build/sbt "sql/test:testOnly org.apache.spark.sql.FileBasedDataSourceSuite"

$ build/sbt "avro/test:testOnly org.apache.spark.sql.avro.*"

```

and added new UT to `SchemaUtilsSuite`.

Closes #29234 from MaxGekk/nested-case-insensitive-column.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to support pushed down filters in Avro datasource V1 and V2.

1. Added new SQL config `spark.sql.avro.filterPushdown.enabled` to control filters pushdown to Avro datasource. It is on by default.

2. Renamed `CSVFilters` to `OrderedFilters`.

3. `OrderedFilters` is used in `AvroFileFormat` (DSv1) and in `AvroPartitionReaderFactory` (DSv2)

4. Modified `AvroDeserializer` to return None from the `deserialize` method when pushdown filters return `false`.
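The contract described in item 4 can be sketched in Python as follows. It is a simplified illustration under stated assumptions, not the `AvroDeserializer` or `OrderedFilters` implementation: records are plain dicts, filters are plain predicates, and the names `deserialize` and `read_partition` are hypothetical.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Optional

Row = Dict[str, object]
Predicate = Callable[[Row], bool]


def deserialize(record: Row, filters: List[Predicate]) -> Optional[Row]:
    """Convert a record to a row, or return None if a pushed filter rejects it."""
    row = dict(record)  # stand-in for the real Avro-to-Catalyst conversion
    for predicate in filters:
        if not predicate(row):
            return None  # signals the reader to skip this row
    return row


def read_partition(records: Iterable[Row], filters: List[Predicate]) -> Iterator[Row]:
    """Yield only the rows that pass every pushed-down filter."""
    for record in records:
        row = deserialize(record, filters)
        if row is not None:
            yield row
```

Rejected rows never leave the reader, which is where the savings in the benchmark below would come from.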

### Why are the changes needed?

The changes improve performance on synthetic benchmarks by up to **2** times on JDK 11:

```

OpenJDK 64-Bit Server VM 11.0.7+10-post-Ubuntu-2ubuntu218.04 on Linux 4.15.0-1063-aws

Intel(R) Xeon(R) CPU E5-2670 v2 2.50GHz

Filters pushdown: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------------------------------

w/o filters 9614 9669 54 0.1 9614.1 1.0X

pushdown disabled 10077 10141 66 0.1 10077.2 1.0X

w/ filters 4681 4713 29 0.2 4681.5 2.1X

```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- Added UT to `AvroCatalystDataConversionSuite` and `AvroSuite`

- Re-running `AvroReadBenchmark` using Amazon EC2:

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge (spot instance) |
| AMI | ami-06f2f779464715dc5 (ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1) |
| Java | OpenJDK8/11 installed by `sudo add-apt-repository ppa:openjdk-r/ppa` & `sudo apt install openjdk-11-jdk`|

and `./dev/run-benchmarks`:

```python
#!/usr/bin/env python3

import os
from sparktestsupport.shellutils import run_cmd

benchmarks = [
    ['avro/test', 'org.apache.spark.sql.execution.benchmark.AvroReadBenchmark']
]

print('Set SPARK_GENERATE_BENCHMARK_FILES=1')
os.environ['SPARK_GENERATE_BENCHMARK_FILES'] = '1'

for b in benchmarks:
    print("Run benchmark: %s" % b[1])
    run_cmd(['build/sbt', '%s:runMain %s' % (b[0], b[1])])
```

Closes #29145 from MaxGekk/avro-filters-pushdown.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

Same as https://github.com/apache/spark/pull/29078 and https://github.com/apache/spark/pull/28971. This makes the rest of the default modules (i.e. those you get without specifying `-Pyarn` etc.) compile under Scala 2.13. It does not close the JIRA, as a result. This also, of course, does not demonstrate that tests pass yet in 2.13.

Note, this does not fix the `repl` module; that's separate.

### Why are the changes needed?

Eventually, we need to support a Scala 2.13 build, perhaps in Spark 3.1.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests. (2.13 was not tested; this is about getting it to compile without breaking 2.12)

Closes #29111 from srowen/SPARK-29292.3.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/28760 to fix the remaining issues:

1. should consider data source options when refreshing cache by path at the end of `InsertIntoHadoopFsRelationCommand`

2. should consider data source options when inferring schema for file source

3. should consider data source options when getting the qualified path in file source v2.

### Why are the changes needed?

We didn't catch these issues in https://github.com/apache/spark/pull/28760, because the test case only checks the error raised when initializing the file system. If we initialize the file system multiple times during a simple read/write action, the test case actually only tests the first time.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Rewrote the test to make sure the entire data source read/write action can succeed.

Closes #28948 from cloud-fan/fix.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

1. Add the following Avro files to the resource folder `external/avro/src/test/resources`:

- Files saved by Spark 2.4.5 (cee4ecbb16) without meta info `org.apache.spark.version`

- `before_1582_date_v2_4_5.avro` with a date column: `avro.schema {"type":"record","name":"topLevelRecord","fields":[{"name":"dt","type":[{"type":"int","logicalType":"date"},"null"]}]}`

- `before_1582_timestamp_millis_v2_4_5.avro` with a timestamp column: `avro.schema {"type":"record","name":"test","namespace":"logical","fields":[{"name":"dt","type":["null",{"type":"long","logicalType":"timestamp-millis"}],"default":null}]}`

- `before_1582_timestamp_micros_v2_4_5.avro` with a timestamp column: `avro.schema {"type":"record","name":"topLevelRecord","fields":[{"name":"dt","type":[{"type":"long","logicalType":"timestamp-micros"},"null"]}]}`

- Files saved by Spark 2.4.6-rc3 (570848da7c) with the meta info `org.apache.spark.version 2.4.6`:

- `before_1582_date_v2_4_6.avro` is similar to `before_1582_date_v2_4_5.avro` except for the Spark version in the Avro meta info.

- `before_1582_timestamp_micros_v2_4_6.avro` is similar to `before_1582_timestamp_micros_v2_4_5.avro` except meta info.

- `before_1582_timestamp_millis_v2_4_6.avro` is similar to `before_1582_timestamp_millis_v2_4_5.avro` except meta info.

2. Removed a few Avro files because they are replaced by the Avro files generated by Spark 2.4.5 above.

3. Add new test "generate test files for checking compatibility with Spark 2.4" to `AvroSuite` (marked as ignored). The Avro files above were generated by this test.

4. Modified "SPARK-31159: compatibility with Spark 2.4 in reading dates/timestamps" in `AvroSuite` to use the new Avro files.

### Why are the changes needed?

To improve test coverage.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By `AvroV1Suite` and `AvroV2Suite`.

Closes #28664 from MaxGekk/avro-update-resource-files.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When reading/writing datetime values that are before the rebase switch day from/to Avro/Parquet files, fail by default and ask users to set a config to explicitly rebase or not.

### Why are the changes needed?

Rebasing and not rebasing produce different results, and we should let users decide explicitly. In most cases, users won't hit this exception as it only affects ancient datetime values.

### Does this PR introduce _any_ user-facing change?

Yes, now users will see an error when reading/writing dates before 1582-10-15 or timestamps before 1900-01-01 from/to Parquet/Avro files, with an error message asking them to set a config.

### How was this patch tested?

updated tests

Closes #28477 from cloud-fan/rebase.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds a new parquet/avro file metadata: `org.apache.spark.legacyDatetime`. It indicates that the file was written with the "rebaseInWrite" config enabled, and Spark needs to do rebase when reading it.

This enables Spark to decide about rebasing more smartly:

1. If we don't know which Spark version writes the file, do rebase if the "rebaseInRead" config is true.

2. If the file was written by Spark 2.4 and earlier, then do rebase.

3. If the file was written by Spark 3.0 and later, do rebase if the `org.apache.spark.legacyDatetime` exists in file metadata.
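The three rules above can be written down as a small decision function. The Python sketch below is illustrative only: the function name `should_rebase`, the dict-based metadata and the version parsing are assumptions, while the metadata keys are the ones named in these PR descriptions.

```python
LEGACY_KEY = "org.apache.spark.legacyDatetime"
VERSION_KEY = "org.apache.spark.version"


def should_rebase(file_metadata: dict, rebase_in_read: bool) -> bool:
    """Decide whether datetime values read from a file need rebasing."""
    version = file_metadata.get(VERSION_KEY)
    if version is None:
        # Rule 1: unknown writer, fall back to the "rebaseInRead" config.
        return rebase_in_read
    major = int(version.split(".")[0])
    if major < 3:
        # Rule 2: Spark 2.4 and earlier always wrote the hybrid calendar.
        return True
    # Rule 3: Spark 3.0+ marks files written with rebasing enabled.
    return LEGACY_KEY in file_metadata
```

Because the decision is made per file, a directory that mixes files from different writers can be read in one pass.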

### Why are the changes needed?

It's very easy to have mixed-calendar parquet/avro files: e.g. A user upgrades to Spark 3.0 and writes some parquet files to an existing directory. Then he realizes that the directory contains legacy datetime values before 1582. However, it's too late and he has to find out all the legacy files manually and read them separately.

To support mixed-calendar parquet/avro files, we need to decide to rebase or not based on the file metadata.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Updated test

Closes #28137 from cloud-fan/datetime.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to optimise the `DateTimeUtils`.`rebaseJulianToGregorianMicros()` and `rebaseGregorianToJulianMicros()` functions, and make them faster by using pre-calculated rebasing tables. This approach makes it possible to avoid expensive conversions via local timestamps. For example, the `America/Los_Angeles` time zone has just a few time points at which the difference between the Proleptic Gregorian calendar and the hybrid calendar (Julian + Gregorian since 1582-10-15) changes in the time interval 0001-01-01 .. 2100-01-01:

| i | local timestamp | Proleptic Greg. seconds | Hybrid (Julian+Greg) seconds | difference in minutes|
| -- | ------- |----|----| ---- |
|0|0001-01-01 00:00|-62135568422|-62135740800|-2872|
|1|0100-03-01 00:00|-59006333222|-59006419200|-1432|
|...|...|...|...|...|
|13|1582-10-15 00:00|-12219264422|-12219264000|7|
|14|1883-11-18 12:00|-2717640000|-2717640000|0|

The difference in microseconds between Proleptic and hybrid calendars for any local timestamp in time intervals `[local timestamp(i), local timestamp(i+1))`, and for any microseconds in the time interval `[Gregorian micros(i), Gregorian micros(i+1))` is the same. In this way, we can rebase an input micros by following the steps:

1. Look at the table, and find the time interval that the micros falls into

2. Take the difference between 2 calendars for this time interval

3. Add the difference to the input micros. The result is rebased microseconds that has the same local timestamp representation.
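The three steps can be sketched in Python as below. This is a simplified illustration, not the `RebaseDateTime` code: the toy table values are hypothetical (they are not taken from the JSON files), and treating inputs older than the first switch point with the first diff is an assumption of this sketch.

```python
def rebase_micros(switches, diffs, micros):
    """Rebase `micros` using a pre-calculated table.

    `switches` must be ordered from old to recent and have the same
    length as `diffs`; diffs[i] applies to [switches[i], switches[i + 1]).
    """
    # Step 1: linear search from the most recent interval backwards.
    i = len(switches) - 1
    while i > 0 and micros < switches[i]:
        i -= 1
    # Steps 2 and 3: take the interval's difference and add it to the input.
    return micros + diffs[i]


# Hypothetical toy table, for illustration only.
TOY_SWITCHES = [-1000, -500, 0]
TOY_DIFFS = [30, 10, 0]
```

Searching from the most recent interval means modern timestamps, the common case, are resolved after a single comparison.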

Here are details of the implementation:

- Pre-calculated tables are stored in the JSON files `gregorian-julian-rebase-micros.json` and `julian-gregorian-rebase-micros.json` in the resource folder of `sql/catalyst`. The diffs and switch time points are stored as seconds, for example:

```json
[
  {
    "tz" : "America/Los_Angeles",
    "switches" : [ -62135740800, -59006419200, ... , -2717640000 ],
    "diffs" : [ 172378, 85978, ..., 0 ]
  }
]
```

The JSON files are generated by 2 tests in `RebaseDateTimeSuite` - `generate 'gregorian-julian-rebase-micros.json'` and `generate 'julian-gregorian-rebase-micros.json'`. Both tests are disabled by default.

The `switches` time points are ordered from old to recent timestamps. This condition is checked by the test `validate rebase records in JSON files` in `RebaseDateTimeSuite`. Also sizes of the `switches` and `diffs` arrays are the same (this is checked by the same test).

- The **_Asia/Tehran, Iran, Africa/Casablanca and Africa/El_Aaiun_** time zones weren't added to the JSON files, see [SPARK-31385](https://issues.apache.org/jira/browse/SPARK-31385)

- The rebase info from the JSON files is placed to hash tables - `gregJulianRebaseMap` and `julianGregRebaseMap`. I use `AnyRefMap` because it is almost 2 times faster than Scala's immutable Map. Also I tried `java.util.HashMap` but it has worse lookup time than `AnyRefMap` in our case.

The hash maps store the switch time points and diffs in microseconds precision to avoid conversions from microseconds to seconds in the runtime.

- I moved the code related to days and microseconds rebasing to the separate object `RebaseDateTime` so as not to pollute `DateTimeUtils`. Tests related to date-time rebasing are moved to `RebaseDateTimeSuite` for the same reason.

- I placed rebasing via local timestamp in separate methods that require the zone id as the first parameter, assuming that the caller already has the zone id. This avoids unnecessarily retrieving the default time zone. The methods are marked as `private[sql]` because they are used in `RebaseDateTimeSuite` as a reference implementation.

- Modified the `rebaseGregorianToJulianMicros()` and `rebaseJulianToGregorianMicros()` methods in `RebaseDateTime` to look up the rebase tables first. If the hash maps don't contain rebasing info for the given time zone id, the methods fall back to the implementation via local timestamps. This makes it possible to support time zones specified as zone offsets like '-08:00'.

### Why are the changes needed?

To make timestamps rebasing faster:

- Saving timestamps to parquet files is ~ **x3.8 faster**

- Loading timestamps from parquet files is ~**x2.8 faster**.

- Loading timestamps by Vectorized reader ~**x4.6 faster**.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- Added the test `validate rebase records in JSON files` to `RebaseDateTimeSuite`. The test validates 2 json files from the resource folder - `gregorian-julian-rebase-micros.json` and `julian-gregorian-rebase-micros.json`, and it checks per each time zone records that

- the number of switch points is equal to the number of diffs between calendars. If the numbers are different, this will violate the assumption made in `RebaseDateTime.rebaseMicros`.

- switch points are ordered from old to recent timestamps. This pre-condition is required for linear search in the `rebaseMicros` function.

- Added the test `optimization of micros rebasing - Gregorian to Julian` to `RebaseDateTimeSuite` which iterates over timestamps from 0001-01-01 to 2100-01-01 with the steps 1 ± 0.5 months, and checks that optimised function `RebaseDateTime`.`rebaseGregorianToJulianMicros()` returns the same result as non-optimised one. The check is performed for the UTC, PST, CET, Africa/Dakar, America/Los_Angeles, Antarctica/Vostok, Asia/Hong_Kong, Europe/Amsterdam time zones.

- Added the test `optimization of micros rebasing - Julian to Gregorian` to `RebaseDateTimeSuite` which does similar checks as the test above but for rebasing from the hybrid calendar (Julian + Gregorian) to Proleptic Gregorian calendar.

- The tests for days rebasing are moved from `DateTimeUtilsSuite` to `RebaseDateTimeSuite` because the rebasing related code is moved from `DateTimeUtils` to the separate object `RebaseDateTime`.

- Re-run `DateTimeRebaseBenchmark` at the America/Los_Angeles time zone (it is set explicitly in the PR #28127):

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge |
| AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) |
| Java | OpenJDK 64-Bit Server VM 1.8.0_242 and OpenJDK 64-Bit Server VM 11.0.6+10 |

Closes #28119 from MaxGekk/optimize-rebase-micros.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Write Spark version into Avro file metadata

### Why are the changes needed?

The version info is very useful for backward compatibility. This is also done in parquet/orc.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

new test

Closes #28102 from cloud-fan/avro.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to replace the following SQL configs:

1. `spark.sql.legacy.parquet.rebaseDateTime.enabled` by

- `spark.sql.legacy.parquet.rebaseDateTimeInWrite.enabled` (`false` by default). The config enables rebasing dates/timestamps while saving to Parquet files. If it is set to `true`, dates/timestamps are converted to local date-time in Proleptic Gregorian calendar, date-time fields are extracted, and used in building new local date-time in the hybrid calendar (Julian + Gregorian). The resulted local date-time is converted to days or microseconds since the epoch.

- `spark.sql.legacy.parquet.rebaseDateTimeInRead.enabled` (`false` by default). The config enables rebasing of dates/timestamps in reading from Parquet files.

2. `spark.sql.legacy.avro.rebaseDateTime.enabled` by

- `spark.sql.legacy.avro.rebaseDateTimeInWrite.enabled` (`false` by default). It enables dates/timestamps rebasing from Proleptic Gregorian calendar to the hybrid calendar via local date/timestamps.

- `spark.sql.legacy.avro.rebaseDateTimeInRead.enabled` (`false` by default). It enables rebasing dates/timestamps from the hybrid calendar to Proleptic Gregorian calendar in read. The rebasing is performed by converting micros/millis/days to a local date/timestamp in the source calendar, interpreting the resulted date/timestamp in the target calendar, and getting the number of micros/millis/days since the epoch 1970-01-01 00:00:00Z.

### Why are the changes needed?

This makes it possible to load dates/timestamps saved by Spark 2.4, and save them to Parquet/Avro files without rebasing. It also covers the reverse use case: load data saved by Spark 3.0, and save it in a form which is compatible with Spark 2.4.

### Does this PR introduce any user-facing change?

Yes, users have to use new SQL configs. Old SQL configs are removed by the PR.

### How was this patch tested?

By existing test suites `AvroV1Suite`, `AvroV2Suite` and `ParquetIOSuite`.

Closes #28082 from MaxGekk/split-rebase-configs.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to change the types of `DateTimeTestUtils` values and functions by replacing `java.util.TimeZone` with `java.time.ZoneId`. In particular:

1. Type of `ALL_TIMEZONES` is changed to `Seq[ZoneId]`.

2. Remove `val outstandingTimezones: Seq[TimeZone]`.

3. Change the type of the time zone parameter in `withDefaultTimeZone` to `ZoneId`.

4. Modify affected test suites.

### Why are the changes needed?

Currently, Spark SQL's date-time expressions and functions have already been ported to the Java 8 time API, but tests still use the old time APIs. In particular, `DateTimeTestUtils` exposes functions that accept only `TimeZone` instances. This is inconvenient and CPU-consuming, because `TimeZone` instances have to be converted to `ZoneId` instances via strings (zone ids).

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By affected test suites executed by jenkins builds.

Closes #28033 from MaxGekk/with-default-time-zone.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. The tests added by #27953 are moved from `AvroLogicalTypeSuite` to `AvroSuite`.

2. Checking of the `rebaseDateTime` flag is moved out of function bodies.

### Why are the changes needed?

1. The tests are moved because they are not directly related to logical types.

2. Checking the flag outside of function bodies should improve performance.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running Avro tests via the command `build/sbt avro/test`

Closes #27964 from MaxGekk/rebase-avro-datetime-followup.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The PR addresses the issue of compatibility with Spark 2.4 and earlier versions in reading/writing dates and timestamps via the **Avro** datasource. Previous releases are based on a hybrid calendar - Julian + Gregorian. Since Spark 3.0, the Proleptic Gregorian calendar is used by default, see SPARK-26651. In particular, the issue pops up for dates/timestamps before 1582-10-15, when the hybrid calendar switches between the Julian and Gregorian calendars. The same local date in different calendars is converted to a different number of days since the epoch 1970-01-01. For example, the 0001-01-01 date is converted to:

- -719164 in Julian calendar. Spark 2.4 saves the number as a value of DATE type into **Avro** files.

- -719162 in Proleptic Gregorian calendar. Spark 3.0 saves the number as a date value.
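The effect can be reproduced with the standard Julian Day Number formulas. The Python sketch below is illustrative and independent of Spark's `DateTimeUtils`; the function names are hypothetical. With these formulas, the two day counts quoted above are obtained for the local date 0001-01-01, and the local date 1001-01-01 differs by 6 days between the two calendars.

```python
UNIX_EPOCH_JDN = 2440588  # Julian Day Number of 1970-01-01 (Gregorian)


def _jdn_base(year, month, day):
    """Part of the Julian Day Number formula shared by both calendars."""
    a = (14 - month) // 12
    y = year + 4800 - a
    m = month + 12 * a - 3
    return day + (153 * m + 2) // 5 + 365 * y + y // 4, y


def gregorian_days(year, month, day):
    """Days since 1970-01-01 for a local date in the Proleptic Gregorian calendar."""
    base, y = _jdn_base(year, month, day)
    return base - y // 100 + y // 400 - 32045 - UNIX_EPOCH_JDN


def julian_days(year, month, day):
    """Days since 1970-01-01 for a local date in the Julian calendar,
    which the hybrid calendar uses before 1582-10-15."""
    base, _ = _jdn_base(year, month, day)
    return base - 32083 - UNIX_EPOCH_JDN
```

A day count written under one calendar and read under the other therefore shows up as a shifted local date unless it is rebased.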

The PR proposes rebasing from/to Proleptic Gregorian calendar to the hybrid one under the SQL config:

```

spark.sql.legacy.avro.rebaseDateTime.enabled

```

It is set to `false` by default, which means that rebasing is not performed by default.

The details of the implementation:

1. Re-use 2 methods of `DateTimeUtils` added by the PR https://github.com/apache/spark/pull/27915 for rebasing microseconds.

2. Re-use 2 methods of `DateTimeUtils` added by the PR https://github.com/apache/spark/pull/27915 for rebasing days.

3. Use `rebaseGregorianToJulianMicros()` and `rebaseGregorianToJulianDays()` while saving timestamps/dates to **Avro** files if the SQL config is on.

4. Use `rebaseJulianToGregorianMicros()` and `rebaseJulianToGregorianDays()` while loading timestamps/dates from **Avro** files if the SQL config is on.

5. The SQL config `spark.sql.legacy.avro.rebaseDateTime.enabled` controls conversions from/to dates, and timestamps of the `timestamp-millis`, `timestamp-micros` logical types.

### Why are the changes needed?

For the backward compatibility with Spark 2.4 and earlier versions. The changes allow users to read dates/timestamps saved by previous version, and get the same result. Also after the changes, users can enable the rebasing in write, and save dates/timestamps that can be loaded correctly by Spark 2.4 and earlier versions.

### Does this PR introduce any user-facing change?

Yes, the timestamp `1001-01-01 01:02:03.123456` saved by Spark 2.4.5 as `timestamp-micros` is interpreted by Spark 3.0.0-preview2 differently:

```scala

scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

scala> spark.read.format("avro").load("/Users/maxim/tmp/before_1582/2_4_5_date_avro").show(false)

+----------+

|date |

+----------+

|1001-01-07|

+----------+

```

After the changes:

```scala

scala> spark.conf.set("spark.sql.legacy.avro.rebaseDateTime.enabled", true)

scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

scala> spark.read.format("avro").load("/Users/maxim/tmp/before_1582/2_4_5_date_avro").show(false)

+----------+

|date |

+----------+

|1001-01-01|

+----------+

```

### How was this patch tested?

1. Added tests to `AvroLogicalTypeSuite` to check rebasing in read. The test reads back avro files saved by Spark 2.4.5 via:

```shell

$ export TZ="America/Los_Angeles"

```

```scala

scala> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")

scala> val df = Seq("1001-01-01").toDF("dateS").select($"dateS".cast("date").as("date"))

df: org.apache.spark.sql.DataFrame = [date: date]

scala> df.write.format("avro").save("/Users/maxim/tmp/before_1582/2_4_5_date_avro")

scala> val df2 = Seq("1001-01-01 01:02:03.123456").toDF("tsS").select($"tsS".cast("timestamp").as("ts"))

df2: org.apache.spark.sql.DataFrame = [ts: timestamp]

scala> df2.write.format("avro").save("/Users/maxim/tmp/before_1582/2_4_5_ts_avro")

scala> :paste

// Entering paste mode (ctrl-D to finish)

val timestampSchema = s"""

| {

| "namespace": "logical",

| "type": "record",

| "name": "test",

| "fields": [

| {"name": "ts", "type": ["null", {"type": "long","logicalType": "timestamp-millis"}], "default": null}

| ]

| }

|""".stripMargin

// Exiting paste mode, now interpreting.

scala> df3.write.format("avro").option("avroSchema", timestampSchema).save("/Users/maxim/tmp/before_1582/2_4_5_ts_millis_avro")

```

2. Added the following tests to `AvroLogicalTypeSuite` to check rebasing of dates/timestamps (in microsecond and millisecond precision). The tests write rebased dates/timestamps, read them back with rebasing enabled/disabled, and compare the results:

- `rebasing microseconds timestamps in write`

- `rebasing milliseconds timestamps in write`

- `rebasing dates in write`

Closes #27953 from MaxGekk/rebase-avro-datetime.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Follow up on [SPARK-30428](https://github.com/apache/spark/pull/27112) which added support for partition pruning in File source V2.

This PR implements the necessary changes in order to pass the `dataFilters` to the `listFiles`. This enables having `FileIndex` implementations which use the `dataFilters` for further pruning the file listing (see the discussion [here](https://github.com/apache/spark/pull/27112#discussion_r364757217)).

### Why are the changes needed?

Datasources such as `csv` and `json` do not implement the `SupportsPushDownFilters` trait. In order to support data skipping uniformly for all file based data sources, one can override the `listFiles` method in a `FileIndex` implementation, which consults external metadata and prunes the list of files.
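As a rough illustration of the idea, the Python sketch below models a file index that prunes files using per-file min/max statistics. It is not the `FileIndex` API: the class `MinMaxFileIndex`, the `list_files` signature and the filter helper are hypothetical, and partition filters are ignored for brevity.

```python
class MinMaxFileIndex:
    """Hypothetical file index keeping (min, max) stats of one column per file."""

    def __init__(self, stats):
        self.stats = stats  # {path: (min_value, max_value)}

    def list_files(self, partition_filters, data_filters):
        # partition_filters are accepted but not used in this sketch.
        return [path for path, (lo, hi) in self.stats.items()
                if all(f(lo, hi) for f in data_filters)]


def may_contain_greater_than(value):
    """Data filter for `col > value`, evaluated against file-level stats."""
    return lambda lo, hi: hi > value


index = MinMaxFileIndex({"part-0.json": (0, 9), "part-1.json": (10, 19)})
```

Here `index.list_files([], [may_contain_greater_than(12)])` keeps only `part-1.json`, so the other file is never opened.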

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Modifying the unit tests for v2 file sources to verify the `dataFilters` are passed

Closes #27157 from guykhazma/PushdataFiltersInFileListing.

Authored-by: Guy Khazma <guykhag@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to output additional msg from the tests where a log appender is added. The message is printed as a part of `IllegalStateException` in the case of reaching the limit of maximum number of logged events.

### Why are the changes needed?

If a log appender is not removed from the log4j appenders list, the caller message could help to investigate the problem and find the test which doesn't remove the log appender.
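A minimal Python analogue of such an appender is sketched below. The class shape, the default limit and the wording of the error text are assumptions, and `RuntimeError` stands in for `IllegalStateException`.

```python
class LogAppender:
    """Collects log events and fails loudly once a limit is reached."""

    def __init__(self, msg="", max_events=1000):
        self.msg = msg  # caller-supplied description of who added the appender
        self.max_events = max_events
        self.events = []

    def append(self, event):
        if len(self.events) >= self.max_events:
            prefix = f"{self.msg}: " if self.msg else ""
            raise RuntimeError(
                f"{prefix}number of logged events reached the limit of {self.max_events}")
        self.events.append(event)
```

When the limit is hit, the message identifies the test that registered the appender, which is usually the one that forgot to remove it.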

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running the modified test suites `AvroSuite`, `CSVSuite`, `ResolveHintsSuite`, etc.

Closes #27296 from MaxGekk/assign-name-to-log-appender.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to move the creation of `AvroOptions` out of `AvroPartitionReaderFactory.buildReader`, and create it earlier in `AvroScan.createReaderFactory`.

### Why are the changes needed?

- To avoid building `AvroOptions` from a map of Avro options and Hadoop conf per each partition.

- If an instance of `AvroOptions` is built only once at the driver side, we could output warnings while parsing Avro options without worrying about the noisiness of the warnings.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By `AvroSuite`

Closes #27272 from MaxGekk/avro-options-once-for-read.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to remove the `header` option in the `Avro source v2: support partition pruning` test.

### Why are the changes needed?

The option is not supported by Avro, and may mislead readers.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By `AvroSuite`.

Closes #27203 from MaxGekk/avro-suite-remove-header-option.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to check the `ignoreExtensionKey` option in the case insensitive map of `AvroOptions`.

### Why are the changes needed?

The map `options` passed to `AvroUtils.inferSchema` in fact contains all keys in lower case, because the map is converted from a `CaseInsensitiveStringMap`. Consequently, the check 3663dbe541/external/avro/src/main/scala/org/apache/spark/sql/avro/AvroUtils.scala (L45) always returns `false`, and the deprecation log warning is never printed.
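The pitfall can be reproduced with a small Python model. The class below is a hypothetical stand-in for a case-insensitive options map, and the key spelling `ignoreExtension` is assumed for illustration.

```python
class CaseInsensitiveOptions:
    """Toy case-insensitive map that stores its keys in lower case."""

    def __init__(self, options):
        self._lower = {k.lower(): v for k, v in options.items()}

    def __contains__(self, key):
        return key.lower() in self._lower

    def as_plain_dict(self):
        # Converting to a plain dict keeps the lower-cased keys
        # but loses the case-insensitive lookup.
        return dict(self._lower)


opts = CaseInsensitiveOptions({"ignoreExtension": "true"})
plain = opts.as_plain_dict()

found_in_plain = "ignoreExtension" in plain    # camel-case lookup misses
found_in_options = "ignoreExtension" in opts   # case-insensitive lookup hits
```

Checking the key against the case-insensitive view rather than the converted plain map is what lets the deprecation warning fire.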

### Does this PR introduce any user-facing change?

Yes, after the changes the log warning is printed once.

### How was this patch tested?

Added new test to `AvroSuite` which checks existence of log warning.

Closes #27200 from MaxGekk/avro-fix-ignoreExtension-contains.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to replace `.collect()`, `.count()` and `.foreach(_ => ())` in SQL benchmarks and use the `NoOp` datasource. I added an implicit class to `SqlBasedBenchmark` with the `.noop()` method. It can be used in a benchmark like: `ds.noop()`. The call expands to `ds.write.format("noop").mode(Overwrite).save()`.

### Why are the changes needed?

To avoid additional overhead that `collect()` (and other actions) has. For example, `.collect()` has to convert values according to external types and pull data to the driver. This can hide actual performance regressions or improvements of benchmarked operations.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Re-ran all modified benchmarks using Amazon EC2.

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge (spot instance) |
| AMI | ami-06f2f779464715dc5 (ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1) |
| Java | OpenJDK8/10 |

- Run `TPCDSQueryBenchmark` using instructions from the PR #26049

```
# `spark-tpcds-datagen` needs this. (JDK8)
$ git clone https://github.com/apache/spark.git -b branch-2.4 --depth 1 spark-2.4
$ export SPARK_HOME=$PWD
$ ./build/mvn clean package -DskipTests

# Generate data. (JDK8)
$ git clone git@github.com:maropu/spark-tpcds-datagen.git
$ cd spark-tpcds-datagen/
$ build/mvn clean package
$ mkdir -p /data/tpcds
$ ./bin/dsdgen --output-location /data/tpcds/s1  # This needs Spark 2.4
```

- Other benchmarks were run by the script:

```
#!/usr/bin/env python3

import os
from sparktestsupport.shellutils import run_cmd

benchmarks = [
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.AggregateBenchmark'],
    ['avro/test', 'org.apache.spark.sql.execution.benchmark.AvroReadBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.BloomFilterBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.DataSourceReadBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.DateTimeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.ExtractBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.FilterPushdownBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.InExpressionBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.IntervalBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.JoinBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.MakeDateTimeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.MiscBenchmark'],
    ['hive/test', 'org.apache.spark.sql.execution.benchmark.ObjectHashAggregateExecBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.OrcNestedSchemaPruningBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.OrcV2NestedSchemaPruningBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.ParquetNestedSchemaPruningBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.RangeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.UDFBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.WideSchemaBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.WideTableBenchmark'],
    ['hive/test', 'org.apache.spark.sql.hive.orc.OrcReadBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.csv.CSVBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.json.JsonBenchmark']
]

print('Set SPARK_GENERATE_BENCHMARK_FILES=1')
os.environ['SPARK_GENERATE_BENCHMARK_FILES'] = '1'

for b in benchmarks:
    print("Run benchmark: %s" % b[1])
    run_cmd(['build/sbt', '%s:runMain %s' % (b[0], b[1])])
```

Closes #27078 from MaxGekk/noop-in-benchmarks.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

File source V2: support partition pruning.

Note: subquery predicates are not pushed down for partition pruning even after this PR, due to a limitation of the current data source V2 API and framework. The rule `PlanSubqueries` requires the subquery expression to be among the children or class parameters of a `SparkPlan`, and `BatchScanExec` does not satisfy that condition.

### Why are the changes needed?

It's important for reading performance.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New unit tests for all the V2 file sources

Closes #27112 from gengliangwang/PartitionPruningInFileScan.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a follow-up of https://github.com/apache/spark/pull/26907

It changes the for loop `for (element <- array.asScala)` to a while loop.

### Why are the changes needed?

As per https://github.com/databricks/scala-style-guide#traversal-and-zipwithindex, we should use a while loop for performance-sensitive code.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #27127 from gengliangwang/SPARK-30267-FollowUp.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

Adding a `LogicalWriteInfo` interface as suggested by cloud-fan in https://github.com/apache/spark/pull/25990#issuecomment-555132991

### Why are the changes needed?

It provides compile-time guarantees where we previously had none, which will make it harder to introduce bugs in the future.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Compiles and passes tests

Closes #26678 from edrevo/add-logical-write-info.

Lead-authored-by: Ximo Guanter <joaquin.guantergonzalbez@telefonica.com>

Co-authored-by: Ximo Guanter

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

The Deserializer assumed that avro arrays are always of type `GenericData$Array` which is not the case.

Assuming they are of type `java.util.List` is safer and fixes a `ClassCastException` in some Avro code.

### What changes were proposed in this pull request?

`java.util.List` has all the necessary methods and is an interface that `GenericData$Array` implements.

### Why are the changes needed?

To prevent the following exception in more complex avro objects:

```
java.lang.ClassCastException: java.util.ArrayList cannot be cast to org.apache.avro.generic.GenericData$Array
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$newWriter$19(AvroDeserializer.scala:170)
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$newWriter$19$adapted(AvroDeserializer.scala:169)
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$getRecordWriter$1(AvroDeserializer.scala:314)
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$getRecordWriter$1$adapted(AvroDeserializer.scala:310)
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$getRecordWriter$2(AvroDeserializer.scala:332)
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$getRecordWriter$2$adapted(AvroDeserializer.scala:329)
    at org.apache.spark.sql.avro.AvroDeserializer.$anonfun$converter$3(AvroDeserializer.scala:56)
    at org.apache.spark.sql.avro.AvroDeserializer.deserialize(AvroDeserializer.scala:70)
```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

The current tests already cover this behavior. In essence, this patch just changes a type cast to a more general type, so I expect no functional impact.

Closes #26907 from steven-aerts/spark-30267.

Authored-by: Steven Aerts <steven.aerts@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

This is a follow-up of https://github.com/apache/spark/pull/26780

In https://github.com/apache/spark/pull/26780, a new Avro data source option `actualSchema` is introduced for setting the original Avro schema in function `from_avro`, while the expected schema is supposed to be set in the parameter `jsonFormatSchema` of `from_avro`.

However, there is another Avro data source option, `avroSchema`, which is used for setting the expected schema in reading and writing.

This PR uses the `avroSchema` option for reading Avro data with an evolved schema and removes the new `actualSchema` option.

### Why are the changes needed?

Unify and simplify the Avro data source options.

### Does this PR introduce any user-facing change?

Yes.

To deserialize Avro data with an evolved schema, before changes:

```

from_avro('col, expectedSchema, ("actualSchema" -> actualSchema))

```

After changes:

```

from_avro('col, actualSchema, ("avroSchema" -> expectedSchema))

```

The second parameter is always the actual Avro schema after changes.

### How was this patch tested?

Update the existing tests in https://github.com/apache/spark/pull/26780

Closes #27045 from gengliangwang/renameAvroOption.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

1. Revert "Preparing development version 3.0.1-SNAPSHOT": 56dcd79

2. Revert "Preparing Spark release v3.0.0-preview2-rc2": c216ef1

### Why are the changes needed?

Shouldn't change master.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

manual test:

https://github.com/apache/spark/compare/5de5e46..wangyum:revert-master

Closes #26915 from wangyum/revert-master.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

In the PR, I propose to move all tests that use deprecated Spark APIs to separate test classes, and add the annotation:

```scala

@deprecated("This test suite will be removed.", "3.0.0")

```

The annotation suppresses warnings from already deprecated methods and classes.

### Why are the changes needed?

The warnings about deprecated Spark APIs in tests do not indicate any issues because the tests use such APIs intentionally. Eliminating these warnings makes it easier to spot other warnings that could point to real problems.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By existing test suites and by

- DeprecatedAvroFunctionsSuite

- DeprecatedDateFunctionsSuite

- DeprecatedDatasetAggregatorSuite

- DeprecatedStreamingAggregationSuite

- DeprecatedWholeStageCodegenSuite

Closes #26885 from MaxGekk/eliminate-deprecate-warnings.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

Follow up of https://github.com/apache/spark/pull/24405

### What changes were proposed in this pull request?

The current implementation of _from_avro_ and _AvroDataToCatalyst_ doesn't allow doing schema evolution since it requires the deserialization of an Avro record with the exact same schema with which it was serialized.

The proposed change is to add a new option `actualSchema` to allow passing the schema used to serialize the records. This allows using a different compatible schema for reading by passing both schemas to _GenericDatumReader_. If no writer's schema is provided, nothing changes from before.

### Why are the changes needed?

Consider the following example.

```
// schema ID: 1
val schema1 = """
{
  "type": "record",
  "name": "MySchema",
  "fields": [
    {"name": "col1", "type": "int"},
    {"name": "col2", "type": "string"}
  ]
}
"""

// schema ID: 2
val schema2 = """
{
  "type": "record",
  "name": "MySchema",
  "fields": [
    {"name": "col1", "type": "int"},
    {"name": "col2", "type": "string"},
    {"name": "col3", "type": "string", "default": ""}
  ]
}
"""
```

The two schemas are compatible - i.e. you can use `schema2` to deserialize events serialized with `schema1`, in which case there will be the field `col3` with the default value.
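
The compatibility rule can be illustrated with a small pure-Python model. It is only a sketch under simplifying assumptions: the hypothetical `resolve` helper matches fields by name and fills in reader defaults, and it ignores the rest of Avro schema resolution (type promotion, aliases, unions). It does not use the Avro library.

```python
import json

schema1 = json.loads("""
{"type": "record", "name": "MySchema", "fields": [
  {"name": "col1", "type": "int"},
  {"name": "col2", "type": "string"}]}
""")

schema2 = json.loads("""
{"type": "record", "name": "MySchema", "fields": [
  {"name": "col1", "type": "int"},
  {"name": "col2", "type": "string"},
  {"name": "col3", "type": "string", "default": ""}]}
""")


def resolve(record, writer_schema, reader_schema):
    """Project a record written with writer_schema onto reader_schema.

    Hypothetical helper: fields are matched by name, and a field missing
    from the writer schema takes the reader's default value.
    """
    written = {field["name"] for field in writer_schema["fields"]}
    result = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in written:
            result[name] = record[name]
        elif "default" in field:
            result[name] = field["default"]
        else:
            raise ValueError("no value or default for field %r" % name)
    return result


old_event = {"col1": 1, "col2": "a"}
print(resolve(old_event, schema1, schema2))
# {'col1': 1, 'col2': 'a', 'col3': ''}
```

The key point is that the reader needs both schemas: the writer schema to know which fields are present in the bytes, and the reader schema to know the target shape and the defaults.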

Now imagine that you have two dataframes (read from batch or streaming), one with Avro events from schema1 and the other with events from schema2. **We want to combine them into one dataframe** for storing or further processing.

With the current `from_avro` function we can only decode each of them with the corresponding schema:

```
scala> val df1 = ... // Avro events created with schema1
df1: org.apache.spark.sql.DataFrame = [eventBytes: binary]

scala> val decodedDf1 = df1.select(from_avro('eventBytes, schema1) as "decoded")
decodedDf1: org.apache.spark.sql.DataFrame = [decoded: struct<col1: int, col2: string>]

scala> val df2 = ... // Avro events created with schema2
df2: org.apache.spark.sql.DataFrame = [eventBytes: binary]

scala> val decodedDf2 = df2.select(from_avro('eventBytes, schema2) as "decoded")
decodedDf2: org.apache.spark.sql.DataFrame = [decoded: struct<col1: int, col2: string, col3: string>]
```

but then `decodedDf1` and `decodedDf2` have different Spark schemas and we can't union them. Instead, with the proposed change we can decode `df1` in the following way:

```
scala> import scala.collection.JavaConverters._

scala> val decodedDf1 = df1.select(from_avro(data = 'eventBytes, jsonFormatSchema = schema2, options = Map("actualSchema" -> schema1).asJava) as "decoded")
decodedDf1: org.apache.spark.sql.DataFrame = [decoded: struct<col1: int, col2: string, col3: string>]
```

so that both dataframes have the same schemas and can be merged.

### Does this PR introduce any user-facing change?

This PR allows users to pass a new configuration but it doesn't affect current code.

### How was this patch tested?

A new unit test was added.

Closes #26780 from Fokko/SPARK-27506.

Lead-authored-by: Fokko Driesprong <fokko@apache.org>

Co-authored-by: Gianluca Amori <gianluca.amori@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

This patch fixes the Java code style violations introduced in SPARK-30159 (#26788) that are caught by lint-java (a GitHub Action caught them, and I can reproduce them locally). It looks like the Jenkins build either applies a different policy for Java style checks or is less accurate.

### Why are the changes needed?

The Java linter started complaining.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

lint-java passed locally

This closes #26819

Closes #26818 from HeartSaVioR/SPARK-30159-FOLLOWUP.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Before this PR, the method `checkAnswer` in the object `QueryTest` returns an optional string. It doesn't throw exceptions when errors happen.

The actual exceptions are thrown in the trait `QueryTest`.

However, some test suites (`StreamSuite`, `SessionStateSuite`, `BinaryFileFormatSuite`, etc.) use the no-op method `QueryTest.checkAnswer` and expect it to fail test cases when the execution results don't match the expected answers.

After this PR:

1. The method `checkAnswer` in the object `QueryTest` fails tests on errors or unexpected results.

2. A new method `getErrorMessageInCheckAnswer` is added, which is exactly the same as the previous version of `checkAnswer`. Some test suites use it to customize the test failure message.

3. Test suites that extend the trait `QueryTest` should use the method `checkAnswer` directly, instead of calling the method from the object `QueryTest`.
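
The difference between the two behaviors can be sketched in Python. The function names below are hypothetical analogues of the Scala methods, and the comparison is a plain equality check rather than Spark's actual result comparison.

```python
from typing import Optional


def get_error_message_in_check_answer(actual, expected) -> Optional[str]:
    """Old-style check: describe a mismatch, or return None on a match."""
    if actual != expected:
        return "Results do not match: expected %r, got %r" % (expected, actual)
    return None


def check_answer(actual, expected) -> None:
    """New-style check: fail the test instead of returning a message."""
    message = get_error_message_in_check_answer(actual, expected)
    if message is not None:
        raise AssertionError(message)


# A caller that ignores the return value never notices the mismatch.
get_error_message_in_check_answer([1, 2], [1, 3])

# The raising variant cannot be ignored by accident.
try:
    check_answer([1, 2], [1, 3])
    failed = False
except AssertionError:
    failed = True

print(failed)  # True
```

Keeping the message-returning variant under a separate, explicit name lets suites that want a custom failure message opt in, while the default entry point fails loudly.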

### Why are the changes needed?

We should fix these method calls to perform actual validations in test suites.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes #26788 from gengliangwang/fixCheckAnswer.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

```java

public static final int YEARS_PER_DECADE = 10;

public static final int YEARS_PER_CENTURY = 100;

public static final int YEARS_PER_MILLENNIUM = 1000;

public static final byte MONTHS_PER_QUARTER = 3;

public static final int MONTHS_PER_YEAR = 12;

public static final byte DAYS_PER_WEEK = 7;

public static final long DAYS_PER_MONTH = 30L;

public static final long HOURS_PER_DAY = 24L;

public static final long MINUTES_PER_HOUR = 60L;

public static final long SECONDS_PER_MINUTE = 60L;

public static final long SECONDS_PER_HOUR = MINUTES_PER_HOUR * SECONDS_PER_MINUTE;

public static final long SECONDS_PER_DAY = HOURS_PER_DAY * SECONDS_PER_HOUR;

public static final long MILLIS_PER_SECOND = 1000L;

public static final long MILLIS_PER_MINUTE = SECONDS_PER_MINUTE * MILLIS_PER_SECOND;

public static final long MILLIS_PER_HOUR = MINUTES_PER_HOUR * MILLIS_PER_MINUTE;

public static final long MILLIS_PER_DAY = HOURS_PER_DAY * MILLIS_PER_HOUR;

public static final long MICROS_PER_MILLIS = 1000L;

public static final long MICROS_PER_SECOND = MILLIS_PER_SECOND * MICROS_PER_MILLIS;

public static final long MICROS_PER_MINUTE = SECONDS_PER_MINUTE * MICROS_PER_SECOND;

public static final long MICROS_PER_HOUR = MINUTES_PER_HOUR * MICROS_PER_MINUTE;

public static final long MICROS_PER_DAY = HOURS_PER_DAY * MICROS_PER_HOUR;

public static final long MICROS_PER_MONTH = DAYS_PER_MONTH * MICROS_PER_DAY;

/* 365.25 days per year assumes leap year every four years */

public static final long MICROS_PER_YEAR = (36525L * MICROS_PER_DAY) / 100;

public static final long NANOS_PER_MICROS = 1000L;

public static final long NANOS_PER_MILLIS = MICROS_PER_MILLIS * NANOS_PER_MICROS;

public static final long NANOS_PER_SECOND = MILLIS_PER_SECOND * NANOS_PER_MILLIS;

```

The constants above are defined across `IntervalUtils`, `DateTimeUtils`, and `CalendarInterval`; some of them are redundant and some are cross-referenced.
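
As a quick sanity check of the arithmetic, the derived values can be recomputed in Python. The names mirror the Java constants listed above, and integer division stands in for Java's `long` division.

```python
SECONDS_PER_DAY = 24 * 60 * 60
MICROS_PER_SECOND = 1000 * 1000
MICROS_PER_DAY = SECONDS_PER_DAY * MICROS_PER_SECOND

# A month is modelled as 30 days.
MICROS_PER_MONTH = 30 * MICROS_PER_DAY

# A year is modelled as 365.25 days; multiplying by 36525 before dividing
# by 100 keeps the whole computation in integers.
MICROS_PER_YEAR = (36525 * MICROS_PER_DAY) // 100

print(MICROS_PER_DAY)    # 86400000000
print(MICROS_PER_MONTH)  # 2592000000000
print(MICROS_PER_YEAR)   # 31557600000000

# 12 thirty-day months are shorter than a 365.25-day year, so the month
# and year constants are not interchangeable.
print(12 * MICROS_PER_MONTH == MICROS_PER_YEAR)  # False
```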

### Why are the changes needed?

To simplify code, enhance consistency and reduce risks

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Modified unit tests.

Closes #26399 from yaooqinn/SPARK-29757.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the sparkR version number check logic to allow jvm version like `3.0.0-preview`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release passes.

### Why are the changes needed?

So that the Maven release repository accepts the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the PySpark version from `3.0.0.dev0` to `3.0.0`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release passes.

### Why are the changes needed?

So that the Maven release repository accepts the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #26243 from jiangxb1987/3.0.0-preview-prepare.

Lead-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

This PR regenerates the benchmark results in the `catalyst` and `avro` modules in order to compare JDK8 and JDK11 results.

### Why are the changes needed?

This PR aims to verify that there is no regression on JDK11.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

This is a test-only update. We need to run the benchmark manually.

Closes #25972 from dongjoon-hyun/SPARK-29300.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Scala 2.13 emits a deprecation warning for procedure-like declarations:

```

def foo() {

...

```

This is equivalent to the following, so should be changed to avoid a warning:

```

def foo(): Unit = {

...

```

### Why are the changes needed?

It will avoid about a thousand compiler warnings when we start to support Scala 2.13. I wanted to make the change in 3.0 as there are less likely to be back-ports from 3.0 to 2.4 than 3.1 to 3.0, for example, minimizing that downside to touching so many files.

Unfortunately, that makes this quite a big change.

### Does this PR introduce any user-facing change?

No behavior change at all.

### How was this patch tested?

Existing tests.

Closes #25968 from srowen/SPARK-29291.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Refactored the SQL-related benchmarks and made them depend on `SqlBasedBenchmark`. In particular, creation of the Spark session is moved into `override def getSparkSession: SparkSession`.

### Why are the changes needed?

This should simplify maintenance of SQL-based benchmarks by reducing the number of dependencies. In the future, it should be easier to refactor & extend all SQL benchmarks by changing only one trait. Finally, all SQL-based benchmarks will look uniform.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running the modified benchmarks.

Closes #25828 from MaxGekk/sql-benchmarks-refactoring.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Reorganize the packages of DS v2 interfaces/classes:

1. `org.apache.spark.sql.connector.catalog`: put `TableCatalog`, `Table` and other related interfaces/classes

2. `org.apache.spark.sql.connector.expression`: put `Expression`, `Transform` and other related interfaces/classes

3. `org.apache.spark.sql.connector.read`: put `ScanBuilder`, `Scan` and other related interfaces/classes

4. `org.apache.spark.sql.connector.write`: put `WriteBuilder`, `BatchWrite` and other related interfaces/classes

### Why are the changes needed?

Data Source V2 has evolved a lot. It's a bit weird that `Expression` is in `org.apache.spark.sql.catalog.v2` and `Table` is in `org.apache.spark.sql.sources.v2`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing tests

Closes #25700 from cloud-fan/package.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The Experimental and Evolving annotations are both (like Unstable) used to express that an API may change. However there are many things in the code that have been marked that way since even Spark 1.x. Per the dev thread, anything introduced at or before Spark 2.3.0 is pretty much 'stable' in that it would not change without a deprecation cycle. Therefore I'd like to remove most of these annotations. And, remove the `:: Experimental ::` scaladoc tag too. And likewise for Python, R.

The changes below can be summarized as:

- Generally, anything introduced at or before Spark 2.3.0 has been unmarked, so it is no longer annotated as either Evolving or Experimental

- Obviously experimental items like DSv2, Barrier mode, ExperimentalMethods are untouched

- I _did_ unmark a few MLlib classes introduced in 2.4, as I am quite confident they're not going to change (e.g. KolmogorovSmirnovTest, PowerIterationClustering)

It's a big change to review, so I'd suggest scanning the list of _files_ changed to see if any area seems like it should remain partly experimental and examine those.

### Why are the changes needed?

Many of these annotations are incorrect; the APIs are de facto stable. Leaving them also makes legitimate usages of the annotations less meaningful.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25558 from srowen/SPARK-28855.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently we have 2 configs to specify which v2 sources should fall back to the v1 code path: one config for the read path, and one for the write path.

However, I found it awkward to work with these 2 configs:

1. For `CREATE TABLE USING format`, should this be the read path or the write path?

2. For `V2SessionCatalog.loadTable`, we need to return `UnresolvedTable` if it's a DS v1 source or if we need to fall back to the v1 code path. However, at that time, we don't know whether the returned table will be used for read or write.

We don't have any new features or perf improvements in file source v2. The fallback API is just a safeguard in case we have bugs in the v2 implementations. There is little benefit in supporting fallback to v1 for the read and write paths separately.

This PR proposes to merge these 2 configs into one.

## How was this patch tested?

existing tests

Closes #25465 from cloud-fan/merge-conf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

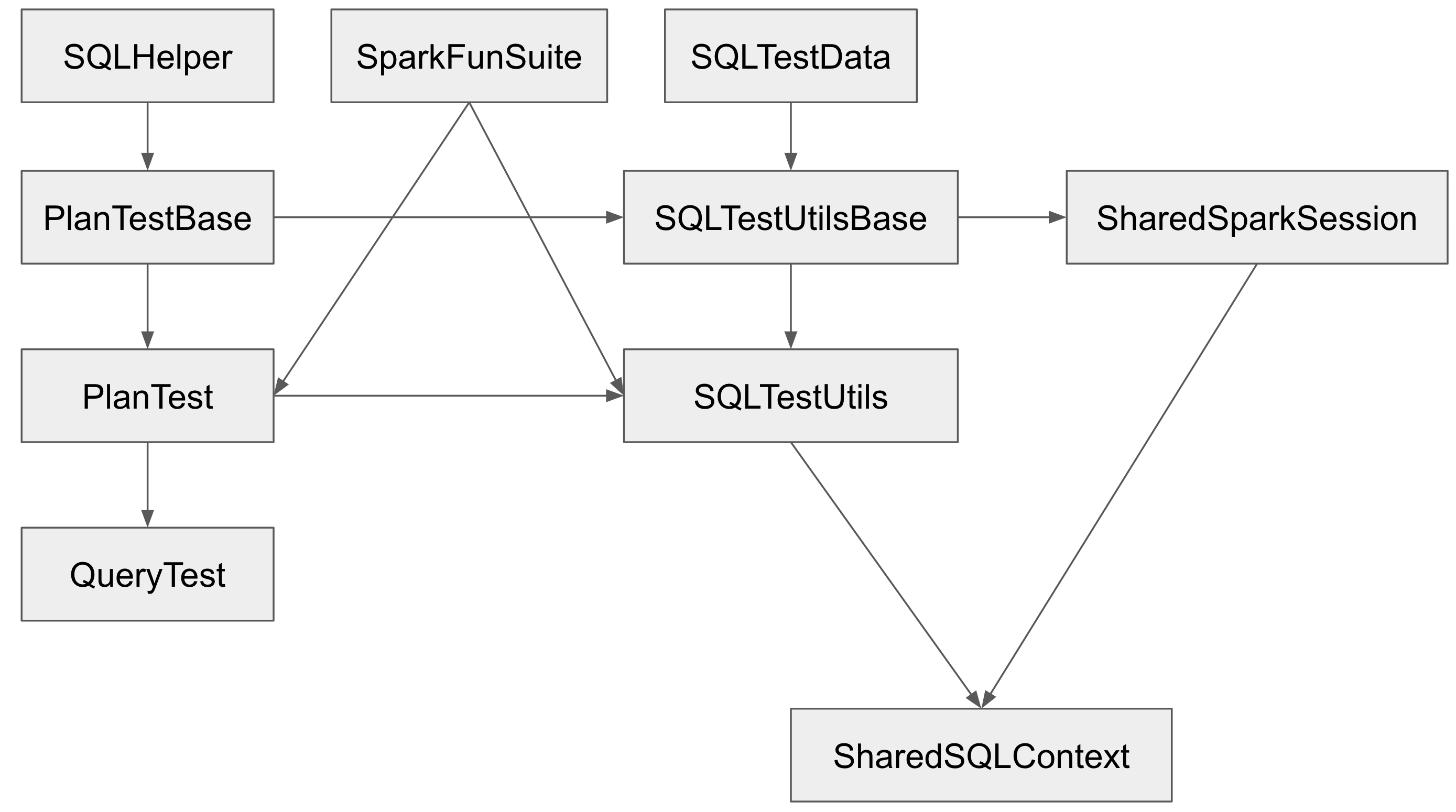

## What changes were proposed in this pull request?

The Spark SQL test framework needs to support 2 kinds of tests:

1. tests inside Spark to test Spark itself (extends `SparkFunSuite`)

2. test outside of Spark to test Spark applications (introduced at b57ed2245c)

The class hierarchy of the major testing traits:

`PlanTestBase`, `SQLTestUtilsBase` and `SharedSparkSession` intentionally don't extend `SparkFunSuite`, so that they can be used for tests outside of Spark. Tests in Spark should extend `QueryTest` and/or `SharedSQLContext` in most cases.

However, the name is a little confusing. As a result, some test suites extend `SharedSparkSession` instead of `SharedSQLContext`. `SharedSparkSession` doesn't work well with `SparkFunSuite` as it doesn't have the special handling of thread auditing in `SharedSQLContext`. For example, you will see a warning starting with `===== POSSIBLE THREAD LEAK IN SUITE` when you run `DataFrameSelfJoinSuite`.

This PR proposes to rename `SharedSparkSession` to `SharedSparkSessionBase`, and rename `SharedSQLContext` to `SharedSparkSession`.

## How was this patch tested?


Closes #25463 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The mapping of Spark schema to Avro schema is many-to-many. (See https://spark.apache.org/docs/latest/sql-data-sources-avro.html#supported-types-for-spark-sql---avro-conversion)

The default schema mapping might not be exactly what users want. For example, by default, a "string" column is always written as "string" Avro type, but users might want to output the column as "enum" Avro type.

With PR https://github.com/apache/spark/pull/21847, Spark supports user-specified schema in the batch writer.

For the function `to_avro`, we should support user-specified output schema as well.

## How was this patch tested?

Unit test.

Closes #25419 from gengliangwang/to_avro.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR changes `CalendarIntervalType`'s readable string representation from `calendarinterval` to `interval`.

## How was this patch tested?

Existing UT

Closes #25225 from wangyum/SPARK-28469.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Some configs are hardcoded; this PR replaces them with config entries.

## How was this patch tested?

Existing UT

Closes #25059 from WangGuangxin/ConfigEntry.

Authored-by: wangguangxin.cn <wangguangxin.cn@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Migrate Avro to File source V2.

## How was this patch tested?

Unit test

Closes #25017 from gengliangwang/avroV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

When using `from_avro` to deserialize Avro data to the Catalyst StructType format, if `ConvertToLocalRelation` is applied at the time, `from_avro` produces only the last value (overriding previous values).

The cause is that `AvroDeserializer` reuses the output row for StructType. Normally this is fine in Spark SQL, but `ConvertToLocalRelation` just uses `InterpretedProjection` to project local rows. `InterpretedProjection` creates a new row for each output, though each of those rows includes the same nested row object from `AvroDeserializer`. In the end, the converted local relation contains only the last value.

I think there're two possible options:

1. Make `AvroDeserializer` output new row for StructType.

2. Use `InterpretedMutableProjection` in `ConvertToLocalRelation` and call `copy()` on output rows.

Option 2 was chosen because `ConvertToLocalRelation` previously created new rows as well, so `InterpretedMutableProjection` + `copy()` shouldn't bring much of a performance penalty. `ConvertToLocalRelation` is also arguably less critical than `AvroDeserializer`.
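
The aliasing problem and the effect of copying can be reproduced with a toy Python model. The `ReusingDeserializer` class is hypothetical and merely stands in for a deserializer that reuses one mutable output row; a dictionary copy plays the role of `copy()`.

```python
class ReusingDeserializer:
    """Hypothetical stand-in for a deserializer that reuses its output row."""

    def __init__(self):
        self._row = {}  # single mutable row shared across all calls

    def deserialize(self, value):
        self._row["value"] = value
        return self._row


deserializer = ReusingDeserializer()
inputs = [1, 2, 3]

# Each output gets a new outer row, but the nested row is the same object,
# so every buffered result ends up showing the last value.
shared = [{"decoded": deserializer.deserialize(v)} for v in inputs]
print([row["decoded"]["value"] for row in shared])  # [3, 3, 3]

# Copying before buffering gives every result its own nested row.
copied = [{"decoded": dict(deserializer.deserialize(v))} for v in inputs]
print([row["decoded"]["value"] for row in copied])  # [1, 2, 3]
```

Row reuse is harmless when each row is consumed before the next one is produced; it only breaks when rows are buffered, which is what building a local relation does.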

## How was this patch tested?

Added test.

Closes #24805 from viirya/SPARK-27798.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>