## What changes were proposed in this pull request?

This is very similar to #23590.

`ByteBuffer.allocate` may throw `OutOfMemoryError` when the response is large but not enough memory is available. However, when this happens, `TransportClient.sendRpcSync` will just hang forever if the timeout is set to unlimited.

This PR catches `Throwable` and uses the error to complete `SettableFuture`.
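The idea can be illustrated outside the JVM. The following is a minimal Python sketch, not the Spark code: `make_callback`, `copy_response` and `failing_copy` are hypothetical names, and `concurrent.futures.Future` stands in for `SettableFuture`. It shows that completing the future with the error lets a waiting caller fail immediately instead of blocking.

```python
from concurrent.futures import Future


def make_callback(result, copy_response):
    """Build a success callback that always completes `result`.

    `copy_response` is a hypothetical stand-in for the buffer copy that
    may fail (for example with an out-of-memory error).
    """
    def on_success(response):
        try:
            result.set_result(copy_response(response))
        except BaseException as error:  # rough analogue of catching Throwable
            # Without this branch the future would never complete and a
            # caller waiting without a timeout would block forever.
            result.set_exception(error)
    return on_success


def failing_copy(response):
    # Simulates the allocation failure described above.
    raise MemoryError("simulated allocation failure")
```

A caller that blocks on `result.result()` then either receives the copied bytes or sees the original error raised.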

## How was this patch tested?

I tested in my IDE by setting the value of `size` to -1 to verify the result. Without this patch, the call does not finish until the timeout expires (it may hang forever if the timeout is set to MAX_INT); with this patch, the expected `IllegalArgumentException` is caught.

```java
@Override
public void onSuccess(ByteBuffer response) {
  try {
    int size = response.remaining();
    ByteBuffer copy = ByteBuffer.allocate(size); // set size to -1 at runtime when debugging
    copy.put(response);
    // flip "copy" to make it readable
    copy.flip();
    result.set(copy);
  } catch (Throwable t) {
    result.setException(t);
  }
}
```

Closes #24964 from LantaoJin/SPARK-28160.

Lead-authored-by: LantaoJin <jinlantao@gmail.com>

Co-authored-by: lajin <lajin@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR adds two APIs to [SessionCatalog](df4cb471c9/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/SessionCatalog.scala):

```scala

def listTables(db: String, pattern: String, includeLocalTempViews: Boolean): Seq[TableIdentifier]

def listLocalTempViews(pattern: String): Seq[TableIdentifier]

```

This is because in some cases `listTables` does not need local temporary views, and in other cases only local temporary views need to be listed.

## How was this patch tested?

unit tests

Closes #24995 from wangyum/SPARK-28196.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

To make the change in #24972 smaller, this PR improves `SparkMetadataOperationSuite` to avoid creating new sessions when calling getSchemas/getTables/getColumns.

## How was this patch tested?

N/A

Closes #24985 from wangyum/SPARK-28184.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Currently, ORC's `inferSchema` is implemented as randomly choosing one ORC file and reading its schema.

This PR follows the behavior of Parquet: it implements schema merging by reading all ORC files in parallel through a Spark job.

Users can enable schema merging with `spark.read.option("mergeSchema", "true").orc("xxx")` or by setting `spark.sql.orc.mergeSchema` to `true`; the former takes priority.
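The priority rule and the merge itself can be sketched in plain Python. This is only an illustration under stated assumptions: `resolve_merge_schema` and `merge_schemas` are hypothetical helpers, schemas are modeled as simple name-to-type dicts, and the default of `false` for the session setting is assumed rather than taken from the PR.

```python
def resolve_merge_schema(read_options, session_conf):
    """Return True if schema merging is enabled.

    The per-read option wins over the session-level setting.
    The "false" default is an assumption made for this sketch.
    """
    if "mergeSchema" in read_options:
        return read_options["mergeSchema"].lower() == "true"
    return session_conf.get("spark.sql.orc.mergeSchema", "false").lower() == "true"


def merge_schemas(schemas):
    """Merge per-file schemas (dicts of column name -> type name)."""
    merged = {}
    for schema in schemas:
        for name, dtype in schema.items():
            # setdefault keeps the first type seen for a column.
            if merged.setdefault(name, dtype) != dtype:
                raise ValueError("conflicting types for column " + name)
    return merged
```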

## How was this patch tested?

Tested by the unit tests in OrcUtilsSuite.scala.

Closes #24043 from WangGuangxin/SPARK-11412.

Lead-authored-by: wangguangxin.cn <wangguangxin.cn@gmail.com>

Co-authored-by: wangguangxin.cn <wangguangxin.cn@bytedance.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

SPARK-27534 did not address my own comments at https://github.com/WeichenXu123/spark/pull/8

It's better to push this in since the code is already cleaned up.

## How was this patch tested?

Unit tests fixed.

Closes #25003 from HyukjinKwon/SPARK-27534.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

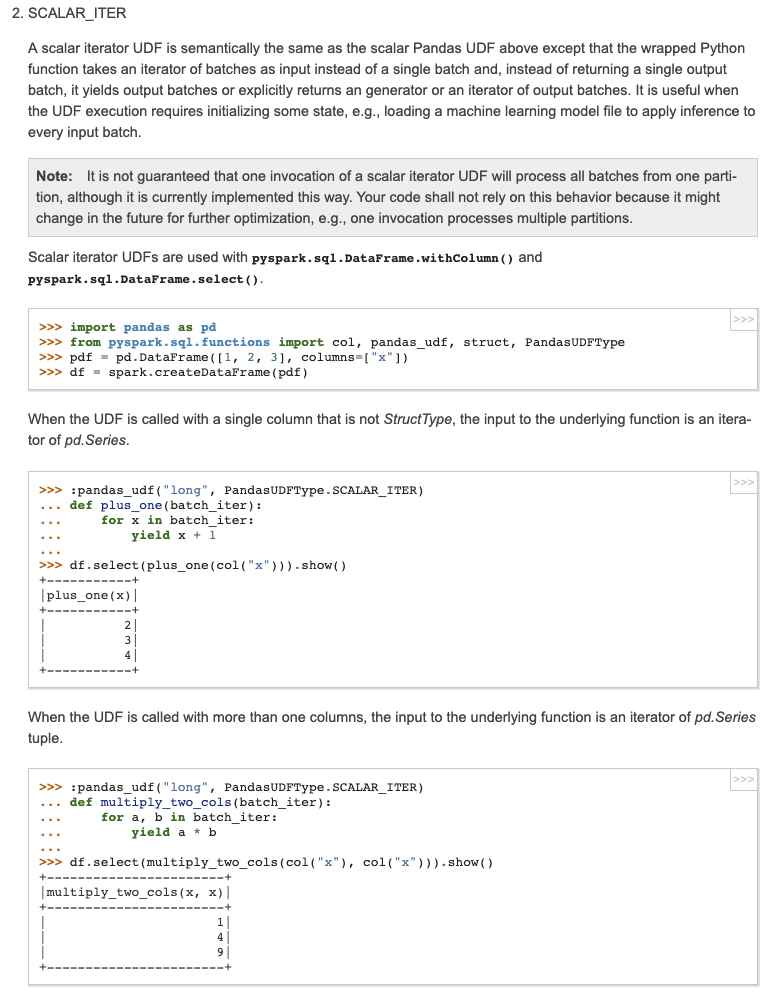

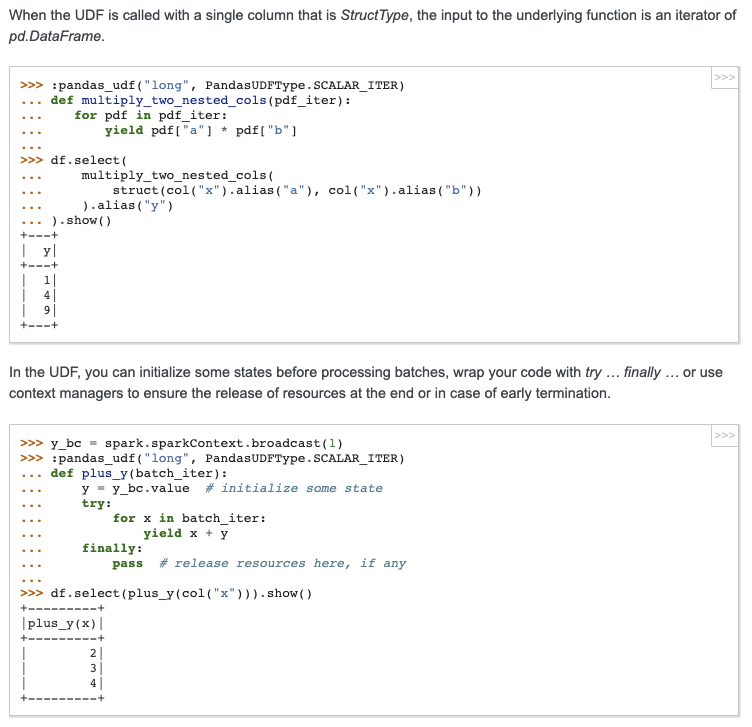

## What changes were proposed in this pull request?

Add docstring/doctest for `SCALAR_ITER` Pandas UDF. I explicitly mentioned that per-partition execution is an implementation detail, not guaranteed. I will submit another PR to add the same to the user guide, to keep this PR minimal.

I didn't add "doctest: +SKIP" in the first commit so it is easy to test locally.

cc: HyukjinKwon gatorsmile icexelloss BryanCutler WeichenXu123

## How was this patch tested?

doctest

Closes #25005 from mengxr/SPARK-28056.2.

Authored-by: Xiangrui Meng <meng@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This is the first part of [SPARK-27396](https://issues.apache.org/jira/browse/SPARK-27396). This is the minimum set of changes necessary to support a pluggable back end for columnar processing. Follow-on JIRAs would cover removing some of the duplication between functionality in this patch and functionality currently covered by things like ColumnarBatchScan.

## How was this patch tested?

I added in a new unit test to cover new code not really covered in other places.

I also did manual testing by implementing two plugins/extensions that take advantage of the new APIs to allow for columnar processing for some simple queries. One version runs on the [CPU](https://gist.github.com/revans2/c3cad77075c4fa5d9d271308ee2f1b1d). The other version runs on a GPU, but because it has unreleased dependencies I will not include a link to it yet.

I would expect to add the CPU version as an example, with other documentation, in a follow-on JIRA.

This is contributed on behalf of NVIDIA Corporation.

Closes #24795 from revans2/columnar-basic.

Authored-by: Robert (Bobby) Evans <bobby@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

Add error handling to `ExecutorPodsPollingSnapshotSource`

Closes #24952 from onursatici/os/polling-source.

Authored-by: Onur Satici <onursatici@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The `OVERLAY` function is part of ANSI SQL.

For example:

```

SELECT OVERLAY('abcdef' PLACING '45' FROM 4);

SELECT OVERLAY('yabadoo' PLACING 'daba' FROM 5);

SELECT OVERLAY('yabadoo' PLACING 'daba' FROM 5 FOR 0);

SELECT OVERLAY('babosa' PLACING 'ubb' FROM 2 FOR 4);

```

The results of the four SQL statements above are:

```

abc45f

yabadaba

yabadabadoo

bubba

```

Note: If the input string is null, then the result is null too.
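The semantics shown by these examples can be modeled in a few lines of Python. This is an illustrative sketch, not the Spark expression: the function name `overlay` and the convention that a missing `FOR` length defaults to the length of the replacement are assumptions chosen to match the four results above, and positions below 1 are not handled.

```python
from typing import Optional


def overlay(s: Optional[str], replacement: str, pos: int, length: int = -1) -> Optional[str]:
    """Model of OVERLAY(s PLACING replacement FROM pos [FOR length])."""
    if s is None:
        # A null input string yields a null result.
        return None
    if length < 0:
        # Assumption: without FOR, replace as many characters as the
        # replacement string contains.
        length = len(replacement)
    start = pos - 1  # SQL positions are 1-based
    return s[:start] + replacement + s[start + length:]
```

For instance, `overlay('babosa', 'ubb', 2, 4)` keeps `b`, inserts `ubb`, skips four characters and appends the remaining `a`.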

Several mainstream databases support this syntax.

**PostgreSQL:**

https://www.postgresql.org/docs/11/functions-string.html

**Vertica:** https://www.vertica.com/docs/9.2.x/HTML/Content/Authoring/SQLReferenceManual/Functions/String/OVERLAY.htm?zoom_highlight=overlay

**Oracle:**

https://docs.oracle.com/en/database/oracle/oracle-database/19/arpls/UTL_RAW.html#GUID-342E37E7-FE43-4CE1-A0E9-7DAABD000369

**DB2:**

https://www.ibm.com/support/knowledgecenter/SSGMCP_5.3.0/com.ibm.cics.rexx.doc/rexx/overlay.html

Here is the output of this PR in my production environment:

```

spark-sql> SELECT OVERLAY('abcdef' PLACING '45' FROM 4);

abc45f

Time taken: 6.385 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY('yabadoo' PLACING 'daba' FROM 5);

yabadaba

Time taken: 0.191 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY('yabadoo' PLACING 'daba' FROM 5 FOR 0);

yabadabadoo

Time taken: 0.186 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY('babosa' PLACING 'ubb' FROM 2 FOR 4);

bubba

Time taken: 0.151 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY(null PLACING '45' FROM 4);

NULL

Time taken: 0.22 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY(null PLACING 'daba' FROM 5);

NULL

Time taken: 0.157 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY(null PLACING 'daba' FROM 5 FOR 0);

NULL

Time taken: 0.254 seconds, Fetched 1 row(s)

spark-sql> SELECT OVERLAY(null PLACING 'ubb' FROM 2 FOR 4);

NULL

Time taken: 0.159 seconds, Fetched 1 row(s)

```

## How was this patch tested?

Existing and new unit tests.

Closes #24918 from beliefer/ansi-sql-overlay.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

## What changes were proposed in this pull request?

Closes the generator when Python UDFs stop early.

### Manual verification on pandas iterator UDF and mapPartitions

```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import pandas_udf, PandasUDFType
from pyspark.sql.functions import col, udf
from pyspark.taskcontext import TaskContext
import time
import os

spark.conf.set('spark.sql.execution.arrow.maxRecordsPerBatch', '1')
spark.conf.set('spark.sql.pandas.udf.buffer.size', '4')

@pandas_udf("int", PandasUDFType.SCALAR_ITER)
def fi1(it):
    try:
        for batch in it:
            yield batch + 100
            time.sleep(1.0)
    except BaseException as be:
        print("Debug: exception raised: " + str(type(be)))
        raise be
    finally:
        open("/tmp/000001.tmp", "a").close()

df1 = spark.range(10).select(col('id').alias('a')).repartition(1)

# will see log Debug: exception raised: <class 'GeneratorExit'>
# and file "/tmp/000001.tmp" generated.
df1.select(col('a'), fi1('a')).limit(2).collect()

def mapper(it):
    try:
        for batch in it:
            yield batch
    except BaseException as be:
        print("Debug: exception raised: " + str(type(be)))
        raise be
    finally:
        open("/tmp/000002.tmp", "a").close()

df2 = spark.range(10000000).repartition(1)

# will see log Debug: exception raised: <class 'GeneratorExit'>
# and file "/tmp/000002.tmp" generated.
df2.rdd.mapPartitions(mapper).take(2)
```
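The `GeneratorExit` seen in the logs above is standard Python generator behavior and can be reproduced without Spark. The following self-contained sketch uses hypothetical helper names (`batches`, `take`): a consumer that stops early calls `close()` on the generator, which raises `GeneratorExit` at the suspended `yield` and runs the `finally` block.

```python
import itertools


def batches(source, log):
    """A generator with cleanup logic, similar in shape to the UDFs above."""
    try:
        for item in source:
            yield item
    except GeneratorExit:
        log.append("GeneratorExit")
        raise
    finally:
        log.append("cleanup")


def take(gen, n):
    """Consume at most n items, then close the generator explicitly."""
    try:
        return list(itertools.islice(gen, n))
    finally:
        # close() raises GeneratorExit inside the generator at the point
        # where it is suspended, so its finally block runs promptly.
        gen.close()
```

Calling `take(batches(range(10), log), 2)` returns the first two items and leaves both `"GeneratorExit"` and `"cleanup"` in `log`.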

## How was this patch tested?

Unit test added.


Closes #24986 from WeichenXu123/pandas_iter_udf_limit.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Avoid hard-coded config: `spark.sql.globalTempDatabase`.

## How was this patch tested?

N/A

Closes #24979 from wangyum/SPARK-28179.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This ensures that tokens are always created with an empty UGI, which

allows multiple contexts to be (sequentially) started from the same JVM.

Tested with code attached to the bug, and also usual kerberos tests.

Closes #24955 from vanzin/SPARK-28150.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

For simplicity, all `LambdaVariable`s are globally unique, to avoid any potential conflicts. However, this causes a perf problem: we can never hit codegen cache for encoder expressions that deal with collections (which means they contain `LambdaVariable`).

To overcome this problem, `LambdaVariable` should have per-query unique IDs. This PR does 2 things:

1. refactor `LambdaVariable` to carry an ID, so that it's easier to change the ID.

2. add an optimizer rule to reassign `LambdaVariable` IDs, which are per-query unique.
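Why per-query IDs help the cache can be seen in a toy model. This Python sketch is not Catalyst code: expressions are modeled as nested tuples, and `new_lambda_var` and `reassign_ids` are hypothetical names. Two structurally identical expressions differ while their variables carry globally unique IDs, and become equal (so they could share a cache key) once the IDs are reassigned in order of first appearance.

```python
import itertools

_global_ids = itertools.count()


def new_lambda_var(name):
    """Create a lambda variable with a globally unique ID."""
    return ("lambda", name, next(_global_ids))


def reassign_ids(expr):
    """Renumber lambda variable IDs so they are unique only within `expr`."""
    mapping = {}

    def visit(node):
        if isinstance(node, tuple) and len(node) == 3 and node[0] == "lambda":
            _, name, old_id = node
            # The first variable seen gets ID 0, the next one ID 1, and so on.
            new_id = mapping.setdefault(old_id, len(mapping))
            return ("lambda", name, new_id)
        if isinstance(node, tuple):
            return tuple(visit(child) for child in node)
        return node

    return visit(expr)
```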

## How was this patch tested?

new tests

Closes #24735 from cloud-fan/dataset.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

SQL ANSI 2011 states that in case of overflow during arithmetic operations, an exception should be thrown. This is what most SQL DBs do (e.g. SQLServer, DB2). Hive currently returns NULL (as Spark does), but HIVE-18291 is open to make it SQL compliant.

This PR introduces an option to decide which behavior Spark should follow, i.e. returning NULL on overflow or throwing an exception.
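The two behaviors can be contrasted with a small Python sketch. Everything here is hypothetical and only illustrates the choice: the function name, the `null_on_overflow` flag and the symmetric `max_value` bound are assumptions, not the option or the arithmetic that Spark uses.

```python
from typing import Optional


class ArithmeticOverflow(ArithmeticError):
    """Raised when a result does not fit and NULL-on-overflow is disabled."""


def add_with_overflow_policy(a: int, b: int, *, max_value: int,
                             null_on_overflow: bool) -> Optional[int]:
    """Add two values bounded by +/- max_value under the chosen policy."""
    result = a + b
    if -max_value <= result <= max_value:
        return result
    if null_on_overflow:
        # Legacy behavior: silently produce NULL (None here).
        return None
    # ANSI-style behavior: fail loudly.
    raise ArithmeticOverflow(str(a) + " + " + str(b) + " exceeds " + str(max_value))
```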

## How was this patch tested?

added UTs

Closes #20350 from mgaido91/SPARK-23179.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

To support GPU-aware scheduling in Standalone (cluster mode), the Worker should be able to set up resources (e.g. GPU/FPGA) when it starts up.

Similar to the driver/executor, the Worker has two ways (resourceFile & resourceDiscoveryScript) to set up resources when it starts up. Users can use `SPARK_WORKER_OPTS` to apply resource configs on the Worker in the form of "-Dx=y". For example,

```

SPARK_WORKER_OPTS="-Dspark.worker.resource.gpu.amount=2 \

-Dspark.worker.resource.fpga.amount=1 \

-Dspark.worker.resource.fpga.discoveryScript=/Users/wuyi/tmp/getFPGAResources.sh \

-Dspark.worker.resourcesFile=/Users/wuyi/tmp/worker-resource-file"

```

## How was this patch tested?

Tested manually in Standalone locally:

- Worker could start up normally when no resources are configured

- Worker should fail to start up when an exception is thrown while setting up resources (e.g. unknown directory, parse failure)

- Worker could setup resources from resource file

- Worker could setup resources from discovery scripts

- Worker should set up resources from resource file & discovery scripts when both are configured.

Closes #24841 from Ngone51/dev-worker-resources-setup.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

## What changes were proposed in this pull request?

Currently with `toLocalIterator()` and `toPandas()` with Arrow enabled, if the Spark job being run in the background serving thread errors, it will be caught and sent to Python through the PySpark serializer.

This is not the ideal solution because it only catches a SparkException, it won't handle an error that occurs in the serializer, and each method has to have its own special handling to propagate the error.

This PR instead returns the Python Server object along with the serving port and authentication info, so that it allows the Python caller to join with the serving thread. During the call to join, the serving thread Future is completed either successfully or with an exception. In the latter case, the exception will be propagated to Python through the Py4j call.
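The join-based propagation can be sketched with Python's standard library. This is an analogy under stated assumptions, not the PySpark or Py4j implementation: `serve`, `start_server` and `join` are hypothetical names, and a `concurrent.futures` future plays the role of the serving thread's Future.

```python
from concurrent.futures import ThreadPoolExecutor


def serve(records, sink):
    """Hypothetical serving loop: fails when it meets a bad record."""
    for record in records:
        if record is None:
            raise ValueError("bad record")
        sink.append(record)


def start_server(records, sink):
    """Start serving in a background thread and return its future."""
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(serve, records, sink)
    executor.shutdown(wait=False)  # the submitted task still runs to completion
    return future


def join(future):
    """Wait for the serving thread; re-raises any error it hit."""
    return future.result()
```

Because `join` re-raises whatever the background thread raised, no per-method special handling is needed to surface the error to the caller.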

## How was this patch tested?

Existing tests

Closes #24834 from BryanCutler/pyspark-propagate-server-error-SPARK-27992.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

In Spark 2.4.0/2.3.2/2.2.3, [SPARK-24948](https://issues.apache.org/jira/browse/SPARK-24948) delegated access permission checks to the file system and maintains a blacklist of all event log files that failed once at reading. The blacklisted log files are released back after `CLEAN_INTERVAL_S` seconds.

However, released files whose sizes don't change are ignored forever due to the `info.fileSize < entry.getLen()` condition (previously [here](3c96937c7b (diff-a7befb99e7bd7e3ab5c46c2568aa5b3eR454)) and now at [shouldReloadLog](https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/deploy/history/FsHistoryProvider.scala#L571)), which always returns `false` when the size is the same as the existing value in `KVStore`. This is recovered only via an SHS restart.

This PR aims to remove the existing entry from `KVStore` when it goes to the blacklist.

## How was this patch tested?

Pass the Jenkins with the updated test case.

Closes #24966 from dongjoon-hyun/SPARK-28157.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Updated the usage message in sbin/start-slave.sh.

The `<masterURL>` argument is moved to the first position.

## How was this patch tested?

Tested locally by:

- starting the master

- starting a slave with `./start-slave.sh spark://<IP>:<PORT> -c 1`

- opening a Spark shell with `./spark-shell --master spark://<IP>:<PORT>`

Closes #24974 from shivusondur/jira28164.

Authored-by: shivusondur <shivusondur@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

SparkRackResolver generates an INFO message every time it is called with 0 arguments.

In this PR I've deleted it because it's too verbose.

## How was this patch tested?

Existing unit tests + spark-shell.

Closes #24935 from gaborgsomogyi/SPARK-28005.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Spark's `InMemoryFileIndex` contains two places where `FileNotFound` exceptions are caught and logged as warnings (during [directory listing](bcd3b61c4b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/InMemoryFileIndex.scala (L274)) and [block location lookup](bcd3b61c4b/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/InMemoryFileIndex.scala (L333))). This logic was added in #15153 and #21408.

I think that this is a dangerous default behavior because it can mask bugs caused by race conditions (e.g. overwriting a table while it's being read) or S3 consistency issues (there's more discussion on this in the [JIRA ticket](https://issues.apache.org/jira/browse/SPARK-27676)). Failing fast when we detect missing files is not sufficient to make concurrent table reads/writes or S3 listing safe (there are other classes of eventual consistency issues to worry about), but I think it's still beneficial to throw exceptions and fail-fast on the subset of inconsistencies / races that we _can_ detect because that increases the likelihood that an end user will notice the problem and investigate further.

There may be some cases where users _do_ want to ignore missing files, but I think that should be an opt-in behavior via the existing `spark.sql.files.ignoreMissingFiles` flag (the current behavior is itself race-prone because a file might be deleted between catalog listing and query execution time, triggering FileNotFoundExceptions on executors (which are handled in a way that _does_ respect `ignoreMissingFiles`)).

This PR updates `InMemoryFileIndex` to guard the log-and-ignore-FileNotFoundException behind the existing `spark.sql.files.ignoreMissingFiles` flag.
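The guarded behavior can be sketched in plain Python. This is an illustration only, not the `InMemoryFileIndex` code: `list_leaf_files` and its `ignore_missing_files` parameter are hypothetical stand-ins for the listing logic and the flag.

```python
import logging
import os


def list_leaf_files(directories, ignore_missing_files=False):
    """List files under each directory, failing fast on missing ones
    unless the caller explicitly opted in to ignoring them."""
    files = []
    for directory in directories:
        try:
            names = sorted(os.listdir(directory))
        except FileNotFoundError:
            if not ignore_missing_files:
                # Default: surface the race or inconsistency to the user.
                raise
            logging.warning("Directory %s was not found; skipping it", directory)
            continue
        files.extend(os.path.join(directory, name) for name in names)
    return files
```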

**Note**: this is a change of default behavior, so I think it needs to be mentioned in release notes.

## How was this patch tested?

New unit tests to simulate file-deletion race conditions, tested with both values of the `ignoreMissingFiles` flag.

Closes #24668 from JoshRosen/SPARK-27676.

Lead-authored-by: Josh Rosen <rosenville@gmail.com>

Co-authored-by: Josh Rosen <joshrosen@stripe.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Track tasks separately for each stage attempt (instead of tracking by stage), and do NOT reset the numRunningTasks to 0 on StageCompleted.

In the case of a stage retry, the `taskEnd` event from the zombie stage sometimes makes the number of `totalRunningTasks` negative, which causes the job to get stuck.

A similar problem also exists with `stageIdToTaskIndices` & `stageIdToSpeculativeTaskIndices`.

If it is a failed `taskEnd` event of the zombie stage, this will cause `stageIdToTaskIndices` or `stageIdToSpeculativeTaskIndices` to remove the task index of the active stage, and the number of `totalPendingTasks` will increase unexpectedly.
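The per-attempt bookkeeping can be sketched as follows. This is a simplified Python model, not the listener in Spark: the class and method names are hypothetical, and only the running-task counter is modeled. Keying the counter by `(stage_id, attempt_id)` means a late `taskEnd` from a zombie attempt can only affect its own entry.

```python
from collections import defaultdict


class RunningTaskTracker:
    """Counts running tasks per (stage_id, attempt_id)."""

    def __init__(self):
        self._running = defaultdict(int)

    def on_task_start(self, stage_id, attempt_id):
        self._running[(stage_id, attempt_id)] += 1

    def on_task_end(self, stage_id, attempt_id):
        key = (stage_id, attempt_id)
        if self._running.get(key, 0) > 0:
            self._running[key] -= 1
            if self._running[key] == 0:
                del self._running[key]

    def on_stage_completed(self, stage_id, attempt_id):
        # Deliberately does not reset any counter: tasks of this attempt
        # may still be running and will report their own end events.
        pass

    def total_running_tasks(self):
        return sum(self._running.values())
```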

## How was this patch tested?

Unit test: "properly handle task end events from completed stages".

Closes #24497 from cxzl25/fix_stuck_job_follow_up.

Authored-by: sychen <sychen@ctrip.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Before this PR, fetching a disk-persisted RDD block always used the network to get the requested block content, even when the source and the fetching executor were running on the same host.

The idea of accessing another executor's local disk files by reading the disk directly comes from the external shuffle service, where the local dirs are stored for each executor (block manager).

To make this possible the following changes are done:

- `RegisterBlockManager` message is extended with the `localDirs` which is stored by the block manager master for each block manager as a new property of the `BlockManagerInfo`

- `GetLocationsAndStatus` is extended with the requester host

- `BlockLocationsAndStatus` (the reply to the `GetLocationsAndStatus` message) is extended with an option of local directories, which is filled with the local directories of an executor on the same host (if there is any; otherwise None is used). This is where the block content can be read from.

Shuffle blocks are out of scope of this PR: there will be a separate PR opened for that (for another Jira issue).

## How was this patch tested?

With a new unit test in `BlockManagerSuite`. See the test prefixed by "SPARK-27622: avoid the network when block requested from same host".

Closes #24554 from attilapiros/SPARK-27622.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Continuation of https://github.com/apache/spark/pull/24788

## What changes were proposed in this pull request?

These changes are related to big-endian systems. They are done to:

1. identify the s390x platform

2. use the BIG_ENDIAN byte order on big-endian systems

The changes for item 2 are done in the access functions putFloats() and putDouble().
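The effect of honoring the platform byte order can be shown with Python's `struct` module. This is a hedged illustration, not the Spark column vector code: `put_floats` and `get_floats` are hypothetical helpers that encode 32-bit floats in an explicitly chosen byte order, defaulting to the order of the machine they run on.

```python
import struct
import sys


def _prefix(byteorder):
    # ">" selects big-endian and "<" little-endian in struct format strings.
    return ">" if byteorder == "big" else "<"


def put_floats(values, byteorder=sys.byteorder):
    """Encode 32-bit floats using the given byte order."""
    return struct.pack(_prefix(byteorder) + str(len(values)) + "f", *values)


def get_floats(data, byteorder=sys.byteorder):
    """Decode 32-bit floats written with the same byte order."""
    count = len(data) // 4
    return list(struct.unpack(_prefix(byteorder) + str(count) + "f", data))
```

Reading with a different byte order than the one used for writing yields wrong values, which is why the writer and reader must agree on the platform's order.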

## How was this patch tested?

The changes have been tested to build successfully on both s390x and x86 platforms.

Closes #24861 from ketank-new/ketan_latest_v2.3.2.

Authored-by: ketank-new <ketan22584@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

If the input dataset is already cached, then we do not need to cache the internal RDD (like KMeans).

## How was this patch tested?

existing test

Closes #24919 from zhengruifeng/gmm_fix_double_caching.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

cache dataset in BisectingKMeans

cache dataset in LDA if Online solver is chosen.

## How was this patch tested?

existing test

Closes #24920 from zhengruifeng/bikm_cache.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

add missing RankingEvaluator

## How was this patch tested?

added testsuites

Closes #24869 from zhengruifeng/ranking_eval.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Update doc of config `spark.driver.resourcesFile`

## How was this patch tested?

N/A

Closes #24954 from jiangxb1987/ResourceAllocation.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

After this PR, we can test Pandas and Python UDFs as below **on the Scala side**:

```scala

import IntegratedUDFTestUtils._

val pandasTestUDF = TestScalarPandasUDF("udf")

spark.range(10).select(pandasTestUDF($"id")).show()

```

## How was this patch tested?

Manually tested.

Closes #24945 from HyukjinKwon/SPARK-27893-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR proposes to remove cloned `pyspark-coverage-site` repo.

It doesn't seem to be a problem in the PR builder, but somehow it's problematic in `spark-master-test-sbt-hadoop-2.7`.

## How was this patch tested?

Jenkins.

Closes #23729 from HyukjinKwon/followup-coverage.

Lead-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Co-authored-by: shane knapp <incomplete@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This patch removes `fillna(0)` when creating ArrowBatch from a pandas Series.

With `fillna(0)`, the original code would turn a timestamp type into object type, which pyarrow complains about later:

```

>>> s = pd.Series([pd.NaT, pd.Timestamp('2015-01-01')])

>>> s.dtypes

dtype('<M8[ns]')

>>> s.fillna(0)

0 0

1 2015-01-01 00:00:00

dtype: object

```

## How was this patch tested?

Added `test_timestamp_nat`

Closes #24844 from icexelloss/SPARK-28003-arrow-nat.

Authored-by: Li Jin <ice.xelloss@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

See https://github.com/apache/spark/pull/24702/files#r296765487 -- this just fixes a Java style error. I'm not clear why the PR build didn't catch it.

## How was this patch tested?

N/A

Closes #24951 from srowen/SPARK-27989.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Remove the oldest checkpointed file only if the next checkpoint exists.

I think this patch needs back-porting.
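The rule can be sketched with a queue of checkpoints. This Python snippet is an illustrative model, not the checkpointer in Spark: `remove_old_checkpoints`, `is_checkpointed` and `remove_file` are hypothetical names, and the queue is assumed to be ordered from oldest to newest.

```python
from collections import deque


def remove_old_checkpoints(queue, is_checkpointed, remove_file):
    """Drop the oldest checkpoint only once its successor is materialized.

    `queue` is ordered oldest first. The oldest entry is kept as long as
    the next one has not actually been written, so a usable checkpoint
    always remains.
    """
    while len(queue) > 1 and is_checkpointed(queue[1]):
        remove_file(queue.popleft())
```

With a queue `c1, c2, c3` where only `c1` and `c2` are materialized, `c1` is removed and `c2` is kept until `c3` exists.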

## How was this patch tested?

existing test

Local check in spark-shell with the following suite:

```
import org.apache.spark.ml.linalg.Vectors
import org.apache.spark.ml.classification.GBTClassifier

case class Row(features: org.apache.spark.ml.linalg.Vector, label: Int)

sc.setCheckpointDir("/checkpoints")
val trainingData = sc.parallelize(1 to 2426874, 256).map(x => Row(Vectors.dense(x, x + 1, x * 2 % 10), if (x % 5 == 0) 1 else 0)).toDF
val classifier = new GBTClassifier()
  .setLabelCol("label")
  .setFeaturesCol("features")
  .setProbabilityCol("probability")
  .setMaxIter(100)
  .setMaxDepth(10)
  .setCheckpointInterval(2)
classifier.fit(trainingData)
```

Closes #24870 from zhengruifeng/ck_update.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Disabled negative dns caching for docker images

Improved logging on DNS resolution, convenient for slow k8s clusters

## What changes were proposed in this pull request?

Added retries when building the connection to the driver in K8s.

In some scenarios DNS resolution can take longer than the timeout.

Also, openjdk-8 has negative DNS caching enabled by default, which means even retries may not help, depending on the timing.
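A retry loop of this kind can be sketched in a few lines. The following Python snippet is a generic illustration, not the code added to Spark: `connect_with_retries` and its parameters are hypothetical, and `OSError` stands in for whatever resolution or connection error the real client raises.

```python
import time


def connect_with_retries(connect, attempts=3, delay_seconds=1.0, sleep=time.sleep):
    """Call `connect` until it succeeds or `attempts` is exhausted."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except OSError as error:  # e.g. a failed name resolution
            last_error = error
            if attempt < attempts:
                sleep(delay_seconds)
    raise last_error
```

Retries only help if a later lookup can return a different answer, which is why a cached negative DNS result would defeat them.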

## How was this patch tested?

This patch was tested against a specific k8s cluster with slow DNS response times to ensure it works.

Closes #24702 from jlpedrosa/feature/kuberetries.

Authored-by: Jose Luis Pedrosa <jlpedrosa@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This patch addresses a missed spot where a Map should be passed as a CaseInsensitiveStringMap; KafkaContinuousStream seems to be the only one missed.

Before this fix, there was a related bug where `pollTimeoutMs` was always set to its default value: the value of `KafkaSourceProvider.CONSUMER_POLL_TIMEOUT` is `kafkaConsumer.pollTimeoutMs`, but `sourceOptions` is provided as a map with lowercased keys.
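The lookup failure is easy to reproduce in isolation. In this Python sketch, `CaseInsensitiveMap` is a hypothetical minimal stand-in for `CaseInsensitiveStringMap`, and the option value `"2000"` is made up for the example; only the key name comes from the description above.

```python
class CaseInsensitiveMap:
    """Minimal stand-in: keys are normalized on insert and on lookup."""

    def __init__(self, options):
        self._data = {key.lower(): value for key, value in options.items()}

    def get(self, key, default=None):
        return self._data.get(key.lower(), default)


CONSUMER_POLL_TIMEOUT = "kafkaConsumer.pollTimeoutMs"

user_options = {"kafkaConsumer.pollTimeoutMs": "2000"}  # example value

# A plain map whose keys were lowercased: the camelCase lookup misses,
# so the caller silently falls back to the default.
lowercased = {key.lower(): value for key, value in user_options.items()}
missed = lowercased.get(CONSUMER_POLL_TIMEOUT, "default")

# The case-insensitive wrapper finds the user's value.
found = CaseInsensitiveMap(user_options).get(CONSUMER_POLL_TIMEOUT, "default")
```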

## How was this patch tested?

N/A.

Closes #24942 from HeartSaVioR/MINOR-use-case-insensitive-map-for-kafka-continuous-source.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In #24068, IvanVergiliev fixes the issue that OrcFilters.createBuilder has exponential complexity in the height of the filter tree due to the way the check-and-build pattern is implemented.

Compared with the approach in #24068, I propose a simple solution for the issue:

1. Separate the logic of building a convertible filter tree from the actual SearchArgument builder, since the two procedures are different and have different return types. Thus the newly introduced classes `ActionType`, `TrimUnconvertibleFilters` and `BuildSearchArgument` in #24068 can be dropped, and the code is more readable.

2. For most of the leaf nodes, the convertible result is always Some(node), we can abstract it like this PR.

3. The code is actually small changes on the previous code. See https://github.com/apache/spark/pull/24783
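The two-pass structure can be sketched on a toy filter tree. This Python model is an assumption-laden illustration, not `OrcFilters`: filters are nested tuples, only `eq` leaves are treated as convertible, the `can_partial` flag (keeping one side of an AND when the other is unconvertible, but never under a NOT) is this sketch's own rule, and `build` produces a string instead of a SearchArgument. Each pass visits every node once, so the total work is linear in the size of the tree.

```python
def trim(node, can_partial=True):
    """Pass 1: return a fully convertible tree, or None."""
    kind = node[0]
    if kind == "and":
        left = trim(node[1], can_partial)
        right = trim(node[2], can_partial)
        if left and right:
            return ("and", left, right)
        # Dropping one side of an AND only widens the filter, which is
        # safe unless the AND sits under a NOT.
        return (left or right) if can_partial else None
    if kind == "or":
        left = trim(node[1], can_partial)
        right = trim(node[2], can_partial)
        return ("or", left, right) if left and right else None
    if kind == "not":
        child = trim(node[1], False)
        return ("not", child) if child else None
    if kind == "eq":
        return node  # leaf nodes convert to themselves
    return None  # anything else is unconvertible in this sketch


def build(node):
    """Pass 2: build the output, assuming `node` came from trim()."""
    kind = node[0]
    if kind == "eq":
        return str(node[1]) + " = " + str(node[2])
    if kind == "not":
        return "NOT (" + build(node[1]) + ")"
    op = " AND " if kind == "and" else " OR "
    return "(" + build(node[1]) + op + build(node[2]) + ")"
```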

## How was this patch tested?

Run the benchmark provided in #24068:

```
val schema = StructType.fromDDL("col INT")
(20 to 30).foreach { width =>
  val whereFilter = (1 to width).map(i => EqualTo("col", i)).reduceLeft(Or)
  val start = System.currentTimeMillis()
  OrcFilters.createFilter(schema, Seq(whereFilter))
  println(s"With $width filters, conversion takes ${System.currentTimeMillis() - start} ms")
}
```

Result:

```

With 20 filters, conversion takes 6 ms

With 21 filters, conversion takes 0 ms

With 22 filters, conversion takes 0 ms

With 23 filters, conversion takes 0 ms

With 24 filters, conversion takes 0 ms

With 25 filters, conversion takes 0 ms

With 26 filters, conversion takes 0 ms

With 27 filters, conversion takes 0 ms

With 28 filters, conversion takes 0 ms

With 29 filters, conversion takes 0 ms

With 30 filters, conversion takes 0 ms

```

Also verified with Unit tests.

Closes #24910 from gengliangwang/refactorOrcFilters.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently, the pretty skipped-test message mechanism added by f7435bec6a does not seem to work when xmlrunner is installed.

This PR fixes two things:

1. When `xmlrunner` is installed, it seems that `xmlrunner` does not respect the `verbosity` level in unittests (the default is level 1).

So the output looks as below

```

Running tests...

----------------------------------------------------------------------

SSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSS

----------------------------------------------------------------------

```

So it is not caught by our message detection mechanism.

2. If we manually set the `verbosity` level for `xmlrunner`, it prints messages as below:

```

test_mixed_udf (pyspark.sql.tests.test_pandas_udf_scalar.ScalarPandasUDFTests) ... SKIP (0.000s)

test_mixed_udf_and_sql (pyspark.sql.tests.test_pandas_udf_scalar.ScalarPandasUDFTests) ... SKIP (0.000s)

...

```

This is different from the output on our Jenkins machine:

```

test_createDataFrame_column_name_encoding (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.23.2 must be installed; however, it was not found.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.23.2 must be installed; however, it was not found.'

...

```

Note that the trailing `SKIP` marker differs. This PR fixes the regular expression to catch the `SKIP` case as well.
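A pattern that accepts both output styles could look like the following. This is a sketch written for this description, not the regular expression used in the test runner: `SKIP_LINE` and `skipped_tests` are hypothetical names, and the pattern is only checked against the two line shapes shown above.

```python
import re

# Matches either "... skipped '<reason>'" (plain unittest output) or
# "... SKIP (<seconds>s)" (xmlrunner output).
SKIP_LINE = re.compile(
    r"^(?P<test>.+?) \.\.\. (?:skipped '(?P<reason>.*)'|SKIP \(\d+\.\d+s\))$"
)


def skipped_tests(output):
    """Return the names of skipped tests found in the runner output."""
    names = []
    for line in output.splitlines():
        match = SKIP_LINE.match(line.strip())
        if match:
            names.append(match.group("test"))
    return names
```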

## How was this patch tested?

Manually tested.

**Before:**

```

Starting test(python2.7): pyspark....

Finished test(python2.7): pyspark.... (0s)

...

Tests passed in 562 seconds

========================================================================

...

```

**After:**

```

Starting test(python2.7): pyspark....

Finished test(python2.7): pyspark.... (48s) ... 93 tests were skipped

...

Tests passed in 560 seconds

Skipped tests pyspark.... with python2.7:

pyspark...(...) ... SKIP (0.000s)

...

========================================================================

...

```

Closes #24927 from HyukjinKwon/SPARK-28130.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When we use an upper-case partition name in a Hive table, like:

```

CREATE TABLE src (KEY STRING, VALUE STRING) PARTITIONED BY (DS STRING)

```

Then, an `insert into table` query doesn't work:

```

INSERT INTO TABLE src PARTITION(ds) SELECT 'k' key, 'v' value, '1' ds

-- or

INSERT INTO TABLE src PARTITION(DS) SELECT 'k' KEY, 'v' VALUE, '1' DS

```

```

[info] org.apache.spark.sql.AnalysisException:

org.apache.hadoop.hive.ql.metadata.Table.ValidationFailureSemanticException: Partition spec {ds=, DS=1} contains non-partition columns;

```

As Hive metastore is not case preserving and keeps partition columns with lower cased names, we lowercase column names in partition spec before passing to Hive client. But we write upper case column names in partition paths.

However, when calling `loadDynamicPartitions` to do `insert into table` for dynamic partition, Hive calculates full path spec for partition paths. So it calculates a partition spec like `{ds=, DS=1}` in above case and fails partition column validation. This patch is proposed to fix the issue by lowercasing the column names in written partition paths for Hive partitioned table.

This fix touches the `saveAsHiveFile` method, which is used by the `InsertIntoHiveDirCommand` and `InsertIntoHiveTable` commands. Of the two, only `InsertIntoHiveTable` passes the `partitionAttributes` parameter, so I think this change only affects the `InsertIntoHiveTable` command.
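The mismatch can be modelled with a toy sketch. This is not Hive's or Spark's actual code: `partition_dir` and `spec_seen_by_hive` are hypothetical helpers that only mimic how a spec keyed by lower-cased column names collides with an upper-case name parsed from a path.

```python
def partition_dir(spec, lowercase_names):
    """Build a partition path such as 'ds=1' from (column, value) pairs."""
    parts = []
    for name, value in spec:
        col = name.lower() if lowercase_names else name
        parts.append(f"{col}={value}")
    return "/".join(parts)


def spec_seen_by_hive(declared_cols, path):
    """Toy model: start from the declared (lower-cased) dynamic partition
    columns with empty values, then fill in values parsed from the path."""
    spec = {col: "" for col in declared_cols}
    for segment in path.split("/"):
        name, _, value = segment.partition("=")
        spec[name] = value
    return spec


# Upper-case name in the path: two distinct keys, as in `{ds=, DS=1}`.
before = spec_seen_by_hive(["ds"], partition_dir([("DS", "1")], lowercase_names=False))
# Lower-cased name in the path: the value lands on the declared column.
after = spec_seen_by_hive(["ds"], partition_dir([("DS", "1")], lowercase_names=True))
```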

## How was this patch tested?

Added test.

Closes #24886 from viirya/SPARK-28054.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`ShuffleMapTask`'s partition field is a `FilePartition`, and `FilePartition`'s `files` field is a `Stream$Cons`, which is essentially a linked list. It is therefore serialized recursively.

If each partition contains, say, 10000 files, recursing into a linked list of length 10000 overflows the stack.

The problem occurs only with bucketed partitions. The corresponding implementation for non-bucketed partitions uses a StreamBuffer. The proposed change applies the same approach to bucketed partitions.
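The failure mode can be reproduced in miniature outside the JVM. The sketch below is only an analogy in Python, with hypothetical names (`Cons`, `serialize_cons`, `serialize_array`): a serializer that recurses once per linked-list cell exhausts the call stack, while a flat sequence is handled iteratively.

```python
import sys


class Cons:
    """A minimal linked-list cell: one element plus a reference to the rest."""

    def __init__(self, head, tail):
        self.head = head
        self.tail = tail


def build_cons(items):
    """Build a linked list from a sequence, last element first."""
    node = None
    for item in reversed(items):
        node = Cons(item, node)
    return node


def serialize_cons(node):
    """Recursive walk: one stack frame per element of the list."""
    if node is None:
        return []
    return [node.head] + serialize_cons(node.tail)


def serialize_array(items):
    """Flat copy: no recursion, so the depth does not depend on the length."""
    return list(items)


# Well past the interpreter's recursion limit, standing in for "10000 files".
files = [f"part-{i}" for i in range(sys.getrecursionlimit() * 10)]

try:
    serialize_cons(build_cons(files))
    overflowed = False
except RecursionError:
    overflowed = True

flat = serialize_array(files)
```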

## How was this patch tested?

Existing unit tests. Added new unit test. The unit test fails without the patch. Manual testing on dataset used to reproduce the problem.

Closes #24865 from parthchandra/SPARK-27100.

Lead-authored-by: Parth Chandra <parthc@apple.com>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

[PostgreSQL](7c850320d8/src/test/regress/sql/strings.sql (L624)) supports another trim pattern: `TRIM(trimStr FROM str)`:

Function | Return Type | Description | Example | Result
--- | --- | --- | --- | ---
trim([leading \| trailing \| both] [characters] from string) | text | Remove the longest string containing only characters from characters (a space by default) from the start, end, or both ends (both is the default) of string | trim(both 'xyz' from 'yxTomxx') | Tom

This PR adds support for this trim pattern. After this PR, we support all standard syntax except `TRIM(FROM str)`, because it conflicts with our literals:

```sql

Literals of type 'FROM' are currently not supported.(line 1, pos 12)

== SQL ==

SELECT TRIM(FROM ' SPARK SQL ')

```

PostgreSQL, Vertica and MySQL support this pattern. Teradata, Oracle, DB2, SQL Server, Hive and Presto do not.

**PostgreSQL**:

```

postgres=# SELECT substr(version(), 0, 16), trim('xyz' FROM 'yxTomxx');

substr | btrim

-----------------+-------

PostgreSQL 11.3 | Tom

(1 row)

```

**Vertica**:

```

dbadmin=> SELECT version(), trim('xyz' FROM 'yxTomxx');

version | btrim

------------------------------------+-------

Vertica Analytic Database v9.1.1-0 | Tom

(1 row)

```

**MySQL**:

```

mysql> SELECT version(), trim('xyz' FROM 'yxTomxx');

+-----------+----------------------------+

| version() | trim('xyz' FROM 'yxTomxx') |

+-----------+----------------------------+

| 5.7.26 | yxTomxx |

+-----------+----------------------------+

1 row in set (0.00 sec)

```

More details:

https://www.postgresql.org/docs/11/functions-string.html
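The outputs above differ because the trim string can be read two ways. The sketch below illustrates both readings in Python; `trim_chars` and `trim_substring` are hypothetical names, and the second function is only an assumed model of the MySQL result shown above, not a statement about its implementation.

```python
def trim_chars(trim_str, s):
    """Character-set reading (PostgreSQL table above): strip any of the
    characters in trim_str from both ends of s."""
    return s.strip(trim_str)


def trim_substring(rem_str, s):
    """Whole-string reading: strip repeated occurrences of rem_str itself
    from both ends of s."""
    if not rem_str:
        return s
    while s.startswith(rem_str):
        s = s[len(rem_str):]
    while s.endswith(rem_str):
        s = s[:-len(rem_str)]
    return s


as_char_set = trim_chars("xyz", "yxTomxx")       # 'Tom'
as_substring = trim_substring("xyz", "yxTomxx")  # 'yxTomxx'
```

Under the character-set reading, leading `y`, `x` and trailing `x`, `x` are removed one character at a time. Under the whole-string reading nothing is removed, because `'yxTomxx'` neither starts nor ends with `'xyz'`.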

## How was this patch tested?

unit tests

Closes #24924 from wangyum/SPARK-28075-2.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

When running `FlatMapGroupsInPandasExec` or `AggregateInPandasExec`, the shuffle uses the default number of partitions, 200, from `spark.sql.shuffle.partitions`. If the data is small, e.g. in testing, many of the partitions will be empty but are treated just the same as non-empty ones.

This PR checks that the `mapPartitionsInternal` iterator is non-empty before calling `ArrowPythonRunner` to start computation on the iterator.
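The idea can be sketched independently of Spark. In the minimal example below, `run_if_non_empty` and `expensive_runner` are hypothetical stand-ins (the latter for starting a Python worker); the sketch only shows how to peek at an iterator without losing its first element.

```python
from itertools import chain


def run_if_non_empty(rows, runner):
    """Call runner only when rows yields at least one element."""
    it = iter(rows)
    try:
        first = next(it)
    except StopIteration:
        # Empty partition: skip the expensive runner entirely.
        return iter(())
    # Put the peeked element back in front of the remaining ones.
    return runner(chain([first], it))


calls = []


def expensive_runner(rows):
    """Stand-in for costly per-partition work; records each invocation."""
    calls.append("started")
    return (r * 2 for r in rows)


empty_result = list(run_if_non_empty([], expensive_runner))
full_result = list(run_if_non_empty([1, 2, 3], expensive_runner))
```

With mostly empty partitions, the runner start-up cost is paid only for partitions that actually contain data.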

## How was this patch tested?

Existing tests. Ran the following benchmark on a simple example where most partitions are empty:

```python
from pyspark.sql.functions import pandas_udf, PandasUDFType
from pyspark.sql.types import *

# Assumes an active SparkSession named `spark`, e.g. in the pyspark shell.
df = spark.createDataFrame(
    [(1, 1.0), (1, 2.0), (2, 3.0), (2, 5.0), (2, 10.0)],
    ("id", "v"))

@pandas_udf("id long, v double", PandasUDFType.GROUPED_MAP)
def normalize(pdf):
    # Standardize v within each group.
    v = pdf.v
    return pdf.assign(v=(v - v.mean()) / v.std())

df.groupby("id").apply(normalize).count()
```

**Before**

```

In [4]: %timeit df.groupby("id").apply(normalize).count()

1.58 s ± 62.8 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [5]: %timeit df.groupby("id").apply(normalize).count()

1.52 s ± 29.5 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [6]: %timeit df.groupby("id").apply(normalize).count()

1.52 s ± 37.8 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

```

**After this Change**

```

In [2]: %timeit df.groupby("id").apply(normalize).count()

646 ms ± 89.9 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [3]: %timeit df.groupby("id").apply(normalize).count()

408 ms ± 84.6 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [4]: %timeit df.groupby("id").apply(normalize).count()

381 ms ± 29.9 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

```

Closes #24926 from BryanCutler/pyspark-pandas_udf-map-agg-skip-empty-parts-SPARK-28128.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The `mapChildren` method in the `TreeNode` class is commonly used across the whole Spark SQL codebase. In this method, there's an if statement that checks for non-empty children. However, there's a cached lazy val `containsChild`, which can avoid unnecessary computation since `containsChild` is used in other methods and therefore constructed anyway.

A benchmark showed that this optimization can improve the overall TPC-DS planning time by 6.8%. There is no regression on any TPC-DS query.
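The pattern of reusing an already-cached value for the emptiness check can be sketched as follows. This is a simplified Python analogy, not the Scala `TreeNode` code: the class, `contains_child` and `map_children` below are hypothetical, and `functools.cached_property` stands in for a lazy val.

```python
from functools import cached_property


class TreeNode:
    """Simplified immutable tree node for illustration."""

    def __init__(self, name, children=()):
        self.name = name
        self.children = tuple(children)

    @cached_property
    def contains_child(self):
        # Computed on first access, then reused by every later caller.
        return frozenset(self.children)

    def map_children(self, f):
        # Reuse the cached set for the emptiness check on leaf nodes.
        if not self.contains_child:
            return self
        new_children = tuple(f(c) for c in self.children)
        # Keep the original node when no child was actually replaced.
        if all(n is o for n, o in zip(new_children, self.children)):
            return self
        return TreeNode(self.name, new_children)


leaf = TreeNode("leaf")
parent = TreeNode("parent", [leaf])
renamed = parent.map_children(lambda c: TreeNode(c.name.upper(), c.children))
```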

## How was this patch tested?

Existing UTs.

Closes #24925 from yeshengm/treenode-children.

Authored-by: Yesheng Ma <kimi.ysma@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Event logs differ from other data in terms of lifetime. It would be great to have a new configuration for Spark event log compression, like `spark.eventLog.compression.codec`.

This PR adds this new configuration as an optional configuration. So, if `spark.eventLog.compression.codec` is not given, `spark.io.compression.codec` will be used.
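The fallback behaviour described above amounts to a two-level lookup. Here is a minimal sketch over a plain dictionary; `event_log_codec` is a hypothetical helper, and the `lz4` default for `spark.io.compression.codec` is an assumption of this sketch rather than something stated in this PR.

```python
EVENT_LOG_CODEC = "spark.eventLog.compression.codec"
IO_CODEC = "spark.io.compression.codec"


def event_log_codec(conf, io_default="lz4"):
    """Resolve the event log codec: prefer the event-log-specific key,
    otherwise fall back to the general I/O codec (io_default is assumed)."""
    if EVENT_LOG_CODEC in conf:
        return conf[EVENT_LOG_CODEC]
    return conf.get(IO_CODEC, io_default)


explicit = event_log_codec({EVENT_LOG_CODEC: "zstd", IO_CODEC: "snappy"})
fallback = event_log_codec({IO_CODEC: "snappy"})
default = event_log_codec({})
```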

## How was this patch tested?

Pass the Jenkins with the newly added test case.

Closes #24921 from dongjoon-hyun/SPARK-28118.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>