## What changes were proposed in this pull request?

Update the URL of the reference paper.

## How was this patch tested?

Only comments changed, so nothing was tested.

Author: bomeng <bmeng@us.ibm.com>

Closes #19614 from bomeng/22399.

Often times we want to impute custom values other than 'NaN'. My addition helps people locate this function without reading the API.

Author: tengpeng <tengpeng@users.noreply.github.com>

Closes #19600 from tengpeng/patch-5.

## Background

In #18837, ArtRand added Mesos secrets support to the dispatcher. **This PR adds the same secrets support to the drivers.** This means that if the secret configs are set, the driver will launch executors that have access to either env or file-based secrets.

One use case for this is to support TLS in the driver <=> executor communication.

## What changes were proposed in this pull request?

Most of the changes are a refactor of the dispatcher secrets support (#18837) - moving it to a common place that can be used by both the dispatcher and drivers. The same goes for the unit tests.

## How was this patch tested?

There are four config combinations: [env or file-based] x [value or reference secret]. For each combination:

- Added a unit test.

- Tested in DC/OS.

Author: Susan X. Huynh <xhuynh@mesosphere.com>

Closes #19437 from susanxhuynh/sh-mesos-driver-secret.

## What changes were proposed in this pull request?

The Event Log Server has five configuration parameters in total. This PR adds descriptions of the two that were missing from the docs, so that users can find and use them more easily.

## How was this patch tested?

manual tests

Author: guoxiaolong <guo.xiaolong1@zte.com.cn>

Closes #19242 from guoxiaolongzte/addEventLogConf.

## What changes were proposed in this pull request?

The HTTP Strict-Transport-Security response header (often abbreviated as HSTS) is a security feature that lets a web site tell browsers that it should only be communicated with using HTTPS, instead of using HTTP.

Note: The Strict-Transport-Security header is ignored by the browser when your site is accessed using HTTP; this is because an attacker may intercept HTTP connections and inject the header or remove it. When your site is accessed over HTTPS with no certificate errors, the browser knows your site is HTTPS capable and will honor the Strict-Transport-Security header.

The HTTP X-XSS-Protection response header is a feature of Internet Explorer, Chrome and Safari that stops pages from loading when they detect reflected cross-site scripting (XSS) attacks.

The HTTP X-Content-Type-Options response header is used to protect against MIME sniffing vulnerabilities.
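As a rough illustration of the three headers described above, here is a plain-Python sketch that builds the header set a server might attach to a response. The function name `security_headers` and the header values are assumptions chosen for illustration, not the values this patch configures. The HSTS header is added only for HTTPS responses, since browsers ignore it over plain HTTP.

```python
def security_headers(is_https, hsts_max_age=31536000):
    """Return the response headers discussed above (illustrative values only)."""
    headers = {
        # Ask the browser's XSS filter to block the page on a reflected attack.
        "X-XSS-Protection": "1; mode=block",
        # Tell the browser not to MIME-sniff away from the declared Content-Type.
        "X-Content-Type-Options": "nosniff",
    }
    if is_https:
        # Browsers honor HSTS only on HTTPS responses, so skip it for HTTP.
        headers["Strict-Transport-Security"] = "max-age=%d" % hsts_max_age
    return headers
```

Calling `security_headers(False)` returns only the two `X-` headers, which mirrors the note about plain-HTTP access.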

## How was this patch tested?

Checked on my system locally.

<img width="750" alt="screen shot 2017-10-03 at 6 49 20 pm" src="https://user-images.githubusercontent.com/6433184/31127234-eadf7c0c-a86b-11e7-8e5d-f6ea3f97b210.png">

Author: krishna-pandey <krish.pandey21@gmail.com>

Author: Krishna Pandey <krish.pandey21@gmail.com>

Closes #19419 from krishna-pandey/SPARK-22188.

Hive delegation tokens are only needed when the Spark driver has no access to the kerberos TGT. That happens only in two situations:

- when using a proxy user

- when using cluster mode without a keytab

This change modifies the Hive provider so that it only generates delegation tokens in those situations, and tweaks the YARN AM so that it makes the proper user visible to the Hive code when running with keytabs, so that the TGT can be used instead of a delegation token.
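The rule for when a Hive delegation token is needed can be written as a small predicate. This is a plain-Python sketch of the two situations listed above, not the provider's actual code; the function name and its parameters are hypothetical.

```python
def needs_hive_delegation_token(is_proxy_user, deploy_mode, has_keytab):
    """Return True when the driver has no access to the Kerberos TGT.

    Hypothetical helper: the two branches are the two situations named above.
    """
    if is_proxy_user:
        # A proxy user never has the real user's TGT.
        return True
    # In cluster mode the driver runs remotely; without a keytab it has no TGT.
    return deploy_mode == "cluster" and not has_keytab
```

In every other case (for example client mode, or cluster mode with a keytab) the predicate is false and the TGT can be used instead.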

The effect of this change is that now it's possible to initialize multiple, non-concurrent SparkContext instances in the same JVM. Before, the second invocation would fail to fetch a new Hive delegation token, which then could make the second (or third or...) application fail once the token expired. With this change, the TGT will be used to authenticate to the HMS instead.

This change also avoids polluting the current logged in user's credentials when launching applications. The credentials are copied only when running applications as a proxy user. This makes it possible to implement SPARK-11035 later, where multiple threads might be launching applications, and each app should have its own set of credentials.

Tested by verifying HDFS and Hive access in the following scenarios:

- client and cluster mode

- client and cluster mode with proxy user

- client and cluster mode with principal / keytab

- long-running cluster app with principal / keytab

- pyspark app that creates (and stops) multiple SparkContext instances through its lifetime

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #19509 from vanzin/SPARK-22290.

## What changes were proposed in this pull request?

I see that block updates are not logged to the event log.

This makes sense as a default for performance reasons.

However, I find it helpful when trying to get a better understanding of caching for a job to be able to log these updates.

This PR adds a configuration setting `spark.eventLog.blockUpdates` (defaulting to false) which allows block updates to be recorded in the log.

This contribution is original work which is licensed to the Apache Spark project.

## How was this patch tested?

Current and additional unit tests.

Author: Michael Mior <mmior@uwaterloo.ca>

Closes #19263 from michaelmior/log-block-updates.

## What changes were proposed in this pull request?

In the current `BlockManager#getRemoteBytes`, Spark calls `BlockTransferService#fetchBlockSync` to get a remote block. In `fetchBlockSync`, Spark allocates a temporary `ByteBuffer` to store the whole fetched block. This can lead to OOM if the block is too big or if several blocks are fetched simultaneously in the same executor.

This PR borrows the idea used by shuffle fetch: spill a large block to local disk before it is consumed by upstream code. The behavior is controlled by a newly added configuration. If the block size is smaller than the threshold, the block is kept in memory; otherwise it is first spilled to disk and then read back from the disk file.

To achieve this, I made the following changes:

1. Rename `TempShuffleFileManager` to `TempFileManager`, since it is no longer used only by shuffle.

2. Add a new `TempFileManager` to manage the files of fetched remote blocks. The files are tracked by weak references and are deleted once they are no longer in use.
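The threshold decision described above can be sketched in plain Python. This is only an illustration of the memory-versus-disk choice, not Spark's implementation: the helper names `store_fetched_block` and `read_block` and the `max_in_memory_bytes` parameter are hypothetical, and the sketch leaves file cleanup to the caller instead of tracking files by weak reference.

```python
import os
import tempfile


def store_fetched_block(data, max_in_memory_bytes):
    """Keep a small block in memory; spill a large one to a temp file.

    Returns ("memory", bytes) or ("disk", path). Hypothetical helper.
    """
    if len(data) < max_in_memory_bytes:
        return ("memory", data)
    # At or above the threshold: write the block out and hand back the path.
    fd, path = tempfile.mkstemp(prefix="remote-block-")
    with os.fdopen(fd, "wb") as f:
        f.write(data)
    return ("disk", path)


def read_block(stored):
    """Return the block bytes regardless of where they were stored."""
    kind, value = stored
    if kind == "memory":
        return value
    with open(value, "rb") as f:
        return f.read()
```

A caller would read the block through `read_block` either way, so upstream code does not need to know whether the block was spilled.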

## How was this patch tested?

This was tested by adding unit tests, plus manual verification in a local test that triggered GC to confirm the files are cleaned up.

Author: jerryshao <sshao@hortonworks.com>

Closes #19476 from jerryshao/SPARK-22062.

## What changes were proposed in this pull request?

Adds links to the fork that provides integration with Nomad, in the same places the k8s integration is linked to.

## How was this patch tested?

I clicked on the links to make sure they're correct ;)

Author: Ben Barnard <barnardb@gmail.com>

Closes #19354 from barnardb/link-to-nomad-integration.

## What changes were proposed in this pull request?

Added documentation for loading CSV files into DataFrames.

## How was this patch tested?

`./dev/run-tests`

Author: Jorge Machado <jorge.w.machado@hotmail.com>

Closes #19429 from jomach/master.

## What changes were proposed in this pull request?

The number of cores assigned to each executor is configurable. When this is not explicitly set, multiple executors from the same application may be launched on the same worker too.

## How was this patch tested?

N/A

Author: liuxian <liu.xian3@zte.com.cn>

Closes #18711 from 10110346/executorcores.

## What changes were proposed in this pull request?

Move flume behind a profile, take 2. See https://github.com/apache/spark/pull/19365 for most of the back-story.

This change should fix the problem by removing the examples module dependency and moving Flume examples to the module itself. It also adds deprecation messages, per a discussion on dev about deprecating for 2.3.0.

## How was this patch tested?

Existing tests, which still enable flume integration.

Author: Sean Owen <sowen@cloudera.com>

Closes #19412 from srowen/SPARK-22142.2.

## What changes were proposed in this pull request?

Add 'flume' profile to enable Flume-related integration modules

## How was this patch tested?

Existing tests; no functional change

Author: Sean Owen <sowen@cloudera.com>

Closes #19365 from srowen/SPARK-22142.

The application listing is still generated from event logs, but is now stored in a KVStore instance. By default an in-memory store is used, but a new config allows setting a local disk path to store the data, in which case a LevelDB store will be created.

The provider stores things internally using the public REST API types; I believe this is better going forward since it will make it easier to get rid of the internal history server API which is mostly redundant at this point.

I also added a finalizer to LevelDBIterator, to make sure that resources are eventually released. This helps when code iterates but does not exhaust the iterator, thus not triggering the auto-close code.

HistoryServerSuite was modified to not re-start the history server unnecessarily; this makes the json validation tests run more quickly.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #18887 from vanzin/SPARK-20642.

## What changes were proposed in this pull request?

The `percentile_approx` function previously accepted numeric type input and output double type results.

But since all numeric types, date and timestamp types are represented as numerics internally, `percentile_approx` can support them easily.

After this PR, it supports date type, timestamp type and numeric types as input types. The result type is also changed to be the same as the input type, which is more reasonable for percentiles.

This change is also required when we generate equi-height histograms for these types.
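The idea that dates are numerics internally, and that the result should come back in the input type, can be illustrated with a small plain-Python sketch. Note the assumptions: this computes an exact nearest-rank percentile rather than the approximate algorithm behind `percentile_approx`, and the function name `percentile_same_type` is hypothetical.

```python
import math
from datetime import date


def percentile_same_type(values, fraction):
    """Nearest-rank percentile of dates, returned as a date.

    Exact, not approximate: it only illustrates the type round trip.
    """
    ordinals = sorted(d.toordinal() for d in values)    # dates as integers
    rank = max(1, math.ceil(fraction * len(ordinals)))  # 1-based nearest rank
    return date.fromordinal(ordinals[rank - 1])         # back to the input type
```

The same round trip (convert to the internal number, compute, convert back) applies to timestamps.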

## How was this patch tested?

Added a new test and modified some existing tests.

Author: Zhenhua Wang <wangzhenhua@huawei.com>

Closes #19321 from wzhfy/approx_percentile_support_types.

## What changes were proposed in this pull request?

Updated the docs so that a line of Python in the quick start guide executes. Closes #19283

## How was this patch tested?

Existing tests.

Author: John O'Leary <jgoleary@gmail.com>

Closes #19326 from jgoleary/issues/22107.

Change-Id: I88c272444ca734dc2cbc2592607c11287b90a383

## What changes were proposed in this pull request?

The documentation on File DStreams is enhanced to

1. Detail the exact timestamp logic for examining directories and files.

1. Detail how object stores differ from filesystems, why using them as a source of data should be treated with caution, and how data may need to be published to the store differently (direct PUTs as opposed to stage + rename).

## How was this patch tested?

n/a

Author: Steve Loughran <stevel@hortonworks.com>

Closes #17743 from steveloughran/cloud/SPARK-20448-document-dstream-blobstore.

## What changes were proposed in this pull request?

In current Spark, submitting an application on YARN with remote resources, for example `./bin/spark-shell --jars http://central.maven.org/maven2/com/github/swagger-akka-http/swagger-akka-http_2.11/0.10.1/swagger-akka-http_2.11-0.10.1.jar --master yarn-client -v`, fails with:

```
java.io.IOException: No FileSystem for scheme: http
    at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2586)
    at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2593)
    at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:91)
    at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2632)
    at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2614)
    at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:370)
    at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)
    at org.apache.spark.deploy.yarn.Client.copyFileToRemote(Client.scala:354)
    at org.apache.spark.deploy.yarn.Client.org$apache$spark$deploy$yarn$Client$$distribute$1(Client.scala:478)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11$$anonfun$apply$6.apply(Client.scala:600)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11$$anonfun$apply$6.apply(Client.scala:599)
    at scala.collection.mutable.ArraySeq.foreach(ArraySeq.scala:74)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11.apply(Client.scala:599)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11.apply(Client.scala:598)
    at scala.collection.immutable.List.foreach(List.scala:381)
    at org.apache.spark.deploy.yarn.Client.prepareLocalResources(Client.scala:598)
    at org.apache.spark.deploy.yarn.Client.createContainerLaunchContext(Client.scala:848)
    at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:173)
```

This is because the YARN `Client` assumes resources are on a Hadoop-compatible FS. To fix this, this PR proposes downloading remote http(s) resources to the local machine and adding the downloaded copies to the distributed cache. The downside is that remote resources are downloaded and then uploaded again, but this applies only to remote http(s) resources, and the overhead is small. The advantage is that the solution is simple and the code changes are restricted to `SparkSubmit`.
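The first step of that approach is deciding which resources need a local download. The following plain-Python sketch shows one way to make that decision by URI scheme. It is an illustration only: `split_resources` and `DOWNLOAD_SCHEMES` are hypothetical names, and the sketch performs no download or upload.

```python
from urllib.parse import urlparse

# Schemes this sketch treats as needing a local download before distribution.
DOWNLOAD_SCHEMES = {"http", "https"}


def split_resources(uris):
    """Partition resource URIs into (download_first, pass_through)."""
    download_first, pass_through = [], []
    for uri in uris:
        # urlparse returns an empty scheme for plain local paths.
        if urlparse(uri).scheme in DOWNLOAD_SCHEMES:
            download_first.append(uri)
        else:
            pass_through.append(uri)
    return download_first, pass_through
```

Only the first list would be downloaded and re-uploaded; everything else is handled as before.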

## How was this patch tested?

Unit test added, also verified in local cluster.

Author: jerryshao <sshao@hortonworks.com>

Closes #19130 from jerryshao/SPARK-21917.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18266 added a new feature to support reading a JDBC table with a custom schema, but all the fields must be specified. For simplicity, this PR supports specifying only some of the fields.

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes #19231 from wangyum/SPARK-22002.

## What changes were proposed in this pull request?

The auto-generated Oracle schema is sometimes not what we expect:

- `number(1)` is automatically mapped to BooleanType, which is not always what we expect, per [SPARK-20921](https://issues.apache.org/jira/browse/SPARK-20921).

- `number` is automatically mapped to Decimal(38,10), which cannot read large values, per [SPARK-20427](https://issues.apache.org/jira/browse/SPARK-20427).

This PR fixes this issue by allowing a custom schema, as follows:

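```scala
import java.util.Properties

// jdbcUrl is assumed to be defined elsewhere and to point at the Oracle database.
val props = new Properties()
// Override the auto-generated column types with a custom schema.
props.put("customSchema", "ID decimal(38, 0), N1 int, N2 boolean")
val dfRead = spark.read.jdbc(jdbcUrl, "tableWithCustomSchema", props)
dfRead.show()
```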

or

```sql
CREATE TEMPORARY VIEW tableWithCustomSchema
USING org.apache.spark.sql.jdbc
OPTIONS (url '$jdbcUrl', dbTable 'tableWithCustomSchema', customSchema 'ID decimal(38, 0), N1 int, N2 boolean')
```

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes #18266 from wangyum/SPARK-20427.

## What changes were proposed in this pull request?

Put Kafka 0.8 support behind a kafka-0-8 profile.

## How was this patch tested?

Existing tests, but, until PR builder and Jenkins configs are updated the effect here is to not build or test Kafka 0.8 support at all.

Author: Sean Owen <sowen@cloudera.com>

Closes #19134 from srowen/SPARK-21893.

## What changes were proposed in this pull request?

Added tunable parallelism to the pyspark implementation of one vs. rest classification. Added a parallelism parameter to the Scala implementation of one vs. rest along with functionality for using the parameter to tune the level of parallelism.

I am taking over PR #18281 because the original author is busy and we need to merge this change soon.

After this is merged, we can close #18281.

## How was this patch tested?

Test suite added.

Author: Ajay Saini <ajays725@gmail.com>

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19110 from WeichenXu123/spark-21027.

## What changes were proposed in this pull request?

Recently, I found two unreachable links in the document and fixed them.

Because the changes are small and only touch the documentation, I did not file a JIRA issue, but please let me know if you think I should.

## How was this patch tested?

Tested manually.

Author: Kousuke Saruta <sarutak@oss.nttdata.co.jp>

Closes #19195 from sarutak/fix-unreachable-link.

## What changes were proposed in this pull request?

Fixed wrong documentation for Mean Absolute Error.

Even though the code is correct for the MAE:

```scala
@Since("1.2.0")
def meanAbsoluteError: Double = {
  summary.normL1(1) / summary.count
}
```

In the documentation the division by N is missing.
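For reference, the mean absolute error over N examples is MAE = (1/N) * sum(|prediction_i - label_i|). A minimal plain-Python sketch of that formula follows; it mirrors the L1-norm-divided-by-count shape of the Scala code but is not Spark's implementation, and the function name is chosen for illustration.

```python
def mean_absolute_error(predictions, labels):
    """Return the L1 norm of the errors divided by the number of examples."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("need two non-empty sequences of equal length")
    # L1 norm of the error vector.
    l1 = sum(abs(p - y) for p, y in zip(predictions, labels))
    # The division by N is the part the documentation was missing.
    return l1 / len(labels)
```

For predictions `[1.0, 2.0, 4.0]` against labels `[1.0, 4.0, 1.0]`, the absolute errors are 0, 2 and 3, so the result is 5/3.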

## How was this patch tested?

All Spark tests were run.

Author: FavioVazquez <favio.vazquezp@gmail.com>

Author: faviovazquez <favio.vazquezp@gmail.com>

Author: Favio André Vázquez <favio.vazquezp@gmail.com>

Closes #19190 from FavioVazquez/mae-fix.

## What changes were proposed in this pull request?

```
echo '{"field": 1}
{"field": 2}
{"field": "3"}' >/tmp/sample.json
```

```scala
import org.apache.spark.sql.types._

val schema = new StructType()
  .add("field", ByteType)
  .add("_corrupt_record", StringType)

val file = "/tmp/sample.json"

val dfFromFile = spark.read.schema(schema).json(file)

scala> dfFromFile.show(false)
+-----+---------------+
|field|_corrupt_record|
+-----+---------------+
|1    |null           |
|2    |null           |
|null |{"field": "3"} |
+-----+---------------+

scala> dfFromFile.filter($"_corrupt_record".isNotNull).count()
res1: Long = 0

scala> dfFromFile.filter($"_corrupt_record".isNull).count()
res2: Long = 3
```

When the `requiredSchema` contains only `_corrupt_record`, the derived `actualSchema` is empty and `_corrupt_record` is null for all rows. This PR detects that situation and raises an exception with a message describing a reasonable workaround, so that users know what happened and how to fix the query.
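The check itself can be sketched in a few lines of plain Python. This is an illustration of the condition described above, not the code added by the PR: the function name `check_required_columns` is hypothetical and the error text is a placeholder, not Spark's actual message.

```python
CORRUPT_RECORD_COLUMN = "_corrupt_record"


def check_required_columns(required_columns, corrupt_column=CORRUPT_RECORD_COLUMN):
    """Return the data columns to parse, or raise if none remain."""
    # The "actual" schema is the required schema minus the corrupt-record column.
    actual = [c for c in required_columns if c != corrupt_column]
    if required_columns and not actual:
        # Nothing would be parsed, so the corrupt-record column could never be filled.
        raise ValueError(
            "query references only the corrupt record column %r" % corrupt_column
        )
    return actual
```

Failing early here replaces the silent `count()` of 0 shown in the session above with an explicit error.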

## How was this patch tested?

Added test case.

Author: Jen-Ming Chung <jenmingisme@gmail.com>

Closes #18865 from jmchung/SPARK-21610.

## What changes were proposed in this pull request?

Since [SPARK-15639](https://github.com/apache/spark/pull/13701), `spark.sql.parquet.cacheMetadata` and `PARQUET_CACHE_METADATA` are no longer used. This PR removes them from SQLConf and the docs.

## How was this patch tested?

Passes the existing Jenkins tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #19129 from dongjoon-hyun/SPARK-13656.

## What changes were proposed in this pull request?

Modified `CrossValidator` and `TrainValidationSplit` to be able to evaluate models in parallel for a given parameter grid. The level of parallelism is controlled by a parameter `numParallelEval` used to schedule a number of models to be trained/evaluated so that the jobs can be run concurrently. This is a naive approach that does not check the cluster for needed resources, so care must be taken by the user to tune the parameter appropriately. The default value is `1` which will train/evaluate in serial.
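To make the naive scheduling idea concrete, here is a plain-Python sketch that evaluates a parameter grid with a bounded thread pool. It is not the `CrossValidator` implementation: `select_best`, `evaluate` and `parallelism` are hypothetical names, and `evaluate` stands in for training and scoring one model.

```python
from concurrent.futures import ThreadPoolExecutor


def select_best(param_grid, evaluate, parallelism=1):
    """Evaluate every parameter setting and return the best (params, score).

    `evaluate` is a hypothetical callable that trains a model for one
    parameter setting and returns its metric (larger is better).
    """
    if parallelism < 1:
        raise ValueError("parallelism must be >= 1")
    # A pool with a single worker evaluates the grid serially.
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        scores = list(pool.map(evaluate, param_grid))  # order matches param_grid
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return param_grid[best_index], scores[best_index]
```

Because `pool.map` preserves input order, the selected model is the same whether the grid runs serially or in parallel, which is the property the unit tests in this PR verify for the real classes.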

## How was this patch tested?

Added unit tests for CrossValidator and TrainValidationSplit to verify that model selection is the same when run in serial vs parallel. Manual testing to verify tasks run in parallel when param is > 1. Added parameter usage to relevant examples.

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #16774 from BryanCutler/parallel-model-eval-SPARK-19357.

## What changes were proposed in this pull request?

Update the line "For example, the data (12:09, cat) is out of order and late, and it falls in windows 12:05 - 12:15 and 12:10 - 12:20." to read "For example, the data (12:09, cat) is out of order and late, and it falls in windows 12:00 - 12:10 and 12:05 - 12:15." in the Structured Streaming programming guide.
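The corrected windows can be checked with a short plain-Python sketch. It assumes 10-minute windows that slide every 5 minutes, which is what the window pairs in the corrected sentence imply; the function name `windows_for` is hypothetical and this is not how Spark computes windows internally.

```python
from datetime import datetime, timedelta


def windows_for(event_time, length=timedelta(minutes=10), slide=timedelta(minutes=5)):
    """Return every [start, end) sliding window that contains event_time."""
    epoch = datetime(1970, 1, 1)
    # Start of the latest window that begins at or before the event.
    start = event_time - (event_time - epoch) % slide
    windows = []
    # Walk backwards one slide at a time while the window still covers the event.
    while start + length > event_time:
        windows.append((start, start + length))
        start -= slide
    return sorted(windows)
```

For an event at 12:09 the loop collects the windows starting at 12:05 and 12:00, and stops at 11:55 because that window ends at 12:05.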

Author: Riccardo Corbella <r.corbella@reply.it>

Closes #19137 from riccardocorbella/bugfix.

## What changes were proposed in this pull request?

All built-in data sources support `Partition Discovery`. We should update the documentation to make this clearer to users.

**AFTER**

<img width="906" alt="1" src="https://user-images.githubusercontent.com/9700541/30083628-14278908-9244-11e7-98dc-9ad45fe233a9.png">

## How was this patch tested?

```

SKIP_API=1 jekyll serve --watch

```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #19139 from dongjoon-hyun/partitiondiscovery.

Mesos has secrets primitives for environment and file-based secrets. This PR adds that functionality to the Spark dispatcher, along with the appropriate configuration flags.

Unit tested and manually tested against a DC/OS cluster with Mesos 1.4.

Author: ArtRand <arand@soe.ucsc.edu>

Closes #18837 from ArtRand/spark-20812-dispatcher-secrets-and-labels.

This patch adds a StatsD sink to the current metrics system in Spark core.

Author: Xiaofeng Lin <xlin@twilio.com>

Closes #9518 from xflin/statsd.

Change-Id: Ib8720e86223d4a650df53f51ceb963cd95b49a44

## What changes were proposed in this pull request?

This PR adds ML examples for the FeatureHasher transform in Scala, Java, Python.

## How was this patch tested?

Manually ran examples and verified that output is consistent for different APIs

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #19024 from BryanCutler/ml-examples-FeatureHasher-SPARK-21810.

## What changes were proposed in this pull request?

Fair Scheduler can be built via one of the following options:

- By setting the `spark.scheduler.allocation.file` property,

- By placing `fairscheduler.xml` on the classpath.

These options are checked **in order**, and the fair scheduler is built from the first option found. If the configured path is invalid, a `FileNotFoundException` is expected.
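The lookup order can be sketched in plain Python as follows. This is a simplified illustration, not Spark's code: `find_scheduler_file` is a hypothetical name, the classpath is modeled as a list of directories, and what happens when no file is found at all is left outside the sketch.

```python
import os

DEFAULT_SCHEDULER_FILE = "fairscheduler.xml"


def find_scheduler_file(conf, classpath_dirs):
    """Return the path of the scheduler file to load, or None if none is found."""
    # Option 1: an explicitly configured path wins, and it must exist.
    configured = conf.get("spark.scheduler.allocation.file")
    if configured is not None:
        if not os.path.isfile(configured):
            raise FileNotFoundError(configured)
        return configured
    # Option 2: look for the default file name in each classpath directory.
    for directory in classpath_dirs:
        candidate = os.path.join(directory, DEFAULT_SCHEDULER_FILE)
        if os.path.isfile(candidate):
            return candidate
    return None
```

Note that an invalid configured path raises immediately instead of falling through to the classpath lookup, which matches the "first option found" ordering.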

This PR adds unit test coverage for these use cases, plus a minor documentation change for the second option (`fairscheduler.xml` on the classpath) to inform users.

This PR is related to #16813 and was created separately to keep the patch content isolated and to help the reviewers.

## How was this patch tested?

Added new Unit Tests.

Author: erenavsarogullari <erenavsarogullari@gmail.com>

Closes #16992 from erenavsarogullari/SPARK-19662.

The History Server launch uses `SparkClassCommandBuilder` to start the server. `SPARK_CLASSPATH` has been deprecated and removed. spark-submit takes a different route: `spark.driver.extraClassPath` takes care of specifying the additional jars on the classpath that were previously specified in `SPARK_CLASSPATH`. Right now the only way to specify additional jars when launching daemons such as the history server is `SPARK_DIST_CLASSPATH` (https://spark.apache.org/docs/latest/hadoop-provided.html), but I presume this is meant to be a distribution classpath. It would be nice to have a config similar to `spark.driver.extraClassPath` for launching daemons such as the history server.

Added a new environment variable, `SPARK_DAEMON_CLASSPATH`, to set the classpath for launching daemons. Tested and verified for the History Server and standalone mode.

## How was this patch tested?

Initially, the history server start script failed because it could not find the required jars on the Java classpath. The same was true for running the Master and Worker in standalone mode. After adding the environment variable `SPARK_DAEMON_CLASSPATH` to the Java classpath, both sets of daemons (History Server and standalone daemons) start up and run.

Author: pgandhi <pgandhi@yahoo-inc.com>

Author: pgandhi999 <parthkgandhi9@gmail.com>

Closes #19047 from pgandhi999/master.

## What changes were proposed in this pull request?

This PR proposes both:

- Adds information about Javadoc, SQL docs and a few other topics to `docs/README.md`, and a comment related to Javadoc in `docs/_plugins/copy_api_dirs.rb`.

- Adds some commands so that the script always runs the SQL docs build under the `./sql` directory (so that `./sql/create-docs.sh` can be run directly from the root directory).

## How was this patch tested?

Manual tests with `jekyll build` and `./sql/create-docs.sh` in the root directory.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #19019 from HyukjinKwon/minor-doc-build.

JIRA ticket: https://issues.apache.org/jira/browse/SPARK-21694

## What changes were proposed in this pull request?

Spark already supports launching containers attached to a given CNI network by specifying it via the config `spark.mesos.network.name`.

This PR adds support to pass in network labels to CNI plugins via a new config option `spark.mesos.network.labels`. These network labels are key-value pairs that are set in the `NetworkInfo` of both the driver and executor tasks. More details in the related Mesos documentation: http://mesos.apache.org/documentation/latest/cni/#mesos-meta-data-to-cni-plugins

## How was this patch tested?

Unit tests, for both driver and executor tasks.

Manual integration test to submit a job with the `spark.mesos.network.labels` option, hit the mesos/state.json endpoint, and check that the labels are set in the driver and executor tasks.

ArtRand skonto

Author: Susan X. Huynh <xhuynh@mesosphere.com>

Closes #18910 from susanxhuynh/sh-mesos-cni-labels.

Right now the Spark shuffle service has a cache for index files. It is bounded by the number of files cached (`spark.shuffle.service.index.cache.entries`). This can cause issues when there are many reducers, because the size of each entry varies with the number of reducers.

We saw an issue with a job that had 170000 reducers: it caused the NM running the Spark shuffle service to use 700-800MB of memory by itself.

We should change this cache to be memory based and only allow a certain amount of memory to be used. By memory based I mean the cache should have a limit of, say, 100MB.
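The difference between an entry-count bound and a memory bound can be illustrated with a small plain-Python cache that evicts by total size. This is a sketch under assumptions, not the shuffle service's cache: the class name `SizeBoundedCache` is hypothetical, entries are `bytes` values weighed by `len`, and eviction is least-recently-used.

```python
from collections import OrderedDict


class SizeBoundedCache:
    """LRU cache limited by total entry size in bytes, not by entry count."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes
        self.total_bytes = 0
        self._entries = OrderedDict()  # key -> bytes, least recently used first

    def get(self, key):
        value = self._entries.get(key)
        if value is not None:
            self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        if key in self._entries:
            self.total_bytes -= len(self._entries.pop(key))
        self._entries[key] = value
        self.total_bytes += len(value)
        # Evict least recently used entries until the cache fits its budget.
        while self.total_bytes > self.max_bytes and self._entries:
            _, evicted = self._entries.popitem(last=False)
            self.total_bytes -= len(evicted)
```

With this shape, a few very large index files and many small ones are both held to the same memory budget, which an entry-count limit cannot guarantee.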

https://issues.apache.org/jira/browse/SPARK-21501

Manual testing with 170000 reducers was performed with the cache loaded up to the default 100MB limit, with each shuffle index file 1.3MB in size. Eviction takes place as soon as the total cache size reaches the 100MB limit, and the evicted objects become eligible for garbage collection, thereby preventing the NM from crashing. No notable difference in runtime was observed.

Author: Sanket Chintapalli <schintap@yahoo-inc.com>

Closes #18940 from redsanket/SPARK-21501.

## What changes were proposed in this pull request?

This PR proposes to install `mkdocs` by `pip install` if missing in the path. Mainly to fix Jenkins's documentation build failure in `spark-master-docs`. See https://amplab.cs.berkeley.edu/jenkins/job/spark-master-docs/3580/console.

It also adds `mkdocs` as requirements in `docs/README.md`.

## How was this patch tested?

I manually ran `jekyll build` under `docs` directory after manually removing `mkdocs` via `pip uninstall mkdocs`.

Also tested this the same way on CentOS Linux release 7.3.1611 (Core), where I had built Spark a few times but never built the documentation, and `mkdocs` was not installed.

```

...

Moving back into docs dir.

Moving to SQL directory and building docs.

Missing mkdocs in your path, trying to install mkdocs for SQL documentation generation.

Collecting mkdocs

Downloading mkdocs-0.16.3-py2.py3-none-any.whl (1.2MB)

100% |████████████████████████████████| 1.2MB 574kB/s

Requirement already satisfied: PyYAML>=3.10 in /usr/lib64/python2.7/site-packages (from mkdocs)

Collecting livereload>=2.5.1 (from mkdocs)

Downloading livereload-2.5.1-py2-none-any.whl

Collecting tornado>=4.1 (from mkdocs)

Downloading tornado-4.5.1.tar.gz (483kB)

100% |████████████████████████████████| 491kB 1.4MB/s

Collecting Markdown>=2.3.1 (from mkdocs)

Downloading Markdown-2.6.9.tar.gz (271kB)

100% |████████████████████████████████| 276kB 2.4MB/s

Collecting click>=3.3 (from mkdocs)

Downloading click-6.7-py2.py3-none-any.whl (71kB)

100% |████████████████████████████████| 71kB 2.8MB/s

Requirement already satisfied: Jinja2>=2.7.1 in /usr/lib/python2.7/site-packages (from mkdocs)

Requirement already satisfied: six in /usr/lib/python2.7/site-packages (from livereload>=2.5.1->mkdocs)

Requirement already satisfied: backports.ssl_match_hostname in /usr/lib/python2.7/site-packages (from tornado>=4.1->mkdocs)

Collecting singledispatch (from tornado>=4.1->mkdocs)

Downloading singledispatch-3.4.0.3-py2.py3-none-any.whl

Collecting certifi (from tornado>=4.1->mkdocs)

Downloading certifi-2017.7.27.1-py2.py3-none-any.whl (349kB)

100% |████████████████████████████████| 358kB 2.1MB/s

Collecting backports_abc>=0.4 (from tornado>=4.1->mkdocs)

Downloading backports_abc-0.5-py2.py3-none-any.whl

Requirement already satisfied: MarkupSafe>=0.23 in /usr/lib/python2.7/site-packages (from Jinja2>=2.7.1->mkdocs)

Building wheels for collected packages: tornado, Markdown

Running setup.py bdist_wheel for tornado ... done

Stored in directory: /root/.cache/pip/wheels/84/83/cd/6a04602633457269d161344755e6766d24307189b7a67ff4b7

Running setup.py bdist_wheel for Markdown ... done

Stored in directory: /root/.cache/pip/wheels/bf/46/10/c93e17ae86ae3b3a919c7b39dad3b5ccf09aeb066419e5c1e5

Successfully built tornado Markdown

Installing collected packages: singledispatch, certifi, backports-abc, tornado, livereload, Markdown, click, mkdocs

Successfully installed Markdown-2.6.9 backports-abc-0.5 certifi-2017.7.27.1 click-6.7 livereload-2.5.1 mkdocs-0.16.3 singledispatch-3.4.0.3 tornado-4.5.1

Generating markdown files for SQL documentation.

Generating HTML files for SQL documentation.

INFO - Cleaning site directory

INFO - Building documentation to directory: .../spark/sql/site

Moving back into docs dir.

Making directory api/sql

cp -r ../sql/site/. api/sql

Source: .../spark/docs

Destination: .../spark/docs/_site

Generating...

done.

Auto-regeneration: disabled. Use --watch to enable.

```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #18984 from HyukjinKwon/sql-doc-mkdocs.

Add an option to the JDBC data source to initialize the environment of the remote database session

## What changes were proposed in this pull request?

This proposes an option for the JDBC datasource, tentatively called `sessionInitStatement`, to implement the session initialization functionality present, for example, in the Sqoop connector for Oracle (see https://sqoop.apache.org/docs/1.4.6/SqoopUserGuide.html#_oraoop_oracle_session_initialization_statements). After each database session is opened to the remote DB, and before starting to read data, this option executes a custom SQL statement (or a PL/SQL block in the case of Oracle).

See also https://issues.apache.org/jira/browse/SPARK-21519

## How was this patch tested?

Manually tested using Spark SQL data source and Oracle JDBC

Author: LucaCanali <luca.canali@cern.ch>

Closes #18724 from LucaCanali/JDBC_datasource_sessionInitStatement.

## What changes were proposed in this pull request?

This commit adds a new argument to the `IllegalArgumentException` message. The argument itself was introduced by this recent commit:

dcac1d57f0

## How was this patch tested?

Unit tests passed.

Author: Marcos P. Sanchez <mpenate@stratio.com>

Closes #18862 from mpenate/feature/exception-errorifexists.

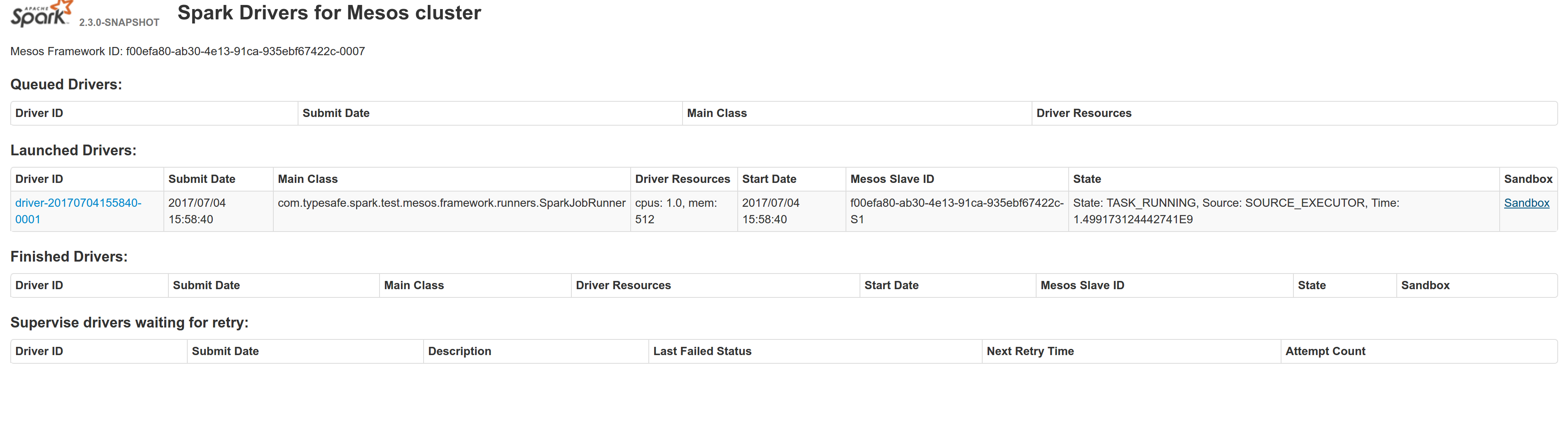

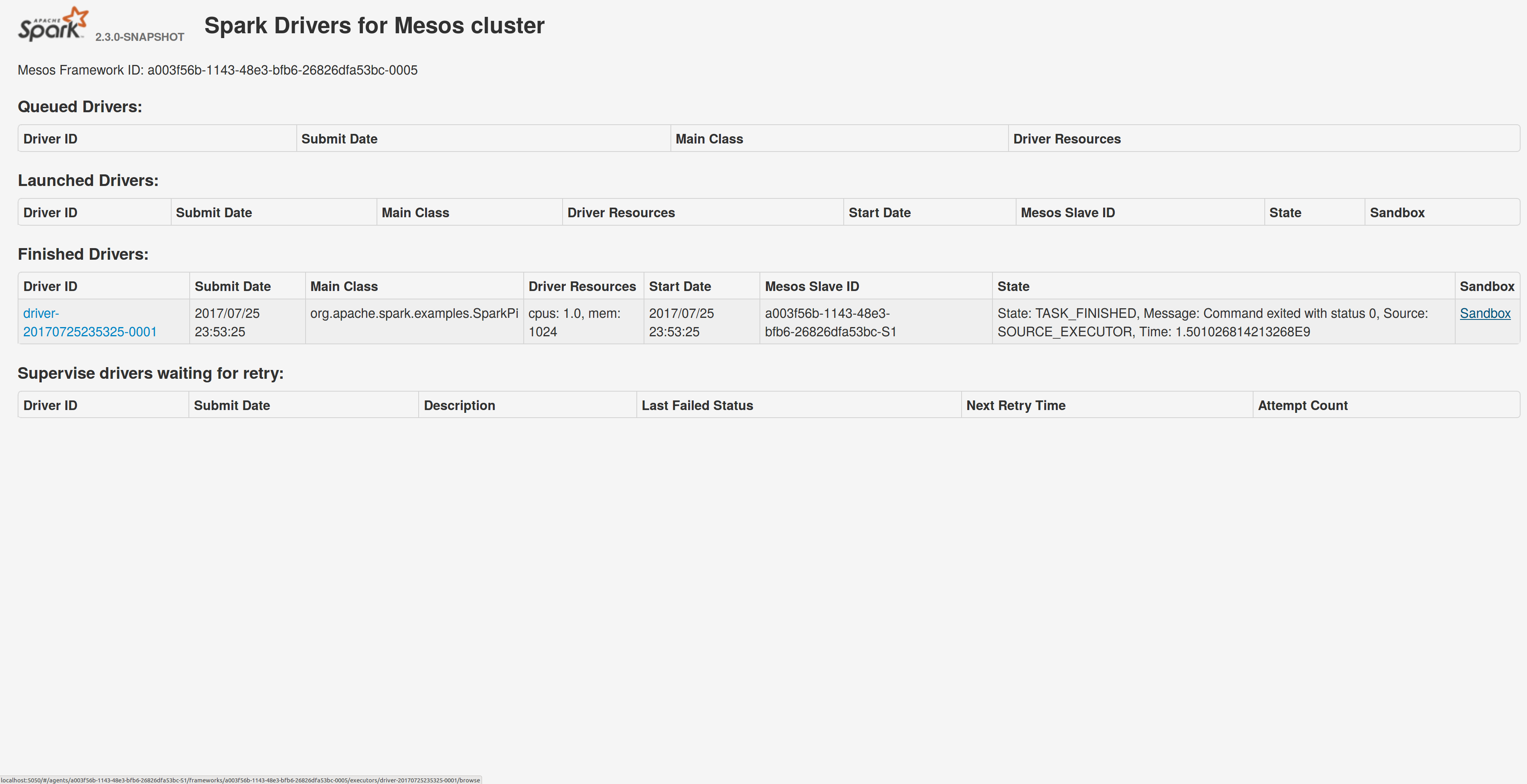

## What changes were proposed in this pull request?

Adds a sandbox link per driver in the dispatcher ui with minimal changes after a bug was fixed here:

https://issues.apache.org/jira/browse/MESOS-4992

The sandbox uri has the following format:

`http://<proxy_uri>/#/slaves/<agent-id>/frameworks/<scheduler-id>/executors/<driver-id>/browse`

For DC/OS the proxy URI is `<dc/os uri>/mesos`. For the DC/OS deployment scenario, and to make things easier, I introduced a new config property named `spark.mesos.proxy.baseURL`, which should be passed to the dispatcher with `--conf` when it is launched. If no such configuration is detected, no sandbox URI is shown and there is an empty column with a header (this can be changed so that nothing is shown).
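Putting the URI format and the new property together, the link construction can be sketched in plain Python. This is an illustration rather than the dispatcher UI code: the function name `sandbox_uri` is hypothetical, and stripping a trailing slash from the base URL is an assumption added for the sketch.

```python
def sandbox_uri(conf, agent_id, scheduler_id, driver_id):
    """Build the sandbox link for one driver, or None if no proxy is configured."""
    base = conf.get("spark.mesos.proxy.baseURL")
    if not base:
        # No proxy base URL was passed to the dispatcher, so no link is shown.
        return None
    return "%s/#/slaves/%s/frameworks/%s/executors/%s/browse" % (
        base.rstrip("/"), agent_id, scheduler_id, driver_id)
```

With `spark.mesos.proxy.baseURL=http://localhost:5050`, the result has the `/#/slaves/.../browse` shape given above.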

Within dc/os the base url must be a property for the dispatcher that we should add in the future here:

9e7c909c3b/repo/packages/S/spark/26/config.json

It is not easy to detect this URI across different environments, so the user should pass it.

## How was this patch tested?

Tested with the mesos test suite here: https://github.com/typesafehub/mesos-spark-integration-tests.

Attached image shows the ui modification where the sandbox header is added.

Tested the uri redirection the way it was suggested here:

https://issues.apache.org/jira/browse/MESOS-4992

Built mesos 1.4 from the master branch and started the mesos dispatcher with the command:

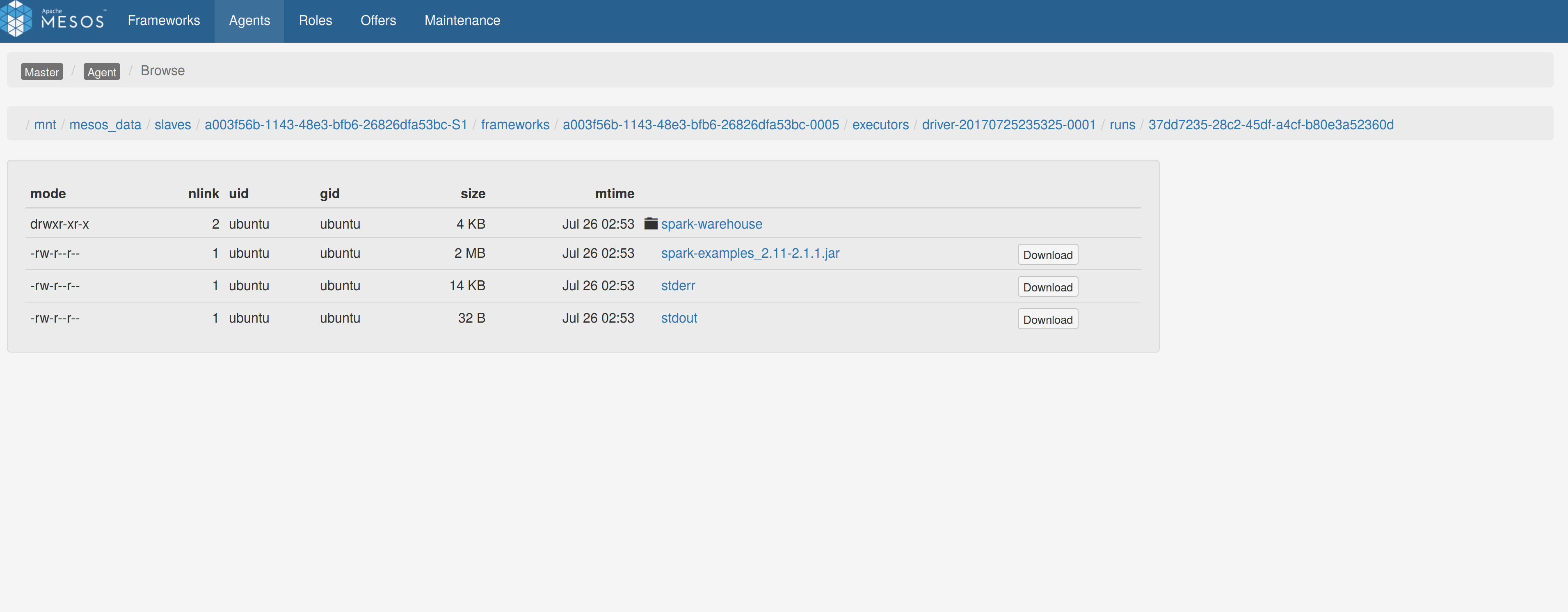

`./sbin/start-mesos-dispatcher.sh --conf spark.mesos.proxy.baseURL=http://localhost:5050 -m mesos://127.0.0.1:5050`

Run a spark example:

`./bin/spark-submit --class org.apache.spark.examples.SparkPi --master mesos://10.10.1.79:7078 --deploy-mode cluster --executor-memory 2G --total-executor-cores 2 http://<path>/spark-examples_2.11-2.1.1.jar 10`

Sandbox uri is shown at the bottom of the page:

Redirection works as expected:

Author: Stavros Kontopoulos <st.kontopoulos@gmail.com>

Closes #18528 from skonto/adds_the_sandbox_uri.

## What changes were proposed in this pull request?

When we run the `bin/spark-sql` command with `--conf spark.hadoop.foo=bar`, `SparkSQLCliDriver` initializes an instance of `HiveConf` but does not add `foo->bar` to it.

This PR copies the `spark.hadoop.*` properties from sysProps into this `HiveConf`.
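The property mapping can be sketched with a plain-Python dictionary. This only illustrates the prefix handling implied by the `foo->bar` example, not the `SparkSQLCliDriver` code; the function name `hadoop_overrides` is hypothetical.

```python
PREFIX = "spark.hadoop."


def hadoop_overrides(sys_props):
    """Map `spark.hadoop.foo=bar` entries to `foo=bar`, ignoring other keys."""
    return {
        key[len(PREFIX):]: value
        for key, value in sys_props.items()
        # Skip unrelated keys and a bare prefix with no property name after it.
        if key.startswith(PREFIX) and len(key) > len(PREFIX)
    }
```

The resulting pairs are what would be set on the Hive configuration object.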

## How was this patch tested?

UT

Author: hzyaoqin <hzyaoqin@corp.netease.com>

Author: Kent Yao <yaooqinn@hotmail.com>

Closes #18668 from yaooqinn/SPARK-21451.

## What changes were proposed in this pull request?

Add missing import and missing parentheses to invoke `SparkSession::text()`.

## How was this patch tested?

Built the code for this application and ran jekyll locally per docs/README.md.

Author: Christiam Camacho <camacho@ncbi.nlm.nih.gov>

Closes #18795 from christiam/master.

## What changes were proposed in this pull request?

Fix 2 rendering errors on configuration doc page, due to SPARK-21243 and SPARK-15355.

## How was this patch tested?

Manually built and viewed docs with jekyll

Author: Sean Owen <sowen@cloudera.com>

Closes #18793 from srowen/SPARK-21593.

## What changes were proposed in this pull request?

This PR adds documentation about unsupported functions in Hive UDF/UDTF/UDAF.

This PR relates to #18768 and #18527.

## How was this patch tested?

N/A

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #18792 from maropu/HOTFIX-20170731.

In the programming guide, `numTasks` is used in several places as an argument of transformations. However, the code uses `numPartitions`. This fix replaces `numTasks` with `numPartitions` in the programming guide for consistency.

Author: Cheng Wang <chengwang0511@gmail.com>

Closes #18774 from polarke/replace-numtasks-with-numpartitions-in-doc.