## What changes were proposed in this pull request?

Currently, running `spark-shell` locally prints logs like the following:

```
$ ./bin/spark-shell
...
19/02/28 04:42:43 INFO SecurityManager: Changing view acls to: hkwon
19/02/28 04:42:43 INFO SecurityManager: Changing modify acls to: hkwon
19/02/28 04:42:43 INFO SecurityManager: Changing view acls groups to:
19/02/28 04:42:43 INFO SecurityManager: Changing modify acls groups to:
19/02/28 04:42:43 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hkwon); groups with view permissions: Set(); users with modify permissions: Set(hkwon); groups with modify permissions: Set()
19/02/28 04:42:43 INFO SignalUtils: Registered signal handler for INT
19/02/28 04:42:48 INFO SparkContext: Running Spark version 3.0.0-SNAPSHOT
19/02/28 04:42:48 INFO SparkContext: Submitted application: Spark shell
19/02/28 04:42:48 INFO SecurityManager: Changing view acls to: hkwon
```

The cause seems to be https://github.com/apache/spark/pull/23806: `prepareSubmitEnvironment` appears to reinitialize logging.

This PR proposes to uninitialize logging later, after `prepareSubmitEnvironment` has run.

## How was this patch tested?

Manually tested.

Closes #23911 from HyukjinKwon/SPARK-26895.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Before this change, there was some code in the k8s backend to deal

with how to resolve dependencies and make them available to the

Spark application. It turns out that none of that code is necessary,

since spark-submit already handles all that for applications started

in client mode - like the k8s driver that is run inside a Spark-created

pod.

For that reason, specifically for pyspark, there's no need for the

k8s backend to deal with PYTHONPATH; or, in general, to change the URIs

provided by the user at all. spark-submit takes care of that.

For testing, I created a pyspark script that depends on another module

that is shipped with --py-files. Then I used:

- --py-files http://.../dep.py http://.../test.py

- --py-files http://.../dep.zip http://.../test.py

- --py-files local:/.../dep.py local:/.../test.py

- --py-files local:/.../dep.zip local:/.../test.py

Without this change, all of the above commands fail. With the change, the

driver is able to see the dependencies in all the above cases; but executors

don't see the dependencies in the last two. That's a bug in shared Spark code

that deals with local: dependencies in pyspark (SPARK-26934).

I also tested a Scala app using the main jar from an http server.

Closes #23793 from vanzin/SPARK-24736.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Comparing a Boolean expression to `true` is redundant.

For example:

The datatype of `a` is boolean.

Before:

if (a == true)

After:

if (a)

## How was this patch tested?

N/A

Closes #23884 from 10110346/simplifyboolean.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This patch applies redaction to command line arguments before logging them. This applies to two resource managers: standalone cluster and YARN.

This patch only handles arguments starting with `-D`, since Spark typically passes Spark configuration on the command line as `-Dspark.blabla=blabla`. More changes are needed if we also want to handle `--conf spark.blabla=blabla`.
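The idea can be sketched as follows. This is a minimal illustration, not Spark's actual implementation: the object name `RedactSketch`, the key pattern, and the replacement text are all assumptions made for the example.

```scala
import scala.util.matching.Regex

object RedactSketch {
  // Hypothetical pattern for sensitive keys (assumption for this sketch).
  val sensitiveKey: Regex = "(?i)secret|password|token".r
  // Hypothetical replacement text (assumption for this sketch).
  val replacement = "*********(redacted)"

  // Redact the value of any `-Dkey=value` argument whose key looks sensitive.
  // Arguments in any other form (e.g. `--conf key=value`) pass through unchanged.
  def redactCommandLineArgs(args: Seq[String]): Seq[String] = args.map { arg =>
    if (arg.startsWith("-D") && arg.contains("=")) {
      val (key, _) = arg.drop(2).span(_ != '=')
      if (sensitiveKey.findFirstIn(key).isDefined) s"-D$key=$replacement" else arg
    } else {
      arg
    }
  }
}
```

Note that, as in the description above, an argument pair such as `--conf spark.my.secret=x` is deliberately left alone by this sketch.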

## How was this patch tested?

Added a UT for the redaction logic. Since this patch only touches how commands are logged, it is not easy to add a UT for the logging itself.

Closes #23820 from HeartSaVioR/MINOR-redact-command-line-args-for-running-driver-executor.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In the PR, I propose to refactor existing code related to date/time conversions, and replace constants like `1000` and `1000000` by `DateTimeUtils` constants and transformation functions from `java.util.concurrent.TimeUnit._`.
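As an illustration of the kind of replacement described above, here is a small sketch that uses only `java.util.concurrent.TimeUnit`. The object and method names are made up for the example; the `DateTimeUtils` constants are not shown because their exact names are not given here.

```scala
import java.util.concurrent.TimeUnit

object TimeConversionSketch {
  // Before: magic numbers hide which units are involved.
  def microsToMillisMagic(micros: Long): Long = micros / 1000
  def secondsToMicrosMagic(seconds: Long): Long = seconds * 1000000

  // After: TimeUnit names both the source and the target unit.
  def microsToMillis(micros: Long): Long = TimeUnit.MICROSECONDS.toMillis(micros)
  def secondsToMicros(seconds: Long): Long = TimeUnit.SECONDS.toMicros(seconds)
}
```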

## How was this patch tested?

The changes are tested by existing test suites.

Closes #23878 from MaxGekk/magic-time-constants.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Since the yarn module is actually private to Spark, this interface was never

actually "public". Since it has no use inside of Spark, let's avoid adding

a yarn-specific extension that isn't public, and point any potential users

at more general solutions (like using a SparkListener).

Closes #23839 from vanzin/SPARK-26788.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Before this PR, the method `BlockManager#putBlockDataAsStream()` (which is used during block replication, where the block data is received as a stream) read the whole block content into memory even at the DISK_ONLY storage level.

With this change, the received block data (which was temporarily stored in a file) is simply moved into the right location backing the target block. This way a possible OOM error is avoided.

To avoid code duplication, the method `doPutBytes` is refactored using the template method pattern into `BlockStoreUpdater`, which has separate implementations for byte-buffer-based and temporary-file-based block store updates.
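The refactoring described above can be pictured with a toy sketch of the template method pattern. This is not Spark's code: the class names, the `save()` method, and the use of a plain directory as the "block store" are assumptions made for illustration.

```scala
import java.nio.file.{Files, Path, StandardCopyOption}

object BlockStoreUpdaterSketch {
  // Shared steps live in `save()`; subclasses only define how the bytes reach the store.
  abstract class Updater(blockId: String, storeDir: Path) {
    protected val target: Path = storeDir.resolve(blockId)

    // The step that varies between implementations.
    protected def writeToDisk(): Unit

    // Returns true if the block was stored, false if it already existed.
    final def save(): Boolean =
      if (Files.exists(target)) false
      else { writeToDisk(); true }
  }

  // Source is an in-memory buffer: the bytes are written out.
  class ByteBufferUpdater(blockId: String, storeDir: Path, bytes: Array[Byte])
      extends Updater(blockId, storeDir) {
    protected def writeToDisk(): Unit = { Files.write(target, bytes); () }
  }

  // Source is a temporary file: it is moved into place without loading it into memory.
  class TempFileUpdater(blockId: String, storeDir: Path, tmpFile: Path)
      extends Updater(blockId, storeDir) {
    protected def writeToDisk(): Unit = {
      Files.move(tmpFile, target, StandardCopyOption.REPLACE_EXISTING); ()
    }
  }
}
```

The point of the design is that the file-based variant never materializes the block content in memory, while both variants share the surrounding bookkeeping.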

With existing unit tests of `DistributedSuite` (the ones dealing with replications):

- caching on disk, replicated (encryption = off) (with replication as stream)

- caching on disk, replicated (encryption = on) (with replication as stream)

- caching in memory, serialized, replicated (encryption = on) (with replication as stream)

- caching in memory, serialized, replicated (encryption = off) (with replication as stream)

- etc.

And with new unit tests testing `putBlockDataAsStream` method directly:

- test putBlockDataAsStream with caching (encryption = off)

- test putBlockDataAsStream with caching (encryption = on)

- test putBlockDataAsStream with caching on disk (encryption = off)

- test putBlockDataAsStream with caching on disk (encryption = on)

Closes #23688 from attilapiros/SPARK-25035.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

In the PR, I propose to test the input shown at the end of the article: https://arxiv.org/pdf/1805.08612.pdf . The test differs from the paper's test in the array type: it allocates arrays of bytes instead of arrays of ints.

## How was this patch tested?

New test is added to `SorterSuite`.

Closes #23856 from MaxGekk/timsort-bug-fix.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to remove references to "Shark", which is a precursor to Spark SQL. I searched the whole project for the text "Shark" (ignore case) and just found a single match. Note that occurrences like nickname or test data are irrelevant.

## How was this patch tested?

N/A. Change comments only.

Closes #23876 from seancxmao/remove-Shark.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Don't use inaccessible fields in SizeEstimator, which comes up in Java 9+

## How was this patch tested?

Manually ran tests with Java 11; it causes these tests that failed before to pass.

This ought to pass on Java 8 as there's effectively no change for Java 8.

Closes #23866 from srowen/SPARK-26963.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to fix some outdated comments about task schedulers.

1. Change "ClusterScheduler" to "YarnScheduler" in comments of `YarnClusterScheduler`

According to [SPARK-1140 Remove references to ClusterScheduler](https://issues.apache.org/jira/browse/SPARK-1140), ClusterScheduler is not used anymore.

I also searched for "ClusterScheduler" within the whole project and found no other occurrences in comments or test cases. Note that classes like `YarnClusterSchedulerBackend` or `MesosClusterScheduler` are not relevant.

2. Update comments about `statusUpdate` from `TaskSetManager`

`statusUpdate` has been moved to `TaskSchedulerImpl`. StatusUpdate event handling is delegated to `handleSuccessfulTask`/`handleFailedTask`.

## How was this patch tested?

N/A. Fix comments only.

Closes #23844 from seancxmao/taskscheduler-comments.

Authored-by: seancxmao <seancxmao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

`prepareSubmitEnvironment` performs globbing that will fail in the case where a proxy user (`--proxy-user`) doesn't have permission to the file. This is a bug also with 2.3, so we should backport, as currently you can't launch an application that for instance is passing a file under `--archives`, and that file is owned by the target user.

The solution is to call `prepareSubmitEnvironment` within a doAs context if proxying.

## How was this patch tested?

Manual tests running with `--proxy-user` and `--archives`, before and after, showing that the globbing is successful when the resource is owned by the target user.

I've looked at writing unit tests, but I am not sure I can do that cleanly (perhaps with a custom FileSystem). Open to ideas.

Closes #23806 from abellina/SPARK-26895_prepareSubmitEnvironment_from_doAs.

Lead-authored-by: Alessandro Bellina <abellina@gmail.com>

Co-authored-by: Alessandro Bellina <abellina@yahoo-inc.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Spark's TimSort deviates from JDK 11 TimSort in a couple places:

- `stackLen` was increased in jdk

- additional cases for break in `mergeCollapse`: `n < 0`

In the PR, I propose to align Spark TimSort to jdk implementation.

## How was this patch tested?

By existing test suites, in particular, `SorterSuite`.

Closes #23858 from MaxGekk/timsort-java-alignment.

Authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, RDD.saveAsTextFile may throw a NullPointerException when a null row is present.

```
scala> sc.parallelize(Seq(1,null),1).saveAsTextFile("/tmp/foobar.dat")
19/02/15 21:39:17 ERROR Utils: Aborting task
java.lang.NullPointerException
  at org.apache.spark.rdd.RDD.$anonfun$saveAsTextFile$3(RDD.scala:1510)
  at scala.collection.Iterator$$anon$10.next(Iterator.scala:459)
  at org.apache.spark.internal.io.SparkHadoopWriter$.$anonfun$executeTask$1(SparkHadoopWriter.scala:129)
  at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1352)
  at org.apache.spark.internal.io.SparkHadoopWriter$.executeTask(SparkHadoopWriter.scala:127)
  at org.apache.spark.internal.io.SparkHadoopWriter$.$anonfun$write$1(SparkHadoopWriter.scala:83)
  at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
  at org.apache.spark.scheduler.Task.run(Task.scala:121)
  at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:425)
  at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1318)
  at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:428)
  at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
  at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
  at java.lang.Thread.run(Thread.java:748)
```

This PR writes "Null" for a null row to avoid the NPE.

## How was this patch tested?

NA

Closes #23799 from liupc/Fix-saveAsTextFile-throws-NullPointerException-when-null-row-present.

Lead-authored-by: liupengcheng <liupengcheng@xiaomi.com>

Co-authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to support Arrow optimization for conversion from Spark DataFrame to R DataFrame.

As on the PySpark side, it falls back to the non-optimized code path when it is unable to use Arrow optimization.

This can be tested as below:

```bash

$ ./bin/sparkR --conf spark.sql.execution.arrow.enabled=true

```

```r

collect(createDataFrame(mtcars))

```

### Requirements

- R 3.5.x

- Arrow package 0.12+

```bash
Rscript -e 'remotes::install_github("apache/arrow@apache-arrow-0.12.0", subdir = "r")'
```

**Note:** currently, the Arrow R package is not on CRAN. Please take a look at ARROW-3204.

**Note:** currently, the Arrow R package does not seem to support Windows. Please take a look at ARROW-3204.

### Benchmarks

**Shell**

```bash

sync && sudo purge

./bin/sparkR --conf spark.sql.execution.arrow.enabled=false --driver-memory 4g

```

```bash

sync && sudo purge

./bin/sparkR --conf spark.sql.execution.arrow.enabled=true --driver-memory 4g

```

**R code**

```r
df <- cache(createDataFrame(read.csv("500000.csv")))
count(df)

test <- function() {
  options(digits.secs = 6) # milliseconds
  start.time <- Sys.time()
  collect(df)
  end.time <- Sys.time()
  time.taken <- end.time - start.time
  print(time.taken)
}

test()
```

**Data (350 MB):**

```r

object.size(read.csv("500000.csv"))

350379504 bytes

```

"500000 Records" http://eforexcel.com/wp/downloads-16-sample-csv-files-data-sets-for-testing/

**Results**

```

Time difference of 221.32014 secs

```

```

Time difference of 15.51145 secs

```

The performance improvement was around **1426%**.

### Limitations:

- For now, Arrow optimization with R is not supported when the data is `raw` or when the user explicitly specifies a float type in the schema, because these cases produce corrupt values. In these cases, we fall back to the non-optimized code path.

- Due to ARROW-4512, it cannot send and receive batch by batch. It has to send all batches in Arrow stream format at once. It needs improvement later.

## How was this patch tested?

Existing tests related to Arrow optimization cover this change. Also, manually tested.

Closes #23760 from HyukjinKwon/SPARK-26762.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`HadoopDelegationTokenProvider` has basically the same functionality as `ServiceCredentialProvider`, so the interfaces can be merged.

`YARNHadoopDelegationTokenManager` currently loads `ServiceCredentialProvider`s in one step. The drawback is that if one provider fails, none of the others are loaded. `HadoopDelegationTokenManager` loads `HadoopDelegationTokenProvider`s independently, so it provides more robust behaviour.

In this PR I've made the following changes:

* Deleted `YARNHadoopDelegationTokenManager` and `ServiceCredentialProvider`

* Made `HadoopDelegationTokenProvider` a `DeveloperApi`

## How was this patch tested?

Existing unit tests.

Closes #23686 from gaborgsomogyi/SPARK-26772.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This patch proposes to change how log URLs and attributes are extracted from YARN executors:

- AS-IS: extract the information from the `Container` API and include it in the container launch context

- TO-BE: let the YARN executor extract the information itself

This approach lets us populate more attributes, such as the NodeManager's IPC port, which makes it possible to configure a custom log URL that points directly to the JHS log URL.

## How was this patch tested?

Existing unit tests.

Closes #23706 from HeartSaVioR/SPARK-26790.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Make it a debug message so that it doesn't show up in the vast

majority of cases, where HBase classes are not available.

Closes #23776 from vanzin/SPARK-26650.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In the PR, I propose to use `System.nanoTime()` instead of `System.currentTimeMillis()` in measurements of time intervals.

`System.currentTimeMillis()` returns current wallclock time and will follow changes to the system clock. Thus, negative wallclock adjustments can cause timeouts to "hang" for a long time (until wallclock time has caught up to its previous value again). This can happen when ntpd does a "step" after the network has been disconnected for some time. The most canonical example is during system bootup when DHCP takes longer than usual. This can lead to failures that are really hard to understand/reproduce. `System.nanoTime()` is guaranteed to be monotonically increasing irrespective of wallclock changes.
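A minimal sketch of interval measurement and a timeout check based on `System.nanoTime()`. The object and method names (`ElapsedTimeSketch`, `timeMillis`, `hasTimedOut`) are hypothetical and only illustrate the pattern; they are not taken from the patch.

```scala
import java.util.concurrent.TimeUnit

object ElapsedTimeSketch {
  // Measure how long `body` takes, using nanoTime differences rather than wall-clock time.
  def timeMillis[T](body: => T): (T, Long) = {
    val start = System.nanoTime()
    val result = body
    val elapsedNanos = System.nanoTime() - start
    (result, TimeUnit.NANOSECONDS.toMillis(elapsedNanos))
  }

  // Timeout check that does not depend on the system clock being adjusted.
  def hasTimedOut(startNanos: Long, timeoutMillis: Long): Boolean =
    System.nanoTime() - startNanos >= TimeUnit.MILLISECONDS.toNanos(timeoutMillis)
}
```

Only differences between two `nanoTime()` readings are meaningful; the absolute value is not related to the time of day, so it should not be logged or stored as a timestamp.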

## How was this patch tested?

By existing test suites.

Closes #23727 from MaxGekk/system-nanotime.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to add a vectorized `gapply()` in R using Arrow optimization.

This can be tested as below:

```bash

$ ./bin/sparkR --conf spark.sql.execution.arrow.enabled=true

```

```r
df <- createDataFrame(mtcars)
collect(gapply(df,
               "gear",
               function(key, group) {
                 data.frame(gear = key[[1]], disp = mean(group$disp) > group$disp)
               },
               structType("gear double, disp boolean")))
```

### Requirements

- R 3.5.x

- Arrow package 0.12+

```bash
Rscript -e 'remotes::install_github("apache/arrow@apache-arrow-0.12.0", subdir = "r")'
```

**Note:** currently, the Arrow R package is not on CRAN. Please take a look at ARROW-3204.

**Note:** currently, the Arrow R package does not seem to support Windows. Please take a look at ARROW-3204.

### Benchmarks

**Shell**

```bash

sync && sudo purge

./bin/sparkR --conf spark.sql.execution.arrow.enabled=false

```

```bash

sync && sudo purge

./bin/sparkR --conf spark.sql.execution.arrow.enabled=true

```

**R code**

```r
rdf <- read.csv("500000.csv")
rdf <- rdf[, c("Month.of.Joining", "Weight.in.Kgs.")]  # We're only interested in the key and values to calculate.
df <- cache(createDataFrame(rdf))
count(df)

test <- function() {
  options(digits.secs = 6) # milliseconds
  start.time <- Sys.time()
  count(gapply(df,
               "Month_of_Joining",
               function(key, group) {
                 data.frame(Month_of_Joining = key[[1]], Weight_in_Kgs_ = mean(group$Weight_in_Kgs_) > group$Weight_in_Kgs_)
               },
               structType("Month_of_Joining integer, Weight_in_Kgs_ boolean")))
  end.time <- Sys.time()
  time.taken <- end.time - start.time
  print(time.taken)
}

test()
```

**Data (350 MB):**

```r

object.size(read.csv("500000.csv"))

350379504 bytes

```

"500000 Records" http://eforexcel.com/wp/downloads-16-sample-csv-files-data-sets-for-testing/

**Results**

```

Time difference of 35.67459 secs

```

```

Time difference of 4.301399 secs

```

The performance improvement was around **829%**.

**Note that** I am 100% sure this PR improves performance by more than 829%: I gave up testing the non-Arrow code path on bigger data because it took far too long.

### Limitations

- For now, Arrow optimization with R is not supported when the data is `raw` or when the user explicitly specifies a float type in the schema, because these cases produce corrupt values.

- Due to ARROW-4512, it cannot send and receive batch by batch. It has to send all batches in Arrow stream format at once. It needs improvement later.

## How was this patch tested?

Unit tests were added

**TODOs:**

- [x] Draft codes

- [x] make the tests pass

- [x] make the CRAN check pass

- [x] Performance measurement

- [x] Supportability investigation (for instance types)

Closes #23746 from HyukjinKwon/SPARK-26759.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

- The benchmark of `XORShiftRandom.nextInt` vis-a-vis `java.util.Random.nextInt` is moved from the `XORShiftRandom` object to `XORShiftRandomBenchmark`.

- Added benchmarks for `nextLong`, `nextDouble` and `nextGaussian` that are used in Spark as well.

- Added a separate benchmark for `XORShiftRandom.hashSeed`.

Closes #23752 from MaxGekk/xorshiftrandom-benchmark.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Delegation token providers interface now has a parameter `fileSystems` but this is needed only for `HadoopFSDelegationTokenProvider`.

In this PR I've addressed this issue in the following way:

* Removed `fileSystems` parameter from `HadoopDelegationTokenProvider`

* Moved `YarnSparkHadoopUtil.hadoopFSsToAccess` into `HadoopFSDelegationTokenProvider`

* Moved `spark.yarn.stagingDir` into core

* Moved `spark.yarn.access.namenodes` into core and renamed to `spark.kerberos.access.namenodes`

* Moved `spark.yarn.access.hadoopFileSystems` into core and renamed to `spark.kerberos.access.hadoopFileSystems`

## How was this patch tested?

Existing unit tests.

Closes #23698 from gaborgsomogyi/SPARK-26766.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

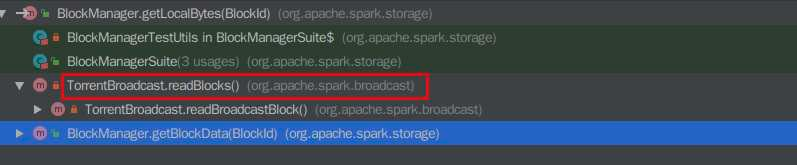

Recently, while reading the code of `BlockManager.getBlockData`, I found some dead code that can never be reached. The related code is shown below:

```
override def getBlockData(blockId: BlockId): ManagedBuffer = {
  if (blockId.isShuffle) {
    shuffleManager.shuffleBlockResolver.getBlockData(blockId.asInstanceOf[ShuffleBlockId])
  } else {
    getLocalBytes(blockId) match {
      case Some(blockData) =>
        new BlockManagerManagedBuffer(blockInfoManager, blockId, blockData, true)
      case None =>
        // If this block manager receives a request for a block that it doesn't have then it's
        // likely that the master has outdated block statuses for this block. Therefore, we send
        // an RPC so that this block is marked as being unavailable from this block manager.
        reportBlockStatus(blockId, BlockStatus.empty)
        throw new BlockNotFoundException(blockId.toString)
    }
  }
}
```

```
def getLocalBytes(blockId: BlockId): Option[BlockData] = {
  logDebug(s"Getting local block $blockId as bytes")
  // As an optimization for map output fetches, if the block is for a shuffle, return it
  // without acquiring a lock; the disk store never deletes (recent) items so this should work
  if (blockId.isShuffle) {
    val shuffleBlockResolver = shuffleManager.shuffleBlockResolver
    // TODO: This should gracefully handle case where local block is not available. Currently
    // downstream code will throw an exception.
    val buf = new ChunkedByteBuffer(
      shuffleBlockResolver.getBlockData(blockId.asInstanceOf[ShuffleBlockId]).nioByteBuffer())
    Some(new ByteBufferBlockData(buf, true))
  } else {
    blockInfoManager.lockForReading(blockId).map { info => doGetLocalBytes(blockId, info) }
  }
}
```

`blockId.isShuffle` is checked twice. However, in the call hierarchy of `BlockManager.getLocalBytes`, the other call site is `TorrentBroadcast.readBlocks`, where the blockId can never be a `ShuffleBlockId`.

So I think we should remove this dead code to make the method easier to read.

## How was this patch tested?

NA

Closes #23693 from liupc/Remove-useless-code-in-BlockManager.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

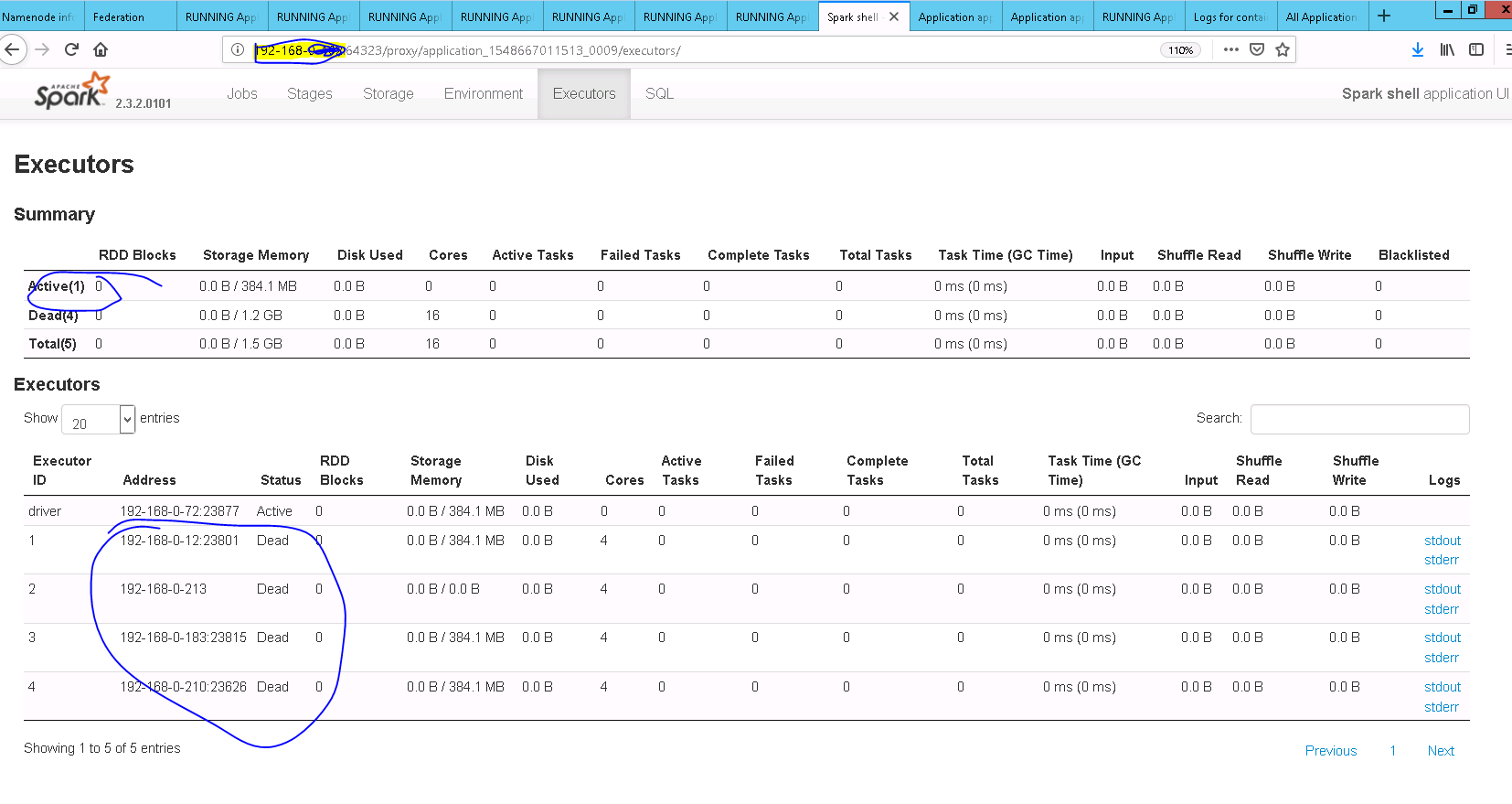

## What changes were proposed in this pull request?

**updateAndSyncNumExecutorsTarget** API should be called after **initializing** flag is unset

## How was this patch tested?

Added UT and also manually tested

After Fix

Closes #23697 from sandeep-katta/executorIssue.

Authored-by: sandeep-katta <sandeep.katta2007@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

When a job has zero partitions, it still gets a job ID but is not shown in the UI, which is confusing. This PR shows such jobs in the UI.

Example:

In bash:

mkdir -p /home/test/testdir

sc.textFile("/home/test/testdir")

Some logs:

```

19/01/24 17:26:19 INFO FileInputFormat: Total input paths to process : 0

19/01/24 17:26:19 INFO SparkContext: Starting job: collect at WordCount.scala:9

19/01/24 17:26:19 INFO DAGScheduler: Job 0 finished: collect at WordCount.scala:9, took 0.003735 s

```

## How was this patch tested?

UT

Closes #23637 from deshanxiao/spark-26714.

Authored-by: xiaodeshan <xiaodeshan@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Make .unpersist(), .destroy() non-blocking by default and adjust callers to request blocking only where important.

This also adds an optional blocking argument to Pyspark's RDD.unpersist(), which never had one.

## How was this patch tested?

Existing tests.

Closes #23685 from srowen/SPARK-26771.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, Spark does not release a `ShuffleBlockFetcherIterator` until the whole task has finished. Under some conditions, this causes a memory leak.

An example is `rdd.repartition(m).coalesce(n, shuffle = false).save`: each `ShuffleBlockFetcherIterator` holds metadata about map statuses (`blocksByAddress`), and each result task keeps up to n (at most the number of shuffle partitions) `ShuffleBlockFetcherIterator` instances. That memory is never released until the task completes, because the iterators are referenced by the completion callbacks of `TaskContext`. In some cases this can consume a huge amount of memory and cause an OOM.

In fact, we can release a `ShuffleBlockFetcherIterator` as soon as it has been consumed.

This PR resolves this problem.
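The general idea of releasing an iterator as soon as it is consumed can be sketched with a small wrapper. This is only an illustration of the technique under simplified assumptions; the names `CompletionIteratorSketch` and `CompletionIterator` as written here are local to the example and do not reproduce the code in the patch.

```scala
object CompletionIteratorSketch {
  // Wraps an iterator and runs `onComplete` exactly once, as soon as the
  // underlying iterator reports that it has no more elements.
  class CompletionIterator[A](private var sub: Iterator[A], onComplete: () => Unit)
      extends Iterator[A] {
    private var completed = false

    override def hasNext: Boolean = {
      val more = sub.hasNext
      if (!more && !completed) {
        completed = true
        onComplete()
        // Drop the reference so the wrapped iterator (and whatever it holds)
        // can be garbage collected before the task ends.
        sub = Iterator.empty
      }
      more
    }

    override def next(): A = sub.next()
  }
}
```

With such a wrapper, cleanup no longer has to wait for a task-level completion callback, and the wrapper itself stops pinning the consumed iterator in memory.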

## How was this patch tested?

unittest

Closes #23438 from liupc/Fast-release-shuffleblockfetcheriterator.

Lead-authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Co-authored-by: liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The amount of memory used by the broadcast variable is not synchronized to the UI display.

I added the case for BroadcastBlockId and updated the memory usage.

## How was this patch tested?

We can test this patch with unit tests.

Closes #23649 from httfighter/SPARK-26726.

Lead-authored-by: 韩田田00222924 <han.tiantian@zte.com.cn>

Co-authored-by: han.tiantian@zte.com.cn <han.tiantian@zte.com.cn>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This patch proposes adding a new configuration to the SHS: a custom executor log URL pattern. This lets end users point executor log links to something other than what the resource manager provides, such as an external log service, which makes it possible to serve executor logs even when the NodeManager is unavailable (in the case of YARN).

End users can build their own custom executor log URLs from pre-defined patterns, which vary by resource manager. This patch adds some patterns for the YARN resource manager. (For the others, no executor log URL is available at all, so no patterns can be defined.)

Please refer to the doc change as well as the UTs added in this patch to see how to set up the feature.
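To give a feel for how a pattern-based log URL could be expanded, here is a small sketch. It is not the implementation in this patch: the `{{NAME}}` placeholder syntax, the attribute names `CONTAINER_ID` and `FILE_NAME`, and the host `logs.example.com` are assumptions used only for illustration; the doc change lists the patterns that are actually supported.

```scala
object LogUrlPatternSketch {
  // Replace each `{{NAME}}` placeholder with the matching attribute value.
  // Placeholders with no matching attribute are left untouched.
  def render(pattern: String, attributes: Map[String, String]): String =
    attributes.foldLeft(pattern) { case (url, (name, value)) =>
      url.replace(s"{{$name}}", value)
    }
}
```

For example, rendering `http://logs.example.com/{{CONTAINER_ID}}/{{FILE_NAME}}` with attributes for a container and a file name yields one concrete URL per log file.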

## How was this patch tested?

Added UT, as well as manual test with YARN cluster

Closes #23260 from HeartSaVioR/SPARK-26311.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

This fix replaces the Threshold with a Filter for ConsoleAppender which checks

to ensure that either the logLevel is greater than thresholdLevel (shell log

level) or the log originated from a custom defined logger. In these cases, it

lets a log event go through, otherwise it doesn't.
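The decision described above can be modeled in a few lines. This sketch deliberately avoids the log4j API: the integer levels, the object name `ConsoleFilterSketch`, and the reading of "greater than" as "at least as severe as" are all assumptions made for the example.

```scala
object ConsoleFilterSketch {
  // Simplified severity levels (assumption; the real logging framework has more).
  val Debug = 0
  val Info = 1
  val Warn = 2
  val Error = 3

  // Decide whether the console appender should print an event.
  // `fromCustomLogger` models "the log originated from a custom defined logger".
  def accept(eventLevel: Int, thresholdLevel: Int, fromCustomLogger: Boolean): Boolean =
    eventLevel >= thresholdLevel || fromCustomLogger
}
```

The second condition is what lets a logger with an explicitly configured level keep printing below the shell threshold.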

1. Ensured that custom log level works when set by default (via log4j.properties)

2. Ensured that logs are not printed twice when log level is changed by setLogLevel

3. Ensured that custom logs are printed when log level is changed back by setLogLevel

Closes #23675 from ankuriitg/ankurgupta/SPARK-26753.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

This avoids trying to get delegation tokens when a TGT is not available, e.g.

when running in yarn-cluster mode without a keytab. That would result in an

error since that is not allowed.

Tested with some (internal) integration tests that started failing with the

patch for SPARK-25689.

Closes #23689 from vanzin/SPARK-25689.followup.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

Otherwise the RDD data may be out of date by the time the test tries to check it.

Tested with an artificial delay inserted in AppStatusListener.

Closes #23654 from vanzin/SPARK-26732.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

This change adds a new mode for credential renewal that does not require

a keytab; it uses the local ticket cache instead, so it works while the

user keeps the cache valid.

This can be useful for, e.g., people running long spark-shell sessions where

their kerberos login is kept up-to-date.

The main change to enable this behavior is in HadoopDelegationTokenManager,

with a small change in the HDFS token provider. The other changes are to avoid

creating duplicate tokens when submitting the application to YARN; they allow

the tokens from the scheduler to be sent to the YARN AM, reducing the round trips

to HDFS.

For that, the scheduler initialization code was changed a little bit so that

the tokens are available when the YARN client is initialized. That basically

takes care of a long-standing TODO that was in the code to clean up configuration

propagation to the driver's RPC endpoint (in CoarseGrainedSchedulerBackend).

Tested with an app designed to stress this functionality, with both keytab and

cache-based logins. Some basic kerberos tests on k8s also.

Closes #23525 from vanzin/SPARK-26595.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

While obtaining a token from the HBase service, Spark uses a deprecated HBase API:

```public static Token<AuthenticationTokenIdentifier> obtainToken(Configuration conf)```

This deprecated API has already been removed in HBase 2.x as part of the 2.x major release: https://issues.apache.org/jira/browse/HBASE-14713

There is another, more stable API:

```public static Token<AuthenticationTokenIdentifier> obtainToken(Connection conn)``` in TokenUtil class

Spark should use this stable API to get the delegation token.

To invoke this API, a connection object must first be retrieved from `ConnectionFactory`; the same connection can then be passed to `obtainToken(Connection conn)` to get the token.

eg: Call ```public static Connection createConnection(Configuration conf)```

, then call ```public static Token<AuthenticationTokenIdentifier> obtainToken( Connection conn)```.

## How was this patch tested?

Manual testing has been done.

Manual test result:

Before fix:

After fix:

1. Create 2 tables in hbase shell

>Launch hbase shell

>Enter commands to create tables and load data

create 'table1','cf'

put 'table1','row1','cf:cid','20'

create 'table2','cf'

put 'table2','row1','cf:cid','30'

>Show values command

get 'table1','row1','cf:cid' will display the value 20

get 'table2','row1','cf:cid' will display the value 30

2. Run SparkHbasetoHbase class in testSpark.jar using spark-submit

spark-submit --master yarn-cluster --class com.mrs.example.spark.SparkHbasetoHbase --conf "spark.yarn.security.credentials.hbase.enabled"="true" --conf "spark.security.credentials.hbase.enabled"="true" --keytab /opt/client/user.keytab --principal sen testSpark.jar

The SparkHbasetoHbase test class updates the value of table2 with the sum of the values of table1 and table2.

table2 = table1+table2

As the snapshot shows, the Spark job was able to interact with the HBase service and update the row count.

Closes #23429 from sujith71955/master_hbase_service.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Manually release the stdin writer and stderr reader threads when a task finishes. This commit also marks

ShuffleBlockFetcherIterator as fully consumed if isZombie is set.

## How was this patch tested?

Added new test

Closes #23638 from advancedxy/SPARK-26713.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This is a followup of #16989.

The fetch-big-block-to-disk feature is disabled by default because it is not compatible with the external shuffle service prior to Spark 2.2. The client sends a stream request to fetch block chunks, and the old shuffle service can't support it.

Two years later, Spark 2.2 has reached EOL, so it is now safe to turn this feature on by default.

## How was this patch tested?

existing tests

Closes #23625 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Adjust mem settings in UnifiedMemoryManager used in test suites to have execution memory > 0

Ref: https://github.com/apache/spark/pull/23457#issuecomment-457409976

## How was this patch tested?

Existing tests

Closes #23645 from srowen/SPARK-26725.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR changes the hardcoded Spark memory and storage configs to use `ConfigEntry` and puts them in the config package.
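
As a rough illustration of why a typed entry is preferable to a hardcoded string key, here is a minimal Java sketch. It is not Spark's `ConfigEntry` (which is a Scala class with a richer builder API); the `Entry` class and its methods are hypothetical stand-ins. The key `spark.memory.fraction` and its default of 0.6 are used only as a familiar example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Simplified, hypothetical stand-in for a typed config entry. */
final class ConfigEntrySketch {

    /** Bundles the key, the default value, and the parser in one place. */
    static final class Entry<T> {
        final String key;
        final T defaultValue;
        final Function<String, T> parser;

        Entry(String key, T defaultValue, Function<String, T> parser) {
            this.key = key;
            this.defaultValue = defaultValue;
            this.parser = parser;
        }

        /** Returns the parsed value if the key is set, else the default. */
        T readFrom(Map<String, String> conf) {
            String raw = conf.get(key);
            return raw == null ? defaultValue : parser.apply(raw);
        }
    }

    // Declared once; call sites no longer repeat the key string or default.
    static final Entry<Double> MEMORY_FRACTION =
        new Entry<>("spark.memory.fraction", 0.6, Double::parseDouble);

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        System.out.println(MEMORY_FRACTION.readFrom(conf)); // default applies
        conf.put("spark.memory.fraction", "0.75");
        System.out.println(MEMORY_FRACTION.readFrom(conf)); // explicit value
    }
}
```

With this shape, a typo in the key or a mismatched default can only happen in one declaration rather than at every call site.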

## How was this patch tested?

Existing unit tests.

Closes #23623 from SongYadong/configEntry_for_mem_storage.

Authored-by: SongYadong <song.yadong1@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

There are ugly provided dependencies inside core for the following:

* Hive

* Kafka

In this PR I've extracted them out. This PR contains the following:

* Token providers are now loaded with service loader

* Hive token provider moved to hive project

* Kafka token provider extracted into a new project

## How was this patch tested?

Existing + newly added unit tests.

Additionally tested on cluster.

Closes #23499 from gaborgsomogyi/SPARK-26254.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

SPARK-21568 made a change to ensure that the progress bar is enabled for spark-shell

by default but not for other apps. Before that change, the two cases were distinguished

by log level, which is not a reliable signal because users can change

the default log level. That commit changed how Spark determines whether the current

app is running in spark-shell, but it left the log-level check in place,

which causes this regression: SPARK-25118 changed the default log level to INFO

for spark-shell, so the progress bar is no longer enabled.

This commit removes the log-level check for enabling the progress bar in

spark-shell, as it is not necessary and seems to be a leftover from SPARK-21568.

## How was this patch tested?

1. Ensured that progress bar is enabled with spark-shell by default

2. Ensured that progress bar is not enabled with spark-submit

Closes #23618 from ankuriitg/ankurgupta/SPARK-26694.

Authored-by: ankurgupta <ankur.gupta@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

To help debug failed or slow tasks, it's really useful to know the

size of the blocks getting fetched. Though that is available at the

debug level, debug logs aren't on in general -- but there is already an

info level log line that this augments a little.

## How was this patch tested?

Ran very basic local-cluster mode app, looked at logs. Example line:

```

INFO ShuffleBlockFetcherIterator: Getting 2 (194.0 B) non-empty blocks including 1 (97.0 B) local blocks and 1 (97.0 B) remote blocks

```

Full suite via jenkins.

Closes #23621 from squito/SPARK-26697.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Currently, heartbeat-related arguments are not validated in Spark, so if these arguments are improperly specified, the application may run for a while and not fail until the maximum number of executor failures is reached (especially with spark.dynamicAllocation.enabled=true), which can waste resources.

This PR prechecks these arguments in HeartbeatReceiver to fix this problem.
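
The description does not list the exact checks, so the following Java sketch only illustrates the general idea of failing fast on inconsistent heartbeat settings. The class, method names, and the two rules (positive interval, interval shorter than the timeout) are assumptions for illustration, not the code in HeartbeatReceiver.

```java
/** Illustrative only; names and rules are assumptions, not Spark's code. */
final class HeartbeatArgsSketch {

    // Rejects settings that cannot work: a non-positive interval, or an
    // interval at least as long as the timeout after which an executor
    // is considered lost.
    static void validate(long heartbeatIntervalMs, long executorTimeoutMs) {
        if (heartbeatIntervalMs <= 0) {
            throw new IllegalArgumentException(
                "heartbeat interval must be positive, got "
                    + heartbeatIntervalMs + " ms");
        }
        if (heartbeatIntervalMs >= executorTimeoutMs) {
            throw new IllegalArgumentException(
                "heartbeat interval (" + heartbeatIntervalMs
                    + " ms) must be shorter than the executor timeout ("
                    + executorTimeoutMs + " ms)");
        }
    }

    // Convenience wrapper that reports the outcome as a boolean.
    static boolean isValid(long heartbeatIntervalMs, long executorTimeoutMs) {
        try {
            validate(heartbeatIntervalMs, executorTimeoutMs);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(isValid(10_000L, 120_000L));
        System.out.println(isValid(120_000L, 10_000L));
    }
}
```

Checking at startup means a bad combination is reported immediately instead of surfacing later as repeated executor failures.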

## How was this patch tested?

N/A, just validation changes.

Closes #23445 from liupc/validate-heartbeat-arguments-in-SparkSubmitArguments.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Currently, some ML libraries may generate a large ML model that is referenced in the task closure, so the driver broadcasts a large task binary, and executors may fail to deserialize it and hit OOM failures (for instance, when executor memory is insufficient). This problem affects not only apps using ML libraries; user-specified closures or functions that reference large data may have the same problem.

To make it easier to debug memory problems caused by a large taskBinary broadcast, we can add some warning logs for it.

This PR adds warning logs on the driver side when a large task binary is broadcast, and it also includes some minor log changes in the reading of broadcasts.
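
A minimal Java sketch of the kind of check described: serialize the task payload, compare its size against a threshold, and warn when it is exceeded. The class name, the threshold value, and the message text are assumptions chosen for illustration; they are not taken from the patch.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

/** Illustrative only; threshold and message are assumptions. */
final class TaskBinarySizeSketch {

    // Hypothetical warning threshold, roughly 1 MB.
    static final long WARN_THRESHOLD_BYTES = 1000L * 1024;

    // Serializes a value with plain Java serialization.
    static byte[] serialize(Serializable value) {
        try (ByteArrayOutputStream bytes = new ByteArrayOutputStream();
             ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
            out.flush();
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Logs a warning and returns true when the binary exceeds the threshold.
    static boolean warnIfLarge(byte[] taskBinary) {
        if (taskBinary.length > WARN_THRESHOLD_BYTES) {
            System.err.println("WARN broadcasting large task binary with size "
                + (taskBinary.length / 1024) + " KiB");
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        // A closure that captures a 2 MiB array stands in for a large model.
        System.out.println(warnIfLarge(serialize(new byte[2 * 1024 * 1024])));
        System.out.println(warnIfLarge(serialize("small closure")));
    }
}
```

Logging on the driver side gives the user a hint about the cause before an executor fails while deserializing the broadcast.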

## How was this patch tested?

N/A, just log changes.

Closes #23580 from liupc/Add-warning-logs-for-large-taskBinary-size.

Authored-by: Liupengcheng <liupengcheng@xiaomi.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Followup of #20091. We could also use existing partitioner when defaultNumPartitions is equal to the maxPartitioner's numPartitions.
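
The condition can be sketched as a single comparison. This Java snippet covers only the size comparison mentioned above and assumes the earlier behavior used a strict less-than; the class and method names are hypothetical, and any other checks from #20091 are deliberately left out.

```java
/** Illustrative only; covers just the partition-count comparison. */
final class PartitionerReuseSketch {

    // `<=` rather than `<`: the equal case is the one this follow-up adds
    // (assumed from the description, not copied from the patch).
    static boolean canReuse(int defaultNumPartitions,
                            int maxPartitionerNumPartitions) {
        return defaultNumPartitions <= maxPartitionerNumPartitions;
    }

    public static void main(String[] args) {
        System.out.println(canReuse(8, 8));
        System.out.println(canReuse(16, 8));
    }
}
```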

## How was this patch tested?

Existing tests.

Closes #23581 from Ngone51/dev-use-existing-partitioner-when-defaultNumPartitions-equalTo-MaxPartitioner#-numPartitions.

Authored-by: Ngone51 <ngone_5451@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`ByteBuffer.allocate` may throw `OutOfMemoryError` when the block is large but not enough memory is available. However, when this happens, `BlockTransferService.fetchBlockSync` currently hangs forever because its `BlockFetchingListener.onBlockFetchSuccess` doesn't complete the `Promise`.

This PR catches `Throwable` and uses the error to complete `Promise`.

## How was this patch tested?

Added a unit test. Since I cannot make `ByteBuffer.allocate` throw `OutOfMemoryError`, I passed a negative size to make `ByteBuffer.allocate` fail. Although the error type is different, it should trigger the same code path.
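
The pattern, and the negative-size trick used in the test, can be sketched in plain Java. This is not the Spark code: `CompletableFuture` stands in for the Scala `Promise`, and `FetchSketch` and `onFetchSuccess` are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.util.concurrent.CompletableFuture;

/** Hypothetical stand-in for a fetch callback; not Spark's classes. */
final class FetchSketch {

    // Allocates a buffer of `size` bytes and completes the future with it.
    // Any Throwable raised during allocation is routed into the future, so
    // a caller blocked on it is released instead of waiting forever.
    static CompletableFuture<ByteBuffer> onFetchSuccess(int size) {
        CompletableFuture<ByteBuffer> result = new CompletableFuture<>();
        try {
            ByteBuffer buffer = ByteBuffer.allocate(size); // may throw
            result.complete(buffer);
        } catch (Throwable t) {
            result.completeExceptionally(t);
        }
        return result;
    }

    public static void main(String[] args) {
        // Normal case: the future completes with a buffer.
        System.out.println(onFetchSuccess(16).join().capacity());
        // A negative size makes ByteBuffer.allocate throw
        // IllegalArgumentException, exercising the same catch path.
        System.out.println(onFetchSuccess(-1).isCompletedExceptionally());
    }
}
```

Without the `catch`, the exception would escape the callback and the future would never complete, which is the hang described above.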

Closes #23590 from zsxwing/SPARK-26665.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

This PR changes the hardcoded `spark.dynamicAllocation`, `spark.scheduler`, `spark.rpc`, `spark.task`, `spark.speculation`, and `spark.cleaner` configs to use `ConfigEntry`.

## How was this patch tested?

Existing tests

Closes #23416 from kiszk/SPARK-26463.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to extend the spark-submit option --num-executors to be applicable to Spark on K8S too. It is motivated by convenience, for example when migrating jobs written for YARN to run on K8S.

## How was this patch tested?

Manually tested on a K8S cluster.

Author: Luca Canali <luca.canali@cern.ch>

Closes #23573 from LucaCanali/addNumExecutorsToK8s.

## What changes were proposed in this pull request?

This PR changes the hardcoded `spark.unsafe` configs to use `ConfigEntry` and puts them in the `config` package.

## How was this patch tested?

Existing UTs

Closes #23412 from kiszk/SPARK-26477.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Misc code cleanup from lgtm.com analysis. See comments below for details.

## How was this patch tested?

Existing tests.

Closes #23571 from srowen/SPARK-26640.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Co-authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>