### What changes were proposed in this pull request?

Revert #27360, #27396, #27374 and #27389.

### Why are the changes needed?

BLAS needs more performance tests, especially on sparse datasets.

A performance test of LogisticRegression (https://github.com/apache/spark/pull/27374) on a sparse dataset shows that blockifying vectors into matrices and using BLAS causes a performance regression.

LinearSVC and LinearRegression were updated in the same way as LogisticRegression, so we need to revert them as well to make sure there is no regression.

### Does this PR introduce any user-facing change?

Removes the newly added param `blockSize`.

### How was this patch tested?

reverted testsuites

Closes #27487 from zhengruifeng/revert_blockify_ii.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Add a new config `spark.network.maxRemoteBlockSizeFetchToMem` that falls back to the old config `spark.maxRemoteBlockSizeFetchToMem`.
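A minimal sketch of what such a fallback means for a lookup. This is not Spark's config machinery: `FallbackConf` and `lookup` are hypothetical names, and only the two config keys come from the description above.

```scala
object FallbackConf {
  val NewKey = "spark.network.maxRemoteBlockSizeFetchToMem"
  val OldKey = "spark.maxRemoteBlockSizeFetchToMem"

  // Prefer the new key; fall back to the old one when the new key is unset.
  def lookup(settings: Map[String, String]): Option[String] =
    settings.get(NewKey).orElse(settings.get(OldKey))
}
```

With this shape, jobs that still set only the old key keep their value, while the new key wins when both are set.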

### Why are the changes needed?

For naming consistency.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27463 from xuanyuanking/SPARK-26700-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Eagerly filter out zombie `TaskSetManager`s before offering resources, to reduce overhead as much as possible.

This PR also avoids calling `recomputeLocality` and `addPendingTask` when the `TaskSetManager` is a zombie.
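The eager filtering can be pictured with a small sketch. `TaskSet` is a hypothetical stand-in for `TaskSetManager` that models only the zombie flag; it is not the scheduler's actual code.

```scala
object ZombieFilter {
  // Hypothetical stand-in for a TaskSetManager; only the zombie flag matters here.
  final case class TaskSet(name: String, isZombie: Boolean)

  // Drop zombie task sets up front so no resource offer is made to them.
  def schedulable(queue: Seq[TaskSet]): Seq[TaskSet] =
    queue.filterNot(_.isZombie)
}
```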

### Why are the changes needed?

Zombie `TaskSetManager` could still exist in Pool's `schedulableQueue` when it has running tasks. Offering resources on a zombie `TaskSetManager` could bring unnecessary overhead and is meaningless.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes #27455 from Ngone51/exclude-zombie-tsm.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

If `updateBlockInfo` returns false, the `BlockManager` will re-register and report all blocks later. So we may report the same block twice, which causes `AppStatusListener` to count its used memory twice as well. As a result, the used memory can exceed the total memory.

So, this PR changes it to not post `SparkListenerBlockUpdated` when `updateBlockInfo` returns false. And, always clean up used memory whenever `AppStatusListener` receives `SparkListenerBlockManagerAdded`.
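A rough sketch of the two rules, under the assumption that used memory is tracked per block manager in a simple map. `BlockUpdateSketch` and its method names are hypothetical and do not mirror `AppStatusListener`.

```scala
import scala.collection.mutable

object BlockUpdateSketch {
  // Hypothetical listener state: used memory per block manager.
  val usedMemory = mutable.Map.empty[String, Long].withDefaultValue(0L)

  // Count the update only when it was accepted; a rejected update means the
  // block manager will re-register and report the block again later.
  def onBlockUpdate(bm: String, size: Long, accepted: Boolean): Unit =
    if (accepted) usedMemory(bm) += size

  // A (re-)registration resets the counter so re-reported blocks are not counted twice.
  def onBlockManagerAdded(bm: String): Unit = usedMemory(bm) = 0L
}
```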

### Why are the changes needed?

This PR tries to fix negative memory usage in UI (https://user-images.githubusercontent.com/3488126/72131225-95e37e00-33b6-11ea-8708-6e5ed328d1ca.png, see #27144 ). Though, I'm not very sure this is the root cause for #27144 since known information is limited here.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added new tests by xuanyuanking

Closes #27306 from Ngone51/fix-possible-negative-memory.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: wuyi <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This change makes resource discovery for custom resource scheduling (GPUs, FPGAs, etc.) more flexible. Users are asking for it to work with Hadoop 2.x versions that do not support resource scheduling in YARN, and they may also not run in an isolated environment.

This change creates a plugin API that lets users write their own resource discovery class, which allows a lot more flexibility. The user can chain plugins for different resource types. The user-specified plugins execute in the order specified and fall back to the discovery script plugin if they don't return information for a particular resource.
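The chaining and fallback order can be sketched as follows. The `Plugin` trait and `discover` signature here are hypothetical and much simpler than the real plugin API; they only illustrate "first plugin that answers wins, otherwise use the fallback".

```scala
object DiscoveryChain {
  // Hypothetical plugin shape: return addresses for a resource, or None to defer.
  trait Plugin {
    def discover(resource: String): Option[Seq[String]]
  }

  // Try plugins in the configured order; use the fallback when none answers.
  def discover(plugins: Seq[Plugin], fallback: Plugin, resource: String): Option[Seq[String]] =
    plugins.iterator
      .map(_.discover(resource))
      .collectFirst { case Some(addrs) => addrs }
      .orElse(fallback.discover(resource))
}
```

Because the plugins are walked through an iterator, later plugins are not consulted once an earlier one has returned information.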

I had to open up a few of the classes to be public, change them to not be case classes, and make them developer API so that the plugin gets the information it needs.

I also relaxed the yarn side so that if yarn isn't configured for resource scheduling we just warn and go on. This helps users that have yarn 3.1 but haven't configured the resource scheduling side on their cluster yet, or aren't running in isolated environment.

The user would configure this like:

--conf spark.resources.discovery.plugin="org.apache.spark.resource.ResourceDiscoveryFPGAPlugin, org.apache.spark.resource.ResourceDiscoveryGPUPlugin"

Note the executor side had to be wrapped with a classloader to make sure we include the user classpath for jars they specified on submission.

Note this is more flexible because the discovery script has limitations, such as being spawned in a separate process. This means that if you try to allocate resources in that process, they might be released when the script returns. A class also makes it easier to integrate with existing systems and solutions for assigning resources.

### Why are the changes needed?

To more easily use Spark resource scheduling with older versions of Hadoop or in non-isolated environments.

### Does this PR introduce any user-facing change?

Yes a plugin api

### How was this patch tested?

Unit tests added and manual testing done on yarn and standalone modes.

Closes #27410 from tgravescs/hadoop27spark3.

Lead-authored-by: Thomas Graves <tgraves@nvidia.com>

Co-authored-by: Thomas Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

- Replace `new Integer(0)` by a serializable instance in RDD.scala

- Use `.valueOf()` instead of the constructors of `java.lang.Integer` and `java.lang.Double` because the constructors have been deprecated, see https://docs.oracle.com/javase/9/docs/api/java/lang/Integer.html
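For illustration, the replacement pattern looks like this. `BoxedValues` is just a hypothetical holder object; the `valueOf` factory methods are the standard JDK ones.

```scala
object BoxedValues {
  // valueOf is the non-deprecated way to obtain boxed values
  // (the constructors are deprecated as of Java 9).
  val zero: java.lang.Integer = java.lang.Integer.valueOf(0)
  val half: java.lang.Double = java.lang.Double.valueOf(0.5)
}
```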

### Why are the changes needed?

This fixes the following warnings:

1. RDD.scala:240: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

2. MutableProjectionSuite.scala:63: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

3. UDFSuite.scala:446: constructor Integer in class Integer is deprecated: see corresponding Javadoc for more information.

4. UDFSuite.scala:451: constructor Double in class Double is deprecated: see corresponding Javadoc for more information.

5. HiveUserDefinedTypeSuite.scala:71: constructor Double in class Double is deprecated: see corresponding Javadoc for more information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- By RDDSuite, MutableProjectionSuite, UDFSuite and HiveUserDefinedTypeSuite

Closes #27399 from MaxGekk/eliminate-warning-part4.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Add a section to the Configuration page to document configurations for executor metrics.

At the same time, rename spark.eventLog.logStageExecutorProcessTreeMetrics.enabled to spark.executor.processTreeMetrics.enabled and make it independent of spark.eventLog.logStageExecutorMetrics.enabled.

### Why are the changes needed?

Executor metrics are new in Spark 3.0. They lack documentation.

Memory metrics as a whole are always collected, but the ones obtained from the process tree have to be optionally enabled. Making this depend on a single configuration makes for more intuitive behavior. Given this, the configuration property is renamed to better reflect its meaning.

### Does this PR introduce any user-facing change?

Yes, only in that the configurations are all new to 3.0.

### How was this patch tested?

Not necessary.

Closes #27329 from wypoon/SPARK-27324.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

### What changes were proposed in this pull request?

This PR reflects review comments on post-hoc review among PRs for SPARK-29779 (#27085), SPARK-30479 (#27164). The list of review comments this PR addresses are below:

* https://github.com/apache/spark/pull/27085#discussion_r373304218

* https://github.com/apache/spark/pull/27164#discussion_r373300793

* https://github.com/apache/spark/pull/27164#discussion_r373301193

* https://github.com/apache/spark/pull/27164#discussion_r373301351

I also applied review comments to the CORE module (BasicEventFilterBuilder.scala) as well, as the review comments for SQL/core module (SQLEventFilterBuilder.scala) can be applied there as well.

### Why are the changes needed?

There are post-hoc reviews on the PRs for these issues; see the links in the section above.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27414 from HeartSaVioR/SPARK-28869-SPARK-29779-SPARK-30479-FOLLOWUP-posthoc-reviews.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Followup from https://github.com/apache/spark/pull/27367 to fix a couple of my minor issues with the Test. Fix an indentation and then use UTF-8 instead of ASCII.

### Why are the changes needed?

followup

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

compiled and ran unit test

Closes #27420 from tgravescs/SPARK-30638-followup.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, when speculative tasks fail or get killed, they are still considered pending and count towards the calculation of the number of needed executors. To be more accurate: `stageAttemptToNumSpeculativeTasks(stageAttempt)` is incremented in `onSpeculativeTaskSubmitted` but never decremented; `stageAttemptToNumSpeculativeTasks -= stageAttempt` is only performed on stage completion. **This means Spark is marking ended speculative tasks as pending, which leads Spark to hold more executors than it actually needs!**

This PR fixes this issue by updating `stageAttemptToSpeculativeTaskIndices` and `stageAttemptToNumSpeculativeTasks` on speculative tasks completion. This PR also addresses some other minor issues: scheduler behavior after receiving an intentionally killed task event; try to address [SPARK-28403](https://issues.apache.org/jira/browse/SPARK-28403).
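The bookkeeping idea can be sketched in isolation. This is a simplified, hypothetical model (`SpeculationCounter`, a `(stageId, attemptId)` tuple key), not the allocation manager's code; it only shows a counter that is decremented when a speculative task ends rather than only cleared at stage completion.

```scala
import scala.collection.mutable

object SpeculationCounter {
  // Hypothetical key: (stageId, attemptId) -> number of pending speculative tasks.
  val pending = mutable.Map.empty[(Int, Int), Int].withDefaultValue(0)

  def onSpeculativeTaskSubmitted(stage: (Int, Int)): Unit = pending(stage) += 1

  // The previously missing step: a finished, failed, or killed speculative
  // task no longer counts as pending.
  def onSpeculativeTaskEnd(stage: (Int, Int)): Unit =
    if (pending(stage) > 0) pending(stage) -= 1

  def onStageCompleted(stage: (Int, Int)): Unit = pending -= stage
}
```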

### Why are the changes needed?

This has caused resource wastage in our production with speculation enabled. With aggressive speculation, we found data skewed jobs can hold hundreds of idle executors with less than 10 tasks running.

An easy repro of the issue (`--conf spark.speculation=true --conf spark.executor.cores=4 --conf spark.dynamicAllocation.maxExecutors=1000` in cluster mode):

```
val n = 4000
val someRDD = sc.parallelize(1 to n, n)
someRDD.mapPartitionsWithIndex( (index: Int, it: Iterator[Int]) => {
  if (index < 300 && index >= 150) {
    Thread.sleep(index * 1000) // Fake running tasks
  } else if (index == 300) {
    Thread.sleep(1000 * 1000) // Fake long running tasks
  }
  it.toList.map(x => index + ", " + x).iterator
}).collect
```

You will see that when running the last task, we hold 38 executors (see below), which is exactly (152 + 3) / 4 = 38.
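The arithmetic reads as a round-up integer division. A small sketch, assuming 152 is the number of tasks still counted as running or pending and 4 is the number of tasks per executor implied by `spark.executor.cores=4`; `ExecutorsNeeded` is a hypothetical helper name.

```scala
object ExecutorsNeeded {
  // Round up: tasks that do not fill a whole executor still need one.
  def apply(runningOrPendingTasks: Int, tasksPerExecutor: Int): Int =
    (runningOrPendingTasks + tasksPerExecutor - 1) / tasksPerExecutor
}
```

`ExecutorsNeeded(152, 4)` evaluates `(152 + 3) / 4` with integer division, which gives 38.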

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a comprehensive unit test.

Test with the above repro shows that we are holding 2 executors at the end

Closes #27223 from linzebing/speculation_fix.

Authored-by: zebingl@fb.com <zebingl@fb.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Add the allocated resources to the `PluginContext` so that any plugin on the driver or executor can use this information to initialize devices or otherwise make use of it.

### Why are the changes needed?

To allow users to initialize/track devices once at the executor level before each task runs to use them.

### Does this PR introduce any user-facing change?

Yes to the people using the Executor/Driver plugin interface.

### How was this patch tested?

Unit tests, and manually by writing a plugin that initialized GPUs using this interface.

Closes #27367 from tgravescs/pluginWithResources.

Lead-authored-by: Thomas Graves <tgraves@nvidia.com>

Co-authored-by: Thomas Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR fixes a couple of minor issues on SPARK-30481:

* SHS runs "compaction" regardless of the result of "merge application listing".

If "merge application listing" fails, most likely the application log will have some issue and "compaction" won't work properly then. We can just skip trying compaction when "merge application listing" fails.

* When "compaction" throws exception we don't handle it.

It's expected to swallow exception, but we don't even log the exception for now. It should be logged properly.
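Both fixes fit in one small control-flow sketch. The function parameters `merge`, `compact`, and `log` are hypothetical hooks standing in for the real steps and the logger; this is not the history server's code.

```scala
import scala.util.control.NonFatal

object CompactionSketch {
  // Returns true only when compaction actually ran without error.
  def process(merge: () => Boolean, compact: () => Unit, log: String => Unit): Boolean =
    if (!merge()) {
      false // merging the application listing failed, so skip compaction
    } else {
      try { compact(); true }
      catch {
        case NonFatal(e) =>
          log(s"Compaction failed: ${e.getMessage}") // swallow, but log it
          false
      }
    }
}
```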

### Why are the changes needed?

Described in above section.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #27408 from HeartSaVioR/SPARK-30481-FOLLOWUP-MINOR-FIXES.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

…

### What changes were proposed in this pull request?

If resource discovery goes bad, for example it doesn't return enough GPUs, it currently just throws an exception. This is hard for users to see because you have to go find the executor logs, and it's not reported back to the driver. On YARN, if you exit explicitly, the driver logs show the error thrown, which is much more useful. On YARN, an explicit exit with a non-zero code also counts against failed executor launch attempts, and the application will eventually exit, so if it's fundamentally a bad configuration or a bad discovery script it won't just hang forever. I also tested on k8s and standalone and the behaviors there don't change: the executors cleanly exit with an error message in the logs. The standalone UI makes it easy to see failed executors.

### Why are the changes needed?

better user experience.

### Does this PR introduce any user-facing change?

no api changes

### How was this patch tested?

ran unit tests and manually tested on yarn, standalone, and k8s.

Closes #27385 from tgravescs/SPARK-30529.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

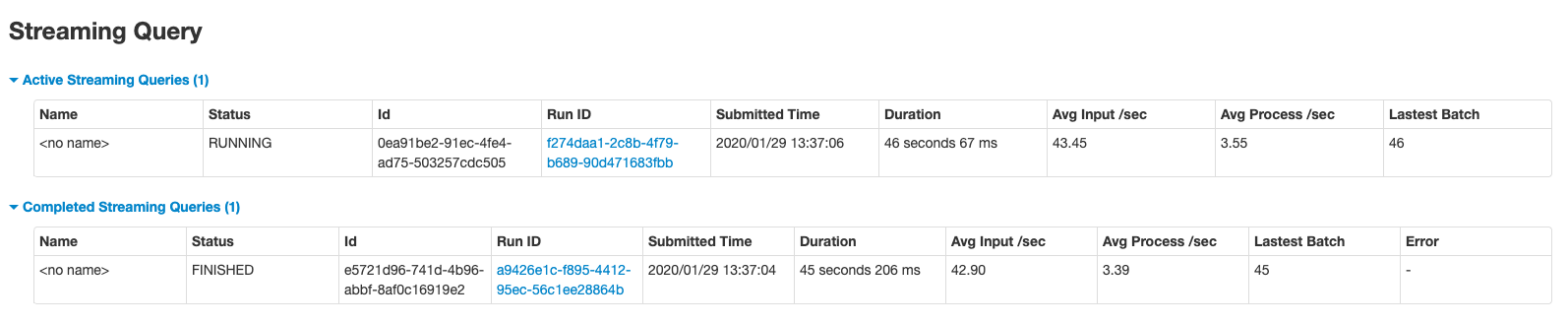

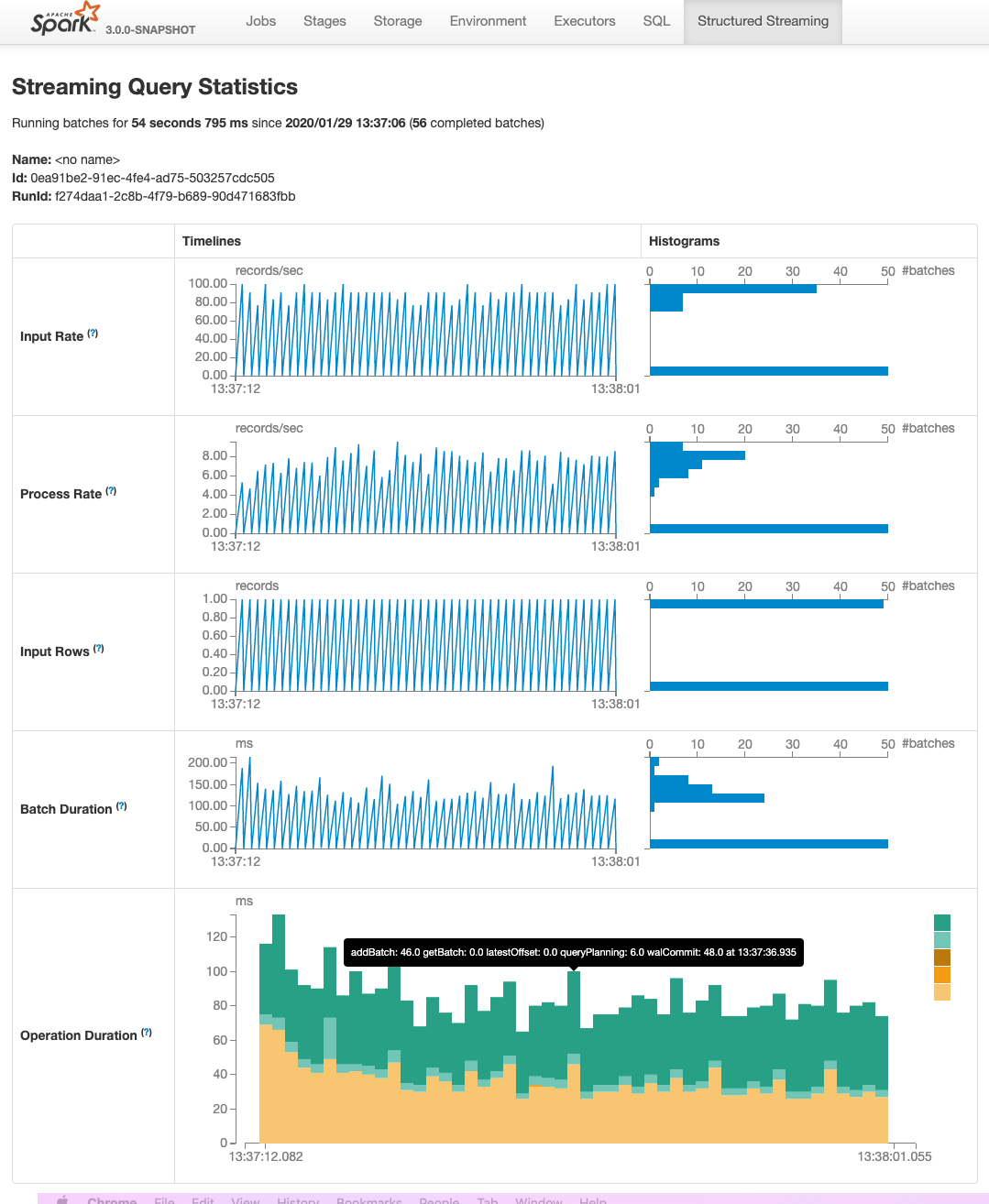

This PR adds two pages to Web UI for Structured Streaming:

- "/streamingquery": Streaming Query Page, providing some aggregate information for running/completed streaming queries.

- "/streamingquery/statistics": Streaming Query Statistics Page, providing detailed information for streaming query, including `Input Rate`, `Process Rate`, `Input Rows`, `Batch Duration` and `Operation Duration`

### Why are the changes needed?

It helps users to better monitor Structured Streaming query.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- newly added and existing UTs

- manual test

Closes #26201 from uncleGen/SPARK-29543.

Lead-authored-by: uncleGen <hustyugm@gmail.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Genmao Yu <hustyugm@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

There are scenarios where the Spark History Server is located behind a VPC. When the API call to get the executor summary (allexecutors) is made, the response can be delayed; in the meantime "stage-page-template.html" is loaded, and the executor summary response is not added to stage-page-template.html.

As a result, Aggregated Metrics by Executor on the stage page is blank.

This scenario is easily hit when a proxy server is responsible for relaying requests and responses to the Spark History Server.

It can be reproduced with Knox or in-house proxy servers that relay requests and responses to the Spark History Server.

An alternative way to test this case: open the Spark UI in the browser's developer mode and add a breakpoint in stagepage.js. This delays the response, and Aggregated Metrics by Executor on the stage page then shows blank.

To fix this, stagepage.js needs to change: first make the API call to get the HTML page (stage-page-template.html), and only after that make the other API calls for the data that needs to be attached to the stage page (such as executor summary, stageExecutorSummaryInfoKeys, etc.).

### Why are the changes needed?

The stage page is useful for debugging: it helps in understanding how many tasks ran on a particular executor, along with information about shuffle read and write on that executor.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested. Testing this in a reproducible way requires a running browser or HTML rendering engine that executes the JavaScript. Open the Spark UI in the browser's developer mode and add a breakpoint in stagepage.js; this delays the response, and Aggregated Metrics by Executor on the stage page then shows blank.

Before fix

<img width="1529" alt="Screenshot 2020-01-20 at 3 21 55 PM" src="https://user-images.githubusercontent.com/34540906/72716739-bcfd3500-3b98-11ea-8dbe-90a135822f92.png">

After fix

<img width="1540" alt="Screenshot 2020-01-20 at 3 23 12 PM" src="https://user-images.githubusercontent.com/34540906/72716782-d30af580-3b98-11ea-8764-2bde77764604.png">

Closes #27292 from SaurabhChawla100/SPARK-30582.

Authored-by: Saurabh Chawla <saurabhc@qubole.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch addresses remaining functionality on event log compaction: integrate compaction into FsHistoryProvider.

This patch is the next task after SPARK-30479 (#27164); please refer to the description of PR #27085 for the overall rationale of this patch.

### Why are the changes needed?

One of the major goals of SPARK-28594 is to prevent the event logs from becoming too huge, and SPARK-29779 achieves that goal. We tried another approach previously, but the old approach required models in both KVStore and live entities to guarantee compatibility, which they're not designed to do.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UT.

Closes #27208 from HeartSaVioR/SPARK-30481.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

1, stack input vectors to blocks (like ALS/MLP);

2, add new param `blockSize`;

3, add a new class `InstanceBlock`

4, standardize the input outside of optimization procedure;

### Why are the changes needed?

1, reduce RAM to persist training dataset; (save ~40% in test)

2, use Level-2 BLAS routines; (12% ~ 28% faster, without native BLAS)

### Does this PR introduce any user-facing change?

a new param `blockSize`

### How was this patch tested?

existing and updated testsuites

Closes #27360 from zhengruifeng/blockify_svc.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Add HostLocalBlock size in log total bytes

### Why are the changes needed?

The total size in the log is wrong because the host-local block size is missing.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Manually checking the log

Closes #27320 from Udbhav30/bug.

Authored-by: Udbhav30 <u.agrawal30@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Increased the limit for log events that could be stored in `SparkFunSuite.LogAppender` from 100 to 1000.

### Why are the changes needed?

Sometimes (see traces in SPARK-30599) additional info is logged via log4j, and appended to `LogAppender`. For example, unusual log entries are:

```
[36] Removed broadcast_214_piece0 on 192.168.1.66:52354 in memory (size: 5.7 KiB, free: 2003.8 MiB)
[37] Removed broadcast_204_piece0 on 192.168.1.66:52354 in memory (size: 5.7 KiB, free: 2003.9 MiB)
[38] Removed broadcast_200_piece0 on 192.168.1.66:52354 in memory (size: 3.7 KiB, free: 2003.9 MiB)
[39] Removed broadcast_207_piece0 on 192.168.1.66:52354 in memory (size: 24.2 KiB, free: 2003.9 MiB)
[40] Removed broadcast_208_piece0 on 192.168.1.66:52354 in memory (size: 24.2 KiB, free: 2003.9 MiB)
```

and a test which uses `LogAppender` can fail with the exception:

```
java.lang.IllegalStateException: Number of events reached the limit of 100 while logging CSV header matches to schema w/ enforceSchema.
```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By re-running `"SPARK-23786: warning should be printed if CSV header doesn't conform to schema"` in a loop.

Closes #27312 from MaxGekk/log-appender-filter.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/26930 to fix a bug.

When we create shuffle fetch requests, we first collect blocks until they reach the max size. Then we try to merge the blocks (the batch shuffle fetch feature) and split the merged blocks into several groups, to make sure each group doesn't exceed the max numBlocks. If the last group is smaller than the max numBlocks, we put it back into the input list and deal with it again later.

The last step has a problem:

1. if we put a merged block back to the input list and merge it again, it fails.

2. when putting back some blocks, we should update `numBlocksToFetch`

This PR fixes these 2 problems.
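The grouping step by itself can be sketched as below. This is a simplified, hypothetical helper (`GroupBlocks.split`) over a generic sequence; it leaves out merging and size accounting and is not the fetcher's actual code.

```scala
object GroupBlocks {
  // Split into groups of at most maxBlocks. An undersized last group is
  // returned separately so the caller can put it back and handle it later.
  def split[A](blocks: Seq[A], maxBlocks: Int): (Seq[Seq[A]], Seq[A]) = {
    val groups = blocks.grouped(maxBlocks).toSeq
    if (groups.nonEmpty && groups.last.size < maxBlocks) (groups.init, groups.last)
    else (groups, Seq.empty)
  }
}
```

Whatever is returned as the put-back part has to be excluded from any running count of blocks to fetch, which is the second problem listed above.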

### Why are the changes needed?

bug fix

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

new test

Closes #27280 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to output additional msg from the tests where a log appender is added. The message is printed as a part of `IllegalStateException` in the case of reaching the limit of maximum number of logged events.
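A minimal sketch of the idea, assuming a simple in-memory buffer. `BoundedEvents` is a hypothetical class, not the test appender itself; the error text follows the shape of the `IllegalStateException` message, with the caller-supplied message at the end.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical stand-in for a test log appender with an event cap.
class BoundedEvents(msg: String, maxEvents: Int = 100) {
  val events = ArrayBuffer.empty[String]

  def append(event: String): Unit = {
    if (events.size >= maxEvents) {
      // Include the caller-supplied message so the offending test is easy to find.
      throw new IllegalStateException(
        s"Number of events reached the limit of $maxEvents while logging $msg.")
    }
    events += event
  }
}
```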

### Why are the changes needed?

If a log appender is not removed from the log4j appenders list, the caller message could help to investigate the problem and find the test which doesn't remove the log appender.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running the modified test suites `AvroSuite`, `CSVSuite`, `ResolveHintsSuite`, etc.

Closes #27296 from MaxGekk/assign-name-to-log-appender.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Renamed an identifier `iterator` to `iter` to avoid compile error with Scala 2.13.

### Why are the changes needed?

As of Scala 2.13, `scala.collection.Iterator` has an `iterator` method, so if an inner class of `Iterator` means to refer to an outer identifier named `iterator`, it does not work as intended.

I listed source files that can be affected by that change by `find . -name "*.scala" -exec grep -El "new .*Iterator\[.* +{" {} \;`

As far as I have confirmed, `util.Utils` is affected.
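The shadowing problem and the rename can be illustrated with a small wrapper. `IterRename.counting` is a hypothetical example, not the code in `util.Utils`.

```scala
object IterRename {
  // The parameter is named `iter`, not `iterator`: inside the anonymous
  // Iterator below, `iterator` would resolve to the new object's own
  // `iterator` method on Scala 2.13 instead of this parameter.
  def counting[T](iter: Iterator[T], onNext: () => Unit): Iterator[T] =
    new Iterator[T] {
      def hasNext: Boolean = iter.hasNext
      def next(): T = { onNext(); iter.next() }
    }
}
```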

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27275 from sarutak/fix-iterator-for-2.13.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

1) Added missing match case to SparkUncaughtExceptionHandler, so that it would not halt the process when the exception doesn't match any of the match case statements.

2) Added a log message before halting the process. During debugging it wasn't obvious why the Worker process would become DEAD (until we set SPARK_NO_DAEMONIZE=1), because the shell scripts put the process into the background and essentially absorb the exit code.

3) Added SparkUncaughtExceptionHandlerSuite. Basically we create a Spark exception-throwing application with SparkUncaughtExceptionHandler and then check its exit code.
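Why a missing match case matters can be shown with a tiny decision function. The `Action` values and the specific cases are illustrative assumptions, not the handler's real logic; the point is the final default case, without which an unmatched throwable raises `scala.MatchError` inside the handler itself.

```scala
object HandlerSketch {
  sealed trait Action
  case object ExitOom extends Action
  case object ExitUncaught extends Action
  case object Continue extends Action

  // Hypothetical decision function for an uncaught throwable.
  def decide(t: Throwable, exitOnUncaught: Boolean): Action = t match {
    case _: OutOfMemoryError => ExitOom
    case _ if exitOnUncaught => ExitUncaught
    case _ => Continue // default case: nothing above matched
  }
}
```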

### Why are the changes needed?

SPARK-30310, because the process would halt unexpectedly.

### How was this patch tested?

All unit tests (mvn test) were run and passed.

Closes #26955 from tinhto-000/uncaught_exception_fix.

Authored-by: git <tinto@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This is the second PR for the Stage Level Scheduling. This is adding in the necessary executor side changes:

1) executors to know what ResourceProfile they should be using

2) handle parsing the resource profile settings - these are not in the global configs

3) then reporting back to the driver what resource profile it was started with.

This PR adds all the piping for YARN to pass the information all the way to executors, but it just uses the default ResourceProfile (which is the global application-level configs).

At a high level these changes include:

1) adding a new --resourceProfileId option to the CoarseGrainedExecutorBackend

2) Add the ResourceProfile settings to new internal confs that gets passed into the Executor

3) Executor changes that use the resource profile id passed in to read the corresponding ResourceProfile confs and then parse those requests and discover resources as necessary

4) Executor registers to Driver with the Resource profile id so that the ExecutorMonitor can track how many executors with each profile are running

5) YARN side changes to show that passing the resource profile id and confs actually works. Just uses the DefaultResourceProfile for now.

I also removed a check from the CoarseGrainedExecutorBackend that used to check to make sure there were task requirements before parsing any custom resource executor requests. With the resource profiles this becomes much more expensive because we would then have to pass the task requests to each executor and the check was just a short cut and not really needed. It was much cleaner just to remove it.

Note there were some changes to the ResourceProfile, ExecutorResourceRequests, and TaskResourceRequests in this PR as well because I discovered some issues with things not being immutable. That API now looks like:

val rpBuilder = new ResourceProfileBuilder()

val ereq = new ExecutorResourceRequests()

val treq = new TaskResourceRequests()

ereq.cores(2).memory("6g").memoryOverhead("2g").pysparkMemory("2g").resource("gpu", 2, "/home/tgraves/getGpus")

treq.cpus(2).resource("gpu", 2)

val resourceProfile = rpBuilder.require(ereq).require(treq).build

This makes it so that ResourceProfile is immutable and Spark can use it directly without worrying about the user changing it.

### Why are the changes needed?

These changes are needed for the executors to report which ResourceProfile they are using, so that ultimately the dynamic allocation manager can use that information to know how many executors with a given profile are running and how many more it needs to request. It's also needed to get the resource profile confs to the executor so that it can run the appropriate discovery script if needed.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit tests and manually on YARN.

Closes #26682 from tgravescs/SPARK-29306.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

The issue here is that when Spark is downscaling the application and deletes a few pod requests that aren't needed anymore, it may actually race with the K8S scheduler, who may be bringing up those executors. So they may have enough time to connect back to the driver, register, to just be deleted soon after. This wastes resources and causes misleading entries in the driver log.

The change (ab)uses the blacklisting mechanism to consider the deleted excess pods as blacklisted, so that if they try to connect back, the driver will deny it.

It also changes the executor registration slightly, since even with the above change there were misleading logs. That was because the executor registration message was an RPC that always succeeded (bar network issues), so the executor would always try to send an unregistration message to the driver, which would then log several messages about not knowing anything about the executor. The change makes the registration RPC succeed or fail directly, instead of using the separate failure message that would lead to this issue.

Note the last change required some changes in a standalone test suite related to dynamic allocation, since it relied on the driver not throwing exceptions when a duplicate executor registration happened.

Tested with existing unit tests, and with live cluster with dyn alloc on.

Closes #26586 from vanzin/SPARK-29950.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

1, add field `storageLevel` in `PeriodicRDDCheckpointer`

2, for ml.GBT/ml.RF set storageLevel=`StorageLevel.MEMORY_AND_DISK`

### Why are the changes needed?

Intermediate RDDs in ML are cached with storageLevel=StorageLevel.MEMORY_AND_DISK.

PeriodicRDDCheckpointer & PeriodicGraphCheckpointer currently store RDDs with storageLevel=StorageLevel.MEMORY_ONLY; it would be nice to be able to set the storageLevel of the checkpointer.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing testsuites

Closes #27189 from zhengruifeng/checkpointer_storage.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Spark SQL Kafka consumer connector considers delegation token usage even if the user configures `sasl.jaas.config` manually.

In this PR I've added `spark.security.credentials.kafka.enabled` and cluster configuration check to the condition.

### Why are the changes needed?

Now it's not possible to configure `sasl.jaas.config` manually.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing + additional unit tests.

Closes #27191 from gaborgsomogyi/SPARK-30495.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This patch adds an event filter to handle SQL-related events. It is the next task after SPARK-29779 (#27085); please refer to the description of PR #27085 for the overall rationale of this patch.

Below functionalities will be addressed in later parts:

* integrate compaction into FsHistoryProvider

* documentation about new configuration

### Why are the changes needed?

One of the major goals of SPARK-28594 is to prevent the event logs from becoming too huge, and SPARK-29779 achieves that goal. We tried another approach previously, but the old approach required models in both KVStore and live entities to guarantee compatibility, which they're not designed to do.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UTs.

Closes #27164 from HeartSaVioR/SPARK-30479.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Skewed joins are common and can severely degrade query performance. This PR aims to optimize skewed joins based on runtime map output statistics by adding the "OptimizeSkewedPartitions" rule. The detailed design doc is [here](https://docs.google.com/document/d/1NkXN-ck8jUOS0COz3f8LUW5xzF8j9HFjoZXWGGX2HAg/edit). Currently the "Inner, Cross, LeftSemi, LeftAnti, LeftOuter, RightOuter" join types are supported.

### Why are the changes needed?

To optimize skewed partitions at runtime based on AQE.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

UT

Closes #26434 from JkSelf/skewedPartitionBasedSize.

Lead-authored-by: jiake <ke.a.jia@intel.com>

Co-authored-by: Wenchen Fan <wenchen@databricks.com>

Co-authored-by: JiaKe <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### **What changes were proposed in this pull request?**

Fix the inconsistent task status in `executorLost` that is caused by `markPartitionCompleted`.

### **Why are the changes needed?**

The inconsistency can cause the application to hang.

The bug occurs in the following corner case:

1. During a stage retry, the scheduler resubmits a new stage attempt with the unfinished tasks.

2. An unfinished task in the original stage attempt finishes while the same task in the new retry attempt has not finished yet. The task's partition is then marked as successful in the `successful` array of the retry attempt's TSM.

3. The executor crashes while it is running tasks that have already succeeded in the original attempt. This causes the TSM to run `executorLost` to reschedule the tasks on that executor, which changes the partition's entry in `copiesRunning` twice, leaving it at -1.

4. `dequeueTaskFromList` only reschedules a task when its `copiesRunning` entry equals 0. Since the entry is now -1, the task cannot be rescheduled and the application hangs forever.
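Steps 3 and 4 can be illustrated with a toy model. This is a minimal sketch and not the `TaskSetManager` code: only the `copiesRunning` counter and the equals-0 check come from the description above, while `CopiesRunningSketch`, `canReschedule`, and `reschedulableAfterLoss` are hypothetical names.

```scala
object CopiesRunningSketch {
  // Assumed rule from step 4: a task is eligible for rescheduling
  // only when its counter of running copies is exactly 0.
  def canReschedule(copiesRunning: Array[Int], index: Int): Boolean =
    copiesRunning(index) == 0

  // Hypothetical model of step 3: partition 0 has one running copy, the
  // executor is lost, and the counter is decremented `decrements` times.
  def reschedulableAfterLoss(decrements: Int): Boolean = {
    val copiesRunning = Array(1)
    copiesRunning(0) -= decrements
    canReschedule(copiesRunning, 0)
  }
}
```

With a single decrement the counter returns to 0 and the task can be picked up again; with the double decrement it ends at -1 and the check never passes.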

### **Does this PR introduce any user-facing change?**

No

### **How was this patch tested?**

Closes #26975 from seayoun/fix_stageRetry_executorCrash_cause_problems.

Authored-by: yu <you@example.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to define a sub-class of `AppenderSkeleton` in `SparkFunSuite` and reuse it from other tests. The class stores incoming `LoggingEvent` in an array which is available to tests for future analysis of logged events.

### Why are the changes needed?

This eliminates code duplication in tests.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By existing test suites - `CSVSuite`, `OptimizerLoggingSuite`, `JoinHintSuite`, `CodeGenerationSuite` and `SQLConfSuite`.

Closes #27166 from MaxGekk/dedup-appender-skeleton.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Correct the Executor Computing Time shown in the timeline of the Stage page.

### Why are the changes needed?

The Executor Computing Time in the timeline of the Stage page is wrong: it includes the Scheduler Delay time, while the proportion excludes the Scheduler Delay.

<img width="1467" alt="Snipaste_2020-01-08_19-04-33" src="https://user-images.githubusercontent.com/3488126/71976714-f2795880-3251-11ea-869a-43ca6e0cf96a.png">

The correct executor computing time is 1 ms, but the UI shows 3 ms (including 2 ms of scheduler delay); the proportion is correct.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual

Closes #27135 from sddyljsx/SPARK-30458.

Lead-authored-by: Neal Song <neal_song@126.com>

Co-authored-by: neal_song <neal_song@126.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Update the doc of the memory package.

### Why are the changes needed?

Since Spark 2.0, storage memory can also use off-heap memory. This PR updates the doc accordingly.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

No Tests Needed

Closes #27160 from sddyljsx/SPARK-30478.

Lead-authored-by: Neal Song <neal_song@126.com>

Co-authored-by: neal_song <neal_song@126.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch proposes to compact old event log files when end users enable rolling event log, and clean up these files after compaction.

Here "compaction" really means filtering out listener events for finished or removed things, such as jobs, which take up most of the space in event log files apart from SQL-related events. To achieve this, the compactor reads the files in two phases: 1) tracking the live jobs (with more to be added), and 2) filtering events using the information about live things and rewriting the result to the "compacted" file.
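The two phases can be sketched on a toy event model. This is only an illustration under stated assumptions: the `Event` hierarchy and the `liveJobs` and `compact` helpers are hypothetical and are not the classes or API introduced by this patch.

```scala
object CompactionSketch {
  // Hypothetical, simplified listener events; real events carry far more data.
  sealed trait Event { def jobId: Int }
  final case class JobStart(jobId: Int) extends Event
  final case class TaskEnd(jobId: Int, taskId: Int) extends Event
  final case class JobEnd(jobId: Int) extends Event

  // Phase 1: read all events once and track which jobs are still live.
  def liveJobs(events: Seq[Event]): Set[Int] =
    events.foldLeft(Set.empty[Int]) {
      case (live, JobStart(id)) => live + id
      case (live, JobEnd(id))   => live - id
      case (live, _)            => live
    }

  // Phase 2: read the events again and keep only those of live jobs.
  def compact(events: Seq[Event]): Seq[Event] = {
    val live = liveJobs(events)
    events.filter(e => live.contains(e.jobId))
  }
}
```

For a log in which job 1 has started and ended while job 2 is still running, `compact` keeps only the events of job 2, which is also why task-level information for finished jobs is lost after compaction.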

This approach keeps event log files compatible and makes it possible to reduce the overall size of event logs. There is a downside as well: executor metrics for tasks would be inaccurate, because the compactor filters out task events whose job has finished, but I don't consider that a blocker.

Please note that SPARK-29779 leaves the functionality below for future JIRA issues, as the patch for SPARK-29779 was too large and we decided to break it down:

* apply filter in SQL events

* integrate compaction into FsHistoryProvider

* documentation about new configuration

### Why are the changes needed?

One of the major goals of SPARK-28594 is to prevent event logs from growing too large, and SPARK-29779 achieves that goal. We tried another approach previously, but it required the models in both KVStore and live entities to guarantee compatibility, which they are not designed to do.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UTs.

Closes #27085 from HeartSaVioR/SPARK-29779-part1.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This PR makes sure cores is the limiting resource when using accelerator-aware scheduling and fixes a few issues with SparkContext.checkResourcesPerTask.

For the first version of accelerator-aware scheduling (SPARK-27495), the SPIP had a condition that we can support dynamic allocation because we were going to have a strict requirement that we don't waste any resources. This means that the number of slots each executor has could be calculated from the number of cores and task cpus, just as is done today.

Somewhere along the line of development we relaxed that and now only warn when resources are wasted. This breaks the dynamic allocation logic if cores is no longer the limiting resource, because it uses the cores and task cpus to calculate the number of executors it needs. This means we will request fewer executors than we really need to run everything. We have to enforce that cores is always the limiting resource, so we should throw if it's not.
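A small worked example shows how the executor count goes wrong. This is a sketch under stated assumptions: the helper names are hypothetical, GPUs stand in for any accelerator resource, and amounts are modeled as plain integers rather than Spark's actual resource configuration.

```scala
object LimitingResourceSketch {
  // Slots per executor as derived from cores and task cpus only.
  def slotsByCores(executorCores: Int, taskCpus: Int): Int =
    executorCores / taskCpus

  // Slots per executor once each task also needs `taskGpus` of the
  // executor's `executorGpus` (hypothetical integer amounts).
  def actualSlots(executorCores: Int, taskCpus: Int, executorGpus: Int, taskGpus: Int): Int =
    math.min(executorCores / taskCpus, executorGpus / taskGpus)

  // Executors needed to run `pendingTasks` at once, rounding up.
  def executorsNeeded(pendingTasks: Int, slotsPerExecutor: Int): Int =
    (pendingTasks + slotsPerExecutor - 1) / slotsPerExecutor
}
```

With 4 cores and 1 GPU per executor, and 1 cpu and 1 GPU per task, the core-based calculation sees 4 slots per executor and asks for 2 executors for 8 pending tasks, while only 1 task fits per executor, so 8 executors would be needed.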

The only issue with enforcing this is on cluster managers (standalone and Mesos coarse-grained) where we don't know the executor cores up front by default. That is, the spark.executor.cores config defaults to 1, but when the executor is started it gets all the cores of the Worker by default. So we have to add logic specifically to handle that; we can't enforce this requirement there and can only warn when dynamic allocation is enabled on those cluster managers.

### Why are the changes needed?

There is a bug in dynamic allocation if cores is not the limiting resource, and the warnings are not correct.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Unit test added, and manually tested the conditions in local mode, local cluster mode, standalone mode, and yarn.

Closes #27138 from tgravescs/SPARK-30446.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

There is a race condition in the test case introduced in SPARK-30359, between reviveOffers in org.apache.spark.scheduler.TaskSchedulerImpl#submitTasks and org.apache.spark.scheduler.TaskSetManager#resourceOffer.

There is no need to call resourceOffers in the test case, as submitTasks will revive offers for the task set.

### Why are the changes needed?

Fix flaky test

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Test case can pass after the change

Closes #27115 from ajithme/testflaky.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Previously in https://github.com/apache/spark/pull/26614/files#diff-bad3987c83bd22d46416d3dd9d208e76R90, we compared the number of tasks with `(conf.get(EXECUTOR_CORES) / sched.CPUS_PER_TASK)`. In standalone mode, if the value is not explicitly set, the conf value defaults to 1 but the executor would actually use all the cores of the worker. So it is allowed to have `CPUS_PER_TASK` greater than `EXECUTOR_CORES`. To handle this case, we change the condition to be `numTasks <= Math.max(conf.get(EXECUTOR_CORES) / sched.CPUS_PER_TASK, 1)`
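The effect of the `Math.max(..., 1)` guard comes down to integer division. The following is a minimal sketch with hypothetical function names; it assumes the previous comparison also used `<=`, which the description does not state explicitly.

```scala
object SpeculationConditionSketch {
  // Assumed shape of the previous check: 1 / 2 is 0 in integer division,
  // so a single task can never satisfy it when cpusPerTask > executorCores.
  def oldCondition(numTasks: Int, executorCores: Int, cpusPerTask: Int): Boolean =
    numTasks <= executorCores / cpusPerTask

  // The new check from the description: the quotient is floored at 1.
  def newCondition(numTasks: Int, executorCores: Int, cpusPerTask: Int): Boolean =
    numTasks <= math.max(executorCores / cpusPerTask, 1)
}
```

With the default `EXECUTOR_CORES` of 1 and `CPUS_PER_TASK` of 2, `oldCondition(1, 1, 2)` is false while `newCondition(1, 1, 2)` is true, so a single-task stage qualifies again.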

### Why are the changes needed?

In standalone mode, if the user sets `spark.task.cpus` to be greater than 1 but doesn't set `spark.executor.cores`, the stage would not be run speculatively even though it has only 1 task.

### Does this PR introduce any user-facing change?

Solve the problem above by allowing speculative run when there is only 1 task in the stage.

### How was this patch tested?

Existing tests and one more test in TaskSetManagerSuite

Closes #27126 from yuchenhuo/SPARK-30417.

Authored-by: Yuchen Huo <yuchen.huo@databricks.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

Handle the accelerator aware configs being set to 0. This PR will just ignore the requests when the amount is 0.

### Why are the changes needed?

Better user experience

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Unit tests added and manually tested on yarn, standalone, local, k8s.

Closes #27118 from tgravescs/SPARK-30445.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1, for primitive types `Array.fill(n)(0)` -> `Array.ofDim(n)`;

2, for `AnyRef` types `Array.fill(n)(null)` -> `Array.ofDim(n)`;

3, for primitive types `Array.empty[XXX]` -> `Array.emptyXXXArray`

### Why are the changes needed?

`Array.ofDim` avoids assignments;

`Array.emptyXXXArray` avoids creating a new object;
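The replacements are behavior-preserving because a freshly allocated JVM array already holds default values. A minimal sketch of the three rewrites (the object and value names are only for illustration):

```scala
object ArrayAllocationSketch {
  val n = 4

  // Before: allocates the array, then assigns 0 to every slot.
  val filled: Array[Int] = Array.fill(n)(0)

  // After: allocates only; primitive slots already default to 0.
  val allocated: Array[Int] = Array.ofDim[Int](n)

  // For AnyRef element types the default slot value is null.
  val refs: Array[String] = Array.ofDim[String](n)

  // A shared, pre-built empty array instead of a new object per call.
  val sharedEmpty: Array[Int] = Array.emptyIntArray
}
```

`Array.emptyIntArray` always returns the same instance, so repeated use does not allocate.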

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing testsuites

Closes #27133 from zhengruifeng/minor_fill_ofDim.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Use `println()` instead of `print()` to show the progress bar in the console.

### Why are the changes needed?

Logs are polluted by the progress bar:

This is easy to reproduce:

1. start `./bin/spark-shell`

2. `sc.setLogLevel("INFO")`

3. run: `spark.range(100000000).coalesce(1).write.parquet("/tmp/result")`

### Does this PR introduce any user-facing change?

Yes, a friendlier format in the console.

### How was this patch tested?

Tested manually.

Closes #27061 from Ngone51/fix-processbar.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This patch fixes flaky tests "master/worker web ui available" & "master/worker web ui available with reverseProxy" in MasterSuite.

Tracking back from stack trace below,

```

19/12/19 13:48:39.160 dispatcher-event-loop-4 INFO Worker: WorkerWebUI is available at http://localhost:8080/proxy/worker-20191219134839-localhost-36054

19/12/19 13:48:39.296 WorkerUI-52072 WARN JettyUtils: GET /json/ failed: java.lang.NullPointerException

java.lang.NullPointerException

at org.apache.spark.deploy.worker.ui.WorkerPage.renderJson(WorkerPage.scala:39)

at org.apache.spark.ui.WebUI.$anonfun$attachPage$2(WebUI.scala:91)

at org.apache.spark.ui.JettyUtils$$anon$1.doGet(JettyUtils.scala:80)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:687)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)

at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:873)

at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1623)

at org.apache.spark.ui.HttpSecurityFilter.doFilter(HttpSecurityFilter.scala:95)

at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1610)

at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:540)

```

There's a possible race condition in `Dispatcher.registerRpcEndpoint()`:

481fb63f97/core/src/main/scala/org/apache/spark/rpc/netty/Dispatcher.scala (L64-L77)

`getMessageLoop()` initializes a new Inbox for this endpoint for both DedicatedMessageLoop and SharedMessageLoop, which calls `onStart()` "asynchronously" and "eventually" by posting an `OnStart` message. `onStart()` initializes the UI page instance(s), so the execution of `endpointRefs.put()` and the initialization of the UI page instance(s) are "concurrent".

MasterPage and WorkerPage retrieve the endpoint ref and store it as a "val", assuming the endpoint ref is valid when they're initialized. In the bad case they could store "null" as the endpoint ref and never update it.

481fb63f97/core/src/main/scala/org/apache/spark/deploy/master/ui/MasterPage.scala (L33-L38)

481fb63f97/core/src/main/scala/org/apache/spark/deploy/worker/ui/WorkerPage.scala (L35-L41)

This patch breaks the step down into `find the right message loop` and `register endpoint to message loop`, and ensures the endpoint ref is set "before" registering the endpoint to the message loop.

### Why are the changes needed?

We observed the test failures from Jenkins; below are the links:

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/115583/testReport/

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/115700/testReport/

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

You can also reproduce the bug consistently via adding `Thread.sleep(1000)` just before `endpointRefs.put(endpoint, endpointRef)` in `Dispatcher.registerRpcEndpoint(...)`.

Closes #27010 from HeartSaVioR/SPARK-30313.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Remove `executorsPendingToRemove.clear()` from `CoarseGrainedSchedulerBackend.reset()`.

### Why are the changes needed?

Clearing `executorsPendingToRemove` before removing executors causes the failure of every task running on those "pending to remove" executors to be counted. But that is wrong for the case of `executorsPendingToRemove(execId)=true`.

Besides, `executorsPendingToRemove` is cleaned up within `removeExecutor()` at the end anyway, just like `executorsPendingLossReason`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a new test in `TaskSetManagerSuite`.

Closes #27017 from Ngone51/dont-clear-eptr-in-reset.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This bug manifested itself when another stream would potentially make a call to NioBufferedFileInputStream.read() after it had reached EOF in the wrapped stream. In that case, the refill() code would clear the output buffer the first time EOF was found, leaving it in a readable state for subsequent read() calls. If any of those calls were made, bad data would be returned.

By flipping the buffer before returning, even in the EOF case, you get the correct behavior in subsequent calls. I picked that approach to avoid keeping more state in this class, although it means calling the underlying stream even after EOF (which is fine, but perhaps a little more expensive).
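The difference between a cleared and a flipped buffer at EOF can be reproduced with a plain `java.nio.ByteBuffer`. This is a minimal sketch, not the `NioBufferedFileInputStream` code; `EofBufferSketch` and `remainingAfterEof` are hypothetical names, and the EOF is simulated by simply writing nothing into the buffer.

```scala
import java.nio.ByteBuffer

object EofBufferSketch {
  // Simulates a refill that hits EOF right away and reports how many
  // bytes the buffer claims to have available afterwards.
  def remainingAfterEof(flipBeforeReturn: Boolean): Int = {
    val buf = ByteBuffer.allocate(8)
    buf.clear() // position = 0, limit = capacity: the buffer looks fully readable
    // The wrapped stream would return -1 here, so nothing is put into buf.
    if (flipBeforeReturn) {
      buf.flip() // limit = old position = 0: nothing is left to read
    }
    buf.remaining()
  }
}
```

Without the flip the buffer reports 8 readable bytes of stale content, which matches the "bad data" symptom; with the flip it reports 0.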

This showed up (at least) when using encryption, because the commons-crypto StreamInput class does not track EOF internally, leaving it for the wrapped stream to behave correctly.

Tested with added unit test + slightly modified test case attached to SPARK-18105.

Closes #27084 from vanzin/SPARK-30225.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Improve the column name and tooltips for the Fair Scheduler Pool table.

### Why are the changes needed?

The SchedulingMode column name needs to be corrected to 'Scheduling Mode', and tooltips need to be added for Minimum Share, Pool Weight and Scheduling Mode (end users need meaningful tooltips to understand them).

### Does this PR introduce any user-facing change?

YES

### How was this patch tested?

Manual Testing.

Closes #27047 from 07ARB/SPARK-30384.

Lead-authored-by: 07ARB <ankitrajboudh@gmail.com>

Co-authored-by: Ankitraj <8948111+07ARB@users.noreply.github.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

There is a deadlock between `LiveListenerBus#stop` and `AsyncEventQueue#removeListenerOnError`.

We can reproduce as follows:

1. Post some events to `LiveListenerBus`

2. Call `LiveListenerBus#stop` and hold the synchronized lock of `bus` (5e92301723/core/src/main/scala/org/apache/spark/scheduler/LiveListenerBus.scala (L229)), waiting until all the events are processed by listeners, then remove all the queues

3. The event queue drains events by posting them to its listeners. If a listener is interrupted, it calls `AsyncEventQueue#removeListenerOnError`, which in turn calls `bus.removeListener` (7b1b60c758/core/src/main/scala/org/apache/spark/scheduler/AsyncEventQueue.scala (L207)) and tries to acquire the synchronized lock of `bus`, resulting in a deadlock

This PR removes the `synchronized` from `LiveListenerBus.stop` because the underlying data structures themselves are thread-safe.
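The locking pattern behind the deadlock can be reproduced in a few lines. This is a simplified sketch, not the `LiveListenerBus` code: `StopLockSketch` and its methods are hypothetical, a plain object stands in for `bus`, and a timeout replaces the unbounded wait so that the example terminates.

```scala
object StopLockSketch {
  // Hypothetical stand-in for a queue thread that calls back into the bus
  // and therefore needs the bus lock.
  private def queueThread(bus: Object): Thread = {
    val t = new Thread(() => bus.synchronized { () })
    t.setDaemon(true)
    t
  }

  // Waits for the queue thread while holding the bus lock.
  // Returns true if the thread finished within the timeout.
  def stopHoldingLock(timeoutMs: Long): Boolean = {
    val bus = new Object
    bus.synchronized {
      val t = queueThread(bus)
      t.start()
      t.join(timeoutMs) // the thread cannot enter bus.synchronized while we hold it
      !t.isAlive
    }
  }

  // Same wait without holding the lock: the thread can finish.
  def stopWithoutLock(timeoutMs: Long): Boolean = {
    val bus = new Object
    val t = queueThread(bus)
    t.start()
    t.join(timeoutMs)
    !t.isAlive
  }
}
```

In the first variant the waiting thread and the queue thread block each other until the timeout expires; without a timeout, as in the scenario above, neither would ever make progress.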

### Why are the changes needed?

To fix deadlock.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New UT.

Closes #26924 from wangshuo128/event-queue-race-condition.

Authored-by: Wang Shuo <wangshuo128@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This patch proposes to only convert first few elements of collection accumulators in `LiveEntityHelpers.newAccumulatorInfos`.

### Why are the changes needed?

One Spark job on our cluster uses collection accumulator to collect something and has encountered an exception like:

```

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOf(Arrays.java:3332)

at java.lang.AbstractStringBuilder.ensureCapacityInternal(AbstractStringBuilder.java:124)

at java.lang.AbstractStringBuilder.append(AbstractStringBuilder.java:448)

at java.lang.StringBuilder.append(StringBuilder.java:136)

at java.lang.StringBuilder.append(StringBuilder.java:131)

at java.util.AbstractCollection.toString(AbstractCollection.java:462)

at java.util.Collections$UnmodifiableCollection.toString(Collections.java:1035)

at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2$$anonfun$apply$3.apply(LiveEntity.scala:596)

at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2$$anonfun$apply$3.apply(LiveEntity.scala:596)

at scala.Option.map(Option.scala:146)

at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2.apply(LiveEntity.scala:596)

at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2.apply(LiveEntity.scala:591)

```

`LiveEntityHelpers.newAccumulatorInfos` converts `AccumulableInfo`s to `v1.AccumulableInfo` by calling `toString` on the accumulator's value. For a collection accumulator, the string representation might take much more memory than the collection itself, for example for a collection accumulator of long values, and cause an OOM (in this job, the driver memory is 6g).

It looks like the results of `newAccumulatorInfos` are used in the API and UI. For such usage, it also does not make sense to have a very long string for the complete collection accumulator.
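The idea of converting only the first few elements can be sketched as follows. The helper name `preview`, the `limit` parameter, and the output format are assumptions made for illustration; the format actually used by `newAccumulatorInfos` is not stated in this description.

```scala
object AccumulatorPreviewSketch {
  // Renders at most `limit` elements (assumed to be positive) and notes
  // how many were left out, so the string size no longer grows with the
  // size of the collection.
  def preview(values: Seq[Long], limit: Int): String = {
    val shown = values.take(limit).mkString(", ")
    val omitted = values.size - math.min(limit, values.size)
    if (omitted > 0) s"[$shown, ... $omitted more]" else s"[$shown]"
  }
}
```

For the 10000-element accumulator from the manual test below, a limit of 5 yields a string of a few dozen characters instead of one that lists every value.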

### Does this PR introduce any user-facing change?

Yes. Collection accumulators now only show the first few elements in the API and UI.

### How was this patch tested?

Unit test.

Manual test. Launched a Spark shell, ran:

```scala

val accum = sc.collectionAccumulator[Long]("Collection Accumulator Example")

sc.range(0, 10000, 1, 1).foreach(x => accum.add(x))

accum.value

```

<img width="2533" alt="Screen Shot 2019-12-30 at 2 03 43 PM" src="https://user-images.githubusercontent.com/68855/71602488-6eb2c400-2b0d-11ea-8725-dba36478198f.png">

Closes #27038 from viirya/partial-collect-accu.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

UI and event logs redact sensitive information. But the monitoring URL, https://spark.apache.org/docs/latest/monitoring.html#rest-api , specifically /applications/[app-id]/environment does not, which is a security issue.

### What changes were proposed in this pull request?

The REST API response is redacted before it is sent.

### Why are the changes needed?

If no redaction is done for REST API calls, they can leak sensitive information.
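Redacting an environment listing before it is returned can be sketched as below. This is an illustration only: `RedactionSketch`, `redact`, the placeholder text, and the example pattern are assumptions and not the helper or configuration used by this patch.

```scala
import scala.util.matching.Regex

object RedactionSketch {
  // Placeholder text chosen for this sketch only.
  val Placeholder = "(redacted)"

  // Replaces the value of every property whose key matches `pattern`.
  def redact(pattern: Regex, props: Seq[(String, String)]): Seq[(String, String)] =
    props.map { case (key, value) =>
      if (pattern.findFirstIn(key).isDefined) (key, Placeholder) else (key, value)
    }
}
```

Applying the same redaction to the REST response as to the UI and event logs keeps the three views consistent.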

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Tested manually

Closes #27018 from ajithme/redactrest.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Remove meaningless tooltips from all pages.

### Why are the changes needed?

If we can't come up with meaningful tooltips, then the tooltips don't need to be added.

### Does this PR introduce any user-facing change?

Yes

Tooltips like the ones highlighted in the image above have been removed.

### How was this patch tested?

Manual test.

Closes #27043 from 07ARB/SPARK-30383.

Authored-by: 07ARB <ankitrajboudh@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch fixes the flaky test failure on MasterSuite, "SPARK-27510: Master should avoid dead loop while launching executor failed in Worker".

Ironically, the culprit of the test failure was that the test ran too fast; the interval of `eventually` is "15 ms" by default, but it took only "8 ms" from submitting the driver to removing the app from the master.

```

19/12/23 15:45:06.533 dispatcher-event-loop-6 INFO Master: Registering worker localhost:9999 with 10 cores, 3.6 GiB RAM

19/12/23 15:45:06.534 dispatcher-event-loop-6 INFO Master: Driver submitted org.apache.spark.FakeClass

19/12/23 15:45:06.535 dispatcher-event-loop-6 INFO Master: Launching driver driver-20191223154506-0000 on worker 10001

19/12/23 15:45:06.536 dispatcher-event-loop-9 INFO Master: Registering app name

19/12/23 15:45:06.537 dispatcher-event-loop-9 INFO Master: Registered app name with ID app-20191223154506-0000

19/12/23 15:45:06.537 dispatcher-event-loop-9 INFO Master: Launching executor app-20191223154506-0000/0 on worker 10001

19/12/23 15:45:06.537 dispatcher-event-loop-10 INFO Master: Removing executor app-20191223154506-0000/0 because it is FAILED

...

19/12/23 15:45:06.542 dispatcher-event-loop-19 ERROR Master: Application name with ID app-20191223154506-0000 failed 10 times; removing it

```

Given that the interval is already tiny, instead of lowering the interval, the patch also takes the above case into account when verifying the status.

### Why are the changes needed?

We observed intermittent test failure in Jenkins build which should be fixed.

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/115664/testReport/

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Modified UT.

Closes #27004 from HeartSaVioR/SPARK-30348.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Remove a lost executor from `CoarseGrainedSchedulerBackend` when `HeartbeatReceiver` recognizes it as lost.

### Why are the changes needed?

Currently, an application may hang if we don't remove a lost executor from `CoarseGrainedSchedulerBackend`, which can happen as follows:

1) In `expireDeadHosts()`, `HeartbeatReceiver` calls `scheduler.executorLost()`;

2) Before `HeartbeatReceiver` calls `sc.killAndReplaceExecutor()` (which would mark the lost executor as "pendingToRemove") in a separate thread, `CoarseGrainedSchedulerBackend` may begin to launch tasks on that executor without realizing that it has already been lost.

3) If that lost executor doesn't shut down gracefully, `CoarseGrainedSchedulerBackend` may never receive a disconnect event. As a result, tasks launched on that lost executor become orphans, while the driver still thinks those tasks are running and waits forever.

Removing the lost executor from `CoarseGrainedSchedulerBackend` would let `TaskSetManager` mark those tasks as failed which avoids app hang. Furthermore, it cleans up records in `executorDataMap`, which may never be removed in such case.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Updated existing tests.

Close #24350.

Closes #26980 from Ngone51/SPARK-27348.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>