## What changes were proposed in this pull request?

This PR improves the test coverage of window functions, focusing on missing tests for window aggregate functions. No new UDAF test is added because that case is already tested.

## How was this patch tested?

Only new tests were added; the automated tests were executed.

Author: “attilapiros” <piros.attila.zsolt@gmail.com>

Author: Attila Zsolt Piros <2017933+attilapiros@users.noreply.github.com>

Closes #20046 from attilapiros/SPARK-22362.

## What changes were proposed in this pull request?

In Spark SQL, we usually reuse the `UnsafeRow` instance and need to copy the data when a place buffers non-serialized objects.

Shuffle may buffer objects if we don't make it to the bypass merge shuffle or unsafe shuffle.

`ShuffleExchangeExec.needToCopyObjectsBeforeShuffle` misses the case that, if `spark.sql.shuffle.partitions` is large enough, we could fail to run unsafe shuffle and go with the non-serialized shuffle.

This bug is very hard to hit, since users wouldn't set such a large number of partitions (16 million) for a Spark SQL exchange.
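
The decision described above can be sketched as follows. This is a minimal illustration in Python, not the Scala code of this PR: the function name is a hypothetical analogue of `needToCopyObjectsBeforeShuffle`, the two constants are my understanding of Spark's defaults (a bypass merge threshold of 200 and a serialized-shuffle limit of 16,777,216 partitions), and the sketch assumes the serializer always qualifies for unsafe shuffle.

```python
# Assumed defaults; treat both values as assumptions rather than quoted source.
BYPASS_MERGE_THRESHOLD = 200              # spark.shuffle.sort.bypassMergeThreshold
MAX_SERIALIZED_PARTITIONS = 1 << 24       # 16,777,216 partitions


def need_to_copy_before_shuffle(num_partitions, sort_based_shuffle=True):
    """Return True when the shuffle may buffer non-serialized objects."""
    if not sort_based_shuffle:
        # Unknown shuffle manager: be conservative and copy.
        return True
    if num_partitions <= BYPASS_MERGE_THRESHOLD:
        # Bypass merge shuffle writes records out immediately.
        return False
    if num_partitions <= MAX_SERIALIZED_PARTITIONS:
        # Unsafe (serialized) shuffle serializes records before buffering.
        return False
    # Too many partitions for unsafe shuffle: this is the case that was missed.
    return True
```

With these assumptions, only a partition count above 16,777,216 falls through to the non-serialized path, which matches why the bug is so hard to hit.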

TODO: test

## How was this patch tested?

todo.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #21101 from cloud-fan/shuffle.

## What changes were proposed in this pull request?

The PR adds the SQL function `element_at`. Its behavior is modeled on Presto's function of the same name.

For an array column, the function returns the element at the given index; for a map column, it returns the value for the given key.
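
A minimal Python sketch of these semantics, for illustration only. The description above only states the array-index and map-key lookups; the 1-based indexing, the negative indexes counting from the end, the null result for an out-of-range index or missing key, and the error on index 0 are assumptions taken from my reading of the Presto behavior.

```python
def element_at(collection, key):
    """Illustrative model of element_at for a list (array) or dict (map)."""
    if collection is None or key is None:
        return None
    if isinstance(collection, dict):
        # Map lookup: a missing key yields null (assumption).
        return collection.get(key)
    if key == 0:
        # Assumption: index 0 is rejected because SQL arrays are 1-based.
        raise ValueError("SQL array indices start at 1")
    if abs(key) > len(collection):
        return None
    # Positive indexes are 1-based; negative indexes count from the end.
    return collection[key - 1] if key > 0 else collection[key]
```

For example, `element_at([10, 20, 30], -1)` returns the last element under these assumptions.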

## How was this patch tested?

Added UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #21053 from kiszk/SPARK-23924.

## What changes were proposed in this pull request?

The PR adds the SQL function `array_position`. Its behavior is modeled on Presto's function of the same name.

The function returns the position of the first occurrence of the element in the array (or 0 if not found), using a 1-based index, as a BigInt.
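
A small Python model of the described behavior, as a sketch rather than the actual implementation. Returning null for a null array or null element is an assumption; the 1-based position and the 0 result for a missing element come from the description above.

```python
def array_position(array, element):
    """Return the 1-based position of the first match, or 0 if absent."""
    if array is None or element is None:
        return None  # assumption: null input gives a null result
    for position, value in enumerate(array, start=1):
        if value == element:
            return position
    return 0
```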

## How was this patch tested?

Added UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #21037 from kiszk/SPARK-23919.

## What changes were proposed in this pull request?

`DataFrameRangeSuite.test("Cancelling stage in a query with Range.")` sometimes hangs in an infinite loop and times out the build.

There were multiple issues with the test:

1. The first valid stageId is zero when the test is run alone rather than as part of a suite, so the following code waits until the timeout:

```scala
eventually(timeout(10.seconds), interval(1.millis)) {
  assert(DataFrameRangeSuite.stageToKill > 0)
}
```

2. `DataFrameRangeSuite.stageToKill` was overwritten by the task's thread after the reset, which ended up cancelling the same stage twice. This caused the infinite wait.

This PR fixes the flakiness by removing the shared `DataFrameRangeSuite.stageToKill` and using `wait` and `CountDownLatch` for synchronization.
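
The synchronization idea can be sketched in Python as below. This is an analogy, not the Scala test code: Python has no `CountDownLatch`, so a `threading.Event` stands in for a latch with a count of one, and the function name and structure are hypothetical. The point is that the stage id is published through state local to one test run, and the waiting side blocks on the latch instead of polling for `stageToKill > 0`, so stage id 0 works too.

```python
import threading


def cancel_first_started_stage(stage_id):
    """Wait for the task to start, then 'cancel' its stage exactly once."""
    task_started = threading.Event()   # plays the role of CountDownLatch(1)
    seen = []                          # local to this call, not shared by tests
    cancelled = []

    def task():
        seen.append(stage_id)          # publish the id before signalling
        task_started.set()

    worker = threading.Thread(target=task)
    worker.start()
    if not task_started.wait(timeout=10.0):
        raise TimeoutError("task never started")
    cancelled.append(seen[0])          # a single cancel; id 0 is a valid stage
    worker.join()
    return cancelled
```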

## How was this patch tested?

Existing unit test.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #20888 from gaborgsomogyi/SPARK-23775.

## What changes were proposed in this pull request?

```

Py4JJavaError: An error occurred while calling o153.sql.

: org.apache.spark.SparkException: Job aborted.

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.write(FileFormatWriter.scala:223)

at org.apache.spark.sql.execution.datasources.InsertIntoHadoopFsRelationCommand.run(InsertIntoHadoopFsRelationCommand.scala:189)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:79)

at org.apache.spark.sql.Dataset$$anonfun$6.apply(Dataset.scala:190)

at org.apache.spark.sql.Dataset$$anonfun$6.apply(Dataset.scala:190)

at org.apache.spark.sql.Dataset$$anonfun$59.apply(Dataset.scala:3021)

at org.apache.spark.sql.execution.SQLExecution$.withCustomExecutionEnv(SQLExecution.scala:89)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:127)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3020)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:190)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:74)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:646)

at sun.reflect.GeneratedMethodAccessor153.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:380)

at py4j.Gateway.invoke(Gateway.java:293)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:226)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.spark.SparkException: Exception thrown in Future.get:

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec.doExecuteBroadcast(BroadcastExchangeExec.scala:190)

at org.apache.spark.sql.execution.InputAdapter.doExecuteBroadcast(WholeStageCodegenExec.scala:267)

at org.apache.spark.sql.execution.joins.BroadcastNestedLoopJoinExec.doConsume(BroadcastNestedLoopJoinExec.scala:530)

at org.apache.spark.sql.execution.CodegenSupport$class.consume(WholeStageCodegenExec.scala:155)

at org.apache.spark.sql.execution.ProjectExec.consume(basicPhysicalOperators.scala:37)

at org.apache.spark.sql.execution.ProjectExec.doConsume(basicPhysicalOperators.scala:69)

at org.apache.spark.sql.execution.CodegenSupport$class.consume(WholeStageCodegenExec.scala:155)

at org.apache.spark.sql.execution.FilterExec.consume(basicPhysicalOperators.scala:144)

...

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.write(FileFormatWriter.scala:190)

... 23 more

Caused by: java.util.concurrent.ExecutionException: org.apache.spark.SparkException: Task not serializable

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:206)

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec.doExecuteBroadcast(BroadcastExchangeExec.scala:179)

... 276 more

Caused by: org.apache.spark.SparkException: Task not serializable

at org.apache.spark.util.ClosureCleaner$.ensureSerializable(ClosureCleaner.scala:340)

at org.apache.spark.util.ClosureCleaner$.org$apache$spark$util$ClosureCleaner$$clean(ClosureCleaner.scala:330)

at org.apache.spark.util.ClosureCleaner$.clean(ClosureCleaner.scala:156)

at org.apache.spark.SparkContext.clean(SparkContext.scala:2380)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndex$1.apply(RDD.scala:850)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitionsWithIndex$1.apply(RDD.scala:849)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:112)

at org.apache.spark.rdd.RDD.withScope(RDD.scala:371)

at org.apache.spark.rdd.RDD.mapPartitionsWithIndex(RDD.scala:849)

at org.apache.spark.sql.execution.WholeStageCodegenExec.doExecute(WholeStageCodegenExec.scala:417)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:123)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:118)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$3.apply(SparkPlan.scala:152)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:149)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:118)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec.prepareShuffleDependency(ShuffleExchangeExec.scala:89)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$$anonfun$doExecute$1.apply(ShuffleExchangeExec.scala:125)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec$$anonfun$doExecute$1.apply(ShuffleExchangeExec.scala:116)

at org.apache.spark.sql.catalyst.errors.package$.attachTree(package.scala:52)

at org.apache.spark.sql.execution.exchange.ShuffleExchangeExec.doExecute(ShuffleExchangeExec.scala:116)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:123)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:118)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$3.apply(SparkPlan.scala:152)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:149)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:118)

at org.apache.spark.sql.execution.InputAdapter.inputRDDs(WholeStageCodegenExec.scala:271)

at org.apache.spark.sql.execution.aggregate.HashAggregateExec.inputRDDs(HashAggregateExec.scala:181)

at org.apache.spark.sql.execution.WholeStageCodegenExec.doExecute(WholeStageCodegenExec.scala:414)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:123)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:118)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$3.apply(SparkPlan.scala:152)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:149)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:118)

at org.apache.spark.sql.execution.collect.Collector$.collect(Collector.scala:61)

at org.apache.spark.sql.execution.collect.Collector$.collect(Collector.scala:70)

at org.apache.spark.sql.execution.SparkPlan.executeCollectResult(SparkPlan.scala:264)

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec$$anon$1$$anonfun$call$1.apply(BroadcastExchangeExec.scala:93)

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec$$anon$1$$anonfun$call$1.apply(BroadcastExchangeExec.scala:81)

at org.apache.spark.sql.execution.SQLExecution$.withExecutionId(SQLExecution.scala:150)

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec$$anon$1.call(BroadcastExchangeExec.scala:80)

at org.apache.spark.sql.execution.exchange.BroadcastExchangeExec$$anon$1.call(BroadcastExchangeExec.scala:76)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

... 1 more

Caused by: java.nio.BufferUnderflowException

at java.nio.HeapByteBuffer.get(HeapByteBuffer.java:151)

at java.nio.ByteBuffer.get(ByteBuffer.java:715)

at org.apache.parquet.io.api.Binary$ByteBufferBackedBinary.getBytes(Binary.java:405)

at org.apache.parquet.io.api.Binary$ByteBufferBackedBinary.getBytesUnsafe(Binary.java:414)

at org.apache.parquet.io.api.Binary$ByteBufferBackedBinary.writeObject(Binary.java:484)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at java.io.ObjectStreamClass.invokeWriteObject(ObjectStreamClass.java:1128)

at java.io.ObjectOutputStream.writeSerialData(ObjectOutputStream.java:1496)

```

The Parquet filters are serializable but not thread-safe. `SparkPlan.prepare()` can be called from different threads (`BroadcastExchange` calls it in a thread pool), so the same Parquet filter can be serialized concurrently. This is not easy to reproduce. This PR avoids serializing these Parquet filters in the driver by moving Parquet filter generation from the driver to the executors.

## How was this patch tested?

Tested with two queries: a 1000-line SQL query and a 3000-line SQL query. They need to run for at least one hour under a heavy write workload to reproduce the issue once.

Author: gatorsmile <gatorsmile@gmail.com>

Closes #21086 from gatorsmile/taskNotSerializable.

## What changes were proposed in this pull request?

Each data source implementation can define its own options and teach its users how to set them. Spark doesn't have any restrictions about what options a data source should or should not have. It's possible that some options are very common and many data sources use them. However, different data sources may define the common options (key and meaning) differently, which is quite confusing to end users.

This PR defines some standard options that data sources can optionally adopt: path, table and database.

## How was this patch tested?

A new test case.

Author: Wenchen Fan <wenchen@databricks.com>

Closes #20535 from cloud-fan/options.

## What changes were proposed in this pull request?

There was no check on nullability for arguments of `Tuple`s. This could lead to unexpected behavior when a null value had to be deserialized into a non-nullable Scala object: in those cases, the `null` was silently transformed into a valid value (like `-1` for `Int`), corresponding to the default value we use in the SQL codebase. This situation was very likely to happen when deserializing to a Tuple of primitive Scala types (like Double, Int, ...).

The PR adds the `AssertNotNull` to arguments of tuples which have been asked to be converted to non-nullable types.
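
As an illustration of the intended behavior, here is a minimal Python sketch. The helper names and the error message are hypothetical and are not Spark APIs; the sketch only shows the idea of failing loudly on a null in a non-nullable tuple slot instead of substituting a default value.

```python
def decode_int(value, nullable):
    """Decode one tuple argument, asserting non-null where required."""
    if value is None:
        if nullable:
            return None
        # Previously a default such as -1 would have been produced silently.
        raise ValueError("Null value appeared in non-nullable field")
    return int(value)


def decode_tuple(row, nullable_flags):
    """Decode a row into a tuple, checking each argument's nullability."""
    return tuple(decode_int(v, n) for v, n in zip(row, nullable_flags))
```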

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20976 from mgaido91/SPARK-23835.

## What changes were proposed in this pull request?

Unit tests for EpochCoordinator that test correct sequencing of committed epochs. Several tests are ignored because they test functionality implemented in SPARK-23503, which is not yet merged; they would otherwise fail.

Author: Efim Poberezkin <efim@poberezkin.ru>

Closes #20983 from efimpoberezkin/pr/EpochCoordinator-tests.

## What changes were proposed in this pull request?

Add a memory source for continuous processing.

Note that only one of the ContinuousSuite tests is migrated to minimize the diff here. I'll submit a second PR for SPARK-23688 to change the rest and get rid of waitForRateSourceTriggers.

## How was this patch tested?

unit test

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Closes #20828 from jose-torres/continuousMemory.

## What changes were proposed in this pull request?

The PR adds the SQL function `array_min`. It takes an array as argument and returns the minimum value in it.

## How was this patch tested?

added UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21025 from mgaido91/SPARK-23918.

## What changes were proposed in this pull request?

The PR adds the SQL function `array_max`. It takes an array as argument and returns the maximum value in it.

## How was this patch tested?

added UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #21024 from mgaido91/SPARK-23917.

## What changes were proposed in this pull request?

Checkpoint files (offset log files, state store files) in Structured Streaming must be written atomically such that no partial files are generated (would break fault-tolerance guarantees). Currently, there are 3 locations which try to do this individually, and in some cases, incorrectly.

1. HDFSOffsetMetadataLog - This uses a FileManager interface to use any implementation of `FileSystem` or `FileContext` APIs. It prefers the `FileContext` implementation, as the `FileContext` of HDFS has atomic renames.

1. HDFSBackedStateStore (aka in-memory state store)

   - Writing a version.delta file - This uses FileSystem APIs only to perform a rename. This is incorrect as rename is not atomic in the HDFS FileSystem implementation.

   - Writing a snapshot file - Same as above.

#### Current problems:

1. State Store behavior is incorrect - HDFS FileSystem implementation does not have atomic rename.

1. Inflexible - Some file systems provide mechanisms other than write-to-temp-file-and-rename for writing atomically and more efficiently. For example, with S3 you can write directly to the final file and it will be made visible only when the entire file is written and closed correctly. Any failure can be made to terminate the writing without making any partial files visible in S3. The current code does not abstract out this mechanism enough that it can be customized.

#### Solution:

1. Introduce a common interface that all 3 cases above can use to write checkpoint files atomically.

2. This interface must provide the necessary interfaces that allow customization of the write-and-rename mechanism.

This PR does that by introducing the interface `CheckpointFileManager` and modifying `HDFSMetadataLog` and `HDFSBackedStateStore` to use the interface. Similar to earlier `FileManager`, there are implementations based on `FileSystem` and `FileContext` APIs, and the latter implementation is preferred to make it work correctly with HDFS.

The key method this interface has is `createAtomic(path, overwrite)` which returns a `CancellableFSDataOutputStream` that has the method `cancel()`. All users of this method need to either call `close()` to successfully write the file, or `cancel()` in case of an error.
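
The close-or-cancel contract can be sketched in Python as follows. This is a local-filesystem analogy of the write-to-temp-file-and-rename mechanism, not the `CheckpointFileManager` code: the class name is hypothetical, `os.replace` stands in for an atomic rename, and the existence check for `overwrite=False` is a simplification that is not race-free.

```python
import os
import tempfile


class CancellableAtomicWriter:
    """Write to a temp file; publish on close(), discard on cancel()."""

    def __init__(self, path, overwrite=False):
        if not overwrite and os.path.exists(path):
            raise FileExistsError(path)
        self._path = path
        directory = os.path.dirname(os.path.abspath(path))
        # The temp file lives in the target directory so the rename stays local.
        fd, self._tmp = tempfile.mkstemp(dir=directory, prefix=".tmp-")
        self._file = os.fdopen(fd, "wb")
        self._done = False

    def write(self, data):
        self._file.write(data)

    def close(self):
        """Finish the write and make the complete file visible."""
        if self._done:
            return
        self._done = True
        self._file.close()
        os.replace(self._tmp, self._path)

    def cancel(self):
        """Abort the write without leaving a partial file behind."""
        if self._done:
            return
        self._done = True
        self._file.close()
        os.remove(self._tmp)
```

A caller would wrap the writes in `try`/`except`, calling `close()` on success and `cancel()` on any error, so readers never observe a partially written checkpoint file.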

## How was this patch tested?

New tests in `CheckpointFileManagerSuite` and slightly modified existing tests.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #21048 from tdas/SPARK-23966.

## What changes were proposed in this pull request?

Currently `PartitioningAwareFileIndex` accepts an optional parameter `userPartitionSchema`. If provided, it will combine the inferred partition schema with the parameter.

However,

1. to get `userPartitionSchema`, we need to combine inferred partition schema with `userSpecifiedSchema`

2. to get the inferred partition schema, we have to create a temporary file index.

Only after that, a final version of `PartitioningAwareFileIndex` can be created.

This can be improved by passing `userSpecifiedSchema` to `PartitioningAwareFileIndex`.

With the improvement, we can reduce redundant code and avoid parsing the file partition twice.

## How was this patch tested?

Unit test

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #21004 from gengliangwang/PartitioningAwareFileIndex.

## What changes were proposed in this pull request?

Add UDF weekday

## How was this patch tested?

A new test

Author: yucai <yyu1@ebay.com>

Closes #21009 from yucai/SPARK-23905.

## What changes were proposed in this pull request?

Many suites currently leak Spark sessions (sometimes with stopped SparkContexts) via the thread-local active Spark session and default Spark session. We should attempt to clean these up and detect when this happens to improve the reproducibility of tests.

## How was this patch tested?

Existing tests

Author: Eric Liang <ekl@databricks.com>

Closes #21058 from ericl/clear-session.

## What changes were proposed in this pull request?

This PR proposes to add `collect` to a query executor as an action.

It seems that `collect` and `collect` with Arrow are not recognised as actions via `QueryExecutionListener`. For example, if we have a custom listener as below:

```scala
package org.apache.spark.sql

import org.apache.spark.internal.Logging
import org.apache.spark.sql.execution.QueryExecution
import org.apache.spark.sql.util.QueryExecutionListener

class TestQueryExecutionListener extends QueryExecutionListener with Logging {
  override def onSuccess(funcName: String, qe: QueryExecution, durationNs: Long): Unit = {
    logError("Look at me! I'm 'onSuccess'")
  }

  override def onFailure(funcName: String, qe: QueryExecution, exception: Exception): Unit = { }
}
```

and set `spark.sql.queryExecutionListeners` to `org.apache.spark.sql.TestQueryExecutionListener`, other operations on the PySpark or Scala side seem fine:

```python

>>> sql("SELECT * FROM range(1)").show()

```

```
18/04/09 17:02:04 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess'
+---+
| id|
+---+
|  0|
+---+
```

```scala

scala> sql("SELECT * FROM range(1)").collect()

```

```

18/04/09 16:58:41 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess'

res1: Array[org.apache.spark.sql.Row] = Array([0])

```

However, `collect` and `toPandas` with Arrow in PySpark do not trigger the listener.

**Before**

```python

>>> sql("SELECT * FROM range(1)").collect()

```

```

[Row(id=0)]

```

```python

>>> spark.conf.set("spark.sql.execution.arrow.enabled", "true")

>>> sql("SELECT * FROM range(1)").toPandas()

```

```
   id
0   0
```

**After**

```python

>>> sql("SELECT * FROM range(1)").collect()

```

```

18/04/09 16:57:58 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess'

[Row(id=0)]

```

```python

>>> spark.conf.set("spark.sql.execution.arrow.enabled", "true")

>>> sql("SELECT * FROM range(1)").toPandas()

```

```
18/04/09 17:53:26 ERROR TestQueryExecutionListener: Look at me! I'm 'onSuccess'
   id
0   0
```

## How was this patch tested?

I tested manually as described above, and a unit test was added.

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21007 from HyukjinKwon/SPARK-23942.

## What changes were proposed in this pull request?

Currently, continuous processing in Structured Streaming does not support queries on a temp table or on `df.as("xxx")`; it throws an exception saying the LogicalPlan is not supported. Details are described in [SPARK-23748](https://issues.apache.org/jira/browse/SPARK-23748).

This PR proposes to add this support.

## How was this patch tested?

new UT.

Author: jerryshao <sshao@hortonworks.com>

Closes #21017 from jerryshao/SPARK-23748.

`SQLMetricsTestUtils.currentExecutionIds()` was racing with the listener bus, which led to some flaky tests. We should wait until the listener bus is empty.

I tested by adding some `Thread.sleep()`s in `SQLAppStatusListener`, which reproduced the exceptions I saw on Jenkins. With this change, they went away.

Author: Imran Rashid <irashid@cloudera.com>

Closes #21041 from squito/SPARK-23962.

## What changes were proposed in this pull request?

Mark `HashAggregateExec.bufVars` as transient to prevent it from being serialized.

Also manually null out this field at the end of `doProduceWithoutKeys()` to shorten its lifecycle, because it'll no longer be used after that.

## How was this patch tested?

Existing tests.

Author: Kris Mok <kris.mok@databricks.com>

Closes #21039 from rednaxelafx/codegen-improve.

## What changes were proposed in this pull request?

This PR refactors the newly added `ExprValue` API. The following changes are introduced:

1. `ExprValue` now uses the actual class instead of the class name as its type. This should give some more flexibility with generating code in the future.

2. Renamed `StatementValue` to `SimpleExprValue`. The statement concept is broader than an expression (it is untyped and cannot be on the right-hand side of an assignment), and this was not really what we were using it for. I have added a top-level `JavaCode` trait that can be used in the future to reinstate (no pun intended) a statement-like code fragment.

3. Added factory methods to the `JavaCode` companion object to make it slightly less verbose to create `JavaCode`/`ExprValue` objects. This is also what makes the diff quite large.

4. Added one more factory method to `ExprCode` to make it easier to create code-less expressions.

## How was this patch tested?

Existing tests.

Author: Herman van Hovell <hvanhovell@databricks.com>

Closes #21026 from hvanhovell/SPARK-23951.

## What changes were proposed in this pull request?

In PR #20886, I mistakenly checked the table location only when `ignoreIfExists` is false, following the original deprecated PR.

That was wrong. When `ignoreIfExists` is true and the target table doesn't exist, we should also check the table location. In other words, **`ignoreIfExists` has nothing to do with table location validation**.

This is a follow-up PR to fix the mistake.
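
The corrected control flow can be sketched in Python as below. The function and its arguments are hypothetical stand-ins (a dict for the catalog, a set for existing paths), not Spark's catalog API; the sketch only shows that location validation runs whenever the table is actually going to be created, regardless of the flag.

```python
def create_managed_table(catalog, used_locations, name, location, ignore_if_exists):
    """Create a table entry; return False if creation was skipped."""
    if name in catalog:
        if ignore_if_exists:
            return False  # nothing is created, so nothing to validate
        raise ValueError(f"Table {name} already exists")
    # Location validation does not depend on ignore_if_exists.
    if location in used_locations:
        raise ValueError(f"Location {location} already exists")
    catalog[name] = location
    used_locations.add(location)
    return True
```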

## How was this patch tested?

Add one unit test.

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #21001 from gengliangwang/SPARK-19724-followup.

## What changes were proposed in this pull request?

The codegen output of `Expression`, aka `ExprCode`, currently encapsulates only strings for the output value (`value`) and nullability (`isNull`). This makes it difficult to know what the output really is. I think it is better to add wrappers for the value and nullability that make this easy to determine.

## How was this patch tested?

Existing tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20043 from viirya/SPARK-22856.

## What changes were proposed in this pull request?

This PR avoids a possible overflow in operations of the form `long = (long)(int * int)`. Multiplying large positive integer values may set the MSB to one, which yields a negative value in the long where we expected a positive one (e.g. `0111_0000_0000_0000 * 0000_0000_0000_0010`).

This PR performs the long cast before the multiplication to avoid this situation.
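
The effect can be reproduced with a small Python sketch. Python integers do not overflow, so `to_int32` below is a hypothetical helper that emulates 32-bit two's-complement wrap-around; the two functions model `(long)(int * int)` and `(long) int * int` respectively.

```python
def to_int32(x):
    """Wrap an integer to a signed 32-bit value, like a JVM int."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x & 0x80000000 else x


def multiply_then_widen(a, b):
    # Models (long)(a * b): the product wraps in 32 bits before widening.
    return to_int32(a * b)


def widen_then_multiply(a, b):
    # Models (long) a * b: the product of two ints always fits in 64 bits.
    return a * b
```

For `a = 0x70000000` and `b = 2`, the first form gives `-536870912` while the second gives `3758096384`.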

## How was this patch tested?

Existing UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #21002 from kiszk/SPARK-23893.

## What changes were proposed in this pull request?

The proposed tests check that only a subset of the input dataset is touched during schema inference.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #20963 from MaxGekk/json-sampling-tests.

## What changes were proposed in this pull request?

Column.scala and Functions.scala have asc_nulls_first, asc_nulls_last, desc_nulls_first and desc_nulls_last. This PR adds the corresponding Python APIs in column.py and functions.py.
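
For readers unfamiliar with the four orderings, here is a plain-Python model of what they mean. It is not the PySpark API added by this PR; `sort_with_nulls` is a hypothetical helper that uses `None` for SQL null.

```python
def sort_with_nulls(values, ascending=True, nulls_first=True):
    """Sort non-null values, placing nulls before or after them."""
    nulls = [v for v in values if v is None]
    rest = sorted((v for v in values if v is not None), reverse=not ascending)
    return nulls + rest if nulls_first else rest + nulls
```

Under this model, `asc_nulls_last` corresponds to `ascending=True, nulls_first=False`, and `desc_nulls_first` to `ascending=False, nulls_first=True`.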

## How was this patch tested?

Add doctest

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #20962 from huaxingao/spark-23847.

## What changes were proposed in this pull request?

Add a docstring to clarify the default window frame boundaries with and without an orderBy clause.
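
To make the difference concrete, here is a plain-Python model of a windowed sum over one partition. It assumes my understanding of the defaults: without ordering the frame is unbounded (the whole partition), and with ordering it is a growing range frame from unbounded preceding to the current row, which includes rows that tie with the current row on the ordering key. `windowed_sum` is a hypothetical helper, not a Spark API.

```python
def windowed_sum(rows, ordered):
    """rows: list of (order_key, value) pairs belonging to one partition."""
    if not ordered:
        # Unbounded frame: every row sees the whole partition.
        total = sum(value for _, value in rows)
        return [total for _ in rows]
    # Growing range frame: all rows whose key is <= the current row's key.
    return [sum(v for k, v in rows if k <= key) for key, _ in rows]
```

With rows `[(1, 10), (2, 20), (2, 30), (3, 40)]`, the unordered result is `100` for every row, while the ordered result is `[10, 60, 60, 100]`; the two rows with key 2 share the same frame.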

## How was this patch tested?

Manually generated the docs and checked them.

Author: Li Jin <ice.xelloss@gmail.com>

Closes #20978 from icexelloss/SPARK-23861-window-doc.

## What changes were proposed in this pull request?

This pull request improves the error message Spark produces when reading Parquet files with different schemas, e.g. one with a STRING column and the other with an INT column. A new ParquetSchemaColumnConvertNotSupportedException is added to replace the old UnsupportedOperationException. The exception is then wrapped in FileScanRdd.scala to throw a more general QueryExecutionException that includes the name of the Parquet file that triggered the exception.
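
The wrapping pattern can be sketched in Python as follows. The two exception classes, the `read_with_context` helper and the message wording are hypothetical analogues for illustration; they are not the Scala classes or the exact message from this PR.

```python
class SchemaColumnConvertNotSupportedError(Exception):
    """Hypothetical stand-in for the low-level schema mismatch error."""

    def __init__(self, column, physical_type, logical_type):
        super().__init__(f"{column}: {physical_type} -> {logical_type}")
        self.column = column
        self.physical_type = physical_type
        self.logical_type = logical_type


class QueryExecutionError(Exception):
    """Hypothetical stand-in for the general, user-facing error."""


def read_with_context(path, read_fn):
    """Run read_fn(path), adding the file name to schema mismatch errors."""
    try:
        return read_fn(path)
    except SchemaColumnConvertNotSupportedError as e:
        message = (
            f"Parquet column cannot be converted in file {path}. "
            f"Column: {e.column}, Expected: {e.logical_type}, "
            f"Found: {e.physical_type}"
        )
        # Chain the original error so the low-level details stay available.
        raise QueryExecutionError(message) from e
```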

## How was this patch tested?

Unit tests added to check the new exception and verify the error messages.

Also manually tested with two Parquet files with different schemas to check the error message.

<img width="1125" alt="screen shot 2018-03-30 at 4 03 04 pm" src="https://user-images.githubusercontent.com/37087310/38156580-dd58a140-3433-11e8-973a-b816d859fbe1.png">

Author: Yuchen Huo <yuchen.huo@databricks.com>

Closes #20953 from yuchenhuo/SPARK-23822.

## What changes were proposed in this pull request?

This PR finishes https://github.com/apache/spark/pull/17272.

This JIRA is follow-up work after SPARK-19583.

As we discussed in that PR, the following DDL statements for a managed table whose default location already exists should throw an exception:

- `CREATE TABLE ... (PARTITIONED BY ...) AS SELECT ...`

- `CREATE TABLE ... (PARTITIONED BY ...)`

Currently, some situations are not consistent with this logic:

- `CREATE TABLE ... (PARTITIONED BY ...)` succeeds with an existing default location, for both Hive and data source tables (with HiveExternalCatalog/InMemoryCatalog).

- `CREATE TABLE ... (PARTITIONED BY ...) AS SELECT ...` succeeds with an existing default location for Hive tables.

This PR makes these two situations consistent with the logic above: they should throw an exception when the default location already exists.

## How was this patch tested?

unit test added

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #20886 from gengliangwang/pr-17272.

## What changes were proposed in this pull request?

This PR allows us to use one of several types of `MemoryBlock`, such as byte array, int array, long array, or `java.nio.DirectByteBuffer`. Using `java.nio.DirectByteBuffer` provides off-heap memory that is automatically deallocated by the JVM. The `MemoryBlock` class has primitive accessors like `Platform.getInt()`, `Platform.putInt()`, or `Platform.copyMemory()`.

This PR uses `MemoryBlock` for `OffHeapColumnVector`, `UTF8String`, and other places. This PR can improve performance of operations involving memory accesses (e.g. `UTF8String.trim`) by 1.8x.

For now, this PR does not use `MemoryBlock` for `BufferHolder` based on cloud-fan's [suggestion](https://github.com/apache/spark/pull/11494#issuecomment-309694290).

Since this PR is a successor of #11494, it closes #11494. Much of the code was ported from #11494, and a lot of effort went into this work. **I think this PR should be credited to yzotov.**

This PR can achieve **1.1-1.4x performance improvements** for operations in `UTF8String` or `Murmur3_x86_32`. Other operations show almost comparable performance.

Without this PR

```
OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz
Hash byte arrays with length 268435487:   Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
------------------------------------------------------------------------------------------------
Murmur3_x86_32                                    526 / 536        0.0  131399881.5      1.0X

UTF8String benchmark:                     Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
------------------------------------------------------------------------------------------------
hashCode                                          525 / 552     1022.6          1.0      1.0X
substring                                         414 / 423     1298.0          0.8      1.3X
```

With this PR

```
OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz
Hash byte arrays with length 268435487:   Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
------------------------------------------------------------------------------------------------
Murmur3_x86_32                                    474 / 488        0.0  118552232.0      1.0X

UTF8String benchmark:                     Best/Avg Time(ms)  Rate(M/s)  Per Row(ns)  Relative
------------------------------------------------------------------------------------------------
hashCode                                          476 / 480     1127.3          0.9      1.0X
substring                                         287 / 291     1869.9          0.5      1.7X
```

Benchmark program

```scala
test("benchmark Murmur3_x86_32") {
  val length = 8192 * 32768 + 31
  val seed = 42L
  val iters = 1 << 2
  val random = new Random(seed)
  val arrays = Array.fill[MemoryBlock](numArrays) {
    val bytes = new Array[Byte](length)
    random.nextBytes(bytes)
    new ByteArrayMemoryBlock(bytes, Platform.BYTE_ARRAY_OFFSET, length)
  }
  val benchmark = new Benchmark("Hash byte arrays with length " + length,
    iters * numArrays, minNumIters = 20)
  benchmark.addCase("HiveHasher") { _: Int =>
    var sum = 0L
    for (_ <- 0L until iters) {
      sum += HiveHasher.hashUnsafeBytesBlock(
        arrays(i), Platform.BYTE_ARRAY_OFFSET, length)
    }
  }
  benchmark.run()
}

test("benchmark UTF8String") {
  val N = 512 * 1024 * 1024
  val iters = 2
  val benchmark = new Benchmark("UTF8String benchmark", N, minNumIters = 20)
  val str0 = new java.io.StringWriter() { { for (i <- 0 until N) { write(" ") } } }.toString
  val s0 = UTF8String.fromString(str0)
  benchmark.addCase("hashCode") { _: Int =>
    var h: Int = 0
    for (_ <- 0L until iters) { h += s0.hashCode }
  }
  benchmark.addCase("substring") { _: Int =>
    var s: UTF8String = null
    for (_ <- 0L until iters) { s = s0.substring(N / 2 - 5, N / 2 + 5) }
  }
  benchmark.run()
}
```

I ran [this benchmark program](https://gist.github.com/kiszk/94f75b506c93a663bbbc372ffe8f05de) using [the commit](ee5a79861c) and got the following results:

```

OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Memory access benchmarks: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

ByteArrayMemoryBlock get/putInt() 220 / 221 609.3 1.6 1.0X

Platform get/putInt(byte[]) 220 / 236 610.9 1.6 1.0X

Platform get/putInt(Object) 492 / 494 272.8 3.7 0.4X

OnHeapMemoryBlock get/putLong() 322 / 323 416.5 2.4 0.7X

long[] 221 / 221 608.0 1.6 1.0X

Platform get/putLong(long[]) 321 / 321 418.7 2.4 0.7X

Platform get/putLong(Object) 561 / 563 239.2 4.2 0.4X

```

I also ran [this benchmark program](https://gist.github.com/kiszk/5fdb4e03733a5d110421177e289d1fb5) to compare the performance of `Platform.copyMemory()`.

```

OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic

Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz

Platform copyMemory: Best/Avg Time(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------

Object to Object 1961 / 1967 8.6 116.9 1.0X

System.arraycopy Object to Object 1917 / 1921 8.8 114.3 1.0X

byte array to byte array 1961 / 1968 8.6 116.9 1.0X

System.arraycopy byte array to byte array 1909 / 1937 8.8 113.8 1.0X

int array to int array 1921 / 1990 8.7 114.5 1.0X

double array to double array 1918 / 1923 8.7 114.3 1.0X

Object to byte array 1961 / 1967 8.6 116.9 1.0X

Object to short array 1965 / 1972 8.5 117.1 1.0X

Object to int array 1910 / 1915 8.8 113.9 1.0X

Object to float array 1971 / 1978 8.5 117.5 1.0X

Object to double array 1919 / 1944 8.7 114.4 1.0X

byte array to Object 1959 / 1967 8.6 116.8 1.0X

int array to Object 1961 / 1970 8.6 116.9 1.0X

double array to Object 1917 / 1924 8.8 114.3 1.0X

```

These results show five facts:

1. According to the second/third or sixth/seventh results in the first experiment, if we use `Platform.get/putInt(Object)`, we get more than 2x worse performance than `Platform.get/putInt(byte[])` with a concrete type (i.e. `byte[]`).

2. According to the second/third or fourth/fifth/sixth results in the first experiment, the fastest way to access an array element on the Java heap is `array[]`. **The drawback of `array[]` is that it cannot support unaligned 8-byte access.**

3. According to the first/second/third or fourth/sixth/seventh results in the first experiment, `getInt()/putInt()` or `getLong()/putLong()` in subclasses of `MemoryBlock` achieve performance comparable to `Platform.get/putInt()` or `Platform.get/putLong()` with a concrete type (second or sixth result). There is no overhead from the virtual call.

4. According to the results in the second experiment, for `Platform.copyMemory()`, passing `Object` achieves the same performance as passing any type of primitive array as the source or destination.

5. According to the second/fourth results in the second experiment, `Platform.copyMemory()` achieves the same performance as `System.arraycopy`. **It would be good to use `Platform.copyMemory()` since it can take any types for src and dst.**
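The ratios behind these observations can be recomputed from the "Best" column of the first results table. The sketch below only redoes that arithmetic; the helper name `slowdown` is made up for illustration and is not part of the benchmark program.

```python
# Best times (ms) copied from the "Memory access benchmarks" table above.
best_ms = {
    "ByteArrayMemoryBlock get/putInt()": 220,
    "Platform get/putInt(byte[])": 220,
    "Platform get/putInt(Object)": 492,
    "OnHeapMemoryBlock get/putLong()": 322,
    "long[]": 221,
    "Platform get/putLong(long[])": 321,
    "Platform get/putLong(Object)": 561,
}


def slowdown(case, baseline):
    """Return how many times slower `case` is than `baseline` (ratio of best times)."""
    return best_ms[case] / best_ms[baseline]


# Object-typed access versus access through a concrete array type.
int_ratio = slowdown("Platform get/putInt(Object)", "Platform get/putInt(byte[])")
long_ratio = slowdown("Platform get/putLong(Object)", "Platform get/putLong(long[])")

# MemoryBlock subclass versus Platform with a concrete array type.
block_int_ratio = slowdown("ByteArrayMemoryBlock get/putInt()", "Platform get/putInt(byte[])")
block_long_ratio = slowdown("OnHeapMemoryBlock get/putLong()", "Platform get/putLong(long[])")

for name, value in [
    ("int, Object vs byte[]", int_ratio),
    ("long, Object vs long[]", long_ratio),
    ("int, MemoryBlock vs Platform", block_int_ratio),
    ("long, MemoryBlock vs Platform", block_long_ratio),
]:
    print(f"{name}: {value:.2f}x")
```

The `MemoryBlock` ratios land at essentially 1.0x, which is the basis for the "no overhead from the virtual call" observation.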

We are incrementally replacing `Platform.get/putXXX` with `MemoryBlock.get/putXXX`. This has two advantages.

1) Achieve better performance due to having a concrete type for an array.

2) Use simple OO design instead of passing `Object`

It is easy to use `MemoryBlock` in `InternalRow`, `BufferHolder`, `TaskMemoryManager`, and others that are already abstracted. It is not easy to use `MemoryBlock` in utility classes related to hashing or others.

Other candidates are

- UnsafeRow, UnsafeArrayData, UnsafeMapData, SpecificUnsafeRowJoiner

- UTF8StringBuffer

- BufferHolder

- TaskMemoryManager

- OnHeapColumnVector

- BytesToBytesMap

- CachedBatch

- classes for hash

- others.

## How was this patch tested?

Added `UnsafeMemoryAllocator`

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#19222 from kiszk/SPARK-10399.

## What changes were proposed in this pull request?

Currently, the active Spark session is set inconsistently (e.g., in createDataFrame, prior to query execution). Many places in Spark also incorrectly query the active session when they should be calling `activeSession.getOrElse(defaultSession)`, and so might get None even if a Spark session exists.

The semantics here can be cleaned up if we also set the active session when the default session is set.

Related: https://github.com/apache/spark/pull/20926/files

## How was this patch tested?

Unit test, existing test. Note that if https://github.com/apache/spark/pull/20926 merges first we should also update the tests there.

Author: Eric Liang <ekl@databricks.com>

Closes#20927 from ericl/active-session-cleanup.

## What changes were proposed in this pull request?

Migrate foreach sink to DataSourceV2.

Since the previous attempt at this PR #20552, we've changed and strictly defined the lifecycle of writer components. This means we no longer need the complicated lifecycle shim from that PR; it just naturally works.

## How was this patch tested?

existing tests

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Closes#20951 from jose-torres/foreach.

## What changes were proposed in this pull request?

This PR implemented the following cleanups related to `UnsafeWriter` class:

- Remove code duplication between `UnsafeRowWriter` and `UnsafeArrayWriter`

- Make `BufferHolder` class internal by delegating its accessor methods to `UnsafeWriter`

- Replace `UnsafeRow.setTotalSize(...)` with `UnsafeRowWriter.setTotalSize()`

## How was this patch tested?

Tested by existing UTs

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#20850 from kiszk/SPARK-23713.

## What changes were proposed in this pull request?

Currently, the requiredChildDistribution does not specify the number of partitions. This can cause weird corner cases where the child's distribution is `SinglePartition`, which satisfies the required distribution of `ClusterDistribution(no-num-partition-requirement)`, thus eliminating the shuffle needed to repartition input data into the required number of partitions (i.e. the same as the state stores). That can lead to "file not found" errors on the state store delta files, as the micro-batch-with-no-shuffle will not run certain tasks and therefore will not generate the expected state store delta files.

This PR adds the required constraint on the number of partitions.
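To make the corner case concrete, here is a toy model of the "satisfies" check. It is not Spark's implementation: the classes `Partitioning` and `ClusteredRequirement` and the function `satisfies` are hypothetical names chosen for this sketch, and the rule is reduced to the two conditions discussed above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Partitioning:
    """Hypothetical stand-in for a child's output partitioning."""
    num_partitions: int
    keys: Tuple[str, ...] = ()


@dataclass(frozen=True)
class ClusteredRequirement:
    """Hypothetical stand-in for a clustered distribution requirement."""
    keys: Tuple[str, ...]
    required_num_partitions: Optional[int] = None  # None means "no constraint"


def satisfies(child: Partitioning, required: ClusteredRequirement) -> bool:
    # A single partition trivially keeps all rows with equal keys together.
    if child.num_partitions == 1:
        clustered = True
    else:
        clustered = bool(child.keys) and set(child.keys) <= set(required.keys)
    count_ok = (
        required.required_num_partitions is None
        or child.num_partitions == required.required_num_partitions
    )
    return clustered and count_ok


single = Partitioning(num_partitions=1)

# Without a partition-count constraint the single partition is accepted,
# so no shuffle is planned even though the state store expects 5 partitions.
print(satisfies(single, ClusteredRequirement(("key",))))

# With the constraint, the mismatch is detected and a shuffle is needed.
print(satisfies(single, ClusteredRequirement(("key",), required_num_partitions=5)))
```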

## How was this patch tested?

Modified test harness to always check that ANY stateful operator should have a constraint on the number of partitions. As part of that, the existing opt-in checks on child output partitioning were removed, as they are redundant.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes#20941 from tdas/SPARK-23827.

## What changes were proposed in this pull request?

This PR is to improve the test coverage of the original PR https://github.com/apache/spark/pull/20687

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes#20911 from gatorsmile/addTests.

## What changes were proposed in this pull request?

Roll forward c68ec4e (#20688).

There are two minor test changes required:

* An error which used to be TreeNodeException[ArithmeticException] is no longer wrapped and is now just ArithmeticException.

* The test framework simply does not set the active Spark session. (Or rather, it doesn't do so early enough - I think it only happens when a query is analyzed.) I've added the required logic to SQLTestUtils.

## How was this patch tested?

existing tests

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Author: jerryshao <sshao@hortonworks.com>

Closes#20922 from jose-torres/ratefix.

## What changes were proposed in this pull request?

This PR supports pushing down filters for DateType in Parquet.

## How was this patch tested?

Added UT and tested in local.

Author: yucai <yyu1@ebay.com>

Closes#20851 from yucai/SPARK-23727.

## What changes were proposed in this pull request?

Set default Spark session in the TestSparkSession and TestHiveSparkSession constructors.

## How was this patch tested?

new unit tests

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Closes#20926 from jose-torres/test3.

## What changes were proposed in this pull request?

This PR proposes to add lineSep option for a configurable line separator in text datasource.

It supports this option by using `LineRecordReader`'s functionality with passing it to the constructor.

The approach is similar to https://github.com/apache/spark/pull/20727; however, one main difference is that it uses the text datasource's `lineSep` option to parse line by line in JSON's schema inference.

## How was this patch tested?

Manually tested and unit tests were added.

Author: hyukjinkwon <gurwls223@apache.org>

Author: hyukjinkwon <gurwls223@gmail.com>

Closes#20877 from HyukjinKwon/linesep-json.

## What changes were proposed in this pull request?

This PR migrates the micro-batch rate source to the V2 API and rewrites the UTs to suit the V2 tests.

## How was this patch tested?

UTs.

Author: jerryshao <sshao@hortonworks.com>

Closes#20688 from jerryshao/SPARK-23096.

## What changes were proposed in this pull request?

This PR fixes an incorrect comparison in SQL between timestamp and date. The comparison is incorrect because both of them are cast to `string` and then compared lexicographically. The current implementation returns `false` for the query `spark.sql("select cast('2017-03-01 00:00:00' as timestamp) between cast('2017-02-28' as date) and cast('2017-03-01' as date)").show`.

This PR shows `true` for this query by casting `date("2017-03-01")` to `timestamp("2017-03-01 00:00:00")`.
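The effect of comparing as strings versus comparing as timestamps can be reproduced outside Spark. The following is a plain-Python sketch of the two comparison strategies using the values from the query above; the helper `date_to_timestamp` is a made-up name that mimics casting a date to midnight of that day.

```python
from datetime import date, datetime

ts_text = "2017-03-01 00:00:00"
lower_text, upper_text = "2017-02-28", "2017-03-01"

# Old behavior (modeled): both sides are strings, compared lexicographically.
# "2017-03-01 00:00:00" sorts after "2017-03-01" because it is a longer string
# with the same prefix, so the upper bound check fails.
string_result = lower_text <= ts_text <= upper_text


def date_to_timestamp(text: str) -> datetime:
    """Cast a date string to a timestamp at midnight (00:00:00) of that day."""
    return datetime.combine(date.fromisoformat(text), datetime.min.time())


# New behavior (modeled): the dates are cast to timestamps before comparing.
ts_value = datetime.strptime(ts_text, "%Y-%m-%d %H:%M:%S")
timestamp_result = (
    date_to_timestamp(lower_text) <= ts_value <= date_to_timestamp(upper_text)
)

print(string_result, timestamp_result)
```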


## How was this patch tested?

Added new UTs to `TypeCoercionSuite`.

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#20774 from kiszk/SPARK-23549.

## What changes were proposed in this pull request?

This PR adds the TPCDS v2.7 (latest) queries to `TPCDSQuerySuite`, because the current `TPCDSQuerySuite` tests an older version (v1.4) and some queries differ between v1.4 and v2.7. Since the original v2.7 queries use syntax that Spark cannot parse, I changed these queries in the following ways:

- [date] + 14 days -> date + `INTERVAL` 14 days

- [column name] as "30 days" -> [column name] as \`30 days\`

- Fix some syntax errors, e.g., missing brackets
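The first two rewrites are mechanical enough to express as text substitutions. The sketch below is only an illustration of those two patterns; the PR does not say the queries were rewritten with a script, and the function name `rewrite_for_spark` and the sample fragments are assumptions.

```python
import re


def rewrite_for_spark(sql: str) -> str:
    """Apply the two mechanical rewrites listed above to a query fragment."""
    # <expr> + 14 days  ->  <expr> + INTERVAL 14 days
    sql = re.sub(r"([+-])\s*(\d+)\s+days\b", r"\1 INTERVAL \2 days", sql)
    # <column> as "30 days"  ->  <column> as `30 days`
    sql = re.sub(r'\b(as)\s+"([^"]+)"', r"\1 `\2`", sql, flags=re.IGNORECASE)
    return sql


print(rewrite_for_spark("d_date + 14 days"))
print(rewrite_for_spark('sum(x) as "30 days"'))
```

Fixes such as missing brackets cannot be handled this way and still need to be made by hand.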

## How was this patch tested?

Added tests in `TPCDSQuerySuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#20343 from maropu/TPCDSV2_7.

## What changes were proposed in this pull request?

The serializability test uses the same MemoryStream instance for 3 different queries. If any of those queries ask it to commit before the others have run, the rest will see empty dataframes. This can fail the test if q3 is affected.

We should use one instance per query instead.

## How was this patch tested?

Existing unit test. If I move q2.processAllAvailable() before starting q3, the test always fails without the fix.

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Closes#20896 from jose-torres/fixrace.

## What changes were proposed in this pull request?

We should provide a customized canonicalized plan for `InMemoryRelation` and `InMemoryTableScanExec`. Otherwise, we can wrongly treat two different cached plans as producing the same result, which in turn causes an exchange to be wrongly reused.

For a test query like this:

```scala

val cached = spark.createDataset(Seq(TestDataUnion(1, 2, 3), TestDataUnion(4, 5, 6))).cache()

val group1 = cached.groupBy("x").agg(min(col("y")) as "value")

val group2 = cached.groupBy("x").agg(min(col("z")) as "value")

group1.union(group2)

```

Canonicalized plans before:

First exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(1) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(1) InMemoryTableScan [none#0, none#1]

+- InMemoryRelation [x#4253, y#4254, z#4255], true, 10000, StorageLevel(disk, memory, deserialized, 1 replicas)

+- LocalTableScan [x#4253, y#4254, z#4255]

```

Second exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(3) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(3) InMemoryTableScan [none#0, none#1]

+- InMemoryRelation [x#4253, y#4254, z#4255], true, 10000, StorageLevel(disk, memory, deserialized, 1 replicas)

+- LocalTableScan [x#4253, y#4254, z#4255]

```

You can see that the canonicalized plans are the same, although the two `InMemoryTableScan`s use different columns.

Canonicalized plan after:

First exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(1) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(1) InMemoryTableScan [none#0, none#1]

+- InMemoryRelation [none#0, none#1, none#2], true, 10000, StorageLevel(memory, 1 replicas)

+- LocalTableScan [none#0, none#1, none#2]

```

Second exchange:

```

Exchange hashpartitioning(none#0, 5)

+- *(3) HashAggregate(keys=[none#0], functions=[partial_min(none#1)], output=[none#0, none#4])

+- *(3) InMemoryTableScan [none#0, none#2]

+- InMemoryRelation [none#0, none#1, none#2], true, 10000, StorageLevel(memory, 1 replicas)

+- LocalTableScan [none#0, none#1, none#2]

```
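The difference between the two sets of plans can be reproduced with a toy model of attribute normalization. This is not Spark's canonicalization code; `normalize_locally` and `normalize_against` are hypothetical helpers that only mimic the attribute lists printed above.

```python
def normalize_locally(attrs):
    """Model of the old behavior: renumber attributes by their position
    in the scan's own attribute list."""
    return [f"none#{i}" for i, _ in enumerate(attrs)]


def normalize_against(attrs, relation_output):
    """Model of the new behavior: renumber attributes by their ordinal
    in the cached relation's output."""
    return [f"none#{relation_output.index(a)}" for a in attrs]


relation_output = ["x#4253", "y#4254", "z#4255"]
scan1 = ["x#4253", "y#4254"]  # group1 aggregates over y
scan2 = ["x#4253", "z#4255"]  # group2 aggregates over z

# Old: both scans look identical, so the exchange above them can be reused.
print(normalize_locally(scan1), normalize_locally(scan2))

# New: the scans stay distinguishable after canonicalization.
print(normalize_against(scan1, relation_output),
      normalize_against(scan2, relation_output))
```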

## How was this patch tested?

Added unit test.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#20831 from viirya/SPARK-23614.

## What changes were proposed in this pull request?

As stated in Jira, there are problems with current `Uuid` expression which uses `java.util.UUID.randomUUID` for UUID generation.

This patch uses the newly added `RandomUUIDGenerator` for UUID generation. So we can make `Uuid` deterministic between retries.
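The idea of a seed-driven generator can be sketched in a few lines. This is not the algorithm used by `RandomUUIDGenerator`; it only shows, with Python's standard library and a hypothetical helper `random_uuids`, why a seeded generator yields the same UUIDs when a task is retried with the same seed.

```python
import random
import uuid


def random_uuids(seed: int, count: int):
    """Generate `count` version-4 UUIDs from a pseudo-random stream
    that is fully determined by `seed`."""
    rng = random.Random(seed)
    return [uuid.UUID(int=rng.getrandbits(128), version=4) for _ in range(count)]


first_attempt = random_uuids(seed=42, count=3)
retry = random_uuids(seed=42, count=3)

# A retry with the same seed reproduces the same values.
print(first_attempt == retry)
```

By contrast, a generator without a caller-supplied seed, such as `java.util.UUID.randomUUID`, returns different values on every call, so a retried task cannot reproduce its earlier output.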

## How was this patch tested?

Added unit tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#20861 from viirya/SPARK-23599-2.

## What changes were proposed in this pull request?

Currently we allow writing data frames with an empty schema into a file-based datasource for certain file formats such as JSON and ORC. For formats such as Parquet and Text, we raise an error at different times of execution. For the text format, we return an error from the driver early on in processing, whereas for formats such as Parquet, the error is raised from the executor.

**Example**

spark.emptyDataFrame.write.format("parquet").mode("overwrite").save(path)

**Results in**

``` SQL

org.apache.parquet.schema.InvalidSchemaException: Cannot write a schema with an empty group: message spark_schema {

}

at org.apache.parquet.schema.TypeUtil$1.visit(TypeUtil.java:27)

at org.apache.parquet.schema.TypeUtil$1.visit(TypeUtil.java:37)

at org.apache.parquet.schema.MessageType.accept(MessageType.java:58)

at org.apache.parquet.schema.TypeUtil.checkValidWriteSchema(TypeUtil.java:23)

at org.apache.parquet.hadoop.ParquetFileWriter.<init>(ParquetFileWriter.java:225)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:342)

at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:302)

at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)

at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:151)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$SingleDirectoryWriteTask.newOutputWriter(FileFormatWriter.scala:376)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$SingleDirectoryWriteTask.execute(FileFormatWriter.scala:387)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask$3.apply(FileFormatWriter.scala:278)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask$3.apply(FileFormatWriter.scala:276)

at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1411)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask(FileFormatWriter.scala:281)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$write$1.apply(FileFormatWriter.scala:206)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$write$1.apply(FileFormatWriter.scala:205)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)

at org.apache.spark.scheduler.Task.run(Task.scala:109)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.

```

In this PR, we unify the error processing and raise an error early on in processing when attempting to write a dataframe with an empty schema into a file-based datasource (ORC, Parquet, text, CSV, JSON, etc.).

## How was this patch tested?

Unit tests added in FileBasedDatasourceSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes#20579 from dilipbiswal/spark-23372.

## What changes were proposed in this pull request?

Output metrics were not filled in when the Parquet sink was used.

This PR fixes this problem by passing a `BasicWriteJobStatsTracker` in `FileStreamSink`.

## How was this patch tested?

Additional unit test added.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes#20745 from gaborgsomogyi/SPARK-23288.

## What changes were proposed in this pull request?

To fix `scala.MatchError` in `literals.sql.out`, this pr added an entry for `CalendarIntervalType` in `QueryExecution.toHiveStructString`.

## How was this patch tested?

Existing tests and added tests in `literals.sql`

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#20872 from maropu/FixIntervalTests.

## What changes were proposed in this pull request?

This PR proposes to add `lineSep` option for a configurable line separator in text datasource.

It supports this option by using `LineRecordReader`'s functionality with passing it to the constructor.
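As a rough illustration of what a configurable line separator means for a reader, the sketch below splits raw bytes on a caller-supplied separator. It does not use `LineRecordReader`; the function `read_lines` is hypothetical, and defaulting to `"\n"` only is a simplification made for this example.

```python
def read_lines(data: bytes, line_sep: bytes = b"\n"):
    """Split `data` into records on `line_sep` and decode each as UTF-8."""
    if not line_sep:
        raise ValueError("line_sep must not be empty")
    records = data.split(line_sep)
    # A trailing separator terminates the last record; it does not start
    # an extra empty one.
    if records and records[-1] == b"":
        records.pop()
    return [record.decode("utf-8") for record in records]


print(read_lines(b"a|b|c", line_sep=b"|"))
print(read_lines(b"first\nsecond\n"))
```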

## How was this patch tested?

Manual tests and unit tests were added.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes#20727 from HyukjinKwon/linesep-text.

## What changes were proposed in this pull request?

To drop `exprId`s for `Alias` in user-facing information, this PR adds an entry for `Alias` in `NonSQLExpression.sql`.

## How was this patch tested?

Added tests in `UDFSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#20827 from maropu/SPARK-23666.

## What changes were proposed in this pull request?

Report SinglePartition in DataSourceV2ScanExec when there's exactly 1 data reader factory.

Note that this means reader factories end up being constructed as partitioning is checked; let me know if you think that could be a problem.

## How was this patch tested?

existing unit tests

Author: Jose Torres <jose@databricks.com>

Author: Jose Torres <torres.joseph.f+github@gmail.com>

Closes#20726 from jose-torres/SPARK-23574.

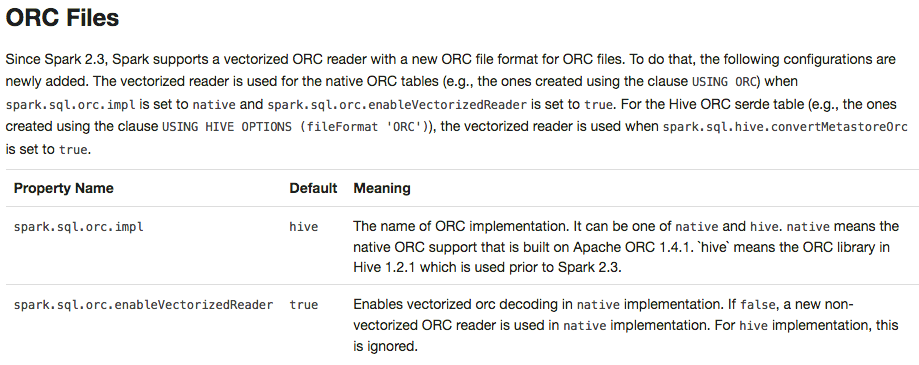

## What changes were proposed in this pull request?

Currently, some tests have an assumption that `spark.sql.sources.default=parquet`. In fact, that is a correct assumption, but that assumption makes it difficult to test new data source format.

This PR aims to

- Make test suites more robust and make it easy to test new data sources in the future.

- Test new native ORC data source with the full existing Apache Spark test coverage.

As an example, the PR uses `spark.sql.sources.default=orc` during reviews. The value should be `parquet` when this PR is accepted.

## How was this patch tested?

Pass the Jenkins with updated tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#20705 from dongjoon-hyun/SPARK-23553.

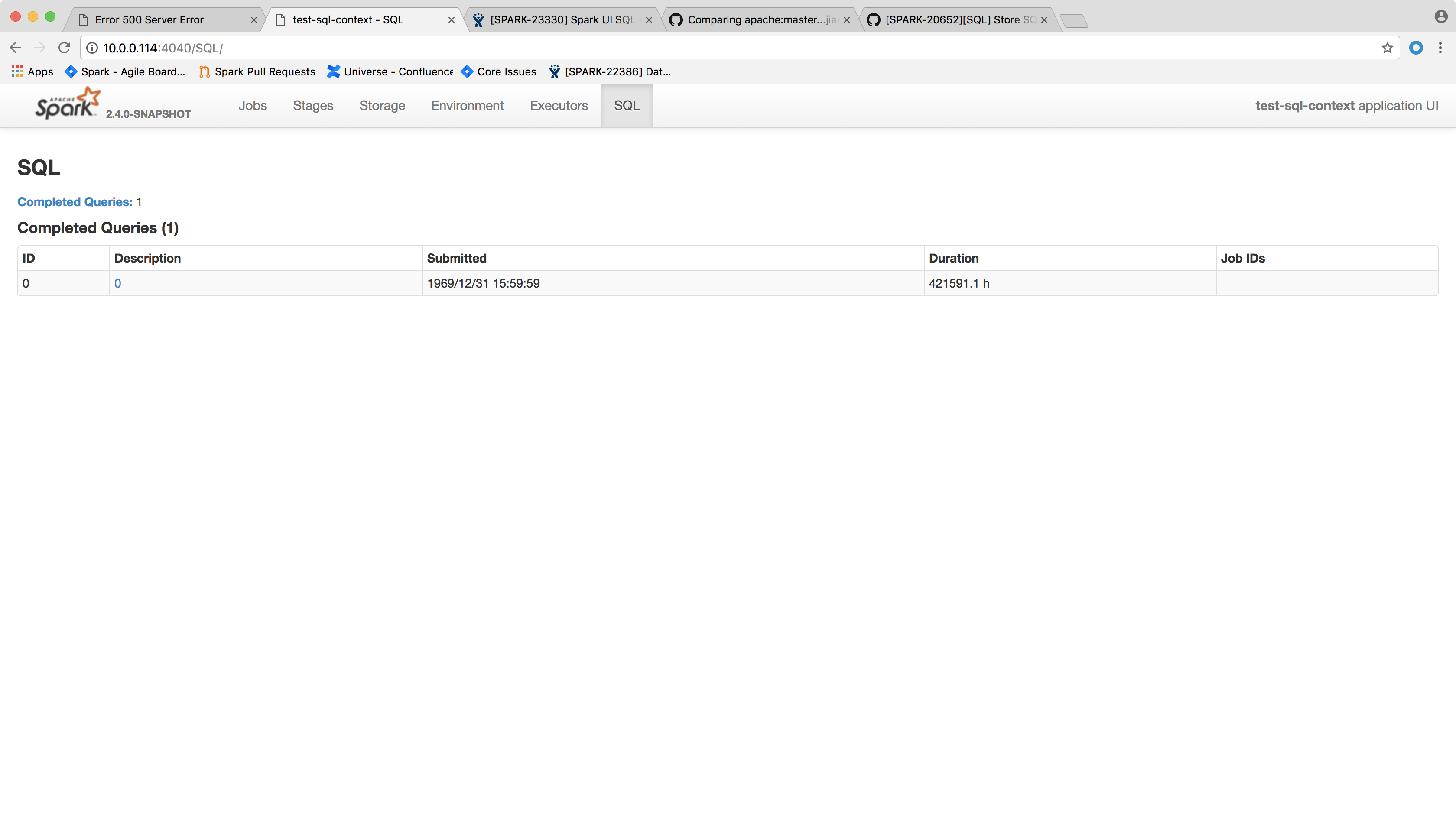

Clean up SparkPlanGraphWrapper objects from InMemoryStore together with cleaning up SQLExecutionUIData

existing unit test was extended to check also SparkPlanGraphWrapper object count

vanzin

Author: myroslavlisniak <acnipin@gmail.com>

Closes#20813 from myroslavlisniak/master.

## What changes were proposed in this pull request?

As discussed in #20675, we need to add a new interface `ContinuousDataReaderFactory` to support the requirement of setting the start offset in Continuous Processing.

## How was this patch tested?

Existing UT.

Author: Yuanjian Li <xyliyuanjian@gmail.com>

Closes#20689 from xuanyuanking/SPARK-23533.

## What changes were proposed in this pull request?

Revise doc of method pushFilters in SupportsPushDownFilters/SupportsPushDownCatalystFilters

In `FileSourceStrategy`, all filters except `partitionKeyFilters` (whose references are a subset of the partition keys) need to be evaluated after scanning. Otherwise, Spark will get wrong results from data sources like ORC/Parquet.

This PR is to improve the doc.

Author: Wang Gengliang <gengliang.wang@databricks.com>

Closes#20769 from gengliangwang/revise_pushdown_doc.

## What changes were proposed in this pull request?

The from_json() function accepts an additional parameter, where the user might specify the schema. The issue is that the specified schema might not be compatible with the data. In particular, the JSON data might be missing data for fields declared as non-nullable in the schema. The from_json() function does not verify the data against such errors. When data with missing fields is sent to the Parquet encoder, there is no verification either. The end result is a corrupt Parquet file.

To avoid corruptions, make sure that all fields in the user-specified schema are set to be nullable.

Since this changes the behavior of a public function, we need to include it in release notes.

The behavior can be reverted by setting `spark.sql.fromJsonForceNullableSchema=false`
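Forcing a schema to be nullable is a recursive walk over the type tree. The sketch below shows that walk on plain dictionaries shaped like Spark's JSON schema representation (`fields`, `elementType`, `containsNull`, `valueContainsNull`); the function `as_nullable` is a hypothetical helper, not the code added by this PR.

```python
def as_nullable(data_type):
    """Return a copy of `data_type` in which every field, array element
    and map value is marked nullable, at every nesting level."""
    if not isinstance(data_type, dict):
        return data_type  # primitive type name such as "string"
    kind = data_type.get("type")
    if kind == "struct":
        return {**data_type, "fields": [
            {**field, "type": as_nullable(field["type"]), "nullable": True}
            for field in data_type["fields"]
        ]}
    if kind == "array":
        return {**data_type,
                "elementType": as_nullable(data_type["elementType"]),
                "containsNull": True}
    if kind == "map":
        return {**data_type,
                "valueType": as_nullable(data_type["valueType"]),
                "valueContainsNull": True}
    return data_type


user_schema = {"type": "struct", "fields": [
    {"name": "a", "type": "integer", "nullable": False, "metadata": {}},
    {"name": "b",
     "type": {"type": "array", "elementType": "string", "containsNull": False},
     "nullable": False, "metadata": {}},
]}

safe_schema = as_nullable(user_schema)
print([field["nullable"] for field in safe_schema["fields"]])
```

With every field nullable, a record that lacks `a` can be represented with a null instead of violating the declared schema.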

## How was this patch tested?

Added two new tests.

Author: Michał Świtakowski <michal.switakowski@databricks.com>

Closes#20694 from mswit-databricks/SPARK-23173.

## What changes were proposed in this pull request?

Below are the two cases.

Case 1

``` SQL

scala> List.empty[String].toDF().rdd.partitions.length

res18: Int = 1

```

When we write the above data frame as parquet, we create a parquet file containing

just the schema of the data frame.

Case 2

``` SQL

scala> val anySchema = StructType(StructField("anyName", StringType, nullable = false) :: Nil)

anySchema: org.apache.spark.sql.types.StructType = StructType(StructField(anyName,StringType,false))

scala> spark.read.schema(anySchema).csv("/tmp/empty_folder").rdd.partitions.length

res22: Int = 0

```

For the 2nd case, since number of partitions = 0, we don't call the write task (the task has logic to create the empty metadata only parquet file)

The fix is to create a dummy single partition RDD and set up the write task based on it to ensure

the metadata-only file.
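The two cases and the fix can be modeled with a writer that runs one task per partition. This is a toy model, not the writer code touched by this PR; `write_dataset` and `write_dataset_fixed` are hypothetical names, and a "file" is just a dictionary holding the schema and the rows.

```python
def write_dataset(partitions, schema):
    """Run one write task per partition; each task emits one file that
    carries the schema plus the partition's rows."""
    return [{"schema": schema, "rows": list(rows)} for rows in partitions]


def write_dataset_fixed(partitions, schema):
    """Same as above, but substitute a single empty partition when there
    are none, so that a metadata-only file is still written."""
    effective = partitions if partitions else [[]]
    return write_dataset(effective, schema)


schema = ["anyName"]

# Case 1: one empty partition -> one file containing only the schema.
print(write_dataset([[]], schema))

# Case 2: zero partitions -> no task runs, so no file is written.
print(write_dataset([], schema))

# With the fix, case 2 also produces the metadata-only file.
print(write_dataset_fixed([], schema))
```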

## How was this patch tested?

A new test is added to DataframeReaderWriterSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes#20525 from dilipbiswal/spark-23271.

## What changes were proposed in this pull request?

There was a bug in `calculateParamLength` which caused it to always return 1 + the number of expressions. This could lead to exceptions, especially with expressions of type long.
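The reason `long` matters is that the JVM counts method parameters in slots: `long` and `double` take two slots each, `this` takes one, and a method may use at most 255. The sketch below contrasts the buggy count with a slot-aware one; it is a Python model with made-up function names, not the Scala code from this PR.

```python
MAX_JVM_METHOD_PARAMS_LENGTH = 255  # JVM limit on parameter slots per method


def param_slots(java_type: str) -> int:
    """long and double occupy two slots; every other type occupies one."""
    return 2 if java_type in ("long", "double") else 1


def buggy_param_length(param_types) -> int:
    """Model of the bug: 1 (for `this`) + the number of expressions."""
    return 1 + len(param_types)


def calculate_param_length(param_types) -> int:
    """Slot-aware count: 1 (for `this`) + the slots of each parameter."""
    return 1 + sum(param_slots(t) for t in param_types)


params = ["long"] * 128

# The buggy count stays under the limit, so the method would be generated...
print(buggy_param_length(params) <= MAX_JVM_METHOD_PARAMS_LENGTH)

# ...although the real slot count exceeds what the JVM accepts.
print(calculate_param_length(params) <= MAX_JVM_METHOD_PARAMS_LENGTH)
```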

## How was this patch tested?

added UT + fixed previous UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#20772 from mgaido91/SPARK-23628.

## What changes were proposed in this pull request?

This PR proposes to support an alternative function form with group aggregate pandas UDF.

The current form:

```

def foo(pdf):

return ...

```

Takes a single arg that is a pandas DataFrame.

With this PR, an alternative form is supported:

```

def foo(key, pdf):

return ...

```

The alternative form takes two arguments: a tuple that represents the grouping key, and a pandas DataFrame that represents the data.
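One way to support both forms is to inspect the function's arity and pass the key only when two parameters are declared. The sketch below shows that dispatch with the standard library only. It is an assumption about the mechanism rather than PySpark's code, the helper `apply_per_group` is hypothetical, and each group is a list of dicts instead of a pandas DataFrame to keep the example dependency-free.

```python
import inspect
from itertools import groupby


def apply_per_group(rows, key_cols, func):
    """Group `rows` by `key_cols` and call `func` once per group, passing
    the grouping key as a tuple when `func` declares two parameters."""
    n_args = len(inspect.signature(func).parameters)

    def key_of(row):
        return tuple(row[c] for c in key_cols)

    results = []
    for key, group in groupby(sorted(rows, key=key_of), key=key_of):
        data = list(group)
        results.append(func(key, data) if n_args == 2 else func(data))
    return results


rows = [{"id": 1, "v": 1.0}, {"id": 1, "v": 2.0}, {"id": 2, "v": 3.0}]


def total(pdf):
    # Current form: only the group's data is passed in.
    return sum(r["v"] for r in pdf)


def keyed_total(key, pdf):
    # Alternative form: the grouping key arrives as a tuple.
    return key + (sum(r["v"] for r in pdf),)


print(apply_per_group(rows, ["id"], total))
print(apply_per_group(rows, ["id"], keyed_total))
```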

## How was this patch tested?

GroupbyApplyTests

Author: Li Jin <ice.xelloss@gmail.com>

Closes#20295 from icexelloss/SPARK-23011-groupby-apply-key.

## What changes were proposed in this pull request?

The following query doesn't work as expected:

```

CREATE EXTERNAL TABLE ext_table(a STRING, b INT, c STRING) PARTITIONED BY (d STRING)

LOCATION 'sql/core/spark-warehouse/ext_table';

ALTER TABLE ext_table CHANGE a a STRING COMMENT "new comment";

DESC ext_table;

```

The comment of column `a` is not updated because `HiveExternalCatalog.doAlterTable` ignores table schema changes. To fix the issue, we should call `doAlterTableDataSchema` instead of `doAlterTable`.

## How was this patch tested?

Updated `DDLSuite.testChangeColumn`.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#20696 from jiangxb1987/alterColumnComment.

A few different things going on:

- Remove unused methods.

- Move JSON methods to the only class that uses them.

- Move test-only methods to TestUtils.

- Make getMaxResultSize() a config constant.

- Reuse functionality from existing libraries (JRE or JavaUtils) where possible.

The change also includes changes to a few tests to call `Utils.createTempFile` correctly,

so that temp dirs are created under the designated top-level temp dir instead of

potentially polluting git index.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#20706 from vanzin/SPARK-23550.


## What changes were proposed in this pull request?

Parquet 1.9 will change the semantics of Statistics.isEmpty slightly

to reflect if the null value count has been set. That breaks a

timestamp interoperability test that cares only about whether there

are column values present in the statistics of a written file for an

INT96 column. Fix by using Statistics.hasNonNullValue instead.

## How was this patch tested?

Unit tests continue to pass against Parquet 1.8, and also pass against

a Parquet build including PARQUET-1217.

Author: Henry Robinson <henry@cloudera.com>

Closes#20740 from henryr/spark-23604.

## What changes were proposed in this pull request?

Add an epoch ID argument to DataWriterFactory for use in streaming. As a side effect of passing in this value, DataWriter will now have a consistent lifecycle; commit() or abort() ends the lifecycle of a DataWriter instance in any execution mode.

I considered making a separate streaming interface and adding the epoch ID only to that one, but I think it requires a lot of extra work for no real gain. I think it makes sense to define epoch 0 as the one and only epoch of a non-streaming query.

## How was this patch tested?

existing unit tests

Author: Jose Torres <jose@databricks.com>

Closes#20710 from jose-torres/api2.

## What changes were proposed in this pull request?

Provide more details in the documentation of the trigonometric functions. The descriptions reference `java.lang.Math` for further details.

## How was this patch tested?

Ran full build, checked generated documentation manually

Author: Mihaly Toth <misutoth@gmail.com>

Closes#20618 from misutoth/trigonometric-doc.

## What changes were proposed in this pull request?

The current `CodegenContext` class also contains immutable values and methods that do not depend on mutable state.

This refactoring moves them to `CodeGenerator` object class which can be accessed from anywhere without an instantiated `CodegenContext` in the program.

## How was this patch tested?

Existing tests

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes#20700 from kiszk/SPARK-23546.

## What changes were proposed in this pull request?

In https://github.com/apache/spark/pull/20679 I missed a few places in SQL tests.

For hygiene, they should also use the sessionState interface where possible.

## How was this patch tested?

Modified existing tests.

Author: Juliusz Sompolski <julek@databricks.com>

Closes#20718 from juliuszsompolski/SPARK-23514-followup.

## What changes were proposed in this pull request?

This PR moves structured streaming text socket source to V2.

Questions: do we need to remove old "socket" source?

## How was this patch tested?

Unit test and manual verification.

Author: jerryshao <sshao@hortonworks.com>

Closes#20382 from jerryshao/SPARK-23097.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18944 added a patch that allowed a Spark session to be created when the Hive metastore server is down. However, it did not allow running any commands with the Spark session. This causes trouble for users who only want to read / write data frames without a metastore setup.

## How was this patch tested?

Added some unit tests to read and write data frames based on the original HiveMetastoreLazyInitializationSuite.


Author: Feng Liu <fengliu@databricks.com>

Closes#20681 from liufengdb/completely-lazy.

## What changes were proposed in this pull request?

Inside `OptimizeMetadataOnlyQuery.getPartitionAttrs`, avoid using `zip` to generate attribute map.

Also include other minor update of comments and format.

## How was this patch tested?

Existing test cases.

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes#20693 from jiangxb1987/SPARK-23523.

## What changes were proposed in this pull request?

A few places in `spark-sql` were using `sc.hadoopConfiguration` directly. They should be using `sessionState.newHadoopConf()` to blend in configs that were set through `SQLConf`.

Also, for better UX, for these configs blended in from `SQLConf`, we should consider removing the `spark.hadoop` prefix, so that the settings are recognized whether or not they were specified by the user.

## How was this patch tested?

Tested that AlterTableRecoverPartitions now correctly recognizes settings that are passed in to the FileSystem through SQLConf.

Author: Juliusz Sompolski <julek@databricks.com>

Closes#20679 from juliuszsompolski/SPARK-23514.

## What changes were proposed in this pull request?

Clarify JSON and CSV reader behavior in document.

JSON doesn't support partial results for corrupted records.

CSV only supports partial results for records with more or fewer tokens than expected.

## How was this patch tested?

Pass existing tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#20666 from viirya/SPARK-23448-2.

## What changes were proposed in this pull request?

```Scala

val tablePath = new File(s"${path.getCanonicalPath}/cOl3=c/cOl1=a/cOl5=e")

Seq(("a", "b", "c", "d", "e")).toDF("cOl1", "cOl2", "cOl3", "cOl4", "cOl5")

.write.json(tablePath.getCanonicalPath)

val df = spark.read.json(path.getCanonicalPath).select("CoL1", "CoL5", "CoL3").distinct()

df.show()

```

It generates a wrong result.

```

[c,e,a]

```

We have a bug in the rule `OptimizeMetadataOnlyQuery`. We should respect the attribute order in the original leaf node. This PR fixes it.

## How was this patch tested?

Added a test case

Author: gatorsmile <gatorsmile@gmail.com>

Closes#20684 from gatorsmile/optimizeMetadataOnly.

## What changes were proposed in this pull request?

Refactor ColumnStat to be more flexible.

* Split `ColumnStat` and `CatalogColumnStat` just like `CatalogStatistics` is split from `Statistics`. This detaches how the statistics are stored from how they are processed in the query plan. `CatalogColumnStat` keeps `min` and `max` as `String`, making it not depend on dataType information.

* For `CatalogColumnStat`, parse column names from property names in the metastore (`KEY_VERSION` property), not from metastore schema. This means that `CatalogColumnStat`s can be created for columns even if the schema itself is not stored in the metastore.

* Make all fields optional. `min`, `max` and `histogram` for columns were optional already. Having them all optional is more consistent, and gives flexibility to e.g. drop some of the fields through transformations if they are difficult / impossible to calculate.

The added flexibility will make it possible to have alternative implementations for stats, and separates stats collection from stats and estimation processing in plans.

## How was this patch tested?

Refactored existing tests to work with refactored `ColumnStat` and `CatalogColumnStat`.

New tests added in `StatisticsSuite` checking that backwards / forwards compatibility is not broken.

Author: Juliusz Sompolski <julek@databricks.com>

Closes #20624 from juliuszsompolski/SPARK-23445.

## What changes were proposed in this pull request?

Remove `queryExecutionThread.interrupt()` from `ContinuousExecution`. As detailed in the JIRA, interrupting the thread is only relevant in the microbatch case; for continuous processing the query execution can quickly clean itself up without it.

## How was this patch tested?

existing tests

Author: Jose Torres <jose@databricks.com>

Closes #20622 from jose-torres/SPARK-23441.

## What changes were proposed in this pull request?

This PR avoids printing internal schema information when an unknown column is specified as a partition column. Instead, it prints the column names in the schema in a more readable format.

The following is an example.

Source code

```
test("save with an unknown partition column") {
  withTempDir { dir =>
    val path = dir.getCanonicalPath
    Seq(1L -> "a").toDF("i", "j").write
      .format("parquet")
      .partitionBy("unknownColumn")
      .save(path)
  }
}
```

Output without this PR

```

Partition column unknownColumn not found in schema StructType(StructField(i,LongType,false), StructField(j,StringType,true));

```

Output with this PR

```

Partition column unknownColumn not found in schema struct<i:bigint,j:string>;

```

## How was this patch tested?

Manually tested

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #20653 from kiszk/SPARK-23459.

**The best way to review this PR is to ignore whitespace/indent changes. Use this link - https://github.com/apache/spark/pull/20650/files?w=1**

## What changes were proposed in this pull request?

The stream-stream join tests add data to multiple sources and expect it all to show up in the next batch. But there's a race condition; the new batch might trigger when only one of the AddData actions has been reached.

A prior attempt to solve this issue by jose-torres in #20646 tried to synchronize on all memory sources together when consecutive AddData actions were found. However, this carries the risk of deadlock as well as unintended modification of stress tests (see the above PR for a detailed explanation). Instead, this PR attempts the following.

- A new action called `StreamProgressBlockedActions` that allows multiple actions to be executed while the streaming query is blocked from making progress. This allows data to be added to multiple sources that are made visible simultaneously in the next batch.

- An alias of `StreamProgressBlockedActions` called `MultiAddData` is explicitly used in the `Streaming*JoinSuites` to add data to two memory sources simultaneously.

This should avoid unintentional modification of the stress tests (or any other test for that matter) while making sure that the flaky tests are deterministic.
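
The idea of blocking progress while several actions run can be sketched as follows. This is a minimal model under assumptions, not the test harness code: `Source`, `whileProgressBlocked` and `nextBatch` are hypothetical names, and a single `ReentrantLock` stands in for whatever mechanism blocks the streaming query.

```scala
import java.util.concurrent.locks.ReentrantLock
import scala.collection.mutable.ArrayBuffer

object BlockedActionsSketch {
  // Hypothetical stand-in for a memory source.
  final class Source { val data = ArrayBuffer.empty[Int] }

  private val progressLock = new ReentrantLock()

  // Runs all actions while batch construction is blocked.
  def whileProgressBlocked(actions: (() => Unit)*): Unit = {
    progressLock.lock()
    try actions.foreach(_.apply())
    finally progressLock.unlock()
  }

  // A "batch" snapshots both sources under the same lock, so it sees either
  // none or all of the data added inside whileProgressBlocked.
  def nextBatch(left: Source, right: Source): (List[Int], List[Int]) = {
    progressLock.lock()
    try (left.data.toList, right.data.toList)
    finally progressLock.unlock()
  }
}
```

With this shape, adding to two sources is one call, e.g. `whileProgressBlocked(() => left.data += 1, () => right.data += 2)`, and no batch can observe only the first addition.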

## How was this patch tested?

Modified test cases in `Streaming*JoinSuites` where there are consecutive `AddData` actions.

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20650 from tdas/SPARK-23408.

## What changes were proposed in this pull request?

For CreateTable with Append mode, we should check in `PreprocessTableCreation` whether `storage.locationUri` is the same as that of the existing table.

In the current code, there is only a generic exception if `storage.locationUri` differs from that of the existing table:

`org.apache.spark.sql.AnalysisException: Table or view not found:`

which can be improved.

## How was this patch tested?

Unit test

Author: Wang Gengliang <gengliang.wang@databricks.com>

Closes #20660 from gengliangwang/locationUri.

## What changes were proposed in this pull request?

DataSourceV2 initially allowed user-supplied schemas when a source doesn't implement `ReadSupportWithSchema`, as long as the schema was identical to the source's schema. This is confusing behavior because changes to an underlying table can cause a previously working job to fail with an exception that user-supplied schemas are not allowed.

This reverts commit adcb25a0624, which was added to #20387 so that it could be removed in a separate JIRA issue and PR.

## How was this patch tested?

Existing tests.

Author: Ryan Blue <blue@apache.org>

Closes #20603 from rdblue/SPARK-23418-revert-adcb25a0624.

## What changes were proposed in this pull request?

This PR always adds `codegenStageId` in a comment of the generated class. This is a replication of #20419 for post-Spark 2.3.

Closes #20419

```
/* 001 */ public Object generate(Object[] references) {
/* 002 */   return new GeneratedIteratorForCodegenStage1(references);
/* 003 */ }
/* 004 */
/* 005 */ // codegenStageId=1
/* 006 */ final class GeneratedIteratorForCodegenStage1 extends org.apache.spark.sql.execution.BufferedRowIterator {
/* 007 */   private Object[] references;
...
```

## How was this patch tested?

Existing tests

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #20612 from kiszk/SPARK-23424.

## What changes were proposed in this pull request?

In a kerberized cluster, when Spark reads a file path (e.g. `people.json`), it logs a misleading warning while looking up `people.json/_spark_metadata`. The root cause is a difference between `LocalFileSystem` and `DistributedFileSystem`: `LocalFileSystem.exists()` returns `false`, but `DistributedFileSystem.exists()` raises `org.apache.hadoop.security.AccessControlException`.

```scala
scala> spark.version
res0: String = 2.4.0-SNAPSHOT

scala> spark.read.json("file:///usr/hdp/current/spark-client/examples/src/main/resources/people.json").show
+----+-------+
| age|   name|
+----+-------+
|null|Michael|
|  30|   Andy|
|  19| Justin|
+----+-------+

scala> spark.read.json("hdfs:///tmp/people.json")
18/02/15 05:00:48 WARN streaming.FileStreamSink: Error while looking for metadata directory.
18/02/15 05:00:48 WARN streaming.FileStreamSink: Error while looking for metadata directory.
```

After this PR,

```scala
scala> spark.read.json("hdfs:///tmp/people.json").show
+----+-------+
| age|   name|
+----+-------+
|null|Michael|
|  30|   Andy|
|  19| Justin|
+----+-------+
```

## How was this patch tested?

Manual.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20616 from dongjoon-hyun/SPARK-23434.

## What changes were proposed in this pull request?

SPARK-23203: DataSourceV2 should use immutable catalyst trees instead of wrapping a mutable DataSourceV2Reader. This commit updates DataSourceV2Relation and consolidates much of the DataSourceV2 API requirements for the read path in it. Instead of wrapping a reader that changes, the relation lazily produces a reader from its configuration.

This commit also updates the predicate and projection push-down. Instead of the implementation from SPARK-22197, this reuses the rule matching from the Hive and DataSource read paths (using `PhysicalOperation`) and copies most of the implementation of `SparkPlanner.pruneFilterProject`, with updates for DataSourceV2. By reusing the implementation from other read paths, this should have fewer regressions from other read paths and is less code to maintain.

The new push-down rules also support the following edge cases:

* The output of DataSourceV2Relation should be what is returned by the reader, in case the reader can only partially satisfy the requested schema projection

* The requested projection passed to the DataSourceV2Reader should include filter columns

* The push-down rule may be run more than once if filters are not pushed through projections
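
The first two edge cases above can be illustrated with a small model. This is only a sketch under assumptions: `Filter`, `Reader`, `requestedColumns` and `prune` are hypothetical names and do not correspond to the DataSourceV2 interfaces.

```scala
object PushDownSketch {
  // Hypothetical filter model: a filter only knows which column it references.
  final case class Filter(column: String)

  // Columns requested from the reader: the projection first, then any filter
  // columns that the projection does not already contain.
  def requestedColumns(projection: Seq[String], filters: Seq[Filter]): Seq[String] =
    (projection ++ filters.map(_.column)).distinct

  // Hypothetical reader that may be unable to prune columns at all.
  final class Reader(fullSchema: Seq[String], canPrune: Boolean) {
    // Returns the schema the reader will actually produce, which is what
    // the relation should report as its output.
    def prune(requested: Seq[String]): Seq[String] =
      if (canPrune) fullSchema.filter(requested.contains) else fullSchema
  }
}
```

A reader that cannot prune returns its full schema, so the relation's output has to come from the reader's answer rather than from the requested projection.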

## How was this patch tested?

Existing push-down and read tests.

Author: Ryan Blue <blue@apache.org>

Closes #20387 from rdblue/SPARK-22386-push-down-immutable-trees.

## What changes were proposed in this pull request?

Before the patch, Spark could infer as Date a partition value which cannot be cast to Date (this can happen when there are extra characters after a valid date, like `2018-02-15AAA`).

When this happens and the input format has metadata which defines the schema of the table, `null` is returned as the value for the partition column, because the `cast` operator used in `PartitioningAwareFileIndex.inferPartitioning` is unable to convert the value.

The PR checks during partition inference that values can be cast to Date or Timestamp before inferring that data type for them.
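
The mismatch between a lenient parse and a strict conversion can be reproduced with plain JDK classes. The sketch below is an analogy, not the Spark implementation: `prefixParses`, `castsToDate` and `inferType` are hypothetical helpers, and `LocalDate.parse` merely stands in for Spark's `cast`.

```scala
import java.text.{ParsePosition, SimpleDateFormat}
import java.time.LocalDate
import scala.util.Try

object PartitionDateSketch {
  // Lenient check: succeeds as soon as a leading date parses, ignoring
  // any trailing characters.
  def prefixParses(raw: String): Boolean =
    new SimpleDateFormat("yyyy-MM-dd").parse(raw, new ParsePosition(0)) != null

  // Strict check, standing in for "the value can be cast to Date".
  def castsToDate(raw: String): Boolean =
    Try(LocalDate.parse(raw)).isSuccess

  // Infer a date type only when the strict check also passes; otherwise
  // fall back to string.
  def inferType(raw: String): String =
    if (prefixParses(raw) && castsToDate(raw)) "date" else "string"
}
```

Here `2018-02-15AAA` passes the lenient check but fails the strict one, so it is treated as a string instead of becoming a `null` date later.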

## How was this patch tested?

added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20621 from mgaido91/SPARK-23436.

## What changes were proposed in this pull request?

`ParquetFileFormat` leaks opened files in some cases. This PR prevents that by registering task completion listeners before initialization.

- [spark-branch-2.3-test-sbt-hadoop-2.7](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-branch-2.3-test-sbt-hadoop-2.7/205/testReport/org.apache.spark.sql/FileBasedDataSourceSuite/_It_is_not_a_test_it_is_a_sbt_testing_SuiteSelector_/)

- [spark-master-test-sbt-hadoop-2.6](https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-sbt-hadoop-2.6/4228/testReport/junit/org.apache.spark.sql.execution.datasources.parquet/ParquetQuerySuite/_It_is_not_a_test_it_is_a_sbt_testing_SuiteSelector_/)

```
Caused by: sbt.ForkMain$ForkError: java.lang.Throwable: null
	at org.apache.spark.DebugFilesystem$.addOpenStream(DebugFilesystem.scala:36)
	at org.apache.spark.DebugFilesystem.open(DebugFilesystem.scala:70)
	at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:769)
	at org.apache.parquet.hadoop.ParquetFileReader.<init>(ParquetFileReader.java:538)
	at org.apache.spark.sql.execution.datasources.parquet.SpecificParquetRecordReaderBase.initialize(SpecificParquetRecordReaderBase.java:149)
	at org.apache.spark.sql.execution.datasources.parquet.VectorizedParquetRecordReader.initialize(VectorizedParquetRecordReader.java:133)
	at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anonfun$buildReaderWithPartitionValues$1.apply(ParquetFileFormat.scala:400)
	at
```
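
Why the registration order matters can be shown with a small model. This is a sketch under assumptions: `TaskContextSketch`, `ReaderSketch` and `openReader` are hypothetical stand-ins, not the Spark or Parquet classes named in the stack trace.

```scala
import scala.collection.mutable.ArrayBuffer

object ListenerOrderSketch {
  // Hypothetical stand-in for a task context that runs callbacks at task end.
  final class TaskContextSketch {
    private val listeners = ArrayBuffer.empty[() => Unit]
    def addCompletionListener(f: () => Unit): Unit = { listeners += f }
    def markCompleted(): Unit = listeners.foreach(_.apply())
  }

  // Hypothetical reader whose initialize() opens a file and may then fail.
  final class ReaderSketch(failOnInit: Boolean) {
    var open = false
    def initialize(): Unit = {
      open = true
      if (failOnInit) throw new RuntimeException("init failed after open")
    }
    def close(): Unit = { open = false }
  }

  def openReader(ctx: TaskContextSketch, reader: ReaderSketch): Unit = {
    // Register cleanup first, so it still runs if initialize() throws.
    ctx.addCompletionListener(() => reader.close())
    reader.initialize()
  }
}
```

If the listener were registered after `initialize()`, an exception thrown during initialization would skip the registration and the opened file would never be closed.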

## How was this patch tested?

Manual. The following test case generates the same leakage.