8 commits

| Author | SHA1 | Message | Date | |

|---|---|---|---|---|

|

|

ebf01ec3c1 |

[SPARK-34950][TESTS] Update benchmark results to the ones created by GitHub Actions machines

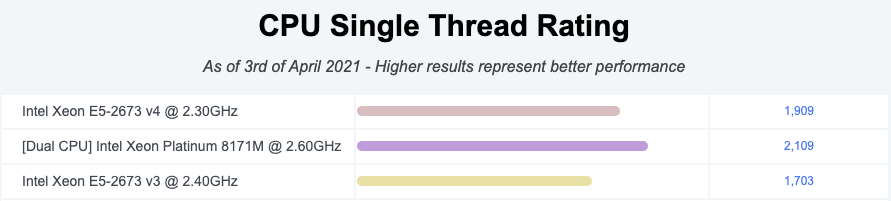

### What changes were proposed in this pull request?

https://github.com/apache/spark/pull/32015 added a way to run benchmarks much more easily in the same GitHub Actions build. This PR updates the benchmark results using that workflow.

**NOTE**: based on my observations, GitHub Actions appears to use four types of CPU:

- Intel(R) Xeon(R) Platinum 8171M CPU 2.60GHz
- Intel(R) Xeon(R) CPU E5-2673 v4 2.30GHz
- Intel(R) Xeon(R) CPU E5-2673 v3 2.40GHz
- Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz

From some quick research, they seem to perform roughly similarly.

I couldn't find enough information about Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz, but its performance seems roughly similar given the numbers.

So this shouldn't be a big deal, especially given that this approach is much easier, encourages contributors to run benchmarks more often, and guarantees the same number of cores and the same memory with the same software.

### Why are the changes needed?

To have a baseline for the benchmarks.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

The results were generated from:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)
- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

Closes #32044 from HyukjinKwon/SPARK-34950.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

|

||

|

|

c1f160e097 |

[SPARK-30648][SQL] Support filters pushdown in JSON datasource

### What changes were proposed in this pull request?

In the PR, I propose to support pushed-down filters in the JSON datasource. The reason for pushing a filter down to `JacksonParser` is to apply the filter as soon as all its attributes become available, i.e. have been converted from JSON field values to the desired values according to the schema. This makes it possible to skip parsing the rest of the JSON record and converting the other values if the filter returns `false`. This can improve performance when the pushed filters are highly selective and the conversion of JSON string fields to the desired values is comparatively expensive (for example, the conversion to `TIMESTAMP` values).

The main idea behind `JsonFilters` is to group pushed-down filters by their references, convert the grouped filters to expressions, and then compile them to predicates. The predicates are indexed by schema field positions. Each predicate has a state with a reference counter of row fields that have not been set yet. As soon as the counter reaches `0`, the predicate can be applied to the row because all its dependencies have been set. Before a new row is processed, each predicate's reference counter is reset to the total number of predicate references (dependencies in a row).

The common code shared between `CSVFilters` and `JsonFilters` is moved to the `StructFilters` class and its companion object.
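The reference-counter scheme described above can be sketched in plain Python. This is only an illustration under stated assumptions, not the Spark implementation: the names `CountedPredicate`, `RowFilters`, and `parse_record` are hypothetical, predicates are ordinary Python callables rather than compiled Catalyst expressions, and value conversion is reduced to a plain assignment.

```python
from typing import Any, Callable, Dict, List, Optional, Sequence


class CountedPredicate:
    """Hypothetical predicate with a counter of referenced fields not yet set."""

    def __init__(self, refs: Sequence[int], test: Callable[[List[Any]], bool]):
        self.refs = tuple(refs)          # distinct field positions the predicate reads
        self.test = test                 # evaluated once all referenced fields are set
        self.remaining = len(self.refs)

    def reset(self) -> None:
        self.remaining = len(self.refs)


class RowFilters:
    """Hypothetical stand-in for the scheme described for JsonFilters."""

    def __init__(self, predicates: Sequence[CountedPredicate], num_fields: int):
        self.predicates = list(predicates)
        # Index predicates by the schema positions they reference.
        self.by_position: List[List[CountedPredicate]] = [[] for _ in range(num_fields)]
        for predicate in self.predicates:
            for position in predicate.refs:
                self.by_position[position].append(predicate)

    def reset(self) -> None:
        # Called before each new row.
        for predicate in self.predicates:
            predicate.reset()

    def skip_row(self, row: List[Any], position: int) -> bool:
        """Call after row[position] is set; True means the row can be dropped."""
        for predicate in self.by_position[position]:
            predicate.remaining -= 1
            if predicate.remaining == 0 and not predicate.test(row):
                return True
        return False


def parse_record(record: Dict[str, Any], schema: Sequence[str],
                 filters: RowFilters, converted: List[str]) -> Optional[List[Any]]:
    """Fill a row field by field and stop as soon as a filter rejects it."""
    position_of = {name: i for i, name in enumerate(schema)}
    filters.reset()
    row: List[Any] = [None] * len(schema)
    for name, raw in record.items():
        position = position_of.get(name)
        if position is None:
            continue                     # field is not part of the schema
        row[position] = raw              # a real parser would convert the value here
        converted.append(name)           # records which fields were actually processed
        if filters.skip_row(row, position):
            return None                  # remaining fields are never converted
    return row


filters = RowFilters([CountedPredicate([0], lambda row: row[0] > 10)], num_fields=2)
converted: List[str] = []
print(parse_record({"id": 1, "ts": "1970-01-01T01:02:03"}, ["id", "ts"], filters, converted))  # None
print(converted)  # ['id']
```

In the usage at the end, the filter on `id` rejects the record before the `ts` field is touched, which is where the saving comes from when the skipped conversion is expensive.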

### Why are the changes needed?

The changes improve performance on synthetic benchmarks by up to **27 times** on JDK 8 and **25 times** on JDK 11:

```
OpenJDK 64-Bit Server VM 1.8.0_242-8u242-b08-0ubuntu3~18.04-b08 on Linux 4.15.0-1044-aws
Intel(R) Xeon(R) CPU E5-2670 v2 2.50GHz
Filters pushdown:                         Best Time(ms)   Avg Time(ms)   Stdev(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------------------------------
w/o filters                                       25230          25255          22          0.0      252299.6       1.0X
pushdown disabled                                 25248          25282          33          0.0      252475.6       1.0X
w/ filters                                          905            911           8          0.1        9047.9      27.9X
```
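As a quick check of the figures above, the `Relative` column can be reproduced from the best times, assuming it is the best time of the first case divided by the best time of each case (an assumption about the output format, not something stated in this commit message):

```python
# Best times in milliseconds, copied from the benchmark output above.
best_ms = {
    "w/o filters": 25230,
    "pushdown disabled": 25248,
    "w/ filters": 905,
}

# Assumption: the first case is the baseline for the Relative column.
baseline = best_ms["w/o filters"]
relative = {case: round(baseline / ms, 1) for case, ms in best_ms.items()}

print(relative)  # {'w/o filters': 1.0, 'pushdown disabled': 1.0, 'w/ filters': 27.9}
```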

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- Added new test suites `JsonFiltersSuite` and `JacksonParserSuite`.

- By new end-to-end and case sensitivity tests in `JsonSuite`.

- By `CSVFiltersSuite`, `UnivocityParserSuite` and `CSVSuite`.

- Re-running `CSVBenchmark` and `JsonBenchmark` using Amazon EC2:

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge (spot instance) |
| AMI | ami-06f2f779464715dc5 (ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1) |
| Java | OpenJDK8/11 installed by `sudo add-apt-repository ppa:openjdk-r/ppa` & `sudo apt install openjdk-11-jdk` |

and `./dev/run-benchmarks`:

```python
#!/usr/bin/env python3

import os
from sparktestsupport.shellutils import run_cmd

benchmarks = [
    ['sql/test', 'org.apache.spark.sql.execution.datasources.csv.CSVBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.json.JsonBenchmark']
]

print('Set SPARK_GENERATE_BENCHMARK_FILES=1')
os.environ['SPARK_GENERATE_BENCHMARK_FILES'] = '1'

for b in benchmarks:
    print("Run benchmark: %s" % b[1])
    run_cmd(['build/sbt', '%s:runMain %s' % (b[0], b[1])])
```

Closes #27366 from MaxGekk/json-filters-pushdown.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

|

||

|

|

42f01e314b |

[SPARK-32130][SQL][FOLLOWUP] Enable timestamps inference in JsonBenchmark

### What changes were proposed in this pull request?

Set the JSON option `inferTimestamp` to `true` for the cases that measure the performance of timestamp inference.

### Why are the changes needed?

The PR https://github.com/apache/spark/pull/28966 disabled timestamp inference by default. As a consequence, some benchmarks don't measure the performance of timestamp inference from JSON fields. This PR explicitly enables such inference.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By re-generating results of `JsonBenchmark`.

Closes #28981 from MaxGekk/json-inferTimestamps-disable-by-default-followup.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

|

||

|

|

bcf23307f4 |

[SPARK-32130][SQL] Disable the JSON option inferTimestamp by default

### What changes were proposed in this pull request?

Set the JSON option `inferTimestamp` to `false` if the user doesn't pass it as a datasource option.

### Why are the changes needed?

To prevent a performance regression while inferring schemas from JSON with potential timestamp fields.

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

- Modified existing tests in `JsonSuite` and `JsonInferSchemaSuite`.
- Regenerated results of `JsonBenchmark` in the environment:

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge |
| AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) |
| Java | OpenJDK 64-Bit Server VM 1.8.0_252 and OpenJDK 64-Bit Server VM 11.0.7+10 |

Closes #28966 from MaxGekk/json-inferTimestamps-disable-by-default.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

|

||

|

|

92685c0148 |

[SPARK-31755][SQL][FOLLOWUP] Update date-time, CSV and JSON benchmark results

### What changes were proposed in this pull request?

Re-generate results of:

- DateTimeBenchmark
- CSVBenchmark
- JsonBenchmark

in the environment:

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge |
| AMI | ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1 (ami-06f2f779464715dc5) |
| Java | OpenJDK 64-Bit Server VM 1.8.0_242 and OpenJDK 64-Bit Server VM 11.0.6+10 |

### Why are the changes needed?

1. The PR https://github.com/apache/spark/pull/28576 changed the date-time parser. The `DateTimeBenchmark` should confirm that the PR didn't slow down date/timestamp parsing.
2. The CSV/JSON datasources are affected by the above PR too. This PR updates the benchmark results in the same environment as other benchmarks to have a baseline for future optimizations.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running benchmarks via the script:

```python
#!/usr/bin/env python3

import os
from sparktestsupport.shellutils import run_cmd

benchmarks = [
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.DateTimeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.csv.CSVBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.json.JsonBenchmark']
]

print('Set SPARK_GENERATE_BENCHMARK_FILES=1')
os.environ['SPARK_GENERATE_BENCHMARK_FILES'] = '1'

for b in benchmarks:
    print("Run benchmark: %s" % b[1])
    run_cmd(['build/sbt', '%s:runMain %s' % (b[0], b[1])])
```

Closes #28613 from MaxGekk/missing-hour-year-benchmarks.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

|

||

|

|

d65f534c5a |

[SPARK-31414][SQL] Fix performance regression with new TimestampFormatter for json and csv time parsing

### What changes were proposed in this pull request?

With the original benchmark, where the timestamp values are valid for the new parser, the result is:

```scala
[info] Running benchmark: Read dates and timestamps
[info] Running case: timestamp strings
[info] Stopped after 3 iterations, 5781 ms
[info] Running case: parse timestamps from Dataset[String]
[info] Stopped after 3 iterations, 44764 ms
[info] Running case: infer timestamps from Dataset[String]
[info] Stopped after 3 iterations, 93764 ms
[info] Running case: from_json(timestamp)
[info] Stopped after 3 iterations, 59021 ms
```

When we modify the benchmark to

```scala
def timestampStr: Dataset[String] = {
  spark.range(0, rowsNum, 1, 1).mapPartitions { iter =>
    iter.map(i => s"""{"timestamp":"1970-01-01T01:02:03.${i % 100}"}""")
  }.select($"value".as("timestamp")).as[String]
}

readBench.addCase("timestamp strings", numIters) { _ =>
  timestampStr.noop()
}

readBench.addCase("parse timestamps from Dataset[String]", numIters) { _ =>
  spark.read.schema(tsSchema).json(timestampStr).noop()
}

readBench.addCase("infer timestamps from Dataset[String]", numIters) { _ =>
  spark.read.json(timestampStr).noop()
}
```

where the timestamp values are invalid for the new parser, which causes a fallback to the legacy parser (2.4), the result is:

```scala
[info] Running benchmark: Read dates and timestamps
[info] Running case: timestamp strings
[info] Stopped after 3 iterations, 5623 ms
[info] Running case: parse timestamps from Dataset[String]
[info] Stopped after 3 iterations, 506637 ms
[info] Running case: infer timestamps from Dataset[String]
[info] Stopped after 3 iterations, 509076 ms
```

That is about a 10x performance regression.
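The size of the regression can be checked directly from the two logs above. The following is only a small arithmetic sketch; the dictionary names are illustrative, and the totals are the "Stopped after 3 iterations" times copied from the logs:

```python
# Total time for 3 iterations (ms), from the original benchmark log.
original_ms = {"parse timestamps": 44764, "infer timestamps": 93764}

# Total time for 3 iterations (ms), from the log where the legacy fallback is triggered.
fallback_ms = {"parse timestamps": 506637, "infer timestamps": 509076}

# Slowdown factor per case: time with fallback divided by original time.
slowdown = {case: round(fallback_ms[case] / original_ms[case], 1) for case in original_ms}

print(slowdown)  # {'parse timestamps': 11.3, 'infer timestamps': 5.4}
```

By these numbers, parsing with an explicit schema slows down by roughly 11x and schema inference by roughly 5x.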

But if we modify the timestamp pattern to `....HH:mm:ss[.SSS][XXX]`, which makes all timestamp values valid for the new parser and prevents the fallback, the result is:

```scala
[info] Running benchmark: Read dates and timestamps
[info] Running case: timestamp strings
[info] Stopped after 3 iterations, 5623 ms
[info] Running case: parse timestamps from Dataset[String]
[info] Stopped after 3 iterations, 506637 ms
[info] Running case: infer timestamps from Dataset[String]
[info] Stopped after 3 iterations, 509076 ms
```

### Why are the changes needed?

Fix performance regression.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New tests were added.

Closes #28181 from yaooqinn/SPARK-31414.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

|

||

|

|

f5118f81e3 |

[SPARK-30409][SPARK-29173][SQL][TESTS] Use NoOp datasource in SQL benchmarks

### What changes were proposed in this pull request?

In the PR, I propose to replace `.collect()`, `.count()` and `.foreach(_ => ())` in SQL benchmarks with the `NoOp` datasource. I added an implicit class to `SqlBasedBenchmark` with the `.noop()` method. It can be used in a benchmark as `ds.noop()`, which expands to `ds.write.format("noop").mode(Overwrite).save()`.
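For readers more familiar with PySpark, the same idea can be sketched as a small helper. This is a hypothetical Python counterpart, not part of the PR: the function name `noop` mirrors the Scala method, and it assumes a Spark version that ships the `noop` datasource.

```python
def noop(df):
    """Materialize `df` by writing it to the "noop" sink and discard the output.

    `df` is expected to be a PySpark DataFrame (an assumption; the helper only
    relies on the `write.format(...).mode(...).save()` chain).
    """
    df.write.format("noop").mode("overwrite").save()
```

With an active session, a call such as `noop(spark.range(10))` would run the query fully without pulling rows to the driver, which is the overhead the benchmarks are meant to avoid.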

### Why are the changes needed?

To avoid additional overhead that `collect()` (and other actions) has. For example, `.collect()` has to convert values according to external types and pull data to the driver. This can hide actual performance regressions or improvements of benchmarked operations.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Re-ran all modified benchmarks using Amazon EC2:

| Item | Description |
| ---- | ----|
| Region | us-west-2 (Oregon) |
| Instance | r3.xlarge (spot instance) |
| AMI | ami-06f2f779464715dc5 (ubuntu/images/hvm-ssd/ubuntu-bionic-18.04-amd64-server-20190722.1) |
| Java | OpenJDK8/10 |

- Run `TPCDSQueryBenchmark` using instructions from the PR #26049

```
# `spark-tpcds-datagen` needs this. (JDK8)
$ git clone https://github.com/apache/spark.git -b branch-2.4 --depth 1 spark-2.4
$ export SPARK_HOME=$PWD
$ ./build/mvn clean package -DskipTests

# Generate data. (JDK8)
$ git clone git@github.com:maropu/spark-tpcds-datagen.git
$ cd spark-tpcds-datagen/
$ build/mvn clean package
$ mkdir -p /data/tpcds
$ ./bin/dsdgen --output-location /data/tpcds/s1  # This needs `Spark 2.4`
```

- Other benchmarks were run by the script:

```python
#!/usr/bin/env python3

import os
from sparktestsupport.shellutils import run_cmd

benchmarks = [
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.AggregateBenchmark'],
    ['avro/test', 'org.apache.spark.sql.execution.benchmark.AvroReadBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.BloomFilterBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.DataSourceReadBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.DateTimeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.ExtractBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.FilterPushdownBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.InExpressionBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.IntervalBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.JoinBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.MakeDateTimeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.MiscBenchmark'],
    ['hive/test', 'org.apache.spark.sql.execution.benchmark.ObjectHashAggregateExecBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.OrcNestedSchemaPruningBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.OrcV2NestedSchemaPruningBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.ParquetNestedSchemaPruningBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.RangeBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.UDFBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.WideSchemaBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.benchmark.WideTableBenchmark'],
    ['hive/test', 'org.apache.spark.sql.hive.orc.OrcReadBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.csv.CSVBenchmark'],
    ['sql/test', 'org.apache.spark.sql.execution.datasources.json.JsonBenchmark']
]

print('Set SPARK_GENERATE_BENCHMARK_FILES=1')
os.environ['SPARK_GENERATE_BENCHMARK_FILES'] = '1'

for b in benchmarks:
    print("Run benchmark: %s" % b[1])
    run_cmd(['build/sbt', '%s:runMain %s' % (b[0], b[1])])
```

Closes #27078 from MaxGekk/noop-in-benchmarks.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

|

||

|

|

4e0e4e51c4 |

[MINOR][TESTS] Rename JSONBenchmark to JsonBenchmark

### What changes were proposed in this pull request?

This PR renames `object JSONBenchmark` to `object JsonBenchmark` and the benchmark result file `JSONBenchmark-results.txt` to `JsonBenchmark-results.txt`.

### Why are the changes needed?

Since the file name doesn't match `object JSONBenchmark`, it causes confusion when we run the benchmark. In addition, it makes automation difficult.

```
$ find . -name JsonBenchmark.scala
./sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/json/JsonBenchmark.scala
```

```
$ build/sbt "sql/test:runMain org.apache.spark.sql.execution.datasources.json.JsonBenchmark"
[info] Running org.apache.spark.sql.execution.datasources.json.JsonBenchmark
[error] Error: Could not find or load main class org.apache.spark.sql.execution.datasources.json.JsonBenchmark
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

This is just renaming.

Closes #26008 from dongjoon-hyun/SPARK-RENAME-JSON.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

|

Renamed from sql/core/benchmarks/JSONBenchmark-results.txt