## What changes were proposed in this pull request?

Kolmogorov-Smirnov test Python API in `pyspark.ml`.

**Note:** The API that takes a custom `CDF` is difficult to support in Python. We can add it in a follow-up PR.
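The statistic behind this API fits in a few lines. Below is a minimal pure-Scala sketch (not Spark's implementation) of the one-sample Kolmogorov-Smirnov statistic D = sup_x |F_n(x) - F(x)| for a sample and a CDF given as a Scala function. `KsSketch`, `ksStatistic` and `uniformCdf` are hypothetical names, and the p-value computation is omitted.

```scala
object KsSketch {
  /** One-sample KS statistic: D = sup_x |F_n(x) - F(x)|. */
  def ksStatistic(sample: Seq[Double], cdf: Double => Double): Double = {
    require(sample.nonEmpty, "sample must be non-empty")
    val sorted = sample.sorted
    val n = sorted.length.toDouble
    sorted.zipWithIndex.map { case (x, i) =>
      val f = cdf(x)
      // Check the gap on both sides of the empirical CDF's step at the i-th sorted point.
      math.max((i + 1) / n - f, f - i / n)
    }.max
  }

  /** CDF of the uniform distribution on [0, 1]. */
  def uniformCdf(x: Double): Double = math.min(1.0, math.max(0.0, x))
}
```

For example, the sample `Seq(0.25, 0.75)` against `uniformCdf` gives D = 0.25.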

## How was this patch tested?

doctest

Author: WeichenXu <weichen.xu@databricks.com>

Closes #20904 from WeichenXu123/ks-test-py.

## What changes were proposed in this pull request?

Unpersist the last cached `nodeIdsForInstances` in `deleteAllCheckpoints`.

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #20956 from zhengruifeng/NodeIdCache_cleanup.

## What changes were proposed in this pull request?

This is a follow-up PR to #19108, which broke the Scala 2.12 build.

```
[error] /Users/ueshin/workspace/apache-spark/spark/mllib/src/main/scala/org/apache/spark/ml/stat/KolmogorovSmirnovTest.scala:86: overloaded method value test with alternatives:
[error] (dataset: org.apache.spark.sql.DataFrame,sampleCol: String,cdf: org.apache.spark.api.java.function.Function[java.lang.Double,java.lang.Double])org.apache.spark.sql.DataFrame <and>
[error] (dataset: org.apache.spark.sql.DataFrame,sampleCol: String,cdf: scala.Double => scala.Double)org.apache.spark.sql.DataFrame
[error] cannot be applied to (org.apache.spark.sql.DataFrame, String, scala.Double => java.lang.Double)
[error] test(dataset, sampleCol, (x: Double) => cdf.call(x))
[error] ^
[error] one error found
```

## How was this patch tested?

Existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes #20994 from ueshin/issues/SPARK-21898/fix_scala-2.12.

## What changes were proposed in this pull request?

Initial PR for Instrumentation improvements: UUID and logging levels.

This PR takes over #20837.

Closes #20837

## How was this patch tested?

Manual.

Author: Bago Amirbekian <bago@databricks.com>

Author: WeichenXu <weichen.xu@databricks.com>

Closes #20982 from WeichenXu123/better-instrumentation.

## What changes were proposed in this pull request?

Forward the `handleInvalid` Param to the VectorAssembler used by RFormula.

## How was this patch tested?

Added a test and ran all tests for RFormula and VectorAssembler.

Author: Yogesh Garg <yogesh(dot)garg()databricks(dot)com>

Closes #20970 from yogeshg/spark_23562.

## What changes were proposed in this pull request?

This PR allows us to use one of several types of `MemoryBlock`, such as byte array, int array, long array, or `java.nio.DirectByteBuffer`. Using `java.nio.DirectByteBuffer` allows off-heap memory that is automatically deallocated by the JVM. The `MemoryBlock` class has primitive accessors like `Platform.getInt()`, `Platform.putInt()`, or `Platform.copyMemory()`.

This PR uses `MemoryBlock` for `OffHeapColumnVector`, `UTF8String`, and other places. This PR can improve performance of operations involving memory accesses (e.g. `UTF8String.trim`) by 1.8x.

For now, this PR does not use `MemoryBlock` for `BufferHolder` based on cloud-fan's [suggestion](https://github.com/apache/spark/pull/11494#issuecomment-309694290).

Since this PR is a successor of #11494, it closes #11494. Much of the code was ported from #11494, and a lot of effort went into it. **I think this PR should be credited to yzotov.**

This PR can achieve **1.1-1.4x performance improvements** for operations in `UTF8String` and `Murmur3_x86_32`. Other operations show comparable performance.

Without this PR

```
OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz
Hash byte arrays with length 268435487:  Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
Murmur3_x86_32                                 526 /  536          0.0   131399881.5       1.0X

UTF8String benchmark:                    Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
hashCode                                       525 /  552       1022.6           1.0       1.0X
substring                                      414 /  423       1298.0           0.8       1.3X
```

With this PR

```
OpenJDK 64-Bit Server VM 1.8.0_121-8u121-b13-0ubuntu1.16.04.2-b13 on Linux 4.4.0-22-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz
Hash byte arrays with length 268435487:  Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
Murmur3_x86_32                                 474 /  488          0.0   118552232.0       1.0X

UTF8String benchmark:                    Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
hashCode                                       476 /  480       1127.3           0.9       1.0X
substring                                      287 /  291       1869.9           0.5       1.7X
```

Benchmark program

```
import scala.util.Random

import org.apache.spark.sql.catalyst.expressions.HiveHasher
import org.apache.spark.unsafe.Platform
import org.apache.spark.unsafe.memory.{ByteArrayMemoryBlock, MemoryBlock}
import org.apache.spark.unsafe.types.UTF8String
import org.apache.spark.util.Benchmark

test("benchmark Murmur3_x86_32") {
  val length = 8192 * 32768 + 31
  val seed = 42L
  val iters = 1 << 2
  // One array, so each run hashes iters * numArrays = 4 rows (matches Per Row(ns) above).
  val numArrays = 1
  val random = new Random(seed)
  val arrays = Array.fill[MemoryBlock](numArrays) {
    val bytes = new Array[Byte](length)
    random.nextBytes(bytes)
    new ByteArrayMemoryBlock(bytes, Platform.BYTE_ARRAY_OFFSET, length)
  }

  val benchmark = new Benchmark("Hash byte arrays with length " + length,
    iters * numArrays, minNumIters = 20)
  benchmark.addCase("HiveHasher") { _: Int =>
    var sum = 0L
    for (_ <- 0L until iters; i <- 0 until numArrays) {
      sum += HiveHasher.hashUnsafeBytesBlock(
        arrays(i), Platform.BYTE_ARRAY_OFFSET, length)
    }
  }
  benchmark.run()
}

test("benchmark UTF8String") {
  val N = 512 * 1024 * 1024
  val iters = 2
  val benchmark = new Benchmark("UTF8String benchmark", N, minNumIters = 20)
  // A string consisting of N spaces.
  val str0 = new java.io.StringWriter() { { for (_ <- 0 until N) { write(" ") } } }.toString
  val s0 = UTF8String.fromString(str0)
  benchmark.addCase("hashCode") { _: Int =>
    var h: Int = 0
    for (_ <- 0L until iters) { h += s0.hashCode }
  }
  benchmark.addCase("substring") { _: Int =>
    var s: UTF8String = null
    for (_ <- 0L until iters) { s = s0.substring(N / 2 - 5, N / 2 + 5) }
  }
  benchmark.run()
}
```

I ran [this benchmark program](https://gist.github.com/kiszk/94f75b506c93a663bbbc372ffe8f05de) using [the commit](ee5a79861c) and got the following results:

```
OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz
Memory access benchmarks:                Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
ByteArrayMemoryBlock get/putInt()              220 /  221        609.3           1.6       1.0X
Platform get/putInt(byte[])                    220 /  236        610.9           1.6       1.0X
Platform get/putInt(Object)                    492 /  494        272.8           3.7       0.4X
OnHeapMemoryBlock get/putLong()                322 /  323        416.5           2.4       0.7X
long[]                                         221 /  221        608.0           1.6       1.0X
Platform get/putLong(long[])                   321 /  321        418.7           2.4       0.7X
Platform get/putLong(Object)                   561 /  563        239.2           4.2       0.4X
```

I also ran [this benchmark program](https://gist.github.com/kiszk/5fdb4e03733a5d110421177e289d1fb5) to compare the performance of `Platform.copyMemory()`.

```
OpenJDK 64-Bit Server VM 1.8.0_151-8u151-b12-0ubuntu0.16.04.2-b12 on Linux 4.4.0-66-generic
Intel(R) Xeon(R) CPU E5-2667 v3 3.20GHz
Platform copyMemory:                     Best/Avg Time(ms)    Rate(M/s)   Per Row(ns)   Relative
------------------------------------------------------------------------------------------------
Object to Object                              1961 / 1967          8.6         116.9       1.0X
System.arraycopy Object to Object             1917 / 1921          8.8         114.3       1.0X
byte array to byte array                      1961 / 1968          8.6         116.9       1.0X
System.arraycopy byte array to byte array     1909 / 1937          8.8         113.8       1.0X
int array to int array                        1921 / 1990          8.7         114.5       1.0X
double array to double array                  1918 / 1923          8.7         114.3       1.0X
Object to byte array                          1961 / 1967          8.6         116.9       1.0X
Object to short array                         1965 / 1972          8.5         117.1       1.0X
Object to int array                           1910 / 1915          8.8         113.9       1.0X
Object to float array                         1971 / 1978          8.5         117.5       1.0X
Object to double array                        1919 / 1944          8.7         114.4       1.0X
byte array to Object                          1959 / 1967          8.6         116.8       1.0X
int array to Object                           1961 / 1970          8.6         116.9       1.0X
double array to Object                        1917 / 1924          8.8         114.3       1.0X
```

These results show five facts:

1. According to the second/third or sixth/seventh results in the first experiment, `Platform.get/putInt(Object)` and `Platform.get/putLong(Object)` are roughly 1.7x-2.2x slower than the same calls with a concrete type (i.e. `byte[]` or `long[]`).

2. According to the second/third or fourth/fifth/sixth results in the first experiment, the fastest way to access an array element on the Java heap is `array[]`. **The drawback of `array[]` is that it cannot support unaligned 8-byte access.**

3. According to the first/second/third or fourth/sixth/seventh results in the first experiment, `getInt()`/`putInt()` or `getLong()`/`putLong()` in subclasses of `MemoryBlock` achieve performance comparable to `Platform.get/putInt()` or `Platform.get/putLong()` with a concrete type (second or sixth result). There is no virtual-call overhead.

4. According to the results of the second experiment, for `Platform.copyMemory()`, passing `Object` achieves the same performance as passing any type of primitive array as the source or destination.

5. According to the second/fourth results in the second experiment, `Platform.copyMemory()` achieves the same performance as `System.arraycopy`. **It would be good to use `Platform.copyMemory()` since it can take any type for src and dst.**

We are incrementally replacing `Platform.get/putXXX` with `MemoryBlock.get/putXXX` because this has two advantages:

1) Better performance, due to having a concrete type for an array.

2) A simple OO design instead of passing `Object`.

It is easy to use `MemoryBlock` in `InternalRow`, `BufferHolder`, `TaskMemoryManager`, and others that are already abstracted. It is harder to use `MemoryBlock` in utility classes, such as those related to hashing.
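The OO design can be sketched as an abstract block type with one concrete subclass per backing store, so callers never pass a raw `Object` plus offset. This is a hypothetical illustration (`SketchBlock`, `ByteArrayBlock`, `IntArrayBlock`, `DirectBufferBlock` are not Spark classes) built on `java.nio.ByteBuffer` rather than `Platform`/`Unsafe`, so it shows the shape of the API, not its performance.

```scala
import java.nio.{ByteBuffer, ByteOrder}

// Hypothetical stand-ins for MemoryBlock subclasses. Offsets are byte offsets
// from the start of the block, not Platform/Unsafe base offsets.
sealed abstract class SketchBlock {
  def size: Long
  def getInt(offset: Long): Int
  def putInt(offset: Long, value: Int): Unit
}

// Backed by a byte[]: any 4-byte window can be read, aligned or not.
final class ByteArrayBlock(bytes: Array[Byte]) extends SketchBlock {
  private val buf = ByteBuffer.wrap(bytes).order(ByteOrder.LITTLE_ENDIAN)
  def size: Long = bytes.length.toLong
  def getInt(offset: Long): Int = buf.getInt(offset.toInt)
  def putInt(offset: Long, value: Int): Unit = buf.putInt(offset.toInt, value)
}

// Backed by an int[]: a concrete array type, but only aligned access in this sketch.
final class IntArrayBlock(ints: Array[Int]) extends SketchBlock {
  def size: Long = ints.length * 4L
  private def slot(offset: Long): Int = {
    require(offset % 4 == 0, s"unaligned offset $offset")
    (offset / 4).toInt
  }
  def getInt(offset: Long): Int = ints(slot(offset))
  def putInt(offset: Long, value: Int): Unit = ints(slot(offset)) = value
}

// Backed by a direct (off-heap) ByteBuffer, released by the JVM once the buffer is garbage-collected.
final class DirectBufferBlock(capacity: Int) extends SketchBlock {
  private val buf = ByteBuffer.allocateDirect(capacity).order(ByteOrder.LITTLE_ENDIAN)
  def size: Long = capacity.toLong
  def getInt(offset: Long): Int = buf.getInt(offset.toInt)
  def putInt(offset: Long, value: Int): Unit = buf.putInt(offset.toInt, value)
}

object SketchBlock {
  // Callers program against the abstract type instead of (Object, offset) pairs.
  def sumInts(block: SketchBlock): Long = {
    var sum = 0L
    var offset = 0L
    while (offset + 4 <= block.size) {
      sum += block.getInt(offset)
      offset += 4
    }
    sum
  }
}
```

The same `sumInts` works unchanged on every subclass; only the concrete class decides how bytes are located.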

Other candidates are

- UnsafeRow, UnsafeArrayData, UnsafeMapData, SpecificUnsafeRowJoiner

- UTF8StringBuffer

- BufferHolder

- TaskMemoryManager

- OnHeapColumnVector

- BytesToBytesMap

- CachedBatch

- classes for hash

- others.

## How was this patch tested?

Added `UnsafeMemoryAllocator`

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #19222 from kiszk/SPARK-10399.

## What changes were proposed in this pull request?

Introduce a `handleInvalid` parameter in `VectorAssembler` that accepts the options `"keep"`, `"skip"` and `"error"`. `"error"` throws an error on seeing a row that contains a `null`, `"skip"` filters out all such rows, and `"keep"` adds the relevant number of NaNs. To decide how many NaNs to add, `"keep"` looks at an example row, and it throws an error when no such number can be determined.
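The three modes can be sketched on plain Scala collections, modeling a row as one `Option[Array[Double]]` per input column (`None` for `null`). The expected length of each column (`sizes`) is passed in directly here, standing in for the example-based inference described above; `AssembleSketch`, `assemble` and `transform` are hypothetical names.

```scala
object AssembleSketch {
  /**
   * Assemble one row. Each column is Some(vector) or None (a null value);
   * sizes(i) is the length column i is expected to have.
   * Returns None when the row should be dropped ("skip").
   */
  def assemble(row: Seq[Option[Array[Double]]], sizes: Seq[Int], handleInvalid: String): Option[Array[Double]] = {
    val hasNull = row.exists(_.isEmpty)
    handleInvalid match {
      case "error" if hasNull => throw new IllegalArgumentException("Encountered null while assembling a row")
      case "skip" if hasNull => None
      case "error" | "skip" | "keep" =>
        // Under "keep", a null column becomes sizes(i) NaNs so every output has the same length.
        Some(row.zip(sizes).flatMap { case (value, size) =>
          value.getOrElse(Array.fill(size)(Double.NaN)).toSeq
        }.toArray)
      case other => throw new IllegalArgumentException(s"Unsupported handleInvalid: $other")
    }
  }

  def transform(rows: Seq[Seq[Option[Array[Double]]]], sizes: Seq[Int], handleInvalid: String): Seq[Array[Double]] =
    rows.flatMap(row => assemble(row, sizes, handleInvalid))
}
```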

## How was this patch tested?

Unit tests are added to check the behavior of `assemble` on specific rows and the transformer is called on `DataFrame`s of different configurations to test different corner cases.

Author: Yogesh Garg <yogesh(dot)garg()databricks(dot)com>

Author: Bago Amirbekian <bago@databricks.com>

Author: Yogesh Garg <1059168+yogeshg@users.noreply.github.com>

Closes #20829 from yogeshg/rformula_handleinvalid.

## What changes were proposed in this pull request?

The maxDF parameter filters out frequently occurring terms. This param was recently added to the Scala CountVectorizer and needs to be added to the Python API as well.

## How was this patch tested?

Added a test.

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #20777 from huaxingao/spark-23615.

## What changes were proposed in this pull request?

Adds PMML export support to Spark ML pipelines in the style of Spark's DataSource API to allow library authors to add their own model export formats.

Includes a specific implementation for Spark ML linear regression PMML export.

In addition to adding PMML to reach parity with our current MLlib implementation, this approach will allow other libraries & formats (like PFA) to implement and export models with a unified API.

## How was this patch tested?

Basic unit test.

Author: Holden Karau <holdenkarau@google.com>

Author: Holden Karau <holden@pigscanfly.ca>

Closes #19876 from holdenk/SPARK-11171-SPARK-11237-Add-PMML-export-for-ML-KMeans-r2.

## What changes were proposed in this pull request?

Fix lint-java errors from the addition of JavaKolmogorovSmirnovTestSuite in https://github.com/apache/spark/pull/19108.

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #20875 from jkbradley/kstest-lint-fix.

## What changes were proposed in this pull request?

Support prediction on a single instance for regression- and classification-related models (i.e., PredictionModel, ClassificationModel and their subclasses).

Add corresponding test cases.

## How was this patch tested?

Test cases added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19381 from WeichenXu123/single_prediction.

## What changes were proposed in this pull request?

Silhouette needs to know the number of features. Previously this was obtained by calling `first` and checking the size of the vector. Although this works, if the number of attributes is present in the metadata, we can use the metadata value instead and avoid triggering a job, which improves performance.

## How was this patch tested?

existing UTs + added UT

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20719 from mgaido91/SPARK-23568.

## What changes were proposed in this pull request?

Feature parity for KolmogorovSmirnovTest in MLlib.

Implement `DataFrame` interface for `KolmogorovSmirnovTest` in `mllib.stat`.

## How was this patch tested?

Test suite added.

Author: WeichenXu <weichen.xu@databricks.com>

Author: jkbradley <joseph.kurata.bradley@gmail.com>

Closes #19108 from WeichenXu123/ml-ks-test.

## What changes were proposed in this pull request?

The PR adds the option to specify a distance measure in BisectingKMeans. Moreover, it introduces the ability to use the cosine distance measure in it.

## How was this patch tested?

added UTs + existing UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20600 from mgaido91/SPARK-23412.

## What changes were proposed in this pull request?

As I mentioned in [SPARK-22751](https://issues.apache.org/jira/browse/SPARK-22751?jql=project%20%3D%20SPARK%20AND%20component%20%3D%20ML%20AND%20text%20~%20randomforest), there is a shuffle performance problem in ML RandomForest when training a random forest on high-dimensional data.

The reason is that, in _org.apache.spark.ml.tree.impl.RandomForest_, the function _findSplitsBySorting_ actually flatMaps a sparse vector into a dense vector, so the subsequent groupByKey has a huge shuffle write size.

To avoid this, we can add a filter in the flatMap to filter out zero values. Then, in the function _findSplitsForContinuousFeature_, we can infer the number of zero values from the _metadata_.

In addition, if a feature contains only zero values, _continuousSplits_ will not have a key for that feature ID, so I added a check when using _continuousSplits_.
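The idea can be sketched on plain Scala collections: only non-zero `(featureIndex, value)` pairs leave each row (the filtered flatMap), and the zero count for each feature is recovered as `numRows` minus its number of non-zero values. A feature that is all zeros has no key at all, which is why the lookup uses `getOrElse`. `SparseSplitSketch` is hypothetical, and here `numRows` is taken from the input rather than from metadata.

```scala
object SparseSplitSketch {
  // A sparse row: featureIndex -> value for its explicitly stored entries.
  type SparseRow = Map[Int, Double]

  /** Per-feature value counts, where only non-zero entries are "shuffled". */
  def valueCounts(rows: Seq[SparseRow], numFeatures: Int): Map[Int, Map[Double, Int]] = {
    val numRows = rows.length
    // Filtered flatMap: zero values never leave their row.
    val nonZero: Map[Int, Seq[Double]] = rows
      .flatMap(_.iterator.filter { case (_, v) => v != 0.0 })
      .groupBy(_._1)
      .map { case (feature, pairs) => feature -> pairs.map(_._2) }
    (0 until numFeatures).map { feature =>
      // An all-zero feature has no key at all, hence getOrElse.
      val values = nonZero.getOrElse(feature, Seq.empty[Double])
      val counts = values.groupBy(identity).map { case (v, vs) => v -> vs.length }
      // Zeros are inferred rather than shuffled.
      val numZeros = numRows - values.length
      feature -> (if (numZeros > 0) counts + (0.0 -> numZeros) else counts)
    }.toMap
  }
}
```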

## How was this patch tested?

Ran model locally using spark-submit.

Author: lucio <576632108@qq.com>

Closes #20472 from lucio-yz/master.

## What changes were proposed in this pull request?

Add Structured Streaming tests for all Models/Transformers in spark.ml.classification.

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Closes #20121 from WeichenXu123/ml_stream_test_classification.

## What changes were proposed in this pull request?

Added subtree pruning in the translation from LearningNode to Node: a learning node that has a single prediction value for all the leaves in the subtree rooted at it is translated into a LeafNode, instead of a (redundant) InternalNode.
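The pruning rule can be sketched as a bottom-up rewrite on a toy tree: once both children have been pruned, an internal node whose children are leaves with the same prediction collapses into a single leaf, so any subtree whose leaves all agree ends up as one leaf. `PruneSketch`, `Leaf` and `Internal` are hypothetical types, not Spark's `LearningNode`/`Node`, and predictions are compared with plain equality.

```scala
object PruneSketch {
  sealed trait Node
  final case class Leaf(prediction: Double) extends Node
  final case class Internal(split: String, left: Node, right: Node) extends Node

  /** Bottom-up: an internal node whose pruned children are equal-prediction leaves becomes a leaf. */
  def prune(node: Node): Node = node match {
    case leaf: Leaf => leaf
    case Internal(split, left, right) =>
      (prune(left), prune(right)) match {
        case (Leaf(a), Leaf(b)) if a == b => Leaf(a)
        case (l, r) => Internal(split, l, r)
      }
  }
}
```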

## How was this patch tested?

Added two unit tests under "mllib/src/test/scala/org/apache/spark/ml/tree/impl/RandomForestSuite.scala":

- test("SPARK-3159 tree model redundancy - classification")

- test("SPARK-3159 tree model redundancy - regression")

Four existing unit tests that relied on the tree structure (the existence of a specific redundant subtree) had to be adapted, because the tested components of the output tree are now pruned. This was fixed by adding an extra _prune_ parameter that can be used to disable pruning for testing.

Author: Alessandro Solimando <18898964+asolimando@users.noreply.github.com>

Closes #20632 from asolimando/master.

## What changes were proposed in this pull request?

Adds structured streaming tests using testTransformer for these suites:

* BinarizerSuite

* BucketedRandomProjectionLSHSuite

* BucketizerSuite

* ChiSqSelectorSuite

* CountVectorizerSuite

* DCTSuite.scala

* ElementwiseProductSuite

* FeatureHasherSuite

* HashingTFSuite

## How was this patch tested?

It tests itself because it is a bunch of tests!

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #20111 from jkbradley/SPARK-22883-streaming-featureAM.

## What changes were proposed in this pull request?

Converting spark.ml.recommendation tests to also check code with structured streaming, using the ML testing infrastructure implemented in SPARK-22882.

## How was this patch tested?

Automated: passes Jenkins.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #20362 from gaborgsomogyi/SPARK-22886.

## What changes were proposed in this pull request?

Murmur3 hash generates a different value from the original and other implementations (such as the Scala standard library and Guava) when the length of a byte array is not a multiple of 4.
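To make the discrepancy concrete, here is a self-contained Scala sketch of MurmurHash3_x86_32 with two tail strategies. `standard` packs the 1 to 3 trailing bytes into one block and xors it in, as the reference algorithm does; `perByteTail` mixes each trailing byte as its own full block, which is our reading of the pre-fix behavior described above (an assumption, not a copy of Spark's code). `Murmur3Sketch` and its methods are hypothetical names.

```scala
object Murmur3Sketch {
  private val C1 = 0xcc9e2d51
  private val C2 = 0x1b873593

  private def mixK1(k: Int): Int = Integer.rotateLeft(k * C1, 15) * C2

  private def mixH1(h: Int, k1: Int): Int = Integer.rotateLeft(h ^ k1, 13) * 5 + 0xe6546b64

  // Final avalanche (fmix32), after xor-ing in the total length.
  private def fmix(h0: Int, length: Int): Int = {
    var h = h0 ^ length
    h ^= h >>> 16
    h *= 0x85ebca6b
    h ^= h >>> 13
    h *= 0xc2b2ae35
    h ^ (h >>> 16)
  }

  // Mix all complete little-endian 4-byte blocks; returns (hash, index of first tail byte).
  private def body(bytes: Array[Byte], seed: Int): (Int, Int) = {
    var h = seed
    val aligned = bytes.length - bytes.length % 4
    var i = 0
    while (i < aligned) {
      val k = (bytes(i) & 0xff) | ((bytes(i + 1) & 0xff) << 8) |
        ((bytes(i + 2) & 0xff) << 16) | ((bytes(i + 3) & 0xff) << 24)
      h = mixH1(h, mixK1(k))
      i += 4
    }
    (h, aligned)
  }

  /** Reference tail: pack the 1-3 trailing bytes into one block, xor it in, no mixH1. */
  def standard(bytes: Array[Byte], seed: Int): Int = {
    val (h0, start) = body(bytes, seed)
    var h = h0
    var k = 0
    var i = start
    while (i < bytes.length) {
      k |= (bytes(i) & 0xff) << (8 * (i - start))
      i += 1
    }
    if (start < bytes.length) h ^= mixK1(k)
    fmix(h, bytes.length)
  }

  /** Per-byte tail: each trailing (sign-extended) byte is mixed as a full block. */
  def perByteTail(bytes: Array[Byte], seed: Int): Int = {
    val (h0, start) = body(bytes, seed)
    var h = h0
    var i = start
    while (i < bytes.length) {
      h = mixH1(h, mixK1(bytes(i)))
      i += 1
    }
    fmix(h, bytes.length)
  }
}
```

Both variants agree whenever the length is a multiple of 4, since there is no tail; otherwise they generally diverge, which is exactly the case described above. `standard` should match `scala.util.hashing.MurmurHash3.bytesHash(bytes, seed)`.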

## How was this patch tested?

Added a unit test.

**Note: When we merge this PR, please give all the credits to Shintaro Murakami.**

Author: Shintaro Murakami <mrkm4ntrgmail.com>

Author: gatorsmile <gatorsmile@gmail.com>

Author: Shintaro Murakami <mrkm4ntr@gmail.com>

Closes #20630 from gatorsmile/pr-20568.

## What changes were proposed in this pull request?

#### Problem:

Since 2.3, `Bucketizer` supports multiple input/output columns. We check whether mutually exclusive params are set during transformation; e.g., if `inputCols` and `outputCol` are both set, an error is thrown.

However, when we write `Bucketizer`, it looks like the default params and user-supplied params are merged during writing. All saved params are loaded back and set on the created model instance, so the default `outputCol` param from the `HasOutputCol` trait is set in `paramMap` and becomes a user-supplied param. That makes the exclusive-params check fail.

#### Fix:

This changes the saving logic of Bucketizer to handle this case. It is a quick fix to make it in time for 2.3. We should consider modifying the persistence mechanism later.
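The failure mode can be reproduced with a toy model of params. In this sketch (hypothetical names throughout: `ParamSaveSketch`, `Params`, `checkExclusive`, `saveMerged`, `saveUserOnly`, `load`), every saved param is treated as user-supplied on load. Saving the merged default-plus-user map therefore turns the default `outputCol` into an explicit one and trips the exclusive-params check, while saving only user-supplied params does not. It illustrates the problem, not the exact logic of the fix.

```scala
object ParamSaveSketch {
  final case class Params(defaults: Map[String, String], userSet: Map[String, String]) {
    def isSet(name: String): Boolean = userSet.contains(name)
  }

  // The exclusive-params check: inputCols together with an explicitly set outputCol is an error.
  def checkExclusive(p: Params): Unit =
    require(!(p.isSet("inputCols") && p.isSet("outputCol")),
      "inputCols and outputCol are mutually exclusive")

  // Writing defaults and user-supplied params together...
  def saveMerged(p: Params): Map[String, String] = p.defaults ++ p.userSet

  // ...versus writing only the user-supplied ones.
  def saveUserOnly(p: Params): Map[String, String] = p.userSet

  // On load, every saved param is set explicitly, i.e. becomes user-supplied.
  def load(saved: Map[String, String], defaults: Map[String, String]): Params = Params(defaults, saved)
}
```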

Please see the discussion in the JIRA.

Note: The multi-column `QuantileDiscretizer` also has the same issue.

## How was this patch tested?

Modified tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20594 from viirya/SPARK-23377-2.

## What changes were proposed in this pull request?

This PR provides an implementation of ClusteringEvaluator using the cosine distance measure.

This allows evaluating clustering results created using the cosine distance, introduced in SPARK-22119.

In the corresponding JIRA, there is a design document for the algorithm implemented here.
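For reference, the quantity being computed can be written as a naive O(n^2) silhouette with cosine distance d(a, b) = 1 - a·b / (|a||b|). This is deliberately not the efficient algorithm from the design document. `CosineSilhouetteSketch` is hypothetical: it averages s(i) = (b - a) / max(a, b) over all points and, as an assumption, scores points in singleton clusters as 0.

```scala
object CosineSilhouetteSketch {
  def cosineDistance(a: Array[Double], b: Array[Double]): Double = {
    val dot = a.zip(b).map { case (x, y) => x * y }.sum
    val normA = math.sqrt(a.map(x => x * x).sum)
    val normB = math.sqrt(b.map(x => x * x).sum)
    1.0 - dot / (normA * normB)
  }

  /** Naive O(n^2) mean silhouette; requires at least two distinct clusters. */
  def silhouette(points: IndexedSeq[Array[Double]], labels: IndexedSeq[Int]): Double = {
    require(labels.distinct.size >= 2, "need at least two clusters")
    val n = points.length
    val scores = (0 until n).map { i =>
      // Mean distance from point i to every other point, grouped by cluster.
      val meanDist: Map[Int, Double] = (0 until n).filter(_ != i).groupBy(j => labels(j)).map {
        case (c, js) => c -> js.map(j => cosineDistance(points(i), points(j))).sum / js.length
      }
      meanDist.get(labels(i)) match {
        case None => 0.0 // point is alone in its cluster
        case Some(a) =>
          val b = (meanDist - labels(i)).values.min
          val denom = math.max(a, b)
          if (denom == 0.0) 0.0 else (b - a) / denom
      }
    }
    scores.sum / n
  }
}
```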

## How was this patch tested?

Added a UT that compares the result to the one provided by Python's scikit-learn.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20396 from mgaido91/SPARK-23217.

## What changes were proposed in this pull request?

Add some test cases for the image feature.

## How was this patch tested?

Added some test cases in ImageSchemaSuite.

Author: xubo245 <601450868@qq.com>

Closes #20583 from xubo245/CARBONDATA23392_AddTestForImage.

## What changes were proposed in this pull request?

Cache the RDD of items in ml.FPGrowth before passing it to mllib.FPGrowth. Cache only when the user did not cache the input dataset of transactions. This fixes the warning about uncached data emerging from mllib.FPGrowth.

## How was this patch tested?

Manually:

1. Run ml.FPGrowthExample - warning is there

2. Apply the fix

3. Run ml.FPGrowthExample again - no warning anymore

Author: Arseniy Tashoyan <tashoyan@gmail.com>

Closes #20578 from tashoyan/SPARK-23318.

## What changes were proposed in this pull request?

In #19340, some reviewers considered it necessary to use spherical KMeans when the cosine distance measure is specified, as Matlab does, instead of the implementation based on the behavior of other tools/libraries such as RapidMiner, NLTK and ELKI, i.e., computing the centroids as the mean of all the points in the clusters.

This PR introduces the approach used in spherical KMeans, which has the nice property of minimizing the within-cluster cosine distance.
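The difference between the two centroid rules can be sketched in a few lines: the plain mean averages the raw points, while the spherical rule averages the unit-normalized points and re-normalizes the result. Because the sum of cosine similarities to a centroid c is c·(sum of normalized points)/|c|, the spherical centroid is the direction that minimizes the summed cosine distance. `SphericalCentroidSketch` and its methods are hypothetical names, not Spark's code.

```scala
object SphericalCentroidSketch {
  private def norm(v: Array[Double]): Double = math.sqrt(v.map(x => x * x).sum)

  def normalize(v: Array[Double]): Array[Double] = {
    val n = norm(v)
    v.map(_ / n)
  }

  /** Plain mean of the points (the earlier behavior described above). */
  def meanCentroid(points: Seq[Array[Double]]): Array[Double] = {
    val dim = points.head.length
    Array.tabulate(dim)(d => points.map(_(d)).sum / points.length)
  }

  /** Spherical KMeans centroid: mean of unit-normalized points, re-normalized to unit length. */
  def sphericalCentroid(points: Seq[Array[Double]]): Array[Double] =
    normalize(meanCentroid(points.map(normalize)))

  def cosineDistance(a: Array[Double], b: Array[Double]): Double =
    1.0 - a.zip(b).map { case (x, y) => x * y }.sum / (norm(a) * norm(b))
}
```

For the points (1, 0) and (0, 2), the plain mean is (0.5, 1.0), while the spherical centroid is (√0.5, √0.5), which has the smaller total cosine distance to the two points.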

## How was this patch tested?

existing/improved UTs

Author: Marco Gaido <marcogaido91@gmail.com>

Closes #20518 from mgaido91/SPARK-22119_followup.

## What changes were proposed in this pull request?

Audit new APIs and docs in 2.3.0.

## How was this patch tested?

No test.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes #20459 from yanboliang/SPARK-23107.

## What changes were proposed in this pull request?

Currently, the CountVectorizer has a minDF parameter.

It might be useful to also have a maxDF parameter.

It would be used as a threshold to filter out terms that occur very frequently in a text corpus, because they are not very informative or could even be stop words.

This is analogous to `max_df` in scikit-learn's `CountVectorizer`.

Other changes:

- Refactored code to invoke `filter()` only when maxDF or minDF is set.

- Refactored code to unpersist input after counting is done.
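A minimal sketch of document-frequency filtering with both thresholds, assuming that a threshold >= 1.0 is an absolute document count and one < 1.0 is a fraction of the corpus (the `minDF` convention, assumed here to apply to `maxDF` as well). `DocFreqSketch` and `vocabulary` are hypothetical names.

```scala
object DocFreqSketch {
  // Thresholds >= 1.0 are absolute document counts; below 1.0 they are fractions of the corpus.
  private def toCount(threshold: Double, numDocs: Long): Double =
    if (threshold >= 1.0) threshold else threshold * numDocs

  /** Terms whose document frequency lies in [minDF, maxDF]. */
  def vocabulary(docs: Seq[Seq[String]], minDF: Double, maxDF: Double): Set[String] = {
    val numDocs = docs.length.toLong
    // Document frequency: count each term at most once per document.
    val df: Map[String, Int] =
      docs.flatMap(_.distinct).groupBy(identity).map { case (term, occurrences) => term -> occurrences.length }
    val lo = toCount(minDF, numDocs)
    val hi = toCount(maxDF, numDocs)
    df.collect { case (term, count) if count >= lo && count <= hi => term }.toSet
  }
}
```

With three documents in which "the" appears everywhere, `maxDF = 2.0` (or a fraction such as `0.9`) drops "the" as a likely stop word.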

## How was this patch tested?

Unit tests.

Author: Yacine Mazari <y.mazari@gmail.com>

Closes #20367 from ymazari/SPARK-23166.

## What changes were proposed in this pull request?

Currently, shuffle repartition uses RoundRobinPartitioning, and the generated result is nondeterministic because the order of the input rows is not deterministic.

The bug can be triggered when there is a repartition call following a shuffle (which would lead to non-deterministic row ordering), as the pattern shows below:

upstream stage -> repartition stage -> result stage

(`->` indicates a shuffle)

When one of the executor processes goes down, some tasks of the repartition stage are retried and generate an inconsistent ordering, and some tasks of the result stage are retried and generate different data.

The following code returns 931532, instead of 1000000:

```
import scala.sys.process._

import org.apache.spark.TaskContext

val res = spark.range(0, 1000 * 1000, 1).repartition(200).map { x =>
  x
}.repartition(200).map { x =>
  // Simulate an executor failure on the first attempt of the first two tasks.
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 2) {
    throw new Exception("pkill -f java".!!)
  }
  x
}

res.distinct().count()
```

In this PR, we propose the most straightforward fix for this problem: perform a local sort before partitioning. Once the input row ordering is deterministic, the function from rows to partitions is fully deterministic too.

The downside of this approach is that the extra local sort lowers the performance of `repartition()`, so we add a new config named `spark.sql.execution.sortBeforeRepartition` to control whether this patch is applied. The patch is enabled by default to be safe by default, but users may turn it off manually to avoid the performance regression.

This patch also changes the output row ordering of `repartition()`, which makes a number of test cases fail because they compare the results directly.
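The determinism argument can be illustrated without Spark. In this sketch, `roundRobin` assigns rows to partitions purely by arrival position, so the same rows arriving in a different order (as after a retried upstream shuffle) land in different partitions; sorting locally first makes the mapping depend only on the row values. `RoundRobinSketch` is hypothetical, `start` stands in for the per-task starting partition, and the natural `Ordering` stands in for whatever deterministic sort key Spark uses.

```scala
object RoundRobinSketch {
  /** Assign rows to partitions round-robin, starting at `start`, in arrival order. */
  def roundRobin[T](rows: Seq[T], numPartitions: Int, start: Int = 0): Map[Int, Seq[T]] =
    rows.zipWithIndex
      .groupBy { case (_, i) => (start + i) % numPartitions }
      .map { case (p, indexed) => p -> indexed.map(_._1) }

  /** Sorting locally first makes the row-to-partition mapping independent of arrival order. */
  def sortThenRoundRobin[T: Ordering](rows: Seq[T], numPartitions: Int, start: Int = 0): Map[Int, Seq[T]] =
    roundRobin(rows.sorted, numPartitions, start)
}
```

For example, `Seq(1, 2, 3, 4)` and `Seq(2, 1, 4, 3)` are split differently by `roundRobin(_, 2)`, but identically by `sortThenRoundRobin(_, 2)`.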

## How was this patch tested?

Added a unit test in ExchangeSuite.

With this patch (and `spark.sql.execution.sortBeforeRepartition` set to true), the following query returns 1000000:

```
import scala.sys.process._

import org.apache.spark.TaskContext

spark.conf.set("spark.sql.execution.sortBeforeRepartition", "true")

val res = spark.range(0, 1000 * 1000, 1).repartition(200).map { x =>
  x
}.repartition(200).map { x =>
  // Simulate an executor failure on the first attempt of the first two tasks.
  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId < 2) {
    throw new Exception("pkill -f java".!!)
  }
  x
}

res.distinct().count()
res7: Long = 1000000
```

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #20393 from jiangxb1987/shuffle-repartition.

## What changes were proposed in this pull request?

Currently, the behavior is inconsistent when both single- and multi-column params are supported: in some cases exceptions are thrown, in others only a warning is logged. In this discussion https://issues.apache.org/jira/browse/SPARK-8418?focusedCommentId=16275049&page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel#comment-16275049, the decision was to throw an exception.

The PR throws an exception in `Bucketizer`, instead of logging a warning.

## How was this patch tested?

modified UT

Author: Marco Gaido <marcogaido91@gmail.com>

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #19993 from mgaido91/SPARK-22799.

## What changes were proposed in this pull request?

When parsing raw image data in ImageSchema.decode(), we use a [java.awt.Color](https://docs.oracle.com/javase/7/docs/api/java/awt/Color.html#Color(int)) constructor that sets alpha = 255, even for four-channel images (which may have different alpha values). This PR fixes this issue & adds a unit test to verify correctness of reading four-channel images.
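`java.awt.Color(int)` treats its argument as opaque RGB and forces alpha to 255, whereas `Color(int, true)` keeps the alpha bits. The sketch below shows the same distinction with plain bit manipulation on a packed ARGB pixel, writing bytes in B, G, R, A order (our assumption of the OpenCV-style channel order). `PixelSketch` and `toBgra` are hypothetical names, not the `ImageSchema.decode()` code.

```scala
object PixelSketch {
  /**
   * Split a packed ARGB pixel into bytes in B, G, R, A order.
   * With hasAlpha = false, alpha is forced to 255, like java.awt.Color(int).
   */
  def toBgra(argb: Int, hasAlpha: Boolean): Array[Byte] = {
    val a = if (hasAlpha) (argb >>> 24) & 0xff else 0xff
    val r = (argb >>> 16) & 0xff
    val g = (argb >>> 8) & 0xff
    val b = argb & 0xff
    Array(b.toByte, g.toByte, r.toByte, a.toByte)
  }
}
```

A half-transparent pixel `0x80112233` keeps its alpha of `0x80` only when `hasAlpha` is true.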

## How was this patch tested?

Updates an existing unit test ("readImages pixel values test" in `ImageSchemaSuite`) to also verify correctness when reading a four-channel image.

Author: Sid Murching <sid.murching@databricks.com>

Closes #20389 from smurching/image-schema-bugfix.

## What changes were proposed in this pull request?

Correctly guard against empty datasets in `org.apache.spark.ml.classification.Classifier`

## How was this patch tested?

existing tests

Author: Matthew Tovbin <mtovbin@salesforce.com>

Closes #20321 from tovbinm/SPARK-23152.

## What changes were proposed in this pull request?

Currently, KMeans assumes that the only possible distance measure is the Euclidean distance. This PR adds cosine distance support to the KMeans algorithm.

## How was this patch tested?

existing and added UTs.

Author: Marco Gaido <marcogaido91@gmail.com>

Author: Marco Gaido <mgaido@hortonworks.com>

Closes #19340 from mgaido91/SPARK-22119.

## What changes were proposed in this pull request?

Make `ML.Vectors#sparse(size: Int, elements: Seq[(Int, Double)])` support zero-length vectors.

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #20275 from zhengruifeng/SparseVector_size.

## What changes were proposed in this pull request?

Fixed some typos found in ML scaladocs

## How was this patch tested?

NA

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #20300 from BryanCutler/ml-doc-typos-MINOR.

## What changes were proposed in this pull request?

RFormula should use VectorSizeHint & OneHotEncoderEstimator in its pipeline to avoid using the deprecated OneHotEncoder & to ensure the model produced can be used in streaming.

## How was this patch tested?

Unit tests.


Author: Bago Amirbekian <bago@databricks.com>

Closes #20229 from MrBago/rFormula.

## What changes were proposed in this pull request?

This patch bumps the master branch version to `2.4.0-SNAPSHOT`.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20222 from gatorsmile/bump24.

## What changes were proposed in this pull request?

Including VectorSizeHint in RFormula pipelines allows them to be applied to streaming DataFrames.

## How was this patch tested?

Unit tests.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20238 from MrBago/rFormulaVectorSize.

## What changes were proposed in this pull request?

Add a note to the `HasCheckpointInterval` parameter doc clarifying that this setting is ignored when no checkpoint directory has been set on the SparkContext.

## How was this patch tested?

No tests necessary, just a doc update.

Author: sethah <shendrickson@cloudera.com>

Closes #20188 from sethah/als_checkpoint_doc.

## What changes were proposed in this pull request?

Follow-up cleanups for the OneHotEncoderEstimator PR. See some discussion in the original PR: https://github.com/apache/spark/pull/19527 or read below for what this PR includes:

* configedCategorySize: I reverted this to return an Array. I realized the original setup (which I had recommended in the original PR) caused the whole model to be serialized in the UDF.

* encoder: I reorganized the logic to show what I meant in the comment in the previous PR. I think it's simpler but am open to suggestions.

I also made some small style cleanups based on IntelliJ warnings.

## How was this patch tested?

Existing unit tests

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #20132 from jkbradley/viirya-SPARK-13030.

## What changes were proposed in this pull request?

Avoid holding all models in memory for `TrainValidationSplit`.

## How was this patch tested?

Existing tests.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20143 from MrBago/trainValidMemoryFix.

## What changes were proposed in this pull request?

This patch adds a new class `OneHotEncoderEstimator` which extends `Estimator`. The `fit` method returns `OneHotEncoderModel`.

Common methods between existing `OneHotEncoder` and new `OneHotEncoderEstimator`, such as transforming schema, are extracted and put into `OneHotEncoderCommon` to reduce code duplication.

### Multi-column support

`OneHotEncoderEstimator` adds simpler multi-column support because it is a new API and is free from backward-compatibility constraints.

### handleInvalid Param support

`OneHotEncoderEstimator` supports `handleInvalid` Param. It supports `error` and `keep`.
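A simplified sketch of how `handleInvalid` can affect the encoded vector: with `"keep"`, invalid or unseen indices go to one extra trailing slot, and with `"error"` they raise an exception. `OneHotSketch` is hypothetical, returns dense arrays, and ignores `dropLast`, which the real encoder also applies.

```scala
object OneHotSketch {
  /**
   * Encode a category index as a dense one-hot array of length numCategories,
   * plus one extra slot for invalid values when handleInvalid = "keep".
   */
  def encode(index: Int, numCategories: Int, handleInvalid: String): Array[Double] = {
    val keep = handleInvalid == "keep"
    val size = if (keep) numCategories + 1 else numCategories
    val valid = index >= 0 && index < numCategories
    val slot =
      if (valid) index
      else if (keep) numCategories // extra bucket for invalid/unseen values
      else throw new IllegalArgumentException(s"Invalid category index $index; set handleInvalid to 'keep'")
    val out = new Array[Double](size)
    out(slot) = 1.0
    out
  }
}
```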

## How was this patch tested?

Added new test suite `OneHotEncoderEstimatorSuite`.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #19527 from viirya/SPARK-13030.

## What changes were proposed in this pull request?

Previously, `FeatureHasher` always treated numeric type columns as numbers and never as categorical features. It is quite common to have categorical features represented as numbers or codes in data sources.

In order to hash these features as categorical, users must first explicitly convert them to strings, which is cumbersome.

Add a new param `categoricalCols` that specifies the numeric columns that should be treated as categorical features.
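A simplified sketch of the difference, assuming Spark-like conventions: a numeric column is hashed by its column name and keeps its value, while a string or categorical column is hashed as `"column=value"` with value 1.0. It uses Scala's `MurmurHash3.stringHash` as a stand-in hash, which is not necessarily the hash or seed `FeatureHasher` uses; `HasherSketch`, `index` and `hashRow` are hypothetical.

```scala
import scala.util.hashing.MurmurHash3

object HasherSketch {
  /** Map a term to a bucket in [0, numFeatures). */
  def index(term: String, numFeatures: Int): Int = {
    val h = MurmurHash3.stringHash(term) % numFeatures
    if (h < 0) h + numFeatures else h
  }

  def hashRow(row: Map[String, Any], categoricalCols: Set[String], numFeatures: Int): Map[Int, Double] =
    row.toSeq.map {
      // Numeric column not listed as categorical: bucket by column name, keep the value.
      case (col, v: Double) if !categoricalCols.contains(col) => index(col, numFeatures) -> v
      // Everything else (strings, or numerics listed in categoricalCols): "col=value" -> 1.0.
      case (col, v) => index(s"$col=$v", numFeatures) -> 1.0
    }.groupBy(_._1).map { case (i, kvs) => i -> kvs.map(_._2).sum }
}
```

With `categoricalCols = Set("zip")`, a numeric zip code such as `94107.0` becomes the indicator feature `"zip=94107.0"` instead of a magnitude.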

## How was this patch tested?

New unit tests.

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #19991 from MLnick/hasher-num-cat.

## What changes were proposed in this pull request?

Add multi-column support to QuantileDiscretizer.

When calculating the splits, we can either merge all the probabilities into one array by calculating approxQuantiles on multiple columns at once, or compute approxQuantiles separately for each column. After comparing performance, we found it is better to calculate approxQuantiles on multiple columns at once.

Here is how we measured the running time:

```
// Average wall-clock time of discretizer.fit(df) over 10 runs, in seconds.
var duration = 0.0
for (_ <- 0 until 10) {
  val start = System.nanoTime()
  discretizer.fit(df)
  val end = System.nanoTime()
  duration += (end - start) / 1e9
}
println(duration / 10)
```

Here is the performance test result:

| numCols | numRows | approxQuantiles per column, separately (s) | approxQuantiles on all columns at once (s) |
|--------:|--------:|-------------------------------------------:|-------------------------------------------:|
| 10 | 60 | 0.3623195839 | 0.1626658607 |
| 10 | 6000 | 0.7537239841 | 0.3869370046 |
| 22 | 6000 | 1.6497598557 | 0.4767903059 |
| 50 | 6000 | 3.2268305752 | 0.7217818396 |

## How was this patch tested?

Added a UT in QuantileDiscretizerSuite to test multi-column support.

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #19715 from huaxingao/spark_22397.

## What changes were proposed in this pull request?

Add Structured Streaming tests to the ML regression package test suites.

To make the test suites easier to modify, a new helper function is added in `MLTest`:

```
def testTransformerByGlobalCheckFunc[A : Encoder](
    dataframe: DataFrame,
    transformer: Transformer,
    firstResultCol: String,
    otherResultCols: String*)
    (globalCheckFunction: Seq[Row] => Unit): Unit
```

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Author: Bago Amirbekian <bago@databricks.com>

Closes#19979 from WeichenXu123/ml_stream_test.

## What changes were proposed in this pull request?

Python API for VectorSizeHint Transformer.

## How was this patch tested?

doc-tests.

Author: Bago Amirbekian <bago@databricks.com>

Closes#20112 from MrBago/vectorSizeHint-PythonAPI.

## What changes were proposed in this pull request?

Make sure the model data is stored in order. cc WeichenXu123

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#20113 from zhengruifeng/gmm_save.

## What changes were proposed in this pull request?

Currently, `ChiSqSelectorModel` saves its data with:

```scala
spark.createDataFrame(dataArray).repartition(1).write...
```

The default number of partitions used by `createDataFrame` is `defaultParallelism`.

The current `RoundRobinPartitioning` does not guarantee that `repartition` produces rows in the same order as the local array. We need to fix this.

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Closes#20088 from WeichenXu123/fix_chisq_model_save.

## What changes were proposed in this pull request?

Fix `OneVsRestModel.transform` failing on streaming data.

## How was this patch tested?

Unit tests will be added once #19979 is merged (it provides a needed helper test method).

Author: WeichenXu <weichen.xu@databricks.com>

Closes#20077 from WeichenXu123/fix_ovs_model_transform.

## What changes were proposed in this pull request?

Through some tests I found that `CrossValidator` still has a memory issue: it occupies `O(n*sizeof(model))` memory for holding models during fitting, whereas, if well optimized, it should occupy only `O(parallelism*sizeof(model))`.

This is because each entry of `modelFutures` holds a reference to its model object after the future completes (the model can be fetched via `future.value.get.get`), and both `Future.sequence` and the `modelFutures` array hold references to every model future. So all model objects stay referenced, and memory usage remains `O(n*sizeof(model))`.

I fix this by merging `modelFuture` and `foldMetricFuture` together, and using an `AtomicInteger` to count completed fitting tasks; when all are done, `trainingDataset.unpersist` is triggered.

I commented on this issue in the old PR [SPARK-19357]:

https://github.com/apache/spark/pull/16774#pullrequestreview-53674264

Unfortunately, at that time I did not realize that the issue still existed; now I have confirmed it and created this PR to fix it.

## Discussion

Below I give three approaches for comparison. After discussion I realized that none of them is ideal, so we have to make a trade-off.

**After discussion with jkbradley, we chose approach 3.**

### Approach 1

~~The approach proposed by MrBago at~~ https://github.com/apache/spark/pull/19904#discussion_r156751569

~~This approach resolves the model-reference issue and allows the model objects to be GCed in time. **BUT, in some cases, it still does not resolve the O(N) model memory occupation issue**. Let me use an extreme case to describe it:~~

~~Suppose we set `parallelism = 1` and there are 100 paramMaps, so we have 100 fitting & evaluation tasks. In this approach, because `parallelism = 1`, the code has to wait for all 100 fitting tasks to complete **(at which point the memory occupied by models already reaches 100 * sizeof(model))**, and only then unpersists the training dataset and runs the 100 evaluation tasks.~~

### Approach 2

~~This approach is my PR old version code~~ 2cc7c28f38

~~This approach ensures that in every case the peak memory occupied by models is `O(numParallelism * sizeof(model))`, but it has an issue: in some extreme cases, unpersisting the training dataset is delayed until most of the evaluation tasks complete. Suppose `parallelism = 1` and there are 100 fitting & evaluation tasks. Each fitting & evaluation task has to run one by one, so unpersisting the training dataset is triggered only after the first 99 fitting & evaluation tasks and the 100th fitting task complete.~~

### Approach 3

After comparing approach 1 and approach 2, I realized that when parallelism is low but there are many fitting & evaluation tasks, we cannot achieve both of the following goals:

- Keep the peak memory occupied by models (driver side) at O(parallelism * sizeof(model)).

- Unpersist the training dataset before most of the evaluation tasks start.

So I vote for a simpler approach: move the unpersisting of the training dataset to the end (does this really matter?).

Because goal 1 is more important, we must make sure the peak memory occupied by models (driver side) is O(parallelism * sizeof(model)); otherwise there is a high risk of OOM.

The code looks like this:

```scala
val foldMetricFutures = epm.zipWithIndex.map { case (paramMap, paramIndex) =>
  Future[Double] {
    val model = est.fit(trainingDataset, paramMap).asInstanceOf[Model[_]]
    // ...other minor code
    val metric = eval.evaluate(model.transform(validationDataset, paramMap))
    logDebug(s"Got metric $metric for model trained with $paramMap.")
    metric
  } (executionContext)
}

val foldMetrics = foldMetricFutures.map(ThreadUtils.awaitResult(_, Duration.Inf))

trainingDataset.unpersist() // <------- unpersist at the end
validationDataset.unpersist()
```
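The same pattern (each task fits and then immediately evaluates, so only a metric outlives it) can be sketched in Python with a bounded thread pool. This is a hypothetical illustration, not Spark code: `fit`, `evaluate` and `cleanup` are caller-supplied stand-ins, and `cleanup` plays the role of unpersisting the datasets at the end.

```python
from concurrent.futures import ThreadPoolExecutor


def fit_and_evaluate_fold(param_maps, fit, evaluate, parallelism, cleanup):
    """Return one metric per param map, running at most `parallelism` tasks at once."""

    def task(param_map):
        model = fit(param_map)   # the model is only referenced inside this task...
        return evaluate(model)   # ...so only the float metric outlives it

    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        metrics = list(pool.map(task, param_maps))  # results keep input order
    cleanup()  # e.g. unpersist the training/validation datasets, at the very end
    return metrics
```

Because each future's result is a number rather than a model, at most `parallelism` models are alive at once (goal 1), while releasing the datasets waits for every task to finish, which is the trade-off accepted in approach 3.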

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19904 from WeichenXu123/fix_cross_validator_memory_issue.

## What changes were proposed in this pull request?

A new VectorSizeHint transformer was added. This transformer is meant to be used as a pipeline stage ahead of VectorAssembler, on vector columns, so that VectorAssembler can join vectors in a streaming context where the size of the input vectors is otherwise not known.
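A plain-Python sketch of what such a size hint does for one vector column. The `error`/`skip`/`optimistic` modes mirror the `handleInvalid` options of Spark's `VectorSizeHint` as I understand them; the dict-per-row input and the returned metadata dict are hypothetical simplifications of Spark's column metadata.

```python
def apply_size_hint(rows, col, size, handle_invalid="error"):
    """Keep rows whose vector in `col` has the declared size, per `handle_invalid`."""
    if handle_invalid not in ("error", "skip", "optimistic"):
        raise ValueError(f"unknown handle_invalid: {handle_invalid}")
    kept = []
    for row in rows:
        vec = row[col]
        if handle_invalid == "optimistic" or (vec is not None and len(vec) == size):
            kept.append(row)  # optimistic mode trusts the hint without checking
        elif handle_invalid == "skip":
            continue  # drop rows with null or wrongly sized vectors
        else:
            raise ValueError(f"expected a vector of size {size} in {col!r}, got {vec!r}")
    # The size is recorded as metadata so a downstream assembler need not inspect the data
    return kept, {col: {"size": size}}
```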

## How was this patch tested?

Unit tests.


Author: Bago Amirbekian <bago@databricks.com>

Closes#19746 from MrBago/vector-size-hint.

## What changes were proposed in this pull request?

Register the following classes in Kryo:

- `org.apache.spark.mllib.regression.LabeledPoint`
- `org.apache.spark.mllib.clustering.VectorWithNorm`
- `org.apache.spark.ml.feature.LabeledPoint`
- `org.apache.spark.ml.tree.impl.TreePoint`

`org.apache.spark.ml.tree.impl.BaggedPoint` also seems to need registering, but I don't know how to do so safely.

cc WeichenXu123 cloud-fan

## How was this patch tested?

added tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#19950 from zhengruifeng/labeled_kryo.

## What changes were proposed in this pull request?

Make several improvements to the DataFrame vectorized summarizer.

1. Make the summarizer return `Vector` type for all metrics (except "count").
   Previously it returned `WrappedArray`, which was not very convenient.

2. Make `MetricsAggregate` inherit the `ImplicitCastInputTypes` trait, so it can check and implicitly cast input values.

3. Add a "weight" parameter to all single-metric methods.

4. Update the docs and improve the example code in them.

5. Simplify test cases.
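Point 3 adds weights to the single-metric methods. As a plain-Python sketch (no Spark, hypothetical function name), a weighted mean and variance per vector dimension can be computed as below. The unbiased "reliability weights" denominator $W - \sum_i w_i^2 / W$ is an assumption about the convention, chosen because it reduces to the usual $n - 1$ when every weight is 1.

```python
def weighted_mean_and_variance(vectors, weights):
    """Per-dimension weighted mean and unbiased (reliability-weighted) variance."""
    dim = len(vectors[0])
    w_sum = sum(weights)
    w_sq_sum = sum(w * w for w in weights)
    mean = [sum(w * v[j] for v, w in zip(vectors, weights)) / w_sum for j in range(dim)]
    denom = w_sum - w_sq_sum / w_sum  # equals n - 1 when every weight is 1
    var = [
        sum(w * (v[j] - mean[j]) ** 2 for v, w in zip(vectors, weights)) / denom
        for j in range(dim)
    ]
    return mean, var
```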

## How was this patch tested?

Test added and simplified.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19156 from WeichenXu123/improve_vec_summarizer.

## What changes were proposed in this pull request?

unpersist unused datasets

## How was this patch tested?

existing tests and local check in Spark-Shell

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#20017 from zhengruifeng/bkm_unpersist.

## What changes were proposed in this pull request?

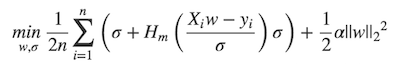

MLlib ```LinearRegression``` supports _huber_ loss addition to _leastSquares_ loss. The huber loss objective function is:

Refer to Eq. (6) and Eq. (8) in [A robust hybrid of lasso and ridge regression](http://statweb.stanford.edu/~owen/reports/hhu.pdf). This objective is jointly convex as a function of (w, σ) ∈ R × (0,∞), so we can use L-BFGS-B to solve it.

The current implementation is a straightforward port of Python scikit-learn's [`HuberRegressor`](http://scikit-learn.org/stable/modules/generated/sklearn.linear_model.HuberRegressor.html). There are some differences:

* We use mean loss (`lossSum/weightSum`), but sklearn uses total loss (`lossSum`).

* We multiply the loss function and L2 regularization by 1/2. Multiplying the whole formula by a constant factor does not affect the result; we do it only to stay consistent with _leastSquares_ loss.

So when fitting without regularization, MLlib and sklearn produce the same output. When fitting with regularization, set MLlib's `regParam` to sklearn's `alpha` divided by the number of instances to match sklearn's output.
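The two objectives can be compared numerically. The sketch below is plain Python with hypothetical helper names; it drops the intercept for brevity and uses `epsilon = 1.35`, which I believe is the default in both libraries. It checks that the MLlib-style objective with `regParam = alpha / n`, multiplied by `2n`, equals the sklearn-style objective, which is why both have the same minimizer.

```python
def huber_h(z, epsilon):
    """H_m(z): quadratic near zero, linear in the tails."""
    return z * z if abs(z) < epsilon else 2.0 * epsilon * abs(z) - epsilon ** 2


def residuals(X, y, w):
    return [sum(xi * wi for xi, wi in zip(row, w)) - yi for row, yi in zip(X, y)]


def huber_loss_sum(X, y, w, sigma, epsilon):
    return sum(sigma + huber_h(r / sigma, epsilon) * sigma for r in residuals(X, y, w))


def mllib_objective(X, y, w, sigma, reg_param, epsilon=1.35):
    # Mean loss scaled by 1/2, plus (1/2) * lambda * ||w||^2
    n = len(y)
    return huber_loss_sum(X, y, w, sigma, epsilon) / (2.0 * n) + 0.5 * reg_param * sum(wi * wi for wi in w)


def sklearn_objective(X, y, w, sigma, alpha, epsilon=1.35):
    # Total loss plus alpha * ||w||^2
    return huber_loss_sum(X, y, w, sigma, epsilon) + alpha * sum(wi * wi for wi in w)
```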

## How was this patch tested?

Unit tests.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes#19020 from yanboliang/spark-3181.

## What changes were proposed in this pull request?

Only drop the rows containing NaN in the input columns.

## How was this patch tested?

existing tests and added tests

Author: Ruifeng Zheng <ruifengz@foxmail.com>

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#19894 from zhengruifeng/bucketizer_nan.

## What changes were proposed in this pull request?

We need to add some helper code to make testing ML transformers & models easier with streaming data. These tests might help us catch any remaining issues and we could encourage future PRs to use these tests to prevent new Models & Transformers from having issues.

I added an `MLTest` trait that extends the `StreamTest` trait and overrides `createSparkSession`, so an ML test suite only needs to extend `MLTest` to use both the ML and streaming test utility functions.

For a first-pass review, I only modified one test case in `LinearRegressionSuite`.

Link to #19746

## How was this patch tested?

`MLTestSuite` added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19843 from WeichenXu123/ml_stream_test_helper.

## What changes were proposed in this pull request?

#19208 modified `sharedParams.scala` directly instead of regenerating it with `SharedParamsCodeGen.scala`, which introduced a mismatch between the two files.

## How was this patch tested?

Existing test.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes#19958 from yanboliang/spark-21087.

## What changes were proposed in this pull request?

jira: https://issues.apache.org/jira/browse/SPARK-22289

Add JSON encoding/decoding for `Param[Matrix]`.

The issue was reported by Nic Eggert when saving an LR model with LowerBoundsOnCoefficients.

As I see it, there are two ways to resolve this:

1. Support save/load on LogisticRegressionParams, and also adjust the save/load in LogisticRegression and LogisticRegressionModel.

2. Directly support Matrix in Param.jsonEncode, similar to what we have done for Vector.

After some discussion in jira, we prefer the fix to support Matrix as a valid Param type, for simplicity and convenience for other classes.

Note that in the implementation, I added a "class" field to the JSON object so that the matching JSON converter can be selected when loading; this is for precision and future extensibility.
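A plain-Python sketch of the dispatch idea. The field names (`class`, `numRows`, `numCols`, `values`) are hypothetical and need not match Spark's actual JSON layout; the point is that a `class` tag lets the decoder pick the matrix converter and fall back to the vector converter otherwise. Values are stored column-major, as in Spark's `DenseMatrix`.

```python
import json


def encode_matrix(num_rows, num_cols, values):
    """Encode a dense, column-major matrix as JSON tagged with a "class" field."""
    return json.dumps({"class": "matrix", "numRows": num_rows,
                       "numCols": num_cols, "values": values})


def decode_param(text):
    obj = json.loads(text)
    if obj.get("class") == "matrix":
        # Column-major storage: element (i, j) lives at values[i + j * numRows]
        n_rows, n_cols, vals = obj["numRows"], obj["numCols"], obj["values"]
        return [[vals[i + j * n_rows] for j in range(n_cols)] for i in range(n_rows)]
    # Objects without a matrix "class" tag fall back to the vector converter
    return list(obj["values"])
```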

## How was this patch tested?

new unit test to cover the LR case and JsonMatrixConverter

Author: Yuhao Yang <yuhao.yang@intel.com>

Closes#19525 from hhbyyh/lrsave.

## What changes were proposed in this pull request?

make `Imputer` inherit `HasOutputCols`

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#19889 from zhengruifeng/using_HasOutputCols.

## What changes were proposed in this pull request?

Add a Spark image reader, which implements a schema for representing images in Spark DataFrames.

The code is taken from the spark package located here:

(https://github.com/Microsoft/spark-images)

Please see the JIRA for more information (https://issues.apache.org/jira/browse/SPARK-21866)

Please see mailing list for SPIP vote and approval information:

(http://apache-spark-developers-list.1001551.n3.nabble.com/VOTE-SPIP-SPARK-21866-Image-support-in-Apache-Spark-td22510.html)

# Background and motivation

As Apache Spark is being used more and more in the industry, some new use cases are emerging for different data formats beyond the traditional SQL types or the numerical types (vectors and matrices). Deep Learning applications commonly deal with image processing. A number of projects add some Deep Learning capabilities to Spark (see list below), but they struggle to communicate with each other or with MLlib pipelines because there is no standard way to represent an image in Spark DataFrames. We propose to federate efforts for representing images in Spark by defining a representation that caters to the most common needs of users and library developers.

This SPIP proposes a specification to represent images in Spark DataFrames and Datasets (based on existing industrial standards), and an interface for loading sources of images. It is not meant to be a full-fledged image processing library, but rather the core description that other libraries and users can rely on. Several packages already offer various processing facilities for transforming images or doing more complex operations, and each has various design tradeoffs that make them better as standalone solutions.

This project is a joint collaboration between Microsoft and Databricks, which have been testing this design in two open source packages: MMLSpark and Deep Learning Pipelines.

The proposed image format is an in-memory, decompressed representation that targets low-level applications. It is significantly more liberal in memory usage than compressed image representations such as JPEG, PNG, etc., but it allows easy communication with popular image processing libraries and has no decoding overhead.
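To illustrate why a decompressed layout has no decoding overhead, here is a plain-Python sketch of one image record. The field names mirror those of Spark's image schema as I understand it (`origin`, `height`, `width`, `nChannels`, `mode`, `data`), with pixel bytes stored row by row in interleaved BGR order; `mode = 16` is the OpenCV type code for `CV_8UC3`. The helper functions are hypothetical.

```python
def make_image_row(origin, pixels_bgr):
    """Build an image record from a height x width grid of (b, g, r) byte tuples."""
    height, width = len(pixels_bgr), len(pixels_bgr[0])
    data = bytes(c for row in pixels_bgr for px in row for c in px)  # row-major, interleaved BGR
    return {"origin": origin, "height": height, "width": width,
            "nChannels": 3, "mode": 16,  # 16 = OpenCV CV_8UC3 (8-bit, 3 channels)
            "data": data}


def pixel(image, row, col, channel):
    # Reading a channel is pure offset arithmetic: no decompression step
    offset = (row * image["width"] + col) * image["nChannels"] + channel
    return image["data"][offset]
```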

## How was this patch tested?

Unit tests in Scala (`ImageSchemaSuite`) and unit tests in Python.

Author: Ilya Matiach <ilmat@microsoft.com>

Author: hyukjinkwon <gurwls223@gmail.com>

Closes#19439 from imatiach-msft/ilmat/spark-images.

## What changes were proposed in this pull request?

Add Python API support for `VectorIndexerModel` handling unseen categories via `handleInvalid`.

## How was this patch tested?

doctest added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19753 from WeichenXu123/vector_indexer_invalid_py.

## What changes were proposed in this pull request?

`SQLTransformer.transform` unpersists the input dataset when dropping the temporary view. We should not change the input dataset's cache status.

## How was this patch tested?

Added test.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#19772 from viirya/SPARK-22538.

## What changes were proposed in this pull request?

I added adjusted R2 as a regression metric; it is implemented in all major statistical analysis tools.

In practice, R2 is rarely looked at alone because it can be misleading: adding more parameters never decreases R2 (it only increases or stays the same), which encourages overfitting. Adjusted R2 addresses this by accounting for the number of parameters, scaling the sum of squared errors by the residual degrees of freedom.
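In formula form, with $n$ instances and $p$ features plus an intercept, $\bar{R}^2 = 1 - (1 - R^2)\frac{n - 1}{n - p - 1}$. A plain-Python sketch (the function name is hypothetical, and the intercept is an assumption; without an intercept the denominator changes):

```python
def adjusted_r2(y_true, y_pred, num_params):
    """Adjusted R^2 for a model with `num_params` features plus an intercept."""
    n = len(y_true)
    mean = sum(y_true) / n
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean) ** 2 for y in y_true)
    r2 = 1.0 - ss_res / ss_tot
    # Penalize extra parameters through the residual degrees of freedom
    return 1.0 - (1.0 - r2) * (n - 1) / (n - num_params - 1)
```

With the same predictions, going from `num_params=1` to `num_params=2` lowers the score from about 0.987 to 0.98, although plain R2 stays at 0.99.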

## How was this patch tested?

- Added a new unit test and passed.

- ./dev/run-tests all passed.

Author: test <joseph.peng@quetica.com>

Author: tengpeng <tengpeng@users.noreply.github.com>

Closes#19638 from tengpeng/master.

## What changes were proposed in this pull request?

Support the skip/error/keep strategies for handling invalid (unseen) categories, similar to `StringIndexer`.

This is implemented via `try...catch` to avoid a possible performance impact.
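The `try...catch` approach keeps the common case cheap: known values take the fast path, and the strategy is consulted only when a lookup fails. A plain-Python sketch, where the category-map representation and function name are hypothetical and `keep` assigns unseen values an extra index equal to the number of known categories (my understanding of the `keep` behaviour):

```python
def index_categorical(value, category_map, handle_invalid="error"):
    """Map a raw feature value to its category index, handling unseen values."""
    try:
        return category_map[value]  # fast path: no extra check for known values
    except KeyError:
        if handle_invalid == "keep":
            return len(category_map)  # one extra index reserved for unseen values
        if handle_invalid == "skip":
            return None  # signal the caller to drop this row
        raise ValueError(f"unseen category {value!r}; use 'skip' or 'keep' to handle it")
```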

## How was this patch tested?

Unit test added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19588 from WeichenXu123/handle_invalid_for_vector_indexer.

## What changes were proposed in this pull request?

We add a parameter that controls whether to collect the full list of models when training `CrossValidator`/`TrainValidationSplit` (off by default, so the change does not cause OOM).

- Add a method to `CrossValidatorModel`/`TrainValidationSplitModel` that allows users to get the model list.

- Add an "option" to `CrossValidatorModelWriter` that allows users to control whether to persist the model list to disk (persisted by default).

- Note: when persisting the model list, use indices as the sub-model path

## How was this patch tested?

Test cases added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19208 from WeichenXu123/expose-model-list.

## What changes were proposed in this pull request?

In `ALS.predict` currently we are using `blas.sdot` function to perform a dot product on two `Seq`s. It turns out that this is not the most efficient way.

I used the following code to compare the implementations:

```scala
def time[R](block: => R): Unit = {
  val t0 = System.nanoTime()
  block
  val t1 = System.nanoTime()
  println("Elapsed time: " + (t1 - t0) + "ns")
}

val r = new scala.util.Random(100)
val input = (1 to 500000).map(_ => (1 to 100).map(_ => r.nextFloat).toSeq)

def f(a: Seq[Float], b: Seq[Float]): Float = {
  var r = 0.0f
  for (i <- 0 until a.length) {
    r += a(i) * b(i)
  }
  r
}

import com.github.fommil.netlib.BLAS.{getInstance => blas}

val b = (1 to 100).map(_ => r.nextFloat).toSeq

time { input.foreach(a => blas.sdot(100, a.toArray, 1, b.toArray, 1)) }
// on average it takes 2968718815 ns

time { input.foreach(a => f(a, b)) }
// on average it takes 515510185 ns
```

Thus this PR proposes the old-style for loop implementation for performance reasons.

## How was this patch tested?

existing UTs

Author: Marco Gaido <mgaido@hortonworks.com>

Closes#19685 from mgaido91/SPARK-19759.

## What changes were proposed in this pull request?

Some algorithms, such as KMeans, are still based on mllib. Currently, many common mllib classes (such as `Vector`, `DenseVector`, `SparseVector`, `Matrix`, `DenseMatrix`, `SparseMatrix`) are not registered in Kryo, which causes performance issues when serializing or deserializing those objects.

Previously discussed: https://github.com/apache/spark/pull/19586

## How was this patch tested?

New test case.

Author: Xianyang Liu <xianyang.liu@intel.com>

Closes#19661 from ConeyLiu/register_vector.

## What changes were proposed in this pull request?

Provide `featureSubsetStrategy` for `GBTClassifier`:

a) Moved `featureSubsetStrategy` to `TreeEnsembleParams`.

b) Changed `GBTClassifier` to pass `featureSubsetStrategy`:
`val firstTreeModel = firstTree.train(input, treeStrategy, featureSubsetStrategy)`
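For reference, the strategy strings translate into a number of candidate features per node roughly as follows. This plain-Python sketch is an assumption based on the documented options for Spark's tree ensembles (`auto`, `all`, `onethird`, `sqrt`, `log2`, or a fraction); the exact rounding, integer counts (omitted here), and the meaning of `auto` for GBTs are not verified.

```python
import math


def num_features_per_node(strategy, num_features):
    """Candidate features per tree node for a featureSubsetStrategy value (sketch)."""
    if strategy in ("auto", "all"):
        return num_features  # assumption: "auto" means "all" for the single trees GBTs build
    if strategy == "sqrt":
        return math.ceil(math.sqrt(num_features))
    if strategy == "log2":
        return max(1, math.ceil(math.log2(num_features)))
    if strategy == "onethird":
        return math.ceil(num_features / 3.0)
    fraction = float(strategy)  # e.g. "0.5"
    if not 0.0 < fraction <= 1.0:
        raise ValueError(f"unsupported strategy: {strategy!r}")
    return math.ceil(fraction * num_features)
```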

## How was this patch tested?

a) Tested `GradientBoostedTreeClassifierExample` by adding `.setFeatureSubsetStrategy` to `GBTClassifier`.

b) Added test cases in `GBTClassifierSuite` and `GBTRegressorSuite`.

Author: Pralabh Kumar <pralabhkumar@gmail.com>

Closes#18118 from pralabhkumar/develop.

## What changes were proposed in this pull request?

The current ML `Bucketizer` can only bin a single column of continuous features. If a dataset has thousands of continuous columns to bin, we end up with thousands of ML stages, which is inefficient for query planning and execution.

We should have a type of bucketizer that can bin many columns at once. It would need to accept a list of arrays of split points corresponding to the columns to bin, but it could make things more efficient by replacing thousands of stages with just one.

The current approach in this patch adds a new `MultipleBucketizerInterface` for this purpose; `Bucketizer` now extends this new interface.

### Performance

Benchmarking using the test dataset provided in JIRA SPARK-20392 (blockbuster.csv).

The ML pipeline includes 2 `StringIndexer`s and either 1 `MultipleBucketizer` or 137 `Bucketizer`s to bin 137 input columns with the same splits. We then measure the time to transform the dataset.

MultipleBucketizer: 3352 ms

Bucketizer: 51512 ms
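Conceptually, binning many columns in one stage means a single pass over each row that looks up every column's splits. A plain-Python sketch with hypothetical names (`bucketize_columns`, the `_bucket` output suffix); it follows the usual `Bucketizer` convention that bucket i covers [splits[i], splits[i+1]), except the last bucket, which also includes the upper bound:

```python
from bisect import bisect_right


def bucketize_columns(rows, splits_by_col):
    """Bin several columns in one pass over the rows."""
    out = []
    for row in rows:
        new_row = dict(row)
        for col, splits in splits_by_col.items():
            x = row[col]
            if not splits[0] <= x <= splits[-1]:
                raise ValueError(f"{x} in column {col!r} is outside the splits")
            # The last bucket is closed on the right, so splits[-1] maps to the last index
            idx = min(bisect_right(splits, x) - 1, len(splits) - 2)
            new_row[col + "_bucket"] = float(idx)
        out.append(new_row)
    return out
```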

## How was this patch tested?

Jenkins tests.


Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#17819 from viirya/SPARK-20542.

## What changes were proposed in this pull request?

UDFs that can throw runtime exceptions on invalid data are not safe to push down, because their behavior depends on their position in the query plan; pushing them down risks changing the original behavior.

The example reported in the JIRA, which is used as a test case, shows this issue. We should declare UDFs that can throw runtime exceptions on invalid data as non-deterministic.

This updates the documentation of the `deterministic` property in `Expression` to state clearly that a UDF that can throw a runtime exception on some specific input should be declared as non-deterministic.
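A hand-written plain-Python illustration (not Spark's optimizer, and not the JIRA's exact case) of why position matters: the same two predicates give a result in one order and an exception in the other, so a UDF that can throw must not be moved ahead of the guard that protects it. All names are hypothetical.

```python
def udf_to_int(s):
    # A UDF that throws on invalid input (here: non-numeric strings)
    return int(s)


def plan_as_written(rows):
    # WHERE is_numeric(s) AND udf_to_int(s) > 1: the guard runs first
    return [s for s in rows if s.isdigit() and udf_to_int(s) > 1]


def plan_with_udf_pushed_first(rows):
    # A reordered plan evaluates the UDF before the guard and hits the bad row
    return [s for s in rows if udf_to_int(s) > 1 and s.isdigit()]
```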

## How was this patch tested?

Added a test. Also tested manually.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#19662 from viirya/SPARK-22446.

## What changes were proposed in this pull request?

Move the `ClusteringEvaluatorSuite` test data (iris) to `data/mllib` to avoid creating a new folder.

## How was this patch tested?

Existing tests.

Author: Yanbo Liang <ybliang8@gmail.com>

Closes#19648 from yanboliang/spark-14516.

## What changes were proposed in this pull request?

Expose the common params from Spark ML as a Developer API.

## How was this patch tested?

Existing tests.

Author: Holden Karau <holden@us.ibm.com>

Author: Holden Karau <holdenkarau@google.com>

Closes#18699 from holdenk/SPARK-7146-ml-shared-params-developer-api.

## What changes were proposed in this pull request?

Scala test source files like `TestHiveSingleton.scala` should be in the Scala source root.

## How was this patch tested?

Just moved the Scala files from the java directory to the scala directory.

No new test case in this PR.

```
renamed: mllib/src/test/java/org/apache/spark/ml/util/IdentifiableSuite.scala -> mllib/src/test/scala/org/apache/spark/ml/util/IdentifiableSuite.scala
renamed: streaming/src/test/java/org/apache/spark/streaming/JavaTestUtils.scala -> streaming/src/test/scala/org/apache/spark/streaming/JavaTestUtils.scala
renamed: streaming/src/test/java/org/apache/spark/streaming/api/java/JavaStreamingListenerWrapperSuite.scala -> streaming/src/test/scala/org/apache/spark/streaming/api/java/JavaStreamingListenerWrapperSuite.scala
renamed: sql/hive/src/test/java/org/apache/spark/sql/hive/test/TestHiveSingleton.scala -> sql/hive/src/test/scala/org/apache/spark/sql/hive/test/TestHiveSingleton.scala
```

Author: xubo245 <601450868@qq.com>

Closes#19639 from xubo245/scalaDirectory.

## What changes were proposed in this pull request?

Update the URL of the reference paper.

## How was this patch tested?

Only comments were changed, so nothing was tested.

Author: bomeng <bmeng@us.ibm.com>

Closes#19614 from bomeng/22399.

## What changes were proposed in this pull request?

Add parallelism support for ML tuning in PySpark.

## How was this patch tested?

Test updated.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19122 from WeichenXu123/par-ml-tuning-py.

## What changes were proposed in this pull request?

Fix occasional failures of the NaiveBayes unit test:

Set a seed for `BrzMultinomial.sample` so that `generateNaiveBayesInput` outputs a deterministic dataset.

(If we do not set a seed, the generated dataset is random, and the model may exceed the tolerance in the test, which triggers this failure.)

## How was this patch tested?

Manually ran the tests multiple times and checked that the output models contained the same values each time.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19558 from WeichenXu123/fix_nb_test_seed.

## What changes were proposed in this pull request?

Remove unused param in `LDAModel.getTopicDistributionMethod`

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#19530 from zhengruifeng/lda_bc.


## What changes were proposed in this pull request?

As proposed by jkbradley, `gammat` is no longer collected to the driver.

## How was this patch tested?

existing test suite.

Author: Valeriy Avanesov <avanesov@wias-berlin.de>

Author: Valeriy Avanesov <acopich@gmail.com>

Closes#18924 from akopich/master.

## What changes were proposed in this pull request?

Currently `percentile_approx` never returns the first element when the percentile is in (relativeError, 1/N], where `relativeError` defaults to 1/10000 and N is the total number of elements. Ideally, percentiles in [0, 1/N] should all return the first element.

For example, given input data 1 to 10, if a user queries the 10% (or lower) percentile, it should return 1, because the first value 1 already reaches 10%. Currently it returns 2.

Based on the paper, targetError should not be rounded up, and the search index should start from 0 instead of 1. Following the paper fixes the cases mentioned above.
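The indexing rule can be checked with an exact, plain-Python version of the query (a sketch on fully sorted data, not the Greenwald-Khanna summary that backs `percentile_approx`; the function name is hypothetical): the target rank p * N is not rounded up, and the search starts at index 0.

```python
def query_percentile(sorted_vals, p):
    """Smallest value whose 1-based rank reaches p * N, searching from index 0."""
    target_rank = p * len(sorted_vals)  # not rounded up
    for i, v in enumerate(sorted_vals):
        if i + 1 >= target_rank:
            return v
    return sorted_vals[-1]
```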

## How was this patch tested?

Added a new test case and fixed existing test cases.

Author: Zhenhua Wang <wzh_zju@163.com>

Closes#19438 from wzhfy/improve_percentile_approx.

## What changes were proposed in this pull request?

Fix a `ProbabilisticClassificationModel` corner case: when normalizing all-zero raw predictions, throw an `IllegalArgumentException` with a description.

## How was this patch tested?

Test case added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19106 from WeichenXu123/SPARK-21770.

This PR adds methods `recommendForUserSubset` and `recommendForItemSubset` to `ALSModel`. These allow recommending for a specified set of user / item ids rather than for every user / item (as in the `recommendForAllX` methods).

The subset methods take a `DataFrame` as input, containing ids in the column specified by the param `userCol` or `itemCol`. The model will generate recommendations for each _unique_ id in this input dataframe.
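A plain-Python sketch of the subset semantics: duplicate ids in the input produce a single entry, and each id gets its top-k items by the dot product of latent factors. The function name and the dict-based factor tables are hypothetical simplifications of the factor DataFrames in `ALSModel`.

```python
def recommend_for_subset(ids, user_factors, item_factors, k):
    """Top-k (item_id, score) pairs for each unique id in `ids`."""
    recs = {}
    for uid in dict.fromkeys(ids):  # de-duplicate while preserving first-seen order
        u = user_factors[uid]
        scores = [(iid, sum(a * b for a, b in zip(u, v))) for iid, v in item_factors.items()]
        scores.sort(key=lambda t: t[1], reverse=True)
        recs[uid] = scores[:k]
    return recs
```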

## How was this patch tested?

New unit tests in `ALSSuite` and Python doctests in `ALS`. Ran updated examples locally.

Author: Nick Pentreath <nickp@za.ibm.com>

Closes#18748 from MLnick/als-recommend-df.

## What changes were proposed in this pull request?

The current learning-rate equation is incorrect when `numIterations` > `1`.

This PR is based on [original C code](https://github.com/tmikolov/word2vec/blob/master/word2vec.c#L393).

cc: mengxr
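For reference, the decay rule in word2vec.c makes the learning rate fall linearly over *all* iterations, using the total word count processed so far and a denominator of iterations × training words + 1, with a floor at 0.01% of the starting rate. A plain-Python transcription (parameter names are mine, not Spark's):

```python
def word2vec_learning_rate(starting_alpha, words_processed, num_iterations, train_words):
    """Linearly decaying learning rate over all iterations, following word2vec.c."""
    alpha = starting_alpha * (1.0 - words_processed / (num_iterations * train_words + 1.0))
    # word2vec.c never lets the rate fall below 0.01% of its starting value
    return max(alpha, starting_alpha * 0.0001)
```

Because `words_processed` keeps growing across iterations, the rate after the first of five passes is still about 80% of the starting value instead of being near its floor.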

## How was this patch tested?

manual tests

I modified [this example code](https://spark.apache.org/docs/2.1.1/mllib-feature-extraction.html#example).

### `numIteration=1`

#### Code

```scala
import org.apache.spark.mllib.feature.{Word2Vec, Word2VecModel}

val input = sc.textFile("data/mllib/sample_lda_data.txt").map(line => line.split(" ").toSeq)
val word2vec = new Word2Vec()
val model = word2vec.fit(input)
val synonyms = model.findSynonyms("1", 5)

for ((synonym, cosineSimilarity) <- synonyms) {
  println(s"$synonym $cosineSimilarity")
}
```

#### Result

```
2 0.175856813788414
0 0.10971353203058243
4 0.09818313270807266
3 0.012947646901011467
9 -0.09881238639354706
```

### `numIteration=5`

#### Code

```scala
import org.apache.spark.mllib.feature.{Word2Vec, Word2VecModel}

val input = sc.textFile("data/mllib/sample_lda_data.txt").map(line => line.split(" ").toSeq)
val word2vec = new Word2Vec()
word2vec.setNumIterations(5)
val model = word2vec.fit(input)
val synonyms = model.findSynonyms("1", 5)

for ((synonym, cosineSimilarity) <- synonyms) {
  println(s"$synonym $cosineSimilarity")
}
```

#### Result

```
0 0.9898583889007568
2 0.9808019399642944
4 0.9794934391975403
3 0.9506527781486511
9 -0.9065656661987305
```

Author: Kento NOZAWA <k_nzw@klis.tsukuba.ac.jp>

Closes#19372 from nzw0301/master.

## What changes were proposed in this pull request?

SPARK-21690 made `Imputer` one-pass by parallelizing the computation of all input columns. When we transform a dataset with `ImputerModel`, we call `withColumn` on each input column sequentially. We can instead do this for all input columns at once by adding a `withColumns` API to `Dataset`.

The new `withColumns` API is for internal use only for now.

## How was this patch tested?

Existing tests for `ImputerModel`'s change. Added tests for `withColumns` API.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes#19229 from viirya/SPARK-22001.

## What changes were proposed in this pull request?

Enable Scala 2.12 REPL. Fix most remaining issues with 2.12 compilation and warnings, including:

- Selecting Kafka 0.10.1+ for Scala 2.12 and patching over a minor API difference

- Fixing lots of "eta expansion of zero arg method deprecated" warnings

- Resolving the SparkContext.sequenceFile implicits compile problem

- Fixing an odd but valid jetty-server missing dependency in hive-thriftserver

## How was this patch tested?

Existing tests

Author: Sean Owen <sowen@cloudera.com>

Closes#19307 from srowen/Scala212.

## What changes were proposed in this pull request?

Currently, persisting/loading the params of `CrossValidator`/`TrainValidationSplit` is hardcoded, unlike other ML estimators. This causes a persistence bug for the newly added `parallelism` param.

I refactored the related code to avoid hardcoding param persistence/loading, which also fixes the `parallelism` persistence bug.

This refactoring is very useful because we will add more params in #19208; hardcoded param persistence/loading makes adding new params very troublesome.

## How was this patch tested?

Test added.

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19278 from WeichenXu123/fix-tuning-param-bug.

## What changes were proposed in this pull request?

I tested on a dataset of about 13M instances and found that using `treeAggregate` gives a speedup in the following algorithms:

| Algorithm | Speedup |
|------|-----------|
| OneHotEncoder | 5% |
| StatFunctions.calculateCov | 7% |
| StatFunctions.multipleApproxQuantiles | 9% |
| RegressionEvaluator | 8% |
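For intuition, a plain-Python simulation of tree aggregation: partitions are first reduced locally, then merged in groups over several levels, so the final step combines only a few partial results instead of all of them. The level-sizing rule loosely follows Spark's `RDD.treeAggregate` as I understand it; treat it as an approximation, and the function name as hypothetical.

```python
import functools
import math


def tree_aggregate(partitions, zero, seq_op, comb_op, depth=2):
    """Aggregate per-partition results in a multi-level tree instead of all at once."""
    # Level 0: aggregate inside each partition
    partials = [functools.reduce(seq_op, part, zero) for part in partitions]
    n = len(partials)
    scale = max(math.ceil(n ** (1.0 / depth)), 2)
    # Intermediate levels: merge groups of partial results until few enough remain
    while n > scale + math.ceil(n / scale):
        n //= scale
        groups = [[] for _ in range(n)]
        for i, p in enumerate(partials):
            groups[i % n].append(p)
        partials = [functools.reduce(comb_op, g) for g in groups]
    # Final level: the "driver" merges only the remaining partial results
    return functools.reduce(comb_op, partials, zero)
```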

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#19232 from zhengruifeng/use_treeAggregate.

## What changes were proposed in this pull request?

`PeriodicRDDCheckpointer` automatically persists the last 3 datasets passed to `PeriodicRDDCheckpointer.update()`.

As a result, in GBTs the last 3 intermediate RDDs are still cached after `fit()`.

## How was this patch tested?

existing tests and local test in spark-shell

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#19288 from zhengruifeng/gbt_unpersist.

## What changes were proposed in this pull request?

When saving a `Word2VecModel`, perform a data transformation on distributed data instead of on local driver data.

## How was this patch tested?

Unit tests for the ML sub-component still pass.

Running this patch against v2.2.0 in a fully distributed production cluster allows a 4.0G model to save and load correctly, where it would not do so without the patch.

Author: Travis Hegner <thegner@trilliumit.com>

Closes#19191 from travishegner/master.

## What changes were proposed in this pull request?

Added LogisticRegressionTrainingSummary for MultinomialLogisticRegression in Python API

## How was this patch tested?

Added unit test


Author: Ming Jiang <mjiang@fanatics.com>

Author: Ming Jiang <jmwdpk@gmail.com>

Author: jmwdpk <jmwdpk@gmail.com>

Closes#19185 from jmwdpk/SPARK-21854.

## What changes were proposed in this pull request?

Parallelize the computation of all columns.

Performance tests:

| numColumns | Mean (Old) | Median (Old) | Mean (RDD) | Median (RDD) | Mean (DF) | Median (DF) |
|------|----------|------------|----------|------------|----------|------------|
| 1 | 0.0771394713 | 0.0658712813 | 0.080779802 | 0.048165981499999996 | 0.10525509870000001 | 0.0499620203 |
| 10 | 0.7234340630999999 | 0.5954440414 | 0.0867935197 | 0.13263428659999998 | 0.09255724889999999 | 0.1573943635 |
| 100 | 7.3756451568 | 6.2196631259 | 0.1911931552 | 0.8625376817000001 | 0.5557462431 | 1.7216837982000002 |
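The single-pass idea can be sketched in plain Python for mean imputation: one scan accumulates sums and counts for every column at once, instead of one scan per column. Names are hypothetical, and both `None` and NaN are treated as missing here.

```python
import math


def impute_means(rows, cols):
    """Mean-impute several columns, computing all column means in one pass."""
    sums = {c: 0.0 for c in cols}
    counts = {c: 0 for c in cols}
    for row in rows:  # single pass over the data for every column
        for c in cols:
            v = row[c]
            if v is not None and not math.isnan(v):
                sums[c] += v
                counts[c] += 1
    means = {c: sums[c] / counts[c] if counts[c] else math.nan for c in cols}

    def missing(v):
        return v is None or math.isnan(v)

    filled = [{**row, **{c: means[c] for c in cols if missing(row[c])}} for row in rows]
    return filled, means
```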

## How was this patch tested?

existing tests

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes#18902 from zhengruifeng/parallelize_imputer.

## What changes were proposed in this pull request?

Add the missing `@Since` tag for `setParallelism`, which was added in #19110.

## How was this patch tested?

N/A

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19214 from WeichenXu123/minor01.

## What changes were proposed in this pull request?

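Replace `df.rdd.getStorageLevel` with `df.storageLevel`. Caching a DataFrame does not persist the RDD returned by `df.rdd`, so `df.rdd.getStorageLevel` reports `StorageLevel.NONE` even when the DataFrame is cached, which led algorithms to cache their input data a second time.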

The command `find . -name '*.scala' | xargs -i bash -c 'egrep -in "\.rdd\.getStorageLevel" {} && echo {}'` was used to make sure that all algorithms affected by this issue are fixed.

Previous discussion in other PRs: https://github.com/apache/spark/pull/19107, https://github.com/apache/spark/pull/17014

## How was this patch tested?

Existing tests.

Author: Zheng RuiFeng <ruifengz@foxmail.com>

Closes #19197 from zhengruifeng/double_caching.

## What changes were proposed in this pull request?

Added tunable parallelism to the PySpark implementation of one-vs-rest classification, and added a `parallelism` parameter to the Scala implementation of one-vs-rest that controls how many of the per-class binary classifiers are trained concurrently.
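One-vs-rest fits one independent binary classifier per class, which is what makes the per-class fits easy to run concurrently. The plain-Python sketch below shows that structure; it is not the Spark implementation. `train_binary` is a hypothetical stand-in for a real binary learner (it scores a point by its distance to the positive class centroid), and a `ThreadPoolExecutor` with `max_workers=parallelism` plays the role of the `parallelism` parameter.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Sequence, Tuple

Point = Tuple[float, float]
Model = Callable[[Point], float]


def train_binary(points: Sequence[Point], labels: Sequence[int], positive: int) -> Model:
    """Hypothetical binary learner for `positive` vs. the rest: scores a point by
    the negative squared distance to the centroid of the positive class."""
    pos = [p for p, y in zip(points, labels) if y == positive]
    cx = sum(p[0] for p in pos) / len(pos)
    cy = sum(p[1] for p in pos) / len(pos)
    return lambda p: -((p[0] - cx) ** 2 + (p[1] - cy) ** 2)


def fit_one_vs_rest(points, labels, parallelism: int = 1) -> Dict[int, Model]:
    """Fit one binary model per class; at most `parallelism` fits run at once."""
    classes = sorted(set(labels))
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        futures = {c: pool.submit(train_binary, points, labels, c) for c in classes}
        return {c: f.result() for c, f in futures.items()}


def predict(models: Dict[int, Model], p: Point) -> int:
    """Predict the class whose binary model gives the highest score."""
    return max(models, key=lambda c: models[c](p))


points = [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (0.0, 5.0), (0.1, 5.2)]
labels = [0, 0, 1, 1, 2, 2]
queries = [(0.1, 0.0), (4.8, 5.0), (0.0, 4.9)]
models = fit_one_vs_rest(points, labels, parallelism=3)
print([predict(models, q) for q in queries])  # [0, 1, 2]
```

Because each binary fit depends only on the shared input data, the fitted models are the same whatever `parallelism` is set to; only the wall-clock time changes.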

I am taking over PR #18281 because the original author is busy and we need to merge this PR soon.

Once this is merged, we can close #18281.

## How was this patch tested?

Test suite added.

Author: Ajay Saini <ajays725@gmail.com>

Author: WeichenXu <weichen.xu@databricks.com>

Closes #19110 from WeichenXu123/spark-21027.

## What changes were proposed in this pull request?

This PR adds `ClusteringEvaluator`, an `Evaluator` that supports two metrics:

- **cosineSilhouette**: the Silhouette measure using the cosine distance;

- **squaredSilhouette**: the Silhouette measure using the squared Euclidean distance.

The implementation of the two metrics follows the algorithm proposed and explained [here](https://drive.google.com/file/d/0B0Hyo%5f%5fbG%5f3fdkNvSVNYX2E3ZU0/view). These algorithms were designed for a distributed, parallel environment, so they perform reasonably well, unlike a naive Silhouette implementation that follows the definition directly and must compute the distance between every pair of points.
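For the squared Euclidean case, the key observation is that the sum of squared distances from a point x to all members y of a cluster C can be rewritten using three per-cluster aggregates (size, vector sum, and sum of squared norms): sum ||x - y||^2 = n_C ||x||^2 - 2 x . (sum y) + sum ||y||^2. After one aggregation pass, each point then needs only O(k) work for k clusters. The plain-Python sketch below checks that rewrite against the definition. It is not Spark's implementation, the function names are hypothetical, the cosine variant is not shown, and single-point clusters are assigned a silhouette of 0 as an assumption.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence

Vector = Sequence[float]


def dot(x: Vector, y: Vector) -> float:
    return sum(a * b for a, b in zip(x, y))


def sq_dist(x: Vector, y: Vector) -> float:
    return sum((a - b) ** 2 for a, b in zip(x, y))


def silhouette_naive(points: List[Vector], labels: List[int]) -> float:
    """Silhouette straight from the definition: O(n^2) pairwise distances."""
    scores = []
    for i, (x, ci) in enumerate(zip(points, labels)):
        dists: Dict[int, List[float]] = defaultdict(list)
        for j, (y, cj) in enumerate(zip(points, labels)):
            if i != j:
                dists[cj].append(sq_dist(x, y))
        if not dists[ci]:  # singleton cluster: treated as 0 here
            scores.append(0.0)
            continue
        a = sum(dists[ci]) / len(dists[ci])  # mean distance within own cluster
        b = min(sum(d) / len(d) for c, d in dists.items() if c != ci)  # nearest other cluster
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


def cluster_dist_sum(x: Vector, xx: float, n: int, vec_sum: Vector, sq_norm_sum: float) -> float:
    """sum_{y in C} ||x - y||^2 = n*||x||^2 - 2*x.(sum y) + sum ||y||^2"""
    return n * xx - 2.0 * dot(x, vec_sum) + sq_norm_sum


def silhouette_aggregated(points: List[Vector], labels: List[int]) -> float:
    """Same metric from per-cluster aggregates: O(n * k) after one pass."""
    dim = len(points[0])
    count: Dict[int, int] = defaultdict(int)
    vec_sum: Dict[int, List[float]] = defaultdict(lambda: [0.0] * dim)
    sq_norm_sum: Dict[int, float] = defaultdict(float)
    for x, c in zip(points, labels):  # first pass: build cluster statistics
        count[c] += 1
        vec_sum[c] = [s + v for s, v in zip(vec_sum[c], x)]
        sq_norm_sum[c] += dot(x, x)

    scores = []
    for x, ci in zip(points, labels):  # second pass: O(k) work per point
        if count[ci] == 1:
            scores.append(0.0)
            continue
        xx = dot(x, x)
        # x contributes 0 to its own cluster's sum, so divide by n - 1
        a = cluster_dist_sum(x, xx, count[ci], vec_sum[ci], sq_norm_sum[ci]) / (count[ci] - 1)
        b = min(
            cluster_dist_sum(x, xx, count[c], vec_sum[c], sq_norm_sum[c]) / count[c]
            for c in count
            if c != ci
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
labels = [0, 0, 0, 1, 1, 1]
naive = silhouette_naive(points, labels)
fast = silhouette_aggregated(points, labels)
assert math.isclose(naive, fast)
print(naive, fast)
```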

## How was this patch tested?

The patch was tested with the newly added unit tests, which compare the results with those produced by the [Python scikit-learn library](http://scikit-learn.org/stable/modules/generated/sklearn.metrics.silhouette_score.html).

Author: Marco Gaido <mgaido@hortonworks.com>

Closes #18538 from mgaido91/SPARK-14516.

## What changes were proposed in this pull request?

This PR removes unused imports.

## How was this patch tested?

N/A

Author: caoxuewen <cao.xuewen@zte.com.cn>

Closes #19131 from heary-cao/unuse_import.

https://issues.apache.org/jira/browse/SPARK-19866

## What changes were proposed in this pull request?

Add a Python API for `findSynonymsArray`, matching the Scala API.
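`findSynonymsArray` returns the words closest to a query word as (word, cosine similarity) pairs, excluding the query word itself. The plain-Python sketch below shows that idea on a tiny hand-made vocabulary. It is not the PySpark implementation; the vectors and the `find_synonyms` helper are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def find_synonyms(vectors: Dict[str, Sequence[float]], word: str, num: int) -> List[Tuple[str, float]]:
    """Return the `num` words most similar to `word`, excluding `word` itself."""
    query = vectors[word]
    scored = [(w, cosine(query, v)) for w, v in vectors.items() if w != word]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return scored[:num]


vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "apple": [0.1, 0.2, 0.95],
    "prince": [0.8, 0.7, 0.2],
}
print(find_synonyms(vectors, "king", 2))  # "queen" first, then "prince"
```

Returning plain (word, similarity) tuples, as this sketch does, avoids building a DataFrame when the caller only needs a handful of results.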

## How was this patch tested?

Manual test

`./python/run-tests --python-executables=python2.7 --modules=pyspark-ml`

Author: Xin Ren <iamshrek@126.com>

Author: Xin Ren <renxin.ubc@gmail.com>

Author: Xin Ren <keypointt@users.noreply.github.com>

Closes #17451 from keypointt/SPARK-19866.

## What changes were proposed in this pull request?

Modified `CrossValidator` and `TrainValidationSplit` so that they can evaluate the models for a given parameter grid in parallel. The level of parallelism is controlled by the parameter `numParallelEval`, which sets how many models are trained and evaluated concurrently. This is a naive approach that does not check whether the cluster has the resources needed, so users must tune the parameter appropriately. The default value is `1`, which trains and evaluates the models serially.
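The scheduling idea can be sketched in plain Python: fit and evaluate every parameter combination on a thread pool whose size plays the role of `numParallelEval`, then pick the best metric. This is not the Spark implementation. `fit_and_evaluate` and its toy metric are hypothetical stand-ins for training an estimator and running an `Evaluator`, and the parameter names are borrowed from Spark's `LogisticRegression` only for illustration. Because the best model is chosen after all results are collected in grid order, the selection does not depend on the degree of parallelism, which is the property the added unit tests verify.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Dict, List, Tuple

ParamMap = Dict[str, float]


def build_grid(grid: Dict[str, List[float]]) -> List[ParamMap]:
    """Cartesian product of the parameter values, like a param grid builder."""
    names = sorted(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


def fit_and_evaluate(params: ParamMap) -> float:
    """Hypothetical stand-in for fitting a model and scoring it on validation data.
    Higher is better; this toy metric peaks at regParam=0.1, elasticNetParam=0.5."""
    return -((params["regParam"] - 0.1) ** 2 + (params["elasticNetParam"] - 0.5) ** 2)


def select_best(grid: List[ParamMap], num_parallel_eval: int = 1) -> Tuple[ParamMap, float]:
    """Evaluate all param maps, with up to `num_parallel_eval` running concurrently."""
    with ThreadPoolExecutor(max_workers=num_parallel_eval) as pool:
        metrics = list(pool.map(fit_and_evaluate, grid))  # results keep grid order
    best = max(range(len(grid)), key=lambda i: metrics[i])
    return grid[best], metrics[best]


grid = build_grid({"regParam": [0.01, 0.1, 1.0], "elasticNetParam": [0.0, 0.5, 1.0]})
serial = select_best(grid, num_parallel_eval=1)
parallel = select_best(grid, num_parallel_eval=4)
assert serial == parallel
print(serial[0])  # {'elasticNetParam': 0.5, 'regParam': 0.1}
```

As the description notes, nothing here checks available resources; a pool that is too large simply queues more concurrent jobs than the cluster can serve.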

## How was this patch tested?

Added unit tests for `CrossValidator` and `TrainValidationSplit` to verify that model selection is the same whether run serially or in parallel. Manually tested that tasks run in parallel when the parameter is greater than 1. Added parameter usage to the relevant examples.

Author: Bryan Cutler <cutlerb@gmail.com>

Closes #16774 from BryanCutler/parallel-model-eval-SPARK-19357.