## What changes were proposed in this pull request?

Change the format of the build command in the README to start with a `./` prefix:

./build/mvn -DskipTests clean package

This increases stylistic consistency across the README: all the other commands have a `./` prefix. Having a visible `./` prefix also makes it clear to the user that the shell command requires the current working directory to be at the repository root.

## How was this patch tested?

README.md was reviewed both in raw markdown and in the GitHub rendered landing page for stylistic consistency.

Closes #25231 from Mister-Meeseeks/master.

Lead-authored-by: Douglas R Colkitt <douglas.colkitt@gmail.com>

Co-authored-by: Mister-Meeseeks <douglas.colkitt@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Add AL2 license to metadata of all .md files.

This seemed to be the tidiest way as it will get ignored by .md renderers and other tools. Attempts to write them as markdown comments revealed that there is no such standard thing.

## How was this patch tested?

Doc build

Closes #24243 from srowen/SPARK-26918.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Added docs for running Spark jobs with Mesos on SSL.

Closes #23342 from jomach/master.

Lead-authored-by: Jorge Machado <jorge.w.machado@hotmail.com>

Co-authored-by: Jorge Machado <dxc.machado@extaccount.com>

Co-authored-by: Jorge Machado <jorge.machado.ext@kiwigrid.com>

Co-authored-by: Jorge Machado <JorgeWilson.Machado@ext.gfk.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

… incorrect.

## What changes were proposed in this pull request?

In the reported heartbeat information, memory values are in bytes; the formatBytes() function in utils.js converts them before they are displayed in the UI. The base used for the unit conversion in formatBytes is 1000, but it should be 1024.

Change the base of the unit conversion in the formatBytes function to 1024.
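For illustration only, the conversion described above can be sketched in Python with 1024 as the divisor. This is not the code from utils.js; the function name `format_bytes`, the unit labels, and the one-decimal output format are assumptions made for the sketch.

```python
def format_bytes(num_bytes: float, decimals: int = 1) -> str:
    """Format a byte count using 1024-based steps between units.

    Hypothetical helper: unit labels and rounding are assumptions,
    not taken from the Spark UI code.
    """
    units = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]
    base = 1024  # the divisor this PR argues for (previously 1000)
    value = float(max(num_bytes, 0))
    index = 0
    # Divide by the base until the value fits the current unit.
    while value >= base and index < len(units) - 1:
        value /= base
        index += 1
    return f"{value:.{decimals}f} {units[index]}"
```

With a divisor of 1024, a value of 1000 bytes stays in the `B` unit, while 1024 bytes is the first value reported in `KB`.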

## How was this patch tested?

manual tests

Please review http://spark.apache.org/contributing.html before opening a pull request.

Closes #22683 from httfighter/SPARK-25696.

Lead-authored-by: 韩田田00222924 <han.tiantian@zte.com.cn>

Co-authored-by: han.tiantian@zte.com.cn <han.tiantian@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Fix Typos.

## How was this patch tested?

NA

Closes #23145 from kjmrknsn/docUpdate.

Authored-by: Keiji Yoshida <kjmrknsn@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Clarify documentation about security.

## How was this patch tested?

None, just documentation

Closes #22852 from tgravescs/SPARK-25023.

Authored-by: Thomas Graves <tgraves@thirteenroutine.corp.gq1.yahoo.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

Fix a few broken links and typos, and, nit, use HTTPS more consistently esp. on scripts and Apache links

## How was this patch tested?

Doc build

Closes #22172 from srowen/DocTypo.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

(a) disabled rest submission server by default in standalone mode

(b) fails the standalone master if rest server enabled & authentication secret set

(c) fails the mesos cluster dispatcher if authentication secret set

(d) doc updates

(e) when submitting a standalone app, only try the rest submission first if spark.master.rest.enabled=true

otherwise you'd see a 10 second pause like

18/08/09 08:13:22 INFO RestSubmissionClient: Submitting a request to launch an application in spark://...

18/08/09 08:13:33 WARN RestSubmissionClient: Unable to connect to server spark://...

I also made sure the mesos cluster dispatcher failed with the secret enabled, though I had to do that on slightly different code as I don't have mesos native libs around.

## How was this patch tested?

I ran the tests in the mesos module & in core for org.apache.spark.deploy.*

I ran a test on a cluster with standalone master to make sure I could still start with the right configs, and would fail the right way too.

Closes #22071 from squito/rest_doc_updates.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

* Adds support for the local:// scheme, as in the k8s case, for image-based deployments where the jar is already in the image. Affects cluster mode and the Mesos dispatcher. Also covers the file:// scheme. Keeps the default case, where jar resolution happens on the host.
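As a rough sketch of the rule described above (not the dispatcher's actual code), the decision can be modelled in Python as a function of the jar URI scheme and the resolution mode. The function name `jar_resolved_in_container` is hypothetical; the mode values `host` and `container` follow the `spark.mesos.appJar.local.resolution.mode` setting used in the test commands of this PR.

```python
from urllib.parse import urlparse


def jar_resolved_in_container(app_jar_uri: str, resolution_mode: str = "host") -> bool:
    """Return True when the application jar is expected inside the image.

    Assumption for this sketch: only local:// and file:// URIs are
    affected by the mode; every other scheme is resolved as before.
    """
    scheme = urlparse(app_jar_uri).scheme
    return resolution_mode == "container" and scheme in ("local", "file")
```

Under these assumptions an https:// jar is unaffected by the flag, and a local:// jar with no mode set falls back to host resolution.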

## How was this patch tested?

Dispatcher image with the patch, use it to start DC/OS Spark service:

skonto/spark-local-disp:test

Test image with my application jar located at the root folder:

skonto/spark-local:test

Dockerfile for that image:

FROM mesosphere/spark:2.3.0-2.2.1-2-hadoop-2.6

COPY spark-examples_2.11-2.2.1.jar /

WORKDIR /opt/spark/dist

Tests:

The following work as expected:

* local normal example

```
dcos spark run --submit-args="--conf spark.mesos.appJar.local.resolution.mode=container --conf spark.executor.memory=1g --conf spark.mesos.executor.docker.image=skonto/spark-local:test
--conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi local:///spark-examples_2.11-2.2.1.jar"
```

* make sure the flag does not affect other uris

```
dcos spark run --submit-args="--conf spark.mesos.appJar.local.resolution.mode=container --conf spark.executor.memory=1g --conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi https://s3-eu-west-1.amazonaws.com/fdp-stavros-test/spark-examples_2.11-2.1.1.jar"
```

* normal example no local

```
dcos spark run --submit-args="--conf spark.executor.memory=1g --conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi https://s3-eu-west-1.amazonaws.com/fdp-stavros-test/spark-examples_2.11-2.1.1.jar"
```

The following fails:

* uses local with no setting, default is host.

```
dcos spark run --submit-args="--conf spark.executor.memory=1g --conf spark.mesos.executor.docker.image=skonto/spark-local:test
--conf spark.executor.cores=2 --conf spark.cores.max=8
--class org.apache.spark.examples.SparkPi local:///spark-examples_2.11-2.2.1.jar"
```

Author: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Closes #21378 from skonto/local-upstream.

## What changes were proposed in this pull request?

Easy fix in the documentation.

## How was this patch tested?

N/A

Closes #20948

Author: Daniel Sakuma <dsakuma@gmail.com>

Closes #20928 from dsakuma/fix_typo_configuration_docs.

This commit modifies the Mesos submission client to allow the principal and secret to be provided indirectly via files. The path to these files can be specified either via Spark configuration or via environment variable.

Assuming these files are appropriately protected by FS/OS permissions, this means we never leak the actual values in process info such as `ps`.

Environment variable specification is useful because it allows you to interpolate the location of this file when using per-user Mesos credentials.
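The file-based lookup described above might look roughly like the following Python sketch. It is not the submission client's code: the helper name `read_credential_file` is hypothetical, the caller supplies the configuration key and environment variable names, and the precedence (configuration first, then environment) is an assumption the description does not state.

```python
from typing import Mapping, Optional


def read_credential_file(
    conf: Mapping[str, str],
    env: Mapping[str, str],
    conf_key: str,
    env_key: str,
) -> Optional[str]:
    """Read a principal or secret from a file named by conf or env.

    Assumed precedence for this sketch: the configuration entry wins
    over the environment variable when both are set.
    """
    path = conf.get(conf_key) or env.get(env_key)
    if not path:
        return None  # no file configured; caller falls back to other means
    with open(path, encoding="utf-8") as handle:
        # Drop surrounding whitespace such as a trailing newline.
        return handle.read().strip()
```

Because only the file path appears in the configuration or environment, the credential value itself never shows up on a command line.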

For some background as to why we have taken this approach, I will briefly describe our setup. On our systems we provide each authorised user account with their own Mesos credentials to provide certain security and audit guarantees to our customers. These credentials are managed by a central secret management service. In our `spark-env.sh` we determine the appropriate secret and principal files to use depending on the user who is invoking Spark, hence the need to inject these via environment variables as well as by configuration properties. So we set these environment variables appropriately and Spark reads in the contents of those files to authenticate itself with Mesos.

We have been using this functionality in production across multiple customer sites for some time. It has been in the field for around 18 months with no reported issues. These changes have been sufficient to meet our customer security and audit requirements.

We have been building and deploying custom builds of Apache Spark with various minor tweaks like this which we are now looking to contribute back into the community in order that we can rely upon stock Apache Spark builds and stop maintaining our own internal fork.

Author: Rob Vesse <rvesse@dotnetrdf.org>

Closes #20167 from rvesse/SPARK-16501.

## What changes were proposed in this pull request?

Fix spelling in quick-start doc.

## How was this patch tested?

Doc only.

Author: Shashwat Anand <me@shashwat.me>

Closes #20336 from ashashwat/SPARK-23165.

## What changes were proposed in this pull request?

Easy fix in the link.

## How was this patch tested?

Tested manually

Author: Mahmut CAVDAR <mahmutcvdr@gmail.com>

Closes #19996 from mcavdar/master.

## What changes were proposed in this pull request?

As discussed in SPARK-19606, this adds a new config property named "spark.mesos.constraints.driver" for constraining drivers running on a Mesos cluster.

## How was this patch tested?

Added a corresponding unit test; also tested locally on a Mesos cluster.

Author: Paul Mackles <pmackles@adobe.com>

Closes #19543 from pmackles/SPARK-19606.

## What changes were proposed in this pull request?

Documentation about Mesos Reject Offer Configurations

## Related PR

https://github.com/apache/spark/pull/19510 for `spark.mem.max`

Author: Li, YanKit | Wilson | RIT <yankit.li@rakuten.com>

Closes #19555 from windkit/spark_22133.

## Background

In #18837, ArtRand added Mesos secrets support to the dispatcher. **This PR adds the same secrets support to the drivers.** This means that if the secret configs are set, the driver will launch executors that have access to either env or file-based secrets.

One use case for this is to support TLS in the driver <=> executor communication.

## What changes were proposed in this pull request?

Most of the changes are a refactor of the dispatcher secrets support (#18837) - moving it to a common place that can be used by both the dispatcher and drivers. The same goes for the unit tests.

## How was this patch tested?

There are four config combinations: [env or file-based] x [value or reference secret]. For each combination:

- Added a unit test.

- Tested in DC/OS.

Author: Susan X. Huynh <xhuynh@mesosphere.com>

Closes #19437 from susanxhuynh/sh-mesos-driver-secret.

Mesos has secrets primitives for environment and file-based secrets; this PR adds that functionality to the Spark dispatcher, along with the appropriate configuration flags.

Unit tested and manually tested against a DC/OS cluster with Mesos 1.4.

Author: ArtRand <arand@soe.ucsc.edu>

Closes #18837 from ArtRand/spark-20812-dispatcher-secrets-and-labels.

History Server Launch uses SparkClassCommandBuilder for launching the server. SPARK_CLASSPATH has been deprecated and removed. spark-submit takes a different route: spark.driver.extraClasspath takes care of specifying additional jars in the classpath that were previously specified in SPARK_CLASSPATH. Right now the only way to specify additional jars for launching daemons such as the history server is SPARK_DIST_CLASSPATH (https://spark.apache.org/docs/latest/hadoop-provided.html), but this, I presume, is a distribution classpath. It would be nice to have a config similar to spark.driver.extraClasspath for launching daemons such as the history server.

Added new environment variable SPARK_DAEMON_CLASSPATH to set classpath for launching daemons. Tested and verified for History Server and Standalone Mode.

## How was this patch tested?

Initially, the history server start script would fail because it could not find the jars required for launching the server in the Java classpath. The same was true for running Master and Worker in standalone mode. With the environment variable SPARK_DAEMON_CLASSPATH added to the Java classpath, both kinds of daemons (History Server, Standalone daemons) start up and run.

Author: pgandhi <pgandhi@yahoo-inc.com>

Author: pgandhi999 <parthkgandhi9@gmail.com>

Closes #19047 from pgandhi999/master.

JIRA ticket: https://issues.apache.org/jira/browse/SPARK-21694

## What changes were proposed in this pull request?

Spark already supports launching containers attached to a given CNI network by specifying it via the config `spark.mesos.network.name`.

This PR adds support to pass in network labels to CNI plugins via a new config option `spark.mesos.network.labels`. These network labels are key-value pairs that are set in the `NetworkInfo` of both the driver and executor tasks. More details in the related Mesos documentation: http://mesos.apache.org/documentation/latest/cni/#mesos-meta-data-to-cni-plugins

## How was this patch tested?

Unit tests, for both driver and executor tasks.

Manual integration test to submit a job with the `spark.mesos.network.labels` option, hit the mesos/state.json endpoint, and check that the labels are set in the driver and executor tasks.

ArtRand skonto

Author: Susan X. Huynh <xhuynh@mesosphere.com>

Closes #18910 from susanxhuynh/sh-mesos-cni-labels.

## What changes were proposed in this pull request?

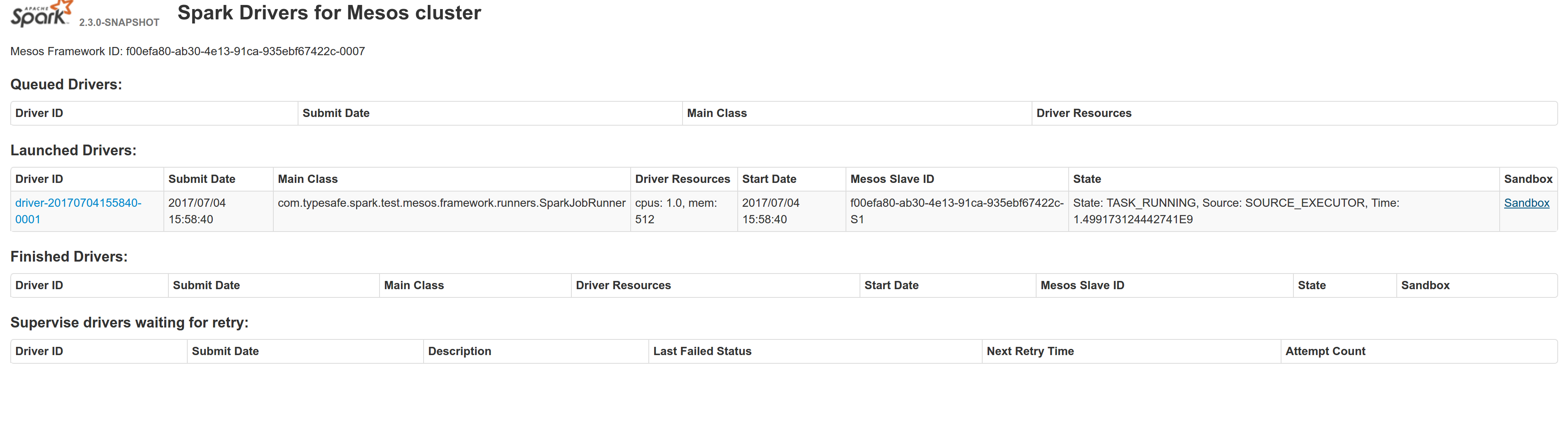

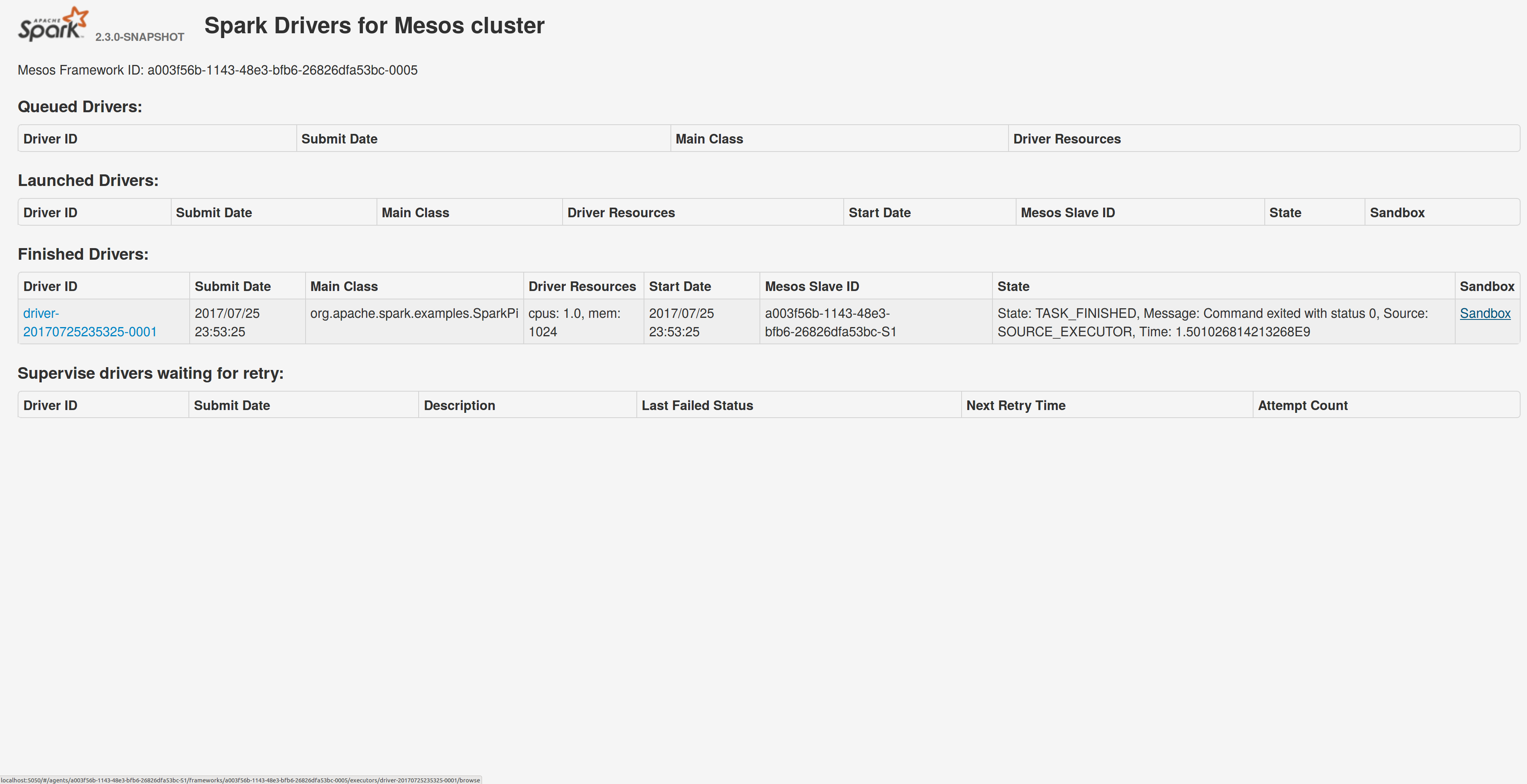

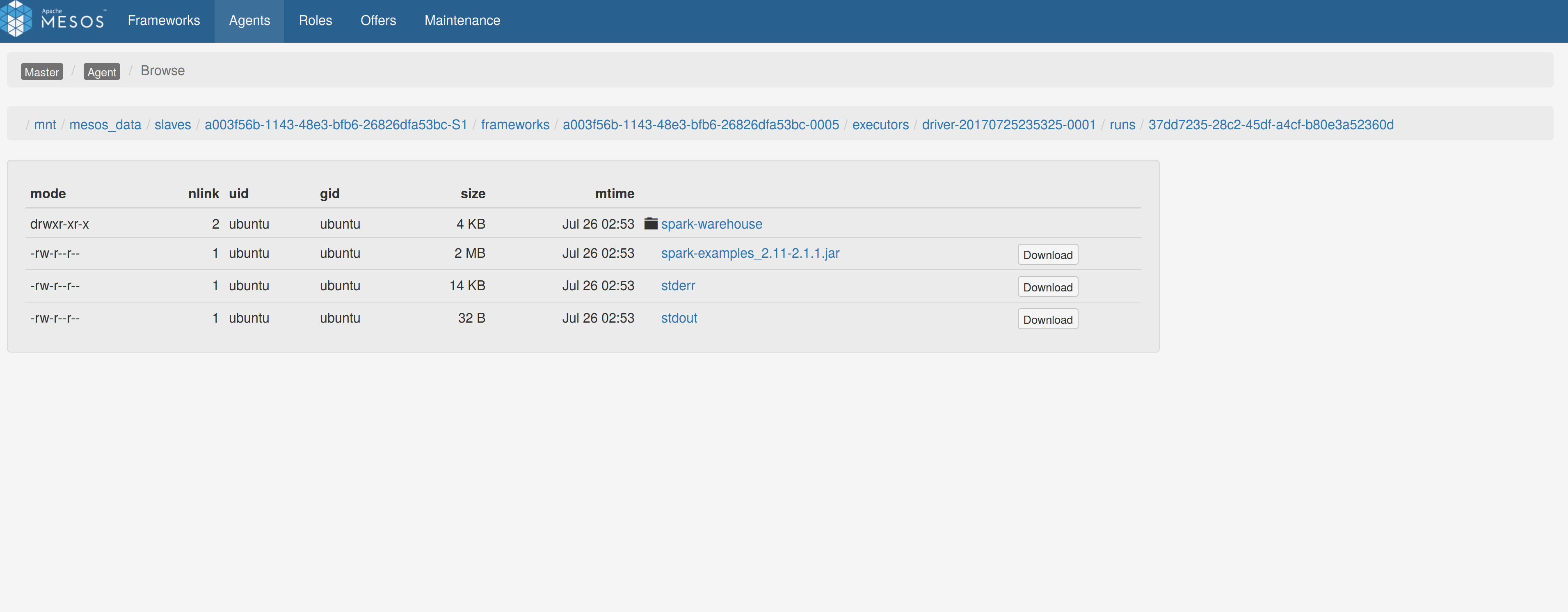

Adds a sandbox link per driver in the dispatcher ui with minimal changes after a bug was fixed here:

https://issues.apache.org/jira/browse/MESOS-4992

The sandbox uri has the following format:

http://<proxy_uri>/#/slaves/\<agent-id\>/frameworks/\<scheduler-id\>/executors/\<driver-id\>/browse

For dc/os the proxy uri is <dc/os uri>/mesos. For the dc/os deployment scenario, and to make things easier, I introduced a new config property named `spark.mesos.proxy.baseURL`, which should be passed to the dispatcher when launched using --conf. If no such configuration is detected then no sandbox uri is shown, and there is an empty column with a header (this can be changed so nothing is shown).
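A minimal Python sketch of how such a link could be assembled from the format above is shown here. It is not the dispatcher UI code; the function name `sandbox_uri` and its parameter names are hypothetical, and returning `None` stands in for the case where `spark.mesos.proxy.baseURL` is not set.

```python
from typing import Optional


def sandbox_uri(
    proxy_base_url: Optional[str],
    agent_id: str,
    scheduler_id: str,
    driver_id: str,
) -> Optional[str]:
    """Build a sandbox link for one driver, or None without a base URL."""
    if not proxy_base_url:
        return None  # no base URL configured, so no link can be shown
    base = proxy_base_url.rstrip("/")  # avoid a double slash before '#'
    return (
        f"{base}/#/slaves/{agent_id}"
        f"/frameworks/{scheduler_id}"
        f"/executors/{driver_id}/browse"
    )
```

For example, a base URL of `http://localhost:5050` with hypothetical ids `S1`, `F1` and `driver-1` yields `http://localhost:5050/#/slaves/S1/frameworks/F1/executors/driver-1/browse`.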

Within dc/os the base url must be a property for the dispatcher that we should add in the future here:

9e7c909c3b/repo/packages/S/spark/26/config.json

It is not easy to detect what that uri is in different environments, so the user should pass it.

## How was this patch tested?

Tested with the mesos test suite here: https://github.com/typesafehub/mesos-spark-integration-tests.

Attached image shows the ui modification where the sandbox header is added.

Tested the uri redirection the way it was suggested here:

https://issues.apache.org/jira/browse/MESOS-4992

Built mesos 1.4 from the master branch and started the mesos dispatcher with the command:

`./sbin/start-mesos-dispatcher.sh --conf spark.mesos.proxy.baseURL=http://localhost:5050 -m mesos://127.0.0.1:5050`

Run a spark example:

`./bin/spark-submit --class org.apache.spark.examples.SparkPi --master mesos://10.10.1.79:7078 --deploy-mode cluster --executor-memory 2G --total-executor-cores 2 http://<path>/spark-examples_2.11-2.1.1.jar 10`

Sandbox uri is shown at the bottom of the page:

Redirection works as expected:

Author: Stavros Kontopoulos <st.kontopoulos@gmail.com>

Closes #18528 from skonto/adds_the_sandbox_uri.

## What changes were proposed in this pull request?

Current behavior: in Mesos cluster mode, the driver failover_timeout is set to zero. If the driver temporarily loses connectivity with the Mesos master, the framework will be torn down and all executors killed.

Proposed change: make the failover_timeout configurable via a new option, spark.mesos.driver.failoverTimeout. The default value is still zero.

Note: with non-zero failover_timeout, an explicit teardown is needed in some cases. This is captured in https://issues.apache.org/jira/browse/SPARK-21458

## How was this patch tested?

Added a unit test to make sure the config option is set while creating the scheduler driver.

Ran an integration test with mesosphere/spark showing that with a non-zero failover_timeout the Spark job finishes after a driver is disconnected from the master.

Author: Susan X. Huynh <xhuynh@mesosphere.com>

Closes #18674 from susanxhuynh/sh-mesos-failover-timeout.

## What changes were proposed in this pull request?

Some link fixes for the documentation [Running Spark on Mesos](https://spark.apache.org/docs/latest/running-on-mesos.html):

* Updated Link to Mesos Frameworks (Projects built on top of Mesos)

* Update Link to Mesos binaries from Mesosphere (former link was redirected to dcos install page)

## How was this patch tested?

The documentation was built and the changed page was inspected manually.

No code was changed, hence no dev tests.

Since these changes are rather trivial I did not open a new JIRA ticket.

Author: Joachim Hereth <joachim.hereth@numberfour.eu>

Closes #18564 from daten-kieker/mesos_doc_fixes.

## What changes were proposed in this pull request?

Add Mesos labels support to the Spark Dispatcher

## How was this patch tested?

unit tests

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #18220 from mgummelt/SPARK-21000-dispatcher-labels.

## What changes were proposed in this pull request?

After SPARK-10997, the client-mode Netty RpcEnv no longer needs to start a server, so the port configurations are no longer used. This PR proposes removing these two configurations: "spark.executor.port" and "spark.am.port".

## How was this patch tested?

Existing UTs.

Author: jerryshao <sshao@hortonworks.com>

Closes #17866 from jerryshao/SPARK-20605.

## What changes were proposed in this pull request?

Allow passing in arbitrary parameters into docker when launching spark executors on mesos with docker containerizer tnachen

## How was this patch tested?

Manually built and tested with passed in parameter

Author: Ji Yan <jiyan@Jis-MacBook-Air.local>

Closes #17109 from yanji84/ji/allow_set_docker_user.

## What changes were proposed in this pull request?

Add spark.mesos.task.labels configuration option to add mesos key:value labels to the executor.

"k1:v1,k2:v2" as the format, colons separating key-value and commas to list out more than one.

Discussion of labels with mgummelt at #17404
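The "k1:v1,k2:v2" format can be illustrated with a small Python parser. This is a sketch, not the Spark implementation: the function name `parse_task_labels` is hypothetical, malformed entries are simply skipped (in line with incorrect labels being ignored), and any escaping of colons or commas inside keys or values is not handled.

```python
from typing import Dict


def parse_task_labels(spec: str) -> Dict[str, str]:
    """Parse 'k1:v1,k2:v2' into a dict, skipping malformed entries."""
    labels: Dict[str, str] = {}
    for entry in spec.split(","):
        # Split on the first colon only; later colons stay in the value.
        key, separator, value = entry.partition(":")
        if not separator or not key or not value:
            continue  # ignore entries without a key, a colon, or a value
        labels[key] = value
    return labels
```

An entry such as `bad` (no colon) or `k:` (empty value) is dropped, while the well-formed entries around it are kept.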

## How was this patch tested?

Added unit tests to verify labels were added correctly, with incorrect labels being ignored and added a test to test the name of the executor.

Tested with: `./build/sbt -Pmesos mesos/test`

Author: Kalvin Chau <kalvin.chau@viasat.com>

Closes #17413 from kalvinnchau/mesos-labels.

## What changes were proposed in this pull request?

Adds support for CNI-isolated containers

## How was this patch tested?

I launched SparkPi both with and without `spark.mesos.network.name`, and verified the job completed successfully.

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #15740 from mgummelt/spark-342-cni.

Mesos 0.23.0 introduces a Fetch Cache feature http://mesos.apache.org/documentation/latest/fetcher/ which allows caching of resources specified in command URIs.

This patch:

- Updates the Mesos shaded protobuf dependency to 0.23.0

- Allows setting `spark.mesos.fetcherCache.enable` to enable the fetch cache for all specified URIs. (URIs must be specified for the setting to have any effect)

- Updates documentation for Mesos configuration with the new setting.

This patch does NOT:

- Allow for per-URI caching configuration. The cache setting is global to ALL URIs for the command.

Author: Charles Allen <charles@allen-net.com>

Closes #13713 from drcrallen/SPARK15994.

## What changes were proposed in this pull request?

Enable GPU resources to be used when running coarse-grained mode with Mesos.

## How was this patch tested?

Manual test with GPU.

Author: Timothy Chen <tnachen@gmail.com>

Closes #14644 from tnachen/gpu_mesos.

## What changes were proposed in this pull request?

- adds documentation for https://issues.apache.org/jira/browse/SPARK-11714

## How was this patch tested?

Doc only; no test needed.

Author: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Closes #14667 from skonto/add_doc.

## What changes were proposed in this pull request?

- enable setting default properties for all jobs submitted through the dispatcher [SPARK-16927]

- remove duplication of conf vars on cluster submitted jobs [SPARK-16923] (this is a small fix, so I'm including in the same PR)

## How was this patch tested?

mesos/spark integration test suite

manual testing

Author: Timothy Chen <tnachen@gmail.com>

Closes #14511 from mgummelt/override-props.

## What changes were proposed in this pull request?

Links the Spark Mesos Dispatcher UI to the history server UI

- adds spark.mesos.dispatcher.historyServer.url

- explicitly generates frameworkIDs for the launched drivers, so the dispatcher knows how to correlate drivers and frameworkIDs

## How was this patch tested?

manual testing

Author: Michael Gummelt <mgummelt@mesosphere.io>

Author: Sergiusz Urbaniak <sur@mesosphere.io>

Closes #14414 from mgummelt/history-server.

## What changes were proposed in this pull request?

New config var: spark.mesos.docker.containerizer={"mesos","docker" (default)}

This adds support for running docker containers via the Mesos unified containerizer: http://mesos.apache.org/documentation/latest/container-image/

The benefit is losing the dependency on `dockerd`, and all the costs which it incurs.

I've also updated the supported Mesos version to 0.28.2 for support of the required protobufs.

This is blocked on: https://github.com/apache/spark/pull/14167

## How was this patch tested?

- manually testing jobs submitted with both "mesos" and "docker" settings for the new config var.

- spark/mesos integration test suite

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #14275 from mgummelt/unified-containerizer.

## What changes were proposed in this pull request?

Mesos agents by default will not pull docker images which are already cached locally. In order to run Spark executors from mutable tags like `:latest` this commit introduces a Spark setting (`spark.mesos.executor.docker.forcePullImage`). Setting this flag to true will tell the Mesos agent to force pull the docker image (default is `false`, which is consistent with the previous implementation and Mesos' default behaviour).

Author: Philipp Hoffmann <mail@philipphoffmann.de>

Closes #14348 from philipphoffmann/force-pull-image.

## What changes were proposed in this pull request?

Mesos agents by default will not pull docker images which are already cached locally. In order to run Spark executors from mutable tags like `:latest` this commit introduces a Spark setting `spark.mesos.executor.docker.forcePullImage`. Setting this flag to true will tell the Mesos agent to force pull the docker image (default is `false`, which is consistent with the previous implementation and Mesos' default behaviour).

## How was this patch tested?

I ran a sample application including this change on a Mesos cluster and verified the correct behaviour both with and without force pulling the executor image. As expected, the image is force pulled if the flag is set.

Author: Philipp Hoffmann <mail@philipphoffmann.de>

Closes #13051 from philipphoffmann/force-pull-image.

## What changes were proposed in this pull request?

Added new configuration namespace: spark.mesos.driverEnv.*

This allows a user submitting a job in cluster mode to set arbitrary environment variables on the driver.

spark.mesos.driverEnv.KEY=VAL will result in the env var "KEY" being set to "VAL"
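The mapping from prefixed properties to driver environment variables can be sketched in Python as follows. This is an illustration, not the code in MesosClusterScheduler; the function name `driver_env_from_conf` is hypothetical, and the configuration is modelled as a plain dictionary.

```python
from typing import Dict, Mapping

DRIVER_ENV_PREFIX = "spark.mesos.driverEnv."


def driver_env_from_conf(conf: Mapping[str, str]) -> Dict[str, str]:
    """Collect environment variables declared under the driverEnv prefix."""
    env: Dict[str, str] = {}
    for key, value in conf.items():
        # Keep only keys with a non-empty name after the prefix.
        if key.startswith(DRIVER_ENV_PREFIX) and len(key) > len(DRIVER_ENV_PREFIX):
            env[key[len(DRIVER_ENV_PREFIX):]] = value
    return env
```

Properties outside the prefix, such as `spark.executor.memory`, are left out of the resulting environment.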

I've also refactored the tests a bit so we can re-use code in MesosClusterScheduler.

And I've refactored the command building logic in `buildDriverCommand`. Command builder values were very intertwined before, and now it's easier to determine exactly how each variable is set.

## How was this patch tested?

unit tests

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #14167 from mgummelt/driver-env-vars.

## What changes were proposed in this pull request?

Documentation changes to indicate that fine-grained mode is now deprecated. No code changes were made, and all fine-grained mode instructions were left in place. We can remove all of that once the deprecation cycle completes (Does Spark have a standard deprecation cycle? One major version?)

Blocked on https://github.com/apache/spark/pull/14059

## How was this patch tested?

Viewed in GitHub

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #14078 from mgummelt/deprecate-fine-grained.

## What changes were proposed in this pull request?

docs

## How was this patch tested?

viewed the docs in GitHub

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #14059 from mgummelt/coarse-grained.

## What changes were proposed in this pull request?

Since not having the correct zk url causes job failure, the documentation should include all parameters.

## How was this patch tested?

no tests necessary

Author: Malte <elmalto@users.noreply.github.com>

Closes #12218 from elmalto/patch-1.

## What changes were proposed in this pull request?

Straggler references to Tachyon were removed:

- for docs, `tachyon` has been generalized as `off-heap memory`;

- for Mesos test suites, the key-value `tachyon:true`/`tachyon:false` has been changed to `os:centos`/`os:ubuntu`, since `os` is an example constraint used by the [Mesos official docs](http://mesos.apache.org/documentation/attributes-resources/).

## How was this patch tested?

Existing test suites.

Author: Liwei Lin <lwlin7@gmail.com>

Closes #12129 from lw-lin/tachyon-cleanup.

## What changes were proposed in this pull request?

This PR updates Scala and Hadoop versions in the build description and commands in `Building Spark` documents.

## How was this patch tested?

N/A

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #11838 from dongjoon-hyun/fix_doc_building_spark.

## What changes were proposed in this pull request?

Previously the Mesos framework webui URL was being derived only from the Spark UI address leaving no possibility to configure it. This commit makes it configurable. If unset it falls back to the previous behavior.

Motivation:

This change is necessary in order to be able to install Spark on DCOS and to be able to give it a custom service link. The configured `webui_url` is set to point to a reverse proxy in the DCOS environment.

## How was this patch tested?

Locally, using unit tests and on DCOS testing and stable revision.

Author: Sergiusz Urbaniak <sur@mesosphere.io>

Closes #11369 from s-urbaniak/sur-webui-url.

## What changes were proposed in this pull request?

Move many top-level files into dev/ or another appropriate directory. In particular, put `make-distribution.sh` in `dev` and update docs accordingly. Remove deprecated `sbt/sbt`.

I was (so far) unable to figure out how to move `tox.ini`. `scalastyle-config.xml` should be movable but edits to the project `.sbt` files didn't work; config file location is updatable for compile but not test scope.

## How was this patch tested?

`./dev/run-tests` to verify RAT and checkstyle work. Jenkins tests for the rest.

Author: Sean Owen <sowen@cloudera.com>

Closes #11522 from srowen/SPARK-13596.

## What changes were proposed in this pull request?

This PR tries to fix all typos in all markdown files under `docs` module, and fixes similar typos in other comments, too.

## How was this patch tested?

manual tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #11300 from dongjoon-hyun/minor_fix_typos.

## What changes were proposed in this pull request?

Clarify that 0.21 is only a **minimum** requirement.

## How was this patch tested?

It's a doc change, so no tests.

Author: Iulian Dragos <jaguarul@gmail.com>

Closes #11271 from dragos/patch-1.

This is the next iteration of tnachen's previous PR: https://github.com/apache/spark/pull/4027

In that PR, we resolved with andrewor14 and pwendell to implement the Mesos scheduler's support of `spark.executor.cores` to be consistent with YARN and Standalone. This PR implements that resolution.

This PR implements two high-level features. These two features are co-dependent, so both are implemented here:

- Mesos support for spark.executor.cores

- Multiple executors per slave

We at Mesosphere have been working with Typesafe on a Spark/Mesos integration test suite: https://github.com/typesafehub/mesos-spark-integration-tests, which passes for this PR.

The contribution is my original work and I license the work to the project under the project's open source license.

Author: Michael Gummelt <mgummelt@mesosphere.io>

Closes #10993 from mgummelt/executor_sizing.