## What changes were proposed in this pull request?

`SHOW CREATE TABLE` for data source tables is missing `TBLPROPERTIES` and `COMMENT`, and it should use `LOCATION` instead of a path in `OPTIONS`.

## How was this patch tested?

Split `ShowCreateTableSuite` to confirm that it works with both `InMemoryCatalog` and `HiveExternalCatalog`, and added some tests.

Closes #22892 from ueshin/issues/SPARK-25884/show_create_table.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

When `spark.sql.hive.metastore.version` is misconfigured, we should give an error message that points the user to the fix.

**BEFORE**

```scala

scala> sql("show databases").show

scala.MatchError: 2.4 (of class java.lang.String)

```

**AFTER**

```scala

scala> sql("show databases").show

java.lang.UnsupportedOperationException: Unsupported Hive Metastore version (2.4).

Please set spark.sql.hive.metastore.version with a valid version.

```
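The change in behavior can be sketched outside Spark. The Python below is illustrative only: the function name `resolve_hive_version`, the version table, and the exception type are assumptions made for this sketch, not Spark's implementation. It shows a lookup that fails with a message naming the setting to fix, rather than with a bare match failure.

```python
# Hypothetical table of accepted version strings (an illustrative subset, not Spark's list).
SUPPORTED_VERSIONS = {
    "1.2": "1.2", "1.2.0": "1.2", "1.2.1": "1.2",
    "2.3": "2.3", "2.3.0": "2.3",
}


def resolve_hive_version(version: str) -> str:
    """Return the canonical version, or raise an error that tells the user what to fix."""
    try:
        return SUPPORTED_VERSIONS[version]
    except KeyError:
        # Name both the rejected value and the configuration key to change.
        raise ValueError(
            f"Unsupported Hive Metastore version ({version}). "
            "Please set spark.sql.hive.metastore.version with a valid version."
        ) from None
```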

## How was this patch tested?

Manual.

Closes #22902 from dongjoon-hyun/SPARK-25893.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Set main args correctly in BenchmarkBase, to make it accessible for its subclass.

It will benefit:

- BuiltInDataSourceWriteBenchmark

- AvroWriteBenchmark

## How was this patch tested?

manual tests

Closes #22872 from yucai/main_args.

Authored-by: yucai <yyu1@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The instance of `FileSplit` is redundant in the `ParquetFileFormat` and `hive\orc\OrcFileFormat` classes.

## How was this patch tested?

Existing unit tests in `ParquetQuerySuite.scala` and `HiveOrcQuerySuite.scala`

Closes #22802 from 10110346/FileSplitnotneed.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Change the version number in the comment of `HiveUtils.newClientForExecution` from `13` to `1.2.1`.

## How was this patch tested?

N/A

Closes #22850 from laskfla/HiveUtils-Comment.

Authored-by: laskfla <wwlsax11@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Refactor ObjectHashAggregateExecBenchmark to use main method

## How was this patch tested?

Manually tested:

```

bin/spark-submit --class org.apache.spark.sql.execution.benchmark.ObjectHashAggregateExecBenchmark --jars sql/catalyst/target/spark-catalyst_2.11-3.0.0-SNAPSHOT-tests.jar,core/target/spark-core_2.11-3.0.0-SNAPSHOT-tests.jar,sql/hive/target/spark-hive_2.11-3.0.0-SNAPSHOT.jar --packages org.spark-project.hive:hive-exec:1.2.1.spark2 sql/hive/target/spark-hive_2.11-3.0.0-SNAPSHOT-tests.jar

```

Generated results with:

```

SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "hive/test:runMain org.apache.spark.sql.execution.benchmark.ObjectHashAggregateExecBenchmark"

```

Closes #22804 from peter-toth/SPARK-25665.

Lead-authored-by: Peter Toth <peter.toth@gmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

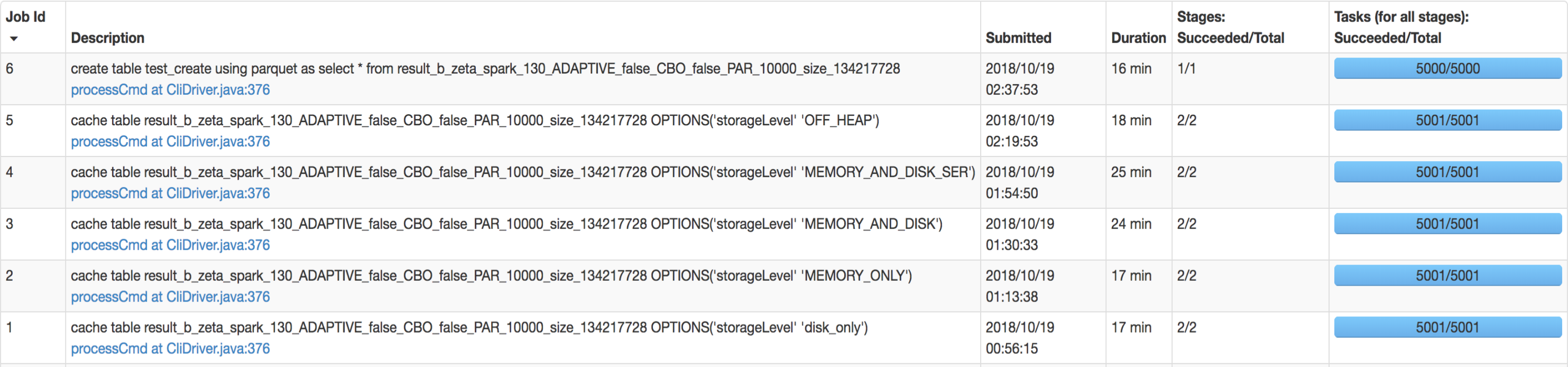

## What changes were proposed in this pull request?

The SQL interface now supports specifying a `StorageLevel` when caching a table. The syntax is:

```sql

CACHE TABLE tableName OPTIONS('storageLevel' 'DISK_ONLY');

```

All supported `StorageLevel` values are:

eefdf9f9dd/core/src/main/scala/org/apache/spark/storage/StorageLevel.scala (L172-L183)

## How was this patch tested?

unit tests and manual tests.

manual tests configuration:

```

--executor-memory 15G --executor-cores 5 --num-executors 50

```

Data:

Input Size / Records: 1037.7 GB / 11732805788

Result:

Closes #22263 from wangyum/SPARK-25269.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Without this PR, some UDAFs like `GenericUDAFPercentileApprox` can throw an exception because they expect a constant parameter (object inspector) as a particular argument.

The exception is thrown because the `toPrettySQL` call in the `ResolveAliases` analyzer rule transforms a `Literal` parameter to a `PrettyAttribute`, which is then transformed to an `ObjectInspector` instead of a `ConstantObjectInspector`.

The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`, which shouldn't actually be called during the `toPrettySQL` transformation. It is called because of the non-lazy fields in `HiveUDAFFunction`.

This PR makes all fields of `HiveUDAFFunction` lazy.
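The effect of lazy fields can be illustrated with a small Python sketch. It is an analogy, not the Scala code in `HiveUDAFFunction`: the class `UdafWrapper`, its methods, and the failing factory are hypothetical names introduced here. The point is that an operation needing only the function name succeeds even when building the evaluator would fail.

```python
from functools import cached_property


class UdafWrapper:
    """Hypothetical stand-in for a function wrapper whose evaluator may fail to build."""

    def __init__(self, name, make_evaluator):
        self.name = name
        self._make_evaluator = make_evaluator

    @cached_property
    def evaluator(self):
        # Runs on first access only; never runs if nobody asks for the evaluator.
        return self._make_evaluator()

    def pretty_sql(self):
        # Needs only the name, so it does not touch the evaluator.
        return f"{self.name}(...)"


def failing_factory():
    raise TypeError("expected a constant argument")


udaf = UdafWrapper("percentile_approx", failing_factory)
description = udaf.pretty_sql()  # succeeds because the evaluator is never built
```

With an eagerly initialized field, the `TypeError` would be raised in the constructor, before `pretty_sql` could run.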

## How was this patch tested?

added new UT

Closes #22766 from peter-toth/SPARK-25768.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR corrects some comment errors:

1. change from "as low a possible" to "as low as possible" in RewriteDistinctAggregates.scala

2. delete redundant word “with” in HiveTableScanExec’s doExecute() method

## How was this patch tested?

Existing unit tests.

Closes #22694 from CarolinePeng/update_comment.

Authored-by: 彭灿00244106 <00244106@zte.intra>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

LOAD DATA INPATH didn't work if the defaultFS included a port for hdfs.

Handling this just requires a small change to use the correct URI constructor.
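Why a port can get lost when a URI is rebuilt from its parts can be shown with Python's `urllib.parse`. This is only an analogy under stated assumptions: it is not the Java/Scala code changed by this PR, and the helper `qualify` and the host name `namenode` are hypothetical. The sketch rebuilds a path against a default filesystem using the full authority (`host:port`) rather than the host alone.

```python
from urllib.parse import urlsplit, urlunsplit


def qualify(path: str, default_fs: str) -> str:
    """Prefix a bare path with the scheme and full authority of the default filesystem."""
    fs = urlsplit(default_fs)
    # fs.netloc is the whole authority ("host:port"); fs.hostname would drop the port.
    return urlunsplit((fs.scheme, fs.netloc, path, "", ""))


qualified = qualify("/user/data/part-0", "hdfs://namenode:8020")
```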

## How was this patch tested?

Added a unit test, ran all tests via jenkins

Closes #22733 from squito/SPARK-25738.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

According to the SQL standard, when a query contains `HAVING`, it indicates an aggregate operator. For more details please refer to https://blog.jooq.org/2014/12/04/do-you-really-understand-sqls-group-by-and-having-clauses/

However, in the Spark SQL parser, we treat `HAVING` as a normal filter when there is no `GROUP BY`, which breaks SQL semantics and leads to wrong results. This PR fixes the parser.
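The difference between the two interpretations can be modeled in a few lines of Python. This is a toy model over an in-memory list, not Spark's parser or planner, and the function names are hypothetical. With no `GROUP BY`, `HAVING` operates on a single group containing every row, so the result has at most one row. A filter produces one output row per matching input row.

```python
def run_having(rows, aggregate, predicate):
    """Model of: SELECT 1 FROM rows HAVING predicate(aggregate(rows)), with no GROUP BY."""
    # One implicit group holds all rows, so there is at most one output row.
    return [1] if predicate(aggregate(rows)) else []


def run_where(rows, predicate):
    """Model of: SELECT 1 FROM rows WHERE predicate(row)."""
    # One output row per input row that satisfies the predicate.
    return [1 for row in rows if predicate(row)]


table = [10, 20, 30]  # hypothetical single-column table
as_aggregate = run_having(table, len, lambda n: n > 0)
as_filter = run_where(table, lambda row: True)
```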

## How was this patch tested?

new test

Closes #22696 from cloud-fan/having.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR makes the test helper correctly raise an assertion failure when the function under test produces a result whose `DataType` has incorrect nullability.

Consider the following example. Suppose a developer writes incorrect code that returns an unexpected result. The test must fail, since `valueContainsNull=false` while `expr` includes `null`. Without this PR, however, the test passes. With this PR, it fails as expected.

```

test("test TARGETFUNCTON") {
  val expr = TARGETMAPFUNCTON()
  // expr = UnsafeMap(3 -> 6, 7 -> null)
  // expr.dataType = (IntegerType, IntegerType, false)
  val expected = Map(3 -> 6, 7 -> null)
  checkEvaluation(expr, expected)
}

```

In [`checkEvaluationWithUnsafeProjection`](https://github.com/apache/spark/blob/master/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ExpressionEvalHelper.scala#L208-L235), the results are compared using `UnsafeRow`. The given `expected` is [converted](https://github.com/apache/spark/blob/master/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ExpressionEvalHelper.scala#L226-L227) to `UnsafeRow` using the `DataType` of `expr`:

```

val expectedRow = UnsafeProjection.create(Array(expression.dataType, expression.dataType)).apply(lit)

```

In summary, `expr` is `[0,1800000038,5000000038,18,2,0,700000003,2,0,6,18,2,0,700000003,2,0,6]` with and w/o this PR. `expected` is converted to

* w/o this PR, `[0,1800000038,5000000038,18,2,0,700000003,2,0,6,18,2,0,700000003,2,0,6]`

* with this PR, `[0,1800000038,5000000038,18,2,0,700000003,2,2,6,18,2,0,700000003,2,2,6]`

As a result, w/o this PR, the test unexpectedly passes.

This is because, w/o this PR, based on the given `dataType`, the generated projection code for `expected` skips setting the null bit.

```

// tmpInput_2 is expected

/* 155 */ for (int index_1 = 0; index_1 < numElements_1; index_1++) {

/* 156 */ mutableStateArray_1[1].write(index_1, tmpInput_2.getInt(index_1));

/* 157 */ }

```

With this PR, the generated projection code for `expected` always checks, via `isNullAt`, whether the null bit should be set:

```

// tmpInput_2 is expected

/* 161 */ for (int index_1 = 0; index_1 < numElements_1; index_1++) {

/* 162 */

/* 163 */ if (tmpInput_2.isNullAt(index_1)) {

/* 164 */ mutableStateArray_1[1].setNull4Bytes(index_1);

/* 165 */ } else {

/* 166 */ mutableStateArray_1[1].write(index_1, tmpInput_2.getInt(index_1));

/* 167 */ }

/* 168 */

/* 169 */ }

```

## How was this patch tested?

Existing UTs

Closes #22375 from kiszk/SPARK-25388.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Remove Hadoop 2.6 references and make 2.7 the default.

Obviously, this is for master/3.0.0 only.

After this we can also get rid of the separate test jobs for Hadoop 2.6.

## How was this patch tested?

Existing tests

Closes #22615 from srowen/SPARK-25016.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Inspired by https://github.com/apache/spark/pull/22574 .

We can partially push down top level conjunctive predicates to Orc.

This PR improves Orc predicate push down in both SQL and Hive module.
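The idea of partially pushing down a top-level conjunction can be sketched as follows. This is a simplified Python model, not the ORC filter code in Spark: the tuple encoding, the function `pushable`, and the `can_partial` flag are assumptions made for illustration. When one side of a top-level `AND` cannot be converted, the other side is still pushed. Under `OR`, both sides must convert fully.

```python
def pushable(pred, can_partial=True):
    """Return the part of pred that can be pushed to the data source, or None.

    pred is ("leaf", text, supported), ("and", lhs, rhs), or ("or", lhs, rhs).
    """
    kind = pred[0]
    if kind == "leaf":
        return pred if pred[2] else None
    _, lhs, rhs = pred
    if kind == "and":
        left = pushable(lhs, can_partial)
        right = pushable(rhs, can_partial)
        if left is not None and right is not None:
            return ("and", left, right)
        if can_partial:
            # Dropping a conjunct only widens the pushed filter. The engine still
            # applies the full predicate afterwards, so results stay correct.
            return left if left is not None else right
        return None
    if kind == "or":
        # Partial conversion is not attempted below an OR in this sketch.
        left = pushable(lhs, can_partial=False)
        right = pushable(rhs, can_partial=False)
        if left is not None and right is not None:
            return ("or", left, right)
        return None
    raise ValueError(f"unknown predicate kind: {kind}")


a = ("leaf", "a > 1", True)
b = ("leaf", "my_udf(b)", False)  # hypothetical predicate the source cannot evaluate
c = ("leaf", "c < 5", True)
```

For example, `pushable(("and", ("and", a, b), c))` keeps `a` and `c` and leaves `b` to be evaluated by the engine after the scan.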

## How was this patch tested?

New unit test.

Closes #22684 from gengliangwang/pushOrcFilters.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Reduced the combination of codecs from 9 to 3 to improve the test runtime.

## How was this patch tested?

This is a test fix.

Closes #22641 from dilipbiswal/SPARK-25611.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

There were 5 suites extending `HadoopFsRelationTest`, for testing the "orc"/"parquet"/"text"/"json" data sources.

This PR refactors the base trait `HadoopFsRelationTest`:

1. Remove the unnecessary loop for setting the Parquet conf

2. The test case `SPARK-8406: Avoids name collision while writing files` takes about 14 to 20 seconds. Since all file format data sources now use common code for creating result files, we can test only one data source (Parquet) to reduce test time.

To run related 5 suites:

```

./build/sbt "hive/testOnly *HadoopFsRelationSuite"

```

The total test run time is reduced from 5 minutes 40 seconds to 3 minutes 50 seconds.

## How was this patch tested?

Unit test

Closes #22643 from gengliangwang/refactorHadoopFsRelationTest.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The total run time of `HiveSparkSubmitSuite` is about 10 minutes.

Since the related code is stable, this PR adds the tag `ExtendedHiveTest` to it.

## How was this patch tested?

Unit test.

Closes #22642 from gengliangwang/addTagForHiveSparkSubmitSuite.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Before ORC 1.5.3, `orc.dictionary.key.threshold` and `hive.exec.orc.dictionary.key.size.threshold` were applied to all columns. This has been a big hurdle to enabling dictionary encoding. From ORC 1.5.3, `orc.column.encoding.direct` is added to enforce direct encoding selectively in a column-wise manner. This PR aims to add that feature by upgrading ORC from 1.5.2 to 1.5.3.

The following are the patches in ORC 1.5.3, and this feature is the only one directly related to Spark.

```

ORC-406: ORC: Char(n) and Varchar(n) writers truncate to n bytes & corrupts multi-byte data (gopalv)

ORC-403: [C++] Add checks to avoid invalid offsets in InputStream

ORC-405: Remove calcite as a dependency from the benchmarks.

ORC-375: Fix libhdfs on gcc7 by adding #include <functional> two places.

ORC-383: Parallel builds fails with ConcurrentModificationException

ORC-382: Apache rat exclusions + add rat check to travis

ORC-401: Fix incorrect quoting in specification.

ORC-385: Change RecordReader to extend Closeable.

ORC-384: [C++] fix memory leak when loading non-ORC files

ORC-391: [c++] parseType does not accept underscore in the field name

ORC-397: Allow selective disabling of dictionary encoding. Original patch was by Mithun Radhakrishnan.

ORC-389: Add ability to not decode Acid metadata columns

```

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22622 from dongjoon-hyun/SPARK-25635.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Improve the runtime by reducing the number of partitions created in the test. The number of partitions is reduced from 280 to 60.

Here are the test times for the `getPartitionsByFilter returns all partitions` test on my laptop.

```

[info] - 0.13: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (4 seconds, 230 milliseconds)

[info] - 0.14: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (3 seconds, 576 milliseconds)

[info] - 1.0: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (3 seconds, 495 milliseconds)

[info] - 1.1: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (6 seconds, 728 milliseconds)

[info] - 1.2: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (7 seconds, 260 milliseconds)

[info] - 2.0: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (8 seconds, 270 milliseconds)

[info] - 2.1: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (6 seconds, 856 milliseconds)

[info] - 2.2: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (7 seconds, 587 milliseconds)

[info] - 2.3: getPartitionsByFilter returns all partitions when hive.metastore.try.direct.sql=false (7 seconds, 230 milliseconds)

```

## How was this patch tested?

Test only.

Closes #22644 from dilipbiswal/SPARK-25626.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Rename method `benchmark` in `BenchmarkBase` to `runBenchmarkSuite`. Also add comments.

Currently the method name `benchmark` is a bit confusing. The name is also the same as that used for instances of `Benchmark`:

f246813afb/sql/hive/src/test/scala/org/apache/spark/sql/hive/orc/OrcReadBenchmark.scala (L330-L339)

## How was this patch tested?

Unit test.

Closes #22599 from gengliangwang/renameBenchmarkSuite.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.0.0-SNAPSHOT.

## How was this patch tested?

N/A

Closes #22606 from gatorsmile/bump3.0.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR adds a rule to force `.toLowerCase(Locale.ROOT)` or `toUpperCase(Locale.ROOT)`.

It produces an error as below:

```

[error] Are you sure that you want to use toUpperCase or toLowerCase without the root locale? In most cases, you

[error] should use toUpperCase(Locale.ROOT) or toLowerCase(Locale.ROOT) instead.

[error] If you must use toUpperCase or toLowerCase without the root locale, wrap the code block with

[error] // scalastyle:off caselocale

[error] .toUpperCase

[error] .toLowerCase

[error] // scalastyle:on caselocale

```

This PR excludes the cases above in the SQL code path for external calls such as table names, column names, etc.

For test suites, or when it's clear there's no locale problem such as the Turkish locale problem, it uses `Locale.ROOT`.

One minor problem is, `UTF8String` has both methods, `toLowerCase` and `toUpperCase`, and the new rule detects them as well. They are ignored.

## How was this patch tested?

Manually tested, and Jenkins tests.

Closes #22581 from HyukjinKwon/SPARK-25565.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Refactor OrcReadBenchmark to use main method.

Generate benchmark result:

```

SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "hive/test:runMain org.apache.spark.sql.hive.orc.OrcReadBenchmark"

```

## How was this patch tested?

manual tests

Closes #22580 from yucai/SPARK-25508.

Lead-authored-by: yucai <yyu1@ebay.com>

Co-authored-by: Yucai Yu <yucai.yu@foxmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR aims to prevent test slowdowns at `HiveExternalCatalogVersionsSuite` by using the latest Apache Spark 2.3.2 link because the Apache mirrors will remove the old Spark 2.3.1 binaries eventually. `HiveExternalCatalogVersionsSuite` will not fail because [SPARK-24813](https://issues.apache.org/jira/browse/SPARK-24813) implements a fallback logic. However, it will cause many trials and fallbacks in all builds over `branch-2.3/branch-2.4/master`. We had better fix this issue.

## How was this patch tested?

Pass the Jenkins with the updated version.

Closes #22587 from dongjoon-hyun/SPARK-25570.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

**Description from the JIRA :**

Currently, to collect the statistics of all the columns, users need to specify the names of all the columns when calling the command "ANALYZE TABLE ... FOR COLUMNS...". This is not user friendly. Instead, we can introduce the following SQL command to achieve it without specifying the column names.

```

ANALYZE TABLE [db_name.]tablename COMPUTE STATISTICS FOR ALL COLUMNS;

```

## How was this patch tested?

Added new tests in SparkSqlParserSuite and StatisticsSuite

Closes #22566 from dilipbiswal/SPARK-25458.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

`show create table` shows a lot of generated attributes for views that were created by older Spark versions. This PR basically reverts https://issues.apache.org/jira/browse/SPARK-19272, so that `DESC [FORMATTED|EXTENDED] view` shows the original view DDL text.

## How was this patch tested?

Unit test.

Closes #22458 from zheyuan28/testbranch.

Lead-authored-by: Chris Zhao <chris.zhao@databricks.com>

Co-authored-by: Christopher Zhao <chris.zhao@databricks.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Currently, Spark has 7 `withTempPath` and 6 `withSQLConf` functions. This PR aims to remove duplicated and inconsistent code and reduce them to the following meaningful implementations.

**withTempPath**

- `SQLHelper.withTempPath`: The one which was used in `SQLTestUtils`.

**withSQLConf**

- `SQLHelper.withSQLConf`: The one which was used in `PlanTest`.

- `ExecutorSideSQLConfSuite.withSQLConf`: The one which doesn't throw `AnalysisException` on StaticConf changes.

- `SQLTestUtils.withSQLConf`: The one which overrides intentionally to change the active session.

```scala

protected override def withSQLConf(pairs: (String, String)*)(f: => Unit): Unit = {

SparkSession.setActiveSession(spark)

super.withSQLConf(pairs: _*)(f)

}

```
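The save-and-restore idea behind the `withSQLConf` helpers can be sketched in Python with a context manager. This is an analogy over a plain dictionary, not Spark's `SQLConf`: the name `with_conf` and the configuration keys in the usage note are placeholders chosen for illustration. Overrides are applied on entry, and the previous values (or their absence) are restored on exit, even if the body raises.

```python
from contextlib import contextmanager


@contextmanager
def with_conf(conf: dict, overrides: dict):
    """Temporarily apply overrides to conf, restoring the previous state on exit."""
    missing = object()  # sentinel: the key was not set before
    previous = {key: conf.get(key, missing) for key in overrides}
    conf.update(overrides)
    try:
        yield conf
    finally:
        for key, old in previous.items():
            if old is missing:
                conf.pop(key, None)  # key did not exist before, so remove it
            else:
                conf[key] = old
```

A call such as `with with_conf(conf, {"some.key": "value"}): ...` leaves `conf` unchanged once the block finishes.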

## How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #22548 from dongjoon-hyun/SPARK-25534.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

The function actually exists in the currently selected database, and it fails to initialize during `lookupFunction`, but the exception message is:

```

This function is neither a registered temporary function nor a permanent function registered in the database 'default'.

```

This makes the problem hard to locate. This PR fixes the problem.

## How was this patch tested?

new test case + manual tests

Closes #18544 from stanzhai/fix-udf-error-message.

Authored-by: Stan Zhai <mail@stanzhai.site>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently, the file [parquetSuites.scala](f29c2b5287/sql/hive/src/test/scala/org/apache/spark/sql/hive/parquetSuites.scala) is hard to recognize.

When I tried to find test suites for built-in Parquet conversions for Hive serde, I could only find [HiveParquetSuite](f29c2b5287/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveParquetSuite.scala) in the first few minutes.

This PR is to:

1. Rename `ParquetMetastoreSuite` to `HiveParquetMetastoreSuite`, and create a single file for it.

2. Rename `ParquetSourceSuite` to `HiveParquetSourceSuite`, and create a single file for it.

3. Create a single file for `ParquetPartitioningTest`.

4. Delete `parquetSuites.scala`.

## How was this patch tested?

Unit test

Closes #22467 from gengliangwang/refactor_parquet_suites.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Currently there are two classes with the same name, `BenchmarkBase`:

1. `org.apache.spark.util.BenchmarkBase`

2. `org.apache.spark.sql.execution.benchmark.BenchmarkBase`

This is very confusing. And the benchmark object `org.apache.spark.sql.execution.benchmark.FilterPushdownBenchmark` is using the one in `org.apache.spark.util.BenchmarkBase`, while there is another class `BenchmarkBase` in the same package of it...

Here I propose:

1. The class `org.apache.spark.util.BenchmarkBase` should be in the test package of the core module. Move it to package `org.apache.spark.benchmark`.

2. Move `org.apache.spark.util.Benchmark` to the test package of the core module. Move it to package `org.apache.spark.benchmark`.

3. Rename the class `org.apache.spark.sql.execution.benchmark.BenchmarkBase` to `BenchmarkWithCodegen`.

## How was this patch tested?

Unit test

Closes #22513 from gengliangwang/refactorBenchmarkBase.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This reverts a sequence of PRs, based on the discussion and comments at https://github.com/apache/spark/pull/16677#issuecomment-422650759.

#22344 #22330 #22239 #16677

## How was this patch tested?

Existing tests.

Closes #22481 from viirya/revert-SPARK-19355-1.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Spark supports BloomFilter creation for ORC files. This PR aims to add test coverage to prevent accidental regressions like [SPARK-12417](https://issues.apache.org/jira/browse/SPARK-12417).

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22418 from dongjoon-hyun/SPARK-25427.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Updated the Migration guide for the behavior changes done in the JIRA issue SPARK-23425.

## How was this patch tested?

Manually verified.

Closes #22396 from sujith71955/master_newtest.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In the dev list, we can still discuss whether the next version is 2.5.0 or 3.0.0. Let us first bump the master branch version to `2.5.0-SNAPSHOT`.

## How was this patch tested?

N/A

Closes #22426 from gatorsmile/bumpVersionMaster.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

When Hive support is enabled, the Hive catalog puts extra storage properties into table metadata even for DataSource tables, but we should not have them.

## How was this patch tested?

Modified a test.

Closes #22410 from ueshin/issues/SPARK-25418/hive_metadata.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR ensures that `super.afterAll()` is called in the `override afterAll()` method of test suites.

* Some suites did not call `super.afterAll()`

* Some suites may call `super.afterAll()` only under certain condition

* Others never call `super.afterAll()`.

This PR also ensures that `super.beforeAll()` is called in `override beforeAll()` for test suites.
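One common way to guarantee that the parent's teardown always runs is to call it from a `finally` block. The Python sketch below illustrates that pattern. It is an assumption for illustration only: the source does not say which construct the PR uses, and the class and attribute names are hypothetical.

```python
class BaseSuite:
    """Hypothetical base suite that owns a shared resource."""

    def __init__(self):
        self.shared_resource_open = True

    def after_all(self):
        self.shared_resource_open = False


class MySuite(BaseSuite):
    """Hypothetical suite with its own cleanup that may fail."""

    def __init__(self, cleanup_fails=False):
        super().__init__()
        self.cleanup_fails = cleanup_fails
        self.temp_dir_exists = True

    def after_all(self):
        try:
            # Suite-specific cleanup, which may raise.
            if self.cleanup_fails:
                raise RuntimeError("could not delete temp dir")
            self.temp_dir_exists = False
        finally:
            # Runs on every path, so the parent's resources are always released.
            super().after_all()
```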

## How was this patch tested?

Existing UTs

Closes #22337 from kiszk/SPARK-25338.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Like `INSERT OVERWRITE DIRECTORY USING` syntax, `INSERT OVERWRITE DIRECTORY STORED AS` should not generate files with duplicate fields because Spark cannot read those files back.

**INSERT OVERWRITE DIRECTORY USING**

```scala

scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' USING parquet SELECT 'id', 'id2' id")

... ERROR InsertIntoDataSourceDirCommand: Failed to write to directory ...

org.apache.spark.sql.AnalysisException: Found duplicate column(s) when inserting into file:/tmp/parquet: `id`;

```

**INSERT OVERWRITE DIRECTORY STORED AS**

```scala

scala> sql("INSERT OVERWRITE DIRECTORY 'file:///tmp/parquet' STORED AS parquet SELECT 'id', 'id2' id")

// It generates corrupted files

scala> spark.read.parquet("/tmp/parquet").show

18/09/09 22:09:57 WARN DataSource: Found duplicate column(s) in the data schema and the partition schema: `id`;

```

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22378 from dongjoon-hyun/SPARK-25389.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

How to reproduce:

```scala

spark.sql("CREATE TABLE tbl(id long)")

spark.sql("INSERT OVERWRITE TABLE tbl VALUES 4")

spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")

spark.sql(s"INSERT OVERWRITE LOCAL DIRECTORY '/tmp/spark/parquet' " +

"STORED AS PARQUET SELECT ID FROM view1")

spark.read.parquet("/tmp/spark/parquet").schema

scala> spark.read.parquet("/tmp/spark/parquet").schema

res10: org.apache.spark.sql.types.StructType = StructType(StructField(id,LongType,true))

```

The schema should be `StructType(StructField(ID,LongType,true))` as we `SELECT ID FROM view1`.

This PR fixes this issue.

## How was this patch tested?

unit tests

Closes #22359 from wangyum/SPARK-25313-FOLLOW-UP.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In Spark 2.0.0, SPARK-14335 added some [commented-out test coverage](https://github.com/apache/spark/pull/12117/files#diff-dd4b39a56fac28b1ced6184453a47358R177). This PR enables it because it has been supported since 2.0.0.

## How was this patch tested?

Pass the Jenkins with re-enabled test coverage.

Closes #22363 from dongjoon-hyun/SPARK-25375.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

Before Apache Spark 2.3, table properties were ignored when writing data to a Hive table (created with STORED AS PARQUET/ORC syntax), because the compression configurations were not passed to the FileFormatWriter in hadoopConf. This was fixed in #20087. However, for CTAS with USING PARQUET/ORC syntax, table properties were also ignored under convertMetastore, so the test case for CTAS was not supported.

Now that this has been fixed in #20522, the test case should be enabled too.

## How was this patch tested?

This only re-enables the test cases of previous PR.

Closes #22302 from fjh100456/compressionCodec.

Authored-by: fjh100456 <fu.jinhua6@zte.com.cn>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In SharedSparkSession and TestHive, we need to disable the rule ConvertToLocalRelation for better test case coverage.

## How was this patch tested?

Identify the failures after excluding "ConvertToLocalRelation" rule.

Closes #22270 from dilipbiswal/SPARK-25267-final.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The `HiveExternalCatalogVersionsSuite` Scala-2.12 test has been failing due to a class path issue. It is marked as `ABORTED` because it fails at `beforeAll` during the data population stage.

- https://amplab.cs.berkeley.edu/jenkins/view/Spark%20QA%20Test%20(Dashboard)/job/spark-master-test-maven-hadoop-2.7-ubuntu-scala-2.12/

```

org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite *** ABORTED ***

Exception encountered when invoking run on a nested suite - spark-submit returned with exit code 1.

```

The root cause of the failure is that `runSparkSubmit` mixes 2.4.0-SNAPSHOT classes and old Spark (2.1.3/2.2.2/2.3.1) together during `spark-submit`. This PR aims to provide a non-test execution mode for `runSparkSubmit` by removing the following environment variables.

- SPARK_TESTING

- SPARK_SQL_TESTING

- SPARK_PREPEND_CLASSES

- SPARK_DIST_CLASSPATH

Previously, in the class path, new Spark classes are behind the old Spark classes. So, new ones are unseen. However, Spark 2.4.0 reveals this bug due to the recent data source class changes.
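Launching a child process without the test-only variables listed above can be sketched in Python as follows. This is not the Scala code in `runSparkSubmit`. The helper name `non_test_env` is hypothetical, and only the four variable names come from the list above.

```python
import os

# The four variables named in the list above.
TEST_ONLY_VARS = (
    "SPARK_TESTING",
    "SPARK_SQL_TESTING",
    "SPARK_PREPEND_CLASSES",
    "SPARK_DIST_CLASSPATH",
)


def non_test_env(base_env=None):
    """Return a copy of the environment without the test-only variables."""
    env = dict(os.environ if base_env is None else base_env)
    for name in TEST_ONLY_VARS:
        env.pop(name, None)  # ignore variables that are not set
    return env
```

The resulting mapping could be passed as the `env` argument of `subprocess.run` so that the child process does not inherit the test settings.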

## How was this patch tested?

Manual test. After merging, it will be tested via Jenkins.

```

$ dev/change-scala-version.sh 2.12

$ build/mvn -DskipTests -Phive -Pscala-2.12 clean package

$ build/mvn -Phive -Pscala-2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.hive.HiveExternalCatalogVersionsSuite test

...

HiveExternalCatalogVersionsSuite:

- backward compatibility

...

Tests: succeeded 1, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

Closes #22340 from dongjoon-hyun/SPARK-25337.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

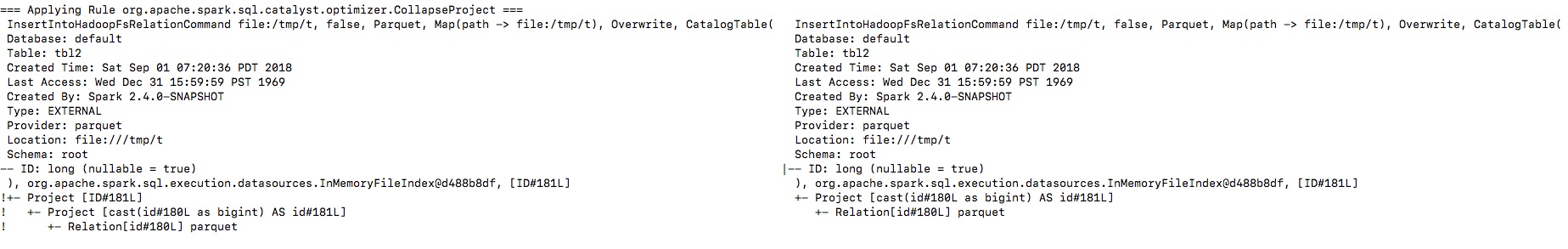

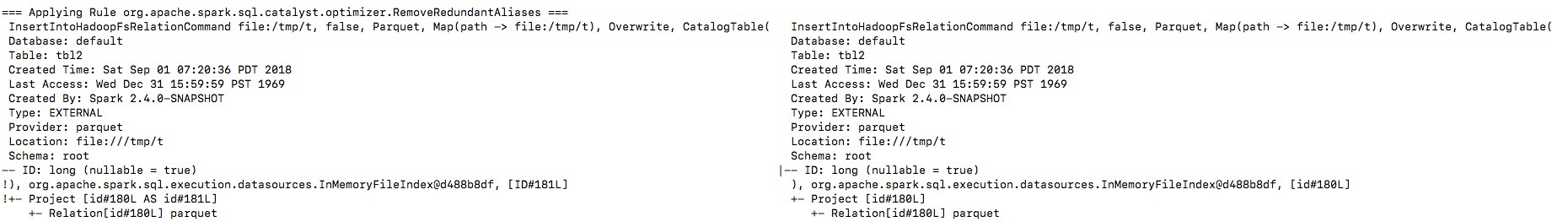

## What changes were proposed in this pull request?

Consider the following example:

```

val location = "/tmp/t"

val df = spark.range(10).toDF("id")

df.write.format("parquet").saveAsTable("tbl")

spark.sql("CREATE VIEW view1 AS SELECT id FROM tbl")

spark.sql(s"CREATE TABLE tbl2(ID long) USING parquet location '$location'")

spark.sql("INSERT OVERWRITE TABLE tbl2 SELECT ID FROM view1")

println(spark.read.parquet(location).schema)

spark.table("tbl2").show()

```

The output column name in the written schema is `id` instead of `ID`, so the last query returns nothing from `tbl2`.

With debug messages enabled, we can see that the output name changes from `ID` to `id`, and that `outputColumns` in `InsertIntoHadoopFsRelationCommand` is then changed by `RemoveRedundantAliases`.

**To guarantee correctness**, we should change the output columns from `Seq[Attribute]` to `Seq[String]` so that the optimizer cannot replace their names.

I will fix project elimination related rules in https://github.com/apache/spark/pull/22311 after this one.

## How was this patch tested?

Unit test.

Closes #22320 from gengliangwang/fixOutputSchema.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In both ORC data sources, the `createFilter` function has exponential time complexity because it generates a skewed filter tree. This PR aims to improve it by using a new `buildTree` function.
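The difference between a skewed and a balanced filter tree can be sketched as follows. This is a minimal Python illustration under stated assumptions, not Spark's `buildTree`: the `And` node, the helper names, and the use of plain strings as leaves are all hypothetical. It only compares tree shapes and does not reproduce the ORC conversion itself.

```python
from dataclasses import dataclass
from typing import Sequence, Union


@dataclass(frozen=True)
class And:
    """Binary conjunction node (hypothetical stand-in for a filter AND)."""
    left: "Node"
    right: "Node"


Node = Union[str, And]  # a leaf is just the filter's text in this sketch


def skewed_tree(filters: Sequence[str]) -> Node:
    """Left-deep chain: ((f1 AND f2) AND f3) AND ..."""
    tree: Node = filters[0]
    for f in filters[1:]:
        tree = And(tree, f)
    return tree


def balanced_tree(filters: Sequence[str]) -> Node:
    """Split the list in half recursively so both sides have similar size."""
    if len(filters) == 1:
        return filters[0]
    mid = len(filters) // 2
    return And(balanced_tree(filters[:mid]), balanced_tree(filters[mid:]))


def depth(node: Node) -> int:
    """Number of AND levels between the root and the deepest leaf."""
    if isinstance(node, And):
        return 1 + max(depth(node.left), depth(node.right))
    return 0


if __name__ == "__main__":
    filters = [f"c{i} is not null" for i in range(1, 31)]
    print(depth(skewed_tree(filters)), depth(balanced_tree(filters)))
```

With 30 filters the chain is 29 levels deep while the balanced tree is 5 levels deep, so any per-level cost that compounds with depth is kept small.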

**REPRODUCE**

```scala
// Create and read 1 row table with 1000 columns
sql("set spark.sql.orc.filterPushdown=true")
val selectExpr = (1 to 1000).map(i => s"id c$i")
spark.range(1).selectExpr(selectExpr: _*).write.mode("overwrite").orc("/tmp/orc")
print(s"With 0 filters, ")
spark.time(spark.read.orc("/tmp/orc").count)

// Increase the number of filters
(20 to 30).foreach { width =>
  val whereExpr = (1 to width).map(i => s"c$i is not null").mkString(" and ")
  print(s"With $width filters, ")
  spark.time(spark.read.orc("/tmp/orc").where(whereExpr).count)
}
```

**RESULT**

```scala

With 0 filters, Time taken: 653 ms

With 20 filters, Time taken: 962 ms

With 21 filters, Time taken: 1282 ms

With 22 filters, Time taken: 1982 ms

With 23 filters, Time taken: 3855 ms

With 24 filters, Time taken: 6719 ms

With 25 filters, Time taken: 12669 ms

With 26 filters, Time taken: 25032 ms

With 27 filters, Time taken: 49585 ms

With 28 filters, Time taken: 98980 ms // over 1 min 38 seconds

With 29 filters, Time taken: 198368 ms // over 3 mins

With 30 filters, Time taken: 393744 ms // over 6 mins

```

**AFTER THIS PR**

```scala

With 0 filters, Time taken: 774 ms

With 20 filters, Time taken: 601 ms

With 21 filters, Time taken: 399 ms

With 22 filters, Time taken: 679 ms

With 23 filters, Time taken: 363 ms

With 24 filters, Time taken: 342 ms

With 25 filters, Time taken: 336 ms

With 26 filters, Time taken: 352 ms

With 27 filters, Time taken: 322 ms

With 28 filters, Time taken: 302 ms

With 29 filters, Time taken: 307 ms

With 30 filters, Time taken: 301 ms

```

## How was this patch tested?

Pass the Jenkins with newly added test cases.

Closes #22313 from dongjoon-hyun/SPARK-25306.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Remove `test-2.10.jar` and add `test-2.12.jar`.

## How was this patch tested?

```
$ sbt -Dscala-2.12
> ++ 2.12.6
> project hive
> testOnly *HiveSparkSubmitSuite -- -z "8489"
```

Closes #22308 from sadhen/SPARK-8489-FOLLOWUP.

Authored-by: Darcy Shen <sadhen@zoho.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

### For `SPARK-5775 read array from partitioned_parquet_with_key_and_complextypes`:

Scala 2.12:

```

scala> (1 to 10).toString

res4: String = Range 1 to 10

```

Scala 2.11:

```

scala> (1 to 10).toString

res2: String = Range(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)

```

And

```scala
def prepareAnswer(answer: Seq[Row], isSorted: Boolean): Seq[Row] = {
  val converted: Seq[Row] = answer.map(prepareRow)
  if (!isSorted) converted.sortBy(_.toString()) else converted
}
```

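Sorting by `_.toString` is fragile: the string form of a value can differ between Scala versions, as the `Range` output above shows, so the sort order can differ too.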
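Why a string-based sort key is fragile can be shown with a small sketch. This is a Python illustration, not the test helper itself: `render_211` and `render_212` are hypothetical functions that merely imitate the two `Range` string forms shown above.

```python
def render_211(r: range) -> str:
    """Imitates the Scala 2.11 form shown above: Range(1, 2, 3, ...)."""
    return "Range(" + ", ".join(str(i) for i in r) + ")"


def render_212(r: range) -> str:
    """Imitates the Scala 2.12 form shown above: Range 1 to 10."""
    return f"Range {r.start} to {r.stop - 1}"


# Python stand-ins for Scala's `1 to 10` and `1 to 2`.
rows = [range(1, 11), range(1, 3)]

# Same data, same sort call, different string rendering.
order_211 = sorted(rows, key=render_211)
order_212 = sorted(rows, key=render_212)

print(order_211 == order_212)
```

The two orders differ because the comparison happens on text, not on the values, so a change in rendering is enough to reorder the rows.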

### Other failures are caused by

```

Array(Int.box(1)).toSeq == Array(Double.box(1.0)).toSeq

```

It is `false` in Scala 2.12.2+ and `true` in 2.11.x, 2.12.0, and 2.12.1.

## How was this patch tested?

This is a patch on a specific unit test.

Closes #22264 from sadhen/SPARK25256.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Since https://github.com/apache/spark/pull/21696, Spark uses the Parquet schema instead of the Hive metastore schema for pushdown.

That change avoids returning wrong records when the Hive metastore schema and the Parquet schema use different letter cases. This PR adds a test case for it.

More details:

https://issues.apache.org/jira/browse/SPARK-25206

## How was this patch tested?

unit tests

Closes #22267 from wangyum/SPARK-24716-TESTS.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The following Scala 2.12 build problems were introduced by #21320 and #11744:

```

$ sbt

> ++2.12.6

> project sql

> compile

...

[error] [warn] spark/sql/core/src/main/scala/org/apache/spark/sql/execution/ProjectionOverSchema.scala:41: match may not be exhaustive.

[error] It would fail on the following inputs: (_, ArrayType(_, _)), (_, _)

[error] [warn] getProjection(a.child).map(p => (p, p.dataType)).map {

[error] [warn]

[error] [warn] spark/sql/core/src/main/scala/org/apache/spark/sql/execution/ProjectionOverSchema.scala:52: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] getProjection(child).map(p => (p, p.dataType)).map {

[error] [warn]

...

```

And

```

$ sbt

> ++2.12.6

> project hive

> testOnly *ParquetMetastoreSuite

...

[error] /Users/rendong/wdi/spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala:22: object tools is not a member of package scala

[error] import scala.tools.nsc.Properties

[error] ^

[error] /Users/rendong/wdi/spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveSparkSubmitSuite.scala:146: not found: value Properties

[error] val version = Properties.versionNumberString match {

[error] ^

[error] two errors found

...

```

## How was this patch tested?

Existing tests.

Closes #22260 from sadhen/fix_exhaustive_match.

Authored-by: 忍冬 <rendong@wacai.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In this PR, I propose not to perform recursive parallel listing of files in the `scanPartitions` method because it can cause a deadlock. Instead, I propose to run `scanPartitions` in parallel for top-level partitions only.
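The "parallel at the top level only" idea can be sketched as follows. This is a minimal Python illustration with hypothetical helper names, not Spark's `scanPartitions`. It assumes the deadlock risk comes from tasks in a bounded pool waiting on subtasks submitted to the same pool, which the description does not spell out.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def scan_serial(path):
    """List all files under `path` without using the pool."""
    found = []
    for entry in sorted(os.scandir(path), key=lambda e: e.name):
        if entry.is_dir():
            found.extend(scan_serial(entry.path))
        else:
            found.append(entry.path)
    return found


def scan_partitions(root, max_workers=2):
    """Scan top-level directories in parallel; nested levels run serially inside each task."""
    top_dirs = sorted(e.path for e in os.scandir(root) if e.is_dir())
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Each task is self-contained, so no task ever waits on another pool task.
        results = pool.map(scan_serial, top_dirs)
        return [f for files in results for f in files]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for a in ("a=1", "a=2"):
            for b in ("b=1", "b=2"):
                leaf = os.path.join(root, a, b)
                os.makedirs(leaf)
                open(os.path.join(leaf, "part-0"), "w").close()
        print(len(scan_partitions(root)))
```

Because only the outermost call submits work to the pool, the pool size bounds the parallelism without any task blocking on a slot it cannot get.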

## How was this patch tested?

I extended an existing test to trigger the deadlock.

Author: Maxim Gekk <maxim.gekk@databricks.com>

Closes #22233 from MaxGekk/fix-recover-partitions.

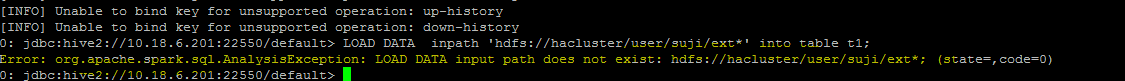

## What changes were proposed in this pull request?

**Problem statement**

The `LOAD DATA` command does not work when the HDFS file path contains wildcard characters such as `*`.

For example:

"load data inpath 'hdfs://hacluster/user/ext*' into table t1"

throws an `AnalysisException` when the query is executed.

**Analysis -**

Currently, the `fs.exists()` API used for path validation in the load command cannot resolve a path that contains a wildcard pattern. To mitigate this, I use the `globStatus()` API, which can resolve paths containing the wildcards that HDFS supports, such as `*` and `?` (in line with Hive's wildcard support).

**Improvement identified as part of this issue -**

Currently, the system does not support wildcard characters in a folder-level path on the local file system. This PR handles that scenario: the same `globStatus` API unifies the validation logic for local and non-local file systems, which keeps the behavior of HDFS and local file paths consistent in the load command.

With this improvement, users can use a wildcard character in a folder-level path of the local file system in the load command, in line with Hive's behavior. In older versions, wildcards could be used only in the file part of a local path; using one in a folder path produced an error stating that it is not supported.

For example: load data local inpath '/localfilesystem/folder*' into table t1
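The difference between an existence check and glob expansion can be sketched on a local file system. This is a Python illustration only: `glob.glob` stands in for Hadoop's `globStatus` (their pattern rules are not identical), and `resolve_input_paths` is a hypothetical helper, not part of the patch.

```python
import glob
import os
import tempfile


def resolve_input_paths(pattern):
    """Expand a path that may contain wildcards; fail if nothing matches (hypothetical helper)."""
    matches = sorted(glob.glob(pattern))
    if not matches:
        raise FileNotFoundError(f"input path does not exist: {pattern}")
    return matches


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        for folder in ("sujith1", "sujith2"):
            os.makedirs(os.path.join(root, folder))
            for name in ("typeddata60.txt", "typeddata61.txt"):
                open(os.path.join(root, folder, name), "w").close()

        pattern = os.path.join(root, "suji*", "*")
        # A plain existence check treats the wildcard as a literal character.
        print(os.path.exists(pattern))
        # Glob expansion resolves the folder-level and file-level wildcards.
        print(len(resolve_input_paths(pattern)))
```

Using one expansion routine for every file system is what keeps validation consistent: a pattern that matches nothing fails the same way everywhere.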

## How was this patch tested?

a) Manually tested by executing test cases on an HDFS YARN cluster. The reports are attached in the section below.

b) Existing test cases verify the impact and functionality for local file path scenarios.

c) A test case has been added to verify the functionality when a wildcard is used in a folder-level path of the local file system.

## Test Results

Note: all IP addresses were replaced with localhost for security reasons.

HDFS path details

```

vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith1

Found 2 items

-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith1/typeddata60.txt

-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith1/typeddata61.txt

vm1:/opt/ficlient # hadoop fs -ls /user/data/sujith2

Found 2 items

-rw-r--r-- 3 shahid hadoop 4802 2018-03-26 15:45 /user/data/sujith2/typeddata60.txt

-rw-r--r-- 3 shahid hadoop 4883 2018-03-26 15:45 /user/data/sujith2/typeddata61.txt

```

Positive scenario: specify the complete file path to establish the record count

```

0: jdbc:hive2://localhost:22550/default> create table wild_spark (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.217 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata60.txt' into table wild_spark;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (4.236 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/typeddata61.txt' into table wild_spark;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.602 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from wild_spark;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

1 row selected (18.529 seconds)

0: jdbc:hive2://localhost:22550/default>

```

With a wildcard character in the file path

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.409 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/type*' into table spark_withWildChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.502 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

```

With the `?` wildcard

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_DiffChar (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.489 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata60.txt' into table spark_withWildChar_DiffChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.152 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujith1/?ypeddata61.txt' into table spark_withWildChar_DiffChar;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.644 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_DiffChar;

+-----------+--+

| count(1) |

+-----------+--+

| 121 |

+-----------+--+

1 row selected (16.078 seconds)

```

With a folder-level wildcard

```

0: jdbc:hive2://localhost:22550/default> create table spark_withWildChar_folderlevel (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) row format delimited fields terminated by ',';

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.489 seconds)

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/suji*/*' into table spark_withWildChar_folderlevel;

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (1.152 seconds)

0: jdbc:hive2://localhost:22550/default> select count(*) from spark_withWildChar_folderlevel;

+-----------+--+

| count(1) |

+-----------+--+

| 242 |

+-----------+--+

1 row selected (16.078 seconds)

```

Negative scenario: invalid path

```

0: jdbc:hive2://localhost:22550/default> load data inpath '/user/data/sujiinvalid*/*' into table spark_withWildChar_folder;

Error: org.apache.spark.sql.AnalysisException: LOAD DATA input path does not exist: /user/data/sujiinvalid*/*; (state=,code=0)

0: jdbc:hive2://localhost:22550/default>

```

Hive Test results- file level

```

0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_files (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;

No rows affected (0.723 seconds)

0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/sujith1/type*' into table hive_withWildChar_files;

INFO : Loading data to table default.hive_withwildchar_files from hdfs://hacluster/user/sujith1/type*

No rows affected (0.682 seconds)

0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_files;

+------+--+

| _c0 |

+------+--+

| 121 |

+------+--+

1 row selected (50.832 seconds)

```

Hive Test results- folder level

```

0: jdbc:hive2://localhost:21066/> create table hive_withWildChar_folder (time timestamp, name string, isright boolean, datetoday date, num binary, height double, score float, decimaler decimal(10,0), id tinyint, age int, license bigint, length smallint) stored as TEXTFILE;

No rows affected (0.459 seconds)

0: jdbc:hive2://localhost:21066/> load data inpath '/user/data/suji*/*' into table hive_withWildChar_folder;

INFO : Loading data to table default.hive_withwildchar_folder from hdfs://hacluster/user/data/suji*/*

No rows affected (0.76 seconds)

0: jdbc:hive2://localhost:21066/> select count(*) from hive_withWildChar_folder;

+------+--+

| _c0 |

+------+--+

| 242 |

+------+--+

1 row selected (46.483 seconds)

```

Closes #20611 from sujith71955/master_wldcardsupport.

Lead-authored-by: s71955 <sujithchacko.2010@gmail.com>

Co-authored-by: sujith71955 <sujithchacko.2010@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

[SPARK-25126](https://issues.apache.org/jira/browse/SPARK-25126) reports that loading a large number of ORC files consumes a lot of memory in both 2.0 and 2.3. The issue is caused by creating a Reader for every ORC file in order to infer the schema.

In `OrcFileOperator.readSchema`, a Reader is created for every file although only the first valid one is used. This uses a significant amount of memory when `paths` contains many files. In 2.3, a different code path (`OrcUtils.readSchema`) is used for inferring the schema of ORC files. This commit changes both functions to create the Reader lazily.
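Lazy reader creation can be sketched with a generator. This is a minimal Python illustration, not the ORC code: `first_schema`, `fake_reader`, and the file names are hypothetical, and a reader is modeled as a function that returns a schema or `None` for a file without one.

```python
from typing import Callable, Iterable, Optional


def first_schema(
    paths: Iterable[str],
    open_reader: Callable[[str], Optional[dict]],
) -> Optional[dict]:
    """Return the first schema found, opening readers one at a time."""
    schemas = (open_reader(p) for p in paths)  # generator: nothing is opened yet
    return next((s for s in schemas if s is not None), None)


opened = []  # records which files the sketch actually touched


def fake_reader(path: str) -> Optional[dict]:
    """Hypothetical reader: files ending in .empty carry no schema."""
    opened.append(path)
    return None if path.endswith(".empty") else {"source": path}


schema = first_schema(["a.empty", "b.orc", "c.orc"], fake_reader)
print(schema, opened)
```

Only the files up to the first valid one are opened, so the number of live readers no longer grows with the number of input files.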

## How was this patch tested?

Pass the Jenkins with a newly added test case by dongjoon-hyun

Closes #22157 from raofu/SPARK-25126.

Lead-authored-by: Rao Fu <rao@coupang.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Co-authored-by: Rao Fu <raofu04@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR proposes to show RDD/relation names in RDD/Hive table scan nodes.

With this change, these names show up in the web UI and in explain results.

For example:

```

scala> sql("CREATE TABLE t(c1 int) USING hive")

scala> sql("INSERT INTO t VALUES(1)")

scala> spark.table("t").explain()

== Physical Plan ==

Scan hive default.t [c1#8], HiveTableRelation `default`.`t`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, [c1#8]

^^^^^^^^^^^

```

<img width="212" alt="spark-pr-hive" src="https://user-images.githubusercontent.com/692303/44501013-51264c80-a6c6-11e8-94f8-0704aee83bb6.png">

Closes #20226

## How was this patch tested?

Added tests in `DataFrameSuite`, `DatasetSuite`, and `HiveExplainSuite`

Closes #22153 from maropu/pr20226.

Lead-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Co-authored-by: Tejas Patil <tejasp@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fix issues arising from the fact that builtins __file__, __long__, __raw_input()__, __unicode__, __xrange()__, etc. were all removed from Python 3. __Undefined names__ have the potential to raise [NameError](https://docs.python.org/3/library/exceptions.html#NameError) at runtime.
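One common way to keep such names defined on both interpreters is a small compatibility block at module level. The sketch below shows that idiom under the assumption that the Python 3 replacements are acceptable substitutes; the description does not state which form the patch uses in each file.

```python
import sys

if sys.version_info[0] >= 3:
    # These names are not builtins in Python 3; bind them to their replacements.
    long = int
    unicode = str
    xrange = range
    raw_input = input


def to_millis(timestamp):
    """Convert seconds to whole milliseconds using the `long` name on either interpreter."""
    return long(timestamp * 1000)


print(to_millis(1.5))
```

On Python 2 the `if` block is skipped and the original builtins are used, so the same module body runs unchanged on both versions.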

## How was this patch tested?

* $ __python2 -m flake8 . --count --select=E9,F82 --show-source --statistics__

* $ __python3 -m flake8 . --count --select=E9,F82 --show-source --statistics__

holdenk

flake8 testing of https://github.com/apache/spark on Python 3.6.3

$ __python3 -m flake8 . --count --select=E901,E999,F821,F822,F823 --show-source --statistics__

```

./dev/merge_spark_pr.py:98:14: F821 undefined name 'raw_input'

result = raw_input("\n%s (y/n): " % prompt)

^

./dev/merge_spark_pr.py:136:22: F821 undefined name 'raw_input'

primary_author = raw_input(

^

./dev/merge_spark_pr.py:186:16: F821 undefined name 'raw_input'

pick_ref = raw_input("Enter a branch name [%s]: " % default_branch)

^

./dev/merge_spark_pr.py:233:15: F821 undefined name 'raw_input'

jira_id = raw_input("Enter a JIRA id [%s]: " % default_jira_id)

^

./dev/merge_spark_pr.py:278:20: F821 undefined name 'raw_input'

fix_versions = raw_input("Enter comma-separated fix version(s) [%s]: " % default_fix_versions)

^

./dev/merge_spark_pr.py:317:28: F821 undefined name 'raw_input'

raw_assignee = raw_input(

^

./dev/merge_spark_pr.py:430:14: F821 undefined name 'raw_input'

pr_num = raw_input("Which pull request would you like to merge? (e.g. 34): ")

^

./dev/merge_spark_pr.py:442:18: F821 undefined name 'raw_input'

result = raw_input("Would you like to use the modified title? (y/n): ")

^

./dev/merge_spark_pr.py:493:11: F821 undefined name 'raw_input'

while raw_input("\n%s (y/n): " % pick_prompt).lower() == "y":

^

./dev/create-release/releaseutils.py:58:16: F821 undefined name 'raw_input'

response = raw_input("%s [y/n]: " % msg)

^

./dev/create-release/releaseutils.py:152:38: F821 undefined name 'unicode'

author = unidecode.unidecode(unicode(author, "UTF-8")).strip()

^

./python/setup.py:37:11: F821 undefined name '__version__'

VERSION = __version__

^

./python/pyspark/cloudpickle.py:275:18: F821 undefined name 'buffer'

dispatch[buffer] = save_buffer

^

./python/pyspark/cloudpickle.py:807:18: F821 undefined name 'file'

dispatch[file] = save_file

^

./python/pyspark/sql/conf.py:61:61: F821 undefined name 'unicode'

if not isinstance(obj, str) and not isinstance(obj, unicode):

^

./python/pyspark/sql/streaming.py:25:21: F821 undefined name 'long'

intlike = (int, long)

^

./python/pyspark/streaming/dstream.py:405:35: F821 undefined name 'long'

return self._sc._jvm.Time(long(timestamp * 1000))

^

./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:21:10: F821 undefined name 'xrange'

for i in xrange(50):

^

./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:22:14: F821 undefined name 'xrange'

for j in xrange(5):

^

./sql/hive/src/test/resources/data/scripts/dumpdata_script.py:23:18: F821 undefined name 'xrange'

for k in xrange(20022):

^

20 F821 undefined name 'raw_input'

20

```

Closes #20838 from cclauss/fix-undefined-names.

Authored-by: cclauss <cclauss@bluewin.ch>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Fixing typos is sometimes very hard. It's not so easy to visually review them. Recently, I discovered a very useful tool for it, [misspell](https://github.com/client9/misspell).

This pull request fixes minor typos detected by [misspell](https://github.com/client9/misspell) except for the false positives. If you would like me to work on other files as well, let me know.

## How was this patch tested?

### before

```

$ misspell . | grep -v '.js'

R/pkg/R/SQLContext.R:354:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:424:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:445:43: "definiton" is a misspelling of "definition"

R/pkg/R/SQLContext.R:495:43: "definiton" is a misspelling of "definition"

NOTICE-binary:454:16: "containd" is a misspelling of "contained"

R/pkg/R/context.R:46:43: "definiton" is a misspelling of "definition"

R/pkg/R/context.R:74:43: "definiton" is a misspelling of "definition"

R/pkg/R/DataFrame.R:591:48: "persistance" is a misspelling of "persistence"

R/pkg/R/streaming.R:166:44: "occured" is a misspelling of "occurred"

R/pkg/inst/worker/worker.R:65:22: "ouput" is a misspelling of "output"

R/pkg/tests/fulltests/test_utils.R:106:25: "environemnt" is a misspelling of "environment"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/InMemoryStoreSuite.java:38:39: "existant" is a misspelling of "existent"

common/kvstore/src/test/java/org/apache/spark/util/kvstore/LevelDBSuite.java:83:39: "existant" is a misspelling of "existent"

common/network-common/src/main/java/org/apache/spark/network/crypto/TransportCipher.java:243:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:234:19: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:238:63: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:244:46: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/sasl/SaslEncryption.java:276:39: "transfered" is a misspelling of "transferred"

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

common/unsafe/src/test/scala/org/apache/spark/unsafe/types/UTF8StringPropertyCheckSuite.scala:195:15: "orgin" is a misspelling of "origin"

core/src/main/scala/org/apache/spark/api/python/PythonRDD.scala:621:39: "gauranteed" is a misspelling of "guaranteed"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/main/scala/org/apache/spark/storage/DiskStore.scala:282:18: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/util/ListenerBus.scala:64:17: "overriden" is a misspelling of "overridden"

core/src/test/scala/org/apache/spark/ShuffleSuite.scala:211:7: "substracted" is a misspelling of "subtracted"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:2468:84: "truely" is a misspelling of "truly"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:25:18: "persistance" is a misspelling of "persistence"

core/src/test/scala/org/apache/spark/storage/FlatmapIteratorSuite.scala:26:69: "persistance" is a misspelling of "persistence"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

dev/run-pip-tests:55:28: "enviroments" is a misspelling of "environments"

dev/run-pip-tests:91:37: "virutal" is a misspelling of "virtual"

dev/merge_spark_pr.py:377:72: "accross" is a misspelling of "across"

dev/merge_spark_pr.py:378:66: "accross" is a misspelling of "across"

dev/run-pip-tests:126:25: "enviroments" is a misspelling of "environments"

docs/configuration.md:1830:82: "overriden" is a misspelling of "overridden"

docs/structured-streaming-programming-guide.md:525:45: "processs" is a misspelling of "processes"

docs/structured-streaming-programming-guide.md:1165:61: "BETWEN" is a misspelling of "BETWEEN"

docs/sql-programming-guide.md:1891:810: "behaivor" is a misspelling of "behavior"

examples/src/main/python/sql/arrow.py:98:8: "substract" is a misspelling of "subtract"

examples/src/main/python/sql/arrow.py:103:27: "substract" is a misspelling of "subtract"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/clustering/StreamingKMeans.scala:230:24: "inital" is a misspelling of "initial"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

mllib/src/test/scala/org/apache/spark/ml/clustering/KMeansSuite.scala:237:26: "descripiton" is a misspelling of "descriptions"

python/pyspark/find_spark_home.py:30:13: "enviroment" is a misspelling of "environment"

python/pyspark/context.py:937:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:938:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:939:12: "supress" is a misspelling of "suppress"

python/pyspark/context.py:940:12: "supress" is a misspelling of "suppress"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:713:8: "probabilty" is a misspelling of "probability"

python/pyspark/ml/clustering.py:1038:8: "Currenlty" is a misspelling of "Currently"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/ml/regression.py:1378:20: "paramter" is a misspelling of "parameter"

python/pyspark/mllib/stat/_statistics.py:262:8: "probabilty" is a misspelling of "probability"

python/pyspark/rdd.py:1363:32: "paramter" is a misspelling of "parameter"

python/pyspark/streaming/tests.py:825:42: "retuns" is a misspelling of "returns"

python/pyspark/sql/tests.py:768:29: "initalization" is a misspelling of "initialization"

python/pyspark/sql/tests.py:3616:31: "initalize" is a misspelling of "initialize"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerBackendUtil.scala:120:39: "arbitary" is a misspelling of "arbitrary"

resource-managers/mesos/src/test/scala/org/apache/spark/deploy/mesos/MesosClusterDispatcherArgumentsSuite.scala:26:45: "sucessfully" is a misspelling of "successfully"

resource-managers/mesos/src/main/scala/org/apache/spark/scheduler/cluster/mesos/MesosSchedulerUtils.scala:358:27: "constaints" is a misspelling of "constraints"

resource-managers/yarn/src/test/scala/org/apache/spark/deploy/yarn/YarnClusterSuite.scala:111:24: "senstive" is a misspelling of "sensitive"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/catalog/SessionCatalog.scala:1063:5: "overwirte" is a misspelling of "overwrite"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala:1348:17: "compatability" is a misspelling of "compatibility"

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/basicLogicalOperators.scala:77:36: "paramter" is a misspelling of "parameter"

sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala:1374:22: "precendence" is a misspelling of "precedence"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/analysis/AnalysisSuite.scala:238:27: "unnecassary" is a misspelling of "unnecessary"

sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/expressions/ConditionalExpressionSuite.scala:212:17: "whn" is a misspelling of "when"

sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/StreamingSymmetricHashJoinHelper.scala:147:60: "timestmap" is a misspelling of "timestamp"

sql/core/src/test/scala/org/apache/spark/sql/TPCDSQuerySuite.scala:150:45: "precentage" is a misspelling of "percentage"

sql/core/src/test/scala/org/apache/spark/sql/execution/datasources/csv/CSVInferSchemaSuite.scala:135:29: "infered" is a misspelling of "inferred"

sql/hive/src/test/resources/golden/udf_instr-1-2e76f819563dbaba4beb51e3a130b922:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_instr-2-32da357fc754badd6e3898dcc8989182:1:52: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-1-6e41693c9c6dceea4d7fab4c02884e4e:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_locate-2-d9b5934457931447874d6bb7c13de478:1:63: "occurance" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:9:79: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/golden/udf_translate-2-f7aa38a33ca0df73b7a1e6b6da4b7fe8:13:110: "occurence" is a misspelling of "occurrence"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/annotate_stats_join.q:46:105: "distint" is a misspelling of "distinct"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/auto_sortmerge_join_11.q:29:3: "Currenly" is a misspelling of "Currently"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/avro_partitioned.q:72:15: "existant" is a misspelling of "existent"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/decimal_udf.q:25:3: "substraction" is a misspelling of "subtraction"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby2_map_multi_distinct.q:16:51: "funtion" is a misspelling of "function"

sql/hive/src/test/resources/ql/src/test/queries/clientpositive/groupby_sort_8.q:15:30: "issueing" is a misspelling of "issuing"

sql/hive/src/test/scala/org/apache/spark/sql/sources/HadoopFsRelationTest.scala:669:52: "wiht" is a misspelling of "with"

sql/hive-thriftserver/src/main/java/org/apache/hive/service/cli/session/HiveSessionImpl.java:474:9: "Refering" is a misspelling of "Referring"

```

### after

```

$ misspell . | grep -v '.js'

common/network-common/src/main/java/org/apache/spark/network/util/AbstractFileRegion.java:27:20: "transfered" is a misspelling of "transferred"

core/src/main/scala/org/apache/spark/status/storeTypes.scala:113:29: "ect" is a misspelling of "etc"

core/src/test/scala/org/apache/spark/scheduler/DAGSchedulerSuite.scala:1922:49: "agriculteur" is a misspelling of "agriculture"

data/streaming/AFINN-111.txt:1219:0: "humerous" is a misspelling of "humorous"

licenses/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:5:63: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:6:2: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:262:29: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:262:39: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:269:49: "Stichting" is a misspelling of "Stitching"

licenses-binary/LICENSE-heapq.txt:269:59: "Mathematisch" is a misspelling of "Mathematics"

licenses-binary/LICENSE-heapq.txt:274:2: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:274:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

licenses-binary/LICENSE-heapq.txt:276:29: "STICHTING" is a misspelling of "STITCHING"

licenses-binary/LICENSE-heapq.txt:276:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/hungarian.txt:170:0: "teh" is a misspelling of "the"

mllib/src/main/resources/org/apache/spark/ml/feature/stopwords/portuguese.txt:53:0: "eles" is a misspelling of "eels"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:99:20: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/ml/stat/Summarizer.scala:539:11: "Euclidian" is a misspelling of "Euclidean"

mllib/src/main/scala/org/apache/spark/mllib/clustering/LDAOptimizer.scala:77:36: "Teh" is a misspelling of "The"

mllib/src/main/scala/org/apache/spark/mllib/stat/MultivariateOnlineSummarizer.scala:276:9: "Euclidian" is a misspelling of "Euclidean"

python/pyspark/heapq3.py:6:63: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:7:2: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:263:29: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:263:39: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:270:49: "Stichting" is a misspelling of "Stitching"

python/pyspark/heapq3.py:270:59: "Mathematisch" is a misspelling of "Mathematics"

python/pyspark/heapq3.py:275:2: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:275:12: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/heapq3.py:277:29: "STICHTING" is a misspelling of "STITCHING"

python/pyspark/heapq3.py:277:39: "MATHEMATISCH" is a misspelling of "MATHEMATICS"

python/pyspark/ml/stat.py:339:23: "Euclidian" is a misspelling of "Euclidean"

```

Closes #22070 from seratch/fix-typo.

Authored-by: Kazuhiro Sera <seratch@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

A logical `Limit` is performed physically by two operations `LocalLimit` and `GlobalLimit`.

Most of the time, we gather all data into a single partition in order to run `GlobalLimit`. With a very large limit, shuffling that data causes performance issues and also reduces parallelism.

We can avoid shuffling into a single partition if we don't care about data ordering. This patch implements that idea by running a map stage during the global limit, which collects the row count of each partition. Each partition then retrieves its share of the limited rows locally, so the global limit finishes without any shuffling.

For example, suppose we have three partitions with (100, 100, 50) rows respectively. For a global limit of 100 rows, we may take (34, 33, 33) rows from the partitions locally. After the global limit we still have three partitions.

If the data partitions have a certain ordering, we can't distribute the required rows evenly across the partitions because that could change the data ordering. But we can still avoid shuffling.
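The row distribution described above can be illustrated with a small standalone sketch. This is not the code from the patch: the object name `LimitDistribution` and both helper methods are hypothetical, and the order-preserving variant (filling the limit from the leading partitions first) is an assumption about one way to keep ordering intact.

```scala
object LimitDistribution {
  /** Spread `limit` as evenly as possible over the partitions, never taking
    * more rows than a partition holds. Suitable when row order is irrelevant. */
  def evenly(rowCounts: Seq[Long], limit: Long): Seq[Long] = {
    val taken = Array.fill(rowCounts.length)(0L)
    var remaining = math.min(limit, rowCounts.sum)
    while (remaining > 0) {
      // Partitions that still have rows we have not claimed yet.
      val open = rowCounts.indices.filter(i => taken(i) < rowCounts(i))
      // Give every open partition an equal share, at least one row per round.
      val share = math.max(1L, remaining / open.length)
      for (i <- open if remaining > 0) {
        val n = math.min(math.min(share, rowCounts(i) - taken(i)), remaining)
        taken(i) += n
        remaining -= n
      }
    }
    taken.toSeq
  }

  /** Assumed order-preserving variant: consume partitions front to back
    * until the limit is met, so the relative row order is unchanged. */
  def inOrder(rowCounts: Seq[Long], limit: Long): Seq[Long] = {
    var remaining = math.max(0L, limit)
    rowCounts.map { count =>
      val n = math.min(count, remaining)
      remaining -= n
      n
    }
  }
}
```

With row counts `Seq(100L, 100L, 50L)` and a limit of 100, `evenly` yields `(34, 33, 33)` as in the example above, while `inOrder` yields `(100, 0, 0)`. In both cases the rows stay in their original partitions.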

## How was this patch tested?

Jenkins tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #16677 from viirya/improve-global-limit-parallelism.

## What changes were proposed in this pull request?

Currently, `ANALYZE TABLE` calculates the table size sequentially, one partition at a time. We can parallelize the size calculation across partitions.

Results: tested on a table with 100 partitions and data stored in S3.

With changes:

- 10.429s

- 10.557s

- 10.439s

- 9.893s

Without changes:

- 110.034s

- 99.510s

- 100.743s

- 99.106s
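The general idea of sizing partitions concurrently rather than one after another can be sketched with plain Scala futures. This is only an illustration under stated assumptions, not the mechanism used in the patch: `ParallelPartitionSize` and `sizeOf` are hypothetical names, and `sizeOf` stands in for a slow per-partition lookup such as a remote file listing.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object ParallelPartitionSize {
  // Hypothetical stand-in for an expensive per-partition size lookup.
  def sizeOf(location: String): Long = location.length.toLong

  // Baseline: visit partitions one at a time.
  def totalSizeSequential(locations: Seq[String]): Long =
    locations.map(sizeOf).sum

  // Start one future per partition, then wait for all of them and sum.
  def totalSizeParallel(locations: Seq[String]): Long = {
    val sizes = Future.traverse(locations)(loc => Future(sizeOf(loc)))
    Await.result(sizes, 1.minute).sum
  }
}
```

Both methods return the same total. The parallel version only helps when each lookup is dominated by latency, which is plausibly the case for the S3-backed table measured above.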

## How was this patch tested?

Simple unit test.

Closes #21608 from Achuth17/improveAnalyze.

Lead-authored-by: Achuth17 <Achuth.narayan@gmail.com>

Co-authored-by: arajagopal17 <arajagopal@qubole.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR fixes typos of the form `auxiliary verb + verb[s]`. This is a follow-up to #21956.

## How was this patch tested?

N/A

Closes #22040 from kiszk/spellcheck1.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This is a follow-up PR to #22014.

We still have some compilation errors with Scala 2.12 under sbt:

```

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/DataFrameNaFunctions.scala:493: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] val typeMatches = (targetType, f.dataType) match {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/MicroBatchExecution.scala:393: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] prevBatchOff.get.toStreamProgress(sources).foreach {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/AggUtils.scala:173: match may not be exhaustive.

[error] It would fail on the following input: AggregateExpression(_, _, false, _)

[error] [warn] val rewrittenDistinctFunctions = functionsWithDistinct.map {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/streaming/state/SymmetricHashJoinStateManager.scala:271: match may not be exhaustive.

[error] It would fail on the following input: (_, _)

[error] [warn] keyWithIndexToValueMetrics.customMetrics.map {

[error] [warn]

[error] [warn] /.../sql/core/src/main/scala/org/apache/spark/sql/execution/command/tables.scala:959: match may not be exhaustive.

[error] It would fail on the following input: CatalogTableType(_)

[error] [warn] val tableTypeString = metadata.tableType match {

[error] [warn]

[error] [warn] /.../sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala:923: match may not be exhaustive.

[error] It would fail on the following input: CatalogTableType(_)

[error] [warn] hiveTable.setTableType(table.tableType match {

[error] [warn]

```
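As a rough illustration of the `CatalogTableType(_)` warnings above: matching on a case class against a handful of known instances leaves every other instance uncovered, so the compiler can report the match as non-exhaustive. The sketch below uses a hypothetical `TableKind` type rather than Spark's own class, and adding a default case is shown as one common way to address such a warning, not necessarily the exact change made in this PR.

```scala
object ExhaustiveMatch {
  // Hypothetical stand-in for a case class with a few well-known instances.
  final case class TableKind(name: String)

  object TableKind {
    val Managed = TableKind("MANAGED")
    val External = TableKind("EXTERNAL")
    val View = TableKind("VIEW")
  }

  def describe(kind: TableKind): String = kind match {
    case TableKind.Managed => "MANAGED"
    case TableKind.External => "EXTERNAL"
    case TableKind.View => "VIEW"
    // Without this default case, any other TableKind(_) value would be
    // unmatched and fail at runtime with a scala.MatchError.
    case other =>
      throw new IllegalArgumentException(s"Unknown table kind: ${other.name}")
  }
}
```

The default case turns a potential `scala.MatchError` into an explicit, descriptive exception and makes the match total.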

## How was this patch tested?

Manually built with Scala 2.12.

Closes #22039 from ueshin/issues/SPARK-25036/fix_match.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

## What changes were proposed in this pull request?

The design details are attached to the JIRA issue [here](https://drive.google.com/file/d/1zKm3aNZ3DpsqIuoMvRsf0kkDkXsAasxH/view).

A high-level overview of the changes:

- Enhance the qualifier to be more than one string

- Add support for storing the qualifier. Enhance `lookupRelation` to keep the qualifier appropriately.

- Enhance the table-matching column resolution algorithm to account for the qualifier being more than one string.