This commit makes sure that, for File Source V2, partition filters are also taken into account when the readDataSchema is empty.

This is the case for queries like:

SELECT count(*) FROM tbl WHERE partition=foo

SELECT input_file_name() FROM tbl WHERE partition=foo

### What changes were proposed in this pull request?

As described in SPARK-35985, there is a bug in the File Datasource V2 that prevents it from pushing partition filters down to the FileScanner for queries like the ones listed above.

### Why are the changes needed?

If partition filters are not pushed down, the whole dataset is scanned even though only one partition is of interest.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

An extra test was added which relies on the output of explain, as is done in other places.

Closes #33191 from steven-aerts/SPARK-35985.

Authored-by: Steven Aerts <steven.aerts@airties.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit f06aa4a3f3)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When executing the `SHOW CREATE TABLE` command, we should not lose the nullability flag of a table column that is specified as `NOT NULL`.

### Why are the changes needed?

[SPARK-36012](https://issues.apache.org/jira/browse/SPARK-36012)

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a UT for V1; V2 is covered by an existing UT.

Closes #33219 from Peng-Lei/SPARK-36012.

Authored-by: PengLei <peng.8lei@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

(cherry picked from commit e071721a51)

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

As described in #32831, Spark has compatibility issues when querying a view created by an

older version. The root cause is that Spark changed the auto-generated alias name. To avoid

this in the future, we could ask the user to specify explicit column names when creating

a view.

### Why are the changes needed?

Avoid compatibility issues when querying a view.

### Does this PR introduce _any_ user-facing change?

Yes. Users will get an error when running the query below after this change:

```

CREATE OR REPLACE VIEW v AS SELECT CAST(t.a AS INT), to_date(t.b, 'yyyyMMdd') FROM t

```

### How was this patch tested?

not yet

Closes #32832 from linhongliu-db/SPARK-35686-no-auto-alias.

Authored-by: Linhong Liu <linhong.liu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In a secure YARN cluster, the YARN client unnecessarily tries to generate delegation tokens for HBase, Kafka, and Hive even when the user application does not use these services. This adds delay when submitting a Spark application to a YARN cluster.

Also, during the HBase delegation token generation step at application submission, `HBaseDelegationTokenProvider` prints a full exception stack trace, which produces a noisy warning.

Besides printing the stack trace, application submission takes longer because the connection to the HBase master is retried multiple times before giving up. So, if HBase is not used in the user application, it is better to suggest that the user disable HBase delegation token generation.

This PR avoids printing the full exception stack trace by printing just the exception name, and adds a message suggesting to disable delegation token generation if the service is not used in the Spark application.

eg: `If HBase is not used, set spark.security.credentials.hbase.enabled to false`
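
The idea described above can be sketched in a few lines of Java. This is only an illustration, not the code in `HBaseDelegationTokenProvider`: the class `TokenWarning`, the method `build`, and the exact wording of the message are assumptions made for this example.

```java
// Hypothetical helper for illustration; not Spark's actual implementation.
final class TokenWarning {
    private TokenWarning() {}

    // Builds a one-line warning that carries the exception class name and a
    // hint about the config key, instead of a full stack trace.
    static String build(String service, String disableKey, Throwable error) {
        return "Failed to get token from service " + service
            + " due to " + error.getClass().getName()
            + ". If " + service + " is not used, set " + disableKey + " to false";
    }
}
```

Calling `TokenWarning.build("hbase", "spark.security.credentials.hbase.enabled", e)` and passing the result to the logger without the throwable keeps the WARN entry on a single line.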

### Why are the changes needed?

To avoid printing the full exception stack trace in the WARN log.

#### Before the fix

----------------

```

spark-shell --master yarn

.......

.......

21/06/12 14:29:41 WARN security.HBaseDelegationTokenProvider: Failed to get token from service hbase

java.lang.reflect.InvocationTargetException

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.security.HBaseDelegationTokenProvider.obtainDelegationTokensWithHBaseConn(HBaseDelegationTokenProvider.scala:93)

at org.apache.spark.deploy.security.HBaseDelegationTokenProvider.obtainDelegationTokens(HBaseDelegationTokenProvider.scala:60)

at org.apache.spark.deploy.security.HadoopDelegationTokenManager$$anonfun$6.apply(HadoopDelegationTokenManager.scala:166)

at org.apache.spark.deploy.security.HadoopDelegationTokenManager$$anonfun$6.apply(HadoopDelegationTokenManager.scala:164)

at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)

at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)

at scala.collection.Iterator$class.foreach(Iterator.scala:891)

at scala.collection.AbstractIterator.foreach(Iterator.scala:1334)

at scala.collection.MapLike$DefaultValuesIterable.foreach(MapLike.scala:206)

at scala.collection.TraversableLike$class.flatMap(TraversableLike.scala:241)

at scala.collection.AbstractTraversable.flatMap(Traversable.scala:104)

at org.apache.spark.deploy.security.HadoopDelegationTokenManager.obtainDelegationTokens(HadoopDelegationTokenManager.scala:164)

```

#### After the fix

------------

```

spark-shell --master yarn

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

21/06/13 02:10:02 WARN security.HBaseDelegationTokenProvider: Failed to get token from service hbase due to java.lang.reflect.InvocationTargetException Retrying to fetch HBase security token with hbase connection parameter.

21/06/13 02:10:40 WARN security.HBaseDelegationTokenProvider: Failed to get token from service hbase java.lang.reflect.InvocationTargetException. If HBase is not used, set spark.security.credentials.hbase.enabled to false

21/06/13 02:10:47 WARN cluster.YarnSchedulerBackend$YarnSchedulerEndpoint: Attempted to request executors before the AM has registered!

```

### Does this PR introduce _any_ user-facing change?

Yes, in the log, it avoids printing full Exception stack trace.

Instead, it prints this:

**WARN security.HBaseDelegationTokenProvider: Failed to get token from service hbase java.lang.reflect.InvocationTargetException. If HBase is not used, set spark.security.credentials.hbase.enabled to false**

### How was this patch tested?

Tested manually as it can be verified only in a secure cluster

Closes #32894 from vinodkc/br_fix_Hbase_DT_Exception_stack_printing.

Authored-by: Vinod KC <vinod.kc.in@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Check all day-time interval types in HiveInspectors tests.

### Why are the changes needed?

New tests should improve test coverage for day-time interval types.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added UT.

Closes #33036 from AngersZhuuuu/SPARK-35733.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Make the ANSI flag part of expression `Cast`'s parameter list, instead of fetching it from the sessional SQLConf.

### Why are the changes needed?

For views, it is important to show consistent results even if the ANSI configuration is different in the running session. This is why many expressions such as `Add` and `Divide` make the ANSI flag part of their case class parameter lists.

We should make it consistent for the expression `Cast`
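
To make the motivation concrete, here is a minimal Java sketch of an expression that captures the flag at construction time instead of reading it at evaluation time. It is not Spark's `Cast`: the class `StringToIntCast`, the static field `sessionAnsiEnabled`, and the error message are hypothetical stand-ins. The only behavior it borrows is that an invalid string-to-int cast raises an error in ANSI mode and yields null otherwise.

```java
// Hypothetical model of a cast expression; not Spark's Cast class.
final class StringToIntCast {
    // Stand-in for a mutable, session-level SQL configuration.
    static boolean sessionAnsiEnabled = false;

    // The flag is part of the expression, fixed when it is created.
    private final boolean ansiEnabled;

    StringToIntCast(boolean ansiEnabled) {
        this.ansiEnabled = ansiEnabled;
    }

    // Snapshots the session setting once, at creation time.
    static StringToIntCast fromSession() {
        return new StringToIntCast(sessionAnsiEnabled);
    }

    // ANSI mode raises on invalid input; non-ANSI mode returns null.
    Integer eval(String input) {
        try {
            return Integer.valueOf(input.trim());
        } catch (NumberFormatException e) {
            if (ansiEnabled) {
                throw new IllegalArgumentException("invalid input for INT: " + input, e);
            }
            return null;
        }
    }
}
```

An instance created while the session flag is off keeps returning null for bad input even after the session flag is switched on, which is the consistency a stored view needs.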

### Does this PR introduce _any_ user-facing change?

Yes, the `Cast` inside a View always behaves the same, independent of the ANSI mode SQL configuration in the current session.

### How was this patch tested?

Existing UT

Closes #33027 from gengliangwang/ansiFlagInCast.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

Check all year-month interval types in HiveInspectors tests.

### Why are the changes needed?

To improve test coverage.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT.

Closes #32970 from AngersZhuuuu/SPARK-35772.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/33001 , to provide a more direct fix.

The regression in 3.1 was caused by a parser change that allows the parser to return CHAR/VARCHAR types. We should have replaced CHAR/VARCHAR with STRING before the data type flows into the query engine; however, `OrcUtils` was missed.

When reading ORC files, the task side reads the real schema from the ORC file metadata and then applies filter pushdown. For some reason, the implementation converts the ORC schema to a Spark schema before filter pushdown, and this step does not strip CHAR/VARCHAR. Note that for Parquet we use the Parquet schema directly in filter pushdown, so it does not have this problem.

This PR proposes to replace the CHAR/VARCHAR with STRING when turning ORC schema to Spark schema.
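
The replacement step can be pictured with a small Java sketch that works on type strings. It is an assumption-laden illustration, not the `OrcUtils` change itself: the class `CharVarcharToString`, its methods, and the use of plain strings to represent types are all hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration; Spark operates on its own type objects.
final class CharVarcharToString {
    private CharVarcharToString() {}

    // Replaces char(n)/varchar(n) anywhere in a type string, including nested types.
    static String replaceInType(String type) {
        return type.replaceAll("(?i)\\b(?:var)?char\\(\\s*\\d+\\s*\\)", "string");
    }

    // Applies the replacement to every column while keeping column order.
    static Map<String, String> replaceInSchema(Map<String, String> schema) {
        Map<String, String> result = new LinkedHashMap<>();
        schema.forEach((column, type) -> result.put(column, replaceInType(type)));
        return result;
    }
}
```

With this sketch, a column typed `struct<a:varchar(10),b:int>` becomes `struct<a:string,b:int>`, so later stages such as filter pushdown never see CHAR/VARCHAR.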

### Why are the changes needed?

A more direct bug fix.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing tests

Closes #33030 from cloud-fan/help.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

The char/varchar type should be mapped to orc's string type too, see https://orc.apache.org/docs/types.html

### Why are the changes needed?

fix a regression

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

new tests

Closes #33001 from yaooqinn/SPARK-35700.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

There might be `SparkPlan` nodes where canonicalization on executor side can cause issues. This is a follow-up fix to conversation https://github.com/apache/spark/pull/32885/files#r651019687.

### Why are the changes needed?

To avoid potential NPEs when canonicalization happens on executors.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #32947 from peter-toth/SPARK-35798-fix-sparkplan.sqlcontext-usage.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`RelationConversions` is actually an optimization rule, although it is executed in the analysis phase.

A view is designed to capture only semantic configs, so we should ignore the optimization

configs that are used in the analysis phase.

This PR also fixes the issue that view resolution always uses the default value for uncaptured configs.

### Why are the changes needed?

Bugfix

### Does this PR introduce _any_ user-facing change?

Yes, after this PR view resolution will respect the values set in the current session for the below configs

```

"spark.sql.hive.convertMetastoreParquet"

"spark.sql.hive.convertMetastoreOrc"

"spark.sql.hive.convertInsertingPartitionedTable"

"spark.sql.hive.convertMetastoreCtas"

```

### How was this patch tested?

By running new UT:

```

$ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly *HiveSQLViewSuite"

```

Closes #32941 from linhongliu-db/SPARK-35792-ignore-convert-configs.

Authored-by: Linhong Liu <linhong.liu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This upgrades the default Hadoop version from 3.2.1 to 3.3.1. The changes simply update the version number and the dependency file.

### Why are the changes needed?

Hadoop 3.3.1 just came out, which comes with many client-side improvements such as for S3A/ABFS (20% faster when accessing S3). These are important for users who want to use Spark in a cloud environment.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

- Existing unit tests in Spark

- Manually tested using my S3 bucket for event log dir:

```

bin/spark-shell \

-c spark.hadoop.fs.s3a.access.key=$AWS_ACCESS_KEY_ID \

-c spark.hadoop.fs.s3a.secret.key=$AWS_SECRET_ACCESS_KEY \

-c spark.eventLog.enabled=true \

-c spark.eventLog.dir=s3a://<my-bucket>

```

- Manually tested against docker-based YARN dev cluster, by running `SparkPi`.

Closes #30135 from sunchao/SPARK-29250.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Since [SPARK-35286](https://issues.apache.org/jira/browse/SPARK-35286), `tmpOutputFile`, `tmpErrOutputFile` and `sessionDirs` are no longer generated, so we can remove `HiveClientImpl.closeState` to avoid exceptions like this:

```

java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.session.SessionState.getTmpErrOutputFile()Ljava/io/File;

```

### Why are the changes needed?

1. Avoid incompatible exceptions.

2. Remove useless code.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

- Pass the GitHub Action

- Manual test:

Execute

```

mvn clean install -DskipTests -pl sql/hive -am

mvn test -pl sql/hive -DwildcardSuites=org.apache.spark.sql.hive.client.VersionsSuite -Dtest=none

```

**Before**

```

Run completed in 17 minutes, 18 seconds.

Total number of tests run: 867

Suites: completed 2, aborted 0

Tests: succeeded 867, failed 0, canceled 0, ignored 1, pending 0

All tests passed.

15:04:02.407 WARN org.apache.hadoop.hive.metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 2.3.0

15:04:02.408 WARN org.apache.hadoop.hive.metastore.ObjectStore: setMetaStoreSchemaVersion called but recording version is disabled: version = 2.3.0, comment = Set by MetaStore yangjie010.2.30.21

15:04:02.441 WARN org.apache.hadoop.hive.metastore.ObjectStore: Failed to get database default, returning NoSuchObjectException

15:04:03.140 ERROR org.apache.spark.util.Utils: Uncaught exception in thread shutdown-hook-0

java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.session.SessionState.getTmpErrOutputFile()Ljava/io/File;

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$closeState$1(HiveClientImpl.scala:168)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$withHiveState$1(HiveClientImpl.scala:312)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:243)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:242)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:292)

at org.apache.spark.sql.hive.client.HiveClientImpl.closeState(HiveClientImpl.scala:158)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$new$1(HiveClientImpl.scala:175)

at org.apache.spark.util.SparkShutdownHook.run(ShutdownHookManager.scala:214)

at org.apache.spark.util.SparkShutdownHookManager.$anonfun$runAll$2(ShutdownHookManager.scala:188)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1994)

at org.apache.spark.util.SparkShutdownHookManager.$anonfun$runAll$1(ShutdownHookManager.scala:188)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at scala.util.Try$.apply(Try.scala:213)

at org.apache.spark.util.SparkShutdownHookManager.runAll(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anon$2.run(ShutdownHookManager.scala:178)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

15:04:03.141 WARN org.apache.hadoop.util.ShutdownHookManager: ShutdownHook '$anon$2' failed, java.util.concurrent.ExecutionException: java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.session.SessionState.getTmpErrOutputFile()Ljava/io/File;

java.util.concurrent.ExecutionException: java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.session.SessionState.getTmpErrOutputFile()Ljava/io/File;

at java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.util.concurrent.FutureTask.get(FutureTask.java:206)

at org.apache.hadoop.util.ShutdownHookManager.executeShutdown(ShutdownHookManager.java:124)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:95)

Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.hive.ql.session.SessionState.getTmpErrOutputFile()Ljava/io/File;

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$closeState$1(HiveClientImpl.scala:168)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$withHiveState$1(HiveClientImpl.scala:312)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:243)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:242)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:292)

at org.apache.spark.sql.hive.client.HiveClientImpl.closeState(HiveClientImpl.scala:158)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$new$1(HiveClientImpl.scala:175)

at org.apache.spark.util.SparkShutdownHook.run(ShutdownHookManager.scala:214)

at org.apache.spark.util.SparkShutdownHookManager.$anonfun$runAll$2(ShutdownHookManager.scala:188)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1994)

at org.apache.spark.util.SparkShutdownHookManager.$anonfun$runAll$1(ShutdownHookManager.scala:188)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at scala.util.Try$.apply(Try.scala:213)

at org.apache.spark.util.SparkShutdownHookManager.runAll(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anon$2.run(ShutdownHookManager.scala:178)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

```

**After**

```

Run completed in 11 minutes, 41 seconds.

Total number of tests run: 867

Suites: completed 2, aborted 0

Tests: succeeded 867, failed 0, canceled 0, ignored 1, pending 0

All tests passed.

```

Closes #32693 from LuciferYang/SPARK-35556.

Lead-authored-by: YangJie <yangjie01@baidu.com>

Co-authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

Remove commons-httpclient as a direct dependency for Hadoop-3.2 profile.

The Hadoop-2.7 profile distribution still has it: hadoop-client has a compile dependency on commons-httpclient, so we cannot remove it for the Hadoop-2.7 profile.

```

[INFO] +- org.apache.hadoop:hadoop-client:jar:2.7.4:compile

[INFO] | +- org.apache.hadoop:hadoop-common:jar:2.7.4:compile

[INFO] | | +- commons-cli:commons-cli:jar:1.2:compile

[INFO] | | +- xmlenc:xmlenc:jar:0.52:compile

[INFO] | | +- commons-httpclient:commons-httpclient:jar:3.1:compile

```

### Why are the changes needed?

Spark is pulling in commons-httpclient as a dependency directly. commons-httpclient went EOL years ago and there are most likely CVEs not being reported against it, thus we should remove it.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

- Existing unit tests

- Checked the dependency tree before and after introducing the changes

Before:

```

./build/mvn dependency:tree -Phadoop-3.2 | grep -i "commons-httpclient"

Using `mvn` from path: /usr/bin/mvn

[INFO] +- commons-httpclient:commons-httpclient:jar:3.1:compile

[INFO] | +- commons-httpclient:commons-httpclient:jar:3.1:provided

```

After

```

./build/mvn dependency:tree | grep -i "commons-httpclient"

Using `mvn` from path: /Users/sumeet.gajjar/cloudera/upstream-spark/build/apache-maven-3.6.3/bin/mvn

```

P.S. Reopening this since [spark upgraded](463daabd5a) its `hive.version` to `2.3.9` which does not have a dependency on `commons-httpclient`.

Closes #32912 from sumeetgajjar/SPARK-35429.

Authored-by: Sumeet Gajjar <sumeetgajjar93@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Extend `YearMonthIntervalType` to support interval fields. Valid interval field values:

- 0 (YEAR)

- 1 (MONTH)

After the changes, the following year-month interval types are supported:

1. `YearMonthIntervalType(0, 0)` or `YearMonthIntervalType(YEAR, YEAR)`

2. `YearMonthIntervalType(0, 1)` or `YearMonthIntervalType(YEAR, MONTH)`. **It is the default one**.

3. `YearMonthIntervalType(1, 1)` or `YearMonthIntervalType(MONTH, MONTH)`
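
The three supported combinations suggest a simple validity rule: both fields are YEAR or MONTH, and the start field does not come after the end field. The Java sketch below encodes that rule. It is a hypothetical model (`YearMonthFields` and its members are invented for this example), and the rule itself is inferred from the list above rather than taken from Spark's source.

```java
// Hypothetical model of the (startField, endField) pair; not Spark's class.
final class YearMonthFields {
    static final int YEAR = 0;
    static final int MONTH = 1;

    final int start;
    final int end;

    YearMonthFields(int start, int end) {
        // Assumption: fields must be YEAR or MONTH, and start must not come after end.
        if (start < YEAR || end > MONTH || start > end) {
            throw new IllegalArgumentException("invalid interval fields: " + start + ", " + end);
        }
        this.start = start;
        this.end = end;
    }

    // The default type spans YEAR to MONTH.
    static YearMonthFields defaultType() {
        return new YearMonthFields(YEAR, MONTH);
    }
}
```

Under this rule `new YearMonthFields(1, 0)` is rejected, which matches the absence of a `(MONTH, YEAR)` entry in the list.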

Closes #32825

### Why are the changes needed?

In the current implementation, Spark supports only `interval year to month`, but the SQL standard allows specifying the start and end fields. The changes allow Spark to follow the ANSI SQL standard more precisely.

### Does this PR introduce _any_ user-facing change?

Yes but `YearMonthIntervalType` has not been released yet.

### How was this patch tested?

By existing test suites.

Closes #32909 from MaxGekk/add-fields-to-YearMonthIntervalType.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Instantiate a new Hive client through `Hive.getWithoutRegisterFns(conf, false)` instead of `Hive.get(conf)`, if `Hive` version is >= '2.3.9' (the built-in version).

### Why are the changes needed?

[HIVE-10319](https://issues.apache.org/jira/browse/HIVE-10319) introduced a new API `get_all_functions` which is only supported in Hive 1.3.0/2.0.0 and up. As a result, when Spark 3.x talks to an HMS service of version 1.2 or lower, the following error occurs:

```

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.thrift.TApplicationException: Invalid method name: 'get_all_functions'

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3897)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:248)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:231)

... 96 more

Caused by: org.apache.thrift.TApplicationException: Invalid method name: 'get_all_functions'

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:79)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_all_functions(ThriftHiveMetastore.java:3845)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_all_functions(ThriftHiveMetastore.java:3833)

```

The `get_all_functions` is called only when `doRegisterAllFns` is set to true:

```java

private Hive(HiveConf c, boolean doRegisterAllFns) throws HiveException {

conf = c;

if (doRegisterAllFns) {

registerAllFunctionsOnce();

}

}

```

What this does is register all Hive permanent functions defined in HMS in Hive's `FunctionRegistry` class by iterating through the results of `get_all_functions`. For Spark, this seems unnecessary, as it loads Hive permanent (not built-in) UDFs by calling the HMS API directly, i.e., `get_function`. The `FunctionRegistry` is only used to load Hive built-in functions that are not supported by Spark. At this time, that applies only to `histogram_numeric`.

[HIVE-21563](https://issues.apache.org/jira/browse/HIVE-21563) introduced a new API `getWithoutRegisterFns` which skips the above registration and is available in Hive 2.3.9. Therefore, Spark should adopt it to avoid the cost.
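
The version gate mentioned at the top of this description can be sketched as a numeric comparison of dotted version strings. This is a hedged illustration only: `HiveVersionCheck`, `compare`, and `canSkipFunctionRegistration` are hypothetical names, and Spark's actual check may be expressed differently (for example, against its own Hive client version objects).

```java
// Hypothetical helper for illustration; not part of Spark or Hive.
final class HiveVersionCheck {
    private HiveVersionCheck() {}

    // Compares dotted numeric versions such as "2.3.9" and "2.3.10" component by component.
    static int compare(String left, String right) {
        String[] a = left.split("\\.");
        String[] b = right.split("\\.");
        for (int i = 0; i < Math.max(a.length, b.length); i++) {
            int x = i < a.length ? Integer.parseInt(a[i]) : 0;
            int y = i < b.length ? Integer.parseInt(b[i]) : 0;
            if (x != y) {
                return Integer.compare(x, y);
            }
        }
        return 0;
    }

    // Assumption: the gate is a plain ">= 2.3.9" test on the Hive client version.
    static boolean canSkipFunctionRegistration(String hiveClientVersion) {
        return compare(hiveClientVersion, "2.3.9") >= 0;
    }
}
```

Comparing components as integers matters here: a plain string comparison would order `2.3.10` before `2.3.9`.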

### Does this PR introduce _any_ user-facing change?

Yes. With this fix, Spark should now be able to talk to an HMS server running Hive 1.2.x or lower.

### How was this patch tested?

Manually started an HMS server of Hive version 1.2.2. Without the PR it failed with the above exception. With the PR the error disappeared and I could successfully perform common operations such as create table, create database, list tables, etc.

Closes #32887 from sunchao/SPARK-35321-new.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

Extend DayTimeIntervalType to support interval fields. Valid interval field values:

- 0 (DAY)

- 1 (HOUR)

- 2 (MINUTE)

- 3 (SECOND)

After the changes, the following day-time interval types are supported:

1. `DayTimeIntervalType(0, 0)` or `DayTimeIntervalType(DAY, DAY)`

2. `DayTimeIntervalType(0, 1)` or `DayTimeIntervalType(DAY, HOUR)`

3. `DayTimeIntervalType(0, 2)` or `DayTimeIntervalType(DAY, MINUTE)`

4. `DayTimeIntervalType(0, 3)` or `DayTimeIntervalType(DAY, SECOND)`. **It is the default one**. The second fraction precision is microseconds.

5. `DayTimeIntervalType(1, 1)` or `DayTimeIntervalType(HOUR, HOUR)`

6. `DayTimeIntervalType(1, 2)` or `DayTimeIntervalType(HOUR, MINUTE)`

7. `DayTimeIntervalType(1, 3)` or `DayTimeIntervalType(HOUR, SECOND)`

8. `DayTimeIntervalType(2, 2)` or `DayTimeIntervalType(MINUTE, MINUTE)`

9. `DayTimeIntervalType(2, 3)` or `DayTimeIntervalType(MINUTE, SECOND)`

10. `DayTimeIntervalType(3, 3)` or `DayTimeIntervalType(SECOND, SECOND)`
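
The ten types listed above are exactly the pairs of fields in which the start field does not come after the end field. A short Java sketch can enumerate them; `DayTimeFieldPairs` is a hypothetical class written for this example, and the printed form simply mirrors the notation used in the list.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical enumerator for illustration; not Spark code.
final class DayTimeFieldPairs {
    // Field names indexed by their numeric value: 0 = DAY ... 3 = SECOND.
    static final String[] FIELDS = {"DAY", "HOUR", "MINUTE", "SECOND"};

    private DayTimeFieldPairs() {}

    // Lists every (start, end) pair with start <= end, in the same order as the list above.
    static List<String> all() {
        List<String> pairs = new ArrayList<>();
        for (int start = 0; start < FIELDS.length; start++) {
            for (int end = start; end < FIELDS.length; end++) {
                pairs.add("DayTimeIntervalType(" + FIELDS[start] + ", " + FIELDS[end] + ")");
            }
        }
        return pairs;
    }
}
```

With four fields this yields 4 + 3 + 2 + 1 = 10 combinations, matching the count in the list.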

### Why are the changes needed?

In the current implementation, Spark supports only `interval day to second`, but the SQL standard allows specifying the start and end fields. The changes allow Spark to follow the ANSI SQL standard more precisely.

### Does this PR introduce _any_ user-facing change?

Yes but `DayTimeIntervalType` has not been released yet.

### How was this patch tested?

By existing test suites.

Closes #32849 from MaxGekk/day-time-interval-type-units.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR upgrades built-in Hive to 2.3.9. Hive 2.3.9 changes:

- [HIVE-17155] - findConfFile() in HiveConf.java has some issues with the conf path

- [HIVE-24797] - Disable validate default values when parsing Avro schemas

- [HIVE-24608] - Switch back to get_table in HMS client for Hive 2.3.x

- [HIVE-21200] - Vectorization: date column throwing java.lang.UnsupportedOperationException for parquet

- [HIVE-21563] - Improve Table#getEmptyTable performance by disabling registerAllFunctionsOnce

- [HIVE-19228] - Remove commons-httpclient 3.x usage

### Why are the changes needed?

Fix regression caused by AVRO-2035.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #32750 from wangyum/SPARK-34512.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR groups exception messages in `sql/hive/src/main/scala/org/apache/spark/sql/hive/client`.

### Why are the changes needed?

It will largely help with the standardization of error messages and their maintenance.

### Does this PR introduce _any_ user-facing change?

No. Error messages remain unchanged.

### How was this patch tested?

No new tests - pass all original tests to make sure it doesn't break any existing behavior.

Closes #32763 from beliefer/SPARK-35058.

Lead-authored-by: beliefer <beliefer@163.com>

Co-authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, Spark eagerly executes commands on the caller side of `QueryExecution`, which is a bit hacky as `QueryExecution` is not aware of it and leads to confusion.

For example, if you run `sql("show tables").collect()`, you will see two queries with identical query plans in the web UI.

The first query is triggered at `Dataset.logicalPlan`, which eagerly executes the command.

The second query is triggered at `Dataset.collect`, which is the normal query execution.

From the web UI, it's hard to tell that these two queries are caused by eager command execution.

This PR proposes to move the eager command execution to `QueryExecution`, and to turn the command plan into `CommandResult` to indicate that the command has already been executed. Now `sql("show tables").collect()` still triggers two queries, but the query plans are not identical. The second query becomes:

In addition to the UI improvements, this PR also has other benefits:

1. Simplifies the code, as the caller side no longer needs to worry about eager command execution; `QueryExecution` takes care of it.

2. It helps https://github.com/apache/spark/pull/32442 , where there can be more plan nodes above commands, and we need to replace commands with something like local relation that produces unsafe rows.

### Why are the changes needed?

Explained above.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

existing tests

Closes #32513 from beliefer/SPARK-35378.

Lead-authored-by: gengjiaan <gengjiaan@360.cn>

Co-authored-by: beliefer <beliefer@163.com>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Co-authored-by: Wenchen Fan <cloud0fan@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR groups exception messages in `sql/hive/src/main/scala/org/apache/spark/sql/hive/execution`.

### Why are the changes needed?

It will largely help with the standardization of error messages and their maintenance.

### Does this PR introduce _any_ user-facing change?

No. Error messages remain unchanged.

### How was this patch tested?

No new tests - pass all original tests to make sure it doesn't break any existing behavior.

Closes #32694 from beliefer/SPARK-35059.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to support special datetime values introduced by #25708 and by #25716 only in typed literals, and don't recognize them in parsing strings to dates/timestamps. The following string values are supported only in typed timestamp literals:

- `epoch [zoneId]` - `1970-01-01 00:00:00+00 (Unix system time zero)`

- `today [zoneId]` - midnight today.

- `yesterday [zoneId]` - midnight yesterday

- `tomorrow [zoneId]` - midnight tomorrow

- `now` - current query start time.

For example:

```sql

spark-sql> SELECT timestamp 'tomorrow';

2019-09-07 00:00:00

```

Similarly, the following special date values are supported only in typed date literals:

- `epoch [zoneId]` - `1970-01-01`

- `today [zoneId]` - the current date in the time zone specified by `spark.sql.session.timeZone`.

- `yesterday [zoneId]` - the current date -1

- `tomorrow [zoneId]` - the current date + 1

- `now` - the date of running the current query. It has the same notion as `today`.

For example:

```sql

spark-sql> SELECT date 'tomorrow' - date 'yesterday';

2

```
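
The special date values above can be modeled with `java.time`. The following Java sketch resolves a special string against one fixed "current date", so every use within a query sees the same value. It is not Spark's parser: `SpecialDates.resolve` is a hypothetical helper, and the optional `[zoneId]` suffix is deliberately not handled.

```java
import java.time.LocalDate;
import java.util.Locale;
import java.util.Optional;

// Hypothetical resolver for illustration; not Spark's implementation.
final class SpecialDates {
    private SpecialDates() {}

    // Resolves a special date string against a fixed current date.
    // Assumption: the optional [zoneId] suffix is not supported in this sketch.
    static Optional<LocalDate> resolve(String value, LocalDate currentDate) {
        switch (value.trim().toLowerCase(Locale.ROOT)) {
            case "epoch":
                return Optional.of(LocalDate.ofEpochDay(0));
            case "today":
            case "now":
                return Optional.of(currentDate);
            case "yesterday":
                return Optional.of(currentDate.minusDays(1));
            case "tomorrow":
                return Optional.of(currentDate.plusDays(1));
            default:
                // Not a special value; the caller falls back to regular date parsing.
                return Optional.empty();
        }
    }
}
```

Because the current date is passed in once rather than read from the system clock on each call, the difference between `tomorrow` and `yesterday` is always two days, regardless of where or when the conversion runs.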

### Why are the changes needed?

In the current implementation, Spark supports the special date/timestamp values in any input string cast to dates/timestamps, which leads to the following problems:

- If executors have different system time, the result is inconsistent, and random. Column values depend on where the conversions were performed.

- The special values play the role of distributed non-deterministic functions though users might think of the values as constants.

### Does this PR introduce _any_ user-facing change?

Yes, but the probability that users are affected should be small.

### How was this patch tested?

By running existing test suites:

```

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z interval.sql"

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z date.sql"

$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite -- -z timestamp.sql"

$ build/sbt "test:testOnly *DateTimeUtilsSuite"

```

Closes #32714 from MaxGekk/remove-datetime-special-values.

Lead-authored-by: Max Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

After SPARK-29291 and SPARK-33352, there are still some compilation warnings about `procedure syntax is deprecated` as follows:

```

[WARNING] [Warn] /spark/core/src/main/scala/org/apache/spark/MapOutputTracker.scala:723: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `registerMergeResult`'s return type

[WARNING] [Warn] /spark/core/src/main/scala/org/apache/spark/MapOutputTracker.scala:748: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `unregisterMergeResult`'s return type

[WARNING] [Warn] /spark/core/src/test/scala/org/apache/spark/util/collection/ExternalAppendOnlyMapSuite.scala:223: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `testSimpleSpillingForAllCodecs`'s return type

[WARNING] [Warn] /spark/mllib-local/src/test/scala/org/apache/spark/ml/linalg/BLASBenchmark.scala:53: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `runBLASBenchmark`'s return type

[WARNING] [Warn] /spark/sql/core/src/main/scala/org/apache/spark/sql/execution/command/DataWritingCommand.scala:110: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `assertEmptyRootPath`'s return type

[WARNING] [Warn] /spark/sql/hive/src/test/scala/org/apache/spark/sql/hive/execution/SQLQuerySuite.scala:602: [deprecation | origin= | version=2.13.0] procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `executeCTASWithNonEmptyLocation`'s return type

```

So the main change of this PR is to clean up these compilation warnings.

### Why are the changes needed?

Eliminate compilation warnings in Scala 2.13. This change should also be compatible with Scala 2.12.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass the Jenkins or GitHub Action

Closes #32669 from LuciferYang/re-clean-procedure-syntax.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR fixes `HiveExternalCatalogVersionsSuite`.

With this change, only the `<major>.<minor>` part of the version is set as `spark.sql.hive.metastore.version`.
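As a rough illustration of reducing a full version string to its major and minor components, consider the sketch below. The class `VersionPrefix` and the method `majorMinor` are hypothetical and are not the code used by the suite.

```java
// Hypothetical helper, not the code used by the suite: keeps only the
// <major>.<minor> prefix of a dotted version string.
final class VersionPrefix {
    private VersionPrefix() {}

    static String majorMinor(String version) {
        String[] parts = version.split("\\.");
        if (parts.length < 2) {
            throw new IllegalArgumentException(
                "Expected at least <major>.<minor>: " + version);
        }
        return parts[0] + "." + parts[1];
    }
}
```

For instance, `VersionPrefix.majorMinor("2.3.8")` yields `2.3`.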

### Why are the changes needed?

I'm personally checking whether all the tests pass with Java 11 for the current `master` and I found `HiveExternalCatalogVersionsSuite` fails.

The reason is that Spark 3.0.2 and 3.1.1 don't accept `2.3.8` as a Hive metastore version.

`HiveExternalCatalogVersionsSuite` downloads Spark releases from https://dist.apache.org/repos/dist/release/spark/ and runs tests for each release. The Spark releases are currently `3.0.2` and `3.1.1` for the current `master`.

e47e615c0e/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveExternalCatalogVersionsSuite.scala (L239-L259)

With Java 11, the suite runs with a Hive metastore version which corresponds to the built-in Hive version, which is `2.3.8` for the current `master`.

20750a3f9e/sql/hive/src/test/scala/org/apache/spark/sql/hive/HiveExternalCatalogVersionsSuite.scala (L62-L66)

But `branch-3.0` and `branch-3.1` don't accept `2.3.8`, so the suite fails with Java 11.

Another solution would be backporting SPARK-34271 (#31371) but after [a discussion](https://github.com/apache/spark/pull/32668#issuecomment-848435170), we prefer to fix the test.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests with CI.

Closes #32670 from sarutak/fix-version-suite-for-java11.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add the function type, such as "scala_udf", "python_udf", "java_udf", "hive", "built-in" to the `ExpressionInfo` for UDF.

### Why are the changes needed?

Make the `ExpressionInfo` of UDF more meaningful

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing and newly added UT

Closes #32587 from linhongliu-db/udf-language.

Authored-by: Linhong Liu <linhong.liu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/32411, to fix a mistake and use `sparkSession.sessionState.newHadoopConf`, which includes SQL configs, instead of `sparkSession.sparkContext.hadoopConfiguration`.

### Why are the changes needed?

fix mistake

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing tests

Closes #32618 from cloud-fan/follow1.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Kent Yao <yao@apache.org>

### What changes were proposed in this pull request?

CTAS with location clause acts as an insert overwrite. This can cause problems when there are subdirectories within a location directory.

This causes some users to accidentally wipe out directories with very important data. We should not allow CTAS with location to a non-empty directory.

### Why are the changes needed?

Hive already handled this scenario: HIVE-11319

Steps to reproduce:

```scala

sql("""create external table `demo_CTAS`( `comment` string) PARTITIONED BY (`col1` string, `col2` string) STORED AS parquet location '/tmp/u1/demo_CTAS'""")

sql("""INSERT OVERWRITE TABLE demo_CTAS partition (col1='1',col2='1') VALUES ('abc')""")

sql("select* from demo_CTAS").show

sql("""create table ctas1 location '/tmp/u2/ctas1' as select * from demo_CTAS""")

sql("select* from ctas1").show

sql("""create table ctas2 location '/tmp/u2' as select * from demo_CTAS""")

```

Before the fix: Both create table operations will succeed. But values in table ctas1 will be replaced by ctas2 accidentally.

After the fix: `create table ctas2...` will throw `AnalysisException`:

```

org.apache.spark.sql.AnalysisException: CREATE-TABLE-AS-SELECT cannot create table with location to a non-empty directory /tmp/u2 . To allow overwriting the existing non-empty directory, set 'spark.sql.legacy.allowNonEmptyLocationInCTAS' to true.

```
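The check behind this behavior can be pictured roughly as follows. This is a sketch under stated assumptions, not Spark's implementation: `LocationGuard` and `assertEmptyLocation` are hypothetical names, a local `java.io.File` stands in for the table location, and the `allowNonEmpty` flag plays the role of the legacy config.

```java
import java.io.File;

// Hypothetical guard, not Spark's implementation: rejects a target location
// that already contains entries unless the caller explicitly opts in.
final class LocationGuard {
    private LocationGuard() {}

    static void assertEmptyLocation(File location, boolean allowNonEmpty) {
        if (allowNonEmpty) {
            return; // the caller explicitly opted in to overwriting
        }
        // File#list returns null when the path is not a readable directory,
        // for example when nothing exists at the location yet.
        String[] entries = location.list();
        if (entries != null && entries.length > 0) {
            throw new IllegalStateException(
                "Cannot create table with location to a non-empty directory " + location);
        }
    }
}
```

Note that a subdirectory counts as an entry, which matches the reproduction above where `/tmp/u2` already contains `ctas1`.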

### Does this PR introduce _any_ user-facing change?

Yes, if the location directory is not empty, CTAS with location will throw AnalysisException

```

sql("""create table ctas2 location '/tmp/u2' as select * from demo_CTAS""")

```

```

org.apache.spark.sql.AnalysisException: CREATE-TABLE-AS-SELECT cannot create table with location to a non-empty directory /tmp/u2 . To allow overwriting the existing non-empty directory, set 'spark.sql.legacy.allowNonEmptyLocationInCTAS' to true.

```

`CREATE TABLE AS SELECT` with a non-empty `LOCATION` will throw `AnalysisException`. To restore the behavior before Spark 3.2, set `spark.sql.legacy.allowNonEmptyLocationInCTAS` to `true`; the default value is `false`.

Updated SQL migration guide.

### How was this patch tested?

Test case added in SQLQuerySuite.scala

Closes #32411 from vinodkc/br_fixCTAS_nonempty_dir.

Authored-by: Vinod KC <vinod.kc.in@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. In HadoopMapReduceCommitProtocol, create parent directory before renaming custom partition path staging files

2. In InMemoryCatalog and HiveExternalCatalog, create new partition directory before renaming old partition path

3. Check the return value of FileSystem#rename; if it is false, throw an exception to avoid silent data loss caused by the rename failure

4. Change DebugFilesystem#rename behavior to make it match HDFS's behavior (return false without renaming when the dst parent directory does not exist)

### Why are the changes needed?

Depending on the FileSystem#rename implementation, when the destination directory does not exist, the file system may

1. return false without renaming the file or throwing an exception (e.g. HDFS), or

2. create destination directory, rename files, and return true (e.g. LocalFileSystem)

In the first case above, renames in HadoopMapReduceCommitProtocol for custom partition path will fail silently if the destination partition path does not exist. Failed renames can happen when

1. dynamicPartitionOverwrite == true, the custom partition path directories are deleted by the job before the rename; or

2. the custom partition path directories do not exist before the job; or

3. something else goes wrong when the file system handles `rename`

The renames in InMemoryCatalog and HiveExternalCatalog for partition renaming have a similar issue.
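The pattern described above (create the destination's parent first, then treat a `false` return value as an error) can be sketched as follows. This is only an illustration: `SafeRename` and `renameOrThrow` are hypothetical names, and `java.io.File` is used as a stand-in for a Hadoop `FileSystem` because `File#renameTo` likewise signals failure by returning `false`.

```java
import java.io.File;

// Hypothetical helper using java.io.File as a stand-in for a Hadoop FileSystem;
// like FileSystem#rename, File#renameTo reports failure by returning false.
final class SafeRename {
    private SafeRename() {}

    static void renameOrThrow(File src, File dst) {
        File parent = dst.getParentFile();
        // Create the destination's parent first so the rename cannot fail
        // merely because that directory is missing.
        if (parent != null && !parent.isDirectory() && !parent.mkdirs()) {
            throw new IllegalStateException("Failed to create parent directory " + parent);
        }
        // Never ignore the boolean result: false means nothing was moved.
        if (!src.renameTo(dst)) {
            throw new IllegalStateException("Failed to rename " + src + " to " + dst);
        }
    }
}
```

Throwing on a `false` result turns a silent data loss into a visible job failure, which is the design choice this PR makes.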

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Modified DebugFilesystem#rename, and added new unit tests.

Without the fix in src code, five InsertSuite tests and one AlterTableRenamePartitionSuite test failed:

InsertSuite.SPARK-20236: dynamic partition overwrite with custom partition path (existing test with modified FS)

```

== Results ==

!== Correct Answer - 1 == == Spark Answer - 0 ==

struct<> struct<>

![2,1,1]

```

InsertSuite.SPARK-35106: insert overwrite with custom partition path

```

== Results ==

!== Correct Answer - 1 == == Spark Answer - 0 ==

struct<> struct<>

![2,1,1]

```

InsertSuite.SPARK-35106: dynamic partition overwrite with custom partition path

```

== Results ==

!== Correct Answer - 2 == == Spark Answer - 1 ==

!struct<> struct<i:int,part1:int,part2:int>

[1,1,1] [1,1,1]

![1,1,2]

```

InsertSuite.SPARK-35106: Throw exception when rename custom partition paths returns false

```

Expected exception org.apache.spark.SparkException to be thrown, but no exception was thrown

```

InsertSuite.SPARK-35106: Throw exception when rename dynamic partition paths returns false

```

Expected exception org.apache.spark.SparkException to be thrown, but no exception was thrown

```

AlterTableRenamePartitionSuite.ALTER TABLE .. RENAME PARTITION V1: multi part partition (existing test with modified FS)

```

== Results ==

!== Correct Answer - 1 == == Spark Answer - 0 ==

struct<> struct<>

![3,123,3]

```

Closes #32530 from YuzhouSun/SPARK-35106.

Authored-by: Yuzhou Sun <yuzhosun@amazon.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Instantiate a new Hive client through `Hive.getWithFastCheck(conf, false)` instead of `Hive.get(conf)`.

### Why are the changes needed?

[HIVE-10319](https://issues.apache.org/jira/browse/HIVE-10319) introduced a new API `get_all_functions` which is only supported in Hive 1.3.0/2.0.0 and up. As a result, when Spark 3.x talks to an HMS service of version 1.2 or lower, the following error will occur:

```

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.thrift.TApplicationException: Invalid method name: 'get_all_functions'

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3897)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:248)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:231)

... 96 more

Caused by: org.apache.thrift.TApplicationException: Invalid method name: 'get_all_functions'

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:79)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_all_functions(ThriftHiveMetastore.java:3845)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_all_functions(ThriftHiveMetastore.java:3833)

```

The `get_all_functions` is called only when `doRegisterAllFns` is set to true:

```java

private Hive(HiveConf c, boolean doRegisterAllFns) throws HiveException {
  conf = c;
  if (doRegisterAllFns) {
    registerAllFunctionsOnce();
  }
}

```

What this does is register all Hive permanent functions defined in HMS in Hive's `FunctionRegistry` class, by iterating through the results from `get_all_functions`. For Spark, this seems unnecessary, as it loads Hive permanent (not built-in) UDFs by directly calling the HMS API, i.e., `get_function`. The `FunctionRegistry` is only used for loading Hive built-in functions that are not supported by Spark. At this time, this only applies to `histogram_numeric`.

### Does this PR introduce _any_ user-facing change?

Yes. With this fix, Spark should now be able to talk to an HMS server running Hive 1.2.x and lower (with HIVE-24608 too).

### How was this patch tested?

Manually started a HMS server of Hive version 1.2.2, with patched Hive 2.3.8 using HIVE-24608. Without the PR it failed with the above exception. With the PR the error disappeared and I can successfully perform common operations such as create table, create database, list tables, etc.

Closes #32446 from sunchao/SPARK-35321.

Authored-by: Chao Sun <sunchao@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR extends `ADD FILE/JAR/ARCHIVE` commands to be able to take multiple path arguments like Hive.

### Why are the changes needed?

To make those commands more useful.

### Does this PR introduce _any_ user-facing change?

Yes. In the current implementation, those commands can take a path which contains whitespace without enclosing it in either `'` or `"`, but after this change, users need to enclose such paths.

I've noted this incompatibility in the migration guide.
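To see why quoting becomes mandatory once several paths are accepted, consider this minimal tokenizer sketch. It is not Spark's parser: `PathArgs` and `split` are hypothetical names, and escape sequences inside quotes are deliberately not handled.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical tokenizer, not Spark's parser: splits the argument string of an
// ADD FILE/JAR/ARCHIVE-style command into paths, keeping whitespace that
// appears inside single or double quotes.
final class PathArgs {
    private PathArgs() {}

    static List<String> split(String args) {
        List<String> paths = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        char quote = 0;           // 0 means "not inside quotes"
        boolean started = false;  // true once the current token has begun
        for (char c : args.toCharArray()) {
            if (quote != 0) {
                if (c == quote) {
                    quote = 0;    // the matching quote ends the quoted run
                } else {
                    current.append(c);
                }
            } else if (c == '\'' || c == '"') {
                quote = c;
                started = true;
            } else if (Character.isWhitespace(c)) {
                // Unquoted whitespace separates paths.
                if (started) {
                    paths.add(current.toString());
                    current.setLength(0);
                    started = false;
                }
            } else {
                current.append(c);
                started = true;
            }
        }
        if (quote != 0) {
            throw new IllegalArgumentException("Unterminated quote in: " + args);
        }
        if (started) {
            paths.add(current.toString());
        }
        return paths;
    }
}
```

With unquoted whitespace acting as the separator, a path such as `/tmp/test 2` can only be passed as a single argument when it is quoted.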

### How was this patch tested?

New tests.

Closes #32205 from sarutak/add-multiple-files.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

This PR proposes to let the `CREATE FUNCTION USING` syntax take archives as resources.

### Why are the changes needed?

It would be useful.

`CREATE FUNCTION USING` syntax doesn't support archives as resources because archives were not supported in Spark SQL.

Now Spark SQL supports archives so I think we can support them for the syntax.

### Does this PR introduce _any_ user-facing change?

Yes. Users can specify archives for `CREATE FUNCTION USING` syntax.

### How was this patch tested?

New test.

Closes #32359 from sarutak/load-function-using-archive.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a re-proposal of https://github.com/apache/spark/pull/23163. Currently Spark always requires a [local sort](https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/FileFormatWriter.scala#L188) before writing to an output table with dynamic partition/bucket columns. The sort can be unnecessary if the cardinality of partition/bucket values is small, and can be avoided by keeping multiple output writers open concurrently.

This PR introduces a config `spark.sql.maxConcurrentOutputFileWriters` (which disables this feature by default), where user can tune the maximal number of concurrent writers. The config is needed here as we cannot keep arbitrary number of writers in task memory which can cause OOM (especially for Parquet/ORC vectorization writer).

The feature first uses concurrent writers to write rows. If the number of writers exceeds the limit specified by the above config, it sorts the rest of the rows and writes them one by one (see `DynamicPartitionDataConcurrentWriter.writeWithIterator()`).

In addition, the interface `WriteTaskStatsTracker` and its implementation `BasicWriteTaskStatsTracker` are also changed, because previously they relied on the assumption that only one writer is active when writing dynamic partitions and bucketed tables.
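The two-phase strategy can be sketched with a simplified in-memory simulation. This is not Spark's writer: `ConcurrentWriteSketch`, `write`, and the `maxWriters` parameter are hypothetical, rows are modeled as `{partition, value}` pairs, and a "writer" is just a list per partition. The sketch does not model opening and closing real writers in the sorted phase.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified simulation, not Spark's writer: rows are {partition, value} pairs
// and a "writer" is an in-memory list per partition.
final class ConcurrentWriteSketch {
    private ConcurrentWriteSketch() {}

    // Returns the written values per partition; maxWriters plays the role
    // of the configured limit on concurrent writers.
    static Map<String, List<String>> write(List<String[]> rows, int maxWriters) {
        Map<String, List<String>> output = new LinkedHashMap<>();
        int i = 0;
        // Phase 1: open a writer per new partition while under the limit.
        // In this phase, output.size() equals the number of open writers.
        for (; i < rows.size(); i++) {
            String partition = rows.get(i)[0];
            if (!output.containsKey(partition) && output.size() >= maxWriters) {
                break; // limit reached: fall back to sort-based writing
            }
            output.computeIfAbsent(partition, k -> new ArrayList<>()).add(rows.get(i)[1]);
        }
        // Phase 2: sort the remaining rows by partition so that rows of one
        // partition are adjacent and could be handled by a single writer at a time.
        List<String[]> rest = new ArrayList<>(rows.subList(i, rows.size()));
        rest.sort(Comparator.comparing((String[] r) -> r[0]));
        for (String[] row : rest) {
            output.computeIfAbsent(row[0], k -> new ArrayList<>()).add(row[1]);
        }
        return output;
    }
}
```

With `maxWriters` set to 0, every row takes the sorted path, which corresponds to the feature being disabled.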

### Why are the changes needed?

Avoid the sort before writing output for dynamic partitioned query and bucketed table.

Help improve CPU and IO performance for these queries.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added unit test in `DataFrameReaderWriterSuite.scala`.

Closes #32198 from c21/writer.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

DayTimeIntervalType/YearMonthIntervalType values are displayed differently between Hive SerDe and row format delimited.

This PR was created to add a test and start a discussion.

For this problem I think we have two directions:

1. Leave it as it is and add an item to explain this in the migration guide docs.

2. Since we should not change Hive SerDe's behavior, we can change Spark's row format delimited behavior to cast DayTimeIntervalType/YearMonthIntervalType as HIVE_STYLE.

### Why are the changes needed?

Add UT

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

added ut

Closes #32335 from AngersZhuuuu/SPARK-35220.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

A simple test that writes and reads an encrypted parquet and verifies that it's encrypted by checking its magic string (in encrypted footer mode).

### Why are the changes needed?

To provide test coverage for Parquet encryption.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

- [x] [SBT / Hadoop 3.2 / Java8 (the default)](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137785/testReport)

- [ ] ~SBT / Hadoop 3.2 / Java11 by adding [test-java11] to the PR title.~ (Jenkins Java11 build is broken due to missing JDK11 installation)

- [x] [SBT / Hadoop 2.7 / Java8 by adding [test-hadoop2.7] to the PR title.](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/137836/testReport)

- [x] Maven / Hadoop 3.2 / Java8 by adding [test-maven] to the PR title.

- [x] Maven / Hadoop 2.7 / Java8 by adding [test-maven][test-hadoop2.7] to the PR title.

Closes #32146 from andersonm-ibm/pme_testing.

Authored-by: Maya Anderson <mayaa@il.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Refactor ScriptTransformation to remove input parameter and replace it by child.output

### Why are the changes needed?

refactor code

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UT

Closes #32228 from AngersZhuuuu/SPARK-34035.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Firstly let's take a look at the definition and comment.

```

// A fake config which is only here for backward compatibility reasons. This config has no effect
// to Spark, just for reporting the builtin Hive version of Spark to existing applications that
// already rely on this config.
val FAKE_HIVE_VERSION = buildConf("spark.sql.hive.version")
  .doc(s"deprecated, please use ${HIVE_METASTORE_VERSION.key} to get the Hive version in Spark.")
  .version("1.1.1")
  .fallbackConf(HIVE_METASTORE_VERSION)

```

It is used for reporting the built-in Hive version, but the current status is unsatisfactory, as it can be changed in many ways, e.g. via `--conf` or the `SET` syntax.

It is marked as deprecated but has been kept for a long time. I guess it is hard for us to remove it, and removing it is not even necessary.

On second thought, it's actually good for us to keep it to work with `spark.sql.hive.metastore.version`. When `spark.sql.hive.metastore.version` is changed, it can be used to report the compiled Hive version statically, which is useful when an error occurs in this case. So this parameter should be fixed to the compiled Hive version.

### Why are the changes needed?

`spark.sql.hive.version` is useful in certain cases and should be read-only

### Does this PR introduce _any_ user-facing change?

`spark.sql.hive.version` now is read-only

### How was this patch tested?

new test cases

Closes #32200 from yaooqinn/SPARK-35102.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Now that `AnalysisOnlyCommand` is introduced in #32032, `CacheTable` and `UncacheTable` can extend `AnalysisOnlyCommand` to simplify the code base. For example, the logic to handle these commands such that the tables are only analyzed is scattered across different places.

### Why are the changes needed?

To simplify the code base to handle these two commands.

### Does this PR introduce _any_ user-facing change?

No, just internal refactoring.

### How was this patch tested?

The existing tests (e.g., `CachedTableSuite`) cover the changes in this PR. For example, if I make `CacheTable`/`UncacheTable` extend `LeafCommand`, there are a few failures in `CachedTableSuite`.

Closes #32220 from imback82/cache_cmd_analysis_only.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR refactors three parts of the comments in `HiveClientImpl.withHiveState`

One is about the following comment.

```

// The classloader in clientLoader could be changed after addJar, always use the latest

// classloader.

```

The comment was added in SPARK-10810 (#8909) because `IsolatedClientLoader.classLoader` was declared as `var`.

But the field is now `val` and cannot be changed after instantiation.

So, the comment can confuse developers.

One is about the following code and comment.

```

// classloader. We explicitly set the context class loader since "conf.setClassLoader" does

// not do that, and the Hive client libraries may need to load classes defined by the client's

// class loader.

Thread.currentThread().setContextClassLoader(clientLoader.classLoader)

```

It's not obvious why this part is necessary, and it's difficult to tell when we can remove this code in the future.

So, I revised the comment by adding the reference of the related JIRA.

And the last one is about the following code and comment.

```

// Replace conf in the thread local Hive with current conf

Hive.get(conf)

```

It's also not trivial why this part is necessary.

I revised the comment by adding the reference of the related discussion.

### Why are the changes needed?

To make code more readable.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

It's just a comment refactoring so I add no new test.

Closes #32162 from sarutak/refactor-HiveClientImpl.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR fixes an issue that `LIST FILES/JARS/ARCHIVES path1 path2 ...` cannot list all paths if at least one path is quoted.

An example here.

```

ADD FILE /tmp/test1;

ADD FILE /tmp/test2;

LIST FILES /tmp/test1 /tmp/test2;

file:/tmp/test1

file:/tmp/test2

LIST FILES /tmp/test1 "/tmp/test2";

file:/tmp/test2

```

In this example, the second `LIST FILES` doesn't show `file:/tmp/test1`.

To resolve this issue, I modified the syntax rule to be able to handle this case.

I also changed `SparkSQLParser` to be able to handle paths which contain whitespace.

### Why are the changes needed?

This is a bug.

I also plan to extend `ADD FILE/JAR/ARCHIVE` to take multiple paths like Hive, and the syntax rule change is necessary for that.

### Does this PR introduce _any_ user-facing change?

Yes. Users can pass quoted paths when using `ADD FILE/JAR/ARCHIVE`.

### How was this patch tested?

New test.

Closes #32074 from sarutak/fix-list-files-bug.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Kousuke Saruta <sarutak@oss.nttdata.com>

### What changes were proposed in this pull request?

One of the main performance bottlenecks in query compilation is overly-generic tree transformation methods, namely `mapChildren` and `withNewChildren` (defined in `TreeNode`). These methods have an overly-generic implementation to iterate over the children and rely on reflection to create new instances. We have observed that, especially for queries with large query plans, a significant amount of CPU cycles are wasted in these methods. In this PR we make these methods more efficient, by delegating the iteration and instantiation to concrete node types. The benchmarks show that we can expect significant performance improvement in total query compilation time in queries with large query plans (from 30-80%) and about 20% on average.

#### Problem detail

The `mapChildren` method in `TreeNode` is overly generic and costly. To be more specific, this method:

- iterates over all the fields of a node using Scala’s product iterator. While the iteration is not reflection-based, thanks to the Scala compiler generating code for `Product`, we create many anonymous functions and visit many nested structures (recursive calls).

The anonymous functions (presumably compiled to Java anonymous inner classes) also show up quite high on the list in the object allocation profiles, so we are putting unnecessary pressure on GC here.

- does a lot of comparisons. Basically for each element returned from the product iterator, we check if it is a child (contained in the list of children) and then transform it. We can avoid that by just iterating over children, but in the current implementation, we need to gather all the fields (only transform the children) so that we can instantiate the object using the reflection.

- creates objects using reflection, by delegating to the `makeCopy` method, which is several orders of magnitude slower than using the constructor.

#### Solution

The proposed solution in this PR is rather straightforward: we rewrite the `mapChildren` method using the `children` and `withNewChildren` methods. The default `withNewChildren` method suffers from the same problems as `mapChildren` and we need to make it more efficient by specializing it in concrete classes. Similar to how each concrete query plan node already defines its children, it should also define how they can be constructed given a new list of children. Actually, the implementation is quite simple in most cases and is a one-liner thanks to the copy method present in Scala case classes. Note that we cannot abstract over the copy method, it’s generated by the compiler for case classes if no other type higher in the hierarchy defines it. For most concrete nodes, the implementation of `withNewChildren` looks like this:

```

override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = copy(children = newChildren)

```

The current `withNewChildren` method has two properties that we should preserve:

- It returns the same instance if the provided children are the same as its children, i.e., it preserves referential equality.

- It copies tags and maintains the origin links when a new copy is created.

These properties are hard to enforce in the concrete node type implementation. Therefore, we propose a template method `withNewChildrenInternal` that should be implemented by the concrete classes, and let the `withNewChildren` method take care of referential equality and copying:

```

override def withNewChildren(newChildren: Seq[LogicalPlan]): LogicalPlan = {
  if (childrenFastEquals(children, newChildren)) {
    this
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildrenInternal(newChildren)
      res.copyTagsFrom(this)
      res
    }
  }
}

```

With the refactoring done in a previous PR (https://github.com/apache/spark/pull/31932) most tree node types fall in one of the categories of `Leaf`, `Unary`, `Binary` or `Ternary`. These traits have a more efficient implementation for `mapChildren` and define a more specialized version of `withNewChildrenInternal` that avoids creating unnecessary lists. For example, the `mapChildren` method in `UnaryLike` is defined as follows:

```

override final def mapChildren(f: T => T): T = {
  val newChild = f(child)
  if (newChild fastEquals child) {
    this.asInstanceOf[T]
  } else {
    CurrentOrigin.withOrigin(origin) {
      val res = withNewChildInternal(newChild)
      res.copyTagsFrom(this.asInstanceOf[T])
      res
    }
  }
}

```

#### Results

With this PR, we have observed significant performance improvements in query compilation time, more specifically in the analysis and optimization phases. The table below shows the TPC-DS queries that had more than 25% speedup in compilation times. Biggest speedups are observed in queries with large query plans.

| Query | Speedup |
| ----- | ------- |
| q4    | 29%     |
| q9    | 81%     |
| q14a  | 31%     |
| q14b  | 28%     |
| q22   | 33%     |
| q33   | 29%     |
| q34   | 25%     |
| q39   | 27%     |
| q41   | 27%     |
| q44   | 26%     |
| q47   | 28%     |
| q48   | 76%     |
| q49   | 46%     |
| q56   | 26%     |
| q58   | 43%     |
| q59   | 46%     |
| q60   | 50%     |
| q65   | 59%     |
| q66   | 46%     |
| q67   | 52%     |
| q69   | 31%     |
| q70   | 30%     |
| q96   | 26%     |
| q98   | 32%     |

#### Binary incompatibility

Changing `withNewChildren` in `TreeNode` breaks the binary compatibility of code compiled against older versions of Spark, because it is now expected that all concrete `TreeNode` subclasses implement the `withNewChildrenInternal` method. This is a problem, for example, when users write custom expressions. This change is the right choice, since it forces all expressions newly added to Catalyst to implement it in an efficient manner and will prevent future regressions.

Please note that we have not completely removed the old implementation; we renamed it to `legacyWithNewChildren`. This method will be removed in the future and for now helps the transition. There are expressions such as `UpdateFields` that have a complex way of defining children. Writing `withNewChildren` for them requires refactoring the expression. For now, these expressions use the old, slow method. In a future PR we will address these expressions.

### Does this PR introduce _any_ user-facing change?

This PR does not introduce user-facing changes but may break binary compatibility of code compiled against older versions. See the "Binary incompatibility" section.

### How was this patch tested?

This PR is mainly a refactoring and passes existing tests.

Closes #32030 from dbaliafroozeh/ImprovedMapChildren.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR fixes an issue that `ADD JAR` command can't add jar files which contain whitespaces in the path though `ADD FILE` and `ADD ARCHIVE` work with such files.

If we have `/some/path/test file.jar` and execute the following command:

```

ADD JAR "/some/path/test file.jar";

```

The following exception is thrown.

```
21/04/05 10:40:38 ERROR SparkSQLDriver: Failed in [add jar "/some/path/test file.jar"]
java.lang.IllegalArgumentException: Illegal character in path at index 9: /some/path/test file.jar
    at java.net.URI.create(URI.java:852)
    at org.apache.spark.sql.hive.HiveSessionResourceLoader.addJar(HiveSessionStateBuilder.scala:129)
    at org.apache.spark.sql.execution.command.AddJarCommand.run(resources.scala:34)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)
    at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:79)
```

This is because `HiveSessionStateBuilder` and `SessionStateBuilder` don't check whether the path is in URI form or is a plain path; they always treat it as a URI.

In URI form, whitespace must be encoded as `%20`, so `/some/path/test file.jar` is rejected.

We can resolve this part by checking whether the given path is in URI form or not.
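As a rough illustration of that check, the sketch below uses only `java.net.URI` and `java.io.File`. The class and method names (`JarPathResolver`, `toUri`) are hypothetical and are not the code changed by this PR. The sketch also assumes that any string which parses as a URI with a scheme is already in URI form.

```java
import java.io.File;
import java.net.URI;
import java.net.URISyntaxException;

public class JarPathResolver {
    // Hypothetical helper: returns the input as a URI if it already is one,
    // otherwise treats it as a plain file path and lets File do the encoding.
    static URI toUri(String path) {
        try {
            URI uri = new URI(path);
            if (uri.getScheme() != null) {
                return uri; // already in URI form, e.g. file:/some/path/test%20file.jar
            }
        } catch (URISyntaxException e) {
            // Not a valid URI (for example, it contains a raw space): fall through.
        }
        // File.toURI() percent-encodes characters such as whitespace.
        return new File(path).getAbsoluteFile().toURI();
    }
}
```

With this approach a plain path containing a space no longer reaches `URI.create` unencoded, which is the call that throws in the stack trace above.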

Unfortunately, if we fix this part, another problem occurs.

When we execute the `ADD JAR` command, Hive's `ADD JAR` command is executed in `HiveClientImpl.addJar`, and `AddResourceProcessor.run` is transitively invoked.

In `AddResourceProcessor.run`, the command line is simply split by `\s+`, so the path is also split into `/some/path/test` and `file.jar` before being passed to `ss.add_resources`.

f1e8713703/ql/src/java/org/apache/hadoop/hive/ql/processors/AddResourceProcessor.java (L56-L75)

So, the command still fails.
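The effect of splitting on `\s+` can be reproduced in isolation. This is a small sketch of the splitting behavior only, not Hive's `AddResourceProcessor` code; `tokenize` is a hypothetical helper.

```java
public class WhitespaceSplitDemo {
    // Splits a command line on runs of whitespace, as a naive tokenizer would.
    static String[] tokenize(String commandLine) {
        return commandLine.trim().split("\\s+");
    }

    public static void main(String[] args) {
        // The single path argument comes out as two separate tokens.
        for (String token : tokenize("jar /some/path/test file.jar")) {
            System.out.println(token);
        }
    }
}
```

Because the tokenizer has no notion of quoting or escaping, there is no way to pass a path containing a raw space through it as one argument.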

Even if we convert the form of the path to URI like `file:/some/path/test%20file.jar` and execute the following command:

```
ADD JAR "file:/some/path/test%20file.jar";
```

The following exception is thrown.

```
21/04/05 10:40:53 ERROR SessionState: file:/some/path/test%20file.jar does not exist
java.lang.IllegalArgumentException: file:/some/path/test%20file.jar does not exist
    at org.apache.hadoop.hive.ql.session.SessionState.validateFiles(SessionState.java:1168)
    at org.apache.hadoop.hive.ql.session.SessionState$ResourceType.preHook(SessionState.java:1289)
    at org.apache.hadoop.hive.ql.session.SessionState$ResourceType$1.preHook(SessionState.java:1278)
    at org.apache.hadoop.hive.ql.session.SessionState.add_resources(SessionState.java:1378)
    at org.apache.hadoop.hive.ql.session.SessionState.add_resources(SessionState.java:1336)
    at org.apache.hadoop.hive.ql.processors.AddResourceProcessor.run(AddResourceProcessor.java:74)
```

The reason is that `Utilities.realFile`, invoked in `SessionState.validateFiles`, returns `null` because `fs.exists(path)` is `false`.

f1e8713703/ql/src/java/org/apache/hadoop/hive/ql/exec/Utilities.java (L1052-L1064)

`fs.exists` checks the existence of the given path by comparing the string representation of Hadoop's `Path`.

The string representation of `Path` is similar to URI but it's actually different.

`Path` doesn't encode the given path.

For example, the URI form of `/some/path/jar file.jar` is `file:/some/path/jar%20file.jar` but the `Path` form of it is `file:/some/path/jar file.jar`. So `fs.exists` returns false.
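The gap between the encoded and the unencoded form can be shown with `java.net.URI` alone. The sketch below deliberately does not use Hadoop's `Path`; it only illustrates that the percent-encoded string and the plain path name are different strings, so a lookup that compares them literally will not match. The class and method names are hypothetical.

```java
import java.io.File;
import java.net.URI;

public class UriVersusPlainPath {
    // Encoded URI string for a plain file path (whitespace becomes %20).
    static String encodedForm(String plainPath) {
        return new File(plainPath).getAbsoluteFile().toURI().toString();
    }

    // Decoded path component of a URI (%20 becomes a space again).
    static String decodedPath(URI uri) {
        return uri.getPath();
    }

    public static void main(String[] args) {
        String plain = "/some/path/jar file.jar";
        String encoded = encodedForm(plain);
        System.out.println(encoded);                          // URI form
        System.out.println(decodedPath(URI.create(encoded))); // plain form
    }
}
```

A file named `jar file.jar` on disk therefore cannot be found under the literal name `jar%20file.jar` unless something decodes the string first.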

So the solution I came up with is removing Hive's `ADD JAR` from `HiveClientImpl.addJar`.

I think Hive's `ADD JAR` was used to add jar files to the class loader for metadata and isolate the class loader from the one for execution.

https://github.com/apache/spark/pull/6758/files#diff-cdb07de713c84779a5308f65be47964af865e15f00eb9897ccf8a74908d581bbR94-R103

But since SPARK-10810 and SPARK-10902 (#8909) were resolved, the class loaders for metadata and execution seem to be isolated in a different way.

https://github.com/apache/spark/pull/8909/files#diff-8ef7cabf145d3fe7081da799fa415189d9708892ed76d4d13dd20fa27021d149R635-R641

In the current implementation, such class loaders seem to be isolated by `SharedState.jarClassLoader` and `IsolatedClientLoader.classLoader`.

https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/internal/SessionState.scala#L173-L188

https://github.com/apache/spark/blob/master/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala#L956-L967

So I wonder whether we can remove Hive's `ADD JAR` from `HiveClientImpl.addJar`.

### Why are the changes needed?

This is a bug.

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

Closes #32052 from sarutak/add-jar-whitespace.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a followup for https://github.com/apache/spark/pull/31932.

In this PR we:

- Introduce the `QuaternaryLike` trait for node types with 4 children.

- Specialize more node types

- Fix a number of style errors that were introduced in the original PR.

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

This is a refactoring and passes existing tests.

Closes #32065 from dbaliafroozeh/FollowupSPARK-34906.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

### What changes were proposed in this pull request?

This PR extends the current function registry and catalog to support table-valued functions by adding a table function registry. It also refactors `range` to be a built-in function in the table function registry.
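The idea of a dedicated registry for table-valued functions can be sketched as a name-to-description map with built-ins registered up front. This is a hypothetical illustration in plain Java, not Spark's `FunctionRegistry` API: the class, its methods, and the exception type are all assumptions, and only the function name `range` and its usage strings come from this description.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

public class TableFunctionRegistrySketch {
    // Maps a function name to its usage text (hypothetical structure).
    private final Map<String, String> usageByName = new LinkedHashMap<>();

    // Registers a function; rejects a name that is already taken.
    void register(String name, String usage) {
        if (usageByName.containsKey(name)) {
            throw new IllegalStateException("Function '" + name + "' already exists");
        }
        usageByName.put(name, usage);
    }

    // Looks up the usage text, if the function is known.
    Optional<String> describe(String name) {
        return Optional.ofNullable(usageByName.get(name));
    }

    // Builds a registry that already contains the built-in `range` function.
    static TableFunctionRegistrySketch withBuiltins() {
        TableFunctionRegistrySketch registry = new TableFunctionRegistrySketch();
        registry.register("range", "range(start: Long, end: Long, step: Long, numPartitions: Int)");
        return registry;
    }
}
```

In this sketch, once `range` is registered as a built-in, describing it returns its usage text, and attempting to register another function under the same name is rejected.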

### Why are the changes needed?

Currently, Spark resolves table-valued functions very differently from the other functions. This change is to make the behavior for table and non-table functions consistent. It also allows Spark to display information about built-in table-valued functions:

Before:

```scala
scala> sql("describe function range").show(false)
+--------------------------+
|function_desc             |
+--------------------------+
|Function: range not found.|
+--------------------------+
```

After:

```scala
Function: range
Class: org.apache.spark.sql.catalyst.plans.logical.Range
Usage:
range(start: Long, end: Long, step: Long, numPartitions: Int)
range(start: Long, end: Long, step: Long)
range(start: Long, end: Long)
range(end: Long)

// Extended
Function: range
Class: org.apache.spark.sql.catalyst.plans.logical.Range
Usage:
range(start: Long, end: Long, step: Long, numPartitions: Int)
range(start: Long, end: Long, step: Long)
range(start: Long, end: Long)
range(end: Long)
Extended Usage:
Examples:
> SELECT * FROM range(1);
+---+
| id|
+---+
|  0|
+---+
> SELECT * FROM range(0, 2);
+---+
|id |
+---+
|0  |
|1  |
+---+
> SELECT range(0, 4, 2);
+---+
|id |
+---+
|0  |
|2  |
+---+
Since: 2.0.0
```

### Does this PR introduce _any_ user-facing change?

Yes. Users will no longer be able to create a function named `range` in the default database:

Before:

```scala
scala> sql("create function range as 'range'")
res3: org.apache.spark.sql.DataFrame = []
```

After:

```
scala> sql("create function range as 'range'")
org.apache.spark.sql.catalyst.analysis.FunctionAlreadyExistsException: Function 'default.range' already exists in database 'default'
```

### How was this patch tested?

Unit test

Closes #31791 from allisonwang-db/spark-34678-table-func-registry.

Authored-by: allisonwang-db <66282705+allisonwang-db@users.noreply.github.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

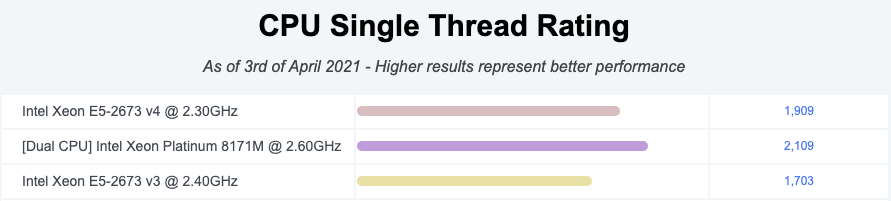

https://github.com/apache/spark/pull/32015 added a way to run benchmarks much more easily in the same GitHub Actions build. This PR updates the benchmark results using that approach.

**NOTE** that GitHub Actions appears to use four types of CPU, based on my observations:

- Intel(R) Xeon(R) Platinum 8171M CPU 2.60GHz

- Intel(R) Xeon(R) CPU E5-2673 v4 2.30GHz

- Intel(R) Xeon(R) CPU E5-2673 v3 2.40GHz

- Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz

Based on my quick research, they seem to perform roughly similarly.

I couldn't find enough information about Intel(R) Xeon(R) Platinum 8272CL CPU 2.60GHz, but its performance seems roughly similar given the numbers.

So this shouldn't be a big deal, especially given that this approach is much easier, encourages contributors to run benchmarks more often, and guarantees the same number of cores and the same memory with the same software.

### Why are the changes needed?

To have a consistent baseline for the benchmarks.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

It was generated from:

- [Run benchmarks: * (JDK 11)](https://github.com/HyukjinKwon/spark/actions/runs/713575465)

- [Run benchmarks: * (JDK 8)](https://github.com/HyukjinKwon/spark/actions/runs/713154337)

Closes #32044 from HyukjinKwon/SPARK-34950.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

When a SparkContext is already initialized and we then call `SparkSession.builder.enableHiveSupport().getOrCreate()`, the created SparkSession won't have Hive support, because `spark.sql.catalogImplementation` in the existing SparkContext's conf has not been reset.

In this PR we use `sharedState.conf` to decide whether we should enable Hive support.

### Why are the changes needed?

We should respect `enableHiveSupport`

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #31680 from AngersZhuuuu/SPARK-34568.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Some `spark-submit` commands for running benchmarks in the user's guide are wrong, so they cannot be used to run benchmarks successfully.

The major change in this PR is to correct these commands. For example, to run a benchmark that inherits from `SqlBasedBenchmark`, we must specify `--jars <spark core test jar>,<spark catalyst test jar>`, because a `SqlBasedBenchmark`-based benchmark extends `BenchmarkBase` (defined in the spark core test jar) and `SQLHelper` (defined in the spark catalyst test jar).

The other change in this PR removes the `scalatest Assertions` dependency from the benchmarks. Because `scalatest-*.jar` is not in the distribution package, depending on it is troublesome.