## What changes were proposed in this pull request?

This patch introduces new options "startingOffsetsByTimestamp" and "endingOffsetsByTimestamp", which set a specific timestamp per topic (since we're unlikely to set a different value per partition). The source starts reading from the first offset whose timestamp is equal to or greater than the starting timestamp, and stops reading at the first offset whose timestamp is equal to or greater than the ending timestamp.

The new options are optional, of course, and take precedence over the existing offset options.

## How was this patch tested?

New unit tests added. Also manually tested basic functionality with Kafka 2.0.0 server.

Running query below

```
val df = spark.read.format("kafka")
  .option("kafka.bootstrap.servers", "localhost:9092")
  .option("subscribe", "spark_26848_test_v1,spark_26848_test_2_v1")
  .option("startingOffsetsByTimestamp", """{"spark_26848_test_v1": 1549669142193, "spark_26848_test_2_v1": 1549669240965}""")
  .option("endingOffsetsByTimestamp", """{"spark_26848_test_v1": 1549669265676, "spark_26848_test_2_v1": 1549699265676}""")
  .load().selectExpr("CAST(value AS STRING)")

df.show()
```

with the records below (each is a string whose numeric part marks the timestamp after which the record was put) in

topic `spark_26848_test_v1`

```
hello1 1549669142193
world1 1549669142193
hellow1 1549669240965
world1 1549669240965
hello1 1549669265676
world1 1549669265676
```

topic `spark_26848_test_2_v1`

```
hello2 1549669142193
world2 1549669142193
hello2 1549669240965
world2 1549669240965
hello2 1549669265676
world2 1549669265676
```

the result of `df.show()` follows:

```
+--------------------+
|               value|
+--------------------+
|world1 1549669240965|
|world1 1549669142193|
|world2 1549669240965|
|hello2 1549669240965|
|hellow1 154966924...|
|hello2 1549669265676|
|hello1 1549669142193|
|world2 1549669265676|
+--------------------+
```

Note that endingOffsets (as well as endingOffsetsByTimestamp) are exclusive.
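To make the inclusive start and exclusive end concrete, here is a minimal sketch in plain Scala. It is not the code in this patch: `Record`, `offsetForTimestamp` and `read` are hypothetical names, the partition is modelled as an in-memory sequence, each record's timestamp is assumed to equal the number in its value, and falling back to the end of the log when no record matches is an assumption.

```scala
// Sketch only: models one partition as (offset, timestamp, value) records in offset order.
// `Record`, `offsetForTimestamp` and `read` are hypothetical names, not Spark or Kafka APIs.
object TimestampOffsets {
  final case class Record(offset: Long, timestamp: Long, value: String)

  // First offset whose timestamp is >= ts. Assumption: when no record qualifies,
  // fall back to the end of the log (one past the last offset).
  def offsetForTimestamp(log: Seq[Record], ts: Long): Long =
    log.find(_.timestamp >= ts)
      .map(_.offset)
      .getOrElse(log.lastOption.map(_.offset + 1).getOrElse(0L))

  // Reads the half-open range [start, end): the ending offset is exclusive.
  def read(log: Seq[Record], startTs: Long, endTs: Long): Seq[String] = {
    val start = offsetForTimestamp(log, startTs)
    val end = offsetForTimestamp(log, endTs)
    log.filter(r => r.offset >= start && r.offset < end).map(_.value)
  }

  // Records of topic `spark_26848_test_v1` from the manual test above
  // (assumption: record timestamp == the number embedded in the value).
  val topic1: Seq[Record] = Seq(
    Record(0L, 1549669142193L, "hello1 1549669142193"),
    Record(1L, 1549669142193L, "world1 1549669142193"),
    Record(2L, 1549669240965L, "hellow1 1549669240965"),
    Record(3L, 1549669240965L, "world1 1549669240965"),
    Record(4L, 1549669265676L, "hello1 1549669265676"),
    Record(5L, 1549669265676L, "world1 1549669265676")
  )
}
```

With the timestamps used for `spark_26848_test_v1`, `read` returns the four records at the first two timestamps and skips the two at the ending timestamp, which is consistent with the `df.show()` output above.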

Closes #23747 from HeartSaVioR/SPARK-26848.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Refactored SQL-related benchmarks and made them depend on `SqlBasedBenchmark`. In particular, creation of the Spark session is moved into `override def getSparkSession: SparkSession`.

### Why are the changes needed?

This should simplify maintenance of SQL-based benchmarks by reducing the number of dependencies. In the future, it should be easier to refactor & extend all SQL benchmarks by changing only one trait. Finally, all SQL-based benchmarks will look uniform.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running the modified benchmarks.

Closes #25828 from MaxGekk/sql-benchmarks-refactoring.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR upgrades Scala to **2.12.10**.

Release notes:

- Fix regression in large string interpolations with non-String typed splices

- Revert "Generate shallower ASTs in pattern translation"

- Fix regression in classpath when JARs have 'a.b' entries beside 'a/b'

- Faster compiler: 5–10% faster since 2.12.8

- Improved compatibility with JDK 11, 12, and 13

- Experimental support for build pipelining and outline type checking

More details:

https://github.com/scala/scala/releases/tag/v2.12.10

https://github.com/scala/scala/releases/tag/v2.12.9

## How was this patch tested?

Existing tests

Closes #25404 from wangyum/SPARK-28683.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

`KafkaDelegationTokenSuite` fails on different platforms with the following problem:

```
19/09/11 11:07:42.690 pool-1-thread-1-SendThread(localhost:44965) DEBUG ZooKeeperSaslClient: creating sasl client: Client=zkclient/localhost@EXAMPLE.COM;service=zookeeper;serviceHostname=localhost.localdomain
...
NIOServerCxn.Factory:localhost/127.0.0.1:0: Zookeeper Server failed to create a SaslServer to interact with a client during session initiation:
javax.security.sasl.SaslException: Failure to initialize security context [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos credentails)]
    at com.sun.security.sasl.gsskerb.GssKrb5Server.<init>(GssKrb5Server.java:125)
    at com.sun.security.sasl.gsskerb.FactoryImpl.createSaslServer(FactoryImpl.java:85)
    at javax.security.sasl.Sasl.createSaslServer(Sasl.java:524)
    at org.apache.zookeeper.util.SecurityUtils$2.run(SecurityUtils.java:233)
    at org.apache.zookeeper.util.SecurityUtils$2.run(SecurityUtils.java:229)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.zookeeper.util.SecurityUtils.createSaslServer(SecurityUtils.java:228)
    at org.apache.zookeeper.server.ZooKeeperSaslServer.createSaslServer(ZooKeeperSaslServer.java:44)
    at org.apache.zookeeper.server.ZooKeeperSaslServer.<init>(ZooKeeperSaslServer.java:38)
    at org.apache.zookeeper.server.NIOServerCnxn.<init>(NIOServerCnxn.java:100)
    at org.apache.zookeeper.server.NIOServerCnxnFactory.createConnection(NIOServerCnxnFactory.java:186)
    at org.apache.zookeeper.server.NIOServerCnxnFactory.run(NIOServerCnxnFactory.java:227)
    at java.lang.Thread.run(Thread.java:748)
Caused by: GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos credentails)
    at sun.security.jgss.krb5.Krb5AcceptCredential.getInstance(Krb5AcceptCredential.java:87)
    at sun.security.jgss.krb5.Krb5MechFactory.getCredentialElement(Krb5MechFactory.java:127)
    at sun.security.jgss.GSSManagerImpl.getCredentialElement(GSSManagerImpl.java:193)
    at sun.security.jgss.GSSCredentialImpl.add(GSSCredentialImpl.java:427)
    at sun.security.jgss.GSSCredentialImpl.<init>(GSSCredentialImpl.java:62)
    at sun.security.jgss.GSSManagerImpl.createCredential(GSSManagerImpl.java:154)
    at com.sun.security.sasl.gsskerb.GssKrb5Server.<init>(GssKrb5Server.java:108)
    ... 13 more
NIOServerCxn.Factory:localhost/127.0.0.1:0: Client attempting to establish new session at /127.0.0.1:33742
SyncThread:0: Creating new log file: log.1
SyncThread:0: Established session 0x100003736ae0000 with negotiated timeout 10000 for client /127.0.0.1:33742
pool-1-thread-1-SendThread(localhost:35625): Session establishment complete on server localhost/127.0.0.1:35625, sessionid = 0x100003736ae0000, negotiated timeout = 10000
pool-1-thread-1-SendThread(localhost:35625): ClientCnxn:sendSaslPacket:length=0
pool-1-thread-1-SendThread(localhost:35625): saslClient.evaluateChallenge(len=0)
pool-1-thread-1-EventThread: zookeeper state changed (SyncConnected)
NioProcessor-1: No server entry found for kerberos principal name zookeeper/localhost.localdomain@EXAMPLE.COM
NioProcessor-1: No server entry found for kerberos principal name zookeeper/localhost.localdomain@EXAMPLE.COM
NioProcessor-1: Server not found in Kerberos database (7)
NioProcessor-1: Server not found in Kerberos database (7)
```

The problem is reproducible if the order of `localhost` and `localhost.localdomain` is exchanged:

```
[systest@gsomogyi-build spark]$ cat /etc/hosts
127.0.0.1 localhost.localdomain localhost localhost4 localhost4.localdomain4
::1 localhost.localdomain localhost localhost6 localhost6.localdomain6
```

The main problem is that `ZkClient` connects to the canonical loopback address (which is not necessarily `localhost`).
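One way to check which name a given machine reports for the loopback address is the small JVM snippet below. It is only a diagnostic sketch (the object name `LoopbackName` is made up for illustration) and is not the change made in this PR.

```scala
import java.net.InetAddress

// Diagnostic sketch: prints the canonical host name the JVM resolves for the loopback address.
// `LoopbackName` is a hypothetical helper, not part of Spark or the test suite.
object LoopbackName {
  // Performs a reverse lookup; depending on /etc/hosts ordering this may be
  // "localhost", "localhost.localdomain", or something else entirely.
  def canonicalLoopback: String =
    InetAddress.getLoopbackAddress.getCanonicalHostName

  def main(args: Array[String]): Unit =
    println(s"canonical loopback host: $canonicalLoopback")
}
```

If the printed name differs from `localhost`, a Kerberos principal registered only for `localhost` will not match the name the client asks for, which is consistent with the "Server not found in Kerberos database" messages in the log.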

### Why are the changes needed?

`KafkaDelegationTokenSuite` failed in some environments.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests on different platforms.

Closes #25803 from gaborgsomogyi/SPARK-29027.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This patch adds a new UT to ensure that an existing query (from before Spark 3.0.0) with a checkpoint doesn't break with the default configuration of "includeHeaders" introduced via SPARK-23539.

This patch also modifies the existing test that checks the types of columns so that it checks the headers column as well.

### Why are the changes needed?

The patch adds missing tests which guarantee backward compatibility of the SPARK-23539 change.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT passed.

Closes #25792 from HeartSaVioR/SPARK-23539-FOLLOWUP.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This update adds support for Kafka Headers functionality in Structured Streaming.

## How was this patch tested?

With the following unit tests:

- KafkaRelationSuite: "default starting and ending offsets with headers" (new)

- KafkaSinkSuite: "batch - write to kafka" (updated)

Closes #22282 from dongjinleekr/feature/SPARK-23539.

Lead-authored-by: Lee Dongjin <dongjin@apache.org>

Co-authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Reorganize the packages of DS v2 interfaces/classes:

1. `org.apache.spark.sql.connector.catalog`: holds `TableCatalog`, `Table` and other related interfaces/classes

2. `org.apache.spark.sql.connector.expression`: holds `Expression`, `Transform` and other related interfaces/classes

3. `org.apache.spark.sql.connector.read`: holds `ScanBuilder`, `Scan` and other related interfaces/classes

4. `org.apache.spark.sql.connector.write`: holds `WriteBuilder`, `BatchWrite` and other related interfaces/classes

### Why are the changes needed?

Data Source V2 has evolved a lot. It's a bit weird that `Expression` is in `org.apache.spark.sql.catalog.v2` and `Table` is in `org.apache.spark.sql.sources.v2`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing tests

Closes #25700 from cloud-fan/package.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

JIRA: https://issues.apache.org/jira/browse/SPARK-29050

'a hdfs' changed to 'an hdfs'

'an unique' changed to 'a unique'

'an url' changed to 'a url'

'a error' changed to 'an error'

Closes #25756 from dengziming/feature_fix_typos.

Authored-by: dengziming <dengziming@growingio.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch hardens tests to prevent leaking a SparkContext that is newly created while initializing a StreamingContext. A leaked SparkContext in one test would make most of the following tests fail as well, so this patch applies defensive programming, trying its best to ensure the SparkContext is cleaned up.

### Why are the changes needed?

We have seen cases in CI builds where a SparkContext is leaked and other tests are affected by the leaked SparkContext. Ideally we should isolate the environment among tests if possible.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Modified UTs.

Closes #25709 from HeartSaVioR/SPARK-29007.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

At the moment there are 3 places where the communication protocol with the Kafka cluster has to be set when a delegation token is used:

* On delegation token

* On source

* On sink

Most of the time users use the same protocol in all these places (within one Kafka cluster). It would be better to declare it in one place (delegation token side) and let Kafka sources/sinks take this config over.

In this PR I've modified the code so that Kafka sources/sinks take over the delegation token side `security.protocol` configuration when the token and the source/sink match in `bootstrap.servers` configuration. This default configuration can be overridden on each source/sink independently by using the `kafka.security.protocol` configuration.
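The resolution rule can be sketched in plain Scala as follows. This is a simplified illustration rather than the code in this PR: `TokenClusterConf` and `effectiveParams` are hypothetical names, and matching `bootstrap.servers` by exact string equality is an assumption made to keep the sketch short.

```scala
// Sketch only: hypothetical names, not Spark APIs.
object SecurityProtocolDefaults {
  // Stand-in for the delegation-token-side configuration of one Kafka cluster.
  final case class TokenClusterConf(bootstrapServers: String, securityProtocol: String)

  // Returns the source/sink parameters with `kafka.security.protocol` inherited from the
  // token side when (a) the bootstrap servers match and (b) the source/sink has not set
  // the protocol itself. Assumption: matching is plain string equality here.
  def effectiveParams(
      sourceParams: Map[String, String],
      token: Option[TokenClusterConf]): Map[String, String] = {
    val inherited = for {
      t <- token
      servers <- sourceParams.get("kafka.bootstrap.servers")
      if servers == t.bootstrapServers
      if !sourceParams.contains("kafka.security.protocol")
    } yield "kafka.security.protocol" -> t.securityProtocol

    inherited.fold(sourceParams)(kv => sourceParams + kv)
  }
}
```

An explicitly set `kafka.security.protocol` always wins in this sketch, which mirrors the override behavior described above.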

### Why are the changes needed?

The current default behavior serves only a minority of the use-cases and is inconvenient.

### Does this PR introduce any user-facing change?

Yes, with this change users need to provide fewer configuration parameters by default.

### How was this patch tested?

Existing + additional unit tests.

Closes #25631 from gaborgsomogyi/SPARK-28928.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

- Remove SQLContext.createExternalTable and Catalog.createExternalTable, deprecated in favor of createTable since 2.2.0, plus tests of deprecated methods

- Remove HiveContext, deprecated in 2.0.0, in favor of `SparkSession.builder.enableHiveSupport`

- Remove deprecated KinesisUtils.createStream methods, plus tests of deprecated methods, deprecated in 2.2.0

- Remove deprecated MLlib (not Spark ML) linear method support, mostly utility constructors and 'train' methods, and associated docs. This includes methods in LinearRegression, LogisticRegression, Lasso, RidgeRegression. These have been deprecated since 2.0.0

- Remove deprecated Pyspark MLlib linear method support, including LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD

- Remove 'runs' argument in KMeans.train() method, which has been a no-op since 2.0.0

- Remove deprecated ChiSqSelector isSorted protected method

- Remove deprecated 'yarn-cluster' and 'yarn-client' master argument in favor of 'yarn' and deploy mode 'cluster', etc

Notes:

- I was not able to remove deprecated DataFrameReader.json(RDD) in favor of DataFrameReader.json(Dataset); the former was deprecated in 2.2.0, but, it is still needed to support Pyspark's .json() method, which can't use a Dataset.

- Looks like SQLContext.createExternalTable was not actually deprecated in Pyspark, but, almost certainly was meant to be? Catalog.createExternalTable was.

- I afterwards noted that the toDegrees, toRadians functions were almost removed fully in SPARK-25908, but Felix suggested keeping just the R version as they hadn't been technically deprecated. I'd like to revisit that. Do we really want the inconsistency? I'm not against reverting it again, but then that implies leaving SQLContext.createExternalTable just in Pyspark too, which seems weird.

- I *kept* LogisticRegressionWithSGD, LinearRegressionWithSGD, LassoWithSGD, RidgeRegressionWithSGD in Pyspark, though deprecated, as it is hard to remove them (still used by StreamingLogisticRegressionWithSGD?) and they are not fully removed in Scala. Maybe should not have been deprecated.

### Why are the changes needed?

Deprecated items are easiest to remove in a major release, so we should do so as much as possible for Spark 3. This does not target items deprecated 'recently' as of Spark 2.3, which is still 18 months old.

### Does this PR introduce any user-facing change?

Yes, in that deprecated items are removed from some public APIs.

### How was this patch tested?

Existing tests.

Closes #25684 from srowen/SPARK-28980.

Lead-authored-by: Sean Owen <sean.owen@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

KinesisInputDStream currently does not provide a way to disable CloudWatch metrics push. Its default level is "DETAILED", which pushes 10s of metrics every 10 seconds. When dealing with multiple streaming jobs this adds up pretty quickly, leading to thousands of dollars in cost.

To address this problem, this PR adds interfaces for accessing KinesisClientLibConfiguration's `withMetrics` and `withMetricsEnabledDimensions` methods to KinesisInputDStream so that users can configure KCL's metrics levels and dimensions.

## How was this patch tested?

By running updated unit tests in KinesisInputDStreamBuilderSuite.

In addition, I ran a Streaming job with MetricsLevel.NONE and confirmed:

* there's no data point for the "Operation", "Operation, ShardId" and "WorkerIdentifier" dimensions on the AWS management console

* there's no DEBUG level message from Amazon KCL, such as "Successfully published xx datums."


Closes #24651 from sekikn/SPARK-27420.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This patch pools both Kafka consumers and fetched data. The overall benefits of the patch are as follows:

* Both pools support eviction of idle objects, which helps close invalid idle objects whose topic or partition is no longer assigned to any task.

* It also enables applying different policies per pool, which helps optimize pooling for each pool.

* We were concerned about multiple tasks pointing to the same topic partition as well as the same group id; the existing code can't handle this, hence excess seeks and fetches could happen. This patch properly handles the case.

* It also makes the code always safe to leverage the cache, hence there is no need to maintain the reuseCache parameter.

Moreover, pooling of Kafka consumers is implemented on top of Apache Commons Pool, which also gives a couple of benefits:

* We can get rid of synchronization of KafkaDataConsumer object while acquiring and returning InternalKafkaConsumer.

* We can extract the feature of object pool to outside of the class, so that the behaviors of the pool can be tested easily.

* We can get various statistics for the object pool, and also be able to enable JMX for the pool.

FetchedData instances are pooled by a custom pool implementation instead of Apache Commons Pool, because they have CacheKey as the first key and "desired offset" as the second key, and the "desired offset" keeps changing - I haven't found any general pool implementation supporting this.

This patch brings an additional dependency, Apache Commons Pool 2.6.0, into the `spark-sql-kafka-0-10` module.

## How was this patch tested?

Existing unit tests as well as new tests for object pool.

Also ran an experiment to verify concurrent access of consumers for the same topic partition.

* Made changes on both sides (master and patch) to log when a Kafka consumer is created or records are fetched from Kafka.

  * branches

    * master: https://github.com/HeartSaVioR/spark/tree/SPARK-25151-master-ref-debugging

    * patch: https://github.com/HeartSaVioR/spark/tree/SPARK-25151-debugging

* Test query (doing self-join)

  * https://gist.github.com/HeartSaVioR/d831974c3f25c02846f4b15b8d232cc2

* Ran the query from spark-shell, using `local[*]` to maximize the chance of concurrent access

* Collected the count of created Kafka consumers via command: `grep "creating new Kafka consumer" logfile | wc -l`

* Collected the count of fetch requests on Kafka via command: `grep "fetching data from Kafka consumer" logfile | wc -l`

Topic and data distribution are as follows:

```
truck_speed_events_stream_spark_25151_v1:0:99440
truck_speed_events_stream_spark_25151_v1:1:99489
truck_speed_events_stream_spark_25151_v1:2:397759
truck_speed_events_stream_spark_25151_v1:3:198917
truck_speed_events_stream_spark_25151_v1:4:99484
truck_speed_events_stream_spark_25151_v1:5:497320
truck_speed_events_stream_spark_25151_v1:6:99430
truck_speed_events_stream_spark_25151_v1:7:397887
truck_speed_events_stream_spark_25151_v1:8:397813
truck_speed_events_stream_spark_25151_v1:9:0
```

The experiment only used the 4 smallest partitions (0, 1, 4, 6) of these to finish the query earlier.

The result of the experiment is below:

branch | create Kafka consumer | fetch request
-- | -- | --
master | 1986 | 2837
patch | 8 | 1706

Closes #22138 from HeartSaVioR/SPARK-25151.

Lead-authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Co-authored-by: Jungtaek Lim <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

The Experimental and Evolving annotations are both (like Unstable) used to express that an API may change. However there are many things in the code that have been marked that way since even Spark 1.x. Per the dev thread, anything introduced at or before Spark 2.3.0 is pretty much 'stable' in that it would not change without a deprecation cycle. Therefore I'd like to remove most of these annotations. And, remove the `:: Experimental ::` scaladoc tag too. And likewise for Python, R.

The changes below can be summarized as:

- Generally, anything introduced at or before Spark 2.3.0 is no longer marked as Evolving or Experimental

- Obviously experimental items like DSv2, Barrier mode, ExperimentalMethods are untouched

- I _did_ unmark a few MLlib classes introduced in 2.4, as I am quite confident they're not going to change (e.g. KolmogorovSmirnovTest, PowerIterationClustering)

It's a big change to review, so I'd suggest scanning the list of _files_ changed to see if any area seems like it should remain partly experimental and examine those.

### Why are the changes needed?

Many of these annotations are incorrect; the APIs are de facto stable. Leaving them also makes legitimate usages of the annotations less meaningful.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25558 from srowen/SPARK-28855.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

At the moment Kafka parameter redaction expects `SparkEnv`. It must exist in normal queries, but several unit tests don't provide it in order to keep things simple. As a result such tests throw an exception like this:

```
java.lang.NullPointerException
    at org.apache.spark.kafka010.KafkaRedactionUtil$.redactParams(KafkaRedactionUtil.scala:29)
    at org.apache.spark.kafka010.KafkaRedactionUtilSuite.$anonfun$new$1(KafkaRedactionUtilSuite.scala:33)
    at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85)
    at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83)
    at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104)
    at org.scalatest.Transformer.apply(Transformer.scala:22)
    at org.scalatest.Transformer.apply(Transformer.scala:20)
    at org.scalatest.FunSuiteLike$$anon$1.apply(FunSuiteLike.scala:186)
    at org.apache.spark.SparkFunSuite.withFixture(SparkFunSuite.scala:149)
    at org.scalatest.FunSuiteLike.invokeWithFixture$1(FunSuiteLike.scala:184)
    at org.scalatest.FunSuiteLike.$anonfun$runTest$1(FunSuiteLike.scala:196)
    at org.scalatest.SuperEngine.runTestImpl(Engine.scala:289)
    at org.scalatest.FunSuiteLike.runTest(FunSuiteLike.scala:196)
    at org.scalatest.FunSuiteLike.runTest$(FunSuiteLike.scala:178)
    at org.apache.spark.SparkFunSuite.org$scalatest$BeforeAndAfterEach$$super$runTest(SparkFunSuite.scala:56)
    at org.scalatest.BeforeAndAfterEach.runTest(BeforeAndAfterEach.scala:221)
    at org.scalatest.BeforeAndAfterEach.runTest$(BeforeAndAfterEach.scala:214)
    at org.apache.spark.SparkFunSuite.runTest(SparkFunSuite.scala:56)
    at org.scalatest.FunSuiteLike.$anonfun$runTests$1(FunSuiteLike.scala:229)
    at org.scalatest.SuperEngine.$anonfun$runTestsInBranch$1(Engine.scala:396)
    at scala.collection.immutable.List.foreach(List.scala:392)
    at org.scalatest.SuperEngine.traverseSubNodes$1(Engine.scala:384)
    at org.scalatest.SuperEngine.runTestsInBranch(Engine.scala:379)
    at org.scalatest.SuperEngine.runTestsImpl(Engine.scala:461)
    at org.scalatest.FunSuiteLike.runTests(FunSuiteLike.scala:229)
    at org.scalatest.FunSuiteLike.runTests$(FunSuiteLike.scala:228)
    at org.scalatest.FunSuite.runTests(FunSuite.scala:1560)
    at org.scalatest.Suite.run(Suite.scala:1147)
    at org.scalatest.Suite.run$(Suite.scala:1129)
    at org.scalatest.FunSuite.org$scalatest$FunSuiteLike$$super$run(FunSuite.scala:1560)
    at org.scalatest.FunSuiteLike.$anonfun$run$1(FunSuiteLike.scala:233)
    at org.scalatest.SuperEngine.runImpl(Engine.scala:521)
    at org.scalatest.FunSuiteLike.run(FunSuiteLike.scala:233)
    at org.scalatest.FunSuiteLike.run$(FunSuiteLike.scala:232)
    at org.apache.spark.SparkFunSuite.org$scalatest$BeforeAndAfterAll$$super$run(SparkFunSuite.scala:56)
    at org.scalatest.BeforeAndAfterAll.liftedTree1$1(BeforeAndAfterAll.scala:213)
    at org.scalatest.BeforeAndAfterAll.run(BeforeAndAfterAll.scala:210)
    at org.scalatest.BeforeAndAfterAll.run$(BeforeAndAfterAll.scala:208)
    at org.apache.spark.SparkFunSuite.run(SparkFunSuite.scala:56)
    at org.scalatest.tools.SuiteRunner.run(SuiteRunner.scala:45)
    at org.scalatest.tools.Runner$.$anonfun$doRunRunRunDaDoRunRun$13(Runner.scala:1346)
    at org.scalatest.tools.Runner$.$anonfun$doRunRunRunDaDoRunRun$13$adapted(Runner.scala:1340)
    at scala.collection.immutable.List.foreach(List.scala:392)
    at org.scalatest.tools.Runner$.doRunRunRunDaDoRunRun(Runner.scala:1340)
    at org.scalatest.tools.Runner$.$anonfun$runOptionallyWithPassFailReporter$24(Runner.scala:1031)
    at org.scalatest.tools.Runner$.$anonfun$runOptionallyWithPassFailReporter$24$adapted(Runner.scala:1010)
    at org.scalatest.tools.Runner$.withClassLoaderAndDispatchReporter(Runner.scala:1506)
    at org.scalatest.tools.Runner$.runOptionallyWithPassFailReporter(Runner.scala:1010)
    at org.scalatest.tools.Runner$.run(Runner.scala:850)
    at org.scalatest.tools.Runner.run(Runner.scala)
    at org.jetbrains.plugins.scala.testingSupport.scalaTest.ScalaTestRunner.runScalaTest2(ScalaTestRunner.java:131)
    at org.jetbrains.plugins.scala.testingSupport.scalaTest.ScalaTestRunner.main(ScalaTestRunner.java:28)
```

These are annoying red herrings, so I would like to make them disappear.

There are basically 2 ways to handle this situation:

* Add default value for `SparkEnv` in `KafkaRedactionUtil`

* Add `SparkEnv` to all such tests => I think it would be overkill and would just increase number of lines without real value

Considering this I've chosen the first approach.
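The idea of the first approach can be sketched without Spark on the classpath. Everything below is a stand-in: `Env`, `env`, `DefaultPattern` and the `[redacted]` marker are hypothetical and only illustrate wrapping a possibly-null global in `Option` and falling back to a default.

```scala
import scala.util.matching.Regex

// Sketch only: `Env` is a hypothetical stand-in for a global environment object
// that is null when nothing has initialised it (as in plain unit tests).
object SafeRedaction {
  final class Env(val redactionPattern: Regex)

  // Null by default, mimicking a test that never sets up the environment.
  @volatile var env: Env = null

  // Hypothetical default used when no environment is available.
  val DefaultPattern: Regex = "(?i)secret|password".r

  def redactParams(params: Seq[(String, String)]): Seq[(String, String)] = {
    // Option(null) is None, so this never dereferences a missing environment.
    val pattern = Option(env).map(_.redactionPattern).getOrElse(DefaultPattern)
    params.map { case (k, v) =>
      if (pattern.findFirstIn(k).isDefined) k -> "[redacted]" else k -> v
    }
  }
}
```

Calling `SafeRedaction.redactParams` with `env` left as `null` redacts by the default pattern instead of throwing a `NullPointerException`.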

### Why are the changes needed?

A couple of tests throw exceptions even though there is no real problem.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New + additional unit tests.

Closes #25621 from gaborgsomogyi/safe-reduct.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

At the moment no end-to-end Kafka delegation token test exists, mainly because an embedded KDC was missing. A KDC is missing from the testing side in general, so I've explored what options are available. The most obvious choice is the MiniKDC inside the Hadoop library, where Apache Kerby runs in the background. What this PR contains:

* Added MiniKDC as test dependency from Hadoop

* Added `maven-bundle-plugin` because a couple of dependencies come in bundle format

* Added security mode to `KafkaTestUtils`, namely: start KDC -> start Zookeeper in secure mode -> start Kafka in secure mode

* Added a roundtrip test (saves and reads back data from Kafka)

### Why are the changes needed?

No such test exists + security testing with KDC is completely missing.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing + additional unit tests.

I've run the additional test in a loop, and it took ~10 sec on average.

Closes #25477 from gaborgsomogyi/SPARK-28760.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Currently we have 2 configs to specify which v2 sources should fall back to the v1 code path: one config for the read path, and one config for the write path.

However, I found it awkward to work with these 2 configs:

1. for `CREATE TABLE USING format`, should this be read path or write path?

2. for `V2SessionCatalog.loadTable`, we need to return `UnresolvedTable` if it's a DS v1 source or if we need to fall back to the v1 code path. However, at that time, we don't know if the returned table will be used for read or write.

We don't have any new features or perf improvements in file source v2. The fallback API is just a safeguard in case we have bugs in v2 implementations. There is not much benefit in supporting fallback to v1 for the read and write paths separately.

This PR proposes to merge these 2 configs into one.

## How was this patch tested?

existing tests

Closes #25465 from cloud-fan/merge-conf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch proposes to reuse KafkaSourceInitialOffsetWriter to remove identical code in KafkaSource.

Credit to jaceklaskowski for finding this.

https://lists.apache.org/thread.html/7faa6ac29d871444eaeccefc520e3543a77f4362af4bb0f12a3f7cb2%3Cdev.spark.apache.org%3E

### Why are the changes needed?

The code is duplicated, which means the copies have to be maintained separately and a bug fixed on one side might remain unaddressed on the other.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs, as it's a simple refactor.

Closes #25583 from HeartSaVioR/MINOR-SS-reuse-KafkaSourceInitialOffsetWriter.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

When a task retry happens with the Kafka source, it's not known whether the consumer is the issue, so the old consumer is removed from the cache and a new consumer is created. The feature works fine but is not covered by tests.

In this PR I've added such tests for DStreams + Structured Streaming.

### Why are the changes needed?

No such tests are there.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing + new unit tests.

Closes #25582 from gaborgsomogyi/SPARK-28875.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

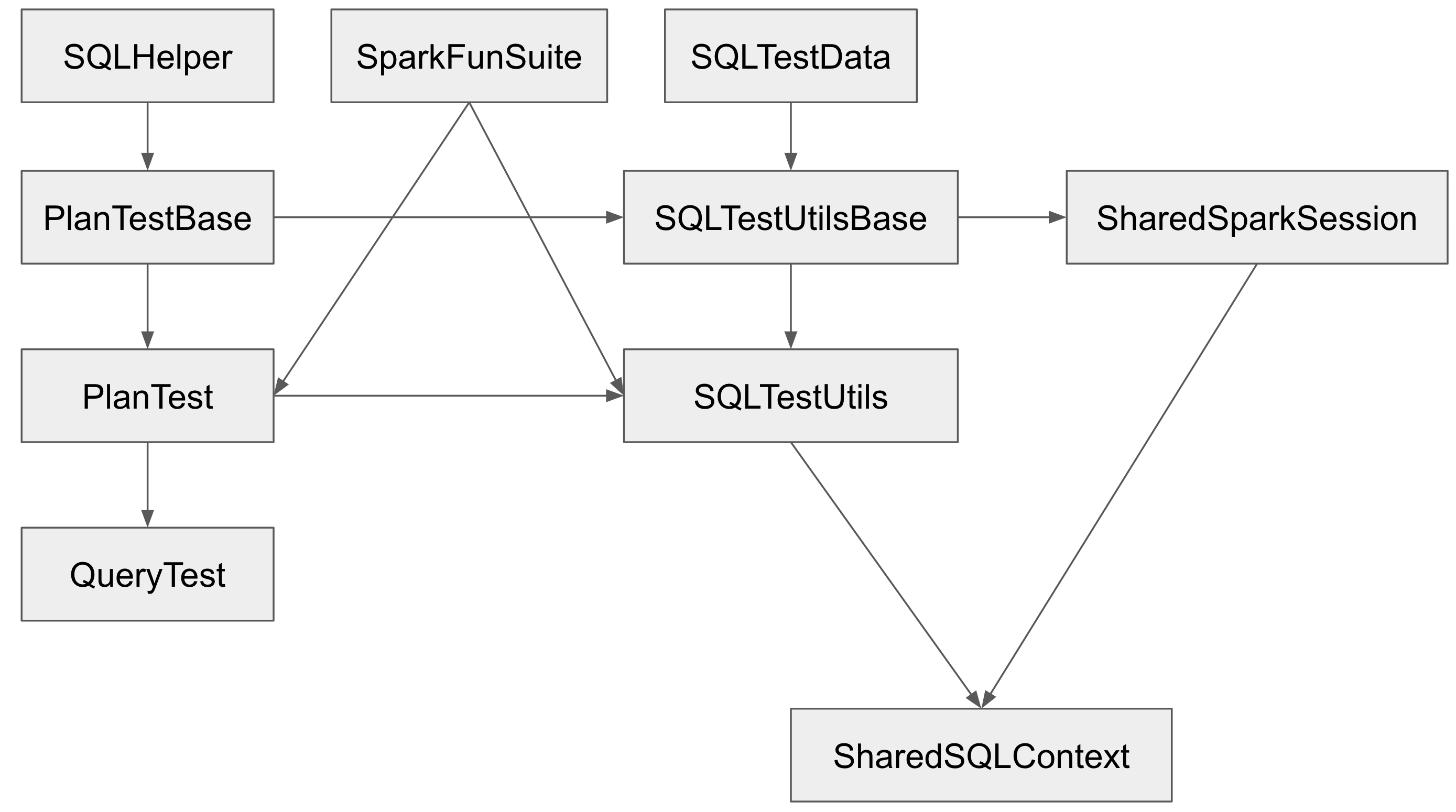

The Spark SQL test framework needs to support 2 kinds of tests:

1. tests inside Spark to test Spark itself (extends `SparkFunSuite`)

2. test outside of Spark to test Spark applications (introduced at b57ed2245c)

The class hierarchy of the major testing traits:

`PlanTestBase`, `SQLTestUtilsBase` and `SharedSparkSession` intentionally don't extend `SparkFunSuite`, so that they can be used for tests outside of Spark. Tests in Spark should extend `QueryTest` and/or `SharedSQLContext` in most cases.

However, the names are a little confusing. As a result, some test suites extend `SharedSparkSession` instead of `SharedSQLContext`. `SharedSparkSession` doesn't work well with `SparkFunSuite` as it doesn't have the special handling of thread auditing in `SharedSQLContext`. For example, you will see a warning starting with `===== POSSIBLE THREAD LEAK IN SUITE` when you run `DataFrameSelfJoinSuite`.

This PR proposes to rename `SharedSparkSession` to `SharedSparkSessionBase`, and rename `SharedSQLContext` to `SharedSparkSession`.

## How was this patch tested?


Closes #25463 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

[SPARK-28163](https://issues.apache.org/jira/browse/SPARK-28163) fixed a bug, and during the analysis we concluded it would be more robust to use `CaseInsensitiveMap` inside the Kafka source. That way fewer lower/upper case problems should arise in the future.

Please note this PR doesn't intend to solve any actual problem but to finish the concept added in [SPARK-28163](https://issues.apache.org/jira/browse/SPARK-28163) (in a fix PR I didn't want to add too invasive changes). In this PR I've changed `Map[String, String]` to `CaseInsensitiveMap[String]` to enforce the usage. These are the main use-cases:

* `contains` => `CaseInsensitiveMap` solves it

* `get...` => `CaseInsensitiveMap` solves it

* `filter` => keys must be converted to lowercase because there is no guarantee that the incoming map has such key set

* `find` => keys must be converted to lowercase because there is no guarantee that the incoming map has such key set

* passing parameters to Kafka consumer/producer => keys must be converted to lowercase because there is no guarantee that the incoming map has such key set
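
The difference between lookups and `filter`/`find` in the list above can be sketched as follows. This is only an illustration: `LowerCaseKeyMap` is a hypothetical stand-in, not Spark's `CaseInsensitiveMap`, and the option names are made up for the example.

```scala
import java.util.Locale

// Hypothetical stand-in for a case-insensitive map; NOT Spark's CaseInsensitiveMap.
final class LowerCaseKeyMap(original: Map[String, String]) {
  private val lowered: Map[String, String] =
    original.map { case (k, v) => k.toLowerCase(Locale.ROOT) -> v }

  // Lookups normalise the requested key, so any casing works.
  def get(key: String): Option[String] = lowered.get(key.toLowerCase(Locale.ROOT))
  def contains(key: String): Boolean = lowered.contains(key.toLowerCase(Locale.ROOT))

  // filter/find operate on the stored keys, so the comparison text must be lowercased.
  def withPrefix(prefix: String): Map[String, String] = {
    val p = prefix.toLowerCase(Locale.ROOT)
    lowered.filter { case (k, _) => k.startsWith(p) }
  }
}

object LowerCaseKeyMapDemo {
  // Illustrative options with mixed-case keys.
  val options = new LowerCaseKeyMap(Map(
    "Kafka.Bootstrap.Servers" -> "localhost:9092",
    "startingOffsets" -> "earliest"))
}
```

With these definitions, `LowerCaseKeyMapDemo.options.get("STARTINGOFFSETS")` finds the value regardless of casing, while `withPrefix` only matches because the prefix is lowercased first.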

## How was this patch tested?

Existing unit tests.

Closes#25418 from gaborgsomogyi/SPARK-28695.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The mapping of Spark schema to Avro schema is many-to-many. (See https://spark.apache.org/docs/latest/sql-data-sources-avro.html#supported-types-for-spark-sql---avro-conversion)

The default schema mapping might not be exactly what users want. For example, by default, a "string" column is always written as "string" Avro type, but users might want to output the column as "enum" Avro type.

With PR https://github.com/apache/spark/pull/21847, Spark supports user-specified schema in the batch writer.

For the function `to_avro`, we should support user-specified output schema as well.

## How was this patch tested?

Unit test.

Closes#25419 from gengliangwang/to_avro.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR fixes typos in comments and replaces explicit type arguments with the diamond operator `<>` for Java 8+.

## How was this patch tested?

Manually tested.

Closes#25338 from younggyuchun/younggyu.

Authored-by: younggyu chun <younggyuchun@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

There are "unsafe" conversions in the Kafka connector.

A `CaseInsensitiveStringMap` comes in and is then converted the following way:

```
...
options.asScala.toMap
...
```

The main problem is that after this conversion the map loses its case-insensitive nature (a case-insensitive map converts the key to lower case when `get`/`contains` is called).

In this PR I'm using `CaseInsensitiveMap` to solve this problem.

## How was this patch tested?

Existing + additional unit tests.

Closes#24967 from gaborgsomogyi/SPARK-28163.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`KafkaOffsetRangeCalculator.getRanges` may drop offsets due to round-off errors. The test added in this PR is one example.

This PR rewrites the logic in `KafkaOffsetRangeCalculator.getRanges` to ensure it never drops offsets.
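
The kind of round-off problem described above can be illustrated with a small sketch. This is not the code from the PR: `splitNaive` and `splitExact` are hypothetical helpers that split a single offset range `[from, until)` into `parts` pieces.

```scala
object OffsetSplitSketch {
  // Naive split: every part gets the same truncated size, so the remainder is lost.
  def splitNaive(from: Long, until: Long, parts: Int): Seq[(Long, Long)] = {
    require(parts > 0, "parts must be positive")
    val step = (until - from) / parts
    (0 until parts).map(i => (from + i * step, from + (i + 1) * step))
  }

  // Boundary-based split: part i ends exactly where part i + 1 starts, and the
  // last boundary is `until`, so no offset can be dropped.
  def splitExact(from: Long, until: Long, parts: Int): Seq[(Long, Long)] = {
    require(parts > 0, "parts must be positive")
    val size = until - from
    (0 until parts).map { i =>
      (from + size * i / parts, from + size * (i + 1) / parts)
    }
  }

  // Total number of offsets covered by a list of [start, end) ranges.
  def covered(ranges: Seq[(Long, Long)]): Long =
    ranges.map { case (start, end) => end - start }.sum
}
```

Splitting `[0, 10)` into 3 parts covers only 9 offsets with the naive version, while the boundary-based version covers all 10.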

## How was this patch tested?

The regression test.

Closes#25237 from zsxwing/fix-range.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR changes `CalendarIntervalType`'s readable string representation from `calendarinterval` to `interval`.

## How was this patch tested?

Existing UT

Closes#25225 from wangyum/SPARK-28469.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

PostgreSQL doesn't have `TINYINT`, which would map directly, but `SMALLINT`s are sufficient for uni-directional translation.

A side-effect of this fix is that `AggregatedDialect` is now usable with multiple dialects targeting `jdbc:postgresql`, as `PostgresDialect.getJDBCType` no longer throws (for which reason backporting this fix would be lovely):

1217996f15/sql/core/src/main/scala/org/apache/spark/sql/jdbc/AggregatedDialect.scala (L42)

`dialects.flatMap` currently throws on the first attempt to get a JDBC type, preventing subsequent dialects in the chain from providing an alternative.
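
A simplified sketch of why a throwing dialect blocks the rest of the chain. The `Dialect` trait, the two dialect objects and the string type names are hypothetical stand-ins, not Spark's `JdbcDialect` API.

```scala
object DialectChainSketch {
  // Hypothetical, simplified dialect: maps a Spark type name to a JDBC type name.
  trait Dialect {
    def getJdbcType(sparkType: String): Option[String]
  }

  // Throws for an unsupported type, like the old behaviour described above.
  object ThrowingDialect extends Dialect {
    def getJdbcType(sparkType: String): Option[String] = sparkType match {
      case "ByteType" => throw new IllegalArgumentException(s"Unsupported type $sparkType")
      case _ => None
    }
  }

  // Returns a mapping instead of throwing.
  object MappingDialect extends Dialect {
    def getJdbcType(sparkType: String): Option[String] = sparkType match {
      case "ByteType" => Some("SMALLINT")
      case _ => None
    }
  }

  // The first dialect that yields a mapping wins; an exception aborts the whole chain.
  def firstMapping(dialects: Seq[Dialect], sparkType: String): Option[String] =
    dialects.flatMap(_.getJdbcType(sparkType)).headOption
}
```

If `ThrowingDialect` comes first in the sequence, `firstMapping` never reaches `MappingDialect`; a dialect that returns `None` or a mapping lets the chain continue.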

## How was this patch tested?

Unit tests.

Closes#24845 from mojodna/postgres-byte-type-mapping.

Authored-by: Seth Fitzsimmons <seth@mojodna.net>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

#25010 broke the integration test suite by changing the user-facing exception as shown below. This PR fixes the integration test suite.

```diff
-    require(
-      decimalVal.precision <= precision,
-      s"Decimal precision ${decimalVal.precision} exceeds max precision $precision")
+    if (decimalVal.precision > precision) {
+      throw new ArithmeticException(
+        s"Decimal precision ${decimalVal.precision} exceeds max precision $precision")
+    }
```

## How was this patch tested?

Manual test.

```
$ build/mvn install -DskipTests
$ build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test
```

Closes#25165 from dongjoon-hyun/SPARK-28201.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to correct mappings in `MsSqlServerDialect`. `ShortType` is mapped to `SMALLINT` and `FloatType` is mapped to `REAL`, per the [JDBC mapping](https://docs.microsoft.com/en-us/sql/connect/jdbc/using-basic-data-types?view=sql-server-2017).

`ShortType` and `FloatType` are not correctly mapped to the right JDBC types when using the JDBC connector. This results in tables and Spark DataFrames being created with unintended types. The issue was observed when validating against SQL Server.

Refer to the [JDBC mapping](https://docs.microsoft.com/en-us/sql/connect/jdbc/using-basic-data-types?view=sql-server-2017) for guidance on mappings between SQL Server, JDBC and Java. Note that the Java `Short` type should be mapped to JDBC `SMALLINT` and the Java `Float` type should be mapped to JDBC `REAL`.

Some example issues that can happen because of the wrong mappings:

- Writing a DataFrame with a `ShortType` column results in a SQL table with column type INTEGER as opposed to SMALLINT, and thus a larger table than expected.

- Reading results in a DataFrame with type INTEGER as opposed to `ShortType`.

- `ShortType` has a problem in both the write and read paths.

- `FloatType` only has an issue in the read path. In the write path, the Spark data type `FloatType` is correctly mapped to the JDBC equivalent data type `REAL`. But in the read path, when JDBC data types need to be converted to Catalyst data types (`getCatalystType`), `REAL` incorrectly gets mapped to `DoubleType` rather than `FloatType`.

Refer to #28151, which contained this fix as one part of a larger PR. Following the discussion on PR #28151, it was decided to file separate PRs for each of the fixes.
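
The intended mappings can be summarised in a small sketch. The `SparkType` objects and the two helper functions here are hypothetical and only mirror the mappings named above; they are not the `MsSqlServerDialect` code.

```scala
import java.sql.Types

object SqlServerMappingSketch {
  // Hypothetical stand-ins for the two Catalyst types discussed above.
  sealed trait SparkType
  case object ShortType extends SparkType
  case object FloatType extends SparkType

  // Write path: Spark type to the SQL type name used in the created table.
  def toJdbcTypeName(t: SparkType): String = t match {
    case ShortType => "SMALLINT"
    case FloatType => "REAL"
  }

  // Read path: JDBC type code back to the Spark type; other codes are left
  // to the default handling (represented by None in this sketch).
  def toSparkType(sqlType: Int): Option[SparkType] = sqlType match {
    case Types.SMALLINT => Some(ShortType)
    case Types.REAL => Some(FloatType)
    case _ => None
  }
}
```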

## How was this patch tested?

Unit tests were added in `JDBCSuite.scala` and run.

Integration test updated and passed in MsSqlServerDialect.scala.

An end-to-end test was done with SQL Server.

Closes#25146 from shivsood/float_short_type_fix.

Authored-by: shivsood <shivsood@microsoft.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This patch proposes moving all Trigger implementations to `Triggers.scala`, to avoid exposing these implementations to end users and to let end users deal only with the `Trigger.xxx` static methods. This fits the intention behind the deprecation of `ProcessingTime`, and we agreed to move the others without deprecation because this patch will be shipped in a major version (Spark 3.0.0).

## How was this patch tested?

UTs modified to work with newly introduced class.

Closes#24996 from HeartSaVioR/SPARK-28199.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Some configs are hardcoded; this PR replaces them with config entries.

## How was this patch tested?

Existing UT

Closes#25059 from WangGuangxin/ConfigEntry.

Authored-by: wangguangxin.cn <wangguangxin.cn@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

At the moment Kafka delegation tokens are fetched through `AdminClient` but there is no possibility to add custom configuration parameters. In [options](https://spark.apache.org/docs/2.4.3/structured-streaming-kafka-integration.html#kafka-specific-configurations) there is already a possibility to add custom configurations.

In this PR I've added a similar possibility to `AdminClient`.

## How was this patch tested?

Existing + added unit tests.

```
cd docs/
SKIP_API=1 jekyll build
```

Manual webpage check.

Closes#24875 from gaborgsomogyi/SPARK-28055.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

`DirectKafkaStreamSuite.offset recovery from kafka` commits offsets to Kafka with the `Consumer.commitAsync` API (and then reads them back). Since this API is asynchronous, it may send notifications late (or not at all). The current test assumes that if the data has been sent and collected, then the offsets must have been committed as well. This is not true.

In this PR I've made the following modifications:

* Wait for async offset commit before context stopped

* Added commit succeed log to see whether it arrived at all

* Using `ConcurrentHashMap` for committed offsets because 2 threads are using the variable (`JobGenerator` and `ScalaTest...`)
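
The waiting pattern from the list above can be sketched without Kafka. `commitAsync` below is a hypothetical stand-in that simply runs its callback on another thread; it is not the Kafka `Consumer.commitAsync` API, and the names are illustrative.

```scala
import java.util.concurrent.{ConcurrentHashMap, CountDownLatch, TimeUnit}

object AsyncCommitSketch {
  // Thread-safe store: written by the callback thread, read by the test thread.
  val committed = new ConcurrentHashMap[String, Long]()

  // Hypothetical asynchronous commit: the callback fires on a separate thread.
  def commitAsync(offsets: Map[String, Long])(onComplete: Map[String, Long] => Unit): Unit = {
    val t = new Thread(new Runnable {
      override def run(): Unit = onComplete(offsets)
    })
    t.setDaemon(true)
    t.start()
  }

  // Commits and blocks until the callback has fired or the timeout expires.
  // Returns false when no acknowledgement arrived in time.
  def commitAndWait(offsets: Map[String, Long], timeoutMs: Long): Boolean = {
    val done = new CountDownLatch(1)
    commitAsync(offsets) { acked =>
      acked.foreach { case (partition, offset) => committed.put(partition, offset) }
      done.countDown()
    }
    done.await(timeoutMs, TimeUnit.MILLISECONDS)
  }
}
```

A test built this way only inspects `committed` after `commitAndWait` has returned `true`, instead of assuming the commit completed once the data was collected.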

## How was this patch tested?

Existing unit test in a loop + jenkins runs.

Closes#25100 from gaborgsomogyi/SPARK-28335.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Migrate Avro to File source V2.

## How was this patch tested?

Unit test

Closes#25017 from gengliangwang/avroV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR upgrades the Postgres Docker image for integration tests.

## How was this patch tested?

manual tests:

```
./build/mvn install -DskipTests
./build/mvn test -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12
```

Closes#25050 from wangyum/SPARK-28248.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This patch adds missing UT which tests the changed behavior of original patch #24942.

## How was this patch tested?

Newly added UT.

Closes#24999 from HeartSaVioR/SPARK-28142-FOLLOWUP.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

According to the documentation `groupIdPrefix` should be available for `streaming and batch`.

It is not the case because the batch part is missing.

In this PR I've added:

* Structured Streaming test for v1 and v2 to cover `groupIdPrefix`

* Batch test for v1 and v2 to cover `groupIdPrefix`

* Added `groupIdPrefix` usage in batch

## How was this patch tested?

Additional + existing unit tests.

Closes#25030 from gaborgsomogyi/SPARK-28232.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The Kafka batch data source is using v1 at the moment. In this PR I've migrated it to v2. The majority of the change is moving code.

What this PR contains:

* useV1Sources usage fixed in `DataFrameReader` and `DataFrameWriter`

* `KafkaBatch` added to handle DSv2 batch reading

* `KafkaBatchWrite` added to handle DSv2 batch writing

* `KafkaBatchPartitionReader` extracted to share between batch and microbatch

* `KafkaDataWriter` extracted to share between batch, microbatch and continuous

* Batch related source/sink tests are now executing on v1 and v2 connectors

* Couple of classes hidden now, functions moved + couple of minor fixes

## How was this patch tested?

Existing + added unit tests.

Closes#24738 from gaborgsomogyi/SPARK-23098.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch addresses a missed spot where a Map should be passed as `CaseInsensitiveStringMap`; `KafkaContinuousStream` seems to be the only one missed.

Before this fix there was a related bug: `pollTimeoutMs` was always set to its default value, because the value of `KafkaSourceProvider.CONSUMER_POLL_TIMEOUT` is `kafkaConsumer.pollTimeoutMs` while a key-lowercased map was provided as `sourceOptions`.
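
A minimal sketch of that lookup miss, using plain Scala maps. The default value and the helper names are placeholders chosen for the illustration, not values taken from Spark.

```scala
import java.util.Locale

object PollTimeoutSketch {
  val PollTimeoutKey = "kafkaConsumer.pollTimeoutMs"
  // Placeholder default for this sketch only; not Spark's actual default.
  val DefaultPollTimeoutMs = 1000L

  // Plain map whose keys were lowercased upstream.
  def lowered(options: Map[String, String]): Map[String, String] =
    options.map { case (k, v) => k.toLowerCase(Locale.ROOT) -> v }

  // Bug pattern: a camel-case key never matches a lowercased key,
  // so the default is always returned.
  def pollTimeoutBuggy(sourceOptions: Map[String, String]): Long =
    sourceOptions.get(PollTimeoutKey).map(_.toLong).getOrElse(DefaultPollTimeoutMs)

  // Fix pattern: normalise the key before the lookup
  // (or pass a case-insensitive map instead of a plain one).
  def pollTimeoutFixed(sourceOptions: Map[String, String]): Long =
    sourceOptions
      .get(PollTimeoutKey.toLowerCase(Locale.ROOT))
      .map(_.toLong)
      .getOrElse(DefaultPollTimeoutMs)
}
```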

## How was this patch tested?

N/A.

Closes#24942 from HeartSaVioR/MINOR-use-case-insensitive-map-for-kafka-continuous-source.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

When using `from_avro` to deserialize avro data to catalyst StructType format, if `ConvertToLocalRelation` is applied at the time, `from_avro` produces only the last value (overriding previous values).

The cause is that `AvroDeserializer` reuses the output row for StructType. Normally, that is fine in Spark SQL. But `ConvertToLocalRelation` just uses `InterpretedProjection` to project local rows. Although `InterpretedProjection` creates a new row for each output, each one includes the same nested row object from `AvroDeserializer`. In the end, the converted local relation has only the last value.

I think there're two possible options:

1. Make `AvroDeserializer` output new row for StructType.

2. Use `InterpretedMutableProjection` in `ConvertToLocalRelation` and call `copy()` on output rows.

Option 2 is chosen because `ConvertToLocalRelation` previously also created new rows, so `InterpretedMutableProjection` + `copy()` shouldn't bring too much performance penalty. `ConvertToLocalRelation` is also arguably less critical than `AvroDeserializer`.
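
The reuse problem and the effect of copying can be shown with a toy example. `ReusingDeserializer` is a hypothetical class that reuses a single mutable buffer; it only imitates the behaviour described above and has nothing to do with the real `AvroDeserializer` internals.

```scala
object RowReuseSketch {
  // Hypothetical deserializer that reuses one mutable output buffer.
  final class ReusingDeserializer {
    private val row = new Array[Int](1)
    def deserialize(value: Int): Array[Int] = {
      row(0) = value
      row
    }
  }

  // Keeps references to the shared buffer: every element ends up
  // showing the last value written.
  def collectWithoutCopy(values: Seq[Int]): Seq[Int] = {
    val d = new ReusingDeserializer
    values.map(d.deserialize).map(_(0))
  }

  // Copies each row before the buffer is overwritten by the next value.
  def collectWithCopy(values: Seq[Int]): Seq[Int] = {
    val d = new ReusingDeserializer
    values.map(v => d.deserialize(v).clone()).map(_(0))
  }
}
```

For the input `Seq(1, 2, 3)`, the version without copying yields `3, 3, 3`, while the copying version preserves `1, 2, 3`.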

## How was this patch tested?

Added test.

Closes#24805 from viirya/SPARK-27798.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Kafka parameters are logged in several places, and the following parameters have to be redacted:

* Delegation token

* `ssl.truststore.password`

* `ssl.keystore.password`

* `ssl.key.password`

This PR contains:

* Spark central redaction framework used to redact passwords (`spark.redaction.regex`)

* Custom redaction added to handle `sasl.jaas.config` (delegation token)

* Redaction code added into consumer/producer code

* Test refactor
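
A rough sketch of the two kinds of redaction listed above, in plain Scala. The key pattern, the replacement text and the JAAS handling are assumptions made for this example; the real behaviour is driven by `spark.redaction.regex` and the code in the PR.

```scala
import scala.util.matching.Regex

object RedactionSketch {
  // Assumed key pattern for this sketch; the real one is configured
  // through `spark.redaction.regex`.
  val keyPattern: Regex = "(?i)secret|password|token".r
  val Replacement = "*********(redacted)"

  // Replaces the value of every parameter whose key matches the pattern.
  def redact(params: Seq[(String, String)]): Seq[(String, String)] =
    params.map { case (k, v) =>
      if (keyPattern.findFirstIn(k).isDefined) (k, Replacement) else (k, v)
    }

  // Custom handling for a JAAS config string: the key does not match the
  // pattern, so the password inside the value is masked instead.
  def redactJaas(jaas: String): String =
    jaas.replaceAll("password=\"[^\"]*\"", "password=\"[hidden]\"")
}
```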

## How was this patch tested?

Existing + additional unit tests.

Closes#24627 from gaborgsomogyi/SPARK-27748.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR aims to fix an issue with a union Avro type with more than one non-null value (for instance `["string", "null", "int"]`) whose deserialization to a DataFrame would throw a `java.lang.ArrayIndexOutOfBoundsException`. The issue was that the `fieldWriter` relied on the index from the Avro schema before nulls were filtered out.
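
The index mismatch can be reproduced with plain collections. This is only a sketch of the pattern: the names are hypothetical, and the exact exception type differs from the one thrown in Spark.

```scala
object UnionIndexSketch {
  // Avro union branches in schema order.
  val branches: Seq[String] = Seq("string", "null", "int")

  // Target fields exist only for the non-null branches.
  val nonNull: Seq[String] = branches.filterNot(_ == "null")

  // Bug pattern: indexing the filtered list with a position taken
  // from the unfiltered schema.
  def fieldBySchemaIndex(schemaIndex: Int): String = nonNull(schemaIndex)

  // Fix pattern: translate the schema position into the position among
  // non-null branches (None for the null branch itself).
  def filteredIndex(schemaIndex: Int): Option[Int] =
    if (branches(schemaIndex) == "null") None
    else Some(branches.take(schemaIndex).count(_ != "null"))
}
```

Here `"int"` sits at schema index 2 but at index 1 among the non-null branches, so using the schema index directly runs past the end of the filtered list.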

## How was this patch tested?

A test for the case of multiple non-null values was added and the tests were run using sbt by running `testOnly org.apache.spark.sql.avro.AvroSuite`

Closes#24722 from gcmerz/master.

Authored-by: Gabbi Merz <gmerz@palantir.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

When the data is read from the sources, the catalyst schema is always nullable. Since Avro uses Union type to represent nullable, when any non-nullable avro file is read and then written out, the schema will always be changed.

This PR provides a solution for users to keep the Avro schema without being forced to use Union type.

## How was this patch tested?

One test is added.

Closes#24682 from dbtsai/avroNull.

Authored-by: DB Tsai <d_tsai@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Use https URL for CRAN repo (and for a Scala download in a Dockerfile)

## How was this patch tested?

Existing tests.

Closes#24664 from srowen/SPARK-27794.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Remove obsolete spark-mesos Dockerfile example. This isn't tested and apparently hasn't been updated in 4 years.

## How was this patch tested?

N/A

Closes#24667 from srowen/SPARK-27796.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Spark Avro reader supports reading avro files with provided schema with different field orderings. However, the avro writer doesn't support this feature. This PR enables the Spark avro writer with this feature.
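
Conceptually, supporting a different field ordering means matching fields by name rather than by position. A minimal sketch of that idea, with a hypothetical `reorder` helper that is not the Avro writer's code:

```scala
object FieldOrderSketch {
  // Reorders record values to follow the target schema, matching fields by name.
  def reorder(record: Seq[(String, Any)], targetOrder: Seq[String]): Seq[Any] = {
    val byName = record.toMap
    targetOrder.map { name =>
      byName.getOrElse(name, throw new IllegalArgumentException(s"Missing field $name"))
    }
  }
}
```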

## How was this patch tested?

New test is added.

Closes#24635 from dbtsai/avroFix.

Lead-authored-by: DB Tsai <d_tsai@apple.com>

Co-authored-by: Brian Lindblom <blindblom@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Kafka-related Spark parameters have to start with `spark.kafka.` and not with `spark.sql.`. Because of this I've renamed `spark.sql.kafkaConsumerCache.capacity`.

Since Kafka consumer caching is not documented I've added this also.

## How was this patch tested?

Existing + added unit test.

```
cd docs
SKIP_API=1 jekyll build
```

and manual webpage check.

Closes#24590 from gaborgsomogyi/SPARK-27687.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This removes usage of `Traversable`, which is removed in Scala 2.13. This is mostly an internal change, except for the change in the `SparkConf.setAll` method. See additional comments below.

## How was this patch tested?

Existing tests.

Closes#24584 from srowen/SPARK-27680.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

[SPARK-27343](https://issues.apache.org/jira/projects/SPARK/issues/SPARK-27343)

Based on the previous PR: https://github.com/apache/spark/pull/24270

Extract the parameters and build `ConfigEntry` objects for them.

For example:

for the parameter `spark.kafka.producer.cache.timeout`, we build

```
private[kafka010] val PRODUCER_CACHE_TIMEOUT =
  ConfigBuilder("spark.kafka.producer.cache.timeout")
    .doc("The expire time to remove the unused producers.")
    .timeConf(TimeUnit.MILLISECONDS)
    .createWithDefaultString("10m")
```

Closes#24574 from hehuiyuan/hehuiyuan-patch-9.

Authored-by: hehuiyuan <hehuiyuan@ZBMAC-C02WD3K5H.local>

Signed-off-by: Sean Owen <sean.owen@databricks.com>