### What changes were proposed in this pull request?

**Hive 2.3.7** fixed these issues:

- HIVE-21508: ClassCastException when initializing HiveMetaStoreClient on JDK10 or newer

- HIVE-21980: Parsing time can be high in case of deeply nested subqueries

- HIVE-22249: Support Parquet through HCatalog

### Why are the changes needed?

Fix the ClassCastException (CCE) thrown when creating HiveMetaStoreClient in a JDK 11 environment: [SPARK-29245](https://issues.apache.org/jira/browse/SPARK-29245).

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

- [x] Test Jenkins with Hadoop 2.7 (https://github.com/apache/spark/pull/28148#issuecomment-616757840)

- [x] Test Jenkins with Hadoop 3.2 on JDK11 (https://github.com/apache/spark/pull/28148#issuecomment-616294353)

- [x] Manual test with remote hive metastore.

Hive side:

```

export JAVA_HOME=/usr/lib/jdk1.8.0_221

export PATH=$JAVA_HOME/bin:$PATH

cd /usr/lib/hive-2.3.6 # Start Hive metastore with Hive 2.3.6

bin/schematool -dbType derby -initSchema --verbose

bin/hive --service metastore

```

Spark side:

```

export JAVA_HOME=/usr/lib/jdk-11.0.3

export PATH=$JAVA_HOME/bin:$PATH

build/sbt clean package -Phive -Phadoop-3.2 -Phive-thriftserver

export SPARK_PREPEND_CLASSES=true

bin/spark-sql --conf spark.hadoop.hive.metastore.uris=thrift://localhost:9083

```

Closes #28148 from wangyum/SPARK-31381.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR is a follow-up to https://github.com/apache/spark/pull/28003:

- add a migration guide

- add an end-to-end test case.

### Why are the changes needed?

The original PR made a major behavior change in the user-facing RESET command.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a new end-to-end test

Closes #28265 from gatorsmile/spark-31234followup.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

This adds a description of the newly supported `nfs` volume type to the documentation for Apache Spark 3.1.0.

### Why are the changes needed?

To complete the document.

### Does this PR introduce any user-facing change?

Yes. (Doc)

### How was this patch tested?

Manually generate doc and check it.

```

SKIP_API=1 jekyll build

```

Closes #28236 from dongjoon-hyun/SPARK-NFS-DOC.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Update the document and shell script to warn users that support for multiple workers on the same host is deprecated.

### Why are the changes needed?

This is a sub-task of [SPARK-30978](https://issues.apache.org/jira/browse/SPARK-30978), which plans to completely remove support for multiple workers in Spark 3.1. This PR takes the first step by deprecating it in Spark 3.0.

### Does this PR introduce any user-facing change?

Yes, users see a warning when they run the start worker script.

### How was this patch tested?

Tested manually.

Closes #27768 from Ngone51/deprecate_spark_worker_instances.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

Add a SQL example for UDAF

### Why are the changes needed?

To make SQL Reference complete

### Does this PR introduce any user-facing change?

Yes.

Adds the following page and changes `Sql` to `SQL` in the example tab for all SQL examples.

<img width="1110" alt="Screen Shot 2020-04-13 at 6 09 24 PM" src="https://user-images.githubusercontent.com/13592258/79175240-06cd7400-7db2-11ea-8f3e-af71a591a64b.png">

### How was this patch tested?

Manually build and check

Closes #28209 from huaxingao/udf_followup.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR intends to drop the built-in function pages from the SQL reference. We already have a complete list of built-in functions in the API documents.

See related discussions for more details:

https://github.com/apache/spark/pull/28170#issuecomment-611917191

### Why are the changes needed?

For better SQL documents.

### Does this PR introduce any user-facing change?

### How was this patch tested?

Manually checked.

Closes #28203 from maropu/DropBuiltinFunctionDocs.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR intends to clean up the SQL documents in `doc/sql-ref*`.

The main changes are as follows:

- Fixes wrong syntax and capitalizes sub-titles

- Adds some DDL queries in `Examples` so that users can run examples there

- Makes query output in `Examples` follow the `Dataset.showString` (right-aligned) format

- Adds/removes spaces, indents, or blank lines to follow the format below:

```

---

license...

---

### Description

Writes what the syntax is.

### Syntax

{% highlight sql %}

SELECT...

WHERE... // 4 indents after the second line

...

{% endhighlight %}

### Parameters

<dl>

<dt><code><em>Param Name</em></code></dt>

<dd>

Param Description

</dd>

...

</dl>

### Examples

{% highlight sql %}

-- It is better that users are able to execute example queries here.

-- So, we prepare test data in the first section if possible.

CREATE TABLE t (key STRING, value DOUBLE);

INSERT INTO t VALUES

('a', 1.0), ('a', 2.0), ('b', 3.0), ('c', 4.0);

-- query output has 2 indents and it follows the `Dataset.showString`

-- format (right-aligned).

SELECT * FROM t;

+---+-----+

|key|value|

+---+-----+

| a| 1.0|

| a| 2.0|

| b| 3.0|

| c| 4.0|

+---+-----+

-- Query statements after the second line have 4 indents.

SELECT key, SUM(value)

FROM t

GROUP BY key;

+---+----------+

|key|sum(value)|

+---+----------+

| c| 4.0|

| b| 3.0|

| a| 3.0|

+---+----------+

...

{% endhighlight %}

### Related Statements

* [XXX](xxx.html)

* ...

```

### Why are the changes needed?

Most of the changes in this PR are minor, but I think consistent formats and rules for writing documents are important for long-term maintenance in our community.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually checked.

Closes #28151 from maropu/MakeRightAligned.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This pull request adds SparkR wrapper for `FMRegressor`:

- Supporting `org.apache.spark.ml.r.FMRegressorWrapper`.

- `FMRegressionModel` S4 class.

- Corresponding `spark.fmRegressor`, `predict`, `summary` and `write.ml` generics.

- Corresponding docs and tests.

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No (new API).

### How was this patch tested?

New unit tests.

Closes #27571 from zero323/SPARK-30819.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Document Spark integration with Hive UDFs/UDAFs/UDTFs

### Why are the changes needed?

To make SQL Reference complete

### Does this PR introduce any user-facing change?

Yes

<img width="1031" alt="Screen Shot 2020-04-02 at 2 22 42 PM" src="https://user-images.githubusercontent.com/13592258/78301971-cc7cf080-74ee-11ea-93c8-7d4c75213b47.png">

### How was this patch tested?

Manually build and check

Closes #28104 from huaxingao/hive-udfs.

Lead-authored-by: Huaxin Gao <huaxing@us.ibm.com>

Co-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR explicitly documents the length requirement of the Iterator of Series to Iterator of Series and Iterator of Multiple Series to Iterator of Series pandas UDFs (previously Scalar Iterator pandas UDF).

The actual limitation of this UDF is that the _entire input and output_ must have the same length, rather than each individual series. For example, the following is allowed:

```python

from typing import Iterator

import pandas as pd

from pyspark.sql.functions import pandas_udf


@pandas_udf("long")
def func(iterator: Iterator[pd.Series]) -> Iterator[pd.Series]:
    # Concatenate every input batch and return it as a single output batch.
    return iter([pd.concat(iterator)])


spark.range(100).select(func("id")).show()

```

This characteristic lets you prefetch data from the iterator to speed up processing, compared to the regular Scalar to Scalar pandas UDF (previously Scalar pandas UDF).
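The length rule above can be illustrated without Spark. The following is a minimal sketch in which plain Python lists stand in for pandas Series batches; the `rebatch` helper and the sample data are hypothetical and only show that the output batches may differ in size from the input batches as long as the total row count is preserved.

```python
from typing import Iterator, List


def rebatch(batches: Iterator[List[int]], size: int) -> Iterator[List[int]]:
    """Re-chunk incoming batches into batches of at most `size` rows.

    Plain lists stand in for pandas Series (an assumption made so the
    sketch runs without Spark or pandas).
    """
    buffer: List[int] = []
    for batch in batches:
        buffer.extend(batch)
        # Emit full batches as soon as enough rows are buffered.
        while len(buffer) >= size:
            yield buffer[:size]
            buffer = buffer[size:]
    if buffer:
        # Flush the remainder so that no input row is dropped.
        yield buffer


inputs = [[0, 1, 2], [3, 4], [5, 6, 7, 8, 9]]
outputs = list(rebatch(iter(inputs), 4))

# Batch boundaries changed, but the total number of rows is the same.
total_in = sum(len(b) for b in inputs)
total_out = sum(len(b) for b in outputs)
print(outputs, total_in, total_out)
```

Because only the total length is constrained, a function of this shape is free to buffer or prefetch several input batches before producing any output.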

### Why are the changes needed?

To document the correct restriction and characteristics of a feature.

### Does this PR introduce any user-facing change?

Yes in the documentation but only in unreleased branches.

### How was this patch tested?

GitHub Actions should test the documentation build.

Closes #28160 from HyukjinKwon/SPARK-30722-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This pull request adds SparkR wrapper for `LinearRegression`:

- Supporting `org.apache.spark.ml.r.LinearRegressionWrapper`.

- `LinearRegressionModel` S4 class.

- Corresponding `spark.lm`, `predict`, `summary` and `write.ml` generics.

- Corresponding docs and tests.

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No (new API).

### How was this patch tested?

New unit tests.

Closes #27593 from zero323/SPARK-30818.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

HyukjinKwon has back-ported all the version-related PRs to branch-3.0.

On double-checking, I found that the GraphX table is missing its version header.

This PR fixes the issue.

HyukjinKwon, please help merge this PR into master and branch-3.0.

### Why are the changes needed?

Add the version header to the GraphX table.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Jenkins test.

Closes #28149 from beliefer/fix-head-of-graphx-table.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Previously, users could issue `SHOW TABLES` to get information about both tables and views.

This PR (SPARK-31113) implements a `SHOW VIEWS` SQL command, similar to Hive's, to get views only (https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL#LanguageManualDDL-ShowViews).

**Hive** -- Only shows view names

```

hive> SHOW VIEWS;

OK

view_1

view_2

...

```

**Spark (Hive-compatible)** -- Only shows view names; used in tests and `SparkSQLDriver` for CLI applications

```

SHOW VIEWS IN showdb;

view_1

view_2

...

```

**Spark** -- Shows more information: database/viewName/isTemporary

```

spark-sql> SHOW VIEWS;

userdb view_1 false

userdb view_2 false

...

```

### Why are the changes needed?

The `SHOW VIEWS` command provides finer granularity by returning information about views only.

### Does this PR introduce any user-facing change?

Add new `SHOW VIEWS` SQL command

### How was this patch tested?

Add new test `show-views.sql` and pass existing tests

Closes #27897 from Eric5553/ShowViews.

Authored-by: Eric Wu <492960551@qq.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This pull request adds SparkR wrapper for `FMClassifier`:

- Supporting `org.apache.spark.ml.r.FMClassifierWrapper`.

- `FMClassificationModel` S4 class.

- Corresponding `spark.fmClassifier`, `predict`, `summary` and `write.ml` generics.

- Corresponding docs and tests.

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No (new API).

### How was this patch tested?

New unit tests.

Closes #27570 from zero323/SPARK-30820.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Add a migration guide entry for extracting seconds from datetimes.

### Why are the changes needed?

To document the behavior change of the extract expression.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A, just passing Jenkins.

Closes #28140 from yaooqinn/SPARK-29311.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR intends to improve the SQL document of `GROUP BY`; it adds a description of the FILTER clause of aggregate functions.

### Why are the changes needed?

To improve the SQL documents

### Does this PR introduce any user-facing change?

Yes.

<img src="https://user-images.githubusercontent.com/692303/78558612-e2234a80-784d-11ea-9353-b3feac4d57a7.png" width="500">

### How was this patch tested?

Manually checked.

Closes #28134 from maropu/SPARK-31358.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR fixes the outdated requirement for `spark.dynamicAllocation.enabled=true`.

### Why are the changes needed?

This was found during the 3.0.0 RC1 document review and testing. As described at `spark.dynamicAllocation.shuffleTracking.enabled` in the same table, we can enable Dynamic Allocation without an external shuffle service.

### Does this PR introduce any user-facing change?

Yes. (Doc.)

### How was this patch tested?

Manually generate the doc by `SKIP_API=1 jekyll build`


Closes #28132 from dongjoon-hyun/SPARK-DA-DOC.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR intends to add a new SQL config for controlling the plan explain mode used in the events of SQL listeners (e.g., `SparkListenerSQLExecutionStart` and `SparkListenerSQLAdaptiveExecutionUpdate`). In the current master, the output of `QueryExecution.toString` (equivalent to the "extended" explain mode) is stored in these events. I think it is useful to control the content via `SQLConf`. For example, this changes the query "Details" content (TPCDS q66 query) shown in the SQL tab of the Spark web UI.

### Why are the changes needed?

For better usability.

### Does this PR introduce any user-facing change?

Yes; since Spark 3.1, SQL UI data adopts the `formatted` mode for the query plan explain results. To restore the behavior before Spark 3.1, you can set `spark.sql.ui.explainMode` to `extended`.

### How was this patch tested?

Added unit tests.

Closes #28097 from maropu/SPARK-31325.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

For the stage-level scheduling feature, add the ability to optionally merge resource profiles if they were specified on multiple RDDs within a stage. A config enables this feature (`spark.scheduler.resourceProfile.mergeConflicts`); it is off by default. When the config is set to true, Spark merges the profiles by selecting the max value of each resource (cores, memory, GPU, etc.). Further documentation will be added with SPARK-30322.

This also adds the ability to check whether an equivalent resource profile already exists, so that a user who runs stages and combines the same profiles over and over again does not cause an explosion in the number of profiles.
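The merge rule and the equivalence check described above can be sketched as follows. This is a toy model, not Spark's implementation: a profile is represented as a plain dictionary of resource name to amount, and `merge_profiles`, `ProfileRegistry`, and the resource names are hypothetical.

```python
from typing import Dict, List

# Hypothetical stand-in: a profile maps a resource name to a requested amount.
Profile = Dict[str, int]


def merge_profiles(a: Profile, b: Profile) -> Profile:
    """Merge two profiles by taking the max value of each resource."""
    names = sorted(set(a) | set(b))
    return {name: max(a.get(name, 0), b.get(name, 0)) for name in names}


class ProfileRegistry:
    """Hypothetical registry that reuses an equivalent profile if one exists."""

    def __init__(self) -> None:
        self._profiles: List[Profile] = []

    def get_or_add(self, profile: Profile) -> int:
        # Return the id of an equivalent profile instead of adding a duplicate.
        for idx, existing in enumerate(self._profiles):
            if existing == profile:
                return idx
        self._profiles.append(profile)
        return len(self._profiles) - 1

    def __len__(self) -> int:
        return len(self._profiles)


rdd1_profile = {"cores": 2, "memory_mb": 4096}
rdd2_profile = {"cores": 4, "memory_mb": 2048, "gpu": 1}

merged = merge_profiles(rdd1_profile, rdd2_profile)

registry = ProfileRegistry()
first_id = registry.get_or_add(merged)
# Merging the same pair again yields an equivalent profile, so it is reused.
second_id = registry.get_or_add(merge_profiles(rdd2_profile, rdd1_profile))
print(merged, first_id, second_id, len(registry))
```

Comparing profiles by value rather than by identity is what keeps the number of registered profiles from growing when the same combination recurs.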

### Why are the changes needed?

To allow users to specify resources on multiple RDDs without worrying as much about whether they end up in the same stage and fail.

### Does this PR introduce any user-facing change?

Yes, when the config is turned on, Spark now merges the profiles instead of erroring out.

### How was this patch tested?

Unit tests

Closes #28053 from tgravescs/SPARK-29153.

Lead-authored-by: Thomas Graves <tgraves@apache.org>

Co-authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR adds a description of `Shuffle Write Time` to `web-ui.md`.

### Why are the changes needed?

#27837 added the `Shuffle Write Time` metric to the task metrics summary, but it is not documented yet.

### Does this PR introduce any user-facing change?

Yes.

We can see the description for `Shuffle Write Time` in the new `web-ui.html`.

<img width="956" alt="shuffle-write-time-description" src="https://user-images.githubusercontent.com/4736016/78175342-a9722280-7495-11ea-9cc6-62c6f3619aa3.png">

### How was this patch tested?

Built the docs with `SKIP_API=1 jekyll build` in the `doc` directory and then checked `web-ui.html`.

Closes #28093 from sarutak/SPARK-31073-doc.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Add back the deprecated R APIs removed by https://github.com/apache/spark/pull/22843/ and https://github.com/apache/spark/pull/22815.

These APIs are:

- `sparkR.init`

- `sparkRSQL.init`

- `sparkRHive.init`

- `registerTempTable`

- `createExternalTable`

- `dropTempTable`

There is no need to port functions such as

```r

createExternalTable <- function(x, ...) {

  dispatchFunc("createExternalTable(tableName, path = NULL, source = NULL, ...)", x, ...)

}

```

because this existed for backward compatibility with the earlier SQLContext-based API (judging from https://github.com/apache/spark/pull/9192), and it no longer seems necessary since SparkR replaced SQLContext with SparkSession in https://github.com/apache/spark/pull/13635.

### Why are the changes needed?

Amend Spark's Semantic Versioning Policy

### Does this PR introduce any user-facing change?

Yes

The removed R APIs are put back.

### How was this patch tested?

Add back the removed tests

Closes #28058 from huaxingao/r.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR (SPARK-30775) aims to improve the description of the executor metrics in the monitoring documentation.

### Why are the changes needed?

Improve and clarify monitoring documentation by:

- adding a reference to the Prometheus endpoint, as implemented in [SPARK-29064]

- extending the list and description of executor metrics, following up from [SPARK-27157]

### Does this PR introduce any user-facing change?

Documentation update.

### How was this patch tested?

n.a.

Closes #27526 from LucaCanali/docPrometheusMetricsFollowupSpark29064.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR (SPARK-31293) fixes wrong command examples, parameter descriptions and help message format for Amazon Kinesis integration with Spark Streaming.

### Why are the changes needed?

To improve usability of those commands.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

I ran the fixed commands manually and confirmed they worked as expected.

Closes #28063 from sekikn/SPARK-31293.

Authored-by: Kengo Seki <sekikn@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Fix a broken link and make the relevant docs refer to the new doc.

### Why are the changes needed?

### Does this PR introduce any user-facing change?

Yes, the CACHE TABLE, UNCACHE TABLE, CLEAR CACHE, and REFRESH TABLE pages now link to the new doc.

### How was this patch tested?

Manually build and check

Closes #28065 from huaxingao/spark-30363-follow-up.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR adds "Pandas Function API" into the menu.

### Why are the changes needed?

To be consistent and to make the docs easier to navigate.

### Does this PR introduce any user-facing change?

No, master only.

### How was this patch tested?

Manually verified by `SKIP_API=1 jekyll build`.

Closes #28054 from HyukjinKwon/followup-spark-30722.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Based on the discussion on the mailing list [[Proposal] Modification to Spark's Semantic Versioning Policy](http://apache-spark-developers-list.1001551.n3.nabble.com/Proposal-Modification-to-Spark-s-Semantic-Versioning-Policy-td28938.html), this PR adds back the following APIs, whose maintenance cost is relatively small.

- HiveContext

- createExternalTable APIs

### Why are the changes needed?

Avoid breaking the APIs that are commonly used.

### Does this PR introduce any user-facing change?

Adding back the APIs that were removed in the 3.0 branch does not introduce user-facing changes, because Spark 3.0 has not been released.

### How was this patch tested?

Added a new test suite for the createExternalTable APIs.

Closes #27815 from gatorsmile/addAPIsBack.

Lead-authored-by: gatorsmile <gatorsmile@gmail.com>

Co-authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Skew join handling comes with an overhead: we need to read some data repeatedly. We should treat a partition as skewed if it's large enough so that it's beneficial to do so.

Currently the size threshold is the advisory partition size, which is 64 MB by default. This is not large enough for the skewed partition size threshold.

This PR adds a new config for the threshold and sets its default value to 256 MB.
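The effect of raising the size threshold can be sketched as below. This is an illustration under stated assumptions, not Spark's code: the description above only mentions the size threshold, so the additional "larger than a multiple of the median" check, the `SKEW_FACTOR` value, and the function name are hypothetical.

```python
from statistics import median
from typing import List

MB = 1024 * 1024
ADVISORY_PARTITION_SIZE = 64 * MB  # previous threshold, per the description
SKEW_THRESHOLD = 256 * MB          # new default threshold, per the description
SKEW_FACTOR = 5                    # assumed multiplier over the median size


def skewed_partitions(sizes: List[int], threshold: int,
                      factor: int = SKEW_FACTOR) -> List[int]:
    """Return indices of partitions considered skewed.

    A partition qualifies only if it exceeds the absolute threshold and is
    also much larger than the median partition (the latter is an assumption).
    """
    med = median(sizes)
    return [i for i, size in enumerate(sizes)
            if size > threshold and size > med * factor]


sizes = [10 * MB, 12 * MB, 15 * MB, 100 * MB, 900 * MB]

with_old_threshold = skewed_partitions(sizes, ADVISORY_PARTITION_SIZE)
with_new_threshold = skewed_partitions(sizes, SKEW_THRESHOLD)
print(with_old_threshold, with_new_threshold)
```

With the 64 MB threshold the 100 MB partition would be split even though the repeated reads may cost more than they save; with 256 MB only the 900 MB partition qualifies.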

### Why are the changes needed?

Avoid skew join handling that may introduce a perf regression.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27967 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Currently, ResetCommand clears all configurations, including SQL configs, static SQL configs and SparkContext-level configs.

For example:

```sql

spark-sql> set xyz=abc;

xyz abc

spark-sql> set;

spark.app.id local-1585055396930

spark.app.name SparkSQL::10.242.189.214

spark.driver.host 10.242.189.214

spark.driver.port 65094

spark.executor.id driver

spark.jars

spark.master local[*]

spark.sql.catalogImplementation hive

spark.sql.hive.version 1.2.1

spark.submit.deployMode client

xyz abc

spark-sql> reset;

spark-sql> set;

spark-sql> set spark.sql.hive.version;

spark.sql.hive.version 1.2.1

spark-sql> set spark.app.id;

spark.app.id <undefined>

```

In this PR, we restore the Spark confs to RuntimeConfig after it is cleared.
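The intended behavior can be modeled with a small sketch. This is a toy model and not Spark's `RuntimeConfig`: the `RuntimeConfigModel` class and its methods are hypothetical, and the config values are taken from the session output above.

```python
from typing import Dict


class RuntimeConfigModel:
    """Toy model of a session config; not Spark's actual RuntimeConfig."""

    def __init__(self, spark_conf: Dict[str, str]) -> None:
        # Confs the application started with; these must survive a reset.
        self._spark_conf = dict(spark_conf)
        self._settings = dict(spark_conf)

    def set(self, key: str, value: str) -> None:
        self._settings[key] = value

    def get(self, key: str, default: str = "<undefined>") -> str:
        return self._settings.get(key, default)

    def reset(self) -> None:
        # Clear everything, then restore the initial Spark confs.
        self._settings.clear()
        self._settings.update(self._spark_conf)


conf = RuntimeConfigModel({
    "spark.app.id": "local-1585055396930",
    "spark.master": "local[*]",
})
conf.set("xyz", "abc")
conf.reset()

app_id_after_reset = conf.get("spark.app.id")
xyz_after_reset = conf.get("xyz")
print(app_id_after_reset, xyz_after_reset)
```

In this model a session-level setting such as `xyz` disappears on reset, while `spark.app.id` keeps its startup value instead of becoming `<undefined>`.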

### Why are the changes needed?

The reset command goes too far by also clearing static configs.

### Does this PR introduce any user-facing change?

Yes, ResetCommand no longer changes static configs.

### How was this patch tested?

Added a unit test.

Closes #28003 from yaooqinn/SPARK-31234.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

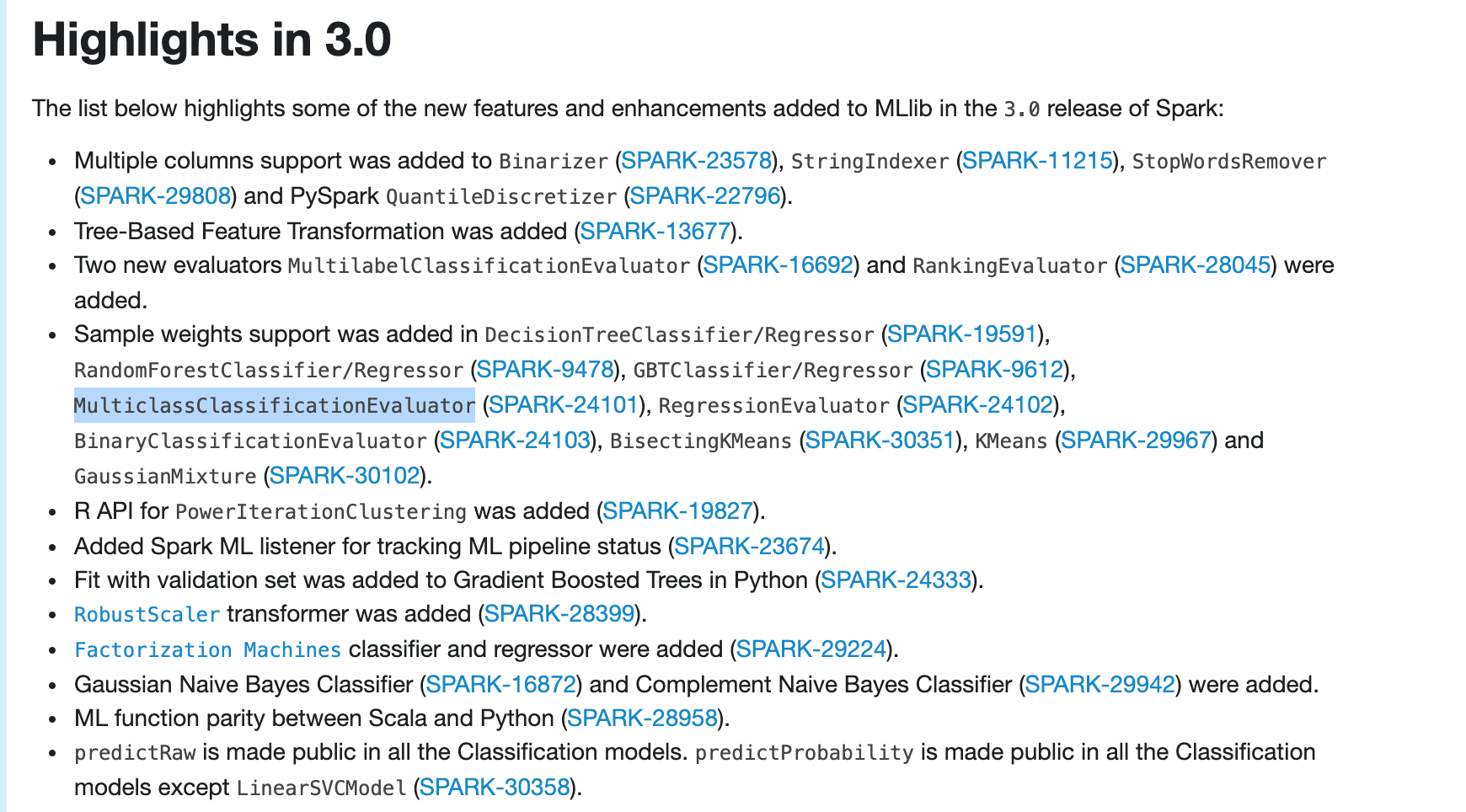

### What changes were proposed in this pull request?

Update ml-guide to include `MulticlassClassificationEvaluator` weight support in the highlights.

### Why are the changes needed?

`MulticlassClassificationEvaluator` weight support is an important feature, so it should be included in the highlights.

### Does this PR introduce any user-facing change?

Yes


### How was this patch tested?

manually build and check

Closes #28031 from huaxingao/highlights-followup.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>