### What changes were proposed in this pull request?

Add SparkR wrapper for `o.a.s.ml.functions.vector_to_array`

### Why are the changes needed?

- Currently ML vectors, including predictions, are almost inaccessible to R users. That is a serious loss of functionality.

- Feature parity.

### Does this PR introduce _any_ user-facing change?

Yes, new R function is added.

### How was this patch tested?

- New unit tests.

- Manual verification.

Closes #29917 from zero323/SPARK-33040.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Re-indent lines of SparkR `timestamp_seconds`.

### Why are the changes needed?

Current indentation is not aligned with the opening line.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #29940 from zero323/SPARK-32949-FOLLOW-UP.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Adds `nth_value` function to SparkR.

### Why are the changes needed?

Feature parity. The function has already been added to [Scala](https://issues.apache.org/jira/browse/SPARK-27951) and [Python](https://issues.apache.org/jira/browse/SPARK-33020).

### Does this PR introduce _any_ user-facing change?

Yes. New function is exposed to R users.

### How was this patch tested?

New unit tests.

Closes #29905 from zero323/SPARK-33030.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Explicitly document that `current_date` and `current_timestamp` are evaluated at the start of query evaluation, and that all calls of `current_date`/`current_timestamp` within the same query return the same value.

### Why are the changes needed?

Users could expect that `current_date` and `current_timestamp` return the current date/timestamp at the moment of query execution but in fact the functions are folded by the optimizer at the start of query evaluation:

0df8dd6073/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/optimizer/finishAnalysis.scala (L71-L91)

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

by running `./dev/scalastyle`.

Closes #29892 from MaxGekk/doc-current_date.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

More precise description of the result of the `percentile_approx()` function and its synonym `approx_percentile()`. The proposed sentence clarifies that the function returns **one of the elements** (or an array of elements) from the input column.
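The distinction between returning an element of the input and returning an interpolated value can be sketched in base R. This is only an illustration under the assumption that `quantile()` with `type = 1` is a fair analogue; it is not Spark's approximation algorithm, and the vector `x` is made up for the example.

```r
# Base R only; this is not Spark's algorithm, just the same distinction.
x <- c(1, 2, 3, 4)

# type = 7 (R's default) interpolates between neighbours,
# so the result need not be an element of x.
interpolated <- unname(quantile(x, probs = 0.5, type = 7))

# type = 1 inverts the empirical CDF, so the result is always an element of x.
from_input <- unname(quantile(x, probs = 0.5, type = 1))

interpolated %in% x  # FALSE
from_input %in% x    # TRUE
```

The documented behavior of `percentile_approx()` corresponds to the second case: the value returned is drawn from the input column.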

### Why are the changes needed?

To improve Spark docs and avoid misunderstanding of the function behavior.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

`./dev/scalastyle`

Closes #29835 from MaxGekk/doc-percentile_approx.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

This PR adds an R wrapper for the `timestamp_seconds` function.

### Why are the changes needed?

Feature parity.

### Does this PR introduce _any_ user-facing change?

Yes, it adds a new R function.

### How was this patch tested?

New unit tests.

Closes #29822 from zero323/SPARK-32949.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds the `withColumn` function to SparkR.

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

Yes, a new function, equivalent to its Scala and PySpark counterparts, is exposed to the end user.

### How was this patch tested?

New unit tests added.

Closes #29814 from zero323/SPARK-32946.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add optional `allowMissingColumns` argument to SparkR `unionByName`.

### Why are the changes needed?

Feature parity.

### Does this PR introduce _any_ user-facing change?

`unionByName` supports `allowMissingColumns`.

### How was this patch tested?

Existing unit tests. New unit tests targeting this feature.

Closes #29813 from zero323/SPARK-32799.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

Fix an R style issue that is not caught by the R style checker. The error was:

```

R/DataFrame.R:1244:17: style: Closing curly-braces should always be on their own line, unless it's followed by an else.

}, finally = {

^

lintr checks failed.

```

Closes #29574 from lu-wang-dl/fix-r-style.

Lead-authored-by: Lu WANG <lu.wang@databricks.com>

Co-authored-by: Lu Wang <38018689+lu-wang-dl@users.noreply.github.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to deduplicate configuration set/unset in `test_sparkSQL_arrow.R`.

Setting `spark.sql.execution.arrow.sparkr.enabled` can be globally done instead of doing it in each test case.

### Why are the changes needed?

To deduplicate the code.

### Does this PR introduce _any_ user-facing change?

No, dev-only

### How was this patch tested?

Manually ran the tests.

Closes #29592 from HyukjinKwon/SPARK-32747.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the docs for `approx_count_distinct`, I have changed the description of the `rsd` parameter from **_maximum estimation error allowed_** to **_maximum relative standard deviation allowed_**.

### Why are the changes needed?

Maximum estimation error allowed can be misleading. You can set the target relative standard deviation, which affects the estimation error, but on given runs the estimation error can still be above the rsd parameter.

### Does this PR introduce _any_ user-facing change?

This PR should make it easier for users reading the docs to understand that the `rsd` parameter in `approx_count_distinct` doesn't cap the estimation error, but just sets the target deviation instead.

### How was this patch tested?

No tests, as no code changes were made.

Closes #29424 from Comonut/fix-approx_count_distinct-rsd-param-description.

Authored-by: alexander-daskalov <alexander.daskalov@adevinta.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR aims to add `StorageLevel.DISK_ONLY_3` as a built-in `StorageLevel`.

### Why are the changes needed?

In a YARN cluster, HDFS usually provides storage with replication factor 3. So, technically, we can save the result to HDFS to get the equivalent of `StorageLevel.DISK_ONLY_3`. However, disaggregated clusters and clusters without storage services are on the rise. Previously, in that situation, users could use the similar `MEMORY_AND_DISK_2` or a user-created `StorageLevel`. This PR aims to support those use cases officially for better UX.

### Does this PR introduce _any_ user-facing change?

Yes. This provides a new built-in option.

### How was this patch tested?

Pass the GitHub Action or Jenkins with the revised test cases.

Closes #29331 from dongjoon-hyun/SPARK-32517.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

SparkR raised the minimum Arrow R version to 1.0.0 in SPARK-32452, and Arrow R 0.14 dropped `as_tibble`. We can therefore remove its usage in SparkR.

### Why are the changes needed?

To remove code that is no longer used.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

GitHub Actions will test them out.

Closes #29361 from HyukjinKwon/SPARK-32543.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR proposes to:

1. Fix the error message when the output schema does not match the R DataFrame returned from the given function. For example,

```R

df <- createDataFrame(list(list(a=1L, b="2")))

count(gapply(df, "a", function(key, group) { group }, structType("a int, b int")))

```

**Before:**

```

Error in handleErrors(returnStatus, conn) :

...

java.lang.UnsupportedOperationException

...

```

**After:**

```

Error in handleErrors(returnStatus, conn) :

...

java.lang.AssertionError: assertion failed: Invalid schema from gapply: expected IntegerType, IntegerType, got IntegerType, StringType

...

```

2. Update documentation about the schema matching for `gapply` and `dapply`.

### Why are the changes needed?

To show which schema is not matched, and let users know what's going on.

### Does this PR introduce _any_ user-facing change?

Yes, the error message is updated as above, and the documentation is updated.

### How was this patch tested?

Manually tested, and unit tests were added.

Closes #29283 from HyukjinKwon/r-vectorized-error.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to set the minimum Arrow version as 1.0.0 to minimise the maintenance overhead and keep the minimal version up to date.

Other required changes to support 1.0.0 were already made in SPARK-32451.

### Why are the changes needed?

On the R side, the community rather aggressively encourages users to use the latest version, and SparkR vectorization, which was added in Spark 3.0, is still very experimental.

Also, we're technically not testing old Arrow versions in SparkR for now.

### Does this PR introduce _any_ user-facing change?

Yes, users wouldn't be able to use SparkR with old Arrow.

### How was this patch tested?

GitHub Actions and AppVeyor are already testing them.

Closes #29253 from HyukjinKwon/SPARK-32452.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR will remove references to these "blacklist" and "whitelist" terms besides the blacklisting feature as a whole, which can be handled in a separate JIRA/PR.

This touches quite a few files, but the changes are straightforward (variable/method/etc. name changes) and most quite self-contained.

### Why are the changes needed?

As per discussion on the Spark dev list, it will be beneficial to remove references to problematic language that can alienate potential community members. Two such terms are "blacklist" and "whitelist". While it seems to me that there is some valid debate as to whether these terms have racist origins, the cultural connotations are inescapable in today's world.

### Does this PR introduce _any_ user-facing change?

In the test file `HiveQueryFileTest`, a developer has the ability to specify the system property `spark.hive.whitelist` to specify a list of Hive query files that should be tested. This system property has been renamed to `spark.hive.includelist`. The old property has been kept for compatibility, but will log a warning if used. I am open to feedback from others on whether keeping a deprecated property here is unnecessary given that this is just for developers running tests.

### How was this patch tested?

Existing tests should be suitable since no behavior changes are expected as a result of this PR.

Closes #28874 from xkrogen/xkrogen-SPARK-32036-rename-blacklists.

Authored-by: Erik Krogen <ekrogen@linkedin.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Adding support to Association Rules in Spark ml.fpm.

### Why are the changes needed?

Support is an indication of how frequently the itemset of an association rule appears in the database and suggests whether the rules are generally applicable to the dataset. Refer to [wiki](https://en.wikipedia.org/wiki/Association_rule_learning#Support) for more details.

### Does this PR introduce _any_ user-facing change?

Yes. Association Rules now have a support measure.

### How was this patch tested?

Existing and new unit tests.

Closes #28903 from huaxingao/fpm.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Spark 3.0 accidentally dropped support for R < 3.5. It is built with R 3.6.3, which does not support R < 3.5:

```

Error in readRDS(pfile) : cannot read workspace version 3 written by R 3.6.3; need R 3.5.0 or newer version.

```

In fact, with SPARK-31918, we will have to drop R < 3.5 entirely to support R 4.0.0. This is unavoidable for releasing on CRAN, because CRAN requires the tests to pass with the latest R.

### Why are the changes needed?

To show the supported versions correctly, and support R 4.0.0 to unblock the releases.

### Does this PR introduce _any_ user-facing change?

In fact, no because Spark 3.0.0 already does not work with R < 3.5.

Compared to Spark 2.4, yes. R < 3.5 would not work.

### How was this patch tested?

Jenkins should test it out.

Closes #28908 from HyukjinKwon/SPARK-32073.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to ignore S4 generic methods under SparkR namespace in closure cleaning to support R 4.0.0+.

Currently, when you run code that executes native R code, it fails as below with R 4.0.0:

```r

df <- createDataFrame(lapply(seq(100), function (e) list(value=e)))

count(dapply(df, function(x) as.data.frame(x[x$value < 50,]), schema(df)))

```

```

org.apache.spark.SparkException: R unexpectedly exited.

R worker produced errors: Error in lapply(part, FUN) : attempt to bind a variable to R_UnboundValue

```

The root cause seems to be related to when an S4 generic method is manually included into the closure's environment via `SparkR:::cleanClosure`. For example, when an RRDD is created via `createDataFrame` with calling `lapply` to convert, `lapply` itself:

f53d8c63e8/R/pkg/R/RDD.R (L484)

is added into the environment of the cleaned closure - because this is not an exposed namespace; however, this is broken in R 4.0.0+ for an unknown reason with an error message such as "attempt to bind a variable to R_UnboundValue".

Actually, we don't need to add `lapply` to the environment of the closure, because it is not supposed to be called on the worker side. In fact, from my understanding, there are no private generic methods that are supposed to be called on the worker side in SparkR at all.

Therefore, this PR takes a simpler path and works around the problem by explicitly excluding the S4 generic methods under the SparkR namespace, to support R 4.0.0 in SparkR.

### Why are the changes needed?

To support R 4.0.0+ with SparkR, and unblock the releases on CRAN. CRAN requires the tests pass with the latest R.

### Does this PR introduce _any_ user-facing change?

Yes, end users will be able to use SparkR with R 4.0.0.

### How was this patch tested?

Manually tested, both the CRAN check and the tests, with R 4.0.1:

```

══ testthat results ═══════════════════════════════════════════════════════════

[ OK: 13 | SKIPPED: 0 | WARNINGS: 0 | FAILED: 0 ]

✔ | OK F W S | Context

✔ | 11 | binary functions [2.5 s]

✔ | 4 | functions on binary files [2.1 s]

✔ | 2 | broadcast variables [0.5 s]

✔ | 5 | functions in client.R

✔ | 46 | test functions in sparkR.R [6.3 s]

✔ | 2 | include R packages [0.3 s]

✔ | 2 | JVM API [0.2 s]

✔ | 75 | MLlib classification algorithms, except for tree-based algorithms [86.3 s]

✔ | 70 | MLlib clustering algorithms [44.5 s]

✔ | 6 | MLlib frequent pattern mining [3.0 s]

✔ | 8 | MLlib recommendation algorithms [9.6 s]

✔ | 136 | MLlib regression algorithms, except for tree-based algorithms [76.0 s]

✔ | 8 | MLlib statistics algorithms [0.6 s]

✔ | 94 | MLlib tree-based algorithms [85.2 s]

✔ | 29 | parallelize() and collect() [0.5 s]

✔ | 428 | basic RDD functions [25.3 s]

✔ | 39 | SerDe functionality [2.2 s]

✔ | 20 | partitionBy, groupByKey, reduceByKey etc. [3.9 s]

✔ | 4 | functions in sparkR.R

✔ | 16 | SparkSQL Arrow optimization [19.2 s]

✔ | 6 | test show SparkDataFrame when eager execution is enabled. [1.1 s]

✔ | 1175 | SparkSQL functions [134.8 s]

✔ | 42 | Structured Streaming [478.2 s]

✔ | 16 | tests RDD function take() [1.1 s]

✔ | 14 | the textFile() function [2.9 s]

✔ | 46 | functions in utils.R [0.7 s]

✔ | 0 1 | Windows-specific tests

────────────────────────────────────────────────────────────────────────────────

test_Windows.R:22: skip: sparkJars tag in SparkContext

Reason: This test is only for Windows, skipped

────────────────────────────────────────────────────────────────────────────────

══ Results ═════════════════════════════════════════════════════════════════════

Duration: 987.3 s

OK: 2304

Failed: 0

Warnings: 0

Skipped: 1

...

Status: OK

+ popd

Tests passed.

```

Note that I also built SparkR with R 4.0.0 and ran the tests with R 3.6.3. It all passed. See also [the comment in the JIRA](https://issues.apache.org/jira/browse/SPARK-31918?focusedCommentId=17142837&page=com.atlassian.jira.plugin.system.issuetabpanels:comment-tabpanel#comment-17142837).

Closes #28907 from HyukjinKwon/SPARK-31918.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to set the minimum Arrow version as 0.15.1 to be consistent with the PySpark side.

### Why are the changes needed?

It will reduce the maintenance overhead to match the Arrow versions, and minimize the supported range. SparkR Arrow optimization is still experimental.

### Does this PR introduce _any_ user-facing change?

No, it's the change in unreleased branches only.

### How was this patch tested?

0.15.x was already tested at SPARK-29378, and we're testing the latest version of SparkR currently in AppVeyor. I already manually tested too.

Closes #28520 from HyukjinKwon/SPARK-31701.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Internal usages like `{stop,warning,message}({paste,paste0,sprintf}` and `{stop,warning,message}(some_literal_string_as_variable` have been removed and replaced as appropriate.

### Why are the changes needed?

CRAN policy recommends against using such constructions to build error messages, in particular because it makes the process of creating portable error messages for the package more onerous.
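As a rough sketch of the kind of rewrite involved (the message text and the variable `x` are hypothetical, and the exact replacements in this PR may differ), `stop()` already pastes its arguments together without a separator, so the inner `paste0` call is unnecessary:

```r
x <- 42

# Before: the message is assembled with paste0 and passed as a single string.
old_msg <- tryCatch(
  stop(paste0("unsupported value: ", x)),
  error = conditionMessage
)

# After: stop() concatenates its arguments itself (no separator is inserted).
new_msg <- tryCatch(
  stop("unsupported value: ", x),
  error = conditionMessage
)

identical(old_msg, new_msg)
```

The same pattern applies to `warning()` and `message()`, which also concatenate their arguments.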

### Does this PR introduce any user-facing change?

There may be some small grammatical changes visible in error messaging.

### How was this patch tested?

Not done

Closes #28365 from MichaelChirico/r-stop-paste.

Authored-by: Michael Chirico <michael.chirico@grabtaxi.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`requireNamespace1` was used to get `SparkR` on CRAN while Suggesting `arrow` while `arrow` was not yet available on CRAN.

### Why are the changes needed?

Now that `arrow` is on CRAN, we can properly use `requireNamespace` without triggering CRAN failures.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

AppVeyor will test, and CRAN check in Jenkins build.

Closes #28387 from MichaelChirico/r-require-arrow.

Authored-by: Michael Chirico <michael.chirico@grabtaxi.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

For regex functions in base R (`gsub`, `grep`, `grepl`, `strsplit`, `gregexpr`), supplying the `fixed=TRUE` option will be more performant.

### Why are the changes needed?

This is a minor fix for performance

### Does this PR introduce any user-facing change?

No (although some internal code was applying fixed-as-regex in some cases that could technically have been over-broad and caught unintended patterns)
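A minimal base R illustration of the difference, using a made-up input string: without `fixed = TRUE` the pattern is interpreted as a regular expression, so a literal such as `"."` matches every character.

```r
input <- "a.b.c"

# Without fixed = TRUE, "." is a regex that matches any single character.
as_regex <- gsub(".", "_", input)

# With fixed = TRUE, "." is matched as the literal string ".".
as_literal <- gsub(".", "_", input, fixed = TRUE)

# strsplit follows the same rule.
parts <- strsplit(input, ".", fixed = TRUE)[[1]]

as_regex    # "_____"
as_literal  # "a_b_c"
parts       # "a" "b" "c"
```

Besides skipping the regex engine, `fixed = TRUE` therefore also guards against this kind of over-broad match.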

### How was this patch tested?

Not

Closes #28367 from MichaelChirico/r-regex-fixed.

Authored-by: Michael Chirico <michael.chirico@grabtaxi.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Repeated `sapply` avoided in internal `checkSchemaInArrow`

### Why are the changes needed?

Current implementation is doubly inefficient:

1. Repeatedly doing the same (95%) `sapply` loop

2. Doing scalar `==` on a vector (`==` should be done over the whole vector for efficiency)
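The second point can be sketched in base R as follows. The `types` vector is a hypothetical stand-in and this is not the actual body of `checkSchemaInArrow`; it only contrasts a per-element comparison with a single vectorized one.

```r
types <- c("integer", "double", "character", "double")

# Element-by-element comparison through sapply (one scalar `==` per call).
scalar_result <- sapply(types, function(t) t == "double", USE.NAMES = FALSE)

# Single vectorized comparison over the whole vector.
vector_result <- types == "double"

identical(scalar_result, vector_result)  # TRUE
any(vector_result)                       # TRUE
```

Both forms give the same logical vector; the vectorized one avoids the per-element function call.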

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By my trusty friend the CI bots

Closes #28372 from MichaelChirico/vectorize-types.

Authored-by: Michael Chirico <michael.chirico@grabtaxi.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

All instances of `paste(..., sep = "")` in the code are replaced with `paste0` which is more performant
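For reference, the two forms produce identical results; the strings below are placeholders chosen for the example.

```r
# paste() with an empty separator ...
a <- paste("spark", ".", "sql", sep = "")

# ... is equivalent to paste0().
b <- paste0("spark", ".", "sql")

identical(a, b)  # TRUE
```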

### Why are the changes needed?

Performance & consistency (`paste0` is already used extensively in the R package)

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

None

Closes #28374 from MichaelChirico/r-paste0.

Authored-by: Michael Chirico <michael.chirico@grabtaxi.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Improve documentation for `gapply` in `SparkR`

### Why are the changes needed?

Spent a long time this weekend trying to figure out just what exactly `key` is in `gapply`'s `func`. I had assumed it would be a _named_ list, but apparently not -- the examples are working because `schema` is applying the name and the names of the output `data.frame` don't matter.

As near as I can tell the description I've added is correct, namely, that `key` is an unnamed list.
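What an unnamed list means for `func` can be sketched without a Spark session. The `key` and `group` values below are hypothetical stand-ins for what `gapply` would pass, assuming the description above is correct:

```r
# Hypothetical stand-ins for the arguments gapply passes to `func`;
# no Spark session is involved here.
key <- list("a", 1L)                 # unnamed list of grouping values
group <- data.frame(v = c(10, 20))   # rows belonging to that key

func <- function(key, group) {
  # Positional access works; access by name would return NULL
  # because the list carries no names.
  data.frame(k1 = key[[1]], k2 = key[[2]], total = sum(group$v))
}

is.null(names(key))  # TRUE
result <- func(key, group)
```

In other words, `func` should index `key` by position, for example `key[[1]]`, rather than by column name.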

### Does this PR introduce any user-facing change?

No? Not in code. Only documentation.

### How was this patch tested?

Not. Documentation only

Closes #28350 from MichaelChirico/r-gapply-key-doc.

Authored-by: Michael Chirico <michael.chirico@grabtaxi.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This pull request adds SparkR wrapper for `FMRegressor`:

- Supporting `org.apache.spark.ml.r.FMRegressorWrapper`.

- `FMRegressionModel` S4 class.

- Corresponding `spark.fmRegressor`, `predict`, `summary` and `write.ml` generics.

- Corresponding docs and tests.

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No (new API).

### How was this patch tested?

New unit tests.

Closes #27571 from zero323/SPARK-30819.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This pull request adds SparkR wrapper for `LinearRegression`:

- Supporting `org.apache.spark.ml.r.LinearRegressionWrapper`.

- `LinearRegressionModel` S4 class.

- Corresponding `spark.lm`, `predict`, `summary` and `write.ml` generics.

- Corresponding docs and tests.

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No (new API).

### How was this patch tested?

New unit tests.

Closes #27593 from zero323/SPARK-30818.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This pull request adds SparkR wrapper for `FMClassifier`:

- Supporting `org.apache.spark.ml.r.FMClassifierWrapper`.

- `FMClassificationModel` S4 class.

- Corresponding `spark.fmClassifier`, `predict`, `summary` and `write.ml` generics.

- Corresponding docs and tests.

### Why are the changes needed?

Feature parity.

### Does this PR introduce any user-facing change?

No (new API).

### How was this patch tested?

New unit tests.

Closes #27570 from zero323/SPARK-30820.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Add back the deprecated R APIs removed by https://github.com/apache/spark/pull/22843/ and https://github.com/apache/spark/pull/22815.

These APIs are

- `sparkR.init`

- `sparkRSQL.init`

- `sparkRHive.init`

- `registerTempTable`

- `createExternalTable`

- `dropTempTable`

There is no need to port functions such as

```r

createExternalTable <- function(x, ...) {
  dispatchFunc("createExternalTable(tableName, path = NULL, source = NULL, ...)", x, ...)
}

```

because this was for backward compatibility with the earlier `SQLContext` API (judging from https://github.com/apache/spark/pull/9192), and it seems we no longer need it since SparkR replaced `SQLContext` with `SparkSession` at https://github.com/apache/spark/pull/13635.

### Why are the changes needed?

Amend Spark's Semantic Versioning Policy

### Does this PR introduce any user-facing change?

Yes

The removed R APIs are put back.

### How was this patch tested?

Add back the removed tests

Closes #28058 from huaxingao/r.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

A small documentation change to clarify that the `rand()` function produces values in `[0.0, 1.0)`.

### Why are the changes needed?

`rand()` uses `Rand()` - which generates values in [0, 1) ([documented here](a1dbcd13a3/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/randomExpressions.scala (L71))). The existing documentation suggests that 1.0 is a possible value returned by rand (i.e for a distribution written as `X ~ U(a, b)`, x can be a or b, so `U[0.0, 1.0]` suggests the value returned could include 1.0).

### Does this PR introduce any user-facing change?

Only documentation changes.

### How was this patch tested?

Documentation changes only.

Closes #28071 from Smeb/master.

Authored-by: Ben Ryves <benjamin.ryves@getyourguide.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose to update the doc for the `timeZone` option in JSON/CSV datasources and for the `tz` parameter of the `from_utc_timestamp()`/`to_utc_timestamp()` functions, and to restrict format of config's values to 2 forms:

1. Geographical regions, such as `America/Los_Angeles`.

2. Fixed offsets - a fully resolved offset from UTC. For example, `-08:00`.

### Why are the changes needed?

Other formats such as three-letter time zone IDs are ambiguous, and depend on the locale. For example, `CST` could be U.S. `Central Standard Time` or `China Standard Time`. Such formats have already been deprecated in the JDK, see [Three-letter time zone IDs](https://docs.oracle.com/javase/8/docs/api/java/util/TimeZone.html).

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By running `./dev/scalastyle`, and manual testing.

Closes #28051 from MaxGekk/doc-time-zone-option.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

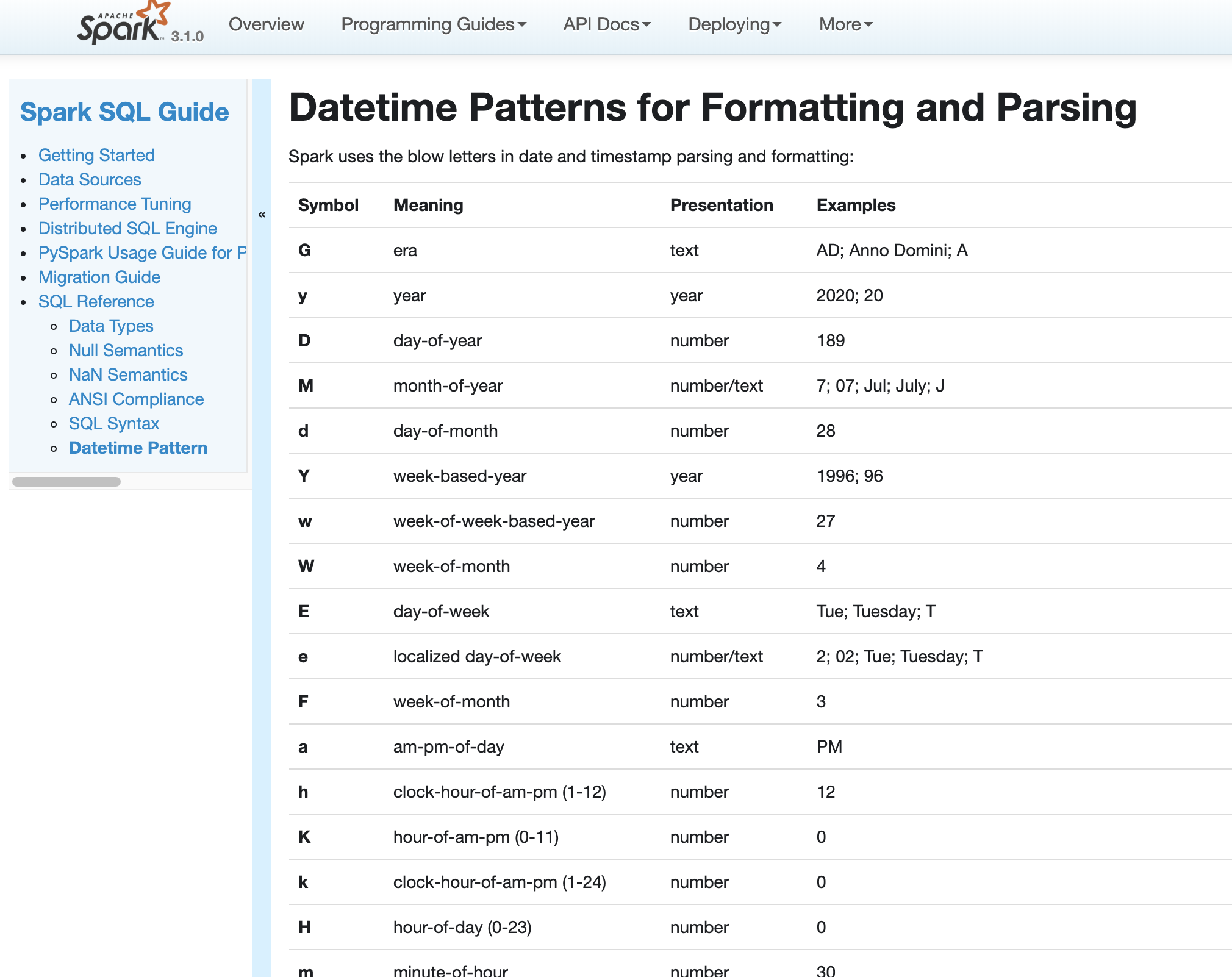

### What changes were proposed in this pull request?

Use our own docs for data pattern instructions to replace java doc.

### Why are the changes needed?

fix doc

### Does this PR introduce any user-facing change?

yes. doc changed

### How was this patch tested?

pass jenkins

Closes #27975 from yaooqinn/SPARK-31189-2.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Adds the following overloaded variants to Scala `o.a.s.sql.functions`:

  - `percentile_approx(e: Column, percentage: Array[Double], accuracy: Long): Column`

  - `percentile_approx(columnName: String, percentage: Array[Double], accuracy: Long): Column`

  - `percentile_approx(e: Column, percentage: Double, accuracy: Long): Column`

  - `percentile_approx(columnName: String, percentage: Double, accuracy: Long): Column`

  - `percentile_approx(e: Column, percentage: Seq[Double], accuracy: Long): Column` (primarily for Python interop).

  - `percentile_approx(columnName: String, percentage: Seq[Double], accuracy: Long): Column`

- Adds `percentile_approx` to `pyspark.sql.functions`.

- Adds `percentile_approx` function to SparkR.

### Why are the changes needed?

Currently we support `percentile_approx` only in SQL expressions. This is inconvenient and makes the function relatively unknown.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New unit tests for SparkR and PySpark.

As for now there are no additional tests in Scala API ‒ `ApproximatePercentile` is well tested and Python (including docstrings) and R tests provide additional tests, so it seems unnecessary.

Closes #27278 from zero323/SPARK-30569.

Lead-authored-by: zero323 <mszymkiewicz@gmail.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian).

Since the Proleptic Gregorian calendar is the de facto calendar worldwide, as well as the one chosen in the ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the `java.time` packages, which are based on ISO chronology). The switch was completed in SPARK-26651.

After the switch, however, some patterns are not compatible between Java 8 and Java 7, so Spark needs its own definition of the patterns rather than depending on the Java API.

In this PR, we achieve this by writing the documentation and shadowing the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030).

### Why are the changes needed?

For backward compatibility.

### Does this PR introduce any user-facing change?

No.

After we define our own datetime parsing and formatting patterns, the behavior is the same as in older Spark versions.

### How was this patch tested?

Existing and newly added unit tests.

Locally document test:

Closes #27830 from xuanyuanking/SPARK-31030.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds an R API for invoking the following higher-order functions:

- `transform` -> `array_transform` (to avoid conflict with `base::transform`).

- `exists` -> `array_exists` (to avoid conflict with `base::exists`).

- `forall` -> `array_forall` (no conflicts, renamed for consistency)

- `filter` -> `array_filter` (to avoid conflict with `stats::filter`).

- `aggregate` -> `array_aggregate` (to avoid conflict with `stats::aggregate`).

- `zip_with` -> `arrays_zip_with` (no conflicts, renamed for consistency)

- `transform_keys`

- `transform_values`

- `map_filter`

- `map_zip_with`

Overall implementation follows the same pattern as proposed for PySpark (#27406) and reuses object supporting Scala implementation (SPARK-27297).

### Why are the changes needed?

Currently higher order functions are available only using SQL and Scala API and can use only SQL expressions:

```r

select(df, expr("transform(xs, x -> x + 1)"))

```

This is error-prone, and hard to do right, when complex logic is used (`when` / `otherwise`, complex objects).

If this PR is accepted, above function could be simply rewritten as:

```r

select(df, array_transform("xs", function(x) x + 1))

```

### Does this PR introduce any user-facing change?

No (but new user-facing functions are added).

### How was this patch tested?

Added new unit tests.

Closes #27433 from zero323/SPARK-30682.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

There are currently R test failures after upgrading `testthat` to 2.0.0 and R to version 3.5.2 as of SPARK-23435. This PR aims to fix the tests so that they pass. See the explanations and causes below:

```

test_context.R:49: failure: Check masked functions

length(maskedCompletely) not equal to length(namesOfMaskedCompletely).

1/1 mismatches

[1] 6 - 4 == 2

test_context.R:53: failure: Check masked functions

sort(maskedCompletely, na.last = TRUE) not equal to sort(namesOfMaskedCompletely, na.last = TRUE).

5/6 mismatches

x[2]: "endsWith"

y[2]: "filter"

x[3]: "filter"

y[3]: "not"

x[4]: "not"

y[4]: "sample"

x[5]: "sample"

y[5]: NA

x[6]: "startsWith"

y[6]: NA

```

From my cursory look, R base and R's version are mismatched. I fixed accordingly and Jenkins will test it out.

```

test_includePackage.R:31: error: include inside function

package or namespace load failed for ‘plyr’:

package ‘plyr’ was installed by an R version with different internals; it needs to be reinstalled for use with this R version

Seems it's a package installation issue. Looks like plyr has to be re-installed.

```

From my cursory look, previously installed `plyr` remains and it's not compatible with the new R version. I fixed accordingly and Jenkins will test it out.

```

test_sparkSQL.R:499: warning: SPARK-17811: can create DataFrame containing NA as date and time

Your system is mis-configured: ‘/etc/localtime’ is not a symlink

```

This seems to be an environment problem. I suppressed the warnings for now.

```

test_sparkSQL.R:499: warning: SPARK-17811: can create DataFrame containing NA as date and time

It is strongly recommended to set envionment variable TZ to ‘America/Los_Angeles’ (or equivalent)

```

This seems to be an environment problem. I suppressed the warnings for now.

```

test_sparkSQL.R:1814: error: string operators

unable to find an inherited method for function ‘startsWith’ for signature ‘"character"’

1: expect_true(startsWith("Hello World", "Hello")) at /home/jenkins/workspace/SparkPullRequestBuilder2/R/pkg/tests/fulltests/test_sparkSQL.R:1814

2: quasi_label(enquo(object), label)

3: eval_bare(get_expr(quo), get_env(quo))

4: startsWith("Hello World", "Hello")

5: (function (classes, fdef, mtable)

{

methods <- .findInheritedMethods(classes, fdef, mtable)

if (length(methods) == 1L)

return(methods[[1L]])

else if (length(methods) == 0L) {

cnames <- paste0("\"", vapply(classes, as.character, ""), "\"", collapse = ", ")

stop(gettextf("unable to find an inherited method for function %s for signature %s",

sQuote(fdef@generic), sQuote(cnames)), domain = NA)

}

else stop("Internal error in finding inherited methods; didn't return a unique method",

domain = NA)

})(list("character"), new("nonstandardGenericFunction", .Data = function (x, prefix)

{

standardGeneric("startsWith")

}, generic = structure("startsWith", package = "SparkR"), package = "SparkR", group = list(),

valueClass = character(0), signature = c("x", "prefix"), default = NULL, skeleton = (function (x,

prefix)

stop("invalid call in method dispatch to 'startsWith' (no default method)", domain = NA))(x,

prefix)), <environment>)

6: stop(gettextf("unable to find an inherited method for function %s for signature %s",

sQuote(fdef@generic), sQuote(cnames)), domain = NA)

```

From my cursory look, R base and R's version are mismatched. I fixed accordingly and Jenkins will test it out.

Also, this PR causes a CRAN check failure as below:

```

* creating vignettes ... ERROR

Error: processing vignette 'sparkr-vignettes.Rmd' failed with diagnostics:

package ‘htmltools’ was installed by an R version with different internals; it needs to be reinstalled for use with this R version

```

This PR disables it for now.

### Why are the changes needed?

To unblock other PRs.

### Does this PR introduce any user-facing change?

No. Test only and dev only.

### How was this patch tested?

No. I am going to use Jenkins to test.

Closes #27460 from HyukjinKwon/r-test-failure.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Remove duplicate `@name` tags from `read.df` and `read.stream`.

### Why are the changes needed?

These tags are already present in

1adf3520e3/R/pkg/R/SQLContext.R (L546)

and

1adf3520e3/R/pkg/R/SQLContext.R (L678)

for `read.df` and `read.stream` respectively.

As only one `@name` tag per block is allowed, this causes build warnings with recent `roxygen2` versions:

```

Warning: [/path/to/spark/R/pkg/R/SQLContext.R:559] name May only use one name per block

Warning: [/path/to/spark/R/pkg/R/SQLContext.R:690] name May only use one name per block

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27437 from zero323/roxygen-warnings-names.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

- Update `testthat` to >= 2.0.0

- Replace `testthat:::run_tests` with `testthat:::test_package_dir`

- Add trivial assertions to tests that have no expectations, to avoid skipping.

- Update related docs.

### Why are the changes needed?

`testthat` version has been frozen by [SPARK-22817](https://issues.apache.org/jira/browse/SPARK-22817) / https://github.com/apache/spark/pull/20003, but 1.0.2 is pretty old, and we shouldn't keep things in this state forever.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

- Existing CI pipeline:

- Windows build on AppVeyor, R 3.6.2, testthat 2.3.1

- Linux build on Jenkins, R 3.1.x, testthat 1.0.2

- Additional builds with testthat 2.3.1 using [sparkr-build-sandbox](https://github.com/zero323/sparkr-build-sandbox) on c7ed64af9e697b3619779857dd820832176b3be3

R 3.4.4 (image digest ec9032f8cf98)

```

docker pull zero323/sparkr-build-sandbox:3.4.4

docker run zero323/sparkr-build-sandbox:3.4.4 zero323 --branch SPARK-23435 --commit c7ed64af9e697b3619779857dd820832176b3be3 --public-key https://keybase.io/zero323/pgp_keys.asc

```

3.5.3 (image digest 0b1759ee4d1d)

```

docker pull zero323/sparkr-build-sandbox:3.5.3

docker run zero323/sparkr-build-sandbox:3.5.3 zero323 --branch SPARK-23435 --commit c7ed64af9e697b3619779857dd820832176b3be3 --public-key https://keybase.io/zero323/pgp_keys.asc

```

and 3.6.2 (image digest 6594c8ceb72f)

```

docker pull zero323/sparkr-build-sandbox:3.6.2

docker run zero323/sparkr-build-sandbox:3.6.2 zero323 --branch SPARK-23435 --commit c7ed64af9e697b3619779857dd820832176b3be3 --public-key https://keybase.io/zero323/pgp_keys.asc

```

Corresponding [asciicasts](https://asciinema.org/) are available as 10.5281/zenodo.3629431


(a bit too large to burden asciinema.org, but they can be played locally via `asciinema play`).

----------------------------

Continued from #27328

Closes #27359 from zero323/SPARK-23435.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

This reverts commit 1d20d13149.

Closes #27351 from gatorsmile/revertSPARK25496.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Disabling test for cleaning closure of recursive function.

### Why are the changes needed?

As of 9514b822a7 this test is no longer valid, and recursive calls, even simple ones:

```r

f <- function(x) {

if(x > 0) {

f(x - 1)

} else {

x

}

}

```

lead to

```

Error: node stack overflow

```

This issue is silenced when tested with `testthat` 1.x (reason unknown), but causes failures with `testthat` 2.x (the issue can be reproduced outside the test context).

The problem is known and tracked by [SPARK-30629](https://issues.apache.org/jira/browse/SPARK-30629).

Therefore, keeping this test active doesn't make sense, as it will lead to continuous test failures, when `testthat` is updated (https://github.com/apache/spark/pull/27359 / SPARK-23435).

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

CC falaki

Closes #27363 from zero323/SPARK-29777-FOLLOWUP.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add a param `bootstrap` to control whether bootstrap samples are used.

### Why are the changes needed?

Currently, RF with numTrees=1 builds a tree directly on the original dataset, while with numTrees>1 it uses bootstrap samples to build trees.

This design exists so that a DecisionTreeModel can be trained via the RandomForest implementation; however, it is somewhat strange.

In Scikit-Learn, there is a param [bootstrap](https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html#sklearn.ensemble.RandomForestClassifier) to control whether bootstrap samples are used.
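To make the distinction concrete, here is a minimal plain-Python sketch of what a `bootstrap` flag toggles for a single tree. The function name `training_sample` and its `seed` argument are hypothetical, and drawing with replacement via `random.choice` is only one common way to bootstrap; Spark's actual sampling strategy may differ.

```python
import random

def training_sample(rows, bootstrap, seed=0):
    """Return the rows one tree would be trained on (illustrative only)."""
    if not bootstrap:
        # Without bootstrapping, the tree sees the original dataset as-is.
        return list(rows)
    # With bootstrapping, draw len(rows) rows with replacement, so some
    # rows may repeat and others may be left out.
    rng = random.Random(seed)
    return [rng.choice(rows) for _ in rows]

data = list(range(10))
print(training_sample(data, bootstrap=False))
print(training_sample(data, bootstrap=True, seed=42))
```

With an explicit flag, the choice between the two branches no longer depends implicitly on the number of trees.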

### Does this PR introduce any user-facing change?

Yes, a new param is added.

### How was this patch tested?

Existing test suites.

Closes #27254 from zhengruifeng/add_bootstrap.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

This PR adds:

- `pyspark.sql.functions.overlay` function to PySpark

- `overlay` function to SparkR

### Why are the changes needed?

Feature parity. At the moment R and Python users can access this function only using SQL or `expr` / `selectExpr`.
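For readers unfamiliar with the function, the following plain-Python sketch approximates what `overlay` does to a single string. It is not the Spark implementation: it assumes a 1-based `pos` of at least 1, treats a negative `length` as "use the length of the replacement", and does not model nulls or other edge cases.

```python
def overlay(src, replace, pos, length=-1):
    """Replace part of `src` with `replace`, starting at 1-based `pos`."""
    if length < 0:
        # Assumed default: overwrite as many characters as `replace` has.
        length = len(replace)
    # Keep everything before pos, insert the replacement, then resume
    # after the `length` characters that were overwritten.
    return src[:pos - 1] + replace + src[pos - 1 + length:]

print(overlay("Spark SQL", "_", 6))
print(overlay("Spark SQL", "ANSI ", 7, 0))
```

A `length` of 0 turns the call into a pure insertion, while a `length` larger than the replacement removes extra characters from the source.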

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New unit tests.

Closes #27325 from zero323/SPARK-30607.

Authored-by: zero323 <mszymkiewicz@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Fix all the tests that fail when AQE is enabled.

### Why are the changes needed?

Run more tests with AQE to catch bugs, and make it easier to enable AQE by default in the future.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests

Closes #26813 from JkSelf/enableAQEDefault.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds a note to the SQL function docs as well, stating that `first` and `last` can be non-deterministic.

This is already documented in `functions.scala`.
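The reason is that both functions depend on the order in which rows arrive, and that order is not guaranteed after a shuffle. The plain-Python sketch below mimics this with two orderings of the same rows; the helper names are hypothetical and the reversed list merely stands in for a different post-shuffle order.

```python
def first_per_key(rows):
    """Keep the first value seen for each key."""
    result = {}
    for key, value in rows:
        result.setdefault(key, value)
    return result

def last_per_key(rows):
    """Keep the last value seen for each key."""
    result = {}
    for key, value in rows:
        result[key] = value
    return result

rows = [("a", 1), ("a", 2), ("a", 3)]
reordered = list(reversed(rows))  # same rows, different arrival order

print(first_per_key(rows), first_per_key(reordered))
print(last_per_key(rows), last_per_key(reordered))
```

The same set of rows yields different answers depending only on arrival order, which is why the result should not be relied on without an explicit ordering.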

### Why are the changes needed?

Some people read only the SQL docs.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Jenkins will test.

Closes #27099 from HyukjinKwon/SPARK-30335.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>