### What changes were proposed in this pull request?

This PR introduces a new analysis rule, `DeduplicateRelations`, which first deduplicates any duplicate relations in a plan and then deduplicates conflicting attributes (reusing the `dedupRight` logic of `ResolveReferences`).

### Why are the changes needed?

`CostBasedJoinReorder` can fail when applied to a self-join, e.g.,

```scala
// test in JoinReorderSuite
test("join reorder with self-join") {
  val plan = t2.join(t1, Inner, Some(nameToAttr("t1.k-1-2") === nameToAttr("t2.k-1-5")))
    .select(nameToAttr("t1.v-1-10"))
    .join(t2, Inner, Some(nameToAttr("t1.v-1-10") === nameToAttr("t2.k-1-5")))

  // this can fail
  Optimize.execute(plan.analyze)
}
```

Besides, with the new rule `DeduplicateRelations`, we are able to enable some optimizations, e.g., LeftSemiAnti pushdown and redundant project removal, as reflected in the updated unit tests.

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

Added and updated unit tests.

Closes #31470 from Ngone51/join-reorder.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When a `SparkContext` has already been initialized and we then call `SparkSession.builder.enableHiveSupport().getOrCreate()`, the created `SparkSession` won't have Hive support, because we haven't reset `spark.sql.catalogImplementation` in the existing SparkContext's conf.

In this PR we use sharedState.conf to decide whether we should enable Hive Support.

### Why are the changes needed?

We should respect `enableHiveSupport`

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added UT

Closes #31680 from AngersZhuuuu/SPARK-34568.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Add the SQL config `spark.sql.legacy.interval.enabled` which will control when Spark SQL should use `CalendarIntervalType` instead of ANSI intervals.

2. Modify the `SubtractDates` expression to return values of `DayTimeIntervalType` when `spark.sql.legacy.interval.enabled` is set to `false` (which is the default).

### Why are the changes needed?

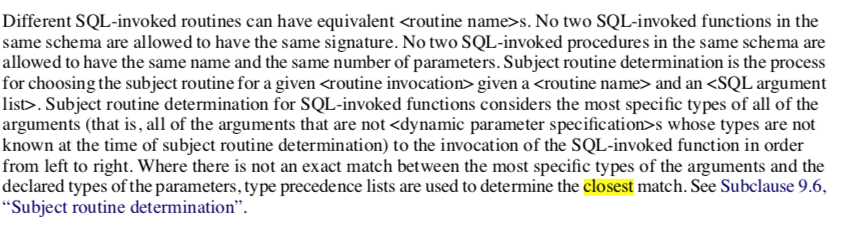

To conform to the ANSI SQL standard, which requires ANSI intervals as the result of date subtraction. See:

<img width="656" alt="Screenshot 2021-03-29 at 19 09 34" src="https://user-images.githubusercontent.com/1580697/112866455-7e2f0d00-90c2-11eb-96e6-3feb7eea7e09.png">

### Does this PR introduce _any_ user-facing change?

Yes.

### How was this patch tested?

By running new tests:

```
$ build/sbt "test:testOnly *DateExpressionsSuite"
$ build/sbt "test:testOnly *ColumnExpressionSuite"
```

and some tests from `SQLQueryTestSuite`:

```
$ build/sbt "sql/testOnly *SQLQueryTestSuite -- -z date.sql"
$ build/sbt "sql/testOnly *SQLQueryTestSuite -- -z datetime.sql"
$ build/sbt "sql/testOnly *SQLQueryTestSuite -- -z interval.sql"
```

Closes #31996 from MaxGekk/subtract-dates-to-intervals.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to add a new job in GitHub Actions to check the output of TPC-DS queries.

NOTE: I've checked that the new job took 17m 35s in the GitHub Actions env.

### Why are the changes needed?

There are some cases where we noticed runtime-related bugs after merging commits (e.g., SPARK-33822). Therefore, I think it is worth adding a new job in GitHub Actions to check the query output of TPC-DS (sf=1).

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

The new test added.

Closes #31886 from maropu/TPCDSQueryTestSuite.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

The Spark query plan node hierarchy has specialized traits (or abstract classes) for handling nodes with a fixed number of children, for example `UnaryExpression`, `UnaryNode` and `UnaryExec` for representing an expression, a logical plan and a physical plan with only one child, respectively. This PR refactors the `TreeNode` hierarchy by extracting the children-handling functionality into the following traits. `UnaryExpression` and other similar classes now extend the corresponding new trait:

```scala
trait LeafLike[T <: TreeNode[T]] { self: TreeNode[T] =>
  override final def children: Seq[T] = Nil
}

trait UnaryLike[T <: TreeNode[T]] { self: TreeNode[T] =>
  def child: T
  @transient override final lazy val children: Seq[T] = child :: Nil
}

trait BinaryLike[T <: TreeNode[T]] { self: TreeNode[T] =>
  def left: T
  def right: T
  @transient override final lazy val children: Seq[T] = left :: right :: Nil
}

trait TernaryLike[T <: TreeNode[T]] { self: TreeNode[T] =>
  def first: T
  def second: T
  def third: T
  @transient override final lazy val children: Seq[T] = first :: second :: third :: Nil
}
```

This refactoring, which is part of a bigger effort to make tree transformations in Spark more efficient, has two benefits:

- It moves the children-handling methods to a single place, instead of spreading them across specific subclasses, which will help future optimizations of tree traversals.

- It allows these traits to be mixed in with concrete node types that could not extend the previous classes. For example, expressions with one child that extend `AggregateFunction` cannot extend `UnaryExpression`, because `AggregateFunction` defines the `foldable` method as final while `UnaryExpression` defines it as non-final. With the new traits, such a concrete class can directly extend `UnaryLike`. Classes with more specific child handling will make tree traversal methods faster.

In this PR we have also updated many concrete node types to extend these traits to benefit from more specific child handling.
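To illustrate how the traits compose, here is a minimal, self-contained sketch. It uses a toy `TreeNode` and a hypothetical `Expr` hierarchy (`Lit`, `Neg`, `Add`), none of which are Spark classes, and it drops the `@transient` and `override` modifiers for brevity.

```scala
object ChildTraitsSketch {
  // Toy stand-in for Spark's TreeNode, reduced to what this sketch needs.
  abstract class TreeNode[T <: TreeNode[T]] {
    def children: Seq[T]
    // A traversal that depends only on `children`.
    def nodeCount: Int = 1 + children.map(_.nodeCount).sum
  }

  // Simplified versions of the traits: each one implements `children` once.
  trait LeafLike[T <: TreeNode[T]] { self: TreeNode[T] =>
    final def children: Seq[T] = Nil
  }

  trait UnaryLike[T <: TreeNode[T]] { self: TreeNode[T] =>
    def child: T
    final lazy val children: Seq[T] = child :: Nil
  }

  trait BinaryLike[T <: TreeNode[T]] { self: TreeNode[T] =>
    def left: T
    def right: T
    final lazy val children: Seq[T] = left :: right :: Nil
  }

  // Hypothetical node types that only pick the trait matching their arity.
  abstract class Expr extends TreeNode[Expr]
  case class Lit(value: Int) extends Expr with LeafLike[Expr]
  case class Neg(child: Expr) extends Expr with UnaryLike[Expr]
  case class Add(left: Expr, right: Expr) extends Expr with BinaryLike[Expr]

  def main(args: Array[String]): Unit = {
    val tree = Add(Neg(Lit(1)), Lit(2))
    println(tree.children)
    println(tree.nodeCount)
  }
}
```

Because `nodeCount` depends only on `children`, the same traversal works for every arity; in this sketch `Add(Neg(Lit(1)), Lit(2)).nodeCount` evaluates to 4.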

### Why are the changes needed?

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

This is a refactoring; it passes the existing tests.

Closes #31932 from dbaliafroozeh/FactorOutChildHandlnigIntoSeparateTraits.

Authored-by: Ali Afroozeh <ali.afroozeh@databricks.com>

Signed-off-by: herman <herman@databricks.com>

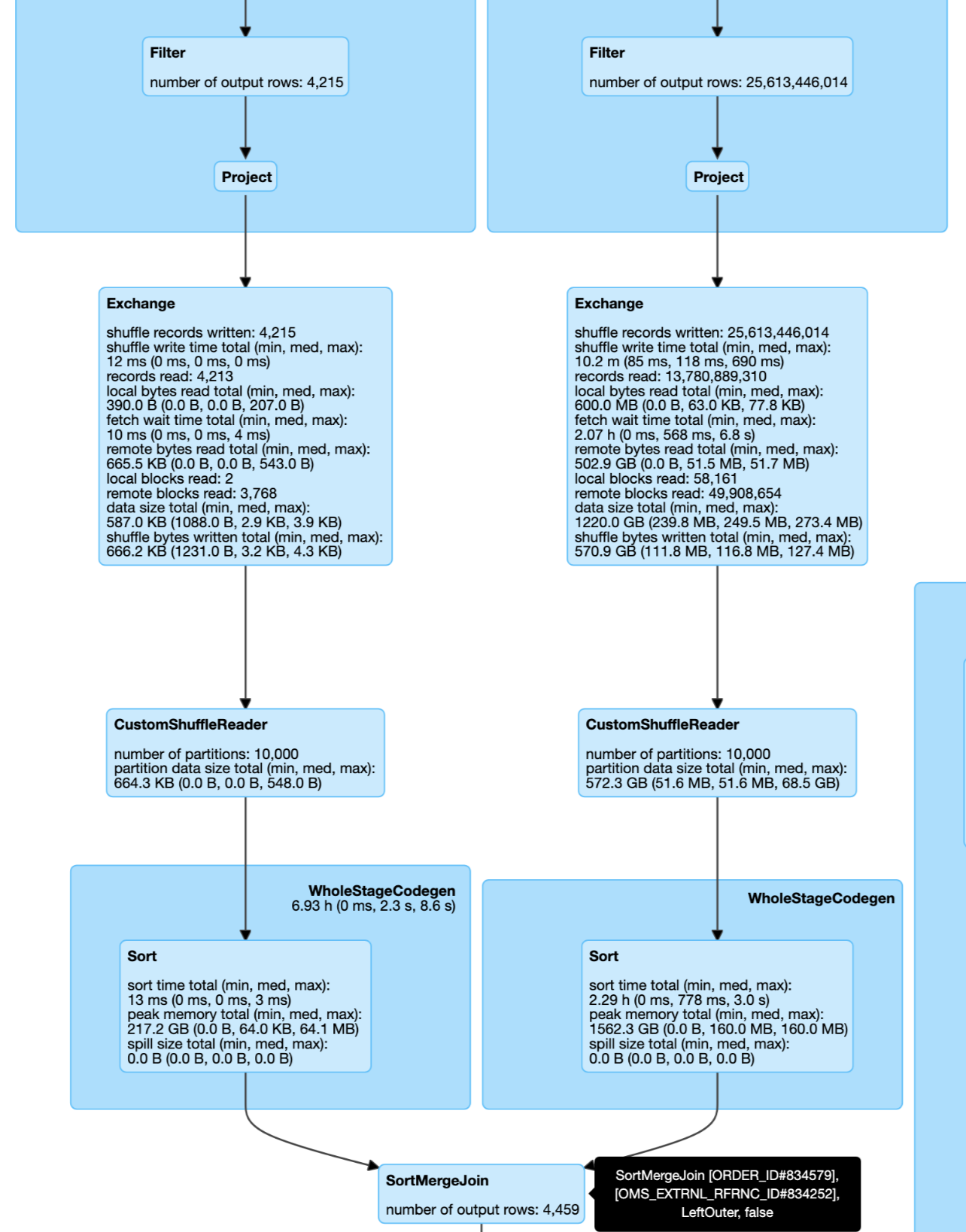

### What changes were proposed in this pull request?

Add a check for whether `CoalesceShufflePartitions` actually reduces the number of shuffle partitions.

### Why are the changes needed?

`CoalesceShufflePartitions` cannot coalesce partitions when the total shuffle partition sizes of the mappers are big enough. In that case it is confusing to use a `CustomShuffleReaderExec` that is marked as `coalesced` but has no effect on the partition number.
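The idea of the check can be sketched as follows. This is a toy greedy coalescing over partition sizes, not the algorithm used by `CoalesceShufflePartitions`; the names `coalesce`, `reallyCoalesced` and `targetSize` are hypothetical.

```scala
import scala.collection.mutable.ArrayBuffer

object CoalesceCheckSketch {
  // Greedily merge adjacent partitions while the merged size stays within targetSize.
  // Returns the groups of original partition indices.
  def coalesce(sizes: Seq[Long], targetSize: Long): Seq[Seq[Int]] = {
    val groups = ArrayBuffer.empty[Seq[Int]]
    var current = Vector.empty[Int]
    var currentSize = 0L
    for ((size, idx) <- sizes.zipWithIndex) {
      if (current.nonEmpty && currentSize + size > targetSize) {
        groups += current
        current = Vector.empty[Int]
        currentSize = 0L
      }
      current = current :+ idx
      currentSize += size
    }
    if (current.nonEmpty) groups += current
    groups.toList
  }

  // The check: only report "coalesced" when the partition count really shrank.
  def reallyCoalesced(sizes: Seq[Long], targetSize: Long): Boolean =
    coalesce(sizes, targetSize).length < sizes.length
}
```

With small partitions (`Seq(10L, 10L, 10L, 10L)` and a target of 25) the count drops from 4 to 2, so the plan would be marked as coalesced. With partitions that each exceed the target, nothing is merged and the marker would be misleading.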

### Does this PR introduce _any_ user-facing change?

Probably yes, the plan changed.

### How was this patch tested?

Add test.

Closes #31994 from ulysses-you/SPARK-34899.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Improve dynamic partition pruning evaluation so that the filtering side must be broadcastable, either by size or by hint.

### Why are the changes needed?

1. Fail fast if the filtering side cannot be broadcast by size or by hint.

2. We can safely disable `spark.sql.optimizer.dynamicPartitionPruning.reuseBroadcastOnly`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit test.

Closes #31984 from wangyum/SPARK-34884.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

As discussed in https://github.com/apache/spark/pull/30145#discussion_r514728642 and https://github.com/apache/spark/pull/30145#discussion_r514734648, we need to rewrite the current Grouping Analytics grammar to be as flexible as PostgreSQL's, in order to support subsequent development.

PostgreSQL supports:

```
select a, b, c, count(1) from t group by cube (a, b, c);
select a, b, c, count(1) from t group by cube(a, b, c);
select a, b, c, count(1) from t group by cube (a, b, c, (a, b), (a, b, c));
select a, b, c, count(1) from t group by rollup(a, b, c);
select a, b, c, count(1) from t group by rollup (a, b, c);
select a, b, c, count(1) from t group by rollup (a, b, c, (a, b), (a, b, c));
```

This PR does the following three things, which will be split into separate PRs:

- Refactor CUBE/ROLLUP (regarding them as ANTLR tokens in a parser)

- Refactor GROUPING SETS (the logical node -> a new expr)

- Support new syntax for CUBE/ROLLUP (e.g., GROUP BY CUBE ((a, b), (a, c)))
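As background for the new syntax, the following sketch shows how CUBE and ROLLUP expand into grouping sets under standard SQL semantics, including the case where an element is itself a column group such as `(a, b)`. It is an illustration only; `rollup`, `cube` and `toGroupingSets` are hypothetical helpers, not the parser or analyzer code of this PR.

```scala
object GroupingSetsSketch {
  // ROLLUP(e1, ..., en) keeps every prefix of the element list, longest first.
  def rollup[A](elems: Seq[A]): Seq[Seq[A]] = elems.inits.toList

  // CUBE(e1, ..., en) keeps every subset of the element list.
  def cube[A](elems: Seq[A]): Seq[Seq[A]] =
    elems.foldRight(Seq(Seq.empty[A])) { (e, acc) => acc.map(e +: _) ++ acc }

  // Elements may be column groups; flatten each set to its distinct columns.
  def toGroupingSets(sets: Seq[Seq[Seq[String]]]): Seq[Seq[String]] =
    sets.map(_.flatten.distinct)

  def main(args: Array[String]): Unit = {
    println(rollup(Seq("a", "b", "c")))
    println(cube(Seq("a", "b", "c")))
    // GROUP BY CUBE ((a, b), (a, c))
    println(toGroupingSets(cube(Seq(Seq("a", "b"), Seq("a", "c")))))
  }
}
```

In this sketch, `CUBE ((a, b), (a, c))` yields the grouping sets `(a, b, c)`, `(a, b)`, `(a, c)` and `()`.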

### Why are the changes needed?

To rewrite the current Grouping Analytics grammar to be as flexible as PostgreSQL's, in order to support subsequent development.

### Does this PR introduce _any_ user-facing change?

Users can write Grouping Analytics clauses as flexibly as in PostgreSQL.

### How was this patch tested?

Added UT

Closes #30212 from AngersZhuuuu/refact-grouping-analytics.

Lead-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Co-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR is a followup change to address comments in https://github.com/apache/spark/pull/31413#discussion_r603280965 and https://github.com/apache/spark/pull/31413#discussion_r603296475 . Minor change in `FileSourceScanExec`. No actual logic change here.

### Why are the changes needed?

Better readability.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes #32000 from c21/bucket-scan.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Some `spark-submit` commands for running benchmarks in the user's guide are wrong, so benchmarks cannot be run successfully with them.

The major change of this PR is to correct these commands. For example, to run a benchmark that inherits from `SqlBasedBenchmark`, we must specify `--jars <spark core test jar>,<spark catalyst test jar>`, because a `SqlBasedBenchmark`-based benchmark extends `BenchmarkBase` (defined in the Spark core test jar) and `SQLHelper` (defined in the Spark catalyst test jar).

Another change of this PR is to remove the `scalatest Assertions` dependency from the benchmarks, because `scalatest-*.jar` is not in the distribution package and would be troublesome to use.

### Why are the changes needed?

Make sure benchmarks can be run using the `spark-submit` commands described in the guide.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Use the corrected `spark-submit` commands to run benchmarks successfully.

Closes #31995 from LuciferYang/fix-benchmark-guide.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to extend the distribution and ordering requirements on V2 writes so that a data source can specify the number of partitions used for the repartition. This lets the data source control the parallelism and determine the data distribution per partition in advance.

The static number of partitions is optional and disabled by default via a default method, so only implementations that need to restrict the number of partitions statically have to override the method and provide the number.

Note that this PR does not support a static number of partitions with an unspecified distribution, as we haven't found real use cases, and for the hypothetical case the static number isn't good enough. The Javadoc clearly describes the limitation.

### Why are the changes needed?

The use case comes from feature parity with DSv1.

I have a state data source which enables the state in Structured Streaming to be rewritten, which in turn enables repartitioning, schema evolution, etc. via batch queries. The writer requires hash partitioning on the group key, with the "desired number of partitions", which is the same as what Spark does when reading and writing state.

This is now implemented as DSv1, and the requirement is simply done by calling repartition with the "desired number".

```
val fullPathsForKeyColumns = keySchema.map(key => new Column(s"key.${key.name}"))
data
  .repartition(newPartitions, fullPathsForKeyColumns: _*)
  .queryExecution
  .toRdd
  .foreachPartition(
    writeFn(resolvedCpLocation, version, operatorId, storeName, keySchema, valueSchema,
      storeConf, hadoopConfBroadcast, queryId))
```

Thanks to SPARK-34026, it's now possible to require hash partitioning, but it is still not possible to require the number of partitions. This PR lets a data source require the number of partitions.

### Does this PR introduce _any_ user-facing change?

Yes, but only for data source implementors. Even for them, this is not a breaking change, as a default method is added.

### How was this patch tested?

Added UTs.

Closes #31355 from HeartSaVioR/SPARK-34255.

Lead-authored-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

Co-authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes an issue that `quoteIfNeeded` quotes a name only if it contains `.` or ``` ` ```.

This method should quote it if it contains non-word characters.
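A minimal sketch of the intended behavior is shown below. It assumes that "word characters" means ASCII letters, digits and underscore, and that an embedded backtick is escaped by doubling it; both are assumptions of this sketch rather than details stated above.

```scala
object QuoteSketch {
  // Leave plain identifiers alone; wrap anything else in backticks.
  // ASSUMPTION: word characters are [a-zA-Z0-9_], backticks are escaped by doubling.
  def quoteIfNeeded(part: String): String =
    if (part.matches("[a-zA-Z0-9_]+")) part
    else "`" + part.replace("`", "``") + "`"

  def main(args: Array[String]): Unit = {
    Seq("abc", "a.b", "a-b", "a b").foreach(p => println(quoteIfNeeded(p)))
  }
}
```

Under these assumptions, names such as `a-b` or `a b` are quoted as well, whereas a check that only looks for a dot or a backtick would leave them unquoted.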

### Why are the changes needed?

It's a potential bug.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New test.

Closes #31964 from sarutak/fix-quoteIfNeeded.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Filled the `defaultResult` field on non-nullable aggregates

### Why are the changes needed?

The `defaultResult` defaults to `None` and in some situations (like correlated scalar subqueries) it is used for the value of the aggregation.

The UT result before the fix:

```

-- !query

SELECT t1a,

(SELECT count(t2d) FROM t2 WHERE t2a = t1a) count_t2,

(SELECT count_if(t2d > 0) FROM t2 WHERE t2a = t1a) count_if_t2,

(SELECT approx_count_distinct(t2d) FROM t2 WHERE t2a = t1a) approx_count_distinct_t2,

(SELECT collect_list(t2d) FROM t2 WHERE t2a = t1a) collect_list_t2,

(SELECT collect_set(t2d) FROM t2 WHERE t2a = t1a) collect_set_t2,

(SELECT hex(count_min_sketch(t2d, 0.5d, 0.5d, 1)) FROM t2 WHERE t2a = t1a) collect_set_t2

FROM t1

-- !query schema

struct<t1a:string,count_t2:bigint,count_if_t2:bigint,approx_count_distinct_t2:bigint,collect_list_t2:array<bigint>,collect_set_t2:array<bigint>,collect_set_t2:string>

-- !query output

val1a 0 0 NULL NULL NULL NULL

val1a 0 0 NULL NULL NULL NULL

val1a 0 0 NULL NULL NULL NULL

val1a 0 0 NULL NULL NULL NULL

val1b 6 6 3 [19,119,319,19,19,19] [19,119,319] 0000000100000000000000060000000100000004000000005D8D6AB90000000000000000000000000000000400000000000000010000000000000001

val1c 2 2 2 [219,19] [219,19] 0000000100000000000000020000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000001

val1d 0 0 NULL NULL NULL NULL

val1d 0 0 NULL NULL NULL NULL

val1d 0 0 NULL NULL NULL NULL

val1e 1 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000

val1e 1 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000

val1e 1 1 1 [19] [19] 0000000100000000000000010000000100000004000000005D8D6AB90000000000000000000000000000000100000000000000000000000000000000

```

### Does this PR introduce _any_ user-facing change?

Bugfix

### How was this patch tested?

UT

Closes #31973 from tanelk/SPARK-34876_non_nullable_agg_subquery.

Authored-by: Tanel Kiis <tanel.kiis@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The changes being proposed are to increase the accuracy of JDBCRelation's stride calculation, as outlined in: https://issues.apache.org/jira/browse/SPARK-34843

In summary:

Currently, in JDBCRelation (line 123), the stride size is calculated as follows:

`val stride: Long = upperBound / numPartitions - lowerBound / numPartitions`

Due to truncation happening on both divisions, the stride size can fall short of what it should be. This can lead to a big difference between the provided upper bound and the actual start of the last partition.

I'm proposing a different formula that doesn't truncate too early and also maintains accuracy using fixed-point decimals. This helps tremendously with the size of the last partition, which can be amplified even more if there is data skew in that direction. In a real-life test, I've seen a 27% increase in performance with this more proper stride alignment. The reason for using fixed-point decimals instead of floating-point numbers is the inaccuracy caused by the limits of what a float can represent. This may seem small, but it could shift the midpoint a bit, and depending on how granular the data is, that could translate to quite a difference. It's also just inaccurate, and I'm striving to make the partitioning as accurate as possible, within reason.

Lastly, since the last partition's predicate is determined by how the strides align starting from the lower bound (plus one stride), there can be skew introduced creating a larger last partition compared to the first partition. Therefore, after calculating a more precise stride size, I've also introduced logic to move the first partition's predicate (which is an offset from the lower bound) to a position that closely matches the offset of the last partition's predicate (in relation to the upper bound). This makes the first and last partition more evenly distributed compared to each other, and helps with the last task being the largest (reducing its size).
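The arithmetic can be sketched as follows, with date bounds expressed as epoch days. `oldStride` is the formula quoted above. `preciseStride` and `alignedLower` are one possible reading of the description (divide with fixed-point decimals, truncate once, then shift the lower bound by half of the range lost to truncation); the names and the exact rounding are assumptions of this sketch, not necessarily the PR's code.

```scala
import java.time.LocalDate
import scala.math.BigDecimal.RoundingMode

object StrideSketch {
  // Stride as computed before this change: both divisions truncate.
  def oldStride(lower: Long, upper: Long, numPartitions: Int): Long =
    upper / numPartitions - lower / numPartitions

  // Stride computed with fixed-point decimals; nothing is truncated here.
  def preciseStride(lower: Long, upper: Long, numPartitions: Int): BigDecimal =
    BigDecimal(upper) / BigDecimal(numPartitions) -
      BigDecimal(lower) / BigDecimal(numPartitions)

  // ASSUMPTION: realign by shifting the lower bound by half of the total
  // range that is lost when the precise stride is truncated to a Long.
  def alignedLower(lower: Long, upper: Long, numPartitions: Int): Long = {
    val precise = preciseStride(lower, upper, numPartitions)
    val lost = (precise - BigDecimal(precise.toLong)) * BigDecimal(numPartitions)
    lower + (lost / BigDecimal(2)).setScale(0, RoundingMode.HALF_UP).toLong
  }

  // Upper boundary of the first partition and lower boundary of the last one.
  def boundaries(lower: Long, stride: Long, numPartitions: Int): (LocalDate, LocalDate) =
    (LocalDate.ofEpochDay(lower + stride),
      LocalDate.ofEpochDay(lower + (numPartitions - 1) * stride))

  def main(args: Array[String]): Unit = {
    val lo = LocalDate.parse("1930-01-01").toEpochDay
    val hi = LocalDate.parse("2020-12-31").toEpochDay
    val n = 1000
    println(boundaries(lo, oldStride(lo, hi, n), n))
    println(boundaries(alignedLower(lo, hi, n), preciseStride(lo, hi, n).toLong, n))
  }
}
```

With the bounds of Example 1 (1930-01-01 to 2020-12-31, 1000 partitions), the old stride is 32 days while the precise stride is 33.237 days. The sketch reproduces the boundaries listed in that example: 1930-02-02 and 2017-07-11 for the old method, and 1930-06-02 and 2020-08-02 with the truncated precise stride plus realignment.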

### Why are the changes needed?

The current implementation is inaccurate and can lead to the last task/partition running much longer than previous tasks. Therefore, you can end up with a single node/core running for an extended period while other nodes/cores are sitting idle.

### Does this PR introduce _any_ user-facing change?

No. I suspect some users will simply see a good performance increase. As stated above, if we had run our code on a Spark build with this change, we would have immediately seen a 27% increase in performance.

### How was this patch tested?

I've added two new unit tests. I did need to update one unit test, but when you look at the comparison of the before and after, you'll see better alignment of the partitioning with the new implementation. Given that the lower partition's predicate is exclusive and the upper's is inclusive, the offset of the lower was 3 days, and the offset of the upper was 6 days... that's potentially twice the amount of data in that upper partition (could be much more depending on how the user's data is distributed).

Other unit tests that utilize timestamps and two partitions have maintained their midpoint.

### Examples

I've added results with and without the realignment logic to better highlight both improvements this PR brings.

**Example 1:**

Given the following partition config:

"lowerBound" -> "1930-01-01"

"upperBound" -> "2020-12-31"

"numPartitions" -> 1000

_Old method (exactly what it would be BEFORE this PR):_

First partition: "PartitionColumn" < '1930-02-02' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2017-07-11'

_Old method, but with new realignment logic of first partition:_

First partition: "PartitionColumn" < '1931-10-14' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2019-03-22'

_New method:_

First partition: "PartitionColumn" < '1930-02-03' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2020-04-05'

_New method with new realignment logic of first partition (exactly what it would be AFTER this PR):_

First partition: "PartitionColumn" < '1930-06-02' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2020-08-02'

**Example 2:**

Given the following partition config:

"lowerBound" -> "1927-04-05",

"upperBound" -> "2020-10-16"

"numPartitions" -> 2000

_Old method (exactly what it would be BEFORE this PR):_

First partition: "PartitionColumn" < '1927-04-21' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2014-10-29'

_Old method, but with new realignment logic of first partition:_

First partition: "PartitionColumn" < '1930-04-07' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2017-10-15'

_New method:_

First partition: "PartitionColumn" < '1927-04-22' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2020-04-19'

_New method with new realignment logic of first partition (exactly what it would be AFTER this PR):_

First partition: "PartitionColumn" < '1927-07-13' or "PartitionColumn" is null

Last partition: "PartitionColumn" >= '2020-07-10'

Closes #31965 from hanover-fiste/SPARK-34843.

Authored-by: hanover-fiste <jyarbrough.git@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR fixes a correctness issue with higher-order functions. The results of function expressions need to be copied in some higher-order functions, because such an expression can return its internal buffers and a higher-order function can call the expression multiple times.

The issue was discovered with typed `ScalaUDF`s after https://github.com/apache/spark/pull/28979.
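The class of bug can be reproduced outside Spark. In the sketch below, `doubled` is a hypothetical function that returns its internal buffer on every call, standing in for an expression that reuses its result object; it is not Spark code.

```scala
object BufferReuseSketch {
  // Internal buffer that is overwritten and returned on every call.
  private val buffer = new Array[Int](1)
  def doubled(x: Int): Array[Int] = { buffer(0) = x * 2; buffer }

  // Stores references to the shared buffer, so every stored result
  // shows the value written by the last call.
  def mapWithoutCopy(xs: Seq[Int]): Seq[Int] =
    xs.toList.map(x => doubled(x)).map(arr => arr(0))

  // Copies each result before the next call overwrites the buffer.
  def mapWithCopy(xs: Seq[Int]): Seq[Int] =
    xs.toList.map(x => doubled(x).clone()).map(arr => arr(0))

  def main(args: Array[String]): Unit = {
    println(mapWithoutCopy(Seq(1, 2, 3)))
    println(mapWithCopy(Seq(1, 2, 3)))
  }
}
```

Without the copy, all three stored results alias the same array and read back as 6; with the copy, the results are 2, 4 and 6.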

### Why are the changes needed?

To fix a bug.

### Does this PR introduce _any_ user-facing change?

Yes, some queries return the right results again.

### How was this patch tested?

Added new UT.

Closes #31955 from peter-toth/SPARK-34829-fix-scalaudf-resultconversion.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix code format in `SQLConf` and comment in `PartitionPruning`.

### Why are the changes needed?

Make code more readable.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

N/A

Closes #31969 from wangyum/SPARK-32855-2.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Apache Parquet 1.12.0 switches its ZSTD compression from Hadoop codec to its own codec.

### Why are the changes needed?

**Apache Spark 3.1 (It requires libhadoop built with zstd)**

```scala
scala> spark.range(10).write.option("compression", "zstd").parquet("/tmp/a")
21/03/27 08:49:38 ERROR Executor: Exception in task 11.0 in stage 0.0 (TID 11)
java.lang.RuntimeException: native zStandard library not available:
this version of libhadoop was built without zstd support.
```

**Apache Spark 3.2 (No libhadoop requirement)**

```scala
scala> spark.range(10).write.option("compression", "zstd").parquet("/tmp/a")
```

### Does this PR introduce _any_ user-facing change?

Yes, this is an improvement.

### How was this patch tested?

Pass the CI with the newly added test coverage.

Closes #31981 from dongjoon-hyun/SPARK-34880.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Improve the error message for casts that cause an overflow error, by using the DataType's `catalogString`.

### Why are the changes needed?

Improve error message

### Does this PR introduce _any_ user-facing change?

For example:

```
set spark.sql.ansi.enabled=true;
select tinyint(128) * tinyint(2);
```

Error message before this pr:

```
Casting 128 to scala.Byte$ causes overflow
```

After this pr:

```
Casting 128 to tinyint causes overflow
```

### How was this patch tested?

Added UT

Closes #31971 from AngersZhuuuu/SPARK-34744.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Kent Yao <yao@apache.org>

### What changes were proposed in this pull request?

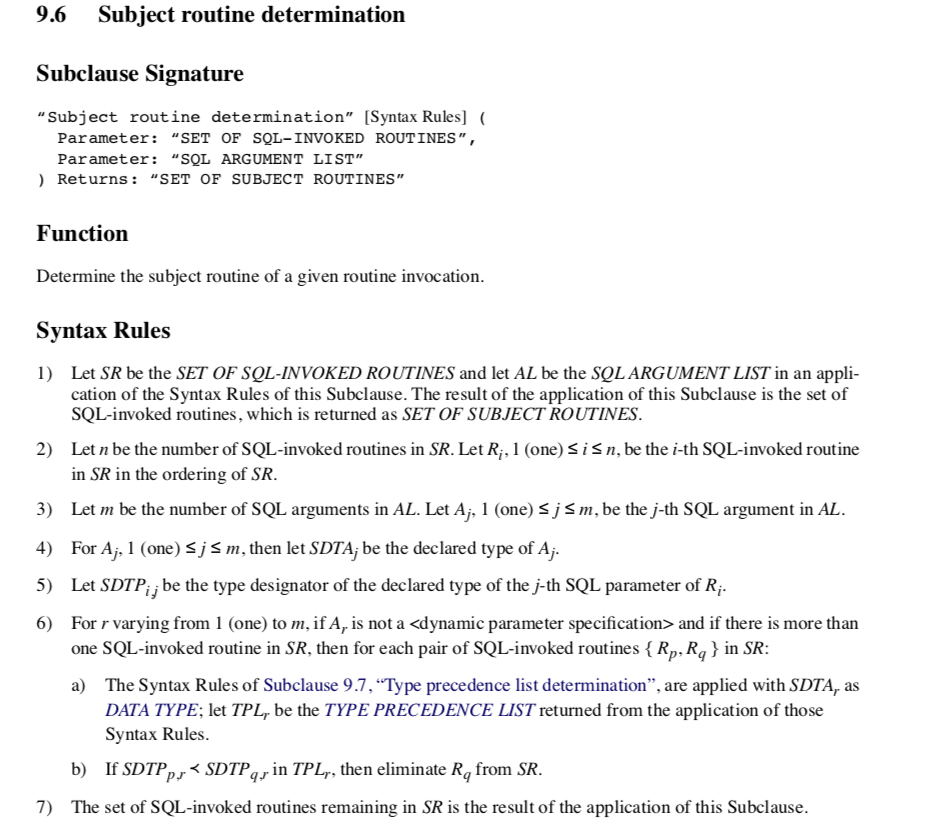

1. Add new expression `DivideDTInterval` which divides a `DayTimeIntervalType` expression by a `NumericType` expression including ByteType, ShortType, IntegerType, LongType, FloatType, DoubleType, DecimalType.

2. Extend binary arithmetic rules to support `day-time interval / numeric`.

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires such an operation over day-time intervals:

<img width="656" alt="Screenshot 2021-03-25 at 18 44 58" src="https://user-images.githubusercontent.com/1580697/112501559-68f07080-8d9a-11eb-8781-66e6631bb7ef.png">

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running new tests:

```
$ build/sbt "test:testOnly *IntervalExpressionsSuite"
$ build/sbt "test:testOnly *ColumnExpressionSuite"
```

Closes #31972 from MaxGekk/div-dt-interval-by-num.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Add new expression `DivideYMInterval` which divides a `YearMonthIntervalType` expression by a `NumericType` expression including ByteType, ShortType, IntegerType, LongType, FloatType, DoubleType, DecimalType.

2. Extend binary arithmetic rules to support `year-month interval / numeric`.

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires such an operation over year-month intervals:

<img width="656" alt="Screenshot 2021-03-25 at 18 44 58" src="https://user-images.githubusercontent.com/1580697/112501559-68f07080-8d9a-11eb-8781-66e6631bb7ef.png">

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running new tests:

```
$ build/sbt "test:testOnly *IntervalExpressionsSuite"
$ build/sbt "test:testOnly *ColumnExpressionSuite"
```

Closes #31961 from MaxGekk/div-ym-interval-by-num.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR improves the cost model in `pruningHasBenefit` for the case where the filtering side cannot be broadcast because of the join type:

1. The filtering side must be small enough to build broadcast by size.

2. The estimated size of the pruning side must be big enough: `estimatePruningSideSize * spark.sql.optimizer.dynamicPartitionPruning.pruningSideExtraFilterRatio > overhead`.
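The two conditions can be written down as a small predicate. This is a sketch with hypothetical parameter names (`filteringSideSize`, `broadcastThreshold`, `overhead`); only the inequality in the second condition is taken from the text above, and the real `pruningHasBenefit` works on plans and statistics rather than plain numbers.

```scala
object PruningBenefitSketch {
  def pruningHasBenefit(
      filteringSideSize: Long,
      broadcastThreshold: Long,
      estimatePruningSideSize: Long,
      pruningSideExtraFilterRatio: Double,
      overhead: Long): Boolean = {
    // Condition 1: the filtering side is small enough to broadcast by size.
    val canBroadcastBySize = filteringSideSize <= broadcastThreshold
    // Condition 2: the expected saving on the pruning side exceeds the overhead.
    val pruningSideBigEnough =
      estimatePruningSideSize * pruningSideExtraFilterRatio > overhead
    canBroadcastBySize && pruningSideBigEnough
  }
}
```

For instance, with a 1 MiB filtering side, a 10 MiB threshold, a ratio of 0.04 and a 1 GiB overhead, a 100 GiB pruning side passes the check while a 10 GiB pruning side does not.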

### Why are the changes needed?

Improve query performance for these cases.

This is a real case from a cluster: a left join where the left side is very small and the right side can build DPP:

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #29726 from wangyum/SPARK-32855.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

A companion PR for SPARK-34817, in which we handled the unsigned int (<= 32 bits) logical types. In this PR, we map the unsigned int64 to decimal(20, 0) for better compatibility.
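Why 20 digits are enough can be checked with a short sketch. `uint64ToDecimal` is a hypothetical helper that reinterprets the 64 stored bits as an unsigned value using the JDK's `Long.toUnsignedString`; it is not the reader code of this PR.

```scala
object UInt64Sketch {
  // Reinterpret the raw signed 64-bit value as unsigned and widen it to a decimal.
  def uint64ToDecimal(raw: Long): BigDecimal =
    BigDecimal(java.lang.Long.toUnsignedString(raw))

  def main(args: Array[String]): Unit = {
    val max = uint64ToDecimal(-1L) // all 64 bits set
    println(max)
    println(max.precision)
  }
}
```

The largest unsigned 64-bit value, 18446744073709551615, has 20 decimal digits, so it fits in decimal(20, 0) but not in a signed 64-bit long.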

### Why are the changes needed?

Spark won't have unsigned types, but Spark should be able to read existing Parquet files written by other systems that support unsigned types, for better compatibility.

### Does this PR introduce _any_ user-facing change?

Yes, we can read Parquet uint64 now.

### How was this patch tested?

new unit tests

Closes #31960 from yaooqinn/SPARK-34786-2.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Kent Yao <yao@apache.org>

### What changes were proposed in this pull request?

Move the checkpoint location resolving into the rule ResolveWriteToStream, which is added in SPARK-34748.

### Why are the changes needed?

After SPARK-34748, we have the rule ResolveWriteToStream for the resolution logic of stream write plans. Based on it, we can further move the checkpoint location resolving work into the rule. Then, all the checkpoint resolving logic is done in the analyzer.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing UT.

Closes #31963 from xuanyuanking/SPARK-34871.

Authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR groups exception messages in `execution/datasources/v2`.

### Why are the changes needed?

It will largely help with the standardization of error messages and their maintenance.

### Does this PR introduce _any_ user-facing change?

No. Error messages remain unchanged.

### How was this patch tested?

No new tests - pass all original tests to make sure it doesn't break any existing behavior.

Closes #31619 from karenfeng/spark-33600.

Authored-by: Karen Feng <karen.feng@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Update the plan stability golden files even if only the `explain.txt` changes.

This is a resubmission of #31927. The schema for one of the TPCDS tables was updated, and that changed the `explain.txt` for q17.

### Why are the changes needed?

Currently only changes to `simplified.txt` are checked. Some PRs update `explain.txt` without changing `simplified.txt`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

The updated golden files.

Closes #31957 from tanelk/SPARK-34822_update_plan_stability.

Lead-authored-by: Tanel Kiis <tanel.kiis@gmail.com>

Co-authored-by: tanel.kiis@gmail.com <tanel.kiis@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Add new expression `MultiplyDTInterval` which multiplies a `DayTimeIntervalType` expression by a `NumericType` expression including ByteType, ShortType, IntegerType, LongType, FloatType, DoubleType, DecimalType.

2. Extend binary arithmetic rules to support `numeric * day-time interval` and `day-time interval * numeric`.

3. Invoke `DoubleMath.roundToInt` in `double/float * year-month interval`.
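The arithmetic behind these rules can be sketched in plain Scala. The sketch assumes that a day-time interval is held as a count of microseconds and a year-month interval as a count of months, and it uses half-up rounding for the fractional case; the representation, the rounding mode and the function names are all assumptions of this sketch, not details taken from the PR.

```scala
import scala.math.BigDecimal.RoundingMode

object IntervalMultiplySketch {
  // ASSUMPTION: a day-time interval is a number of microseconds.
  // multiplyExact throws ArithmeticException on overflow instead of wrapping.
  def multiplyDayTime(micros: Long, factor: Long): Long =
    Math.multiplyExact(micros, factor)

  // ASSUMPTION: a year-month interval is a number of months, and a fractional
  // product is rounded half-up to a whole number of months.
  def multiplyYearMonth(months: Int, factor: Double): Int = {
    val rounded = BigDecimal(months * factor).setScale(0, RoundingMode.HALF_UP)
    rounded.bigDecimal.intValueExact()
  }

  def main(args: Array[String]): Unit = {
    val oneDayMicros = 24L * 60 * 60 * 1000 * 1000
    println(multiplyDayTime(oneDayMicros, 2))
    println(multiplyYearMonth(10, 1.25))
  }
}
```

The point of the explicit rounding and the exact conversions is that an out-of-range result fails loudly rather than silently wrapping around.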

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires such an operation over day-time intervals:

<img width="667" alt="Screenshot 2021-03-22 at 16 33 16" src="https://user-images.githubusercontent.com/1580697/111997810-77d1eb80-8b2c-11eb-951d-e43911d9c5db.png">

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running new tests:

```
$ build/sbt "test:testOnly *IntervalExpressionsSuite"
$ build/sbt "test:testOnly *ColumnExpressionSuite"
```

Closes #31951 from MaxGekk/mul-day-time-interval.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Unsigned types may be used to produce smaller in-memory representations of the data. These types are used by frameworks (e.g. Hive, Pig) that use Parquet, and Parquet maps them to its base types.

See https://github.com/apache/parquet-format/blob/master/LogicalTypes.md and https://github.com/apache/parquet-format/blob/master/src/main/thrift/parquet.thrift for more details.

```thrift
/**
 * An unsigned integer value.
 *
 * The number describes the maximum number of meaningful data bits in
 * the stored value. 8, 16 and 32 bit values are stored using the
 * INT32 physical type. 64 bit values are stored using the INT64
 * physical type.
 *
 */
UINT_8 = 11;
UINT_16 = 12;
UINT_32 = 13;
UINT_64 = 14;
```

```

UInt8-[0:255]

UInt16-[0:65535]

UInt32-[0:4294967295]

UInt64-[0:18446744073709551615]

```

In this PR, we support reading UINT_8 as ShortType, UINT_16 as IntegerType, and UINT_32 as LongType, so that each target type covers the full unsigned range. Support for UINT_64 will be added in another PR.
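
To see why each unsigned type needs the next wider signed type, here is a small plain-Scala sketch. It is not the Parquet reader code from this PR; the helper names are hypothetical, and it only assumes what the thrift comment states, namely that 8, 16 and 32 bit values arrive in an INT32.

```scala
// Hypothetical helpers; not Spark's actual Parquet reader code.
object UnsignedWidening {
  // UINT_8 range is [0, 255], which exceeds Byte but fits in Short.
  def uint8ToShort(stored: Int): Short = (stored & 0xFF).toShort

  // UINT_16 range is [0, 65535], which exceeds Short but fits in Int.
  def uint16ToInt(stored: Int): Int = stored & 0xFFFF

  // UINT_32 uses all 32 bits of the INT32, so large values look negative
  // when read as signed; reinterpret the bits and widen to Long.
  def uint32ToLong(stored: Int): Long = Integer.toUnsignedLong(stored)
}
```

For instance, the INT32 bit pattern `-1` corresponds to the largest UINT_32 value, 4294967295, which only a `Long` can hold.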

### Why are the changes needed?

better parquet support

### Does this PR introduce _any_ user-facing change?

Yes, we can now read uint[8/16/32] from Parquet files.

### How was this patch tested?

new tests

Closes#31921 from yaooqinn/SPARK-34817.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to stop re-analyzing the already analyzed plan for `CreateViewCommand`, as discussed in https://github.com/apache/spark/pull/31273/files#r581592786.

### Why are the changes needed?

No need to analyze the plan if it's already analyzed.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests should cover this.

Closes#31933 from imback82/remove_analyzed_from_create_temp_view.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes a bug where right-padding is not applied to char types inside correlated subqueries.

For example, the query below returns nothing on master, but the correct result is `c`.

```
scala> sql(s"CREATE TABLE t1(v VARCHAR(3), c CHAR(5)) USING parquet")
scala> sql(s"CREATE TABLE t2(v VARCHAR(5), c CHAR(7)) USING parquet")
scala> sql("INSERT INTO t1 VALUES ('c', 'b')")
scala> sql("INSERT INTO t2 VALUES ('a', 'b')")
scala> val df = sql("""
  |SELECT v FROM t1
  |WHERE 'a' IN (SELECT v FROM t2 WHERE t2.c = t1.c )""".stripMargin)

scala> df.show()
+---+
|  v|
+---+
+---+
```

This is because `ApplyCharTypePadding` does not handle the case above, so no right-padding is applied to the outer reference `t1.c` before it is compared with `t2.c`. This PR modifies the code in `ApplyCharTypePadding` to handle it correctly.

```
// Before this PR:
scala> df.explain(true)
== Analyzed Logical Plan ==
v: string
Project [v#13]
+- Filter a IN (list#12 [c#14])
   :  +- Project [v#15]
   :     +- Filter (c#16 = outer(c#14))
   :        +- SubqueryAlias spark_catalog.default.t2
   :           +- Relation default.t2[v#15,c#16] parquet
   +- SubqueryAlias spark_catalog.default.t1
      +- Relation default.t1[v#13,c#14] parquet

scala> df.show()
+---+
|  v|
+---+
+---+

// After this PR:
scala> df.explain(true)
== Analyzed Logical Plan ==
v: string
Project [v#43]
+- Filter a IN (list#42 [c#44])
   :  +- Project [v#45]
   :     +- Filter (c#46 = rpad(outer(c#44), 7, ))
   :        +- SubqueryAlias spark_catalog.default.t2
   :           +- Relation default.t2[v#45,c#46] parquet
   +- SubqueryAlias spark_catalog.default.t1
      +- Relation default.t1[v#43,c#44] parquet

scala> df.show()
+---+
|  v|
+---+
|  c|
+---+
```
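
The effect of the added `rpad` can be reproduced without Spark. The sketch below is a plain-Scala illustration that assumes `CHAR(n)` values are stored right-padded with spaces to length `n`; the object and value names are hypothetical.

```scala
// Hypothetical illustration of CHAR(n) comparison; not Spark code.
object CharPaddingSketch {
  // Right-pad with spaces up to n characters; longer strings are left unchanged.
  def rpad(s: String, n: Int): String = s + " " * math.max(0, n - s.length)

  val t1c: String = rpad("b", 5) // value of t1.c, a CHAR(5) column
  val t2c: String = rpad("b", 7) // value of t2.c, a CHAR(7) column

  // Without the fix the two sides have different lengths and never match.
  val matchesWithoutPadding: Boolean = t2c == t1c

  // With the fix the outer reference is padded to the wider length first.
  val matchesWithPadding: Boolean = t2c == rpad(t1c, 7)
}
```

Padding the narrower side to the wider length, as the `rpad(outer(c#44), 7, )` in the plan above does, is what lets the correlated predicate match.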

This fix is related to TPCDS q17; the query returns nothing because of this bug: https://github.com/apache/spark/pull/31886/files#r599333799

### Why are the changes needed?

Bugfix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests added.

Closes#31940 from maropu/FixCharPadding.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

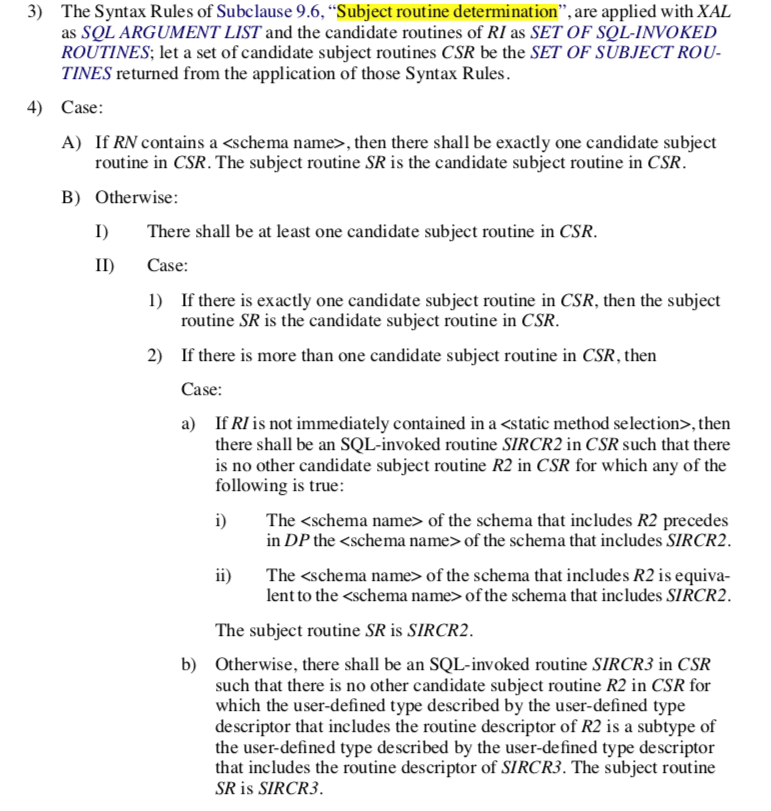

### What changes were proposed in this pull request?

Currently, when implicit casting a data type to a `TypeCollection`, Spark returns the first convertible data type among `TypeCollection`.

In ANSI mode, we can make the behavior more reasonable by returning the closest convertible data type in `TypeCollection`.

In detail, we first try to find all the expected types that the input can be implicitly cast to:

1. if there are no convertible data types, return None;

2. if there is only one convertible data type, cast the input to it;

3. otherwise, if there are multiple convertible data types, find the closest data type among them. If there is no such closest data type, return None.

Note that if the closest type is Float type and the convertible types contain Double type, we simply return Double type as the closest type to avoid potential precision loss when converting an Integral type to Float type.
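
The three steps and the Float/Double note can be sketched with a toy type model. This is not the analyzer code: the types, the widening order `Int < Long < Float < Double`, and the reading of "closest" as "the candidate that can itself be cast to every other candidate" are all assumptions made for this illustration.

```scala
// Toy model of the selection logic; all names here are hypothetical.
object ClosestTypeSketch {
  sealed trait T
  case object IntT extends T
  case object LongT extends T
  case object FloatT extends T
  case object DoubleT extends T
  case object StringT extends T

  // Assumed widening order for the sketch; StringT takes part in no implicit cast.
  private val order: Map[T, Int] =
    Map(IntT -> 0, LongT -> 1, FloatT -> 2, DoubleT -> 3)

  def canCast(from: T, to: T): Boolean =
    (order.get(from), order.get(to)) match {
      case (Some(a), Some(b)) => a <= b
      case _ => from == to
    }

  def implicitCast(in: T, collection: Seq[T]): Option[T] = {
    val convertible = collection.filter(canCast(in, _))
    convertible match {
      case Seq() => None                 // step 1: nothing convertible
      case Seq(only) => Some(only)       // step 2: exactly one candidate
      case many =>                       // step 3: pick the closest candidate
        many.find(c => many.forall(canCast(c, _))).map {
          case FloatT if many.contains(DoubleT) => DoubleT // avoid precision loss
          case other => other
        }
    }
  }
}
```

Under these assumptions, `implicitCast(IntT, Seq(DoubleT, LongT))` yields `Some(LongT)` regardless of the order in which the collection lists its types.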

### Why are the changes needed?

Make the type coercion rule for TypeCollection more reasonable and ANSI compatible.

E.g. returning Long instead of Double for `implicitCast(int, TypeCollection(Double, Long))`.

From ANSI SQL Spec section 4.33 "SQL-invoked routines"

Section 9.6 "Subject routine determination"

Section 10.4 "routine invocation"

### Does this PR introduce _any_ user-facing change?

Yes, in ANSI mode, implicit casting to a `TypeCollection` returns the narrowest convertible data type instead of the first convertible one.

### How was this patch tested?

Unit tests.

Closes#31859 from gengliangwang/implicitCastTypeCollection.

Lead-authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Co-authored-by: Gengliang Wang <ltnwgl@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Update the plan stability golden files even if only the `explain.txt` changes.

### Why are the changes needed?

Currently, only changes to `simplified.txt` are checked. Some PRs update `explain.txt` without changing `simplified.txt`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

The updated golden files.

Closes#31927 from tanelk/SPARK-34822_update_plan_stability.

Lead-authored-by: Tanel Kiis <tanel.kiis@gmail.com>

Co-authored-by: tanel.kiis@gmail.com <tanel.kiis@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Both local limit and global limit define the output partitioning and output ordering in the same way, so this code is duplicated (https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/limit.scala#L159-L175 ). We can move the output partitioning and ordering into their parent trait, `BaseLimitExec`. This is doable because `BaseLimitExec` has no other child classes. This is a minor code refactoring.

### Why are the changes needed?

Clean up the code a little bit. Better readability.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pure refactoring. Rely on existing unit tests.

Closes#31950 from c21/limit-cleanup.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

SPARK-32160 added a config (`EXECUTOR_ALLOW_SPARK_CONTEXT`) that controls whether a `SparkContext` may be created in executors; its default value is `false`.

`ExternalAppendOnlyUnsafeRowArrayBenchmark` fails when `EXECUTOR_ALLOW_SPARK_CONTEXT` has the default value, because the `ExternalAppendOnlyUnsafeRowArrayBenchmark#withFakeTaskContext` method tries to create a `SparkContext` manually on the executor side.

So the main change of this PR is to set `EXECUTOR_ALLOW_SPARK_CONTEXT` to `true` so that `ExternalAppendOnlyUnsafeRowArrayBenchmark` runs successfully.

### Why are the changes needed?

Bug fix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual test:

```
bin/spark-submit --class org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark --jars spark-core_2.12-3.2.0-SNAPSHOT-tests.jar spark-sql_2.12-3.2.0-SNAPSHOT-tests.jar
```

**Before**

```
Exception in thread "main" java.lang.IllegalStateException: SparkContext should only be created and accessed on the driver.
    at org.apache.spark.SparkContext$.org$apache$spark$SparkContext$$assertOnDriver(SparkContext.scala:2679)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:89)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:137)
    at org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark$.withFakeTaskContext(ExternalAppendOnlyUnsafeRowArrayBenchmark.scala:52)
    at org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark$.testAgainstRawArrayBuffer(ExternalAppendOnlyUnsafeRowArrayBenchmark.scala:119)
    at org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark$.$anonfun$runBenchmarkSuite$1(ExternalAppendOnlyUnsafeRowArrayBenchmark.scala:189)
    at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)
    at org.apache.spark.benchmark.BenchmarkBase.runBenchmark(BenchmarkBase.scala:40)
    at org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark$.runBenchmarkSuite(ExternalAppendOnlyUnsafeRowArrayBenchmark.scala:186)
    at org.apache.spark.benchmark.BenchmarkBase.main(BenchmarkBase.scala:58)
    at org.apache.spark.sql.execution.ExternalAppendOnlyUnsafeRowArrayBenchmark.main(ExternalAppendOnlyUnsafeRowArrayBenchmark.scala)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)
    at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:951)
    at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)
    at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)
    at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1030)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1039)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
```

**After**

`ExternalAppendOnlyUnsafeRowArrayBenchmark` runs successfully.

Closes#31939 from LuciferYang/SPARK-34832.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR fixes an issue where `col()`, `$"<name>"` and `df("name")` don't handle quoted column names like ``` `a``b.c` ``` properly.

For example, suppose we have the following DataFrame.

```
val df1 = spark.sql("SELECT 'col1' AS `a``b.c`")
```

For the DataFrame, this query is successfully executed.

```
scala> df1.selectExpr("`a``b.c`").show
+-----+
|a`b.c|
+-----+
| col1|
+-----+
```

But the following query will fail because ``` df1("`a``b.c`") ``` throws an exception.

```
scala> df1.select(df1("`a``b.c`")).show
org.apache.spark.sql.AnalysisException: syntax error in attribute name: `a``b.c`;
  at org.apache.spark.sql.catalyst.analysis.UnresolvedAttribute$.e$1(unresolved.scala:152)
  at org.apache.spark.sql.catalyst.analysis.UnresolvedAttribute$.parseAttributeName(unresolved.scala:162)
  at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.resolveQuoted(LogicalPlan.scala:121)
  at org.apache.spark.sql.Dataset.resolve(Dataset.scala:221)
  at org.apache.spark.sql.Dataset.col(Dataset.scala:1274)
  at org.apache.spark.sql.Dataset.apply(Dataset.scala:1241)
  ... 49 elided
```
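
To make the expected parsing behavior concrete, here is a simplified stand-alone parser written for this description. It is not the patched `parseAttributeName`; it assumes only the quoting rules visible in the example (parts separated by `.`, a part may be wrapped in backticks, and a doubled backtick inside quotes stands for one literal backtick), and it does not try to reject every malformed input such as empty name parts.

```scala
// Simplified, hypothetical parser; not Spark's UnresolvedAttribute.parseAttributeName.
object AttrNameSketch {
  def parse(name: String): Either[String, List[String]] = {
    val parts = scala.collection.mutable.ListBuffer.empty[String]
    val cur = new StringBuilder
    var inQuote = false
    var i = 0
    while (i < name.length) {
      val c = name.charAt(i)
      if (inQuote) {
        if (c != '`') {
          cur.append(c) // dots inside quotes are literal
        } else if (i + 1 < name.length && name.charAt(i + 1) == '`') {
          cur.append('`') // doubled backtick is an escaped backtick
          i += 1
        } else {
          inQuote = false // closing quote must end the name part
          if (i + 1 < name.length && name.charAt(i + 1) != '.') {
            return Left(s"syntax error in attribute name: $name")
          }
        }
      } else if (c == '`') {
        // an opening quote is only allowed at the start of a name part
        if (cur.length > 0) return Left(s"syntax error in attribute name: $name")
        inQuote = true
      } else if (c == '.') {
        parts += cur.toString
        cur.clear()
      } else {
        cur.append(c)
      }
      i += 1
    }
    if (inQuote) Left(s"syntax error in attribute name: $name")
    else {
      parts += cur.toString
      Right(parts.toList)
    }
  }
}
```

With these rules the quoted name from the example resolves to a single part containing a backtick and a dot, rather than raising a syntax error.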

### Why are the changes needed?

It's a bug.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New tests.

Closes#31854 from sarutak/fix-parseAttributeName.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

SPARK-34842 (#31012) has a typo in the type of `date_dim.d_quarter_name` in the TPCDS schema (`TPCDSBase`). This PR replaces `CHAR(1)` with `CHAR(6)`. This fix comes from p28 in [the TPCDS official doc](http://www.tpc.org/tpc_documents_current_versions/pdf/tpc-ds_v2.9.0.pdf).

### Why are the changes needed?

Bugfix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

N/A

Closes#31943 from maropu/SPARK-34083-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1. Add new expression `MultiplyYMInterval` which multiplies a `YearMonthIntervalType` expression by a `NumericType` expression including ByteType, ShortType, IntegerType, LongType, FloatType, DoubleType, DecimalType.

2. Extend binary arithmetic rules to support `numeric * year-month interval` and `year-month interval * numeric`.

### Why are the changes needed?

To conform to the ANSI SQL standard, which requires such operations over year-month intervals:

<img width="667" alt="Screenshot 2021-03-22 at 16 33 16" src="https://user-images.githubusercontent.com/1580697/111997810-77d1eb80-8b2c-11eb-951d-e43911d9c5db.png">

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

By running new tests:

```
$ build/sbt "test:testOnly *IntervalExpressionsSuite"
$ build/sbt "test:testOnly *ColumnExpressionSuite"
```

Closes#31929 from MaxGekk/interval-mul-div.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

forward-port https://github.com/apache/spark/pull/31811 to master

### What changes were proposed in this pull request?

For permanent views (and the new SQL temp view in Spark 3.1), we store the view SQL text and re-parse/analyze the view SQL text when reading the view. In the case of `SELECT * FROM ...`, we want to avoid view schema change (e.g. the referenced table changes its schema) and will record the view query output column names when creating the view, so that when reading the view we can add a `SELECT recorded_column_names FROM ...` to retain the original view query schema.

In Spark 3.1 and before, the final SELECT is added after the analysis phase: https://github.com/apache/spark/blob/branch-3.1/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/view.scala#L67

If the view query has duplicated output column names, we always pick the first column when reading a view. A simple repro:

```
scala> sql("create view c(x, y) as select 1 a, 2 a")
res0: org.apache.spark.sql.DataFrame = []

scala> sql("select * from c").show
+---+---+
|  x|  y|
+---+---+
|  1|  1|
+---+---+
```

In the master branch, we fail at view reading time due to b891862fb6, which adds the final SELECT during analysis, so that the query fails with `Reference 'a' is ambiguous`.

This PR proposes to resolve the view query output column names from the matching attributes by ordinal.

For example, with `create view c(x, y) as select 1 a, 2 a`, the view query output column names are `[a, a]`. When reading the view, there are 2 matching attributes (e.g. `[a#1, a#2]`) and we can simply match them by ordinal.

A negative example is

```
create table t(a int)
create view v as select *, 1 as col from t
replace table t(a int, col int)
```

When reading the view, the view query output column names are `[a, col]`, and there are two matching attributes of `col`, and we should fail the query. See the tests for details.
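
The positive and negative examples can be expressed as a small matching routine. This is a plain-Scala sketch rather than the catalog code: `Attr` and `resolve` are hypothetical names, and the rule it encodes (the number of output attributes with a given name must equal the number of times that name was recorded, and the i-th recorded occurrence picks the i-th such attribute) is this sketch's reading of the description above.

```scala
// Hypothetical model of resolving recorded view column names by ordinal.
object ViewOrdinalSketch {
  final case class Attr(name: String, id: Int)

  def resolve(recorded: Seq[String], output: Seq[Attr]): Either[String, Seq[Attr]] = {
    // How many times each name was recorded when the view was created.
    val expectedCounts = recorded.groupBy(identity).map { case (n, ns) => n -> ns.length }

    // Fail when the current query output has a different number of candidates.
    val mismatched = expectedCounts.collectFirst {
      case (name, expected) if output.count(_.name == name) != expected => name
    }

    mismatched match {
      case Some(name) =>
        Left(s"cannot resolve view column '$name': unexpected number of candidates")
      case None =>
        Right(recorded.zipWithIndex.map { case (name, idx) =>
          // The ordinal is the number of earlier occurrences of the same name.
          val ordinal = recorded.take(idx).count(_ == name)
          output.filter(_.name == name).apply(ordinal)
        })
    }
  }
}
```

For the view `c`, the recorded names `[a, a]` map to `a#1` and `a#2` in order; for the view `v` after the table is replaced, `col` has two candidates where one was recorded, so resolution fails.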

### Why are the changes needed?

bug fix

### Does this PR introduce _any_ user-facing change?

yes

### How was this patch tested?

new test

Closes#31930 from cloud-fan/view2.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This bug was introduced by SPARK-30428 at Apache Spark 3.0.0.

This PR fixes `FileScan.equals()`.

### Why are the changes needed?

- Without this fix `FileScan.equals` doesn't take `fileIndex` and `readSchema` into account.

- Partition filters and data filters added to `FileScan` (in #27112 and #27157) cause the canonicalized forms of some `BatchScanExec` nodes not to match, which prevents some reuse opportunities.
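
As a rough picture of what a complete equality check has to cover, consider the following stand-alone sketch. `ScanSketch` is a hypothetical stand-in, not `FileScan`; the field types are simplified placeholders, and modelling the filters as sets (so that their order does not matter) is an assumption of the sketch.

```scala
// Hypothetical stand-in for a file scan; not Spark's FileScan.
object ScanEqualitySketch {
  final class ScanSketch(
      val fileIndex: String,              // which files are scanned
      val readSchema: Seq[String],        // which columns are read
      val partitionFilters: Set[String],  // order-insensitive by construction
      val dataFilters: Set[String]) {

    // Two scans are interchangeable only if all four components agree.
    override def equals(other: Any): Boolean = other match {
      case that: ScanSketch =>
        fileIndex == that.fileIndex &&
          readSchema == that.readSchema &&
          partitionFilters == that.partitionFilters &&
          dataFilters == that.dataFilters
      case _ => false
    }

    // Keep hashCode consistent with equals.
    override def hashCode(): Int =
      (fileIndex, readSchema, partitionFilters, dataFilters).hashCode()
  }
}
```

Leaving `fileIndex` or `readSchema` out of such a check would let two scans over different files or columns compare equal, which is the kind of incorrect reuse described above.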

### Does this PR introduce _any_ user-facing change?

Yes. Before this fix, incorrect reuse of `FileScan`, and therefore of `BatchScanExec`, could have happened, causing correctness issues.

### How was this patch tested?

Added new UTs.

Closes#31848 from peter-toth/SPARK-34756-fix-filescan-equality-check.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Move the registration of `ExecutionListenerBus` (with both `ListenerBus` and `ContextCleaner`) into the class itself.

Also includes a minor change that moves `registerSparkListenerForCleanup` to a better place.

### Why are the changes needed?

improve code

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass existing tests.

Closes#31919 from Ngone51/SPARK-34087-followup.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch proposes to disable fetching shuffle blocks in batch when IO encryption is enabled. Adaptive Query Execution fetches contiguous shuffle blocks for the same map task in batch to reduce IO and improve performance. However, we found that batch fetching is incompatible with IO encryption.

### Why are the changes needed?

Before this patch, with `spark.io.encryption.enabled` set to true, running queries whose partitions were coalesced by AQE could produce the following error message:

```
14:05:52.638 WARN org.apache.spark.scheduler.TaskSetManager: Lost task 1.0 in stage 2.0 (TID 3) (11.240.37.88 executor driver): FetchFailed(BlockManagerId(driver, 11.240.37.88, 63574, None), shuffleId=0, mapIndex=0, mapId=0, reduceId=2, message=
org.apache.spark.shuffle.FetchFailedException: Stream is corrupted
    at org.apache.spark.storage.ShuffleBlockFetcherIterator.throwFetchFailedException(ShuffleBlockFetcherIterator.scala:772)
    at org.apache.spark.storage.BufferReleasingInputStream.read(ShuffleBlockFetcherIterator.scala:845)
    at java.io.BufferedInputStream.fill(BufferedInputStream.java:246)
    at java.io.BufferedInputStream.read(BufferedInputStream.java:265)
    at java.io.DataInputStream.readInt(DataInputStream.java:387)
    at org.apache.spark.sql.execution.UnsafeRowSerializerInstance$$anon$2$$anon$3.readSize(UnsafeRowSerializer.scala:113)
    at org.apache.spark.sql.execution.UnsafeRowSerializerInstance$$anon$2$$anon$3.next(UnsafeRowSerializer.scala:129)
    at org.apache.spark.sql.execution.UnsafeRowSerializerInstance$$anon$2$$anon$3.next(UnsafeRowSerializer.scala:110)
    at scala.collection.Iterator$$anon$11.next(Iterator.scala:494)
    at scala.collection.Iterator$$anon$10.next(Iterator.scala:459)
    at org.apache.spark.util.CompletionIterator.next(CompletionIterator.scala:29)
    at org.apache.spark.InterruptibleIterator.next(InterruptibleIterator.scala:40)
    at scala.collection.Iterator$$anon$10.next(Iterator.scala:459)
    at org.apache.spark.sql.execution.SparkPlan.$anonfun$getByteArrayRdd$1(SparkPlan.scala:345)
    at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2(RDD.scala:898)
    at org.apache.spark.rdd.RDD.$anonfun$mapPartitionsInternal$2$adapted(RDD.scala:898)
    at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)
    at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:373)
    at org.apache.spark.rdd.RDD.iterator(RDD.scala:337)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:131)
    at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:498)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1437)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:501)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.io.IOException: Stream is corrupted
    at net.jpountz.lz4.LZ4BlockInputStream.refill(LZ4BlockInputStream.java:200)
    at net.jpountz.lz4.LZ4BlockInputStream.refill(LZ4BlockInputStream.java:226)
    at net.jpountz.lz4.LZ4BlockInputStream.read(LZ4BlockInputStream.java:157)
    at org.apache.spark.storage.BufferReleasingInputStream.read(ShuffleBlockFetcherIterator.scala:841)
    ... 25 more
)
```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

New tests.

Closes#31898 from hezuojiao/fetch_shuffle_in_batch.

Authored-by: hezuojiao <hezuojiao@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Marked `RowNumberLike` and `RankLike` as not-nullable.

### Why are the changes needed?

`RowNumberLike` and `RankLike` SQL expressions never return null values. Marking them as non-nullable can bring some performance benefits, because some optimizer rules apply only to non-nullable expressions.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Did not find any existing tests on the nullability of aggregate functions.

Plan stability suite partially covers this.

Closes#31924 from tanelk/SPARK-34812_nullability.

Authored-by: tanel.kiis@gmail.com <tanel.kiis@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Now that all the temporary views are wrapped with `TemporaryViewRelation`(#31273, #31652, and #31825), this PR proposes to update `SessionCatalog`'s APIs for temporary views to take or return more concrete types.

APIs that will take `TemporaryViewRelation` instead of `LogicalPlan`:

```
createTempView, createGlobalTempView, alterTempViewDefinition
```

APIs that will return `TemporaryViewRelation` instead of `LogicalPlan`:

```
getRawTempView, getRawGlobalTempView
```

APIs that will return `View` instead of `LogicalPlan`:

```
getTempView, getGlobalTempView, lookupTempView
```

### Why are the changes needed?

Internal refactoring to work with more concrete types.

### Does this PR introduce _any_ user-facing change?

No, this is internal refactoring.

### How was this patch tested?

Updated existing tests affected by the refactoring.

Closes#31906 from imback82/use_temporary_view_relation.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes `HybridRowQueue` to respect the configured memory mode.

In addition, this PR refactors the constructor of `MemoryConsumer` to accept the memory mode explicitly.

### Why are the changes needed?

`HybridRowQueue` supports both onHeap and offHeap manipulation. But it inherited the wrong `MemoryConsumer` constructor, which hard-coded the memory mode to `onHeap`.

### Does this PR introduce _any_ user-facing change?

No. (Maybe yes in some cases: a job that could not complete before may complete successfully after the fix, because `HybridRowQueue` is now able to spill under offHeap mode.)

### How was this patch tested?

Updated the existing test to make it test both offHeap and onHeap modes.

Closes#31152 from Ngone51/fix-MemoryConsumer-memorymode.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR updates the `CSVBenchmark` results, especially since a fix such as https://github.com/apache/spark/pull/31858 could potentially improve the performance.

### Why are the changes needed?

To have the updated benchmark results.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually ran the benchmark

Closes#31917 from HyukjinKwon/SPARK-34815.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to optimize the WAL commit phase via the following changes:

* cache offset log to avoid FS get operation per batch

* just directly delete instead of employing FS list operation on purge

### Why are the changes needed?

There are inefficiencies in the WAL commit phase that can be easily optimized by using a small amount of driver memory.

1. To provide the offset metadata to the source side (via `source.commit()`), we read the offset metadata for the previous batch from the file system, which was probably written by this driver in previous batches. Caching it in driver memory would avoid the get operation.

2. Spark calls purge against the offset log and commit log per batch, which invokes a list operation. If the previous batch purged successfully, the current batch only needs to check one batch, which can be done with a direct delete operation instead of a list operation.
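
The two optimizations can be modelled without any file system. The sketch below is an illustration only: `FakeFs`, `CachedLog` and `purgeDirect` are hypothetical names, the in-memory map stands in for the remote storage, and the cache size of 2 mirrors the size mentioned in the testing notes rather than a confirmed constant.

```scala
import scala.collection.mutable

// Hypothetical model of a cached metadata log; not Spark's HDFSMetadataLog.
object OffsetLogSketch {
  // Stand-in for remote storage that counts the expensive operations.
  final class FakeFs {
    val files = mutable.Map.empty[Long, String]
    var getCalls = 0
    var listCalls = 0
    def put(id: Long, value: String): Unit = files(id) = value
    def get(id: Long): Option[String] = { getCalls += 1; files.get(id) }
    def list(): Seq[Long] = { listCalls += 1; files.keys.toSeq.sorted }
    def delete(id: Long): Unit = { files.remove(id); () }
  }

  final class CachedLog(fs: FakeFs, cacheSize: Int = 2) {
    // Insertion-ordered map so the oldest cached batch can be evicted first.
    private val cache = mutable.LinkedHashMap.empty[Long, String]

    def add(batchId: Long, value: String): Unit = {
      fs.put(batchId, value)
      cache(batchId) = value
      while (cache.size > cacheSize) cache.remove(cache.head._1)
    }

    // Serve recent batches from driver memory; fall back to storage otherwise.
    def get(batchId: Long): Option[String] =
      cache.get(batchId).orElse(fs.get(batchId))

    // Assumes every earlier batch was already purged, so only the batch just
    // below the threshold can still exist and no listing is required.
    def purgeDirect(thresholdBatchId: Long): Unit = fs.delete(thresholdBatchId - 1)
  }
}
```

In this model, reading the previous batch right after writing it never touches storage, and purging issues a single delete with no list call.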

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually tested with additional debug log. (Verified that cache is used, cache keeps the size as 2, only one delete call is used instead of list call)

Did some experiment with simple rate to console query. (NOTE: wasn't done with master branch - tested against Spark 2.4.x, but WAL commit phase hasn't been changed AFAIK during these versions)

AWS S3 + S3 guard:

> before the patch

<img width="1075" alt="aws-before" src="https://user-images.githubusercontent.com/1317309/107108721-6cc54380-687d-11eb-8f10-b906b9d58397.png">

> after the patch

<img width="1071" alt="aws-after" src="https://user-images.githubusercontent.com/1317309/107108724-7189f780-687d-11eb-88da-26912ac15c85.png">

Azure:

> before the patch

<img width="1074" alt="azure-before" src="https://user-images.githubusercontent.com/1317309/107108726-75b61500-687d-11eb-8c06-9048fa10ff9a.png">

> after the patch

<img width="1069" alt="azure-after" src="https://user-images.githubusercontent.com/1317309/107108729-79e23280-687d-11eb-8d97-e7f3aeec51be.png">

Closes#31495 from HeartSaVioR/SPARK-34383.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

### What changes were proposed in this pull request?

- Create a new rule `ResolveStreamWrite` for all analysis logic for streaming write.

- Add corresponding logical plans `WriteToStreamStatement` and `WriteToStream`.

### Why are the changes needed?

Currently, the analysis logic for streaming write is mixed in StreamingQueryManager. If we create a specific analyzer rule and separated logical plans, it should be helpful for further extension.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes#31842 from xuanyuanking/SPARK-34748.

Authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>