## What changes were proposed in this pull request?

This takes over the original PR at #22019. The original proposal was to return null for float and double types. A later, more reasonable proposal was to disallow empty strings. This patch adds logic to throw an exception when an empty string is found for a non-string type.
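The rule can be illustrated with a minimal, self-contained sketch. The `FieldType` hierarchy and `convert` function below are hypothetical names invented for illustration and are not the parser code touched by this patch; the sketch only assumes that empty strings stay valid for string fields and are rejected for everything else.

```scala
// Hypothetical, simplified model of converting one raw string token to a typed value.
object EmptyStringCheck {
  sealed trait FieldType
  case object StringField extends FieldType
  case object IntField extends FieldType
  case object DoubleField extends FieldType

  // Empty strings are valid only for string fields; every other type rejects them.
  def convert(raw: String, fieldType: FieldType): Any = fieldType match {
    case StringField => raw
    case other if raw.isEmpty =>
      throw new IllegalArgumentException(s"Empty string cannot be converted to $other")
    case IntField => raw.toInt
    case DoubleField => raw.toDouble
  }
}
```

Throwing, rather than silently producing null, makes malformed input visible to the caller.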

## How was this patch tested?

Added test.

Closes #22787 from viirya/SPARK-25040.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This reduces the test time for ContinuousStressSuite from 8 mins 13 sec to 43 seconds.

The approach is to reduce the number of triggers and epochs to wait for, and to reduce the expected rows accordingly.

## How was this patch tested?

Existing tests.

Closes #22662 from viirya/SPARK-25627.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Fix the following issues in `PythonWorkerFactory`:

1. MonitorThread.run uses a wrong lock.

2. `createSimpleWorker` misses `synchronized` when updating `simpleWorkers`.

Other changes are just to improve the code style to make the thread-safe contract clear.
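The "wrong lock" pitfall can be sketched as follows. `WorkerRegistry` and `Monitor` are hypothetical stand-ins, not the actual `PythonWorkerFactory` code; the sketch assumes shared state that must be guarded by the enclosing object's monitor.

```scala
// Illustrative only: a hypothetical registry whose inner monitor must share the outer lock.
class WorkerRegistry {
  private var workers = Set.empty[String] // guarded by WorkerRegistry.this

  def add(id: String): Unit = synchronized { workers += id }
  def snapshot: Set[String] = synchronized { workers }

  class Monitor {
    // A bare `synchronized { ... }` here would lock the Monitor instance,
    // which protects nothing. Qualify it to lock the enclosing registry.
    def removeDead(isAlive: String => Boolean): Unit = WorkerRegistry.this.synchronized {
      workers = workers.filter(isAlive)
    }
  }
}
```

Inside an inner class, an unqualified `synchronized` refers to the inner instance, so qualifying the receiver is what keeps every access to the shared state under the same lock.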

## How was this patch tested?

Jenkins

Closes #22770 from zsxwing/pwf.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

## What changes were proposed in this pull request?

This is a follow-up of #21601: `StreamFileInputFormat` and `WholeTextFileInputFormat` have the same problem.

`Minimum split size pernode 5123456 cannot be larger than maximum split size 4194304

java.io.IOException: Minimum split size pernode 5123456 cannot be larger than maximum split size 4194304

at org.apache.hadoop.mapreduce.lib.input.CombineFileInputFormat.getSplits(CombineFileInputFormat.java:201)

at org.apache.spark.rdd.BinaryFileRDD.getPartitions(BinaryFileRDD.scala:52)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:254)

at org.apache.spark.rdd.RDD$$anonfun$partitions$2.apply(RDD.scala:252)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.rdd.RDD.partitions(RDD.scala:252)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2138)`
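One way to avoid this exception is to lower the per-node and per-rack minimums whenever they exceed the maximum split size. The sketch below only illustrates that idea; `SplitSizes.clampMinimums` is a hypothetical helper, not a Hadoop or Spark API, and it assumes `maxSplitSize` is positive.

```scala
object SplitSizes {
  // Returns (minPerNode, minPerRack), lowered so that neither exceeds maxSplitSize.
  // Assumes maxSplitSize > 0.
  def clampMinimums(maxSplitSize: Long, minPerNode: Long, minPerRack: Long): (Long, Long) =
    (math.min(minPerNode, maxSplitSize), math.min(minPerRack, maxSplitSize))
}
```

With the values from the trace above, a per-node minimum of 5123456 would be lowered to the maximum of 4194304.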

## How was this patch tested?

Added a unit test

Closes #22725 from 10110346/maxSplitSize_node_rack.

Authored-by: liuxian <liu.xian3@zte.com.cn>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

Add an R API for PrefixSpan.

## How was this patch tested?

Added a test in test_mllib_fpm.R.

Author: Huaxin Gao <huaxing@us.ibm.com>

Closes #21710 from huaxingao/spark-24207.

## What changes were proposed in this pull request?

Currently, the `pageNavigation()` method in PagedTable.scala does not render pagination when there is only one page.

With this change, pagination is rendered even when there is only one page.

## How was this patch tested?

Tested with the Spark web UI and the History page in a local Spark setup.

Author: shivusondur <shivusondur@gmail.com>

Closes #22668 from shivusondur/pagination.

## What changes were proposed in this pull request?

`removeExecutorFromSpark` tries to fetch the reason the executor exited from Kubernetes, which may be useful if the pod was OOMKilled. However, the code previously deleted the pod from Kubernetes first which made retrieving this status impossible. This fixes the ordering.

On a separate but related note, it would be nice to wait some time before removing the pod - to let the operator examine logs and such.

## How was this patch tested?

Running on my local cluster.

Author: Mike Kaplinskiy <mike.kaplinskiy@gmail.com>

Closes #22720 from mikekap/patch-1.

## What changes were proposed in this pull request?

Upgrade netty dependency from 4.1.17 to 4.1.30.

Explanation:

Currently, sending a `ChunkedByteBuffer` with more than 16 chunks over the network triggers a "merge" of all the blocks into one big transient array, which is then sent over the network. This is problematic because the total memory for all chunks can be high (2GB); merging them triggers a 2GB allocation, which can cause OOM errors.

Upgrading Netty avoids this issue: https://github.com/netty/netty/pull/8038

## How was this patch tested?

Manual tests in some spark jobs.

Closes #22765 from lipzhu/SPARK-25757.

Authored-by: Zhu, Lipeng <lipzhu@ebay.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

Currently, there are some tests testing function descriptions:

```bash

$ grep -ir "describe function" sql/core/src/test/resources/sql-tests/inputs

sql/core/src/test/resources/sql-tests/inputs/json-functions.sql:describe function to_json;

sql/core/src/test/resources/sql-tests/inputs/json-functions.sql:describe function extended to_json;

sql/core/src/test/resources/sql-tests/inputs/json-functions.sql:describe function from_json;

sql/core/src/test/resources/sql-tests/inputs/json-functions.sql:describe function extended from_json;

```

There does not seem to be much point in testing them, since we are not going to test the documentation itself.

The `DESCRIBE FUNCTION` functionality itself is already tested elsewhere.

See the test failures in https://github.com/apache/spark/pull/18749 (where I added examples to function descriptions).

We had better remove those tests so that people don't add similar ones to the SQL tests.

## How was this patch tested?

Manual.

Closes #22776 from HyukjinKwon/SPARK-25779.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

`needsUnsafeRowConversion` is used in 2 places:

1. `ColumnarBatchScan.produceRows`

2. `FileSourceScanExec.doExecute`

When we hit `ColumnarBatchScan.produceRows`, it means whole stage codegen is on but the vectorized reader is off. The vectorized reader can be off for several reasons:

1. the file format doesn't have a vectorized reader (json, csv, etc.)

2. the vectorized reader config is off

3. the schema is not supported

In any case, when the vectorized reader is off, the file format reader always returns unsafe rows, and other `ColumnarBatchScan` implementations also always return unsafe rows, so `ColumnarBatchScan.needsUnsafeRowConversion` is not needed.

When we hit `FileSourceScanExec.doExecute`, it means whole stage codegen is off. In this case, we need `needsUnsafeRowConversion` to convert `ColumnarRow` to `UnsafeRow` if the file format reader returns batches.

This PR removes `ColumnarBatchScan.needsUnsafeRowConversion` and keeps this flag only in `FileSourceScanExec`.

## How was this patch tested?

existing tests

Closes #22750 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Refactor `WideSchemaBenchmark` to use main method.

1. Use `spark-submit`:

```console

bin/spark-submit --class org.apache.spark.sql.execution.benchmark.WideSchemaBenchmark --jars ./core/target/spark-core_2.11-3.0.0-SNAPSHOT-tests.jar ./sql/core/target/spark-sql_2.11-3.0.0-SNAPSHOT-tests.jar

```

2. Generate benchmark result:

```console

SPARK_GENERATE_BENCHMARK_FILES=1 build/sbt "sql/test:runMain org.apache.spark.sql.execution.benchmark.WideSchemaBenchmark"

```

## How was this patch tested?

manual tests

Closes #22501 from wangyum/SPARK-25492.

Lead-authored-by: Yuming Wang <yumwang@ebay.com>

Co-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

PIP installation requires packaging the bin scripts together.

https://github.com/apache/spark/blob/master/python/setup.py#L71

The recent fix at ec96d34e74 introduced a non-ASCII character (a non-breaking space, I guess).

This is usually not a problem, but it looks like Jenkins's default encoding is `ascii`, and while the script is being copied there appears to be an implicit conversion between bytes and strings, which uses the default encoding.

https://github.com/pypa/setuptools/blob/v40.4.3/setuptools/command/develop.py#L185-L189

## How was this patch tested?

Jenkins

Closes #22782 from HyukjinKwon/pip-failure-fix.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Fix a minor error in the "sketch of pregel implementation" code in the GraphX guide.

The error being fixed relates to `[SPARK-12995][GraphX] Remove deprecate APIs from Pregel`.

## How was this patch tested?

N/A

Closes #22780 from WeichenXu123/minor_doc_update1.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Since the community has not tested Java 9 through 11 so far, fix the document to describe Java 8 only.

## How was this patch tested?

N/A (This is a document only change.)

Closes #22781 from dongjoon-hyun/SPARK-JDK-DOC.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Fix some broken links in the new document. I have clicked through all the links. Hopefully I haven't missed any :-)

## How was this patch tested?

Built using jekyll and verified the links.

Closes #22772 from dilipbiswal/doc_check.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This PR adds `prettyNames` for `from_json`, `to_json`, `from_csv`, and `schema_of_json` so that appropriate names are used.

## How was this patch tested?

Unit tests

Closes #22773 from HyukjinKwon/minor-prettyNames.

Authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Adds error checking and handling to `docker` invocations so that the script terminates early if any of them fails. This avoids subtle errors: for example, if the base image fails to build, the Python/R images can end up being built from outdated base images. It also makes it more explicit to the user that something went wrong.

Additionally, the provided `Dockerfiles` assume that Spark was first built locally or is a runnable distribution, but the script did not previously enforce this. The script now checks the JARs folder to ensure that Spark JARs actually exist and, if not, aborts early with a reminder that Spark must be built locally first.

## How was this patch tested?

- Tested with a `mvn clean` working copy and verified that the script now terminates early

- Tested with bad `Dockerfiles` that fail to build to see that early termination occurred

Closes #22748 from rvesse/SPARK-25745.

Authored-by: Rob Vesse <rvesse@dotnetrdf.org>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

JVMs don't let you allocate arrays of length exactly Int.MaxValue, so leave a little extra room. This is necessary when reading blocks >2GB off the network (for remote reads or for cache replication).
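A minimal sketch of the "leave a little extra room" idea follows. The headroom of 15 is an assumption chosen for illustration, since the exact limit is JVM-specific, and `ChunkPlanner` is a hypothetical helper rather than the code changed by this patch.

```scala
object ChunkPlanner {
  // Assumed safety margin; the exact headroom a JVM needs is implementation-specific.
  val MaxArrayLength: Int = Int.MaxValue - 15

  // Splits a total byte count into per-array lengths, none above MaxArrayLength.
  def chunkLengths(totalBytes: Long): Seq[Int] = {
    require(totalBytes >= 0, "totalBytes must be non-negative")
    val full = (totalBytes / MaxArrayLength).toInt
    val rest = (totalBytes % MaxArrayLength).toInt
    Seq.fill(full)(MaxArrayLength) ++ (if (rest > 0) Seq(rest) else Nil)
  }
}
```

A block of exactly `Int.MaxValue` bytes is then planned as two arrays instead of one allocation that the JVM would reject.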

Unit tests via Jenkins; also ran a test with blocks over 2GB on a cluster.

Closes #22705 from squito/SPARK-25704.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

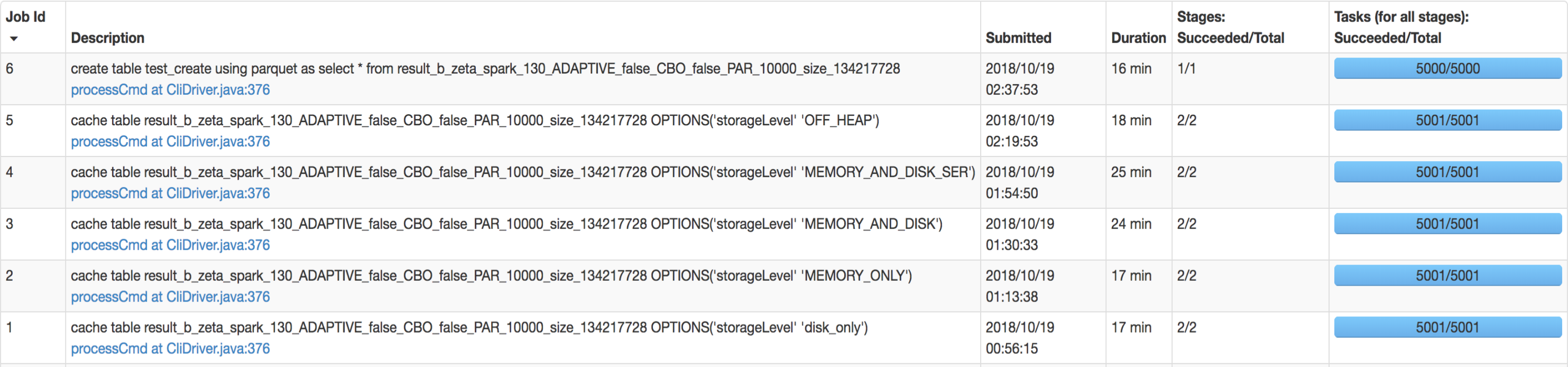

## What changes were proposed in this pull request?

The SQL interface now supports specifying a `StorageLevel` when caching a table. The syntax is:

```sql

CACHE TABLE tableName OPTIONS('storageLevel' 'DISK_ONLY');

```

All supported `StorageLevel` values are listed here:

eefdf9f9dd/core/src/main/scala/org/apache/spark/storage/StorageLevel.scala (L172-L183)

## How was this patch tested?

Unit tests and manual tests.

Manual test configuration:

```

--executor-memory 15G --executor-cores 5 --num-executors 50

```

Data:

Input Size / Records: 1037.7 GB / 11732805788

Result:

Closes #22263 from wangyum/SPARK-25269.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Without this PR, some UDAFs such as `GenericUDAFPercentileApprox` can throw an exception because they expect a constant parameter (object inspector) as a particular argument.

The exception is thrown because `toPrettySQL` call in `ResolveAliases` analyzer rule transforms a `Literal` parameter to a `PrettyAttribute` which is then transformed to an `ObjectInspector` instead of a `ConstantObjectInspector`.

The exception comes from the `getEvaluator` method of `GenericUDAFPercentileApprox`, which should not be called at all during the `toPrettySQL` transformation. It is called because of the non-lazy fields in `HiveUDAFFunction`.

This PR makes all fields of `HiveUDAFFunction` lazy.
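The difference between eager and lazy fields can be shown with a small stand-alone sketch. `resolve`, `EagerFunction`, and `LazyFunction` are hypothetical names that only mimic the shape of the problem; they are not the `HiveUDAFFunction` code.

```scala
object LazyFields {
  // Counts how often the (hypothetical) expensive resolution step runs.
  var resolutions = 0
  private def resolve(): String = { resolutions += 1; "evaluator" }

  // Eager field: resolve() runs as soon as the object is constructed or copied.
  class EagerFunction { val evaluator: String = resolve() }

  // Lazy field: resolve() runs only when `evaluator` is first accessed.
  class LazyFunction { lazy val evaluator: String = resolve() }
}
```

With lazy fields, a transformation that merely constructs or copies the expression no longer triggers the resolution step as a side effect.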

## How was this patch tested?

Added a new UT.

Closes #22766 from peter-toth/SPARK-25768.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up PR for #22259. The extra field added to `ScalaUDF` by the original PR was declared optional but should in fact be required; otherwise callers of `ScalaUDF`'s constructor could ignore this new field and cause the result to be incorrect. This PR makes the new field required and changes its name to `handleNullForInputs`.

#22259 breaks the previous behavior for null-handling of primitive-type input parameters. For example, for `val f = udf({(x: Int, y: Any) => x})`, `f(null, "str")` should return `null` but would return `0` after #22259. In this PR, all UDF methods except `def udf(f: AnyRef, dataType: DataType): UserDefinedFunction` have been restored with the original behavior. The only exception is documented in the Spark SQL migration guide.

In addition, now that we have this extra field indicating if a null-test should be applied on the corresponding input value, we can also make use of this flag to avoid the rule `HandleNullInputsForUDF` being applied infinitely.
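The null-handling behavior for primitive inputs can be sketched without Spark. `NullSafeUdf.nullSafe` below is a hypothetical wrapper, not the `ScalaUDF` or `HandleNullInputsForUDF` implementation; it only shows why a null check must come before unboxing.

```scala
object NullSafeUdf {
  // Wraps a function over a primitive Int so that a null (boxed) input yields null
  // instead of being unboxed to the default value 0.
  def nullSafe(f: Int => Int): java.lang.Integer => java.lang.Integer =
    boxed => if (boxed == null) null else java.lang.Integer.valueOf(f(boxed.intValue))
}
```

Without the check, a null input would reach the primitive parameter as `0`, which is the incorrect result described above.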

## How was this patch tested?

Added UT in UDFSuite

Passed affected existing UTs:

AnalysisSuite

UDFSuite

Closes #22732 from maryannxue/spark-25044-followup.

Lead-authored-by: maryannxue <maryannxue@apache.org>

Co-authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This allows an implementer of Spark Session Extensions to utilize a method "injectFunction" which will add a new function to the default Spark Session Catalogue.

## What changes were proposed in this pull request?

Adds a new function to `SparkSessionExtensions`:

`def injectFunction(functionDescription: FunctionDescription)`

where `FunctionDescription` is a new type:

`type FunctionDescription = (FunctionIdentifier, FunctionBuilder)`

The functions are loaded in BaseSessionBuilder when the function registry does not have a parent function registry to get loaded from.

## How was this patch tested?

New unit tests are added for the extension in SparkSessionExtensionSuite

Closes #22576 from RussellSpitzer/SPARK-25560.

Authored-by: Russell Spitzer <Russell.Spitzer@gmail.com>

Signed-off-by: Herman van Hovell <hvanhovell@databricks.com>

## What changes were proposed in this pull request?

CSVs with Windows-style CRLF ('\r\n') line endings don't work in multiline mode. They work fine in single-line mode because the line separation is done by Hadoop, which can handle all the different types of line separators. This PR fixes it by enabling Univocity's line separator detection in multiline mode, which detects '\r\n', '\r', or '\n' automatically, as Hadoop does in single-line mode.
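To make the three separator styles concrete, here is a deliberately naive sketch. It is not how Univocity or Hadoop detect separators, and it ignores quoted fields that contain line breaks, which is the case multiline mode exists for; `LineSeparators.splitLines` is a hypothetical helper.

```scala
object LineSeparators {
  // "\r\n" is tried first so that a CRLF pair is not counted as two separators.
  def splitLines(text: String): Seq[String] = text.split("\r\n|\r|\n", -1).toSeq
}
```

For example, `LineSeparators.splitLines("a,b\r\nc,d")` yields the two records `a,b` and `c,d`.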

## How was this patch tested?

Unit test with a file with crlf line endings.

Closes #22503 from justinuang/fix-clrf-multiline.

Authored-by: Justin Uang <juang@palantir.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

The PR updates the examples for `BisectingKMeans` so that they don't use the deprecated method `computeCost` (see SPARK-25758).

## How was this patch tested?

Ran the examples.

Closes #22763 from mgaido91/SPARK-25764.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

When the first event was dropped, `lastReportTimestamp` was printed in the log as

Wed Dec 31 16:00:00 PST 1969

(Dropped 1 events from eventLog since Wed Dec 31 16:00:00 PST 1969.)

The reason is that `lastReportTimestamp` is initialized to 0.

The log now prints "... since the application starts" when `lastReportTimestamp == 0`, which is the case the first time an event is dropped.
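The check can be sketched as follows. `DropLog.droppedMessage` is a hypothetical helper and the exact wording of the message is illustrative, not copied from the patch.

```scala
object DropLog {
  // lastReportTimestamp == 0 means nothing has been reported yet, so printing it
  // as a date would show the Unix epoch rendered in the local time zone.
  def droppedMessage(dropped: Long, lastReportTimestamp: Long): String = {
    val since =
      if (lastReportTimestamp == 0L) "the application started"
      else new java.util.Date(lastReportTimestamp).toString
    s"Dropped $dropped events from eventLog since $since."
  }
}
```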

## How was this patch tested?

Manually verified.

Closes #22677 from shivusondur/AsyncEvent1.

Authored-by: shivusondur <shivusondur@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

1. Split the main page of sql-programming-guide into 7 parts:

- Getting Started

- Data Sources

- Performance Tuning

- Distributed SQL Engine

- PySpark Usage Guide for Pandas with Apache Arrow

- Migration Guide

- Reference

2. Add left menu for sql-programming-guide, keep first level index for each part in the menu.

## How was this patch tested?

Local test with jekyll build/serve.

Closes #22746 from xuanyuanking/SPARK-24499.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

The PR proposes to deprecate the `computeCost` method on `BisectingKMeans` in favor of the adoption of `ClusteringEvaluator` in order to evaluate the clustering.

## How was this patch tested?

NA

Closes #22756 from mgaido91/SPARK-25758.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

This way the image generated from both environments has the same layout,

with just a difference in contents that should not affect functionality.

Also added some minor error checking to the image script.

Closes #22681 from vanzin/SPARK-25682.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Currently the tests in `SQLTest` in PySpark do not clean up after themselves properly.

We should introduce and use more `contextmanager`s so that the context is conveniently and reliably cleaned up.

## How was this patch tested?

Modified tests.

Closes #22762 from ueshin/issues/SPARK-25763/cleanup_sqltests.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Only `AddJarCommand` returns `0`, which can confuse users about what it means. This PR makes it return an empty result instead.

```sql

spark-sql> add jar /Users/yumwang/spark/sql/hive/src/test/resources/TestUDTF.jar;

ADD JAR /Users/yumwang/spark/sql/hive/src/test/resources/TestUDTF.jar

0

spark-sql>

```

## How was this patch tested?

manual tests

```sql

spark-sql> add jar /Users/yumwang/spark/sql/hive/src/test/resources/TestUDTF.jar;

ADD JAR /Users/yumwang/spark/sql/hive/src/test/resources/TestUDTF.jar

spark-sql>

```

Closes #22747 from wangyum/AddJarCommand.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Also update Kinesis SDK's Jackson to match Spark's

## How was this patch tested?

Existing tests, including Kinesis ones, which ought to be hereby triggered.

This was uncovered, I believe, in https://github.com/apache/spark/pull/22729#issuecomment-430666080

Closes #22757 from srowen/SPARK-24601.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Master

## What changes were proposed in this pull request?

Previously PySpark used the private constructor for SparkSession when building that object. This resulted in a SparkSession that did not check the sql.extensions parameter for additional session extensions. To fix this, we instead use the Session.builder() path as SparkR does, which loads the extensions and allows their use in PySpark.

## How was this patch tested?

An integration test was added which mimics the Scala test for the same feature.


Closes #21990 from RussellSpitzer/SPARK-25003-master.

Authored-by: Russell Spitzer <Russell.Spitzer@gmail.com>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

The test fix is to allocate a `Resource` object only after the resource

types have been initialized. Otherwise the YARN classes get in a weird

state and throw a different exception than expected, because the resource

has a different view of the registered resources.

I also removed a test for a null resource since that seems unnecessary

and made the fix more complicated.

All the other changes are just cleanup; basically simplify the tests by

defining what is being tested and deriving the resource type registration

and the SparkConf from that data, instead of having redundant definitions

in the tests.

Ran tests with Hadoop 3 (and also without it).

Closes #22751 from vanzin/SPARK-20327.fix.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Currently if we run

```

sh start-thriftserver.sh -h

```

we get

```

...

Thrift server options:

2018-10-15 21:45:39 INFO HiveThriftServer2:54 - Starting SparkContext

2018-10-15 21:45:40 INFO SparkContext:54 - Running Spark version 2.3.2

2018-10-15 21:45:40 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-10-15 21:45:40 ERROR SparkContext:91 - Error initializing SparkContext.

org.apache.spark.SparkException: A master URL must be set in your configuration

at org.apache.spark.SparkContext.<init>(SparkContext.scala:367)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2493)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:934)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:925)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:925)

at org.apache.spark.sql.hive.thriftserver.SparkSQLEnv$.init(SparkSQLEnv.scala:48)

at org.apache.spark.sql.hive.thriftserver.HiveThriftServer2$.main(HiveThriftServer2.scala:79)

at org.apache.spark.sql.hive.thriftserver.HiveThriftServer2.main(HiveThriftServer2.scala)

2018-10-15 21:45:40 ERROR Utils:91 - Uncaught exception in thread main

```

After fix, the usage output is clean:

```

...

Thrift server options:

--hiveconf <property=value> Use value for given property

```

Also exit with code 1, to follow other scripts (this is also how other Linux commands behave when parsing option `-h`).

## How was this patch tested?

Manual test.

Closes #22727 from gengliangwang/stsUsage.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

When deserializing values of ArrayType with struct elements in java beans, fields of structs get mixed up.

I suggest using struct data types retrieved from resolved input data instead of inferring them from java beans.

## What changes were proposed in this pull request?

MapObjects expression is used to map array elements to java beans. Struct type of elements is inferred from java bean structure and ends up with mixed up field order.

I used UnresolvedMapObjects instead of MapObjects, which allows the element type for MapObjects to be provided during analysis based on the resolved input data rather than on the java bean.

## How was this patch tested?

Added a test case.

Built complete project on travis.

michalsenkyr cloud-fan marmbrus liancheng

Closes #22708 from vofque/SPARK-21402.

Lead-authored-by: Vladimir Kuriatkov <vofque@gmail.com>

Co-authored-by: Vladimir Kuriatkov <Vladimir_Kuriatkov@epam.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The SQL execution listener framework was created from scratch (see https://github.com/apache/spark/pull/9078). It didn't leverage what we already have in the Spark listener framework, and one major problem is that the listener runs on the Spark execution thread, which means a bad listener can block Spark's query processing.

This PR re-implements the SQL execution listener framework. Now `ExecutionListenerManager` is just a normal Spark listener, which watches the `SparkListenerSQLExecutionEnd` events and posts events to the user-provided SQL execution listeners.
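The benefit of moving callbacks off the execution thread can be sketched with a tiny stand-alone event bus. `AsyncListenerBus` is a hypothetical class built on `java.util.concurrent`; it is not Spark's listener bus or `ExecutionListenerManager`, and it only assumes that listeners should run on a dedicated thread.

```scala
import java.util.concurrent.{Executors, TimeUnit}

// Hypothetical event bus: callbacks run on a dedicated thread, not on the caller's thread.
class AsyncListenerBus[E] {
  private val executor = Executors.newSingleThreadExecutor()
  @volatile private var listeners = Vector.empty[E => Unit]

  def register(listener: E => Unit): Unit = synchronized { listeners :+= listener }

  // Returns immediately; a slow listener delays other listeners, not the poster.
  def post(event: E): Unit = {
    val current = listeners
    executor.execute(new Runnable {
      def run(): Unit = current.foreach(l => l(event))
    })
  }

  // Stops accepting events and waits for queued ones to be delivered.
  def stop(timeoutSeconds: Long): Boolean = {
    executor.shutdown()
    executor.awaitTermination(timeoutSeconds, TimeUnit.SECONDS)
  }
}
```

Because `post` only enqueues work, a misbehaving listener can no longer block the thread that is executing the query.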

## How was this patch tested?

existing tests.

Closes #22674 from cloud-fan/listener.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR corrects some errors in comments:

1. change from "as low a possible" to "as low as possible" in RewriteDistinctAggregates.scala

2. delete redundant word “with” in HiveTableScanExec’s doExecute() method

## How was this patch tested?

Existing unit tests.

Closes #22694 from CarolinePeng/update_comment.

Authored-by: 彭灿00244106 <00244106@zte.intra>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

`Literal.value` should have a value corresponding to `dataType`. This PR adds code to verify this and fixes the existing tests accordingly.
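A simplified sketch of such a check follows. The three-member `DataType` hierarchy and this `Literal` class are hypothetical stand-ins for Catalyst's types, and accepting `null` for every type is an assumption made for the sketch.

```scala
object LiteralCheck {
  sealed trait DataType
  case object IntegerType extends DataType
  case object StringType extends DataType
  case object BooleanType extends DataType

  // Assumption: null is accepted for every type; otherwise the runtime class must match.
  def isValid(value: Any, dataType: DataType): Boolean = (value, dataType) match {
    case (null, _) => true
    case (_: Int, IntegerType) => true
    case (_: String, StringType) => true
    case (_: Boolean, BooleanType) => true
    case _ => false
  }

  // Construction fails fast when the value does not match the declared type.
  final case class Literal(value: Any, dataType: DataType) {
    require(isValid(value, dataType), s"Literal value $value does not match $dataType")
  }
}
```

Validating in the constructor means a mismatched literal is caught where it is created rather than later during evaluation.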

## How was this patch tested?

Modified the existing tests.

Closes #22724 from maropu/SPARK-25734.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The PR adds a new function `from_csv()`, similar to `from_json()`, to parse columns with CSV strings. I added the following method:

```Scala

def from_csv(e: Column, schema: StructType, options: Map[String, String]): Column

```

and this signature to call it from Python, R and Java:

```Scala

def from_csv(e: Column, schema: String, options: java.util.Map[String, String]): Column

```

## How was this patch tested?

Added new test suites `CsvExpressionsSuite`, `CsvFunctionsSuite` and sql tests.

Closes #22379 from MaxGekk/from_csv.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Co-authored-by: hyukjinkwon <gurwls223@apache.org>

Signed-off-by: hyukjinkwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Set a reasonable poll timeout that is used while consuming topics/partitions from Kafka. Without it, a default of 2 minutes is used as the timeout, so every negative test takes at least 2 minutes to execute.

After this change, we save about 4 minutes in this suite.

## How was this patch tested?

Test fix.

Closes #22670 from dilipbiswal/SPARK-25631.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>