## What changes were proposed in this pull request?

Update to Scala 2.12.8

## How was this patch tested?

Existing tests.

Closes #23218 from srowen/SPARK-26266.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

`spark.kafka.sasl.kerberos.service.name` is an optional parameter, but most of the time the value `kafka` has to be set. As I've written in the JIRA, the reasoning is as follows:

* Kafka's configuration guide suggests the same value: https://kafka.apache.org/documentation/#security_sasl_kerberos_brokerconfig

* It would be easier for Spark users, who would have less to configure

* Other streaming engines are doing the same

In this PR I've changed the parameter from optional to `WithDefault` and set `kafka` as default value.
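
The difference between an optional entry and one with a default can be sketched in plain Python. This is only an illustration, not Spark's config API: `lookup_optional` and `lookup_with_default` are hypothetical helpers, and only the key name and the `kafka` default come from the description above.

```python
# Illustrative only: models an optional config entry versus one declared
# with a default. These helpers are hypothetical, not Spark APIs.
KEY = "spark.kafka.sasl.kerberos.service.name"


def lookup_optional(conf, key):
    """Old behavior: returns None when the user did not set the key."""
    return conf.get(key)


def lookup_with_default(conf, key, default="kafka"):
    """New behavior: falls back to the default when the key is unset."""
    return conf.get(key, default)


user_conf = {}  # the user provided no Kafka Kerberos settings
print(lookup_optional(user_conf, KEY))      # None
print(lookup_with_default(user_conf, KEY))  # kafka
```

An explicitly set value still wins over the default, so existing configurations are unaffected in this model.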

## How was this patch tested?

Available unit tests + on cluster.

Closes #23254 from gaborgsomogyi/SPARK-26304.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Add validationIndicatorCol and validationTol to GBT Python.

## How was this patch tested?

Add test in doctest to test the new API.

Closes #21465 from huaxingao/spark-24333.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

Parquet files carry nullability information when they are written, but it is not preserved when they are read back.

## How was this patch tested?

Some test code: (running spark 2.3, but the relevant code in DataSource looks identical on master)

case class NullTest(bo: Boolean, opbol: Option[Boolean])

val testDf = spark.createDataFrame(Seq(NullTest(true, Some(false))))

defined class NullTest

testDf: org.apache.spark.sql.DataFrame = [bo: boolean, opbol: boolean]

testDf.write.parquet("s3://asana-stats/tmp_dima/parquet_check_schema")

spark.read.parquet("s3://asana-stats/tmp_dima/parquet_check_schema/part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet4").printSchema()

root

|-- bo: boolean (nullable = true)

|-- opbol: boolean (nullable = true)

Meanwhile, the parquet file formed does have nullable info:

[]batchprod-report000:/tmp/dimakamalov-batch$ aws s3 ls s3://asana-stats/tmp_dima/parquet_check_schema/

2018-10-17 21:03:52 0 _SUCCESS

2018-10-17 21:03:50 504 part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet

[]batchprod-report000:/tmp/dimakamalov-batch$ aws s3 cp s3://asana-stats/tmp_dima/parquet_check_schema/part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet .

download: s3://asana-stats/tmp_dima/parquet_check_schema/part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet to ./part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet

[]batchprod-report000:/tmp/dimakamalov-batch$ java -jar parquet-tools-1.8.2.jar schema part-00000-b1bf4a19-d9fe-4ece-a2b4-9bbceb490857-c000.snappy.parquet

message spark_schema {

required boolean bo;

optional boolean opbol;

}

Closes #22759 from dima-asana/dima-asana-nullable-parquet-doc.

Authored-by: dima-asana <42555784+dima-asana@users.noreply.github.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Delete an unnecessary `if` statement. The earlier check already returns when the number of records is less than or equal to zero, so the later check can only be reached when the number of records is greater than zero.

...................

if (inMemSorter == null || inMemSorter.numRecords() <= 0) {

return 0L;

}

....................

if (inMemSorter.numRecords() > 0) {

.....................

}

## How was this patch tested?

Existing tests

Closes #23247 from wangjiaochun/inMemSorter.

Authored-by: 10087686 <wang.jiaochun@zte.com.cn>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

Adds a new method to SparkAppHandle called getError which returns

the exception (if present) that caused the underlying Spark app to

fail.

New tests added to SparkLauncherSuite for the new method.

Closes #21849

Closes #23221 from vanzin/SPARK-24243.

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This is a follow-up of #23031 to rename the config name to `spark.sql.legacy.setCommandRejectsSparkCoreConfs`.

## How was this patch tested?

Existing tests.

Closes #23245 from ueshin/issues/SPARK-26060/rename_config.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

This PR aims to upgrade the Janino compiler to the latest version, 3.0.11. The following changes are taken from the [release notes](http://janino-compiler.github.io/janino/changelog.html).

- Script with many "helper" variables.

- Java 9+ compatibility

- Compilation Error Messages Generated by JDK.

- Added experimental support for the "StackMapFrame" attribute; not active yet.

- Make Unparser more flexible.

- Fixed NPEs in various "toString()" methods.

- Optimize static method invocation with rvalue target expression.

- Added all missing "ClassFile.getConstant*Info()" methods, removing the necessity for many type casts.

## How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #23250 from dongjoon-hyun/SPARK-26298.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

Currently, if the user provides a data schema, partition column values are converted according to it. But if the conversion fails, e.g. converting a string to an int, the column value is null.

This PR proposes to throw an exception in such cases, instead of silently converting to a null value:

1. These null partition column values don't make sense to users in most cases. It is better to show the conversion failure, and then users can adjust the schema or ETL jobs to fix it.

2. There are always exceptions on such conversion failures for non-partition data columns. Partition columns should have the same behavior.

We can reproduce the case above as follows:

```

/tmp/testDir

├── p=bar

└── p=foo

```

If we run:

```

val schema = StructType(Seq(StructField("p", IntegerType, false)))

spark.read.schema(schema).csv("/tmp/testDir/").show()

```

We will get:

```

+----+

| p|

+----+

|null|

|null|

+----+

```
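
The behavioral change can be sketched in plain Python. This is a minimal model of silent-null versus fail-fast conversion, not Spark's implementation; both function names and the error message are hypothetical.

```python
# Illustrative only: models conversion of partition values parsed from
# directory names such as "p=bar". Not Spark's implementation.

def cast_partition_value_lenient(raw):
    """Old behavior: a failed string-to-int conversion yields None (null)."""
    try:
        return int(raw)
    except ValueError:
        return None


def cast_partition_value_strict(raw, column):
    """Proposed behavior: surface the conversion failure to the user."""
    try:
        return int(raw)
    except ValueError as e:
        raise ValueError(
            "Failed to cast value %r to int for partition column %r" % (raw, column)
        ) from e


dirs = ["p=bar", "p=foo"]
values = [d.split("=", 1)[1] for d in dirs]
print([cast_partition_value_lenient(v) for v in values])  # [None, None]
```

Calling `cast_partition_value_strict("bar", "p")` raises instead of returning `None`, which mirrors how conversion failures already behave for non-partition data columns.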

## How was this patch tested?

Unit test

Closes #23215 from gengliangwang/SPARK-26263.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

`enablePerfMetrics` was originally designed in `BytesToBytesMap` to control `getNumHashCollisions`, `getTimeSpentResizingNs`, and `getAverageProbesPerLookup`.

However, as Spark has evolved, this parameter is now used only for `getAverageProbesPerLookup` and is always set to true when using `BytesToBytesMap`.

It is also dangerous for `getAverageProbesPerLookup` to check whether metrics are enabled and throw an `IllegalStateException`.

So this PR removes the `enablePerfMetrics` parameter from `BytesToBytesMap`. Thanks.

## How was this patch tested?

Existing test cases.

Closes #23244 from heary-cao/enablePerfMetrics.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

This change modifies the logic in the SecurityManager to do two

things:

- generate unique app secrets also when k8s is being used

- only store the secret in the user's UGI on YARN

The latter is needed so that k8s won't unnecessarily create

k8s secrets for the UGI credentials when only the auth token

is stored there.

On the k8s side, the secret is propagated to executors using

an environment variable instead. This ensures it works in both

client and cluster mode.

Security doc was updated to mention the feature and clarify that

proper access control in k8s should be enabled for it to be secure.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #23174 from vanzin/SPARK-26194.

## What changes were proposed in this pull request?

Kafka delegation token support was implemented in [PR#22598](https://github.com/apache/spark/pull/22598), but that PR didn't include documentation because of rapid changes. Now that it has been merged, I've documented it in this PR.

## How was this patch tested?

jekyll build + manual html check

Closes #23195 from gaborgsomogyi/SPARK-26236.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When executing `toPandas` with Arrow enabled, partitions that arrive in the JVM out-of-order must be buffered before they can be sent to Python. This causes an excess of memory to be used in the driver JVM and increases the time it takes to complete because data must sit in the JVM waiting for preceding partitions to come in.

This change sends un-ordered partitions to Python as soon as they arrive in the JVM, followed by a list of partition indices so that Python can assemble the data in the correct order. This way, data is not buffered at the JVM and there is no waiting on particular partitions so performance will be increased.
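
The idea can be sketched in plain Python. This is a simplified model that assumes one batch per partition; `send_unordered` and `reassemble` are hypothetical names and do not correspond to PySpark internals.

```python
# Illustrative only: batches are forwarded in arrival order, and the list of
# partition indices is sent last so the receiver can restore partition order.

def send_unordered(arrivals):
    """arrivals: iterable of (partition_index, batch) in arrival order.

    Returns what the receiver would see: every batch in arrival order,
    followed by the partition indices in that same order.
    """
    batches = []
    arrival_order = []
    for partition_index, batch in arrivals:
        batches.append(batch)  # forwarded right away, no buffering
        arrival_order.append(partition_index)
    return batches, arrival_order


def reassemble(batches, arrival_order):
    """Receiver side: put the batches back into partition order."""
    ordered = [None] * len(batches)
    for position, partition_index in enumerate(arrival_order):
        ordered[partition_index] = batches[position]
    return ordered


arrivals = [(2, "c"), (0, "a"), (1, "b")]
batches, order = send_unordered(arrivals)
print(reassemble(batches, order))  # ['a', 'b', 'c']
```

The sender never waits for a particular partition in this model; all reordering work moves to the receiving side.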

Followup to #21546

## How was this patch tested?

Added a new test with a large number of batches per partition, and a test that forces a small delay in the first partition. These test that partitions are collected out of order and are then put in the correct order in Python.

## Performance Tests - toPandas

Tests were run on a 4-node standalone cluster with 32 cores total, 14.04.1-Ubuntu and OpenJDK 8.

I measured the wall clock time to execute `toPandas()` and took the average best time of 5 runs/5 loops each.

Test code

```python
import time

from pyspark.sql.functions import rand

# Assumes an active SparkSession bound to `spark`.
df = spark.range(1 << 25, numPartitions=32).toDF("id").withColumn("x1", rand()).withColumn("x2", rand()).withColumn("x3", rand()).withColumn("x4", rand())
for i in range(5):
    start = time.time()
    _ = df.toPandas()
    elapsed = time.time() - start
```

Spark config

```

spark.driver.memory 5g

spark.executor.memory 5g

spark.driver.maxResultSize 2g

spark.sql.execution.arrow.enabled true

```

Current Master w/ Arrow stream | This PR
---------------------|------------
5.16207 | 4.342533
5.133671 | 4.399408
5.147513 | 4.468471
5.105243 | 4.36524
5.018685 | 4.373791

Avg Master | Avg This PR
------------------|--------------
5.1134364 | 4.3898886

Speedup of **1.164821449**
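
As a quick check of the arithmetic, the averages and the speedup can be recomputed from the reported timings. The numbers below are copied from the results above; nothing else is assumed.

```python
# Timings in seconds, copied from the reported results.
master = [5.16207, 5.133671, 5.147513, 5.105243, 5.018685]
this_pr = [4.342533, 4.399408, 4.468471, 4.36524, 4.373791]

avg_master = sum(master) / len(master)
avg_this_pr = sum(this_pr) / len(this_pr)

# Speedup is the ratio of the old average time to the new average time.
speedup = avg_master / avg_this_pr

print(round(avg_master, 7), round(avg_this_pr, 7), round(speedup, 4))
```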

Closes #22275 from BryanCutler/arrow-toPandas-oo-batches-SPARK-25274.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

It looks like this test is flaky:

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99704/console

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99569/console

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99644/console

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99548/console

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99454/console

- https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/99609/console

```

======================================================================

FAIL: test_training_and_prediction (pyspark.mllib.tests.test_streaming_algorithms.StreamingLogisticRegressionWithSGDTests)

Test that the model improves on toy data with no. of batches

----------------------------------------------------------------------

Traceback (most recent call last):

File "/home/jenkins/workspace/SparkPullRequestBuilder/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 367, in test_training_and_prediction

self._eventually(condition)

File "/home/jenkins/workspace/SparkPullRequestBuilder/python/pyspark/mllib/tests/test_streaming_algorithms.py", line 78, in _eventually

% (timeout, lastValue))

AssertionError: Test failed due to timeout after 30 sec, with last condition returning: Latest errors: 0.67, 0.71, 0.78, 0.7, 0.75, 0.74, 0.73, 0.69, 0.62, 0.71, 0.69, 0.75, 0.72, 0.77, 0.71, 0.74

----------------------------------------------------------------------

Ran 13 tests in 185.051s

FAILED (failures=1, skipped=1)

```

This appears to have started after the parallelism in Jenkins was increased to speed up the build at https://github.com/apache/spark/pull/23111. I am able to reproduce this manually when the resource usage is heavy (with a manually decreased timeout).

## How was this patch tested?

Manually tested by

```

cd python

./run-tests --testnames 'pyspark.mllib.tests.test_streaming_algorithms StreamingLogisticRegressionWithSGDTests.test_training_and_prediction' --python-executables=python

```

Closes #23236 from HyukjinKwon/SPARK-26275.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Updated the SQL migration guide according to the changes in https://github.com/apache/spark/pull/23120

Closes #23235 from MaxGekk/failuresafe-partial-result-followup.

Lead-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This code comes from PR: https://github.com/apache/spark/pull/11190,

but it has not been used since PR: https://github.com/apache/spark/pull/14548.

Let's continue to fix it. Thanks.

## How was this patch tested?

N / A

Closes #23227 from heary-cao/unuseSparkPlan.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This fixes doc of renamed OneHotEncoder in PySpark.

## How was this patch tested?

N/A

Closes #23230 from viirya/remove_one_hot_encoder_followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR proposes adding a developer option, `--testnames`, to our testing script to allow running a specific set of unittests and doctests.

**1. Run unittests in the class**

```bash

./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests'

```

```

Running PySpark tests. Output is in /.../spark/python/unit-tests.log

Will test against the following Python executables: ['python2.7', 'pypy']

Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests']

Starting test(python2.7): pyspark.sql.tests.test_arrow ArrowTests

Starting test(pypy): pyspark.sql.tests.test_arrow ArrowTests

Finished test(python2.7): pyspark.sql.tests.test_arrow ArrowTests (14s)

Finished test(pypy): pyspark.sql.tests.test_arrow ArrowTests (14s) ... 22 tests were skipped

Tests passed in 14 seconds

Skipped tests in pyspark.sql.tests.test_arrow ArrowTests with pypy:

test_createDataFrame_column_name_encoding (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_fallback_disabled (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_fallback_enabled (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped

...

```

**2. Run single unittest in the class.**

```bash

./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion'

```

```

Running PySpark tests. Output is in /.../spark/python/unit-tests.log

Will test against the following Python executables: ['python2.7', 'pypy']

Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion']

Starting test(pypy): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion

Starting test(python2.7): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion

Finished test(pypy): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion (0s) ... 1 tests were skipped

Finished test(python2.7): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion (8s)

Tests passed in 8 seconds

Skipped tests in pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion with pypy:

test_null_conversion (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

```

**3. Run doctests in single PySpark module.**

```bash

./run-tests --testnames pyspark.sql.dataframe

```

```

Running PySpark tests. Output is in /.../spark/python/unit-tests.log

Will test against the following Python executables: ['python2.7', 'pypy']

Will test the following Python tests: ['pyspark.sql.dataframe']

Starting test(pypy): pyspark.sql.dataframe

Starting test(python2.7): pyspark.sql.dataframe

Finished test(python2.7): pyspark.sql.dataframe (47s)

Finished test(pypy): pyspark.sql.dataframe (48s)

Tests passed in 48 seconds

```

Of course, you can mix them:

```bash

./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests,pyspark.sql.dataframe'

```

```

Running PySpark tests. Output is in /.../spark/python/unit-tests.log

Will test against the following Python executables: ['python2.7', 'pypy']

Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests', 'pyspark.sql.dataframe']

Starting test(pypy): pyspark.sql.dataframe

Starting test(pypy): pyspark.sql.tests.test_arrow ArrowTests

Starting test(python2.7): pyspark.sql.dataframe

Starting test(python2.7): pyspark.sql.tests.test_arrow ArrowTests

Finished test(pypy): pyspark.sql.tests.test_arrow ArrowTests (0s) ... 22 tests were skipped

Finished test(python2.7): pyspark.sql.tests.test_arrow ArrowTests (18s)

Finished test(python2.7): pyspark.sql.dataframe (50s)

Finished test(pypy): pyspark.sql.dataframe (52s)

Tests passed in 52 seconds

Skipped tests in pyspark.sql.tests.test_arrow ArrowTests with pypy:

test_createDataFrame_column_name_encoding (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_does_not_modify_input (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

test_createDataFrame_fallback_disabled (pyspark.sql.tests.test_arrow.ArrowTests) ... skipped 'Pandas >= 0.19.2 must be installed; however, it was not found.'

```

You can also use all other options (except `--modules`, which will be ignored):

```bash

./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion' --python-executables=python

```

```

Running PySpark tests. Output is in /.../spark/python/unit-tests.log

Will test against the following Python executables: ['python']

Will test the following Python tests: ['pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion']

Starting test(python): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion

Finished test(python): pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion (12s)

Tests passed in 12 seconds

```

See help below:

```bash

./run-tests --help

```

```

Usage: run-tests [options]

Options:

...

Developer Options:

--testnames=TESTNAMES

A comma-separated list of specific modules, classes

and functions of doctest or unittest to test. For

example, 'pyspark.sql.foo' to run the module as

unittests or doctests, 'pyspark.sql.tests FooTests' to

run the specific class of unittests,

'pyspark.sql.tests FooTests.test_foo' to run the

specific unittest in the class. '--modules' option is

ignored if they are given.

```

I intentionally grouped it as a developer option to be more conservative.
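
The accepted value format can be sketched as follows. This is a hypothetical parser written only to illustrate the comma-separated `module [Class[.method]]` shape described in the help text; it is not the code in `run-tests`, and `parse_testnames` is an invented name.

```python
# Illustrative only: one way to split a --testnames value into
# (module, test) pairs. This is a sketch, not the run-tests implementation.

def parse_testnames(value):
    """Split 'mod Class.method,mod2' into [('mod', 'Class.method'), ('mod2', None)]."""
    parsed = []
    for entry in value.split(","):
        parts = entry.strip().split(None, 1)  # module, optional Class[.method]
        if not parts:
            continue  # skip empty entries such as a trailing comma
        module = parts[0]
        test = parts[1] if len(parts) > 1 else None
        parsed.append((module, test))
    return parsed


print(parse_testnames("pyspark.sql.tests.test_arrow ArrowTests,pyspark.sql.dataframe"))
```

An entry without a class part, such as `pyspark.sql.dataframe`, maps to `None` for the test name, which corresponds to running the whole module.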

## How was this patch tested?

Manually tested. Negative tests were also done.

```bash

./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowTests.test_null_conversion1' --python-executables=python

```

```

...

AttributeError: type object 'ArrowTests' has no attribute 'test_null_conversion1'

...

```

```bash

./run-tests --testnames 'pyspark.sql.tests.test_arrow ArrowT' --python-executables=python

```

```

...

AttributeError: 'module' object has no attribute 'ArrowT'

...

```

```bash

./run-tests --testnames 'pyspark.sql.tests.test_ar' --python-executables=python

```

```

...

/.../python2.7: No module named pyspark.sql.tests.test_ar

```

Closes #23203 from HyukjinKwon/SPARK-26252.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

This PR starts from the [comment](https://github.com/apache/spark/pull/23124#discussion_r236112390) in the main PR and aims at:

- simplifying the code for `MapConcat`;

- being more precise in checking the limit size.

## How was this patch tested?

existing tests

Closes #23217 from mgaido91/SPARK-25829_followup.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

We explicitly avoid files with hdfs erasure coding for the streaming WAL

and for event logs, as hdfs EC does not support all relevant apis.

However, the new builder api used has different semantics -- it does not

create parent dirs, and it does not resolve relative paths. This

updates createNonEcFile to have similar semantics to the old api.

## How was this patch tested?

Ran tests with the WAL pointed at a non-existent dir, which failed before this change. Manually tested the new function with a relative path as well.

Unit tests via jenkins.

Closes #23092 from squito/SPARK-26094.

Authored-by: Imran Rashid <irashid@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

When we encode a Decimal from an external source, we don't check for overflow. That check is useful not only to enforce that we can represent the correct value in the specified range, but also because it changes the underlying data to the right precision/scale. Since our code generation assumes that a decimal has exactly the same precision and scale as its data type, failing to enforce this can lead to corrupted output/results when there are subsequent transformations.
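
What such a check does can be sketched with Python's standard `decimal` module. This is only an analogy: `enforce_precision` is a hypothetical helper, and both the half-up rounding and the choice to return `None` on overflow are assumptions made for illustration, not confirmed Spark behavior.

```python
from decimal import Decimal, ROUND_HALF_UP


def enforce_precision(value, precision, scale):
    """Rescale `value` to `scale` digits after the point.

    Returns None when the rescaled value needs more than `precision`
    total digits (overflow handling here is an assumption).
    """
    # Decimal(1).scaleb(-scale) is 10**-scale, e.g. 0.01 for scale=2.
    quantized = value.quantize(Decimal(1).scaleb(-scale), rounding=ROUND_HALF_UP)
    if len(quantized.as_tuple().digits) > precision:
        return None
    return quantized


print(enforce_precision(Decimal("123.456"), 5, 2))  # 123.46
print(enforce_precision(Decimal("1234.5"), 5, 2))   # None
```

The first call shows the second role described above: even when the value fits, the underlying data is rewritten to the declared scale, which is what later code would rely on.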

## How was this patch tested?

added UT

Closes #23210 from mgaido91/SPARK-26233.

Authored-by: Marco Gaido <marcogaido91@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

## What changes were proposed in this pull request?

In this PR, I propose to use the **java.time API** for parsing timestamps and dates from CSV content with microsecond precision. The SQL config `spark.sql.legacy.timeParser.enabled` allows switching back to the previous behaviour, which uses `java.text.SimpleDateFormat`/`FastDateFormat` for parsing/generating timestamps/dates.

## How was this patch tested?

It was tested by `UnivocityParserSuite`, `CsvExpressionsSuite`, `CsvFunctionsSuite` and `CsvSuite`.

Closes #23150 from MaxGekk/time-parser.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Maxim Gekk <maxim.gekk@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

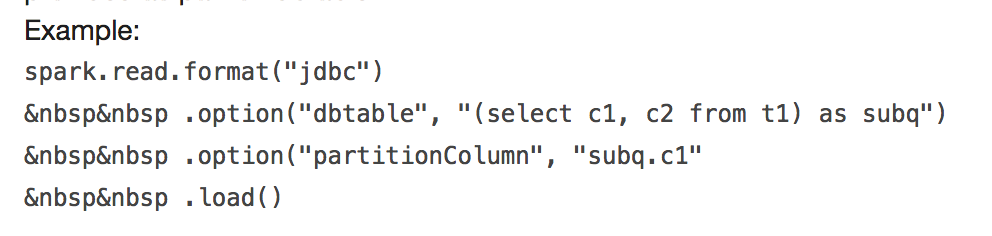

## What changes were proposed in this pull request?

It will throw:

```

requirement failed: When reading JDBC data sources, users need to specify all or none for the following options: 'partitionColumn', 'lowerBound', 'upperBound', and 'numPartitions'

```

and

```

User-defined partition column subq.c1 not found in the JDBC relation ...

```

This PR fixes the erroneous example.

## How was this patch tested?

manual tests

Closes #23170 from wangyum/SPARK-24499.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In `DDLSuite`, four test cases share the same code; extracting it into a function removes the duplication.

## How was this patch tested?

existing tests.

Closes #23194 from CarolinePeng/Update_temp.

Authored-by: 彭灿00244106 <00244106@zte.intra>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

## What changes were proposed in this pull request?

In SPARK-23711, we implemented the expression fallback logic to an interpreted mode. This PR updates the code to support the same fallback mode in `SafeProjection`, based on `CodeGeneratorWithInterpretedFallback`.

## How was this patch tested?

Add tests in `CodeGeneratorWithInterpretedFallbackSuite` and `UnsafeRowConverterSuite`.

Closes #22468 from maropu/SPARK-25374-3.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Currently in the Analyzer, we have two methods, 1) Resolve and 2) ResolveExpressions, that are called on different code paths to resolve attributes, column ordinals and extract value expressions. ~~In this PR, we combine the two into one method to make sure, there is only one method that is tasked with resolving the attributes.~~

Update the description of the methods and use better names to make it easier to know when to make use of one method vs the other.

## How was this patch tested?

Existing tests.

Closes #22899 from dilipbiswal/SPARK-25573-final.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

This pr is to return an empty config set when regenerating the golden files in `SQLQueryTestSuite`.

This is the follow-up of #22512.

## How was this patch tested?

N/A

Closes #23212 from maropu/SPARK-25498-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

When I try to run the `./bin/pyspark` command in a pod in Kubernetes (image built without changes from the pyspark Dockerfile), I get an error:

```

$SPARK_HOME/bin/pyspark --deploy-mode client --master k8s://https://$KUBERNETES_SERVICE_HOST:$KUBERNETES_SERVICE_PORT_HTTPS ...

Python 2.7.15 (default, Aug 22 2018, 13:24:18)

[GCC 6.4.0] on linux2

Type "help", "copyright", "credits" or "license" for more information.

Could not open PYTHONSTARTUP

IOError: [Errno 2] No such file or directory: '/opt/spark/python/pyspark/shell.py'

```

This is because the `pyspark` folder doesn't exist under `/opt/spark/python/`.

## What changes were proposed in this pull request?

Added `COPY python/pyspark ${SPARK_HOME}/python/pyspark` to the pyspark Dockerfile to resolve the issue above.

## How was this patch tested?

Google Kubernetes Engine

Closes #23037 from AzureQ/master.

Authored-by: Qi Shao <qi.shao.nyu@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Adds proper labels when deleting executor pods.

## How was this patch tested?

Manually with tests.

Closes #23209 from skonto/fix-deletion-labels.

Authored-by: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR is a small follow-up that moves some logic and functions into a smaller, more local scope and deduplicates them.

## How was this patch tested?

Manually tested. Jenkins tests as well.

Closes #23200 from HyukjinKwon/followup-SPARK-26034-SPARK-26033.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

docker-image-tool.sh requires explicit argument to create the python

image now; do that from the sbt integration tests target too.

Closes #23172 from vanzin/SPARK-25957.followup.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Keeps K8s executor resources present in case of failure or normal termination.

Introduces a new boolean config option: `spark.kubernetes.deleteExecutors`, with default value set to true.

The idea is to update Spark K8s backend structures but leave the resources around.

The assumption is that since entries are not removed from the `removedExecutorsCache`, we are immune to updates that refer to the executor resources previously removed.

The only delete operation not touched is the one in the `doKillExecutors` method.

The reason is that right now we don't support [blacklisting](https://issues.apache.org/jira/browse/SPARK-23485) and dynamic allocation with Spark on K8s. In both cases, we might want to handle these scenarios in the future, although it's more complicated.

More tests can be added if the approach is approved.

## How was this patch tested?

Manually by running a Spark job and verifying pods are not deleted.

Closes #23136 from skonto/keep_pods.

Authored-by: Stavros Kontopoulos <stavros.kontopoulos@lightbend.com>

Signed-off-by: Yinan Li <ynli@google.com>

## What changes were proposed in this pull request?

Since `AggregationIterator` uses `MutableProjection` for `UnsafeRow`, `InterpretedMutableProjection` needs to handle `UnsafeRow` as buffer internally for fixed-length types only.

## How was this patch tested?

Run 'SQLQueryTestSuite' with the interpreted mode.

Closes #22512 from maropu/InterpreterTest.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Let's keep this open for a while to see if other configuration tweaks are suggested

## What changes were proposed in this pull request?

Formatting configuration changes following up

https://github.com/apache/spark/pull/23148

## How was this patch tested?

./dev/scalafmt

Closes #23182 from koeninger/scalafmt-config.

Authored-by: cody koeninger <cody@koeninger.org>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In my local setup, I set the log4j root category to ERROR (https://stackoverflow.com/questions/27781187/how-to-stop-info-messages-displaying-on-spark-console , the first item that shows up when searching Google for "set spark log level"). When I run a command such as

```

spark-submit --class foo bar.jar

```

Nothing shows up, and the script exits.

After a quick investigation, I think the log level for ClassNotFoundException/NoClassDefFoundError in SparkSubmit should be ERROR instead of WARN, since the whole process exits because of the exception/error.

Before https://github.com/apache/spark/pull/20925, the message was not controlled by `log4j.rootCategory`.

## How was this patch tested?

Manual check.

Closes #23189 from gengliangwang/changeLogLevel.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Currently, `hasMinMaxStats` returns the same value as `hasCountStats`, which is obviously not what is expected.

## How was this patch tested?

Existing tests.

Closes #23152 from adrian-wang/minmaxstats.

Authored-by: Daoyuan Wang <me@daoyuan.wang>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Partition column names are required to be unique under the same directory. The following paths form an invalid partitioned directory:

```

hdfs://host:9000/path/a=1

hdfs://host:9000/path/b=2

```

If case sensitive, the following paths should be invalid too:

```

hdfs://host:9000/path/a=1

hdfs://host:9000/path/A=2

```

Column 'a' and column 'A' are different, so it is wrong to use either one as the column name in the partition schema.

Also, there is a `TODO` comment in the code. Currently, Spark doesn't validate this case when `CASE_SENSITIVE` is enabled.

This PR is to resolve the problem.
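The check described above can be sketched as follows. This is a minimal illustration, not Spark's actual implementation: `PartitionColumnCheck`, `columnName`, and `isValid` are hypothetical names, and the sketch only looks at the last path segment of each directory.

```scala
import java.util.Locale

// Hypothetical helper for illustration only; not Spark's partition-discovery code.
object PartitionColumnCheck {
  /** Extracts the column name from a partition directory such as ".../a=1". */
  def columnName(path: String): String =
    path.split('/').last.takeWhile(_ != '=')

  /** Distinct partition column names found at one directory level. */
  def distinctColumns(paths: Seq[String], caseSensitive: Boolean): Set[String] = {
    val names = paths.map(columnName)
    // When case-insensitive, 'a' and 'A' collapse into a single column name.
    if (caseSensitive) names.toSet
    else names.map(_.toLowerCase(Locale.ROOT)).toSet
  }

  /** A directory level is valid only if all children agree on one column name. */
  def isValid(paths: Seq[String], caseSensitive: Boolean): Boolean =
    distinctColumns(paths, caseSensitive).size <= 1
}
```

With this sketch, `a=1` next to `A=2` is rejected only when `caseSensitive` is `true`, while `a=1` next to `b=2` is rejected in both modes.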

## How was this patch tested?

Added a unit test.

Closes #23186 from gengliangwang/SPARK-26230.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In this PR, I propose changing the behaviour of `UnivocityParser` and `FailureSafeParser` so that, for bad input in the `PERMISSIVE` mode, they return all fields that were parsed and converted to the expected types successfully instead of a row with all `null`s. For example, for the CSV line `0,2013-111-11 12:13:14` and the DDL schema `a int, b timestamp`, the new result is `Row(0, null)`.
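The per-field behaviour can be illustrated with a small standalone sketch. It does not use `UnivocityParser` or `FailureSafeParser`; `PartialRowSketch` and `parsePermissive` are hypothetical names, a `Seq[Any]` stands in for `Row`, and `java.time.LocalDateTime` stands in for Spark's timestamp type.

```scala
import java.time.LocalDateTime
import java.time.format.DateTimeFormatter
import scala.util.Try

// Illustrative sketch only; names and types are assumptions, not Spark's API.
object PartialRowSketch {
  private val timestampFormat = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss")

  // One converter per column of the schema `a int, b timestamp`.
  private val converters: Seq[String => Any] = Seq(
    s => s.trim.toInt,
    s => LocalDateTime.parse(s.trim, timestampFormat)
  )

  /** Converts each field independently; only the fields that fail become null. */
  def parsePermissive(line: String): Seq[Any] = {
    val tokens = line.split(",", -1)
    converters.zipWithIndex.map { case (convert, i) =>
      // A missing token or a failed conversion yields null for that field only.
      Try(convert(tokens(i))).getOrElse(null)
    }
  }
}
```

For the line from the example above, the first field converts to `0` and the malformed timestamp becomes `null`, rather than the whole row being nulled out.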

## How was this patch tested?

It was checked by existing tests from `CsvSuite` and `CsvFunctionsSuite`.

Closes #23120 from MaxGekk/failuresafe-partial-result.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

When building a hash map with one row of data runs out of memory, we should throw a SparkOutOfMemoryError, which is more accurate than a SparkException. This PR fixes it.

## How was this patch tested?

N / A

Closes #23190 from heary-cao/throwUnsafeHashedRelation.

Authored-by: caoxuewen <cao.xuewen@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

How to reproduce this issue:

```scala

scala> val meta = new org.apache.spark.sql.types.MetadataBuilder().putNull("key").build().json

java.lang.NullPointerException

at org.apache.spark.sql.types.Metadata$.org$apache$spark$sql$types$Metadata$$toJsonValue(Metadata.scala:196)

at org.apache.spark.sql.types.Metadata$$anonfun$1.apply(Metadata.scala:180)

```

This PR fixes the `NullPointerException` thrown when `Metadata` serializes `null` values.
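The general shape of such a fix is to match `null` before any other case when converting values to JSON. The sketch below is a standalone illustration under that assumption; `NullSafeJsonSketch` and `toJson` are hypothetical names, and it is not the code in `Metadata.scala`.

```scala
// Minimal JSON rendering sketch; not Spark's Metadata implementation.
object NullSafeJsonSketch {
  def toJson(value: Any): String = value match {
    // Handle null first so that no method is ever invoked on a null reference.
    case null => "null"
    case s: String => "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\""
    case b: Boolean => b.toString
    case n: Int => n.toString
    case n: Long => n.toString
    case d: Double => d.toString
    case m: Map[_, _] =>
      m.map { case (k, v) => toJson(k.toString) + ":" + toJson(v) }.mkString("{", ",", "}")
    case seq: Seq[_] => seq.map(toJson).mkString("[", ",", "]")
    case other =>
      throw new IllegalArgumentException(s"Unsupported type: ${other.getClass}")
  }
}
```

Without the `case null` branch, a `null` value would fall through to the last case, where `other.getClass` throws a `NullPointerException`.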

## How was this patch tested?

unit tests

Closes #23164 from wangyum/SPARK-26198.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

The `resource` package is Unix-specific. See https://docs.python.org/2/library/resource.html and https://docs.python.org/3/library/resource.html.

Note that we document Windows support:

> Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS).

This should be backported into branch-2.4 to restore Windows support in Spark 2.4.1.

## How was this patch tested?

Manually, by mocking the changed logic.

Closes #23055 from HyukjinKwon/SPARK-26080.

Lead-authored-by: hyukjinkwon <gurwls223@apache.org>

Co-authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Add headers to empty CSV files when `header=true`, because otherwise these files are invalid when read back.
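A minimal sketch of the intended behaviour, assuming a simplified writer with no quoting or escaping. `CsvHeaderSketch` and `render` are hypothetical names and do not correspond to Spark's CSV writer.

```scala
// Simplified illustration; real CSV writing also needs quoting and escaping.
object CsvHeaderSketch {
  /** Renders rows as CSV text, emitting the header even when there are no rows. */
  def render(columns: Seq[String], rows: Seq[Seq[String]], header: Boolean): String = {
    // The header line does not depend on whether any data rows exist.
    val headerLine = if (header) Seq(columns.mkString(",")) else Seq.empty[String]
    val dataLines = rows.map(_.mkString(","))
    (headerLine ++ dataLines).mkString("\n")
  }
}
```

An empty dataset with `header = true` then produces a file that contains only the header line, so the column names survive a round trip.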

## How was this patch tested?

Added a test in CSVSuite for the round trip of an empty DataFrame to a CSV file with headers and back.

Closes #23173 from koertkuipers/feat-empty-csv-with-header.

Authored-by: Koert Kuipers <koert@tresata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Some UnsupportedOperationException messages do not match the name of the method that throws them. This PR corrects these messages.

## How was this patch tested?

NA

Closes #23154 from lcqzte10192193/wid-lcq-1127.

Authored-by: lichaoqun <li.chaoqun@zte.com.cn>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

It's a bad idea to use a case class as a public API, because it exposes a very wide surface: the `copy` method, its fields, the companion object, and so on.

Take `UserDefinedFunction` as a particular case. It has a private constructor, and I believe we only want users to access a few methods: `apply`, `nullable`, `asNonNullable`, etc.

However, all its fields, the `copy` method, and the companion object are unexpectedly public. As a result, we have used many tricks to work around the binary compatibility issues.

This PR proposes to make only interfaces public and to hide implementations behind a private class. Now `UserDefinedFunction` is a pure trait, and the concrete implementation is `SparkUserDefinedFunction`, which is private.

Changing a class to an interface is not binary compatible (but it is source compatible), so 3.0 is a good opportunity to do it.

This is the first PR in this direction. If it's accepted, I'll create an umbrella JIRA and fix all the public case classes.
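The pattern can be sketched in a few lines. The names below (`UdfApiSketch`, `UserFunction`, `UserFunctionImpl`, `udf`) are hypothetical and much simpler than the real `UserDefinedFunction`; the sketch only shows how a public trait plus a private class keeps `copy`, the fields, and the companion object out of the public surface.

```scala
// Illustrative sketch of "public trait, private implementation"; not Spark's code.
object UdfApiSketch {
  /** Public surface: only the methods users are meant to call. */
  trait UserFunction {
    def apply(x: Int): Int
    def nullable: Boolean
    def asNonNullable(): UserFunction
  }

  // Private implementation: a plain class, so no public copy method,
  // no public constructor fields, and no public companion object.
  private final class UserFunctionImpl(f: Int => Int, val nullable: Boolean)
      extends UserFunction {
    def apply(x: Int): Int = f(x)
    def asNonNullable(): UserFunction =
      if (!nullable) this else new UserFunctionImpl(f, nullable = false)
  }

  /** The only way for callers to obtain an instance. */
  def udf(f: Int => Int): UserFunction = new UserFunctionImpl(f, nullable = true)
}
```

Callers write `UdfApiSketch.udf(_ + 1)` and see only the trait, so the implementation can change later without affecting the public API.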

## How was this patch tested?

existing tests.

Closes #23178 from cloud-fan/udf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In this PR, I propose filtering out all empty files inside `FileSourceScanExec` and excluding them from file splits. This should reduce the overhead of opening and reading files without any data, and as a consequence datasources will not produce empty partitions for such files.
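The filtering step can be sketched as follows, assuming a simplified file listing. `EmptyFileFilterSketch`, `FileEntry`, and `splits` are hypothetical names; the real logic lives in `FileSourceScanExec` and works on Spark's own file status and partition types.

```scala
// Simplified illustration of dropping zero-length files before splitting.
object EmptyFileFilterSketch {
  final case class FileEntry(path: String, length: Long)

  /** Keeps only files that contain at least one byte. */
  def nonEmpty(files: Seq[FileEntry]): Seq[FileEntry] =
    files.filter(_.length > 0)

  /** Produces (path, offset, size) splits; empty files yield no splits at all. */
  def splits(files: Seq[FileEntry], maxSplitBytes: Long): Seq[(String, Long, Long)] =
    nonEmpty(files).flatMap { f =>
      (0L until f.length by maxSplitBytes).map { offset =>
        (f.path, offset, math.min(maxSplitBytes, f.length - offset))
      }
    }
}
```

Because empty files never reach the splitting step, they cannot produce empty partitions downstream.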

## How was this patch tested?

Added a test which creates one empty and one non-empty file. If empty files are ignored on load, the Text datasource in the `wholetext` mode must create only one partition, for the non-empty file.

Closes #23130 from MaxGekk/ignore-empty-files.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a follow-up PR to #23176.

`In` and `InSet` are semantically equal, so the tests for `In` should pass with `InSet`, and vice versa.

This combines those test cases.
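The idea of running every case against both predicates can be sketched with plain collections. This is only an analogy: `InVsInSetSketch`, `inList`, `inSet`, and `checkBoth` are hypothetical names, and the sketch ignores SQL null semantics, which the real `In` and `InSet` expressions must handle.

```scala
// Analogy only: a list-based and a set-based membership test sharing one check.
object InVsInSetSketch {
  def inList(value: Int, candidates: Seq[Int]): Boolean = candidates.exists(_ == value)

  def inSet(value: Int, candidates: Set[Int]): Boolean = candidates.contains(value)

  /** Asserts that both implementations agree with the expected result. */
  def checkBoth(value: Int, candidates: Seq[Int], expected: Boolean): Unit = {
    assert(inList(value, candidates) == expected, s"inList($value) != $expected")
    assert(inSet(value, candidates.toSet) == expected, s"inSet($value) != $expected")
  }
}
```

Each test case is written once and exercised against both implementations, so any divergence between them shows up immediately.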

## How was this patch tested?

The combined tests and existing tests.

Closes #23187 from ueshin/issues/SPARK-26211/in_inset_tests.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This is a small change for better debugging: it passes the query UUID into IncrementalExecution, so that we can trace back to the query when looking at a QueryExecution in isolation.

## How was this patch tested?

N/A - this just adds a field for better debugging.

Closes #23192 from rxin/SPARK-26241.

Authored-by: Reynold Xin <rxin@databricks.com>

Signed-off-by: gatorsmile <gatorsmile@gmail.com>