## What changes were proposed in this pull request?

This copies the material from the spark.mllib user guide page for Naive Bayes to the spark.ml user guide page. I also improved the wording and organization slightly.

## How was this patch tested?

Built docs locally.

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #21272 from jkbradley/nb-doc-update.

## What changes were proposed in this pull request?

This updates Parquet to 1.10.0 and updates the vectorized path for buffer management changes. Parquet 1.10.0 uses ByteBufferInputStream instead of byte arrays in encoders. This allows Parquet to break allocations into smaller chunks that are better for garbage collection.

## How was this patch tested?

Existing Parquet tests. Running in production at Netflix for about 3 months.

Author: Ryan Blue <blue@apache.org>

Closes #21070 from rdblue/SPARK-23972-update-parquet-to-1.10.0.

## What changes were proposed in this pull request?

In Apache Spark 2.4, [SPARK-23355](https://issues.apache.org/jira/browse/SPARK-23355) fixes a bug in which table properties were ignored during `convertMetastore` for tables created with `STORED AS ORC/PARQUET`.

For Parquet tables with table properties such as `TBLPROPERTIES (parquet.compression 'NONE')`, the property was ignored by default before Apache Spark 2.4. After upgrading the cluster, Spark will write uncompressed files, which differs from the behavior of Apache Spark 2.3 and earlier.

This PR adds a migration note for that.

## How was this patch tested?

N/A

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #21269 from dongjoon-hyun/SPARK-23355-DOC.

## What changes were proposed in this pull request?

This PR fixes the migration note for SPARK-23291, since the fix is going to be backported to 2.3.1. See the discussion in https://issues.apache.org/jira/browse/SPARK-23291

## How was this patch tested?

N/A

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21249 from HyukjinKwon/SPARK-23291.

## What changes were proposed in this pull request?

`from_utc_timestamp` assumes its input is in the UTC timezone and shifts it to the specified timezone. When the timestamp string contains a timezone (e.g. `2018-03-13T06:18:23+00:00`), Spark breaks this semantic and respects the timezone in the string. This is not what users expect, and the result differs from Hive/Impala. `to_utc_timestamp` has the same problem.

For more details, please refer to the JIRA ticket.

This PR fixes this by returning null if the input timestamp contains a timezone.
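The intended behavior can be illustrated with a small plain-Python sketch. It is not Spark's implementation: the function name `from_utc_timestamp_sketch` is hypothetical, and the target zone is simplified to a fixed hour offset rather than a zone name.

```python
from datetime import datetime, timedelta, timezone


def from_utc_timestamp_sketch(text, target_offset_hours):
    """Shift a UTC wall-clock string to a fixed-offset zone, or return None.

    Hypothetical helper; the target zone is a fixed hour offset (assumption).
    """
    parsed = datetime.fromisoformat(text)
    if parsed.tzinfo is not None:
        # The input carries its own offset, so it is rejected (null result).
        return None
    as_utc = parsed.replace(tzinfo=timezone.utc)
    target = timezone(timedelta(hours=target_offset_hours))
    # Drop tzinfo again to return a plain wall-clock value in the target zone.
    return as_utc.astimezone(target).replace(tzinfo=None)


with_zone = from_utc_timestamp_sketch("2018-03-13T06:18:23+00:00", 8)
without_zone = from_utc_timestamp_sketch("2018-03-13T06:18:23", 8)
print(with_zone, without_zone)
```

The first call models the case this PR changes (input with an embedded timezone), and the second models the normal case where the input is treated as UTC.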

## How was this patch tested?

new tests

Author: Wenchen Fan <wenchen@databricks.com>

Closes #21169 from cloud-fan/from_utc_timezone.

## What changes were proposed in this pull request?

Added support for specifying the `spark.executor.extraJavaOptions` value in terms of `{{APP_ID}}` and/or `{{EXECUTOR_ID}}`. `{{APP_ID}}` will be replaced by the application ID and `{{EXECUTOR_ID}}` will be replaced by the executor ID when the executor is started.
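As a rough illustration of the substitution, here is a plain-Python sketch. The helper name, the sample options string, and the IDs are all made up for the example; Spark performs the replacement internally when it launches the executor.

```python
def substitute_placeholders(java_opts, app_id, executor_id):
    """Replace {{APP_ID}} and {{EXECUTOR_ID}} in an options string.

    Hypothetical helper, for illustration only.
    """
    return (java_opts
            .replace("{{APP_ID}}", app_id)
            .replace("{{EXECUTOR_ID}}", executor_id))


# Example value a user might set for spark.executor.extraJavaOptions
# (the GC log path is an assumption).
opts = "-verbose:gc -Xloggc:/tmp/{{APP_ID}}-{{EXECUTOR_ID}}.gc"
resolved = substitute_placeholders(opts, "app-20180501-0001", "3")
print(resolved)
```

This kind of substitution is what makes per-executor GC log file names possible without the files of different executors colliding.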

## How was this patch tested?

I have verified this by checking the executor process command and GC logs. I verified the same in different deployment modes (Standalone, YARN, Mesos), in both client and cluster modes.

Author: Devaraj K <devaraj@apache.org>

Closes #21088 from devaraj-kavali/SPARK-24003.

## What changes were proposed in this pull request?

By default, dynamic allocation will request enough executors to maximize the parallelism according to the number of tasks to process. While this minimizes the latency of the job, with small tasks this setting can waste a lot of resources due to executor allocation overhead, as some executors might not even do any work.

This setting allows setting a ratio that will be used to reduce the number of target executors w.r.t. full parallelism.

The number of executors computed with this setting is still fenced by `spark.dynamicAllocation.maxExecutors` and `spark.dynamicAllocation.minExecutors`.
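A minimal sketch of how such a ratio could feed into the target executor count is shown below. The function and parameter names are hypothetical, the rounding choice (ceiling) is an assumption, and `tasks_per_executor` stands in for however many tasks one executor can run at once.

```python
import math


def target_executors(pending_tasks, tasks_per_executor, ratio,
                     min_executors, max_executors):
    """Scale the full-parallelism executor count by `ratio`, then clamp it.

    Hypothetical helper; rounding up is an assumption of this sketch.
    """
    full_parallelism = pending_tasks / tasks_per_executor
    wanted = math.ceil(full_parallelism * ratio)
    # The result is still fenced by the min/max executor bounds.
    return max(min_executors, min(max_executors, wanted))


full = target_executors(100, 4, 1.0, 0, 1000)
halved = target_executors(100, 4, 0.5, 0, 1000)
capped = target_executors(100, 4, 0.5, 0, 10)
floored = target_executors(4, 4, 0.5, 2, 10)
print(full, halved, capped, floored)
```

With a ratio of 1.0 the sketch reproduces full parallelism; smaller ratios trade some latency for fewer allocated executors.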

## How was this patch tested?

Unit tests and runs on various real workloads on a YARN cluster.

Author: Julien Cuquemelle <j.cuquemelle@criteo.com>

Closes #19881 from jcuquemelle/AddTaskPerExecutorSlot.

## What changes were proposed in this pull request?

Currently we find the wider common type by comparing the two types from left to right. This can be a problem when two data types have no common type with each other but each can be promoted to `StringType`.

For instance, if you have a table with the schema:

[c1: date, c2: string, c3: int]

The following succeeds:

SELECT coalesce(c1, c2, c3) FROM table

While the following produces an exception:

SELECT coalesce(c1, c3, c2) FROM table

This is only an issue when the sequence of data types contains `StringType` and all the types can be promoted to string.
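The order dependence can be reproduced with a toy model in plain Python. The pairwise rules below are deliberately simplified assumptions and are not Spark's actual `TypeCoercion` tables; all function names are hypothetical.

```python
from functools import reduce

# Toy assumption: every type in this model can be promoted to string.
PROMOTABLE_TO_STRING = {"date", "int", "string"}


def wider_pair(a, b):
    """Toy pairwise rule: equal types match, anything pairs with string."""
    if a == b:
        return a
    if "string" in (a, b):
        return "string"
    return None  # e.g. date vs int: no common type in this model


def left_to_right(types):
    """Fold pairwise from the left; fails as soon as one pair has no match."""
    return reduce(
        lambda acc, t: None if acc is None else wider_pair(acc, t), types)


def order_independent(types):
    """Fall back to string when it is present and all types can promote."""
    result = left_to_right(types)
    if (result is None and "string" in types
            and all(t in PROMOTABLE_TO_STRING for t in types)):
        return "string"
    return result


ok_order = left_to_right(["date", "string", "int"])
bad_order = left_to_right(["date", "int", "string"])
fixed = order_independent(["date", "int", "string"])
print(ok_order, bad_order, fixed)
```

The two calls to `left_to_right` mirror the two `coalesce` queries above: the same three types succeed or fail depending only on their order.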

Closes #19033

## How was this patch tested?

Add test in `TypeCoercionSuite`

Author: Xingbo Jiang <xingbo.jiang@databricks.com>

Closes #21074 from jiangxb1987/typeCoercion.

## What changes were proposed in this pull request?

This PR proposes to pin `roxygen2` to `5.0.1` in `docs/README.md` for SparkR documentation generation.

If I use a higher version and generate the docs, it shows the diff below. Not a big deal, but it bothered me.

```diff
diff --git a/R/pkg/DESCRIPTION b/R/pkg/DESCRIPTION
index 855eb5bf77f..159fca61e06 100644
--- a/R/pkg/DESCRIPTION
+++ b/R/pkg/DESCRIPTION
@@ -57,6 +57,6 @@ Collate:
     'types.R'
     'utils.R'
     'window.R'
-RoxygenNote: 5.0.1
+RoxygenNote: 6.0.1
 VignetteBuilder: knitr
 NeedsCompilation: no
```

## How was this patch tested?

Manually tested. I hit this every time I set up a new environment for Spark development, but I kept forgetting to fix it.

Author: hyukjinkwon <gurwls223@apache.org>

Closes #21020 from HyukjinKwon/minor-r-doc.

## What changes were proposed in this pull request?

Easy fix in the documentation.

## How was this patch tested?

N/A

Closes #20948

Author: Daniel Sakuma <dsakuma@gmail.com>

Closes #20928 from dsakuma/fix_typo_configuration_docs.

## What changes were proposed in this pull request?

This PR finishes https://github.com/apache/spark/pull/17272

This JIRA is follow-up work after SPARK-19583.

As we discussed in that PR, the following DDL statements for a managed table with an existing default location should throw an exception:

- `CREATE TABLE ... (PARTITIONED BY ...) AS SELECT ...`

- `CREATE TABLE ... (PARTITIONED BY ...)`

Currently, some situations are not consistent with the above logic:

- `CREATE TABLE ... (PARTITIONED BY ...)` succeeds with an existing default location. Situation: both Hive and data source tables (with `HiveExternalCatalog`/`InMemoryCatalog`).

- `CREATE TABLE ... (PARTITIONED BY ...) AS SELECT ...` Situation: a Hive table succeeds with an existing default location.

This PR makes the above two situations consistent with the logic that an exception should be thrown when the default location already exists.

## How was this patch tested?

unit test added

Author: Gengliang Wang <gengliang.wang@databricks.com>

Closes #20886 from gengliangwang/pr-17272.

## What changes were proposed in this pull request?

Easy fix in the markdown.

## How was this patch tested?

Tested the Jekyll build manually.


Author: lemonjing <932191671@qq.com>

Closes #20897 from Lemonjing/master.

## What changes were proposed in this pull request?

As mentioned in SPARK-23285, this PR introduces a new configuration property `spark.kubernetes.executor.cores` for specifying the physical CPU cores requested for each executor pod. This is to avoid changing the semantics of `spark.executor.cores` and `spark.task.cpus` and their role in task scheduling, task parallelism, dynamic resource allocation, etc. The new configuration property only determines the physical CPU cores available to an executor. An executor can still run multiple tasks simultaneously by using appropriate values for `spark.executor.cores` and `spark.task.cpus`.

## How was this patch tested?

Unit tests.

felixcheung srowen jiangxb1987 jerryshao mccheah foxish

Author: Yinan Li <ynli@google.com>

Author: Yinan Li <liyinan926@gmail.com>

Closes #20553 from liyinan926/master.

This change basically rewrites the security documentation so that it's up to date with new features, more correct, and more complete.

Because security is such an important feature, I chose to move all the relevant configuration documentation to the security page, instead of having them peppered all over the place in the configuration page. This allows an almost one-stop shop for security configuration in Spark. The only exceptions are some YARN-specific minor features which I left in the YARN page.

I also re-organized the page's topics, since they didn't make a lot of sense. You had kerberos features described inside paragraphs talking about UI access control, and other oddities. It should be easier now to find information about specific Spark security features. I also enabled TOCs for both the Security and YARN pages, since that makes it easier to see what is covered.

I removed most of the comments from the SecurityManager javadoc since they just replicated information in the security doc, with different levels of out-of-dateness.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20742 from vanzin/SPARK-23572.

## What changes were proposed in this pull request?

This PR fixes an incorrect comparison in SQL between timestamp and date. The comparison is incorrect because both values are cast to `string` and then compared lexicographically. The current implementation returns `false` for the query `spark.sql("select cast('2017-03-01 00:00:00' as timestamp) between cast('2017-02-28' as date) and cast('2017-03-01' as date)").show`.

With this PR, the query returns `true`, because `date("2017-03-01")` is cast to `timestamp("2017-03-01 00:00:00")`.
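The difference between the two comparison strategies can be reproduced outside Spark. The following plain-Python sketch compares the same values once as strings and once as timestamps; it only illustrates the comparison semantics and does not use Spark's coercion code.

```python
from datetime import datetime

ts_text = "2017-03-01 00:00:00"
lower_text, upper_text = "2017-02-28", "2017-03-01"

# Lexicographic comparison: the timestamp string extends the upper bound
# with extra characters, so it sorts after it.
as_strings = lower_text <= ts_text <= upper_text


def date_to_timestamp(text):
    """Parse a date string as midnight of that day."""
    return datetime.strptime(text, "%Y-%m-%d")


ts = datetime.strptime(ts_text, "%Y-%m-%d %H:%M:%S")
# Comparing as timestamps: the upper bound becomes 2017-03-01 00:00:00.
as_timestamps = (date_to_timestamp(lower_text) <= ts
                 <= date_to_timestamp(upper_text))

print(as_strings, as_timestamps)
```

The string comparison fails only because of the trailing time portion, which is why promoting the dates to timestamps gives the expected answer.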


## How was this patch tested?

Added new UTs to `TypeCoercionSuite`.

Author: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Closes #20774 from kiszk/SPARK-23549.

## What changes were proposed in this pull request?

Currently we allow writing data frames with an empty schema into a file-based data source for certain file formats such as JSON and ORC. For formats such as Parquet and Text, we raise an error at different points of execution. For the text format, we return the error from the driver early in processing, whereas for formats such as Parquet, the error is raised from the executor.

**Example**

spark.emptyDataFrame.write.format("parquet").mode("overwrite").save(path)

**Results in**

```
org.apache.parquet.schema.InvalidSchemaException: Cannot write a schema with an empty group: message spark_schema {
}
    at org.apache.parquet.schema.TypeUtil$1.visit(TypeUtil.java:27)
    at org.apache.parquet.schema.TypeUtil$1.visit(TypeUtil.java:37)
    at org.apache.parquet.schema.MessageType.accept(MessageType.java:58)
    at org.apache.parquet.schema.TypeUtil.checkValidWriteSchema(TypeUtil.java:23)
    at org.apache.parquet.hadoop.ParquetFileWriter.<init>(ParquetFileWriter.java:225)
    at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:342)
    at org.apache.parquet.hadoop.ParquetOutputFormat.getRecordWriter(ParquetOutputFormat.java:302)
    at org.apache.spark.sql.execution.datasources.parquet.ParquetOutputWriter.<init>(ParquetOutputWriter.scala:37)
    at org.apache.spark.sql.execution.datasources.parquet.ParquetFileFormat$$anon$1.newInstance(ParquetFileFormat.scala:151)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$SingleDirectoryWriteTask.newOutputWriter(FileFormatWriter.scala:376)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$SingleDirectoryWriteTask.execute(FileFormatWriter.scala:387)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask$3.apply(FileFormatWriter.scala:278)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask$3.apply(FileFormatWriter.scala:276)
    at org.apache.spark.util.Utils$.tryWithSafeFinallyAndFailureCallbacks(Utils.scala:1411)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$.org$apache$spark$sql$execution$datasources$FileFormatWriter$$executeTask(FileFormatWriter.scala:281)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$write$1.apply(FileFormatWriter.scala:206)
    at org.apache.spark.sql.execution.datasources.FileFormatWriter$$anonfun$write$1.apply(FileFormatWriter.scala:205)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:87)
    at org.apache.spark.scheduler.Task.run(Task.scala:109)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:345)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.
```

In this PR, we unify the error processing and raise an error early in processing on any attempt to write a data frame with an empty schema into a file-based data source (ORC, Parquet, text, CSV, JSON, etc.).

## How was this patch tested?

Unit tests added in FileBasedDatasourceSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #20579 from dilipbiswal/spark-23372.

## What changes were proposed in this pull request?

To drop `exprId`s for `Alias` in user-facing info, this PR adds an entry for `Alias` in `NonSQLExpression.sql`.

## How was this patch tested?

Added tests in `UDFSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes #20827 from maropu/SPARK-23666.

## What changes were proposed in this pull request?

Removal of the init-container for downloading remote dependencies. Built off of the work done by vanzin in an attempt to refactor driver/executor configuration elaborated in [this](https://issues.apache.org/jira/browse/SPARK-22839) ticket.

## How was this patch tested?

This patch was tested with unit and integration tests.

Author: Ilan Filonenko <if56@cornell.edu>

Closes #20669 from ifilonenko/remove-init-container.

## What changes were proposed in this pull request?

In the PR https://github.com/apache/spark/pull/20671, I forgot to update the doc about this new support.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20789 from gatorsmile/docUpdate.

## What changes were proposed in this pull request?

Below are the two cases.

Case 1

```scala
scala> List.empty[String].toDF().rdd.partitions.length
res18: Int = 1
```

When we write the above data frame as Parquet, we create a Parquet file containing just the schema of the data frame.

Case 2

```scala
scala> val anySchema = StructType(StructField("anyName", StringType, nullable = false) :: Nil)
anySchema: org.apache.spark.sql.types.StructType = StructType(StructField(anyName,StringType,false))

scala> spark.read.schema(anySchema).csv("/tmp/empty_folder").rdd.partitions.length
res22: Int = 0
```

For the second case, since the number of partitions is 0, we don't call the write task (the task has the logic to create the empty, metadata-only Parquet file).

The fix is to create a dummy single-partition RDD and set up the write task based on it to ensure the metadata-only file is written.
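The idea behind the fix can be sketched in plain Python. This is only a model of the control flow: `run_write_job` and `write_task` are hypothetical stand-ins, and a "file" is represented by a dictionary rather than real Parquet output.

```python
def write_task(rows):
    """Stand-in write task: always emits a file carrying the schema."""
    return {"schema": ["anyName"], "rows": list(rows)}


def run_write_job(partitions, task):
    """Run one task per partition, substituting a dummy partition if none."""
    if not partitions:
        # Zero partitions would mean zero tasks and therefore no file at all,
        # so a single empty partition is used instead.
        partitions = [[]]
    return [task(rows) for rows in partitions]


files_case_1 = run_write_job([[]], write_task)  # one empty partition
files_case_2 = run_write_job([], write_task)    # no partitions at all
print(files_case_1, files_case_2)
```

In this model both cases end up producing exactly one metadata-only file, which is the outcome the fix aims for.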

## How was this patch tested?

A new test is added to DataframeReaderWriterSuite.

Author: Dilip Biswal <dbiswal@us.ibm.com>

Closes #20525 from dilipbiswal/spark-23271.

## What changes were proposed in this pull request?

This PR adds a configuration to control the fallback of Arrow optimization for `toPandas` and `createDataFrame` with Pandas DataFrame.

## How was this patch tested?

Manually tested and unit tests added.

You can test this by:

**`createDataFrame`**

```python
spark.conf.set("spark.sql.execution.arrow.enabled", False)
pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)
spark.createDataFrame(pdf, "a: map<string, int>")
```

```python
spark.conf.set("spark.sql.execution.arrow.enabled", False)
pdf = spark.createDataFrame([[{'a': 1}]]).toPandas()
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)
spark.createDataFrame(pdf, "a: map<string, int>")
```

**`toPandas`**

```python
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", True)
spark.createDataFrame([[{'a': 1}]]).toPandas()
```

```python
spark.conf.set("spark.sql.execution.arrow.enabled", True)
spark.conf.set("spark.sql.execution.arrow.fallback.enabled", False)
spark.createDataFrame([[{'a': 1}]]).toPandas()
```

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20678 from HyukjinKwon/SPARK-23380-conf.

## What changes were proposed in this pull request?

It seems that R's `substr` API treats Scala's `substr` API as zero-based and so subtracts 1 from the given starting position.

Because Scala's `substr` API also accepts a starting position of zero (treated as the first element), the current R `substr` test results are correct, as they all use 1 as the starting position.

## How was this patch tested?

Modified tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20464 from viirya/SPARK-23291.

These options were used to configure the built-in JRE SSL libraries when downloading files from HTTPS servers. But because they were also used to set up the now (long) removed internal HTTPS file server, their default configuration chose convenience over security by having overly lenient settings.

This change removes the configuration options that affect the JRE SSL libraries. The JRE trust store can still be configured via system properties (or globally in the JRE security config). The only lost functionality is not being able to disable the default hostname verifier when using spark-submit, which should be fine since Spark itself is not using https for any internal functionality anymore.

I also removed the HTTP-related code from the REPL class loader, since we haven't had a HTTP server for REPL-generated classes for a while.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes #20723 from vanzin/SPARK-23538.

## What changes were proposed in this pull request?

If Spark is run with `spark.authenticate=true`, it fails to start in local mode.

This PR generates a secret in local mode when authentication is on.

## How was this patch tested?

Modified existing unit test.

Manually started spark-shell.

Author: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Closes #20652 from gaborgsomogyi/SPARK-23476.

## What changes were proposed in this pull request?

- Added clear information about triggers

- Clarified the semantic guarantees of watermarks for streaming aggregations and stream-stream joins.

## How was this patch tested?


Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20631 from tdas/SPARK-23454.

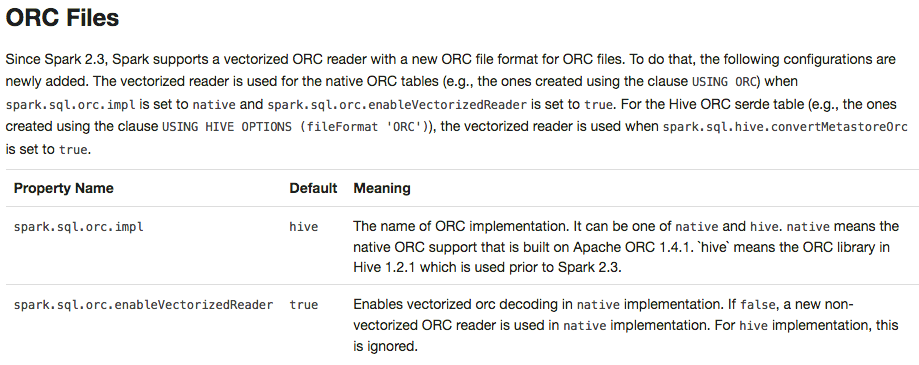

## What changes were proposed in this pull request?

Apache Spark 2.3 introduced `native` ORC support with vectorization and many fixes. However, it shipped as a non-default option. This PR enables the `native` ORC implementation and predicate pushdown by default for Apache Spark 2.4. We will improve and stabilize the ORC data source before Apache Spark 2.4. Eventually, Apache Spark will drop the old Hive-based ORC code.

## How was this patch tested?

Pass the Jenkins with existing tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20634 from dongjoon-hyun/SPARK-23456.

## What changes were proposed in this pull request?

To prevent any regressions, this PR changes the ORC implementation to `hive` by default, as in Spark 2.2.X.

Users can still enable `native` ORC. ORC PPD is also restored to `false`, as in Spark 2.2.X.

## How was this patch tested?

Pass all test cases.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes #20610 from dongjoon-hyun/SPARK-ORC-DISABLE.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/19579 introduces a behavior change. We need to document it in the migration guide.

## How was this patch tested?

Also update the HiveExternalCatalogVersionsSuite to verify it.

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20606 from gatorsmile/addMigrationGuide.

## What changes were proposed in this pull request?

Added documentation about what MLlib guarantees in terms of loading ML models and Pipelines from old Spark versions. Discussed & confirmed on linked JIRA.

Author: Joseph K. Bradley <joseph@databricks.com>

Closes #20592 from jkbradley/SPARK-23154-backwards-compat-doc.

## What changes were proposed in this pull request?

This PR aims to explicitly specify the supported types in Pandas UDFs.

The main change here is to add deduplicated, explicit type checking for `returnType` up front, along with documenting it; however, it happened to fix multiple things.

1. Currently, we don't support `BinaryType` in Pandas UDFs, for example, see:

```python
from pyspark.sql.functions import pandas_udf
pudf = pandas_udf(lambda x: x, "binary")
df = spark.createDataFrame([[bytearray(1)]])
df.select(pudf("_1")).show()
```

```
...
TypeError: Unsupported type in conversion to Arrow: BinaryType
```

We can document this behavior in the guide.

2. Also, the grouped aggregate Pandas UDF fails fast on `ArrayType`, but it seems we can support this case.

```python
from pyspark.sql.functions import pandas_udf, PandasUDFType
foo = pandas_udf(lambda v: v.mean(), 'array<double>', PandasUDFType.GROUPED_AGG)
df = spark.range(100).selectExpr("id", "array(id) as value")
df.groupBy("id").agg(foo("value")).show()
```

```
...
NotImplementedError: ArrayType, StructType and MapType are not supported with PandasUDFType.GROUPED_AGG
```

3. Since we can check the return type ahead of time, we can fail fast before actual execution.

```python
from pyspark.sql.functions import pandas_udf
from pyspark.sql.types import BinaryType
# we can fail fast at this stage because we know the schema ahead
pandas_udf(lambda x: x, BinaryType())
```

## How was this patch tested?

Manually tested and unit tests for `BinaryType` and `ArrayType(...)` were added.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20531 from HyukjinKwon/pudf-cleanup.

This commit modifies the Mesos submission client to allow the principal and secret to be provided indirectly via files. The path to these files can be specified either via Spark configuration or via environment variable.

Assuming these files are appropriately protected by FS/OS permissions, this means we don't ever leak the actual values in process info such as `ps`.

Environment variable specification is useful because it allows you to interpolate the location of this file when using per-user Mesos credentials.
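The indirection can be sketched as follows in plain Python. The configuration key `example.mesos.secret.file`, the environment variable `EXAMPLE_MESOS_SECRET_FILE`, and the precedence of configuration over environment are all assumptions made for illustration; they are not the names or rules used by this commit.

```python
import os
import tempfile


def resolve_secret(conf, env,
                   conf_key="example.mesos.secret.file",
                   env_key="EXAMPLE_MESOS_SECRET_FILE"):
    """Read a secret from a file whose path comes from conf or environment.

    Hypothetical helper: key names and conf-over-env precedence are assumed.
    """
    path = conf.get(conf_key) or env.get(env_key)
    if path is None:
        return None
    with open(path, encoding="utf-8") as handle:
        # Only the path appears in configuration; the value stays in the file.
        return handle.read().strip()


# Usage: write a throwaway secret file and resolve it both ways.
with tempfile.TemporaryDirectory() as tmp_dir:
    secret_path = os.path.join(tmp_dir, "secret.txt")
    with open(secret_path, "w", encoding="utf-8") as handle:
        handle.write("s3cr3t\n")
    via_conf = resolve_secret({"example.mesos.secret.file": secret_path}, {})
    via_env = resolve_secret({}, {"EXAMPLE_MESOS_SECRET_FILE": secret_path})
    missing = resolve_secret({}, {})

print(via_conf == via_env, missing)
```

Because only a file path is passed around, the secret value itself never shows up in command-line arguments or configuration dumps.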

For some background as to why we have taken this approach, I will briefly describe our setup. On our systems we provide each authorised user account with their own Mesos credentials to provide certain security and audit guarantees to our customers. These credentials are managed by a central secret management service. In our `spark-env.sh` we determine the appropriate secret and principal files to use depending on the user who is invoking Spark, hence the need to inject these via environment variables as well as by configuration properties. So we set these environment variables appropriately and Spark reads in the contents of those files to authenticate itself with Mesos.

We have been using this functionality in production across multiple customer sites for some time. It has been in the field for around 18 months with no reported issues. These changes have been sufficient to meet our customer security and audit requirements.

We have been building and deploying custom builds of Apache Spark with various minor tweaks like this which we are now looking to contribute back into the community in order that we can rely upon stock Apache Spark builds and stop maintaining our own internal fork.

Author: Rob Vesse <rvesse@dotnetrdf.org>

Closes #20167 from rvesse/SPARK-16501.

## What changes were proposed in this pull request?

This PR proposes to disallow the default value `None` for `value` when `to_replace` is not a dictionary.

It seems odd that we set the default value of `value` to `None` and ended up allowing the case below:

```python
>>> df.show()
```

```
+----+------+-----+
| age|height| name|
+----+------+-----+
|  10|    80|Alice|
...
```

```python
>>> df.na.replace('Alice').show()
```

```
+----+------+----+
| age|height|name|
+----+------+----+
|  10|    80|null|
...
```

**After**

This PR aims to disallow the case above:

```python
>>> df.na.replace('Alice').show()
```

```
...
TypeError: value is required when to_replace is not a dictionary.
```

while we still allow it when `to_replace` is a dictionary:

```python
>>> df.na.replace({'Alice': None}).show()
```

```
+----+------+----+
| age|height|name|
+----+------+----+
|  10|    80|null|
...
```
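One common way to distinguish "no value passed" from an explicit `None` is a sentinel default. The sketch below shows that technique in plain Python; the function `check_replace_args` and the sentinel `_MISSING` are hypothetical names, and this is not necessarily how PySpark implements the check.

```python
_MISSING = object()  # sentinel meaning "the caller did not pass value"


def check_replace_args(to_replace, value=_MISSING):
    """Validate arguments in the spirit of the rule described above."""
    if value is _MISSING and not isinstance(to_replace, dict):
        raise TypeError(
            "value is required when to_replace is not a dictionary.")
    # An explicit None is still allowed, e.g. replace('Alice', None).
    return True


dict_ok = check_replace_args({"Alice": None})
explicit_none_ok = check_replace_args("Alice", None)
try:
    check_replace_args("Alice")
    rejected = False
except TypeError:
    rejected = True

print(dict_ok, explicit_none_ok, rejected)
```

A plain `value=None` default cannot express this rule, because the function could not tell an omitted argument apart from a deliberate `None`.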

## How was this patch tested?

Manually tested, tests were added in `python/pyspark/sql/tests.py` and doctests were fixed.

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #20499 from HyukjinKwon/SPARK-19454-followup.

## What changes were proposed in this pull request?

Further clarification of caveats in using stream-stream outer joins.

## How was this patch tested?

N/A

Author: Tathagata Das <tathagata.das1565@gmail.com>

Closes #20494 from tdas/SPARK-23064-2.

Add breaking changes, as well as update behavior changes, to `2.3` ML migration guide.

## How was this patch tested?

Doc only

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #20421 from MLnick/SPARK-23112-ml-guide.

## What changes were proposed in this pull request?

Rename the public APIs and names of Pandas UDFs.

- `PANDAS SCALAR UDF` -> `SCALAR PANDAS UDF`

- `PANDAS GROUP MAP UDF` -> `GROUPED MAP PANDAS UDF`

- `PANDAS GROUP AGG UDF` -> `GROUPED AGG PANDAS UDF`

## How was this patch tested?

The existing tests

Author: gatorsmile <gatorsmile@gmail.com>

Closes #20428 from gatorsmile/renamePandasUDFs.

## What changes were proposed in this pull request?

User guide and examples are updated to reflect multiclass logistic regression summary which was added in [SPARK-17139](https://issues.apache.org/jira/browse/SPARK-17139).

I did not make a separate summary example, but added the summary code to the multiclass example that already existed. I don't see the need for a separate example for the summary.

## How was this patch tested?

Docs and examples only. Ran all examples locally using spark-submit.

Author: sethah <shendrickson@cloudera.com>

Closes #20332 from sethah/multiclass_summary_example.

## What changes were proposed in this pull request?

Adding user facing documentation for working with Arrow in Spark

Author: Bryan Cutler <cutlerb@gmail.com>

Author: Li Jin <ice.xelloss@gmail.com>

Author: hyukjinkwon <gurwls223@gmail.com>

Closes #19575 from BryanCutler/arrow-user-docs-SPARK-2221.

Update ML user guide with highlights and migration guide for `2.3`.

## How was this patch tested?

Doc only.

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #20363 from MLnick/SPARK-23112-ml-guide.

## What changes were proposed in this pull request?

Added documentation for new transformer.

Author: Bago Amirbekian <bago@databricks.com>

Closes #20285 from MrBago/sizeHintDocs.

## What changes were proposed in this pull request?

The example jar file is now in the `./examples/jars` directory of the Spark distribution.

Author: Arseniy Tashoyan <tashoyan@users.noreply.github.com>

Closes #20349 from tashoyan/patch-1.

## What changes were proposed in this pull request?

streaming programming guide changes

## How was this patch tested?

manually

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes #20340 from felixcheung/rstreamdoc.

## What changes were proposed in this pull request?

Fix spelling in quick-start doc.

## How was this patch tested?

Doc only.

Author: Shashwat Anand <me@shashwat.me>

Closes #20336 from ashashwat/SPARK-23165.

## What changes were proposed in this pull request?

Docs changes:

- Adding a warning that the backend is experimental.

- Removing a defunct internal-only option from documentation

- Clarifying that node selectors can be used right away, and other minor cosmetic changes

## How was this patch tested?

Docs only change

Author: foxish <ramanathana@google.com>

Closes #20314 from foxish/ambiguous-docs.

## What changes were proposed in this pull request?

We have `OneHotEncoderEstimator` now, and `OneHotEncoder` will be deprecated in 2.3.0. We should add `OneHotEncoderEstimator` to the MLlib documentation.

We also need to provide corresponding examples for `OneHotEncoderEstimator`, which are used in the documentation too.

## How was this patch tested?

Existing tests.

Author: Liang-Chi Hsieh <viirya@gmail.com>

Closes #20257 from viirya/SPARK-23048.

Update user guide entry for `FeatureHasher` to match the Scala / Python doc, to describe the `categoricalCols` parameter.

## How was this patch tested?

Doc only

Author: Nick Pentreath <nickp@za.ibm.com>

Closes #20293 from MLnick/SPARK-23127-catCol-userguide.

## What changes were proposed in this pull request?

In the latest structured-streaming-kafka-integration document, the Java code example for Kafka integration uses `DataFrame<Row>`. Shouldn't it be changed to `Dataset<Row>`?

## How was this patch tested?

The updated example Java code was tested manually in Spark 2.2.1 with Kafka 1.0.

Author: brandonJY <brandonJY@users.noreply.github.com>

Closes #20312 from brandonJY/patch-2.

## What changes were proposed in this pull request?

In Kubernetes mode, the submission process now fails fast if any submission-client local dependencies are used, as this use case is not supported yet.

## How was this patch tested?

Unit tests, integration tests, and manual tests.

vanzin foxish

Author: Yinan Li <liyinan926@gmail.com>

Closes #20320 from liyinan926/master.

## What changes were proposed in this pull request?

This PR completes the docs, specifying the default units assumed in configuration entries of type size.

This is crucial since unit-less values are accepted and the user might assume the base unit is bytes, which in most cases it is not, leading to hard-to-debug problems.
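To make the pitfall concrete, here is a small plain-Python sketch of a size parser with a per-entry default unit. It is not Spark's parser: `parse_size`, the `UNITS` table, and the accepted suffixes are assumptions chosen for the example.

```python
import re

# Binary multipliers for the suffixes this sketch understands (assumption).
UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_size(value, default_unit):
    """Return the size in bytes, applying default_unit to unit-less input."""
    match = re.fullmatch(r"(\d+)\s*([bkmg])?b?", value.strip().lower())
    if match is None:
        raise ValueError("cannot parse size: %r" % value)
    number, unit = match.groups()
    return int(number) * UNITS[unit or default_unit]


as_kib = parse_size("64", "k")     # entry whose default unit is KiB
as_bytes = parse_size("64", "b")   # entry whose default unit is bytes
explicit = parse_size("64k", "m")  # an explicit suffix overrides the default
print(as_kib, as_bytes, explicit)
```

The same unit-less string resolves to very different byte counts depending on the entry's default unit, which is why documenting that default matters.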

## How was this patch tested?

This patch updates documentation only.

Author: Fernando Pereira <fernando.pereira@epfl.ch>

Closes #20269 from ferdonline/docs_units.

## What changes were proposed in this pull request?

When there is an operation between Decimals and the result is a number which is not representable exactly with the result's precision and scale, Spark is returning `NULL`. This was done to reflect Hive's behavior, but it is against SQL ANSI 2011, which states that "If the result cannot be represented exactly in the result type, then whether it is rounded or truncated is implementation-defined". Moreover, Hive now changed its behavior in order to respect the standard, thanks to HIVE-15331.

Therefore, this PR proposes to:

- update the rules that determine the result precision and scale according to Hive's new rules introduced in HIVE-15331;

- round the result of an operation, when it is not exactly representable with the result's precision and scale, instead of returning `NULL`;

- introduce a new config `spark.sql.decimalOperations.allowPrecisionLoss`, which defaults to `true` (i.e. the new behavior), in order to allow users to switch back to the previous behavior.
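The difference between the two behaviors can be sketched with Python's `decimal` module. This models only the final "round or return NULL" step for a given result scale; it does not implement the precision and scale derivation rules, the helper name `fit_to_scale` is hypothetical, and half-up rounding is an assumption of the sketch.

```python
from decimal import Decimal, ROUND_HALF_UP


def fit_to_scale(value, scale, allow_precision_loss):
    """Fit value into `scale` fractional digits, or return None (NULL).

    Hypothetical helper; half-up rounding is an assumption of this sketch.
    """
    quantum = Decimal(1).scaleb(-scale)  # e.g. scale=2 -> Decimal('0.01')
    rounded = value.quantize(quantum, rounding=ROUND_HALF_UP)
    if rounded == value:
        return rounded  # exactly representable, nothing is lost
    # Not exactly representable: round (new behavior) or NULL (old behavior).
    return rounded if allow_precision_loss else None


new_behavior = fit_to_scale(Decimal("1.005"), 2, True)
old_behavior = fit_to_scale(Decimal("1.005"), 2, False)
exact = fit_to_scale(Decimal("1.50"), 2, False)
print(new_behavior, old_behavior, exact)
```

The flag in the sketch plays the role of `spark.sql.decimalOperations.allowPrecisionLoss`: when it is off, an inexact result becomes a missing value instead of a rounded one.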

Hive's behavior mirrors SQL Server's. The only difference is that the precision and scale are adjusted for all arithmetic operations in Hive, while SQL Server's documentation says it does so only for multiplications and divisions. This PR follows Hive's behavior.

A more detailed explanation is available here: https://mail-archives.apache.org/mod_mbox/spark-dev/201712.mbox/%3CCAEorWNAJ4TxJR9NBcgSFMD_VxTg8qVxusjP%2BAJP-x%2BJV9zH-yA%40mail.gmail.com%3E.

## How was this patch tested?

modified and added UTs. Comparisons with results of Hive and SQLServer.

Author: Marco Gaido <marcogaido91@gmail.com>

Closes#20023 from mgaido91/SPARK-22036.

## What changes were proposed in this pull request?

This patch bumps the master branch version to `2.4.0-SNAPSHOT`.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes#20222 from gatorsmile/bump24.

## What changes were proposed in this pull request?

Small typing correction - double word

## How was this patch tested?

Please review http://spark.apache.org/contributing.html before opening a pull request.

Author: Matthias Beaupère <matthias.beaupere@gmail.com>

Closes#20212 from matthiasbe/patch-1.

This change allows a user to submit a Spark application on Kubernetes while providing only a single image, instead of one image for each type of container. The image's entry point now takes an extra argument that identifies the process that is being started.

The configuration still allows the user to provide different images for each container type if they so desire.

On top of that, the entry point was simplified a bit to share more code; mainly, the same env variable is used to propagate the user-defined classpath to the different containers.

Aside from being modified to match the new behavior, the 'build-push-docker-images.sh' script was renamed to 'docker-image-tool.sh' to more closely match its purpose; the old name was a little awkward and now also not entirely correct, since there is a single image. It was also moved to 'bin' since it's not necessarily an admin tool.

Docs have been updated to match the new behavior.

Tested locally with minikube.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#20192 from vanzin/SPARK-22994.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18164 introduces the behavior changes. We need to document it.

## How was this patch tested?

N/A

Author: gatorsmile <gatorsmile@gmail.com>

Closes#20234 from gatorsmile/docBehaviorChange.

## What changes were proposed in this pull request?

doc update

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes#20198 from felixcheung/rrefreshdoc.

[SPARK-21786][SQL] When acquiring 'compressionCodecClassName' in 'ParquetOptions', `parquet.compression` needs to be considered.

## What changes were proposed in this pull request?

Since Hive 1.1, Hive allows users to set the Parquet compression codec via the table-level property `parquet.compression`. See the JIRA: https://issues.apache.org/jira/browse/HIVE-7858 . We already support `orc.compression` for ORC, so for external users it is more straightforward to support both. See the Stack Overflow question: https://stackoverflow.com/questions/36941122/spark-sql-ignores-parquet-compression-propertie-specified-in-tblproperties

On the Spark side, our table-level compression conf `compression` was added by #11464 in Spark 2.0.

We need to support both table-level confs. Users might also use the session-level conf `spark.sql.parquet.compression.codec`. The priority rule is as follows:

If a compression codec configuration is also found through Hive or Parquet, the precedence is `compression`, `parquet.compression`, `spark.sql.parquet.compression.codec`. Acceptable values include: none, uncompressed, snappy, gzip, lzo.

The rule for Parquet is consistent with the ORC after the change.

Changes:

1. Also read `compressionCodecClassName` from `parquet.compression`; the precedence order is `compression`, `parquet.compression`, `spark.sql.parquet.compression.codec`, just like what we do in `OrcOptions`.

2. Change `spark.sql.parquet.compression.codec` to support "none". `ParquetOptions` already treats "none" as equivalent to "uncompressed", but the session conf could not be set to "none".

3. Rename `compressionCode` to `compressionCodecClassName`.
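
The precedence described above can be sketched in a few lines. `resolve_parquet_codec` is a hypothetical function, not the `ParquetOptions` code, and the `snappy` fallback when nothing is set is an assumption of this sketch.

```python
# Table-level keys, highest priority first.
TABLE_KEYS = ("compression", "parquet.compression")
SESSION_KEY = "spark.sql.parquet.compression.codec"


def resolve_parquet_codec(table_options, session_conf):
    """Pick the codec by precedence and normalize 'none' to 'uncompressed'."""
    for key in TABLE_KEYS:
        if key in table_options:
            codec = table_options[key]
            break
    else:
        # No table-level setting: fall back to the session-level conf.
        codec = session_conf.get(SESSION_KEY, "snappy")
    codec = codec.lower()
    return "uncompressed" if codec == "none" else codec
```

A table that sets both `compression` and `parquet.compression` resolves to the former, and a table-level `NONE` overrides a session-level `snappy`.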

## How was this patch tested?

Add test.

Author: fjh100456 <fu.jinhua6@zte.com.cn>

Closes#20076 from fjh100456/ParquetOptionIssue.

## What changes were proposed in this pull request?

This pr modified `elt` to output binary for binary inputs.

`elt` in the current master always outputs data as a string. But in some databases (e.g., MySQL), if all inputs are binary, `elt` also outputs binary (this might be a small surprise).

This pr is related to #19977.
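
As a rough model of the new rule, the sketch below uses Python `bytes` for binary and `str` for string. This `elt` is a plain-Python stand-in, not the Catalyst expression; returning `None` for an out-of-range index and decoding binary as UTF-8 are assumptions of this sketch.

```python
def elt(n, *inputs):
    """Return the n-th input (1-based); binary only if every input is binary."""
    if all(isinstance(value, bytes) for value in inputs):
        outputs = inputs  # all binary: keep the binary type
    else:
        # Mixed inputs: everything is treated as a string.
        outputs = [v.decode("utf-8") if isinstance(v, bytes) else str(v)
                   for v in inputs]
    return outputs[n - 1] if 1 <= n <= len(outputs) else None
```

So `elt(2, b"a", b"b")` stays binary, while `elt(1, b"a", "b")` comes back as the string `"a"`.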

## How was this patch tested?

Added tests in `SQLQueryTestSuite` and `TypeCoercionSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#20135 from maropu/SPARK-22937.

- Make it possible to build images from a git clone.

- Make it easy to use minikube to test things.

Also fixed what seemed like a bug: the base image wasn't getting the tag provided in the command line. Adding the tag allows users to use multiple Spark builds in the same kubernetes cluster.

Tested by deploying images on minikube and running spark-submit from a dev environment; also by building the images with different tags and verifying "docker images" in minikube.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#20154 from vanzin/SPARK-22960.

## What changes were proposed in this pull request?

update R migration guide and vignettes

## How was this patch tested?

manually

Author: Felix Cheung <felixcheung_m@hotmail.com>

Closes#20106 from felixcheung/rreleasenote23.

## What changes were proposed in this pull request?

Fixing three small typos in the docs, in particular:

It take a `RDD` -> It takes an `RDD` (twice)

It take an `JavaRDD` -> It takes a `JavaRDD`

I didn't create any Jira issue for this minor thing, I hope it's ok.

## How was this patch tested?

visually by clicking on 'preview'

Author: Jirka Kremser <jkremser@redhat.com>

Closes#20108 from Jiri-Kremser/docs-typo.

## What changes were proposed in this pull request?

Currently, we do not guarantee the evaluation order of conjuncts in either the Filter or the Join operator. This is also true of mainstream RDBMS vendors like DB2 and MS SQL Server. Thus, we should also push down the deterministic predicates that come after the first non-deterministic predicate, if possible.
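
The change in which conjuncts get pushed down can be sketched as follows. `Predicate`, `split_old`, and `split_new` are hypothetical names for illustration, and the "old" rule is modeled here as pushing only the deterministic prefix, which is how this description implies it worked.

```python
from collections import namedtuple

Predicate = namedtuple("Predicate", ["sql", "deterministic"])


def split_old(conjuncts):
    """Old rule: push only the conjuncts before the first non-deterministic one."""
    for i, predicate in enumerate(conjuncts):
        if not predicate.deterministic:
            return conjuncts[:i], conjuncts[i:]
    return conjuncts, []


def split_new(conjuncts):
    """New rule: push every deterministic conjunct, wherever it appears."""
    pushed = [p for p in conjuncts if p.deterministic]
    kept = [p for p in conjuncts if not p.deterministic]
    return pushed, kept
```

For `a > 1 AND rand() > 0.5 AND b < 3`, the old split pushes only `a > 1`, while the new split pushes both `a > 1` and `b < 3`.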

## How was this patch tested?

Updated the existing test cases.

Author: gatorsmile <gatorsmile@gmail.com>

Closes#20069 from gatorsmile/morePushDown.

## What changes were proposed in this pull request?

This pr modified `concat` to concat binary inputs into a single binary output.

`concat` in the current master always outputs data as a string. But in some databases (e.g., PostgreSQL), if all inputs are binary, `concat` also outputs binary.

## How was this patch tested?

Added tests in `SQLQueryTestSuite` and `TypeCoercionSuite`.

Author: Takeshi Yamamuro <yamamuro@apache.org>

Closes#19977 from maropu/SPARK-22771.

## What changes were proposed in this pull request?

This PR updates the Kubernetes documentation corresponding to the following features/changes in #19954.

* Ability to use remote dependencies through the init-container.

* Ability to mount user-specified secrets into the driver and executor pods.

vanzin jiangxb1987 foxish

Author: Yinan Li <liyinan926@gmail.com>

Closes#20059 from liyinan926/doc-update.

## What changes were proposed in this pull request?

This PR contains documentation on the usage of Kubernetes scheduler in Spark 2.3, and a shell script to make it easier to build docker images required to use the integration. The changes detailed here are covered by https://github.com/apache/spark/pull/19717 and https://github.com/apache/spark/pull/19468 which have merged already.

## How was this patch tested?

The script has been in use for releases on our fork. Rest is documentation.

cc rxin mateiz (shepherd)

k8s-big-data SIG members & contributors: foxish ash211 mccheah liyinan926 erikerlandson ssuchter varunkatta kimoonkim tnachen ifilonenko

reviewers: vanzin felixcheung jiangxb1987 mridulm

TODO:

- [x] Add dockerfiles directory to built distribution. (https://github.com/apache/spark/pull/20007)

- [x] Change references to docker to instead say "container" (https://github.com/apache/spark/pull/19995)

- [x] Update configuration table.

- [x] Modify spark.kubernetes.allocation.batch.delay to take time instead of int (#20032)

Author: foxish <ramanathana@google.com>

Closes#19946 from foxish/update-k8s-docs.

## What changes were proposed in this pull request?

Like `Parquet`, users can use `ORC` with Apache Spark structured streaming. This PR adds `orc()` to `DataStreamReader` (Scala/Python) to make it as easy to create a streaming dataset with the ORC file format as with the other file formats. It also adds test coverage for the ORC data source and updates the documentation.

**BEFORE**

```scala
scala> spark.readStream.schema("a int").orc("/tmp/orc_ss").writeStream.format("console").start()
<console>:24: error: value orc is not a member of org.apache.spark.sql.streaming.DataStreamReader
       spark.readStream.schema("a int").orc("/tmp/orc_ss").writeStream.format("console").start()
```

**AFTER**

```scala
scala> spark.readStream.schema("a int").orc("/tmp/orc_ss").writeStream.format("console").start()
res0: org.apache.spark.sql.streaming.StreamingQuery = org.apache.spark.sql.execution.streaming.StreamingQueryWrapper@678b3746

scala>
-------------------------------------------
Batch: 0
-------------------------------------------
+---+
|  a|
+---+
|  1|
+---+
```

## How was this patch tested?

Pass the newly added test cases.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#19975 from dongjoon-hyun/SPARK-22781.

## What changes were proposed in this pull request?

Easy fix in the link.

## How was this patch tested?

Tested manually

Author: Mahmut CAVDAR <mahmutcvdr@gmail.com>

Closes#19996 from mcavdar/master.

This PR contains implementation of the basic submission client for the cluster mode of Spark on Kubernetes. It's step 2 from the step-wise plan documented [here](https://github.com/apache-spark-on-k8s/spark/issues/441#issuecomment-330802935).

This addition is covered by the [SPIP](http://apache-spark-developers-list.1001551.n3.nabble.com/SPIP-Spark-on-Kubernetes-td22147.html) vote which passed on Aug 31.

This PR and #19468 together form an MVP of Spark on Kubernetes that allows users to run Spark applications that use resources locally within the driver and executor containers on Kubernetes 1.6 and up. Some changes on pom and build/test setup are copied over from #19468 to make this PR self-contained and testable.

The submission client is mainly responsible for creating the Kubernetes pod that runs the Spark driver. It follows a step-based approach to construct the driver pod, as the code under the `submit.steps` package shows. The steps are orchestrated by `DriverConfigurationStepsOrchestrator`. `Client` creates the driver pod and waits for the application to complete if it's configured to do so, which is the case by default.

This PR also contains Dockerfiles of the driver and executor images. They are included because some of the environment variables set in the code would not make sense without referring to the Dockerfiles.

* The patch contains unit tests which are passing.

* Manual testing: ./build/mvn -Pkubernetes clean package succeeded.

* It is a subset of the entire changelist hosted at http://github.com/apache-spark-on-k8s/spark which is in active use in several organizations.

* There is integration testing enabled in the fork currently hosted by PepperData which is being moved over to RiseLAB CI.

* Detailed documentation on trying out the patch in its entirety is in: https://apache-spark-on-k8s.github.io/userdocs/running-on-kubernetes.html

cc rxin felixcheung mateiz (shepherd)

k8s-big-data SIG members & contributors: mccheah foxish ash211 ssuchter varunkatta kimoonkim erikerlandson tnachen ifilonenko liyinan926

Author: Yinan Li <liyinan926@gmail.com>

Closes#19717 from liyinan926/spark-kubernetes-4.

## What changes were proposed in this pull request?

Update broadcast behavior changes in migration section.

## How was this patch tested?

N/A

Author: Yuming Wang <wgyumg@gmail.com>

Closes#19858 from wangyum/SPARK-22489-migration.

## What changes were proposed in this pull request?

The spark properties for configuring the ContextCleaner are not documented in the official documentation at https://spark.apache.org/docs/latest/configuration.html#available-properties.

This PR adds the doc.

## How was this patch tested?

Manual.

```
cd docs
jekyll build
open _site/configuration.html
```

Author: gaborgsomogyi <gabor.g.somogyi@gmail.com>

Closes#19826 from gaborgsomogyi/SPARK-22428.

## What changes were proposed in this pull request?

How to reproduce:

```scala
import org.apache.spark.sql.execution.joins.BroadcastHashJoinExec

spark.createDataFrame(Seq((1, "4"), (2, "2"))).toDF("key", "value").createTempView("table1")
spark.createDataFrame(Seq((1, "1"), (2, "2"))).toDF("key", "value").createTempView("table2")

val bl = sql("SELECT /*+ MAPJOIN(t1) */ * FROM table1 t1 JOIN table2 t2 ON t1.key = t2.key").queryExecution.executedPlan

println(bl.children.head.asInstanceOf[BroadcastHashJoinExec].buildSide)
```

The result is `BuildRight`, but it should be `BuildLeft`. This PR fixes this issue.

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes#19714 from wangyum/SPARK-22489.

## What changes were proposed in this pull request?

This is a stripped down version of the `KubernetesClusterSchedulerBackend` for Spark with the following components:

- Static Allocation of Executors

- Executor Pod Factory

- Executor Recovery Semantics

It's step 1 from the step-wise plan documented [here](https://github.com/apache-spark-on-k8s/spark/issues/441#issuecomment-330802935).

This addition is covered by the [SPIP vote](http://apache-spark-developers-list.1001551.n3.nabble.com/SPIP-Spark-on-Kubernetes-td22147.html) which passed on Aug 31.

## How was this patch tested?

- The patch contains unit tests which are passing.

- Manual testing: `./build/mvn -Pkubernetes clean package` succeeded.

- It is a **subset** of the entire changelist hosted in http://github.com/apache-spark-on-k8s/spark which is in active use in several organizations.

- There is integration testing enabled in the fork currently [hosted by PepperData](spark-k8s-jenkins.pepperdata.org:8080) which is being moved over to RiseLAB CI.

- Detailed documentation on trying out the patch in its entirety is in: https://apache-spark-on-k8s.github.io/userdocs/running-on-kubernetes.html

cc rxin felixcheung mateiz (shepherd)

k8s-big-data SIG members & contributors: mccheah ash211 ssuchter varunkatta kimoonkim erikerlandson liyinan926 tnachen ifilonenko

Author: Yinan Li <liyinan926@gmail.com>

Author: foxish <ramanathana@google.com>

Author: mcheah <mcheah@palantir.com>

Closes#19468 from foxish/spark-kubernetes-3.

## What changes were proposed in this pull request?

When converting Pandas DataFrame/Series from/to Spark DataFrame using `toPandas()` or pandas udfs, timestamp values respect the Python system timezone instead of the session timezone.

For example, let's say we use `"America/Los_Angeles"` as session timezone and have a timestamp value `"1970-01-01 00:00:01"` in the timezone. Btw, I'm in Japan so Python timezone would be `"Asia/Tokyo"`.

The timestamp value from current `toPandas()` will be the following:

```
>>> spark.conf.set("spark.sql.session.timeZone", "America/Los_Angeles")
>>> df = spark.createDataFrame([28801], "long").selectExpr("timestamp(value) as ts")
>>> df.show()
+-------------------+
|                 ts|
+-------------------+
|1970-01-01 00:00:01|
+-------------------+
>>> df.toPandas()
                   ts
0 1970-01-01 17:00:01
```

As you can see, the value becomes `"1970-01-01 17:00:01"` because it respects Python timezone.

As we discussed in #18664, we consider this behavior a bug, and the value should be `"1970-01-01 00:00:01"`.
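
The two renderings can be reproduced without Spark. The sketch below is only an illustration: the fixed offsets `PST` (UTC-8) and `JST` (UTC+9) stand in for `"America/Los_Angeles"` and `"Asia/Tokyo"` on that date, and `render` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets standing in for the named zones on 1970-01-01 (assumption).
PST = timezone(timedelta(hours=-8))
JST = timezone(timedelta(hours=9))


def render(epoch_seconds, tz):
    """Format an epoch timestamp as wall-clock time in the given zone."""
    return datetime.fromtimestamp(epoch_seconds, tz).strftime("%Y-%m-%d %H:%M:%S")


session_view = render(28801, PST)  # what the session timezone should show
python_view = render(28801, JST)   # what the Python system timezone shows
```

The same instant, 28801 seconds after the epoch, reads as `1970-01-01 00:00:01` in the session timezone and `1970-01-01 17:00:01` in the Python timezone.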

## How was this patch tested?

Added tests and existing tests.

Author: Takuya UESHIN <ueshin@databricks.com>

Closes#19607 from ueshin/issues/SPARK-22395.

## What changes were proposed in this pull request?

As discussed in SPARK-19606, this adds a new config property named "spark.mesos.constraints.driver" for constraining drivers running on a Mesos cluster.

## How was this patch tested?

Added a corresponding unit test; also tested locally on a Mesos cluster.

Author: Paul Mackles <pmackles@adobe.com>

Closes#19543 from pmackles/SPARK-19606.

## What changes were proposed in this pull request?

Documentation about Mesos Reject Offer Configurations

## Related PR

https://github.com/apache/spark/pull/19510 for `spark.mem.max`

Author: Li, YanKit | Wilson | RIT <yankit.li@rakuten.com>

Closes#19555 from windkit/spark_22133.

## What changes were proposed in this pull request?

Add a description of incompatible Hive UDFs to the docs.

## How was this patch tested?

N/A

Author: Yuming Wang <wgyumg@gmail.com>

Closes#18833 from wangyum/SPARK-21625.

## What changes were proposed in this pull request?

Easy fix in the documentation, which is reporting that only numeric types and string are supported in type inference for partition columns, while Date and Timestamp are supported too since 2.1.0, thanks to SPARK-17388.

## How was this patch tested?

n/a

Author: Marco Gaido <mgaido@hortonworks.com>

Closes#19628 from mgaido91/SPARK-22398.

## What changes were proposed in this pull request?

Using zstd compression for Spark jobs spilling 100s of TBs of data, we could reduce the amount of data written to disk by as much as 50%. This translates to a significant latency gain because of reduced disk IO operations. CPU time degrades by 2-5% because of zstd compression overhead, but for jobs that are bottlenecked by disk IO, this hit can be taken.

## Benchmark

Please note that this benchmark is using real world compute heavy production workload spilling TBs of data to disk

| | zstd performance as compared to LZ4 |
| ------------- | -----:|
| spill/shuffle bytes | -48% |
| cpu time | +3% |
| cpu reservation time | -40% |
| latency | -40% |

## How was this patch tested?

Tested by running a few jobs spilling large amounts of data on the cluster; the amount of intermediate data written to disk was reduced by as much as 50%.

Author: Sital Kedia <skedia@fb.com>

Closes#18805 from sitalkedia/skedia/upstream_zstd.

## What changes were proposed in this pull request?

Update the url of reference paper.

## How was this patch tested?

It is comments, so nothing tested.

Author: bomeng <bmeng@us.ibm.com>

Closes#19614 from bomeng/22399.

Often times we want to impute custom values other than 'NaN'. My addition helps people locate this function without reading the API.

Author: tengpeng <tengpeng@users.noreply.github.com>

Closes#19600 from tengpeng/patch-5.

## Background

In #18837 , ArtRand added Mesos secrets support to the dispatcher. **This PR is to add the same secrets support to the drivers.** This means if the secret configs are set, the driver will launch executors that have access to either env or file-based secrets.

One use case for this is to support TLS in the driver <=> executor communication.

## What changes were proposed in this pull request?

Most of the changes are a refactor of the dispatcher secrets support (#18837) - moving it to a common place that can be used by both the dispatcher and drivers. The same goes for the unit tests.

## How was this patch tested?

There are four config combinations: [env or file-based] x [value or reference secret]. For each combination:

- Added a unit test.

- Tested in DC/OS.

Author: Susan X. Huynh <xhuynh@mesosphere.com>

Closes#19437 from susanxhuynh/sh-mesos-driver-secret.

## What changes were proposed in this pull request?

The event log server has five configuration parameters in total. This PR adds descriptions of the two that were missing from the docs, so that users can find and use them more easily.

## How was this patch tested?

manual tests

Author: guoxiaolong <guo.xiaolong1@zte.com.cn>

Closes#19242 from guoxiaolongzte/addEventLogConf.

## What changes were proposed in this pull request?

The HTTP Strict-Transport-Security response header (often abbreviated as HSTS) is a security feature that lets a web site tell browsers that it should only be communicated with using HTTPS, instead of using HTTP.

Note: The Strict-Transport-Security header is ignored by the browser when your site is accessed using HTTP; this is because an attacker may intercept HTTP connections and inject the header or remove it. When your site is accessed over HTTPS with no certificate errors, the browser knows your site is HTTPS capable and will honor the Strict-Transport-Security header.

The HTTP X-XSS-Protection response header is a feature of Internet Explorer, Chrome and Safari that stops pages from loading when they detect reflected cross-site scripting (XSS) attacks.

The HTTP X-Content-Type-Options response header is used to protect against MIME sniffing vulnerabilities.
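
A minimal sketch of how the three headers could be assembled is shown below. `security_headers` and its parameters are hypothetical, not the Spark UI code; the default values are common settings for these headers, and sending HSTS only over HTTPS is a design choice of this sketch that follows from the note above.

```python
def security_headers(is_https, hsts="max-age=31536000",
                     xss_protection="1; mode=block", nosniff=True):
    """Build the response headers described above as a dict."""
    headers = {}
    if is_https and hsts:
        # Browsers ignore this header over plain HTTP, so skip it there.
        headers["Strict-Transport-Security"] = hsts
    if xss_protection:
        headers["X-XSS-Protection"] = xss_protection
    if nosniff:
        headers["X-Content-Type-Options"] = "nosniff"
    return headers
```

Calling `security_headers(is_https=False)` returns only the two non-HSTS headers.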

## How was this patch tested?

Checked on my system locally.

<img width="750" alt="screen shot 2017-10-03 at 6 49 20 pm" src="https://user-images.githubusercontent.com/6433184/31127234-eadf7c0c-a86b-11e7-8e5d-f6ea3f97b210.png">

Author: krishna-pandey <krish.pandey21@gmail.com>

Author: Krishna Pandey <krish.pandey21@gmail.com>

Closes#19419 from krishna-pandey/SPARK-22188.

Hive delegation tokens are only needed when the Spark driver has no access to the kerberos TGT. That happens only in two situations:

- when using a proxy user

- when using cluster mode without a keytab

This change modifies the Hive provider so that it only generates delegation tokens in those situations, and tweaks the YARN AM so that it makes the proper user visible to the Hive code when running with keytabs, so that the TGT can be used instead of a delegation token.
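
The two situations above boil down to a small predicate. The function and argument names below are hypothetical and only restate the rule from this description; they are not the Hive provider's code.

```python
def needs_hive_delegation_token(is_proxy_user, deploy_mode, has_keytab):
    """True only when the driver cannot rely on the kerberos TGT."""
    return is_proxy_user or (deploy_mode == "cluster" and not has_keytab)
```

Client mode without a proxy user, and cluster mode with a keytab, both return `False`, so the TGT is used instead.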

The effect of this change is that now it's possible to initialize multiple, non-concurrent SparkContext instances in the same JVM. Before, the second invocation would fail to fetch a new Hive delegation token, which then could make the second (or third or...) application fail once the token expired. With this change, the TGT will be used to authenticate to the HMS instead.

This change also avoids polluting the current logged in user's credentials when launching applications. The credentials are copied only when running applications as a proxy user. This makes it possible to implement SPARK-11035 later, where multiple threads might be launching applications, and each app should have its own set of credentials.

Tested by verifying HDFS and Hive access in following scenarios:

- client and cluster mode

- client and cluster mode with proxy user

- client and cluster mode with principal / keytab

- long-running cluster app with principal / keytab

- pyspark app that creates (and stops) multiple SparkContext instances through its lifetime

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#19509 from vanzin/SPARK-22290.

## What changes were proposed in this pull request?

I see that block updates are not logged to the event log.

This makes sense as a default for performance reasons.

However, I find it helpful when trying to get a better understanding of caching for a job to be able to log these updates.

This PR adds a configuration setting `spark.eventLog.blockUpdates` (defaulting to false) which allows block updates to be recorded in the log.

This contribution is original work which is licensed to the Apache Spark project.

## How was this patch tested?

Current and additional unit tests.

Author: Michael Mior <mmior@uwaterloo.ca>

Closes#19263 from michaelmior/log-block-updates.

## What changes were proposed in this pull request?

In the current BlockManager's `getRemoteBytes`, it will call `BlockTransferService#fetchBlockSync` to get remote block. In the `fetchBlockSync`, Spark will allocate a temporary `ByteBuffer` to store the whole fetched block. This will potentially lead to OOM if block size is too big or several blocks are fetched simultaneously in this executor.

So this PR borrows the idea from shuffle fetch: spill a large block to local disk before it is consumed by upstream code. The behavior is controlled by a newly added configuration: if the block size is smaller than the threshold, the block is kept in memory; otherwise it is first spilled to disk and then read from the disk file.

To achieve this, I did the following:

1. Rename `TempShuffleFileManager` to `TempFileManager`, since it is no longer used only by shuffle.

2. Add a new `TempFileManager` to manage the files of fetched remote blocks. The files are tracked by weak references and are deleted once they are no longer in use.
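
The threshold decision can be sketched in plain Python. `fetch_block` and `max_in_memory_bytes` are hypothetical names, not the `BlockManager` API. This sketch reads the whole file back and deletes it explicitly, whereas a real consumer would stream from disk and the PR relies on weak references for cleanup.

```python
import os
import tempfile


def fetch_block(data, max_in_memory_bytes, spill_dir=None):
    """Return (location, bytes) for a fetched block."""
    if len(data) < max_in_memory_bytes:
        return "memory", data  # small block: keep it in memory
    # Large block: spill to a temp file, then read it back from disk.
    fd, path = tempfile.mkstemp(dir=spill_dir)
    try:
        with os.fdopen(fd, "wb") as out:
            out.write(data)
        with open(path, "rb") as spilled:
            return "disk", spilled.read()
    finally:
        os.remove(path)  # explicit cleanup in place of weak references
```

A 100-byte block with a 10-byte threshold goes through the disk path, while a 3-byte block stays in memory.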

## How was this patch tested?

This was tested by adding UT, also manual verification in local test to perform GC to clean the files.

Author: jerryshao <sshao@hortonworks.com>

Closes#19476 from jerryshao/SPARK-22062.

## What changes were proposed in this pull request?

Adds links to the fork that provides integration with Nomad, in the same places the k8s integration is linked to.

## How was this patch tested?

I clicked on the links to make sure they're correct ;)

Author: Ben Barnard <barnardb@gmail.com>

Closes#19354 from barnardb/link-to-nomad-integration.

## What changes were proposed in this pull request?

Added documentation for loading csv files into Dataframes

## How was this patch tested?

/dev/run-tests

Author: Jorge Machado <jorge.w.machado@hotmail.com>

Closes#19429 from jomach/master.

## What changes were proposed in this pull request?

The number of cores assigned to each executor is configurable. When this is not explicitly set, multiple executors from the same application may be launched on the same worker too.

## How was this patch tested?

N/A

Author: liuxian <liu.xian3@zte.com.cn>

Closes#18711 from 10110346/executorcores.

## What changes were proposed in this pull request?

Move flume behind a profile, take 2. See https://github.com/apache/spark/pull/19365 for most of the back-story.

This change should fix the problem by removing the examples module dependency and moving Flume examples to the module itself. It also adds deprecation messages, per a discussion on dev about deprecating for 2.3.0.

## How was this patch tested?

Existing tests, which still enable flume integration.

Author: Sean Owen <sowen@cloudera.com>

Closes#19412 from srowen/SPARK-22142.2.

## What changes were proposed in this pull request?

Add 'flume' profile to enable Flume-related integration modules

## How was this patch tested?

Existing tests; no functional change

Author: Sean Owen <sowen@cloudera.com>

Closes#19365 from srowen/SPARK-22142.

The application listing is still generated from event logs, but is now stored in a KVStore instance. By default an in-memory store is used, but a new config allows setting a local disk path to store the data, in which case a LevelDB store will be created.

The provider stores things internally using the public REST API types; I believe this is better going forward since it will make it easier to get rid of the internal history server API which is mostly redundant at this point.

I also added a finalizer to LevelDBIterator, to make sure that resources are eventually released. This helps when code iterates but does not exhaust the iterator, thus not triggering the auto-close code.

HistoryServerSuite was modified to not re-start the history server unnecessarily; this makes the json validation tests run more quickly.

Author: Marcelo Vanzin <vanzin@cloudera.com>

Closes#18887 from vanzin/SPARK-20642.

## What changes were proposed in this pull request?

The `percentile_approx` function previously accepted numeric type input and output double type results.

But since all numeric types, date and timestamp types are represented as numerics internally, `percentile_approx` can support them easily.

After this PR, it supports date type, timestamp type and numeric types as input types. The result type is also changed to be the same as the input type, which is more reasonable for percentiles.

This change is also required when we generate equi-height histograms for these types.
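
The idea of computing on the internal numeric form and converting back to the input type can be sketched like this. Note that `percentile` below is an exact nearest-rank computation, not the approximate algorithm behind `percentile_approx`, and using day ordinals for dates is an assumption of this sketch.

```python
import math
from datetime import date


def percentile(values, fraction):
    """Nearest-rank percentile whose result has the same type as the input."""
    if not values or not 0.0 <= fraction <= 1.0:
        raise ValueError("need a non-empty input and a fraction in [0, 1]")
    is_date = isinstance(values[0], date)
    # Dates are handled through their numeric (day ordinal) representation.
    numeric = sorted(v.toordinal() if is_date else v for v in values)
    rank = max(1, math.ceil(fraction * len(numeric)))
    picked = numeric[rank - 1]
    return date.fromordinal(picked) if is_date else picked
```

Given three dates, the median comes back as a `date` rather than a double, which is the type change this PR makes.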

## How was this patch tested?

Added a new test and modified some existing tests.

Author: Zhenhua Wang <wangzhenhua@huawei.com>

Closes#19321 from wzhfy/approx_percentile_support_types.

## What changes were proposed in this pull request?

Updated docs so that a line of python in the quick start guide executes. Closes#19283

## How was this patch tested?

Existing tests.

Author: John O'Leary <jgoleary@gmail.com>

Closes#19326 from jgoleary/issues/22107.

## What changes were proposed in this pull request?

The documentation on File DStreams is enhanced to

1. Detail the exact timestamp logic for examining directories and files.

2. Detail how object stores differ from filesystems, and so how using them as a source of data should be treated with caution, possibly publishing data to the store differently (direct PUTs as opposed to stage + rename).

## How was this patch tested?

n/a

Author: Steve Loughran <stevel@hortonworks.com>

Closes#17743 from steveloughran/cloud/SPARK-20448-document-dstream-blobstore.

## What changes were proposed in this pull request?

In the current Spark, submitting an application on YARN with remote resources, e.g. `./bin/spark-shell --jars http://central.maven.org/maven2/com/github/swagger-akka-http/swagger-akka-http_2.11/0.10.1/swagger-akka-http_2.11-0.10.1.jar --master yarn-client -v`, fails with:

```
java.io.IOException: No FileSystem for scheme: http
    at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2586)
    at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2593)
    at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:91)
    at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2632)
    at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2614)
    at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:370)
    at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)
    at org.apache.spark.deploy.yarn.Client.copyFileToRemote(Client.scala:354)
    at org.apache.spark.deploy.yarn.Client.org$apache$spark$deploy$yarn$Client$$distribute$1(Client.scala:478)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11$$anonfun$apply$6.apply(Client.scala:600)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11$$anonfun$apply$6.apply(Client.scala:599)
    at scala.collection.mutable.ArraySeq.foreach(ArraySeq.scala:74)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11.apply(Client.scala:599)
    at org.apache.spark.deploy.yarn.Client$$anonfun$prepareLocalResources$11.apply(Client.scala:598)
    at scala.collection.immutable.List.foreach(List.scala:381)
    at org.apache.spark.deploy.yarn.Client.prepareLocalResources(Client.scala:598)
    at org.apache.spark.deploy.yarn.Client.createContainerLaunchContext(Client.scala:848)
    at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:173)
```

This is because `YARN#client` assumes resources are on a Hadoop-compatible FS. To fix this problem, this PR proposes to download remote http(s) resources to local disk and add the downloaded files to the dist cache. This solution has one downside: remote resources are downloaded and then uploaded again. However, this applies only to remote http(s) resources, and the overhead is not that big. The advantage of this solution is that it is simple and the code changes are restricted to `SparkSubmit`.
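
Deciding which resources need the extra download step is a matter of looking at the URI scheme. The sketch below is illustrative only: `plan_resources` is a hypothetical helper, not the `SparkSubmit` code, and it only classifies URIs without downloading anything.

```python
from urllib.parse import urlparse

REMOTE_SCHEMES = {"http", "https"}


def plan_resources(uris):
    """Split resource URIs into (download_first, pass_through)."""
    download_first, pass_through = [], []
    for uri in uris:
        scheme = urlparse(uri).scheme.lower()
        # Only http(s) resources take the download-then-upload path.
        target = download_first if scheme in REMOTE_SCHEMES else pass_through
        target.append(uri)
    return download_first, pass_through
```

Resources on HDFS or on the local filesystem are passed through unchanged, so only http(s) jars pay the double transfer.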

## How was this patch tested?

Unit test added, also verified in local cluster.

Author: jerryshao <sshao@hortonworks.com>

Closes#19130 from jerryshao/SPARK-21917.

## What changes were proposed in this pull request?

https://github.com/apache/spark/pull/18266 added a feature for reading a JDBC table with a custom schema, but it requires specifying all the fields. For simplicity, this PR supports specifying only a subset of the fields.
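The effect of a partial custom schema can be sketched as an override of the inferred column types, with unspecified columns keeping what was inferred. This is a plain-Python illustration rather than Spark's implementation; the function name `apply_partial_schema` and the case-insensitive name matching are assumptions of the sketch.

```python
def apply_partial_schema(inferred, custom):
    """Override inferred column types with user-specified ones.

    inferred: list of (column_name, type_name) pairs in table order.
    custom:   dict mapping column_name -> type_name for a subset of columns.
    Columns not named in `custom` keep their inferred type.
    """
    # Assumption for this sketch: column names are matched case-insensitively.
    overrides = {name.lower(): dtype for name, dtype in custom.items()}
    return [(name, overrides.get(name.lower(), dtype)) for name, dtype in inferred]
```

For example, overriding only `ID` and `N1` leaves every other column of the table with its inferred type.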

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes#19231 from wangyum/SPARK-22002.

## What changes were proposed in this pull request?

The auto-generated Oracle schema is sometimes not what we expect:

- `number(1)` is automatically mapped to BooleanType, which is sometimes not what we expect, per [SPARK-20921](https://issues.apache.org/jira/browse/SPARK-20921).

- `number` is automatically mapped to Decimal(38,10), which fails to read large values, per [SPARK-20427](https://issues.apache.org/jira/browse/SPARK-20427).

This PR fixes the issue by supporting a custom schema, as follows:

```scala

import java.util.Properties

val props = new Properties()

// Override the auto-inferred types for the listed columns.
props.put("customSchema", "ID decimal(38, 0), N1 int, N2 boolean")

val dfRead = spark.read.jdbc(jdbcUrl, "tableWithCustomSchema", props)

dfRead.show()

```

or

```sql

CREATE TEMPORARY VIEW tableWithCustomSchema

USING org.apache.spark.sql.jdbc

OPTIONS (url '$jdbcUrl', dbTable 'tableWithCustomSchema', customSchema 'ID decimal(38, 0), N1 int, N2 boolean')

```

## How was this patch tested?

unit tests

Author: Yuming Wang <wgyumg@gmail.com>

Closes#18266 from wangyum/SPARK-20427.

## What changes were proposed in this pull request?

Put Kafka 0.8 support behind a kafka-0-8 profile.

## How was this patch tested?

Existing tests. However, until the PR builder and Jenkins configs are updated, the effect is that Kafka 0.8 support is not built or tested at all.

Author: Sean Owen <sowen@cloudera.com>

Closes#19134 from srowen/SPARK-21893.

## What changes were proposed in this pull request?

Added tunable parallelism to the pyspark implementation of one vs. rest classification. Added a parallelism parameter to the Scala implementation of one vs. rest along with functionality for using the parameter to tune the level of parallelism.

I am taking over PR #18281 because the original author is busy and we need to merge this soon.

Once this is merged, we can close #18281.

## How was this patch tested?

Test suite added.

Author: Ajay Saini <ajays725@gmail.com>

Author: WeichenXu <weichen.xu@databricks.com>

Closes#19110 from WeichenXu123/spark-21027.

## What changes were proposed in this pull request?

Recently, I found two unreachable links in the document and fixed them.

Because the changes are small and limited to documentation, I did not file a JIRA issue; please let me know if you think I should.

## How was this patch tested?

Tested manually.

Author: Kousuke Saruta <sarutak@oss.nttdata.co.jp>

Closes#19195 from sarutak/fix-unreachable-link.

## What changes were proposed in this pull request?

Fixed wrong documentation for Mean Absolute Error.

Even though the code is correct for the MAE:

```scala

@Since("1.2.0")

def meanAbsoluteError: Double = {

summary.normL1(1) / summary.count

}

```

In the documentation the division by N is missing.
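For reference, the corrected definition is the L1 norm of the residuals divided by the number of observations, mirroring `summary.normL1(1) / summary.count` above. A minimal standalone sketch follows; the function name and argument names are illustrative and not part of Spark's API.

```python
def mean_absolute_error(predictions, observations):
    """MAE = (1 / N) * sum(|prediction_i - observation_i|)."""
    if len(predictions) != len(observations):
        raise ValueError("predictions and observations must have the same length")
    if not predictions:
        raise ValueError("at least one observation is required")
    # L1 norm of the residual vector ...
    l1_norm = sum(abs(p - o) for p, o in zip(predictions, observations))
    # ... divided by N, the step the documentation had omitted.
    return l1_norm / len(predictions)
```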

## How was this patch tested?

All of spark tests were run.

Author: FavioVazquez <favio.vazquezp@gmail.com>

Author: faviovazquez <favio.vazquezp@gmail.com>

Author: Favio André Vázquez <favio.vazquezp@gmail.com>

Closes#19190 from FavioVazquez/mae-fix.

## What changes were proposed in this pull request?

```

echo '{"field": 1}

{"field": 2}

{"field": "3"}' >/tmp/sample.json

```

```scala

import org.apache.spark.sql.types._

val schema = new StructType()

.add("field", ByteType)

.add("_corrupt_record", StringType)

val file = "/tmp/sample.json"

val dfFromFile = spark.read.schema(schema).json(file)

scala> dfFromFile.show(false)

+-----+---------------+

|field|_corrupt_record|

+-----+---------------+

|1    |null           |

|2    |null           |

|null |{"field": "3"} |

+-----+---------------+

scala> dfFromFile.filter($"_corrupt_record".isNotNull).count()

res1: Long = 0

scala> dfFromFile.filter($"_corrupt_record".isNull).count()

res2: Long = 3

```

When the `requiredSchema` contains only `_corrupt_record`, the derived `actualSchema` is empty and `_corrupt_record` is null for every row. This PR detects that situation and raises an exception with a message describing a reasonable workaround, so that users know what happened and how to fix the query.

## How was this patch tested?

Added test case.

Author: Jen-Ming Chung <jenmingisme@gmail.com>

Closes#18865 from jmchung/SPARK-21610.

## What changes were proposed in this pull request?

Since [SPARK-15639](https://github.com/apache/spark/pull/13701), `spark.sql.parquet.cacheMetadata` and `PARQUET_CACHE_METADATA` are no longer used. This PR removes them from SQLConf and the docs.

## How was this patch tested?

Passes the existing Jenkins tests.

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#19129 from dongjoon-hyun/SPARK-13656.

## What changes were proposed in this pull request?

Modified `CrossValidator` and `TrainValidationSplit` to be able to evaluate models in parallel for a given parameter grid. The level of parallelism is controlled by a parameter `numParallelEval` used to schedule a number of models to be trained/evaluated so that the jobs can be run concurrently. This is a naive approach that does not check the cluster for needed resources, so care must be taken by the user to tune the parameter appropriately. The default value is `1` which will train/evaluate in serial.
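The scheduling idea can be sketched outside Spark with a bounded thread pool: each parameter setting is evaluated as its own task, and at most `parallelism` tasks run at once. This is a simplified illustration, not the `CrossValidator` code; `select_best`, `evaluate` and `parallelism` are hypothetical names, and the threads only stand in for concurrent job submission.

```python
from concurrent.futures import ThreadPoolExecutor


def select_best(param_grid, evaluate, parallelism=1):
    """Evaluate every parameter setting and return the best (params, score).

    `evaluate` maps a parameter setting to a score where larger is better.
    At most `parallelism` evaluations run at once; 1 means serial.
    """
    if parallelism < 1:
        raise ValueError("parallelism must be >= 1")
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        # map() preserves input order, so ties resolve the same way
        # regardless of how many workers are used.
        scores = list(pool.map(evaluate, param_grid))
    best = max(range(len(param_grid)), key=lambda i: scores[i])
    return param_grid[best], scores[best]
```

Because results are collected in grid order, the selected setting does not depend on the level of parallelism. Like the approach described above, the sketch does nothing to check that enough resources are available.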

## How was this patch tested?

Added unit tests for CrossValidator and TrainValidationSplit to verify that model selection is the same when run in serial vs parallel. Manual testing to verify tasks run in parallel when param is > 1. Added parameter usage to relevant examples.

Author: Bryan Cutler <cutlerb@gmail.com>

Closes#16774 from BryanCutler/parallel-model-eval-SPARK-19357.

## What changes were proposed in this pull request?

Update the line "For example, the data (12:09, cat) is out of order and late, and it falls in windows 12:05 - 12:15 and 12:10 - 12:20." to read "For example, the data (12:09, cat) is out of order and late, and it falls in windows 12:00 - 12:10 and 12:05 - 12:15." in the Structured Streaming programming guide.
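The corrected windows can be checked with a little arithmetic. The sketch below assumes 10-minute windows sliding every 5 minutes, which is what the window bounds in the sentence imply, and treats each window as the half-open interval [start, end). The helper names are illustrative.

```python
def windows_containing(minute, size=10, slide=5):
    """Return the [start, end) windows, in minutes, that contain `minute`."""
    # Latest window start at or before the event time.
    last_start = (minute // slide) * slide
    # Walk back one slide at a time while the window still covers the event.
    starts = range(last_start, minute - size, -slide)
    return sorted((s, s + size) for s in starts)


def fmt(minute):
    """Format minutes since midnight as HH:MM."""
    return f"{minute // 60:02d}:{minute % 60:02d}"


event = 12 * 60 + 9  # 12:09
for start, end in windows_containing(event):
    print(fmt(start), "-", fmt(end))
# prints:
# 12:00 - 12:10
# 12:05 - 12:15
```

The window 12:10 - 12:20 starts after 12:09, so it cannot contain the event.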

Author: Riccardo Corbella <r.corbella@reply.it>

Closes#19137 from riccardocorbella/bugfix.

## What changes were proposed in this pull request?

All built-in data sources support `Partition Discovery`. This PR updates the document to make that clear to users.

**AFTER**

<img width="906" alt="1" src="https://user-images.githubusercontent.com/9700541/30083628-14278908-9244-11e7-98dc-9ad45fe233a9.png">

## How was this patch tested?

```

SKIP_API=1 jekyll serve --watch

```

Author: Dongjoon Hyun <dongjoon@apache.org>

Closes#19139 from dongjoon-hyun/partitiondiscovery.

Mesos has secrets primitives for environment-based and file-based secrets. This PR adds that functionality to the Spark dispatcher, along with the appropriate configuration flags.

Unit tested and manually tested against a DC/OS cluster with Mesos 1.4.

Author: ArtRand <arand@soe.ucsc.edu>

Closes#18837 from ArtRand/spark-20812-dispatcher-secrets-and-labels.

This patch adds statsd sink to the current metrics system in spark core.

Author: Xiaofeng Lin <xlin@twilio.com>

Closes#9518 from xflin/statsd.

Change-Id: Ib8720e86223d4a650df53f51ceb963cd95b49a44

## What changes were proposed in this pull request?

This PR adds ML examples for the FeatureHasher transform in Scala, Java, Python.

## How was this patch tested?

Manually ran examples and verified that output is consistent for different APIs

Author: Bryan Cutler <cutlerb@gmail.com>

Closes#19024 from BryanCutler/ml-examples-FeatureHasher-SPARK-21810.

## What changes were proposed in this pull request?

Fair Scheduler can be built via one of the following options:

- By setting a `spark.scheduler.allocation.file` property,

- By placing `fairscheduler.xml` on the classpath.

These options are checked **in order** and the fair scheduler is built from the first one found. If the path is invalid, a `FileNotFoundException` is expected.

This PR adds unit test coverage for these use cases, plus a minor documentation change for the second option (`fairscheduler.xml` on the classpath) to inform users.

This PR is related to #16813 and was created separately to keep the patch content isolated and to help reviewers.

## How was this patch tested?

Added new Unit Tests.

Author: erenavsarogullari <erenavsarogullari@gmail.com>

Closes#16992 from erenavsarogullari/SPARK-19662.

The History Server is launched through SparkClassCommandBuilder. SPARK_CLASSPATH has been deprecated and removed. spark-submit takes a different route: spark.driver.extraClassPath specifies the additional jars that were previously listed in SPARK_CLASSPATH. Right now the only way to specify additional jars when launching daemons such as the History Server is SPARK_DIST_CLASSPATH (https://spark.apache.org/docs/latest/hadoop-provided.html), but I presume that is meant as a distribution classpath. It would be nice to have a config similar to spark.driver.extraClassPath for launching daemons such as the History Server.

Added a new environment variable, SPARK_DAEMON_CLASSPATH, to set the classpath for launching daemons. Tested and verified for the History Server and Standalone Mode.

## How was this patch tested?