### What changes were proposed in this pull request?

This PR fixes a build error of `OracleIntegrationSuite` with Scala 2.13.

### Why are the changes needed?

Build should pass with Scala 2.13.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed that the build passes with the following command.

```

$ build/sbt -Pdocker-integration-tests -Pscala-2.13 "docker-integration-tests/test:compile"

```

Closes #30660 from sarutak/fix-docker-integration-tests-for-scala-2.13.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to update `master` branch version to 3.2.0-SNAPSHOT.

### Why are the changes needed?

Start to prepare Apache Spark 3.2.0.

### Does this PR introduce _any_ user-facing change?

N/A.

### How was this patch tested?

Pass the CIs.

Closes #30606 from dongjoon-hyun/SPARK-3.2.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR extends the connection timeout to the DB server for DB2IntegrationSuite and its variants.

The container image ibmcom/db2 creates a database when it starts up.

The database creation can take over 2 minutes.

DB2IntegrationSuite and its variants use the container image but the connection timeout is set to 2 minutes so these suites almost always fail.
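To illustrate why the timeout matters, here is a minimal sketch of a wait-until-ready loop. It is not the suite's actual code: `ConnectionWait`, `waitUntilReady` and the exception type are hypothetical names chosen for this example. If the deadline is shorter than the database creation time, every attempt fails and the loop gives up.

```scala
import scala.annotation.tailrec
import scala.concurrent.duration._
import scala.util.{Failure, Success, Try}

object ConnectionWait {
  // Retries `attempt` until it succeeds or `deadline` has passed.
  // Hypothetical helper; the real suites may wait differently.
  @tailrec
  def waitUntilReady[T](deadline: Deadline, interval: FiniteDuration)(attempt: () => T): T =
    Try(attempt()) match {
      case Success(value) => value
      case Failure(e) if deadline.isOverdue() =>
        throw new IllegalStateException("Database was not ready before the deadline", e)
      case Failure(_) =>
        Thread.sleep(interval.toMillis)
        waitUntilReady(deadline, interval)(attempt)
    }
}
```

A caller would pass something like `3.minutes.fromNow` as the deadline; the concrete value is an assumption and is not taken from this PR.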

### Why are the changes needed?

To pass those suites.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed the suites pass with the following commands.

```

$ build/sbt -Pdocker-integration-tests -Phive -Phive-thriftserver package "testOnly org.apache.spark.sql.jdbc.DB2IntegrationSuite"

$ build/sbt -Pdocker-integration-tests -Phive -Phive-thriftserver package "testOnly org.apache.spark.sql.jdbc.v2.DB2IntegrationSuite"

$ build/sbt -Pdocker-integration-tests -Phive -Phive-thriftserver package "testOnly org.apache.spark.sql.jdbc.DB2KrbIntegrationSuite"

```

Closes #30583 from sarutak/extend-timeout-for-db2.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR adds an option to keep the container after subclasses of `DockerJDBCIntegrationSuite` (e.g. DB2IntegrationSuite, PostgresIntegrationSuite) finish.

By setting a system property `spark.test.docker.keepContainer` to `true`, we can use this option.
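A minimal sketch of how such a flag can be read and honored. The property name comes from this PR; `KeepContainerFlag`, `cleanup` and the default of `false` are assumptions made for illustration, not the suite's actual code.

```scala
object KeepContainerFlag {
  // Property name as given in this PR description.
  val PropertyName = "spark.test.docker.keepContainer"

  // Treats a missing property as false (an assumed default).
  def keepContainer(props: scala.collection.Map[String, String] = sys.props): Boolean =
    props.get(PropertyName).exists(_.trim.equalsIgnoreCase("true"))

  // Hypothetical cleanup hook: removes the container only when the flag is off.
  def cleanup(keep: Boolean)(remove: () => Unit): Unit =
    if (!keep) remove()
}
```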

### Why are the changes needed?

If an error occurs during the tests, it is useful to keep the container for debugging.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed that the container is kept after the test by the following commands.

```

# With sbt

$ build/sbt -Dspark.test.docker.keepContainer=true -Pdocker-integration-tests -Phive -Phive-thriftserver package "testOnly org.apache.spark.sql.jdbc.MariaDBKrbIntegrationSuite"

# With Maven

$ build/mvn -Dspark.test.docker.keepContainer=true -Pdocker-integration-tests -Phive -Phive-thriftserver -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.MariaDBKrbIntegrationSuite test

$ docker container ls

```

I also confirmed that there is no regression for any of the subclasses of `DockerJDBCIntegrationSuite` with sbt/Maven.

* MariaDBKrbIntegrationSuite

* DB2KrbIntegrationSuite

* PostgresKrbIntegrationSuite

* MySQLIntegrationSuite

* PostgresIntegrationSuite

* DB2IntegrationSuite

* MsSqlServerIntegrationSuite

* OracleIntegrationSuite

* v2.MySQLIntegrationSuite

* v2.PostgresIntegrationSuite

* v2.DB2IntegrationSuite

* v2.MsSqlServerIntegrationSuite

* v2.OracleIntegrationSuite

NOTE: `DB2IntegrationSuite`, `v2.DB2IntegrationSuite` and `DB2KrbIntegrationSuite` can fail because the connection timeout is too short. It's a separate issue and I'll fix it in #30583.

Closes #30601 from sarutak/keepContainer.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Add namespace support in the JDBC v2 Table Catalog by making `JDBCTableCatalog` extend `SupportsNamespaces`.

### Why are the changes needed?

To make the v2 JDBC implementation complete.

### Does this PR introduce _any_ user-facing change?

Yes. This adds the following to `JDBCTableCatalog`:

- listNamespaces

- listNamespaces(String[] namespace)

- namespaceExists(String[] namespace)

- loadNamespaceMetadata(String[] namespace)

- createNamespace

- alterNamespace

- dropNamespace

### How was this patch tested?

Add new docker tests

Closes #30473 from huaxingao/name_space.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Remove `USING _` from `CREATE TABLE` in the JDBCTableCatalog docker tests.

### Why are the changes needed?

Previously the `CREATE TABLE` syntax forced users to specify a provider, so we had to add `USING _`. Now that the problem has been fixed, we need to remove it.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests

Closes #30599 from huaxingao/remove_USING.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR changes mariadb_docker_entrypoint.sh to set the proper version automatically for mariadb-plugin-gssapi-server.

The proper version is derived from the version of mariadb-server.

Also, this PR makes it possible to use an arbitrary docker image by setting the environment variable `MARIADB_DOCKER_IMAGE_NAME`.
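The two ideas can be sketched as follows. This is an illustration only: the real logic lives in the test suite and in `mariadb_docker_entrypoint.sh`, and `MariaDbImage`, `imageName`, `pluginPackageSpec` and the fallback tag are assumptions. The variable name follows the test command used in this PR.

```scala
object MariaDbImage {
  // Picks the image from the environment, falling back to an assumed default tag.
  def imageName(env: Map[String, String] = sys.env): String =
    env.getOrElse("MARIADB_DOCKER_IMAGE_NAME", "mariadb:10.5")

  // Builds an apt package spec that pins the plugin to the server's own version,
  // instead of hard-coding a version that may disappear from the repository.
  def pluginPackageSpec(serverVersion: String): String =
    s"mariadb-plugin-gssapi-server=$serverVersion"
}
```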

### Why are the changes needed?

For `MariaDBKrbIntegrationSuite`, the version of `mariadb-plugin-gssapi-server` is currently set to `10.5.5` in `mariadb_docker_entrypoint.sh` but it's no longer available in the official apt repository and `MariaDBKrbIntegrationSuite` doesn't pass for now.

It seems that only the most recent three versions are available for each major version and they are `10.5.6`, `10.5.7` and `10.5.8` for now.

Further, the release cycle of MariaDB seems to be very rapid (1 ~ 2 months), so I don't think it's a good idea to pin a specific version of `mariadb-plugin-gssapi-server`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Confirmed that `MariaDBKrbIntegrationSuite` passes with the following commands.

```

$ build/sbt -Pdocker-integration-tests -Phive -Phive-thriftserver package "testOnly org.apache.spark.sql.jdbc.MariaDBKrbIntegrationSuite"

```

In this case, we can see what version of `mariadb-plugin-gssapi-server` is going to be installed in the following container log message.

```

Installing mariadb-plugin-gssapi-server=1:10.5.8+maria~focal

```

Or, we can set `MARIADB_DOCKER_IMAGE_NAME` to use a specific version of MariaDB.

```

$ MARIADB_DOCKER_IMAGE_NAME=mariadb:10.5.6 build/sbt -Pdocker-integration-tests -Phive -Phive-thriftserver package "testOnly org.apache.spark.sql.jdbc.MariaDBKrbIntegrationSuite"

```

```

Installing mariadb-plugin-gssapi-server=1:10.5.6+maria~focal

```

Closes #30515 from sarutak/fix-MariaDBKrbIntegrationSuite.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

change

```

./build/sbt -Pdocker-integration-tests "testOnly *xxxIntegrationSuite"

```

to

```

./build/sbt -Pdocker-integration-tests "testOnly org.apache.spark.sql.jdbc.xxxIntegrationSuite"

```

### Why are the changes needed?

We only want to start the v1 `xxxIntegrationSuite`, not the newly added `v2.xxxIntegrationSuite`.
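A small sketch of why the wildcard form selects both suites. It models a `*` wildcard as "any sequence of characters", which is a simplification of sbt's test filter; `TestFilter` and `matches` are hypothetical names.

```scala
import java.util.regex.Pattern

object TestFilter {
  // Translates a `*` wildcard pattern into a regex and matches the whole class name.
  def matches(pattern: String, className: String): Boolean = {
    val regex = pattern.split("\\*", -1).map(part => Pattern.quote(part)).mkString(".*")
    className.matches(regex)
  }
}
```

With this model, `*DB2IntegrationSuite` matches both `org.apache.spark.sql.jdbc.DB2IntegrationSuite` and `org.apache.spark.sql.jdbc.v2.DB2IntegrationSuite`, while the fully qualified name matches only the v1 suite.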

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manually checked

Closes #30448 from huaxingao/dockertest.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR intends to fix typos in the sub-modules: graphx, external, and examples.

Split per holdenk https://github.com/apache/spark/pull/30323#issuecomment-725159710

NOTE: The misspellings have been reported at 706a726f87 (commitcomment-44064356)

### Why are the changes needed?

Misspelled words make it harder to read / understand content.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

No testing was performed

Closes #30326 from jsoref/spelling-graphx.

Authored-by: Josh Soref <jsoref@users.noreply.github.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Currently in JDBCTableCatalog, we ignore the table options when creating a table.

```

// TODO (SPARK-32405): Apply table options while creating tables in JDBC Table Catalog
if (!properties.isEmpty) {
  logWarning("Cannot create JDBC table with properties, these properties will be " +
    "ignored: " + properties.asScala.map { case (k, v) => s"$k=$v" }.mkString("[", ", ", "]"))
}

```

### Why are the changes needed?

We need to apply the table options when creating a table.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

add new test

Closes #30154 from huaxingao/table_options.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

There are two similar compilation warnings about procedure-like declaration in Scala 2.13:

```

[WARNING] [Warn] /spark/core/src/main/scala/org/apache/spark/HeartbeatReceiver.scala:70: procedure syntax is deprecated for constructors: add `=`, as in method definition

```

and

```

[WARNING] [Warn] /spark/core/src/main/scala/org/apache/spark/storage/BlockManagerDecommissioner.scala:211: procedure syntax is deprecated: instead, add `: Unit =` to explicitly declare `run`'s return type

```

This PR is the first part of resolving SPARK-33352:

- For constructor definitions, add `=` to convert them to function syntax

- For method definitions without a return type, add `: Unit =` to convert them to function syntax
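A minimal before/after sketch of both rewrites. `Counter` is a made-up class for illustration and is not taken from the Spark code base; the deprecated forms are shown only in comments.

```scala
object ProcedureSyntax {
  class Counter(start: Int, step: Int) {
    private var value = start

    // Before (procedure syntax): def this(start: Int) { this(start, 1) }
    def this(start: Int) = { this(start, 1) }

    // Before (procedure syntax): def increment() { value += step }
    def increment(): Unit = { value += step }

    def current: Int = value
  }
}
```

The function-syntax forms compile on both Scala 2.12 and 2.13, which is why the change is compatible with both.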

### Why are the changes needed?

Eliminate compilation warnings in Scala 2.13 and this change should be compatible with Scala 2.12

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass the Jenkins or GitHub Action

Closes #30255 from LuciferYang/SPARK-29392-FOLLOWUP.1.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Override the default SQL strings for:

- ALTER TABLE RENAME COLUMN

- ALTER TABLE UPDATE COLUMN NULLABILITY

in the MsSqlServer JDBC dialect, following the official documentation.

Write MsSqlServer integration tests for JDBC.

### Why are the changes needed?

To add the support for alter table when interacting with MSSql Server.

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

added tests

Closes #30038 from ScrapCodes/mssql-dialect.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR changes some occurrences of `Seq` in `PostgresIntegrationSuite` to `scala.collection.Seq`.

When I ran `docker-integration-test`, I noticed that `PostgresIntegrationSuite` failed due to `ClassCastException`.

The reason is the same as what is resolved in SPARK-29292.
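A small sketch of this kind of failure, assuming the cause is that plain `Seq` means `scala.collection.immutable.Seq` on Scala 2.13 while it means `scala.collection.Seq` on 2.12. `SeqCast` and its members are hypothetical; the `ArrayBuffer` stands in for a mutable sequence returned as `Any`.

```scala
import scala.collection.mutable.ArrayBuffer

object SeqCast {
  // Stand-in for a value obtained as `Any`, backed by a mutable collection.
  val value: Any = ArrayBuffer(1, 2, 3)

  // Works on 2.12 and 2.13: ArrayBuffer is a scala.collection.Seq on both.
  def asGeneralSeq: scala.collection.Seq[Int] =
    value.asInstanceOf[scala.collection.Seq[Int]]

  // On 2.13 this cast targets immutable.Seq and throws ClassCastException
  // when called; on 2.12 it succeeds.
  def asDefaultSeq: Seq[Int] = value.asInstanceOf[Seq[Int]]
}
```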

### Why are the changes needed?

To pass `docker-integration-test` for Scala 2.13.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Ran the fixed `PostgresIntegrationSuite` and confirmed that it finished successfully.

Closes #30166 from sarutak/fix-toseq-postgresql.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Support rename column for mysql dialect.

### Why are the changes needed?

At the moment, column rename does not work for MySQL 5.x, so we should throw a proper exception in that case.
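A hedged sketch of the version check. `MySqlRename`, `renameColumnSql`, the exception type and the message are assumptions for illustration; the only fact relied on is that MySQL 8.0 supports `ALTER TABLE ... RENAME COLUMN ... TO ...`.

```scala
object MySqlRename {
  // Builds the rename statement for MySQL 8.0+, and fails clearly for older servers.
  def renameColumnSql(table: String, from: String, to: String, majorVersion: Int): String =
    if (majorVersion >= 8) {
      s"ALTER TABLE $table RENAME COLUMN $from TO $to"
    } else {
      throw new UnsupportedOperationException(
        s"Rename column requires MySQL 8.0 or later (server major version: $majorVersion)")
    }
}
```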

### Does this PR introduce _any_ user-facing change?

Yes, `column rename` with mysql dialect should work correctly.

### How was this patch tested?

Added tests for rename column.

Confirmed that the tests pass with both versions of MySQL.

* `export MYSQL_DOCKER_IMAGE_NAME=mysql:5.7.31`

* `export MYSQL_DOCKER_IMAGE_NAME=mysql:8.0`

Closes #30142 from ScrapCodes/mysql-dialect-rename.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Override the default SQL strings in Postgres Dialect for:

- ALTER TABLE UPDATE COLUMN TYPE

- ALTER TABLE UPDATE COLUMN NULLABILITY

Add new docker integration test suite `jdbc/v2/PostgreSQLIntegrationSuite.scala`
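For reference, the Postgres-specific statements have roughly the following shape. This is a sketch using standard PostgreSQL syntax; `PostgresAlter` and its method names are hypothetical and do not mirror the dialect's actual method signatures.

```scala
object PostgresAlter {
  // ALTER TABLE ... ALTER COLUMN ... TYPE ...
  def updateColumnType(table: String, column: String, newType: String): String =
    s"ALTER TABLE $table ALTER COLUMN $column TYPE $newType"

  // ALTER TABLE ... ALTER COLUMN ... {DROP | SET} NOT NULL
  def updateColumnNullability(table: String, column: String, nullable: Boolean): String = {
    val action = if (nullable) "DROP NOT NULL" else "SET NOT NULL"
    s"ALTER TABLE $table ALTER COLUMN $column $action"
  }
}
```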

### Why are the changes needed?

To support Postgres-specific ALTER TABLE syntax.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Add new test `PostgreSQLIntegrationSuite`

Closes #30089 from huaxingao/postgres_docker.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Override the default SQL strings for:

- ALTER TABLE UPDATE COLUMN TYPE

- ALTER TABLE UPDATE COLUMN NULLABILITY

in the MySQL JDBC dialect, following the official documentation.

Write MySQL integration tests for JDBC.

### Why are the changes needed?

To improve code coverage and to support the MySQL dialect for JDBC.

### Does this PR introduce _any_ user-facing change?

Yes, Support ALTER TABLE in JDBC v2 Table Catalog: add, update type and nullability of columns (MySQL dialect)

### How was this patch tested?

Added tests.

Closes #30025 from ScrapCodes/mysql-dialect.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Change `test-only` to `testOnly` in docs across the project.

### Why are the changes needed?

Since the sbt version was updated, the older way of running tests, i.e. `test-only`, is no longer valid.

### Does this PR introduce _any_ user-facing change?

docs update.

### How was this patch tested?

Manually.

Closes #30028 from ScrapCodes/fix-build/sbt-sample.

Authored-by: Prashant Sharma <prashsh1@in.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

- Override the default SQL strings in the DB2 Dialect for:

  * ALTER TABLE UPDATE COLUMN TYPE

  * ALTER TABLE UPDATE COLUMN NULLABILITY

- Add new docker integration test suite `jdbc/v2/DB2IntegrationSuite.scala`

### Why are the changes needed?

In SPARK-24907, we implemented JDBC v2 Table Catalog but it doesn't support some ALTER TABLE at the moment. This PR supports DB2 specific ALTER TABLE.

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

By running new integration test suite:

$ ./build/sbt -Pdocker-integration-tests "test-only *.DB2IntegrationSuite"

Closes #29972 from huaxingao/db2_docker.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Override the default SQL strings in the Oracle Dialect for:

- ALTER TABLE ADD COLUMN

- ALTER TABLE UPDATE COLUMN TYPE

- ALTER TABLE UPDATE COLUMN NULLABILITY

2. Add new docker integration test suite `jdbc/v2/OracleIntegrationSuite.scala`

### Why are the changes needed?

In SPARK-24907, we implemented JDBC v2 Table Catalog but it doesn't support some `ALTER TABLE` at the moment. This PR supports Oracle specific `ALTER TABLE`.

### Does this PR introduce _any_ user-facing change?

Yes

### How was this patch tested?

By running new integration test suite:

```

$ ./build/sbt -Pdocker-integration-tests "test-only *.OracleIntegrationSuite"

```

Closes #29912 from MaxGekk/jdbcv2-oracle-alter-table.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR intends to update the database versions used in the integration tests as follows:

- ibmcom/db2:11.5.0.0a => ibmcom/db2:11.5.4.0 in `DB2[Krb]IntegrationSuite`

- mysql:5.7.28 => mysql:5.7.31 in `MySQLIntegrationSuite`

- postgres:12.0 => postgres:13.0 in `Postgres[Krb]IntegrationSuite`

- mariadb:10.4 => mariadb:10.5 in `MariaDBKrbIntegrationSuite`

Also, this PR adds environment variables so that we can test with any database version. The variables are as follows (see the documentation in the code for how to use them):

- DB2_DOCKER_IMAGE_NAME

- MSSQLSERVER_DOCKER_IMAGE_NAME

- MYSQL_DOCKER_IMAGE_NAME

- POSTGRES_DOCKER_IMAGE_NAME

### Why are the changes needed?

To improve tests.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually checked.

Closes #29932 from maropu/UpdateIntegrationTests.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

At the moment only the built-in JDBC connection providers can be used, but there is a need to support additional databases and use cases. In this PR I'm proposing a new developer API named `JdbcConnectionProvider`. To show how an external JDBC connection provider can be implemented, I've created an example [here](https://github.com/gaborgsomogyi/spark-jdbc-connection-provider).

The PR contains the following changes:

* Added connection provider developer API

* Made the constructors of the JDBC connection providers no-arg => needed to load them w/ service loader

* Connection providers are now loaded w/ service loader

* Added tests to load providers independently

* Moved `SecurityConfigurationLock` into a central place because other areas will change global JVM security config
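A minimal sketch of service-loader based discovery, to show why a no-arg constructor is needed: `java.util.ServiceLoader` instantiates each registered class reflectively without arguments. `ExampleConnectionProvider` is a hypothetical interface for illustration and is not the developer API added by this PR.

```scala
import java.util.ServiceLoader
import scala.collection.mutable.ArrayBuffer

object ProviderLoading {
  // Hypothetical provider interface; implementations need a public no-arg constructor.
  trait ExampleConnectionProvider {
    def canHandle(url: String): Boolean
  }

  // Instantiates every implementation registered under META-INF/services.
  def loadProviders(): List[ExampleConnectionProvider] = {
    val found = ArrayBuffer.empty[ExampleConnectionProvider]
    val it = ServiceLoader.load(classOf[ExampleConnectionProvider]).iterator()
    while (it.hasNext) found += it.next()
    found.toList
  }
}
```

Without a registration file on the classpath, `loadProviders()` simply returns an empty list.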

### Why are the changes needed?

No custom authentication possibility.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

* Existing + additional unit tests

* Docker integration tests

* Tested manually the newly created external JDBC connection provider

Closes #29024 from gaborgsomogyi/SPARK-32001.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up PR of #29192 that adds integration tests for character arrays in `PostgresIntegrationSuite`.

### Why are the changes needed?

For better test coverage.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Add tests.

Closes #29397 from maropu/SPARK-32576-FOLLOWUP.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR intends to add tests to check if all the character types in PostgreSQL are supported.

The document for character types in PostgreSQL: https://www.postgresql.org/docs/current/datatype-character.html

Closes #29192.

### Why are the changes needed?

For better test coverage.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Add tests.

Closes #29394 from maropu/pr29192.

Lead-authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Co-authored-by: kujon <jakub.korzeniowski@vortexa.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR will remove references to these "blacklist" and "whitelist" terms besides the blacklisting feature as a whole, which can be handled in a separate JIRA/PR.

This touches quite a few files, but the changes are straightforward (variable/method/etc. name changes) and most quite self-contained.

### Why are the changes needed?

As per discussion on the Spark dev list, it will be beneficial to remove references to problematic language that can alienate potential community members. One such reference is "blacklist" and "whitelist". While it seems to me that there is some valid debate as to whether these terms have racist origins, the cultural connotations are inescapable in today's world.

### Does this PR introduce _any_ user-facing change?

In the test file `HiveQueryFileTest`, a developer has the ability to specify the system property `spark.hive.whitelist` to specify a list of Hive query files that should be tested. This system property has been renamed to `spark.hive.includelist`. The old property has been kept for compatibility, but will log a warning if used. I am open to feedback from others on whether keeping a deprecated property here is unnecessary given that this is just for developers running tests.

### How was this patch tested?

Existing tests should be suitable since no behavior changes are expected as a result of this PR.

Closes #28874 from xkrogen/xkrogen-SPARK-32036-rename-blacklists.

Authored-by: Erik Krogen <ekrogen@linkedin.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

`MariaDBKrbIntegrationSuite` fails because the docker image contains MariaDB version `1:10.4.12+maria~bionic` but `1:10.4.13+maria~bionic` came out and `mariadb-plugin-gssapi-server` installation triggered unwanted database upgrade inside the docker image. The main problem is that the docker image scripts are prepared to handle `1:10.4.12+maria~bionic` version and not any future development.

### Why are the changes needed?

Failing test.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Executed `MariaDBKrbIntegrationSuite` manually.

Closes #29025 from gaborgsomogyi/SPARK-32211.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

`OracleIntegrationSuite` is not using the latest oracle JDBC driver. In this PR I've upgraded the driver to the latest which supports JDK8, JDK9, and JDK11.

### Why are the changes needed?

Old JDBC driver.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Existing integration tests (especially `OracleIntegrationSuite`)

Closes #28893 from gaborgsomogyi/SPARK-32049.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

When loading DataFrames from JDBC datasource with Kerberos authentication, remote executors (yarn-client/cluster etc. modes) fail to establish a connection due to lack of Kerberos ticket or ability to generate it.

This is a real issue when trying to ingest data from kerberized data sources (SQL Server, Oracle) in enterprise environment where exposing simple authentication access is not an option due to IT policy issues.

In this PR I've added MS SQL support.

What this PR contains:

* Added `MSSQLConnectionProvider`

* Added `MSSQLConnectionProviderSuite`

* Changed MS SQL JDBC driver to use the latest (test scope only)

* Changed `MsSqlServerIntegrationSuite` docker image to use the latest

* Added a version comment to `MariaDBConnectionProvider` to increase trackability

### Why are the changes needed?

Missing JDBC kerberos support.

### Does this PR introduce _any_ user-facing change?

Yes, users are now able to connect to MS SQL using Kerberos.

### How was this patch tested?

* Additional + existing unit tests

* Existing integration tests

* Test on cluster manually

Closes #28635 from gaborgsomogyi/SPARK-31337.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

This is a followup PR discussed [here](https://github.com/apache/spark/pull/28215#discussion_r410748547).

### Why are the changes needed?

It would be good to re-enable `DB2IntegrationSuite` and upgrade the docker image inside to use the latest.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing docker integration tests.

Closes #28325 from gaborgsomogyi/SPARK-31533.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

When loading DataFrames from JDBC datasource with Kerberos authentication, remote executors (yarn-client/cluster etc. modes) fail to establish a connection due to lack of Kerberos ticket or ability to generate it.

This is a real issue when trying to ingest data from kerberized data sources (SQL Server, Oracle) in enterprise environment where exposing simple authentication access is not an option due to IT policy issues.

In this PR I've added DB2 support (other supported databases will come in later PRs).

What this PR contains:

* Added `DB2ConnectionProvider`

* Added `DB2ConnectionProviderSuite`

* Added `DB2KrbIntegrationSuite` docker integration test

* Changed DB2 JDBC driver to use the latest (test scope only)

* Changed test table data type to a type which is supported by all the databases

* Removed double connection creation on test side

* Increased connection timeout in docker tests because DB2 docker takes quite a time to start

### Why are the changes needed?

Missing JDBC kerberos support.

### Does this PR introduce any user-facing change?

Yes, users are now able to connect to DB2 using Kerberos.

### How was this patch tested?

* Additional + existing unit tests

* Additional + existing integration tests

* Test on cluster manually

Closes #28215 from gaborgsomogyi/SPARK-31272.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

When loading DataFrames from JDBC datasource with Kerberos authentication, remote executors (yarn-client/cluster etc. modes) fail to establish a connection due to lack of Kerberos ticket or ability to generate it.

This is a real issue when trying to ingest data from kerberized data sources (SQL Server, Oracle) in enterprise environment where exposing simple authentication access is not an option due to IT policy issues.

In this PR I've added MariaDB support (other supported databases will come in later PRs).

What this PR contains:

* Introduced `SecureConnectionProvider` and added basic secure functionalities

* Added `MariaDBConnectionProvider`

* Added `MariaDBConnectionProviderSuite`

* Added `MariaDBKrbIntegrationSuite` docker integration test

* Added some missing code documentation

### Why are the changes needed?

Missing JDBC kerberos support.

### Does this PR introduce any user-facing change?

Yes, users are now able to connect to MariaDB using Kerberos.

### How was this patch tested?

* Additional + existing unit tests

* Additional + existing integration tests

* Test on cluster manually

Closes #28019 from gaborgsomogyi/SPARK-31021.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

Upgrade `docker-client` version.

### Why are the changes needed?

The `docker-client` version that Spark uses is very old. Snippet from the project page:

```

Spotify no longer uses recent versions of this project internally.

The version of docker-client we're using is whatever helios has in its pom.xml. => 8.14.1

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```

build/mvn install -DskipTests

build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.DB2IntegrationSuite test

build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.MsSqlServerIntegrationSuite test

build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 -Dtest=none -DwildcardSuites=org.apache.spark.sql.jdbc.PostgresIntegrationSuite test

```

Closes #27892 from gaborgsomogyi/docker-client.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

When loading DataFrames from JDBC datasource with Kerberos authentication, remote executors (yarn-client/cluster etc. modes) fail to establish a connection due to lack of Kerberos ticket or ability to generate it.

This is a real issue when trying to ingest data from kerberized data sources (SQL Server, Oracle) in enterprise environment where exposing simple authentication access is not an option due to IT policy issues.

In this PR I've added Postgres support (other supported databases will come in later PRs).

What this PR contains:

* Added `keytab` and `principal` JDBC options

* Added `ConnectionProvider` trait and its implementations:

  * `BasicConnectionProvider` => unsecure connection

  * `PostgresConnectionProvider` => postgres secure connection

* Added `ConnectionProvider` tests

* Added `PostgresKrbIntegrationSuite` docker integration test

* Created `SecurityUtils` to concentrate re-usable security related functionalities

* Documentation

### Why are the changes needed?

Missing JDBC kerberos support.

### Does this PR introduce any user-facing change?

Yes, 2 additional JDBC options added:

* keytab

* principal

If both are provided, Spark performs Kerberos authentication.
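The rule can be sketched as a simple predicate over the JDBC options. The option names come from this PR; `KerberosOptions`, `useKerberos` and the sample values in the usage note are hypothetical.

```scala
object KerberosOptions {
  // Kerberos is used only when both options are present.
  def useKerberos(options: Map[String, String]): Boolean =
    options.contains("keytab") && options.contains("principal")
}
```

For example, a map containing `"keytab" -> "/path/to/user.keytab"` and `"principal" -> "user@EXAMPLE.COM"` selects Kerberos, while a map with only one of the two does not.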

### How was this patch tested?

To demonstrate the functionality with a standalone application I've created this repository: https://github.com/gaborgsomogyi/docker-kerberos

* Additional + existing unit tests

* Additional docker integration test

* Test on cluster manually

* `SKIP_API=1 jekyll build`

Closes #27637 from gaborgsomogyi/SPARK-30874.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a follow-up for https://github.com/apache/spark/pull/25248 .

### Why are the changes needed?

With the new behavior, existing tables that were created with the old behavior cannot be accessed.

This PR provides a way to avoid new behavior for the existing users.

### Does this PR introduce any user-facing change?

Yes. This will fix the broken behavior on the existing tables.

### How was this patch tested?

Pass the Jenkins and manually run JDBC integration test.

```

build/mvn install -DskipTests

build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test

```

Closes #27184 from dongjoon-hyun/SPARK-28152-CONF.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1. Revert "Preparing development version 3.0.1-SNAPSHOT": 56dcd79

2. Revert "Preparing Spark release v3.0.0-preview2-rc2": c216ef1

### Why are the changes needed?

Shouldn't change master.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

manual test:

https://github.com/apache/spark/compare/5de5e46..wangyum:revert-master

Closes #26915 from wangyum/revert-master.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Corrected the ShortType and ByteType mapping to SmallInt and TinyInt, and corrected the setter methods so that ShortType and ByteType are set with setShort() and setByte(). The changes are in JDBCUtils.scala.

Fixed unit test cases where applicable and added new E2E test cases to test table read/write using ShortType and ByteType.

#### Problems

- In master, lines 547 and 551 of JDBCUtils.scala set ShortType and ByteType values as Integers rather than as Short and Byte respectively. The issue was pointed out by maropu.

```
case ShortType =>
  (stmt: PreparedStatement, row: Row, pos: Int) =>
    stmt.setInt(pos + 1, row.getShort(pos))
case ByteType =>
  (stmt: PreparedStatement, row: Row, pos: Int) =>
    stmt.setInt(pos + 1, row.getByte(pos))
```

- Also, at line 247 of JDBCUtils.scala, TinyInt is wrongly interpreted as IntegerType in getCatalystType():

``` case java.sql.Types.TINYINT => IntegerType ```

- At line 172, ShortType was wrongly interpreted as IntegerType:

``` case ShortType => Option(JdbcType("INTEGER", java.sql.Types.SMALLINT)) ```

- Throughout the tests, ShortType and ByteType were being interpreted as IntegerType.
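The setter problem above can be illustrated with a minimal sketch. It is not the code from JDBCUtils.scala: `ColType`, `ShortCol`, `ByteCol`, `makeSetter` and `recordingStatement` are hypothetical names, and the row is modelled as a plain `Seq[Any]` instead of Spark's `Row`. A dynamic proxy stands in for a real `PreparedStatement`, so no database is needed to see which setter is invoked.

```scala
import java.lang.reflect.{InvocationHandler, Method, Proxy}
import java.sql.PreparedStatement
import scala.collection.mutable.ArrayBuffer

object SetterSketch {
  // Hypothetical stand-ins for Spark's ShortType and ByteType.
  sealed trait ColType
  case object ShortCol extends ColType
  case object ByteCol extends ColType

  type Setter = (PreparedStatement, Seq[Any], Int) => Unit

  // Choose the JDBC setter that matches the column width instead of widening to Int.
  def makeSetter(t: ColType): Setter = t match {
    case ShortCol => (stmt, row, pos) => stmt.setShort(pos + 1, row(pos).asInstanceOf[Short])
    case ByteCol  => (stmt, row, pos) => stmt.setByte(pos + 1, row(pos).asInstanceOf[Byte])
  }

  // A PreparedStatement proxy that only records the names of the methods called on it.
  def recordingStatement(calls: ArrayBuffer[String]): PreparedStatement = {
    val handler = new InvocationHandler {
      override def invoke(proxy: AnyRef, method: Method, args: Array[AnyRef]): AnyRef = {
        calls += method.getName
        null // the recorded setters return void, so this value is ignored
      }
    }
    Proxy.newProxyInstance(
      getClass.getClassLoader,
      Array[Class[_]](classOf[PreparedStatement]),
      handler).asInstanceOf[PreparedStatement]
  }

  // Runs both setters against the recording statement and returns the call log.
  def calledSetters(): List[String] = {
    val calls = ArrayBuffer.empty[String]
    val stmt = recordingStatement(calls)
    val row: Seq[Any] = Seq(7.toShort, 3.toByte)
    makeSetter(ShortCol)(stmt, row, 0)
    makeSetter(ByteCol)(stmt, row, 1)
    calls.toList
  }
}
```

With the original `setInt` calls the code still compiles, because `Short` and `Byte` widen silently to `Int`, which is likely why the mismatch went unnoticed.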

### Why are the changes needed?

A given type should be set using the right type.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Corrected unit test cases where applicable. Validated in CI/CD.

Added a test case in MsSqlServerIntegrationSuite.scala, PostgresIntegrationSuite.scala and MySQLIntegrationSuite.scala to write/read tables from a dataframe with ShortType and ByteType columns. Validated manually as follows.

```

./build/mvn install -DskipTests

./build/mvn test -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12

```

Closes #26301 from shivsood/shorttype_fix_maropu.

Authored-by: shivsood <shivsood@microsoft.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the sparkR version number check logic to allow jvm version like `3.0.0-preview`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release has passed.

### Why are the changes needed?

So that the Maven release repository accepts the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the PySpark version from `3.0.0.dev0` to `3.0.0`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release has passed.

### Why are the changes needed?

So that the Maven release repository accepts the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #26243 from jiangxb1987/3.0.0-preview-prepare.

Lead-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

This PR updates JDBC Integration Test DBMS Docker Images.

| DBMS | Docker Image Tag | Release |
| ------ | ------------------ | ------ |
| MySQL | mysql:5.7.28 | Oct 13, 2019 |
| PostgreSQL | postgres:12.0-alpine | Oct 3, 2019 |

* For `MySQL`, `SET GLOBAL sql_mode = ''` is added to disable all strict modes because `test("Basic write test")` creates a table like the following. The latest MySQL rejects `0000-00-00 00:00:00` as TIMESTAMP and causes the test case failure.

```
mysql> desc datescopy;
+-------+-----------+------+-----+---------------------+-----------------------------+
| Field | Type      | Null | Key | Default             | Extra                       |
+-------+-----------+------+-----+---------------------+-----------------------------+
| d     | date      | YES  |     | NULL                |                             |
| t     | timestamp | NO   |     | CURRENT_TIMESTAMP   | on update CURRENT_TIMESTAMP |
| dt    | timestamp | NO   |     | 0000-00-00 00:00:00 |                             |
| ts    | timestamp | NO   |     | 0000-00-00 00:00:00 |                             |
| yr    | date      | YES  |     | NULL                |                             |
+-------+-----------+------+-----+---------------------+-----------------------------+
```

* For `PostgreSQL`, I chose the smallest image among the `12` releases. It reduces the image size a lot, `312MB` -> `72.8MB`. This is good for the CI testing environment.

```
$ docker images | grep postgres
postgres    12.0-alpine    5b681acb1cfc    2 days ago      72.8MB
postgres    11.4           53912975086f    3 months ago    312MB
```

Note that

- For `MsSqlServer`, we are using `2017-GA-ubuntu`, and the next version `2019-CTP3.2-ubuntu` is still in `Community Technology Preview` status.

- For `DB2` and `Oracle`, the official images are not available.

### Why are the changes needed?

This makes sure we are testing with the latest DBMS images while preparing `3.0.0`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Since this is the integration test, we need to run this manually.

```

build/mvn install -DskipTests

build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test

```

Closes #26224 from dongjoon-hyun/SPARK-29567.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, `docker-integration-tests` is broken in both JDK8/11.

This PR aims to recover JDBC integration test for JDK8/11.

### Why are the changes needed?

While SPARK-28737 upgraded `Jersey` to 2.29 for JDK11, `docker-integration-tests` is broken because `com.spotify.docker-client` still depends on `jersey-guava`. The latest `com.spotify.docker-client` also has this problem.

- https://mvnrepository.com/artifact/com.spotify/docker-client/5.0.2

-> https://mvnrepository.com/artifact/org.glassfish.jersey.core/jersey-client/2.19

-> https://mvnrepository.com/artifact/org.glassfish.jersey.core/jersey-common/2.19

-> https://mvnrepository.com/artifact/org.glassfish.jersey.bundles.repackaged/jersey-guava/2.19

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manual because this is an integration test suite.

```

$ java -version

openjdk version "1.8.0_222"

OpenJDK Runtime Environment (AdoptOpenJDK)(build 1.8.0_222-b10)

OpenJDK 64-Bit Server VM (AdoptOpenJDK)(build 25.222-b10, mixed mode)

$ build/mvn install -DskipTests

$ build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test

```

```

$ java -version

openjdk version "11.0.5" 2019-10-15

OpenJDK Runtime Environment AdoptOpenJDK (build 11.0.5+10)

OpenJDK 64-Bit Server VM AdoptOpenJDK (build 11.0.5+10, mixed mode)

$ build/mvn install -DskipTests

$ build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test

```

**BEFORE**

```
*** RUN ABORTED ***
  com.spotify.docker.client.exceptions.DockerException: java.util.concurrent.ExecutionException: javax.ws.rs.ProcessingException: java.lang.NoClassDefFoundError: jersey/repackaged/com/google/common/util/concurrent/MoreExecutors
  at com.spotify.docker.client.DefaultDockerClient.propagate(DefaultDockerClient.java:1607)
  at com.spotify.docker.client.DefaultDockerClient.request(DefaultDockerClient.java:1538)
  at com.spotify.docker.client.DefaultDockerClient.ping(DefaultDockerClient.java:387)
  at org.apache.spark.sql.jdbc.DockerJDBCIntegrationSuite.beforeAll(DockerJDBCIntegrationSuite.scala:81)
```

**AFTER**

```

Run completed in 47 seconds, 999 milliseconds.

Total number of tests run: 30

Suites: completed 6, aborted 0

Tests: succeeded 30, failed 0, canceled 0, ignored 6, pending 0

All tests passed.

```

Closes #26203 from dongjoon-hyun/SPARK-29546.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

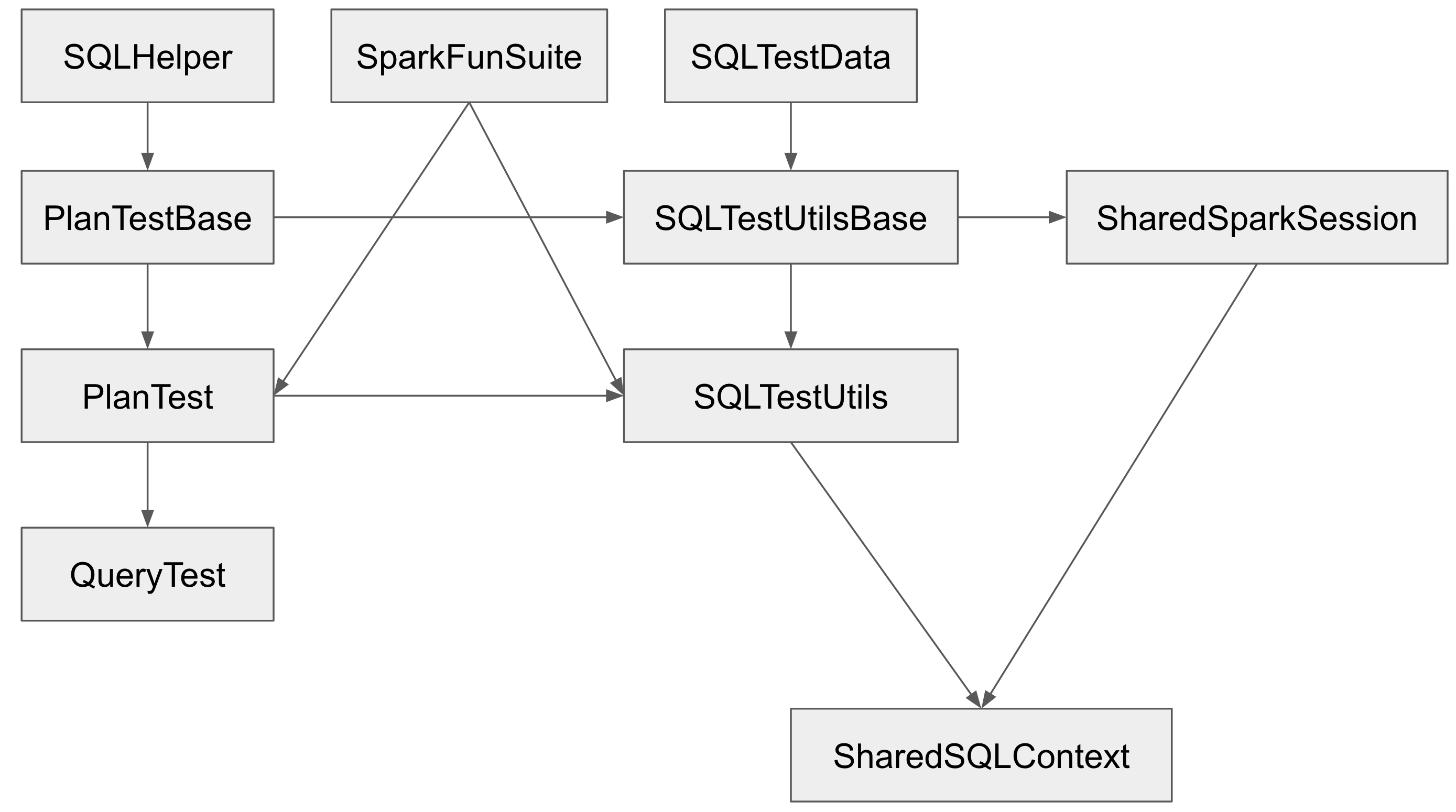

## What changes were proposed in this pull request?

The Spark SQL test framework needs to support 2 kinds of tests:

1. tests inside Spark to test Spark itself (extends `SparkFunSuite`)

2. test outside of Spark to test Spark applications (introduced at b57ed2245c)

The class hierarchy of the major testing traits:

`PlanTestBase`, `SQLTestUtilsBase` and `SharedSparkSession` intentionally don't extend `SparkFunSuite`, so that they can be used for tests outside of Spark. Tests in Spark should extend `QueryTest` and/or `SharedSQLContext` in most cases.

However, the name is a little confusing. As a result, some test suites extend `SharedSparkSession` instead of `SharedSQLContext`. `SharedSparkSession` doesn't work well with `SparkFunSuite` as it doesn't have the special handling of thread auditing in `SharedSQLContext`. For example, you will see a warning starting with `===== POSSIBLE THREAD LEAK IN SUITE` when you run `DataFrameSelfJoinSuite`.

This PR proposes to rename `SharedSparkSession` to `SharedSparkSessionBase`, and rename `SharedSQLContext` to `SharedSparkSession`.

## How was this patch tested?


Closes #25463 from cloud-fan/minor.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

PostgreSQL doesn't have `TINYINT`, which would map directly, but `SMALLINT`s are sufficient for uni-directional translation.

A side-effect of this fix is that `AggregatedDialect` is now usable with multiple dialects targeting `jdbc:postgresql`, as `PostgresDialect.getJDBCType` no longer throws (for which reason backporting this fix would be lovely):

1217996f15/sql/core/src/main/scala/org/apache/spark/sql/jdbc/AggregatedDialect.scala (L42)

`dialects.flatMap` currently throws on the first attempt to get a JDBC type, preventing subsequent dialects in the chain from providing an alternative.
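A minimal sketch of why a throwing dialect blocks the rest of the chain. The `Dialect` trait and the three dialect objects are hypothetical simplifications (types are plain strings), and `firstMapping` only imitates the `flatMap` lookup mentioned above; it is not the `AggregatedDialect` source.

```scala
import scala.util.Try

object DialectChainSketch {
  // Hypothetical, simplified dialect: returns a database type name, or None to decline.
  trait Dialect {
    def getJDBCType(typeName: String): Option[String]
  }

  // Models the behaviour before the fix: throws for a type it cannot map.
  object ThrowingDialect extends Dialect {
    def getJDBCType(typeName: String): Option[String] = typeName match {
      case "byte" => throw new IllegalArgumentException(s"Unsupported type: $typeName")
      case _      => None
    }
  }

  // A dialect that has no mapping for any type and simply declines.
  object DecliningDialect extends Dialect {
    def getJDBCType(typeName: String): Option[String] = None
  }

  // A dialect later in the chain that does know how to map a byte.
  object FallbackDialect extends Dialect {
    def getJDBCType(typeName: String): Option[String] = typeName match {
      case "byte" => Some("SMALLINT")
      case _      => None
    }
  }

  // Ask every dialect in order and keep the first answer.
  def firstMapping(dialects: List[Dialect], typeName: String): Option[String] =
    dialects.flatMap(_.getJDBCType(typeName)).headOption
}
```

Because `List.flatMap` evaluates each dialect eagerly and in order, an exception from the first dialect propagates before `FallbackDialect` is ever consulted, whereas a dialect that returns `None` lets the lookup continue.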

## How was this patch tested?

Unit tests.

Closes #24845 from mojodna/postgres-byte-type-mapping.

Authored-by: Seth Fitzsimmons <seth@mojodna.net>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

#25010 breaks the integration test suite because it changes the user-facing exception as follows. This PR fixes the integration test suite.

```scala
-    require(
-      decimalVal.precision <= precision,
-      s"Decimal precision ${decimalVal.precision} exceeds max precision $precision")
+    if (decimalVal.precision > precision) {
+      throw new ArithmeticException(
+        s"Decimal precision ${decimalVal.precision} exceeds max precision $precision")
+    }
```
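The effect of this change on a test's expectations can be sketched as follows. The function names and the plain `Int` parameters are hypothetical; only the two styles of check are taken from the diff above. `require` raises an `IllegalArgumentException` and prefixes the message with `requirement failed: `, so a test that asserts on the old exception type or message no longer matches once the check throws `ArithmeticException`.

```scala
import scala.util.Try

object PrecisionCheckSketch {
  // Old style: require throws IllegalArgumentException("requirement failed: " + message).
  def checkWithRequire(valuePrecision: Int, maxPrecision: Int): Unit =
    require(
      valuePrecision <= maxPrecision,
      s"Decimal precision $valuePrecision exceeds max precision $maxPrecision")

  // New style: an explicit ArithmeticException with the bare message.
  def checkWithThrow(valuePrecision: Int, maxPrecision: Int): Unit =
    if (valuePrecision > maxPrecision) {
      throw new ArithmeticException(
        s"Decimal precision $valuePrecision exceeds max precision $maxPrecision")
    }

  // Returns the exception raised by the check, or None if it passed.
  def failureOf(check: => Unit): Option[Throwable] = Try(check).failed.toOption
}
```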

## How was this patch tested?

Manual test.

```

$ build/mvn install -DskipTests

$ build/mvn -Pdocker-integration-tests -pl :spark-docker-integration-tests_2.12 test

```

Closes #25165 from dongjoon-hyun/SPARK-28201.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to correct mappings in `MsSqlServerDialect`. `ShortType` is mapped to `SMALLINT` and `FloatType` is mapped to `REAL` per the [JDBC mapping](https://docs.microsoft.com/en-us/sql/connect/jdbc/using-basic-data-types?view=sql-server-2017).

ShortType and FloatType are not correctly mapped to the right JDBC types when using the JDBC connector. This results in tables and Spark data frames being created with unintended types. The issue was observed when validating against SQLServer.

Refer to the [JDBC mapping](https://docs.microsoft.com/en-us/sql/connect/jdbc/using-basic-data-types?view=sql-server-2017) for guidance on mappings between SQLServer, JDBC and Java. Note that the Java "Short" type should be mapped to JDBC "SMALLINT" and Java "Float" should be mapped to JDBC "REAL".

Some example issues that can happen because of wrong mappings:

- A write from a df with column type ShortType results in a SQL table with column type INTEGER as opposed to SMALLINT, and thus a larger table than expected.

- A read results in a dataframe with type INTEGER as opposed to ShortType.

- ShortType has a problem in both the write and read paths.

- FloatType only has an issue in the read path. In the write path, the Spark data type 'FloatType' is correctly mapped to the JDBC equivalent data type 'Real'. But in the read path, when JDBC data types need to be converted to Catalyst data types (getCatalystType), 'Real' incorrectly gets mapped to 'DoubleType' rather than 'FloatType'.

Refer to #28151, which contained this fix as one part of a larger PR. Following the discussion on PR #28151, it was decided to file separate PRs for each of the fixes.
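The read-path asymmetry described above can be checked with a round-trip sketch. Everything here is a hypothetical model rather than the `MsSqlServerDialect` code: `ColType` stands in for Catalyst types, `toJdbc` models the intended write mapping, and `readBefore` and `readAfter` model the read path before and after the fix as this description states it.

```scala
import java.sql.Types

object MsSqlMappingSketch {
  // Hypothetical stand-ins for the Catalyst types involved.
  sealed trait ColType
  case object ShortCol extends ColType
  case object IntCol extends ColType
  case object FloatCol extends ColType
  case object DoubleCol extends ColType

  // Write path with the intended mappings: Short -> SMALLINT, Float -> REAL.
  def toJdbc(t: ColType): Int = t match {
    case ShortCol  => Types.SMALLINT
    case IntCol    => Types.INTEGER
    case FloatCol  => Types.REAL
    case DoubleCol => Types.DOUBLE
  }

  // Read path as described before the fix: narrow types come back widened.
  def readBefore(jdbcType: Int): Option[ColType] = jdbcType match {
    case Types.SMALLINT | Types.INTEGER => Some(IntCol)
    case Types.REAL | Types.DOUBLE      => Some(DoubleCol)
    case _                              => None
  }

  // Read path after the fix: each JDBC type maps back to its own column type.
  def readAfter(jdbcType: Int): Option[ColType] = jdbcType match {
    case Types.SMALLINT => Some(ShortCol)
    case Types.INTEGER  => Some(IntCol)
    case Types.REAL     => Some(FloatCol)
    case Types.DOUBLE   => Some(DoubleCol)
    case _              => None
  }

  // True when writing a column and reading it back preserves its type.
  def roundTrips(read: Int => Option[ColType])(t: ColType): Boolean =
    read(toJdbc(t)).contains(t)
}
```

A round-trip check of this kind fails for `ShortCol` and `FloatCol` under `readBefore` and passes under `readAfter`, which is the property the added unit and integration tests are meant to protect.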

## How was this patch tested?

UnitTest added in JDBCSuite.scala and these were tested.

Integration test updated and passed in MsSqlServerDialect.scala

E2E test done with SQLServer

Closes #25146 from shivsood/float_short_type_fix.

Authored-by: shivsood <shivsood@microsoft.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>