### What changes were proposed in this pull request?

Remove the private method `checkIntervalStringDataType()` from `IntervalUtils` since it has not been used since https://github.com/apache/spark/pull/33242.

### Why are the changes needed?

To improve code maintenance.

### Does this PR introduce _any_ user-facing change?

No. The method is private, and it existed in the code base for only a short time.

### How was this patch tested?

By existing GAs/tests.

Closes #33321 from MaxGekk/SPARK-35735-remove-unused-method.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR allows the parser to parse unit list interval literals like `'3' day '10' hours '3' seconds` or `'8' years '3' months` as `YearMonthIntervalType` or `DayTimeIntervalType`.

### Why are the changes needed?

For ANSI compliance.

### Does this PR introduce _any_ user-facing change?

Yes. I noted the following things in the `sql-migration-guide.md`.

* Unit list interval literals are parsed as `YearMonthIntervalType` or `DayTimeIntervalType` instead of `CalendarIntervalType`.

* `WEEK`, `MILLISECOND`, `MICROSECOND` and `NANOSECOND` are not valid units for unit list interval literals.

* Units of year-month and day-time cannot be mixed like `1 YEAR 2 MINUTES`.
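The three rules above can be sketched in plain Python. This is only an illustrative model, not Spark's parser: the function names `normalize` and `classify_unit_list` and the two unit sets are assumptions made for the example.

```python
# Illustrative model only; not Spark's parser. Names and unit sets are assumptions.
YEAR_MONTH_UNITS = {"YEAR", "MONTH"}
DAY_TIME_UNITS = {"DAY", "HOUR", "MINUTE", "SECOND"}


def normalize(unit):
    """Upper-case a unit and drop a trailing plural 'S' (e.g. 'hours' -> 'HOUR')."""
    upper = unit.upper()
    return upper[:-1] if upper.endswith("S") else upper


def classify_unit_list(units):
    """Return the interval type a unit list would map to, or raise ValueError."""
    normalized = {normalize(u) for u in units}
    if not normalized:
        raise ValueError("a unit list literal needs at least one unit")
    unknown = normalized - YEAR_MONTH_UNITS - DAY_TIME_UNITS
    if unknown:
        # WEEK, MILLISECOND, MICROSECOND and NANOSECOND end up here.
        raise ValueError(f"invalid units for a unit list literal: {sorted(unknown)}")
    if normalized <= YEAR_MONTH_UNITS:
        return "YearMonthIntervalType"
    if normalized <= DAY_TIME_UNITS:
        return "DayTimeIntervalType"
    raise ValueError("year-month and day-time units cannot be mixed")
```

For instance, `classify_unit_list(["day", "hours", "seconds"])` returns `"DayTimeIntervalType"`, while `classify_unit_list(["YEAR", "MINUTES"])` raises a `ValueError`.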

### How was this patch tested?

New tests and modified tests.

Closes #32949 from sarutak/day-time-multi-units.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add a new SQL function `to_timestamp_ltz`.

Syntax:

```
to_timestamp_ltz(timestamp_str_column[, fmt])
to_timestamp_ltz(timestamp_column)
to_timestamp_ltz(date_column)
```

### Why are the changes needed?

Since the result of `to_timestamp` is now consistent with the SQL configuration `spark.sql.timestampType` and there is already a SQL function `to_timestamp_ntz`, we need a new function `to_timestamp_ltz` to construct timestamp with local time zone values.

### Does this PR introduce _any_ user-facing change?

Yes, a new function for constructing timestamp with local time zone values.

### How was this patch tested?

Unit test

Closes #33318 from gengliangwang/to_timestamp_ltz.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

This PR modifies `DecorrelateInnerQuery` to handle the COUNT bug for lateral subqueries. Similar to SPARK-15370, rewriting lateral subqueries as joins can change the semantics of the subquery and lead to incorrect answers.

However, we can't reuse the existing code that handles the COUNT bug for correlated scalar subqueries, because it assumes the subquery has a specific shape (either Filter + Aggregate or Aggregate as the root node). Instead, this PR proposes a more generic way to handle the COUNT bug. If an Aggregate is subject to the COUNT bug, we insert a left outer domain join between the outer query and the aggregate with an `alwaysTrue` marker and rewrite the final result conditioning on the marker. For example:

```sql
-- t1: [(0, 1), (1, 2)]
-- t2: [(0, 2), (0, 3)]
select * from t1 left outer join lateral (select count(*) from t2 where t2.c1 = t1.c1)
```

Without count bug handling, the query plan is

```
Project [c1#44, c2#45, count(1)#53L]
+- Join LeftOuter, (c1#48 = c1#44)
   :- LocalRelation [c1#44, c2#45]
   +- Aggregate [c1#48], [count(1) AS count(1)#53L, c1#48]
      +- LocalRelation [c1#48]
```

and the answer is wrong:

```
+---+---+--------+
|c1 |c2 |count(1)|
+---+---+--------+
|0  |1  |2       |
|1  |2  |null    |
+---+---+--------+
```

With the count bug handling:

```
Project [c1#1, c2#2, count(1)#10L]
+- Join LeftOuter, (c1#34 <=> c1#1)
   :- LocalRelation [c1#1, c2#2]
   +- Project [if (isnull(alwaysTrue#32)) 0 else count(1)#33L AS count(1)#10L, c1#34]
      +- Join LeftOuter, (c1#5 = c1#34)
         :- Aggregate [c1#1], [c1#1 AS c1#34]
         :  +- LocalRelation [c1#1]
         +- Aggregate [c1#5], [count(1) AS count(1)#33L, c1#5, true AS alwaysTrue#32]
            +- LocalRelation [c1#5]
```

and we have the correct answer:

```
+---+---+--------+
|c1 |c2 |count(1)|
+---+---+--------+
|0  |1  |2       |
|1  |2  |0       |
+---+---+--------+
```
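The difference between the two rewrites can be reproduced with a small plain-Python model of the example tables. This is a sketch of the idea only, not the `DecorrelateInnerQuery` implementation; the function names `naive_rewrite` and `rewrite_with_marker` are made up for the illustration.

```python
from collections import Counter

t1 = [(0, 1), (1, 2)]  # rows of (c1, c2)
t2 = [(0, 2), (0, 3)]  # rows of (c1, c2)


def naive_rewrite(outer, inner):
    """Left outer join against a grouped count: keys with no rows yield None."""
    counts = Counter(c1 for c1, _ in inner)  # Aggregate [c1], count(1)
    return [(c1, c2, counts.get(c1)) for c1, c2 in outer]


def rewrite_with_marker(outer, inner):
    """Domain join plus an alwaysTrue marker: a missing marker means count 0."""
    counts = Counter(c1 for c1, _ in inner)
    # Each aggregate row carries (count, alwaysTrue).
    agg = {c1: (n, True) for c1, n in counts.items()}
    domain = {c1 for c1, _ in outer}  # distinct outer keys
    fixed = {}
    for key in domain:
        # domain LEFT OUTER JOIN agg, then: if isnull(alwaysTrue) 0 else count
        count, always_true = agg.get(key, (None, None))
        fixed[key] = 0 if always_true is None else count
    return [(c1, c2, fixed[c1]) for c1, c2 in outer]
```

With the tables above, `naive_rewrite(t1, t2)` gives `None` for the row with `c1 = 1`, whereas `rewrite_with_marker(t1, t2)` gives `0`, matching the two result tables.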

### Why are the changes needed?

Fix a correctness bug with lateral join rewrite.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added SQL query tests. The results are consistent with Postgres' results.

Closes #33070 from allisonwang-db/spark-35551-lateral-count-bug.

Authored-by: allisonwang-db <allison.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Fix the executor ids that are declared at `allExecutorAndHostIds`.

### Why are the changes needed?

Currently, `HealthTrackerSuite.allExecutorAndHostIds` is declared incorrectly, so executor exclusion isn't correctly tested.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass existing tests in `HealthTrackerSuite`.

Closes #33262 from Ngone51/fix-healthtrackersuite.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: yi.wu <yi.wu@databricks.com>

### What changes were proposed in this pull request?

This patch proposes to check data right after adding data to a topic in `KafkaSourceStressSuite`.

### Why are the changes needed?

The test logic in `KafkaSourceStressSuite` is not stable. For example, https://github.com/apache/spark/runs/3049244904.

If we add data to a topic and then delete the topic before checking the data, the expected answer differs from the data retrieved from the sink.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #33311 from viirya/stream-assert.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR upgrades SBT to `1.5.5`.

### Why are the changes needed?

SBT `1.5.5` was released, which includes 16 improvements/bug fixes.

https://github.com/sbt/sbt/releases/tag/v1.5.5

* Fixes remote caching not managing resource files

* Fixes launcher causing NoClassDefFoundError when launching sbt 1.4.0 - 1.4.2

* Fixes cross-Scala suffix conflict warning involving _3

* Fixes binaryScalaVersion of 3.0.1-SNAPSHOT

* Fixes carriage return in supershell progress state

* Fixes IntegrationTest configuration not tagged as test in BSP

* Fixes BSP task error handling

* Fixes handling of invalid range positions returned by Javac

* Fixes local class analysis

* Adds buildTarget/resources support for BSP

* Adds build.sbt support for BSP import

* Tracks source dependencies using OriginalTreeAttachments in Scala 2.13

* Reduces overhead in Analysis protobuf deserialization

* Minimizes unnecessary information in signature analysis

* Enables compile-to-jar for local Javac

* Enables Zinc cycle reporting when Scalac is not invoked

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

CI.

Closes #33312 from sarutak/upgrade-sbt-1.5.5.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a followup PR for SPARK-36104 (#33307). It removes the unused import `typing.cast`.

After that change, the Python linter fails.

```

./dev/lint-python

shell: sh -e {0}

env:

LC_ALL: C.UTF-8

LANG: C.UTF-8

pythonLocation: /__t/Python/3.6.13/x64

LD_LIBRARY_PATH: /__t/Python/3.6.13/x64/lib

starting python compilation test...

python compilation succeeded.

starting black test...

black checks passed.

starting pycodestyle test...

pycodestyle checks passed.

starting flake8 test...

flake8 checks failed:

./python/pyspark/pandas/data_type_ops/num_ops.py:19:1: F401 'typing.cast' imported but unused

from typing import cast, Any, Union

^

1 F401 'typing.cast' imported but unused

```

### Why are the changes needed?

To recover CI.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

CI.

Closes #33315 from sarutak/followup-SPARK-36104.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR reverts https://github.com/apache/spark/pull/32455 and its followup https://github.com/apache/spark/pull/32536 , because the new janino version has a bug that is not fixed yet: https://github.com/janino-compiler/janino/pull/148

### Why are the changes needed?

avoid regressions

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

existing tests

Closes #33302 from cloud-fan/revert.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Manage InternalField for DataTypeOps.neg/abs.

### Why are the changes needed?

The Spark data type and nullability must be the same as the original when applying `DataTypeOps.neg`/`abs`.

We should manage InternalField for this case.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests.

Closes #33307 from xinrong-databricks/internalField.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

A followup of #32980. We should use `subExprCode` to avoid duplicate calls of `addNewFunction`.

### Why are the changes needed?

Avoid duplicate calls of `addNewFunction`.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing test.

Closes #33305 from viirya/fix-minor.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Properly set `InternalField` for `DataTypeOps.invert`.

### Why are the changes needed?

The Spark data type and nullability must be the same as the original when applying `DataTypeOps.invert`.

We should manage `InternalField` for this case.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #33306 from ueshin/issues/SPARK-36103/invert.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Support new functions make_timestamp_ntz and make_timestamp_ltz

Syntax:

* `make_timestamp_ntz(year, month, day, hour, min, sec)`: Create local date-time from year, month, day, hour, min, sec fields

* `make_timestamp_ltz(year, month, day, hour, min, sec[, timezone])`: Create current timestamp with local time zone from year, month, day, hour, min, sec and timezone fields
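The distinction between the two functions can be pictured with Python's `datetime`, where a naive value plays the role of a local date-time and an aware value plays the role of a timestamp tied to an instant. This is a loose analogy under stated assumptions, not Spark's implementation: the Python functions below merely reuse the SQL names, and `tz` is assumed to be a `tzinfo` object rather than a time zone string.

```python
from datetime import datetime, timedelta, timezone


def make_timestamp_ntz(year, month, day, hour, minute, sec):
    """Build a naive date-time: just the fields, with no time zone attached."""
    # timedelta takes care of the fractional part of `sec`.
    return datetime(year, month, day, hour, minute) + timedelta(seconds=sec)


def make_timestamp_ltz(year, month, day, hour, minute, sec, tz=timezone.utc):
    """Interpret the fields in `tz` and return an aware date-time (an instant)."""
    naive = make_timestamp_ntz(year, month, day, hour, minute, sec)
    return naive.replace(tzinfo=tz)


# Same fields, two different kinds of value.
local_fields = make_timestamp_ntz(2014, 12, 28, 6, 30, 45.887)
instant = make_timestamp_ltz(2014, 12, 28, 6, 30, 45.887,
                             tz=timezone(timedelta(hours=1)))
```

Here `local_fields` has no `tzinfo`, while `instant` converts to 05:30:45.887 UTC because its fields were interpreted at a fixed UTC+1 offset.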

### Why are the changes needed?

Since the result of `make_timestamp` is now consistent with the SQL configuration `spark.sql.timestampType`, we need these two new functions to construct timestamp literals. They align with the functions [`make_timestamp` and `make_timestamptz`](https://www.postgresql.org/docs/9.4/functions-datetime.html) in PostgreSQL.

### Does this PR introduce _any_ user-facing change?

Yes, two new datetime functions: make_timestamp_ntz and make_timestamp_ltz.

### How was this patch tested?

End-to-end tests.

Closes #33299 from gengliangwang/make_timestamp_ntz_ltz.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR groups exception messages in `sql/core/src/main/scala/org/apache/spark/sql/execution/command`.

### Why are the changes needed?

It will largely help with the standardization of error messages and their maintenance.

### Does this PR introduce _any_ user-facing change?

No. Error messages remain unchanged.

### How was this patch tested?

No new tests - pass all original tests to make sure it doesn't break any existing behavior.

Closes #32951 from dgd-contributor/SPARK-33603_grouping_execution/command.

Authored-by: dgd-contributor <dgd_contributor@viettel.com.vn>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

This PR proposes to introduce a new feature "prefix match scan" on state store, which enables users of state store (mostly stateful operators) to group the keys into logical groups, and scan the keys in the same group efficiently.

For example, if the schema of the state store key is `[ sessionId | session.start ]`, we can scan with a prefix key whose schema is `[ sessionId ]` (the leftmost 1 column) and retrieve all key-value pairs in the state store whose keys match the given prefix key.

This PR brings API changes, though they are confined to the developer API.

* Registering the prefix key

We propose to make an explicit change to the init() method of StateStoreProvider, as below:

```
def init(
    stateStoreId: StateStoreId,
    keySchema: StructType,
    valueSchema: StructType,
    numColsPrefixKey: Int,
    storeConfs: StateStoreConf,
    hadoopConf: Configuration): Unit
```

Please note that we remove an unused parameter “keyIndexOrdinal” as well. The parameter is coupled with getRange(), which we will remove as well. See below for the rationale.

Here we provide the number of columns we take to project the prefix key from the full key. If the operator doesn’t leverage prefix match scan, the value can (and should) be 0, because the state store provider may optimize the underlying storage format which may bring extra overhead.

We would like to apply some restrictions on prefix key to simplify the functionality:

* Prefix key is a part of the full key. It can’t be the same as the full key.

* That said, the full key will be the (prefix key + remaining parts), and both prefix key and remaining parts should have at least one column.

* We always take the columns from the leftmost sequentially, like “seq.take(nums)”.

* We don’t allow reordering of the columns.

* We only guarantee “equality” comparison against prefix keys, and don’t support the prefix “range” scan.

* We only support scanning on the keys which match with the prefix key.

* E.g. We don’t support the range scan from user A to user B due to technical complexity. That’s the reason we can’t leverage the existing getRange API.

As mentioned, we want to make an explicit change to the init() method of StateStoreProvider. This breaks backward compatibility, on the assumption that 3rd party state store providers need to update their code anyway to support prefix match scan. Given that the RocksDB state store provider is being donated to OSS and is planned to be available in Spark 3.2, the majority of users would migrate to the built-in state store providers, which would remedy the concerns.

* Scanning key-value pairs matched to the prefix key

We propose to add a new method to the ReadStateStore (and StateStore by inheritance), as below:

```
def prefixScan(prefixKey: UnsafeRow): Iterator[UnsafeRowPair]
```

We require callers to pass a `prefixKey` that has the same schema as the registered prefix key schema. In other words, the schema of the parameter `prefixKey` should match the projection of the prefix key on the full key, based on the number of columns for the prefix key.

The method contract is clear: the method returns an iterator over the key-value pairs whose prefix key matches the given prefix key. Callers should rely only on the contract and should not expect any other characteristics based on specific details of the state store provider.

From the caller’s point of view, the prefix key is only used for retrieving key-value pairs via prefix match scan. Callers should keep using the full key to do CRUD.
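The contract described above can be modelled with a toy in-memory store. This is a minimal sketch under assumptions, not Spark's `StateStore`: the class `InMemoryPrefixStore`, its tuple keys, and its method names are hypothetical and only mirror the restrictions listed in this description (strict leftmost prefix, equality match only, full key for CRUD).

```python
class InMemoryPrefixStore:
    """Toy store with tuple keys. A hypothetical stand-in, not Spark's StateStore."""

    def __init__(self, num_cols_key, num_cols_prefix_key=0):
        # 0 disables prefix scan; otherwise the prefix must be a non-empty,
        # strict leftmost part of the full key.
        if num_cols_prefix_key != 0 and not 0 < num_cols_prefix_key < num_cols_key:
            raise ValueError("prefix key must be a strict, non-empty part of the key")
        self._num_cols_key = num_cols_key
        self._num_cols_prefix = num_cols_prefix_key
        self._groups = {}  # prefix tuple -> {full key tuple: value}

    def _prefix_of(self, key):
        if len(key) != self._num_cols_key:
            raise ValueError("key does not match the key schema")
        # Always take the leftmost columns, like seq.take(n).
        return tuple(key[: self._num_cols_prefix])

    def put(self, key, value):
        self._groups.setdefault(self._prefix_of(key), {})[tuple(key)] = value

    def get(self, key):
        return self._groups.get(self._prefix_of(key), {}).get(tuple(key))

    def remove(self, key):
        self._groups.get(self._prefix_of(key), {}).pop(tuple(key), None)

    def prefix_scan(self, prefix_key):
        """Iterate over (key, value) pairs whose leftmost columns equal prefix_key."""
        if self._num_cols_prefix == 0:
            raise RuntimeError("prefix scan was not enabled for this store")
        if len(prefix_key) != self._num_cols_prefix:
            raise ValueError("prefix key does not match the registered prefix schema")
        return iter(list(self._groups.get(tuple(prefix_key), {}).items()))


# Keys are (sessionId, session.start); the prefix is the leftmost column.
store = InMemoryPrefixStore(num_cols_key=2, num_cols_prefix_key=1)
store.put(("user-a", 100), "session-1")
store.put(("user-a", 250), "session-2")
store.put(("user-b", 120), "session-3")
sessions_of_a = list(store.prefix_scan(("user-a",)))
```

Grouping entries by prefix is just one possible layout. A provider whose storage sorts keys could instead seek to the prefix and iterate while it matches, which is why callers should depend only on the contract.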

Note that this PR also proposes a breaking change: the removal of getRange(), which was never implemented properly and hence never called properly.

### Why are the changes needed?

* Introducing prefix match scan feature

Currently, the state store API is based only on a key-value data structure. It lacks more advanced data structures, such as list-like ones, which requires us to implement the data structure on our own whenever we need it. We had one in stream-stream join, and we were about to have another one in native session window. A custom data structure built on top of the state store API tends to be complicated and has to deal with multiple state stores.

We decided to enhance the state store API a bit so that native session window does not need to implement its own. The native session window operator will just need to do a prefix scan on the group key to retrieve all sessions belonging to that group key.

Because the feature is part of the state store API, state store providers can optimize the implementation based on their own characteristics. (E.g., we will implement this in the RocksDB state store provider by leveraging the fact that RocksDB sorts keys by the natural order of their binary format.)

* Removal of getRange API

Before introducing this, we looked for a way to leverage getRange, but it is quite hard to implement efficiently while respecting its method contract. Spark always calls the method with (None, None) parameters, and all the state store providers (including the built-in ones) implement it by just calling iterator(), which does not respect the method contract. That said, we can replace all getRange() usages with iterator() and remove the API to avoid any confusion or concerns.

### Does this PR introduce _any_ user-facing change?

Yes for the end users & maintainers of 3rd party state store provider. They will need to upgrade their state store provider implementations to adopt this change.

### How was this patch tested?

Added UT, and also existing UTs to make sure it doesn't break anything.

Closes #33038 from HeartSaVioR/SPARK-35861.

Authored-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Combine `readBatch` and `readIntegers` in `VectorizedRleValuesReader` by having them share the same `readBatchInternal` method.

### Why are the changes needed?

`readBatch` and `readIntegers` share a similar code path, and this JIRA aims to combine them into one method for easier maintenance.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests as this is just a refactoring.

Closes #33271 from sunchao/SPARK-35743-read-integers.

Authored-by: Chao Sun <sunchao@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to update the SQL migration guide, in particular the section about the migration from Spark 2.4 to 3.0. The new item informs users about the following issue:

**What**: Spark doesn't correctly detect the encoding (charset) of CSV files with a BOM. Such files can be read only in the multiLine mode when the CSV option encoding matches the actual encoding of the CSV files. For example, Spark cannot read UTF-16BE CSV files when encoding is set to UTF-8, which is the default. This is the case of the current ES ticket.

**Why**: In previous Spark versions, encoding wasn't propagated to the underlying library, which means the library tried to detect the file encoding automatically. It could succeed for some encodings that require a BOM at the beginning of files. Starting from version 3.0, users can specify the file encoding via the CSV option encoding, which has UTF-8 as the default value. Spark propagates this default to the underlying library (uniVocity), and as a consequence this turned off encoding auto-detection.

**When**: Since Spark 3.0. In particular, the commit 2df34db586 causes the issue.

**Workaround**: Enable the encoding auto-detection mechanism in uniVocity by passing null as the value of the CSV option encoding. The preferred approach, however, is to set the encoding option explicitly.
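The effect of the default can be illustrated with a small plain-Python sketch of BOM-based detection. It is not uniVocity's detection logic and does not use Spark's API; the helpers `detect_bom` and `decode_csv_bytes` are hypothetical names, and passing `None` merely stands in for the null value mentioned in the workaround.

```python
import codecs

# Check longer BOMs first: the UTF-32-LE BOM starts with the UTF-16-LE BOM bytes.
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]


def detect_bom(data):
    """Return (encoding name, BOM length), or (None, 0) if no BOM is present."""
    for bom, name in _BOMS:
        if data.startswith(bom):
            return name, len(bom)
    return None, 0


def decode_csv_bytes(data, encoding="utf-8"):
    """Decode with the given encoding; auto-detect from the BOM only when it is None."""
    if encoding is None:
        detected, bom_len = detect_bom(data)
        return data[bom_len:].decode(detected or "utf-8")
    return data.decode(encoding)


# A UTF-16BE file with a BOM, as in the example above.
payload = codecs.BOM_UTF16_BE + "a,b\n1,2\n".encode("utf-16-be")
text = decode_csv_bytes(payload, encoding=None)
```

With `encoding=None` the BOM is honoured and the content decodes cleanly, whereas the UTF-8 default makes `decode_csv_bytes(payload)` raise a `UnicodeDecodeError` in this sketch.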

### Why are the changes needed?

To improve the user experience with Spark SQL. This should help users in their migration from Spark 2.4.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Should be checked by building docs in GA/jenkins.

Closes #33300 from MaxGekk/csv-encoding-migration-guide.

Authored-by: Max Gekk <max.gekk@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Update the AQE status from `disabled` to `enabled` in the sql-performance-tuning docs.

### Why are the changes needed?

Make docs correct.

### Does this PR introduce _any_ user-facing change?

yes, docs changed.

### How was this patch tested?

Not needed.

Closes #33295 from ulysses-you/enable-AQE.

Lead-authored-by: ulysses-you <ulyssesyou18@gmail.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The SQL function TO_TIMESTAMP should return different results based on the default timestamp type:

* when "spark.sql.timestampType" is TIMESTAMP_NTZ, return TimestampNTZType literal

* when "spark.sql.timestampType" is TIMESTAMP_LTZ, return TimestampType literal

This PR also refactors the classes GetTimestamp and GetTimestampNTZ to reduce duplicated code.

### Why are the changes needed?

As "spark.sql.timestampType" sets the default timestamp type, the to_timestamp function should behave consistently with it.

### Does this PR introduce _any_ user-facing change?

Yes, when the value of "spark.sql.timestampType" is TIMESTAMP_NTZ, the result type of `TO_TIMESTAMP` is of TIMESTAMP_NTZ type.

### How was this patch tested?

Unit test

Closes #33280 from gengliangwang/to_timestamp.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

The functions `unix_timestamp`/`to_unix_timestamp` should be able to accept input of `TimestampNTZType`.

### Why are the changes needed?

The functions `unix_timestamp`/`to_unix_timestamp` should be able to accept input of `TimestampNTZType`.

### Does this PR introduce _any_ user-facing change?

Yes.

### How was this patch tested?

New tests.

Closes #33278 from beliefer/SPARK-36044.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Implement unary operator `invert` of integral ps.Series/Index.

### Why are the changes needed?

Currently, unary operator `invert` of integral ps.Series/Index is not supported. We ought to implement that following pandas' behaviors.

### Does this PR introduce _any_ user-facing change?

Yes.

Before:

```py
>>> import pyspark.pandas as ps
>>> psser = ps.Series([1, 2, 3])
>>> ~psser
Traceback (most recent call last):
...
NotImplementedError: Unary ~ can not be applied to integrals.
```

After:

```py
>>> import pyspark.pandas as ps
>>> psser = ps.Series([1, 2, 3])
>>> ~psser
0   -2
1   -3
2   -4
dtype: int64
```
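The values in the "After" output follow from two's-complement bitwise NOT, for which `~x == -x - 1`. A plain-Python check, independent of pandas API on Spark (the helper name `invert_all` is made up for the illustration):

```python
def invert_all(values):
    """Apply bitwise NOT to each integer, as unary ~ does element-wise."""
    return [~v for v in values]


inverted = invert_all([1, 2, 3])

# Bitwise NOT on integers is equivalent to negating and subtracting one.
identity_holds = all(~v == -v - 1 for v in range(-5, 6))
```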

### How was this patch tested?

Unit tests.

Closes #33285 from xinrong-databricks/numeric_invert.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

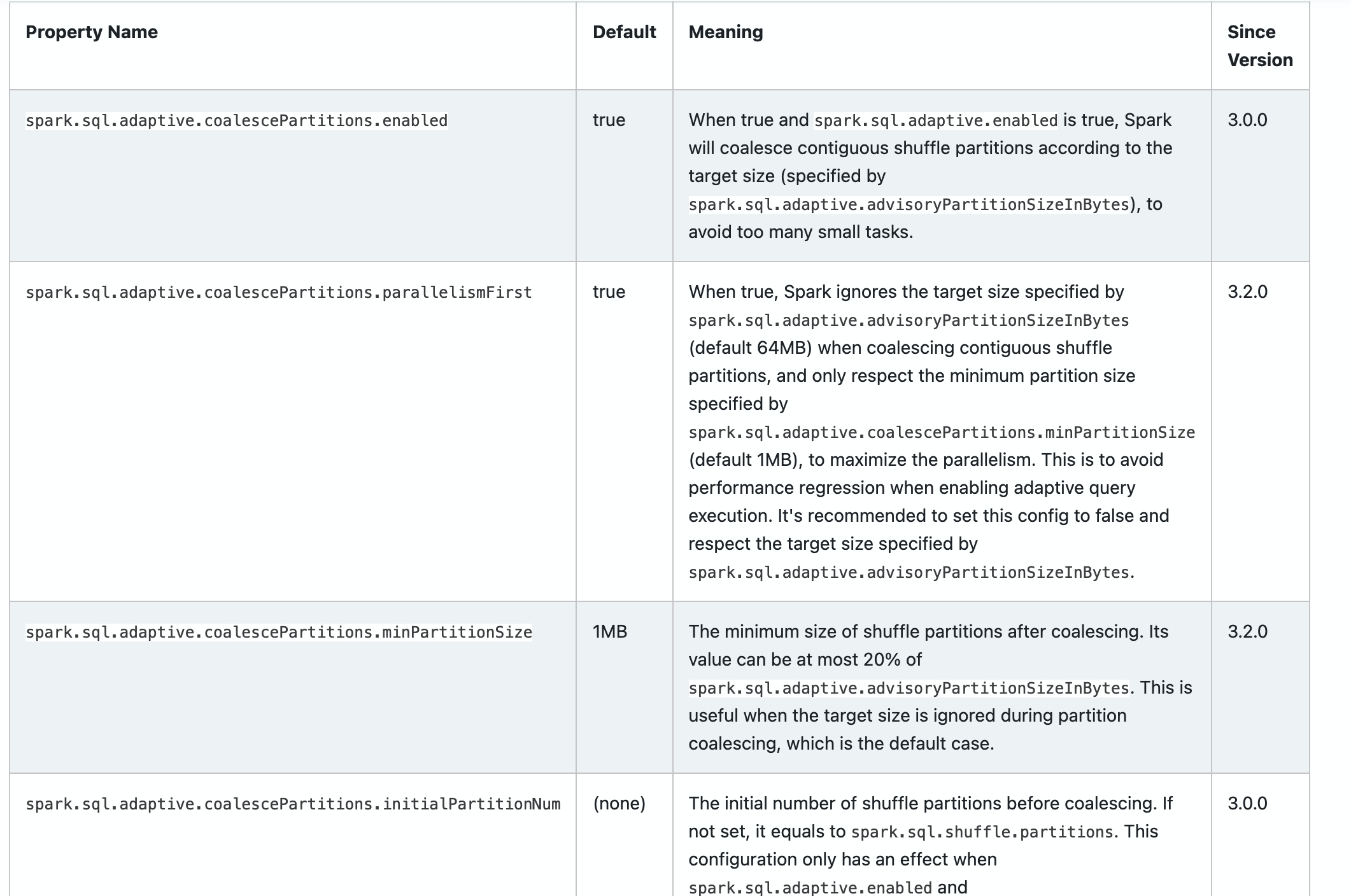

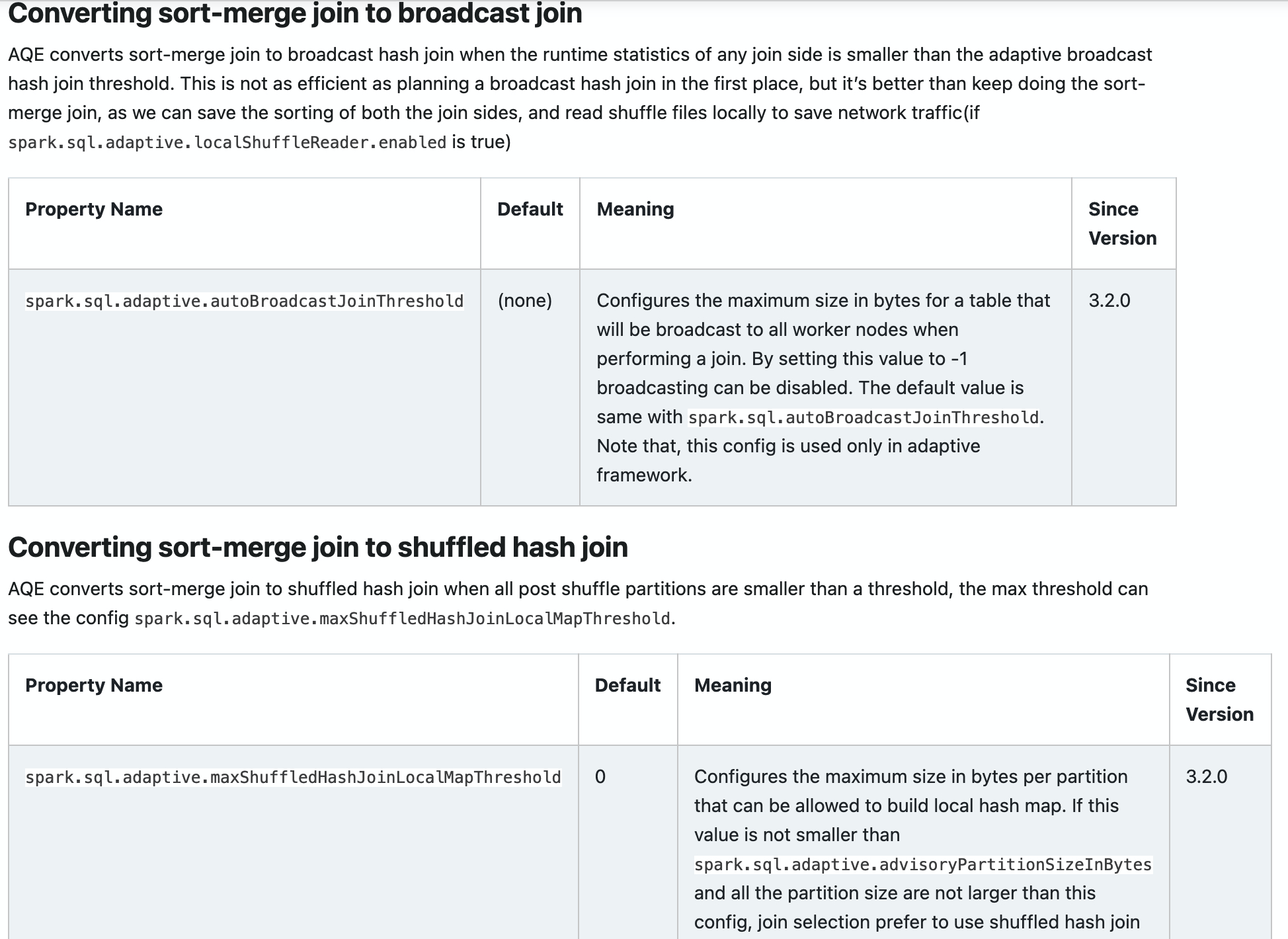

Add new configs in sql-performance-tuning docs.

* spark.sql.adaptive.coalescePartitions.parallelismFirst

* spark.sql.adaptive.coalescePartitions.minPartitionSize

* spark.sql.adaptive.autoBroadcastJoinThreshold

* spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold

### Why are the changes needed?

Help users find them.

### Does this PR introduce _any_ user-facing change?

yes, docs changed.

### How was this patch tested?

Closes #32960 from ulysses-you/SPARK-35813.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Properly set `InternalField` in more places in `DataTypeOps`.

### Why are the changes needed?

There are more places in `DataTypeOps` where we can manage `InternalField`.

We should manage `InternalField` for these cases.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #33275 from ueshin/issues/SPARK-36064/fields.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The SQL function MAKE_TIMESTAMP should return different results based on the default timestamp type:

* when "spark.sql.timestampType" is TIMESTAMP_NTZ, return TimestampNTZType literal

* when "spark.sql.timestampType" is TIMESTAMP_LTZ, return TimestampType literal

### Why are the changes needed?

As "spark.sql.timestampType" sets the default timestamp type, the make_timestamp function should behave consistently with it.

### Does this PR introduce _any_ user-facing change?

Yes, when the value of "spark.sql.timestampType" is TIMESTAMP_NTZ, the result type of `MAKE_TIMESTAMP` is of TIMESTAMP_NTZ type.

### How was this patch tested?

Unit test

Closes #33290 from gengliangwang/mkTS.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

There was a regression since Spark started storing large remote files on disk (https://issues.apache.org/jira/browse/SPARK-22062). In 2018 a refactoring introduced a hidden reference preventing the auto-deletion of the files (a97001d217 (diff-42a673b8fa5f2b999371dc97a5de7ebd2c2ec19447353d39efb7e8ebc012fe32L1677)). Since then all underlying files of DownloadFile instances are kept on disk for the duration of the Spark application which sometimes results in "no space left" errors.

The `ReferenceWithCleanup` class uses `file` (the `DownloadFile`) in its `cleanUp(): Unit` method, so it has to keep a reference to it, which prevents it from being garbage-collected.

```
def cleanUp(): Unit = {
  logDebug(s"Clean up file $filePath")
  if (!file.delete()) {  // <--- here
    logDebug(s"Fail to delete file $filePath")
  }
}
```
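The same pitfall can be reproduced in Python with `weakref.finalize`, whose callback and arguments must not reference the tracked object. The sketch below is an analogy rather than Spark's code: `DownloadFile` here is a stand-in class, and `leaky_register`, `safe_register`, and `is_collected_after_drop` are hypothetical helpers. It assumes CPython, where dropping the last reference frees an object immediately.

```python
import gc
import weakref


class DownloadFile:
    """Stand-in for the real class; it only carries a path."""

    def __init__(self, path):
        self.path = path


deleted = []  # records which paths were "cleaned up"


def leaky_register(file):
    # The file itself is passed as a callback argument, so the finalizer
    # holds a strong reference and the file can never be collected.
    return weakref.finalize(file, lambda f: deleted.append(f.path), file)


def safe_register(file):
    # Capture only the path; nothing in the callback refers to the file.
    return weakref.finalize(file, deleted.append, file.path)


def is_collected_after_drop(register):
    """Create a file, register cleanup, drop our reference, report collection."""
    file = DownloadFile("/tmp/example")
    finalizer = register(file)
    del file
    gc.collect()
    # A finalizer stops being alive once its object has been collected.
    return not finalizer.alive
```

In this model `is_collected_after_drop(safe_register)` is true and the path is recorded in `deleted`, while the leaky variant keeps the object alive until interpreter exit.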

### Why are the changes needed?

Long-running Spark applications require freeing resources when they are not needed anymore, and iterative algorithms could use all the disk space quickly too.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a test in BlockManagerSuite and tested manually.

Closes #33251 from dtarima/fix-download-file-cleanup.

Authored-by: Denis Tarima <dtarima@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

The main change of this PR is to use the static `Integer.compare()` and `Long.compare()` methods instead of hand-written comparison methods in Java code.

### Why are the changes needed?

Remove unnecessary hand-written comparison methods.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass Jenkins or GitHub Actions.

Closes #33260 from LuciferYang/static-compare.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

We have a job with a stage that contains about 8k tasks. Most tasks take about 1~10 min to finish, but 3 of them run extremely slowly despite similar data sizes. They take about 1 hour each to finish, and so do their speculative attempts.

The root cause is most likely a delay in the storage system. But it is not straightforward to find where the performance issue occurs: in shuffle read, task execution, output, commit, etc.

```log
2021-07-09 03:05:17 CST SparkHadoopMapRedUtil INFO - attempt_20210709022249_0003_m_007050_37351: Committed
2021-07-09 03:05:17 CST Executor INFO - Finished task 7050.0 in stage 3.0 (TID 37351). 3311 bytes result sent to driver
2021-07-09 04:06:10 CST ShuffleBlockFetcherIterator INFO - Getting 9 non-empty blocks including 0 local blocks and 9 remote blocks
2021-07-09 04:06:10 CST TransportClientFactory INFO - Found inactive connection to
```

### Why are the changes needed?

On the Spark side, we can record the time cost in logs for better bug hunting or performance tuning.

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

passing GA

Closes #33279 from yaooqinn/SPARK-36070.

Authored-by: Kent Yao <yao@apache.org>

Signed-off-by: Kent Yao <yao@apache.org>

### What changes were proposed in this pull request?

This PR improves some implementations in Spark.

### Why are the changes needed?

This PR improves some implementations in Spark.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Jenkins test.

Closes #33216 from beliefer/gather-code-format.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR fixes an issue where no tests in `hadoop-cloud` are compiled and run unless the `hadoop-3.2` profile is activated explicitly.

The root cause seems similar to SPARK-36067 (#33276) so the solution is to activate `hadoop-3.2` profile in `hadoop-cloud/pom.xml` by default.

### Why are the changes needed?

The `hadoop-3.2` profile should be activated by default, so tests in `hadoop-cloud` should also be compiled and run without activating the `hadoop-3.2` profile explicitly.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Confirmed tests in `hadoop-cloud` ran with both SBT and Maven.

```

build/sbt -Phadoop-cloud "hadoop-cloud/test"

...

[info] CommitterBindingSuite:

[info] - BindingParquetOutputCommitter binds to the inner committer (258 milliseconds)

[info] - committer protocol can be serialized and deserialized (11 milliseconds)

[info] - local filesystem instantiation (3 milliseconds)

[info] - reject dynamic partitioning (1 millisecond)

[info] Run completed in 1 second, 234 milliseconds.

[info] Total number of tests run: 4

[info] Suites: completed 1, aborted 0

[info] Tests: succeeded 4, failed 0, canceled 0, ignored 0, pending 0

[info] All tests passed.

build/mvn -Phadoop-cloud -pl hadoop-cloud test

...

CommitterBindingSuite:

- BindingParquetOutputCommitter binds to the inner committer

- committer protocol can be serialized and deserialized

- local filesystem instantiation

- reject dynamic partitioning

Run completed in 560 milliseconds.

Total number of tests run: 4

Suites: completed 2, aborted 0

Tests: succeeded 4, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

Closes #33277 from sarutak/fix-hadoop-3.2-cloud.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Adjust `test_astype`, `test_neg` for old pandas versions.

### Why are the changes needed?

There are issues in old pandas versions that fail tests in pandas API on Spark. We ought to adjust `test_astype` and `test_neg` for old pandas versions.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests. Please refer to https://github.com/apache/spark/pull/33272 for test results with pandas 1.0.1.

Closes #33250 from xinrong-databricks/SPARK-36035.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR fixes an issue where `YarnClusterSuite` fails due to `NoClassDefFoundError` unless the `hadoop-3.2` profile is activated explicitly, regardless of whether building with SBT or Maven.

```

build/sbt -Pyarn "yarn/testOnly org.apache.spark.deploy.yarn.YarnClusterSuite"

...

[info] YarnClusterSuite:

[info] org.apache.spark.deploy.yarn.YarnClusterSuite *** ABORTED *** (598 milliseconds)

[info] java.lang.NoClassDefFoundError: org/bouncycastle/operator/OperatorCreationException

[info] at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager$RMActiveServices.serviceInit(ResourceManager.java:888)

[info] at org.apache.hadoop.service.AbstractService.init(AbstractService.java:164)

[info] at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.createAndInitActiveServices(ResourceManager.java:1410)

[info] at org.apache.hadoop.yarn.server.resourcemanager.ResourceManager.serviceInit(ResourceManager.java:344)

[info] at org.apache.hadoop.service.AbstractService.init(AbstractService.java:164)

[info] at org.apache.hadoop.yarn.server.MiniYARNCluster.initResourceManager(MiniYARNCluster.java:359)

```

The solution is modifying `yarn/pom.xml` to activate `hadoop-3.2` profiles by default.

### Why are the changes needed?

The `hadoop-3.2` profile should be enabled by default, so `YarnClusterSuite` should also finish successfully without `-Phadoop-3.2`.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Ran `YarnClusterSuite` with both SBT and Maven without `-Phadoop-3.2`, and it finished successfully.

```

build/sbt -Pyarn "yarn/testOnly org.apache.spark.deploy.yarn.YarnClusterSuite"

...

[info] Run completed in 5 minutes, 38 seconds.

[info] Total number of tests run: 27

[info] Suites: completed 1, aborted 0

[info] Tests: succeeded 27, failed 0, canceled 0, ignored 0, pending 0

[info] All tests passed.

build/mvn -Pyarn -pl resource-managers/yarn test -Dtest=none -DwildcardSuites=org.apache.spark.deploy.yarn.YarnClusterSuite

...

Run completed in 5 minutes, 49 seconds.

Total number of tests run: 27

Suites: completed 2, aborted 0

Tests: succeeded 27, failed 0, canceled 0, ignored 0, pending 0

All tests passed.

```

Closes #33276 from sarutak/fix-bouncy-issue.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Change `currentPhysicalPlan.logicalLink.get` to `inputPlan.logicalLink.get` for initial logical link.

### Why are the changes needed?

In `initialPlan`, `queryStagePreparationRules` may remove some Spark plan nodes. If the removed node is the top-level node, we lose the linked logical node.

Since we support an AQE-side broadcast join config, it is more common for a join to be planned as SMJ by the normal planner and changed to BHJ after AQE re-optimizes the plan. However, `RemoveRedundantSorts` is applied in `initialPlan`, before re-optimization, so a local sort might be removed incorrectly if a join starts as SMJ but is changed to BHJ during re-optimization.

### Does this PR introduce _any_ user-facing change?

yes, bug fix

### How was this patch tested?

add test

Closes #33244 from ulysses-you/SPARK-36032.

Authored-by: ulysses-you <ulyssesyou18@gmail.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

Merge test_decimal_ops into test_num_ops

- merge test_isnull() into test_num_ops.test_isnull()

- remove test_datatype_ops(), which is already covered in 11fcbc73cb/python/pyspark/pandas/tests/data_type_ops/test_base.py (L58-L59)

### Why are the changes needed?

Tests for data-type-based operations of decimal Series are in two places:

- python/pyspark/pandas/tests/data_type_ops/test_decimal_ops.py

- python/pyspark/pandas/tests/data_type_ops/test_num_ops.py

We'd better merge test_decimal_ops into test_num_ops.

See also [SPARK-36002](https://issues.apache.org/jira/browse/SPARK-36002) .

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

unittests passed

Closes #33206 from Yikun/SPARK-36002.

Authored-by: Yikun Jiang <yikunkero@gmail.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

For tests with operations on different Series, sort index of results before comparing them with pandas.

### Why are the changes needed?

We have many tests with operations on different Series in `spark/python/pyspark/pandas/tests/data_type_ops/` that assume the result's index to be sorted and then compare to the pandas' behavior.

This assumption about the result's index ordering is wrong: a Spark DataFrame join is used internally, and the order is not preserved if the data is in different partitions.

So we should assume the result to be disordered and sort the index of such results before comparing them with pandas.
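
The idea can be illustrated without Spark. The following is a minimal sketch using only the standard library; `join_by_index` is a hypothetical stand-in for a partitioned join whose output order depends on the partition layout, and is not the pandas-on-Spark implementation.

```python
from collections import defaultdict


def join_by_index(left, right, num_partitions=3):
    """Hypothetical stand-in for a distributed join on the index.

    Rows are hashed into partitions and emitted partition by partition,
    so the output order generally differs from the input order.
    """
    partitions = defaultdict(list)
    right_lookup = dict(right)
    for idx, value in left:
        if idx in right_lookup:
            partitions[idx % num_partitions].append((idx, value + right_lookup[idx]))
    result = []
    for p in sorted(partitions):
        result.extend(partitions[p])
    return result


left = [(0, 1), (1, 2), (2, 3), (3, 4)]
right = [(0, 10), (1, 20), (2, 30), (3, 40)]

# What an order-preserving, single-machine library would return.
expected = [(0, 11), (1, 22), (2, 33), (3, 44)]
actual = join_by_index(left, right)

# A direct comparison is order-sensitive, so sort by index first.
assert sorted(actual) == expected
```

Here the rows come back grouped by partition, so only the comparison after sorting is reliable.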

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit tests.

Closes #33274 from xinrong-databricks/datatypeops_testdiffframe.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Try to capture the error message from the `faulthandler` when the Python worker crashes.

### Why are the changes needed?

Currently, we just see an error message saying `"exited unexpectedly (crashed)"` when a UDF causes the Python worker to crash, for example with a segmentation fault.

We should take advantage of [`faulthandler`](https://docs.python.org/3/library/faulthandler.html) and try to capture the error message from the `faulthandler`.

### Does this PR introduce _any_ user-facing change?

Yes, when a Spark config `spark.python.worker.faulthandler.enabled` is `true`, the stack trace will be seen in the error message when the Python worker crashes.

```py

>>> def f():
...     import ctypes
...     ctypes.string_at(0)
...

>>> sc.parallelize([1]).map(lambda x: f()).count()

```

```

org.apache.spark.SparkException: Python worker exited unexpectedly (crashed): Fatal Python error: Segmentation fault

Current thread 0x000000010965b5c0 (most recent call first):

File "/.../ctypes/__init__.py", line 525 in string_at

File "<stdin>", line 3 in f

File "<stdin>", line 1 in <lambda>

...

```
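
For readers unfamiliar with `faulthandler`, the following standalone sketch shows what the module does outside Spark: a child interpreter enables it, crashes the same way as the UDF above, and the parent reads the dump from the child's stderr. How Spark forwards that output into the JVM-side exception is not shown here; `run_crashing_worker` is a hypothetical helper name.

```python
import subprocess
import sys

# Child program: enable faulthandler, then read from a NULL pointer.
CHILD_CODE = """
import ctypes
import faulthandler

faulthandler.enable()   # dump the Python traceback to stderr on fatal signals
ctypes.string_at(0)     # reads address 0, which crashes the interpreter
"""


def run_crashing_worker():
    """Run the child interpreter and return (exit code, captured stderr)."""
    proc = subprocess.run(
        [sys.executable, "-c", CHILD_CODE],
        capture_output=True,
        text=True,
    )
    return proc.returncode, proc.stderr


returncode, stderr = run_crashing_worker()
print("exit code:", returncode)
print(stderr)
```

Without the `faulthandler.enable()` call, the child would typically exit with no Python-level traceback at all, which matches the uninformative message described above.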

### How was this patch tested?

Added some tests, and manually.

Closes #33273 from ueshin/issues/SPARK-36062/faulthandler.

Authored-by: Takuya UESHIN <ueshin@databricks.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to remove the automatic build guides of documentation in `docs/README.md`.

### Why are the changes needed?

This doesn't work very well:

1. It doesn't detect the changes in RST files. But PySpark internally generates RST files, so we can't simply include them in the detection; otherwise, it goes into an infinite loop.

2. During PySpark documentation generation, it launches some jobs to generate plot images now. This is broken with `entr` command, and the job fails. Seems like it's related to how `entr` creates the process internally.

3. Minor issue but the documentation build directory was changed (`_build` -> `build` in `python/docs`)

I don't think it's worthwhile testing and fixing the docs to show a working example because dev people are already able to do it manually.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested.

Closes #33266 from HyukjinKwon/SPARK-36051.

Authored-by: Hyukjin Kwon <gurwls223@apache.org>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Remove IntervalUnit

### Why are the changes needed?

Clean code

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #33265 from AngersZhuuuu/SPARK-36049.

Lead-authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

Support group by TimestampNTZ type column

### Why are the changes needed?

It's a basic SQL operation.

### Does this PR introduce _any_ user-facing change?

No, the new timestamp type is not released yet.

### How was this patch tested?

Unit test

Closes #33268 from gengliangwang/agg.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

The PR is proposed to standardize TypeError messages for unsupported basic operations by:

- Capitalize the first letter

- Leverage TypeError messages defined in `pyspark/pandas/data_type_ops/base.py`

- Take advantage of the utility `is_valid_operand_for_numeric_arithmetic` to save duplicated TypeError messages

Related unit tests should be adjusted as well.

### Why are the changes needed?

Inconsistent TypeError messages are shown for unsupported data-type-based basic operations.

Take addition's TypeError messages for example:

- addition can not be applied to given types.

- string addition can only be applied to string series or literals.

Standardizing TypeError messages would improve user experience and reduce maintenance costs.
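
A rough sketch of the pattern, not the actual code in `pyspark/pandas/data_type_ops`: one shared validity check and one capitalized message per operation. The names `is_valid_numeric_operand` and `NumericOps`, and the exact message wording, are assumptions made for illustration.

```python
from numbers import Number


def is_valid_numeric_operand(operand):
    """Hypothetical check: only non-bool numbers take part in arithmetic."""
    return isinstance(operand, Number) and not isinstance(operand, bool)


class NumericOps:
    """Hypothetical ops class that shares one message template."""

    def _check(self, right, op_name):
        if not is_valid_numeric_operand(right):
            # One capitalized, uniformly worded message per operation.
            raise TypeError(
                "%s can not be applied to given types." % op_name.capitalize()
            )

    def add(self, left, right):
        self._check(right, "addition")
        return left + right

    def sub(self, left, right):
        self._check(right, "subtraction")
        return left - right


ops = NumericOps()
print(ops.add(1, 2))
try:
    ops.add(1, "a")
except TypeError as e:
    print(e)
```

Routing every operation through one check means a wording change is made in a single place, and tests can assert on one message format.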

### Does this PR introduce _any_ user-facing change?

No user-facing behavior change. Only TypeError messages are modified.

### How was this patch tested?

Unit tests.

Closes #33237 from xinrong-databricks/datatypeops_err.

Authored-by: Xinrong Meng <xinrong.meng@databricks.com>

Signed-off-by: Takuya UESHIN <ueshin@databricks.com>

### What changes were proposed in this pull request?

Currently, a TimestampNTZ literal shows only its long value instead of the timestamp string in its SQL string and `toString` result.

Before changes (with default timestamp type as TIMESTAMP_NTZ)

```

-- !query

select timestamp '2019-01-01\t'

-- !query schema

struct<1546300800000000:timestamp_ntz>

```

After changes:

```

-- !query

select timestamp '2019-01-01\t'

-- !query schema

struct<TIMESTAMP_NTZ '2019-01-01 00:00:00':timestamp_ntz>

```
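
The relationship between the two schema strings can be checked with a few lines of Python. This is only a sketch of the conversion, assuming the long value is microseconds since `1970-01-01 00:00:00` with no time zone applied; `ntz_literal_sql` is a hypothetical helper, and it drops fractional seconds for brevity.

```python
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def ntz_literal_sql(micros):
    """Render microseconds since the epoch as a readable literal string.

    No time zone conversion is applied to the value.
    """
    ts = EPOCH + timedelta(microseconds=micros)
    return "TIMESTAMP_NTZ '%s'" % ts.strftime("%Y-%m-%d %H:%M:%S")


print(ntz_literal_sql(1546300800000000))
# → TIMESTAMP_NTZ '2019-01-01 00:00:00'
```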

### Why are the changes needed?

Make the schema of TimestampNTZ literals readable.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Unit test

Closes #33269 from gengliangwang/ntzLiteralString.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

When executing the `SHOW CREATE TABLE` command, we should not lose the nullability information of a table column that is specified as `NOT NULL`.

### Why are the changes needed?

[SPARK-36012](https://issues.apache.org/jira/browse/SPARK-36012)

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Added a UT for V1; existing UTs cover V2.

Closes #33219 from Peng-Lei/SPARK-36012.

Authored-by: PengLei <peng.8lei@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, AQE uses a very tricky way to trigger and wait for the subqueries:

1. submitting stage calls `QueryStageExec.materialize`

2. `QueryStageExec.materialize` calls `executeQuery`

3. `executeQuery` does some preparation work, which goes to `QueryStageExec.doPrepare`

4. `QueryStageExec.doPrepare` calls `prepare` of shuffle/broadcast, which triggers all the subqueries in this stage

5. `executeQuery` then calls `waitForSubqueries`, which does nothing because `QueryStageExec` itself has no subqueries

6. then we submit the shuffle/broadcast job, without waiting for subqueries

7. for `ShuffleExchangeExec.mapOutputStatisticsFuture`, it calls `child.execute`, which calls `executeQuery` and waits for subqueries in the query tree of `child`

8. The only missing case is: `ShuffleExchangeExec` itself may contain subqueries (repartition expression), and AQE doesn't wait for them.

A simple fix would be overriding `waitForSubqueries` in `QueryStageExec` and forwarding the request to shuffle/broadcast, but this PR proposes a different and probably cleaner way: we follow `execute`/`doExecute` in `SparkPlan`, and add similar APIs in the AQE version of "execute", which gets a future from shuffle/broadcast.
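
The `execute`/`doExecute` split is a template-method pattern: a public entry point performs the shared preparation (here, waiting for subqueries) and then delegates to a node-specific method. A language-neutral sketch in Python follows; `Exchange`, `submit_job`, and `_do_submit_job` are hypothetical names chosen for illustration, not the APIs added by this PR.

```python
from concurrent.futures import Future, ThreadPoolExecutor


class Exchange:
    """Hypothetical stand-in for a shuffle/broadcast exchange node."""

    def __init__(self, pool, subqueries):
        self._pool = pool
        self._subqueries = subqueries  # callables this node's expressions depend on
        self.subquery_results = []
        self.log = []

    def submit_job(self) -> Future:
        """Public entry point: wait for subqueries, then return a future."""
        self._wait_for_subqueries()
        return self._do_submit_job()

    def _wait_for_subqueries(self):
        futures = [self._pool.submit(q) for q in self._subqueries]
        self.subquery_results = [f.result() for f in futures]
        self.log.append("subqueries done")

    def _do_submit_job(self) -> Future:
        # Node-specific work; it can rely on the subquery results being ready.
        self.log.append("job submitted")
        return self._pool.submit(lambda: sum(self.subquery_results))


with ThreadPoolExecutor(max_workers=2) as pool:
    exchange = Exchange(pool, [lambda: 1, lambda: 2])
    result = exchange.submit_job().result()

print(exchange.log)
print(result)
```

Because callers only ever reach the job through the public entry point, the node's own subqueries cannot be skipped, which is the case step 8 describes.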

### Why are the changes needed?

bug fix

### Does this PR introduce _any_ user-facing change?

a query fails without the fix and can run now

### How was this patch tested?

new test

Closes #33058 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Refactors the base Throwable trait `SparkError.scala` (introduced in SPARK-34920) into an interface `SparkThrowable.java`.

### Why are the changes needed?

- Renaming `SparkError` to `SparkThrowable` better reflects that this is the base interface for both `Exception` and `Error`

- Migrating to Java maximizes its extensibility

### Does this PR introduce _any_ user-facing change?

Yes; the base trait has been renamed and the accessor methods have changed (e.g. `sqlState` -> `getSqlState()`).

### How was this patch tested?

Unit tests.

Closes #33164 from karenfeng/SPARK-35958.

Authored-by: Karen Feng <karen.feng@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add the implementation for the RocksDBStateStoreProvider. It's the subclass of StateStoreProvider that leverages all the functionalities implemented in the RocksDB instance.

### Why are the changes needed?

The interface for the end-user to use the RocksDB state store.

### Does this PR introduce _any_ user-facing change?

Yes. Users can use the new RocksDBStateStore in their applications.

### How was this patch tested?

New UT added.

Closes #33187 from xuanyuanking/SPARK-35988.

Authored-by: Yuanjian Li <yuanjian.li@databricks.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

Run end-to-end tests with default timestamp type as TIMESTAMP_NTZ to increase test coverage.

### Why are the changes needed?

Increase test coverage.

Also, more and more expressions will behave differently when the default timestamp type is TIMESTAMP_NTZ, for example, `to_timestamp`, `from_json`, `from_csv`, and so on. Having this new test suite helps future development.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

CI tests.

Closes #33259 from gengliangwang/ntzTest.

Authored-by: Gengliang Wang <gengliang@apache.org>

Signed-off-by: Gengliang Wang <gengliang@apache.org>

### What changes were proposed in this pull request?

On further thought, it is better for all DT/YM functions to use field bytes, for consistency.

### Why are the changes needed?

Keep the code consistent.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Not needed.

Closes #33252 from AngersZhuuuu/SPARK-36021-FOLLOWUP.

Authored-by: Angerszhuuuu <angers.zhu@gmail.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This PR fixes an issue about `extract`.

`Extract` should process only existing fields of interval types. For example:

```

spark-sql> SELECT EXTRACT(MONTH FROM INTERVAL '2021-11' YEAR TO MONTH);

11

spark-sql> SELECT EXTRACT(MONTH FROM INTERVAL '2021' YEAR);

0

```

The last command should fail because the month field is not present in INTERVAL YEAR.
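
The intended rule can be sketched as a range check on the interval type's declared fields. This is an illustration in Python, not Spark's implementation: the field codes, the `YearMonthInterval` class, and the error message are all assumptions, and negative intervals are ignored for simplicity.

```python
YEAR, MONTH = 0, 1  # hypothetical field codes, most to least significant
FIELD_NAMES = {YEAR: "YEAR", MONTH: "MONTH"}


class YearMonthInterval:
    """Hypothetical year-month interval that remembers its declared fields."""

    def __init__(self, months, start_field, end_field):
        self.months = months
        self.start_field = start_field
        self.end_field = end_field


def extract(field, interval):
    """Return the requested field, rejecting fields the type does not declare."""
    if not (interval.start_field <= field <= interval.end_field):
        raise ValueError(
            "Cannot extract %s from this interval type" % FIELD_NAMES[field]
        )
    if field == YEAR:
        return interval.months // 12
    return interval.months % 12


year_to_month = YearMonthInterval(2021 * 12 + 11, YEAR, MONTH)  # '2021-11' YEAR TO MONTH
year_only = YearMonthInterval(2021 * 12, YEAR, YEAR)            # '2021' YEAR

print(extract(MONTH, year_to_month))
try:
    extract(MONTH, year_only)
except ValueError as e:
    print(e)
```

With this check, the `YEAR TO MONTH` case still yields `11`, while asking for `MONTH` from a `YEAR`-only interval raises an error instead of silently returning `0`.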

### Why are the changes needed?

Bug fix.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

New tests.

Closes #33247 from sarutak/fix-extract-interval.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Max Gekk <max.gekk@gmail.com>

### What changes were proposed in this pull request?

This is the followup from https://github.com/apache/spark/pull/33236#issuecomment-875242730, where we are fixing the config value of shuffled hash join, for all other test queries. Found all configs by searching in https://github.com/apache/spark/search?q=spark.sql.join.preferSortMergeJoin .

### Why are the changes needed?

Fix test to have better test coverage.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #33249 from c21/join-test.

Authored-by: Cheng Su <chengsu@fb.com>

Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>