### What changes were proposed in this pull request?

Spark's Web UI is using an older version of Bootstrap (v. 2.3.2) for the portal pages. Bootstrap 2.x was moved to EOL in Aug 2013 and Bootstrap 3.x was moved to EOL in July 2019 (https://github.com/twbs/release). Older versions of Bootstrap are also getting flagged in security scans for various CVEs:

- https://snyk.io/vuln/SNYK-JS-BOOTSTRAP-72889
- https://snyk.io/vuln/SNYK-JS-BOOTSTRAP-173700
- https://snyk.io/vuln/npm:bootstrap:20180529
- https://snyk.io/vuln/npm:bootstrap:20160627

I haven't validated each CVE, but it would be nice to resolve any potential issues and get on a supported release.

The bad news is that there have been quite a few changes between Bootstrap 2 and Bootstrap 4. I've tried updating the library, refactoring/tweaking the CSS and JS to maintain a similar appearance and functionality, and testing the UI for functionality and appearance. This is a fairly large change so I'm sure additional testing and fixes will be needed.

### How was this patch tested?

This has been manually tested, but there is a ton of functionality and there are many pages and detail pages so it is very possible bugs introduced from the upgrade were missed. Additional testing and feedback is welcomed. If it appears a whole page was missed let me know and I'll take a pass at addressing that page/section.

Closes #27370 from clarkead/bootstrap4-core-upgrade.

Authored-by: Dale Clarke <a.dale.clarke@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

I found a lot of scattered config entries in `Streaming`. I think these should be arranged in one unified place.

### Why are the changes needed?

To arrange the scattered config entries in one place.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27744 from beliefer/arrange-scattered-streaming-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is another PR for stage level scheduling. In particular, this adds changes to the dynamic allocation manager and the scheduler backend so that they can track which executors are needed per ResourceProfile. Note that the API is still private to Spark until the entire feature gets in, so this functionality will be there but only usable by tests for profiles other than the DefaultProfile.

The main changes here are simply tracking things on a ResourceProfile basis as well as sending the executor requests to the scheduler backend for all ResourceProfiles.

I introduce a ResourceProfileManager in this PR that tracks all the actual ResourceProfile objects, so that we can keep them in a single place and only pass around, and store in data structures, the resource profile id. The resource profile id can be used with the ResourceProfileManager to get the actual ResourceProfile contents.

There are various places in the code that use executor "slots" for things. The ResourceProfile adds functionality to keep that calculation in it. This logic is more complex than it should be because standalone mode and Mesos coarse-grained mode do not set the executor cores config. They default to all cores on the worker, so calculating slots is harder there.

This PR keeps the functionality to make the cores the limiting resource because the scheduler still uses that for "slots" for a few things.

This PR also adds the resource profile id to the Stage and stage info classes to make testing easier. The full set of changes will come with the scheduler PR that follows this one.

The PR stops at the scheduler backend pieces for the cluster manager, and the real YARN support hasn't been added here; that will again be in a separate PR. So this PR has a few of the API changes up to the cluster manager and then just uses the default profile requests to continue.

The code for the entire feature is here for reference: https://github.com/apache/spark/pull/27053/files although it needs to be upmerged again as well.

### Why are the changes needed?

Needed for stage level scheduling feature.

### Does this PR introduce any user-facing change?

No user facing api changes added here.

### How was this patch tested?

Lots of unit tests and manual testing. I tested on YARN, k8s, standalone, and local modes. Ran both failure and success cases.

Closes #27313 from tgravescs/SPARK-29148.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

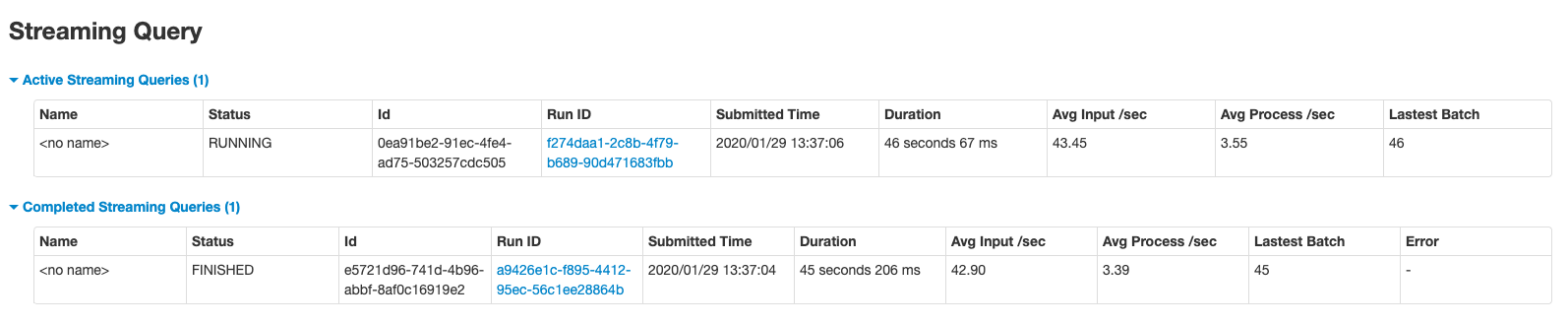

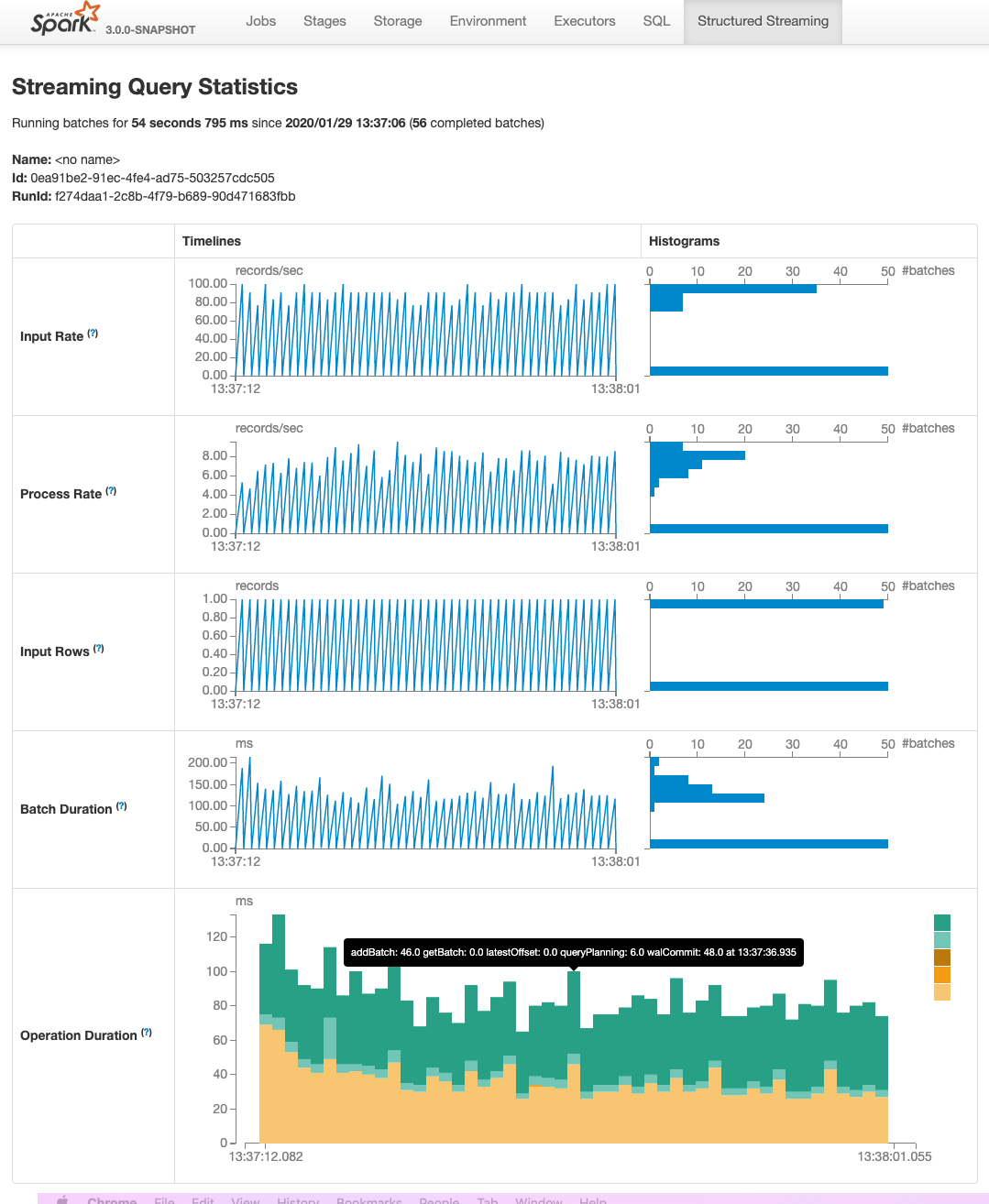

This PR adds two pages to Web UI for Structured Streaming:

- "/streamingquery": Streaming Query Page, providing some aggregate information for running/completed streaming queries.

- "/streamingquery/statistics": Streaming Query Statistics Page, providing detailed information for streaming query, including `Input Rate`, `Process Rate`, `Input Rows`, `Batch Duration` and `Operation Duration`

### Why are the changes needed?

It helps users better monitor Structured Streaming queries.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

- newly added and existing UTs

- manual test

Closes #26201 from uncleGen/SPARK-29543.

Lead-authored-by: uncleGen <hustyugm@gmail.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Genmao Yu <hustyugm@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

1. Revert "Preparing development version 3.0.1-SNAPSHOT": 56dcd79

2. Revert "Preparing Spark release v3.0.0-preview2-rc2": c216ef1

### Why are the changes needed?

Shouldn't change master.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

manual test:

https://github.com/apache/spark/compare/5de5e46..wangyum:revert-master

Closes #26915 from wangyum/revert-master.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

This PR aims to recover `spark.ui.port` and `spark.blockManager.port` from checkpoint like `spark.driver.port`.

### Why are the changes needed?

When the user configures these values, we can respect them.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the newly added test cases.

Closes #26827 from dongjoon-hyun/SPARK-30199.

Authored-by: Aaruna <aaruna@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

See https://issues.apache.org/jira/browse/SPARK-30195 for the background; I won't repeat it here. This is sort of a grab-bag of related issues.

### Why are the changes needed?

To cross-compile with Scala 2.13 later.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests for 2.12. I've been manually checking that this actually resolves the compile problems in 2.13 separately.

Closes #26826 from srowen/SPARK-30195.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Added tooltips for the duration columns in the batch table of the Streaming tab of the Web UI.

### Why are the changes needed?

Tooltips help users understand the columns of the batch table in the Streaming tab.

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

Manually tested.

Closes #26467 from iRakson/streaming_tab_tooltip.

Authored-by: root1 <raksonrakesh@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Use JUnit assertions in tests uniformly, not JVM assert() statements.

### Why are the changes needed?

assert() statements do not produce as useful errors when they fail, and, if they were somehow disabled, would fail to test anything.

### Does this PR introduce any user-facing change?

No. The assertion logic should be identical.

### How was this patch tested?

Existing tests.

Closes #26581 from srowen/assertToJUnit.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

All tooltip messages are now displayed in the centre.

### Why are the changes needed?

Tooltips sometimes hide the column data, and the tooltip display position is inconsistent across the UI.

### Does this PR introduce any user-facing change?

yes.

### How was this patch tested?

Manual test.

Closes #26263 from 07ARB/SPARK-29570.

Lead-authored-by: Ankitraj <8948111+07ARB@users.noreply.github.com>

Co-authored-by: 07ARB <ankitrajboudh@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

An executor's heartbeat is sent synchronously to BlockManagerMaster to let it know that the block manager is still alive. In a heavily loaded cluster, the heartbeat can time out and cause the block manager to re-register unexpectedly.

This improvement separates a heartbeat endpoint from the driver endpoint. In our production environment, this was really helpful in preventing executors from repeatedly going up and down.

### Why are the changes needed?

`BlockManagerMasterEndpoint` handles many events from executors, such as `RegisterBlockManager`, `GetLocations`, `RemoveShuffle`, `RemoveExecutor`, etc. In a heavily loaded cluster or app, it is always busy. The `BlockManagerHeartbeat` event was also handled in this endpoint, and we found that it may time out when the endpoint is busy. So we add a new endpoint, `BlockManagerMasterHeartbeatEndpoint`, to handle heartbeats separately.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #25971 from LantaoJin/SPARK-29298.

Lead-authored-by: lajin <lajin@ebay.com>

Co-authored-by: Alan Jin <lajin@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The `assertEquals` method of JUnit Assert requires the first parameter to be the expected value. In this PR, I propose to change the order of parameters when the expected value is passed as the second parameter.

### Why are the changes needed?

Passing the parameters in the wrong order produces a confusing message when the assert fails, especially when the parameters have a special string representation. For example:

```java
assertEquals(input1.add(input2), new CalendarInterval(5, 5, 367200000000L));
```

```
java.lang.AssertionError:
Expected :interval 5 months 5 days 101 hours
Actual :interval 5 months 5 days 102 hours
```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By existing tests.

Closes #26377 from MaxGekk/fix-order-in-assert-equals.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the sparkR version number check logic to allow jvm version like `3.0.0-preview`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release has passed.

### Why are the changes needed?

To make the Maven release repository accept the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the PySpark version from `3.0.0.dev0` to `3.0.0`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release has passed.

### Why are the changes needed?

To make the Maven release repository accept the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #26243 from jiangxb1987/3.0.0-preview-prepare.

Lead-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

Replace `Unit` with equivalent `()` where code refers to the `Unit` companion object.

### Why are the changes needed?

It doesn't compile otherwise in Scala 2.13.

- https://github.com/scala/scala/blob/v2.13.0/src/library/scala/Unit.scala#L30

### Does this PR introduce any user-facing change?

Should be no behavior change at all.

### How was this patch tested?

Existing tests.

Closes #26070 from srowen/SPARK-29411.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Syntax like `'foo` is deprecated in Scala 2.13. Replace usages with `Symbol("foo")`

### Why are the changes needed?

Avoids ~50 deprecation warnings when attempting to build with 2.13.

### Does this PR introduce any user-facing change?

None, should be no functional change at all.

### How was this patch tested?

Existing tests.

Closes #26061 from srowen/SPARK-29392.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Scala 2.13 moves the parallel collections classes to a separate library, so first, this establishes a `scala-2.13` profile to bring it back, for future use.

However the library enables use of `.par` implicit conversions via a new class that is not in 2.12, which makes cross-building hard. This implements a suggested workaround from https://github.com/scala/scala-parallel-collections/issues/22 to avoid `.par` entirely.

### Why are the changes needed?

To compile for Scala 2.13 and, later, to work with it.

### Does this PR introduce any user-facing change?

Should not, no.

### How was this patch tested?

Existing tests.

Closes #25980 from srowen/SPARK-29296.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to remove `scalatest` deprecation warnings with the following changes.

- `org.scalatest.mockito.MockitoSugar` -> `org.scalatestplus.mockito.MockitoSugar`

- `org.scalatest.selenium.WebBrowser` -> `org.scalatestplus.selenium.WebBrowser`

- `org.scalatest.prop.Checkers` -> `org.scalatestplus.scalacheck.Checkers`

- `org.scalatest.prop.GeneratorDrivenPropertyChecks` -> `org.scalatestplus.scalacheck.ScalaCheckDrivenPropertyChecks`

### Why are the changes needed?

According to the Jenkins logs, there are 118 warnings about this.

```
grep "is deprecated" ~/consoleText | grep scalatest | wc -l
118
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

After Jenkins passes, we need to check the Jenkins log.

Closes #25982 from dongjoon-hyun/SPARK-29307.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Scala 2.13 emits a deprecation warning for procedure-like declarations:

```
def foo() {
  ...
}
```

This is equivalent to the following, so it should be changed to avoid the warning:

```
def foo(): Unit = {
  ...
}
```

### Why are the changes needed?

It will avoid about a thousand compiler warnings when we start to support Scala 2.13. I wanted to make the change in 3.0 as there are less likely to be back-ports from 3.0 to 2.4 than 3.1 to 3.0, for example, minimizing that downside to touching so many files.

Unfortunately, that makes this quite a big change.

### Does this PR introduce any user-facing change?

No behavior change at all.

### How was this patch tested?

Existing tests.

Closes #25968 from srowen/SPARK-29291.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch changes ReceiverSuite."receiver_life_cycle" to record the actual calls with timestamps in FakeReceiver/FakeReceiverSupervisor, so the test no longer relies on the timing of stopping and starting the receiver during a restart. This lets us use a sufficiently large timeout when verifying the restart, since we can verify stopping and starting together.

### Why are the changes needed?

The test is flaky without this patch. We increased the timeout to fix the flakiness of this test (15adcc8273), but even with the longer timeout it still fails intermittently.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

I reproduced the test failure artificially with the diff below:

```
diff --git a/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala b/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala
index faf6db82d5..d8977543c0 100644
--- a/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala
+++ b/streaming/src/main/scala/org/apache/spark/streaming/receiver/ReceiverSupervisor.scala
@@ -191,9 +191,11 @@ private[streaming] abstract class ReceiverSupervisor(
       // thread pool.
       logWarning("Restarting receiver with delay " + delay + " ms: " + message,
         error.getOrElse(null))
+      Thread.sleep(1000)
       stopReceiver("Restarting receiver with delay " + delay + "ms: " + message, error)
       logDebug("Sleeping for " + delay)
       Thread.sleep(delay)
+      Thread.sleep(1000)
       logInfo("Starting receiver again")
       startReceiver()
       logInfo("Receiver started again")
```

and confirmed that the test with this patch doesn't fail with that change applied.

Closes #25862 from HeartSaVioR/SPARK-23197-v2.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a follow-up of the [review comment](https://github.com/apache/spark/pull/25706#discussion_r321923311).

This patch unifies the default wait time to 10 seconds, as that fits most UTs (they have smaller timeouts) and doesn't add latency, since the wait returns as soon as the condition is met.

This patch doesn't touch the one that waits 100000 milliseconds (100 seconds), so as not to break anything unintentionally, though I doubt that we really need to wait for 100 seconds.
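The reason a generous default adds no latency is that a wait of this kind polls and returns as soon as the condition holds. A minimal sketch of that behavior follows; `WaitSketch` and `waitUntil` are hypothetical names for illustration and are not the Spark test utility touched by this patch.

```java
import java.util.function.BooleanSupplier;

// Hypothetical polling helper; the name and signature are illustrative, not Spark's API.
final class WaitSketch {
    // Polls `condition` until it holds or `timeoutMs` elapses.
    // Returns as soon as the condition is true, so a large timeout only costs time on failure.
    static boolean waitUntil(BooleanSupplier condition, long timeoutMs) {
        long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
        while (System.nanoTime() - deadline < 0) {
            if (condition.getAsBoolean()) {
                return true;
            }
            try {
                Thread.sleep(10); // short poll interval
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt and give up
                return false;
            }
        }
        return condition.getAsBoolean(); // one final check at the deadline
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        boolean ok = waitUntil(() -> true, 10_000); // 10 second timeout
        long elapsedMs = (System.nanoTime() - start) / 1_000_000L;
        System.out.println("condition met: " + ok + ", waited about " + elapsedMs + " ms");
    }
}
```

With a condition that is already true, the call returns on the first poll, so only a test that is actually failing pays the full timeout.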

### Why are the changes needed?

It simplifies the test code and gets rid of various heuristic timeout values.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

CI build will test the patch, as it would be the best environment to test the patch (builds are running there).

Closes #25837 from HeartSaVioR/MINOR-unify-default-wait-time-for-wait-until-empty.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

HDFS doesn't update the file size reported by the NameNode if you just keep writing to the file; this makes the SHS believe the file is inactive, and so it may delete it after the configured max age for log files.

This change uses hsync to keep the log file as up to date as possible when using HDFS. It also disables erasure coding by default for these logs, since hsync (& friends) does not work with EC.

Tested with a SHS configured to aggressively clean up logs; verified a spark-shell session kept updating the log, which was not deleted by the SHS.

Closes #25819 from vanzin/SPARK-29105.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

[SPARK-27122](https://github.com/apache/spark/pull/24088) fixes a `ClassCastException` in the `yarn` module by introducing `DelegatingServletContextHandler`. Initially, this was discovered with JDK9+, but the class path issues affected JDK8 environments, too. After [SPARK-28709](https://github.com/apache/spark/pull/25439), I also hit a similar issue in the `streaming` module.

This PR aims to fix the `streaming` module by adding `getContextPath` to `DelegatingServletContextHandler` and using it.

### Why are the changes needed?

Currently, when we test `streaming` module independently, it fails like the following.

```
$ build/mvn test -pl streaming
...
UISeleniumSuite:
- attaching and detaching a Streaming tab *** FAILED ***
  java.lang.ClassCastException: org.sparkproject.jetty.servlet.ServletContextHandler cannot be cast to org.eclipse.jetty.servlet.ServletContextHandler
...
Tests: succeeded 337, failed 1, canceled 0, ignored 1, pending 0
*** 1 TEST FAILED ***
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the modified tests. And do the following manually.

Since you can observe this when you run `streaming` module test only (instead of running all), you need to install the changed `core` module and use it.

```
$ java -version
openjdk version "1.8.0_222"
OpenJDK Runtime Environment (AdoptOpenJDK)(build 1.8.0_222-b10)
OpenJDK 64-Bit Server VM (AdoptOpenJDK)(build 25.222-b10, mixed mode)
$ build/mvn install -DskipTests
$ build/mvn test -pl streaming
```

Closes #25791 from dongjoon-hyun/SPARK-29087.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

Replace use of `SerializationUtils.clone` with new `Utils.cloneProperties` method

Add benchmark + results showing dramatic speed up for effectively equivalent functionality.

### What changes were proposed in this pull request?

While I am not sure that SerializationUtils.clone is a performance issue in production, I am sure that it is overkill for the task it is doing (providing a distinct copy of a `Properties` object).

This PR provides a benchmark showing the dramatic improvement over the clone operation and replaces uses of `SerializationUtils.clone` on `Properties` with the more specialized `Utils.cloneProperties`.
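As a rough illustration of why a specialized copy is cheaper, a `Properties` object can be duplicated by copying its entries directly instead of serializing and deserializing it. The sketch below is an assumption about the general approach, not the actual `Utils.cloneProperties` source; the class name and the property key are hypothetical.

```java
import java.util.Properties;

// Illustrative sketch only; not the actual Spark `Utils.cloneProperties` source.
final class ClonePropertiesSketch {
    // Copies every entry into a fresh Properties object without a serialization round trip.
    static Properties cloneProperties(Properties props) {
        if (props == null) {
            return null;
        }
        Properties copy = new Properties();
        // Keys and values are shared by reference, which is safe when they are immutable Strings.
        props.forEach(copy::put);
        return copy;
    }

    public static void main(String[] args) {
        Properties original = new Properties();
        original.setProperty("example.key", "demo"); // hypothetical key
        Properties copy = cloneProperties(original);
        copy.setProperty("example.key", "changed");
        // The original is unaffected by changes made to the copy.
        System.out.println(original.getProperty("example.key"));
    }
}
```

Because entries are shared by reference rather than deep-copied, this kind of copy is only equivalent to a serialization-based clone when the stored objects are immutable, which is the case for String keys and values.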

### Does this PR introduce any user-facing change?

Strings are immutable so there is no reason to serialize and deserialize them, it just creates extra garbage.

The only functionality that would be changed is the unsupported insertion of non-String objects into the spark local properties.

### How was this patch tested?

1. Pass the Jenkins with the existing tests.

2. Since this is a performance improvement PR, manually run the benchmark.

Closes #25787 from databricks-david-lewis/SPARK-29081.

Authored-by: David Lewis <david.lewis@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

JIRA: https://issues.apache.org/jira/browse/SPARK-29050

- Change 'a hdfs' to 'an hdfs'
- Change 'an unique' to 'a unique'
- Change 'an url' to 'a url'
- Change 'a error' to 'an error'

Closes #25756 from dengziming/feature_fix_typos.

Authored-by: dengziming <dengziming@growingio.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch fixes the flaky test StreamingContextSuite "stop slow receiver gracefully" by adding a flag that records whether initialization of the slow receiver has completed, and waiting for that flag to become true. Because the receiver has to be submitted as a job and initialized in an executor, 500ms might not be enough to cover all cases.

### Why are the changes needed?

We received some reports of failures of this test. Please refer to [SPARK-24663](https://issues.apache.org/jira/browse/SPARK-24663).

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Modified UT. I artificially delayed the handling of job submission by adding the code below to `DAGScheduler.submitJob`:

```
if (rdd != null && rdd.name != null && rdd.name.startsWith("Receiver")) {
  println(s"Receiver Job! rdd name: ${rdd.name}")
  Thread.sleep(1000)
}
```

With this delay, the test "stop slow receiver gracefully" failed on current master and passed with the patch.

Closes #25725 from HeartSaVioR/SPARK-24663.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This patch hardens tests against leaking a SparkContext that is newly created while initializing a StreamingContext. A leaked SparkContext in one test would make most of the following tests fail as well, so this patch applies defensive programming, trying its best to ensure the SparkContext is cleaned up.

### Why are the changes needed?

We saw some cases in CI builds where a SparkContext was leaked and other tests were affected by it. Ideally we should isolate the environment among tests if possible.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Modified UTs.

Closes #25709 from HeartSaVioR/SPARK-29007.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This patch fixes the bug of accessing `DStreamCheckpointData.currentCheckpointFiles` without a guard, which makes the test `basic rdd checkpoints + dstream graph checkpoint recovery` flaky.

There are two possible causes of the test failure:

1. The checkpoint logic is too slow, so the checkpoint cannot be handled within the real delay.

2. `DStreamCheckpointData.update` is not thread-safe: it clears `currentCheckpointFiles` and then adds the new checkpointFiles. A race condition can occur between the test's main thread and JobGenerator's event loop thread.

`lastProcessedBatch` guarantees that all events for given time are processed, as commented:

`// last batch whose completion,checkpointing and metadata cleanup has been completed`. That means that if we wait for `lastProcessedBatch` to reach exactly the time to which the test advanced the clock (a multiple of both the checkpoint interval and the batch duration), we can expect that nothing further will happen in DStreamCheckpointData unless we advance the clock again.

This patch applies the observation above.

### Why are the changes needed?

The test is reported as flaky as [SPARK-28214](https://issues.apache.org/jira/browse/SPARK-28214), and the test code seems unsafe.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Modified UT. I added some debug messages and confirmed that no method in DStreamCheckpointData is called between "after waiting lastProcessedBatch" and "advancing clock", even when I added a long sleep between the two, which avoids the race condition.

I was also able to make the existing test fail artificially (not 100% consistently, but with high probability) by adding a sleep between `currentCheckpointFiles.clear()` and `currentCheckpointFiles ++= checkpointFiles` in `DStreamCheckpointData.update`, and confirmed that the modified test does not fail across multiple runs.

Closes #25731 from HeartSaVioR/SPARK-28214.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This change fixes issue SPARK-28912.

### Why are the changes needed?

If the checkpoint directory is set to a name that matches the regex pattern used for checkpoint files, the logs are flooded with MatchError exceptions and old checkpoint files are not removed.
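One plausible way a directory name can cause this kind of problem is when a pattern is searched for anywhere in the full path rather than matched against the file name alone. The sketch below illustrates that difference; the pattern, class name, and file names are assumptions made for illustration and are not copied from the Spark source.

```java
import java.util.regex.Pattern;

// Sketch of the failure mode; the pattern is an assumed stand-in for the
// checkpoint-file pattern, not a copy of the Spark source.
final class CheckpointNameSketch {
    static final Pattern CHECKPOINT_FILE = Pattern.compile("checkpoint-([\\d]+)([\\w\\.]*)");

    // Loose check: searches anywhere in the full path, so a matching directory name
    // makes every file under that directory look like a checkpoint file.
    static boolean looseMatch(String fullPath) {
        return CHECKPOINT_FILE.matcher(fullPath).find();
    }

    // Strict check: the file name itself must match the whole pattern.
    static boolean strictMatch(String fullPath) {
        String fileName = fullPath.substring(fullPath.lastIndexOf('/') + 1);
        return CHECKPOINT_FILE.matcher(fileName).matches();
    }

    public static void main(String[] args) {
        String unrelated = "hdfs://localhost:9000/checkpoint-01/some-other-file";
        String real = "hdfs://localhost:9000/checkpoint-01/checkpoint-1567000000000";
        System.out.println(looseMatch(unrelated));  // true: the directory name matched
        System.out.println(strictMatch(unrelated)); // false: the file name does not match
        System.out.println(strictMatch(real));      // true
    }
}
```

In this sketch, a file that passes the loose check but not the strict one is exactly the kind of entry that would later fail a full-pattern extraction.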

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually.

1. Start Hadoop in a pseudo-distributed mode.

2. In another terminal, run the command `nc -lk 9999`.

3. In the Spark shell execute the following statements:

```scala
import org.apache.spark.streaming.{Seconds, StreamingContext}

val ssc = new StreamingContext(sc, Seconds(30))
ssc.checkpoint("hdfs://localhost:9000/checkpoint-01")
val lines = ssc.socketTextStream("localhost", 9999)
val words = lines.flatMap(_.split(" "))
val pairs = words.map(word => (word, 1))
val wordCounts = pairs.reduceByKey(_ + _)
wordCounts.print()
ssc.start()
ssc.awaitTermination()
```

Closes #25654 from avkgh/SPARK-28912.

Authored-by: avk <nullp7r@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The Experimental and Evolving annotations are both (like Unstable) used to express that an API may change. However, there are many things in the code that have been marked that way since as far back as Spark 1.x. Per the dev thread, anything introduced at or before Spark 2.3.0 is pretty much 'stable' in that it would not change without a deprecation cycle. Therefore I'd like to remove most of these annotations, and remove the `:: Experimental ::` scaladoc tag too. Likewise for Python and R.

The changes below can be summarized as:

- Generally, anything introduced at or before Spark 2.3.0 is no longer marked as Evolving or Experimental

- Obviously experimental items like DSv2, Barrier mode, ExperimentalMethods are untouched

- I _did_ unmark a few MLlib classes introduced in 2.4, as I am quite confident they're not going to change (e.g. KolmogorovSmirnovTest, PowerIterationClustering)

It's a big change to review, so I'd suggest scanning the list of _files_ changed to see if any area seems like it should remain partly experimental and examine those.

### Why are the changes needed?

Many of these annotations are incorrect; the APIs are de facto stable. Leaving them also makes legitimate usages of the annotations less meaningful.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25558 from srowen/SPARK-28855.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

During graceful shutdown of ``StreamingContext``, ``graph.stop()`` is invoked right after stopping the ``timer`` that generates new jobs. It is therefore possible that the latest jobs generated by the timer are still being generated when ``graph.stop()`` closes objects required for job generation, e.g. the Kafka consumer, and generation fails. This also leads to waiting out the full ``spark.streaming.gracefulStopTimeout``, which defaults to 10 batch intervals. The graph should be stopped later, after ``haveAllBatchesBeenProcessed`` has completed.

### How was this patch tested?

Added test to existing test suite.

Closes #25511 from choojoyq/SPARK-22955-job-generation-error-on-graceful-stop.

Authored-by: Nikita Gorbachevsky <nikitag@playtika.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In my application, Spark Streaming is restarted programmatically by stopping the StreamingContext without stopping the SparkContext, and then creating and starting a new one. I use this for automatic detection of Kafka topic/partition changes and automatic failover in case of non-fatal exceptions.

However, I noticed that after multiple restarts the driver fails with OOM. While investigating a heap dump, I found that the StreamingContext object isn't collected by GC after stopping.

<img width="1901" alt="Screen Shot 2019-08-14 at 12 23 33" src="https://user-images.githubusercontent.com/13151161/63010149-83f4c200-be8e-11e9-9f48-12b6e97839f4.png">

There are several places that hold a reference to it:

1. StreamingTab registers StreamingJobProgressListener, which holds a reference to the StreamingContext, directly on the LiveListenerBus shared queue via the `ssc.sc.addSparkListener(listener)` call. However, this listener isn't unregistered in the stop method.

2. The JSON handlers (/streaming/json and /streaming/batch/json) aren't unregistered in SparkUI, while they hold a reference to StreamingJobProgressListener. Basically the same issue affects all the pages; I assume that renderJsonHandler should be added to the pageToHandlers cache when attachPage is invoked, so that it is unregistered as well on detachPage.

3. SparkUI holds a reference to StreamingJobProgressListener in the corresponding local variable, which isn't cleared after the StreamingContext is stopped.
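The first leak in the list above can be illustrated with a minimal sketch. All names here (`Bus`, `Context`, `ContextListener`) are hypothetical stand-ins, not Spark classes: a long-lived bus keeps a listener alive, the listener points back at its owning context, and the context only becomes collectable once `stop()` removes the listener.

```scala
import scala.collection.mutable

object ListenerLeakSketch {
  trait Listener

  // Hypothetical stand-in for a long-lived, shared listener bus.
  class Bus {
    private val listeners = mutable.Set.empty[Listener]
    def add(l: Listener): Unit = listeners += l
    def remove(l: Listener): Unit = listeners -= l
    def size: Int = listeners.size
  }

  // The listener holds a reference back to the context that created it.
  class ContextListener(val owner: Context) extends Listener

  // Hypothetical stand-in for a restartable context.
  class Context(bus: Bus) {
    private val listener = new ContextListener(this)
    bus.add(listener)

    // Without this removal the bus would keep the listener, and through it
    // this context, reachable after stop().
    def stop(): Unit = bus.remove(listener)
  }
}
```

Each restart that skips the removal leaves one more context reachable from the bus, which matches the growth pattern described for the heap dump.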

## How was this patch tested?

Added tests to existing test suites.

After I applied these changes via reflection in my app, the OOM on the driver side was gone.

Closes #25439 from choojoyq/SPARK-28709-fix-streaming-context-leak-on-stop.

Authored-by: Nikita Gorbachevsky <nikitag@playtika.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR fixes typos in comments and replaces explicit type arguments with the diamond operator `<>` for Java 8+.

## How was this patch tested?

Manually tested.

Closes #25338 from younggyuchun/younggyu.

Authored-by: younggyu chun <younggyuchun@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This patch keeps usage consistent wherever the UTF-8 charset is needed, using `StandardCharsets.UTF_8` instead of the string "UTF-8". Where a String is required, `StandardCharsets.UTF_8.name()` is used.

This change also brings the benefit of getting rid of `UnsupportedEncodingException`, as we're providing `Charset` instead of `String` whenever possible.

This also changes some private Catalyst helper methods to operate on encodings as `Charset` objects rather than strings.
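As a minimal sketch of the pattern described above (the object and method names are illustrative and not taken from the patch), passing a `Charset` to the JDK string APIs avoids the overloads that declare `UnsupportedEncodingException`:

```scala
import java.nio.charset.StandardCharsets

object CharsetExample {
  // The Charset overload of getBytes does not declare
  // UnsupportedEncodingException, unlike getBytes("UTF-8").
  def encode(s: String): Array[Byte] = s.getBytes(StandardCharsets.UTF_8)

  // The same applies to the String constructor that takes a Charset.
  def decode(bytes: Array[Byte]): String = new String(bytes, StandardCharsets.UTF_8)

  // When an API only accepts a charset name, derive it from the constant
  // instead of repeating the string literal.
  val charsetName: String = StandardCharsets.UTF_8.name()
}
```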

## How was this patch tested?

Existing unit tests.

Closes #25335 from HeartSaVioR/SPARK-28601.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

It seems that https://bugs.openjdk.java.net/browse/JDK-8068730 makes `InputStreamsSuite` very flaky.

<img width="903" alt="error" src="https://user-images.githubusercontent.com/9700541/59727067-017eb780-91e9-11e9-8bb0-ac5f4c1bc44d.png">

As the Jenkins result shows, this can be reproduced frequently with JDK9+.

```
$ build/sbt "streaming/testOnly *.InputStreamsSuite"
[info] - Modified files are correctly detected. *** FAILED *** (134 milliseconds)
[info] Set("renamed") did not equal Set() (InputStreamsSuite.scala:312)
[info] org.scalatest.exceptions.TestFailedException:
```

The reason is that the modification time of `renamed.txt` is greater than the clock in JDK9+, so Spark ignores the file with a **not selected** message. In JDK8, the modification time generated by this test case doesn't have a `milliseconds` part.

```
Getting new files for time 1560896662000, ignoring files older than 1560896659679
file:/.../streaming/subdir/renamed.txt not selected as mod time 1560896662679 > current time 1560896662000
file:/.../streaming/subdir/existing ignored as mod time 1560896657679 <= ignore time 1560896659679
Finding new files took 0 ms
New files at time 1560896662000 ms:
```
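The two rejection messages in the log above imply a simple window check on the modification time. The sketch below is reconstructed only from those log lines; `isNewFile` and its parameter names are hypothetical, and this is not Spark's actual file selection code.

```scala
object FileSelectionSketch {
  // A file is picked up only if its modification time falls in the window
  // (ignoreTime, currentTime]. This mirrors the two log messages:
  //   "ignored as mod time <= ignore time"
  //   "not selected as mod time > current time"
  def isNewFile(modTime: Long, currentTime: Long, ignoreTime: Long): Boolean =
    modTime > ignoreTime && modTime <= currentTime
}
```

With the values from the log, `renamed.txt` (mod time 1560896662679) falls 679 ms after the batch time 1560896662000, so it is rejected once the modification time carries a milliseconds part.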

## How was this patch tested?

Pass Jenkins, and manually repeat the following with JDK11 10 times.

```
$ build/sbt "streaming/testOnly *.InputStreamsSuite"
```

Closes #24904 from dongjoon-hyun/SPARK-28101.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

This change refactors the portions of the ExecutorAllocationManager class that track executor state into a new class, to achieve a few goals:

- make the code easier to understand

- better separate concerns (task backlog vs. executor state)

- less synchronization between event and allocation threads

- less coupling between the allocation code and executor state tracking

The executor tracking code was moved to a new class (ExecutorMonitor) that encapsulates all the logic of tracking what happens to executors and when they can be timed out. The logic to actually remove the executors remains in the EAM, since it still requires information that is not tracked by the new executor monitor code.

In the executor monitor itself, of interest, specifically, is a change in how cached blocks are tracked; instead of polling the block manager, the monitor now uses events to track which executors have cached blocks, and is able to detect also unpersist events and adjust the time when the executor should be removed accordingly. (That's the bug mentioned in the PR title.)

Because of the refactoring, a few tests in the old EAM test suite were removed, since they're now covered by the newly added test suite. The EAM suite was also changed a little bit to not instantiate a SparkContext every time. This allowed some cleanup, and the tests also run faster.

Tested with new and updated unit tests, and with multiple TPC-DS workloads running with dynamic allocation on; also some manual tests for the caching behavior.

Closes #24704 from vanzin/SPARK-20286.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

## What changes were proposed in this pull request?

Use a single descending sort in `HDFSMetadataLog.getLatest` instead of two operations (an ascending sort followed by a reverse).
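A minimal sketch of the difference, using a plain array of batch ids rather than the actual `HDFSMetadataLog` code (the object and method names are illustrative):

```scala
object DescendingSortExample {
  // Before: ascending sort followed by a separate reverse.
  def latestFirstOld(ids: Array[Long]): Array[Long] = ids.sorted.reverse

  // After: one sort with a reversed ordering.
  def latestFirstNew(ids: Array[Long]): Array[Long] =
    ids.sorted(Ordering[Long].reverse)
}
```

Both forms return the same result; the second avoids the extra pass and the intermediate array.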

## How was this patch tested?

Jenkins

Closes #24711 from wenxuanguan/bug-fix-hdfsmetadatalog.

Authored-by: wenxuanguan <choose_home@126.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

# What changes were proposed in this pull request?

## Problem statement

An executor that has persisted blocks is not considered idle, and is therefore not released by dynamic allocation after the regular timeout `spark.dynamicAllocation.executorIdleTimeout`; instead a separate configuration, `spark.dynamicAllocation.cachedExecutorIdleTimeout`, applies, which defaults to `Integer.MAX_VALUE`. This is because releasing the executor also means losing the persisted blocks (as the metadata for individual blocks, called `BlockInfo`, is kept in memory), and when the RDD is referenced later on, the lost blocks will be recomputed.

On the other hand, keeping executors too long without any task to work on is also a waste of resources (as executors are reserved for the application by the resource manager).

## Solution

This PR focuses on the first part of SPARK-25888: it extends the external shuffle service with the capability to serve RDD blocks that are persisted on the local disk store by the executors. Moreover, when this feature is enabled by setting the `spark.shuffle.service.fetch.rdd.enabled` config to true and a block is reported as persisted to disk, the external shuffle service instance running on the same host as the executor is also registered (along with the reporting block manager) as a possible location for fetching it.

## Some implementation detail

Some explanation about the decisions made during the development:

- the location list for fetching a block was randomized, but the order of the groups (same host, same rack, others) was kept. In this PR the order of the groups is kept and the external shuffle service is added to the end of each group.

- `BlockManagerInfo` is not introduced for the external shuffle service; only a lightweight solution is taken: a hash map from `BlockId` to `BlockStatus`. A type alias would make the source more readable but I know it is discouraged. On the other hand, a new class wrapping this hash map would introduce unnecessary indirection.

- when this feature is on, the cleanup triggered when executors are removed (which is handled in `ExternalShuffleBlockResolver`) is modified to keep the disk-persisted RDD blocks. This cleanup is triggered in standalone mode when the `spark.storage.cleanupFilesAfterExecutorExit` config is set.

- the unpersisting of an RDD is extended to use the external shuffle service for disk-persisted RDD blocks when the original executor that created the blocks has already been released. New block transport messages are introduced to support this: `RemoveBlocks` and `BlocksRemoved`.

# How was this patch tested?

## Unit tests

### ExternalShuffleServiceSuite

Here the complete use case is tested by the "SPARK-25888: using external shuffle service fetching disk persisted blocks" test, with a tiny difference: the executor is killed manually, so the test is a bit faster than waiting for the idle timeout.

### ExternalShuffleBlockHandlerSuite

Tests the fetching of the RDD blocks via the external shuffle service.

### BlockManagerInfoSuite

This is a new suite. As the `BlockManagerInfo` behaviour depends very much on whether the external shuffle service is enabled, all the tests are executed with and without it.

### BlockManagerSuite

Tests the sorting of the block locations.

## Manually on YARN

Spark App was:

~~~scala
package com.mycompany

import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkContext, SparkConf}
import org.apache.spark.storage.StorageLevel

object TestAppDiskOnlyLevel {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("test-app")

    println("Attila: START")
    val sc = new SparkContext(conf)
    val rdd = sc.parallelize(0 until 100, 10)
      .map { i =>
        println(s"Attila: calculate first rdd i=$i")
        Thread.sleep(1000)
        i
      }

    rdd.persist(StorageLevel.DISK_ONLY)
    rdd.count()

    println("Attila: First RDD is processed, waiting for 60 sec")
    Thread.sleep(60 * 1000)

    println("Attila: Num executors must be 0 as executorIdleTimeout is way over")
    val rdd2 = sc.parallelize(0 until 10, 1)
      .map(i => (i, 1))
      .persist(StorageLevel.DISK_ONLY)

    rdd2.count()
    println("Attila: Second RDD with one partition (only one executors must be alive)")

    // reduce runs as user code to detect the empty seq (empty blocks)
    println("Calling collect on the first RDD: " + rdd.collect().reduce(_ + _))
    println("Attila: STOP")
  }
}
~~~

I submitted it with the following configuration:

~~~bash
spark-submit --master yarn \
  --conf spark.dynamicAllocation.enabled=true \
  --conf spark.dynamicAllocation.executorIdleTimeout=30 \
  --conf spark.dynamicAllocation.cachedExecutorIdleTimeout=90 \
  --class com.mycompany.TestAppDiskOnlyLevel dyn_alloc_demo-core_2.11-0.1.0-SNAPSHOT-jar-with-dependencies.jar
~~~

Checked the result by filtering for the side effect of the task calculations:

~~~bash
[userserver ~]$ yarn logs -applicationId application_1556299359453_0001 | grep "Attila: calculate" | wc -l
WARNING: YARN_OPTS has been replaced by HADOOP_OPTS. Using value of YARN_OPTS.
19/04/26 10:31:59 INFO client.RMProxy: Connecting to ResourceManager at apiros-1.gce.company.com/172.31.115.165:8032
100
~~~

So there were only 100 task executions and not 200 (which would be the case with re-computation).

Moreover, from the submit/launcher log we can see that the executors really were stopped in between (note that the new total is 0 before the last line):

~~~
[userserver ~]$ grep "Attila: Num executors must be 0" -B 2 spark-submit.log
19/04/26 10:24:27 INFO cluster.YarnScheduler: Executor 9 on apiros-3.gce.company.com killed by driver.
19/04/26 10:24:27 INFO spark.ExecutorAllocationManager: Existing executor 9 has been removed (new total is 0)
Attila: Num executors must be 0 as executorIdleTimeout is way over
~~~

[Full spark submit log](https://github.com/attilapiros/spark/files/3122465/spark-submit.log)

I also ran a test after changing the `DISK_ONLY` storage level to `MEMORY_ONLY` for the first RDD. After this change, no executor was removed during the 60 sec wait.

Closes #24499 from attilapiros/SPARK-25888-final.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

Fixed the `spark-<version>-yarn-shuffle.jar` artifact packaging to shade the native netty libraries:

- shade the `META-INF/native/libnetty_*` native libraries when packaging the yarn shuffle service jar. This is required because the netty library loader derives the native library name from the shaded package name.

- updated the `org/spark_project` shade package prefix to `org/sparkproject` (i.e. removed the underscore), as the former breaks the netty native lib loading.

This was causing the yarn external shuffle service to fail when `spark.shuffle.io.mode=EPOLL`.

## How was this patch tested?

Manual tests

Closes #24502 from amuraru/SPARK-27610_master.

Authored-by: Adi Muraru <amuraru@adobe.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

I want to get rid of as much use of `scala.language.existentials` as possible for 3.0. It's a complicated language feature that generates warnings unless this value is imported. It might even be on the way out of Scala: https://contributors.scala-lang.org/t/proposal-to-remove-existential-types-from-the-language/2785

For Spark, it comes up mostly where the code plays fast and loose with generic types, not the advanced situations you'll often see referenced where this feature is explained. For example, it comes up in cases where a function returns something like `(String, Class[_])`. Scala doesn't like matching this to any other instance of `(String, Class[_])` because doing so requires inferring the existence of some type that satisfies both. Seems obvious if the generic type is a wildcard, but, not technically something Scala likes to let you get away with.

This is a large PR, and it only gets rid of _most_ instances of `scala.language.existentials`. The change should be all compile-time and shouldn't affect APIs or logic.

Many of the changes simply touch up sloppiness about generic types, making the known correct value explicit in the code.

Some fixes involve being more explicit about the existence of generic types in methods. For instance, `def foo(arg: Class[_])` seems innocent enough but should really be declared `def foo[T](arg: Class[T])` to let Scala select and fix a single type when evaluating calls to `foo`.

For kind of surprising reasons, this comes up in places where code evaluates a tuple of things that involve a generic type, but is OK if the two parts of the tuple are evaluated separately.

One key change was altering `Utils.classForName(...): Class[_]` to the more correct `Utils.classForName[T](...): Class[T]`. This caused a number of small but positive changes to callers that otherwise had to cast the result.
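The two signature changes described above can be sketched as follows. The `classForName` here is a simplified, hypothetical stand-in for `Utils.classForName` (the real method's parameters and class loader handling are not reproduced), and `fooWildcard` is just an illustrative name for the original `foo`.

```scala
object GenericSignatures {
  // Wildcard form: the type argument is unknown, so nothing about it
  // can be carried through to the result.
  def fooWildcard(arg: Class[_]): String = arg.getName

  // Type-parameter form: Scala fixes a single T per call, so the
  // result type can refer to it.
  def foo[T](arg: Class[T]): Class[T] = arg

  // Simplified stand-in for Utils.classForName[T]: the caller names the
  // expected type once instead of casting the result at each call site.
  // The cast is unchecked, so the caller is responsible for T matching name.
  def classForName[T](name: String): Class[T] =
    Class.forName(name).asInstanceOf[Class[T]]
}
```

A caller can then write `val c: Class[String] = GenericSignatures.classForName[String]("java.lang.String")` without a cast of its own.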

In several tests, `Dataset[_]` was used where `DataFrame` seems to be the clear intent.

Finally, in a few cases in MLlib, the return type `this.type` was used where there are no subclasses of the class that uses it. This really isn't needed and causes issues for Scala reasoning about the return type. These are just changed to be concrete classes as return types.

After this change, we have only a few classes that still import `scala.language.existentials` (because modifying them would require extensive rewrites to fix) and no build warnings.

## How was this patch tested?

Existing tests.

Closes #24431 from srowen/SPARK-27536.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This patch fixes several flaky tests.

## How was this patch tested?

N/A

Closes #24434 from gatorsmile/fixFlakyTest.

Lead-authored-by: gatorsmile <gatorsmile@gmail.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Fix build warnings -- see some details below.

But mostly, remove use of postfix syntax where it causes warnings without the `scala.language.postfixOps` import. This is mostly in expressions like "120000 milliseconds", which I'd like to simplify to things like "2.minutes" anyway.
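A small sketch of the rewrite, using the standard `scala.concurrent.duration` DSL (the object and value names are illustrative):

```scala
import scala.concurrent.duration._

object DurationExample {
  // Postfix form, which warns without `import scala.language.postfixOps`:
  //   val timeout = 120000 milliseconds

  // Method-call form: no language import needed.
  val timeout: FiniteDuration = 120000.milliseconds

  // The simplified equivalent.
  val simplified: FiniteDuration = 2.minutes
}
```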

## How was this patch tested?

Existing tests.

Closes #24314 from srowen/SPARK-27404.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

While reviewing #24235 I found a possible minor addition: `FileSystem.delete` returns a boolean that is not yet checked. In this PR I've added a warning message when it returns false. I've marked this as MINOR because no control flow change is introduced.
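The pattern can be sketched without a Hadoop dependency by using `java.io.File.delete()`, which also reports failure through its boolean result. This is a stand-in for illustration only: the helper name and the message text are hypothetical, and the patch itself checks Hadoop's `FileSystem.delete` and logs a warning rather than returning one.

```scala
import java.io.File

object DeleteCheckSketch {
  // Returns the warning text that would be logged when delete() reports
  // failure, or None on success. Control flow is otherwise unchanged.
  def deleteWithWarning(file: File): Option[String] =
    if (file.delete()) None
    else Some(s"Failed to delete $file")
}
```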

## How was this patch tested?

Existing unit tests.

Closes #24263 from gaborgsomogyi/SPARK-27301-minor.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>