### What changes were proposed in this pull request?

Currently, `ResetCommand` clears all configurations, including SQL configs, static SQL configs, and SparkContext-level configs.

For example:

```sql
spark-sql> set xyz=abc;
xyz abc
spark-sql> set;
spark.app.id local-1585055396930
spark.app.name SparkSQL::10.242.189.214
spark.driver.host 10.242.189.214
spark.driver.port 65094
spark.executor.id driver
spark.jars
spark.master local[*]
spark.sql.catalogImplementation hive
spark.sql.hive.version 1.2.1
spark.submit.deployMode client
xyz abc
spark-sql> reset;
spark-sql> set;
spark-sql> set spark.sql.hive.version;
spark.sql.hive.version 1.2.1
spark-sql> set spark.app.id;
spark.app.id <undefined>
```

In this PR, we restore the Spark confs to `RuntimeConfig` after it is cleared.
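A minimal sketch of the clear-then-restore idea, assuming a toy config holder. `ToyRuntimeConf` and its methods are hypothetical names used only for illustration; they are not Spark's `RuntimeConfig` API.

```scala
import scala.collection.mutable

// Toy stand-in for a session's runtime config (hypothetical, not Spark's class).
final class ToyRuntimeConf(contextConfs: Map[String, String]) {
  // Start with the context-level entries, as a fresh session would.
  private val settings = mutable.Map(contextConfs.toSeq: _*)

  def set(key: String, value: String): Unit = settings(key) = value

  def get(key: String): Option[String] = settings.get(key)

  // Clear everything set during the session, then put the context-level entries back.
  def reset(): Unit = {
    settings.clear()
    settings ++= contextConfs
  }
}
```

With this shape, a session-level key such as `xyz` disappears after `reset()`, while a context-level key such as `spark.app.id` stays defined.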

### Why are the changes needed?

The reset command is overkill: it also clears configs that are static.

### Does this PR introduce any user-facing change?

Yes, `ResetCommand` no longer changes static configs.

### How was this patch tested?

add ut

Closes #28003 from yaooqinn/SPARK-31234.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

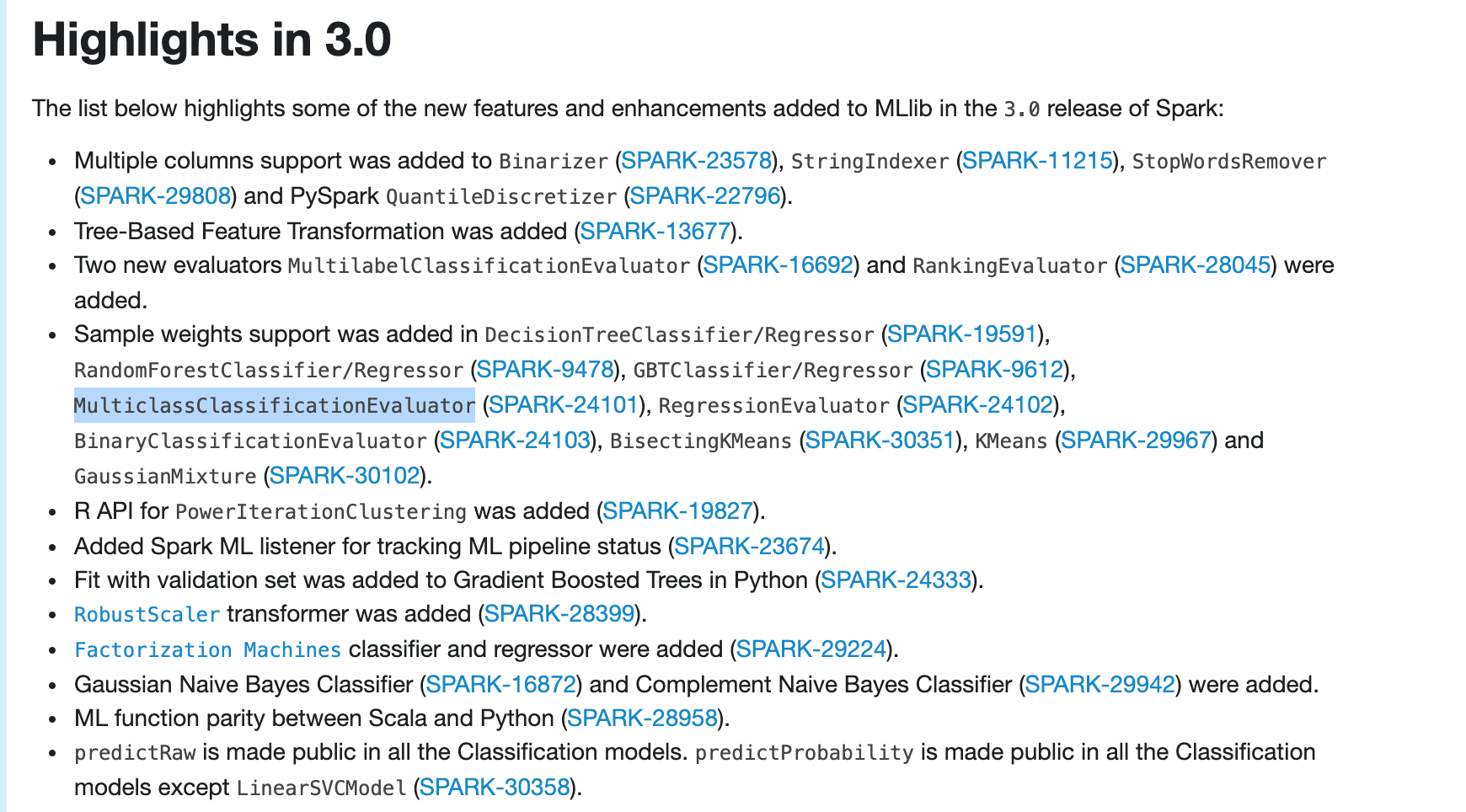

### What changes were proposed in this pull request?

Update ml-guide to include `MulticlassClassificationEvaluator` weight support in highlights

### Why are the changes needed?

`MulticlassClassificationEvaluator` weight support is very important, so it should be included in the highlights.

### Does this PR introduce any user-facing change?

Yes

after:

### How was this patch tested?

manually build and check

Closes #28031 from huaxingao/highlights-followup.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: zhengruifeng <ruifengz@foxmail.com>

### What changes were proposed in this pull request?

Spark introduced the CHAR type for Hive compatibility, but it only works for Hive tables. The CHAR type was never documented and is treated as STRING for non-Hive tables.

However, this leads to confusing behavior:

**Apache Spark 3.0.0-preview2**

```
spark-sql> CREATE TABLE t(a CHAR(3));
spark-sql> INSERT INTO TABLE t SELECT 'a ';
spark-sql> SELECT a, length(a) FROM t;
a 2
```

**Apache Spark 2.4.5**

```
spark-sql> CREATE TABLE t(a CHAR(3));
spark-sql> INSERT INTO TABLE t SELECT 'a ';
spark-sql> SELECT a, length(a) FROM t;
a 3
```

According to the SQL standard, `CHAR(3)` should guarantee that all values have length 3. Because `CHAR(3)` is treated as STRING, Spark doesn't guarantee this.

This PR forbids the CHAR type in non-Hive tables, as it's not supported correctly.
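To make the fixed-length guarantee concrete, here is a small sketch of the padding that `CHAR(n)` implies. `CharPadding.padChar` is a hypothetical helper written for this note, not a Spark function, and rejecting over-long input is a simplifying assumption.

```scala
object CharPadding {
  // Pads `value` with trailing spaces up to length `n`, as fixed-length storage would.
  // Rejecting longer input is a simplification made for this sketch.
  def padChar(value: String, n: Int): String = {
    require(value.length <= n, s"value is longer than CHAR($n)")
    value.padTo(n, ' ')
  }
}
```

Under this model, the two-character input `'a '` from the examples above is stored with length 3, which is the Spark 2.4.5 result.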

### Why are the changes needed?

avoid confusing/wrong behavior

### Does this PR introduce any user-facing change?

yes, now users can't create/alter non-Hive tables with CHAR type.

### How was this patch tested?

new tests

Closes #27902 from cloud-fan/char.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

https://github.com/apache/spark/pull/26412 introduced a behavior change that `date_add`/`date_sub` functions can't accept string and double values in the second parameter. This is reasonable as it's error-prone to cast string/double to int at runtime.

However, using string literals as function arguments is very common in SQL databases. To avoid breaking valid use cases where the string literal is indeed an integer, this PR proposes adding `ansi_cast` for string literals in the `date_add`/`date_sub` functions. If the string value is not a valid integer, the query fails at compile time because of constant folding.
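The strict conversion described above can be sketched as follows. `StrictIntLiteral.parse` is a hypothetical helper, and both the trimming and the error text are assumptions made for illustration; this is not Spark's cast implementation.

```scala
import scala.util.Try

object StrictIntLiteral {
  // Strict string-to-int conversion: Right(value) for a valid integer literal,
  // Left(message) otherwise. No silent truncation of values such as "1.5".
  def parse(literal: String): Either[String, Int] =
    Try(literal.trim.toInt).toOption
      .toRight(s"invalid input for type int: '$literal'")
}
```

A literal such as `'1'` converts cleanly, while `'1.5'` or `'abc'` is rejected up front rather than being coerced at runtime.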

### Why are the changes needed?

avoid breaking changes

### Does this PR introduce any user-facing change?

Yes, now 3.0 can run `date_add('2011-11-11', '1')` like 2.4

### How was this patch tested?

new tests.

Closes #27965 from cloud-fan/string.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`INSERT OVERWRITE DIRECTORY` can only use a file format (a class that implements `org.apache.spark.sql.execution.datasources.FileFormat`). This PR fixes that and makes other minor improvements.

### Why are the changes needed?

### Does this PR introduce any user-facing change?

### How was this patch tested?

Closes #27891 from cloud-fan/doc.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Switch part of the level-1 BLAS routines (`axpy`, `dot`, `scal(double, denseVector)`) from the Java implementation to NativeBLAS when the vector size is greater than 256.

### Why are the changes needed?

In the current ML `BLAS.scala`, all level-1 routines are fixed to use the Java implementation. But NativeBLAS (Intel MKL, OpenBLAS) can bring up to an 11x performance improvement, based on a performance test that calls these methods directly. We should provide a way for users to take advantage of NativeBLAS for level-1 routines. Here we do it by switching these methods from f2jBLAS to NativeBLAS.

### Does this PR introduce any user-facing change?

Yes, the level-1 methods `axpy`, `dot`, and `scal` will switch to NativeBLAS when the vector has more than `nativeL1Threshold` (fixed value 256) elements, and will fall back to f2jBLAS if native BLAS is not properly configured on the system.
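The size-based switch can be sketched as below. Only the name `nativeL1Threshold` and the value 256 come from the description; `Level1Dispatch`, `chooseBackend`, and the `nativeAvailable` flag are hypothetical, and the real code selects a BLAS instance rather than a label.

```scala
object Level1Dispatch {
  // Fixed cutoff named in the PR description.
  val nativeL1Threshold: Int = 256

  // Returns a label for the backend that would be used.
  // `nativeAvailable` is a hypothetical flag standing in for "native BLAS is configured".
  def chooseBackend(size: Int, nativeAvailable: Boolean): String =
    if (size > nativeL1Threshold && nativeAvailable) "native" else "f2j"
}
```

Small vectors stay on the Java path, where the overhead of a native call would likely outweigh the gain.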

### How was this patch tested?

Performance tests that call the level-1 routines directly.

Closes #27546 from yma11/SPARK-30773.

Lead-authored-by: yan ma <yan.ma@intel.com>

Co-authored-by: Ma Yan <yan.ma@intel.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

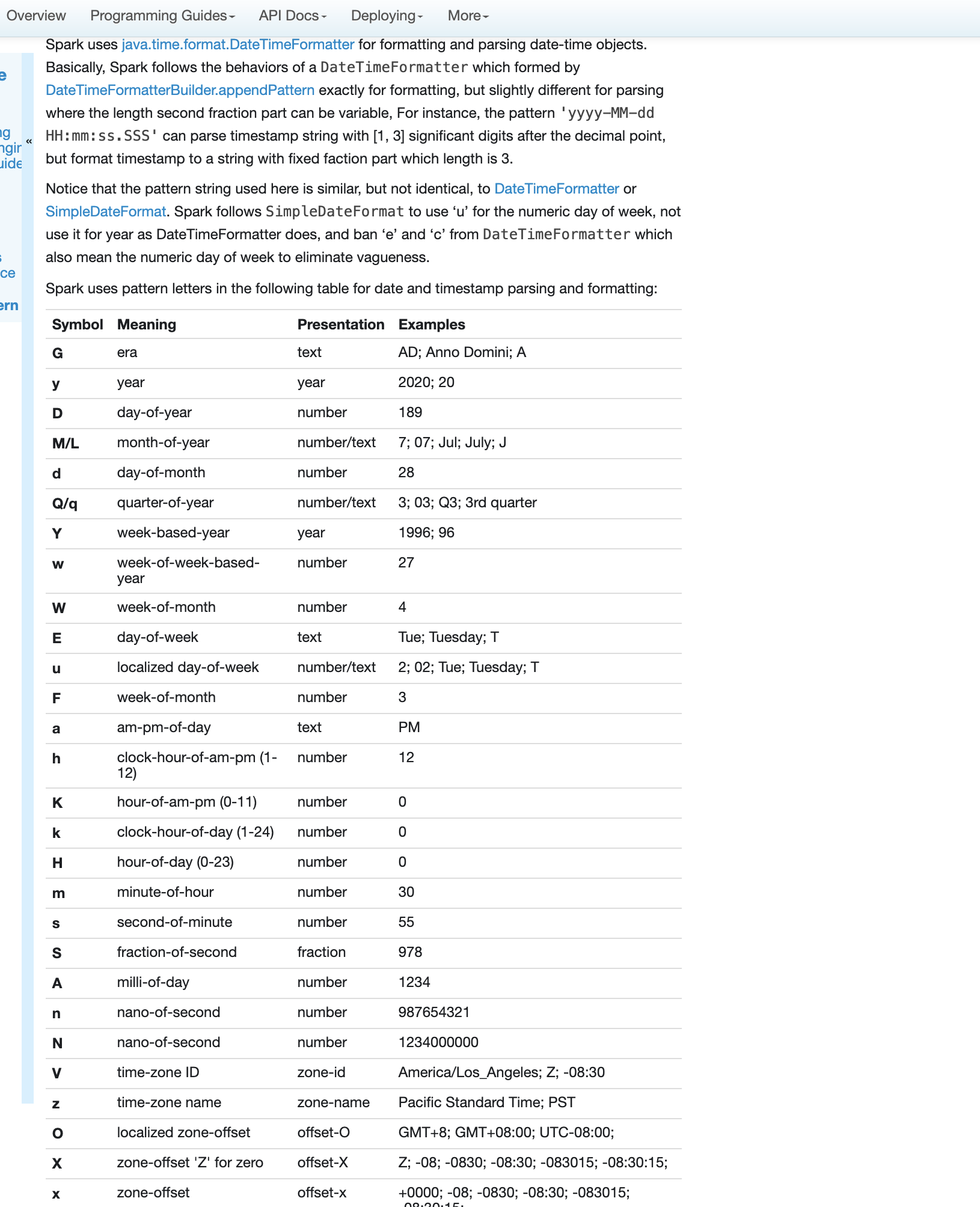

### What changes were proposed in this pull request?

Fix errors and missing parts for datetime pattern document

1. The pattern we use is similar to DateTimeFormatter and SimpleDateFormat but not identical. So we shouldn't use any of them in the API docs but use a link to the doc of our own.

2. Some pattern letters are missing

3. Some pattern letters are explicitly banned - Set('A', 'c', 'e', 'n', 'N')

4. The second-fraction pattern uses different logic for parsing and formatting

### Why are the changes needed?

fix and improve doc

### Does this PR introduce any user-facing change?

yes, new and updated doc

### How was this patch tested?

pass Jenkins

viewed locally with `jekyll serve`

Closes #27956 from yaooqinn/SPARK-31189.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

A followup of https://github.com/apache/spark/pull/27936 to update document.

### Why are the changes needed?

correct document

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27950 from cloud-fan/null.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

In SimpleDateFormat, 'u' meant the day number of the week; in DateTimeFormatter, it was changed to mean the year. We now keep the old meaning of 'u' by substituting 'e' for 'u' internally and using DateTimeFormatter to parse the pattern string. In DateTimeFormatter, 'e' and 'c' also represent day-of-week, e.g.

```sql
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd uuuu');
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd uuee');
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd eeee');
```

Because of the substitution, they all silently become `.... eeee`. Users may be confused about their meanings, so we should mark 'e' and 'c' as illegal pattern characters to stay the same as before.

This PR moves the method `convertIncompatiblePattern` from `DatetimeUtils` to the `DateTimeFormatterHelper` object, since it is quite specific to the `DateTimeFormatterHelper` class.

It also adds checking for the 'e' and 'c' characters in this method.

Besides, `convertIncompatiblePattern` has a bug that loses the last `'` if the pattern ends with it; this PR fixes that too, e.g.

```sql
spark-sql> select date_format(timestamp "2019-10-06", "yyyy-MM-dd'S'");
20/03/18 11:19:45 ERROR SparkSQLDriver: Failed in [select date_format(timestamp "2019-10-06", "yyyy-MM-dd'S'")]
java.lang.IllegalArgumentException: Pattern ends with an incomplete string literal: uuuu-MM-dd'S
spark-sql> select to_timestamp("2019-10-06S", "yyyy-MM-dd'S'");
NULL
```
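The two behaviours described above (rejecting 'e'/'c' and keeping a trailing quote) can be sketched with a simplified converter. `PatternSketch.convert` is a hypothetical stand-in, not the actual `convertIncompatiblePattern`: it only handles the 'u' to 'e' substitution and single-quoted literals, and the error text is an assumption.

```scala
object PatternSketch {
  // Letters rejected outside quoted literals, per the description above.
  private val illegalLetters = Set('e', 'c')

  // Outside single-quoted literals, 'u' becomes 'e' and 'e'/'c' are rejected.
  // Quoted text, including the closing quote, is copied through unchanged.
  def convert(pattern: String): String = {
    val out = new StringBuilder
    var inQuote = false
    pattern.foreach { ch =>
      if (ch == '\'') {
        inQuote = !inQuote
        out.append(ch)
      } else if (inQuote) {
        out.append(ch)
      } else {
        if (illegalLetters.contains(ch)) {
          throw new IllegalArgumentException(s"Illegal pattern character: $ch")
        }
        out.append(if (ch == 'u') 'e' else ch)
      }
    }
    out.toString
  }
}
```

Because every character is appended as it is read, a pattern that ends with a quote keeps that quote, and a quoted `'u'` is left alone.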

### Why are the changes needed?

avoid vagueness

bug fix

### Does this PR introduce any user-facing change?

no, these are not exposed yet

### How was this patch tested?

add ut

Closes #27939 from yaooqinn/SPARK-31176.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds the user guide for AQE and the detailed configurations for the three main features in AQE.

### Why are the changes needed?

Add the detailed configurations.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Doc-only change; no UT needed.

Closes #27616 from JkSelf/aqeuserguide.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The current migration guide of SQL is too long for most readers to find the needed info. This PR is to group the items in the migration guide of Spark SQL based on the corresponding components.

Note. This PR does not change the contents of the migration guides. Attached figure is the screenshot after the change.

### Why are the changes needed?

The current migration guide of SQL is too long for most readers to find the needed info.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27909 from gatorsmile/migrationGuideReorg.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Since we reverted the original change in https://github.com/apache/spark/pull/27540, this PR is to remove the corresponding migration guide made in the commit https://github.com/apache/spark/pull/24948

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27896 from gatorsmile/SPARK-28093Followup.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

When loading DataFrames from JDBC datasource with Kerberos authentication, remote executors (yarn-client/cluster etc. modes) fail to establish a connection due to lack of Kerberos ticket or ability to generate it.

This is a real issue when trying to ingest data from kerberized data sources (SQL Server, Oracle) in enterprise environment where exposing simple authentication access is not an option due to IT policy issues.

In this PR I've added Postgres support (other supported databases will come in later PRs).

What this PR contains:

* Added `keytab` and `principal` JDBC options

* Added `ConnectionProvider` trait and its implementations:

  * `BasicConnectionProvider` => unsecure connection

  * `PostgresConnectionProvider` => postgres secure connection

* Added `ConnectionProvider` tests

* Added `PostgresKrbIntegrationSuite` docker integration test

* Created `SecurityUtils` to concentrate re-usable security related functionalities

* Documentation

### Why are the changes needed?

Missing JDBC kerberos support.

### Does this PR introduce any user-facing change?

Yes, 2 additional JDBC options added:

* keytab

* principal

If both are provided, Spark performs Kerberos authentication.
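As an illustration of how the two options might be supplied, here is a sketch that builds an options map. Only the option names `keytab` and `principal` come from this PR; the URL, table name, file path, and principal value are placeholders, and `kerberosRequested` is a hypothetical helper that mirrors the "both provided" rule.

```scala
object JdbcKerberosOptions {
  // `keytab` and `principal` are the new option names; all values are placeholders.
  val options: Map[String, String] = Map(
    "url" -> "jdbc:postgresql://db.example.com:5432/sales",
    "dbtable" -> "public.orders",
    "keytab" -> "/path/to/client.keytab",
    "principal" -> "client@EXAMPLE.COM"
  )

  // Kerberos authentication is attempted only when both options are present.
  def kerberosRequested(opts: Map[String, String]): Boolean =
    opts.contains("keytab") && opts.contains("principal")
}
```

Assuming an active `SparkSession` named `spark`, such a map could be passed with `spark.read.format("jdbc").options(JdbcKerberosOptions.options).load()`.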

### How was this patch tested?

To demonstrate the functionality with a standalone application I've created this repository: https://github.com/gaborgsomogyi/docker-kerberos

* Additional + existing unit tests

* Additional docker integration test

* Test on cluster manually

* `SKIP_API=1 jekyll build`

Closes #27637 from gaborgsomogyi/SPARK-30874.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@apache.org>

### What changes were proposed in this pull request?

`spark.sql.legacy.timeParser.enabled` should be removed from SQLConf and the migration guide.

`spark.sql.legacy.timeParserPolicy` is the right one.

### Why are the changes needed?

fix doc

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Pass the jenkins

Closes #27889 from yaooqinn/SPARK-31131.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

1. Add version information to the configuration of `Status`.

2. Update the docs of `Status`.

3. In passing, supplement the documentation for https://github.com/apache/spark/pull/27847

I sorted out the information shown below.

Item name | Since version | JIRA ID | Commit ID | Note
--- | --- | --- | --- | ---
spark.appStateStore.asyncTracking.enable | 2.3.0 | SPARK-20653 | 772e4648d95bda3353723337723543c741ea8476#diff-9ab674b7af7b2097f7d28cb6f5fd1e8c |
spark.ui.liveUpdate.period | 2.3.0 | SPARK-20644 | c7f38e5adb88d43ef60662c5d6ff4e7a95bff580#diff-9ab674b7af7b2097f7d28cb6f5fd1e8c |
spark.ui.liveUpdate.minFlushPeriod | 2.4.2 | SPARK-27394 | a8a2ba11ac10051423e58920062b50f328b06421#diff-9ab674b7af7b2097f7d28cb6f5fd1e8c |
spark.ui.retainedJobs | 1.2.0 | SPARK-2321 | 9530316887612dca060a128fca34dd5a6ab2a9a9#diff-1f32bcb61f51133bd0959a4177a066a5 |
spark.ui.retainedStages | 0.9.0 | None | 112c0a1776bbc866a1026a9579c6f72f293414c4#diff-1f32bcb61f51133bd0959a4177a066a5 | 0.9.0-incubating-SNAPSHOT
spark.ui.retainedTasks | 2.0.1 | SPARK-15083 | 55db26245d69bb02b7d7d5f25029b1a1cd571644#diff-6bdad48cfc34314e89599655442ff210 |
spark.ui.retainedDeadExecutors | 2.0.0 | SPARK-7729 | 9f4263392e492b5bc0acecec2712438ff9a257b7#diff-a0ba36f9b1f9829bf3c4689b05ab6cf2 |
spark.ui.dagGraph.retainedRootRDDs | 2.1.0 | SPARK-17171 | cc87280fcd065b01667ca7a59a1a32c7ab757355#diff-3f492c527ea26679d4307041b28455b8 |
spark.metrics.appStatusSource.enabled | 3.0.0 | SPARK-30060 | 60f20e5ea2000ab8f4a593b5e4217fd5637c5e22#diff-9f796ae06b0272c1f0a012652a5b68d0 |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT

Closes #27848 from beliefer/add-version-to-status-config.

Lead-authored-by: beliefer <beliefer@163.com>

Co-authored-by: Jiaan Geng <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

A few improvements to the sql ref SELECT doc:

1. correct the syntax of SELECT query

2. correct the default of null sort order

3. correct the GROUP BY syntax

4. several minor fixes

### Why are the changes needed?

refine document

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27866 from cloud-fan/doc.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR reverts https://github.com/apache/spark/pull/26051 and https://github.com/apache/spark/pull/26066

### Why are the changes needed?

There is no standard requiring that `size(null)` must return null, and returning -1 looks reasonable as well. This is kind of a cosmetic change and we should avoid it if it breaks existing queries. This is similar to reverting TRIM function parameter order change.

### Does this PR introduce any user-facing change?

Yes, change the behavior of `size(null)` back to be the same as 2.4.

### How was this patch tested?

N/A

Closes #27834 from cloud-fan/revert.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

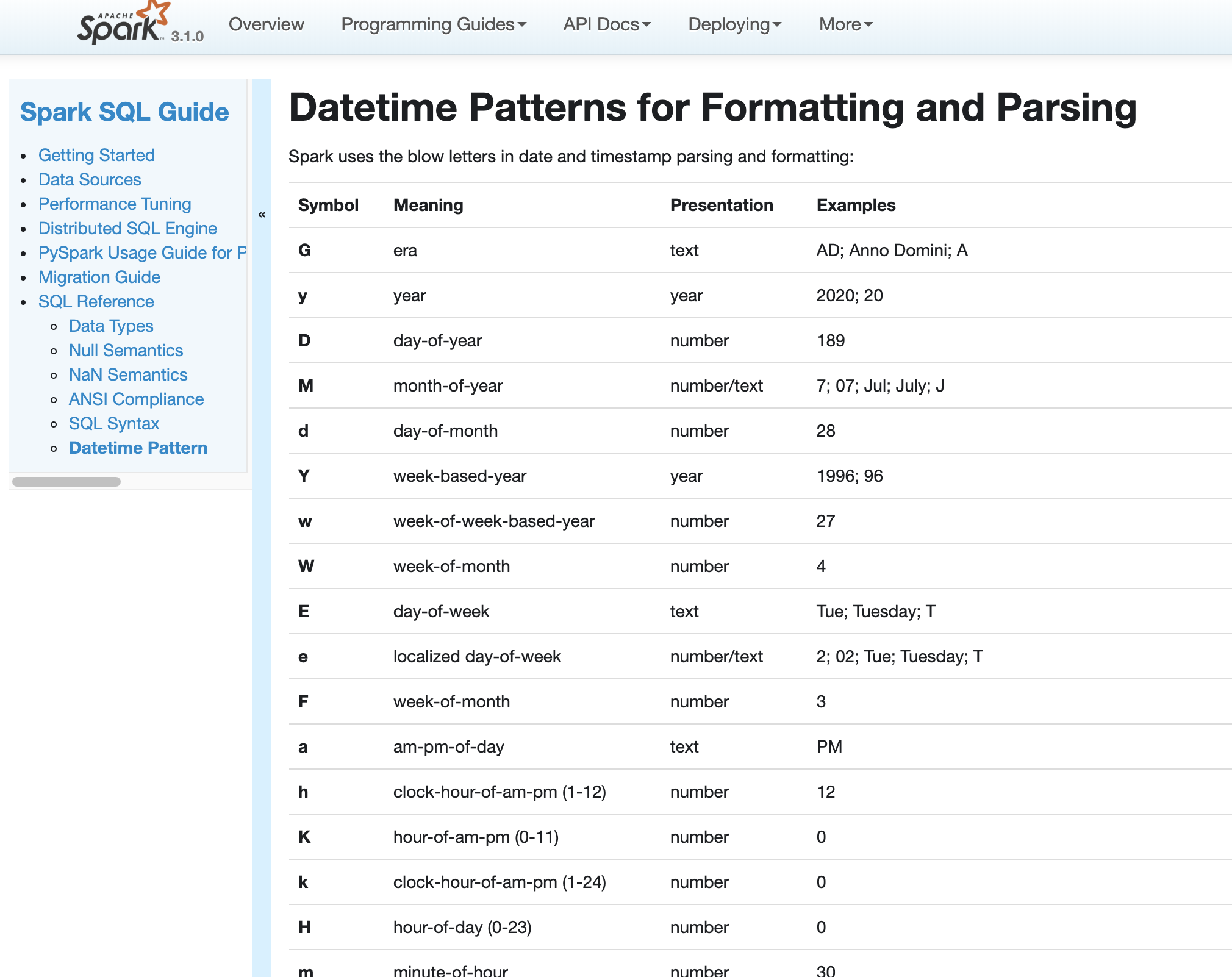

### What changes were proposed in this pull request?

In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian).

Since the Proleptic Gregorian calendar is the de facto calendar worldwide, as well as the one chosen in the ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages, which are based on ISO chronology). The switch was completed in SPARK-26651.

But after the switch, some patterns are not compatible between Java 8 and Java 7, so Spark needs its own definition of the patterns rather than depending on the Java API.

In this PR, we achieve this by writing the document and shadowing the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030)

### Why are the changes needed?

For backward compatibility.

### Does this PR introduce any user-facing change?

No.

After we define our own datetime parsing and formatting patterns, the behavior is the same as in old Spark versions.

### How was this patch tested?

Existing and newly added UT.

Locally document test:

Closes #27830 from xuanyuanking/SPARK-31030.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR makes the following refinements to the workflow for building docs:

* Install Python and Ruby consistently using pyenv and rbenv across both the docs README and the release Dockerfile.

* Pin the Python and Ruby versions we use.

* Pin all direct Python and Ruby dependency versions.

* Eliminate any use of `sudo pip`, which the Python community discourages, or `sudo gem`.

### Why are the changes needed?

This PR should increase the consistency and reproducibility of the doc-building process by managing Python and Ruby in a more consistent way, and by eliminating unused or outdated code.

Here's a possible example of an issue building the docs that would be addressed by the changes in this PR: https://github.com/apache/spark/pull/27459#discussion_r376135719

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manual tests:

* I was able to build the Docker image successfully, minus the final part about `RUN useradd`.

* I am unable to run `do-release-docker.sh` because I am not a committer and don't have the required GPG key.

* I built the docs locally and viewed them in the browser.

I think I need a committer to more fully test out these changes.

Closes #27534 from nchammas/SPARK-30731-building-docs.

Authored-by: Nicholas Chammas <nicholas.chammas@liveramp.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Updating ML docs for 3.0 changes

### Why are the changes needed?

I am auditing 3.0 ML changes, found some docs are missing or not updated. Need to update these.

### Does this PR introduce any user-facing change?

Yes, doc changes

### How was this patch tested?

Manually build and check

Closes #27762 from huaxingao/spark-doc.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR intends to support 32 or more grouping attributes for GROUPING_ID. In the current master, an integer overflow can occur when computing grouping IDs:

e75d9afb2f/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/basicLogicalOperators.scala (L613)

For example, the query below generates wrong grouping IDs in the master:

```
scala> val numCols = 32 // or, 31
scala> val cols = (0 until numCols).map { i => s"c$i" }
scala> sql(s"create table test_$numCols (${cols.map(c => s"$c int").mkString(",")}, v int) using parquet")
scala> val insertVals = (0 until numCols).map { _ => 1 }.mkString(",")
scala> sql(s"insert into test_$numCols values ($insertVals,3)")
scala> sql(s"select grouping_id(), sum(v) from test_$numCols group by grouping sets ((${cols.mkString(",")}), (${cols.init.mkString(",")}))").show(10, false)
scala> sql(s"drop table test_$numCols")

// numCols = 32
+-------------+------+
|grouping_id()|sum(v)|
+-------------+------+
|0            |3     |
|0            |3     | // Wrong Grouping ID
+-------------+------+

// numCols = 31
+-------------+------+
|grouping_id()|sum(v)|
+-------------+------+
|0            |3     |
|1            |3     |
+-------------+------+
```

To fix this issue, this PR changes the code to use long values for `GROUPING_ID` instead of int values.
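The overflow can be reproduced outside Spark with plain bit arithmetic. The sketch below is a simplified model and not the code in `basicLogicalOperators.scala`: `GroupingIdSketch` and its methods are hypothetical, and it assumes a grouping ID is a bitmask in which a cleared bit marks a column that is part of the grouping set.

```scala
object GroupingIdSketch {
  // Int version: for 32 attributes, `1 << 32` wraps around to 1, so `all` becomes 0.
  def bitmaskInt(numAttrs: Int, groupedIdx: Seq[Int]): Int = {
    val all = (1 << numAttrs) - 1
    groupedIdx.foldLeft(all)((mask, i) => mask & ~(1 << (numAttrs - 1 - i)))
  }

  // Long version: the same arithmetic stays correct for fewer than 64 attributes.
  def bitmaskLong(numAttrs: Int, groupedIdx: Seq[Int]): Long = {
    val all = (1L << numAttrs) - 1L
    groupedIdx.foldLeft(all)((mask, i) => mask & ~(1L << (numAttrs - 1 - i)))
  }
}
```

With 32 attributes and the first 31 of them in the grouping set, the int version yields 0 while the long version yields 1, which is the difference between the wrong and the expected rows in the output above.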

### Why are the changes needed?

To support more cases in `GROUPING_ID`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added unit tests.

Closes #26918 from maropu/FixGroupingIdIssue.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR intends to fix typos and phrases in the `/docs` directory. To find them, I ran the IntelliJ typo checker.

### Why are the changes needed?

For better documents.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #27819 from maropu/TypoFix-20200306.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

Rename the config and make it non-internal.

### Why are the changes needed?

Now we fail the query if duplicated map keys are detected, and provide a legacy config to deduplicate them. However, we must provide a way to get users out of this situation, instead of just refusing to run the query. This exit strategy should always be there, whereas a legacy config indicates that it may be removed someday.
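The two behaviours (fail on duplicates versus deduplicate) can be sketched with ordinary Scala collections. `MapKeyDedup.build` and its `lastWins` flag are hypothetical, and treating deduplication as "the last value wins" is an assumption of this sketch; the config name is deliberately not shown because the description does not state it.

```scala
object MapKeyDedup {
  // Builds a map from key/value pairs.
  // lastWins = false: reject input that contains a duplicated key.
  // lastWins = true: keep the last value seen for each key.
  def build[K, V](pairs: Seq[(K, V)], lastWins: Boolean): Map[K, V] = {
    if (!lastWins) {
      val duplicate = pairs.groupBy(_._1).collectFirst {
        case (key, values) if values.size > 1 => key
      }
      duplicate.foreach { key =>
        throw new IllegalArgumentException(s"Duplicate map key $key was found")
      }
    }
    pairs.toMap // later pairs overwrite earlier ones
  }
}
```

Keeping the deduplicating branch available is the "exit strategy": a user whose data contains duplicated keys can still run the query.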

### Does this PR introduce any user-facing change?

no, just rename a config which was added in 3.0

### How was this patch tested?

add more tests for the fail behavior.

Closes #27772 from cloud-fan/map.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`-c` is short for `--conf`. It was introduced in v1.1.0 but has been hidden from users until now.

### Why are the changes needed?

### Does this PR introduce any user-facing change?

no

expose hidden feature

### How was this patch tested?

Nah

Closes #27802 from yaooqinn/conf.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix the migration guide document for `spark.sql.legacy.ctePrecedence.enabled`, which is introduced in #27579.

### Why are the changes needed?

The config value changed.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Document only.

Closes #27782 from xuanyuanking/SPARK-30829-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

ForEachBatch Java example was incorrect

### Why are the changes needed?

Example did not compile

### Does this PR introduce any user-facing change?

Yes, to docs.

### How was this patch tested?

In IDE.

Closes #27740 from roland1982/foreachwriter_java_example_fix.

Authored-by: roland-ondeviceresearch <roland@ondeviceresearch.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Remove automatic resource coordination support from Standalone.

### Why are the changes needed?

Resource coordination is mainly designed for the scenario where multiple workers are launched on the same host. However, this scenario no longer really exists in today's Spark, because Spark can now start multiple executors in a single Worker, whereas it only allowed one executor per Worker at the very beginning. So launching multiple workers on the same host no longer helps users. Thus, it's not worth carrying an overly complicated implementation and a potentially high maintenance cost for such an unlikely scenario.

### Does this PR introduce any user-facing change?

No, it's Spark 3.0 feature.

### How was this patch tested?

Pass Jenkins.

Closes #27722 from Ngone51/abandon_coordination.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

1. Add version information to the configuration of `Kryo`.

2. Update the docs of `Kryo`.

I sorted out the information shown below.

Item name | Since version | JIRA ID | Commit ID | Note
--- | --- | --- | --- | ---
spark.kryo.registrationRequired | 1.1.0 | SPARK-2102 | efdaeb111917dd0314f1d00ee8524bed1e2e21ca#diff-1f81c62dad0e2dfc387a974bb08c497c |
spark.kryo.registrator | 0.5.0 | None | 91c07a33d90ab0357e8713507134ecef5c14e28a#diff-792ed56b3398163fa14e8578549d0d98 | This is not a release version, do we need to record it?
spark.kryo.classesToRegister | 1.2.0 | SPARK-1813 | 6bb56faea8d238ea22c2de33db93b1b39f492b3a#diff-529fc5c06b9731c1fbda6f3db60b16aa |
spark.kryo.unsafe | 2.1.0 | SPARK-928 | bc167a2a53f5a795d089e8a884569b1b3e2cd439#diff-1f81c62dad0e2dfc387a974bb08c497c |
spark.kryo.pool | 3.0.0 | SPARK-26466 | 38f030725c561979ca98b2a6cc7ca6c02a1f80ed#diff-a3c6b992784f9abeb9f3047d3dcf3ed9 |
spark.kryo.referenceTracking | 0.8.0 | None | 0a8cc309211c62f8824d76618705c817edcf2424#diff-1f81c62dad0e2dfc387a974bb08c497c |
spark.kryoserializer.buffer | 1.4.0 | SPARK-5932 | 2d222fb39dd978e5a33cde6ceb59307cbdf7b171#diff-1f81c62dad0e2dfc387a974bb08c497c |
spark.kryoserializer.buffer.max | 1.4.0 | SPARK-5932 | 2d222fb39dd978e5a33cde6ceb59307cbdf7b171#diff-1f81c62dad0e2dfc387a974bb08c497c |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT

Closes #27734 from beliefer/add-version-to-kryo-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

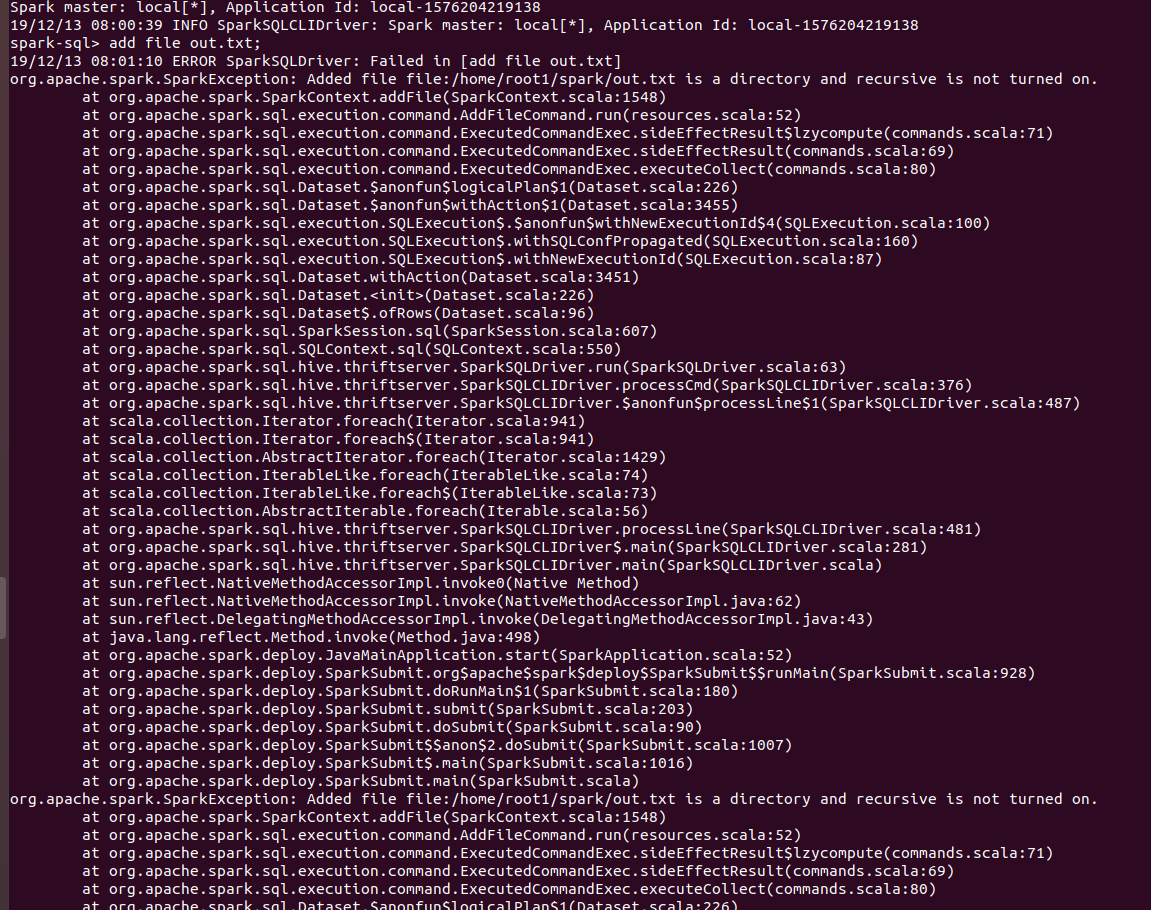

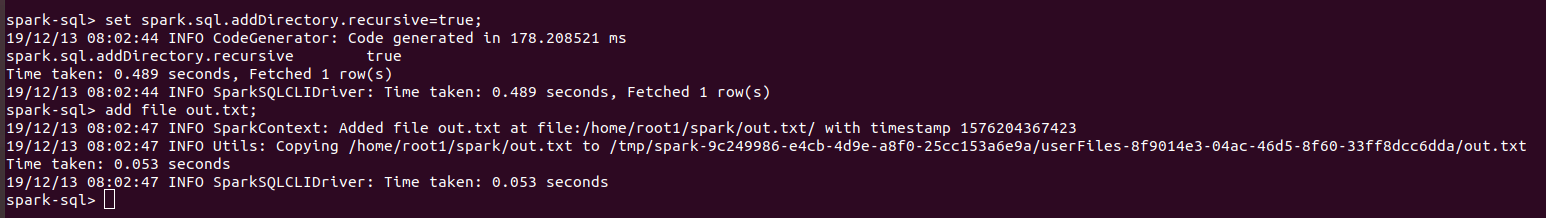

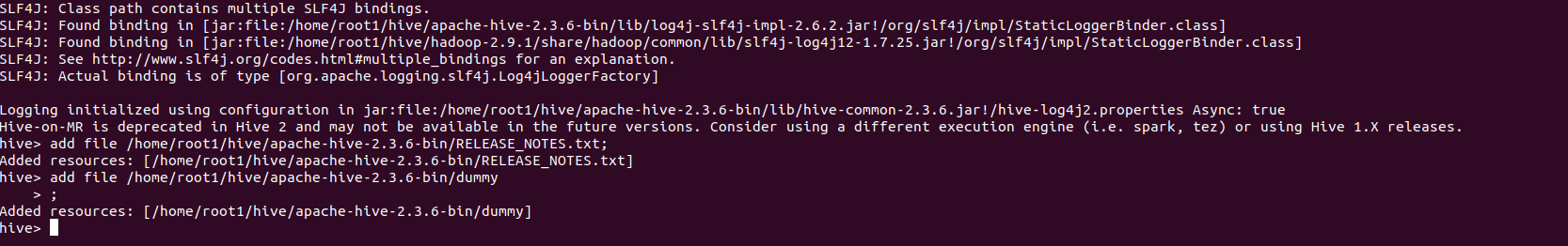

### What changes were proposed in this pull request?

Rename `spark.sql.legacy.addDirectory.recursive.enabled` to `spark.sql.legacy.addSingleFileInAddFile`

### Why are the changes needed?

To follow the naming convention

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27725 from iRakson/SPARK-30234_CONFIG.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Renamed configuration from `spark.sql.legacy.useHashOnMapType` to `spark.sql.legacy.allowHashOnMapType`.

### Why are the changes needed?

Better readability of configuration.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27719 from iRakson/SPARK-27619_FOLLOWUP.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR groups all hive upgrade related migration guides inside Spark 3.0 together.

Also add another behavior change of `ScriptTransform` in the new Hive section.

### Why are the changes needed?

Make the doc clearer to users.

### Does this PR introduce any user-facing change?

No, new doc for Spark 3.0.

### How was this patch tested?

N/A.

Closes #27670 from Ngone51/hive_migration.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Add version information to the configuration of `Python`.

2. Update the docs of `Python`.

I sorted out the information shown below.

Item name | Since version | JIRA ID | Commit ID | Note
--- | --- | --- | --- | ---
spark.python.worker.reuse | 1.2.0 | SPARK-3030 | 2aea0da84c58a179917311290083456dfa043db7#diff-0a67bc4d171abe4df8eb305b0f4123a2 |
spark.python.task.killTimeout | 2.2.2 | SPARK-22535 | be68f86e11d64209d9e325ce807025318f383bea#diff-0a67bc4d171abe4df8eb305b0f4123a2 |
spark.python.use.daemon | 2.3.0 | SPARK-22554 | 57c5514de9dba1c14e296f85fb13fef23ce8c73f#diff-9008ad45db34a7eee2e265a50626841b |
spark.python.daemon.module | 2.4.0 | SPARK-22959 | afae8f2bc82597593595af68d1aa2d802210ea8b#diff-9008ad45db34a7eee2e265a50626841b |
spark.python.worker.module | 2.4.0 | SPARK-22959 | afae8f2bc82597593595af68d1aa2d802210ea8b#diff-9008ad45db34a7eee2e265a50626841b |
spark.executor.pyspark.memory | 2.4.0 | SPARK-25004 | 7ad18ee9f26e75dbe038c6034700f9cd4c0e2baa#diff-6bdad48cfc34314e89599655442ff210 |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT

Closes #27704 from beliefer/add-version-to-python-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

1. Add version information to the configuration of `R`.

2. Update the docs of `R`.

I sorted out the information shown below.

Item name | Since version | JIRA ID | Commit ID | Note
--- | --- | --- | --- | ---
spark.r.backendConnectionTimeout | 2.1.0 | SPARK-17919 | 2881a2d1d1a650a91df2c6a01275eba14a43b42a#diff-025470e1b7094d7cf4a78ea353fb3981 |
spark.r.numRBackendThreads | 1.4.0 | SPARK-8282 | 28e8a6ea65fd08ab9cefc4d179d5c66ffefd3eb4#diff-697f7f2fc89808e0113efc71ed235db2 |
spark.r.heartBeatInterval | 2.1.0 | SPARK-17919 | 2881a2d1d1a650a91df2c6a01275eba14a43b42a#diff-fe903bf14db371aa320b7cc516f2463c |
spark.sparkr.r.command | 1.5.3 | SPARK-10971 | 9695f452e86a88bef3bcbd1f3c0b00ad9e9ac6e1#diff-025470e1b7094d7cf4a78ea353fb3981 |
spark.r.command | 1.5.3 | SPARK-10971 | 9695f452e86a88bef3bcbd1f3c0b00ad9e9ac6e1#diff-025470e1b7094d7cf4a78ea353fb3981 |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT

Closes #27708 from beliefer/add-version-to-R-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`hash()` and `xxhash64()` cannot be used on elements of `MapType`. A new configuration `spark.sql.legacy.useHashOnMapType` is introduced to allow users to restore the previous behaviour.

When `spark.sql.legacy.useHashOnMapType` is set to false:

```
scala> spark.sql("select hash(map())");
org.apache.spark.sql.AnalysisException: cannot resolve 'hash(map())' due to data type mismatch: input to function hash cannot contain elements of MapType; line 1 pos 7;
'Project [unresolvedalias(hash(map(), 42), None)]
+- OneRowRelation
```

When `spark.sql.legacy.useHashOnMapType` is set to true:

```
scala> spark.sql("set spark.sql.legacy.useHashOnMapType=true");
res3: org.apache.spark.sql.DataFrame = [key: string, value: string]
scala> spark.sql("select hash(map())").first()
res4: org.apache.spark.sql.Row = [42]
```

### Why are the changes needed?

As discussed in JIRA, Spark SQL's map hash codes depend on insertion order, which is not consistent with normal Scala behaviour and might confuse users.

Code snippet from JIRA:

```
val a = spark.createDataset(Map(1->1, 2->2) :: Nil)
val b = spark.createDataset(Map(2->2, 1->1) :: Nil)

// Demonstration of how Scala Map equality is unaffected by insertion order:
assert(Map(1->1, 2->2).hashCode() == Map(2->2, 1->1).hashCode())
assert(Map(1->1, 2->2) == Map(2->2, 1->1))
assert(a.first() == b.first())

// In contrast, this will print two different hashcodes:
println(Seq(a, b).map(_.selectExpr("hash(*)").first()))
```
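Why insertion order matters can be seen with a toy hash that chains each entry into a running seed. `MapHashSketch` is hypothetical and uses a simple polynomial mix rather than the hash function Spark actually applies; the seed 42 is chosen only to echo the `hash(map())` result shown earlier.

```scala
object MapHashSketch {
  // Order-dependent: each entry is mixed into the hash of everything before it.
  def chained(entries: Seq[(Int, Int)], seed: Int = 42): Int =
    entries.foldLeft(seed) { case (h, (k, v)) => 31 * (31 * h + k) + v }

  // Order-independent: per-entry hashes are combined with a commutative sum.
  def commutative(entries: Seq[(Int, Int)]): Int =
    entries.map { case (k, v) => 31 * k + v }.sum
}
```

The chained variant gives different results for `Seq(1 -> 1, 2 -> 2)` and `Seq(2 -> 2, 1 -> 1)`, while the commutative variant does not, which mirrors the mismatch between Spark SQL's map hashing and Scala's `Map.hashCode`.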

Also `MapType` is prohibited for aggregation / joins / equality comparisons #7819 and set operations #17236.

### Does this PR introduce any user-facing change?

Yes. Now users cannot use hash functions on elements of `MapType`. To restore the previous behaviour, set `spark.sql.legacy.useHashOnMapType` to true.

### How was this patch tested?

UT added.

Closes #27580 from iRakson/SPARK-27619.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Rename config `spark.resources.discovery.plugin` to `spark.resources.discoveryPlugin`.

Also, as a minor side change, label `ResourceDiscoveryScriptPlugin` as `DeveloperApi`, since it is not intended for end users.

### Why are the changes needed?

The discovery plugin doesn't need to reserve the "discovery" namespace here, and `discoveryPlugin` is more consistent with the interface name `ResourceDiscoveryPlugin`.

### Does this PR introduce any user-facing change?

No, the config is newly added in Spark 3.0.

### How was this patch tested?

Pass Jenkins.

Closes #27689 from Ngone51/spark_30689_followup.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up PR for a review comment on #27208: https://github.com/apache/spark/pull/27208#pullrequestreview-347451714

This PR documents the new feature `Eventlog Compaction` in a new section of `monitoring.md`. The feature has only one configuration on the SHS side, and it is hard to explain everything in the description of that single configuration.

### Why are the changes needed?

Event log compaction lacks documentation on what it is and how it helps. This PR adds that explanation.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Built docs via jekyll.

> change on the new section

<img width="951" alt="Screen Shot 2020-02-16 at 2 23 18 PM" src="https://user-images.githubusercontent.com/1317309/74599587-eb9efa80-50c7-11ea-942c-f7744268e40b.png">

> change on the table

<img width="1126" alt="Screen Shot 2020-01-30 at 5 08 12 PM" src="https://user-images.githubusercontent.com/1317309/73431190-2e9c6680-4383-11ea-8ce0-815f10917ddd.png">

Closes #27398 from HeartSaVioR/SPARK-30481-FOLLOWUP-document-new-feature.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

1. Add version information to the configuration of `Deploy`.

2. Update the docs of `Deploy`.

I sorted out some information, shown below.

Item name | Since version | JIRA ID | Commit ID | Note
-- | -- | -- | -- | --
spark.deploy.recoveryMode | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-29dffdccd5a7f4c8b496c293e87c8668 |
spark.deploy.recoveryMode.factory | 1.2.0 | SPARK-1830 | deefd9d7377a8091a1d184b99066febd0e9f6afd#diff-29dffdccd5a7f4c8b496c293e87c8668 | This configuration appears in branch-1.3, but the version number in the pom.xml file corresponding to the commit is 1.2.0-SNAPSHOT
spark.deploy.recoveryDirectory | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-29dffdccd5a7f4c8b496c293e87c8668 |
spark.deploy.zookeeper.url | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-4457313ca662a1cd60197122d924585c |
spark.deploy.zookeeper.dir | 0.8.1 | None | d66c01f2b6defb3db6c1be99523b734a4d960532#diff-a84228cb45c7d5bd93305a1f5bf720b6 |
spark.deploy.retainedApplications | 0.8.0 | None | 46eecd110a4017ea0c86cbb1010d0ccd6a5eb2ef#diff-29dffdccd5a7f4c8b496c293e87c8668 |
spark.deploy.retainedDrivers | 1.1.0 | None | 7446f5ff93142d2dd5c79c63fa947f47a1d4db8b#diff-29dffdccd5a7f4c8b496c293e87c8668 |
spark.dead.worker.persistence | 0.8.0 | None | 46eecd110a4017ea0c86cbb1010d0ccd6a5eb2ef#diff-29dffdccd5a7f4c8b496c293e87c8668 |
spark.deploy.maxExecutorRetries | 1.6.3 | SPARK-16956 | ace458f0330f22463ecf7cbee7c0465e10fba8a8#diff-29dffdccd5a7f4c8b496c293e87c8668 |
spark.deploy.spreadOut | 0.6.1 | None | bb2b9ff37cd2503cc6ea82c5dd395187b0910af0#diff-0e7ae91819fc8f7b47b0f97be7116325 |
spark.deploy.defaultCores | 0.9.0 | None | d8bcc8e9a095c1b20dd7a17b6535800d39bff80e#diff-29dffdccd5a7f4c8b496c293e87c8668 |

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT

Closes #27668 from beliefer/add-version-to-deploy-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The previous example given for spark-streaming-kinesis was valid for Apache Spark < 2.3.0. After that, the method used in the example was deprecated:

@deprecated("use initialPosition(initialPosition: KinesisInitialPosition)", "2.3.0")

def initialPositionInStream(initialPosition: InitialPositionInStream)

This PR updates the doc by rewriting the example in Scala/Java (the Python example remains unchanged) to match Apache Spark 2.4.0+ releases.

### Why are the changes needed?

The outdated example causes confusion for developers testing their spark-streaming-kinesis examples.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

In my opinion, the change only affects documentation, so I did not add any special test.

Closes #27652 from supaggregator/SPARK-30901.

Authored-by: XU Duo <Duo.XU@canal-plus.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Structured streaming documentation example fix

### Why are the changes needed?

Currently, the Java example uses incorrect syntax.

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

In IDE

Closes #27671 from roland1982/foreachwriter_java_example_fix.

Authored-by: roland-ondeviceresearch <roland@ondeviceresearch.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to throw an exception by default when a user uses an untyped UDF (a.k.a. `org.apache.spark.sql.functions.udf(AnyRef, DataType)`).

Users can still use it by setting `spark.sql.legacy.useUnTypedUdf.enabled` to `true`.

### Why are the changes needed?

According to #23498, since Spark 3.0, an untyped UDF returns the default value of the Java type if the input value is null. For example, with `val f = udf((x: Int) => x, IntegerType)`, `f($"x")` returns 0 in Spark 3.0 but null in Spark 2.4 when column `x` is null. This behavior change arises because Spark 3.0 is built with Scala 2.12 by default.

As a result, this might change data silently and cause correctness issues if users still expect `null` in some cases. Thus, we should encourage users to use typed UDFs to avoid this problem.

### Does this PR introduce any user-facing change?

Yes. Users will now hit an exception when using an untyped UDF.

### How was this patch tested?

Added test and updated some tests.

Closes #27488 from Ngone51/spark_26580_followup.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: wuyi <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Revise the documentation of `spark.ui.retainedTasks` to make it clear that the configuration is for one stage.

### Why are the changes needed?

There are several configurations that limit the amount of UI data retained.

`spark.ui.retainedJobs`, `spark.ui.retainedStages` and `spark.worker.ui.retainedExecutors` set the total maximum number for one application, while `spark.ui.retainedTasks` sets the maximum number for one stage.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

None, just doc.

Closes #27660 from gengliangwang/reviseRetainTask.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Mention the workaround for users who do want to use a map type as a key, and add a test to demonstrate it.

### Why are the changes needed?

It's better to provide an alternative when we ban something.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27621 from cloud-fan/map.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Improve the CREATE TABLE document:

1. Mention that some clauses can come in any order.

2. Refine the description of some parameters.

3. Mention how a data source table interacts with its data source.

4. Make the examples consistent between data source and Hive serde tables.

### Why are the changes needed?

Improve the doc.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27638 from cloud-fan/doc.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Fix the kubernetes-client version doc.

### Why are the changes needed?

correct doc

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27605 from yaooqinn/k8s-version-update.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Mention that the `INT96` timestamp is still useful for interoperability.

### Why are the changes needed?

Give users more context on the behavior changes.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27622 from cloud-fan/parquet.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

[HIVE-15167](https://issues.apache.org/jira/browse/HIVE-15167) removed the `SerDe` interface. This may break custom `SerDe` builds for Hive 1.2. This PR updates the migration guide for this change.

### Why are the changes needed?

Otherwise:

```

2020-01-27 05:11:20.446 - stderr> 20/01/27 05:11:20 INFO DAGScheduler: ResultStage 2 (main at NativeMethodAccessorImpl.java:0) failed in 1.000 s due to Job aborted due to stage failure: Task 0 in stage 2.0 failed 4 times, most recent failure: Lost task 0.3 in stage 2.0 (TID 13, 10.110.21.210, executor 1): java.lang.NoClassDefFoundError: org/apache/hadoop/hive/serde2/SerDe

2020-01-27 05:11:20.446 - stderr> at java.lang.ClassLoader.defineClass1(Native Method)

2020-01-27 05:11:20.446 - stderr> at java.lang.ClassLoader.defineClass(ClassLoader.java:756)

2020-01-27 05:11:20.446 - stderr> at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

2020-01-27 05:11:20.446 - stderr> at java.net.URLClassLoader.defineClass(URLClassLoader.java:468)

2020-01-27 05:11:20.446 - stderr> at java.net.URLClassLoader.access$100(URLClassLoader.java:74)

2020-01-27 05:11:20.446 - stderr> at java.net.URLClassLoader$1.run(URLClassLoader.java:369)

2020-01-27 05:11:20.446 - stderr> at java.net.URLClassLoader$1.run(URLClassLoader.java:363)

2020-01-27 05:11:20.446 - stderr> at java.security.AccessController.doPrivileged(Native Method)

2020-01-27 05:11:20.446 - stderr> at java.net.URLClassLoader.findClass(URLClassLoader.java:362)

2020-01-27 05:11:20.446 - stderr> at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

2020-01-27 05:11:20.446 - stderr> at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:352)

2020-01-27 05:11:20.446 - stderr> at java.lang.ClassLoader.loadClass(ClassLoader.java:405)

2020-01-27 05:11:20.446 - stderr> at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

2020-01-27 05:11:20.446 - stderr> at java.lang.Class.forName0(Native Method)

2020-01-27 05:11:20.446 - stderr> at java.lang.Class.forName(Class.java:348)

2020-01-27 05:11:20.446 - stderr> at org.apache.hadoop.hive.ql.plan.TableDesc.getDeserializerClass(TableDesc.java:76)

.....

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manual test

Closes #27492 from wangyum/SPARK-30755.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a follow-up for #23124. It adds a new config, `spark.sql.legacy.allowDuplicatedMapKeys`, to control the behavior of removing duplicated map keys in built-in functions. With the default value `false`, Spark throws a RuntimeException when duplicated keys are found.
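The two policies can be modelled in a few lines. This is a minimal sketch, not Spark code: `build_map` and its `allow_duplicated_keys` parameter are hypothetical names that stand in for a built-in map constructor and the legacy config. That the later entry wins when the flag is on is an assumption about the deduplication introduced in #23124.

```python
from typing import Dict, Hashable, Iterable, Tuple


def build_map(
    entries: Iterable[Tuple[Hashable, object]],
    allow_duplicated_keys: bool = False,
) -> Dict[Hashable, object]:
    """Build a map from key/value pairs under one of two duplicate-key policies."""
    result: Dict[Hashable, object] = {}
    for key, value in entries:
        if key in result and not allow_duplicated_keys:
            # Default policy: fail loudly instead of silently dropping data.
            raise RuntimeError(f"Duplicate map key {key!r} was found")
        # Legacy policy (assumed): the later entry overwrites the earlier one.
        result[key] = value
    return result


print(build_map([(1, "a"), (1, "b")], allow_duplicated_keys=True))  # {1: 'b'}
```

Calling `build_map([(1, "a"), (1, "b")])` without the flag raises `RuntimeError`, which is the point of the change: a duplicate is reported rather than resolved silently.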

### Why are the changes needed?

Prevent silent behavior changes.

### Does this PR introduce any user-facing change?

Yes. A new config is added, and the default behavior for duplicated map keys changes to throwing a RuntimeException.

### How was this patch tested?

Modify existing UT.

Closes #27478 from xuanyuanking/SPARK-25892-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch addresses the post-hoc review comment linked here - https://github.com/apache/spark/pull/25670#discussion_r373304076

### Why are the changes needed?

We would like to explicitly document the direct relationship before we finish up structuring of configurations.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #27576 from HeartSaVioR/SPARK-28869-FOLLOWUP-doc.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Fix a style issue in the k8s document. To see the problem, go to http://spark.apache.org/docs/3.0.0-preview2/running-on-kubernetes.html and search for the keyword `spark.kubernetes.file.upload.path`.

### Why are the changes needed?

doc correctness

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27582 from yaooqinn/k8s-doc.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add doc for recommended pandas and pyarrow versions.

### Why are the changes needed?

The recommended versions are those that have been thoroughly tested by Spark CI. Other versions may be used at the discretion of the user.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

NA

Closes #27587 from BryanCutler/python-doc-rec-pandas-pyarrow-SPARK-30834-3.0.

Lead-authored-by: Bryan Cutler <cutlerb@gmail.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up of https://github.com/apache/spark/pull/27489.

It declares the ANSI SQL compliance options as experimental in the documentation.

### Why are the changes needed?

The options are experimental. There can be new features/behaviors in future releases.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Generating doc

Closes #27590 from gengliangwang/ExperimentalAnsi.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Change the link to the Scala API document.

```
$ git grep "#org.apache.spark.package"
docs/_layouts/global.html: <li><a href="api/scala/index.html#org.apache.spark.package">Scala</a></li>
docs/index.md:* [Spark Scala API (Scaladoc)](api/scala/index.html#org.apache.spark.package)
docs/rdd-programming-guide.md:[Scala](api/scala/#org.apache.spark.package), [Java](api/java/), [Python](api/python/) and [R](api/R/).
```

### Why are the changes needed?

The home page link to the Scala API document is incorrect after the upgrade to 3.0.

### Does this PR introduce any user-facing change?

Document UI change only.

### How was this patch tested?

Tested locally, with before and after screenshots attached to the PR.

Closes #27549 from xuanyuanking/scala-doc.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR addresses the comment at https://github.com/apache/spark/pull/26496#discussion_r379194091 and improves the migration guide to explicitly note that the legacy environment variable must be set in both the executors and the driver.

### Why are the changes needed?

To clarify that this environment variable should be set in both the driver and the executors.

### Does this PR introduce any user-facing change?

Nope.

### How was this patch tested?

I checked it via md editor.

Closes #27573 from HyukjinKwon/SPARK-29748.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

Currently, `spark.sql("select map()")` returns `{}`.

After these changes, it will return `map<null,null>`.

### Why are the changes needed?

After changes introduced due to #27521, it is important to maintain consistency while using map().

### Does this PR introduce any user-facing change?

Yes. Now map() will give map<null,null> instead of {}.

### How was this patch tested?

UT added. Migration guide updated as well

Closes #27542 from iRakson/SPARK-30790.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR is a follow-up of https://github.com/apache/spark/pull/26200.

In this PR, I modify the description of `spark.sql.files.*` in `sql-performance-tuning.md` to keep it consistent with the description in SQLConf.

### Why are the changes needed?

To keep consistent with the description in SQLConf.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UT.

Closes #27545 from turboFei/SPARK-29542-follow-up.

Authored-by: turbofei <fwang12@ebay.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR documents the Pandas UDF redesign with type hints introduced in SPARK-28264.

It is mostly self-describing; however, there are a few things to note for reviewers.

1. This PR replaces the existing documentation of pandas UDFs with the newer redesign to promote Python type hints. I added a note that Spark 3.0 still keeps compatibility with the old style, though.

2. This PR proposes to name non-pandas UDFs as "Pandas Function API".

3. SCALAR_ITER becomes two separate sections to reduce confusion:

- `Iterator[pd.Series]` -> `Iterator[pd.Series]`

- `Iterator[Tuple[pd.Series, ...]]` -> `Iterator[pd.Series]`

4. I removed some examples that look like overkill to me.

5. I also removed some information in the doc that seems duplicated or excessive.

### Why are the changes needed?

To document the new redesign of pandas UDFs.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests should cover this.

Closes #27466 from HyukjinKwon/SPARK-30722.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Document updated for `CACHE TABLE` & `UNCACHE TABLE`

### Why are the changes needed?

Cache table creates a temp view while caching data using `CACHE TABLE name AS query`. `UNCACHE TABLE` does not remove this temp view.

These things were not mentioned in the existing doc for `CACHE TABLE` & `UNCACHE TABLE`.

### Does this PR introduce any user-facing change?

Document updated for `CACHE TABLE` & `UNCACHE TABLE` command.

### How was this patch tested?

Manually

Closes #27090 from iRakson/SPARK-27545.

Lead-authored-by: root1 <raksonrakesh@gmail.com>

Co-authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This brings https://github.com/apache/spark/pull/26324 back. It was reverted mainly because of Hive compatibility concerns and the lack of investigation into other DBMSes and ANSI.

- PostgreSQL seems to coerce the NULL literal to the TEXT type.

- Presto seems to coerce `array() + array(1)` to an array of int.

- Hive seems to coerce `array() + array(1)` to an array of strings.

Given that, different systems have made different design choices for their own reasons. If we have to pick one of the two, coercing to an array of int seems to make much more sense.

Another investigation was made offline internally. It seems that ANSI SQL 2011, section 6.5 "<contextually typed value specification>" states:

> If ES is specified, then let ET be the element type determined by the context in which ES appears. The declared type DT of ES is Case:

>

> a) If ES simply contains ARRAY, then ET ARRAY[0].

>

> b) If ES simply contains MULTISET, then ET MULTISET.

>

> ES is effectively replaced by CAST ( ES AS DT )

From reading other related context, the empty array should be coerced to `NullType`. Given the investigation, choosing `null` seems correct, and we now have Presto as a reference. Therefore, this PR proposes to bring it back.

### Why are the changes needed?

When an empty array is created, it should be declared as `array<null>`.

### Does this PR introduce any user-facing change?

Yes, `array()` creates `array<null>`. Now `array(1) + array()` can correctly create `array(1)` instead of `array("1")`.
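The coercion rule can be pictured with a toy type model. This is only a sketch under stated assumptions, not Spark's type coercion: `element_type`, `widen`, and `concat` are hypothetical helpers, element types are represented by Python type names, and the rule "the null type widens to any other element type" is the one idea taken from the description above.

```python
from typing import List, Sequence, Tuple

NULL_TYPE = "null"  # toy name for the element type of an empty array


def element_type(values: Sequence[object]) -> str:
    """Infer a toy element type; an empty array gets the null type."""
    if not values:
        return NULL_TYPE
    names = {type(v).__name__ for v in values}
    if len(names) != 1:
        raise TypeError(f"mixed element types: {sorted(names)}")
    return names.pop()


def widen(left: str, right: str) -> str:
    """The null type widens to any type; otherwise both sides must agree."""
    if left == NULL_TYPE:
        return right
    if right == NULL_TYPE or left == right:
        return left
    raise TypeError(f"cannot combine array<{left}> with array<{right}>")


def concat(a: Sequence[object], b: Sequence[object]) -> Tuple[str, List[object]]:
    """Concatenate two toy arrays and report the resulting element type."""
    return widen(element_type(a), element_type(b)), list(a) + list(b)


print(concat([1], []))  # ('int', [1])
```

Because the empty array carries the null type rather than a string type, the integer side decides the result and the value `1` is never cast to `"1"`.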

### How was this patch tested?

Tested manually

Closes #27521 from HyukjinKwon/SPARK-29462.

Lead-authored-by: HyukjinKwon <gurwls223@apache.org>

Co-authored-by: Aman Omer <amanomer1996@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This is a follow-up for #24938 to tweak error message and migration doc.

### Why are the changes needed?

To let users know the workaround if SHOW CREATE TABLE doesn't work for some Hive tables.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing unit tests.

Closes #27505 from viirya/SPARK-27946-followup.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Liang-Chi Hsieh <liangchi@uber.com>

### What changes were proposed in this pull request?

Add the new tab `SQL` in the `Data Types` page.

### Why are the changes needed?

New type added in SPARK-29587.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Tested locally with Jekyll.

Closes #27447 from xuanyuanking/SPARK-29587-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a follow-up for #25029. In this PR, we throw an AnalysisException when a name conflict is detected in a nested WITH clause. This way, the config `spark.sql.legacy.ctePrecedence.enabled` must be set explicitly to get the expected behavior.

### Why are the changes needed?

The original change might be risky for end users because it changes behavior silently.

### Does this PR introduce any user-facing change?

Yes, the config `spark.sql.legacy.ctePrecedence.enabled` becomes optional.

### How was this patch tested?

New UT.

Closes #27454 from xuanyuanking/SPARK-28228-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add a new config, `spark.network.maxRemoteBlockSizeFetchToMem`, that falls back to the old config `spark.maxRemoteBlockSizeFetchToMem`.
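The fallback lookup can be sketched as follows. This is an illustration of the general pattern rather than Spark's config code: `get_with_fallback` is a hypothetical helper, the configuration is modelled as a plain dictionary, and the `"default"` placeholder stands in for whatever default the real config defines.

```python
from typing import Mapping

NEW_KEY = "spark.network.maxRemoteBlockSizeFetchToMem"
OLD_KEY = "spark.maxRemoteBlockSizeFetchToMem"


def get_with_fallback(
    conf: Mapping[str, str], key: str, fallback_key: str, default: str
) -> str:
    """Prefer the new key, then the old key, then the built-in default."""
    if key in conf:
        return conf[key]
    if fallback_key in conf:
        return conf[fallback_key]
    return default


# A job that still sets only the old name keeps working unchanged.
old_style = {OLD_KEY: "100m"}
print(get_with_fallback(old_style, NEW_KEY, OLD_KEY, "default"))  # 100m

# When both names are set, the new name takes precedence.
both = {OLD_KEY: "100m", NEW_KEY: "50m"}
print(get_with_fallback(both, NEW_KEY, OLD_KEY, "default"))  # 50m
```

This ordering is what keeps the rename from being a user-facing change: existing settings under the old name are still honoured.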

### Why are the changes needed?

For naming consistency.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27463 from xuanyuanking/SPARK-26700-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add migration note for removing `org.apache.spark.ml.image.ImageSchema.readImages`

### Why are the changes needed?

### Does this PR introduce any user-facing change?

### How was this patch tested?

Closes #27467 from WeichenXu123/SC-26286.

Authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to partially revert the commit 51a6ba0181 and provide a legacy parser based on `FastDateFormat`, which is compatible with `SimpleDateFormat`.

To enable the legacy parser, set `spark.sql.legacy.timeParser.enabled` to `true`.

### Why are the changes needed?

To allow users to restore the old behavior of parsing timestamps/dates using `SimpleDateFormat` patterns. The main reason for restoring it is that `DateTimeFormatter`'s patterns are not fully compatible with `SimpleDateFormat` patterns; see https://issues.apache.org/jira/browse/SPARK-30668

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

- Added new test to `DateFunctionsSuite`

- Restored additional test cases in `JsonInferSchemaSuite`.

Closes #27441 from MaxGekk/support-simpledateformat.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This reverts commit b89c3de1a4.

### Why are the changes needed?

`FIRST_VALUE` is used only for window expression. Please see the discussion on https://github.com/apache/spark/pull/25082 .

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Pass the Jenkins.

Closes #27458 from dongjoon-hyun/SPARK-28310.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a follow-up for #22787. In #22787, we disallowed empty strings in the JSON parser except for string and binary types. This follow-up adds a legacy config for restoring the previous behavior of allowing empty strings.

### Why are the changes needed?

Adding a legacy config to make migration easy for Spark users.

### Does this PR introduce any user-facing change?

Yes. By setting this legacy config to true, users can restore the behavior prior to Spark 3.0.0.

### How was this patch tested?

Unit test.

Closes #27456 from viirya/SPARK-25040-followup.

Lead-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Co-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the PR, I propose to update the SQL migration guide, and clarify behavior change of typed `TIMESTAMP` and `DATE` literals for input strings without time zone information - local timestamp and date strings.

### Why are the changes needed?

To inform users that the typed literals may change their behavior in Spark 3.0 because of different sources of the default time zone - JVM system time zone in Spark 2.4 and earlier, and `spark.sql.session.timeZone` in Spark 3.0.
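The effect of the default time zone on a local timestamp string can be reproduced outside Spark. The sketch below uses only Python's standard library: `to_epoch_seconds` is a hypothetical helper, and the two fixed-offset zones are made-up stand-ins for a JVM system time zone and a `spark.sql.session.timeZone` value that happen to differ.

```python
from datetime import datetime, timedelta, timezone


def to_epoch_seconds(local_text: str, default_zone: timezone) -> int:
    """Interpret a zone-less timestamp string in the given default zone."""
    naive = datetime.strptime(local_text, "%Y-%m-%d %H:%M:%S")
    return int(naive.replace(tzinfo=default_zone).timestamp())


# Assumed example zones; real deployments will have their own values.
jvm_system_zone = timezone(timedelta(hours=-8))  # stand-in for the JVM default
session_zone = timezone.utc                      # stand-in for the session zone

literal = "2020-01-01 00:00:00"  # a local timestamp with no zone information
old_instant = to_epoch_seconds(literal, jvm_system_zone)
new_instant = to_epoch_seconds(literal, session_zone)

print(old_instant - new_instant)  # 28800, i.e. the instants are 8 hours apart
```

The same literal text therefore maps to two different instants whenever the two zones disagree, which is the change the migration guide needs to call out.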

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #27435 from MaxGekk/timestamp-lit-migration-guide.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

We have upgraded the built-in Hive from 1.2 to 2.3. Users may need to set `spark.sql.hive.metastore.version` and `spark.sql.hive.metastore.jars` according to the version of their Hive metastore. Example:

```

--conf spark.sql.hive.metastore.version=1.2.1 --conf spark.sql.hive.metastore.jars=/root/hive-1.2.1-lib/*

```

Otherwise:

```

org.apache.spark.sql.AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to fetch table spark_27686. Invalid method name: 'get_table_req';

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:110)

at org.apache.spark.sql.hive.HiveExternalCatalog.tableExists(HiveExternalCatalog.scala:841)

at org.apache.spark.sql.catalyst.catalog.ExternalCatalogWithListener.tableExists(ExternalCatalogWithListener.scala:146)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.tableExists(SessionCatalog.scala:431)

at org.apache.spark.sql.execution.command.CreateDataSourceTableCommand.run(createDataSourceTables.scala:52)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:70)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:68)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.executeCollect(commands.scala:79)

at org.apache.spark.sql.Dataset.$anonfun$logicalPlan$1(Dataset.scala:226)

at org.apache.spark.sql.Dataset.$anonfun$withAction$1(Dataset.scala:3487)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$4(SQLExecution.scala:100)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:160)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:87)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3485)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:226)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:96)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:607)

... 47 elided

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Unable to fetch table spark_27686. Invalid method name: 'get_table_req'

at org.apache.hadoop.hive.ql.metadata.Hive.getTable(Hive.java:1282)

at org.apache.spark.sql.hive.client.HiveClientImpl.getRawTableOption(HiveClientImpl.scala:422)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$tableExists$1(HiveClientImpl.scala:436)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$withHiveState$1(HiveClientImpl.scala:322)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:256)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:255)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:305)

at org.apache.spark.sql.hive.client.HiveClientImpl.tableExists(HiveClientImpl.scala:436)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$tableExists$1(HiveExternalCatalog.scala:841)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:100)

... 63 more

Caused by: org.apache.thrift.TApplicationException: Invalid method name: 'get_table_req'

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:79)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_table_req(ThriftHiveMetastore.java:1567)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_table_req(ThriftHiveMetastore.java:1554)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getTable(HiveMetaStoreClient.java:1350)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.getTable(SessionHiveMetaStoreClient.java:127)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:173)

at com.sun.proxy.$Proxy38.getTable(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient$SynchronizedHandler.invoke(HiveMetaStoreClient.java:2336)

at com.sun.proxy.$Proxy38.getTable(Unknown Source)

at org.apache.hadoop.hive.ql.metadata.Hive.getTable(Hive.java:1274)

... 74 more

```

### Why are the changes needed?

Improve documentation.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```SKIP_API=1 jekyll build```:

Closes #27161 from wangyum/SPARK-27686.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This change makes resource discovery for custom resource scheduling (GPUs, FPGAs, etc.) more flexible. Users are asking for it to work with Hadoop 2.x versions that do not support resource scheduling in YARN, and they may also not be running in an isolated environment.

This change creates a plugin API so that users can write their own resource discovery class, which allows much more flexibility. The user can chain plugins for different resource types. The user-specified plugins execute in the order specified, and Spark falls back to the discovery script plugin if they don't return information for a particular resource.

I had to make a few of the classes public, change them to no longer be case classes, and mark them as developer API so that the plugin can get the information it needs.

I also relaxed the YARN side so that if YARN isn't configured for resource scheduling we just warn and go on. This helps users that have YARN 3.1 but haven't configured the resource scheduling side on their cluster yet, or aren't running in an isolated environment.

The user would configure this like:

--conf spark.resources.discovery.plugin="org.apache.spark.resource.ResourceDiscoveryFPGAPlugin, org.apache.spark.resource.ResourceDiscoveryGPUPlugin"

Note the executor side had to be wrapped with a classloader to make sure we include the user classpath for jars they specified on submission.

Note this is more flexible because the discovery script has limitations, such as being spawned in a separate process. This means that if you try to allocate resources in that process, they might be released when the script returns. A class also makes it easier to integrate with existing systems and solutions for assigning resources.
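The chaining and fallback behavior described above can be illustrated with a small sketch. This is not Spark's plugin API: the `Plugin` type, the three plugin functions, and `discover` are hypothetical names used only to show the ordering logic, assuming a plugin signals "no information" by returning `None`.

```python
from typing import Callable, List, Optional

# Hypothetical stand-in for a discovery plugin: it takes a resource name and
# returns a list of addresses, or None if it has no information for it.
Plugin = Callable[[str], Optional[List[str]]]


def fpga_plugin(resource: str) -> Optional[List[str]]:
    # Illustrative only: pretends a single FPGA is present.
    return ["f0"] if resource == "fpga" else None


def gpu_plugin(resource: str) -> Optional[List[str]]:
    # Illustrative only: pretends two GPUs are present.
    return ["0", "1"] if resource == "gpu" else None


def script_fallback(resource: str) -> Optional[List[str]]:
    # Stands in for the discovery script plugin, which is tried last.
    return ["script-" + resource]


def discover(resource: str, plugins: List[Plugin], fallback: Plugin) -> List[str]:
    # Try the user-specified plugins in the order they were configured.
    for plugin in plugins:
        addresses = plugin(resource)
        if addresses is not None:
            return addresses
    # No plugin returned information, so fall back to the script plugin.
    addresses = fallback(resource)
    if addresses is None:
        raise RuntimeError("no plugin could discover resource: " + resource)
    return addresses


chain = [fpga_plugin, gpu_plugin]
print(discover("gpu", chain, script_fallback))
print(discover("nic", chain, script_fallback))
```

In this sketch the order of `chain` mirrors the order of the comma-separated class names in the configuration value, and a resource that no plugin recognizes is handed to the fallback.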

### Why are the changes needed?

To more easily use Spark resource scheduling with older versions of Hadoop or in non-isolated environments.

### Does this PR introduce any user-facing change?

Yes, a plugin API.

### How was this patch tested?

Unit tests added and manual testing done on yarn and standalone modes.

Closes #27410 from tgravescs/hadoop27spark3.

Lead-authored-by: Thomas Graves <tgraves@nvidia.com>

Co-authored-by: Thomas Graves <tgraves@apache.org>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This patch adds a DDL command `SHOW CREATE TABLE AS SERDE`. It is used to generate Hive DDL for a Hive table.

The original `SHOW CREATE TABLE` now always shows Spark DDL. If given a Hive table, it tries to generate Spark DDL.

For Hive serde to data source conversion, this uses the existing mapping inside `HiveSerDe`. If it can't find a mapping there, it throws an analysis exception for the unsupported serde configuration.

Arguably, some combinations of Hive file format and row serde could be mapped to a Spark data source, e.g., CSV. That is not included in this PR; to be conservative, it may not be supported.

For now, Hive serde properties are not saved to the Spark DDL because it may not be useful to keep Hive serde properties in a Spark table.

## How was this patch tested?

Added test.

Closes #24938 from viirya/SPARK-27946.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

Add a section to the Configuration page to document configurations for executor metrics.

At the same time, rename spark.eventLog.logStageExecutorProcessTreeMetrics.enabled to spark.executor.processTreeMetrics.enabled and make it independent of spark.eventLog.logStageExecutorMetrics.enabled.

### Why are the changes needed?

Executor metrics are new in Spark 3.0. They lack documentation.

Memory metrics as a whole are always collected, but the ones obtained from the process tree have to be optionally enabled. Making this depend on a single configuration makes for more intuitive behavior. Given this, the configuration property is renamed to better reflect its meaning.

### Does this PR introduce any user-facing change?

Yes, only in that the configurations are all new to 3.0.

### How was this patch tested?

Not necessary.

Closes #27329 from wypoon/SPARK-27324.

Authored-by: Wing Yew Poon <wypoon@cloudera.com>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

### What changes were proposed in this pull request?

- Add `minPartitions` support for Kafka Streaming V1 source.

- Add `minPartitions` support for Kafka batch V1 and V2 source.

- There is a lot of refactoring (moving code to KafkaOffsetReader) to reuse code.

### Why are the changes needed?

Right now, the "minPartitions" option only works in Kafka streaming source v2. It would be great if we could support it in the batch source and in streaming source v1 as well (v1 is the fallback mode when a user hits a regression in v2).
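For readers unfamiliar with the option, the idea behind `minPartitions` is that when the requested minimum exceeds the number of Kafka topic partitions, large offset ranges are divided into smaller pieces so that more Spark tasks can read in parallel. The following is a rough sketch of that idea only; it is not the code in this PR, and `split_ranges` and its proportional rounding rule are assumptions made for illustration.

```python
from typing import Dict, List, Tuple


def split_ranges(
    ranges: Dict[str, Tuple[int, int]], min_partitions: int
) -> List[Tuple[str, int, int]]:
    """Split per-partition offset ranges (end offset exclusive) into slices.

    Hypothetical helper: illustrates the idea, not Spark's implementation.
    """
    # Ignore partitions that have nothing to read.
    non_empty = {tp: r for tp, r in ranges.items() if r[1] > r[0]}

    # Enough topic partitions already: keep the 1-to-1 mapping.
    if len(non_empty) >= min_partitions:
        return [(tp, start, end) for tp, (start, end) in non_empty.items()]

    total = sum(end - start for start, end in non_empty.values())
    slices = []
    for tp, (start, end) in non_empty.items():
        size = end - start
        # Give each topic partition a share of slices proportional to its size.
        parts = max(1, round(size / total * min_partitions))
        parts = min(parts, size)  # never create an empty slice
        for i in range(parts):
            lo = start + (size * i) // parts
            hi = start + (size * (i + 1)) // parts
            slices.append((tp, lo, hi))
    return slices


print(split_ranges({"t-0": (0, 300), "t-1": (0, 100)}, min_partitions=4))
```

Because of rounding, a scheme like this treats the requested value as a target rather than an exact count.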

### Does this PR introduce any user-facing change?

Yes. The `minPartitions` option is now supported in Kafka batch and streaming queries for both data source V1 and V2.

### How was this patch tested?

New unit tests are added to test "minPartitions".

Closes #27388 from zsxwing/kafka-min-partitions.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: Shixiong Zhu <zsxwing@gmail.com>

### What changes were proposed in this pull request?

This PR removes any dependencies on pypandoc. It also makes related tweaks to the docs README to clarify the dependency on pandoc (not pypandoc).

### Why are the changes needed?

We are using pypandoc to convert the Spark README from Markdown to ReST for PyPI. PyPI now natively supports Markdown, so we don't need pypandoc anymore. The dependency on pypandoc also sometimes causes issues when installing Python packages that depend on PySpark, as described in #18981.
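As a minimal sketch of what relying on PyPI's native Markdown support looks like, the README text can be passed to `setuptools.setup` unchanged together with the `long_description_content_type` keyword. The helper name `build_long_description_kwargs` is hypothetical and is not taken from the PySpark `setup.py`.

```python
from typing import Dict


def build_long_description_kwargs(readme_markdown: str) -> Dict[str, str]:
    # Hypothetical helper: returns the two setup() keyword arguments that
    # tell PyPI to render the description as Markdown, with no ReST conversion.
    return {
        "long_description": readme_markdown,
        "long_description_content_type": "text/markdown",
    }


# Intended use inside a setup.py (shown as a comment so the sketch stays
# self-contained and does not need a README file on disk):
#   with open("README.md") as f:
#       setup(name="example", **build_long_description_kwargs(f.read()))
kwargs = build_long_description_kwargs("# Apache Spark\n")
print(kwargs["long_description_content_type"])
```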

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually:

```sh
python -m venv venv
source venv/bin/activate
pip install -U pip
cd python/
python setup.py sdist
pip install dist/pyspark-3.0.0.dev0.tar.gz
pyspark --version
```

I also built the PySpark and R API docs with `jekyll` and reviewed them locally.

It would be good if a maintainer could also test this by creating a PySpark distribution and uploading it to [Test PyPI](https://test.pypi.org) to confirm the README looks as it should.

Closes #27376 from nchammas/SPARK-30665-pypandoc.

Authored-by: Nicholas Chammas <nicholas.chammas@liveramp.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add the supported Hive features to the documentation.

### Why are the changes needed?

Update the documentation.

### Does this PR introduce any user-facing change?

Before change UI info:

After this pr:

### How was this patch tested?

For a PR that changes the Spark docs web UI, we need to show the UI before and after the PR.

We can run a local web server for the Spark docs by following `$SPARK_PROJECT/docs/README.md`.

You should install Python and Ruby in your environment and also install the plugins as shown below:

```sh
$ sudo gem install jekyll jekyll-redirect-from rouge
# Following is needed only for generating API docs
$ sudo pip install sphinx pypandoc mkdocs
$ sudo Rscript -e 'install.packages(c("knitr", "devtools", "rmarkdown"), repos="https://cloud.r-project.org/")'
$ sudo Rscript -e 'devtools::install_version("roxygen2", version = "5.0.1", repos="https://cloud.r-project.org/")'
$ sudo Rscript -e 'devtools::install_version("testthat", version = "1.0.2", repos="https://cloud.r-project.org/")'
```

Then we call `jekyll serve --watch`. After the build, we see the message below:

```
~/Documents/project/AngersZhu/spark/sql
Moving back into docs dir.
Making directory api/sql
cp -r ../sql/site/. api/sql
Source: /Users/angerszhu/Documents/project/AngersZhu/spark/docs
Destination: /Users/angerszhu/Documents/project/AngersZhu/spark/docs/_site
Incremental build: disabled. Enable with --incremental
Generating...
done in 24.717 seconds.
Auto-regeneration: enabled for '/Users/angerszhu/Documents/project/AngersZhu/spark/docs'
Server address: http://127.0.0.1:4000
Server running... press ctrl-c to stop.
```

Visit http://127.0.0.1:4000 to see your latest changes in the docs site.

Closes #27106 from AngersZhuuuu/SPARK-30435.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds `numpy` to the list of things that need to be installed in order to build the API docs. It doesn't add a new dependency; it just documents an existing dependency.

### Why are the changes needed?

You cannot build the PySpark API docs without numpy installed. Otherwise you get this series of errors:

```

$ SKIP_SCALADOC=1 SKIP_RDOC=1 SKIP_SQLDOC=1 jekyll serve

Configuration file: .../spark/docs/_config.yml

Moving to python/docs directory and building sphinx.

sphinx-build -b html -d _build/doctrees . _build/html

Running Sphinx v2.3.1

loading pickled environment... done