### What changes were proposed in this pull request?

Move the rule `RemoveAllHints` after the batch `Resolution`.

### Why are the changes needed?

User-defined hints can be resolved by the rules injected via `extendedResolutionRules` or `postHocResolutionRules`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a test case

Closes #25746 from gatorsmile/moveRemoveAllHints.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Added the note "array indices start at 1" to the documentation of the `slice` function in the R, Scala, and Python components to make its usage clear.

### Why are the changes needed?

`slice` throws an exception if the value of `start` is 0, whereas array indices start at 0 in most other contexts.
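The indexing rule can be illustrated with a small pure-Python model. This is only a sketch of the documented behavior, not Spark's implementation; the function name `slice_one_based` and the handling of out-of-range starts are assumptions made for the example.

```python
def slice_one_based(values, start, length):
    """Toy model of a 1-based slice; not Spark's implementation."""
    if start == 0:
        raise ValueError("array indices start at 1")
    if length < 0:
        raise ValueError("length must be non-negative")
    # A negative start counts back from the end of the array.
    begin = start - 1 if start > 0 else len(values) + start
    if begin < 0 or begin >= len(values):
        return []  # assumption: an out-of-range start yields an empty result
    return values[begin:begin + length]


print(slice_one_based([1, 2, 3, 4], 2, 2))   # [2, 3]
print(slice_one_based([1, 2, 3, 4], -2, 2))  # [3, 4]
```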

### Does this PR introduce any user-facing change?

Yes, more info provided to user.

### How was this patch tested?

No tests added, only doc change.

Closes #25704 from sheepstop/master.

Authored-by: sheepstop <yangting617@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR enables `ThriftServerQueryTestSuite` and fixes the previously flaky test by:

1. Starting the Thrift server in `beforeAll()`.

2. Disabling `spark.sql.hive.thriftServer.async`.

### Why are the changes needed?

Improve test coverage.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```shell

build/sbt "hive-thriftserver/test-only *.ThriftServerQueryTestSuite " -Phive-thriftserver

build/mvn -Dtest=none -DwildcardSuites=org.apache.spark.sql.hive.thriftserver.ThriftServerQueryTestSuite test -Phive-thriftserver

```

Closes #25868 from wangyum/SPARK-28527-enable.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Modified widths of some checkboxes in StagePage.

### Why are the changes needed?

When the browser font size is increased, or the default font size is large, the checkbox labels under `Show Additional Metrics` in `StagePage` wrap onto multiple lines.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Run the following and visit the `Stage Detail` page. Then, increase the font size.

```

$ bin/spark-shell

...

scala> spark.range(100000).groupBy("id").count.collect

```

Closes #25905 from sarutak/adjust-checkbox-width.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

The partition path should be qualified before it is stored in the catalog.

There are several cases:

1. ALTER TABLE t PARTITION(b=1) SET LOCATION '/path/x'

should be qualified: file:/path/x

**Hive 2.0.0 does not support a location without a scheme here.**

```

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. {0} is not absolute or has no scheme information. Please specify a complete absolute uri with scheme information.

```

2. ALTER TABLE t PARTITION(b=1) SET LOCATION 'x'

should be qualified: file:/tablelocation/x

**Hive 2.0.0 does not support a relative location here.**

3. ALTER TABLE t ADD PARTITION(b=1) LOCATION '/path/x'

should be qualified: file:/path/x

**The same as Hive 2.0.0.**

4. ALTER TABLE t ADD PARTITION(b=1) LOCATION 'x'

should be qualified: file:/tablelocation/x

**The same as Hive 2.0.0.**

Currently only ALTER TABLE t ADD PARTITION(b=1) LOCATION for a Hive serde table produces the expected qualified path. We should make the other cases consistent with it.

Another change applies to altering the table location.
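The four cases above can be sketched as a small helper. This is a simplified illustration, not the code in this patch: the helper name `qualify_partition_location` is hypothetical, and real path qualification goes through the Hadoop file system rather than string handling.

```python
from urllib.parse import urlparse


def qualify_partition_location(location, table_location):
    """Return a scheme-qualified location (hypothetical helper)."""
    if urlparse(location).scheme:
        return location  # already qualified, keep as-is
    if location.startswith("/"):
        # Absolute path without a scheme: borrow the table's scheme.
        scheme = urlparse(table_location).scheme or "file"
        return f"{scheme}:{location}"
    # Relative path: resolve against the qualified table location.
    return f"{table_location.rstrip('/')}/{location}"


print(qualify_partition_location("/path/x", "file:/tablelocation"))  # file:/path/x
print(qualify_partition_location("x", "file:/tablelocation"))        # file:/tablelocation/x
```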

## How was this patch tested?

Added new test cases and modified existing ones.

Closes #17254 from windpiger/qualifiedPartitionPath.

Authored-by: windpiger <songjun@outlook.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch introduces new options "startingOffsetsByTimestamp" and "endingOffsetsByTimestamp" that set a timestamp per topic (since we are unlikely to set a different value per partition). The source starts reading from the first offset whose timestamp is equal to or greater than the starting timestamp, and stops reading at the first offset whose timestamp is equal to or greater than the ending timestamp.

The new options are optional and take precedence over the existing offset options.

## How was this patch tested?

New unit tests added. Also manually tested basic functionality with Kafka 2.0.0 server.

Running the query below

```

val df = spark.read.format("kafka")

.option("kafka.bootstrap.servers", "localhost:9092")

.option("subscribe", "spark_26848_test_v1,spark_26848_test_2_v1")

.option("startingOffsetsByTimestamp", """{"spark_26848_test_v1": 1549669142193, "spark_26848_test_2_v1": 1549669240965}""")

.option("endingOffsetsByTimestamp", """{"spark_26848_test_v1": 1549669265676, "spark_26848_test_2_v1": 1549699265676}""")

.load().selectExpr("CAST(value AS STRING)")

df.show()

```

with the records below (each a string whose numeric part indicates the timestamp after which the record was put) in

topic `spark_26848_test_v1`

```

hello1 1549669142193

world1 1549669142193

hellow1 1549669240965

world1 1549669240965

hello1 1549669265676

world1 1549669265676

```

topic `spark_26848_test_2_v1`

```

hello2 1549669142193

world2 1549669142193

hello2 1549669240965

world2 1549669240965

hello2 1549669265676

world2 1549669265676

```

the result of `df.show()` follows:

```

+--------------------+

| value|

+--------------------+

|world1 1549669240965|

|world1 1549669142193|

|world2 1549669240965|

|hello2 1549669240965|

|hellow1 154966924...|

|hello2 1549669265676|

|hello1 1549669142193|

|world2 1549669265676|

+--------------------+

```

Note that endingOffsets (as well as endingOffsetsByTimestamp) are exclusive.
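The timestamp-to-offset resolution behind this result can be modeled in a few lines. This is a sketch under simplifying assumptions, not the Kafka source implementation: it treats a topic as a single partition with non-decreasing record timestamps, and it falls back to the end of the log when no record matches. The function names are made up for the example.

```python
from bisect import bisect_left


def offset_for_timestamp(timestamps, target):
    """First offset whose timestamp is >= target.

    Returns len(timestamps) when no record qualifies; that fallback is an
    assumption of this sketch.
    """
    return bisect_left(timestamps, target)


def read_range(records, start_ts, end_ts):
    timestamps = [ts for _, ts in records]
    start = offset_for_timestamp(timestamps, start_ts)
    end = offset_for_timestamp(timestamps, end_ts)  # exclusive upper bound
    return records[start:end]


topic1 = [("hello1", 1549669142193), ("world1", 1549669142193),
          ("hellow1", 1549669240965), ("world1", 1549669240965),
          ("hello1", 1549669265676), ("world1", 1549669265676)]

# The two records stamped 1549669265676 are excluded by the exclusive end.
for value, ts in read_range(topic1, 1549669142193, 1549669265676):
    print(value, ts)
```

Applied to `spark_26848_test_v1` with the options from the query, the sketch selects the first four records, which matches the rows for that topic in the `df.show()` output.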

Closes #23747 from HeartSaVioR/SPARK-26848.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR updates LICENSE and NOTICE for Hive 2.3. The jars newly added with Hive 2.3 are:

```

dropwizard-metrics-hadoop-metrics2-reporter.jar

HikariCP-2.5.1.jar

hive-common-2.3.6.jar

hive-llap-common-2.3.6.jar

hive-serde-2.3.6.jar

hive-service-rpc-2.3.6.jar

hive-shims-0.23-2.3.6.jar

hive-shims-2.3.6.jar

hive-shims-common-2.3.6.jar

hive-shims-scheduler-2.3.6.jar

hive-storage-api-2.6.0.jar

hive-vector-code-gen-2.3.6.jar

javax.jdo-3.2.0-m3.jar

json-1.8.jar

transaction-api-1.1.jar

velocity-1.5.jar

```

### Why are the changes needed?

We will distribute a binary release based on Hadoop 3.2 / Hive 2.3 in the future.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #25896 from wangyum/SPARK-29016.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

BarrierCoordinator sets up a TimerTask for a round of global sync. Currently the `run` method is synchronized on the created TimerTask. To be synchronized with `handleRequest`, it should instead be synchronized on the ContextBarrierState object, not the TimerTask object.

### Why are the changes needed?

`ContextBarrierState.handleRequest` and `TimerTask.run` both access the internal state of a ContextBarrierState object. If the TimerTask is not synchronized on the same ContextBarrierState object, then while the timer task is running, `handleRequest` can still accept new requests and modify the `requesters` field of the ContextBarrierState object, which makes the behavior inconsistent.
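The locking discipline described here can be sketched with a toy model. The class and method names below are illustrative stand-ins, not Spark's classes; the point is only that the timer callback and the request handler take the same lock, the one owned by the shared state object.

```python
import threading


class BarrierState:
    """Toy stand-in for the shared barrier state; names are illustrative."""

    def __init__(self):
        self.lock = threading.Lock()  # single lock guarding all fields
        self.requesters = []
        self.timed_out = False

    def handle_request(self, requester):
        with self.lock:
            if self.timed_out:
                return False  # the round already failed, reject the request
            self.requesters.append(requester)
            return True

    def on_timeout(self):
        # The timer callback takes the state's lock, not a lock of its own,
        # so it cannot interleave with handle_request.
        with self.lock:
            self.timed_out = True
            self.requesters.clear()


state = BarrierState()
state.handle_request("task-0")
timer = threading.Timer(0.01, state.on_timeout)
timer.start()
timer.join()  # wait for the timer thread to finish
print(state.handle_request("task-1"))  # False
```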

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Tested locally.

Closes #25897 from viirya/SPARK-25903.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR reduces the number of shuffle partitions from 200 to 4 in `SQLQueryTestSuite` to reduce testing time.

### Why are the changes needed?

Reduce testing time.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually tested locally:

Before:

```

...

[info] - subquery/in-subquery/in-joins.sql (6 minutes, 19 seconds)

[info] - subquery/in-subquery/not-in-joins.sql (2 minutes, 17 seconds)

[info] - subquery/scalar-subquery/scalar-subquery-predicate.sql (45 seconds, 763 milliseconds)

...

Run completed in 1 hour, 22 minutes.

```

After:

```

...

[info] - subquery/in-subquery/in-joins.sql (1 minute, 12 seconds)

[info] - subquery/in-subquery/not-in-joins.sql (27 seconds, 541 milliseconds)

[info] - subquery/scalar-subquery/scalar-subquery-predicate.sql (17 seconds, 360 milliseconds)

...

Run completed in 47 minutes.

```

Closes #25891 from wangyum/SPARK-29203.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Discussed in https://github.com/apache/spark/pull/25611

If `cancel()` and `close()` are called very quickly after the query is started, they may both call `cleanup()` before the Spark jobs are started. In that case `sqlContext.sparkContext.cancelJobGroup(statementId)` does nothing.

The execute thread can then start the jobs and only afterwards get interrupted and exit through the catch block. Once it exits, no one cancels these jobs, and they keep running even though the execution has exited.

So when `execute()` is interrupted by `cancel()` and enters the catch block, we should call `cancelJobGroup` again to make sure the jobs are canceled.

### Why are the changes needed?

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

Manually tested.

Closes #25743 from AngersZhuuuu/SPARK-29036.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Perform task handling even if the task result exceeds the configured `maxResultSize`. More details are in the JIRA description at https://issues.apache.org/jira/browse/SPARK-29177

### Why are the changes needed?

Without this patch, the zombie tasks prevent YARN from recycling the containers running these tasks, which affects other applications.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit test and production test with a very large `SELECT` in the Spark Thrift server.

Closes #25850 from adrian-wang/zombie.

Authored-by: Daoyuan Wang <me@daoyuan.wang>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR supports UPDATE in the parser and adds the corresponding logical plan. The SQL syntax is a standard UPDATE statement:

```

UPDATE tableName tableAlias SET colName=value [, colName=value]+ WHERE predicate?

```

### Why are the changes needed?

With this change, we can start to implement UPDATE in builtin sources and think about how to design the update API in DS v2.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New test cases added.

Closes #25626 from xianyinxin/SPARK-28892.

Authored-by: xy_xin <xianyin.xxy@alibaba-inc.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

After the newly added shuffle block fetching protocol in #24565, we can keep this work by extending the FetchShuffleBlocks message.

### What changes were proposed in this pull request?

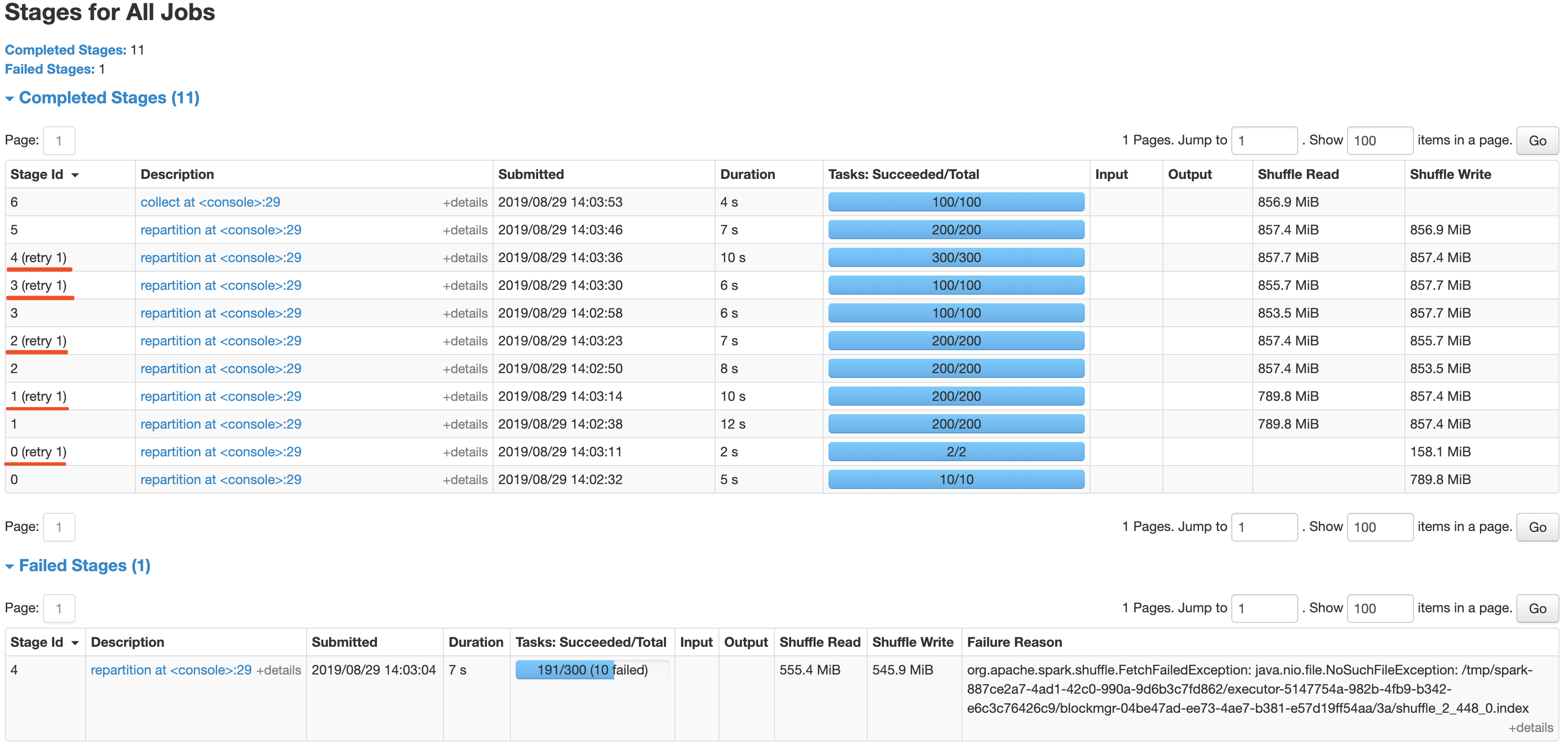

In this patch, we achieve the indeterminate shuffle rerun by reusing the task attempt id (a unique id within an application) in the shuffle id, so that each shuffle write attempt has a different file name. For an indeterminate stage, when the stage is resubmitted, we clear all existing map statuses and rerun all partitions.

All changes are summarized as follows:

- Change the mapId to mapTaskAttemptId in shuffle related id.

- Record the mapTaskAttemptId in MapStatus.

- Still keep mapId in ShuffleFetcherIterator for fetch failed scenario.

- Add the determinate flag in Stage and use it in DAGScheduler and the cleaning work for the intermediate stage.
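The effect of the first change can be illustrated with a minimal sketch. The file naming pattern and the attempt ids below are hypothetical and chosen only for the illustration; they are not taken from this patch.

```python
def shuffle_data_file(shuffle_id, map_identifier):
    # Hypothetical naming pattern used only for this illustration.
    return f"shuffle_{shuffle_id}_{map_identifier}_0.data"


# Keyed by map partition index: a rerun of partition 3 reuses the same name.
by_index = {shuffle_data_file(0, 3) for _attempt in (101, 205)}

# Keyed by task attempt id: every attempt writes to its own file.
by_attempt = {shuffle_data_file(0, attempt_id) for attempt_id in (101, 205)}

print(len(by_index), len(by_attempt))  # 1 2
```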

### Why are the changes needed?

This is follow-up work for the future improvement[1] noted in #22112: `Currently we can't rollback and rerun a shuffle map stage, and just fail.`

Spark reruns a finished shuffle write stage when it meets fetch failures. Currently, the rerun shuffle map stage only resubmits the tasks for missing partitions and reuses the output of the other partitions. This logic is fine in most scenarios, but for indeterminate operations (like repartition), multiple shuffle write attempts may write different data, so rerunning only the missing partitions leads to a correctness bug. For the shuffle map stage of indeterminate operations, we therefore need to support rolling back the shuffle map stage and regenerating the shuffle files.

### Does this PR introduce any user-facing change?

Yes. After this PR, an indeterminate stage that needs a rerun is rerun in full. The original behavior was to abort the stage and fail the job.

### How was this patch tested?

- UT: Added unit tests for all changed code and newly added functions.

- Manual test: A manual test is also provided to verify the effect.

```

import scala.sys.process._

import org.apache.spark.TaskContext

val determinateStage0 = sc.parallelize(0 until 1000 * 1000 * 100, 10)

val indeterminateStage1 = determinateStage0.repartition(200)

val indeterminateStage2 = indeterminateStage1.repartition(200)

val indeterminateStage3 = indeterminateStage2.repartition(100)

val indeterminateStage4 = indeterminateStage3.repartition(300)

val fetchFailIndeterminateStage4 = indeterminateStage4.map { x =>

  if (TaskContext.get.attemptNumber == 0 && TaskContext.get.partitionId == 190 &&

      TaskContext.get.stageAttemptNumber == 0) {

    throw new Exception("pkill -f -n java".!!)

  }

  x

}

val indeterminateStage5 = fetchFailIndeterminateStage4.repartition(200)

val finalStage6 = indeterminateStage5.repartition(100).collect().distinct.length

```

It's a simple job with multiple indeterminate stages. It gets a wrong answer on old Spark versions like 2.2/2.3 and is killed after #22112. With this fix, the job retries all indeterminate stages and gets the right result.

Closes #25620 from xuanyuanking/SPARK-25341-8.27.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to check method bytecode size in `BenchmarkQueryTest`. This metric is critical for performance numbers.

### Why are the changes needed?

For performance checks

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #25788 from maropu/CheckMethodSize.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds support for sorting the `Execution Time` and `Duration` columns in `ThriftServerSessionPage`.

### Why are the changes needed?

Previously, these columns were not sorted correctly.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually do the following and test sorting on those columns in the Spark Thrift Server Session Page.

```

$ sbin/start-thriftserver.sh

$ bin/beeline -u jdbc:hive2://localhost:10000

0: jdbc:hive2://localhost:10000> create table t(a int);

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.521 seconds)

0: jdbc:hive2://localhost:10000> select * from t;

+----+--+

| a |

+----+--+

+----+--+

No rows selected (0.772 seconds)

0: jdbc:hive2://localhost:10000> show databases;

+---------------+--+

| databaseName |

+---------------+--+

| default |

+---------------+--+

1 row selected (0.249 seconds)

```

**Sorted by `Execution Time` column**:

**Sorted by `Duration` column**:

Closes #25892 from wangyum/SPARK-28599.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to add tag `ExtendedSQLTest` for `SQLQueryTestSuite`.

This doesn't affect our Jenkins test coverage.

Instead, this tag gives us an ability to parallelize them by splitting this test suite and the other suites.

### Why are the changes needed?

`SQLQueryTestSuite` takes 45 mins alone because it has many SQL scripts to run.

<img width="906" alt="time" src="https://user-images.githubusercontent.com/9700541/65353553-4af0f100-dba2-11e9-9f2f-386742d28f92.png">

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```

build/sbt "sql/test-only *.SQLQueryTestSuite" -Dtest.exclude.tags=org.apache.spark.tags.ExtendedSQLTest

...

[info] SQLQueryTestSuite:

[info] ScalaTest

[info] Run completed in 3 seconds, 147 milliseconds.

[info] Total number of tests run: 0

[info] Suites: completed 1, aborted 0

[info] Tests: succeeded 0, failed 0, canceled 0, ignored 0, pending 0

[info] No tests were executed.

[info] Passed: Total 0, Failed 0, Errors 0, Passed 0

[success] Total time: 22 s, completed Sep 20, 2019 12:23:13 PM

```

Closes #25872 from dongjoon-hyun/SPARK-29191.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Rewrite

```

NOT isnull(x) -> isnotnull(x)

NOT isnotnull(x) -> isnull(x)

```

### Why are the changes needed?

This makes the LogicalPlan more readable and more useful for query canonicalization: equivalent conditions now compare equal when canonicalized queries are compared.
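The rewrite can be sketched on a toy expression tree. The classes below are minimal stand-ins written for this example, not Catalyst expressions, and `simplify` is a hypothetical name for the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attr:
    name: str


@dataclass(frozen=True)
class IsNull:
    child: object


@dataclass(frozen=True)
class IsNotNull:
    child: object


@dataclass(frozen=True)
class Not:
    child: object


def simplify(expr):
    """Rewrite NOT isnull(x) -> isnotnull(x) and NOT isnotnull(x) -> isnull(x)."""
    if isinstance(expr, Not):
        inner = simplify(expr.child)
        if isinstance(inner, IsNull):
            return IsNotNull(inner.child)
        if isinstance(inner, IsNotNull):
            return IsNull(inner.child)
        return Not(inner)
    if isinstance(expr, (IsNull, IsNotNull)):
        return type(expr)(simplify(expr.child))
    return expr


x = Attr("x")
# After the rewrite, both spellings of the condition compare equal.
print(simplify(Not(IsNull(x))) == IsNotNull(x))  # True
```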

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

Newly added UTs.

Closes #25878 from AngersZhuuuu/SPARK-29162.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a followup for https://github.com/apache/spark/pull/24981

It seems we mistakenly did not add `test_pandas_udf_cogrouped_map` to `modules.py`, so we don't have official test results for that PR.

```

...

Starting test(python3.6): pyspark.sql.tests.test_pandas_udf

...

Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_agg

...

Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_map

...

Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_scalar

...

Starting test(python3.6): pyspark.sql.tests.test_pandas_udf_window

Finished test(python3.6): pyspark.sql.tests.test_pandas_udf (21s)

...

Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_map (49s)

...

Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_window (58s)

...

Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_scalar (82s)

...

Finished test(python3.6): pyspark.sql.tests.test_pandas_udf_grouped_agg (105s)

...

```

If tests fail, we should revert that PR.

### Why are the changes needed?

Relevant tests should be run.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Jenkins tests.

Closes #25890 from HyukjinKwon/SPARK-28840.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Supported special string values for `DATE` type. They are simply notational shorthands that will be converted to ordinary date values when read. The following string values are supported:

- `epoch [zoneId]` - `1970-01-01`

- `today [zoneId]` - the current date in the time zone specified by `spark.sql.session.timeZone`.

- `yesterday [zoneId]` - the current date -1

- `tomorrow [zoneId]` - the current date + 1

- `now` - the date of running the current query. It has the same notion as `today`.

For example:

```sql

spark-sql> SELECT date 'tomorrow' - date 'yesterday';

2

```
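The mapping from special strings to dates can be sketched as follows. This is an illustrative model only: the function name is hypothetical, the current date is passed in explicitly, and the optional `zoneId` suffix is ignored.

```python
from datetime import date, timedelta


def parse_special_date(text, today):
    """Resolve a special date string relative to `today` (sketch only)."""
    keyword = text.strip().lower()
    if keyword == "epoch":
        return date(1970, 1, 1)
    offsets = {"today": 0, "now": 0, "yesterday": -1, "tomorrow": 1}
    if keyword in offsets:
        return today + timedelta(days=offsets[keyword])
    # Anything else is treated as an ordinary ISO date literal.
    return date.fromisoformat(text.strip())


today = date(2019, 9, 6)
delta = parse_special_date("tomorrow", today) - parse_special_date("yesterday", today)
print(delta.days)  # 2
```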

### Why are the changes needed?

To maintain feature parity with PostgreSQL, see [8.5.1.4. Special Values](https://www.postgresql.org/docs/12/datatype-datetime.html)

### Does this PR introduce any user-facing change?

Previously, the parser failed on the special values with the error:

```sql

spark-sql> select date 'today';

Error in query:

Cannot parse the DATE value: today(line 1, pos 7)

```

After the changes, the special values are converted to appropriate dates:

```sql

spark-sql> select date 'today';

2019-09-06

```

### How was this patch tested?

- Added tests to `DateFormatterSuite` to check parsing special values from regular strings.

- Tests in `DateTimeUtilsSuite` check parsing those values from `UTF8String`

- Uncommented tests in `date.sql`

Closes #25708 from MaxGekk/datetime-special-values.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Correct a word in a log message.

### Why are the changes needed?

The log message will be clearer.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Test is not needed.

Closes #25880 from mdianjun/fix-a-word.

Authored-by: madianjun <madianjun@jd.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Refactored the `DateTimeUtils.getEpoch()` function to avoid expensive decimal operations, converting only the final result to the decimal type.

### Why are the changes needed?

The changes improve the performance of the `getEpoch()` method by roughly **20 times**.

Before:

```

Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------------------------------

cast to timestamp 256 277 33 39.0 25.6 1.0X

EPOCH of timestamp 23455 23550 131 0.4 2345.5 0.0X

```

After:

```

Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------------------------------

cast to timestamp 255 294 34 39.2 25.5 1.0X

EPOCH of timestamp 1049 1054 9 9.5 104.9 0.2X

```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By the existing tests in `DateExpressionsSuite`.

Closes #25881 from MaxGekk/optimize-extract-epoch.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Changed `DateTimeUtils.getMilliseconds()` to avoid decimal division, replacing it with setting the scale and precision when converting microseconds to the decimal type.
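The idea can be illustrated with Python's `decimal` module. This is a sketch of the technique, not the Scala code in the patch: the function names are made up, and time zones are ignored on the assumption that zone offsets are whole minutes and so do not affect the seconds field.

```python
from decimal import Decimal

MICROS_PER_MINUTE = 60 * 1_000_000


def milliseconds_by_division(micros_since_epoch):
    # Seconds field (with fraction) in microseconds, then a decimal division.
    seconds_micros = micros_since_epoch % MICROS_PER_MINUTE
    return Decimal(seconds_micros) / Decimal(1000)


def milliseconds_by_scale(micros_since_epoch):
    # Same integer, but the decimal point is placed by shifting the exponent
    # instead of dividing.
    seconds_micros = micros_since_epoch % MICROS_PER_MINUTE
    return Decimal(seconds_micros).scaleb(-3)


micros = 1_568_937_612_123_456  # seconds field is 12.123456
print(milliseconds_by_scale(micros))  # 12123.456
```

Both functions return the same value; the second avoids the division entirely, which is the kind of saving the benchmark below measures.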

### Why are the changes needed?

This improves performance of `extract` and `date_part()` by more than **50 times**:

Before:

```

Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------------------------------

cast to timestamp 397 428 45 25.2 39.7 1.0X

MILLISECONDS of timestamp 36723 36761 63 0.3 3672.3 0.0X

```

After:

```

Invoke extract for timestamp: Best Time(ms) Avg Time(ms) Stdev(ms) Rate(M/s) Per Row(ns) Relative

------------------------------------------------------------------------------------------------------------------------

cast to timestamp 278 284 6 36.0 27.8 1.0X

MILLISECONDS of timestamp 592 606 13 16.9 59.2 0.5X

```

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By existing test suite - `DateExpressionsSuite`

Closes #25871 from MaxGekk/optimize-epoch-millis.

Lead-authored-by: Maxim Gekk <max.gekk@gmail.com>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Support for dot product with:

- `ml.linalg.Vector`

- `ml.linalg.Vectors`

- `mllib.linalg.Vector`

- `mllib.linalg.Vectors`

### Why are the changes needed?

Dot product is useful for feature engineering and scoring. BLAS routines are already there, just a wrapper is needed.
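For reference, the operation itself is small. The following pure-Python sketch shows the dense and sparse-dense cases; the function names are illustrative and are not the API added by this PR, which wraps the existing BLAS routines.

```python
def dot_dense(a, b):
    """Dot product of two dense vectors of equal size."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same size")
    return sum(x * y for x, y in zip(a, b))


def dot_sparse_dense(indices, values, dense):
    """Dot product of a sparse vector (indices, values) with a dense one."""
    # Only the explicitly stored entries of the sparse vector contribute.
    return sum(v * dense[i] for i, v in zip(indices, values))


print(dot_dense([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]))            # 32.0
print(dot_sparse_dense([0, 2], [1.0, 3.0], [4.0, 5.0, 6.0]))  # 22.0
```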

### Does this PR introduce any user-facing change?

No user facing changes, just some new functionality.

### How was this patch tested?

Tests were written and added to the appropriate `VectorsSuite` classes. They can be quickly run with:

```

sbt "mllib-local/testOnly org.apache.spark.ml.linalg.VectorsSuite"

sbt "mllib/testOnly org.apache.spark.mllib.linalg.VectorsSuite"

```

Closes #25818 from phpisciuneri/SPARK-29121.

Authored-by: Patrick Pisciuneri <phpisciuneri@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to add linters and license/dependency checkers to GitHub Action. This excludes `lint-r` intentionally because https://github.com/actions/setup-r is not ready. We can add that later when it becomes available.

### Why are the changes needed?

This will help the PR reviews.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

See the GitHub Action result on this PR.

Closes #25879 from dongjoon-hyun/SPARK-29199.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Credit to vanzin as he found and commented on this while reviewing #25670 - [comment](https://github.com/apache/spark/pull/25670#discussion_r325383512).

This patch proposes to specify UTF-8 explicitly when reading and writing event log files.

### Why are the changes needed?

The event log file is read and written using the default character set of the JVM process, which can cause problems when reading event log files written on other machines. Spark's de facto standard character set is UTF-8, so it should be set explicitly.
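The same principle applies in any language: name the encoding on both the write and the read side instead of relying on the platform default. A minimal Python sketch follows; the file name and the `Description` field are made up for the example.

```python
import json
import os
import tempfile

# Made-up payload with non-ASCII text, standing in for one event log line.
event = {"Event": "SparkListenerJobStart", "Description": "集計ジョブ"}
path = os.path.join(tempfile.mkdtemp(), "eventlog")

# Writer: the encoding is stated explicitly, never taken from the platform.
with open(path, "w", encoding="utf-8") as out:
    out.write(json.dumps(event, ensure_ascii=False) + "\n")

# Reader: the same explicit encoding, so the result does not depend on the
# default charset of the machine that reads the file.
with open(path, "r", encoding="utf-8") as src:
    restored = json.loads(src.readline())

print(restored == event)  # True
```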

### Does this PR introduce any user-facing change?

Yes, if end users have been running Spark processes, especially the driver JVM, with a default charset other than "UTF-8". Otherwise, no.

### How was this patch tested?

Existing UTs, as ReplayListenerSuite contains "end-to-end" event logging/reading tests (both uncompressed/compressed).

Closes #25845 from HeartSaVioR/SPARK-29160.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

TransportClientFactory.createClient() is called by task and TransportClientFactory.close() is called by executor.

When the executor is stopped, `close()` sets `workerGroup = null`, so a concurrent `createClient()` throws an NPE, which generates many exceptions in the log.

An exception that occurs after `close()` is treated as an expected exception and is transformed into an `InterruptedException`, which the Executor can process.

### Why are the changes needed?

The change reduces the exception stack traces in the log file, and users won't be confused by these expected exceptions.

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

New tests are added in TransportClientFactorySuite and ExecutorSuite

Closes #25759 from colinmjj/spark-19147.

Authored-by: colinma <colinma@tencent.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This patch fixes the issue brought by [SPARK-21870](http://issues.apache.org/jira/browse/SPARK-21870): when generating code for a parameter type, it doesn't consider array types in javaType. There is at least one such case: Spark should generate code for BinaryType as `byte[]`, but it generates `[B` instead, and the generated code fails compilation.

Below is the generated code which failed compilation (Line 380):

```

/* 380 */ private void agg_doAggregate_count_0([B agg_expr_1_1, boolean agg_exprIsNull_1_1, org.apache.spark.sql.catalyst.InternalRow agg_unsafeRowAggBuffer_1) throws java.io.IOException {

/* 381 */ // evaluate aggregate function for count

/* 382 */ boolean agg_isNull_26 = false;

/* 383 */ long agg_value_28 = -1L;

/* 384 */ if (!false && agg_exprIsNull_1_1) {

/* 385 */ long agg_value_31 = agg_unsafeRowAggBuffer_1.getLong(1);

/* 386 */ agg_isNull_26 = false;

/* 387 */ agg_value_28 = agg_value_31;

/* 388 */ } else {

/* 389 */ long agg_value_33 = agg_unsafeRowAggBuffer_1.getLong(1);

/* 390 */

/* 391 */ long agg_value_32 = -1L;

/* 392 */

/* 393 */ agg_value_32 = agg_value_33 + 1L;

/* 394 */ agg_isNull_26 = false;

/* 395 */ agg_value_28 = agg_value_32;

/* 396 */ }

/* 397 */ // update unsafe row buffer

/* 398 */ agg_unsafeRowAggBuffer_1.setLong(1, agg_value_28);

/* 399 */ }

```

There wasn't any test for HashAggregateExec specifically testing this, but randomized test in ObjectHashAggregateSuite could encounter this and that's why ObjectHashAggregateSuite is flaky.
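The mismatch comes from the JVM's internal naming of array classes, where `byte[]` is named `[B`. The sketch below converts such names into Java source type names to show the mapping; it is an illustration written for this note with a hypothetical function name, and the actual fix in Spark may obtain the type name differently.

```python
# JVM one-letter codes for primitive element types in array class names.
_PRIMITIVES = {"Z": "boolean", "B": "byte", "C": "char", "S": "short",
               "I": "int", "J": "long", "F": "float", "D": "double"}


def java_source_type(jvm_name):
    """Turn a JVM class name such as '[B' into Java source syntax."""
    dims = len(jvm_name) - len(jvm_name.lstrip("["))
    if dims == 0:
        return jvm_name  # not an array, already valid in source code
    element = jvm_name[dims:]
    if element.startswith("L") and element.endswith(";"):
        base = element[1:-1]  # object arrays: 'Ljava.lang.String;'
    else:
        base = _PRIMITIVES[element]
    return base + "[]" * dims


print(java_source_type("[B"))                   # byte[]
print(java_source_type("[Ljava.lang.String;"))  # java.lang.String[]
```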

### Why are the changes needed?

Without the fix, generated code from HashAggregateExec may fail compilation.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added new UT. Without the fix, newly added UT fails.

Closes #25830 from HeartSaVioR/SPARK-29140.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Update breeze dependency to 1.0.

### Why are the changes needed?

Breeze 1.0 supports Scala 2.13 and has a few bug fixes.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #25874 from srowen/SPARK-28772.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In this PR, I propose to change the behavior of the `date_part()` function when the `field` parameter is `null`, making it consistent with PostgreSQL. If `field` is `null`, the function should return `null` of the `double` type, as PostgreSQL does:

```sql

# select date_part(null, date '2019-09-20');

date_part

-----------

(1 row)

# select pg_typeof(date_part(null, date '2019-09-20'));

pg_typeof

------------------

double precision

(1 row)

```

### Why are the changes needed?

The `date_part()` function was added to maintain feature parity with PostgreSQL but current behavior of the function is different in handling null as `field`.

### Does this PR introduce any user-facing change?

Yes.

Before:

```sql

spark-sql> select date_part(null, date'2019-09-20');

Error in query: null; line 1 pos 7

```

After:

```sql

spark-sql> select date_part(null, date'2019-09-20');

NULL

```

### How was this patch tested?

Added new tests to `DateFunctionsSuite` for 2 cases:

- `field` = `null`, `source` = a date literal

- `field` = `null`, `source` = a date column

Closes #25865 from MaxGekk/date_part-null.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to extend the existing benchmarks to save JDK9+ results separately.

All `core` module benchmark test results are added. I'll run the other test suites in another PR.

After regenerating all results, we will check JDK11 performance regressions.

### Why are the changes needed?

From Apache Spark 3.0, we support both JDK8 and JDK11. We need to have a way to find the performance regression.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually run the benchmark.

Closes #25873 from dongjoon-hyun/SPARK-JDK11-PERF.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

If `threshold<0`, convert implicit 0 to 1, although this will break sparsity.

### Why are the changes needed?

If `threshold<0`, the current implementation handles sparse vectors incorrectly.

See JIRA [SPARK-29144](https://issues.apache.org/jira/browse/SPARK-29144) and [Scikit-Learn's Binarizer](https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.Binarizer.html) ('Threshold may not be less than 0 for operations on sparse matrices.') for details.
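To illustrate why a negative threshold matters for sparse input, here is a minimal pure-Python sketch. It is not the Spark implementation: the function name `binarize_sparse` and the `(size, indices, values)` representation are assumptions made for this example. It applies the rule "value > threshold maps to 1.0" to every position, including the implicit zeros.

```python
def binarize_sparse(size, indices, values, threshold):
    """Binarize a sparse vector given as (size, indices, values).

    Hypothetical helper for illustration only. Returns a dense list,
    because with threshold < 0 every implicit zero becomes 1.0 and the
    result is no longer sparse.
    """
    explicit = dict(zip(indices, values))
    # Positions missing from `indices` hold an implicit 0.0, which is
    # greater than any negative threshold.
    return [1.0 if explicit.get(i, 0.0) > threshold else 0.0 for i in range(size)]


# Positions 0 and 2 are implicit zeros.
print(binarize_sparse(4, [1, 3], [-2.0, 0.5], threshold=-1.0))
print(binarize_sparse(4, [1, 3], [-2.0, 0.5], threshold=0.0))
```

With a non-negative threshold the implicit zeros stay 0 and only explicit entries need to be visited; with a negative threshold they all flip to 1, which is why sparsity is lost.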

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

added testsuite

Closes #25829 from zhengruifeng/binarizer_throw_exception_sparse_vector.

Authored-by: zhengruifeng <ruifengz@foxmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR adds the Java 11 version to the documentation.

### Why are the changes needed?

Apache Spark 3.0.0 starts to support JDK11 officially.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually. Doc generation.

Closes #25875 from dongjoon-hyun/SPARK-29196.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Modify the approach in `DataFrameNaFunctions.fillValue`: the new one uses `df.withColumns`, which only addresses the columns that need to be filled. After this change, no ambiguous fields are detected for a joined DataFrame.

### Why are the changes needed?

Before this change, when a joined table has the same field name from both original tables, fillna fails even if you specify a subset that does not include the 'ambiguous' fields.

```

scala> val df1 = Seq(("f1-1", "f2", null), ("f1-2", null, null), ("f1-3", "f2", "f3-1"), ("f1-4", "f2", "f3-1")).toDF("f1", "f2", "f3")

scala> val df2 = Seq(("f1-1", null, null), ("f1-2", "f2", null), ("f1-3", "f2", "f4-1")).toDF("f1", "f2", "f4")

scala> val df_join = df1.alias("df1").join(df2.alias("df2"), Seq("f1"), joinType="left_outer")

scala> df_join.na.fill("", cols=Seq("f4"))

org.apache.spark.sql.AnalysisException: Reference 'f2' is ambiguous, could be: df1.f2, df2.f2.;

```

### Does this PR introduce any user-facing change?

Yes, fillna operation will pass and give the right answer for a joined table.

### How was this patch tested?

Local test and newly added UT.

Closes #25768 from xuanyuanking/SPARK-29063.

Lead-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR allows a non-ASCII string as an exception message in Python 2 by explicitly encoding/decoding when the message is a `str`.

### Why are the changes needed?

Previously, PySpark would hang when a `UnicodeDecodeError` occurred, and the real exception could not be passed to the JVM side.

See the reproducer below:

```python
from pyspark.sql import SparkSession

def f():
    # Raise an exception whose message is a non-ASCII string
    raise Exception("中")

spark = SparkSession.builder.master('local').getOrCreate()
spark.sparkContext.parallelize([1]).map(lambda x: f()).count()
```

### Does this PR introduce any user-facing change?

Users should no longer observe hangs in similar cases.

### How was this patch tested?

Added a new test and checked manually.

This PR is based on #18324; credit should also go to dataknocker.

To make lint-python happy for python3, it also includes a followup fix for #25814.

Closes #25847 from advancedxy/python_exception_19926_and_21045.

Authored-by: Xianjin YE <advancedxy@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Replace the thread sleeps used to wait for commands to finish with a watcher that waits for the command itself to finish, and improve the error messages in the SecretsTestsSuite.

### Why are the changes needed?

Currently some of the Spark Kubernetes tests have race conditions with command execution, and the frequent use of `eventually` makes debugging test failures difficult.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing tests pass after removal of thread.sleep

Closes #25765 from holdenk/SPARK-28937SPARK-28936-improve-kubernetes-integration-tests.

Authored-by: Holden Karau <hkarau@apple.com>

Signed-off-by: Holden Karau <hkarau@apple.com>

### What changes were proposed in this pull request?

This PR allows Python `toLocalIterator` to prefetch the next partition while the current partition is being collected. The PR also adds a demo micro-benchmark in the examples directory, which we may or may not wish to keep.

### Why are the changes needed?

In https://issues.apache.org/jira/browse/SPARK-23961 / 5e79ae3b40 we changed PySpark to only pull one partition at a time. This is memory efficient, but if partitions take time to compute this can mean we're spending more time blocking.
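The trade-off described above can be sketched in plain Python. This is only an illustration of the prefetching idea, not PySpark's implementation: `iterate_partitions` and `compute_partition` are hypothetical names, and a single background thread stands in for the job that computes a partition.

```python
from concurrent.futures import ThreadPoolExecutor


def iterate_partitions(compute_partition, num_partitions, prefetch=False):
    """Yield elements one partition at a time.

    `compute_partition(i)` is a hypothetical stand-in for the (possibly
    slow) job that materializes partition i as a list.
    """
    if not prefetch:
        # Without prefetching, the consumer blocks while each partition
        # is computed.
        for i in range(num_partitions):
            yield from compute_partition(i)
        return

    if num_partitions == 0:
        return

    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(compute_partition, 0)
        for i in range(num_partitions):
            current = pending.result()
            if i + 1 < num_partitions:
                # Start computing the next partition before handing the
                # current one to the consumer.
                pending = pool.submit(compute_partition, i + 1)
            yield from current


partitions = [[1, 2], [3], [4, 5, 6]]
print(list(iterate_partitions(lambda i: partitions[i], len(partitions), prefetch=True)))
```

At most two partitions are held in memory at once (the one being consumed and the one being prefetched), so the extra memory cost is bounded while the compute time of the next partition overlaps with consumption of the current one.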

### Does this PR introduce any user-facing change?

A new param is added to toLocalIterator

### How was this patch tested?

A new unit test inside `test_rdd.py` checks the time at which the elements are evaluated. Another test, which checks that the results remain the same, is added to `test_dataframe.py`.

I also ran the micro-benchmark `prefetch.py` in the examples directory, which shows an improvement of ~40% in this specific use case.

> 19/08/16 17:11:36 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

> Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

> Setting default log level to "WARN".

> To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

> Running timers:

> [Stage 32:> (0 + 1) / 1]

> Results:

> Prefetch time: 100.228110831

> Regular time: 188.341721614

Closes #25515 from holdenk/SPARK-27659-allow-pyspark-tolocalitr-to-prefetch.

Authored-by: Holden Karau <hkarau@apple.com>

Signed-off-by: Holden Karau <hkarau@apple.com>

### What changes were proposed in this pull request?

This patch proposes to increase the timeout for waiting for executor(s) to come up in SparkContextSuite, as we observed these tests failing because the wait timed out.

### Why are the changes needed?

In some cases the CI build is extremely slow and requires 3x or more time to pass the test.

(https://issues.apache.org/jira/browse/SPARK-29139?focusedCommentId=16934034&page=com.atlassian.jira.plugin.system.issuetabpanels%3Acomment-tabpanel#comment-16934034)

Allocating a higher timeout wouldn't add latency, as the code checks the condition in a loop that sleeps 10 ms per iteration.
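A minimal sketch of why a larger timeout adds no latency in the common case, written in Python for illustration. The helper name `wait_until` and its parameters are assumptions for this example and do not correspond to the Spark test utilities; only the 10 ms polling interval comes from the description above.

```python
import time


def wait_until(condition, timeout_s, poll_interval_s=0.01):
    """Poll `condition` until it returns True or `timeout_s` elapses.

    Hypothetical helper: returns True as soon as the condition holds,
    so the timeout only bounds the worst case.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(poll_interval_s)
    # One final check so a condition that became true during the last
    # sleep is not reported as a timeout.
    return condition()


start = time.monotonic()
ok = wait_until(lambda: True, timeout_s=100.0)
elapsed = time.monotonic() - start
print(ok, elapsed < 1.0)
```

Because the loop exits on the first successful check, raising `timeout_s` only affects runs where the condition is slow to become true, such as on an overloaded CI machine.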

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A, as the case is unlikely to occur frequently.

Closes #25864 from HeartSaVioR/SPARK-29139.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR uses `java-version` instead of `version` for GitHub Action. More details:

204b974cf4ac25aeee3a

### Why are the changes needed?

The `version` property will not be supported after October 1, 2019.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #25866 from wangyum/java-version.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR is a followup of https://github.com/apache/spark/pull/25838 and proposes to create an actual test case under `src/test`. Previously, only a compile-only test existed at `src/main`.

Also, the wording in `SerializableConfiguration` was changed to describe only what it does (other words were removed).

### Why are the changes needed?

Test code should live in `src/test`, not `src/main`. Also, it should test basic functionality.

### Does this PR introduce any user-facing change?

No, except for a minor doc change.

### How was this patch tested?

Unit test was added.

Closes #25867 from HyukjinKwon/SPARK-29158.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently the checks in the Analyzer require that V2 Tables have BATCH_WRITE defined for all tables that have V1 Write fallbacks. This is confusing as these tables may not have the V2 writer interface implemented yet. This PR adds the V1_BATCH_WRITE table capability to these checks.

In addition, this allows V2 tables to leverage the V1 APIs for DataFrameWriter.save if they do extend the V1_BATCH_WRITE capability. This way, these tables can continue to receive partitioning information and also perform checks for the existence of tables, and support all SaveModes.

### Why are the changes needed?

Partitioned saves through DataFrame.write are otherwise broken for V2 tables that support the V1 write API.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

V1WriteFallbackSuite

Closes #25767 from brkyvz/bwcheck.

Authored-by: Burak Yavuz <brkyvz@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR propagates all the SQL configurations to executors in `SQLQueryTestSuite`. When the propagation is enabled in the tests, the potential bug below becomes apparent:

```

CREATE TABLE num_data (id int, val decimal(38,10)) USING parquet;

....

select sum(udf(CAST(null AS Decimal(38,0)))) from range(1,4): QueryOutput(select sum(udf(CAST(null AS Decimal(38,0)))) from range(1,4),struct<>,java.lang.IllegalArgumentException

[info] requirement failed: MutableProjection cannot use UnsafeRow for output data types: decimal(38,0)) (SQLQueryTestSuite.scala:380)

```

The root cause is that `InterpretedMutableProjection` has incorrect validation in the interpreter mode: `validExprs.forall { case (e, _) => UnsafeRow.isFixedLength(e.dataType) }`. This validation should be the same as the condition (`isMutable`) in `HashAggregate.supportsAggregate`: https://github.com/apache/spark/blob/master/sql/core/src/main/scala/org/apache/spark/sql/execution/aggregate/HashAggregateExec.scala#L1126

### Why are the changes needed?

Bug fixes.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added tests in `AggregationQuerySuite`

Closes #25831 from maropu/SPARK-29122.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This is a follow-up of the [review comment](https://github.com/apache/spark/pull/25706#discussion_r321923311).

This patch unifies the default wait time to 10 seconds, as it fits most UTs (which have smaller timeouts) and doesn't add latency since the wait returns as soon as the condition is met.

This patch doesn't touch the one which waits 100000 milliseconds (100 seconds), to avoid breaking anything unintentionally, though it is questionable whether we really need to wait for 100 seconds.

### Why are the changes needed?

It simplifies the test code and gets rid of various heuristic timeout values.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

CI build will test the patch, as it would be the best environment to test the patch (builds are running there).

Closes #25837 from HeartSaVioR/MINOR-unify-default-wait-time-for-wait-until-empty.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently the SerializableConfiguration, which makes the Hadoop configuration serializable, is private. This PR makes it public, with a developer annotation.

### Why are the changes needed?

Many data sources depend on the Hadoop configuration, which may have specific components on the driver. It is frequently used inside Spark's own DataSourceV2 implementations (Parquet, Json, Orc, etc.).

### Does this PR introduce any user-facing change?

This provides a new developer API.

### How was this patch tested?

No new tests are added as this only exposes a previously developed & thoroughly used + tested component.

Closes #25838 from holdenk/SPARK-29158-expose-serializableconfiguration-for-dsv2.

Authored-by: Holden Karau <hkarau@apple.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to use `tryWithResource` for ORC file.

### Why are the changes needed?

This is a follow-up to address https://github.com/apache/spark/pull/25006#discussion_r298788206 .

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #25842 from dongjoon-hyun/SPARK-28208.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This adds a new write API as proposed in the [SPIP to standardize logical plans](https://issues.apache.org/jira/browse/SPARK-23521). This new API:

* Uses clear verbs to execute writes, like `append`, `overwrite`, `create`, and `replace` that correspond to the new logical plans.

* Only creates v2 logical plans so the behavior is always consistent.

* Does not allow table configuration options for operations that cannot change table configuration. For example, `partitionedBy` can only be called when the writer executes `create` or `replace`.

Here are a few example uses of the new API:

```scala

df.writeTo("catalog.db.table").append()

df.writeTo("catalog.db.table").overwrite($"date" === "2019-06-01")

df.writeTo("catalog.db.table").overwritePartitions()

df.writeTo("catalog.db.table").asParquet.create()

df.writeTo("catalog.db.table").partitionedBy(days($"ts")).createOrReplace()

df.writeTo("catalog.db.table").using("abc").replace()

```

## How was this patch tested?

Added `DataFrameWriterV2Suite` that tests the new write API. Existing tests for v2 plans.

Closes #25681 from rdblue/SPARK-28612-add-data-frame-writer-v2.

Authored-by: Ryan Blue <blue@apache.org>

Signed-off-by: Burak Yavuz <brkyvz@gmail.com>

### What changes were proposed in this pull request?

Added a documentation reference for the USE database SQL command.

### Why are the changes needed?

To document the usage of the USE database command.

### Does this PR introduce any user-facing change?

It adds reference information for the USE database SQL command to the docs.

### How was this patch tested?

Attached the test snapshot.

Closes #25572 from shivusondur/jiraUSEDaBa1.

Lead-authored-by: shivusondur <shivusondur@gmail.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

This patch proposes to change the log level used for logging generated code when a compile error occurs in UT. This makes it easier to investigate compilation issues in generated code: currently the exception message reports a line number, but the generated code itself is not logged (in most UTs the log level threshold is at least WARN).

### Why are the changes needed?

This would help investigate compilation errors for generated code in UT.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #25835 from HeartSaVioR/MINOR-always-log-generated-code-on-fail-to-compile-in-unit-testing.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>