## What changes were proposed in this pull request?

It looks like updating the documentation from 0.8.0 to 0.12.1 was missed.

## How was this patch tested?

N/A

Closes #24504 from HyukjinKwon/SPARK-27276-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

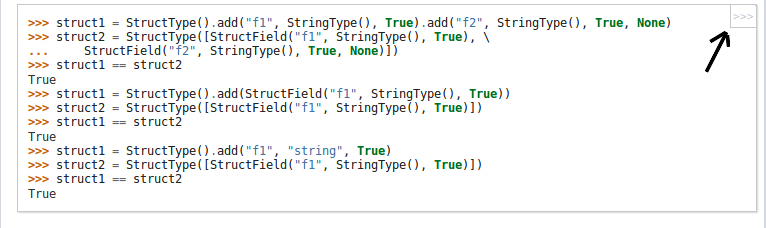

Add a non-intrusive button to the Python API documentation that removes the `>>>` prompts and the outputs from code examples, making the code easier to copy.

For example, the code snippet below from the documentation is difficult to copy because of the `>>>` prompts:

```
>>> l = [('Alice', 1)]
>>> spark.createDataFrame(l).collect()
[Row(_1='Alice', _2=1)]
```

After the copy button in the corner of the code block is pressed, it becomes the following, which is easier to copy:

```
l = [('Alice', 1)]
spark.createDataFrame(l).collect()
```
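The transformation the button performs can be sketched in a few lines of Python. This is only an illustration of the idea, not the actual `copybutton.js` (which is JavaScript and works on the rendered page); the helper name `strip_prompts` is hypothetical.

```python
def strip_prompts(snippet: str) -> str:
    """Keep only the code lines of a doctest-style snippet."""
    kept = []
    for line in snippet.splitlines():
        if line.startswith(">>> ") or line.startswith("... "):
            kept.append(line[4:])  # drop the 4-character prompt
        elif line in (">>>", "..."):
            kept.append("")  # bare prompt: an empty code line
        # any other line is interpreter output and is dropped
    return "\n".join(kept)


snippet = """>>> l = [('Alice', 1)]
>>> spark.createDataFrame(l).collect()
[Row(_1='Alice', _2=1)]"""

print(strip_prompts(snippet))
```

Lines that start with a prompt are kept without the prompt; everything else is treated as output and discarded.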

## File changes

Changes were made to `python/docs/conf.py` and `copybutton.js`, so only the Sphinx frontend is modified. No changes were made to the documentation itself, and the build process for the documentation remains the same.

`copybutton.js`: this JS snippet was taken from the official python.org documentation site.

## How was this patch tested?

NA

Closes #24456 from sangramga/copybutton.

Authored-by: sangramga <sangramga@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR aims to upgrade the Surefire plugin to 3.0.0-M3 to bring in [SUREFIRE-1613](https://issues.apache.org/jira/browse/SUREFIRE-1613).

## How was this patch tested?

Pass the Jenkins with the existing tests.

Closes #24501 from dongjoon-hyun/SPARK-27608.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Fix a bug in `UnivocityParser`: the `makeConverter` method didn't work correctly for `UserDefinedType`.

## How was this patch tested?

A test suite for UnivocityParser has been extended.

Closes #24496 from kalkolab/spark-27591.

Authored-by: Artem Kalchenko <artem.kalchenko@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

On a second look in comments, seems like the JobConf isn't needed anymore here. It was used inconsistently before, it seems, and I don't see any reason a Hadoop Job config is required here anyway.

## How was this patch tested?

Existing tests.

Closes #24491 from srowen/SPARK-26936.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/24144 . #24144 missed one case: when hash aggregate falls back to sort aggregate, the life cycle of the UDAF is: INIT -> UPDATE -> MERGE -> FINISH.

However, not all Hive UDAFs support this. A Hive UDAF knows the aggregation mode when creating the aggregation buffer, so it can create different buffers for different inputs: the original data or the aggregation buffer. Please see an example in the [sketches library](7f9e76e9e0/src/main/java/com/yahoo/sketches/hive/cpc/DataToSketchUDAF.java (L107)). The buffer for UPDATE may not support MERGE.

This PR updates the Hive UDAF adapter in Spark to support INIT -> UPDATE -> MERGE -> FINISH, by turning it into INIT -> UPDATE -> FINISH + INIT -> MERGE -> FINISH.
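The split into two phases can be illustrated with a toy model. Everything below is a hypothetical sketch (`ToyCountUdaf` and `aggregate_with_fallback` are made-up names, not Hive or Spark APIs); it only assumes, as described above, that a buffer created for UPDATE cannot be used for MERGE.

```python
class ToyCountUdaf:
    """Hypothetical stand-in for a Hive UDAF whose buffer depends on the mode."""

    def init(self, mode):
        # The buffer is tied to the mode it was created for.
        return {"mode": mode, "count": 0}

    def update(self, buf, row):
        assert buf["mode"] == "UPDATE", "buffer was not created for raw rows"
        buf["count"] += 1

    def merge(self, buf, partial):
        assert buf["mode"] == "MERGE", "buffer was not created for partial results"
        buf["count"] += partial

    def finish(self, buf):
        return buf["count"]


def aggregate_with_fallback(udaf, rows_before_fallback, partials_after_fallback):
    # Phase 1: INIT -> UPDATE -> FINISH on the raw rows seen so far.
    update_buf = udaf.init("UPDATE")
    for row in rows_before_fallback:
        udaf.update(update_buf, row)
    partial = udaf.finish(update_buf)

    # Phase 2: INIT -> MERGE -> FINISH with a fresh, merge-capable buffer.
    merge_buf = udaf.init("MERGE")
    udaf.merge(merge_buf, partial)
    for other in partials_after_fallback:
        udaf.merge(merge_buf, other)
    return udaf.finish(merge_buf)


result = aggregate_with_fallback(ToyCountUdaf(), ["a", "b", "c"], [2, 5])
print(result)
```

Neither buffer is ever asked to do something it was not created for, which is the property the single INIT -> UPDATE -> MERGE -> FINISH cycle could not guarantee.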

## How was this patch tested?

a new test case

Closes #24459 from cloud-fan/hive-udaf.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

If a file is too big (>2GB), we should fail fast and not try to read the file.
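A minimal sketch of the fail-fast idea, in plain Python rather than Spark's actual reader. The function name `read_binary_file` and the exact limit are assumptions for illustration; the point is that the size check uses file metadata only, so no bytes are read before the error is raised.

```python
import os
import tempfile

MAX_BYTES = 2**31 - 1  # assumed limit, just under 2 GiB


def read_binary_file(path, max_bytes=MAX_BYTES):
    size = os.path.getsize(path)  # metadata lookup only, no content is read
    if size > max_bytes:
        raise ValueError(f"{path} is {size} bytes, over the {max_bytes}-byte limit")
    with open(path, "rb") as f:
        return f.read()


# Demo with a tiny file and an artificially small limit.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"0123456789")
try:
    read_binary_file(tmp.name, max_bytes=5)
except ValueError as err:
    print("rejected:", err)
finally:
    os.remove(tmp.name)
```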

## How was this patch tested?

Closes #24483 from mengxr/SPARK-27588.

Authored-by: Xiangrui Meng <meng@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

This patch fixes a bug in which YARN file-related configurations are overwritten when there are values to assign from arguments. For example, if `--files` is specified as an argument, `spark.yarn.dist.files` is overwritten with the value of the argument. After this patch, the existing value and the new value are merged before being assigned to the configuration.
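The merge behavior can be sketched as follows. This is an illustrative Python helper (`merge_file_lists` is a hypothetical name, not the Spark code), assuming the values are comma-separated lists as in `spark.yarn.dist.files`.

```python
def merge_file_lists(existing, from_args):
    """Combine two comma-separated lists, keeping order and dropping empties."""
    parts = []
    for value in (existing, from_args):
        if value:
            parts.extend(p for p in value.split(",") if p)
    return ",".join(parts) if parts else None


conf_value = "file:/etc/spark2/conf/atlas-application.properties.yarn#atlas-application.properties"
arg_value = "file:///etc/spark2/conf/spark-defaults.conf.template"

print(merge_file_lists(conf_value, arg_value))
```

With the values used in the manual test, the configured entry comes first and the entry from the argument is appended, rather than replacing it.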

## How was this patch tested?

Added UT, and manually tested with below command:

> ./bin/spark-submit --verbose --files /etc/spark2/conf/spark-defaults.conf.template --master yarn-cluster --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.11-2.4.0.jar 10

where the spark conf file has

`spark.yarn.dist.files=file:/etc/spark2/conf/atlas-application.properties.yarn#atlas-application.properties`

```
Spark config:
...
(spark.yarn.dist.files,file:/etc/spark2/conf/atlas-application.properties.yarn#atlas-application.properties,file:///etc/spark2/conf/spark-defaults.conf.template)
...
```

Closes #24465 from HeartSaVioR/SPARK-27575.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

There is a MemorySink v2 already so v1 can be removed. In this PR I've removed it completely.

What this PR contains:

* V1 memory sink removal

* V2 memory sink renamed to become the only implementation

* Since DSv2 sends exceptions in a chained format (linking them with the cause field), I've made the Python side compliant

* Adapted all the tests

## How was this patch tested?

Existing unit tests.

Closes #24403 from gaborgsomogyi/SPARK-23014.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This PR avoids usage of reflective calls in Scala. It removes the import that suppresses the warnings and rewrites code in small ways to avoid accessing methods that aren't technically accessible.

## How was this patch tested?

Existing tests.

Closes #24463 from srowen/SPARK-27571.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

I want to get rid of as much use of `scala.language.existentials` as possible for 3.0. It's a complicated language feature that generates warnings unless this value is imported. It might even be on the way out of Scala: https://contributors.scala-lang.org/t/proposal-to-remove-existential-types-from-the-language/2785

For Spark, it comes up mostly where the code plays fast and loose with generic types, not the advanced situations you'll often see referenced where this feature is explained. For example, it comes up in cases where a function returns something like `(String, Class[_])`. Scala doesn't like matching this to any other instance of `(String, Class[_])` because doing so requires inferring the existence of some type that satisfies both. Seems obvious if the generic type is a wildcard, but, not technically something Scala likes to let you get away with.

This is a large PR, and it only gets rid of _most_ instances of `scala.language.existentials`. The change should be all compile-time and shouldn't affect APIs or logic.

Many of the changes simply touch up sloppiness about generic types, making the known correct value explicit in the code.

Some fixes involve being more explicit about the existence of generic types in methods. For instance, `def foo(arg: Class[_])` seems innocent enough but should really be declared `def foo[T](arg: Class[T])` to let Scala select and fix a single type when evaluating calls to `foo`.

For kind of surprising reasons, this comes up in places where code evaluates a tuple of things that involve a generic type, but is OK if the two parts of the tuple are evaluated separately.

One key change was altering `Utils.classForName(...): Class[_]` to the more correct `Utils.classForName[T](...): Class[T]`. This caused a number of small but positive changes to callers that otherwise had to cast the result.

In several tests, `Dataset[_]` was used where `DataFrame` seems to be the clear intent.

Finally, in a few cases in MLlib, the return type `this.type` was used where there are no subclasses of the class that uses it. This really isn't needed and causes issues for Scala reasoning about the return type. These are just changed to be concrete classes as return types.

After this change, we have only a few classes that still import `scala.language.existentials` (because modifying them would require extensive rewrites to fix) and no build warnings.

## How was this patch tested?

Existing tests.

Closes #24431 from srowen/SPARK-27536.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

Add user guide for binary file data source.

<img width="826" alt="Screen Shot 2019-04-28 at 10 21 26 PM" src="https://user-images.githubusercontent.com/829644/56877594-0488d300-6a04-11e9-9064-5047dfedd913.png">

## How was this patch tested?

Closes #24484 from mengxr/SPARK-27472.

Authored-by: Xiangrui Meng <meng@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

Currently `countDistinct("*")` doesn't work. An analysis exception is thrown:

```scala
val df = sql("select id % 100 from range(100000)")
df.select(countDistinct("*")).first()

org.apache.spark.sql.AnalysisException: Invalid usage of '*' in expression 'count';
```

Users need to use `expr`.

```scala
df.select(expr("count(distinct(*))")).first()
```

This limits some API usage like `df.select(count("*"), countDistinct("*"))`.

The PR takes the simplest fix, which lets the analyzer expand the star and resolve the `count` function.

## How was this patch tested?

Added unit test.

Closes #24482 from viirya/SPARK-27581.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

```
========================================================================
Running Scala style checks
========================================================================
[info] Checking Scala style using SBT with these profiles: -Phadoop-2.7 -Pkubernetes -Phive-thriftserver -Pkinesis-asl -Pyarn -Pspark-ganglia-lgpl -Phive -Pmesos
Scalastyle checks failed at following occurrences:
[error] /home/jenkins/workspace/SparkPullRequestBuilder/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/v2/FileScan.scala:29:0: org.apache.spark.sql.sources.Filter is in wrong order relative to org.apache.spark.sql.sources.v2.reader..
[error] Total time: 17 s, completed Apr 29, 2019 3:09:43 AM
```

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/104987/console

## How was this patch tested?

manual tests:

```
dev/scalastyle
```

Closes #24487 from wangyum/SPARK-27580.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

The method `QueryPlan.sameResult` is used for comparing logical plans in order to:

1. cache data in CacheManager

2. uncache data in CacheManager

3. Reuse subqueries

4. etc...

Currently the method `sameResult` always returns `false` for `BatchScanExec`. We should fix it by implementing `doCanonicalize` for the node.
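As a rough illustration of why canonicalization matters for `sameResult`, consider the toy model below. `ToyAttribute` and `ToyScan` are hypothetical classes, not Spark's `BatchScanExec`; the sketch only assumes that two otherwise identical plans can differ in cosmetic details such as generated expression IDs, which canonicalization normalizes before comparing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyAttribute:
    name: str
    expr_id: int  # unique per plan instance, so it differs between equal plans


@dataclass(frozen=True)
class ToyScan:
    table: str
    output: tuple

    def canonicalized(self):
        # Replace per-instance IDs with positional ones so that only the
        # structure of the plan is compared.
        normalized = tuple(
            ToyAttribute(attr.name, position)
            for position, attr in enumerate(self.output)
        )
        return ToyScan(self.table, normalized)

    def same_result(self, other):
        return self.canonicalized() == other.canonicalized()


first = ToyScan("t", (ToyAttribute("id", 7),))
second = ToyScan("t", (ToyAttribute("id", 42),))

print(first == second)            # plain equality sees different expr_ids
print(first.same_result(second))  # canonicalized comparison ignores them
```

Without a canonical form, the comparison falls back to plain equality and two scans of the same data never match, so cached data and subqueries are not reused.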

## How was this patch tested?

Unit test

Closes #24475 from gengliangwang/sameResultForV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

https://issues.apache.org/jira/browse/SPARK-25250 reports a bug in which a task that fails with `CommitDeniedException` gets retried many times.

This can happen when a stage has 2 task set managers, one zombie and one active. A task from the zombie TSM completes and commits to a central coordinator (assuming it's a file-writing task). Then the corresponding task from the active TSM fails with `CommitDeniedException`. `CommitDeniedException.countTowardsTaskFailures` is false, so the active TSM keeps retrying this task until the job finishes. This wastes a lot of resources.

#21131 first implemented the idea that a previously successful task from a zombie `TaskSetManager` can mark the task of the same partition as completed in the active `TaskSetManager`. Later, #23871 improved the implementation to cover a corner case in which the active `TaskSetManager` hasn't been created yet when a previous task succeeds.

However, #23871 has a bug and was reverted in #24359. In hindsight, #23871 is fragile because we need to sync the state between `DAGScheduler` and `TaskScheduler` about which partitions are completed.

This PR proposes a new fix:

1. When `DAGScheduler` gets a task success event from an earlier attempt, notify the `TaskSchedulerImpl` about it

2. When `TaskSchedulerImpl` knows a partition is already completed, ask the active `TaskSetManager` to mark the corresponding task as finished, if the task is not finished yet.

This fix covers the corner case, because:

1. If `DAGScheduler` gets the task completion event from zombie TSM before submitting the new stage attempt, then `DAGScheduler` knows that this partition is completed, and it will exclude this partition when creating task set for the new stage attempt. See `DAGScheduler.submitMissingTasks`

2. If `DAGScheduler` gets the task completion event from zombie TSM after submitting the new stage attempt, then the active TSM is already created.

Compared to the previous fix, the message loop becomes longer, so it's likely that the active task set manager has already retried the task multiple times. But this failure window won't be too big, and we want to avoid the worst case, in which the task is retried many times until the job finishes. So this solution is acceptable.
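The notification path described above can be modeled with two toy classes. This is a simplified sketch with hypothetical names (`ToyTaskSetManager`, `ToyTaskScheduler`, `notify_partition_completion`), not the actual scheduler code: when a success event arrives for a partition, the scheduler asks the active task set manager of that stage to mark the matching task as finished.

```python
class ToyTaskSetManager:
    def __init__(self, partitions):
        self.finished = {p: False for p in partitions}
        self.is_zombie = False

    def mark_partition_completed(self, partition):
        # Only act if this task set owns the partition and it is not finished yet.
        if partition in self.finished and not self.finished[partition]:
            self.finished[partition] = True


class ToyTaskScheduler:
    def __init__(self):
        self.managers_by_stage = {}

    def register(self, stage_id, manager):
        self.managers_by_stage.setdefault(stage_id, []).append(manager)

    def notify_partition_completion(self, stage_id, partition):
        # Called once the DAG scheduler sees a task success from any attempt.
        for manager in self.managers_by_stage.get(stage_id, []):
            if not manager.is_zombie:
                manager.mark_partition_completed(partition)


scheduler = ToyTaskScheduler()

zombie = ToyTaskSetManager([0, 1, 2])
zombie.is_zombie = True
active = ToyTaskSetManager([1, 2])  # partition 0 already finished earlier
scheduler.register(stage_id=3, manager=zombie)
scheduler.register(stage_id=3, manager=active)

# A task from the zombie attempt finishes partition 1.
zombie.finished[1] = True
scheduler.notify_partition_completion(stage_id=3, partition=1)

print(active.finished)
```

After the notification, the active manager no longer needs to run (or keep retrying) the task for partition 1.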

## How was this patch tested?

a new test case.

Closes #24375 from cloud-fan/fix2.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

A follow-up task from SPARK-25348. To save I/O cost, Spark shouldn't attempt to read the file if users didn't request the `content` column. For example:

```
spark.read.format("binaryFile").load(path).filter($"length" < 1000000).count()
```
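The optimization can be pictured with a small Python sketch. The function `scan_binary_file` and the way required columns are passed in are assumptions for illustration, not the data source's real interface: metadata columns come from a stat call, and the file is opened only when `content` is among the requested columns.

```python
import os
import tempfile


def scan_binary_file(path, required_columns):
    stat = os.stat(path)  # cheap metadata lookup
    row = {}
    if "path" in required_columns:
        row["path"] = path
    if "length" in required_columns:
        row["length"] = stat.st_size
    if "content" in required_columns:
        # The only branch that actually reads the file.
        with open(path, "rb") as f:
            row["content"] = f.read()
    return row


with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")

print(scan_binary_file(tmp.name, {"length"}))
print(scan_binary_file(tmp.name, {"length", "content"}))
os.remove(tmp.name)
```

A query like the one above, which filters on `length` and counts rows, would only take the metadata path.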

## How was this patch tested?

Unit test added.

Closes #24473 from WeichenXu123/SPARK-27534.

Lead-authored-by: Xiangrui Meng <meng@databricks.com>

Co-authored-by: WeichenXu <weichen.xu@databricks.com>

Signed-off-by: Xiangrui Meng <meng@databricks.com>

## What changes were proposed in this pull request?

Update `docs/building-spark.md` to reflect the required Maven version. Otherwise, a build with an older Maven fails:

```
mvn package -DskipTests=true
...
[INFO] --- maven-enforcer-plugin:3.0.0-M2:enforce (enforce-versions) spark-parent_2.12 ---
[WARNING] Rule 0: org.apache.maven.plugins.enforcer.RequireMavenVersion failed with message:
Detected Maven Version: 3.6.0 is not in the allowed range 3.6.1.
...
[ERROR] Failed to execute goal org.apache.maven.plugins:maven-enforcer-plugin:3.0.0-M2:enforce (enforce-versions) on project spark-parent_2.12: Some Enforcer rules have failed. Look above for specific messages explaining why the rule failed. -> [Help 1]
[ERROR]
...
```

## How was this patch tested?

Just tested that `https://archive.apache.org/dist/maven/maven-3/3.6.1/binaries/apache-maven-3.6.1-bin.zip` is available.

Closes #24477 from wangyum/SPARK-27467.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR addresses SPARK-23619: https://issues.apache.org/jira/browse/SPARK-23619

It adds additional comments indicating the default column names for the `explode` and `posexplode` functions in Spark-SQL.

Functions for which comments have been updated so far:

* stack

* inline

* explode

* posexplode

* explode_outer

* posexplode_outer

## How was this patch tested?

This is just a change in the comments. The package builds and tests successfully after the change.

Closes #23748 from jashgala/SPARK-23619.

Authored-by: Jash Gala <jashgala@amazon.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

some minor updates:

- the `Execute` action is missing the `name` message

- typo in SS document

- typo in SQLConf

## How was this patch tested?

N/A

Closes #24466 from uncleGen/minor-fix.

Authored-by: uncleGen <hustyugm@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Change spark-token-provider-kafka-0-10 dependency on spark-core to be provided

## How was this patch tested?

Ran existing unit tests

Closes #24384 from koertkuipers/feat-kafka-token-provider-fix-deps.

Authored-by: Koert Kuipers <koert@tresata.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

## What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/24012 , to add the corresponding capabilities for streaming.

## How was this patch tested?

existing tests

Closes #24129 from cloud-fan/capability.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

We can get the latest downloadable Spark versions from https://dist.apache.org/repos/dist/release/spark/

## How was this patch tested?

manually.

Closes #24454 from cloud-fan/test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Right now Kafka source v2 doesn't support null values. The issue is in org.apache.spark.sql.kafka010.KafkaRecordToUnsafeRowConverter.toUnsafeRow which doesn't handle null values.

## How was this patch tested?

add new unit tests

Closes #24441 from uncleGen/SPARK-27494.

Authored-by: uncleGen <hustyugm@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

When there are mismatched types among the case or else values in a case-when expression, the current error message makes it hard to figure out what the mismatch is and where it occurs.

This patch simply improves the error message for mismatched types for case when.

Before:

```scala
scala> spark.range(100).select(when('id === 1, array(struct('id * 123456789 + 123456789 as "x"))).otherwise(array(struct('id * 987654321 + 987654321 as "y"))))
org.apache.spark.sql.AnalysisException: cannot resolve 'CASE WHEN (`id` = CAST(1 AS BIGINT)) THEN array(named_struct('x', ((`id` * CAST(123456789 AS BIGINT)) + CAST(123456789 AS BIGINT)))) ELSE array(named_struct('y', ((`id` * CAST(987654321 AS BIGINT)) + CAST(987654321 AS BIGINT)))) END' due to data type mismatch: THEN and ELSE expressions should all be same type or coercible to a common type;;
```

After:

```scala
scala> spark.range(100).select(when('id === 1, array(struct('id * 123456789 + 123456789 as "x"))).otherwise(array(struct('id * 987654321 + 987654321 as "y"))))
org.apache.spark.sql.AnalysisException: cannot resolve 'CASE WHEN (`id` = CAST(1 AS BIGINT)) THEN array(named_struct('x', ((`id` * CAST(123456789 AS BIGINT)) + CAST(123456789 AS BIGINT)))) ELSE array(named_struct('y', ((`id` * CAST(987654321 AS BIGINT)) + CAST(987654321 AS BIGINT)))) END' due to data type mismatch: THEN and ELSE expressions should all be same type or coercible to a common type, got CASE WHEN ... THEN array<struct<x:bigint>> ELSE array<struct<y:bigint>> END;;
```

## How was this patch tested?

Added unit test.

Closes #24453 from viirya/SPARK-27551.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

```
========================================================================
Building Spark
========================================================================
[info] Building Spark (w/Hive 1.2.1) using SBT with these arguments: -Phadoop-3.2 -Pkubernetes -Phive-thriftserver -Pkinesis-asl -Pyarn -Pspark-ganglia-lgpl -Phive -Pmesos test:package streaming-kinesis-asl-assembly/assembly
```

`(w/Hive 1.2.1)` is incorrect when testing hadoop-3.2; it should be `(w/Hive 2.3.4)`.

This PR removes `(w/Hive 1.2.1)` in run-tests.py.

## How was this patch tested?

N/A

Closes #24451 from wangyum/run-tests-invalid-info.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

As discovered by users making use of this feature, there is a bug in the declaration of the `R_IMAGE_NAME` variable that causes the default name to not be properly set to `spark-r` but rather to just `-r`.

## How was this patch tested?

Verified that the image name for the R image is now appropriately populated in the integration test script via Bash debug output.

NB: The fact that this wasn't spotted earlier highlights that the K8S integration test suite currently does not have any tests for the R image; if it had, this would have failed integration testing in the original PR #23846.

Closes #24449 from rvesse/SPARK-26729.

Authored-by: Rob Vesse <rvesse@dotnetrdf.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Update `HiveExternalCatalogVersionsSuite` to test 2.4.2, as 2.4.1 will be removed from the mirror network soon.

## How was this patch tested?

N/A

Closes #24452 from cloud-fan/release.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This patch makes several test flakiness fixes.

## How was this patch tested?

N/A

Closes #24434 from gatorsmile/fixFlakyTest.

Lead-authored-by: gatorsmile <gatorsmile@gmail.com>

Co-authored-by: Hyukjin Kwon <gurwls223@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

Kind of related to https://github.com/gatorsmile/spark/pull/5 - let's update genjavadoc to see if it generates fewer spurious javadoc errors to begin with.

## How was this patch tested?

Existing docs build

Closes #24443 from srowen/genjavadoc013.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Adds the Spark ML Interaction transformer to PySpark

## How was this patch tested?

- Added Python doctest

- Ran the newly added example code

- Manually confirmed that a PipelineModel that contains an Interaction transformer can now be loaded in PySpark

Closes #24426 from Andrew-Crosby/pyspark-interaction-transformer.

Lead-authored-by: Andrew-Crosby <37139900+Andrew-Crosby@users.noreply.github.com>

Co-authored-by: Andrew-Crosby <andrew.crosby@autotrader.co.uk>

Signed-off-by: Bryan Cutler <cutlerb@gmail.com>

## What changes were proposed in this pull request?

This is a follow-up of https://github.com/apache/spark/pull/24395. This PR updates `plugins.sbt`, too.

## How was this patch tested?

Pass the Jenkins.

Closes #24444 from dongjoon-hyun/SPARK-ASM71-2.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

## What changes were proposed in this pull request?

Migrate JSON to File Data Source V2

## How was this patch tested?

Unit test

Closes #24058 from gengliangwang/jsonV2.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

In some corner cases, the `JobGenerator` thread (and some other `EventLoop` threads) may exit because of a fatal error, such as an OOM, while the Spark Streaming job keeps running without generating any batch jobs. Currently, we only report non-fatal errors.

```
override def run(): Unit = {
  try {
    while (!stopped.get) {
      val event = eventQueue.take()
      try {
        onReceive(event)
      } catch {
        case NonFatal(e) =>
          try {
            onError(e)
          } catch {
            case NonFatal(e) => logError("Unexpected error in " + name, e)
          }
      }
    }
  } catch {
    case ie: InterruptedException => // exit even if eventQueue is not empty
    case NonFatal(e) => logError("Unexpected error in " + name, e)
  }
}
```

In this PR, we double-check whether the event thread is alive when posting an event.
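The liveness check can be sketched with Python threads. `ToyEventLoop` is a hypothetical stand-in for the event loop, and the reaction to a dead thread (raising an error from `post`) is an assumption of this sketch, not necessarily what the patch does.

```python
import queue
import threading


class ToyEventLoop:
    def __init__(self, name, on_receive):
        self._name = name
        self._on_receive = on_receive
        self._events = queue.Queue()
        self._thread = threading.Thread(target=self._run, name=name, daemon=True)

    def start(self):
        self._thread.start()

    def _run(self):
        while True:
            event = self._events.get()
            if event == "FATAL":
                return  # simulates the thread dying from a fatal error
            self._on_receive(event)

    def post(self, event):
        # Refuse to queue events that nobody will ever process.
        if not self._thread.is_alive():
            raise RuntimeError(f"{self._name} has already stopped, cannot post {event!r}")
        self._events.put(event)

    def join(self, timeout=None):
        self._thread.join(timeout)


received = []
loop = ToyEventLoop("JobGenerator", received.append)
loop.start()
loop.post("batch-1")
loop.post("FATAL")
loop.join(timeout=5)

rejected = None
try:
    loop.post("batch-2")
except RuntimeError as err:
    rejected = str(err)

print(received, rejected)
```

Without the check in `post`, the last event would sit in the queue forever and the caller would have no sign that the loop is gone.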

## How was this patch tested?

existing unit tests

Closes #24400 from uncleGen/SPARK-27503.

Authored-by: uncleGen <hustyugm@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

hadoop-2.7 gets `commons-logging` version from `hive-metastore`:

```
[INFO] +- org.spark-project.hive:hive-metastore:jar:1.2.1.spark2:compile
[INFO] | +- com.jolbox:bonecp:jar:0.8.0.RELEASE:compile
[INFO] | +- commons-cli:commons-cli:jar:1.2:compile
[INFO] | +- commons-logging:commons-logging:jar:1.1.3:compile
```

But Hive removes `commons-logging` since [HIVE-12237(Hive 2.0.0)](https://issues.apache.org/jira/browse/HIVE-12237), so hadoop-3.2 gets `commons-logging` from `commons-httpclient`:

```
[INFO] +- commons-httpclient:commons-httpclient:jar:3.1:compile
[INFO] | \- commons-logging:commons-logging:jar:1.0.4:compile
```

Thus, we may hit a `LogConfigurationException`:

```
bin/spark-sql --conf spark.sql.hive.metastore.version=1.2.2 --conf spark.sql.hive.metastore.jars=file:///apache/hive-1.2.2-bin/lib/*
...
Caused by: org.apache.commons.logging.LogConfigurationException: Invalid class loader hierarchy. You have more than one version of 'org.apache.commons.logging.Log' visible, which is not allowed.
at org.apache.commons.logging.impl.LogFactoryImpl.getLogConstructor(LogFactoryImpl.java:385)
... 43 more
```

This PR upgrades `commons-logging` to 1.1.3 for hadoop-3.2 to fix this issue.

## How was this patch tested?

manual tests

Closes #24388 from wangyum/SPARK-27481.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR is a follow-up of https://github.com/apache/spark/pull/24286. As gatorsmile pointed out, the estimation for a column with null values is inaccurate as well.

```
> select key from test;
2
NULL
1
spark-sql> desc extended test key;
col_name key
data_type int
comment NULL
min 1
max 2
num_nulls 1
distinct_count 2
```

The distinct count should be distinct_count + 1 when the column contains null values.
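The arithmetic can be checked against the example above. This sketch assumes the number is used to estimate how many groups an aggregation on the column produces, where NULL forms a group of its own; the helper name `estimated_group_count` is hypothetical.

```python
def estimated_group_count(distinct_count, null_count):
    # NULL is not counted in distinct_count but still forms one extra group.
    return distinct_count + 1 if null_count > 0 else distinct_count


values = [2, None, 1]  # the `key` column from the example above

distinct_count = len({v for v in values if v is not None})
null_count = sum(v is None for v in values)
actual_groups = len(set(values))  # None counts as its own group here too

print(distinct_count, null_count, estimated_group_count(distinct_count, null_count), actual_groups)
```

For the example table, the statistics report 2 distinct values and 1 null, while grouping by `key` yields 3 groups.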

## How was this patch tested?

Existing tests & new UT added.

Closes #24436 from pengbo/aggregation_estimation.

Authored-by: pengbo <bo.peng1019@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Added new JSON benchmarks related to date and timestamp operations:

- Write date/timestamp to JSON files

- `to_json()` and `from_json()` for dates and timestamps

- Read date/timestamps from JSON files, and infer schemas

- Parse and infer schemas from `Dataset[String]`

Also, the existing JSON benchmarks are ported to the `NoOp` datasource.

Closes #24430 from MaxGekk/json-datetime-benchmark.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Added new CSV benchmarks related to date and timestamp operations:

- Write date/timestamp to CSV files

- `to_csv()` and `from_csv()` for dates and timestamps

- Read date/timestamps from CSV files, and infer schemas

- Parse and infer schemas from `Dataset[String]`

Also, the existing CSV benchmarks are ported to the `NoOp` datasource.

Closes #24429 from MaxGekk/csv-timestamp-benchmark.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

In this PR, I propose to use the `TIMESTAMP_MICROS` logical type for timestamps written to parquet files. The type matches Catalyst's `TimestampType` semantically, and stores microseconds since the epoch in the UTC time zone. This avoids converting microseconds to nanoseconds and to the Julian calendar. It also reduces the size of written parquet files.
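To make the size and conversion difference concrete, here is a small Python sketch of the two encodings. It assumes the representation being replaced is the 12-byte `INT96` layout (8 bytes of nanoseconds within the day followed by a 4-byte Julian day number), which this description does not spell out; the function names are hypothetical and this is not Spark's writer code.

```python
import struct
from datetime import datetime, timezone

JULIAN_DAY_OF_EPOCH = 2440588  # Julian day number of 1970-01-01
MICROS_PER_DAY = 86400 * 1_000_000
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_micros(ts):
    """Microseconds since the Unix epoch, computed with integer arithmetic."""
    delta = ts - EPOCH
    return (delta.days * 86400 + delta.seconds) * 1_000_000 + delta.microseconds


def encode_timestamp_micros(ts):
    # One little-endian 64-bit integer: the value is stored as-is.
    return struct.pack("<q", to_micros(ts))


def encode_int96(ts):
    # Needs a split into (Julian day, nanoseconds of day) first.
    days, micros_of_day = divmod(to_micros(ts), MICROS_PER_DAY)
    return struct.pack("<qi", micros_of_day * 1000, days + JULIAN_DAY_OF_EPOCH)


ts = datetime(2019, 4, 30, 12, 0, 0, 123456, tzinfo=timezone.utc)
print(len(encode_timestamp_micros(ts)), len(encode_int96(ts)))
```

The microsecond encoding is a plain 8-byte write of the value Catalyst already holds, while the other path needs a day/time split, a Julian offset, and a nanosecond conversion, and takes 12 bytes per value.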

## How was this patch tested?

By existing test suites.

Closes #24425 from MaxGekk/parquet-timestamp_micros.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Currently "EXPLAIN DESC TABLE" is special-cased and outputs a single-row relation, as follows.

Current output:

```sql
spark-sql> EXPLAIN DESCRIBE TABLE t;
== Physical Plan ==
*(1) Scan OneRowRelation[]
```

This is not consistent with how we handle explain processing for other commands. In this PR, the inconsistency is handled by removing the special handling for "describe table".

After change:

```sql
spark-sql> EXPLAIN DESC EXTENDED t
== Physical Plan ==
Execute DescribeTableCommand
+- DescribeTableCommand `t`, true
```

## How was this patch tested?

Added new tests in SQLQueryTestSuite.

Closes #24427 from dilipbiswal/describe_table_explain2.

Authored-by: Dilip Biswal <dbiswal@us.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Otherwise, tests that use tables from multiple sessions will run into issues if they access the same table. The correct location is in shared state.

A couple other minor test improvements.

cc gatorsmile srinathshankar

## How was this patch tested?

Existing unit tests.

Closes #24302 from ericl/test-conflicts.

Lead-authored-by: Eric Liang <ekl@databricks.com>

Co-authored-by: Eric Liang <ekhliang@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

In this PR, I propose to parse strings to timestamps with microsecond precision in the `to_timestamp()` function if the specified pattern contains a sub-pattern for fractions of a second.

Closes #24342

## How was this patch tested?

By `DateFunctionsSuite` and `DateExpressionsSuite`

Closes #24420 from MaxGekk/to_timestamp-microseconds3.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

This increases the minimum supported version of pyarrow to 0.12.1 and removes workarounds in pyspark that kept it compatible with prior versions. This means that users will need to have at least pyarrow 0.12.1 installed and available in the cluster, or an `ImportError` will be raised to indicate that an upgrade is needed.

## How was this patch tested?

Existing tests using:

Python 2.7.15, pyarrow 0.12.1, pandas 0.24.2

Python 3.6.7, pyarrow 0.12.1, pandas 0.24.0

Closes #24298 from BryanCutler/arrow-bump-min-pyarrow-SPARK-27276.

Authored-by: Bryan Cutler <cutlerb@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Added tests to check migration from `INT96` to `TIMESTAMP_MICROS` (`INT64`) for timestamps in parquet files. In particular:

- Append `TIMESTAMP_MICROS` timestamps to **existing parquet** files with `INT96` timestamps

- Append `TIMESTAMP_MICROS` timestamps to a table with `INT96` timestamps

- Append `INT96` to `TIMESTAMP_MICROS` timestamps in **parquet files**

- Append `INT96` to `TIMESTAMP_MICROS` timestamps in a **table**

Closes #24417 from MaxGekk/parquet-timestamp-int64-tests.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

## What changes were proposed in this pull request?

Add note about spark.executor.heartbeatInterval change to migration guide

See also https://github.com/apache/spark/pull/24329

## How was this patch tested?

N/A

Closes #24432 from srowen/SPARK-27419.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>