### What changes were proposed in this pull request?

This patch fixes an intermittent test failure in ThriftServerQueryTestSuite/ThriftServerWithSparkContextSuite, where a ConnectException is thrown when querying the Thrift server.

(https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/115646/testReport/)

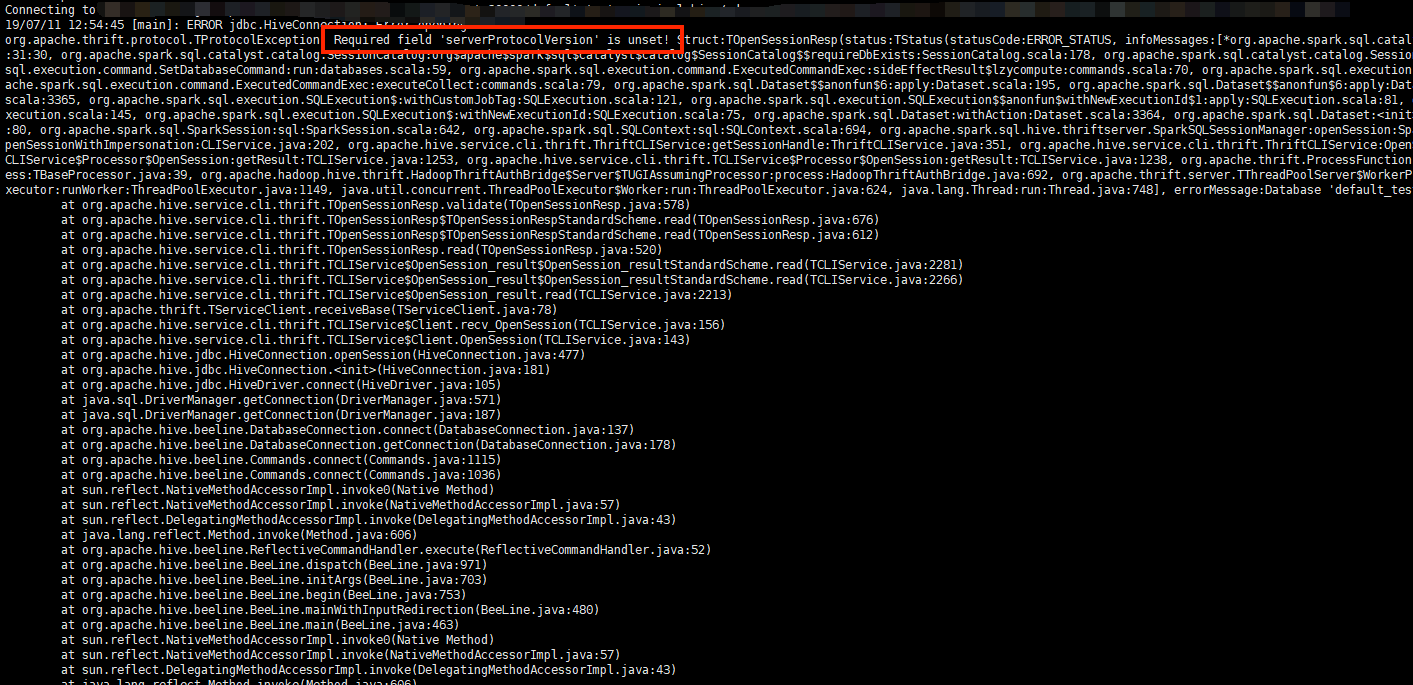

The relevant unit test log messages are as follows:

```
19/12/23 13:33:01.875 pool-1-thread-1 INFO AbstractService: Service:ThriftBinaryCLIService is started.
19/12/23 13:33:01.875 pool-1-thread-1 INFO AbstractService: Service:HiveServer2 is started.
...
19/12/23 13:33:01.888 pool-1-thread-1 INFO ThriftServerWithSparkContextSuite: HiveThriftServer2 started successfully
...
19/12/23 13:33:01.909 pool-1-thread-1-ScalaTest-running-ThriftServerWithSparkContextSuite INFO ThriftServerWithSparkContextSuite:
===== TEST OUTPUT FOR o.a.s.sql.hive.thriftserver.ThriftServerWithSparkContextSuite: 'SPARK-29911: Uncache cached tables when session closed' =====
...
19/12/23 13:33:02.017 pool-1-thread-1-ScalaTest-running-ThriftServerWithSparkContextSuite INFO Utils: Supplied authorities: localhost:15441
19/12/23 13:33:02.018 pool-1-thread-1-ScalaTest-running-ThriftServerWithSparkContextSuite INFO Utils: Resolved authority: localhost:15441
19/12/23 13:33:02.078 HiveServer2-Background-Pool: Thread-213 INFO BaseSessionStateBuilder$$anon$2: Optimization rule 'org.apache.spark.sql.catalyst.optimizer.ConvertToLocalRelation' is excluded from the optimizer.
19/12/23 13:33:02.078 HiveServer2-Background-Pool: Thread-213 INFO BaseSessionStateBuilder$$anon$2: Optimization rule 'org.apache.spark.sql.catalyst.optimizer.ConvertToLocalRelation' is excluded from the optimizer.
19/12/23 13:33:02.121 pool-1-thread-1-ScalaTest-running-ThriftServerWithSparkContextSuite WARN HiveConnection: Failed to connect to localhost:15441
19/12/23 13:33:02.124 pool-1-thread-1-ScalaTest-running-ThriftServerWithSparkContextSuite INFO ThriftServerWithSparkContextSuite:
===== FINISHED o.a.s.sql.hive.thriftserver.ThriftServerWithSparkContextSuite: 'SPARK-29911: Uncache cached tables when session closed' =====
19/12/23 13:33:02.143 Thread-35 INFO ThriftCLIService: Starting ThriftBinaryCLIService on port 15441 with 5...500 worker threads
19/12/23 13:33:02.327 pool-1-thread-1 INFO HiveServer2: Shutting down HiveServer2
19/12/23 13:33:02.328 pool-1-thread-1 INFO ThriftCLIService: Thrift server has stopped
```

(Here the error is logged as `WARN HiveConnection: Failed to connect to localhost:15441`; the actual stack trace can be seen in the Jenkins test summary.)

The cause of the test failure: Thrift(Binary|Http)CLIService prepares and launches the service asynchronously (in a new thread), but the suites do not wait for the launch to complete and start running tests right away, which results in a race condition.

This can be easily reproduced by adding an artificial sleep in `ThriftBinaryCLIService.run()` here:

ba3f6330dd/sql/hive-thriftserver/v2.3/src/main/java/org/apache/hive/service/cli/thrift/ThriftBinaryCLIService.java (L49)

(Note that the `sleep` should be added before the server socket is initialized, e.g. at line 57.)

This patch changes the test initialization logic to try executing a simple query, waiting until the service is available. The patch also refactors the code so that the change can be applied easily to both ThriftServerQueryTestSuite and ThriftServerWithSparkContextSuite.
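The wait-until-available idea can be illustrated with a minimal Python sketch. This is not the code in the patch (which lives in the Scala test suites); `FakeThriftServer`, `wait_until_ready`, the timeout and the polling interval are all hypothetical names and values chosen for illustration.

```python
import threading
import time


def wait_until_ready(probe, timeout_s=5.0, interval_s=0.05):
    """Call probe() repeatedly until it succeeds or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            return probe()
        except ConnectionError:
            # Give up once the deadline has passed; otherwise retry shortly.
            if time.monotonic() >= deadline:
                raise
            time.sleep(interval_s)


class FakeThriftServer:
    """Hypothetical stand-in: start() returns before the service listens."""

    def __init__(self, startup_delay_s):
        self._listening = threading.Event()
        self._startup_delay_s = startup_delay_s

    def start(self):
        # The "socket" becomes available on a background thread, which mimics
        # the asynchronous launch described above.
        threading.Timer(self._startup_delay_s, self._listening.set).start()

    def execute(self, query):
        if not self._listening.is_set():
            raise ConnectionError("Failed to connect")
        return "ok"


server = FakeThriftServer(startup_delay_s=0.2)
server.start()
# Without the retry loop, this first query would race with the startup thread.
result = wait_until_ready(lambda: server.execute("SELECT 1"))
```

Polling with a simple query checks the same thing the tests depend on (an accepted connection), rather than relying on a "started" log message that is emitted before the socket is bound.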

### Why are the changes needed?

This patch fixes the intermittent failure observed here:

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/115646/testReport/

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Artificially made the test fail consistently (by the approach described above), and confirmed the patch fixed the test.

Closes #27001 from HeartSaVioR/SPARK-30345.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

1. Revert "Preparing development version 3.0.1-SNAPSHOT": 56dcd79

2. Revert "Preparing Spark release v3.0.0-preview2-rc2": c216ef1

### Why are the changes needed?

The release preparation commits should not change master.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

manual test:

https://github.com/apache/spark/compare/5de5e46..wangyum:revert-master

Closes #26915 from wangyum/revert-master.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Reprocess all PostgreSQL-dialect-related PRs, listed in order:

- #25158: PostgreSQL integral division support [revert]

- #25170: UT changes for the integral division support [revert]

- #25458: Accept "true", "yes", "1", "false", "no", "0", and unique prefixes as input and trim input for the boolean data type. [revert]

- #25697: Combine below 2 feature tags into "spark.sql.dialect" [revert]

- #26112: Date subtraction support [keep the ANSI-compliant part]

- #26444: Rename config "spark.sql.ansi.enabled" to "spark.sql.dialect.spark.ansi.enabled" [revert]

- #26463: Cast to boolean support for PostgreSQL dialect [revert]

- #26584: Make the behavior of PostgreSQL dialect independent of ansi mode config [keep the ANSI-compliant part]

### Why are the changes needed?

As discussed in http://apache-spark-developers-list.1001551.n3.nabble.com/DISCUSS-PostgreSQL-dialect-td28417.html, we need to remove the PostgreSQL dialect from the code base for several reasons:

1. The current approach makes the codebase complicated and hard to maintain.

2. Fully migrating PostgreSQL workloads to Spark SQL is not our focus for now.

### Does this PR introduce any user-facing change?

Yes, the config `spark.sql.dialect` will be removed.

### How was this patch tested?

Existing UT.

Closes #26763 from xuanyuanking/SPARK-30125.

Lead-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Co-authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Improve the temporary functions test in SingleSessionSuite by verifying the result in a query.

### Why are the changes needed?

### Does this PR introduce any user-facing change?

### How was this patch tested?

Closes #26812 from leoluan2009/SPARK-30179.

Authored-by: Luan <xuluan@ebay.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In this PR, we propose to use the value of `spark.sql.sources.default` as the provider for `CREATE TABLE` syntax instead of `hive` in Spark 3.0.

To help the migration, we introduce a legacy conf `spark.sql.legacy.respectHiveDefaultProvider.enabled` and set its default to `false`.
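The provider choice described above can be pictured with a small Python sketch. It is an illustration only, not Spark's implementation: `resolve_provider` is a hypothetical helper, the conf is modelled as a plain dict, the default-source key is written as `spark.sql.sources.default`, and the assumption that setting the legacy conf to `true` restores the `hive` provider is inferred from the conf's name.

```python
SOURCES_DEFAULT = "spark.sql.sources.default"
LEGACY_FLAG = "spark.sql.legacy.respectHiveDefaultProvider.enabled"


def resolve_provider(using_clause, conf):
    """Pick the provider for CREATE TABLE (hypothetical helper)."""
    # An explicit USING clause always wins.
    if using_clause is not None:
        return using_clause.lower()
    # Assumption: the legacy flag restores the old behavior (hive provider).
    if conf.get(LEGACY_FLAG, "false") == "true":
        return "hive"
    # Otherwise fall back to the default data source (parquet by default).
    return conf.get(SOURCES_DEFAULT, "parquet")


explicit = resolve_provider("PARQUET", {})
implicit = resolve_provider(None, {})
legacy = resolve_provider(None, {LEGACY_FLAG: "true"})
custom = resolve_provider(None, {SOURCES_DEFAULT: "orc"})
```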

### Why are the changes needed?

1. Currently, the `CREATE TABLE` syntax uses the hive provider to create a table, while the `DataFrameWriter.saveAsTable` API uses the value of `spark.sql.sources.default` as the provider. It would be better to make them consistent.

2. Users may get confused in some cases. For example:

```
CREATE TABLE t1 (c1 INT) USING PARQUET;
CREATE TABLE t2 (c1 INT);
```

In these two DDLs, users may think that `t2` should also use parquet as the default provider, since Spark always advertises parquet as the default format. However, it's hive in this case.

On the other hand, if we omit the USING clause in a CTAS statement, we do pick parquet by default if `spark.sql.hive.convertCTAS=true`:

```
CREATE TABLE t3 USING PARQUET AS SELECT 1 AS VALUE;
CREATE TABLE t4 AS SELECT 1 AS VALUE;
```

And these two cases together can be really confusing.

3. Now, Spark SQL is very independent and popular. We do not need to be fully consistent with Hive's behavior.

### Does this PR introduce any user-facing change?

Yes. Before this PR, the `CREATE TABLE` syntax used the hive provider. Now it uses the value of `spark.sql.sources.default` as its provider.

### How was this patch tested?

Added tests in `DDLParserSuite` and `HiveDDLSuite`.

Closes #26736 from Ngone51/dev-create-table-using-parquet-by-default.

Lead-authored-by: wuyi <yi.wu@databricks.com>

Co-authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

## What changes were proposed in this pull request?

[HIVE-12063](https://issues.apache.org/jira/browse/HIVE-12063) improved Hive by padding decimal numbers with trailing zeros to the scale of the column. The following description is copied from HIVE-12063.

> HIVE-7373 was to address the problems of trimming tailing zeros by Hive, which caused many problems including treating 0.0, 0.00 and so on as 0, which has different precision/scale. Please refer to HIVE-7373 description. However, HIVE-7373 was reverted by HIVE-8745 while the underlying problems remained. HIVE-11835 was resolved recently to address one of the problems, where 0.0, 0.00, and so on cannot be read into decimal(1,1).

However, HIVE-11835 didn't address the problem of showing 0 in the query result for decimal values such as 0.0, 0.00, etc. This causes confusion, as 0.0 and 0.00 have a different precision/scale than 0.

The proposal here is to pad zeros for query result to the type's scale. This not only removes the confusion described above, but also aligns with many other DBs. Internal decimal number representation doesn't change, however.
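As an illustration of what padding to the type's scale means, here is a minimal Python sketch using the standard `decimal` module. It is not the code used by Hive or Spark; `pad_to_scale` is a hypothetical helper name.

```python
from decimal import Decimal, localcontext


def pad_to_scale(value, precision, scale):
    """Render value with exactly `scale` digits after the decimal point."""
    with localcontext() as ctx:
        # Allow as many significant digits as the declared precision.
        ctx.prec = precision
        quantum = Decimal(1).scaleb(-scale)  # e.g. 1E-18 for scale 18
        # The "f" format avoids scientific notation such as "0E-18".
        return format(Decimal(value).quantize(quantum), "f")


padded_one = pad_to_scale("1", 38, 18)
padded_zero = pad_to_scale("0", 3, 2)
```

Only the rendered string changes here; the numeric value of the decimal is the same before and after padding, which matches the note that the internal representation is not affected.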

**Spark SQL**:

```sql
// bin/spark-sql
spark-sql> select cast(1 as decimal(38, 18));
1
spark-sql>

// bin/beeline
0: jdbc:hive2://localhost:10000/default> select cast(1 as decimal(38, 18));
+----------------------------+--+
| CAST(1 AS DECIMAL(38,18)) |
+----------------------------+--+
| 1.000000000000000000 |
+----------------------------+--+

// bin/spark-shell
scala> spark.sql("select cast(1 as decimal(38, 18))").show(false)
+-------------------------+
|CAST(1 AS DECIMAL(38,18))|
+-------------------------+
|1.000000000000000000 |
+-------------------------+

// bin/pyspark
>>> spark.sql("select cast(1 as decimal(38, 18))").show()
+-------------------------+
|CAST(1 AS DECIMAL(38,18))|
+-------------------------+
| 1.000000000000000000|
+-------------------------+

// bin/sparkR
> showDF(sql("SELECT cast(1 as decimal(38, 18))"))
+-------------------------+
|CAST(1 AS DECIMAL(38,18))|
+-------------------------+
| 1.000000000000000000|
+-------------------------+
```

**PostgreSQL**:

```sql
postgres=# select cast(1 as decimal(38, 18));
numeric
----------------------
1.000000000000000000
(1 row)
```

**Presto**:

```sql
presto> select cast(1 as decimal(38, 18));
_col0
----------------------
1.000000000000000000
(1 row)
```

## How was this patch tested?

unit tests and manual test:

```sql
spark-sql> select cast(1 as decimal(38, 18));
1.000000000000000000
```

Spark SQL Upgrading Guide:

Closes #26697 from wangyum/SPARK-28461.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Support the JDBC/ODBC tab in the HistoryServer WebUI. Currently, the JDBC/ODBC tab for thrift server applications cannot be accessed from the History server. This PR makes 2 main changes:

1. Refactor existing thrift server listener to support kvstore

2. Add history server plugin for thrift server listener and tab.

### Why are the changes needed?

Users can access the Thriftserver tab from the History server for both running and finished applications.

### Does this PR introduce any user-facing change?

Support for JDBC/ODBC tab for the WEBUI from History server

### How was this patch tested?

Add UT and Manual tests

1. Start Thriftserver and Historyserver

```
sbin/stop-thriftserver.sh
sbin/stop-historyserver.sh
sbin/start-thriftserver.sh
sbin/start-historyserver.sh
```

2. Launch beeline

`bin/beeline -u jdbc:hive2://localhost:10000`

3. Run queries

Go to the JDBC/ODBC page of the WebUI from History server

Closes #26378 from shahidki31/ThriftKVStore.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

## What changes were proposed in this pull request?

[HIVE-12063](https://issues.apache.org/jira/browse/HIVE-12063) improved Hive by padding decimal numbers with trailing zeros to the scale of the column. The following description is copied from HIVE-12063.

> HIVE-7373 was to address the problems of trimming tailing zeros by Hive, which caused many problems including treating 0.0, 0.00 and so on as 0, which has different precision/scale. Please refer to HIVE-7373 description. However, HIVE-7373 was reverted by HIVE-8745 while the underlying problems remained. HIVE-11835 was resolved recently to address one of the problems, where 0.0, 0.00, and so on cannot be read into decimal(1,1).

However, HIVE-11835 didn't address the problem of showing 0 in the query result for decimal values such as 0.0, 0.00, etc. This causes confusion, as 0.0 and 0.00 have a different precision/scale than 0.

The proposal here is to pad zeros for query result to the type's scale. This not only removes the confusion described above, but also aligns with many other DBs. Internal decimal number representation doesn't change, however.

**Spark SQL**:

```sql
// bin/spark-sql
spark-sql> select cast(1 as decimal(38, 18));
1
spark-sql>

// bin/beeline
0: jdbc:hive2://localhost:10000/default> select cast(1 as decimal(38, 18));
+----------------------------+--+
| CAST(1 AS DECIMAL(38,18)) |
+----------------------------+--+
| 1.000000000000000000 |
+----------------------------+--+

// bin/spark-shell
scala> spark.sql("select cast(1 as decimal(38, 18))").show(false)
+-------------------------+
|CAST(1 AS DECIMAL(38,18))|
+-------------------------+
|1.000000000000000000 |
+-------------------------+

// bin/pyspark
>>> spark.sql("select cast(1 as decimal(38, 18))").show()
+-------------------------+
|CAST(1 AS DECIMAL(38,18))|
+-------------------------+
| 1.000000000000000000|
+-------------------------+

// bin/sparkR
> showDF(sql("SELECT cast(1 as decimal(38, 18))"))
+-------------------------+
|CAST(1 AS DECIMAL(38,18))|
+-------------------------+
| 1.000000000000000000|
+-------------------------+
```

**PostgreSQL**:

```sql
postgres=# select cast(1 as decimal(38, 18));
numeric
----------------------
1.000000000000000000
(1 row)
```

**Presto**:

```sql
presto> select cast(1 as decimal(38, 18));
_col0
----------------------
1.000000000000000000
(1 row)
```

## How was this patch tested?

unit tests and manual test:

```sql
spark-sql> select cast(1 as decimal(38, 18));
1.000000000000000000
```

Spark SQL Upgrading Guide:

Closes #25214 from wangyum/SPARK-28461.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

add document to address https://github.com/apache/spark/pull/26612#discussion_r349844327

### Why are the changes needed?

help people understand how to use --CONFIG_DIM

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #26661 from cloud-fan/test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This is a follow-up according to liancheng's advice.

- https://github.com/apache/spark/pull/26619#discussion_r349326090

### Why are the changes needed?

Previously, we chose the full version to be careful. As of today, the `Apache Hive 2.3` branch seems to have become stable.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Passes the compile combinations on GitHub Actions.

1. hadoop-2.7/hive-1.2/JDK8

2. hadoop-2.7/hive-2.3/JDK8

3. hadoop-3.2/hive-2.3/JDK8

4. hadoop-3.2/hive-2.3/JDK11

Also passes Jenkins with `hadoop-2.7` and `hadoop-3.2` for (1) and (4).

(2) and (3) are not ready in Jenkins.

Closes #26645 from dongjoon-hyun/SPARK-RENAME-HIVE-DIRECTORY.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This is a follow-up of #26543.

See https://github.com/apache/spark/pull/26543#discussion_r348934276

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT.

Closes #26628 from LantaoJin/SPARK-29911_FOLLOWUP.

Authored-by: LantaoJin <jinlantao@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Allow the SQL test files to specify different dimensions of config sets during testing. For example:

```
--CONFIG_DIM1 a=1
--CONFIG_DIM1 b=2,c=3
--CONFIG_DIM2 x=1
--CONFIG_DIM2 y=1,z=2
```

This example defines 2 config dimensions, and each dimension defines 2 config sets. We will run the queries 4 times:

1. a=1, x=1

2. a=1, y=1, z=2

3. b=2, c=3, x=1

4. b=2, c=3, y=1, z=2
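The four runs listed above are the Cartesian product of the config sets in each dimension. A minimal Python sketch of that expansion follows; it is not the parsing code in `SQLQueryTestSuite`, and `parse_config_dims` and `config_combinations` are hypothetical helper names.

```python
from itertools import product


def parse_config_dims(lines):
    """Group the config sets of each --CONFIG_DIM directive by dimension."""
    dims = {}
    for line in lines:
        directive, _, settings = line.strip().partition(" ")
        if not directive.startswith("--CONFIG_DIM"):
            continue
        # "b=2,c=3" becomes {"b": "2", "c": "3"}
        config_set = dict(pair.split("=", 1) for pair in settings.split(","))
        dims.setdefault(directive, []).append(config_set)
    return dims


def config_combinations(dims):
    """Take one config set per dimension and merge them, for every choice."""
    combos = []
    for choice in product(*dims.values()):
        merged = {}
        for config_set in choice:
            merged.update(config_set)
        combos.append(merged)
    return combos


header = [
    "--CONFIG_DIM1 a=1",
    "--CONFIG_DIM1 b=2,c=3",
    "--CONFIG_DIM2 x=1",
    "--CONFIG_DIM2 y=1,z=2",
]
combos = config_combinations(parse_config_dims(header))
```

With two dimensions of two config sets each, `combos` holds 2 x 2 = 4 merged config maps, in the same order as the numbered list.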

### Why are the changes needed?

Currently `SQLQueryTestSuite` takes a long time. This is because we run each test at least 3 times, to check with different codegen modes. This is not necessary for most of the tests, e.g. DESC TABLE. We should only check these codegen modes for certain tests.

With the --CONFIG_DIM directive, we can do things like: test different join operators (broadcast or shuffle join) X different codegen modes.

After reducing testing time, we should be able to run thrift server SQL tests with config settings.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

test only

Closes #26612 from cloud-fan/test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

A local temporary view is session-scoped: its lifetime is the lifetime of the session that created it. Cached data, however, is currently cross-session: its lifetime is the lifetime of the Spark application. This causes a memory leak if a local temporary view is cached in memory and the session is then closed.

In this PR, we uncache the cached data of local temporary views when the session is closed. This PR doesn't affect the cached data of global temp views and persisted views.
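The lifetime mismatch and the cleanup can be sketched in a few lines of Python. This is a toy model rather than Spark's cache manager; `SharedCache`, `Session` and their methods are hypothetical names.

```python
class SharedCache:
    """Application-wide cache shared by all sessions (hypothetical)."""

    def __init__(self):
        self.entries = {}

    def cache(self, key, data):
        self.entries[key] = data

    def uncache(self, key):
        self.entries.pop(key, None)


class Session:
    """A session that tracks the local temporary views it has cached."""

    def __init__(self, session_id, shared_cache):
        self.session_id = session_id
        self.shared_cache = shared_cache
        self.local_temp_views = set()

    def cache_local_temp_view(self, name, data):
        self.local_temp_views.add(name)
        self.shared_cache.cache((self.session_id, name), data)

    def close(self):
        # Release the entries owned by this session; without this step they
        # would stay in the shared cache for the life of the application.
        for name in self.local_temp_views:
            self.shared_cache.uncache((self.session_id, name))
        self.local_temp_views.clear()


shared = SharedCache()
shared.cache(("global_temp", "g1"), [1])  # not owned by any session
session = Session("s1", shared)
session.cache_local_temp_view("v1", [1])
session.close()
remaining_keys = list(shared.entries)
```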

How to reproduce:

1. Create a local temporary view v1.

2. Cache it in memory.

3. Close the session without dropping v1.

The application will hold the memory forever. In a long-running thrift server scenario, it is even worse.

```shell
0: jdbc:hive2://localhost:10000> CACHE TABLE testCacheTable AS SELECT 1;
CACHE TABLE testCacheTable AS SELECT 1;
+---------+--+
| Result |
+---------+--+
+---------+--+
No rows selected (1.498 seconds)
0: jdbc:hive2://localhost:10000> !close
!close
Closing: 0: jdbc:hive2://localhost:10000
0: jdbc:hive2://localhost:10000 (closed)> !connect 'jdbc:hive2://localhost:10000'
!connect 'jdbc:hive2://localhost:10000'
Connecting to jdbc:hive2://localhost:10000
Enter username for jdbc:hive2://localhost:10000:
lajin
Enter password for jdbc:hive2://localhost:10000:
***
Connected to: Spark SQL (version 3.0.0-SNAPSHOT)
Driver: Hive JDBC (version 1.2.1.spark2)
Transaction isolation: TRANSACTION_REPEATABLE_READ
1: jdbc:hive2://localhost:10000> select * from testCacheTable;
select * from testCacheTable;
Error: Error running query: org.apache.spark.sql.AnalysisException: Table or view not found: testCacheTable; line 1 pos 14;
'Project [*]
+- 'UnresolvedRelation [testCacheTable] (state=,code=0)
```

<img width="1047" alt="Screen Shot 2019-11-15 at 2 03 49 PM" src="https://user-images.githubusercontent.com/1853780/68923527-7ca8c180-07b9-11ea-9cc7-74f276c46840.png">

### Why are the changes needed?

Resolve memory leak for thrift server

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual test in UI storage tab

And added a UT

Closes #26543 from LantaoJin/SPARK-29911.

Authored-by: LantaoJin <jinlantao@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR adds guides for `ThriftServerQueryTestSuite`.

### Why are the changes needed?

Add guides

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

N/A

Closes #26587 from wangyum/SPARK-28527-FOLLOW-UP.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add 3 interval output types which are named as `SQL_STANDARD`, `ISO_8601`, `MULTI_UNITS`. And we add a new conf `spark.sql.dialect.intervalOutputStyle` for this. The `MULTI_UNITS` style displays the interval values in the former behavior and it is the default. The newly added `SQL_STANDARD`, `ISO_8601` styles can be found in the following table.

Style | conf | Year-Month Interval | Day-Time Interval | Mixed Interval
-- | -- | -- | -- | --
Format With Time Unit Designators | MULTI_UNITS | 1 year 2 mons | 1 days 2 hours 3 minutes 4.123456 seconds | interval 1 days 2 hours 3 minutes 4.123456 seconds
SQL STANDARD | SQL_STANDARD | 1-2 | 3 4:05:06 | -1-2 3 -4:05:06
ISO8601 Basic Format | ISO_8601 | P1Y2M | P3DT4H5M6S | P-1Y-2M3D-4H-5M-6S

### Why are the changes needed?

for ANSI SQL support

### Does this PR introduce any user-facing change?

Yes, interval output now has 3 output styles.

### How was this patch tested?

add new unit tests

cc cloud-fan maropu MaxGekk HyukjinKwon thanks.

Closes #26418 from yaooqinn/SPARK-29783.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Some of the columns in the Session info of the JDBC/ODBC tab in the Web UI are hard to understand.

Add tooltips for Start Time, Finish Time, Duration and Total Execution.

### Why are the changes needed?

To improve the understanding of the WebUI

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

manual test

Closes #26138 from PavithraRamachandran/JDBC_tooltip.

Authored-by: Pavithra Ramachandran <pavi.rams@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Rename config "spark.sql.ansi.enabled" to "spark.sql.dialect.spark.ansi.enabled"

### Why are the changes needed?

The relation between "spark.sql.ansi.enabled" and "spark.sql.dialect" is confusing, since the "PostgreSQL" dialect should contain the features of "spark.sql.ansi.enabled".

To make things clearer, we can rename the "spark.sql.ansi.enabled" to "spark.sql.dialect.spark.ansi.enabled", thus the option "spark.sql.dialect.spark.ansi.enabled" is only for Spark dialect.

For the casting and arithmetic operations, runtime exceptions should be thrown if "spark.sql.dialect" is "spark" and "spark.sql.dialect.spark.ansi.enabled" is true, or if "spark.sql.dialect" is "PostgreSQL".

### Does this PR introduce any user-facing change?

Yes, the config name changed.

### How was this patch tested?

Existing UT.

Closes #26444 from xuanyuanking/SPARK-29807.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR adds support for an `--import` directive to load queries from another test case in SQLQueryTestSuite.

This fix comes from cloud-fan's suggestion in https://github.com/apache/spark/pull/26479#discussion_r345086978

### Why are the changes needed?

This functionality might reduce duplicate test code in `SQLQueryTestSuite`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Run `SQLQueryTestSuite`.

Closes #26497 from maropu/ImportTests.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Provide an ellipsis in the Statement column, just like the description in the Jobs page.

### Why are the changes needed?

When a query is executed, the whole query statement is displayed no matter how long it is. Long statements cover a large portion of the page, and with multiple queries it is difficult to scroll down to view them all.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual

Closes #26364 from PavithraRamachandran/ellipse_JDBC.

Authored-by: Pavithra Ramachandran <pavi.rams@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Remove the leading "interval" in `CalendarInterval.toString`.

### Why are the changes needed?

Although it's allowed to have the "interval" prefix when casting a string to an interval, it's not recommended.

This is also consistent with pgsql:

```
cloud0fan=# select interval '1' day;
interval
----------
1 day
(1 row)
```

### Does this PR introduce any user-facing change?

Yes, when displaying a DataFrame with an interval type column, the result is different.

### How was this patch tested?

updated tests.

Closes #26401 from cloud-fan/interval.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Added UT for the classes `ThriftServerPage.scala` and `ThriftServerSessionPage.scala`

### Why are the changes needed?

Currently, there are no UTs for testing Thriftserver UI page

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

UT

Closes #26403 from shahidki31/ut.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The current checkstyle configuration does not cover all folders. To support multiple Hive versions, some Hive code is split into separate folders, and those folders should be checked too.

### Why are the changes needed?

Fix build bug

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

NO

Closes #26385 from AngersZhuuuu/SPARK-29742.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, the JDBC/ODBC tab in the WebUI doesn't support hiding tables. Other tabs in the web UI, such as Jobs, Stages, and SQL, support hiding tables (see https://github.com/apache/spark/pull/22592).

This PR adds support for hiding tables in the JDBC/ODBC tab as well.

### Why are the changes needed?

The Spark UI needs hide and show controls for tables that contain many records. Sometimes you do not care about the records of one table and just want to see the next table, but you have to scroll for a long time to reach it.

### Does this PR introduce any user-facing change?

No, except for the support for hiding tables.

### How was this patch tested?

Manually tested

Closes #26353 from shahidki31/hideTable.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

To push the built jars to the Maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version names to `3.0.0-preview`

* Update the SparkR version number check logic to allow JVM versions like `3.0.0-preview`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release passes.

### Why are the changes needed?

To make the Maven release repository accept the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

### What changes were proposed in this pull request?

This patch fixes the issue that external listeners are not initialized properly when `spark.sql.hive.metastore.jars` is set to either "maven" or a custom list of jars.

("builtin" is not affected: all jars in the Spark classloader are also available in the separate classloader.)

The culprit is lazy initialization (a lazy val or a passed builder function) combined with the thread context classloader. HiveClient leverages IsolatedClientLoader to load Hive and the relevant libraries properly; to avoid interfering with the Spark classpath, it uses a separate classloader by way of the thread context classloader.

But there is a problematic case: SessionState is initialized while HiveClient has switched the thread context classloader from the Spark classloader to the Hive isolated one, so streaming query listeners are loaded from the switched classloader during SessionState initialization.

This patch forces SessionState to be initialized in SparkSQLEnv to avoid this case.
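The interaction between lazy initialization and thread-scoped context can be shown with a Python analogy. This is only a model of the mechanism, not the JVM classloader logic in Spark: `context_loader`, `isolated_loader`, `Env` and the loader names are hypothetical stand-ins.

```python
import threading
from contextlib import contextmanager

_thread_state = threading.local()


def context_loader():
    """Return the loader currently installed for this thread."""
    return getattr(_thread_state, "loader", "spark-loader")


@contextmanager
def isolated_loader():
    """Temporarily switch the thread's loader, as an isolated client would."""
    previous = context_loader()
    _thread_state.loader = "hive-isolated-loader"
    try:
        yield
    finally:
        _thread_state.loader = previous


class Env:
    """Holds a lazily built session state (hypothetical names throughout)."""

    def __init__(self):
        self._session_state = None

    def session_state(self):
        # The state is built on first access and remembers whichever loader
        # happened to be installed at that moment.
        if self._session_state is None:
            self._session_state = {"listener_loader": context_loader()}
        return self._session_state


# Lazy: the first access happens while the loader is switched.
lazy_env = Env()
with isolated_loader():
    lazy_loader = lazy_env.session_state()["listener_loader"]

# Eager: initialization is forced before the loader is switched.
eager_env = Env()
eager_env.session_state()
with isolated_loader():
    eager_loader = eager_env.session_state()["listener_loader"]
```

In this model the lazily built state captures the isolated loader, while the eagerly built one keeps the original loader, which corresponds to forcing the initialization up front.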

### Why are the changes needed?

ClassNotFoundException could occur in spark-sql with specific configuration, as explained above.

### Does this PR introduce any user-facing change?

No, as I don't think end users assume the classloader of external listeners contains only the jars for the Hive client.

### How was this patch tested?

New UT added which fails on master branch and passes with the patch.

The error message on the master branch when running the UT:

```

java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':;

org.apache.spark.sql.AnalysisException: java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':;

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:109)

at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:221)

at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:147)

at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:137)

at org.apache.spark.sql.hive.thriftserver.SparkSQLEnv$.init(SparkSQLEnv.scala:59)

at org.apache.spark.sql.hive.thriftserver.SparkSQLEnvSuite.$anonfun$new$2(SparkSQLEnvSuite.scala:44)

at org.apache.spark.sql.hive.thriftserver.SparkSQLEnvSuite.withSystemProperties(SparkSQLEnvSuite.scala:61)

at org.apache.spark.sql.hive.thriftserver.SparkSQLEnvSuite.$anonfun$new$1(SparkSQLEnvSuite.scala:43)

at scala.runtime.java8.JFunction0$mcV$sp.apply(JFunction0$mcV$sp.java:23)

at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85)

at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83)

at org.scalatest.OutcomeOf$.outcomeOf(OutcomeOf.scala:104)

at org.scalatest.Transformer.apply(Transformer.scala:22)

at org.scalatest.Transformer.apply(Transformer.scala:20)

at org.scalatest.FunSuiteLike$$anon$1.apply(FunSuiteLike.scala:186)

at org.apache.spark.SparkFunSuite.withFixture(SparkFunSuite.scala:149)

at org.scalatest.FunSuiteLike.invokeWithFixture$1(FunSuiteLike.scala:184)

at org.scalatest.FunSuiteLike.$anonfun$runTest$1(FunSuiteLike.scala:196)

at org.scalatest.SuperEngine.runTestImpl(Engine.scala:286)

at org.scalatest.FunSuiteLike.runTest(FunSuiteLike.scala:196)

at org.scalatest.FunSuiteLike.runTest$(FunSuiteLike.scala:178)

at org.apache.spark.SparkFunSuite.org$scalatest$BeforeAndAfterEach$$super$runTest(SparkFunSuite.scala:56)

at org.scalatest.BeforeAndAfterEach.runTest(BeforeAndAfterEach.scala:221)

at org.scalatest.BeforeAndAfterEach.runTest$(BeforeAndAfterEach.scala:214)

at org.apache.spark.SparkFunSuite.runTest(SparkFunSuite.scala:56)

at org.scalatest.FunSuiteLike.$anonfun$runTests$1(FunSuiteLike.scala:229)

at org.scalatest.SuperEngine.$anonfun$runTestsInBranch$1(Engine.scala:393)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.scalatest.SuperEngine.traverseSubNodes$1(Engine.scala:381)

at org.scalatest.SuperEngine.runTestsInBranch(Engine.scala:376)

at org.scalatest.SuperEngine.runTestsImpl(Engine.scala:458)

at org.scalatest.FunSuiteLike.runTests(FunSuiteLike.scala:229)

at org.scalatest.FunSuiteLike.runTests$(FunSuiteLike.scala:228)

at org.scalatest.FunSuite.runTests(FunSuite.scala:1560)

at org.scalatest.Suite.run(Suite.scala:1124)

at org.scalatest.Suite.run$(Suite.scala:1106)

at org.scalatest.FunSuite.org$scalatest$FunSuiteLike$$super$run(FunSuite.scala:1560)

at org.scalatest.FunSuiteLike.$anonfun$run$1(FunSuiteLike.scala:233)

at org.scalatest.SuperEngine.runImpl(Engine.scala:518)

at org.scalatest.FunSuiteLike.run(FunSuiteLike.scala:233)

at org.scalatest.FunSuiteLike.run$(FunSuiteLike.scala:232)

at org.apache.spark.SparkFunSuite.org$scalatest$BeforeAndAfterAll$$super$run(SparkFunSuite.scala:56)

at org.scalatest.BeforeAndAfterAll.liftedTree1$1(BeforeAndAfterAll.scala:213)

at org.scalatest.BeforeAndAfterAll.run(BeforeAndAfterAll.scala:210)

at org.scalatest.BeforeAndAfterAll.run$(BeforeAndAfterAll.scala:208)

at org.apache.spark.SparkFunSuite.run(SparkFunSuite.scala:56)

at org.scalatest.tools.SuiteRunner.run(SuiteRunner.scala:45)

at org.scalatest.tools.Runner$.$anonfun$doRunRunRunDaDoRunRun$13(Runner.scala:1349)

at org.scalatest.tools.Runner$.$anonfun$doRunRunRunDaDoRunRun$13$adapted(Runner.scala:1343)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.scalatest.tools.Runner$.doRunRunRunDaDoRunRun(Runner.scala:1343)

at org.scalatest.tools.Runner$.$anonfun$runOptionallyWithPassFailReporter$24(Runner.scala:1033)

at org.scalatest.tools.Runner$.$anonfun$runOptionallyWithPassFailReporter$24$adapted(Runner.scala:1011)

at org.scalatest.tools.Runner$.withClassLoaderAndDispatchReporter(Runner.scala:1509)

at org.scalatest.tools.Runner$.runOptionallyWithPassFailReporter(Runner.scala:1011)

at org.scalatest.tools.Runner$.run(Runner.scala:850)

at org.scalatest.tools.Runner.run(Runner.scala)

at org.jetbrains.plugins.scala.testingSupport.scalaTest.ScalaTestRunner.runScalaTest2(ScalaTestRunner.java:133)

at org.jetbrains.plugins.scala.testingSupport.scalaTest.ScalaTestRunner.main(ScalaTestRunner.java:27)

Caused by: java.lang.IllegalArgumentException: Error while instantiating 'org.apache.spark.sql.hive.HiveSessionStateBuilder':

at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1054)

at org.apache.spark.sql.SparkSession.$anonfun$sessionState$2(SparkSession.scala:156)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.SparkSession.sessionState$lzycompute(SparkSession.scala:154)

at org.apache.spark.sql.SparkSession.sessionState(SparkSession.scala:151)

at org.apache.spark.sql.SparkSession.$anonfun$new$3(SparkSession.scala:105)

at scala.Option.map(Option.scala:230)

at org.apache.spark.sql.SparkSession.$anonfun$new$1(SparkSession.scala:105)

at org.apache.spark.sql.internal.SQLConf$.get(SQLConf.scala:164)

at org.apache.spark.sql.hive.client.HiveClientImpl.newState(HiveClientImpl.scala:183)

at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:127)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:300)

at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:421)

at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:314)

at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:68)

at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:67)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$databaseExists$1(HiveExternalCatalog.scala:221)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:99)

... 58 more

Caused by: java.lang.ClassNotFoundException: test.custom.listener.DummyQueryExecutionListener

at java.net.URLClassLoader.findClass(URLClassLoader.java:382)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at org.apache.spark.util.Utils$.classForName(Utils.scala:206)

at org.apache.spark.util.Utils$.$anonfun$loadExtensions$1(Utils.scala:2746)

at scala.collection.TraversableLike.$anonfun$flatMap$1(TraversableLike.scala:245)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at scala.collection.TraversableLike.flatMap(TraversableLike.scala:245)

at scala.collection.TraversableLike.flatMap$(TraversableLike.scala:242)

at scala.collection.AbstractTraversable.flatMap(Traversable.scala:108)

at org.apache.spark.util.Utils$.loadExtensions(Utils.scala:2744)

at org.apache.spark.sql.util.ExecutionListenerManager.$anonfun$new$1(QueryExecutionListener.scala:83)

at org.apache.spark.sql.util.ExecutionListenerManager.$anonfun$new$1$adapted(QueryExecutionListener.scala:82)

at scala.Option.foreach(Option.scala:407)

at org.apache.spark.sql.util.ExecutionListenerManager.<init>(QueryExecutionListener.scala:82)

at org.apache.spark.sql.internal.BaseSessionStateBuilder.$anonfun$listenerManager$2(BaseSessionStateBuilder.scala:293)

at scala.Option.getOrElse(Option.scala:189)

at org.apache.spark.sql.internal.BaseSessionStateBuilder.listenerManager(BaseSessionStateBuilder.scala:293)

at org.apache.spark.sql.internal.BaseSessionStateBuilder.build(BaseSessionStateBuilder.scala:320)

at org.apache.spark.sql.SparkSession$.org$apache$spark$sql$SparkSession$$instantiateSessionState(SparkSession.scala:1051)

... 80 more

```

Closes #26258 from HeartSaVioR/SPARK-29604.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

To push the built jars to maven release repository, we need to remove the 'SNAPSHOT' tag from the version name.

Made the following changes in this PR:

* Update all the `3.0.0-SNAPSHOT` version name to `3.0.0-preview`

* Update the PySpark version from `3.0.0.dev0` to `3.0.0`

**Please note those changes were generated by the release script in the past, but this time since we manually add tags on master branch, we need to manually apply those changes too.**

We shall revert the changes after the 3.0.0-preview release passes.

### Why are the changes needed?

So that the Maven release repository accepts the built jars.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #26243 from jiangxb1987/3.0.0-preview-prepare.

Lead-authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Co-authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

This PR extends pagination support to the session table in `JDBC/ODBC`.

### Why are the changes needed?

Sometimes many clients are connected and a lot of session info is shown in the session tab.

Paginating the table makes it easier to view.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually verified.

After pr:

<img width="1440" alt="Screen Shot 2019-10-25 at 4 19 27 PM" src="https://user-images.githubusercontent.com/46485123/67555133-50ae9900-f743-11e9-8724-9624a691f232.png">

<img width="1434" alt="Screen Shot 2019-10-25 at 4 19 38 PM" src="https://user-images.githubusercontent.com/46485123/67555165-5906d400-f743-11e9-819e-73f86a333dd3.png">

Closes #26253 from AngersZhuuuu/SPARK-29599.

Lead-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

In the PR https://github.com/apache/spark/pull/26215, we supported pagination for sqlstats table in JDBC/ODBC server page. In this PR, we are extending the support of pagination to sqlstats session table by making use of existing pagination classes in https://github.com/apache/spark/pull/26215.

### Why are the changes needed?

Support pagination for the sqlsessionstats table in the JDBC/ODBC server page of the Web UI. It will be easier for users to analyse the table, and it may fix potential issues such as OOM while loading the page, similar to those that occurred on the SQL page (refer #22645).

### Does this PR introduce any user-facing change?

There will be no change in the sqlsessionstats table in the JDBC/ODBC server page except pagination support.

### How was this patch tested?

Manually verified.

Before:

After:

Closes #26246 from shahidki31/SPARK_29589.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

Supports pagination for the SQL Statistics table in the JDBC/ODBC tab using the existing Spark pagination framework.

### Why are the changes needed?

It will be easier for users to analyse the table, and it may fix potential issues such as OOM while loading the page, similar to those that occurred on the SQL page (refer https://github.com/apache/spark/pull/22645).

### Does this PR introduce any user-facing change?

There will be no change in the `SQLStatistics` table in the JDBC/ODBC server page except pagination support.

### How was this patch tested?

Manually verified.

Before PR:

After PR:

Closes #26215 from shahidki31/jdbcPagination.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR tests `ThriftServerQueryTestSuite` in an asynchronous way.

### Why are the changes needed?

The default value of `spark.sql.hive.thriftServer.async` is `true`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

```

build/sbt "hive-thriftserver/test-only *.ThriftServerQueryTestSuite" -Phive-thriftserver

build/mvn -Dtest=none -DwildcardSuites=org.apache.spark.sql.hive.thriftserver.ThriftServerQueryTestSuite test -Phive-thriftserver

```

Closes #26172 from wangyum/SPARK-29516.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

This PR refines the code in ThriftServerQueryTestSuite.blackList to reuse the black list of SQLQueryTestSuite instead of duplicating all test cases from SQLQueryTestSuite.blackList.

### Why are the changes needed?

To reduce code duplication.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

N/A

Closes #26188 from fuwhu/SPARK-TBD.

Authored-by: fuwhu <bestwwg@163.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

When adaptive execution is enabled, Spark users connected through JDBC always get an adaptive execution error, whatever the underlying root cause is. This is very confusing, and we have to check the driver log to find out why.

```shell

0: jdbc:hive2://localhost:10000> SELECT * FROM testData join testData2 ON key = v;

SELECT * FROM testData join testData2 ON key = v;

Error: Error running query: org.apache.spark.SparkException: Adaptive execution failed due to stage materialization failures. (state=,code=0)

0: jdbc:hive2://localhost:10000>

```

For example, when a job queried from JDBC fails due to an HDFS missing block, the user still gets the error message `Adaptive execution failed due to stage materialization failures`.

The easiest way to reproduce this is to change the code of `AdaptiveSparkPlanExec` so that it throws an exception when it encounters `StageSuccess`.

```scala

case class AdaptiveSparkPlanExec(

events.drainTo(rem)

(Seq(nextMsg) ++ rem.asScala).foreach {

case StageSuccess(stage, res) =>

// stage.resultOption = Some(res)

val ex = new SparkException("Wrapper Exception",

new IllegalArgumentException("Root cause is IllegalArgumentException for Test"))

errors.append(

new SparkException(s"Failed to materialize query stage: ${stage.treeString}", ex))

case StageFailure(stage, ex) =>

errors.append(

new SparkException(s"Failed to materialize query stage: ${stage.treeString}", ex))

```

### Why are the changes needed?

To make the error message more user-friendly and more useful for queries from JDBC.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually test query:

```shell

0: jdbc:hive2://localhost:10000> CREATE TEMPORARY VIEW testData (key, value) AS SELECT explode(array(1, 2, 3, 4)), cast(substring(rand(), 3, 4) as string);

CREATE TEMPORARY VIEW testData (key, value) AS SELECT explode(array(1, 2, 3, 4)), cast(substring(rand(), 3, 4) as string);

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.225 seconds)

0: jdbc:hive2://localhost:10000> CREATE TEMPORARY VIEW testData2 (k, v) AS SELECT explode(array(1, 1, 2, 2)), cast(substring(rand(), 3, 4) as int);

CREATE TEMPORARY VIEW testData2 (k, v) AS SELECT explode(array(1, 1, 2, 2)), cast(substring(rand(), 3, 4) as int);

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.043 seconds)

```

Before:

```shell

0: jdbc:hive2://localhost:10000> SELECT * FROM testData join testData2 ON key = v;

SELECT * FROM testData join testData2 ON key = v;

Error: Error running query: org.apache.spark.SparkException: Adaptive execution failed due to stage materialization failures. (state=,code=0)

0: jdbc:hive2://localhost:10000>

```

After:

```shell

0: jdbc:hive2://localhost:10000> SELECT * FROM testData join testData2 ON key = v;

SELECT * FROM testData join testData2 ON key = v;

Error: Error running query: java.lang.IllegalArgumentException: Root cause is IllegalArgumentException for Test (state=,code=0)

0: jdbc:hive2://localhost:10000>

```

Closes #25960 from LantaoJin/SPARK-29283.

Authored-by: lajin <lajin@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Support FETCH_PRIOR fetching in Thriftserver, and report the correct fetch start offset in TFetchResultsResp.results.startRowOffset.

The semantics of FETCH_PRIOR are as follows. Assuming the previous fetch returned a block of rows from offsets [10, 20):

* calling FETCH_PRIOR(maxRows=5) will scroll back and return rows [5, 10)

* calling FETCH_PRIOR(maxRows=10) again, will scroll back, but can't go earlier than 0. It will nevertheless return 10 rows, returning rows [0, 10) (overlapping with the previous fetch)

* calling FETCH_PRIOR(maxRows=4) again will again return rows starting from offset 0 - [0, 4)

* calling FETCH_NEXT(maxRows=6) after that will move the cursor forward and return rows [4, 10)
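
The scrolling rules above can be modelled with a small cursor that remembers the last returned block. This is only a sketch of the described semantics, not the Thriftserver implementation; `FetchCursorSketch`, `Block`, `Cursor` and the `total` row count are hypothetical names introduced for illustration.

```scala
object FetchCursorSketch {
  // Half-open row range [start, end) returned by a fetch.
  final case class Block(start: Long, end: Long)

  // Hypothetical cursor model; `total` is the number of rows in the result set
  // and `last` is the block returned by the previous fetch.
  final class Cursor(total: Long, private var last: Block) {
    // FETCH_NEXT: continue right after the previously returned block.
    def fetchNext(maxRows: Long): Block = {
      val start = last.end
      last = Block(start, math.min(total, start + maxRows))
      last
    }

    // FETCH_PRIOR: scroll back by maxRows from the start of the previous block,
    // clamp at offset 0, and still return up to maxRows rows from there.
    def fetchPrior(maxRows: Long): Block = {
      val start = math.max(0L, last.start - maxRows)
      last = Block(start, math.min(total, start + maxRows))
      last
    }
  }
}
```

Starting from `Block(10, 20)`, the calls `fetchPrior(5)`, `fetchPrior(10)`, `fetchPrior(4)` and `fetchNext(6)` walk through the same four blocks as the bullets above.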

##### Client/server backwards/forwards compatibility:

Old driver with new server:

* Drivers that don't support FETCH_PRIOR will not attempt to use it

* Field TFetchResultsResp.results.startRowOffset was not set, old drivers don't depend on it.

New driver with old server

* Using an older thriftserver with FETCH_PRIOR will make the thriftserver return unsupported operation error. The driver can then recognize that it's an old server.

* Older thriftserver will return TFetchResultsResp.results.startRowOffset=0. If the client driver receives 0, it can know that it can not rely on it as correct offset. If the client driver intentionally wants to fetch from 0, it can use FETCH_FIRST.

### Why are the changes needed?

It's intended to be used to recover after connection errors. If a client lost connection during fetching (e.g. of rows [10, 20)), and wants to reconnect and continue, it could not know whether the request got lost before reaching the server, or on the response back. When it issued another FETCH_NEXT(10) request after reconnecting, because TFetchResultsResp.results.startRowOffset was not set, it could not know if the server will return rows [10,20) (because the previous request didn't reach it) or rows [20, 30) (because it returned data from the previous request but the connection got broken on the way back). Now, with TFetchResultsResp.results.startRowOffset the client can know after reconnecting which rows it is getting, and use FETCH_PRIOR to scroll back if a fetch block was lost in transmission.

Driver should always use FETCH_PRIOR after a broken connection.

* If the Thriftserver returns an unsupported operation error, the driver knows that it's an old server that doesn't support it. The driver then must error the query, as the server will also not support returning the correct startRowOffset, so the driver cannot reliably guarantee that it hasn't lost any rows on the fetch cursor.

* If the driver gets a response to FETCH_PRIOR, it should also have a correctly set startRowOffset, which the driver can use to position itself back where it left off before the connection broke.

* If FETCH_NEXT was used after a broken connection on the first fetch, and returned with a startRowOffset=0, then the client driver can't know if it's 0 because it's the older server version, or if it's genuinely 0. Better to call FETCH_PRIOR, as scrolling back may be required anyway after a broken connection.
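
One way a driver might act on these rules is sketched below. Everything here is an assumption made for illustration: the `PriorResult` and `Action` types, the `recover` function, and the idea that the driver tracks `consumed`, the offset of the next row it still needs. It is not taken from any real driver.

```scala
object ReconnectSketch {
  // Hypothetical result of sending FETCH_PRIOR after a reconnect.
  sealed trait PriorResult
  case object Unsupported extends PriorResult
  final case class Returned(startRowOffset: Long) extends PriorResult

  // Hypothetical driver decisions.
  sealed trait Action
  case object FailQuery extends Action
  case object ScrollBackFurther extends Action
  final case class DiscardThenResume(rowsToDiscard: Long) extends Action

  // `consumed` is the offset of the next row the driver still needs.
  def recover(consumed: Long, result: PriorResult): Action = result match {
    // Old server: startRowOffset cannot be trusted, so the query must fail.
    case Unsupported => FailQuery
    // The returned block starts after the row we need: scroll back again.
    case Returned(start) if start > consumed => ScrollBackFurther
    // The block starts at or before the row we need: skip what was already seen.
    case Returned(start) => DiscardThenResume(consumed - start)
  }
}
```

For example, a driver that had consumed rows up to offset 20 and receives a block starting at offset 10 would discard 10 rows before resuming.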

This way it is implemented in a backwards/forwards compatible way, and doesn't require bumping the protocol version. FETCH_ABSOLUTE might have been better, but that would require a bigger protocol change, as there is currently no field to specify the requested absolute offset.

### Does this PR introduce any user-facing change?

ODBC/JDBC drivers connecting to Thriftserver may now implement using the FETCH_PRIOR fetch order to scroll back in query results, and check TFetchResultsResp.results.startRowOffset if their cursor position is consistent after connection errors.

### How was this patch tested?

Added tests to HiveThriftServer2Suites

Closes #26014 from juliuszsompolski/SPARK-29349.

Authored-by: Juliusz Sompolski <julek@databricks.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

When inserting a value into a column with the different data type, Spark performs type coercion. Currently, we support 3 policies for the store assignment rules: ANSI, legacy and strict, which can be set via the option "spark.sql.storeAssignmentPolicy":

1. ANSI: Spark performs the type coercion as per ANSI SQL. In practice, the behavior is mostly the same as PostgreSQL. It disallows certain unreasonable type conversions such as converting `string` to `int` and `double` to `boolean`. It will throw a runtime exception if the value is out-of-range(overflow).

2. Legacy: Spark allows the type coercion as long as it is a valid `Cast`, which is very loose. E.g., converting either `string` to `int` or `double` to `boolean` is allowed. It is the current behavior in Spark 2.x for compatibility with Hive. When inserting an out-of-range value into an integral field, the low-order bits of the value are inserted (the same as Java/Scala numeric type casting). For example, if 257 is inserted to a field of Byte type, the result is 1.

3. Strict: Spark doesn't allow any possible precision loss or data truncation in store assignment, e.g., converting either `double` to `int` or `decimal` to `double` is not allowed. The rules are originally for Dataset encoder. As far as I know, no mainstream DBMS is using this policy by default.
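
The difference between the Legacy and ANSI handling of an out-of-range integral value can be illustrated in plain Scala. This is a minimal sketch, not Spark's `Cast` implementation; `StoreAssignmentSketch`, `legacyToByte` and `ansiToByte` are hypothetical names, and the exception type is an assumption.

```scala
object StoreAssignmentSketch {
  // Legacy-style narrowing: keep only the low-order 8 bits, as Java/Scala casts do.
  def legacyToByte(v: Int): Byte = v.toByte

  // ANSI-style narrowing (illustrative): reject values outside the Byte range
  // at runtime instead of silently truncating them.
  def ansiToByte(v: Int): Byte =
    if (v < Byte.MinValue || v > Byte.MaxValue) {
      throw new ArithmeticException(s"$v is out of range for a Byte column")
    } else {
      v.toByte
    }
}
```

Here `legacyToByte(257)` yields 1, matching the example above, while `ansiToByte(257)` throws.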

Currently, the V1 data source uses "Legacy" policy by default, while V2 uses "Strict". This proposal is to use "ANSI" policy by default for both V1 and V2 in Spark 3.0.

### Why are the changes needed?

Following the ANSI SQL standard is most reasonable among the 3 policies.

### Does this PR introduce any user-facing change?

Yes.

The default store assignment policy is ANSI for both V1 and V2 data sources.

### How was this patch tested?

Unit test

Closes #26107 from gengliangwang/ansiPolicyAsDefault.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds 2 changes regarding exception handling in `SQLQueryTestSuite` and `ThriftServerQueryTestSuite`

- fixes an expected output sorting issue in `ThriftServerQueryTestSuite` as if there is an exception then there is no need for sort

- introduces common exception handling in those 2 suites with a new `handleExceptions` method

### Why are the changes needed?

Currently `ThriftServerQueryTestSuite` passes on master, but it fails on one of my PRs (https://github.com/apache/spark/pull/23531) with this error (https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/111651/testReport/org.apache.spark.sql.hive.thriftserver/ThriftServerQueryTestSuite/sql_3/):

```

org.scalatest.exceptions.TestFailedException: Expected "

[Recursion level limit 100 reached but query has not exhausted, try increasing spark.sql.cte.recursion.level.limit

org.apache.spark.SparkException]

", but got "

[org.apache.spark.SparkException

Recursion level limit 100 reached but query has not exhausted, try increasing spark.sql.cte.recursion.level.limit]

" Result did not match for query #4 WITH RECURSIVE r(level) AS ( VALUES (0) UNION ALL SELECT level + 1 FROM r ) SELECT * FROM r

```

The unexpected reversed order of expected output (error message comes first, then the exception class) is due to this line: https://github.com/apache/spark/pull/26028/files#diff-b3ea3021602a88056e52bf83d8782de8L146. It should not sort the expected output if there was an error during execution.
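
The ordering problem can be reproduced outside the test suites. The sketch below is a simplified stand-in, assuming the error output is the exception class name followed by its message; the `handleExceptions` signature here is reduced for illustration and differs from the one in the suites, and `normalize` is a hypothetical helper.

```scala
import scala.util.control.NonFatal

object ExceptionOutputSketch {
  // Runs a query thunk. On failure the output is the exception class followed by
  // its message, and the flag records that an error occurred.
  def handleExceptions(result: => Seq[String]): (Seq[String], Boolean) =
    try {
      (result, false)
    } catch {
      case NonFatal(e) => (Seq(e.getClass.getName, e.getMessage), true)
    }

  // Sort only successful results; error output keeps its class-then-message order.
  def normalize(result: => Seq[String]): Seq[String] = {
    val (output, failed) = handleExceptions(result)
    if (failed) output else output.sorted
  }
}
```

Sorting the error output would move a message starting with an uppercase letter ahead of the lowercase class name, which is the reversal seen in the failure above.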

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #26028 from peter-toth/SPARK-29359-better-exception-handling.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

For issue mentioned in [SPARK-29022](https://issues.apache.org/jira/browse/SPARK-29022)

The Spark SQL CLI can't use a serde class from a jar added with the SQL command `ADD JAR`.

Creating a table whose `serde` class is contained in a jar added by `ADD JAR` succeeds, because `HiveClientImpl.createTable` is called under the `withHiveState` method, which adds `clientLoader.classLoader` to `HiveClientImpl.state.getConf.classLoader`.

Jars added by SQL `ADD JAR` are added to:

1. `sparkSession.sharedState.jarClassLoader`.

2. `HiveClientLoader.clientLoader.classLoader`

In the current spark-sql mode, `HiveClientImpl.state` uses the `CliSessionState` created when `SparkSQLCliDriver` is initialized.

When we select data from the table, `HiveTableScanExec#addColumnMetadataToConf()` is called and instantiates the serde class of the table desc:

```

val deserializer = tableDesc.getDeserializerClass.getConstructor().newInstance()

deserializer.initialize(hiveConf, tableDesc.getProperties)

```

`getDeserializer` uses the classLoader of `CliSessionState`'s hiveConf in `Spark SQL CLI` mode.

But when we call `ADD JAR` in Spark, the jar is not added to the classLoader of `CliSessionState`'s conf, so a `ClassNotFound` error happens.

So we reset the classLoader of `CliSessionState`'s conf to `sharedState.jarClassLoader` once `sharedState.jarClassLoader` has added the jars passed by `HIVEAUXJARS`.

Then when we use `ADD JAR` to add a jar, the jar path is added to the classLoader of `CliSessionState`'s conf.

### Why are the changes needed?

Fix bug

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Added a UT.

Closes #25729 from AngersZhuuuu/SPARK-29015.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to remove `scalatest` deprecation warnings with the following changes.

- `org.scalatest.mockito.MockitoSugar` -> `org.scalatestplus.mockito.MockitoSugar`

- `org.scalatest.selenium.WebBrowser` -> `org.scalatestplus.selenium.WebBrowser`

- `org.scalatest.prop.Checkers` -> `org.scalatestplus.scalacheck.Checkers`

- `org.scalatest.prop.GeneratorDrivenPropertyChecks` -> `org.scalatestplus.scalacheck.ScalaCheckDrivenPropertyChecks`

### Why are the changes needed?

According to the Jenkins logs, there are 118 warnings about this.

```

grep "is deprecated" ~/consoleText | grep scalatest | wc -l

118

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

After Jenkins passes, we need to check the Jenkins log.

Closes #25982 from dongjoon-hyun/SPARK-29307.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Scala 2.13 emits a deprecation warning for procedure-like declarations:

```scala
def foo() {
  ...
}
```

This is equivalent to the following, so should be changed to avoid a warning:

```scala
def foo(): Unit = {
  ...
}
```

### Why are the changes needed?

It will avoid about a thousand compiler warnings when we start to support Scala 2.13. I wanted to make the change in 3.0 as there are less likely to be back-ports from 3.0 to 2.4 than 3.1 to 3.0, for example, minimizing that downside to touching so many files.

Unfortunately, that makes this quite a big change.

### Does this PR introduce any user-facing change?

No behavior change at all.

### How was this patch tested?

Existing tests.

Closes #25968 from srowen/SPARK-29291.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Some of the columns of JDBC/ODBC server tab in Web UI are hard to understand.

We have documented it at SPARK-28373 but I think it is better to have some tooltips in the SQL statistics table to explain the columns

The columns with new tooltips are finish time, close time, execution time and duration

Improvements in UIUtils can be used in other tables in WebUI to add tooltips

### Why are the changes needed?

The tooltips make the Web UI easier to understand.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit tests are added and manual test.

Closes #25723 from planga82/feature/SPARK-29019_tooltipjdbcServer.

Lead-authored-by: Unknown <soypab@gmail.com>

Co-authored-by: Pablo <soypab@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR moves Hive test jars(`hive-contrib-*.jar` and `hive-hcatalog-core-*.jar`) from maven dependency to local file.

### Why are the changes needed?

`--jars` can't be tested since `hive-contrib-*.jar` and `hive-hcatalog-core-*.jar` are already in classpath.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

manual test

Closes #25690 from wangyum/SPARK-27831-revert.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

After https://github.com/apache/spark/pull/25158 and https://github.com/apache/spark/pull/25458, SQL features of PostgreSQL are introduced into Spark. AFAIK, both features are implementation-defined behaviors, which are not specified in ANSI SQL.

In such a case, this proposal is to add a configuration `spark.sql.dialect` for choosing a database dialect.

After this PR, Spark supports two database dialects, `Spark` and `PostgreSQL`. With `PostgreSQL` dialect, Spark will:

1. perform integral division with the / operator if both sides are integral types;

2. accept "true", "yes", "1", "false", "no", "0", and unique prefixes as input and trim input for the boolean data type.
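
The two behaviors listed above can be sketched in plain Scala. This is an illustration of the described rules only, not the Spark implementation; `PostgresDialectSketch`, `divide` and `parseBoolean` are hypothetical names, and case-insensitive matching is an assumption that the text does not state.

```scala
import java.util.Locale

object PostgresDialectSketch {
  // With integral operands, `/` truncates, like Scala's Int division.
  def divide(a: Int, b: Int): Int = a / b

  private val trueWords = Seq("true", "yes")
  private val falseWords = Seq("false", "no")

  // Accepts the listed literals and their non-empty prefixes after trimming.
  // Lowercasing the input is an assumption made for this sketch.
  def parseBoolean(input: String): Option[Boolean] = {
    val s = input.trim.toLowerCase(Locale.ROOT)
    if (s.isEmpty) None
    else if (s == "1" || trueWords.exists(_.startsWith(s))) Some(true)
    else if (s == "0" || falseWords.exists(_.startsWith(s))) Some(false)
    else None
  }
}
```

With these rules, `" t "`, `"ye"` and `"1"` parse as true, `"fal"`, `"n"` and `"0"` parse as false, and anything else is rejected.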

### Why are the changes needed?

Unify the external database dialect with one configuration, instead of small flags.

### Does this PR introduce any user-facing change?

A new configuration `spark.sql.dialect` for choosing a database dialect.

### How was this patch tested?

Existing tests.

Closes #25697 from gengliangwang/dialect.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Rename the package pgSQL to postgreSQL

### Why are the changes needed?

To address the comment in https://github.com/apache/spark/pull/25697#discussion_r328431070 . The official full name seems more reasonable.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes #25936 from gengliangwang/renamePGSQL.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

This PR enables `ThriftServerQueryTestSuite` and fixes the previously flaky test by:

1. Start thriftserver in `beforeAll()`.

2. Disable `spark.sql.hive.thriftServer.async`.

### Why are the changes needed?

Improve test coverage.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

```shell

build/sbt "hive-thriftserver/test-only *.ThriftServerQueryTestSuite " -Phive-thriftserver

build/mvn -Dtest=none -DwildcardSuites=org.apache.spark.sql.hive.thriftserver.ThriftServerQueryTestSuite test -Phive-thriftserver

```

Closes #25868 from wangyum/SPARK-28527-enable.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Discussed in https://github.com/apache/spark/pull/25611

If cancel() and close() are called very quickly after the query is started, then they may both call cleanup() before Spark Jobs are started. Then sqlContext.sparkContext.cancelJobGroup(statementId) does nothing.

But then the execute thread can start the jobs, and only then get interrupted and exit through the catch block. No one will cancel these jobs, and they will keep running even though this execution has exited.

So when execute() is interrupted by `cancel()` and enters the catch block, we should call cancelJobGroup again to make sure the jobs are canceled.
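
The ordering issue can be shown with a single-threaded stand-in. This is a sketch under stated assumptions, not the Thriftserver code: `FakeJobTracker` is a hypothetical replacement for the job tracking done by `SparkContext`, and `execute` here only mimics the shape of the catch block.

```scala
import scala.collection.mutable

object CancelRaceSketch {
  // Hypothetical stand-in for SparkContext's job group tracking.
  final class FakeJobTracker {
    private val running = mutable.Set.empty[String]
    def startJob(group: String): Unit = running += group
    def cancelJobGroup(group: String): Unit = running -= group
    def isRunning(group: String): Boolean = running.contains(group)
  }

  // Runs the statement body; if it is interrupted, cancel the job group again,
  // because the earlier cancel may have run before any job existed.
  def execute(tracker: FakeJobTracker, statementId: String)(body: => Unit): Unit =
    try {
      body
    } catch {
      case _: InterruptedException =>
        tracker.cancelJobGroup(statementId)
    }
}
```

In this model, an early `cancelJobGroup` is a no-op, the body then starts a job and is interrupted, and the second cancel in the catch block is what stops the job.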

### Why are the changes needed?

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

MT

Closes #25743 from AngersZhuuuu/SPARK-29036.

Authored-by: angerszhu <angers.zhu@gmail.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

This PR adds support for sorting the `Execution Time` and `Duration` columns in `ThriftServerSessionPage`.

### Why are the changes needed?

Previously, these columns were not sorted correctly.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

Manually do the following and test sorting on those columns in the Spark Thrift Server Session Page.

```

$ sbin/start-thriftserver.sh

$ bin/beeline -u jdbc:hive2://localhost:10000

0: jdbc:hive2://localhost:10000> create table t(a int);

+---------+--+

| Result |

+---------+--+

+---------+--+

No rows selected (0.521 seconds)

0: jdbc:hive2://localhost:10000> select * from t;

+----+--+

| a |

+----+--+

+----+--+

No rows selected (0.772 seconds)

0: jdbc:hive2://localhost:10000> show databases;

+---------------+--+

| databaseName |

+---------------+--+

| default |

+---------------+--+

1 row selected (0.249 seconds)

```

**Sorted by `Execution Time` column**:

**Sorted by `Duration` column**:

Closes #25892 from wangyum/SPARK-28599.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Currently, there are new configurations for compatibility with ANSI SQL:

* `spark.sql.parser.ansi.enabled`

* `spark.sql.decimalOperations.nullOnOverflow`

* `spark.sql.failOnIntegralTypeOverflow`

This PR adds a new configuration, `spark.sql.ansi.enabled`, and removes the three options above. When the configuration is true, Spark tries to conform to the ANSI SQL specification. It is disabled by default.
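As a rough illustration of how one switch can stand in for the three removed options, here is a minimal sketch. `AnsiSettings` and its member names are hypothetical, and the way each legacy behavior is derived from the single flag is an assumption inferred from the option names, not something stated in this description.

```scala
// Hypothetical model: one flag replaces three separate options.
// The derivations below are assumptions based on the removed option names.
final case class AnsiSettings(ansiEnabled: Boolean = false) {
  // Formerly spark.sql.parser.ansi.enabled
  def parserAnsiEnabled: Boolean = ansiEnabled
  // Formerly spark.sql.decimalOperations.nullOnOverflow (assumed inverse of the flag)
  def decimalNullOnOverflow: Boolean = !ansiEnabled
  // Formerly spark.sql.failOnIntegralTypeOverflow
  def failOnIntegralTypeOverflow: Boolean = ansiEnabled
}

object AnsiSettingsSketch {
  // Integer addition gated by the single flag: fail on overflow or wrap around.
  def add(a: Int, b: Int, settings: AnsiSettings): Int =
    if (settings.failOnIntegralTypeOverflow) Math.addExact(a, b) // throws ArithmeticException
    else a + b
}
```

With the default `AnsiSettings()`, the flag is off, matching the statement that the configuration is disabled by default.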

### Why are the changes needed?

A single configuration is simpler and more straightforward than three separate options.

### Does this PR introduce any user-facing change?

The new features for ANSI compatibility will be set via one configuration `spark.sql.ansi.enabled`.

### How was this patch tested?

Existing unit tests.

Closes #25693 from gengliangwang/ansiEnabled.

Lead-authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Co-authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

ThriftServerSessionPage displays timestamp 0 (1970/01/01) instead of nothing if query finish time and close time are not set.

Change it to display nothing, like ThriftServerPage.
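A minimal sketch of the idea, assuming an unset time is stored as `0` and that the page formats timestamps as `yyyy/MM/dd HH:mm:ss`. `TimeCellSketch`, `naive`, and `guarded` are hypothetical names, not the actual page code, and UTC is fixed here only to keep the output deterministic.

```scala
import java.time.{Instant, ZoneOffset}
import java.time.format.DateTimeFormatter

object TimeCellSketch {
  private val fmt =
    DateTimeFormatter.ofPattern("yyyy/MM/dd HH:mm:ss").withZone(ZoneOffset.UTC)

  // Formats whatever it is given, so an unset time (0) shows up as 1970/01/01.
  def naive(timestampMs: Long): String = fmt.format(Instant.ofEpochMilli(timestampMs))

  // Renders an empty cell when the time has not been set yet.
  def guarded(timestampMs: Long): String =
    if (timestampMs > 0) naive(timestampMs) else ""
}
```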

### Why are the changes needed?

Obvious bug.

### Does this PR introduce any user-facing change?

Finish time and Close time will be displayed correctly on ThriftServerSessionPage in JDBC/ODBC Spark UI.

### How was this patch tested?

Manual test.

Closes #25762 from juliuszsompolski/SPARK-29056.

Authored-by: Juliusz Sompolski <julek@databricks.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

Currently, the TypeInfo returned by Spark Thrift Server includes:

1. INTERVAL_YEAR_MONTH

2. INTERVAL_DAY_TIME

3. UNION

4. USER_DEFINED

Spark doesn't support INTERVAL_YEAR_MONTH, INTERVAL_DAY_TIME, or UNION, and won't return the USER_DEFINED type.

This PR overrides `GetTypeInfoOperation` with `SparkGetTypeInfoOperation` to exclude the types we don't need.

In hive-1.2.1, the Type class is `org.apache.hive.service.cli.Type`.

In hive-2.3.x, the Type class is `org.apache.hadoop.hive.serde2.thrift.Type`.

`ThriftserverShimUtils` is used to handle the version difference and exclude the types we don't need.
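The exclusion itself amounts to filtering a list of type names. A minimal sketch follows; `TypeInfoSketch` is a hypothetical object, the type list is an illustrative subset rather than the full Hive `Type` enum, and plain strings are used so the example does not depend on either Hive version.

```scala
object TypeInfoSketch {
  // Illustrative subset of type names; the real enum has more entries.
  val hiveTypes: Seq[String] = Seq(
    "BOOLEAN", "INT", "STRING", "DECIMAL",
    "INTERVAL_YEAR_MONTH", "INTERVAL_DAY_TIME", "UNION", "USER_DEFINED")

  // Types named in the description above as unsupported or never returned by Spark.
  val excluded: Set[String] =
    Set("INTERVAL_YEAR_MONTH", "INTERVAL_DAY_TIME", "UNION", "USER_DEFINED")

  // What a GetTypeInfo-style call would report after the exclusion.
  def supportedTypes: Seq[String] = hiveTypes.filterNot(excluded)
}
```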

### Why are the changes needed?

We should return type info only for the types that Spark itself supports.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual test & added UT

Closes #25694 from AngersZhuuuu/SPARK-28982.

Lead-authored-by: angerszhu <angers.zhu@gmail.com>

Co-authored-by: AngersZhuuuu <angers.zhu@gmail.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

### What changes were proposed in this pull request?

The `--hiveconf`/`--hivevar` parameters are only meant to supply initial values, so setting them once in `SparkSQLSessionManager#openSession` is sufficient. Setting them again in every `SparkExecuteStatementOperation` prevents the variables from being modified.

### Why are the changes needed?

It is wrong to set the `--hivevar`/`--hiveconf` variables in every `SparkExecuteStatementOperation`, because doing so prevents variable updates.
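The difference between the two behaviors can be modeled in a few lines. `SessionSketch` is a hypothetical class that understands only `set k=v` and `set k`; it is not Spark's session or operation code, and the `reapplyPerStatement` flag exists only to contrast the old and new behavior.

```scala
import scala.collection.mutable

// Hypothetical model of a Thrift server session; not Spark's actual classes.
final class SessionSketch(initVars: Map[String, String], reapplyPerStatement: Boolean) {
  private val conf = mutable.Map.empty[String, String]
  conf ++= initVars // applied once, as openSession would do

  // Handles only `set k=v` and `set k`; returns the looked-up value for `set k`.
  def execute(statement: String): Option[String] = {
    // Old behavior: re-applying the initial values overwrites user updates.
    if (reapplyPerStatement) conf ++= initVars
    val body = statement.trim.stripSuffix(";").trim
    if (!body.startsWith("set ")) None
    else body.stripPrefix("set ").split("=", 2).map(_.trim) match {
      case Array(key, value) => conf(key) = value; None
      case Array(key)        => conf.get(key)
      case _                 => None
    }
  }
}
```

Running `set b=bvalue_MOD_VALUE;` followed by `set b;` against a session created with `Map("b" -> "bvalue")` returns the initial value when `reapplyPerStatement` is true and the updated value when it is false.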

### Does this PR introduce any user-facing change?

```
cat <<EOF > test.sql
select '\${a}', '\${b}';
set b=bvalue_MOD_VALUE;
set b;
EOF
beeline -u jdbc:hive2://localhost:10000 --hiveconf a=avalue --hivevar b=bvalue -f test.sql
```

current result:

```
+-----------------+-----------------+--+
| avalue          | bvalue          |
+-----------------+-----------------+--+
| avalue          | bvalue          |
+-----------------+-----------------+--+
+-----------------+-----------------+--+
| key             | value           |
+-----------------+-----------------+--+
| b               | bvalue          |
+-----------------+-----------------+--+
1 row selected (0.022 seconds)
```

after modification:

```
+-----------------+-----------------+--+
| avalue          | bvalue          |
+-----------------+-----------------+--+
| avalue          | bvalue          |
+-----------------+-----------------+--+
+-----------------+-----------------+--+
| key             | value           |
+-----------------+-----------------+--+
| b               | bvalue_MOD_VALUE|
+-----------------+-----------------+--+
1 row selected (0.022 seconds)
```

### How was this patch tested?

modified the existing unit test

Closes #25722 from cxzl25/fix_SPARK-26598.

Authored-by: sychen <sychen@ctrip.com>

Signed-off-by: Yuming Wang <wgyumg@gmail.com>

## What changes were proposed in this pull request?

`bin/spark-shell` supports querying interval values:

```scala
scala> spark.sql("SELECT interval 3 months 1 hours AS i").show(false)
+-------------------------+
|i                        |
+-------------------------+
|interval 3 months 1 hours|
+-------------------------+
```

But the Thrift server started by `sbin/start-thriftserver.sh` does not support querying interval values:

```sql
0: jdbc:hive2://localhost:10000/default> SELECT interval 3 months 1 hours AS i;
Error: java.lang.IllegalArgumentException: Unrecognized type name: interval (state=,code=0)
```

This PR maps `CalendarIntervalType` to `StringType` in `TableSchema` so that the Thrift server can return interval values, because we support neither the `INTERVAL_YEAR_MONTH` type nor the `INTERVAL_DAY_TIME` type:

02c33694c8/sql/hive-thriftserver/v1.2.1/src/main/java/org/apache/hive/service/cli/Type.java (L73-L78)

[SPARK-27791](https://issues.apache.org/jira/browse/SPARK-27791): Support SQL year-month INTERVAL type

[SPARK-27793](https://issues.apache.org/jira/browse/SPARK-27793): Support SQL day-time INTERVAL type
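The mapping can be sketched as a small pattern match. The types below are a hypothetical miniature defined only for this example (they mirror Spark's names but are not Spark's classes), and `clientTypeName` is an illustrative helper rather than the actual `TableSchema` code.

```scala
object TableSchemaSketch {
  // Hypothetical miniature of Spark's data types, for illustration only.
  sealed trait DataType
  case object IntegerType extends DataType
  case object StringType extends DataType
  case object CalendarIntervalType extends DataType

  // Type name reported to the JDBC/ODBC client for a result column.
  def clientTypeName(dataType: DataType): String = dataType match {
    case IntegerType          => "int"
    case StringType           => "string"
    // Intervals are reported as strings, since the client-side interval
    // types are not supported.
    case CalendarIntervalType => clientTypeName(StringType)
  }
}
```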

## How was this patch tested?

unit tests

Closes #25277 from wangyum/Thriftserver-support-interval-type.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

This PR ignores Thrift server `ThriftServerQueryTestSuite`.

### Why are the changes needed?

The `ThriftServerQueryTestSuite` test suite caused frequent Jenkins build failures.

### Does this PR introduce any user-facing change?

Yes.

### How was this patch tested?

N/A

Closes #25592 from wangyum/SPARK-28527-f1.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR implements `SparkGetCatalogsOperation` for Thrift Server metadata completeness.

### Why are the changes needed?

Thrift Server metadata completeness.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit test

Closes #25555 from wangyum/SPARK-28852.

Authored-by: Yuming Wang <yumwang@ebay.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

## What changes were proposed in this pull request?

When row data is processed on the server side in `ColumnValue`, a `BigDecimal` value has to be converted to the `HiveDecimal` data type for a query from a Hive ODBC client to succeed. With the current logic, the Spark server uses `BigDecimal` for decimal columns while the ODBC client uses `HiveDecimal`. If the data types do not match, the client fails to parse the value.

Because this handling was missing, a query executed from a Hive ODBC client did not return results to the user even though the decimal column contained data.

## How was this patch tested?

Manual testing; the impact assessment was done using the existing test cases.

Before fix

After Fix

Closes #23899 from sujith71955/master_decimalissue.

Authored-by: s71955 <sujithchacko.2010@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

This PR ports [HIVE-10646](https://issues.apache.org/jira/browse/HIVE-10646) to fix the issue that Hive 0.12's JDBC client cannot handle `NULL_TYPE`:

```sql
Connected to: Hive (version 3.0.0-SNAPSHOT)
Driver: Hive (version 0.12.0)
Transaction isolation: TRANSACTION_REPEATABLE_READ
Beeline version 0.12.0 by Apache Hive
0: jdbc:hive2://localhost:10000> select null;
org.apache.thrift.transport.TTransportException
    at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:132)
    at org.apache.thrift.transport.TTransport.readAll(TTransport.java:84)
    at org.apache.thrift.transport.TSaslTransport.readLength(TSaslTransport.java:346)
    at org.apache.thrift.transport.TSaslTransport.readFrame(TSaslTransport.java:423)
    at org.apache.thrift.transport.TSaslTransport.read(TSaslTransport.java:405)
```

Server log:

```

19/08/07 09:34:07 ERROR TThreadPoolServer: Error occurred during processing of message.

java.lang.NullPointerException

at org.apache.hive.service.cli.thrift.TRow$TRowStandardScheme.write(TRow.java:388)

at org.apache.hive.service.cli.thrift.TRow$TRowStandardScheme.write(TRow.java:338)

at org.apache.hive.service.cli.thrift.TRow.write(TRow.java:288)

at org.apache.hive.service.cli.thrift.TRowSet$TRowSetStandardScheme.write(TRowSet.java:605)

at org.apache.hive.service.cli.thrift.TRowSet$TRowSetStandardScheme.write(TRowSet.java:525)

at org.apache.hive.service.cli.thrift.TRowSet.write(TRowSet.java:455)

at org.apache.hive.service.cli.thrift.TFetchResultsResp$TFetchResultsRespStandardScheme.write(TFetchResultsResp.java:550)

at org.apache.hive.service.cli.thrift.TFetchResultsResp$TFetchResultsRespStandardScheme.write(TFetchResultsResp.java:486)

at org.apache.hive.service.cli.thrift.TFetchResultsResp.write(TFetchResultsResp.java:412)

at org.apache.hive.service.cli.thrift.TCLIService$FetchResults_result$FetchResults_resultStandardScheme.write(TCLIService.java:13192)

at org.apache.hive.service.cli.thrift.TCLIService$FetchResults_result$FetchResults_resultStandardScheme.write(TCLIService.java:13156)

at org.apache.hive.service.cli.thrift.TCLIService$FetchResults_result.write(TCLIService.java:13107)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:58)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:53)