### What changes were proposed in this pull request?

This PR fixes an issue where `sbt unidoc` fails with JDK11.

With the current master, `sbt unidoc` fails because the generated Java sources contain syntax errors.

As of JDK11, the default doclet seems to reject such syntax errors.

Usually, specifying the `--ignore-source-errors` option when `javadoc` runs is enough to suppress the syntax errors, but unfortunately we then get an internal error.

```

[error] javadoc: error - An internal exception has occurred.

[error] (java.lang.NullPointerException)

[error] Please file a bug against the javadoc tool via the Java bug reporting page

[error] (http://bugreport.java.com) after checking the Bug Database (http://bugs.java.com)

[error] for duplicates. Include error messages and the following diagnostic in your report. Thank you.

[error] java.lang.NullPointerException

[error] at jdk.compiler/com.sun.tools.javac.code.Types.erasure(Types.java:2340)

[error] at jdk.compiler/com.sun.tools.javac.code.Types$14.visitTypeVar(Types.java:2398)

[error] at jdk.compiler/com.sun.tools.javac.code.Types$14.visitTypeVar(Types.java:2348)

[error] at jdk.compiler/com.sun.tools.javac.code.Type$TypeVar.accept(Type.java:1659)

[error] at jdk.compiler/com.sun.tools.javac.code.Types$DefaultTypeVisitor.visit(Types.java:4857)

[error] at jdk.compiler/com.sun.tools.javac.code.Types.erasure(Types.java:2343)

[error] at jdk.compiler/com.sun.tools.javac.code.Types.erasure(Types.java:2329)

[error] at jdk.compiler/com.sun.tools.javac.model.JavacTypes.erasure(JavacTypes.java:134)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.toolkit.util.Utils$5.visitTypeVariable(Utils.java:1069)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.toolkit.util.Utils$5.visitTypeVariable(Utils.java:1048)

[error] at jdk.compiler/com.sun.tools.javac.code.Type$TypeVar.accept(Type.java:1695)

[error] at java.compiler@11.0.9.1/javax.lang.model.util.AbstractTypeVisitor6.visit(AbstractTypeVisitor6.java:104)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.toolkit.util.Utils.asTypeElement(Utils.java:1086)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.LinkInfoImpl.setContext(LinkInfoImpl.java:410)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.LinkInfoImpl.<init>(LinkInfoImpl.java:285)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.LinkFactoryImpl.getTypeParameterLink(LinkFactoryImpl.java:184)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.LinkFactoryImpl.getTypeParameterLinks(LinkFactoryImpl.java:167)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.toolkit.util.links.LinkFactory.getLink(LinkFactory.java:196)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.HtmlDocletWriter.getLink(HtmlDocletWriter.java:679)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.HtmlDocletWriter.addPreQualifiedClassLink(HtmlDocletWriter.java:814)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.HtmlDocletWriter.addPreQualifiedStrongClassLink(HtmlDocletWriter.java:839)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.AbstractTreeWriter.addPartialInfo(AbstractTreeWriter.java:185)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.AbstractTreeWriter.addLevelInfo(AbstractTreeWriter.java:92)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.AbstractTreeWriter.addLevelInfo(AbstractTreeWriter.java:94)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.AbstractTreeWriter.addTree(AbstractTreeWriter.java:129)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.AbstractTreeWriter.addTree(AbstractTreeWriter.java:112)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.PackageTreeWriter.generatePackageTreeFile(PackageTreeWriter.java:115)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.PackageTreeWriter.generate(PackageTreeWriter.java:92)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.formats.html.HtmlDoclet.generatePackageFiles(HtmlDoclet.java:312)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.toolkit.AbstractDoclet.startGeneration(AbstractDoclet.java:210)

[error] at jdk.javadoc/jdk.javadoc.internal.doclets.toolkit.AbstractDoclet.run(AbstractDoclet.java:114)

[error] at jdk.javadoc/jdk.javadoc.doclet.StandardDoclet.run(StandardDoclet.java:72)

[error] at jdk.javadoc/jdk.javadoc.internal.tool.Start.parseAndExecute(Start.java:588)

[error] at jdk.javadoc/jdk.javadoc.internal.tool.Start.begin(Start.java:432)

[error] at jdk.javadoc/jdk.javadoc.internal.tool.Start.begin(Start.java:345)

[error] at jdk.javadoc/jdk.javadoc.internal.tool.Main.execute(Main.java:63)

[error] at jdk.javadoc/jdk.javadoc.internal.tool.Main.main(Main.java:52)

```

I found that the internal error occurs when a Java class is generated from a Scala class that is both package-private and generic.

I also found that if we don't generate the class hierarchy tree in the Javadoc, we can suppress the internal error for JDK11 and later.

### Why are the changes needed?

Make the build succeed with sbt and JDK11.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed that the following command finishes successfully with both JDK8 and JDK11.

```

$ build/sbt -Phive -Phive-thriftserver -Pyarn -Pkubernetes -Pmesos -Pspark-ganglia-lgpl -Pkinesis-asl -Phadoop-cloud clean unidoc

```

I also confirmed that HTML files are successfully generated under `target/javaunidoc`.

Closes #31023 from sarutak/fix-genjavadoc-java11.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch proposes adding the latest offset to the source progress for streaming queries.

### Why are the changes needed?

Currently we record start and end offsets per source for a streaming query. The latest offset is important information for streaming processing, but the progress lacks it. We can use it to track the processing lag and adjust streaming queries. We should add the latest offset to the source progress.
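As a rough illustration of tracking lag with the new field, the sketch below subtracts `endOffset` from `latestOffset` per partition. The offsets are copied from the manual test output in this PR. The helper name `partition_lag` is hypothetical and not part of Spark, and the sketch assumes both maps contain the same topics and partitions.

```python
# Offsets copied from the manually tested Kafka source progress in this PR.
progress = {
    "endOffset": {
        "page_view_events": {
            "2": 583764414, "4": 392338002, "1": 632183480,
            "3": 407101489, "0": 197304028,
        }
    },
    "latestOffset": {
        "page_view_events": {
            "2": 589852545, "4": 394204277, "1": 637313869,
            "3": 409286602, "0": 203878962,
        }
    },
}


def partition_lag(source_progress):
    """Return {topic: {partition: latestOffset - endOffset}} (hypothetical helper)."""
    lag = {}
    for topic, latest in source_progress["latestOffset"].items():
        end = source_progress["endOffset"][topic]
        # Assumes every partition in latestOffset also appears in endOffset.
        lag[topic] = {p: offset - end[p] for p, offset in latest.items()}
    return lag


lag = partition_lag(progress)
total_lag = sum(lag["page_view_events"].values())
print(lag["page_view_events"])
print(total_lag)
```

A growing total across batches would suggest the query is falling behind its source.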

### Does this PR introduce _any_ user-facing change?

Yes, a new metric for the latest source offset is added to the source progress.

### How was this patch tested?

Unit tests. Also manually tested in a Spark cluster:

```
  "description" : "KafkaV2[Subscribe[page_view_events]]",
  "startOffset" : {
    "page_view_events" : {
      "2" : 582370921,
      "4" : 391910836,
      "1" : 631009201,
      "3" : 406601346,
      "0" : 195799112
    }
  },
  "endOffset" : {
    "page_view_events" : {
      "2" : 583764414,
      "4" : 392338002,
      "1" : 632183480,
      "3" : 407101489,
      "0" : 197304028
    }
  },
  "latestOffset" : {
    "page_view_events" : {
      "2" : 589852545,
      "4" : 394204277,
      "1" : 637313869,
      "3" : 409286602,
      "0" : 203878962
    }
  },
  "numInputRows" : 4999997,
  "inputRowsPerSecond" : 29287.70501405811,
```

Closes #30988 from viirya/latest-offset.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to upgrade SBT to 1.4.6 to fix the SBT regression.

### Why are the changes needed?

[SBT 1.4.6](https://github.com/sbt/sbt/releases/tag/v1.4.6) has the following fixes:

- Updates to Coursier 2.0.8, which fixes the cache directory setting on Windows

- Fixes performance regression in shell tab completion

- Fixes match error when using withDottyCompat

- Fixes thread-safety in AnalysisCallback handler

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #30993 from dongjoon-hyun/SPARK-SBT-1.4.6.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds logic to build logical writes introduced in SPARK-33779.

Note: This PR contains a subset of changes discussed in PR #29066.

### Why are the changes needed?

These changes are the next step as discussed in the [design doc](https://docs.google.com/document/d/1X0NsQSryvNmXBY9kcvfINeYyKC-AahZarUqg3nS1GQs/edit#) for SPARK-23889.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #30806 from aokolnychyi/spark-33808.

Authored-by: Anton Okolnychyi <aokolnychyi@apple.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to use `ListBuffer` instead of `Stack` in `SparkBuild.scala` to remove a deprecation warning.

### Why are the changes needed?

`Stack` has been deprecated since Scala 2.12.0.

```
% build/sbt compile
...
[warn] /Users/william/spark/project/SparkBuild.scala:1112:25:
class Stack in package mutable is deprecated (since 2.12.0):
Stack is an inelegant and potentially poorly-performing wrapper around List.
Use a List assigned to a var instead.
[warn] val stack = new Stack[File]()
```

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manual.

Closes #30860 from williamhyun/SPARK-33854.

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to make `EventLogFileReader`'s derived classes package-private.

- SingleFileEventLogFileReader

- RollingEventLogFilesFileReader

`EventLogFileReader` itself is used in the `scheduler` module during tests.

### Why are the changes needed?

These classes were designed to be internal. This PR hides them explicitly to reduce the maintenance burden.

### Does this PR introduce _any_ user-facing change?

Yes, but these were exposed accidentally.

### How was this patch tested?

Pass CIs.

Closes #30814 from dongjoon-hyun/SPARK-33790.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade SBT to 1.4.5 to support Apple Silicon.

### Why are the changes needed?

The release notes linked below state that `sbt 1.4.5 adds support for Apple silicon (AArch64 also called ARM64)`.

- https://github.com/sbt/sbt/releases/tag/v1.4.5

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #30817 from dongjoon-hyun/SPARK-33821.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`FsHistoryProvider#checkForLogs` already has the `FileStatus` when constructing `SingleFileEventLogFileReader`, so there is no need to fetch the `FileStatus` again when `SingleFileEventLogFileReader#fileSizeForLastIndex` is called.

### Why are the changes needed?

This can save a lot of RPC calls and improve the speed of the history server.
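The idea can be sketched in a few lines: pass the status the caller already holds into the reader, and fall back to a lookup only when none was given. All names below (`CountingFileSystem`, `SingleFileReader`, `get_file_status`) are hypothetical stand-ins for illustration, not Spark or Hadoop APIs.

```python
class CountingFileSystem:
    """Fake file system that counts status lookups (stands in for RPC calls)."""

    def __init__(self, sizes):
        self.sizes = sizes
        self.calls = 0

    def get_file_status(self, path):
        self.calls += 1
        return {"path": path, "length": self.sizes[path]}


class SingleFileReader:
    """Hypothetical reader that accepts an already-fetched status."""

    def __init__(self, fs, path, status=None):
        self.fs = fs
        self.path = path
        self._status = status  # reuse the caller's status when provided

    def file_size_for_last_index(self):
        if self._status is None:  # look up only when nothing was passed in
            self._status = self.fs.get_file_status(self.path)
        return self._status["length"]


fs = CountingFileSystem({"app-1": 1024})
status = fs.get_file_status("app-1")  # the directory scan already did this lookup
reader = SingleFileReader(fs, "app-1", status)
size = reader.file_size_for_last_index()
print(size, fs.calls)
```

With the status passed in, the size query adds no further lookups on top of the scan.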

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit tests.

Closes #30780 from cxzl25/SPARK-33790.

Authored-by: sychen <sychen@ctrip.com>

Signed-off-by: Jungtaek Lim <kabhwan.opensource@gmail.com>

### What changes were proposed in this pull request?

There are too many compilation warnings in Scala 2.13. This PR adds some `-Wconf:msg=<regex>` rules to `SparkBuild.scala` to suppress compilation warnings; the suppressed warnings are not printed to the console.

The suppressed compilation warnings include:

- All warnings related to `method\value\type\object\trait\inheritance` deprecated since 2.13

- All warnings related to `Widening conversion from XXX to YYY is deprecated because it loses precision`

- Auto-application to `()` is deprecated. Supply the empty argument list `()` explicitly to invoke method `methodName`, or remove the empty argument list from its definition (Java-defined methods are exempt). In Scala 3, an unapplied method like this will be eta-expanded into a function.

- method with a single empty parameter list overrides method without any parameter list

- method without a parameter list overrides a method with a single empty one

Compilation warnings that are not suppressed include:

- Unicode escapes in triple quoted strings are deprecated, use the literal character instead.

- view bounds are deprecated

- symbol literal is deprecated
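To make the message-based filtering concrete, here is a small sketch that models the behaviour in Python. The patterns are hypothetical and written only to mirror the categories listed above; they are not the rules added to `SparkBuild.scala`, and Python's regex dialect differs in details from the one the Scala compiler uses.

```python
import re

# Hypothetical patterns mirroring some of the suppressed categories above.
SUPPRESSED = [
    r"Widening conversion from \w+ to \w+ is deprecated because it loses precision",
    r"Auto-application to `\(\)` is deprecated",
    r"method with a single empty parameter list overrides method without any parameter list",
]


def is_suppressed(message):
    """True if any suppression pattern is found in the warning message."""
    return any(re.search(pattern, message) for pattern in SUPPRESSED)


messages = [
    "Widening conversion from Long to Double is deprecated because it loses precision",
    "view bounds are deprecated",
    "symbol literal is deprecated",
]
shown = [m for m in messages if not is_suppressed(m)]
print(shown)
```

Only the messages that match no pattern remain visible, which is the split the two lists above describe.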

### Why are the changes needed?

Suppress unimportant compilation warnings in Scala 2.13

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass Jenkins or GitHub Actions.

Closes #30760 from LuciferYang/SPARK-33775.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR fixes the following flaky tests:

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/132345/testReport/

```

org.apache.spark.sql.hive.thriftserver.HiveThriftHttpServerSuite.JDBC query execution

org.apache.spark.sql.hive.thriftserver.HiveThriftHttpServerSuite.Checks Hive version

org.apache.spark.sql.hive.thriftserver.HiveThriftHttpServerSuite.SPARK-24829 Checks cast as float

```

The root cause here is a jar conflict issue.

`NewCookie.isHttpOnly` is not defined in the conflicting `jsr311-api.jar`.

The transitive artifact `jsr311-api.jar` of `hadoop-client` is excluded on the Maven side. See https://issues.apache.org/jira/browse/SPARK-27179.

The Jenkins PR builder and GitHub Actions use `SBT` as the build tool.

First, the exclusion rule from Maven is not followed by SBT, so `jsr311-api.jar` from the Maven cache was added to the classpath directly. **This seems to be a bug in the `sbt-pom-reader` plugin, but I'm not sure.**

Then I added an `ExcludeRule` for the `hive-thriftserver` module on the SBT side and saw that `jsr311-api.jar` was gone, but the CI jobs still failed with the same error.

I added a trace log in `ThriftHttpServlet`:

```
ERROR ThriftHttpServlet: !!!!!!!!! Suspect???????? --->
file:/home/jenkins/workspace/SparkPullRequestBuilder/assembly/target/scala-2.12/jars/jsr311-api-1.1.1.jar
```

The log showed that the assembly phase copied it to `assembly/target/scala-2.12/jars/`, which is also added to the classpath. With the help of the SBT `dependencyTree` tool, I saw `jsr311-api` again as a transitive dependency of `jersey-core` from the `yarn` module with `test` scope. So **this seems to be another bug on the SBT side, in the `sbt-assembly` plugin.** It copied a test-scope transitive artifact to the assembly output.
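A conflict like this can also be checked offline by scanning a jars directory for every archive that ships a given class. The following is a minimal sketch of that idea; `jars_containing` is a hypothetical helper, not part of the Spark build.

```python
import zipfile
from pathlib import Path


def jars_containing(class_name, jars_dir):
    """Return the names of jars under jars_dir that contain the given class."""
    entry = class_name.replace(".", "/") + ".class"
    hits = []
    for jar in sorted(Path(jars_dir).glob("*.jar")):
        with zipfile.ZipFile(jar) as zf:
            if entry in zf.namelist():
                hits.append(jar.name)
    return hits
```

For example, `jars_containing("javax.ws.rs.core.NewCookie", "assembly/target/scala-2.12/jars")` would list every jar in the assembly output that provides `NewCookie`; more than one hit points to a conflict of the kind described above.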

In this PR, I defined some rules in SparkBuild.scala to bypass the potential bugs from the SBT side.

First, exclude `jsr311` from the whole project, and then add it back separately for the YARN module in SBT.

Additionally, the HiveThriftServerSuites were refactored to reduce flakiness, although this is not related to the bugs I have found so far.

### Why are the changes needed?

Fix the flaky tests.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Passing Jenkins and GitHub Actions.

Closes #30643 from yaooqinn/HiveThriftHttpServerSuite.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR is to show Slowpoke notifications in the log when running tests using SBT.

For example, the test case "zero sized blocks" in ExternalShuffleServiceSuite enters an infinite loop. After this change, once a test case has been running for longer than two minutes, the log file gets a notification message every 5 minutes. Below is an example message.

```

[info] ExternalShuffleServiceSuite:

[info] - groupByKey without compression (101 milliseconds)

[info] - shuffle non-zero block size (3 seconds, 186 milliseconds)

[info] - shuffle serializer (3 seconds, 189 milliseconds)

[info] *** Test still running after 2 minute, 1 seconds: suite name: ExternalShuffleServiceSuite, test name: zero sized blocks.

[info] *** Test still running after 7 minute, 1 seconds: suite name: ExternalShuffleServiceSuite, test name: zero sized blocks.

[info] *** Test still running after 12 minutes, 1 seconds: suite name: ExternalShuffleServiceSuite, test name: zero sized blocks.

[info] *** Test still running after 17 minutes, 1 seconds: suite name: ExternalShuffleServiceSuite, test name: zero sized blocks.

```
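The timing in the log above follows a simple schedule: a first notification after the initial delay, then one per period. The sketch below models that schedule, assuming a 120-second delay and a 300-second period as described; `slowpoke_times` is a hypothetical helper, not ScalaTest's implementation.

```python
def slowpoke_times(delay_s, period_s, running_s):
    """Seconds at which a notification would fire for a test running `running_s` seconds."""
    return list(range(delay_s, running_s + 1, period_s))


# 2-minute delay and 5-minute period; a test that hangs for 18 minutes.
times = slowpoke_times(120, 300, 18 * 60)
print([f"{t // 60} min" for t in times])
```

The resulting marks at 2, 7, 12 and 17 minutes line up with the example messages, which are reported about a second later.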

### Why are the changes needed?

When the tests or code have a bug and enter an infinite loop, it is hard to tell from the log which test cases hit the issue, especially when we are running the tests in parallel. It would be nice to show the Slowpoke notifications.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manual testing in my local dev environment.

Closes #30621 from gatorsmile/addSlowpoke.

Authored-by: Xiao Li <gatorsmile@gmail.com>

Signed-off-by: Yuming Wang <yumwang@ebay.com>

### What changes were proposed in this pull request?

This PR aims to update `master` branch version to 3.2.0-SNAPSHOT.

### Why are the changes needed?

Start to prepare Apache Spark 3.2.0.

### Does this PR introduce _any_ user-facing change?

N/A.

### How was this patch tested?

Pass the CIs.

Closes #30606 from dongjoon-hyun/SPARK-3.2.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

TL;DR:

- This PR completes the support of archives in Spark itself instead of Yarn-only

- It makes `--archives` option work in other cluster modes too and adds `spark.archives` configuration.

- After this PR, PySpark users can leverage Conda to ship Python packages together as below:

```bash
conda create -y -n pyspark_env -c conda-forge pyarrow==2.0.0 pandas==1.1.4 conda-pack==0.5.0
conda activate pyspark_env
conda pack -f -o pyspark_env.tar.gz
PYSPARK_DRIVER_PYTHON=python PYSPARK_PYTHON=./environment/bin/python pyspark --archives pyspark_env.tar.gz#environment
```

- Issue a warning that undocumented and hidden behavior of partial archive handling in `spark.files` / `SparkContext.addFile` will be deprecated, and users can use `spark.archives` and `SparkContext.addArchive`.
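The `pyspark_env.tar.gz#environment` argument above pairs an archive with the directory name it is unpacked into, which is why `PYSPARK_PYTHON` points at `./environment/bin/python`. A rough sketch of how such a spec splits, using plain URI-fragment parsing (the helper `split_archive` and its fallback for a missing fragment are assumptions for illustration, not Spark's code):

```python
from urllib.parse import urlparse


def split_archive(spec):
    """Split 'archive#alias' into (archive path, directory name to unpack into)."""
    parsed = urlparse(spec)
    archive = parsed.path
    # Assumption: without a fragment, fall back to the archive's own file name.
    alias = parsed.fragment or archive.rsplit("/", 1)[-1]
    return archive, alias


archive, alias = split_archive("pyspark_env.tar.gz#environment")
print(archive, alias)
```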

This PR proposes to add Spark's native `--archives` in Spark submit, and `spark.archives` configuration. Currently, both are supported only in Yarn mode:

```bash

./bin/spark-submit --help

```

```
Options:
...
 Spark on YARN only:
  --queue QUEUE_NAME          The YARN queue to submit to (Default: "default").
  --archives ARCHIVES         Comma separated list of archives to be extracted into the
                              working directory of each executor.
```

This `archives` feature is often useful when you have to ship a directory and unpack it on executors. One example is shipping native libraries to use via e.g. JNI. Another example is shipping Python packages together as a Conda environment.

Especially for Conda, PySpark currently does not have a nice way to ship a package that works in general. Please see also https://hyukjin-spark.readthedocs.io/en/stable/user_guide/python_packaging.html#using-zipped-virtual-environment (PySpark new documentation demo for 3.1.0).

The neatest way is arguably to ship a zipped Conda environment, but this currently depends on this archive feature. NOTE that we are able to use `spark.files` by relying on its undocumented behaviour of untarring `tar.gz` files, but I don't think we should document such ways or encourage people to rely on them further.

Also, note that this PR does not yet aim for feature parity with `spark.files.overwrite`, `spark.files.useFetchCache`, etc. I also documented that this is an experimental feature.

### Why are the changes needed?

To complete the feature parity, and to provide better support for shipping Python libraries together with a Conda env.

### Does this PR introduce _any_ user-facing change?

Yes, this makes `--archives` work in Spark instead of Yarn-only, and adds a new configuration `spark.archives`.

### How was this patch tested?

I added unit tests. I also manually tested in standalone cluster, local-cluster, and local modes.

Closes #30486 from HyukjinKwon/native-archive.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR intends to fix typos in the sub-modules:

* `R`

* `common`

* `dev`

* `mllib`

* `external`

* `project`

* `streaming`

* `resource-managers`

* `python`

Split per srowen https://github.com/apache/spark/pull/30323#issuecomment-728981618

NOTE: The misspellings have been reported at 706a726f87 (commitcomment-44064356)

### Why are the changes needed?

Misspelled words make it harder to read / understand content.

### Does this PR introduce _any_ user-facing change?

There are various fixes to documentation, etc...

### How was this patch tested?

No testing was performed.

Closes #30402 from jsoref/spelling-R_common_dev_mlib_external_project_streaming_resource-managers_python.

Authored-by: Josh Soref <jsoref@users.noreply.github.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR aims to update SBT from 1.4.2 to 1.4.4.

### Why are the changes needed?

This will bring the latest bug fixes.

- https://github.com/sbt/sbt/releases/tag/v1.4.3

- https://github.com/sbt/sbt/releases/tag/v1.4.4

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #30453 from williamhyun/sbt143.

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Move "unused-imports" check config to `SparkBuild.scala` and make it SBT specific.

### Why are the changes needed?

Make the unused-imports check SBT-specific.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass Jenkins or GitHub Actions.

Closes #30441 from LuciferYang/SPARK-33441-FOLLOWUP.

Authored-by: yangjie01 <yangjie01@baidu.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to update SBT from 1.4.1 to 1.4.2.

### Why are the changes needed?

This will bring the latest bug fixes.

- https://github.com/sbt/sbt/releases/tag/v1.4.2

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #30268 from williamhyun/sbt.

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR proposes to switch the class loader strategy from `ScalaLibrary` to `Flat` (see https://www.scala-sbt.org/1.x/docs/In-Process-Classloaders.html) to avoid the following failure:

https://github.com/apache/spark/runs/1314691686

```

Error: java.util.MissingResourceException: Can't find bundle for base name org.scalactic.ScalacticBundle, locale en

Error: at java.util.ResourceBundle.throwMissingResourceException(ResourceBundle.java:1581)

Error: at java.util.ResourceBundle.getBundleImpl(ResourceBundle.java:1396)

Error: at java.util.ResourceBundle.getBundle(ResourceBundle.java:782)

Error: at org.scalactic.Resources$.resourceBundle$lzycompute(Resources.scala:8)

Error: at org.scalactic.Resources$.resourceBundle(Resources.scala:8)

Error: at org.scalactic.Resources$.pleaseDefineScalacticFillFilePathnameEnvVar(Resources.scala:256)

Error: at org.scalactic.source.PositionMacro$PositionMacroImpl.apply(PositionMacro.scala:65)

Error: at org.scalactic.source.PositionMacro$.genPosition(PositionMacro.scala:85)

Error: at sun.reflect.GeneratedMethodAccessor34.invoke(Unknown Source)

Error: at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

Error: at java.lang.reflect.Method.invoke(Method.java:498)

```

See also https://github.com/sbt/sbt/issues/5736

### Why are the changes needed?

To make the build less flaky.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

The GitHub Actions build for this PR.

Closes #30198 from HyukjinKwon/SPARK-33297.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade SBT from 1.4.0 to 1.4.1.

### Why are the changes needed?

SBT 1.4.1 is a maintenance release in the 1.4.x line and already contains many bug fixes.

- https://github.com/sbt/sbt/releases/tag/v1.4.1 (Released on 2020-10-19)

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CI and check [the Jenkins log](https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/130185/testReport).

```

========================================================================

Building Spark

========================================================================

[info] Building Spark using SBT with these arguments: -Phadoop-3.2 -Phive-2.3 -Phive -Pspark-ganglia-lgpl -Pkinesis-asl -Pyarn -Phadoop-cloud -Phive-thriftserver -Pkubernetes -Pmesos test:package streaming-kinesis-asl-assembly/assembly

Using /usr/java/jdk1.8.0_191 as default JAVA_HOME.

Note, this will be overridden by -java-home if it is set.

Attempting to fetch sbt

Launching sbt from build/sbt-launch-1.4.1.jar

[info] [launcher] getting org.scala-sbt sbt 1.4.1 (this may take some time)...

downloading https://repo1.maven.org/maven2/org/scala-sbt/sbt/1.4.1/sbt-1.4.1.jar ...

```

Closes #30137 from dongjoon-hyun/SBT_1.4.1.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Liang-Chi Hsieh <viirya@gmail.com>

### What changes were proposed in this pull request?

This PR proposes to remove an obsolete comment about adding the `sbt-dependency-graph` back in SBT plugins.

### Why are the changes needed?

sbt-dependency-graph is now built-in from SBT 1.4.0, see https://github.com/sbt/sbt/releases/tag/v1.4.0.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Manually tested `./build/sbt dependencyTree`.

Closes #30085 from HyukjinKwon/SPARK-33109.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Upgrade sbt to release 1.4.0.

### Why are the changes needed?

Bring in the built-in `dependencyTree` to replace the removed `sbt-dependency-graph` plugin, which doesn't work with the sbt version used in the build.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Should pass all the tests.

Closes #30070 from gemelen/feature/sbt-1.4.

Authored-by: Denis Pyshev <git@gemelen.net>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The current solution in the build file for failing the build on compilation warnings (excluding deprecation warnings) is not portable beyond SBT 1.3.13: the build import fails with a compilation error under SBT 1.4. It can be replaced with a more robust and maintainable approach, especially since Scala 2.13.2 provides similar built-in functionality.

Additionally, warnings were fixed so that the build passes, with as few changes as possible:

- warnings in 2.12 compilation are fixed in code,

- warnings in 2.13 compilation are covered by configuration, to be addressed separately.

### Why are the changes needed?

Unblocks the upgrade to SBT versions after 1.3.13.

Enhances build file maintainability.

Allows fine-tuning of the warnings configuration for Scala 2.13 compilation.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

`build/sbt`'s `compile` and `Test/compile` for both Scala 2.12 and 2.13 profiles.

Closes #29995 from gemelen/feature/warnings-reporter.

Authored-by: Denis Pyshev <git@gemelen.net>

Signed-off-by: Sean Owen <srowen@gmail.com>

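### What changes were proposed in this pull request?

This PR aims to rename hdpVersion to versionValue.

### Why are the changes needed?

Use the general variable name.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CI.

Closes #30008 from williamhyun/sbt.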

Authored-by: William Hyun <williamhyun3@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Rename the manually added resolver for the local Ivy repo.

Create a configuration to publish to the local Ivy repo, similar to the Maven one.

Use `publishLocal` to publish to both the local Maven and Ivy repos instead of the custom task `localPublish` (renamed from `publish-local` of sbt 0.13.x).

### Why are the changes needed?

There are two resolvers (bootResolvers's "local" and manually added "local") that point to the same local Ivy repo, but have different configurations, which led to excessive warnings in logs and, potentially, resolution issues.

This changeset fixes that case, which shows up in the sbt output as:

```
[warn] Multiple resolvers having different access mechanism configured with same name 'local'. To avoid conflict, Remove duplicate project resolvers (`resolvers`) or rename publishing resolver (`publishTo`).
```

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Executed `build/sbt`'s `publishLocal` task on individual module and on root project.

Closes #30006 from gemelen/feature/local-resolvers.

Authored-by: Denis Pyshev <git@gemelen.net>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR aims to remove `sbt-dependency-graph` SBT plugin.

### Why are the changes needed?

`sbt-dependency-graph` officially doesn't support SBT 1.3.x and it's broken due to `NoSuchMethodError`. This cannot be fixed on the `sbt-dependency-graph` side for SBT 1.3.x.

- https://github.com/sbt/sbt-dependency-graph

> Note: Under sbt >= 1.3.x some features might currently not work as expected or not at all (like dependencyLicenses).

```

$ build/sbt dependencyTree

Launching sbt from build/sbt-launch-1.3.13.jar

[info] welcome to sbt 1.3.13 (AdoptOpenJDK Java 1.8.0_252)

...

[error] java.lang.NoSuchMethodError: sbt.internal.LibraryManagement$.cachedUpdate(Lsbt/librarymanagement/DependencyResolution;Lsbt/librarymanagement/ModuleDescriptor;Lsbt/util/CacheStoreFactory;Ljava/lang/String;Lsbt/librarymanagement/UpdateConfiguration;Lscala/Function1;ZZZLsbt/librarymanagement/UnresolvedWarningConfiguration;Lsbt/librarymanagement/EvictionWarningOptions;ZLsbt/internal/librarymanagement/CompatibilityWarningOptions;Lsbt/util/Logger;)Lsbt/librarymanagement/UpdateReport;

```

**ALTERNATIVES**

- One alternative is `coursier`, but it requires `coursier-based sbt launcher` which is more intrusive.

- https://get-coursier.io/docs/sbt-coursier.html#sbt-13x

> you'll have to use the coursier-based sbt launcher, via its custom sbt-extras launcher for example.

- Another alternative is moving to `SBT 1.4.0`, which includes `sbt-dependency-graph` as a built-in, but it's still new and would require many changes.

So, this PR simply aims to remove the broken plugin.

### Does this PR introduce _any_ user-facing change?

No. This is a dev-only change.

### How was this patch tested?

Manual.

```

$ build/sbt dependencyTree

...

[error] Not a valid command: dependencyTree

[error] Not a valid project ID: dependencyTree

[error] Not a valid key: dependencyTree (similar: dependencyOverrides, sbtDependency, dependencyResolution)

[error] dependencyTree

[error] ^

```

Closes #29997 from dongjoon-hyun/remove_depedencyTree.

Lead-authored-by: Dongjoon Hyun <dongjoon@apache.org>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Migrate the sbt-launcher download URL to the one for sbt 1.x.

Update plugin versions where required by the sbt update.

Change the sbt version to the latest release at the moment, 1.3.13.

Adjust build settings according to the plugin and sbt changes.

### Why are the changes needed?

Migration to sbt 1.x:

1. enhances the developer experience

2. updates build plugins to bring in their new features and bug fixes

3. enhances build performance on sbt side

4. eases movement to Scala 3 / dotty

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

All existing tests passed, both on Jenkins and via GitHub Actions. The Scala 2.13 profile was also tested manually.

Closes #29286 from gemelen/feature/sbt-1.x.

Authored-by: Denis Pyshev <git@gemelen.net>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR fixes `SparkBuild.scala` to recognize build settings for Scala 2.13.

In `SparkBuild.scala`, a variable `scalaBinaryVersion` is hardcoded as `2.12`.

As a result, the environment variable `SPARK_SCALA_VERSION` is also set to `2.12`.

This issue causes some test suites (e.g. `SparkSubmitSuite`) to fail.

```

===== TEST OUTPUT FOR o.a.s.deploy.SparkSubmitSuite: 'user classpath first in driver' =====

20/10/02 08:55:30.234 redirect stderr for command /home/kou/work/oss/spark-scala-2.13/bin/spark-submit INFO Utils: Error: Could not find or load main class org.apache.spark.launcher.Main

20/10/02 08:55:30.235 redirect stderr for command /home/kou/work/oss/spark-scala-2.13/bin/spark-submit INFO Utils: /home/kou/work/oss/spark-scala-2.13/bin/spark-class: line 96: CMD: bad array subscript

```

The cause of this error is that the environment variables `SPARK_JARS_DIR` and `LAUNCH_CLASSPATH` are defined in `bin/spark-class` as follows.

```

SPARK_JARS_DIR="${SPARK_HOME}/assembly/target/scala-$SPARK_SCALA_VERSION/jars"

LAUNCH_CLASSPATH="${SPARK_HOME}/launcher/target/scala-$SPARK_SCALA_VERSION/classes:$LAUNCH_CLASSPATH"

```
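To illustrate why a stale `2.12` value breaks a Scala 2.13 build, here is a minimal Python sketch that mirrors the two path templates above. The function name `launch_paths` and the example Spark home `/opt/spark` are assumptions made for illustration; the real logic lives in the shell script.

```python
def launch_paths(spark_home, scala_version):
    """Build the two directories that bin/spark-class derives from SPARK_SCALA_VERSION."""
    jars_dir = f"{spark_home}/assembly/target/scala-{scala_version}/jars"
    launcher_classes = f"{spark_home}/launcher/target/scala-{scala_version}/classes"
    return jars_dir, launcher_classes


# With the variable stuck at 2.12, both paths point at directories
# that a Scala 2.13 build does not populate.
stale = launch_paths("/opt/spark", "2.12")
fresh = launch_paths("/opt/spark", "2.13")
```

The two results differ only in the `scala-<version>` path segment, which is why the launcher class cannot be found when the version is wrong.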

### Why are the changes needed?

To build for Scala 2.13 successfully.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Tests for `core` module finish successfully.

```

build/sbt -Pscala-2.13 clean "core/test"

```

Closes #29927 from sarutak/fix-sparkbuild-for-scala-2.13.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR refactors the way we propagate the options from the `SparkSession.Builder` to the `SessionState`. This is currently done via a mutable map inside the SparkSession. These settings are then applied **after** the `SessionState` has been constructed. This is a bit confusing when you expect something to be set when constructing the `SessionState`. This PR passes the options as a constructor parameter to the `SessionStateBuilder`, which sets the options when the configuration is created.
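The difference between the two flows can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class below is a hypothetical stand-in, not Spark's `SessionStateBuilder`, and the key `my.rule.enabled` is invented for the example.

```python
class SessionStateBuilder:
    """Hypothetical stand-in: builds a conf dict and records what a rule saw at build time."""

    def __init__(self, defaults, options=None):
        self.defaults = dict(defaults)
        self.options = dict(options or {})

    def build(self):
        conf = dict(self.defaults)
        conf.update(self.options)  # options are visible while the state is being built
        # Anything constructed here (e.g. a planner rule) reads the conf at this point.
        seen_by_rule = conf.get("my.rule.enabled")
        return conf, seen_by_rule


# Old flow: build first, then apply the options, so the rule saw the default.
conf_old, seen_old = SessionStateBuilder({"my.rule.enabled": "false"}).build()
conf_old.update({"my.rule.enabled": "true"})

# New flow: pass the options to the builder, so the rule sees the user's value.
conf_new, seen_new = SessionStateBuilder(
    {"my.rule.enabled": "false"}, {"my.rule.enabled": "true"}
).build()
```

Both flows end with the same final configuration, but only the second one exposes the user's value to code that runs during construction.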

### Why are the changes needed?

It makes it easier to reason about the configurations set in a SessionState than before. We recently had an incident where someone was using `SparkSessionExtensions` to create a planner rule that relied on a conf to be set. While this is in itself probably incorrect usage, it still illustrated this somewhat funky behavior.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #29752 from hvanhovell/SPARK-32879.

Authored-by: herman <herman@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR changes SparkBuild.scala to ignore deprecation warnings for build with Scala 2.13 and sbt.

Actually, deprecation warnings are already ignored for Scala 2.12 but string matching logic for deprecation warnings should be changed for Scala 2.13.

Currently, a warning message is ignored if it contains `is deprecated`, but some warnings contain `are deprecated` or `will be deprecated` instead.

```

[error] [warn] /home/kou/work/oss/spark-scala-2.13/core/src/main/scala/org/apache/spark/deploy/SparkSubmit.scala:656: multiarg infix syntax looks like a tuple and will be deprecated

[error] [warn] if (opt.clOption != null) { childArgs += (opt.clOption, opt.value) }

```

```

[error] [warn] /home/kou/work/oss/spark-scala-2.13/core/src/main/scala/org/apache/spark/rdd/SequenceFileRDDFunctions.scala:35: view bounds are deprecated; use an implicit parameter instead.

[error] example: instead of `def f[A <% Int](a: A)` use `def f[A](a: A)(implicit ev: A => Int)`

[error] [warn] class SequenceFileRDDFunctions[K <% Writable: ClassTag, V <% Writable : ClassTag](

```
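The broadened matching can be sketched as follows. This is a Python illustration of the idea only, not the code in `SparkBuild.scala`; the helper name `is_deprecation_warning` and the exact regular expression are assumptions.

```python
import re

# The three phrasings come from the warnings quoted above; the pattern itself is an assumption.
DEPRECATION_PATTERN = re.compile(r"\b(is|are|will be) deprecated\b")


def is_deprecation_warning(message):
    """Return True if a compiler warning should be treated as a non-fatal deprecation notice."""
    return DEPRECATION_PATTERN.search(message) is not None
```

Matching on a single phrase such as `is deprecated` would miss the second and third variants, which is what made those warnings fatal under Scala 2.13.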

### Why are the changes needed?

To enable building Spark with Scala 2.13 and sbt.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Built with the following command and confirmed that deprecation warnings are not treated as fatal (the build itself doesn't pass due to another problem).

`build/sbt -Pscala-2.13 package`

Closes #29741 from sarutak/scala-2.13-deprecated-warning.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

If graceful decommissioning is enabled, Spark's dynamic scaling uses this instead of directly killing executors.

### Why are the changes needed?

When scaling down Spark we should avoid triggering recomputes as much as possible.

### Does this PR introduce _any_ user-facing change?

Hopefully users' jobs run faster or at the same speed. It also enables experimental shuffle-service-free dynamic scaling when graceful decommissioning is enabled (using the same code as the shuffle tracking dynamic scaling).

### How was this patch tested?

For now I've extended the ExecutorAllocationManagerSuite for both core & streaming.

Closes #29367 from holdenk/SPARK-31198-use-graceful-decommissioning-as-part-of-dynamic-scaling.

Lead-authored-by: Holden Karau <hkarau@apple.com>

Co-authored-by: Holden Karau <holden@pigscanfly.ca>

Signed-off-by: Holden Karau <hkarau@apple.com>

### What changes were proposed in this pull request?

This PR proposes to enable `crossPaths` again for now, to match the build as it was.

Based on my observation, it still nondeterministically fails to run JUnit tests, and this PR basically reverts the partial fix from https://github.com/apache/spark/pull/29057.

See also https://github.com/apache/spark/pull/29205 for the full context.

### Why are the changes needed?

To prevent the side effects from crossPaths such as SPARK_PREPEND_CLASSES or tests that run conditionally if the test classes are present in PySpark.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

Manually tested:

```bash

build/sbt -Phadoop-2.7 -Phive -Phive-2.3 -Phive-thriftserver -DskipTests clean test:package

./python/run-tests --python-executable=python3 --testname="pyspark.sql.tests.test_dataframe QueryExecutionListenerTests"

```

Closes #29218 from HyukjinKwon/SPARK-32408-1.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This reverts commit 026b0b926d.

### Why are the changes needed?

As HyukjinKwon pointed out in https://github.com/apache/spark/pull/29133#issuecomment-663339240, there is no JUnit test report after https://github.com/apache/spark/pull/29133. Let's revert https://github.com/apache/spark/pull/29133 for now and find a better solution to improve the log output later.

### Does this PR introduce _any_ user-facing change?

No, dev-only.

### How was this patch tested?

GitHub Actions build

Closes #29219 from gengliangwang/revertErrorOnly.

Authored-by: Gengliang Wang <gengliang.wang@databricks.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

Add a new parallel test group for all `hive.execution` suites.

### Why are the changes needed?

Based on the tests, it can reduce the Jenkins testing time.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests.

Closes #28977 from xuanyuanking/parallelTest.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR will remove references to these "blacklist" and "whitelist" terms besides the blacklisting feature as a whole, which can be handled in a separate JIRA/PR.

This touches quite a few files, but the changes are straightforward (variable/method/etc. name changes) and mostly self-contained.

### Why are the changes needed?

As per discussion on the Spark dev list, it will be beneficial to remove references to problematic language that can alienate potential community members. One such reference is "blacklist" and "whitelist". While it seems to me that there is some valid debate as to whether these terms have racist origins, the cultural connotations are inescapable in today's world.

### Does this PR introduce _any_ user-facing change?

In the test file `HiveQueryFileTest`, a developer has the ability to specify the system property `spark.hive.whitelist` to specify a list of Hive query files that should be tested. This system property has been renamed to `spark.hive.includelist`. The old property has been kept for compatibility, but will log a warning if used. I am open to feedback from others on whether keeping a deprecated property here is unnecessary given that this is just for developers running tests.
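The compatibility behavior described above (prefer the new property, fall back to the old one with a warning) can be sketched like this. The sketch is in Python rather than the test's own language, the function name `include_list` is hypothetical, and treating the value as a comma-separated list is an assumption.

```python
import logging

logger = logging.getLogger("hive_query_file_test")

NEW_KEY = "spark.hive.includelist"
OLD_KEY = "spark.hive.whitelist"


def include_list(properties):
    """Read the include list, preferring the new key and warning when the old one is used."""
    if NEW_KEY in properties:
        raw = properties[NEW_KEY]
    elif OLD_KEY in properties:
        logger.warning("%s is deprecated; use %s instead", OLD_KEY, NEW_KEY)
        raw = properties[OLD_KEY]
    else:
        return []
    # Assumption: the value is a comma-separated list of query file names.
    return [name.strip() for name in raw.split(",") if name.strip()]
```

When both properties are set, the new one wins silently, so existing developer setups keep working while new ones avoid the warning.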

### How was this patch tested?

Existing tests should be suitable since no behavior changes are expected as a result of this PR.

Closes #28874 from xkrogen/xkrogen-SPARK-32036-rename-blacklists.

Authored-by: Erik Krogen <ekrogen@linkedin.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

This PR aims to run the Spark tests in GitHub Actions.

To briefly explain the main idea:

- Reuse `dev/run-tests.py` with SBT build

- Reuse the modules in `dev/sparktestsupport/modules.py` to test each module

- Pass the modules to test into `dev/run-tests.py` directly via `TEST_ONLY_MODULES` environment variable. For example, `pyspark-sql,core,sql,hive`.

- `dev/run-tests.py` _does not_ take the dependent modules into account but solely the specified modules to test.
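As a rough illustration of the last two points, a module selection driven only by `TEST_ONLY_MODULES` could look like the following. This is a sketch and not the code in `dev/run-tests.py`; the function name `modules_to_test` and the `known_modules` argument are hypothetical.

```python
import os


def modules_to_test(known_modules, environ=None):
    """Return exactly the modules named in TEST_ONLY_MODULES, without adding dependents."""
    environ = os.environ if environ is None else environ
    raw = environ.get("TEST_ONLY_MODULES", "")
    requested = [name.strip() for name in raw.split(",") if name.strip()]
    unknown = [name for name in requested if name not in known_modules]
    if unknown:
        raise ValueError(f"Unknown modules: {unknown}")
    return requested
```

For example, with `TEST_ONLY_MODULES=pyspark-sql,core,sql,hive` the function returns those four names in order and nothing else.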

Another thing to note might be the `SlowHiveTest` annotation. Running the tests in the Hive modules takes too long, so the slow tests are extracted and run as a separate job. They were selected based on the actual elapsed time in Jenkins.

So, Hive tests are separated into two jobs. One runs the slow test cases, and the other runs the remaining test cases.

_Note that_ the current GitHub Actions build virtually copies what the default PR builder on Jenkins does (without other profiles such as JDK 11, Hadoop 2, etc.). The only exception is Kinesis https://github.com/apache/spark/pull/29057/files#diff-04eb107ee163a50b61281ca08f4e4c7bR23

### Why are the changes needed?

Last week and onwards, the Jenkins machines became very unstable for many reasons:

- Apparently, the machines became extremely slow. Almost all tests can't pass.

- One machine (worker 4) started to have the corrupt `.m2` which fails the build.

- The documentation build fails from time to time for an unknown reason, specifically on the Jenkins machines. This is disabled for now at https://github.com/apache/spark/pull/29017.

- Almost all PRs are basically blocked by this instability currently.

The advantages of using GitHub Actions:

- To avoid depending on the few people who can access the cluster.

- To reduce the elapsed time of the build: we could split the tests (e.g., SQL, ML, CORE) and run them in parallel, so the total build time will be significantly reduced.

- To control the environment more flexibly.

- Other contributors can test and propose to fix Github Actions configurations so we can distribute this build management cost.

Note that:

- The current build in Jenkins takes _more than 7 hours_. With GitHub Actions it takes _less than 2 hours_.

- We can now control the environments especially for Python easily.

- The tests and the build look more stable than on Jenkins.

### Does this PR introduce _any_ user-facing change?

No, dev-only change.

### How was this patch tested?

Tested at https://github.com/HyukjinKwon/spark/pull/4

Closes #29057 from HyukjinKwon/migrate-to-github-actions.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Force the initialization of Hadoop VersionInfo in HiveExternalCatalog to make sure Hive can get the Hadoop version when using the isolated classloader.

### Why are the changes needed?

This is a regression in Spark 3.0.0 because we switched the default Hive execution version from 1.2.1 to 2.3.7.

Spark allows the user to set `spark.sql.hive.metastore.jars` to specify jars to access Hive Metastore. These jars are loaded by the isolated classloader. Because we also share Hadoop classes with the isolated classloader, the user doesn't need to add Hadoop jars to `spark.sql.hive.metastore.jars`, which means when we are using the isolated classloader, hadoop-common jar is not available in this case. If Hadoop VersionInfo is not initialized before we switch to the isolated classloader, and we try to initialize it using the isolated classloader (the current thread context classloader), it will fail and report `Unknown` which causes Hive to throw the following exception:

```

java.lang.RuntimeException: Illegal Hadoop Version: Unknown (expected A.B.* format)

at org.apache.hadoop.hive.shims.ShimLoader.getMajorVersion(ShimLoader.java:147)

at org.apache.hadoop.hive.shims.ShimLoader.loadShims(ShimLoader.java:122)

at org.apache.hadoop.hive.shims.ShimLoader.getHadoopShims(ShimLoader.java:88)

at org.apache.hadoop.hive.metastore.ObjectStore.getDataSourceProps(ObjectStore.java:377)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:268)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:76)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:136)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:58)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:517)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:482)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:544)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:370)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:5762)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:219)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:67)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1548)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3080)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3108)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3349)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:217)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:204)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:331)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:292)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:262)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:247)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:543)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:511)

at org.apache.spark.sql.hive.client.HiveClientImpl.newState(HiveClientImpl.scala:175)

at org.apache.spark.sql.hive.client.HiveClientImpl.<init>(HiveClientImpl.scala:128)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.spark.sql.hive.client.IsolatedClientLoader.createClient(IsolatedClientLoader.scala:301)

at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:431)

at org.apache.spark.sql.hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:324)

at org.apache.spark.sql.hive.HiveExternalCatalog.client$lzycompute(HiveExternalCatalog.scala:72)

at org.apache.spark.sql.hive.HiveExternalCatalog.client(HiveExternalCatalog.scala:71)

at org.apache.spark.sql.hive.client.HadoopVersionInfoSuite.$anonfun$new$1(HadoopVersionInfoSuite.scala:63)

at org.scalatest.OutcomeOf.outcomeOf(OutcomeOf.scala:85)

at org.scalatest.OutcomeOf.outcomeOf$(OutcomeOf.scala:83)

```

Technically, this is indeed an issue in Hadoop VersionInfo, which has been fixed: https://issues.apache.org/jira/browse/HADOOP-14067. But since we are still supporting old Hadoop versions, we should fix it.

Why does this issue start to happen in Spark 3.0.0?

In Spark 2.4.x, we use Hive 1.2.1 by default. It triggers `VersionInfo` initialization in the static code of the `Hive` class. This happens when we load the `HiveClientImpl` class because the `HiveClientImpl.client` method refers to the `Hive` class. At this moment, the thread context classloader is not the isolated classloader, so it can access the hadoop-common jar on the classpath and initialize it correctly.

In Spark 3.0.0, we use Hive 2.3.7. The static codes of `Hive` class are not accessing `VersionInfo` because of the change in https://issues.apache.org/jira/browse/HIVE-11657. Instead, accessing `VersionInfo` happens when creating a `Hive` object (See the above stack trace). This happens here https://github.com/apache/spark/blob/v3.0.0/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala#L260. But we switch to the isolated classloader before calling `HiveClientImpl.client` (See https://github.com/apache/spark/blob/v3.0.0/sql/hive/src/main/scala/org/apache/spark/sql/hive/client/HiveClientImpl.scala#L283). This is exactly what I mentioned above: `If Hadoop VersionInfo is not initialized before we switch to the isolated classloader, and we try to initialize it using the isolated classloader (the current thread context classloader), it will fail`
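The ordering problem can be mimicked with a small Python analogy. It is a loose sketch under heavy assumptions: classloaders are modeled as plain dictionaries, and `LazyVersionInfo` is a hypothetical class that caches whatever it resolves on first access, which is the only property of `VersionInfo` that matters here.

```python
class LazyVersionInfo:
    """Hypothetical lazy holder: resolves the version from whichever loader is current on first use."""

    def __init__(self):
        self._version = None

    def get(self, current_loader):
        if self._version is None:
            # The first access decides the value for good, like a static initializer.
            self._version = current_loader.get("hadoop.version", "Unknown")
        return self._version


shared_loader = {"hadoop.version": "2.7.4"}  # can see hadoop-common
isolated_loader = {}                          # cannot see hadoop-common

# Without forcing initialization, the first access happens under the isolated loader.
broken = LazyVersionInfo()
broken_result = broken.get(isolated_loader)

# Forcing initialization before the switch pins the correct value.
fixed = LazyVersionInfo()
fixed.get(shared_loader)
fixed_result = fixed.get(isolated_loader)
```

The fix in this PR corresponds to the extra early `get` call: touching the value while the shared loader is still current.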

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

The new regression test added in this PR.

Note that the new UT doesn't fail with the default profiles (-Phadoop-3.2) because the issue is already fixed in Hadoop 3.1. Please use the following to verify this.

```

build/sbt -Phadoop-2.7 -Phive "hive/testOnly *.HadoopVersionInfoSuite"

```

Closes #29059 from zsxwing/SPARK-32256.

Authored-by: Shixiong Zhu <zsxwing@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

#28671 introduced a change where the order in which CANCELED state for SparkExecuteStatementOperation is set was changed. Before setting the state to CANCELED, `cleanup()` was called which kills the jobs, causing an exception to be thrown inside `execute()`. This causes the state to transiently become ERROR before being set to CANCELED. This PR fixes the order.
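The effect of the ordering can be sketched with a toy state holder. This is a Python illustration only: the class `Operation` is hypothetical, and the assumption that the failure handler skips `ERROR` once the state is already `CANCELED` is mine, made to show why setting the state first avoids the transient `ERROR`.

```python
class Operation:
    """Hypothetical operation that records every state it passes through."""

    def __init__(self):
        self.history = ["RUNNING"]

    @property
    def state(self):
        return self.history[-1]

    def _set(self, new_state):
        self.history.append(new_state)

    def cleanup(self):
        # Killing the jobs makes the running statement fail; the failure handler
        # reports ERROR unless the operation is already marked as CANCELED.
        if self.state != "CANCELED":
            self._set("ERROR")

    def cancel_cleanup_first(self):
        # Problematic order: cleanup runs while the state is still RUNNING.
        self.cleanup()
        self._set("CANCELED")

    def cancel_state_first(self):
        # Fixed order: the state is CANCELED before the jobs are killed.
        self._set("CANCELED")
        self.cleanup()
```

Calling `cancel_cleanup_first()` yields the history `RUNNING, ERROR, CANCELED`, while `cancel_state_first()` yields `RUNNING, CANCELED`.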

### Why are the changes needed?

Bug: wrong operation state is set.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Unit test in SparkExecuteStatementOperationSuite.scala.

Closes #28912 from alismess-db/execute-statement-operation-cleanup-order.

Authored-by: Ali Smesseim <ali.smesseim@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Add a generic ClassificationSummary trait

### Why are the changes needed?

Add a generic ClassificationSummary trait so all the classification models can use it to implement summary.

Currently in classification, we only have summary implemented in ```LogisticRegression```. There are requests to implement summary for ```LinearSVCModel``` in https://issues.apache.org/jira/browse/SPARK-20249 and to implement summary for ```RandomForestClassificationModel``` in https://issues.apache.org/jira/browse/SPARK-23631. If we add a generic ClassificationSummary trait and put all the common code there, we can easily add summary to ```LinearSVCModel``` and ```RandomForestClassificationModel```, and also add summary to all the other classification models.

We can use the same approach to add a generic RegressionSummary trait to regression package and implement summary for all the regression models.
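The idea of putting the common code in one shared trait can be sketched as a Python base class. All names here are hypothetical and the metrics are simplified; the point is only that a model-specific summary needs to supply its (prediction, label) pairs and inherits the rest.

```python
class ClassificationSummary:
    """Hypothetical shared base: subclasses only supply (prediction, label) pairs."""

    def pairs(self):
        raise NotImplementedError

    def accuracy(self):
        pairs = list(self.pairs())
        if not pairs:
            return 0.0
        return sum(1 for prediction, label in pairs if prediction == label) / len(pairs)

    def labels(self):
        return sorted({label for _, label in self.pairs()})


class ListBackedSummary(ClassificationSummary):
    """Minimal concrete summary backed by an in-memory list."""

    def __init__(self, pairs):
        self._pairs = list(pairs)

    def pairs(self):
        return self._pairs
```

A summary for another model would subclass `ClassificationSummary` in the same way, without reimplementing `accuracy` or `labels`.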

### Does this PR introduce _any_ user-facing change?

### How was this patch tested?

existing tests

Closes #28710 from huaxingao/summary_trait.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR brings https://github.com/apache/spark/pull/28751 back

- It was once reverted by 4a25200 because of an unavoidable Maven test failure

- See related updates in this followup a0187cd6b5

- It was then reverted again because of the flakiness of the added unit tests

- In this PR, the flakiness turned out to be caused by the Hive metastore connection that the SparkSQLCLIService tries to create, which is not necessary at all. This metastore client only points to a dummy metastore server.

- Also, add some cleanup to the SharedThriftServer trait, both before and after, to prevent its configurations from being polluted or polluting others

### Why are the changes needed?

fix flaky test

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

passing sbt and maven tests

Closes #28835 from yaooqinn/SPARK-31926-F.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR brings back 02f32cfae4, which was reverted by 4a25200cd7 because of a Maven test failure

diffs newly made:

1. add a missing log4j file to test resources

2. Call `SessionState.detachSession()` to clean the thread local one in `afterAll`.

3. Do not use dedicated JVMs for the sbt test runner either

### Why are the changes needed?

fix the maven test

### Does this PR introduce _any_ user-facing change?

no

### How was this patch tested?

add new tests

Closes #28797 from yaooqinn/SPARK-31926-NEW.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Please refer to https://issues.apache.org/jira/browse/SPARK-24634 for the rationale behind this issue.

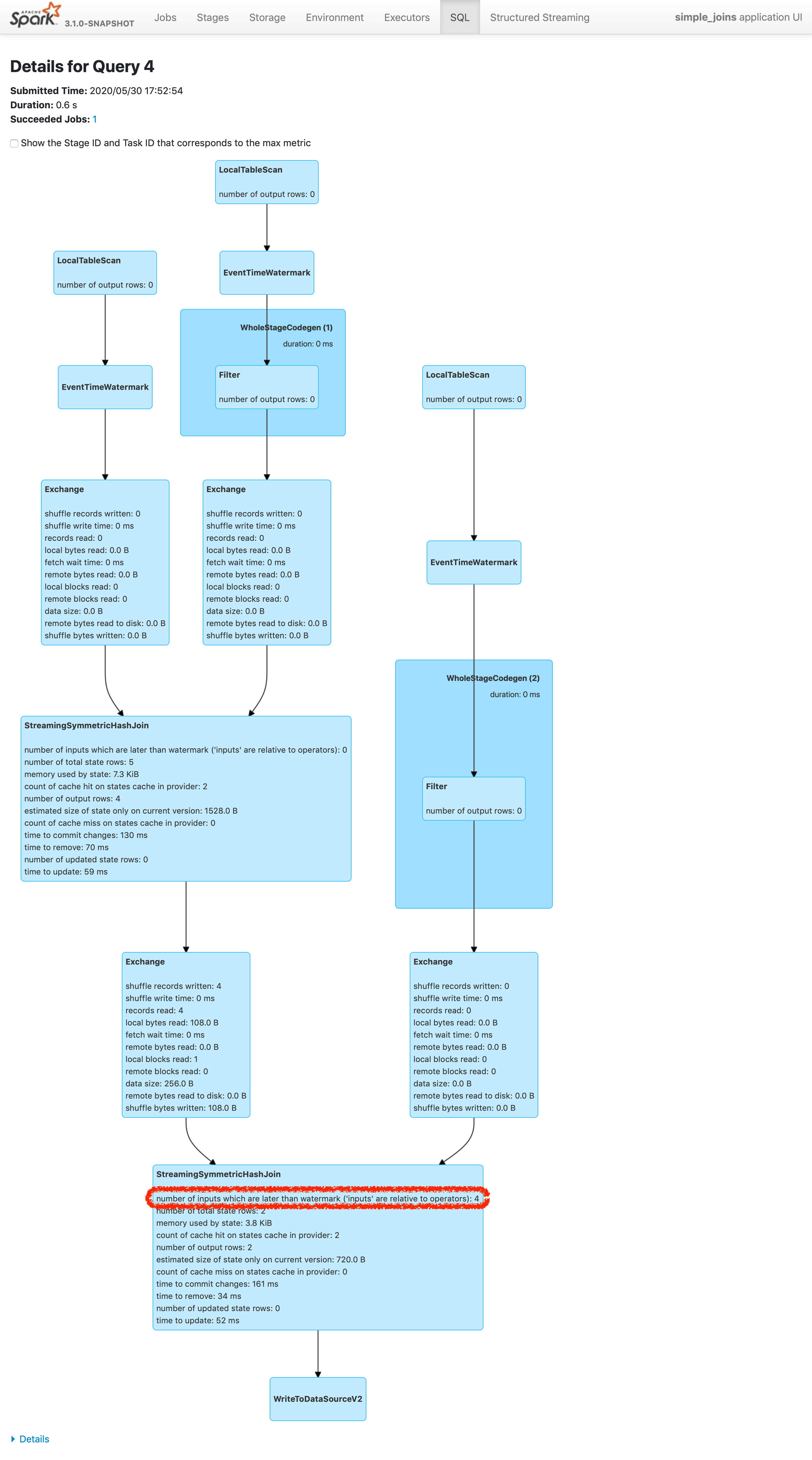

This patch adds a new metric that counts the number of inputs that arrive later than the watermark plus the allowed delay. To keep the change simple, this patch doesn't count the exact number of input rows that are later than the watermark plus the allowed delay. Instead, it counts the inputs that are dropped by the operator's logic. The difference between the two shows up in streaming aggregation: to optimize the calculation, streaming aggregation "pre-aggregates" the input rows and later checks lateness against the "pre-aggregated" inputs, so the number might be smaller.
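The gap between raw late rows and dropped inputs can be shown with a small Python sketch. It is an illustration under assumptions, not Spark's implementation: `count_dropped` is a hypothetical helper, `threshold` stands for the watermark with the allowed delay already applied, and pre-aggregation is modeled as merging rows that share the same key and event time.

```python
def count_dropped(rows, threshold, pre_aggregate):
    """Count the inputs an operator drops as late (event time below the threshold).

    rows: list of (key, event_time) tuples.
    pre_aggregate: if True, rows with the same (key, event_time) are merged first,
    so several late rows can collapse into a single dropped input.
    """
    if pre_aggregate:
        inputs = list(dict.fromkeys(rows))  # keep one entry per distinct (key, event_time)
    else:
        inputs = list(rows)
    return sum(1 for _, event_time in inputs if event_time < threshold)


rows = [("a", 5), ("a", 5), ("b", 7), ("a", 20)]
exact_late_rows = count_dropped(rows, threshold=10, pre_aggregate=False)
dropped_after_pre_aggregation = count_dropped(rows, threshold=10, pre_aggregate=True)
```

Here three raw rows are late, but only two pre-aggregated inputs are dropped, which is the kind of undercount described above.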

The new metric will be provided via two places:

1. On Spark UI: check the metrics in stateful operator nodes in query execution details page in SQL tab

2. On Streaming Query Listener: check "numLateInputs" in "stateOperators" in QueryProgressEvent.

### Why are the changes needed?

Dropping late inputs means that end users might not get the expected outputs. End users may be aware of this and tolerate the result (as that's what allowed lateness is for), but they should be able to observe whether the current value of allowed lateness drops inputs or not so that they can adjust the value.

Also, whenever they have multiple stateful operators in a single query, if Spark drops late inputs "between" these operators, it becomes a "correctness" issue. Spark should disallow such a possibility, but given that we already provide the flexibility, we should at least provide a way to observe the correctness issue so that users can decide whether they should correct their query or not.

### Does this PR introduce _any_ user-facing change?

Yes. End users will be able to retrieve the information of late inputs via two ways:

1. SQL tab in Spark UI

2. Streaming Query Listener

### How was this patch tested?

New UTs added & existing UTs are modified to reflect the change.

And ran manual test reproducing SPARK-28094.

I've picked the specific case on "B outer C outer D" which is enough to represent the "intermediate late row" issue due to global watermark.

https://gist.github.com/jammann/b58bfbe0f4374b89ecea63c1e32c8f17

Spark logs a warning message for the query, which means SPARK-28074 is working correctly:

```

20/05/30 17:52:47 WARN UnsupportedOperationChecker: Detected pattern of possible 'correctness' issue due to global watermark. The query contains stateful operation which can emit rows older than the current watermark plus allowed late record delay, which are "late rows" in downstream stateful operations and these rows can be discarded. Please refer the programming guide doc for more details.;

Join LeftOuter, ((D_FK#28 = D_ID#87) AND (B_LAST_MOD#26-T30000ms = D_LAST_MOD#88-T30000ms))

:- Join LeftOuter, ((C_FK#27 = C_ID#58) AND (B_LAST_MOD#26-T30000ms = C_LAST_MOD#59-T30000ms))

: :- EventTimeWatermark B_LAST_MOD#26: timestamp, 30 seconds

: : +- Project [v#23.B_ID AS B_ID#25, v#23.B_LAST_MOD AS B_LAST_MOD#26, v#23.C_FK AS C_FK#27, v#23.D_FK AS D_FK#28]

: : +- Project [from_json(StructField(B_ID,StringType,false), StructField(B_LAST_MOD,TimestampType,false), StructField(C_FK,StringType,true), StructField(D_FK,StringType,true), value#21, Some(UTC)) AS v#23]

: : +- Project [cast(value#8 as string) AS value#21]

: : +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider3a7fd18c, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable396d2958, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee61a, [key#7, value#8, topic#9, partition#10, offset#11L, timestamp#12, timestampType#13], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> B, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#0, value#1, topic#2, partition#3, offset#4L, timestamp#5, timestampType#6]

: +- EventTimeWatermark C_LAST_MOD#59: timestamp, 30 seconds

: +- Project [v#56.C_ID AS C_ID#58, v#56.C_LAST_MOD AS C_LAST_MOD#59]

: +- Project [from_json(StructField(C_ID,StringType,false), StructField(C_LAST_MOD,TimestampType,false), value#54, Some(UTC)) AS v#56]

: +- Project [cast(value#41 as string) AS value#54]

: +- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider3f507373, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable7b6736a4, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee61b, [key#40, value#41, topic#42, partition#43, offset#44L, timestamp#45, timestampType#46], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> C, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#33, value#34, topic#35, partition#36, offset#37L, timestamp#38, timestampType#39]

+- EventTimeWatermark D_LAST_MOD#88: timestamp, 30 seconds

+- Project [v#85.D_ID AS D_ID#87, v#85.D_LAST_MOD AS D_LAST_MOD#88]

+- Project [from_json(StructField(D_ID,StringType,false), StructField(D_LAST_MOD,TimestampType,false), value#83, Some(UTC)) AS v#85]

+- Project [cast(value#70 as string) AS value#83]

+- StreamingRelationV2 org.apache.spark.sql.kafka010.KafkaSourceProvider2b90e779, kafka, org.apache.spark.sql.kafka010.KafkaSourceProvider$KafkaTable36f8cd29, org.apache.spark.sql.util.CaseInsensitiveStringMapa51ee620, [key#69, value#70, topic#71, partition#72, offset#73L, timestamp#74, timestampType#75], StreamingRelation DataSource(org.apache.spark.sql.SparkSessiond221af8,kafka,List(),None,List(),None,Map(inferSchema -> true, startingOffsets -> earliest, subscribe -> D, kafka.bootstrap.servers -> localhost:9092),None), kafka, [key#62, value#63, topic#64, partition#65, offset#66L, timestamp#67, timestampType#68]

```

and we can find the late inputs from the batch 4 as follows:

which shows that intermediate inputs are being lost, resulting in a correctness issue.

Closes #28607 from HeartSaVioR/SPARK-24634-v3.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR proposes to make the Guava version configurable from the command line for sbt.

### Why are the changes needed?

#28455 added the configurability for Maven but not for sbt.

sbt is usually faster than Maven so it's useful for developers.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I confirmed the guava version is changed with the following commands.

```

$ build/sbt "inspect tree clean" | grep guava

[info] +-spark/*:dependencyOverrides = Set(com.google.guava:guava:14.0.1, xerces:xercesImpl:2.12.0, jline:jline:2.14.6, org.apache.avro:avro:1.8.2)

```

```

$ build/sbt -Dguava.version=25.0-jre "inspect tree clean" | grep guava

[info] +-spark/*:dependencyOverrides = Set(com.google.guava:guava:25.0-jre, xerces:xercesImpl:2.12.0, jline:jline:2.14.6, org.apache.avro:avro:1.8.2)

```
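The behavior shown by the two commands amounts to "use the `guava.version` property if given, otherwise the default". A minimal Python sketch of that rule, with the hypothetical helper `guava_override` and system properties modeled as a dictionary:

```python
DEFAULT_GUAVA_VERSION = "14.0.1"  # the version shown in the first command's output above


def guava_override(system_properties):
    """Return the Guava dependency override, honoring -Dguava.version when present."""
    version = system_properties.get("guava.version", DEFAULT_GUAVA_VERSION)
    return f"com.google.guava:guava:{version}"
```

Calling it with an empty dictionary reproduces the default coordinate, and passing `{"guava.version": "25.0-jre"}` reproduces the second output.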

Closes #28822 from sarutak/guava-version-for-sbt.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

When `org.apache.spark.sql.hive.thriftserver.HiveThriftServer2#startWithContext` is called,

it starts `ThriftCLIService` in the background in a new thread. If we call `ThriftCLIService.getPortNumber` at the same time, we might not get the bound port if the port is configured as 0.

This PR moves the TServer/HttpServer initialization code out of that new Thread.
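The same pattern can be sketched with plain sockets in Python. This is an analogy, not the Thrift server code: `start_server` is a hypothetical helper that binds in the caller's thread so the port is known before the background thread starts serving.

```python
import socket
import threading


def start_server():
    """Bind in the caller's thread, then hand the listening socket to a background thread."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))   # port 0 asks the OS for any free port
    server.listen()
    port = server.getsockname()[1]  # already resolved before the thread starts

    def serve():
        try:
            while True:
                conn, _ = server.accept()
                conn.close()
        except OSError:
            pass  # the socket was closed: stop serving

    thread = threading.Thread(target=serve, daemon=True)
    thread.start()
    return server, port
```

If the `bind` call were moved inside `serve`, a caller asking for the port right after starting the thread could observe an unbound socket, which is the race this PR removes.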

### Why are the changes needed?

Fix concurrency issue, improve test robustness.

### Does this PR introduce _any_ user-facing change?

NO

### How was this patch tested?

add new tests

Closes #28751 from yaooqinn/SPARK-31926.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add instance weight support in LogisticRegressionSummary

### Why are the changes needed?

LogisticRegression, MulticlassClassificationEvaluator and BinaryClassificationEvaluator support instance weight. We should support instance weight in LogisticRegressionSummary too.
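As a small illustration of what instance weights change in a summary metric, here is a weighted accuracy in Python. The helper `weighted_accuracy` is hypothetical and is not the Spark implementation; it only shows that each row contributes its weight instead of a count of one.

```python
def weighted_accuracy(rows):
    """rows: iterable of (prediction, label, weight); weight 1.0 gives the unweighted metric."""
    total = 0.0
    correct = 0.0
    for prediction, label, weight in rows:
        total += weight
        if prediction == label:
            correct += weight
    return correct / total if total > 0 else 0.0
```

With unit weights, one correct and one wrong prediction give 0.5; giving the correct row a weight of 3.0 raises the result to 0.75.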

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Add new tests

Closes #28657 from huaxingao/weighted_summary.

Authored-by: Huaxin Gao <huaxing@us.ibm.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

This PR mainly adds two things.

1. Real headless browser support for UI test

2. A test suite using headless Chrome as one instance of those browsers.

Also, for environments where Chrome and ChromeDriver are not installed, the `ChromeUITest` tag is added to filter out the test suite.

By default, test suites tagged with `ChromeUITest` are disabled.

### Why are the changes needed?

In the current master, there are two problems with UI testing.

1. Many tests, especially JavaScript-related ones, are done manually.

Appearance is best confirmed by eye, but logic should ideally be covered by test cases.

2. Compared to real web browsers, HtmlUnit doesn't seem to support JavaScript well enough.

I previously added a JavaScript-related test for SPARK-31534 using HtmlUnit, a simple library-based headless browser for testing.

The test I added more or less works, but JavaScript-related errors appear in unit-tests.log.

```

======= EXCEPTION START ========

Exception class=[net.sourceforge.htmlunit.corejs.javascript.JavaScriptException]

com.gargoylesoftware.htmlunit.ScriptException: Error: TOOLTIP: Option "sanitizeFn" provided type "window" but expected type "(null|function)". (http://192.168.1.209:60724/static/jquery-3.4.1.min.js#2)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine$HtmlUnitContextAction.run(JavaScriptEngine.java:904)

at net.sourceforge.htmlunit.corejs.javascript.Context.call(Context.java:628)

at net.sourceforge.htmlunit.corejs.javascript.ContextFactory.call(ContextFactory.java:515)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine.callFunction(JavaScriptEngine.java:835)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine.callFunction(JavaScriptEngine.java:807)

at com.gargoylesoftware.htmlunit.InteractivePage.executeJavaScriptFunctionIfPossible(InteractivePage.java:216)

at com.gargoylesoftware.htmlunit.javascript.background.JavaScriptFunctionJob.runJavaScript(JavaScriptFunctionJob.java:52)

at com.gargoylesoftware.htmlunit.javascript.background.JavaScriptExecutionJob.run(JavaScriptExecutionJob.java:102)

at com.gargoylesoftware.htmlunit.javascript.background.JavaScriptJobManagerImpl.runSingleJob(JavaScriptJobManagerImpl.java:426)

at com.gargoylesoftware.htmlunit.javascript.background.DefaultJavaScriptExecutor.run(DefaultJavaScriptExecutor.java:157)

at java.lang.Thread.run(Thread.java:748)

Caused by: net.sourceforge.htmlunit.corejs.javascript.JavaScriptException: Error: TOOLTIP: Option "sanitizeFn" provided type "window" but expected type "(null|function)". (http://192.168.1.209:60724/static/jquery-3.4.1.min.js#2)

at net.sourceforge.htmlunit.corejs.javascript.Interpreter.interpretLoop(Interpreter.java:1009)

at net.sourceforge.htmlunit.corejs.javascript.Interpreter.interpret(Interpreter.java:800)

at net.sourceforge.htmlunit.corejs.javascript.InterpretedFunction.call(InterpretedFunction.java:105)

at net.sourceforge.htmlunit.corejs.javascript.ContextFactory.doTopCall(ContextFactory.java:413)

at com.gargoylesoftware.htmlunit.javascript.HtmlUnitContextFactory.doTopCall(HtmlUnitContextFactory.java:252)

at net.sourceforge.htmlunit.corejs.javascript.ScriptRuntime.doTopCall(ScriptRuntime.java:3264)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine$4.doRun(JavaScriptEngine.java:828)

at com.gargoylesoftware.htmlunit.javascript.JavaScriptEngine$HtmlUnitContextAction.run(JavaScriptEngine.java:889)

... 10 more

JavaScriptException value = Error: TOOLTIP: Option "sanitizeFn" provided type "window" but expected type "(null|function)".

== CALLING JAVASCRIPT ==

function () {

throw e;

}

======= EXCEPTION END ========

```

I tried upgrading HtmlUnit to 2.40.0, but even worse, the test stops working, even though the page works without error in real browsers such as Chrome, Safari and Firefox.

```

[info] UISeleniumSuite:

[info] - SPARK-31534: text for tooltip should be escaped *** FAILED *** (17 seconds, 745 milliseconds)

[info] The code passed to eventually never returned normally. Attempted 2 times over 12.910785232 seconds. Last failure message: com.gargoylesoftware.htmlunit.ScriptException: ReferenceError: Assignment to undefined "regeneratorRuntime" in strict mode (http://192.168.1.209:62132/static/vis-timeline-graph2d.min.js#52(Function)#1)

```

To resolve these problems, it's better to support real headless browsers for UI tests.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

I tested with the following patterns. Both Chrome and ChromeDriver must be installed to run the tests.

1. sbt / with default excluded tags (ChromeUISeleniumSuite is expected to be skipped and SQLQueryTestSuite is expected to succeed)

`build/sbt -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver "testOnly org.apache.spark.ui.ChromeUISeleniumSuite org.apache.spark.sql.SQLQueryTestSuite"`

2. sbt / override the default excluded tags with an empty string (Both suites are expected to succeed)

`build/sbt -Dtest.default.exclude.tags= -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver "testOnly org.apache.spark.ui.ChromeUISeleniumSuite org.apache.spark.sql.SQLQueryTestSuite"`

3. sbt / set `test.exclude.tags` to `org.apache.spark.tags.ExtendedSQLTest` (Both suites are expected to be skipped)

`build/sbt -Dtest.exclude.tags=org.apache.spark.tags.ExtendedSQLTest -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver "testOnly org.apache.spark.ui.ChromeUISeleniumSuite org.apache.spark.sql.SQLQueryTestSuite"`

4. Maven / with default excluded tags (ChromeUISeleniumSuite is expected to be skipped and SQLQueryTestSuite is expected to succeed)

`build/mvn -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver -Dtest=none -DwildcardSuites=org.apache.spark.ui.ChromeUISeleniumSuite,org.apache.spark.sql.SQLQueryTestSuite test`

5. Maven / override the default excluded tags with an empty string (Both suites are expected to succeed)

`build/mvn -Dtest.default.exclude.tags= -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver -Dtest=none -DwildcardSuites=org.apache.spark.ui.ChromeUISeleniumSuite,org.apache.spark.sql.SQLQueryTestSuite test`

6. Maven / set `test.exclude.tags` to `org.apache.spark.tags.ExtendedSQLTest` (Both suites are expected to be skipped)

`build/mvn -Dtest.exclude.tags=org.apache.spark.tags.ExtendedSQLTest -Dspark.test.webdriver.chrome.driver=/path/to/chromedriver -Dtest=none -DwildcardSuites=org.apache.spark.ui.ChromeUISeleniumSuite,org.apache.spark.sql.SQLQueryTestSuite test`

Closes #28627 from sarutak/real-headless-browser-support-take2.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR aims to upgrade `commons-io` from 2.4 to 2.5 for Apache Spark 3.1.

### Why are the changes needed?

Hadoop has used `commons-io` 2.5 since Hadoop 3.1.

- https://issues.apache.org/jira/browse/HADOOP-15261

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass Jenkins with the Hadoop-3.2 profile.

The Maven dependency is verified automatically via `test-dependencies.sh`. The SBT dependency can be verified manually as follows.

```

build/sbt -Phadoop-3.2 "core/dependencyTree" | grep commons-io:commons-io | head -n1

[info] | | +-commons-io:commons-io:2.5

```

Closes #28665 from dongjoon-hyun/SPARK-31858.

Authored-by: Dongjoon Hyun <dongjoon@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>