### What changes were proposed in this pull request?

A follow-up to https://github.com/apache/spark/pull/27936 that updates the documentation.

### Why are the changes needed?

To correct the documentation.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27950 from cloud-fan/null.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

The pattern `''` means a literal `'`.

```sql
select date_format(to_timestamp("11111904-01-23 15:02:01", 'y-MM-dd HH:mm:ss'), "y-MM-dd HH:mm:ss''SSSSSSSSS");
5377-02-14 06:27:19'000000519
```

Commit 0946a9514f missed this case, and this PR adds it back.
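As a point of reference, the plain `java.time` API treats `''` the same way. The following is a minimal sketch outside Spark; the class name `QuoteLiteralSketch` is hypothetical and the pattern is only an illustration, not the code touched by this PR.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper, not part of Spark.
class QuoteLiteralSketch {
    // Formats a timestamp with a literal single quote between seconds and milliseconds.
    static String format(LocalDateTime ts) {
        // Inside a pattern, two consecutive single quotes ('') stand for one literal '.
        DateTimeFormatter formatter = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss''SSS");
        return formatter.format(ts);
    }

    public static void main(String[] args) {
        // prints 2019-10-06 10:11:12'123
        System.out.println(format(LocalDateTime.of(2019, 10, 6, 10, 11, 12, 123_000_000)));
    }
}
```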

### Why are the changes needed?

bugfix

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Added a unit test.

Closes #27949 from yaooqinn/SPARK-31150-2.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

Prepend `-` to the comparison result instead of creating a new reverse comparator for each comparison when sorting in DESC order in `InterpretedOrdering`.

### Why are the changes needed?

Currently, we create a new reverse comparator for each comparison in `InterpretedOrdering`, which can generate many small, short-lived objects and put pressure on the JVM when there is a lot of data.
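The idea can be sketched in plain Java. This is an illustration only, with hypothetical names (`DescendingCompareSketch`, `compare`), and it is not the `InterpretedOrdering` code: the descending case flips the sign of the comparison result rather than allocating a reversed comparator per call.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration, not Spark's InterpretedOrdering.
class DescendingCompareSketch {
    // Compares two values; for descending order the sign of the result is flipped.
    // Integer.compare only returns -1, 0 or 1, so negation cannot overflow here.
    // A comparator that may return Integer.MIN_VALUE would need extra care.
    static int compare(int a, int b, boolean ascending) {
        int result = Integer.compare(a, b);
        return ascending ? result : -result;
    }

    // Sorts a copy of the input in descending order without any reversed comparator object.
    static List<Integer> sortDescending(List<Integer> values) {
        List<Integer> copy = new ArrayList<>(values);
        copy.sort((a, b) -> compare(a, b, false));
        return copy;
    }

    public static void main(String[] args) {
        System.out.println(sortDescending(Arrays.asList(3, 1, 2))); // prints [3, 2, 1]
    }
}
```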

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes #27938 from Ngone51/reverse_comparator.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In `SimpleDateFormat`, 'u' meant the day number of the week; in `DateTimeFormatter`, it means the year. We now keep the old meaning of 'u' by substituting 'u' with 'e' internally and using `DateTimeFormatter` to parse the pattern string. In `DateTimeFormatter`, 'e' and 'c' also represent day-of-week, e.g.

```sql
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd uuuu');
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd uuee');
select date_format(timestamp '2019-10-06', 'yyyy-MM-dd eeee');
```

Because of the substitution, they all silently become `.... eeee`. Users may be confused about what these letters mean, so we should mark 'e' and 'c' as illegal pattern characters to stay the same as before.

This PR moves the method `convertIncompatiblePattern` from `DateTimeUtils` to the `DateTimeFormatterHelper` object, since it is quite specific to the `DateTimeFormatterHelper` class.

It also adds checks for the 'e' and 'c' characters to this method.
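The difference in meaning can be observed directly with the two JDK formatters. The sketch below is a plain-Java illustration with hypothetical helper names (`legacyFormat`, `java8Format`); it does not use Spark and only shows what the pattern letters mean in each API.

```java
import java.text.SimpleDateFormat;
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Calendar;
import java.util.GregorianCalendar;
import java.util.Locale;

// Hypothetical illustration of pattern-letter semantics in the two JDK APIs.
class PatternLetterSketch {
    // Formats a date with the legacy SimpleDateFormat (month is 1-based here).
    static String legacyFormat(String pattern, int year, int month, int day) {
        Calendar cal = new GregorianCalendar(year, month - 1, day);
        return new SimpleDateFormat(pattern, Locale.ENGLISH).format(cal.getTime());
    }

    // Formats a date with the Java 8 DateTimeFormatter.
    static String java8Format(String pattern, int year, int month, int day) {
        return DateTimeFormatter.ofPattern(pattern, Locale.ENGLISH)
            .format(LocalDate.of(year, month, day));
    }

    public static void main(String[] args) {
        // 2019-10-06 is a Sunday.
        System.out.println(legacyFormat("u", 2019, 10, 6));    // day number of week: 7
        System.out.println(java8Format("uuuu", 2019, 10, 6));  // year: 2019
        System.out.println(java8Format("eeee", 2019, 10, 6));  // day-of-week text: Sunday
    }
}
```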

Besides, `convertIncompatiblePattern` has a bug that drops the last `'` if the pattern ends with it. This PR fixes that too, e.g.

```sql
spark-sql> select date_format(timestamp "2019-10-06", "yyyy-MM-dd'S'");
20/03/18 11:19:45 ERROR SparkSQLDriver: Failed in [select date_format(timestamp "2019-10-06", "yyyy-MM-dd'S'")]
java.lang.IllegalArgumentException: Pattern ends with an incomplete string literal: uuuu-MM-dd'S

spark-sql> select to_timestamp("2019-10-06S", "yyyy-MM-dd'S'");
NULL
```
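A quote-aware substitution that keeps a trailing `'` intact can be sketched as follows. This is a simplified stand-in, not Spark's `convertIncompatiblePattern`; the class and method names are hypothetical, and it only replaces one letter outside quoted literals.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical simplified stand-in for a pattern-conversion helper.
class QuoteAwareSubstitutionSketch {
    // Replaces `from` with `to` only outside single-quoted literals and copies every
    // quote character through unchanged, including one at the very end of the pattern.
    static String replaceOutsideLiterals(String pattern, char from, char to) {
        StringBuilder out = new StringBuilder(pattern.length());
        boolean inLiteral = false;
        for (int i = 0; i < pattern.length(); i++) {
            char ch = pattern.charAt(i);
            if (ch == '\'') {
                inLiteral = !inLiteral; // an escaped '' toggles twice, leaving the state unchanged
                out.append(ch);
            } else if (!inLiteral && ch == from) {
                out.append(to);
            } else {
                out.append(ch);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        String converted = replaceOutsideLiterals("yyyy-MM-dd'S'", 'y', 'u');
        System.out.println(converted); // prints uuuu-MM-dd'S'
        // The converted pattern is still a valid DateTimeFormatter pattern.
        System.out.println(DateTimeFormatter.ofPattern(converted).format(LocalDate.of(2019, 10, 6)));
    }
}
```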

### Why are the changes needed?

To avoid ambiguity and to fix a bug.

### Does this PR introduce any user-facing change?

no, these are not exposed yet

### How was this patch tested?

Added unit tests.

Closes #27939 from yaooqinn/SPARK-31176.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

```
<extract expression> ::= EXTRACT <left paren> <extract field> FROM <extract source> <right paren>
<extract source> ::= <datetime value expression> | <interval value expression>
```

Currently, the `extract` expression only supports datetime values as its extract source, while its alternative function `date_part` supports both datetime and interval values.

This PR adds support for interval values as the extract source of the `extract` expression.

### Why are the changes needed?

For ANSI compliance and semantic consistency between `extract` and `date_part`, we support intervals in `extract` expressions.

### Does this PR introduce any user-facing change?

Yes, in the `extract(abc from xyz)` expression, `xyz` can now be an interval.

### How was this patch tested?

add unit tests

Closes #27876 from yaooqinn/SPARK-31119.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Make `size(null)` return null under ANSI mode, regardless of the `spark.sql.legacy.sizeOfNull` config.

### Why are the changes needed?

In https://github.com/apache/spark/pull/27834, we changed the result of `size(null)` to -1 to match the 2.4 behavior and avoid breaking changes.

However, the "return -1" behavior is error-prone when used with aggregate functions. The current ANSI mode controls a bunch of "better behaviors" like failing on overflow. We don't enable these "better behaviors" by default because they are too breaking. The "return null" behavior of `size(null)` is a good fit for ANSI mode.
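To see why -1 is error-prone under aggregation, consider summing sizes over rows where one collection is missing. The sketch below is plain Java with hypothetical names; it only mimics the two behaviors and the way SQL `SUM` skips NULL inputs, and it is not Spark code.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of the two size(null) behaviors.
class SizeOfNullSketch {
    // Legacy behavior: a missing array reports size -1.
    static int legacySize(int[] array) {
        return array == null ? -1 : array.length;
    }

    // Null-returning behavior: a missing array reports no size at all.
    static Integer nullAwareSize(int[] array) {
        return array == null ? null : array.length;
    }

    // Sums legacy sizes; every missing array silently subtracts one from the total.
    static int sumLegacy(List<int[]> rows) {
        int total = 0;
        for (int[] row : rows) {
            total += legacySize(row);
        }
        return total;
    }

    // Sums null-aware sizes, skipping nulls the way SQL SUM does.
    static int sumNullAware(List<int[]> rows) {
        int total = 0;
        for (int[] row : rows) {
            Integer size = nullAwareSize(row);
            if (size != null) {
                total += size;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        List<int[]> rows = Arrays.asList(new int[] {1, 2}, null, new int[] {3});
        System.out.println(sumLegacy(rows));    // prints 2, one less than the real element count
        System.out.println(sumNullAware(rows)); // prints 3
    }
}
```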

### Does this PR introduce any user-facing change?

No, as ANSI mode is off by default.

### How was this patch tested?

new tests

Closes #27936 from cloud-fan/null.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This PR is to support parsing timestamp values with variable length second fraction parts.

For example, 'yyyy-MM-dd HH:mm:ss.SSSSSS[zzz]' can parse timestamps whose second fraction has 0 to 6 digits, but fails for 7 or more digits.

```sql
select to_timestamp(v, 'yyyy-MM-dd HH:mm:ss.SSSSSS[zzz]') from values
('2019-10-06 10:11:12.'),
('2019-10-06 10:11:12.0'),
('2019-10-06 10:11:12.1'),
('2019-10-06 10:11:12.12'),
('2019-10-06 10:11:12.123UTC'),
('2019-10-06 10:11:12.1234'),
('2019-10-06 10:11:12.12345CST'),
('2019-10-06 10:11:12.123456PST') t(v)

2019-10-06 03:11:12.123
2019-10-06 08:11:12.12345
2019-10-06 10:11:12
2019-10-06 10:11:12
2019-10-06 10:11:12.1
2019-10-06 10:11:12.12
2019-10-06 10:11:12.1234
2019-10-06 10:11:12.123456

select to_timestamp('2019-10-06 10:11:12.1234567PST', 'yyyy-MM-dd HH:mm:ss.SSSSSS[zzz]')

NULL
```

Since 3.0, we use the Java 8 time API to parse and format timestamp values. When we create the `DateTimeFormatter`, we use `appendPattern` to create the builder first, where the 'S..S' part is parsed as a fixed-length fraction (= `'S..S'.length`). This fits formatting but is too strict for parsing, because trailing zeros are very likely to be truncated.
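The fixed-width behavior, and one way to relax it, can be reproduced with the JDK alone. The sketch below is an assumption about the general technique (using `DateTimeFormatterBuilder.appendFraction` with a 0 to 6 digit width), not necessarily how this PR implements it; the class name and the simplified pattern without the zone part are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.format.DateTimeParseException;
import java.time.temporal.ChronoField;

// Hypothetical illustration of fixed-width versus variable-width fraction parsing.
class VariableFractionSketch {
    // 'SSSSSS' from a pattern string requires exactly six fraction digits when parsing.
    static final DateTimeFormatter FIXED =
        DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSSSSS");

    // appendFraction with min width 0 and max width 6 accepts zero to six digits.
    static final DateTimeFormatter VARIABLE = new DateTimeFormatterBuilder()
        .appendPattern("yyyy-MM-dd HH:mm:ss.")
        .appendFraction(ChronoField.NANO_OF_SECOND, 0, 6, false)
        .toFormatter();

    // Returns the parsed value, or null when the text does not match the formatter.
    static LocalDateTime parseOrNull(String text, DateTimeFormatter formatter) {
        try {
            return LocalDateTime.parse(text, formatter);
        } catch (DateTimeParseException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(parseOrNull("2019-10-06 10:11:12.1", FIXED));           // null
        System.out.println(parseOrNull("2019-10-06 10:11:12.1", VARIABLE));        // parsed
        System.out.println(parseOrNull("2019-10-06 10:11:12.1234567", VARIABLE));  // null
    }
}
```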

### Why are the changes needed?

To improve timestamp parsing and be more compatible with 2.4.x.

### Does this PR introduce any user-facing change?

no, the related changes are newly added

### How was this patch tested?

Added unit tests.

Closes #27906 from yaooqinn/SPARK-31150.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

After https://github.com/apache/spark/pull/27542, `map()` returns `map<null, null>` instead of `map<string, string>`. However, this breaks queries which union `map()` and other maps.

The reason is, `TypeCoercion` rules and `Cast` think it's illegal to cast null type map key to other types, as it makes the key nullable, but it's actually legal. This PR fixes it.

### Why are the changes needed?

To avoid breaking queries.

### Does this PR introduce any user-facing change?

Yes, now some queries that work in 2.x can work in 3.0 as well.

### How was this patch tested?

new test

Closes #27926 from cloud-fan/bug.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR is kind of a followup of #26808. It leverages the helper method for aliasing in built-in SQL expressions to use the alias as its output column name where it's applicable.

- `Expression`, `UnaryMathExpression` and `BinaryMathExpression` search the alias in the tags by default.

- When the naming is different in its implementation, it has to be overwritten for the expression specifically, e.g., `CallMethodViaReflection`, `Remainder`, `CurrentTimestamp`, `FormatString` and `XPathDouble`.

This PR fixes the aliases of the functions below:

| class                     | alias              |
|---------------------------|--------------------|
| `Rand`                    | `random`           |
| `Ceil`                    | `ceiling`          |
| `Remainder`               | `mod`              |
| `Pow`                     | `pow`              |
| `Signum`                  | `sign`             |
| `Chr`                     | `char`             |
| `Length`                  | `char_length`      |
| `Length`                  | `character_length` |
| `FormatString`            | `printf`           |
| `Substring`               | `substr`           |
| `Upper`                   | `ucase`            |
| `XPathDouble`             | `xpath_number`     |
| `DayOfMonth`              | `day`              |
| `CurrentTimestamp`        | `now`              |
| `Size`                    | `cardinality`      |
| `Sha1`                    | `sha`              |
| `CallMethodViaReflection` | `java_method`      |

Note: the `EqualTo`, `=` and `==` aliases were excluded because they cannot leverage this helper method; supporting them requires fixing the parser.

Note: to keep the scope of this PR small, it also excludes some instances such as `ToDegrees`, `ToRadians`, `UnaryMinus` and `UnaryPositive` that need an explicitly overwritten name.

### Why are the changes needed?

To respect expression name.

### Does this PR introduce any user-facing change?

Yes, it will change the output column name.

### How was this patch tested?

Manually tested, and unit tests were added.

Closes #27901 from HyukjinKwon/31146.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Setting a default era is not needed at all, as we now replace "y" with "u" if there is no "G". So the era is either explicitly specified (e.g. "yyyy G") or can be inferred from the year (e.g. "uuuu").

### Why are the changes needed?

By default we use "uuuu" as the year pattern, which already indicates the era. If we set a default era, it can conflict with the year and make parsing fail.

### Does this PR introduce any user-facing change?

Yes, Spark can now parse dates/timestamps with a negative year via the "yyyy" pattern, which is converted to "uuuu" under the hood.
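The difference between "uuuu" (proleptic year) and "yyyy" (year-of-era) for negative years can be checked against the JDK directly. The sketch below is plain Java with a hypothetical helper, using the default formatter settings rather than Spark's formatter configuration.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;

// Hypothetical illustration of year versus year-of-era parsing.
class NegativeYearSketch {
    // Returns the parsed date, or null when the text cannot be parsed with the pattern.
    static LocalDate parseOrNull(String text, String pattern) {
        try {
            return LocalDate.parse(text, DateTimeFormatter.ofPattern(pattern));
        } catch (DateTimeParseException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // 'u' is the proleptic year, so a negative value is accepted.
        System.out.println(parseOrNull("-0010-01-01", "uuuu-MM-dd"));
        // 'y' is year-of-era, which must be positive, so the same text is rejected.
        System.out.println(parseOrNull("-0010-01-01", "yyyy-MM-dd")); // prints null
    }
}
```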

### How was this patch tested?

new tests

Closes #27707 from cloud-fan/bug.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This reverts commit 47d6e80a2e.

### Why are the changes needed?

There is no standard requiring that `div` must return the type of the operand, and always returning long type looks fine. This is kind of a cosmetic change and we should avoid it if it breaks existing queries. This is similar to reverting the TRIM function parameter order change.

### Does this PR introduce any user-facing change?

Yes, change the behavior of `div` back to be the same as 2.4.

### How was this patch tested?

N/A

Closes #27835 from cloud-fan/revert2.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

`spark.sql.legacy.timeParser.enabled` should be removed from SQLConf and the migration guide.

`spark.sql.legacy.timeParserPolicy` is the right one.

### Why are the changes needed?

To fix the documentation.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Pass Jenkins.

Closes #27889 from yaooqinn/SPARK-31131.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

AQE has a perf regression when using the default settings: if we coalesce the shuffle partitions into one or few partitions, we may leave many CPU cores idle and the perf is worse than with AQE off (which leverages all CPU cores).

Technically, this is not a bad thing. If there are many queries running at the same time, it's better to coalesce shuffle partitions into fewer partitions. However, the default settings of AQE should avoid perf regressions as much as possible.

This PR changes the default value of minPartitionNum when coalescing shuffle partitions, to be `SparkContext.defaultParallelism`, so that AQE can leverage all the CPU cores.

### Why are the changes needed?

To avoid an AQE perf regression.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing tests

Closes #27879 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Add an example of a typed Scala UDF to the error message.

### Why are the changes needed?

To help users learn how to migrate to typed Scala UDFs.

### Does this PR introduce any user-facing change?

No, it's a new error message in Spark 3.0.

### How was this patch tested?

Pass Jenkins.

Closes #27884 from Ngone51/spark_31010_followup.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR reverts https://github.com/apache/spark/pull/26051 and https://github.com/apache/spark/pull/26066

### Why are the changes needed?

There is no standard requiring that `size(null)` must return null, and returning -1 looks reasonable as well. This is kind of a cosmetic change and we should avoid it if it breaks existing queries. This is similar to reverting the TRIM function parameter order change.

### Does this PR introduce any user-facing change?

Yes, change the behavior of `size(null)` back to be the same as 2.4.

### How was this patch tested?

N/A

Closes #27834 from cloud-fan/revert.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

`DateTimeUtilsSuite.daysToMicros and microsToDays` takes 30 seconds, which is too long for a UT.

This PR changes the test to check random data, to reduce testing time. Now this test takes 1 second.

### Why are the changes needed?

To make the test faster.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27873 from cloud-fan/test.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to change the conversion of java.sql.Timestamp/Date values to/from the internal values of Catalyst's TimestampType/DateType before the cutover day `1582-10-15` of the Gregorian calendar. I propose to construct a local date-time from microseconds/days since the epoch, take each date-time component (`year`, `month`, `day`, `hour`, `minute`, `second` and `second fraction`), and construct java.sql.Timestamp/Date from the extracted components.

### Why are the changes needed?

This rebases the underlying time/date offset so that collected java.sql.Timestamp/Date values have the same local date-time components as the original values in the Gregorian calendar.

Here is the example which demonstrates the issue:

```scala
scala> sql("select date '1100-10-10'").collect()
res1: Array[org.apache.spark.sql.Row] = Array([1100-10-03])
```
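The effect of rebuilding a `java.sql.Date` from extracted components, rather than from the raw epoch offset, can be sketched with the JDK alone. The class and method names below are hypothetical, and the sketch is an illustration of the idea, not the code in `DateTimeUtils`. The exact shifted date printed by the offset-based variant depends on the JVM time zone, so the comment only claims that it differs.

```java
import java.sql.Date;
import java.time.LocalDate;
import java.time.ZoneId;

// Hypothetical illustration of offset-based versus component-based conversion.
class RebaseDateSketch {
    // Reuses the Proleptic Gregorian epoch offset directly. java.sql.Date interprets it in
    // the hybrid Julian/Gregorian calendar, so dates before 1582-10-15 appear shifted.
    static Date fromEpochOffset(LocalDate date) {
        long millis = date.atStartOfDay(ZoneId.systemDefault()).toInstant().toEpochMilli();
        return new Date(millis);
    }

    // Rebuilds the value from year, month and day, so the printed components are preserved.
    static Date fromComponents(LocalDate date) {
        return Date.valueOf(date);
    }

    public static void main(String[] args) {
        LocalDate date = LocalDate.of(1100, 10, 10);
        System.out.println(fromEpochOffset(date)); // a different day than 1100-10-10
        System.out.println(fromComponents(date));  // prints 1100-10-10
    }
}
```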

### Does this PR introduce any user-facing change?

Yes, after the changes:

```scala
scala> sql("select date '1100-10-10'").collect()
res0: Array[org.apache.spark.sql.Row] = Array([1100-10-10])
```

### How was this patch tested?

By running `DateTimeUtilsSuite`, `DateFunctionsSuite` and `DateExpressionsSuite`.

Closes #27807 from MaxGekk/rebase-timestamp-before-1582.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When encoding Java Beans to a Spark DataFrame, respect `javax.annotation.Nonnull` and produce non-nullable fields.

### Why are the changes needed?

When encoding Java Beans to a Spark DataFrame, non-primitive types are encoded as nullable fields. Although this works for most cases, it can be an issue in a few situations, e.g. the one described in the JIRA ticket, where a DataFrame is saved to Avro format with a non-null field.

We should allow Spark users more flexibility when creating Spark DataFrame from Java Beans. Currently, Spark users cannot create DataFrame with non-nullable fields in the schema from beans with non-nullable properties.

Although it is possible to project top-level columns with SQL expressions like `AssertNotNull` to make it non-null, for nested fields it is more tricky to do it similarly.

### Does this PR introduce any user-facing change?

Yes. After this change, Spark users can use `javax.annotation.Nonnull` to annotate non-null fields in Java Beans when encoding beans to Spark DataFrame.
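The general idea of deriving nullability from an annotation can be sketched with reflection. Everything below is an assumption for illustration: the sketch declares a local stand-in `Nonnull` annotation so that it compiles without the JSR-305 jar, it assumes the annotation sits on the getter, and `nullability` is a hypothetical helper rather than Spark's bean encoder.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration; not Spark's Java Bean encoder.
class NonnullBeanSketch {
    // Local stand-in for javax.annotation.Nonnull, declared only to keep the sketch self-contained.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface Nonnull {}

    // Example bean: one annotated property, one plain reference property, one primitive.
    static class Person {
        private String name = "unknown";
        private String nickname;
        private int age;

        @Nonnull
        public String getName() { return name; }
        public String getNickname() { return nickname; }
        public int getAge() { return age; }
    }

    // Maps each property name to whether its field would be nullable:
    // primitives and @Nonnull getters are non-nullable, everything else is nullable.
    static Map<String, Boolean> nullability(Class<?> beanClass) {
        Map<String, Boolean> result = new TreeMap<>();
        for (Method m : beanClass.getDeclaredMethods()) {
            String n = m.getName();
            if (n.startsWith("get") && n.length() > 3 && m.getParameterCount() == 0) {
                String property = Character.toLowerCase(n.charAt(3)) + n.substring(4);
                boolean nullable = !m.getReturnType().isPrimitive()
                    && !m.isAnnotationPresent(Nonnull.class);
                result.put(property, nullable);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(nullability(Person.class)); // prints {age=false, name=false, nickname=true}
    }
}
```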

### How was this patch tested?

Added unit test.

Closes #27851 from viirya/SPARK-31071.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

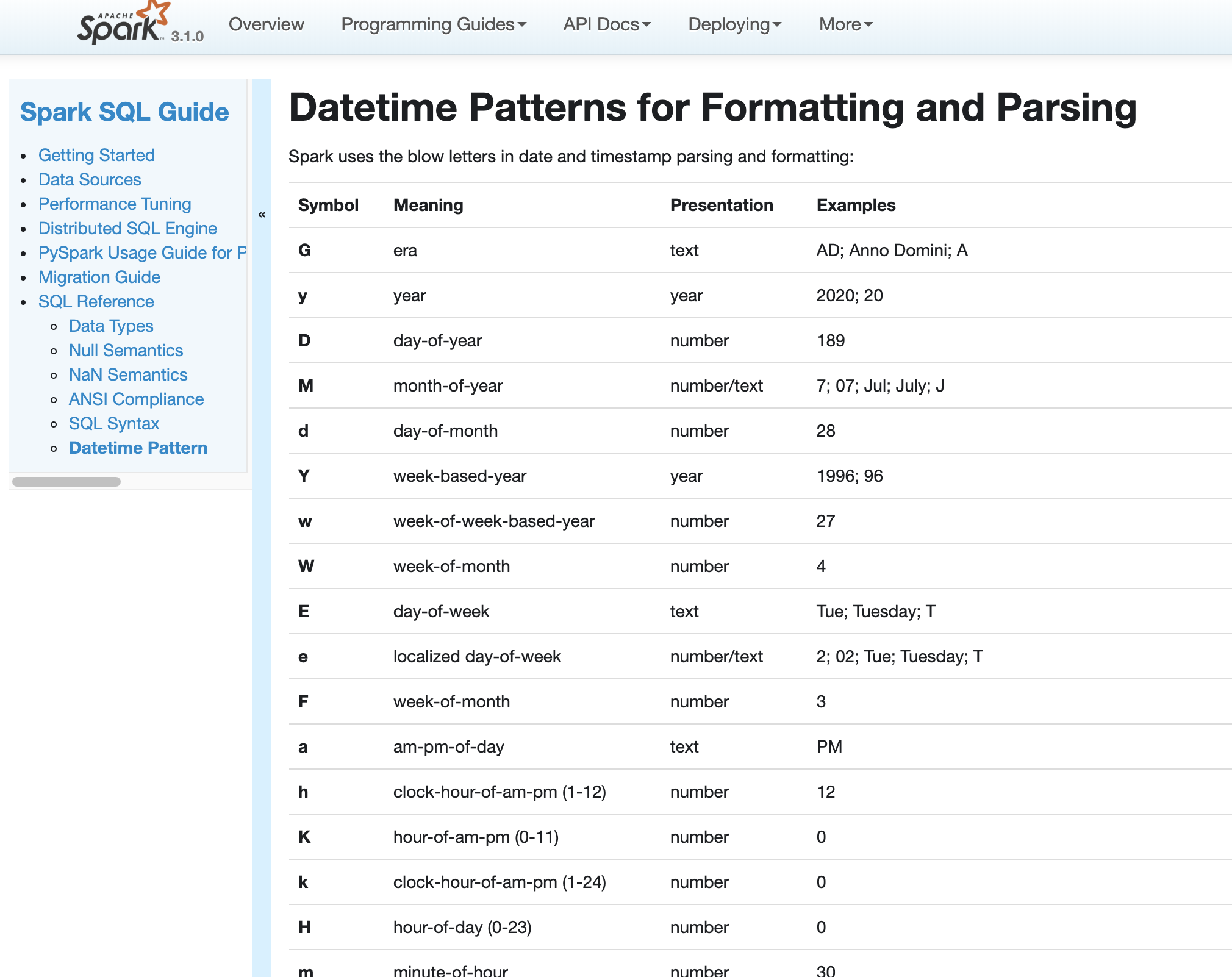

In Spark version 2.4 and earlier, datetime parsing, formatting and conversion are performed by using the hybrid calendar (Julian + Gregorian).

Since the Proleptic Gregorian calendar is the de facto calendar worldwide, as well as the one chosen in the ANSI SQL standard, Spark 3.0 switches to it by using Java 8 API classes (the java.time packages that are based on ISO chronology). The switch was completed in SPARK-26651.

But after the switch, some patterns are not compatible between Java 8 and Java 7, so Spark needs its own definition of the patterns rather than depending on the Java API.

In this PR, we achieve this by writing the documentation and shadowing the incompatible letters. See more details in [SPARK-31030](https://issues.apache.org/jira/browse/SPARK-31030)

### Why are the changes needed?

For backward compatibility.

### Does this PR introduce any user-facing change?

No.

After we define our own datetime parsing and formatting patterns, the behavior is the same as in old Spark versions.

### How was this patch tested?

Existing and newly added UTs.

The documentation was also tested locally.

Closes #27830 from xuanyuanking/SPARK-31030.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, we parse intervals either from multi-unit strings or from date-time/year-month pattern strings. The former handles all whitespace; the latter does not, not even spaces.

### Why are the changes needed?

For behavior consistency.

### Does this PR introduce any user-facing change?

Yes, an interval in date-time/year-month format like

```
select interval '\n-\t10\t 12:34:46.789\t' day to second
-- !query 126 schema
struct<INTERVAL '-10 days -12 hours -34 minutes -46.789 seconds':interval>
-- !query 126 output
-10 days -12 hours -34 minutes -46.789 seconds
```

is valid now.

### How was this patch tested?

Added unit tests.

Closes #26815 from yaooqinn/SPARK-30189.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

RuleExecutor already supports metering for analyzer/optimizer rules. By providing this information in `PlanChangeLogger`, users can get more information when debugging rule changes.

This PR enhances `PlanChangeLogger` to display RuleExecutor metrics. This could easily be done by calling the existing APIs `resetMetrics` and `dumpTimeSpent`, but there might be conflicts if the user is also collecting total metrics of a SQL job. Thus I introduced `QueryExecutionMetrics`, a snapshot of `QueryExecutionMetering`, to better support this feature.

Information added to `PlanChangeLogger`:

```
=== Metrics of Executed Rules ===
Total number of runs: 554
Total time: 0.107756568 seconds
Total number of effective runs: 11
Total time of effective runs: 0.047615486 seconds
```
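The snapshot approach can be sketched as follows: take a snapshot of the cumulative counters before a batch, take another one afterwards, and report the difference, leaving the global totals untouched. The classes below are hypothetical stand-ins in plain Java and do not mirror the actual `QueryExecutionMetering` or `QueryExecutionMetrics` signatures.

```java
// Hypothetical illustration of snapshot-and-diff metering.
class MetricsSnapshotSketch {
    // Stand-in for a cumulative, process-wide metering object.
    static final class Metering {
        private long numRuns;
        private long timeNs;

        // Records one rule run and the time it took.
        void record(long elapsedNs) {
            numRuns++;
            timeNs += elapsedNs;
        }

        // Captures the current totals without resetting them.
        Snapshot snapshot() {
            return new Snapshot(numRuns, timeNs);
        }
    }

    // Immutable copy of the totals at one point in time.
    static final class Snapshot {
        final long numRuns;
        final long timeNs;

        Snapshot(long numRuns, long timeNs) {
            this.numRuns = numRuns;
            this.timeNs = timeNs;
        }

        // Metrics accumulated between an earlier snapshot and this one.
        Snapshot minus(Snapshot earlier) {
            return new Snapshot(numRuns - earlier.numRuns, timeNs - earlier.timeNs);
        }
    }

    public static void main(String[] args) {
        Metering metering = new Metering();
        metering.record(5);                      // work done before the section of interest
        Snapshot before = metering.snapshot();
        metering.record(7);
        metering.record(3);
        Snapshot delta = metering.snapshot().minus(before);
        System.out.println(delta.numRuns + " runs, " + delta.timeNs + " ns"); // prints 2 runs, 10 ns
    }
}
```

Because nothing is reset, a caller that tracks totals for the whole job still sees all three runs.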

### Why are the changes needed?

To provide a better user experience when debugging plan changes.

### Does this PR introduce any user-facing change?

It only adds more debugging info to `planChangeLog`; the default log level is TRACE.

### How was this patch tested?

Updated existing tests to verify the new logs.

Closes #27846 from Eric5553/ExplainRuleExecMetrics.

Authored-by: Eric Wu <492960551@qq.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes two things:

1. Convert `null` to `string` type during schema inference of `schema_of_json`, as the JSON datasource does. This is a bug fix as well, because the `null` string is not a proper DDL formatted string and the SQL parser cannot recognise it as a type string. We should match the JSON datasource and return a string type so that `schema_of_json` returns a proper DDL formatted string.

2. Let `schema_of_json` respect `dropFieldIfAllNull` option during schema inference.

### Why are the changes needed?

To let `schema_of_json` return a proper DDL formatted string and respect the `dropFieldIfAllNull` option.

### Does this PR introduce any user-facing change?

Yes, it does.

```scala
import collection.JavaConverters._
import org.apache.spark.sql.functions._

spark.range(1).select(schema_of_json(lit("""{"id": ""}"""))).show()
spark.range(1).select(schema_of_json(lit("""{"id": "a", "drop": {"drop": null}}"""), Map("dropFieldIfAllNull" -> "true").asJava)).show(false)
```

**Before:**

```
struct<id:null>
struct<drop:struct<drop:null>,id:string>
```

**After:**

```
struct<id:string>
struct<id:string>
```

### How was this patch tested?

Manually tested, and unit tests were added.

Closes #27854 from HyukjinKwon/SPARK-31065.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

This is a follow-up to https://github.com/apache/spark/pull/27650, which allowed a None provider for create table. Here we do the same thing for ReplaceTable.

### Why are the changes needed?

Although the ASTBuilder currently doesn't seem to allow `replace` without a `USING` clause, this would allow `DataFrameWriterV2` to use the statements instead of the commands directly.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests

Closes #27838 from yuchenhuo/SPARK-30902.

Authored-by: Yuchen Huo <yuchen.huo@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The newly added catalog APIs are marked as Experimental but other DS v2 APIs are marked as Evolving.

This PR makes it consistent and marks all Connector APIs as Evolving.

### Why are the changes needed?

For consistency.

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

N/A

Closes #27811 from cloud-fan/tag.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Dongjoon Hyun <dongjoon@apache.org>

### What changes were proposed in this pull request?

Introduce a new parameter `emptyCollection` for the `CreateMap` and `CreateArray` functions to remove the dependency on `SQLConf.get`.

### Why are the changes needed?

This avoids the issue where the configuration changes between different phases of planning, which can silently break a query plan and lead to crashes or data corruption.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27657 from iRakson/SPARK-30899.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR intends to support 32 or more grouping attributes for GROUPING_ID. In the current master, an integer overflow can occur when computing grouping IDs:

e75d9afb2f/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/plans/logical/basicLogicalOperators.scala (L613)

For example, the query below generates wrong grouping IDs in the master:

```
scala> val numCols = 32 // or, 31
scala> val cols = (0 until numCols).map { i => s"c$i" }
scala> sql(s"create table test_$numCols (${cols.map(c => s"$c int").mkString(",")}, v int) using parquet")
scala> val insertVals = (0 until numCols).map { _ => 1 }.mkString(",")
scala> sql(s"insert into test_$numCols values ($insertVals,3)")
scala> sql(s"select grouping_id(), sum(v) from test_$numCols group by grouping sets ((${cols.mkString(",")}), (${cols.init.mkString(",")}))").show(10, false)
scala> sql(s"drop table test_$numCols")

// numCols = 32
+-------------+------+
|grouping_id()|sum(v)|
+-------------+------+
|0            |3     |
|0            |3     | // Wrong Grouping ID
+-------------+------+

// numCols = 31
+-------------+------+
|grouping_id()|sum(v)|
+-------------+------+
|0            |3     |
|1            |3     |
+-------------+------+
```

To fix this issue, this PR changes the code to use long values for `GROUPING_ID` instead of int values.
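One way such an overflow can arise is when the all-ones bitmask is built with a 32-bit shift. The sketch below is a plain-Java illustration under that assumption, with hypothetical method names; it is not the Spark implementation, but it reproduces the grouping IDs shown above for 31 and 32 columns.

```java
// Hypothetical illustration of int versus long grouping-ID bitmasks.
class GroupingIdSketch {
    // Computes a grouping ID with int arithmetic: start from all ones, then clear the bit
    // of every grouped column. For numCols = 32, (1 << 32) wraps around to 1, so the
    // initial mask is 0 and every grouping set collapses to ID 0.
    static int groupingIdInt(int numCols, int[] groupedCols) {
        int mask = (1 << numCols) - 1;
        for (int col : groupedCols) {
            mask &= ~(1 << (numCols - col - 1));
        }
        return mask;
    }

    // Same computation with long arithmetic, which is correct for up to 63 columns.
    static long groupingIdLong(int numCols, int[] groupedCols) {
        long mask = (1L << numCols) - 1;
        for (int col : groupedCols) {
            mask &= ~(1L << (numCols - col - 1));
        }
        return mask;
    }

    // Returns the column indexes 0 .. count-1.
    static int[] firstColumns(int count) {
        int[] cols = new int[count];
        for (int i = 0; i < count; i++) {
            cols[i] = i;
        }
        return cols;
    }

    public static void main(String[] args) {
        // Grouping set that omits only the last of 32 columns: the expected ID is 1.
        System.out.println(groupingIdInt(32, firstColumns(31)));  // prints 0 (wrong)
        System.out.println(groupingIdLong(32, firstColumns(31))); // prints 1
    }
}
```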

### Why are the changes needed?

To support more cases in `GROUPING_ID`.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added unit tests.

Closes #26918 from maropu/FixGroupingIdIssue.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

In the PR, I propose:

1. To replace matching by `Literal` in `ExprUtils.evalSchemaExpr()` with checking the foldable property of the `schema` expression.

2. To replace matching by `Literal` in `ExprUtils.evalTypeExpr()` with checking the foldable property of the `schema` expression.

3. To change the checking of the input parameter in the `SchemaOfCsv` expression, and allow a foldable `child` expression.

4. To change the checking of the input parameter in the `SchemaOfJson` expression, and allow a foldable `child` expression.

### Why are the changes needed?

This should improve Spark SQL UX for `from_csv`/`from_json`. Currently, Spark expects only literals:

```sql
spark-sql> select from_csv('1,Moscow', replace('dpt_org_id INT, dpt_org_city STRING', 'dpt_org_', ''));
Error in query: Schema should be specified in DDL format as a string literal or output of the schema_of_csv function instead of replace('dpt_org_id INT, dpt_org_city STRING', 'dpt_org_', '');; line 1 pos 7

spark-sql> select from_json('{"id":1, "city":"Moscow"}', replace('dpt_org_id INT, dpt_org_city STRING', 'dpt_org_', ''));
Error in query: Schema should be specified in DDL format as a string literal or output of the schema_of_json function instead of replace('dpt_org_id INT, dpt_org_city STRING', 'dpt_org_', '');; line 1 pos 7
```

and only string literals are acceptable as CSV/JSON examples by `schema_of_csv`/`schema_of_json`:

```sql
spark-sql> select schema_of_csv(concat_ws(',', 0.1, 1));
Error in query: cannot resolve 'schema_of_csv(concat_ws(',', CAST(0.1BD AS STRING), CAST(1 AS STRING)))' due to data type mismatch: The input csv should be a string literal and not null; however, got concat_ws(',', CAST(0.1BD AS STRING), CAST(1 AS STRING)).; line 1 pos 7;
'Project [unresolvedalias(schema_of_csv(concat_ws(,, cast(0.1 as string), cast(1 as string))), None)]
+- OneRowRelation

spark-sql> select schema_of_json(regexp_replace('{"item_id": 1, "item_price": 0.1}', 'item_', ''));
Error in query: cannot resolve 'schema_of_json(regexp_replace('{"item_id": 1, "item_price": 0.1}', 'item_', ''))' due to data type mismatch: The input json should be a string literal and not null; however, got regexp_replace('{"item_id": 1, "item_price": 0.1}', 'item_', '').; line 1 pos 7;
'Project [unresolvedalias(schema_of_json(regexp_replace({"item_id": 1, "item_price": 0.1}, item_, )), None)]
+- OneRowRelation
```

### Does this PR introduce any user-facing change?

Yes, after the changes users can pass any foldable string expression as the `schema` parameter to `from_csv()/from_json()`. For the example above:

```sql
spark-sql> select from_csv('1,Moscow', replace('dpt_org_id INT, dpt_org_city STRING', 'dpt_org_', ''));
{"id":1,"city":"Moscow"}

spark-sql> select from_json('{"id":1, "city":"Moscow"}', replace('dpt_org_id INT, dpt_org_city STRING', 'dpt_org_', ''));
{"id":1,"city":"Moscow"}
```

After the change, the `schema_of_csv`/`schema_of_json` functions accept foldable expressions, for example:

```sql
spark-sql> select schema_of_csv(concat_ws(',', 0.1, 1));
struct<_c0:double,_c1:int>

spark-sql> select schema_of_json(regexp_replace('{"item_id": 1, "item_price": 0.1}', 'item_', ''));
struct<id:bigint,price:double>
```

### How was this patch tested?

Added new tests to `CsvFunctionsSuite` and `JsonFunctionsSuite`.

Closes #27804 from MaxGekk/foldable-arg-csv-json-func.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR aims to show a deprecation warning on two-parameter TRIM/LTRIM/RTRIM function usages based on the community decision.

- https://lists.apache.org/thread.html/r48b6c2596ab06206b7b7fd4bbafd4099dccd4e2cf9801aaa9034c418%40%3Cdev.spark.apache.org%3E

### Why are the changes needed?

For backward compatibility, SPARK-28093 is reverted. However, from Apache Spark 3.0.0, we should give a safe guideline to use SQL syntax instead of the esoteric function signatures.

### Does this PR introduce any user-facing change?

Yes. This shows a directional warning.

### How was this patch tested?

Pass Jenkins with a newly added test case.

Closes #27643 from dongjoon-hyun/SPARK-30886.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds an internal config for changing the logging level of adaptive execution query plan evolution.

### Why are the changes needed?

To make AQE debugging easier.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added UT.

Closes #27798 from maryannxue/aqe-log-level.

Authored-by: maryannxue <maryannxue@apache.org>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to respect hidden parameters by using `stringArgs` in `Expression.toString`. With this, we can show the strings properly in some cases such as `NonSQLExpression`.

### Why are the changes needed?

To respect "hidden" arguments in the string representation.

### Does this PR introduce any user-facing change?

Yes, for example, on the top of https://github.com/apache/spark/pull/27657,

```scala
val identify = udf((input: Seq[Int]) => input)
spark.range(10).select(identify(array("id"))).show()
```

shows the hidden parameter `useStringTypeWhenEmpty`.

```
+---------------------+
|UDF(array(id, false))|
+---------------------+
|                  [0]|
|                  [1]|
...
```

whereas:

```scala
spark.range(10).select(array("id")).show()
```

```
+---------+
|array(id)|
+---------+
|      [0]|
|      [1]|
...
```

### How was this patch tested?

Manually tested as below:

```scala
val identify = udf((input: Boolean) => input)
spark.range(10).select(identify(exists(array(col("id")), _ % 2 === 0))).show()
```

Before:

```
+-------------------------------------------------------------------------------------+
|UDF(exists(array(id), lambdafunction(((lambda 'x % 2) = 0), lambda 'x, false), true))|
+-------------------------------------------------------------------------------------+
|                                                                                 true|
|                                                                                false|
|                                                                                 true|
...
```

After:

```
+-------------------------------------------------------------------------------+
|UDF(exists(array(id), lambdafunction(((lambda 'x % 2) = 0), lambda 'x, false)))|
+-------------------------------------------------------------------------------+
|                                                                           true|
|                                                                          false|
|                                                                           true|
...
```

Closes #27788 from HyukjinKwon/arguments-str-repr.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

There are two implementations of `quoteIfNeeded`. One is in `org.apache.spark.sql.connector.catalog.CatalogV2Implicits.quote` and the other is in `OrcFiltersBase.quoteAttributeNameIfNeeded`. This PR consolidates them into one.

### Why are the changes needed?

Simplify the codebase.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27814 from dbtsai/SPARK-31058.

Authored-by: DB Tsai <d_tsai@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

### What changes were proposed in this pull request?

When introducing AQE to others, I feel the config names are a bit incoherent and hard to use.

This PR refines the config names:

1. Remove the "shuffle" prefix. AQE is all about shuffle, so we don't need to add the "shuffle" prefix everywhere.

2. `targetPostShuffleInputSize` is obscure; rename it to `advisoryShufflePartitionSizeInBytes`.

3. `reducePostShufflePartitions` doesn't match the actual optimization; rename it to `coalesceShufflePartitions`.

4. `minNumPostShufflePartitions` is obscure; rename it to `minPartitionNum` under the `coalesceShufflePartitions` namespace.

5. `maxNumPostShufflePartitions` is confusing because of the word "max"; rename it to `initialPartitionNum`.

6. `skewedJoinOptimization` is too verbose. "Skew join" is a well-known term in the database area, so we can just say `skewJoin`.

### Why are the changes needed?

Make the config names easy to understand.

### Does this PR introduce any user-facing change?

Yes. The config `spark.sql.adaptive.shuffle.targetPostShuffleInputSize` is deprecated.

### How was this patch tested?

N/A

Closes #27793 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to change `DateTimeUtils.stringToTimestamp` to support any valid time zone id at the end of input string. After the changes, the function accepts zone ids in the formats:

- no zone id. In that case, the function uses the local session time zone from the SQL config `spark.sql.session.timeZone`

- `-[h]h:[m]m`

- `+[h]h:[m]m`

- `Z`

- Short zone id, see https://docs.oracle.com/javase/8/docs/api/java/time/ZoneId.html#SHORT_IDS

- Zone ID starts with 'UTC+', 'UTC-', 'GMT+', 'GMT-', 'UT+' or 'UT-'. The ID is split in two, with a two or three letter prefix and a suffix starting with the sign. The suffix must be in the formats:

  - `+|-h[h]`

  - `+|-hh[:]mm`

  - `+|-hh:mm:ss`

  - `+|-hhmmss`

- Region-based zone IDs in the form `{area}/{city}`, such as `Europe/Paris` or `America/New_York`. The default set of region ids is supplied by the IANA Time Zone Database (TZDB).
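
Several of the formats listed above map directly onto `java.time.ZoneId`. The sketch below is not the code of `DateTimeUtils.stringToTimestamp`; it only shows how plain `java.time` resolves a few of these ids. The object and method names are hypothetical, and the single-digit forms such as `+[h]h:[m]m` may need normalizing before `ZoneId.of` accepts them.

```scala
import java.time.ZoneId

// Hypothetical helper: resolves a zone id with java.time only.
object ZoneIdSketch {
  // ZoneId.SHORT_IDS maps short ids such as "PST" to region or offset ids;
  // ids that are not in the map are passed to ZoneId.of(id) unchanged.
  def resolve(id: String): ZoneId = ZoneId.of(id, ZoneId.SHORT_IDS)
}
```

For example, `ZoneIdSketch.resolve("UTC+1")` yields a zone whose id is `UTC+01:00`, and `ZoneIdSketch.resolve("Europe/Paris")` yields the region-based zone backed by the TZDB rules.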

### Why are the changes needed?

- To use `stringToTimestamp` as a substitution of removed `stringToTime`, see https://github.com/apache/spark/pull/27710#discussion_r385020173

- Improve UX of Spark SQL by allowing flexible formats of zone ids. Currently, Spark accepts only `Z` and zone offsets, which can be inconvenient when a time zone offset shifts due to daylight saving rules. For instance:

```sql
spark-sql> select cast('2015-03-18T12:03:17.123456 Europe/Moscow' as timestamp);
NULL
```

### Does this PR introduce any user-facing change?

Yes. After the changes, casting strings to timestamps allows time zone id at the end of the strings:

```sql
spark-sql> select cast('2015-03-18T12:03:17.123456 Europe/Moscow' as timestamp);
2015-03-18 12:03:17.123456
```

### How was this patch tested?

- Added new test cases to the `string to timestamp` test in `DateTimeUtilsSuite`.

- Run `CastSuite` and `AnsiCastSuite`.

Closes #27753 from MaxGekk/stringToTimestamp-uni-zoneId.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

rename the config and make it non-internal.

### Why are the changes needed?

Now we fail the query if duplicated map keys are detected, and provide a legacy config to deduplicate them. However, we must provide a way to get users out of this situation, instead of just refusing to run the query. This exit strategy should always be there, while a legacy config indicates that it may be removed someday.

### Does this PR introduce any user-facing change?

no, just rename a config which was added in 3.0

### How was this patch tested?

add more tests for the fail behavior.

Closes #27772 from cloud-fan/map.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The `spark.sql.session.timeZone` config accepts any string value, including invalid time zone ids, and queries that rely on the time zone then fail later. We should validate the value when it is set and fail fast if the zone is invalid.
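
A minimal sketch of such a set-time check, assuming validation is delegated to `java.time.ZoneId`. The object and method names and the error text are hypothetical and do not reflect Spark's actual config machinery.

```scala
import java.time.{DateTimeException, ZoneId}

// Hypothetical setter-side check: reject the value when it is set,
// not when a later query tries to use it.
object TimeZoneConfSketch {
  def checkTimeZone(value: String): Either[String, ZoneId] =
    try Right(ZoneId.of(value, ZoneId.SHORT_IDS))
    catch {
      // Covers both malformed ids and unknown regions (ZoneRulesException is a subclass).
      case e: DateTimeException =>
        Left(s"Cannot resolve the given timezone '$value': ${e.getMessage}")
    }
}
```

Returning `Either` keeps the sketch easy to test; a real setter would more likely throw at this point so that the invalid value is never stored.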

### Why are the changes needed?

improve configuration

### Does this PR introduce any user-facing change?

Yes, setting the config now fails fast if the value is an invalid time zone id.

### How was this patch tested?

add ut

Closes #27792 from yaooqinn/SPARK-31038.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Currently, the user cannot specify the session catalog name (`spark_catalog`) in qualified column names for v1 tables:

```
SELECT spark_catalog.default.t.i FROM spark_catalog.default.t
```

fails with `cannot resolve 'spark_catalog.default.t.i'`.

This is inconsistent with v2 table behavior where catalog name can be used:

```
SELECT testcat.ns1.tbl.id FROM testcat.ns1.tbl
```

This PR proposes to fix the inconsistency and allow the user to specify session catalog name in column names for v1 tables.

### Why are the changes needed?

Fixing an inconsistent behavior.

### Does this PR introduce any user-facing change?

Yes, now the following query works:

```
SELECT spark_catalog.default.t.i FROM spark_catalog.default.t
```

### How was this patch tested?

Added new tests.

Closes #27776 from imback82/spark_catalog_col_name_resolution.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This is a follow-up to #27441. In cases where the new TimestampFormatter returns null while the legacy formatter can return a value, we need to throw an exception instead of silently changing the result. The legacy config is referenced in the error message.

### Why are the changes needed?

Avoid silent result change for new behavior in 3.0.

### Does this PR introduce any user-facing change?

Yes, an exception is thrown when we detect that the legacy formatter can parse the string and the new formatter returns null.
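
The check can be pictured with plain JDK formatters. This is only a sketch under assumptions: `java.time.format.DateTimeFormatter` stands in for the new formatter, `java.text.SimpleDateFormat` for the legacy one, and the object name, exception type and message are hypothetical.

```scala
import java.text.SimpleDateFormat
import java.time.LocalDate
import java.time.format.DateTimeFormatter
import scala.util.Try

object LegacyCheckSketch {
  // Hypothetical exception type; the real error would also name the legacy config.
  class FormatUpgradeException(msg: String) extends RuntimeException(msg)

  def parseDate(s: String, pattern: String): Option[LocalDate] = {
    val parsed = Try(LocalDate.parse(s, DateTimeFormatter.ofPattern(pattern))).toOption
    // Only when the new formatter fails do we ask whether the legacy one would have succeeded.
    if (parsed.isEmpty && Try(new SimpleDateFormat(pattern).parse(s)).isSuccess) {
      throw new FormatUpgradeException(
        s"'$s' is parsed by the legacy formatter but not by the new one; refusing to return null silently")
    }
    parsed
  }
}
```

With pattern `yyyy-MM-dd`, the input `2020-1-5` is accepted by the lenient legacy formatter but rejected by the new one, so the sketch throws instead of returning `None`; input that neither formatter accepts still yields `None`.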

### How was this patch tested?

Extend existing UT.

Closes #27537 from xuanyuanking/SPARK-30668-follow.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

There are a few V1 commands, such as `REFRESH TABLE`, that still allow `spark_catalog.t` because they run with the parsed table names without trying to load the tables from the catalog. This PR addresses this issue.

The PR also addresses the issue brought up in https://github.com/apache/spark/pull/27642#discussion_r382402104.

### Why are the changes needed?

To fix a bug where for some V1 commands, `spark_catalog.t` is allowed.

### Does this PR introduce any user-facing change?

Yes, a bug is fixed and `REFRESH TABLE spark_catalog.t` is not allowed.

### How was this patch tested?

Added new test.

Closes #27718 from imback82/fix_TempViewOrV1Table.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Before Spark 3.0, the JSON/CSV parser had a special behavior: when it failed to parse a timestamp/date, it fell back to another way of parsing it, to support some legacy formats. The fallback was removed by https://issues.apache.org/jira/browse/SPARK-26178 and https://issues.apache.org/jira/browse/SPARK-26243.

This PR adds back this legacy fallback. Since we switched the API used for datetime operations, the behavior can't be exactly the same as before. Here we add back support for the legacy formats that are common (the examples are from Spark 2.4):

1. the fields can have one or two letters

```
scala> sql("""select from_json('{"time":"1123-2-22 2:22:22"}', 'time Timestamp')""").show(false)
+-------------------------------------------+
|jsontostructs({"time":"1123-2-22 2:22:22"})|
+-------------------------------------------+
|[1123-02-22 02:22:22]                      |
+-------------------------------------------+
```

2. the separator between date and time can be "T" as well

```
scala> sql("""select from_json('{"time":"2000-12-12T12:12:12"}', 'time Timestamp')""").show(false)
+---------------------------------------------+
|jsontostructs({"time":"2000-12-12T12:12:12"})|
+---------------------------------------------+
|[2000-12-12 12:12:12]                        |
+---------------------------------------------+
```

3. the second fraction can be arbitrary length

```
scala> sql("""select from_json('{"time":"1123-02-22T02:22:22.123456789123"}', 'time Timestamp')""").show(false)
+----------------------------------------------------------+
|jsontostructs({"time":"1123-02-22T02:22:22.123456789123"})|
+----------------------------------------------------------+
|[1123-02-15 02:22:22.123]                                 |
+----------------------------------------------------------+
```

4. the date string can end with any characters after "T" or a space

```
scala> sql("""select from_json('{"time":"1123-02-22Tabc"}', 'time date')""").show(false)
+----------------------------------------+
|jsontostructs({"time":"1123-02-22Tabc"})|
+----------------------------------------+
|[1123-02-22]                            |
+----------------------------------------+
```

5. remove "GMT" from the string before parsing

```
scala> sql("""select from_json('{"time":"GMT1123-2-22 2:22:22.123"}', 'time Timestamp')""").show(false)
+--------------------------------------------------+
|jsontostructs({"time":"GMT1123-2-22 2:22:22.123"})|
+--------------------------------------------------+
|[1123-02-22 02:22:22.123]                         |
+--------------------------------------------------+
```

### Why are the changes needed?

It doesn't hurt to keep this legacy support. It just makes the parsing more relaxed.

### Does this PR introduce any user-facing change?

yes, to make 3.0 support parsing most of the csv/json values that were supported before.

### How was this patch tested?

new tests

Closes #27710 from cloud-fan/bug2.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Generators in SQL aggregate expressions have been supported since SPARK-28782.

However, a generator (`explode`) query with aggregate functions in the DataFrame API failed as follows:

```
// SPARK-28782: Generator support in aggregate expressions
scala> spark.range(3).toDF("id").createOrReplaceTempView("t")
scala> sql("select explode(array(min(id), max(id))) from t").show()
+---+
|col|
+---+
|  0|
|  2|
+---+

// A failure case handled in this pr
scala> spark.range(3).select(explode(array(min($"id"), max($"id")))).show()
org.apache.spark.sql.AnalysisException:
The query operator `Generate` contains one or more unsupported
expression types Aggregate, Window or Generate.
Invalid expressions: [min(`id`), max(`id`)];;
Project [col#46L]
+- Generate explode(array(min(id#42L), max(id#42L))), false, [col#46L]
   +- Range (0, 3, step=1, splits=Some(4))

  at org.apache.spark.sql.catalyst.analysis.CheckAnalysis.failAnalysis(CheckAnalysis.scala:49)
  at org.apache.spark.sql.catalyst.analysis.CheckAnalysis.failAnalysis$(CheckAnalysis.scala:48)
  at org.apache.spark.sql.catalyst.analysis.Analyzer.failAnalysis(Analyzer.scala:129)
```

The root cause is that `ExtractGenerator` wrongly replaces a project with aggregate functions before `GlobalAggregates` replaces it with an aggregate, as follows:

```

scala> sql("SET spark.sql.optimizer.planChangeLog.level=warn")

scala> spark.range(3).select(explode(array(min($"id"), max($"id")))).show()

20/03/01 12:51:58 WARN HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

!'Project [explode(array(min('id), max('id))) AS List()] 'Project [explode(array(min(id#72L), max(id#72L))) AS List()]

+- Range (0, 3, step=1, splits=Some(4)) +- Range (0, 3, step=1, splits=Some(4))

20/03/01 12:51:58 WARN HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ExtractGenerator ===

!'Project [explode(array(min(id#72L), max(id#72L))) AS List()] Project [col#76L]

!+- Range (0, 3, step=1, splits=Some(4)) +- Generate explode(array(min(id#72L), max(id#72L))), false, [col#76L]

! +- Range (0, 3, step=1, splits=Some(4))

20/03/01 12:51:58 WARN HiveSessionStateBuilder$$anon$1:

=== Result of Batch Resolution ===

!'Project [explode(array(min('id), max('id))) AS List()] Project [col#76L]

!+- Range (0, 3, step=1, splits=Some(4)) +- Generate explode(array(min(id#72L), max(id#72L))), false, [col#76L]

! +- Range (0, 3, step=1, splits=Some(4))

// the analysis failed here...

```

To avoid this case in `ExtractGenerator`, this PR adds a condition to ignore generators that have aggregate functions.

A correct sequence of rules is as follows:

```

20/03/01 13:19:06 WARN HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ResolveReferences ===

!'Project [explode(array(min('id), max('id))) AS List()] 'Project [explode(array(min(id#27L), max(id#27L))) AS List()]

+- Range (0, 3, step=1, splits=Some(4)) +- Range (0, 3, step=1, splits=Some(4))

20/03/01 13:19:06 WARN HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$GlobalAggregates ===

!'Project [explode(array(min(id#27L), max(id#27L))) AS List()] 'Aggregate [explode(array(min(id#27L), max(id#27L))) AS List()]

+- Range (0, 3, step=1, splits=Some(4)) +- Range (0, 3, step=1, splits=Some(4))

20/03/01 13:19:06 WARN HiveSessionStateBuilder$$anon$1:

=== Applying Rule org.apache.spark.sql.catalyst.analysis.Analyzer$ExtractGenerator ===

!'Aggregate [explode(array(min(id#27L), max(id#27L))) AS List()] 'Project [explode(_gen_input_0#31) AS List()]

!+- Range (0, 3, step=1, splits=Some(4)) +- Aggregate [array(min(id#27L), max(id#27L)) AS _gen_input_0#31]

! +- Range (0, 3, step=1, splits=Some(4))

```

### Why are the changes needed?

A bug fix.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added tests.

Closes #27749 from maropu/ExplodeInAggregate.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

For a v2 table created with `CREATE TABLE testcat.ns1.ns2.tbl (id bigint, name string) USING foo`, the following works as expected

```
SELECT testcat.ns1.ns2.tbl.id FROM testcat.ns1.ns2.tbl
```

but a query that uses a qualified column name with a star (`*`)

```
SELECT testcat.ns1.ns2.tbl.* FROM testcat.ns1.ns2.tbl
[info] org.apache.spark.sql.AnalysisException: cannot resolve 'testcat.ns1.ns2.tbl.*' given input columns 'id, name';
```

fails to resolve. This PR proposes to fix this issue.

### Why are the changes needed?

To fix the bug described above.

### Does this PR introduce any user-facing change?

Yes, now `SELECT testcat.ns1.ns2.tbl.* FROM testcat.ns1.ns2.tbl` works as expected.

### How was this patch tested?

Added new test.

Closes #27766 from imback82/fix_star_expression.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The query below failed on master:

```
scala> sql("select array(array(1, 2), array(3)) ar").select(explode(explode($"ar"))).show()
20/03/01 13:51:56 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)/ 1]
java.lang.ClassCastException: scala.collection.mutable.ArrayOps$ofRef cannot be cast to org.apache.spark.sql.catalyst.util.ArrayData
  at org.apache.spark.sql.catalyst.expressions.ExplodeBase.eval(generators.scala:313)
  at org.apache.spark.sql.execution.GenerateExec.$anonfun$doExecute$8(GenerateExec.scala:108)
  at scala.collection.Iterator$$anon$11.nextCur(Iterator.scala:484)
  at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:490)
  at scala.collection.Iterator$ConcatIterator.hasNext(Iterator.scala:222)
  at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)
  ...
```

This PR modifies the `hasNestedGenerator` code in `ExtractGenerator` so that it correctly catches nested inner generators.

### Why are the changes needed?

A bug fix.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added tests.

Closes #27750 from maropu/HandleNestedGenerators.

Authored-by: Takeshi Yamamuro <yamamuro@apache.org>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This patch fixes several incorrect uses of `assume()` in our tests.

If a call to `assume(condition)` fails then it will cause the test to be marked as skipped instead of failed: this feature allows test cases to be skipped if certain prerequisites are missing. For example, we use this to skip certain tests when running on Windows (or when Python dependencies are unavailable).

In contrast, `assert(condition)` will fail the test if the condition doesn't hold.

If `assume()` is accidentally substituted for `assert()` then the resulting test will be marked as skipped in cases where it should have failed, undermining the purpose of the test.

This patch fixes several such cases, replacing certain `assume()` calls with `assert()`.

Credit to ahirreddy for spotting this problem.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests.

Closes #27754 from JoshRosen/fix-assume-vs-assert.

Lead-authored-by: Josh Rosen <rosenville@gmail.com>

Co-authored-by: Josh Rosen <joshrosen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This patch fixes a bug in UnsafeRow, which fails to handle UDTs specifically in `isFixedLength` and `isMutable`. These methods don't check the underlying SQL type of a UDT and always treat a UDT as variable-length and non-mutable.

This causes no issue when a UDT represents a complicated type, but when a UDT represents a type whose SQL type is fixed-length, it opens the door to correctness issues, because this information sometimes decides how the value is handled.

We got a report on the user mailing list suspecting that mapGroupsWithState handled UDTs incorrectly, but after some investigation the problem turned out to come from GenerateUnsafeRowJoiner in the shuffle phase.

0e2ca11d80/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/codegen/GenerateUnsafeRowJoiner.scala (L32-L43)

Here, updating the position should not happen for a fixed-length column, but due to this bug, the value of a UDT whose SQL type is fixed-length would be modified, which corrupts the value.

### Why are the changes needed?

Misclassifying a UDT as variable-length can corrupt the value when the row is passed as input to GenerateUnsafeRowJoiner, which is a correctness issue.
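
The shape of the fix can be sketched with a toy type hierarchy. Nothing below is Spark's actual `DataType` or `UnsafeRow` code; all names are hypothetical. The point is only that a UDT should be classified by its underlying SQL type.

```scala
// Toy model; these are not Spark's DataType classes.
object UdtSketch {
  sealed trait DataType
  case object IntType extends DataType
  case object LongType extends DataType
  case object StringType extends DataType
  // A user-defined type is stored as its underlying SQL type.
  final case class Udt(sqlType: DataType) extends DataType

  def isFixedLength(dt: DataType): Boolean = dt match {
    case Udt(sqlType)       => isFixedLength(sqlType) // unwrap instead of assuming variable-length
    case IntType | LongType => true
    case StringType         => false
  }
}
```

Under this sketch, `Udt(LongType)` is fixed-length while `Udt(StringType)` is not, which is the distinction that was lost when every UDT was treated as variable-length.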

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New UT added.

Closes #27747 from HeartSaVioR/SPARK-30993.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Rename `spark.sql.legacy.addDirectory.recursive.enabled` to `spark.sql.legacy.addSingleFileInAddFile`

### Why are the changes needed?

To follow the naming convention

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27725 from iRakson/SPARK-30234_CONFIG.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Renamed configuration from `spark.sql.legacy.useHashOnMapType` to `spark.sql.legacy.allowHashOnMapType`.

### Why are the changes needed?

Better readability of configuration.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs.

Closes #27719 from iRakson/SPARK-27619_FOLLOWUP.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When `CREATE TABLE` SQL statement does not specify the provider, leave it to the catalog implementations to decide.

### Why are the changes needed?

It's super weird if we set the default provider to parquet when creating a table in a JDBC catalog.

### Does this PR introduce any user-facing change?

Yes, v2 catalog will not see a "provider" property in table properties if it's not specified in `CREATE TABLE` SQL statement. V2 catalog is new in 3.0.

### How was this patch tested?

new tests

Closes #27650 from cloud-fan/create_table.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

When aliasing in nested column pruning in Project on top of Generate, we should exclude Generate outputs.

### Why are the changes needed?

Right now we would prune nested columns in Project on top of Generate. It is possible that referred nested columns are from Generate's outputs, not from its child. To address that case, we should exclude Generate outputs when aliasing in nested column pruning.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Unit test.

Closes #27702 from viirya/fix-nested-pruning.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

In this PR, I addressed the comment from https://github.com/apache/spark/pull/27672#discussion_r383719562 to use `intercept` instead of `try-catch` blocks to assert failures in the IntervalUtilsSuite.

### Why are the changes needed?

improve tests

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Nah

Closes #27700 from yaooqinn/intervaltest.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Takeshi Yamamuro <yamamuro@apache.org>

### What changes were proposed in this pull request?

This PR follows https://github.com/apache/spark/pull/25074 and improves the implementation.

### Why are the changes needed?

Improve code.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UT

Closes #27699 from beliefer/improve-boolean-test.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

`hash()` and `xxhash64()` can no longer be used on elements of `MapType`. A new configuration `spark.sql.legacy.useHashOnMapType` is introduced to allow users to restore the previous behaviour.

When `spark.sql.legacy.useHashOnMapType` is set to false:

```
scala> spark.sql("select hash(map())");
org.apache.spark.sql.AnalysisException: cannot resolve 'hash(map())' due to data type mismatch: input to function hash cannot contain elements of MapType; line 1 pos 7;
'Project [unresolvedalias(hash(map(), 42), None)]
+- OneRowRelation
```

When `spark.sql.legacy.useHashOnMapType` is set to true:

```
scala> spark.sql("set spark.sql.legacy.useHashOnMapType=true");
res3: org.apache.spark.sql.DataFrame = [key: string, value: string]

scala> spark.sql("select hash(map())").first()
res4: org.apache.spark.sql.Row = [42]
```

### Why are the changes needed?

As discussed in JIRA, Spark SQL's map hash codes depend on the insertion order of the entries, which is inconsistent with normal Scala behaviour and might confuse users.

Code snippet from JIRA:

```
val a = spark.createDataset(Map(1->1, 2->2) :: Nil)
val b = spark.createDataset(Map(2->2, 1->1) :: Nil)

// Demonstration of how Scala Map equality is unaffected by insertion order:
assert(Map(1->1, 2->2).hashCode() == Map(2->2, 1->1).hashCode())
assert(Map(1->1, 2->2) == Map(2->2, 1->1))
assert(a.first() == b.first())

// In contrast, this will print two different hashcodes:
println(Seq(a, b).map(_.selectExpr("hash(*)").first()))
```

Also `MapType` is prohibited for aggregation / joins / equality comparisons #7819 and set operations #17236.

### Does this PR introduce any user-facing change?

Yes. Now users cannot use hash functions on elements of `MapType`. To restore the previous behaviour, set `spark.sql.legacy.useHashOnMapType` to true.

### How was this patch tested?

UT added.

Closes #27580 from iRakson/SPARK-27619.

Authored-by: iRakson <raksonrakesh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR proposes to fix an issue where qualified columns are not matched for v2 tables if current catalog/namespace are used.

For v1 tables, you can currently perform the following:

```SQL
SELECT default.t.id FROM t;
```

For v2 tables, the following fails:

```SQL
USE testcat.ns1.ns2;
SELECT testcat.ns1.ns2.t.id FROM t;
org.apache.spark.sql.AnalysisException: cannot resolve '`testcat.ns1.ns2.t.id`' given input columns: [t.id, t.point]; line 1 pos 7;
```

### Why are the changes needed?

It is a bug since qualified column names cannot match if current catalog/namespace are used.

### Does this PR introduce any user-facing change?

Yes, now the following works:

```SQL
USE testcat.ns1.ns2;
SELECT testcat.ns1.ns2.t.id FROM t;
```

### How was this patch tested?

Added new tests

Closes #27532 from imback82/qualifed_col_respect_current.

Authored-by: Terry Kim <yuminkim@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch is to bump the master branch version to 3.1.0-SNAPSHOT.

### Why are the changes needed?

N/A

### Does this PR introduce any user-facing change?

N/A

### How was this patch tested?

N/A

Closes #27698 from gatorsmile/updateVersion.

Authored-by: gatorsmile <gatorsmile@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Use the average size of the non-skewed partitions as the target size when splitting skewed partitions, instead of ADAPTIVE_EXECUTION_SKEWED_PARTITION_SIZE_THRESHOLD

### Why are the changes needed?

The goal of skew join optimization is to make the data distribution more even. So it makes more sense to use the average size of the non-skewed partitions as the target size.
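
As a back-of-the-envelope sketch of that rule: the helper names and the skew predicate below are hypothetical, and the real splitting logic works on map output statistics rather than a plain list of sizes.

```scala
// Hypothetical helpers; sizes are partition sizes in bytes.
object SkewSplitSketch {
  // Target size = average size of the partitions that are NOT skewed.
  def targetSize(sizes: Seq[Long], isSkewed: Long => Boolean): Long = {
    val nonSkewed = sizes.filterNot(isSkewed)
    require(nonSkewed.nonEmpty, "need at least one non-skewed partition")
    math.max(1L, nonSkewed.sum / nonSkewed.size)
  }

  // Number of pieces a skewed partition is cut into so each piece is close to the target.
  def numSplits(size: Long, target: Long): Int =
    math.ceil(size.toDouble / target).toInt
}
```

For sizes `100, 120, 80, 1000` with partitions above 500 counted as skewed, the target is 100, so the 1000-byte partition is split into 10 pieces that look like its non-skewed neighbours.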

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

existing tests

Closes #27669 from cloud-fan/aqe.

Authored-by: Wenchen Fan <wenchen@databricks.com>

Signed-off-by: Xiao Li <gatorsmile@gmail.com>

### What changes were proposed in this pull request?

In the PR, I propose to replace:

1. `millisToDays()` by `microsToDays()`, which accepts microseconds since the epoch and returns days since the epoch in the specified time zone. The latter is the internal representation of Catalyst's DateType.

2. `daysToMillis()` by `daysToMicros()`, which accepts days since the epoch in some time zone and returns the number of microseconds since the epoch. The latter is the internal representation of Catalyst's TimestampType.

3. `fromMillis()` by `millisToMicros()`

4. `toMillis()` by `microsToMillis()`
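
The two day conversions can be sketched with `java.time` alone. This is an illustration of the contract described above, not the `DateTimeUtils` implementation; the enclosing object name is hypothetical.

```scala
import java.time.{Instant, LocalDate, ZoneId}
import java.time.temporal.ChronoUnit

object MicrosDaysSketch {
  // Microseconds since the epoch -> days since the epoch, as seen in `zone`.
  def microsToDays(micros: Long, zone: ZoneId): Int = {
    val instant = Instant.EPOCH.plus(micros, ChronoUnit.MICROS)
    Math.toIntExact(instant.atZone(zone).toLocalDate.toEpochDay)
  }

  // Days since the epoch in `zone` -> microseconds since the epoch at local midnight.
  def daysToMicros(days: Int, zone: ZoneId): Long = {
    val instant = LocalDate.ofEpochDay(days.toLong).atStartOfDay(zone).toInstant
    ChronoUnit.MICROS.between(Instant.EPOCH, instant)
  }
}
```

The time zone matters: microsecond `0` is day `0` in UTC, but it still belongs to the previous local day in `America/Los_Angeles`.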

### Why are the changes needed?

Spark stores timestamps with microsecond precision, so there is no need to convert dates to milliseconds and then to microseconds. For examples, look at the DateTimeUtils functions `monthsBetween()` and `truncTimestamp()`.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By existing test suites UnivocityParserSuite, DateExpressionsSuite, ComputeCurrentTimeSuite, DateTimeUtilsSuite, DateFunctionsSuite, JsonSuite, StreamSuite.

Closes #27618 from MaxGekk/replace-millis-by-micros.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

The current overflow behavior of interval multiply and divide follows the ANSI SQL standard. That is consistent with other operations when `spark.sql.ansi.enabled` is true, but not when `spark.sql.ansi.enabled` is false.

When `spark.sql.ansi.enabled` is false, the factor is a double value, so these operations should use Java's rounding or truncation behavior for casting doubles to integral types. Division by zero returns `null`. We also follow the natural rules for intervals as defined in the Gregorian calendar, so we do not add the month fraction to days, but we do add the days fraction to microseconds.
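
The non-ANSI rules can be sketched on a toy interval type. The `Interval` case class and helper names are hypothetical, and `Option` stands in for the SQL `null` result; the sketch relies on the JVM's saturating double-to-integral casts.

```scala
// Toy model of the non-ANSI arithmetic; not Spark's CalendarInterval or IntervalUtils.
object IntervalMathSketch {
  val MicrosPerDay: Long = 86400000000L

  final case class Interval(months: Int, days: Int, micros: Long)

  private def fromDoubles(months: Double, days: Double, micros: Double): Interval = {
    val truncatedDays = days.toInt // JVM cast: truncates, and saturates on overflow
    // The fractional part of days is carried into microseconds; the month fraction is dropped.
    val totalMicros = micros + MicrosPerDay * (days - truncatedDays)
    Interval(months.toInt, truncatedDays, totalMicros.round)
  }

  def multiply(i: Interval, factor: Double): Interval =
    fromDoubles(i.months * factor, i.days * factor, i.micros * factor)

  // None plays the role of SQL NULL for division by zero.
  def divide(i: Interval, divisor: Double): Option[Interval] =
    if (divisor == 0.0) None
    else Some(fromDoubles(i.months / divisor, i.days / divisor, i.micros / divisor))
}
```

Multiplying one month and one day by 1.5 keeps one month (the half month is dropped) and one day, and turns the half day into 12 hours of microseconds.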

### Why are the changes needed?

Make interval multiply and divide's overflow behavior consistent with other interval operations

### Does this PR introduce any user-facing change?

no, these are new features in 3.0

### How was this patch tested?

add uts

Closes #27672 from yaooqinn/SPARK-30919.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR fixes a bug in nested column aliasing by taking the data type of the referenced nested fields into account when calculating the number of extracted columns. After this PR this query runs without issues:

```
SELECT explodedvalue.*
FROM VALUES array(named_struct('nested', named_struct('a', 1, 'b', 2))) AS (value)
LATERAL VIEW explode(value) AS explodedvalue
```

This is a regression from Spark 2.4.

### Why are the changes needed?

To fix a bug.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Added new UT.

Closes #27675 from peter-toth/SPARK-30870.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

This PR adds a new `nullOnOverflow` parameter to `MakeDecimal` so that its value no longer depends on `SQLConf.get` and cannot change during planning.

### Why are the changes needed?

This avoids the issue where the configuration changes between different phases of planning, which can silently break a query plan and lead to crashes or data corruption.
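
The difference between reading the configuration late and capturing it as a constructor parameter can be sketched as follows. `FakeConf` and both case classes are hypothetical stand-ins, not `SQLConf` or `MakeDecimal`, and the mapping from the flag to `nullOnOverflow` is only illustrative.

```scala
object ConfCaptureSketch {
  // Stand-in for a mutable session configuration (hypothetical, not SQLConf).
  object FakeConf { @volatile var ansiEnabled: Boolean = false }

  // Reads the configuration on every access, so behavior can drift after the node is built.
  final case class ReadsConfLate(value: Long) {
    def nullOnOverflow: Boolean = !FakeConf.ansiEnabled
  }

  // Captures the configuration once, at construction time, as an explicit parameter.
  final case class CapturesConf(value: Long, nullOnOverflow: Boolean = !FakeConf.ansiEnabled)
}
```

If `FakeConf.ansiEnabled` flips after both nodes are created, `ReadsConfLate` changes its answer while `CapturesConf` keeps the value it was built with, which is the stability the parameter is meant to provide.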

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #27656 from peter-toth/SPARK-30898.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds a new `followThreeValuedLogic` parameter to `ArrayExists` so that its value no longer depends on `SQLConf.get` and cannot change during planning.

### Why are the changes needed?

This avoids the issue where the configuration changes between different phases of planning, which can silently break a query plan and lead to crashes or data corruption.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #27655 from peter-toth/SPARK-30897.

Authored-by: Peter Toth <peter.toth@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Merge Into is currently missing additional validation around:

1. The lack of any WHEN statements

2. The first WHEN MATCHED statement needs to have a condition if there are two WHEN MATCHED statements.

3. Single use of UPDATE/DELETE

This PR introduces these validations.

(1) is required, because otherwise the MERGE statement is useless.

(2) is required, because otherwise the second WHEN MATCHED clause becomes dead code.

(3) is up for debate, but the idea there is that a single expression should be sufficient to specify when you would like to update or delete your records. We restrict it for now to reduce surface area and ambiguity.
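The three checks can be sketched as follows. This is an illustrative Java model only: `MergeChecksSketch`, `MatchedClause`, and the error strings are hypothetical names, and the reading of (3) as "at most one UPDATE and at most one DELETE among the WHEN MATCHED clauses" is an assumption.

```java
import java.util.ArrayList;
import java.util.List;

public class MergeChecksSketch {
    public enum Action { UPDATE, DELETE }

    // A WHEN MATCHED clause: an action plus an optional condition (null if absent).
    public static final class MatchedClause {
        final Action action;
        final String condition;

        public MatchedClause(Action action, String condition) {
            this.action = action;
            this.condition = condition;
        }
    }

    // Returns the violated rules; an empty list means the statement passes.
    public static List<String> validate(List<MatchedClause> matched, int notMatchedCount) {
        List<String> errors = new ArrayList<>();
        // (1) A MERGE without any WHEN clause does nothing.
        if (matched.isEmpty() && notMatchedCount == 0) {
            errors.add("at least one WHEN clause is required");
        }
        // (2) With two WHEN MATCHED clauses, an unconditional first clause
        // would make the second one unreachable.
        if (matched.size() == 2 && matched.get(0).condition == null) {
            errors.add("the first of two WHEN MATCHED clauses needs a condition");
        }
        // (3) Assumed reading: UPDATE and DELETE may each appear at most once.
        long updates = matched.stream().filter(c -> c.action == Action.UPDATE).count();
        long deletes = matched.stream().filter(c -> c.action == Action.DELETE).count();
        if (updates > 1 || deletes > 1) {
            errors.add("UPDATE and DELETE may each be used only once");
        }
        return errors;
    }

    public static void main(String[] args) {
        List<MatchedClause> clauses = new ArrayList<>();
        clauses.add(new MatchedClause(Action.DELETE, null));
        clauses.add(new MatchedClause(Action.UPDATE, "t.flag = true"));
        System.out.println(validate(clauses, 0));
    }
}
```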

### Why are the changes needed?

To make it easier for DataSource developers to build implementations for MERGE.

### Does this PR introduce any user-facing change?

Adds additional validation checks

### How was this patch tested?

Unit tests

Closes #27677 from brkyvz/mergeChecks.

Authored-by: Burak Yavuz <brkyvz@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When skewed join optimization splits out many skewed readers, the plan may become very large and cannot be shown in the UI quickly. The config `spark.sql.adaptive.skewedJoinOptimization.skewedPartitionMaxSplits` was introduced to resolve this UI display issue. Since [PR#27493](https://github.com/apache/spark/pull/27493) combined the skewed readers into one, this config is no longer needed.

### Why are the changes needed?

To remove the unnecessary config.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

existing test

Closes #27673 from JkSelf/removeMaxSplitNum.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

- Use `Math.multiplyExact()` in `DateTimeUtils.fromMillis()` to prevent silent overflow when converting milliseconds to microseconds.

- Use `DateTimeUtils.fromMillis()` in all places where milliseconds are converted to microseconds

- Use `DateTimeUtils.toMillis()` in all places where microseconds are converted to milliseconds

### Why are the changes needed?

1. To prevent silent arithmetic overflow while multiplying by 1000 in `fromMillis()`. Instead, `new ArithmeticException("long overflow")` will be thrown and handled accordingly.

2. To correctly round microseconds when converting to milliseconds. For example, `1965-01-01 10:11:12.123456` is represented as `-157700927876544` in microsecond precision. In millisecond precision, the above needs to be represented as `-157700927877`, or `1965-01-01 10:11:12.123`.
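Both points can be illustrated with a small, self-contained Java sketch. The class name `MillisMicrosSketch` is hypothetical, and using `Math.floorDiv` for the rounding is an assumption about how such a conversion can be written, not a quote of `DateTimeUtils`.

```java
public class MillisMicrosSketch {
    private static final long MICROS_PER_MILLIS = 1000L;

    // Throws ArithmeticException("long overflow") instead of wrapping around silently.
    public static long fromMillis(long millis) {
        return Math.multiplyExact(millis, MICROS_PER_MILLIS);
    }

    // Rounds toward negative infinity, so timestamps before the epoch
    // land on the correct millisecond.
    public static long toMillis(long micros) {
        return Math.floorDiv(micros, MICROS_PER_MILLIS);
    }

    public static void main(String[] args) {
        long micros = -157700927876544L; // the example value from the text above
        System.out.println(micros / MICROS_PER_MILLIS); // -157700927876 (truncated toward zero)
        System.out.println(toMillis(micros));           // -157700927877 (floored)
        try {
            fromMillis(Long.MAX_VALUE);
        } catch (ArithmeticException e) {
            System.out.println(e.getMessage());         // long overflow
        }
    }
}
```

Plain `/` truncates toward zero, which for negative values is off by one millisecond compared with the floored result the example expects.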

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

By `TimestampFormatterSuite`, `CastSuite`, `DateExpressionsSuite`, `IntervalExpressionsSuite`, `ExpressionParserSuite`, `DateTimeUtilsSuite`, `IntervalUtilsSuite`

Closes #27676 from MaxGekk/millis-2-micros-overflow.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In this PR, I propose to explain, in the description of `removedSQLConfigs`, when removed SQL configs should NOT be placed in the map.

### Why are the changes needed?

To make it clearer when SQL configs should be added to `removedSQLConfigs`. Recently, `spark.sql.variable.substitute.depth` was removed from the map by #27646 because it contradicts the condition described in this PR.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

By `./dev/scalastyle`

Closes #27653 from MaxGekk/removedSQLConfigs-comment.

Authored-by: Maxim Gekk <max.gekk@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Spark's `ConfigEntry` and `ConfigBuilder` are missing the Spark version in which each configuration was released. This is unhelpful for Spark users when they visit the Spark configuration page:

http://spark.apache.org/docs/latest/configuration.html

The new Spark SQL config docs look like:

```

> SET -v

spark.sql.adaptive.enabled false When true, enable adaptive query execution.

spark.sql.adaptive.nonEmptyPartitionRatioForBroadcastJoin 0.2 The relation with a non-empty partition ratio lower than this config will not be considered as the build side of a broadcast-hash join in adaptive execution regardless of its size.This configuration only has an effect when 'spark.sql.adaptive.enabled' is enabled.

spark.sql.adaptive.optimizeSkewedJoin.enabled true When true and adaptive execution is enabled, a skewed join is automatically handled at runtime.

spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionFactor 10 A partition is considered as a skewed partition if its size is larger than this factor multiple the median partition size and also larger than spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionSizeThreshold

spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionMaxSplits 5 Configures the maximum number of task to handle a skewed partition in adaptive skewedjoin.

spark.sql.adaptive.optimizeSkewedJoin.skewedPartitionSizeThreshold 64MB Configures the minimum size in bytes for a partition that is considered as a skewed partition in adaptive skewed join.

spark.sql.adaptive.shuffle.fetchShuffleBlocksInBatch.enabled true Whether to fetch the continuous shuffle blocks in batch. Instead of fetching blocks one by one, fetching continuous shuffle blocks for the same map task in batch can reduce IO and improve performance. Note, multiple continuous blocks exist in single fetch request only happen when 'spark.sql.adaptive.enabled' and 'spark.sql.adaptive.shuffle.reducePostShufflePartitions.enabled' is enabled, this feature also depends on a relocatable serializer, the concatenation support codec in use and the new version shuffle fetch protocol.

spark.sql.adaptive.shuffle.localShuffleReader.enabled true When true and 'spark.sql.adaptive.enabled' is enabled, this enables the optimization of converting the shuffle reader to local shuffle reader for the shuffle exchange of the broadcast hash join in probe side.

spark.sql.adaptive.shuffle.maxNumPostShufflePartitions <undefined> The advisory maximum number of post-shuffle partitions used in adaptive execution. This is used as the initial number of pre-shuffle partitions. By default it equals to spark.sql.shuffle.partitions. This configuration only has an effect when 'spark.sql.adaptive.enabled' and 'spark.sql.adaptive.shuffle.reducePostShufflePartitions.enabled' is enabled.

```

**Note**: Because there are so many configuration items that are exposed and require a lot of finishing, I will add the version numbers of these configuration items in another PR.

### Why are the changes needed?

Supplemental configuration version information.

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

Existing UT

Closes #27592 from beliefer/add-version-to-config.

Authored-by: beliefer <beliefer@163.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

The style of `EXPLAIN FORMATTED` output needs to be improved. We’ve already got some observations/ideas in

https://github.com/apache/spark/pull/27368#discussion_r376694496

https://github.com/apache/spark/pull/27368#discussion_r376927143

Observations/Ideas:

1. Using a comma as the separator is not clear, especially since commas are used inside the expressions too.

2. Show the column counts first? For example, `Results [4]: …`

3. Currently the attribute names are automatically generated; this needs to be refined.

4. Add an arguments field to the common implementations, as `EXPLAIN EXTENDED` does by calling `argString` in `TreeNode.simpleString`. This will eliminate most of the existing minor differences between `EXPLAIN EXTENDED` and `EXPLAIN FORMATTED`.

5. Another improvement we can make: the generated alias shouldn't include the attribute id. `collect_set(val, 0, 0)#123` looks clearer than `collect_set(val#456, 0, 0)#123`.

This PR currently addresses comments 2 & 4, and is open for more discussion on improving readability.

### Why are the changes needed?

The readability of `EXPLAIN FORMATTED` needs to be improved, which will help users better understand the query plan.

### Does this PR introduce any user-facing change?

Yes, `EXPLAIN FORMATTED` output style changed.

### How was this patch tested?

Updated the expected results of the test cases in explain.sql.

Closes #27509 from Eric5553/ExplainFormattedRefine.

Authored-by: Eric Wu <492960551@qq.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

- Add missing `since` annotation.

- Don't show classes under `org.apache.spark.sql.dynamicpruning` package in API docs.

- Fix the scope of `xxxExactNumeric` to remove it from the API docs.

### Why are the changes needed?

Avoid leaking APIs unintentionally in Spark 3.0.0.

### Does this PR introduce any user-facing change?

No. All these changes are to avoid leaking APIs unintentionally in Spark 3.0.0.

### How was this patch tested?

Manually generated the API docs and verified the above issues have been fixed.

Closes #27560 from xuanyuanking/SPARK-30809.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the PR, I propose to add the `legacySizeOfNull` parameter to the `Size` expression, and pass the value of `spark.sql.legacy.sizeOfNull` if `legacySizeOfNull` is not provided on creation of `Size`.

### Why are the changes needed?