### What changes were proposed in this pull request?

Improve the column name and tooltips for the Fair Scheduler Pool table.

### Why are the changes needed?

Rename the `SchedulingMode` column to 'Scheduling Mode' and add tooltips for Minimum Share, Pool Weight, and Scheduling Mode. End users need meaningful tooltips to understand these columns.

### Does this PR introduce any user-facing change?

YES

### How was this patch tested?

Manual Testing.

Closes #27047 from 07ARB/SPARK-30384.

Lead-authored-by: 07ARB <ankitrajboudh@gmail.com>

Co-authored-by: Ankitraj <8948111+07ARB@users.noreply.github.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

There is a deadlock between `LiveListenerBus#stop` and `AsyncEventQueue#removeListenerOnError`.

We can reproduce as follows:

1. Post some events to `LiveListenerBus`

2. Call `LiveListenerBus#stop`, which holds the synchronized lock of `bus` (5e92301723/core/src/main/scala/org/apache/spark/scheduler/LiveListenerBus.scala (L229)), waits until all the events are processed by listeners, and then removes all the queues

3. The event queue drains its events by posting them to its listeners. If a listener is interrupted, the queue calls `AsyncEventQueue#removeListenerOnError`, which in turn calls `bus.removeListener` (7b1b60c758/core/src/main/scala/org/apache/spark/scheduler/AsyncEventQueue.scala (L207)) and tries to acquire the synchronized lock of `bus`, resulting in a deadlock

This PR removes the `synchronized` from `LiveListenerBus.stop` because underlying data structures themselves are thread-safe.
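
The locking pattern can be illustrated with a minimal Java sketch. `MiniBus` and all of its methods are hypothetical stand-ins, not Spark's classes; the sketch only assumes that the listener collection is itself thread-safe, so `stop` can wait for the dispatcher without holding the bus monitor.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical stand-in for a listener bus; not Spark's LiveListenerBus.
final class MiniBus {
    // Thread-safe collection, so readers and writers need no external lock.
    private final List<Runnable> listeners = new CopyOnWriteArrayList<>();
    private final AtomicBoolean stopped = new AtomicBoolean(false);

    void addListener(Runnable listener) {
        listeners.add(listener);
    }

    // Takes the bus monitor, like the removal path described in step 3.
    synchronized void removeListener(Runnable listener) {
        listeners.remove(listener);
    }

    // Deliberately NOT synchronized: it waits for the dispatcher without holding
    // the bus monitor, so the dispatcher can still enter removeListener.
    void stop(Thread dispatcher) {
        if (!stopped.compareAndSet(false, true)) {
            return; // already stopped
        }
        try {
            dispatcher.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        listeners.clear();
    }

    int listenerCount() {
        return listeners.size();
    }

    // Runs a dispatcher that removes a listener while stop() is waiting for it.
    static int demo() {
        MiniBus bus = new MiniBus();
        Runnable failing = () -> { };
        bus.addListener(failing);
        Thread dispatcher = new Thread(() -> bus.removeListener(failing));
        dispatcher.start();
        bus.stop(dispatcher);
        return bus.listenerCount();
    }
}
```

If `stop` were declared `synchronized` and acquired the monitor before the dispatcher reached `removeListener`, the `join` would never return, which is the shape of the deadlock described in the steps above.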

### Why are the changes needed?

To fix the deadlock.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

New UT.

Closes #26924 from wangshuo128/event-queue-race-condition.

Authored-by: Wang Shuo <wangshuo128@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This patch proposes to convert only the first few elements of collection accumulators in `LiveEntityHelpers.newAccumulatorInfos`.

### Why are the changes needed?

One Spark job on our cluster uses a collection accumulator to collect data and encountered an exception like:

```
java.lang.OutOfMemoryError: Java heap space
    at java.util.Arrays.copyOf(Arrays.java:3332)
    at java.lang.AbstractStringBuilder.ensureCapacityInternal(AbstractStringBuilder.java:124)
    at java.lang.AbstractStringBuilder.append(AbstractStringBuilder.java:448)
    at java.lang.StringBuilder.append(StringBuilder.java:136)
    at java.lang.StringBuilder.append(StringBuilder.java:131)
    at java.util.AbstractCollection.toString(AbstractCollection.java:462)
    at java.util.Collections$UnmodifiableCollection.toString(Collections.java:1035)
    at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2$$anonfun$apply$3.apply(LiveEntity.scala:596)
    at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2$$anonfun$apply$3.apply(LiveEntity.scala:596)
    at scala.Option.map(Option.scala:146)
    at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2.apply(LiveEntity.scala:596)
    at org.apache.spark.status.LiveEntityHelpers$$anonfun$newAccumulatorInfos$2.apply(LiveEntity.scala:591)
```

`LiveEntityHelpers.newAccumulatorInfos` converts `AccumulableInfo`s to `v1.AccumulableInfo` by calling `toString` on the accumulator's value. For a collection accumulator, the string representation can take much more memory than the collection itself (for example, a collection accumulator of long values) and cause an OOM (in this job, the driver memory is 6g).

The results of `newAccumulatorInfos` appear to be used in the API and UI. For such usage, it also does not make sense to produce a very long string containing the complete collection accumulator.
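
A minimal sketch of the idea, assuming a hypothetical helper named `AccumulatorPreview.preview` and an arbitrary output format (neither is taken from the patch): only the first few elements are rendered, so the string stays small no matter how large the collection is.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.Iterator;
import java.util.List;

// Hypothetical helper; the name and the output format are illustrative only.
final class AccumulatorPreview {
    // Renders at most maxElements elements of a collection value.
    static String preview(Object value, int maxElements) {
        if (maxElements < 1) {
            throw new IllegalArgumentException("maxElements must be at least 1");
        }
        if (!(value instanceof Collection)) {
            return String.valueOf(value); // non-collection values are rendered in full
        }
        Collection<?> items = (Collection<?>) value;
        if (items.size() <= maxElements) {
            return items.toString();
        }
        // Copy only the head of the collection before building the string.
        List<Object> head = new ArrayList<>(maxElements);
        Iterator<?> it = items.iterator();
        while (head.size() < maxElements && it.hasNext()) {
            head.add(it.next());
        }
        String rendered = head.toString(); // e.g. "[1, 2]"
        int remaining = items.size() - maxElements;
        return rendered.substring(0, rendered.length() - 1)
            + ", ... " + remaining + " more items]";
    }
}
```

For a five-element list and `maxElements = 2`, this sketch returns `[1, 2, ... 3 more items]`.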

### Does this PR introduce any user-facing change?

Yes. A collection accumulator now shows only its first few elements in the API and UI.

### How was this patch tested?

Unit test.

Manual test. Launched a Spark shell and ran:

```scala
val accum = sc.collectionAccumulator[Long]("Collection Accumulator Example")
sc.range(0, 10000, 1, 1).foreach(x => accum.add(x))
accum.value
```

<img width="2533" alt="Screen Shot 2019-12-30 at 2 03 43 PM" src="https://user-images.githubusercontent.com/68855/71602488-6eb2c400-2b0d-11ea-8725-dba36478198f.png">

Closes #27038 from viirya/partial-collect-accu.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

The UI and event logs redact sensitive information. But the REST API described at https://spark.apache.org/docs/latest/monitoring.html#rest-api, specifically `/applications/[app-id]/environment`, does not, which is a security issue.

### What changes were proposed in this pull request?

The REST API response is redacted before it is sent.

### Why are the changes needed?

If no redaction is done for a REST API call, it can leak sensitive information.
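
As a rough illustration of key-based redaction, the sketch below masks the value of every property whose key matches a pattern. `EnvRedactor`, the replacement text, and the pattern used in the usage note are assumptions made for this example; they are not taken from the patch.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical helper; not the code path changed by this PR.
final class EnvRedactor {
    // Placeholder text chosen for this sketch.
    static final String REPLACEMENT = "*********(redacted)";

    // Returns a copy of props in which values of sensitive keys are masked.
    static Map<String, String> redact(Pattern sensitiveKey, Map<String, String> props) {
        Map<String, String> out = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : props.entrySet()) {
            boolean sensitive = sensitiveKey.matcher(e.getKey()).find();
            out.put(e.getKey(), sensitive ? REPLACEMENT : e.getValue());
        }
        return out;
    }
}
```

With an assumed pattern such as `(?i)secret|password`, a key like `spark.ssl.keyPassword` would be masked while `spark.app.name` passes through unchanged.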

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Tested manually

Closes #27018 from ajithme/redactrest.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

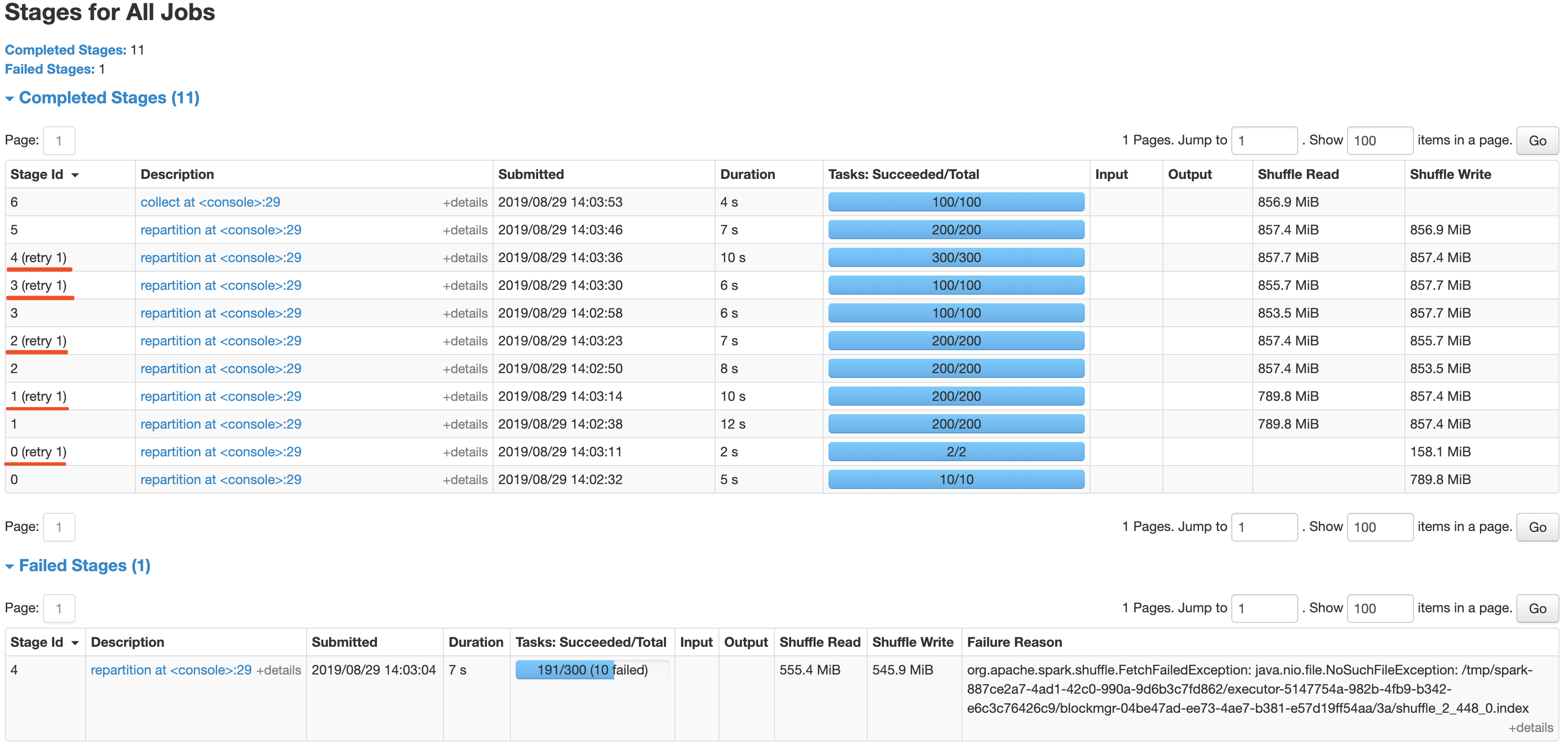

### What changes were proposed in this pull request?

Remove meaningless tooltips from all pages.

### Why are the changes needed?

If we can't come up with a meaningful tooltip, the tooltip does not need to be added.

### Does this PR introduce any user-facing change?

Yes. Tooltips like the one highlighted in the image above are removed.

### How was this patch tested?

Manual test.

Closes #27043 from 07ARB/SPARK-30383.

Authored-by: 07ARB <ankitrajboudh@gmail.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This patch fixes the flaky test failure on MasterSuite, "SPARK-27510: Master should avoid dead loop while launching executor failed in Worker".

Ironically, the test failed because it ran too fast: the default interval of `eventually` is 15 ms, but it took only 8 ms from submitting the driver to removing the app from the master.

```
19/12/23 15:45:06.533 dispatcher-event-loop-6 INFO Master: Registering worker localhost:9999 with 10 cores, 3.6 GiB RAM
19/12/23 15:45:06.534 dispatcher-event-loop-6 INFO Master: Driver submitted org.apache.spark.FakeClass
19/12/23 15:45:06.535 dispatcher-event-loop-6 INFO Master: Launching driver driver-20191223154506-0000 on worker 10001
19/12/23 15:45:06.536 dispatcher-event-loop-9 INFO Master: Registering app name
19/12/23 15:45:06.537 dispatcher-event-loop-9 INFO Master: Registered app name with ID app-20191223154506-0000
19/12/23 15:45:06.537 dispatcher-event-loop-9 INFO Master: Launching executor app-20191223154506-0000/0 on worker 10001
19/12/23 15:45:06.537 dispatcher-event-loop-10 INFO Master: Removing executor app-20191223154506-0000/0 because it is FAILED
...
19/12/23 15:45:06.542 dispatcher-event-loop-19 ERROR Master: Application name with ID app-20191223154506-0000 failed 10 times; removing it
```

Given that the interval is already tiny, instead of lowering it, the patch also accounts for the above case when verifying the status.

### Why are the changes needed?

We observed an intermittent test failure in the Jenkins build, which should be fixed.

https://amplab.cs.berkeley.edu/jenkins/job/SparkPullRequestBuilder/115664/testReport/

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Modified UT.

Closes #27004 from HeartSaVioR/SPARK-30348.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Remove a lost executor from `CoarseGrainedSchedulerBackend` when `HeartbeatReceiver` recognizes it as lost.

### Why are the changes needed?

Currently, an application may hang if we don't remove a lost executor from `CoarseGrainedSchedulerBackend`. This can happen as follows:

1) In `expireDeadHosts()`, `HeartbeatReceiver` calls `scheduler.executorLost()`;

2) Before `HeartbeatReceiver` calls `sc.killAndReplaceExecutor()` (which would mark the lost executor as "pendingToRemove") in a separate thread, `CoarseGrainedSchedulerBackend` may begin to launch tasks on that executor without realizing that it has been lost.

3) If that lost executor doesn't shut down gracefully, `CoarseGrainedSchedulerBackend` may never receive a disconnect event. As a result, tasks launched on that lost executor become orphans, while the driver still thinks that those tasks are running and waits forever.

Removing the lost executor from `CoarseGrainedSchedulerBackend` lets `TaskSetManager` mark those tasks as failed, which avoids the app hang. Furthermore, it cleans up records in `executorDataMap`, which might otherwise never be removed in such a case.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Updated existing tests.

Close #24350.

Closes #26980 from Ngone51/SPARK-27348.

Lead-authored-by: yi.wu <yi.wu@databricks.com>

Co-authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

Currently, the Spark history server displays the classpath entries in the Environment tab with the classpaths redacted, because the values are redacted when they are written to the event log file. In the UI of a running application, however, the same entries are not redacted. Redacting classpath entries is not needed and can be avoided.

### What changes were proposed in this pull request?

Event logs will not redact the classpath entries.

### Why are the changes needed?

Redaction of classpath entries is not needed.

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

Tested manually to verify on UI

Closes #27016 from ajithme/redactui.

Authored-by: Ajith <ajith2489@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Unify `DriverEndpoint.executorIsAlive()` and `CoarseGrainedSchedulerBackend.isExecutorActive()`.

### Why are the changes needed?

`DriverEndpoint` has the method `executorIsAlive()` to check whether an executor is alive/active, while `CoarseGrainedSchedulerBackend` has the method `isExecutorActive()` to do the same work. However, `isExecutorActive()` seems to forget to consider `executorsPendingLossReason`. Unifying these two methods makes the behavior consistent between `DriverEndpoint` and `CoarseGrainedSchedulerBackend` and makes the code easier to maintain.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes #27012 from Ngone51/unify-is-executor-alive.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Quiet the request messages of class `ExecutorAllocationManager`, which are too verbose. Otherwise, this class generates too many messages like

`INFO spark.ExecutorAllocationManager: Request to remove executorIds: 890`

when dynamic resource allocation (DRA) is enabled.

### Why are the changes needed?

Log level improvement.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Closes #26925 from cfmcgrady/quiet-request-executor-remove-message.

Authored-by: Fu Chen <cfmcgrady@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Change config name from `spark.eventLog.allowErasureCoding` to `spark.eventLog.allowErasureCoding.enabled`.

### Why are the changes needed?

To follow the naming convention of the other boolean configs.

### Does this PR introduce any user-facing change?

No, it's newly added in Spark 3.0.

### How was this patch tested?

Tested manually and pass Jenkins.

Closes #26998 from Ngone51/SPARK-25855-FOLLOWUP.

Authored-by: yi.wu <yi.wu@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

We added shuffle block fetch optimization in SPARK-9853. In ShuffleBlockFetcherIterator, we merge single blocks into batch blocks. During merging, we should count merged blocks for `maxBlocksInFlightPerAddress`, not original single blocks.

### Why are the changes needed?

If `maxBlocksInFlightPerAddress` is specified, for example set to 1, it should mean one batch block, not one original single block. Otherwise, it conflicts with batch shuffle fetch.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Unit test.

Closes #26930 from viirya/respect-max-blocks-inflight.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Fixed typos in the `docs` directory and in other directories:

1. Find typos in `docs` and apply the fixes to files in all directories

2. Fix `the the` -> `the`

### Why are the changes needed?

Better readability of documents

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

No test needed

Closes #26976 from kiszk/typo_20191221.

Authored-by: Kazuaki Ishizaki <ishizaki@jp.ibm.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Remove usages of Guava that no longer work in Guava 27, and replace with workalikes. I'll comment on key types of changes below.

### Why are the changes needed?

Hadoop 3.2.1 uses Guava 27, so this helps us avoid problems running on Hadoop 3.2.1+ and generally lowers our exposure to Guava.

### Does this PR introduce any user-facing change?

Should not be, but see notes below on hash codes and toString.

### How was this patch tested?

Existing tests will verify whether these changes break anything for Guava 14.

I manually built with an updated version and it compiles with Guava 27; tests running manually locally now.

Closes #26911 from srowen/SPARK-30272.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Refactor the code introduced by #26637.

The dummy StageInfo and its side effects are no longer needed.

This change also enables users to set a job description for empty jobs.

### Why are the changes needed?

The previous approach introduced a dummy StageInfo, which causes side effects.

### Does this PR introduce any user-facing change?

Yes. A description set by the user is shown in the AllJobsPage.

### How was this patch tested?

Manual test and newly added unit test.

Closes #26703 from sarutak/fix-ui-for-empty-job2.

Lead-authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Co-authored-by: Dongjoon Hyun <dhyun@apple.com>

Co-authored-by: Kousuke Saruta <sarutak@oss.nttdata.co.jp>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

We should not allow keys to be appended to BytesToBytesMap up to its max capacity.

### Why are the changes needed?

BytesToBytesMap.append allows keys to be appended until the number of keys reaches MAX_CAPACITY. But once the pointer array in the map holds MAX_CAPACITY keys, the next call of lookup will hang forever.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested with:

```java
@Test
public void testCapacity() {
  TestMemoryManager memoryManager2 =
    new TestMemoryManager(
      new SparkConf()
        .set(package$.MODULE$.MEMORY_OFFHEAP_ENABLED(), true)
        .set(package$.MODULE$.MEMORY_OFFHEAP_SIZE(), 25600 * 1024 * 1024L)
        .set(package$.MODULE$.SHUFFLE_SPILL_COMPRESS(), false)
        .set(package$.MODULE$.SHUFFLE_COMPRESS(), false));
  TaskMemoryManager taskMemoryManager2 = new TaskMemoryManager(memoryManager2, 0);
  final long pageSizeBytes = 8000000 + 8; // 8 bytes for end-of-page marker
  final BytesToBytesMap map = new BytesToBytesMap(taskMemoryManager2, 1024, pageSizeBytes);
  try {
    // Try to append one key more than MAX_CAPACITY.
    for (long i = 0; i < BytesToBytesMap.MAX_CAPACITY + 1; i++) {
      final long[] value = new long[]{i};
      boolean succeed = map.lookup(value, Platform.LONG_ARRAY_OFFSET, 8).append(
        value,
        Platform.LONG_ARRAY_OFFSET,
        8,
        value,
        Platform.LONG_ARRAY_OFFSET,
        8);
    }
  } finally {
    // Free the map exactly once, whether or not the loop completes.
    map.free();
  }
}
```

Once 536870912 keys (MAX_CAPACITY) have been appended to the map, the next lookup hangs.

Closes #26914 from viirya/fix-bytemap2.

Authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

When `spark.shuffle.useOldFetchProtocol` is enabled, switch off the direct disk reading of host-local shuffle blocks and fall back to remote block fetching. This avoids the `GetLocalDirsForExecutors` block transfer message, which was introduced in Spark 3.0.0.

### Why are the changes needed?

In `[SPARK-27651][Core] Avoid the network when shuffle blocks are fetched from the same host`, a new block transfer message was introduced, `GetLocalDirsForExecutors`. This new message could be sent to the external shuffle service, and as it is not supported by the previous version of the external shuffle service, it should be avoided when `spark.shuffle.useOldFetchProtocol` is true.

In the migration guide, I changed the exception type because `org.apache.spark.network.shuffle.protocol.BlockTransferMessage.Decoder#fromByteBuffer` throws an `IllegalArgumentException` with the given text and uses the message type, which is just a simple number (byte). I have checked that this is also true for version 2.4.4.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

This specific case (considering one extra boolean to switch off host local disk reading feature) is not tested but existing tests were run.

Closes #26869 from attilapiros/SPARK-30235.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Refactor test cases added by https://github.com/apache/spark/pull/26714, to improve code compactness.

### How was this patch tested?

Tested locally.

Closes #26916 from jiangxb1987/SPARK-25100.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Even when we set `spark.history.fs.numReplayThreads` to a large number, such as 30, the history server still replays logs slowly.

We found that if there is a straggler in a batch of replay tasks, all the other threads wait for this straggler.

In this PR, we create `processing` to record the logs that are currently being replayed, so that the replay tasks can execute asynchronously.
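
One way to picture this bookkeeping is a concurrent set of in-flight log paths: a scan submits a replay task only for logs that are not already being replayed, and it does not wait for the whole batch to finish. `ReplayTracker` and its method names are hypothetical and only sketch the idea.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical tracker for logs that are currently being replayed.
final class ReplayTracker {
    private final Set<String> processing = ConcurrentHashMap.newKeySet();

    // Returns true if the caller should replay this log now,
    // false if another task is already replaying it.
    boolean tryStart(String logPath) {
        return processing.add(logPath);
    }

    // Called when the replay task for this log ends, successfully or not.
    void finish(String logPath) {
        processing.remove(logPath);
    }

    boolean isProcessing(String logPath) {
        return processing.contains(logPath);
    }
}
```

A replay task would call `tryStart` before it begins and `finish` in a `finally` block, so a straggler only blocks its own log rather than the next scan.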

### Why are the changes needed?

It speeds up log replay in the history server.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

UT.

Closes #25797 from turboFei/SPARK-29043.

Authored-by: turbofei <fwang12@ebay.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

The PR adds a new config option to configure an address for the proxy server, and a new handler that intercepts redirects and replaces the URL with one pointing at the proxy server. This is needed on top of the "proxy base path" support because redirects use full URLs, not just absolute paths from the server's root.
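
The rewrite step can be sketched with `java.net.URI`. `RedirectRewriter.rewrite` is a hypothetical helper, not the handler added by this PR; it assumes that the proxy address is a plain `scheme://host[:port]` string and that only absolute redirect targets need rewriting.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper that points an absolute redirect target at a proxy.
final class RedirectRewriter {
    // Replaces scheme and authority of location with those of proxyAddress.
    // Relative redirects and unparsable values are returned unchanged.
    static String rewrite(String location, String proxyAddress) {
        try {
            URI target = new URI(location);
            URI proxy = new URI(proxyAddress);
            if (target.getHost() == null) {
                return location; // relative redirect: nothing to rewrite
            }
            return new URI(
                proxy.getScheme(),
                proxy.getAuthority(),
                target.getPath(),
                target.getQuery(),
                target.getFragment()).toString();
        } catch (URISyntaxException e) {
            return location;
        }
    }
}
```

For example, with an assumed proxy address of `https://proxy.example.com:8443`, a redirect to `http://spark-host:4040/jobs/job/?id=3` becomes `https://proxy.example.com:8443/jobs/job/?id=3`.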

### Why are the changes needed?

Spark's web UI has support for generating links to paths with a prefix, to support a proxy server, but those do not apply when the UI is responding with redirects. In that case, Spark is sending its own URL back to the client, and if it's behind a dumb proxy server that doesn't do rewriting (like when using stunnel for HTTPS support) then the client will see the wrong URL and may fail.

### Does this PR introduce any user-facing change?

Yes. It's a new UI option.

### How was this patch tested?

Tested with added unit test, with Spark behind stunnel, and in a more complicated app using a different HTTPS proxy.

Closes #26873 from vanzin/SPARK-30240.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Fix the bug that occurs when invoking `saveAsNewAPIHadoopDataset` to store data: the job fails because the class `TaskCommitMessage` hasn't been registered when the serializer is `KryoSerializer` and `spark.kryo.registrationRequired` is true.

## How was this patch tested?

UT

Closes #26714 from deshanxiao/SPARK-25100.

Authored-by: xiaodeshan <xiaodeshan@xiaomi.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

The value of non-Spark config properties ignored in spark-submit is no longer logged.

### Why are the changes needed?

The value isn't really needed in the logs, and could contain potentially sensitive info. While we can redact the values selectively too, I figured it's more robust to just not log them at all here, as the values aren't important in this log statement.

### Does this PR introduce any user-facing change?

Other than the change to logging above, no.

### How was this patch tested?

Existing tests

Closes #26893 from srowen/SPARK-30263.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In the current implementation of `SparkShellLoggingFilter`, if the log level of the root logger and the log level of a message are different, whether the message should be logged is decided based on log4j's configuration, but whether the message should be output to the REPL's console is not considered.

So, if the log level of the root logger is `DEBUG`, the log level of the REPL's logger is `WARN`, and the log level of a message is `INFO`, the message is output to the REPL's console even though `INFO < WARN`.

https://github.com/apache/spark/pull/26798/files#diff-bfd5810d8aa78ad90150e806d830bb78L237

The ideal behavior is as follows, and this change implements it.

1. If the log level of a message is greater than or equal to the log level of the root logger, the message should be logged, but whether the message is output to the REPL's console should be decided based on whether the log level of the message is greater than or equal to the log level of the REPL's logger.

2. If a log level or custom appenders are explicitly defined for a category, whether a log message via the logger corresponding to the category is logged and output to the REPL's console should be decided based on the log level of the category.

We can confirm whether a log level or appenders are explicitly set for a category's logger with `Logger#getLevel` and `Logger#getAllAppenders.hasMoreElements`.
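
The two rules can be condensed into a small decision function. This is a simplified sketch that does not use log4j: `ReplLogDecision`, its `Level` enum, and the `categoryExplicitlyConfigured` flag are hypothetical, and the function assumes the message has already passed log4j's own level check.

```java
// Hypothetical, log4j-free model of the console filtering rules.
final class ReplLogDecision {
    // Ordered from least to most severe, so compareTo follows severity.
    enum Level { DEBUG, INFO, WARN, ERROR }

    // Decides whether a message that log4j already accepted is shown on the
    // REPL console.
    static boolean showOnConsole(
            Level message, Level replThreshold, boolean categoryExplicitlyConfigured) {
        if (message.compareTo(replThreshold) >= 0) {
            return true; // rule 1: at or above the REPL logger's level
        }
        // Rule 2: an explicit level or appender on the category takes over,
        // so the category's own configuration has already made the decision.
        return categoryExplicitlyConfigured;
    }
}
```

In the scenario above (root `DEBUG`, REPL `WARN`, message `INFO`, no explicit category configuration), the function returns `false`, so the message stays off the console.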

### Why are the changes needed?

This is a bug that breaks compatibility.

#9816 enabled the REPL's log4j configuration to override the root logger, but #23675 seems to have broken the feature.

You can see an example when you modify the default log4j configuration as follows.

```
# Change the log level for rootCategory to DEBUG
log4j.rootCategory=DEBUG, console
...
# The log level for repl.Main remains WARN
log4j.logger.org.apache.spark.repl.Main=WARN
```

If you launch the REPL with this configuration, INFO level logs appear even though the log level for the REPL is WARN.

```
...
19/12/08 23:31:38 INFO Utils: Successfully started service 'sparkDriver' on port 33083.
19/12/08 23:31:38 INFO SparkEnv: Registering MapOutputTracker
19/12/08 23:31:38 INFO SparkEnv: Registering BlockManagerMaster
19/12/08 23:31:38 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
19/12/08 23:31:38 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
19/12/08 23:31:38 INFO SparkEnv: Registering BlockManagerMasterHeartbeat
...
```

Before #23675 was applied, those INFO level logs were not shown with the same log4j.properties.

### Does this PR introduce any user-facing change?

Yes. The logging behavior for REPL is fixed.

### How was this patch tested?

Manual test and newly added unit test.

Closes #26798 from sarutak/fix-spark-shell-loglevel.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Add tooltips to the Stages tab for better usability.

### Why are the changes needed?

There are a few common points of confusion in the UI that could be clarified with tooltips. We should add tooltips to explain them.

### Does this PR introduce any user-facing change?

Yes

### How was this patch tested?

Manual

Closes #26859 from sharangk/tooltip1.

Authored-by: sharan.gk <sharan.gk@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix `sparkContext.addFile` and `sparkContext.addJar` failing when the file path contains spaces.

### Why are the changes needed?

When uploading a file to the Spark context via the `addFile` and `addJar` functions, an exception is thrown when the file path contains a space character. Escaping the space with %20 or + doesn't change the result.
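
For background, the sketch below shows one common way a path with spaces breaks in Java: a raw space is not legal in a `java.net.URI`, while `File#toURI` percent-encodes it. This is general JDK behavior and is not necessarily the exact code path fixed by this patch; `PathWithSpaces` is a hypothetical helper.

```java
import java.io.File;
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper demonstrating URI handling of paths with spaces.
final class PathWithSpaces {
    // Returns true if the raw string is accepted by the URI parser.
    static boolean parsesAsUri(String path) {
        try {
            new URI(path);
            return true;
        } catch (URISyntaxException e) {
            return false; // e.g. an unescaped space in the path
        }
    }

    // Builds a file URI; File#toURI percent-encodes characters such as spaces.
    static URI toFileUri(String path) {
        return new File(path).toURI();
    }
}
```

Here `parsesAsUri("/tmp/my file.txt")` is false, whereas `toFileUri("/tmp/my file.txt")` yields a URI whose raw path contains `my%20file.txt`.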

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Add test case.

Closes #26773 from 07ARB/SPARK-30126.

Authored-by: 07ARB <ankitrajboudh@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch changes the condition that checks whether BytesToBytesMap should grow its internal array. Specifically, it now compares against the capacity of the array instead of its size.

### Why are the changes needed?

One Spark job on our cluster hangs forever at BytesToBytesMap.safeLookup. After inspecting, we found that the long array size is 536870912.

Currently in BytesToBytesMap.append, we only grow the internal array if the size of the array is less than its MAX_CAPACITY, which is 536870912. So in the above case, the array cannot be grown, and safeLookup can never find an empty slot.

But this is wrong because we use two array entries per key, so the array size is twice the capacity. We should compare the current capacity of the array, instead of its size.
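
The arithmetic can be checked with a small sketch. `GrowthCheck` and its method names are hypothetical; only the value of `MAX_CAPACITY` and the two-entries-per-key layout come from the description above.

```java
// Hypothetical model of the two growth checks; not the BytesToBytesMap code.
final class GrowthCheck {
    static final int MAX_CAPACITY = 1 << 29; // 536870912, as quoted above

    // Two array entries are used per key, so the array is twice the capacity.
    static long arraySize(int capacity) {
        return 2L * capacity;
    }

    // Check as described for the old behavior: compares the array size.
    static boolean canGrowBySize(int capacity) {
        return arraySize(capacity) < MAX_CAPACITY;
    }

    // Check as described for the fix: compares the capacity itself.
    static boolean canGrowByCapacity(int capacity) {
        return capacity < MAX_CAPACITY;
    }
}
```

With a capacity of `1 << 28`, the array size is already 536870912, so the size-based check refuses to grow even though the capacity is only half of `MAX_CAPACITY`; the capacity-based check still allows growth.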

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

This issue only happens when loading a large number of values into BytesToBytesMap, so it is hard to cover with a unit test. It was tested manually with an internal Spark job.

Closes #26828 from viirya/fix-bytemap.

Lead-authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Co-authored-by: Liang-Chi Hsieh <liangchi@uber.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

See https://issues.apache.org/jira/browse/SPARK-30195 for the background; I won't repeat it here. This is sort of a grab-bag of related issues.

### Why are the changes needed?

To cross-compile with Scala 2.13 later.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests for 2.12. I've been manually checking that this actually resolves the compile problems in 2.13 separately.

Closes #26826 from srowen/SPARK-30195.

Authored-by: Sean Owen <srowen@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add tooltips for the Jobs tab columns: Job Id (Job Group), Description, Submitted, Duration, Stages, Tasks


### Why are the changes needed?

The Jobs tab does not have tooltips for its columns, while some other pages provide tooltips. This change resolves the inconsistency and improves the user experience.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual

Closes #26384 from PavithraRamachandran/jobTab_tooltip.

Authored-by: Pavithra Ramachandran <pavi.rams@gmail.com>

Signed-off-by: Sean Owen <srowen@gmail.com>

### What changes were proposed in this pull request?

Added a `shutdownHook` for the shutdown method of the executor plugin. This ensures that the shutdown method is always called.
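
A minimal sketch of the pattern, using the plain JVM hook API as a stand-in for whatever hook mechanism the patch uses. `PluginShutdown` is a hypothetical wrapper; it makes the shutdown logic idempotent so the graceful path and the hook cannot both run it.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical wrapper; Spark's plugin API and hook manager are not used here.
final class PluginShutdown {
    private final AtomicBoolean done = new AtomicBoolean(false);
    private final Runnable pluginShutdown;

    PluginShutdown(Runnable pluginShutdown) {
        this.pluginShutdown = pluginShutdown;
    }

    // Runs the plugin's shutdown logic at most once and reports whether it ran.
    boolean shutdownOnce() {
        if (done.compareAndSet(false, true)) {
            pluginShutdown.run();
            return true;
        }
        return false;
    }

    // Registers a JVM shutdown hook as a fallback for the non-graceful path.
    void registerHook() {
        Runtime.getRuntime().addShutdownHook(new Thread(this::shutdownOnce));
    }
}
```

Note that JVM shutdown hooks run on normal exit and on signals such as SIGTERM, but not when the process is killed with SIGKILL or halted.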

### Why are the changes needed?

Whenever executors do not go down gracefully, i.e. they are killed due to idle time or killed forcefully, the shutdown method of the executor plugin is not called. The shutdown method can be used to release any resources that the plugin acquired during its initialisation, so it is important to make sure that the plugin's shutdown method is called every time an executor goes down.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Tested Manually.

Closes #26810 from iRakson/Executor_Plugin.

Authored-by: root1 <raksonrakesh@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This PR adds an optional Spark conf for speculation to allow speculative runs for stages where there are only a few tasks.

```
spark.speculation.task.duration.threshold
```

If provided, tasks are speculatively run if the TaskSet contains fewer tasks than the number of slots on a single executor and a task is taking longer than the threshold.
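
The condition can be written as a small predicate. `SpeculationCheck` and its parameter names are hypothetical; the strict "fewer than" comparison follows the wording above, and the exact boundary behavior in the patch is not confirmed here.

```java
// Hypothetical predicate mirroring the description; not TaskSetManager code.
final class SpeculationCheck {
    // thresholdMs is null when the config is not provided.
    static boolean shouldSpeculate(
            Long thresholdMs, int numTasks, int slotsPerExecutor, long runtimeMs) {
        if (thresholdMs == null) {
            return false; // the feature is off unless the threshold is configured
        }
        // Assumption: strictly fewer tasks than slots, as worded in the text.
        return numTasks < slotsPerExecutor && runtimeMs > thresholdMs;
    }
}
```

For example, with a 1000 ms threshold, a one-task TaskSet on four-slot executors would qualify once the task has run for more than 1000 ms.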

### Why are the changes needed?

This change helps avoid scenarios where a single executor hangs forever due to a disk issue and we unfortunately assigned the single task in a TaskSet to that executor, causing the whole job to hang forever.

### Does this PR introduce any user-facing change?

Yes. If the new config `spark.speculation.task.duration.threshold` is provided, the TaskSet contains fewer tasks than the number of slots on a single executor, and a task is taking longer than the threshold, then speculative tasks are submitted for the running tasks in the TaskSet.

### How was this patch tested?

Unit tests are added to TaskSetManagerSuite.

Closes #26614 from yuchenhuo/SPARK-29976.

Authored-by: Yuchen Huo <yuchen.huo@databricks.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

## What changes were proposed in this pull request?

This PR proposes to add instrumentation of memory usage via the Spark Dropwizard/Codahale metrics system. Memory usage metrics are available via the Executor metrics, recently implemented as detailed in https://issues.apache.org/jira/browse/SPARK-23206.

Additional notes: This takes advantage of the metrics poller introduced in #23767.

## Why are the changes needed?

Executor metrics bring many useful insights on memory usage, in particular on the usage of storage memory and executor memory. This is useful for troubleshooting. Having the information in the metrics system allows adding those metrics to Spark performance dashboards and studying memory usage as a function of time, as in the example graph https://issues.apache.org/jira/secure/attachment/12962810/Example_dashboard_Spark_Memory_Metrics.PNG

## Does this PR introduce any user-facing change?

Adds an `ExecutorMetrics` source to publish executor metrics via the Dropwizard metrics system. Details of the available metrics are in docs/monitoring.md.

Adds the configuration parameter `spark.metrics.executormetrics.source.enabled`.

## How was this patch tested?

Tested on YARN cluster and with an existing setup for a Spark dashboard based on InfluxDB and Grafana.

Closes #24132 from LucaCanali/memoryMetricsSource.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Imran Rashid <irashid@cloudera.com>

### What changes were proposed in this pull request?

Follow up on https://github.com/apache/spark/pull/26654#discussion_r353826162

Instead of OrderingUtil or Utils.nanSafeCompare{Doubles,Floats}, just use java.lang.{Double,Float}.compare directly. All work identically w.r.t. NaN when used to `compare`.
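
As a quick illustration of the JDK behavior being relied on, `Double.compare` defines a total order in which NaN equals itself and sorts after every other value, including positive infinity. `NaNOrdering` is a hypothetical helper for this example only.

```java
import java.util.Arrays;

// Hypothetical helper showing the total order defined by Double.compare.
final class NaNOrdering {
    // Returns a sorted copy of values, ordered by Double.compare.
    static Double[] sortWithCompare(Double... values) {
        Double[] copy = Arrays.copyOf(values, values.length);
        Arrays.sort(copy, Double::compare);
        return copy;
    }
}
```

Sorting `NaN, 1.0, -Infinity` this way yields `-Infinity, 1.0, NaN`, whereas the primitive `<` and `>` operators return false for every comparison involving NaN.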

### Why are the changes needed?

Simplification of the previous change, which existed to support Scala 2.13 migration.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing tests

Closes #26761 from srowen/SPARK-30009.2.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This proposes to introduce a naming convention for Spark metrics configuration parameters used to enable/disable metrics source reporting using the Dropwizard metrics library: `spark.metrics.sourceNameCamelCase.enabled` and update 2 parameters to use this naming convention.

### Why are the changes needed?

Currently Spark has a few parameters to enable/disable metrics reporting. Their naming pattern is not uniform and this can create confusion. Currently we have:

`spark.metrics.static.sources.enabled`

`spark.app.status.metrics.enabled`

`spark.sql.streaming.metricsEnabled`

### Does this PR introduce any user-facing change?

Update parameters for enabling/disabling metrics reporting new in Spark 3.0: `spark.metrics.static.sources.enabled` -> `spark.metrics.staticSources.enabled`, `spark.app.status.metrics.enabled` -> `spark.metrics.appStatusSource.enabled`.

Note: `spark.sql.streaming.metricsEnabled` is left unchanged as it is already in use in Spark 2.x.
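The convention can be sketched as a small helper that builds the key from a camel-case source name. This is only an illustration: `MetricsConfNames`, `enabledKey`, and `renamedKeys` are hypothetical names, and only the parameter strings themselves come from this PR description.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper; not part of Spark.
final class MetricsConfNames {
    // Builds "spark.metrics.<sourceNameCamelCase>.enabled" following the proposed convention.
    static String enabledKey(String sourceNameCamelCase) {
        return "spark.metrics." + sourceNameCamelCase + ".enabled";
    }

    // Old name -> new name for the two parameters renamed by this PR.
    static Map<String, String> renamedKeys() {
        Map<String, String> renamed = new LinkedHashMap<>();
        renamed.put("spark.metrics.static.sources.enabled", enabledKey("staticSources"));
        renamed.put("spark.app.status.metrics.enabled", enabledKey("appStatusSource"));
        return renamed;
    }

    public static void main(String[] args) {
        renamedKeys().forEach((oldKey, newKey) -> System.out.println(oldKey + " -> " + newKey));
    }
}
```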

### How was this patch tested?

Manually tested

Closes #26692 from LucaCanali/uniformNamingMetricsEnableParameters.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Move some classes extending Scala collections into parallel source trees, to support 2.13; other minor collection-related modifications.

Modify some classes extending Scala collections to work with 2.13 as well as 2.12. In many cases, this means introducing parallel source trees, as the type hierarchy changed in ways that one class can't support both.

### Why are the changes needed?

To support building for Scala 2.13 in the future.

### Does this PR introduce any user-facing change?

There should be no behavior change.

### How was this patch tested?

Existing tests. Note that the 2.13 changes are not tested by the PR builder, of course. They compile in 2.13 but can't even be tested locally. Later, once the project can be compiled for 2.13, thus tested, it's possible the 2.13 implementations will need updates.

Closes #26728 from srowen/SPARK-30012.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Disable continuous shuffle block fetching when the old fetch protocol is in use.

### Why are the changes needed?

The new feature of continuous shuffle block fetching depends on the latest version of the shuffle fetch protocol. We should keep this constraint in `BlockStoreShuffleReader.fetchContinuousBlocksInBatch`.

### Does this PR introduce any user-facing change?

Users will no longer get the exception related to continuous shuffle block fetching when an old version of the external shuffle service is used.

### How was this patch tested?

Existing UT.

Closes #26663 from xuanyuanking/SPARK-30025.

Authored-by: Yuanjian Li <xyliyuanjian@gmail.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Support the JDBC/ODBC tab in the HistoryServer WebUI. Currently the JDBC/ODBC tab for thrift server applications cannot be accessed from the History server. This PR makes 2 main changes:

1. Refactor existing thrift server listener to support kvstore

2. Add history server plugin for thrift server listener and tab.

### Why are the changes needed?

Users can access the Thriftserver tab from the History server for both running and finished applications.

### Does this PR introduce any user-facing change?

Support for JDBC/ODBC tab for the WEBUI from History server

### How was this patch tested?

Add UT and Manual tests

1. Start Thriftserver and Historyserver

```

sbin/stop-thriftserver.sh

sbin/stop-history-server.sh

sbin/start-thriftserver.sh

sbin/start-history-server.sh

```

2. Launch beeline

`bin/beeline -u jdbc:hive2://localhost:10000`

3. Run queries

Go to the JDBC/ODBC page of the WebUI from History server

Closes #26378 from shahidki31/ThriftKVStore.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: Gengliang Wang <gengliang.wang@databricks.com>

### What changes were proposed in this pull request?

Improve conf `IO_WARNING_LARGEFILETHRESHOLD` (a.k.a `spark.io.warning.largeFileThreshold`):

* reword documentation

* change type from `long` to `bytes`

### Why are the changes needed?

Improvements according to https://github.com/apache/spark/pull/25134#discussion_r350570804 & https://github.com/apache/spark/pull/25134#discussion_r350570917.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes #26691 from Ngone51/SPARK-28366-followup.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

In the current implementation, the job name is not shown for empty jobs, so this change makes it visible.

### Why are the changes needed?

To make debugging easier.

### Does this PR introduce any user-facing change?

Yes. Before this change, the `Job Page` did not show the job name after submitting a job that contains no partitions.

After this change, the page shows the job name for such a job.

### How was this patch tested?

Manual test.

Closes #26637 from sarutak/fix-ui-for-empty-job.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

## What changes were proposed in this pull request?

Before this PR, `ShuffleBlockFetcherIterator` partitioned the block fetches into two distinct sets: local reads and remote fetches. With this PR (when the feature is enabled by `spark.shuffle.readHostLocalDisk.enabled`) a new category is introduced: host-local reads. These are shuffle block fetches where the block manager is different from the requester's but runs on the same host as the requester.

Moreover, to get the local directories of the other executors/block managers, a new RPC message `GetLocalDirs` is introduced. It is sent to the block manager master, which answers with `BlockManagerLocalDirs`. To answer this request, `BlockManagerMasterEndpoint` extracts the `localDirs` from the `BlockManagerInfo` and stores it separately in a hash map called `executorIdLocalDirs`. This is necessary because the previously used `blockManagerInfo` contains data only for the alive block managers (see `org.apache.spark.storage.BlockManagerMasterEndpoint#removeBlockManager`).

Now `executorIdLocalDirs` knows all the local dirs since the application started (like the external shuffle service does), so in case of an RDD recalculation both host-local shuffle blocks and disk-persisted RDD blocks on the same host can be served by reading the files behind the blocks directly.
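The three fetch categories can be sketched in Java as follows. This is a simplified model under stated assumptions, not Spark's implementation: `FetchClassifier`, `Address`, and `classify` are hypothetical names, and a block manager is reduced to an executor ID plus a host name.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical, simplified model; Spark's real logic lives in ShuffleBlockFetcherIterator.
final class FetchClassifier {
    enum Category { LOCAL, HOST_LOCAL, REMOTE }

    // Simplified stand-in for a block manager address: executor ID plus host name.
    static final class Address {
        final String executorId;
        final String host;

        Address(String executorId, String host) {
            this.executorId = executorId;
            this.host = host;
        }
    }

    static Category classify(Address requester, Address owner, boolean hostLocalEnabled) {
        if (owner.executorId.equals(requester.executorId)) {
            return Category.LOCAL;       // same block manager: read directly
        }
        if (hostLocalEnabled && owner.host.equals(requester.host)) {
            return Category.HOST_LOCAL;  // other block manager, same host: read its files from disk
        }
        return Category.REMOTE;          // everything else is fetched over the network
    }

    public static void main(String[] args) {
        Address me = new Address("exec-1", "node-a");
        List<Address> owners = Arrays.asList(
            new Address("exec-1", "node-a"),
            new Address("exec-2", "node-a"),
            new Address("exec-3", "node-b"));
        for (Address owner : owners) {
            System.out.println(owner.executorId + " -> " + classify(me, owner, true));
        }
    }
}
```

With the feature flag off, the second case falls through to `REMOTE`, which matches the two-category behaviour described for the code before this PR.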

## How was this patch tested?

### Unit tests

`ExternalShuffleServiceSuite`:

- "SPARK-27651: host local disk reading avoids external shuffle service on the same node"

`ShuffleBlockFetcherIteratorSuite`:

- "successful 3 local reads + 4 host local reads + 2 remote reads"

Existing suites that test shuffle metrics were also extended.

### Manual tests

Running Spark on YARN in a 4-node cluster with 6 executors and 12 shuffle blocks.

```

$ grep host-local experiment.log

19/07/30 03:57:12 INFO storage.ShuffleBlockFetcherIterator: Getting 12 (1496.8 MB) non-empty blocks including 2 (299.4 MB) local blocks and 2 (299.4 MB) host-local blocks and 8 (1197.4 MB) remote blocks

19/07/30 03:57:12 DEBUG storage.ShuffleBlockFetcherIterator: Start fetching host-local blocks: shuffle_0_2_1, shuffle_0_6_1

19/07/30 03:57:12 DEBUG storage.ShuffleBlockFetcherIterator: Got host-local blocks in 38 ms

19/07/30 03:57:12 INFO storage.ShuffleBlockFetcherIterator: Getting 12 (1496.8 MB) non-empty blocks including 2 (299.4 MB) local blocks and 2 (299.4 MB) host-local blocks and 8 (1197.4 MB) remote blocks

19/07/30 03:57:12 DEBUG storage.ShuffleBlockFetcherIterator: Start fetching host-local blocks: shuffle_0_0_0, shuffle_0_8_0

19/07/30 03:57:12 DEBUG storage.ShuffleBlockFetcherIterator: Got host-local blocks in 35 ms

```

Closes #25299 from attilapiros/SPARK-27651.

Authored-by: “attilapiros” <piros.attila.zsolt@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

Make separate source trees for Scala 2.12/2.13 in order to accommodate mutually-incompatible support for Ordering of double, float.

Note: This isn't the last change that will need a split source tree for 2.13. But this particular change could go several ways:

- (Split source tree)

- Inline the Scala 2.12 implementation

- Reflection

For this change alone any are possible, and splitting the source tree is a bit overkill. But if it will be necessary for other JIRAs (see umbrella SPARK-25075), then it might be the easiest way to implement this.

### Why are the changes needed?

Scala 2.13 split Ordering.Double into Ordering.Double.TotalOrdering and Ordering.Double.IeeeOrdering. Neither can be used in a single build that supports 2.12 and 2.13.

TotalOrdering works like java.lang.Double.compare. IeeeOrdering works like Scala 2.12 Ordering.Double. They differ in how NaN is handled: does it always compare above other values, or does every comparison involving it evaluate to 'false'? In theory they have different uses: TotalOrdering is important if floating-point values are sorted. IeeeOrdering behaves like 2.12 and JVM comparison operators.
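The difference between the two styles can be seen with plain JVM primitives. The Java sketch below contrasts the comparison operator with `java.lang.Double.compare`; the class and method names are made up for illustration, and the sketch does not use the Scala `Ordering` classes themselves.

```java
// Hypothetical demo class; it mirrors the two comparison styles with plain Java.
final class NanOrderingDemo {
    // IEEE-style comparison: any comparison involving NaN evaluates to false.
    static boolean ieeeLessThan(double a, double b) {
        return a < b;
    }

    // Total-order comparison as defined by java.lang.Double.compare: NaN is above everything.
    static boolean totalLessThan(double a, double b) {
        return Double.compare(a, b) < 0;
    }

    public static void main(String[] args) {
        double nan = Double.NaN;
        System.out.println("ieee  1.0 < NaN: " + ieeeLessThan(1.0, nan));
        System.out.println("ieee  NaN < 1.0: " + ieeeLessThan(nan, 1.0));
        System.out.println("total 1.0 < NaN: " + totalLessThan(1.0, nan));
        System.out.println("total NaN < 1.0: " + totalLessThan(nan, 1.0));
    }
}
```

Under the operator-based comparison neither value is "less than" the other, so a sort has no consistent place for NaN; the total order always has one.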

I chose TotalOrdering as I think we care more about stable sorting, and because elsewhere we rely on java.lang comparisons. It is also possible to support with two methods.

### Does this PR introduce any user-facing change?

Pending tests, will see if it obviously affects any sort order. We need to see if it changes NaN sort order.

### How was this patch tested?

Existing tests so far.

Closes #26654 from srowen/SPARK-30009.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

* Replace the hard-coded conf `spark.scheduler.listenerbus.eventqueue` with a constant (`LISTENER_BUS_EVENT_QUEUE_PREFIX`) defined in `config/package.scala`.

* Update documentation for `spark.scheduler.listenerbus.eventqueue.capacity` in both `config/package.scala` and `docs/configuration.md`.

### Why are the changes needed?

* Better code maintainability

* Better user guidance of the conf

### Does this PR introduce any user-facing change?

No behavior changes, but users will see the updated documentation.

### How was this patch tested?

Pass Jenkins.

Closes #26676 from Ngone51/SPARK-28574-followup.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Use the same function (`codecName(conf: SparkConf)`) between `EventLogFileWriter` and `SparkContext` to get the consistent codec name for EventLogger.

### Why are the changes needed?

#24921 added a new conf for EventLogger's compression codec. We should reflect this change in `SparkContext` as well. Though I didn't find any place where `SparkContext.eventLogCodec` actually takes effect, I think it'd be better for it to hold the right value.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass Jenkins.

Closes #26665 from Ngone51/consistent-eventLogCodec.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This PR replaces `TokenChecker` with `RegexChecker` in `scalastyle` and fixes the missed instances.

### Why are the changes needed?

This will remove the old `commons-lang2` dependency from the `core` module.

**BEFORE**

```

$ dev/scalastyle

Scalastyle checks failed at following occurrences:

[error] /Users/dongjoon/PRS/SPARK-SerializationUtils/core/src/test/scala/org/apache/spark/util/PropertiesCloneBenchmark.scala:23:7: Use Commons Lang 3 classes (package org.apache.commons.lang3.*) instead

[error] of Commons Lang 2 (package org.apache.commons.lang.*)

[error] Total time: 23 s, completed Nov 25, 2019 11:47:44 AM

```

**AFTER**

```

$ dev/scalastyle

Scalastyle checks passed.

```

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Pass the GitHub Action linter.

Closes #26666 from dongjoon-hyun/SPARK-29081-2.

Authored-by: Dongjoon Hyun <dhyun@apple.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

…sks metrics for disk store

### What changes were proposed in this pull request?

After https://github.com/apache/spark/pull/23088 the task summary table in the stage page shows successful tasks metrics for the InMemory store. This PR adds the same for the disk store.

### Why are the changes needed?

Now both InMemory and disk store will be consistent in showing the task summary table in the UI, if there are non successful tasks

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

Added UT. Manually verified

Test steps:

1. add the config in spark-defaults.conf -> **spark.history.store.path /tmp/store**

2. sbin/start-history-server.sh

3. bin/spark-shell

4. `sc.parallelize(1 to 1000, 2).map(x => throw new Exception("fail")).count`

Closes #26508 from shahidki31/task.

Authored-by: shahid <shahidki31@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

This PR adds the base classes needed for stage level scheduling. It adds a ResourceProfile and the executor and task resource request classes. These are private for now until all the parts are implemented, at which point they will become public interfaces. I am adding them first because all the other subtasks for this feature require these classes. If people have better ideas on breaking this feature up, please let me know.

See https://issues.apache.org/jira/browse/SPARK-29415 for more detailed design.

### Why are the changes needed?

New API for stage level scheduling. It's easier to add these first because the other JIRAs for this feature will all use them.

### Does this PR introduce any user-facing change?

Yes, this adds an API to create a ResourceProfile with executor/task resources; see the SPIP JIRA https://issues.apache.org/jira/browse/SPARK-27495

Example of the api:

`val rp = new ResourceProfile()`

`rp.require(new ExecutorResourceRequest("cores", 2))`

`rp.require(new ExecutorResourceRequest("gpu", 1, Some("/opt/gpuScripts/getGpus")))`

`rp.require(new TaskResourceRequest("gpu", 1))`

### How was this patch tested?

Tested using Unit tests added with this PR.

Closes #26284 from tgravescs/SPARK-29415.

Authored-by: Thomas Graves <tgraves@nvidia.com>

Signed-off-by: Thomas Graves <tgraves@apache.org>

### What changes were proposed in this pull request?

Add a new conf named `spark.shuffle.mapStatus.compression.codec` that lets users decide which codec (default: `zstd`) should be used for `MapStatus` compression.

### Why are the changes needed?

We already have this functionality for `broadcast`/`rdd`/`shuffle`/`shuffleSpill`, so it might be better to have the same functionality for `MapStatus` as well.

### Does this PR introduce any user-facing change?

Yes, users can now use `spark.shuffle.mapStatus.compression.codec` to decide which codec should be used during `MapStatus` compression.

### How was this patch tested?

N/A

Closes #26611 from Ngone51/SPARK-29939.

Authored-by: wuyi <ngone_5451@163.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add extra classnames to table headers in EnvironmentPage tables in Spark UI.

### Why are the changes needed?

SparkUI uses sorttable.js to provide the sort functionality in different tables. This library tries to guess the data type of each column (numeric, alphanumeric, etc.) during the initialization phase and uses it to sort the columns whenever the user clicks on a column. As a result, it guesses an incorrect data type for the Environment tab.

sorttable.js has a way to hint the data type of table columns explicitly. This is done by passing a custom HTML class attribute.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manually tested sorting in tables in Environment tab in Spark UI.

Closes #26638 from prakharjain09/SPARK-29681-SPARK-UI-SORT.

Authored-by: Prakhar Jain <prakjai@microsoft.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Fix a bug related to Timelineview that does not preserve open/close state properly.

### Why are the changes needed?

Preserving the open/close state was the original intent, but it doesn't work.

### Does this PR introduce any user-facing change?

Yes. The open/close state of Timelineview is now preserved, so if you open Timelineview in the Stage page, go to another page, and then go back to the Stage page, Timelineview should stay open.

### How was this patch tested?

Manual test.

Closes #26607 from sarutak/fix-timeline-view-state.

Authored-by: Kousuke Saruta <sarutak@oss.nttdata.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

In a production environment, my PySpark application threw an exception with the following message:

```

19/10/28 16:15:03 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)

org.apache.spark.SparkException: No port number in pyspark.daemon's stdout

at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:204)

at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)

at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)

at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)

at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD$$anonfun$7.apply(RDD.scala:337)

at org.apache.spark.rdd.RDD$$anonfun$7.apply(RDD.scala:335)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1182)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.doPut(BlockManager.scala:1091)

at org.apache.spark.storage.BlockManager.doPutIterator(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.getOrElseUpdate(BlockManager.scala:882)

at org.apache.spark.rdd.RDD.getOrCompute(RDD.scala:335)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:286)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

```

At first, I suspected that many ports on the physical node were occupied by a large number of processes.

But I found that the total number of ports in use was only 671.

```

[yarnr1115 ~]$ netstat -a | wc -l

671

```

I checked the code of PythonWorkerFactory in line 204 and found:

```

daemon = pb.start()

val in = new DataInputStream(daemon.getInputStream)

try {

daemonPort = in.readInt()

} catch {

case _: EOFException =>

throw new SparkException(s"No port number in $daemonModule's stdout")

}

```

I added some code here:

```

logError(s"Meet EOFException, daemon is alive: ${daemon.isAlive()}")

logError(s"Exit value: ${daemon.exitValue()}")

```

Then I reproduced the exception, and its message was as follows:

```

19/10/28 16:15:03 ERROR PythonWorkerFactory: Meet EOFException, daemon is alive: false

19/10/28 16:15:03 ERROR PythonWorkerFactory: Exit value: 139

19/10/28 16:15:03 ERROR Executor: Exception in task 0.0 in stage 0.0 (TID 0)

org.apache.spark.SparkException: No port number in pyspark.daemon's stdout

at org.apache.spark.api.python.PythonWorkerFactory.startDaemon(PythonWorkerFactory.scala:206)

at org.apache.spark.api.python.PythonWorkerFactory.createThroughDaemon(PythonWorkerFactory.scala:122)

at org.apache.spark.api.python.PythonWorkerFactory.create(PythonWorkerFactory.scala:95)

at org.apache.spark.SparkEnv.createPythonWorker(SparkEnv.scala:117)

at org.apache.spark.api.python.BasePythonRunner.compute(PythonRunner.scala:108)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD$$anonfun$7.apply(RDD.scala:337)

at org.apache.spark.rdd.RDD$$anonfun$7.apply(RDD.scala:335)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1182)

at org.apache.spark.storage.BlockManager$$anonfun$doPutIterator$1.apply(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.doPut(BlockManager.scala:1091)

at org.apache.spark.storage.BlockManager.doPutIterator(BlockManager.scala:1156)

at org.apache.spark.storage.BlockManager.getOrElseUpdate(BlockManager.scala:882)

at org.apache.spark.rdd.RDD.getOrCompute(RDD.scala:335)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:286)

at org.apache.spark.api.python.PythonRDD.compute(PythonRDD.scala:65)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:52)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:288)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

```

The exception message caused me a lot of confusion.

This PR adds a more meaningful log message to the exception information.
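The idea of attaching the daemon's exit status to the error can be sketched in plain Java. This is a minimal sketch, not the code in `PythonWorkerFactory`: the class and method names are hypothetical, it assumes a POSIX `sh` is available, and it assumes the child process has exited once its stdout reaches end-of-file.

```java
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;

// Hypothetical sketch; names are not taken from Spark.
final class DaemonPortProbe {
    // Starts the process and tries to read a 4-byte port number from its stdout.
    // If stdout ends early, the returned message includes the process exit value.
    static String readPortOrExplain(ProcessBuilder builder) {
        try {
            Process daemon = builder.start();
            try (DataInputStream in = new DataInputStream(daemon.getInputStream())) {
                return "port " + in.readInt();
            } catch (EOFException e) {
                // Assumption: EOF on stdout means the process is exiting, so waitFor() returns soon.
                int exitValue = daemon.waitFor();
                return "no port number in stdout; daemon exit value: " + exitValue;
            }
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException("could not run daemon process", e);
        }
    }

    public static void main(String[] args) {
        // A child that exits immediately without writing anything to stdout.
        System.out.println(readPortOrExplain(new ProcessBuilder("sh", "-c", "exit 3")));
    }
}
```

Reporting the exit value alongside the missing port number points the reader at the daemon crash rather than at port exhaustion.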

### Why are the changes needed?

To clarify the exception and to retry three times by default.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UT.

Closes #26510 from beliefer/improve-except-message.

Authored-by: gengjiaan <gengjiaan@360.cn>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

Use JUnit assertions in tests uniformly, not JVM assert() statements.

### Why are the changes needed?

assert() statements do not produce as useful errors when they fail, and, if they were somehow disabled, would fail to test anything.
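Both points can be demonstrated without a test framework. In the Java sketch below, `checkEquals` is a hand-rolled stand-in for a JUnit-style equality assertion (it is not the JUnit API), and `jvmAssertionsEnabled` uses a common idiom to detect whether the JVM was started with `-ea`; all names are hypothetical.

```java
// Hypothetical demo class; checkEquals only imitates the style of a JUnit assertion.
final class AssertionStyleDemo {
    // Returns true only when the JVM runs with assertions enabled (-ea).
    static boolean jvmAssertionsEnabled() {
        boolean enabled = false;
        assert enabled = true; // this assignment is executed only under -ea
        return enabled;
    }

    // Always executed, and the failure message states both values.
    static void checkEquals(long expected, long actual) {
        if (expected != actual) {
            throw new AssertionError("expected:<" + expected + "> but was:<" + actual + ">");
        }
    }

    public static void main(String[] args) {
        System.out.println("JVM assert statements active: " + jvmAssertionsEnabled());
        checkEquals(4, 2 + 2); // passes regardless of JVM flags
        try {
            checkEquals(4, 5);
        } catch (AssertionError e) {
            System.out.println("explicit check failed with: " + e.getMessage());
        }
    }
}
```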

### Does this PR introduce any user-facing change?

No. The assertion logic should be identical.

### How was this patch tested?

Existing tests.

Closes #26581 from srowen/assertToJUnit.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This PR updates the number of local reader tasks from 1 to `partitionStartIndices.length`.

### Why are the changes needed?

Improve the performance of local shuffle reader.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Existing UTs

Closes #26516 from JkSelf/improveLocalShuffleReader.

Authored-by: jiake <ke.a.jia@intel.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

When a task fails with an OOM exception, `UnsafeInMemorySorter.array` could be `null`. Meanwhile, `cleanupResources()` on task completion calls `UnsafeInMemorySorter.getMemoryUsage`, which then throws an NPE.

### Why are the changes needed?

Checking whether `array` is null in `UnsafeInMemorySorter.getMemoryUsage` avoids the NPE.
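The guard can be sketched as follows. This is a simplified stand-in, not the real `UnsafeInMemorySorter`: `SorterSketch` is a hypothetical class that uses a plain `long[]` and counts 8 bytes per element.

```java
// Hypothetical, simplified stand-in for a sorter whose backing array can be released.
final class SorterSketch {
    private long[] array; // becomes null once the memory has been released

    SorterSketch(int capacity) {
        this.array = new long[capacity];
    }

    // Releases the backing array, e.g. after a failed allocation or on cleanup.
    void free() {
        array = null;
    }

    // Null-safe: reports zero usage instead of throwing a NullPointerException.
    long getMemoryUsage() {
        if (array == null) {
            return 0L;
        }
        return array.length * 8L; // 8 bytes per long element
    }

    public static void main(String[] args) {
        SorterSketch sorter = new SorterSketch(16);
        System.out.println("before free: " + sorter.getMemoryUsage());
        sorter.free();
        System.out.println("after free: " + sorter.getMemoryUsage());
    }
}
```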

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

It was tested manually.

Closes #26349 from ayudovin/fix-npe-in-listener.

Authored-by: yudovin <artsiom.yudovin@profitero.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

Adding tooltip for Thread Dump - Thread Locks

### Why are the changes needed?

The Thread Dump tab does not have any tooltips for its columns, while some other pages provide tooltips. This change resolves the inconsistency and improves the user experience.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Manual

Closes #26386 from PavithraRamachandran/threadDump_tooltip.

Authored-by: Pavithra Ramachandran <pavi.rams@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

This is a followup of https://github.com/apache/spark/pull/23977. I made a mistake related to this line: 3725b1324f (diff-71c2cad03f08cb5f6c70462aa4e28d3aL112)

Previously,

1. the reader iterator for the R worker read some initial data eagerly during RDD materialization, so it read the data before actual execution. For some reason, in this case, it showed the standard error from the R worker.

2. After that, when error happens during actual execution, stderr wasn't shown: 3725b1324f (diff-71c2cad03f08cb5f6c70462aa4e28d3aL260)

After my change 3725b1324f (diff-71c2cad03f08cb5f6c70462aa4e28d3aL112), it ignores case 1. and only follows case 2. of the previous code path, because case 1. no longer happens now that I removed the eager execution (which is consistent with the PySpark code path).

This PR proposes to always do what case 1. did (show the standard error), before and after execution alike, because it is quite possible that the R worker failed during actual execution, and it's best to show the stderr from the R worker whenever possible.

### Why are the changes needed?

It currently swallows standard error from R worker which makes debugging harder.

### Does this PR introduce any user-facing change?

Yes,

```R

df <- createDataFrame(list(list(n=1)))

collect(dapply(df, function(x) {

stop("asdkjasdjkbadskjbsdajbk")

x

}, structType("a double")))

```

**Before:**

```

Error in handleErrors(returnStatus, conn) :

org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 13.0 failed 1 times, most recent failure: Lost task 0.0 in stage 13.0 (TID 13, 192.168.35.193, executor driver): org.apache.spark.SparkException: R worker exited unexpectedly (cranshed)

at org.apache.spark.api.r.RRunner$$anon$1.read(RRunner.scala:130)

at org.apache.spark.api.r.BaseRRunner$ReaderIterator.hasNext(BaseRRunner.scala:118)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage2.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$1.hasNext(WholeStageCodegenExec.scala:726)

at org.apache.spark.sql.execution.SparkPlan.$anonfun$getByteArrayRdd$1(SparkPlan.scala:337)

at org.apache.spark.

```

**After:**

```

Error in handleErrors(returnStatus, conn) :

org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 1.0 failed 1 times, most recent failure: Lost task 0.0 in stage 1.0 (TID 1, 192.168.35.193, executor driver): org.apache.spark.SparkException: R unexpectedly exited.

R worker produced errors: Error in computeFunc(inputData) : asdkjasdjkbadskjbsdajbk

at org.apache.spark.api.r.BaseRRunner$ReaderIterator$$anonfun$1.applyOrElse(BaseRRunner.scala:144)

at org.apache.spark.api.r.BaseRRunner$ReaderIterator$$anonfun$1.applyOrElse(BaseRRunner.scala:137)

at scala.runtime.AbstractPartialFunction.apply(AbstractPartialFunction.scala:38)

at org.apache.spark.api.r.RRunner$$anon$1.read(RRunner.scala:128)

at org.apache.spark.api.r.BaseRRunner$ReaderIterator.hasNext(BaseRRunner.scala:113)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegen

```

### How was this patch tested?

Manually tested, and a unit test was added.

Closes #26517 from HyukjinKwon/SPARK-26923-followup.

Authored-by: HyukjinKwon <gurwls223@apache.org>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

This PR adds support for deferred rendering of Spark UI pages.

### Why are the changes needed?

When there are many items, such as tasks and application lists, rendering with dataTables is heavy. Enabling deferRender optimizes it.

See details in https://datatables.net/examples/ajax/defer_render.html

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Not needed.

Closes #26482 from turboFei/SPARK-29857-defer-render.

Authored-by: turbofei <fwang12@ebay.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

We already know that task attempts that do not clean up output files in the staging directory can cause job failure (SPARK-27194). There were proposals to fix it by changing the output filename or by deleting existing output files. These proposals are not completely reliable.

The difficulty is that, because the previous failed task attempt wrote the output file, the output file is still under the same staging directory at the next task attempt, even if the output file name is different.

If the job is going to fail eventually, there is no point in re-running the task until the max attempts are reached. For long-running jobs, re-running the task can waste a lot of time.

This patch proposes to let Spark detect such a file-already-exists exception and stop the task set early.

### Why are the changes needed?

For now, if FileAlreadyExistsException is thrown during a data writing job in SQL, the job keeps re-running task attempts until the max failure number is reached. There is no point in re-running tasks, as the new task attempts will also fail because they cannot write to the existing file either. We should stop the task set early.
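The intended behaviour can be sketched as a retry loop that gives up immediately on one specific exception. This is an illustration only: `EarlyStopRetry` and `attemptsUsed` are hypothetical names, and the sketch uses `java.nio.file.FileAlreadyExistsException` from the JDK as a stand-in, which is an assumption rather than the exception class Spark actually checks.

```java
import java.nio.file.FileAlreadyExistsException;
import java.util.concurrent.Callable;

// Hypothetical sketch of "stop early on a non-retryable failure"; not Spark's scheduler code.
final class EarlyStopRetry {
    // Runs the task up to maxAttempts times and returns how many attempts were used.
    static int attemptsUsed(Callable<Void> task, int maxAttempts) {
        int attempts = 0;
        while (attempts < maxAttempts) {
            attempts++;
            try {
                task.call();
                return attempts; // success
            } catch (FileAlreadyExistsException e) {
                return attempts; // a retry would hit the same file, so stop early
            } catch (Exception e) {
                // other failures may be transient: fall through and retry
            }
        }
        return attempts;
    }

    public static void main(String[] args) {
        int early = attemptsUsed(() -> {
            throw new FileAlreadyExistsException("part-00000");
        }, 4);
        int exhausted = attemptsUsed(() -> {
            throw new IllegalStateException("transient failure");
        }, 4);
        System.out.println("file already exists: " + early + " attempt(s)");
        System.out.println("other failure: " + exhausted + " attempt(s)");
    }
}
```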

### Does this PR introduce any user-facing change?

Yes. If FileAlreadyExistsException is thrown during a data writing job in SQL, no more task attempts are retried and the task set is stopped early.

### How was this patch tested?

Unit test.

Closes #26312 from viirya/stop-taskset-if-outputfile-exists.

Authored-by: Liang-Chi Hsieh <viirya@gmail.com>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Add a `LaunchedExecutor` message and send it to the driver when the executor is fully constructed; the driver can then assign the associated executor's totalCores to freeCores for making offers.

### Why are the changes needed?

The executors send RegisterExecutor messages to the driver in onStart.

The driver puts the executor data in “the ready to serve map” if it can, then sends RegisteredExecutor back to the executor. The driver can now make an offer to this executor.

But the executor is not fully constructed yet. When it receives RegisteredExecutor, it starts to construct itself: initializing the block manager, possibly registering with the local shuffle server with retries, then starting to send heartbeats to the driver ...

The task allocated here may fail if the executor fails to start or cannot send heartbeats to the driver in time.

Sometimes, even worse, when dynamic allocation and blacklisting are enabled and the number of running executors is down to the min executor setting, if those executors receive tasks before they are fully constructed and any error happens, the application may be blocked or torn down.
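The intended handshake can be sketched as below. This is a simplified Python model, not the actual driver code: the `Driver` and `ExecutorData` classes and their method names are hypothetical, and only the totalCores/freeCores bookkeeping is taken from the description above.

```python
from dataclasses import dataclass


@dataclass
class ExecutorData:
    total_cores: int
    free_cores: int = 0  # nothing is offered until the executor reports in


class Driver:
    def __init__(self):
        self.executors = {}

    def on_register_executor(self, executor_id, total_cores):
        # Record the executor, but keep free_cores at 0 for now.
        self.executors[executor_id] = ExecutorData(total_cores)

    def on_launched_executor(self, executor_id):
        # The executor is fully constructed: release its cores for offers.
        data = self.executors[executor_id]
        data.free_cores = data.total_cores

    def make_offers(self):
        # Only executors with free cores are offered to the scheduler.
        return {
            executor_id: data.free_cores
            for executor_id, data in self.executors.items()
            if data.free_cores > 0
        }
```

In this model, an executor that has registered but not yet sent the launched message does not appear in `make_offers()`, so no task can be assigned to it while it is still constructing itself.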

### Does this PR introduce any user-facing change?

NO

### How was this patch tested?

Closes #25964 from yaooqinn/SPARK-29287.

Authored-by: Kent Yao <yaooqinn@hotmail.com>

Signed-off-by: Xingbo Jiang <xingbo.jiang@databricks.com>

### What changes were proposed in this pull request?

With this change, executor's bindAddress is passed as an input parameter for RPCEnv.create.

A previous PR, https://github.com/apache/spark/pull/21261, which addressed the same issue, used a Spark conf property to get the bindAddress, which would not have worked for multiple executors.

This PR enables anyone overriding CoarseGrainedExecutorBackend with a custom one to invoke CoarseGrainedExecutorBackend.main() with the option to configure bindAddress.

### Why are the changes needed?

This is required when Kernel-based Virtual Machines (KVMs) are used inside a Linux container where the hostname is not the same as the container hostname.

### Does this PR introduce any user-facing change?

No

### How was this patch tested?

Tested by running jobs with executors on KVMs inside a Linux container.

Closes #26331 from nishchalv/SPARK-29670.

Lead-authored-by: Nishchal Venkataramana <nishchal@apple.com>

Co-authored-by: nishchal <nishchal@apple.com>

Signed-off-by: DB Tsai <d_tsai@apple.com>

SPARK-29397 added new interfaces for creating driver and executor plugins. These were added in a new, more isolated package that does not pollute the main o.a.s package.

The old interface is now redundant. Since it's a DeveloperApi and we're about to have a new major release, let's remove it instead of carrying more baggage forward.

Closes #26390 from vanzin/SPARK-29399.

Authored-by: Marcelo Vanzin <vanzin@cloudera.com>

Signed-off-by: HyukjinKwon <gurwls223@apache.org>

### What changes were proposed in this pull request?

All tooltip messages will be displayed in the centre.

### Why are the changes needed?

Sometimes tooltips hide the column data, and the tooltip display position is inconsistent in the UI.

### Does this PR introduce any user-facing change?

yes.

### How was this patch tested?

Manual test.

Closes #26263 from 07ARB/SPARK-29570.

Lead-authored-by: Ankitraj <8948111+07ARB@users.noreply.github.com>

Co-authored-by: 07ARB <ankitrajboudh@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

An executor's heartbeat is sent synchronously to BlockManagerMaster to let it know that the block manager is still alive. In a heavily loaded cluster, the heartbeat can time out and cause the block manager to re-register unexpectedly.

This improvement separates a heartbeat endpoint from the driver endpoint. In our production environment, this was really helpful in keeping executors from unstably going up and down.

### Why are the changes needed?

`BlockManagerMasterEndpoint` handles many events from executors, like `RegisterBlockManager`, `GetLocations`, `RemoveShuffle`, `RemoveExecutor`, etc. In a heavy cluster/app, it is always busy. The `BlockManagerHeartbeat` event was also handled in this endpoint, and we found it may time out when the endpoint is busy. So we add a new endpoint, `BlockManagerMasterHeartbeatEndpoint`, to handle heartbeats separately.
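The effect of a dedicated endpoint can be illustrated with a small queueing model. This is only a sketch in Python: it assumes an endpoint handles the messages in its inbox one at a time in FIFO order, and the processing costs are made-up numbers, not measurements.

```python
def completion_times(inbox):
    """Process (name, cost) messages in FIFO order on a single thread.

    Returns {name: time at which that message finished processing}.
    """
    clock = 0
    done = {}
    for name, cost in inbox:
        clock += cost
        done[name] = clock
    return done


# Hypothetical processing costs in milliseconds.
busy_messages = [
    ("RegisterBlockManager", 50),
    ("GetLocations", 200),
    ("RemoveShuffle", 300),
]
heartbeat = ("BlockManagerHeartbeat", 1)

# Shared endpoint: the heartbeat queues behind everything else.
shared = completion_times(busy_messages + [heartbeat])

# Dedicated endpoint: the heartbeat has its own inbox.
dedicated = completion_times([heartbeat])
```

In the shared case the heartbeat is answered only after all earlier messages are processed, so its latency grows with the backlog; with its own inbox, the latency depends only on the heartbeat handling itself.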

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing UTs.

Closes #25971 from LantaoJin/SPARK-29298.

Lead-authored-by: lajin <lajin@ebay.com>

Co-authored-by: Alan Jin <lajin@ebay.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

Print events that take too long to process, to help find out which types of events are slow.

Introduce two extra configs:

* **spark.scheduler.listenerbus.logSlowEvent.enabled** Whether to log events that are slow.

* **spark.scheduler.listenerbus.logSlowEvent.threshold** The time threshold above which an event is considered slow.
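The idea behind the two configs can be sketched as follows. This is a minimal Python illustration, not the listener bus implementation: `post_with_slow_log` and its parameters are hypothetical, with `enabled` and `threshold_s` standing in for the two settings above, and the clock is injectable only so the behavior can be checked deterministically.

```python
import time


def post_with_slow_log(event, handler, threshold_s, log, enabled=True,
                       clock=time.monotonic):
    """Run `handler(event)` and record the event in `log` if it was slow.

    Returns the elapsed time in seconds, or None when logging is disabled.
    """
    if not enabled:
        handler(event)
        return None
    start = clock()
    handler(event)
    elapsed = clock() - start
    if elapsed > threshold_s:
        # Include the event type so slow event kinds are easy to spot.
        log.append(f"Slow event {type(event).__name__}: {elapsed:.3f}s")
    return elapsed
```

Timing is skipped entirely when the feature is disabled, so the extra clock reads are only paid for when slow-event logging is turned on.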

### How was this patch tested?

N/A

Closes #25702 from jiangxb1987/SPARK-29001.

Authored-by: Xingbo Jiang <xingbo.jiang@databricks.com>

Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request?

This patch adds the `JsonDeserialize` annotation to the fields whose type is `Option[Long]` in LogInfo/AttemptInfoWrapper. They hit https://github.com/FasterXML/jackson-module-scala/wiki/FAQ#deserializing-optionint-and-other-primitive-challenges - other existing JSON models take care of this, but we missed adding the annotation to these classes.

### Why are the changes needed?

Without this change, SHS will throw ClassNotFoundException when rebuilding App UI.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Manually tested.

Closes #26397 from HeartSaVioR/SPARK-29755.

Authored-by: Jungtaek Lim (HeartSaVioR) <kabhwan.opensource@gmail.com>

Signed-off-by: Marcelo Vanzin <vanzin@cloudera.com>

### What changes were proposed in this pull request?

The Spark metrics system produces many different metrics and not all of them are used at the same time. This proposes to introduce a configuration parameter to allow disabling the registration of metrics in the "static sources" category.

### Why are the changes needed?

This allows reducing the load and clutter on the sink in cases where the metrics in question are not needed. The metrics registered as "static sources" are under the namespaces CodeGenerator and HiveExternalCatalog and can produce a significant amount of data, as they are registered for the driver and executors.

### Does this PR introduce any user-facing change?

It introduces a new configuration parameter `spark.metrics.register.static.sources.enabled`

### How was this patch tested?

Manually tested.

```
$ cat conf/metrics.properties
*.sink.prometheusServlet.class=org.apache.spark.metrics.sink.PrometheusServlet
*.sink.prometheusServlet.path=/metrics/prometheus
master.sink.prometheusServlet.path=/metrics/master/prometheus
applications.sink.prometheusServlet.path=/metrics/applications/prometheus

$ bin/spark-shell

$ curl -s http://localhost:4040/metrics/prometheus/ | grep Hive
metrics_local_1573330115306_driver_HiveExternalCatalog_fileCacheHits_Count 0
metrics_local_1573330115306_driver_HiveExternalCatalog_filesDiscovered_Count 0
metrics_local_1573330115306_driver_HiveExternalCatalog_hiveClientCalls_Count 0
metrics_local_1573330115306_driver_HiveExternalCatalog_parallelListingJobCount_Count 0
metrics_local_1573330115306_driver_HiveExternalCatalog_partitionsFetched_Count 0

$ bin/spark-shell --conf spark.metrics.static.sources.enabled=false

$ curl -s http://localhost:4040/metrics/prometheus/ | grep Hive
```

Closes #26320 from LucaCanali/addConfigRegisterStaticMetrics.

Authored-by: Luca Canali <luca.canali@cern.ch>

Signed-off-by: Dongjoon Hyun <dhyun@apple.com>

### What changes were proposed in this pull request?

Explicitly clear registered metrics when `MetricsSystem` shuts down.

### Why are the changes needed?

See https://issues.apache.org/jira/browse/SPARK-29795 for a complete explanation. The TL;DR is there is some evidence this could leak resources after Spark is shut down, and that may be a minor issue in Spark 3+ for apps or tests that re-start SparkContexts in the same JVM.

### Does this PR introduce any user-facing change?

The possible difference here is that, after Spark is stopped, metrics are no longer available. It's unclear to me whether this is intended behavior anyway.

### How was this patch tested?

See https://issues.apache.org/jira/browse/SPARK-29795 for more context:

- Spark 3 already passes tests without this change

- Spark 2.4 does too, as exists in branch-2.4 now

- Spark 2.4 fails tests if metrics 4.x is used, without this change

The last point is not directly relevant, as Spark 2.4 will not use metrics 4.x. It's evidence that it addresses some potential issue, however.

Closes #26427 from srowen/SPARK-29795.

Authored-by: Sean Owen <sean.owen@databricks.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

### What changes were proposed in this pull request?

As discussed in https://github.com/apache/spark/pull/26397#discussion_r343726691, the `FsHistoryProvider` import section has to be cleaned up.

### Why are the changes needed?

Unused imports.

### Does this PR introduce any user-facing change?

No.

### How was this patch tested?

Existing unit tests.

Closes #26436 from gaborgsomogyi/SPARK-29755.

Authored-by: Gabor Somogyi <gabor.g.somogyi@gmail.com>

Signed-off-by: Sean Owen <sean.owen@databricks.com>

## What changes were proposed in this pull request?

This PR proposes to add **Single threading model design (pinned thread model)** mode which is an experimental mode to sync threads on PVM and JVM. See https://www.py4j.org/advanced_topics.html#using-single-threading-model-pinned-thread

### Multi threading model

Currently, PySpark uses this model. Threads on PVM and JVM are independent. For instance, callbacks are received in a different Python thread, and the relevant Python code is executed there. JVM threads are reused when possible.